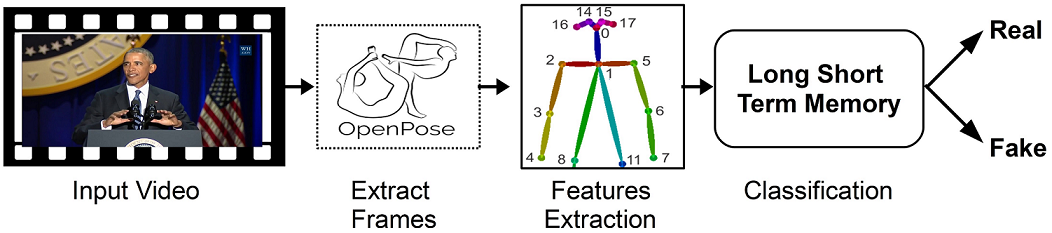

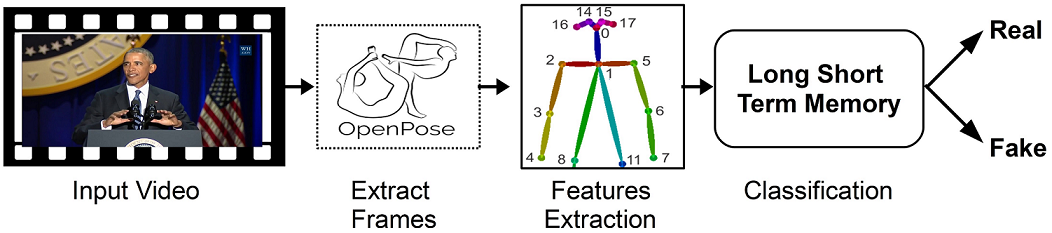

Recent advances in deepfake creation have made deepfake videos more realistic. Moreover, open-source software has lowered the barrier to entry for creating them, which poses a threat to people's privacy. A particular danger is that people with ulterior motives could use these techniques to produce deepfake videos of world leaders and disrupt the order of countries and the world. Research into the automatic detection of deepfaked media is therefore essential for public security. In this work, we propose a deepfake detection method based on upper body language analysis. Specifically, we designed and trained a many-to-one LSTM network as a classification model for deepfake detection. We trained several models with different hyperparameters to arrive at a final model, which achieved 94.39% accuracy on the deepfake test set. The experimental results show that upper body language can be used to detect deepfakes effectively.
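
To make the term "many-to-one LSTM" concrete, the following is a minimal sketch of the forward pass of such a classifier: a sequence of per-frame feature vectors goes in, and a single probability comes out after the last frame. It is an illustration only, not the architecture or code used in this work. The class name `ManyToOneLSTM`, the feature dimension (8), the hidden size (16), the clip length (30 frames), and the random, untrained weights are all assumptions; the abstract does not specify how upper body language is encoded as features.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    # Multiply a matrix (list of rows) by a vector (list of floats).
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def init_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class ManyToOneLSTM:
    """Hypothetical single-layer LSTM with one sigmoid output unit (untrained)."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        # One weight matrix per gate, applied to the concatenation [x_t, h_{t-1}]:
        # i = input gate, f = forget gate, o = output gate, g = candidate cell state.
        self.W = {k: init_matrix(hidden_size, input_size + hidden_size, rng) for k in "ifog"}
        self.b = {k: [0.0] * hidden_size for k in "ifog"}
        # Output layer mapping the final hidden state to a single logit.
        self.w_out = [rng.uniform(-0.1, 0.1) for _ in range(hidden_size)]
        self.b_out = 0.0

    def _gate(self, name, z, activation):
        pre = matvec(self.W[name], z)
        return [activation(p + b) for p, b in zip(pre, self.b[name])]

    def step(self, x, h, c):
        z = list(x) + h  # concatenate the current input and previous hidden state
        i = self._gate("i", z, sigmoid)
        f = self._gate("f", z, sigmoid)
        o = self._gate("o", z, sigmoid)
        g = self._gate("g", z, math.tanh)
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def predict_proba(self, sequence):
        # Many-to-one: consume every time step, but classify only from the
        # hidden state that remains after the last step.
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            h, c = self.step(x, h, c)
        logit = sum(w * hk for w, hk in zip(self.w_out, h)) + self.b_out
        return sigmoid(logit)


# Hypothetical input: a 30-frame clip with 8 upper-body features per frame.
data_rng = random.Random(1)
clip = [[data_rng.uniform(-1.0, 1.0) for _ in range(8)] for _ in range(30)]

model = ManyToOneLSTM(input_size=8, hidden_size=16)
p_fake = model.predict_proba(clip)  # one score for the whole clip
print(f"score for the 'deepfake' class (untrained weights): {p_fake:.3f}")
```

Because the weights here are random, the score carries no meaning; in practice the parameters would be learned from labeled real and deepfake clips, and hyperparameters such as the hidden size would be varied during model selection.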