Submitted: 23 February 2023

Posted: 24 February 2023


Abstract

Keywords:

1. Introduction

1.1. Aim of Thesis

1.2. Rationale for Non-Contact Heart Rate Monitoring

1.3. Importance of the Heart Rate

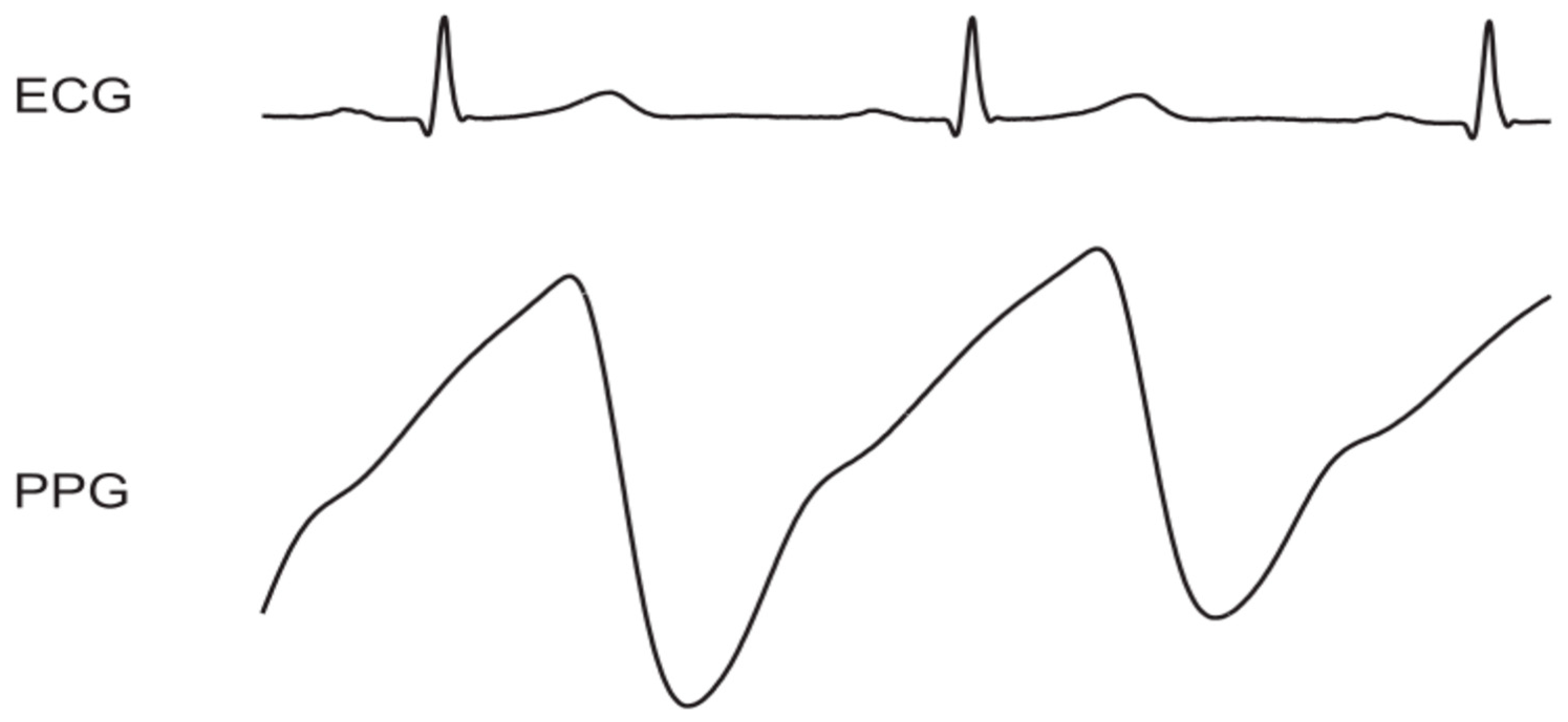

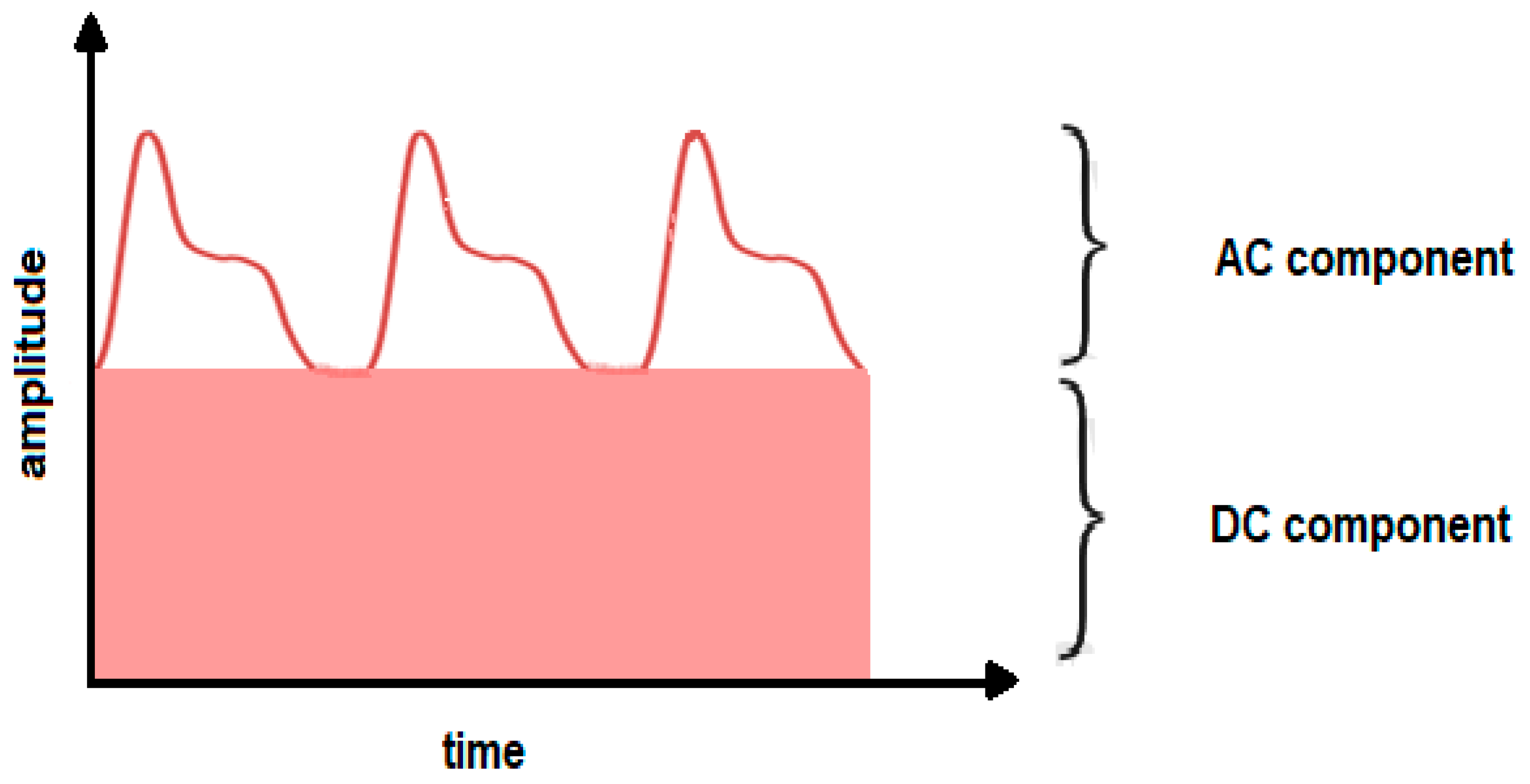

1.4. Fundamental Theory of Photoplethysmography

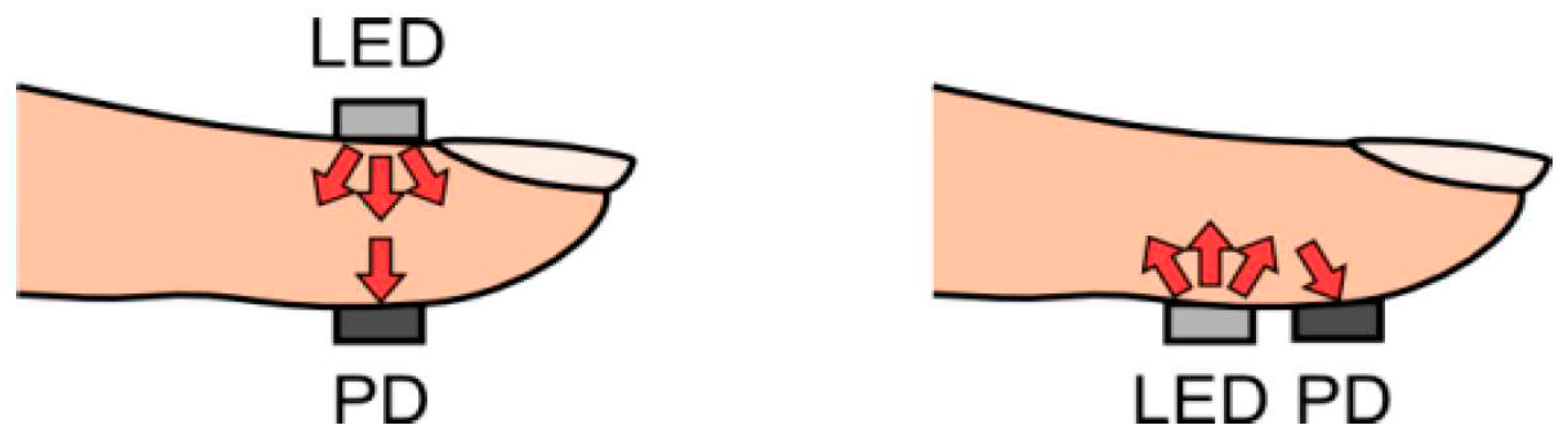

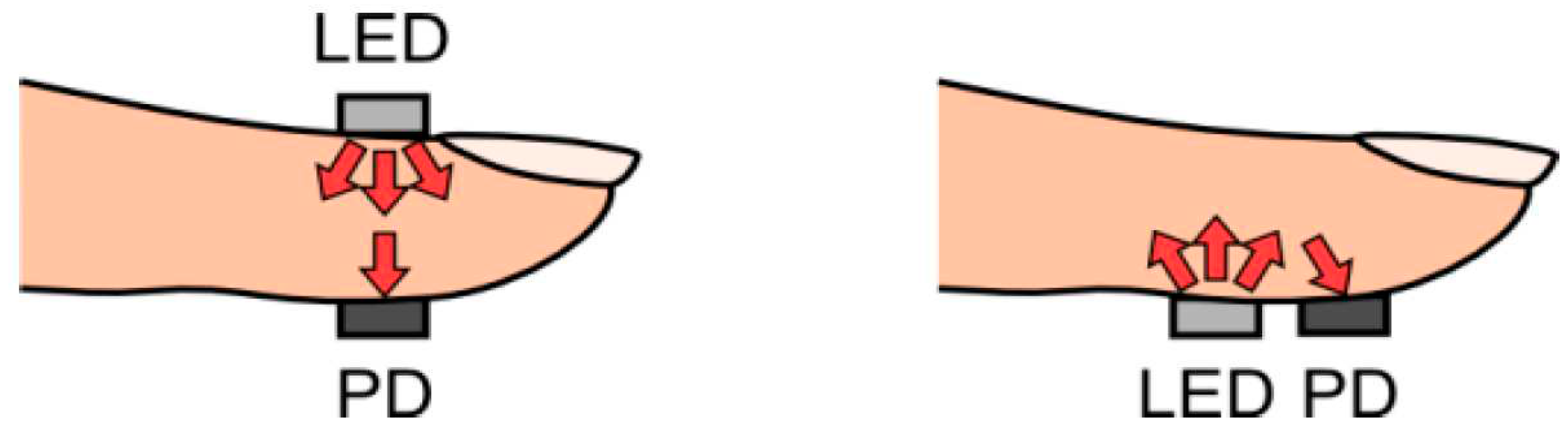

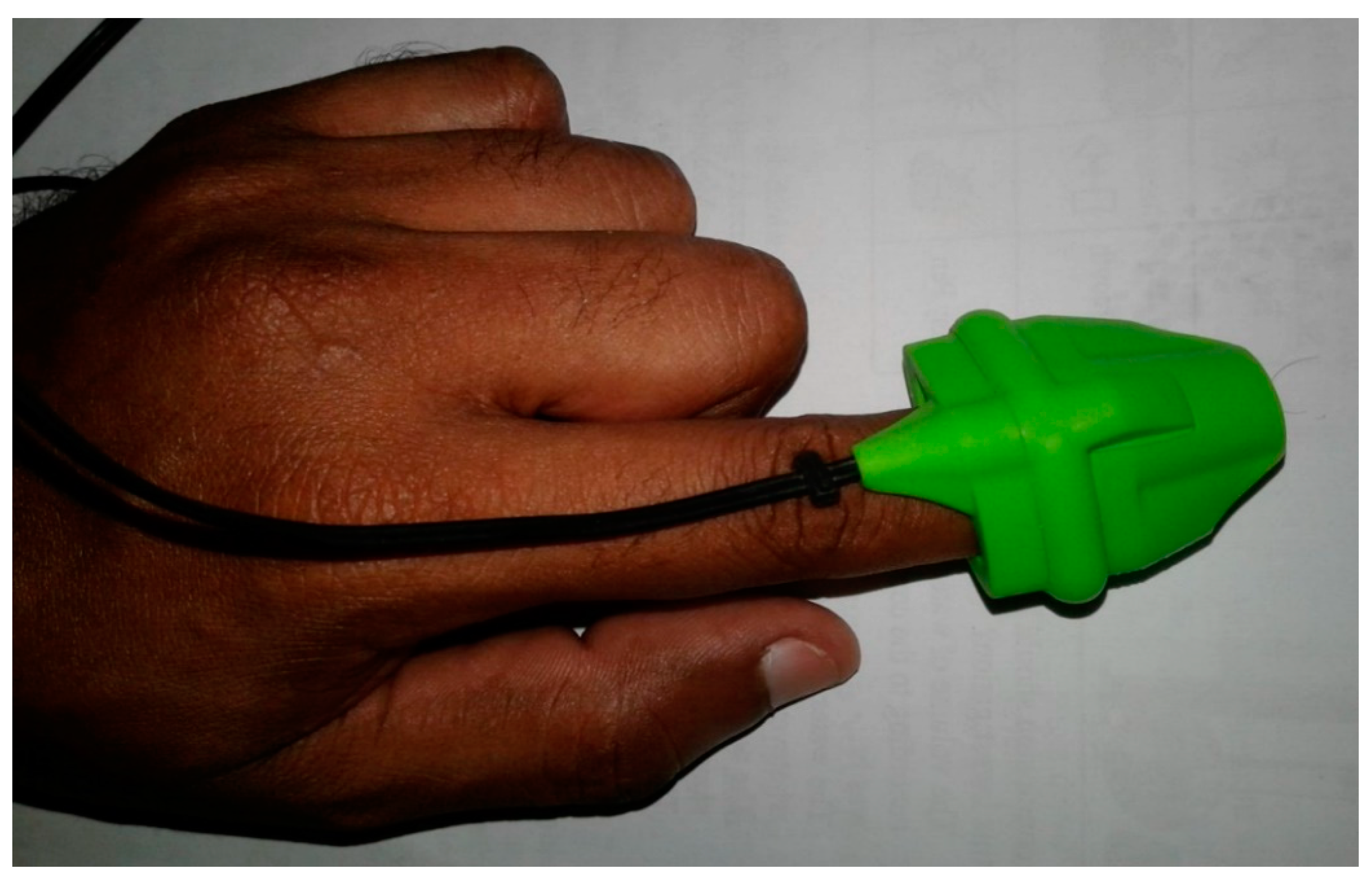

1.5. Pulse Oximetry—An Example of Contact PPG

1.6. Non-Contact Photoplethysmography

1.7. Problems with Video-Based rPPG

- Intrinsically low signal strength

- Motion artefacts

- Noise due to fluctuations in illumination

1.8. Research Objectives

- Implement an algorithmic framework for HR estimation by video-based reflectance photoplethysmography

- Investigate the effect of the presence/absence of dedicated lighting on the SNR of rPPG signals

- Investigate the effect of choice/size of the region of interest (ROI) on the SNR of rPPG signals

- Compare the SNR of green rPPG signals with that of rPPG signals based on luminance

- Investigate the viability of the summary autocorrelation function (SACF) as a technique for improving the SNR of rPPG signals

- Implement the HR estimation algorithm into a graphical user interface (GUI)

- Prepare a laboratory practical centred on HR estimation by video-based rPPG

1.9. Thesis Outline

2. Literature Review

2.1. Previous Work

3. Materials and Methods 1: Experimental Setup

3.1. Research Materials

3.2. Experimental Setup

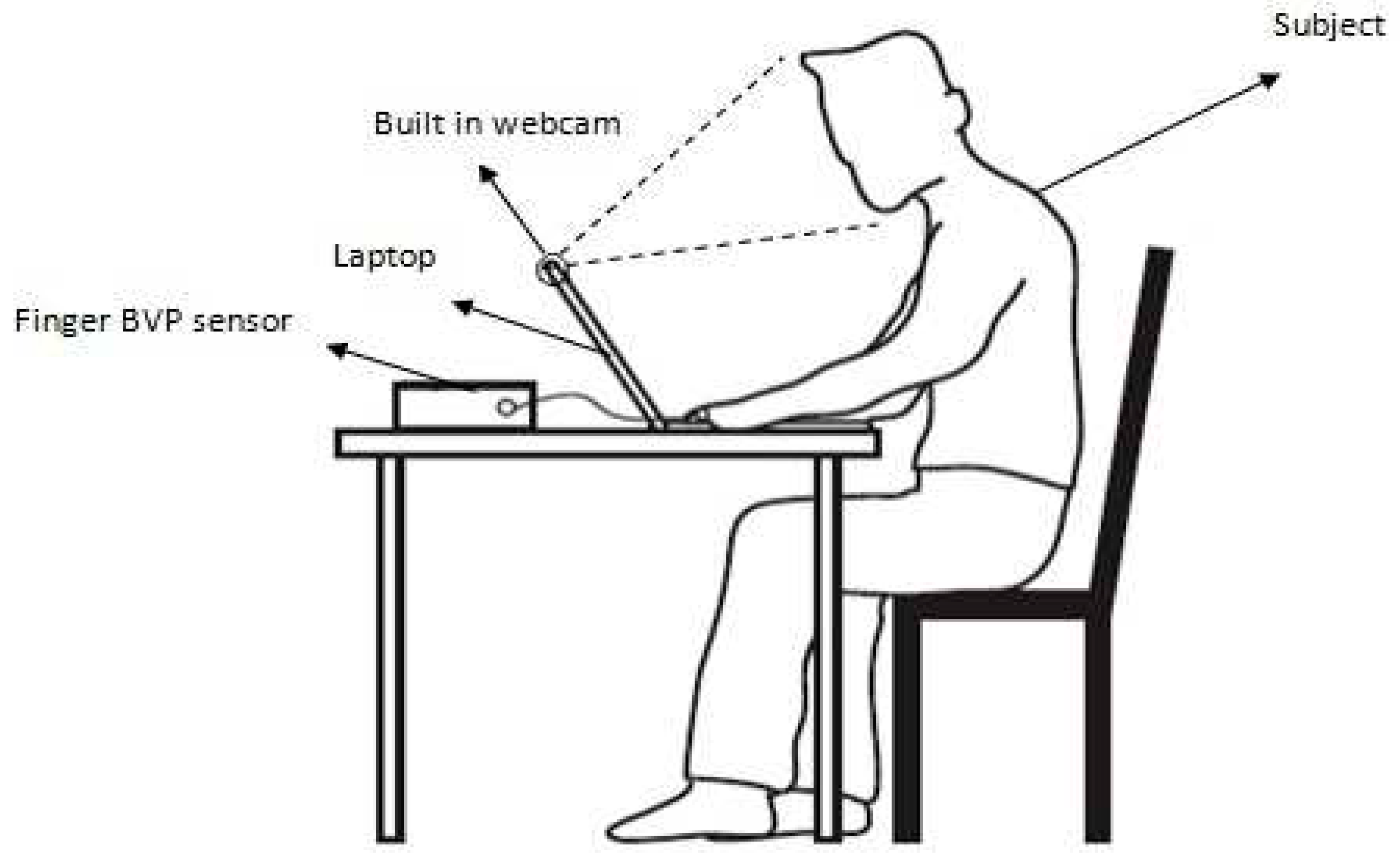

3.2.1. Setup 1—Self-Captured Videos

3.2.2. Setup 2—COHFACE Videos

- Natural (approximating the ambient condition of the self-captured videos): all lights were turned off and the blinds were opened, and

- Studio (approximating the dedicated condition of the self-captured videos): the blinds were closed to minimize natural light, and additional light from a spotlight illuminated the subject’s face.¹

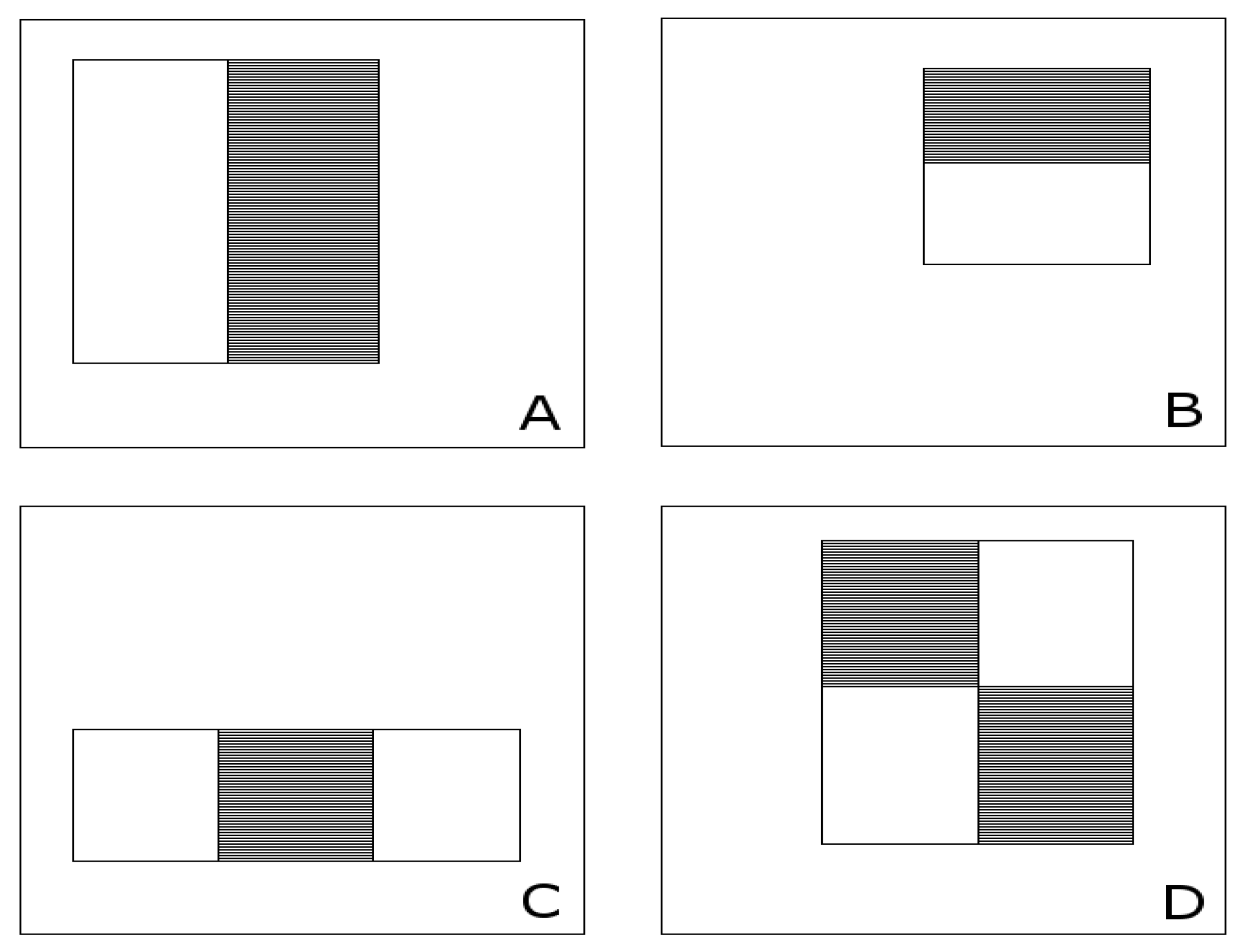

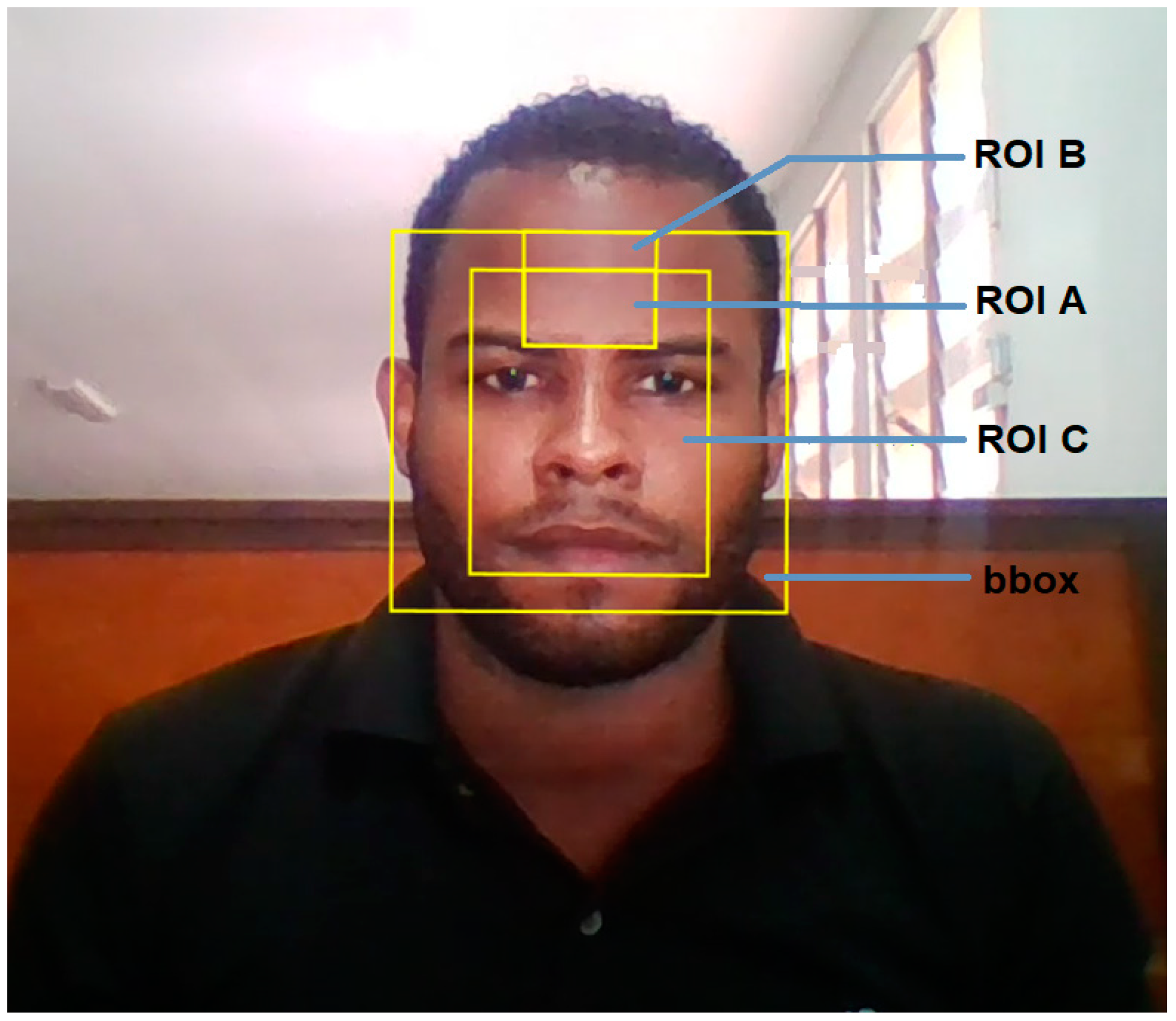

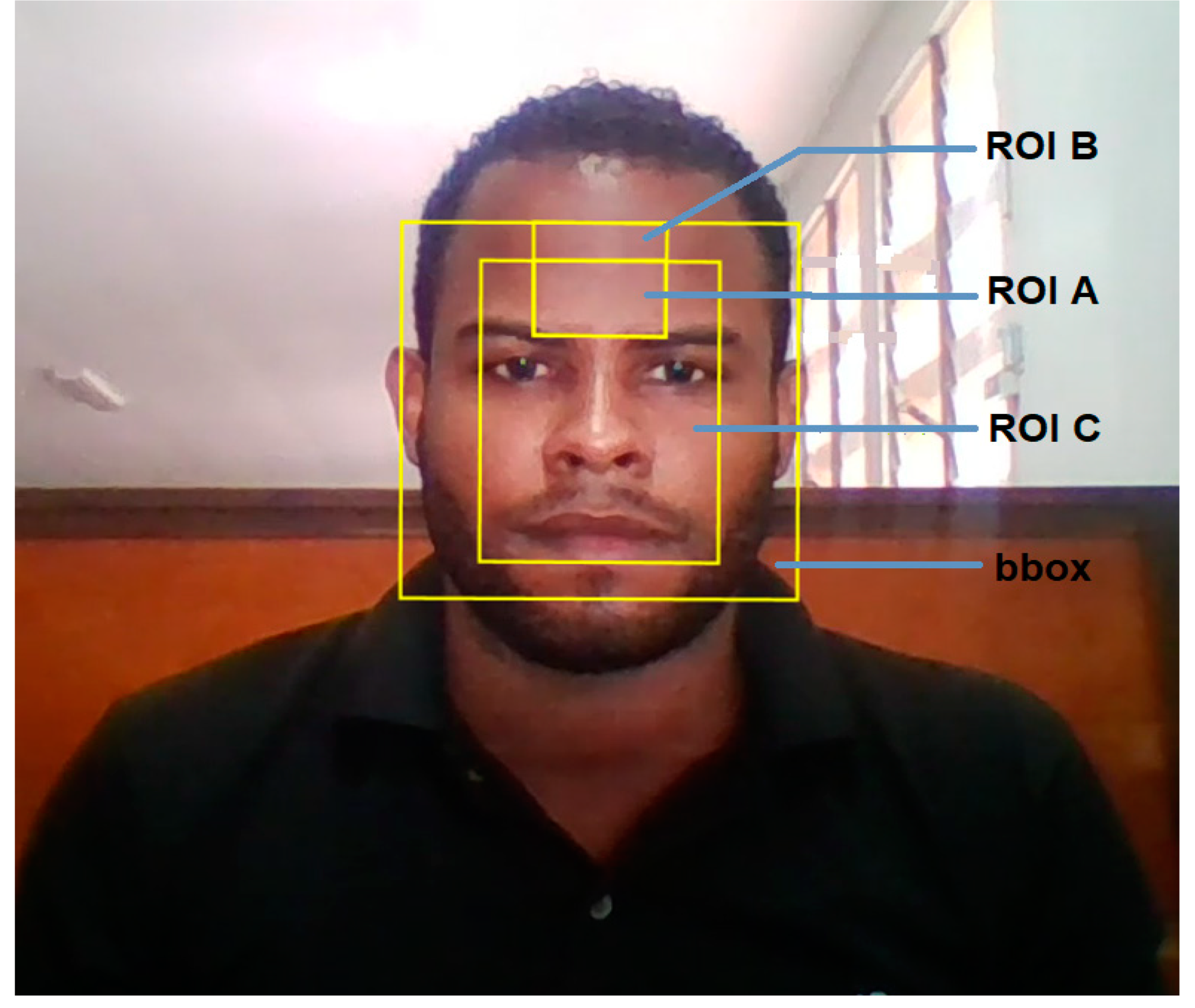

3.3. ROI Description and Classification for Associated rPPG Signals

| ROI Name | ROI Description* | Associated Signals | Description of Signal |

| ROI A |

ROI centred on Forehead xmin=xmin_bbox+(1/3)*bbox_w ymin=ymin_bbox+(1/10)*bbox_h width = (1/3)*bbox_w height = (1/5)*bbox_h |

SIGNAL 1.1 | Green (RGB) signal (FFT) |

| SIGNAL 1.2 | Green (RGB) signal (Welch) | ||

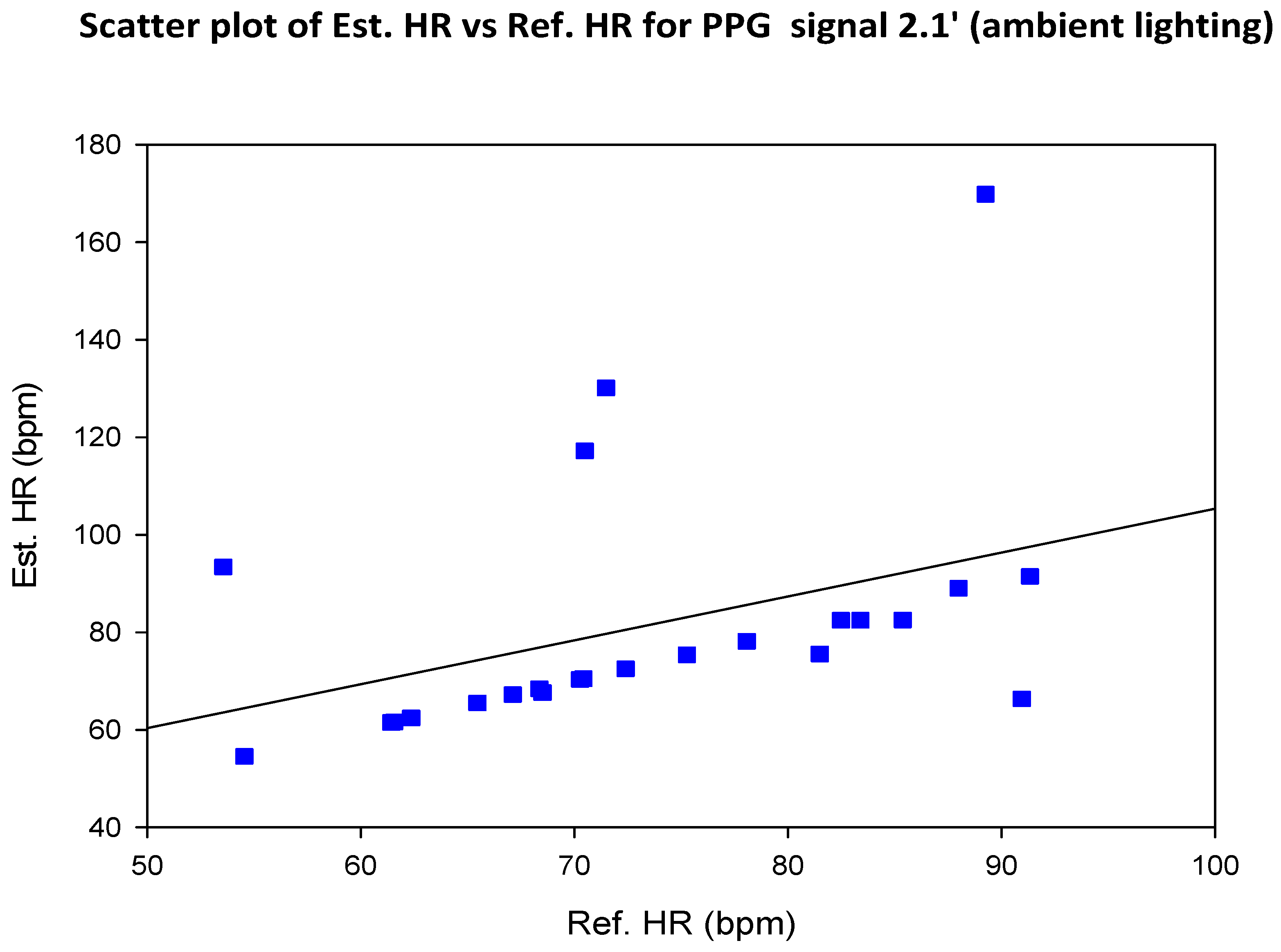

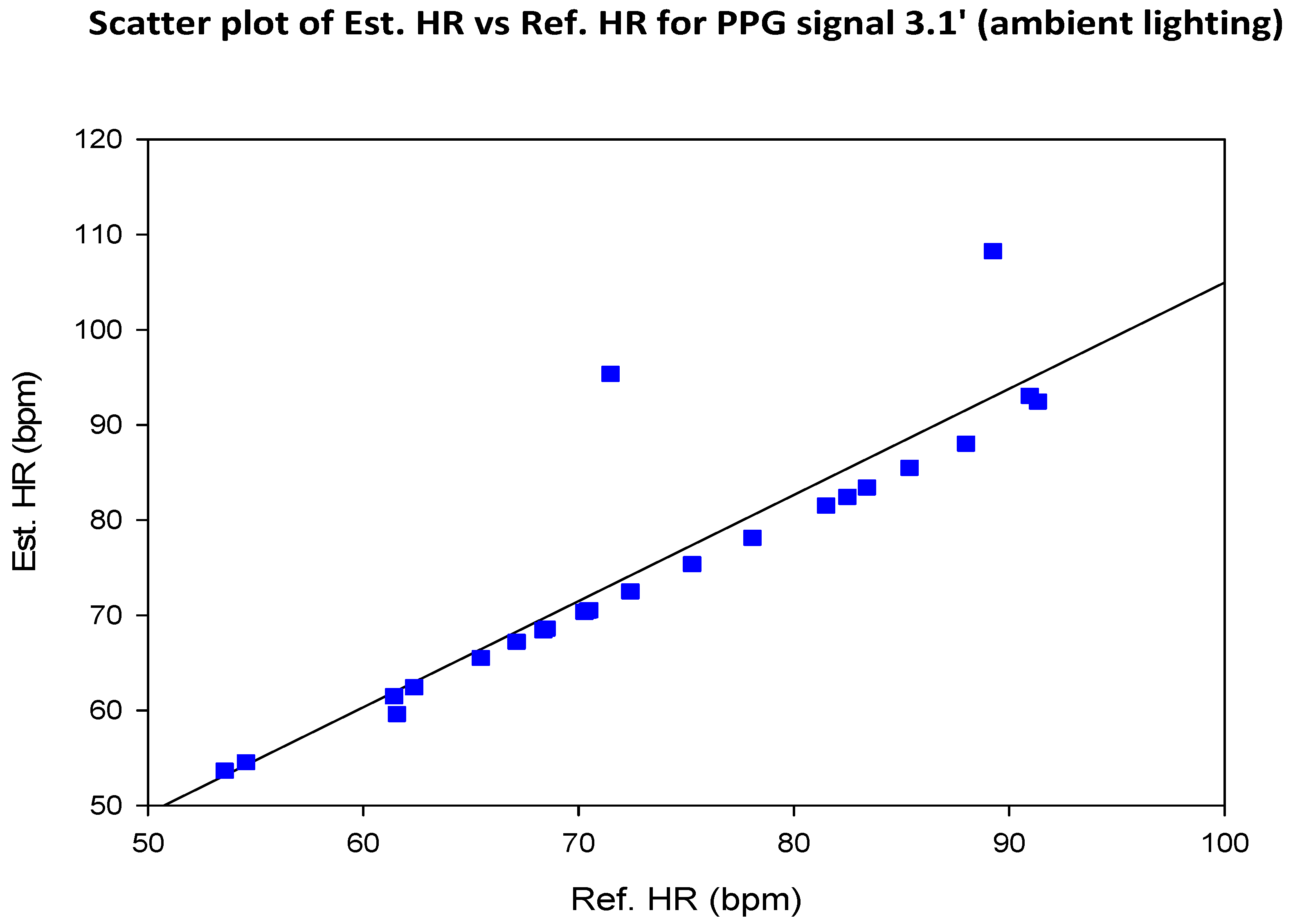

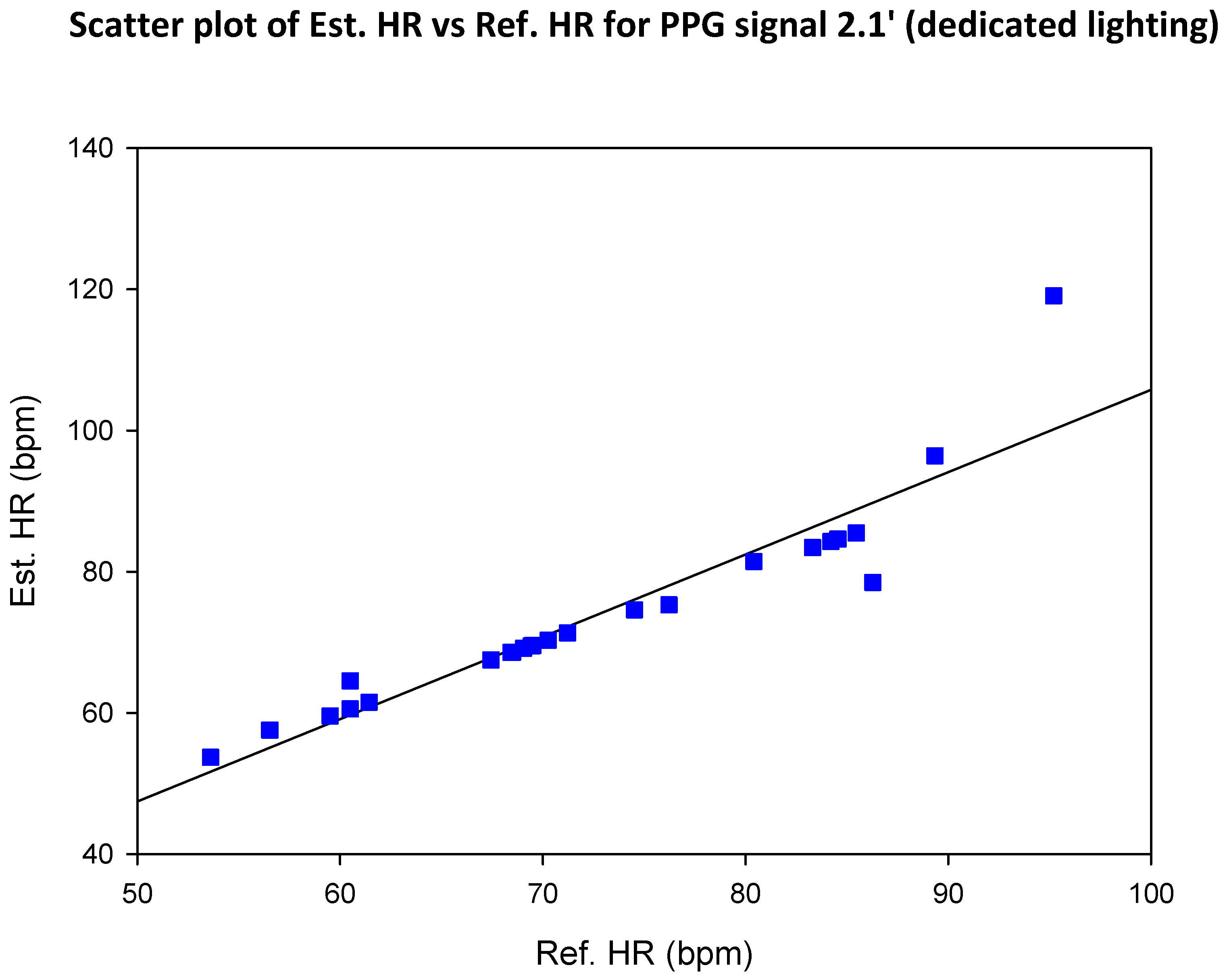

| SIGNAL 2.1 | Luminance (YCbCr) signal (FFT) | ||

| SIGNAL 2.2 | Luminance (YCbCr) signal (Welch) | ||

| ROI B |

Forehead ROI 50% larger than ROI A xmin= xmin_bbox+(1/3)*bbox_w ymin=ymin_bbox width = (1/3)*bbox_w height = (3/10)*bbox_h |

SIGNAL 3.1 | Green (RGB) signal (FFT) |

| SIGNAL 3.2 | Green (RGB) signal (Welch) | ||

| ROI C |

Face ROI comprises central 60% (width) and central 80% (height) of bbox xmin=xmin_bbox+(1/5)*bbox_w ymin=ymin_bbox+(1/10)*bbox_h width = (3/5)*bbox_w height = (4/5)*bbox_h |

SIGNAL 3.1 | Green (RGB) signal (FFT) |

| SIGNAL 3.2 | Green (RGB) signal (Welch) |
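The ROI geometry in the table above can be expressed compactly in code. The following is a minimal sketch, not the implementation used in this work: the `Rect` type, the `ROI_FRACTIONS` dictionary and the `roi_from_bbox` helper are hypothetical names introduced for illustration, and the face bounding box is assumed to be given as a top-left corner plus width and height in pixels.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    """Axis-aligned rectangle: top-left corner plus size, in pixels."""
    xmin: float
    ymin: float
    width: float
    height: float


# Fractions of the face bounding box, copied from the ROI table:
# (x offset / bbox_w, y offset / bbox_h, width / bbox_w, height / bbox_h)
ROI_FRACTIONS = {
    "A": (1 / 3, 1 / 10, 1 / 3, 1 / 5),   # forehead
    "B": (1 / 3, 0.0, 1 / 3, 3 / 10),     # forehead, 50% taller than ROI A
    "C": (1 / 5, 1 / 10, 3 / 5, 4 / 5),   # central part of the face
}


def roi_from_bbox(bbox: Rect, name: str) -> Rect:
    """Return ROI 'A', 'B' or 'C' for a given face bounding box (hypothetical helper)."""
    fx, fy, fw, fh = ROI_FRACTIONS[name]
    return Rect(
        xmin=bbox.xmin + fx * bbox.width,
        ymin=bbox.ymin + fy * bbox.height,
        width=fw * bbox.width,
        height=fh * bbox.height,
    )
```

For example, `roi_from_bbox(Rect(100, 50, 300, 400), "A")` yields a forehead rectangle of roughly 100 by 80 pixels whose top-left corner is at about (200, 90). Keeping the fractions in one dictionary makes it easy to check that ROI B has 1.5 times the area of ROI A.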

3.4. Mean Error, RMSE and SNR Definitions

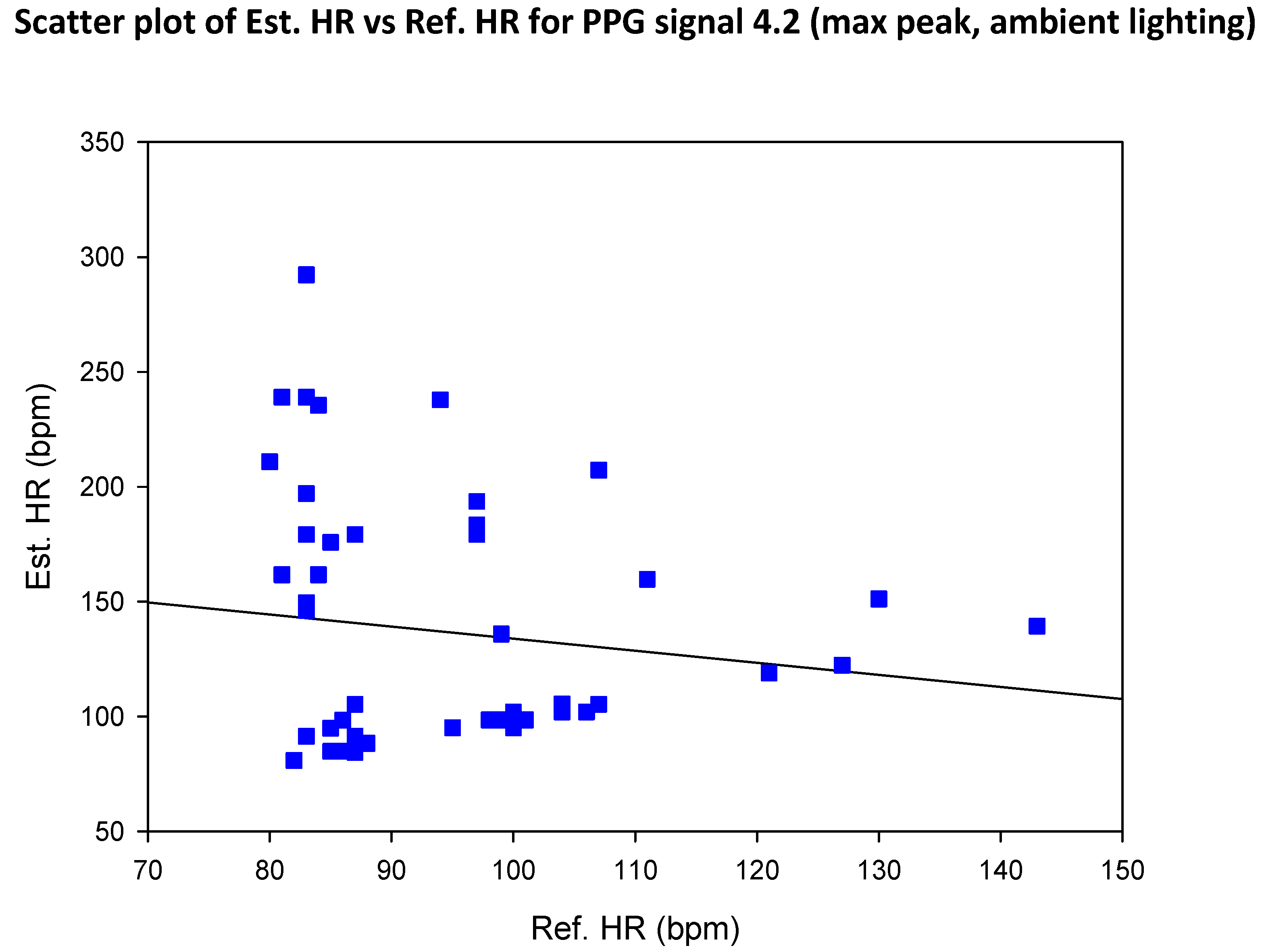

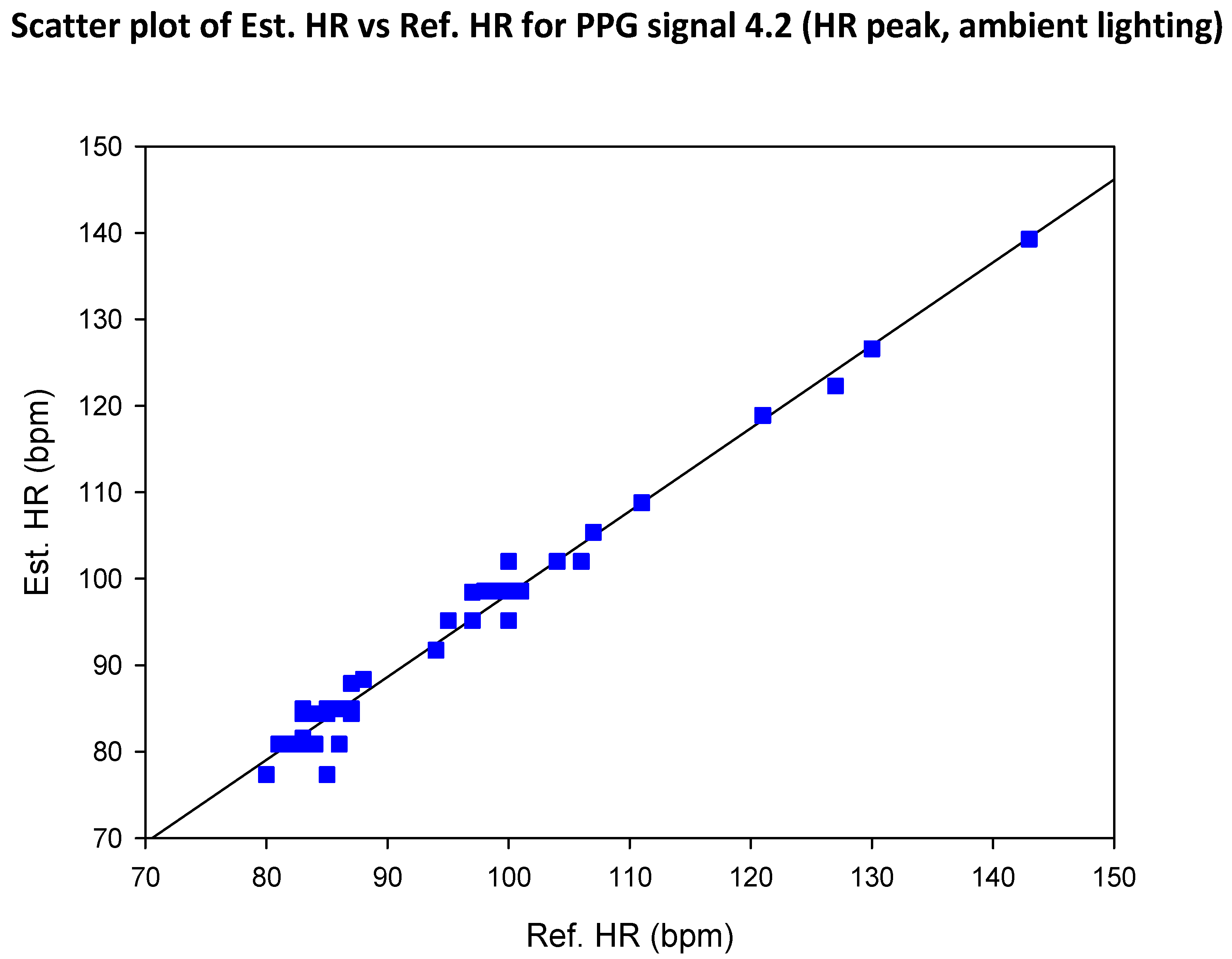

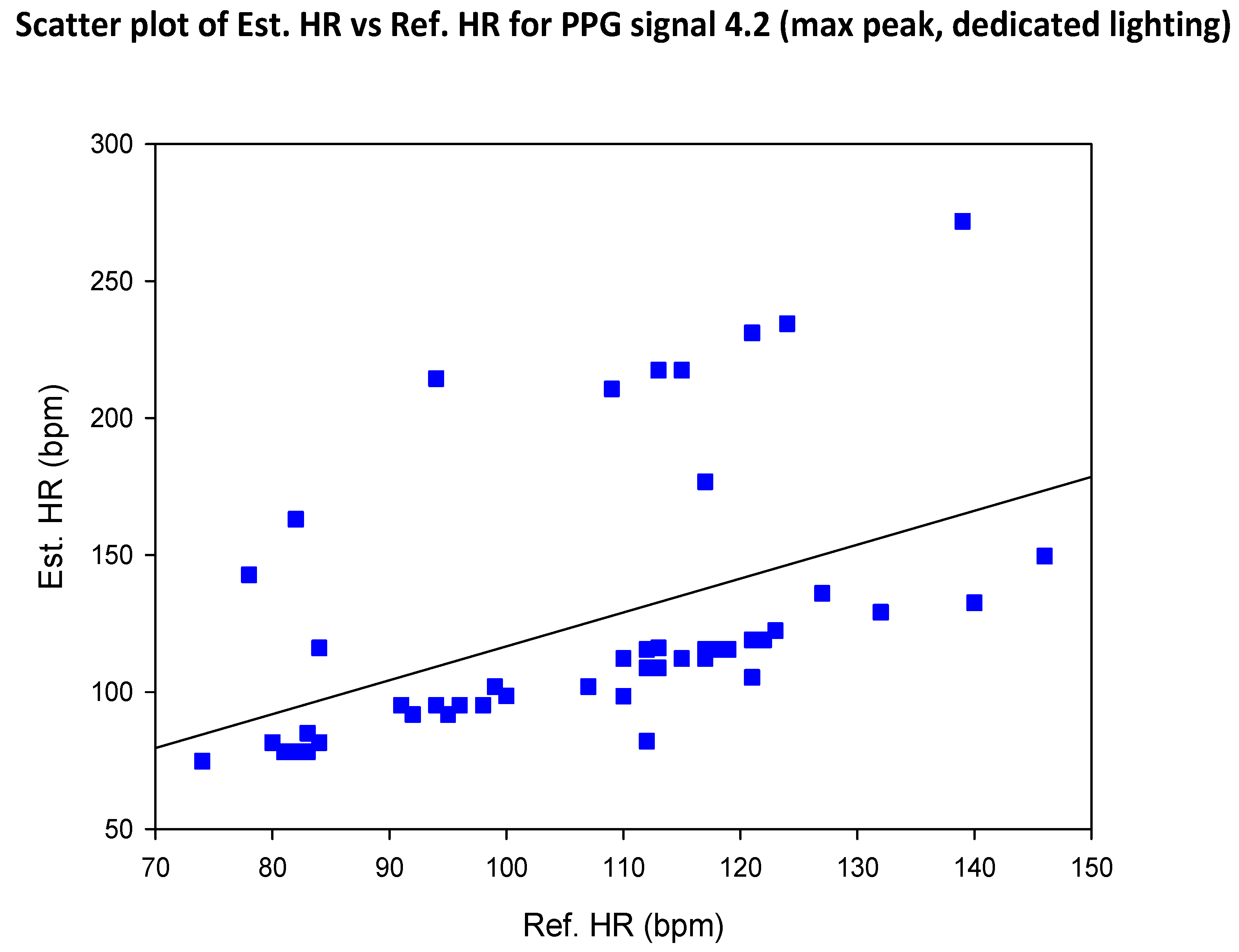

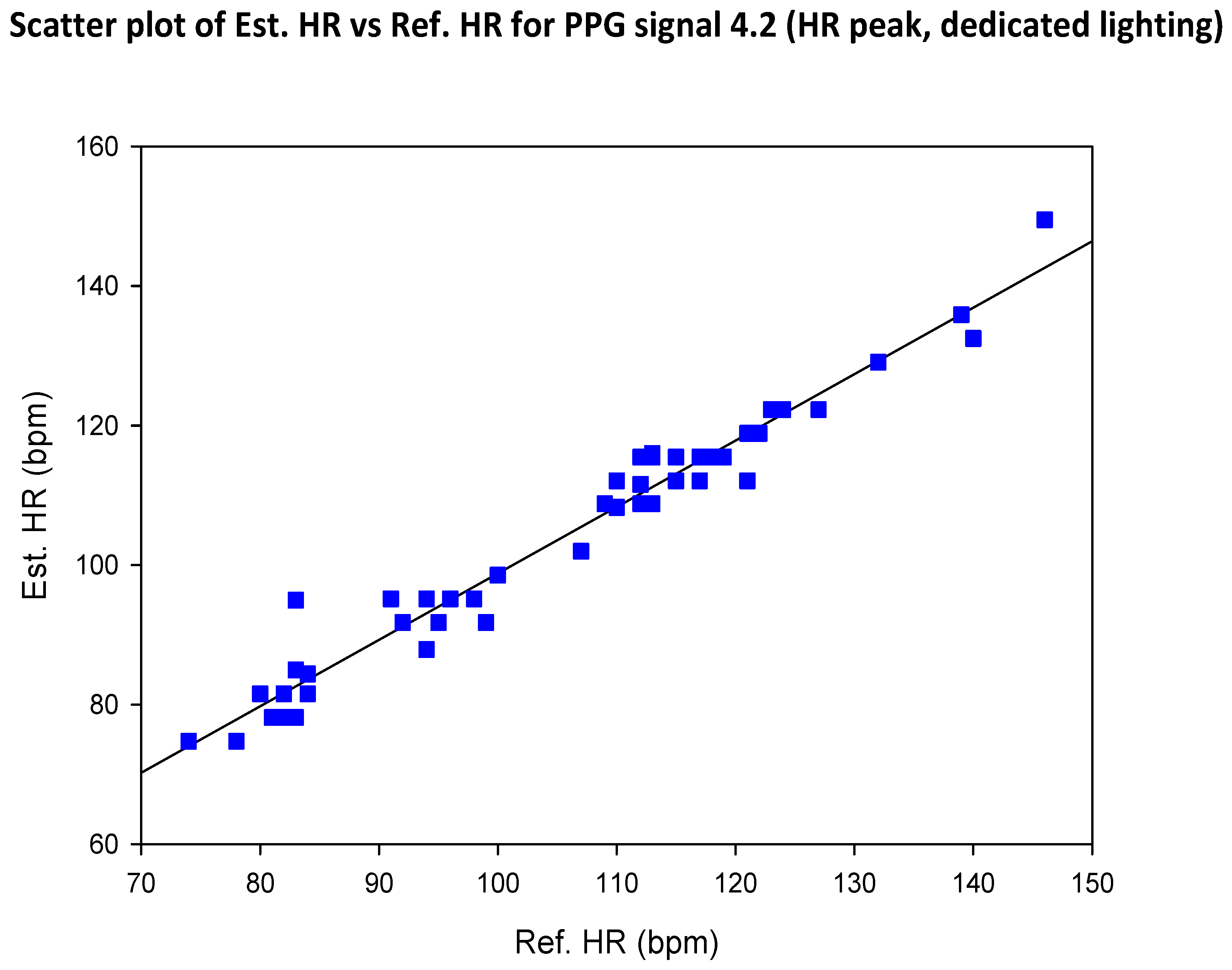

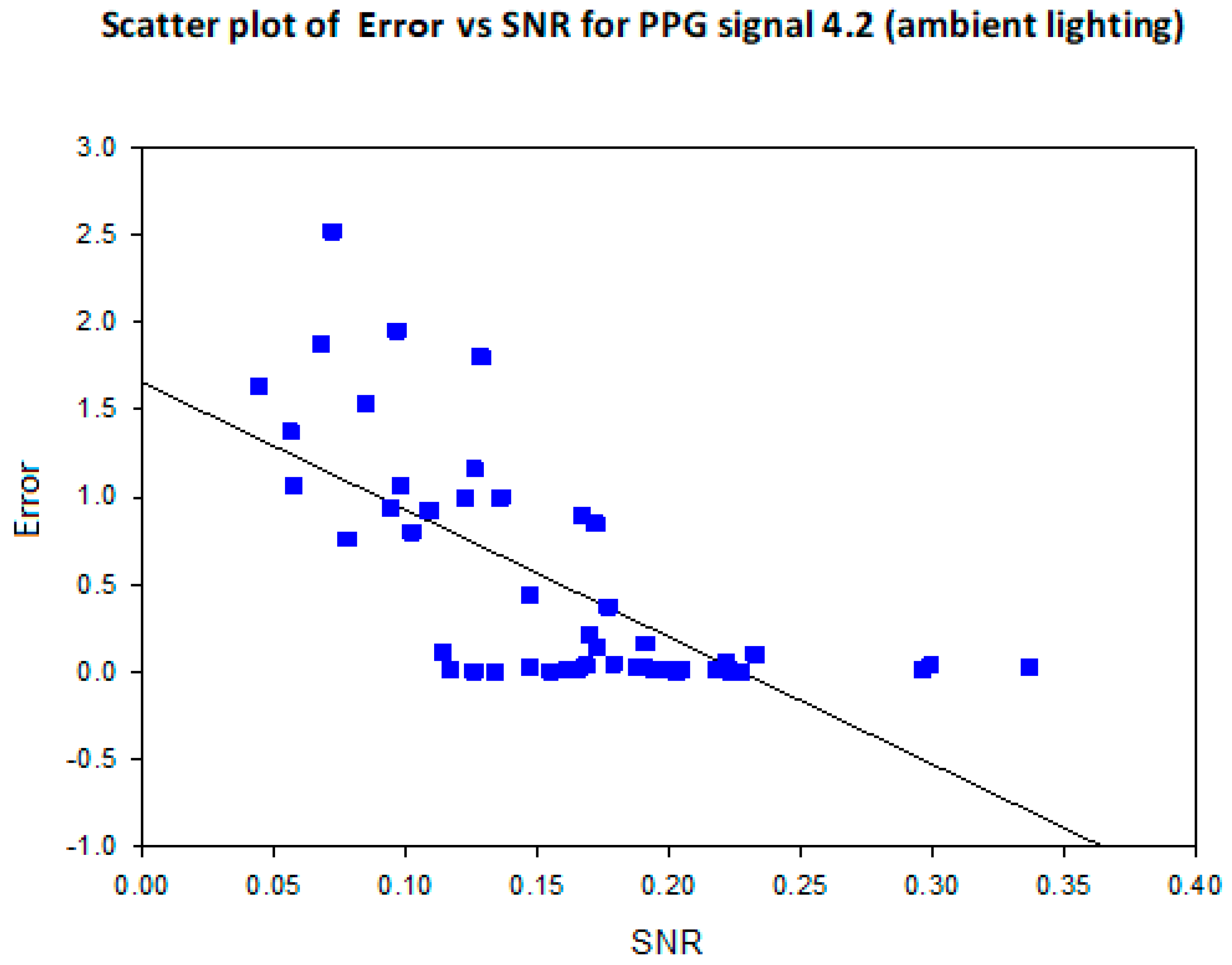

- error is (negatively) correlated with SNR

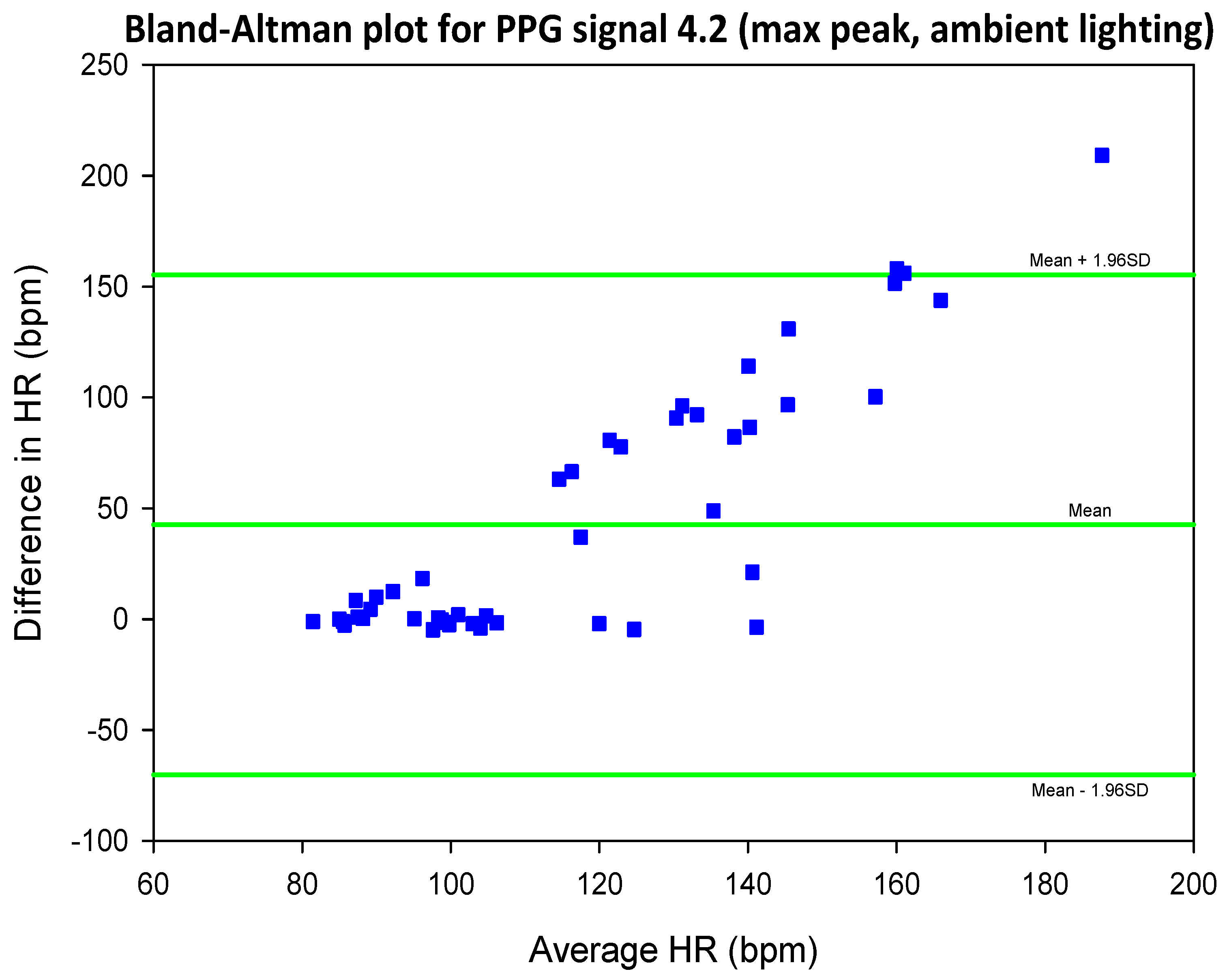

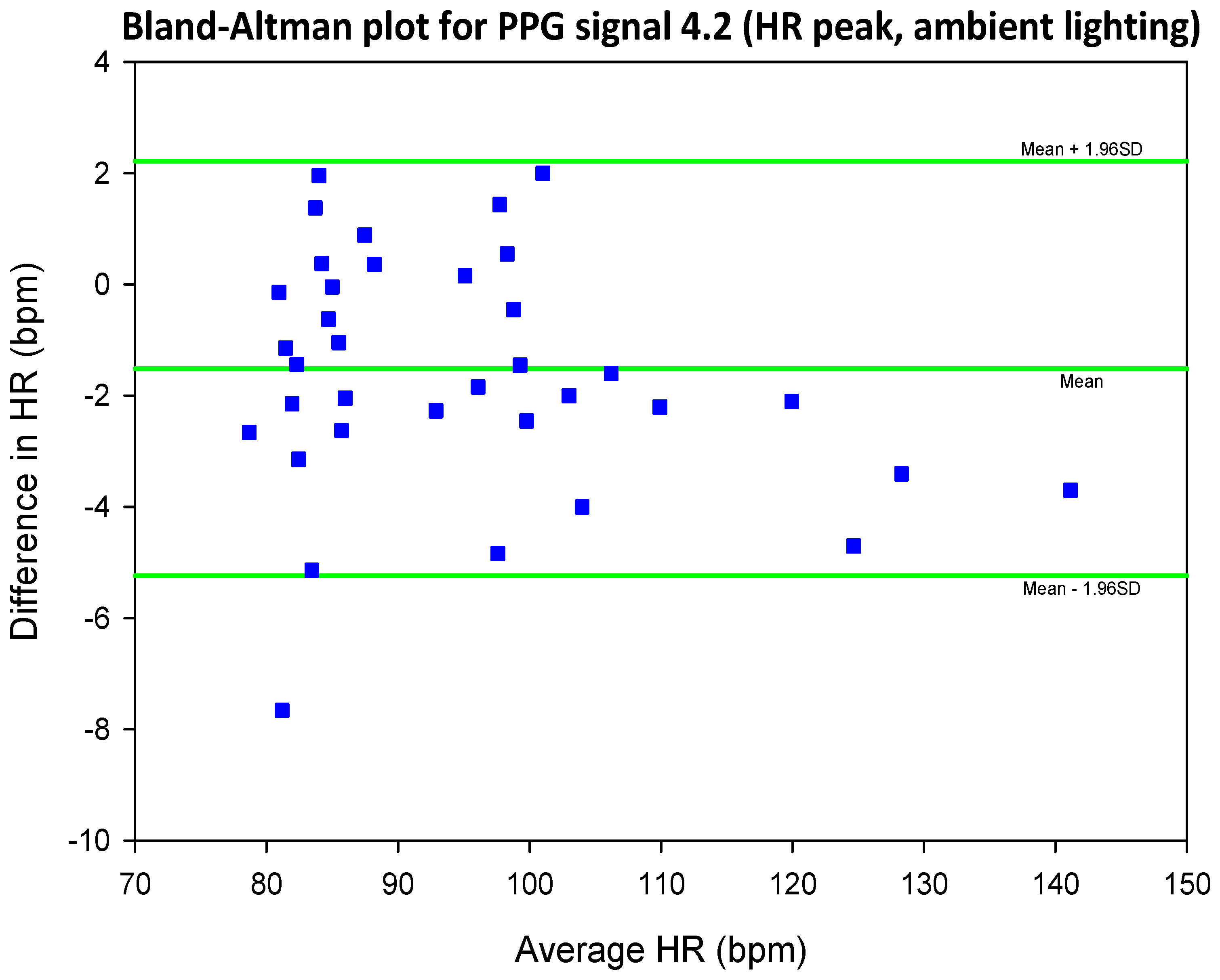

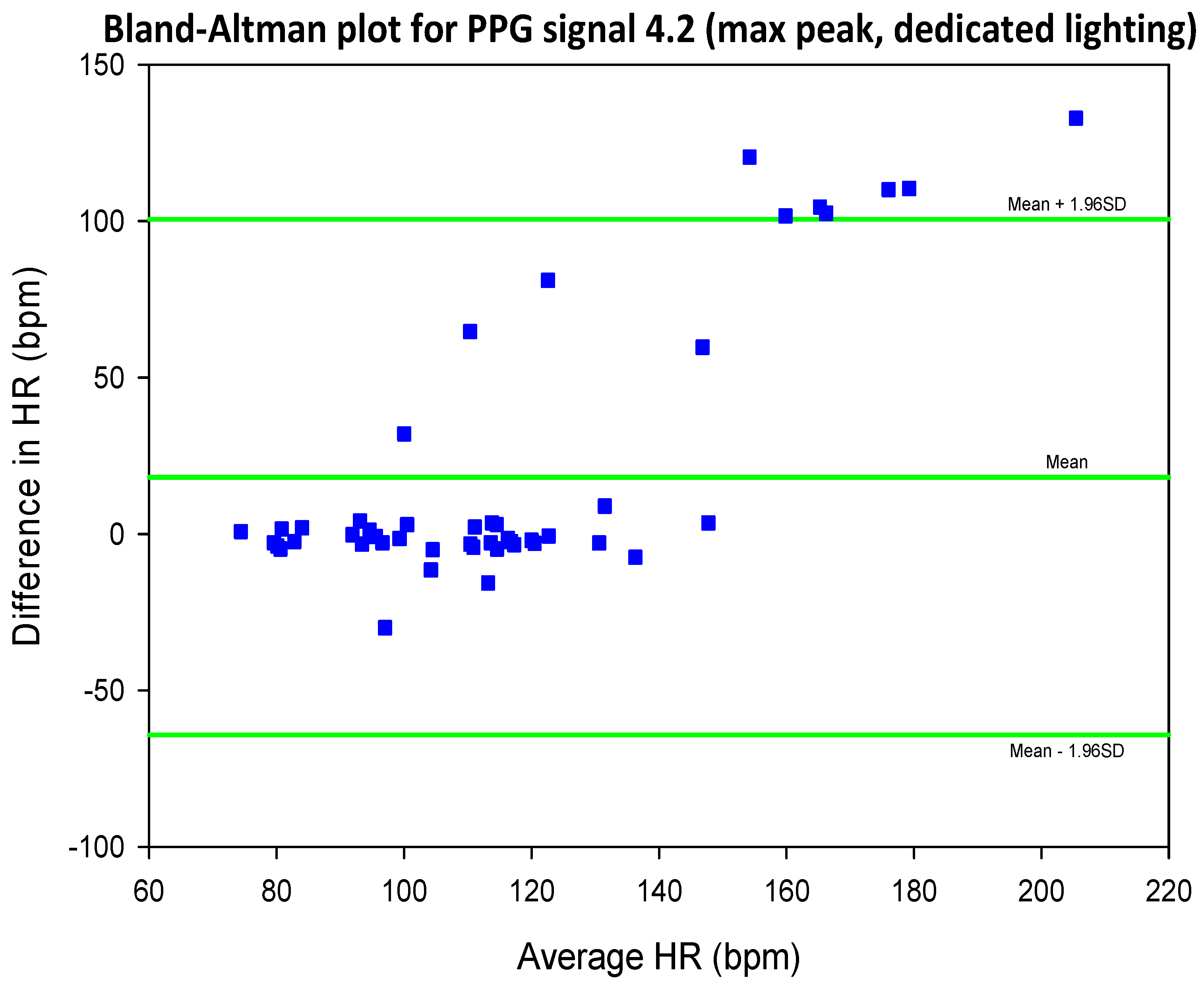

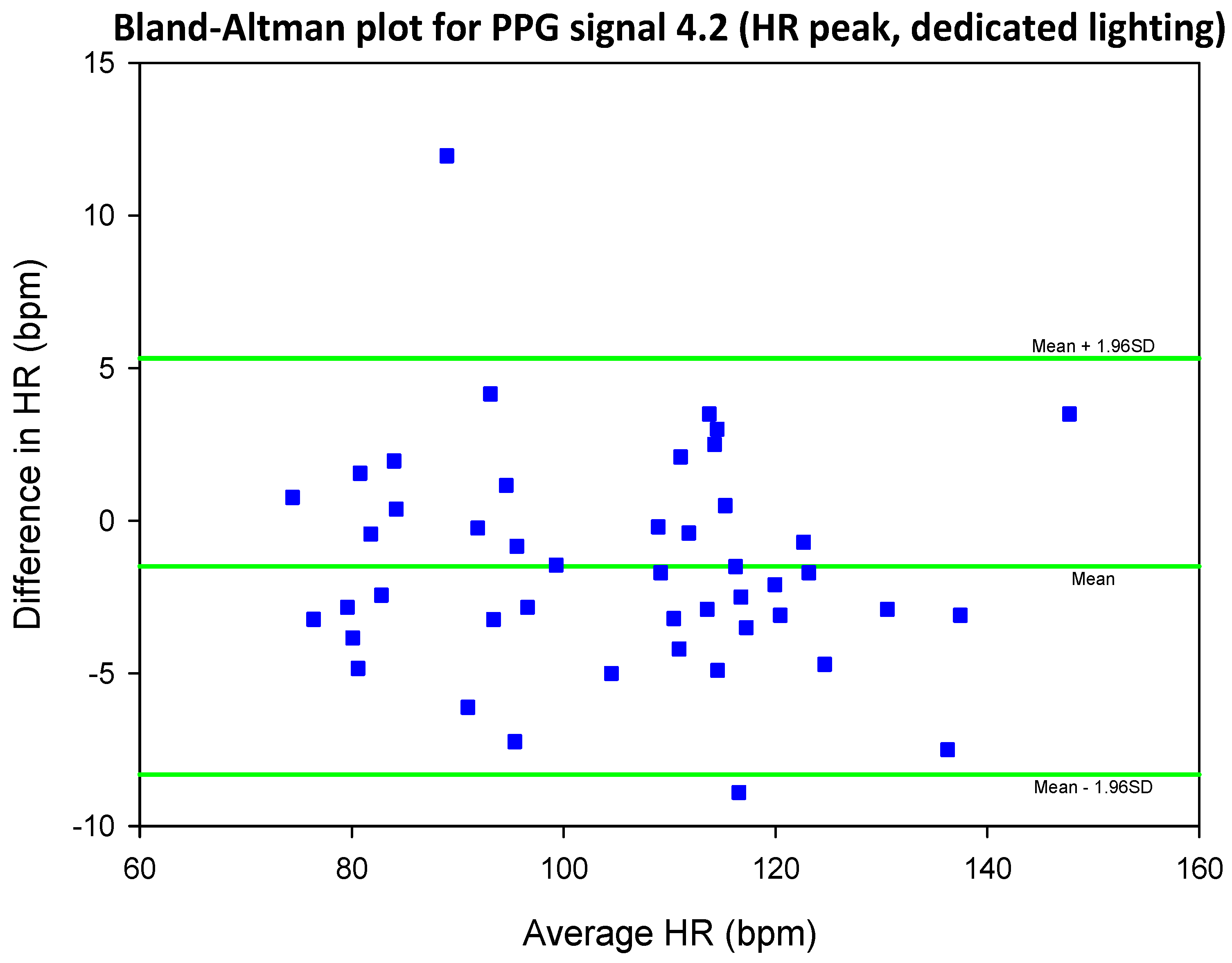

- when the maximum peak did not correspond to the ground-truth HR, a distinct lower peak matching the HR could usually be seen in the frequency spectrum

4. Materials and Methods 2: Workflow & Algorithms

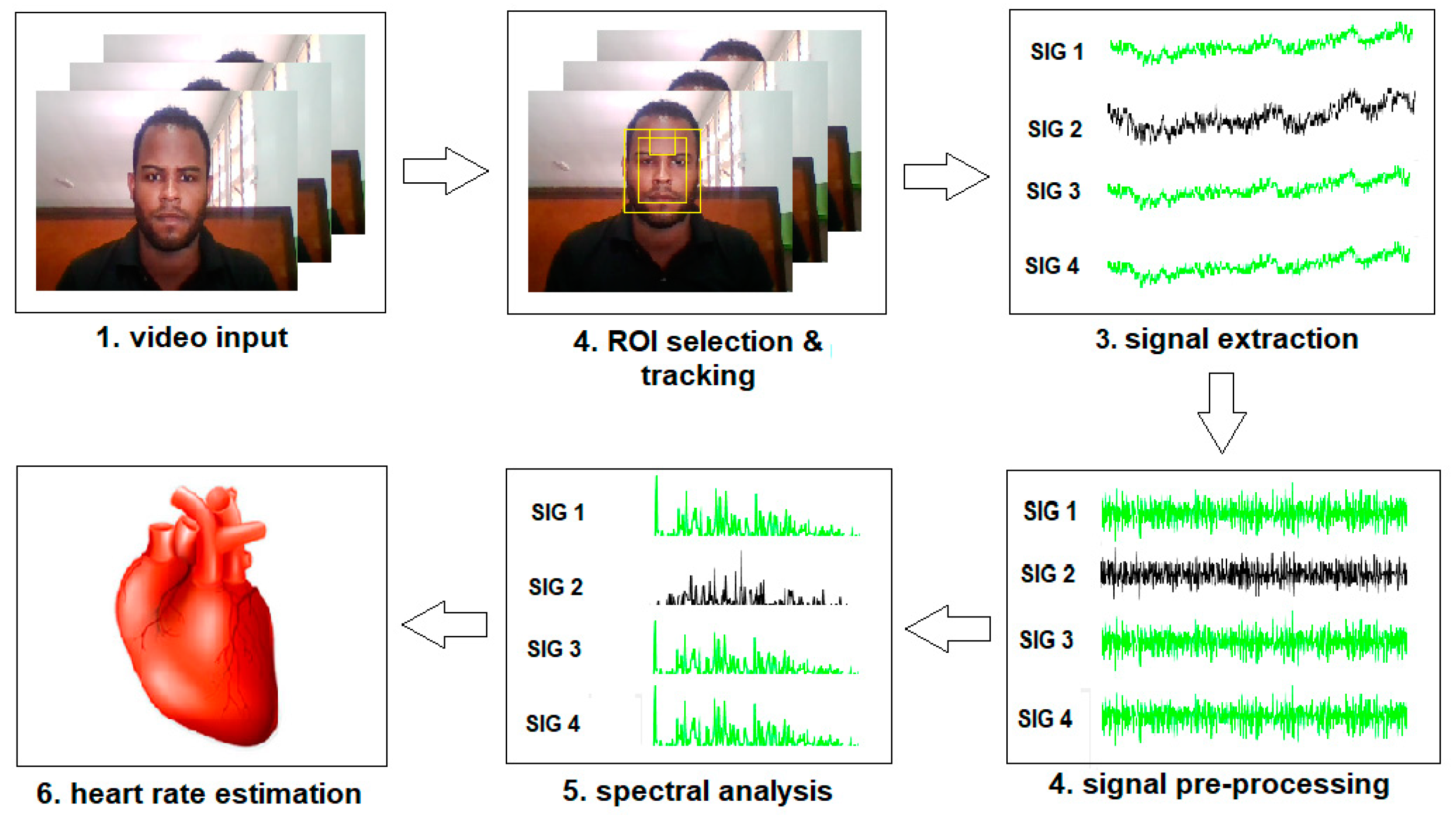

4.1. Overview of Workflow

4.2. Video Input

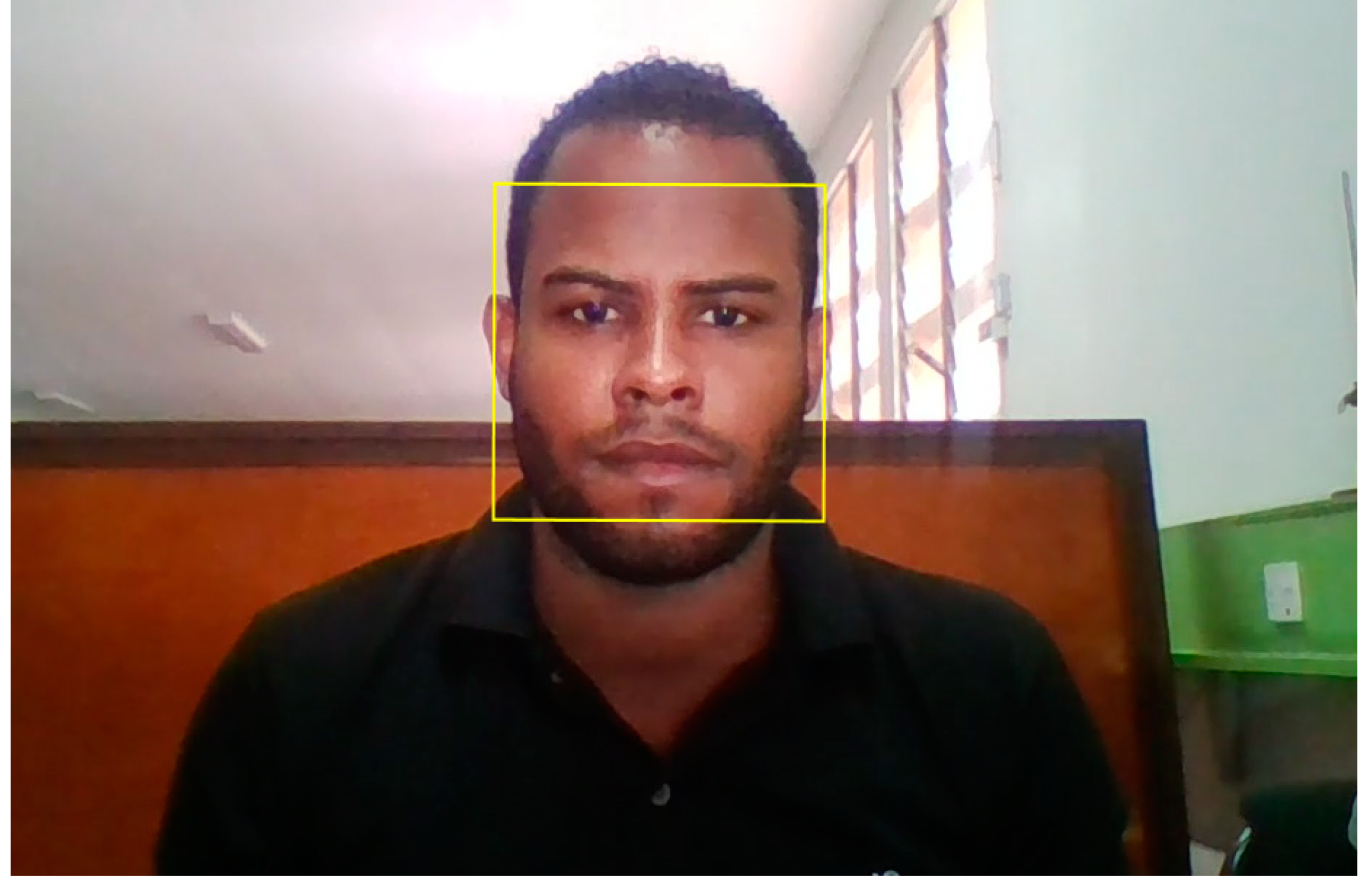

4.3. ROI Selection and Tracking

4.3.1. Face Detection

- Haar-like feature selection

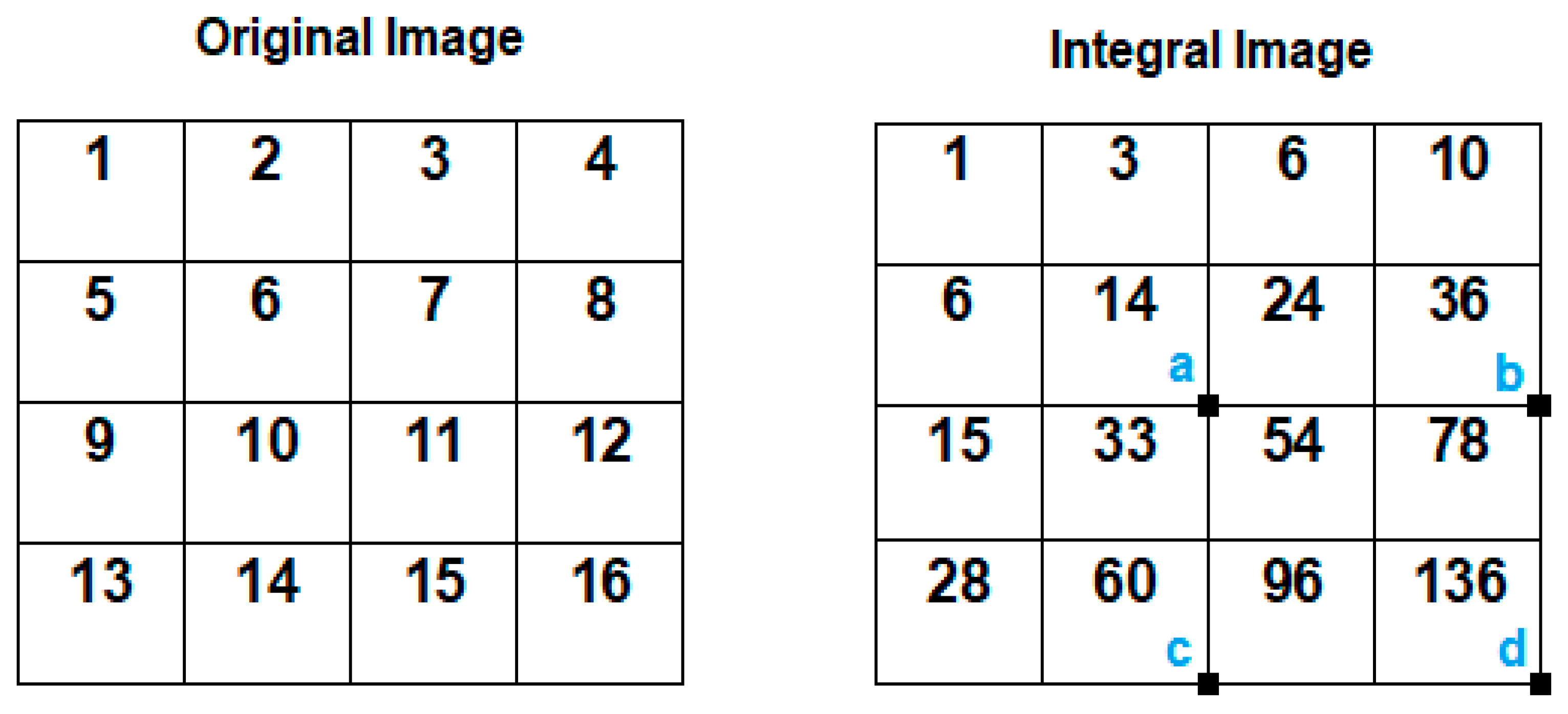

- Integral image creation

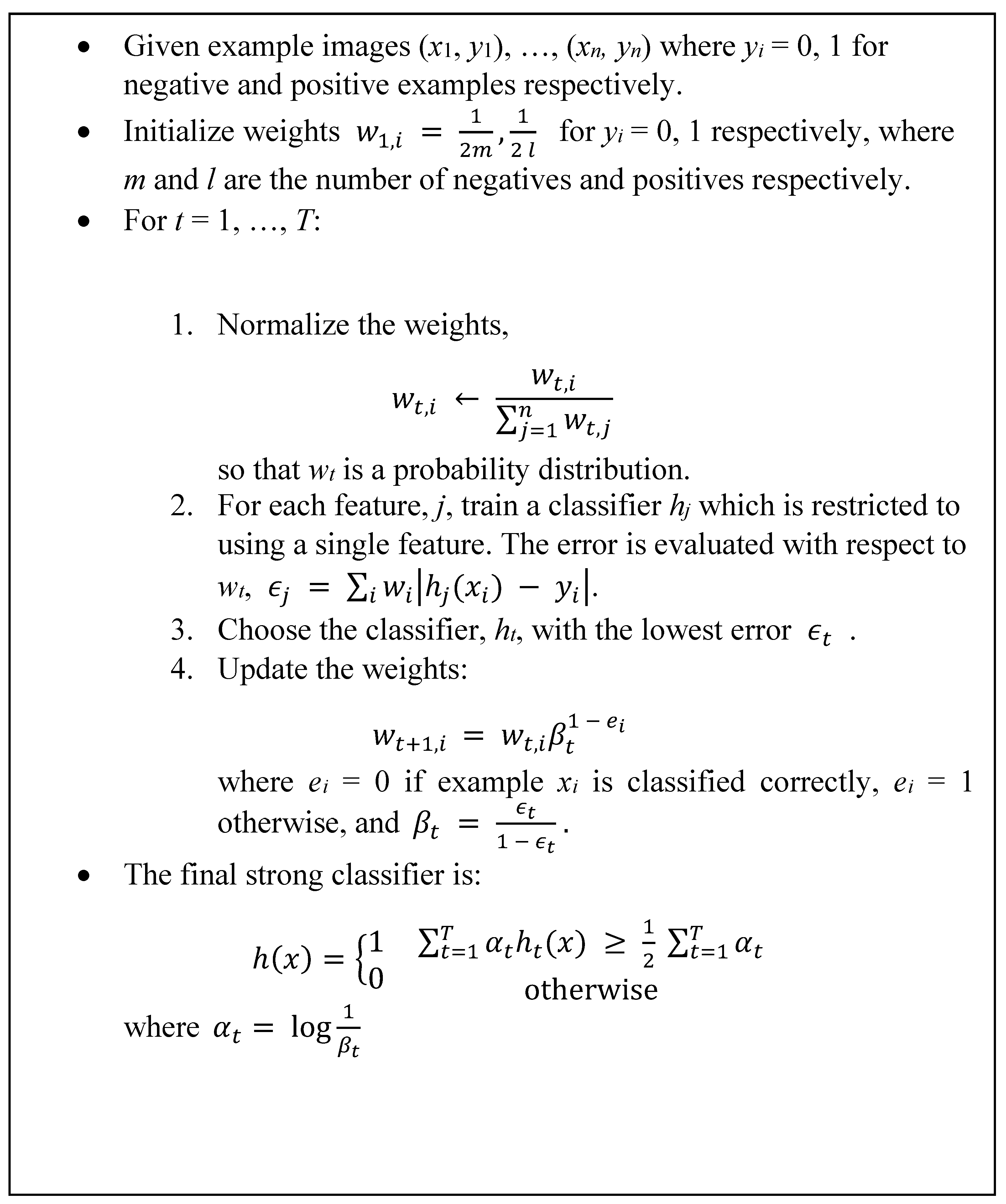

- AdaBoost training

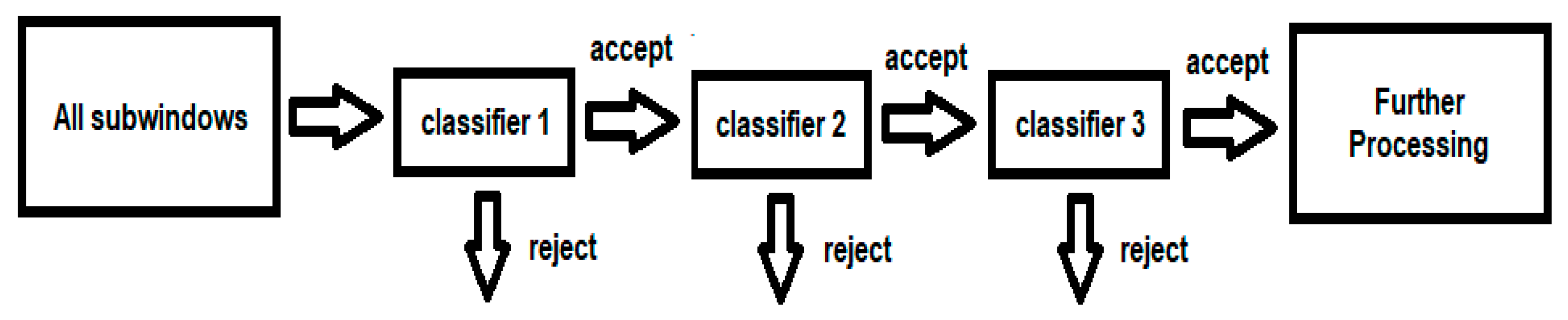

- Cascading classifiers

- Eye region darker than upper cheeks

- Nose bridge region brighter than eyes
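The integral-image and Haar-like feature steps listed above can be illustrated with a short NumPy sketch. It is not the detector used in this work (no AdaBoost training or cascade is shown); the function names `integral_image`, `rect_sum` and `two_rect_vertical` are assumptions made for illustration. The feature mirrors the "eye region darker than upper cheeks" cue: it compares the summed intensity of a lower band with that of the band directly above it.

```python
import numpy as np


def integral_image(gray: np.ndarray) -> np.ndarray:
    """Summed-area table with a zero row and column prepended.

    ii[r, c] holds the sum of gray[:r, :c], so any rectangle sum
    needs only four look-ups.
    """
    ii = np.zeros((gray.shape[0] + 1, gray.shape[1] + 1), dtype=np.float64)
    ii[1:, 1:] = gray.cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii: np.ndarray, top: int, left: int, height: int, width: int) -> float:
    """Sum of the pixels in a rectangle, read from the integral image."""
    bottom, right = top + height, left + width
    return float(ii[bottom, right] - ii[top, right] - ii[bottom, left] + ii[top, left])


def two_rect_vertical(ii: np.ndarray, top: int, left: int, height: int, width: int) -> float:
    """Two-rectangle Haar-like feature: lower-half sum minus upper-half sum.

    The value is large and positive when the upper band is darker than
    the lower band (for example, eyes above cheeks).
    """
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return lower - upper
```

Because every rectangle sum costs four array look-ups regardless of rectangle size, features of this kind can be evaluated at many positions and scales cheaply, which is the point of building the integral image first.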

4.3.2. ROI Selection

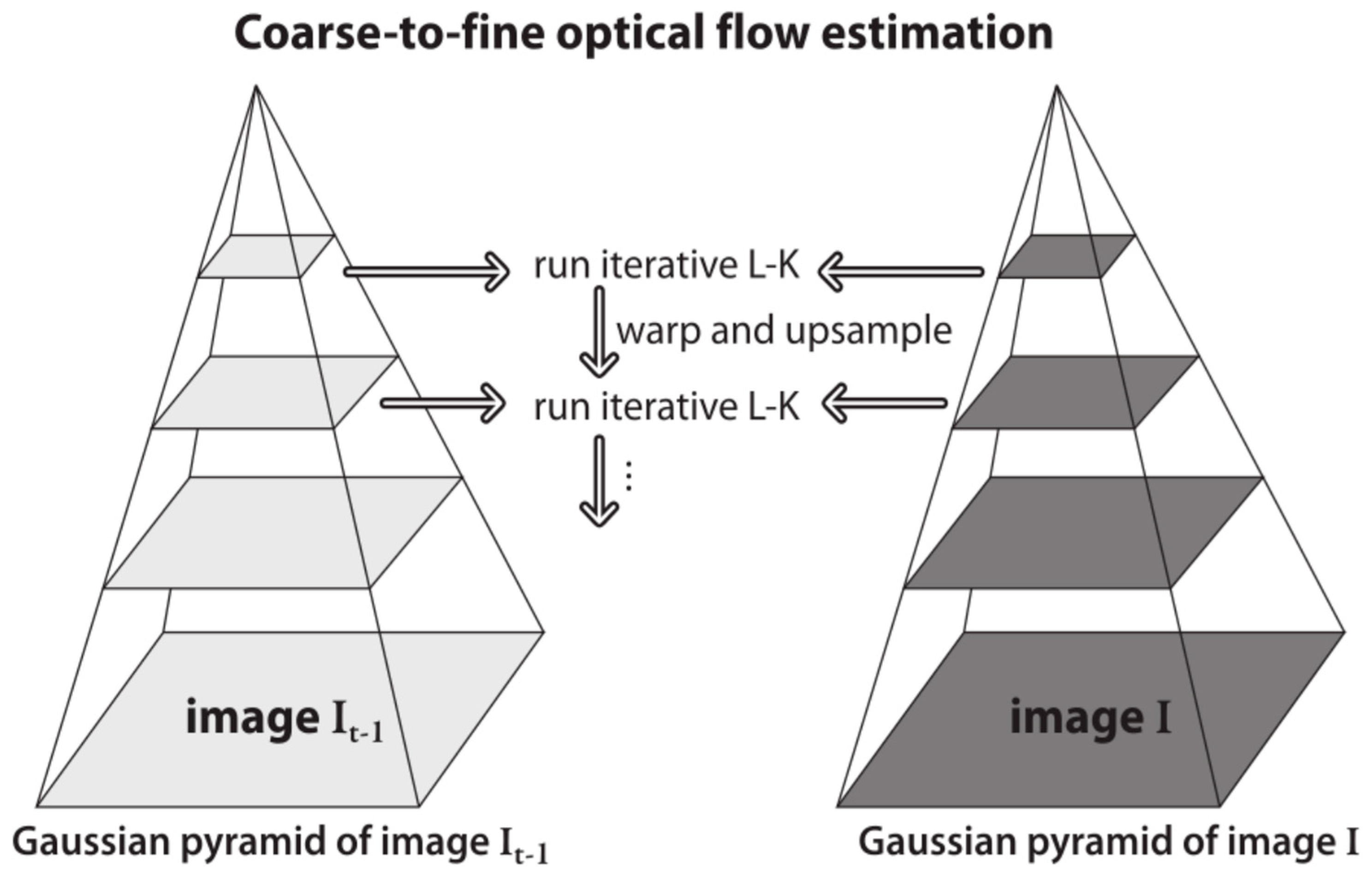

4.3.3. Motion Tracking

- brightness constancy – the brightness of each pixel is constant between two consecutive frames.

- temporal persistence – movements of image objects are small.

- spatial coherence – neighbouring pixels belonging to a certain surface move in a similar way.
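The three assumptions above lead to the standard Lucas-Kanade equations. The derivation below is the textbook form, given here as a sketch rather than as the exact formulation used in this work; the symbols $I$ (image intensity), $(u, v)$ (pixel velocity) and $W$ (a small window around the tracked point) are notation assumed for illustration.

```latex
% Brightness constancy: a point keeps its intensity as it moves.
I(x, y, t) = I(x + \delta x,\; y + \delta y,\; t + \delta t)

% Temporal persistence (small motion): a first-order Taylor expansion
% gives the optical flow constraint, with u = dx/dt and v = dy/dt.
I_x u + I_y v + I_t = 0

% Spatial coherence: all pixels in the window W share the same (u, v),
% so the constraint is solved in the least-squares sense.
\begin{bmatrix}
  \sum_{W} I_x^2   & \sum_{W} I_x I_y \\
  \sum_{W} I_x I_y & \sum_{W} I_y^2
\end{bmatrix}
\begin{bmatrix} u \\ v \end{bmatrix}
= -
\begin{bmatrix}
  \sum_{W} I_x I_t \\
  \sum_{W} I_y I_t
\end{bmatrix}
```

A single pixel gives one equation in two unknowns, which is why the spatial coherence assumption is needed: pooling the constraint over a window makes the system solvable wherever the 2 × 2 matrix is well conditioned, such as at corners and textured patches.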

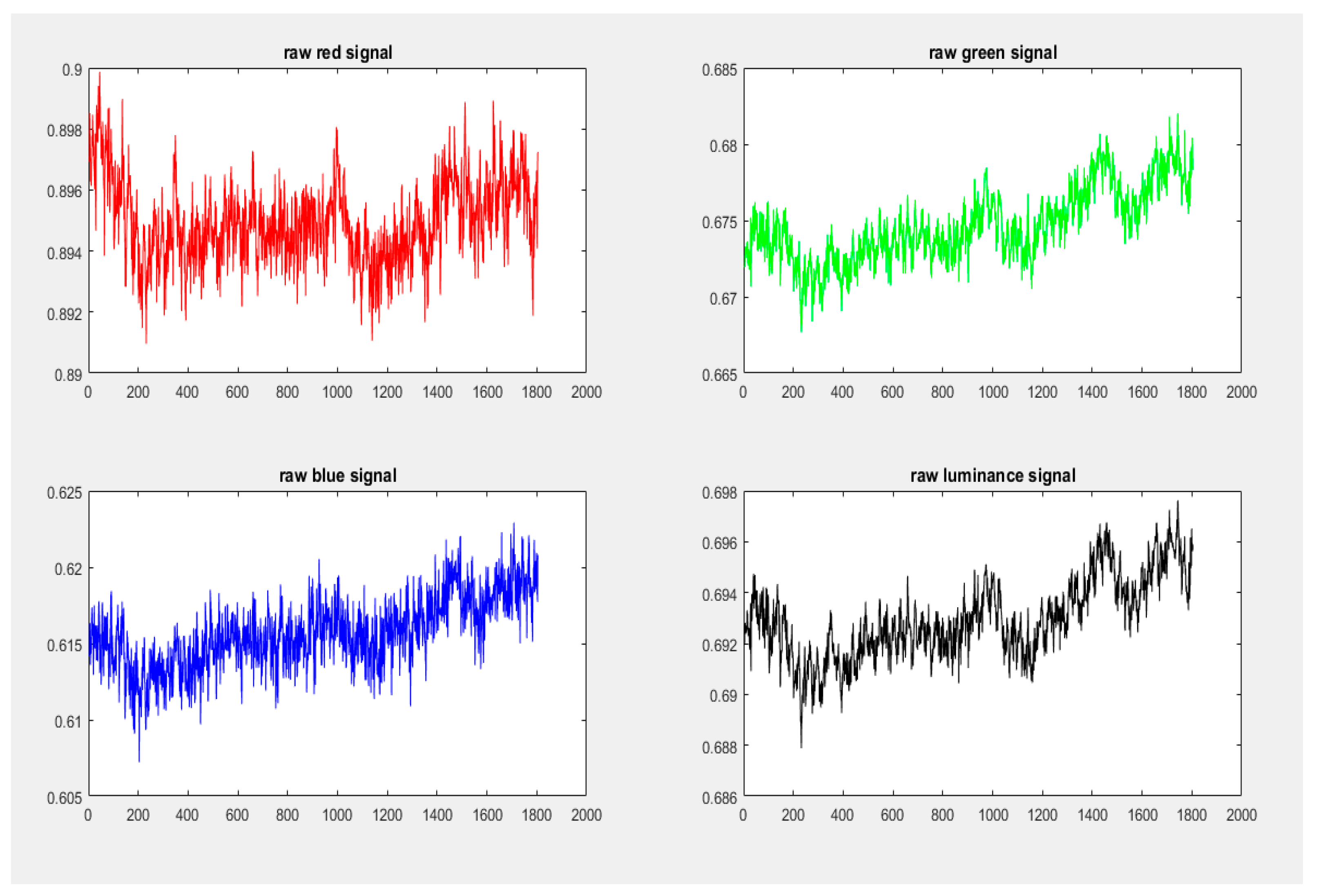

4.4. Signal Extraction

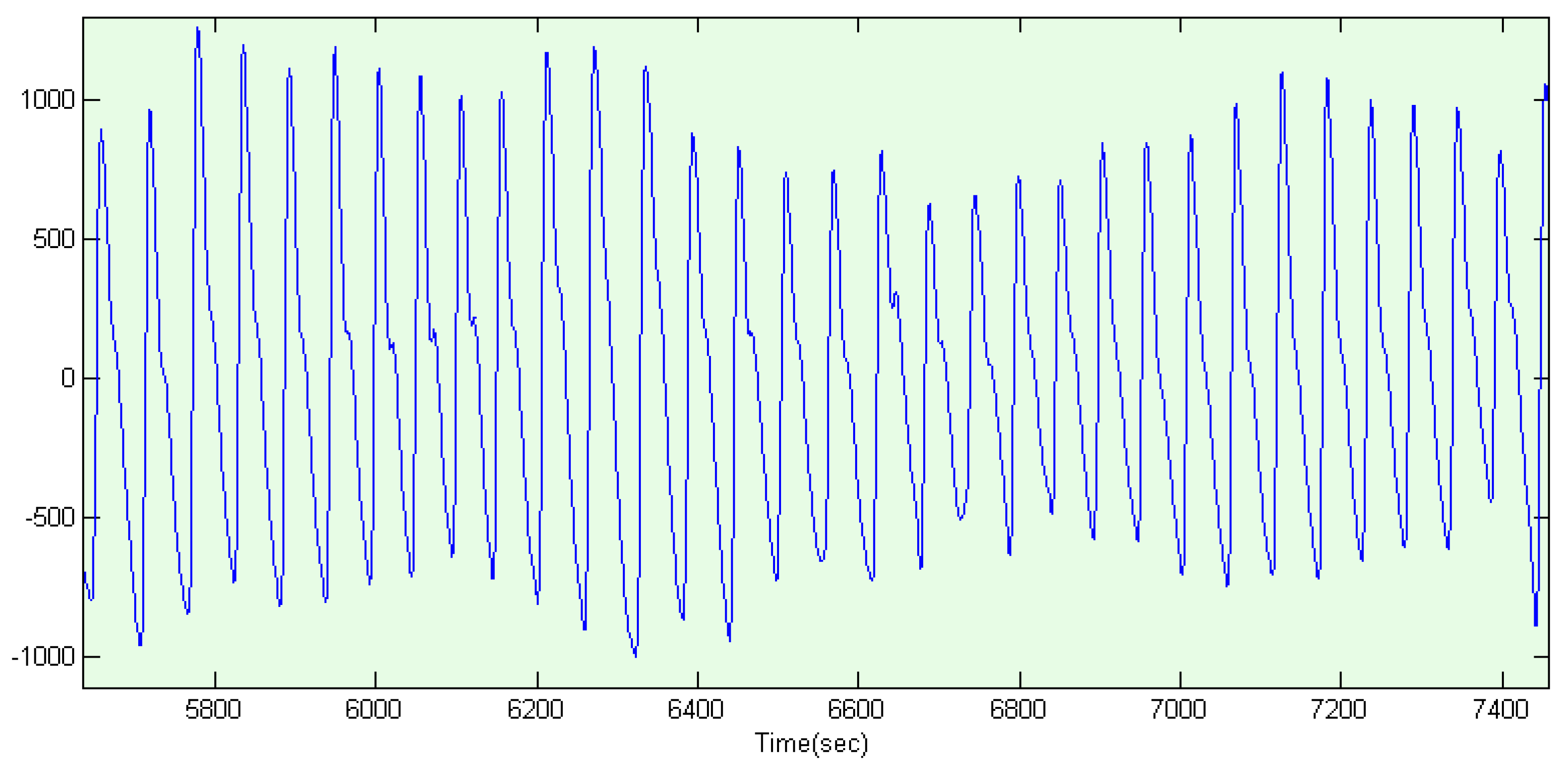

4.5. Signal Pre-Processing

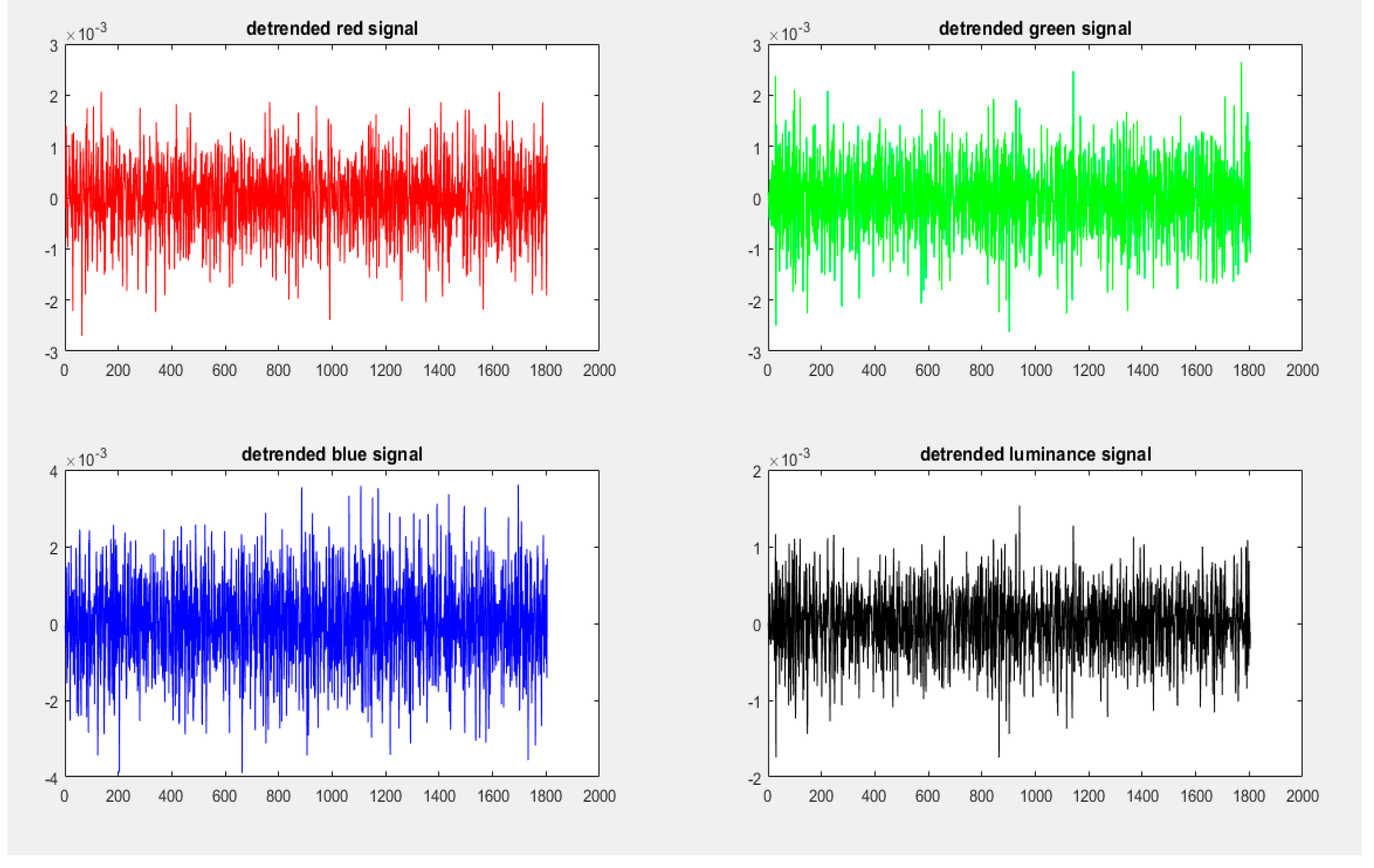

4.5.1. Signal Detrending

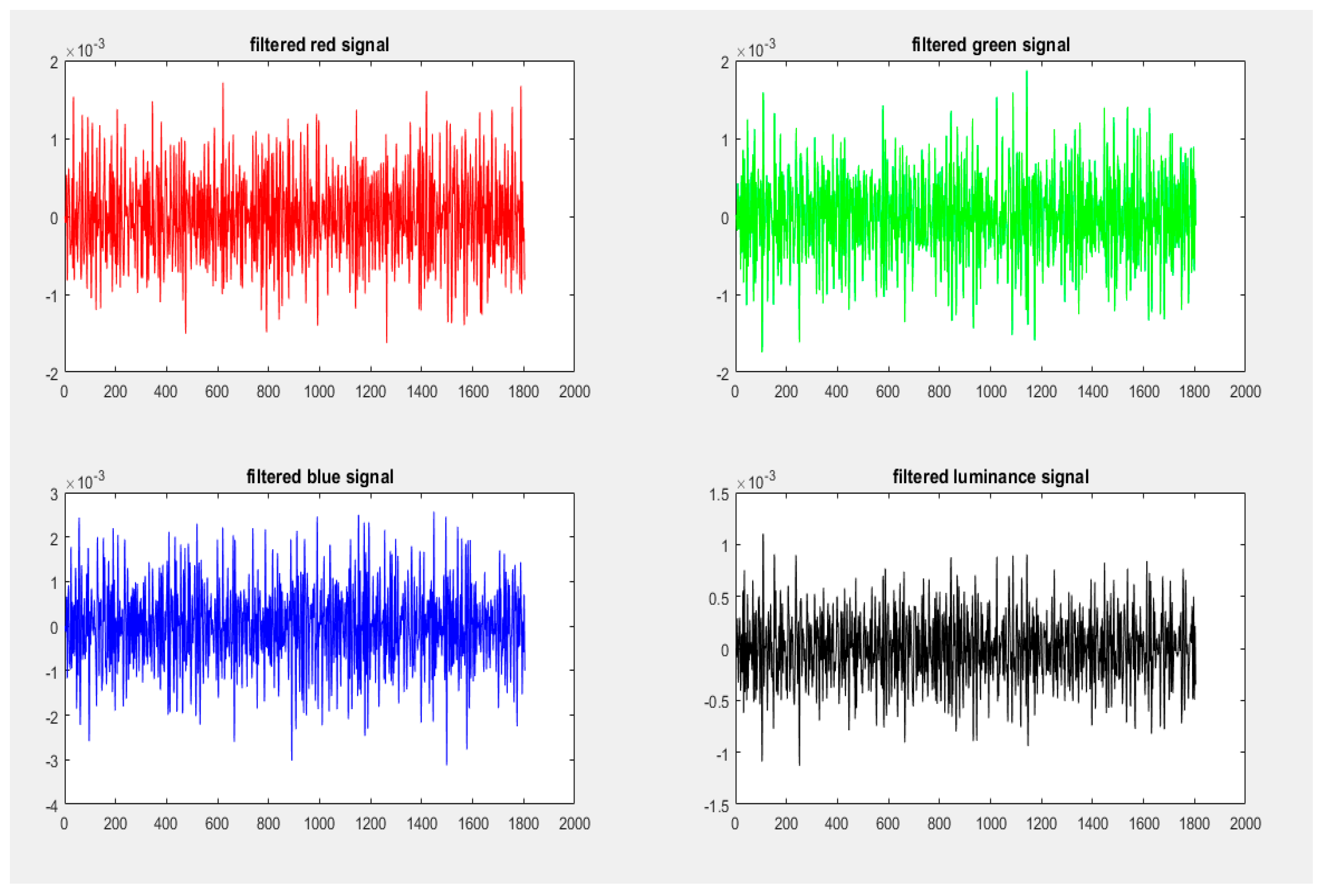

4.5.2. Signal Filtering

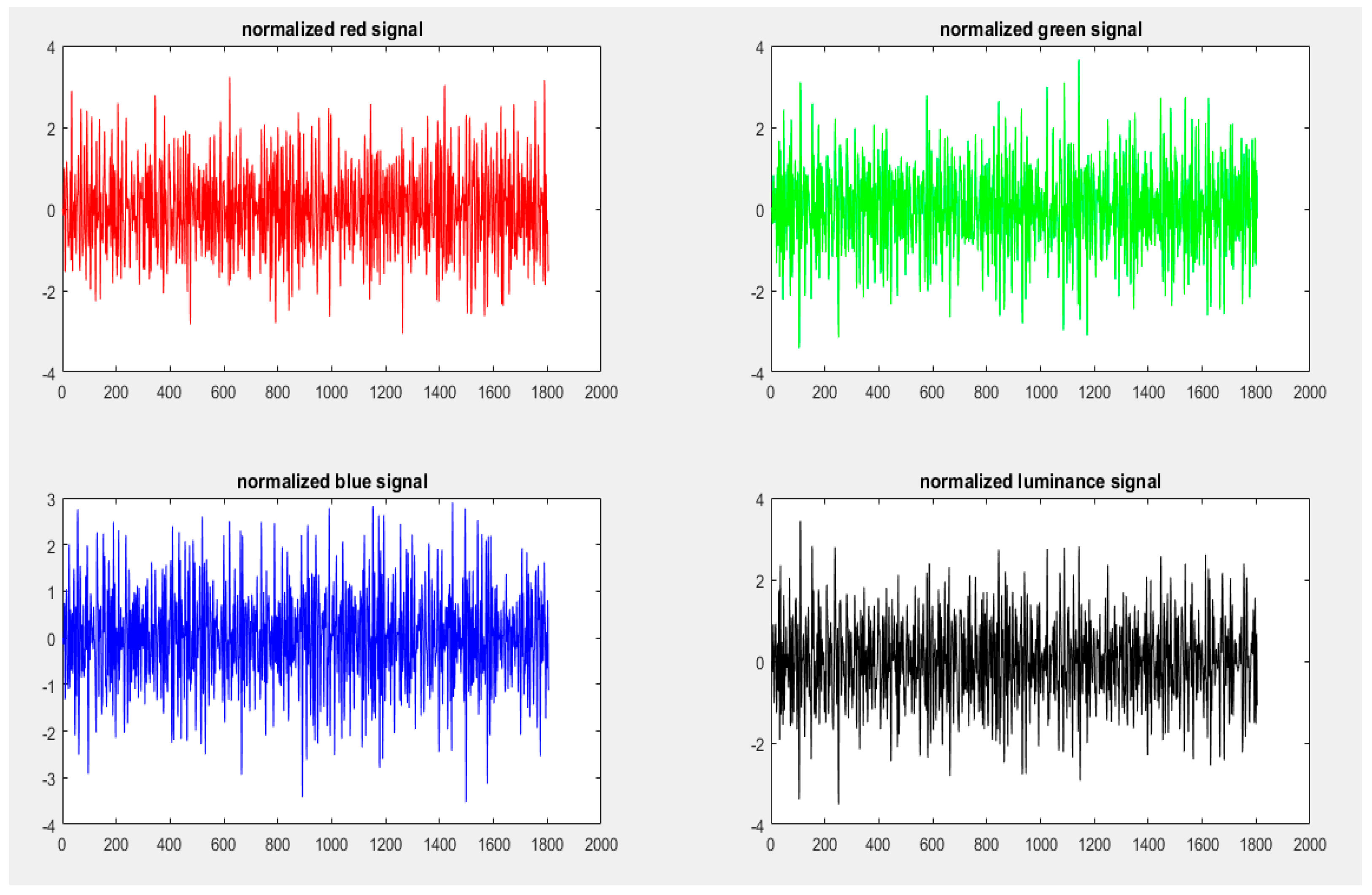

4.5.3. Signal Normalization

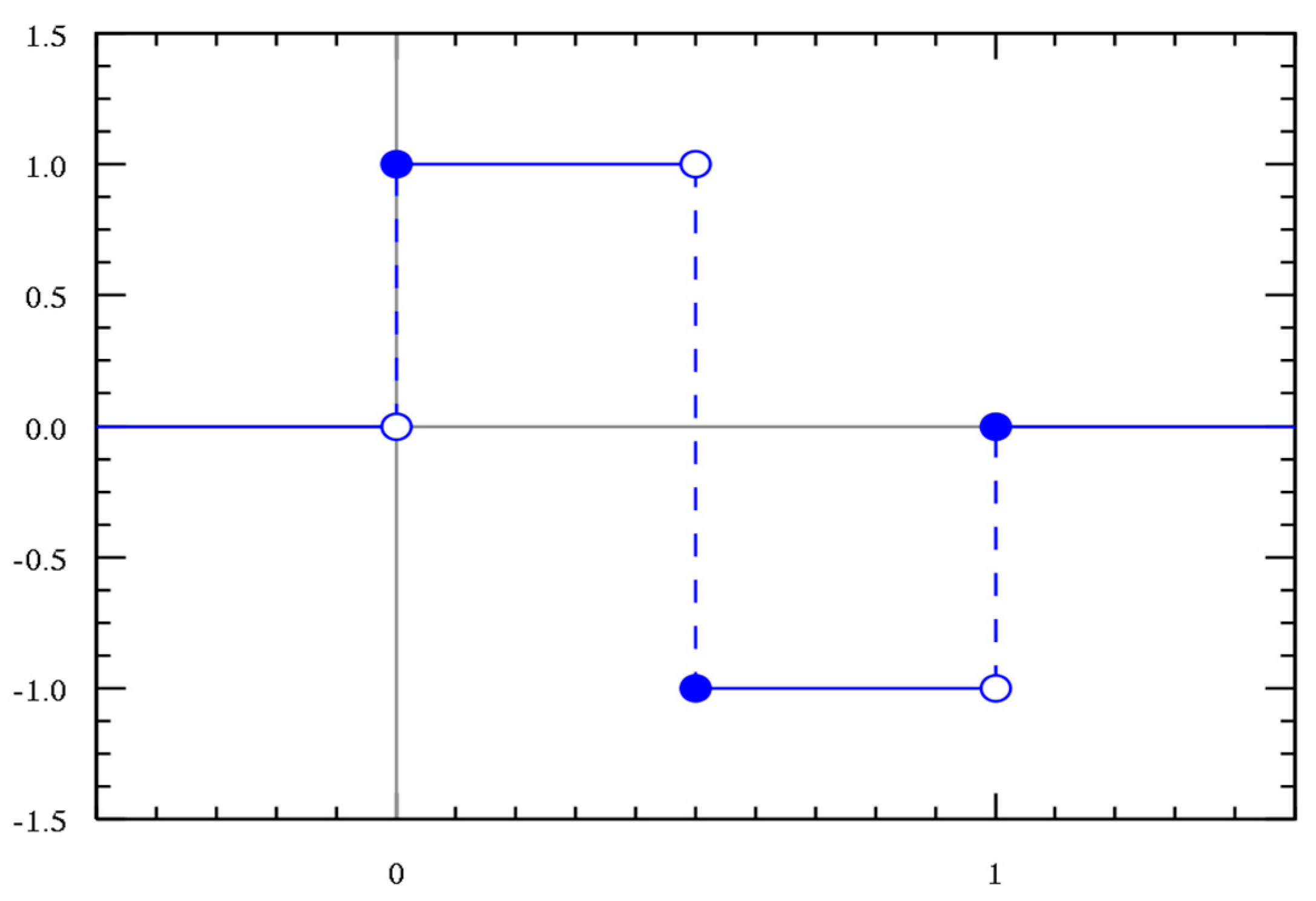

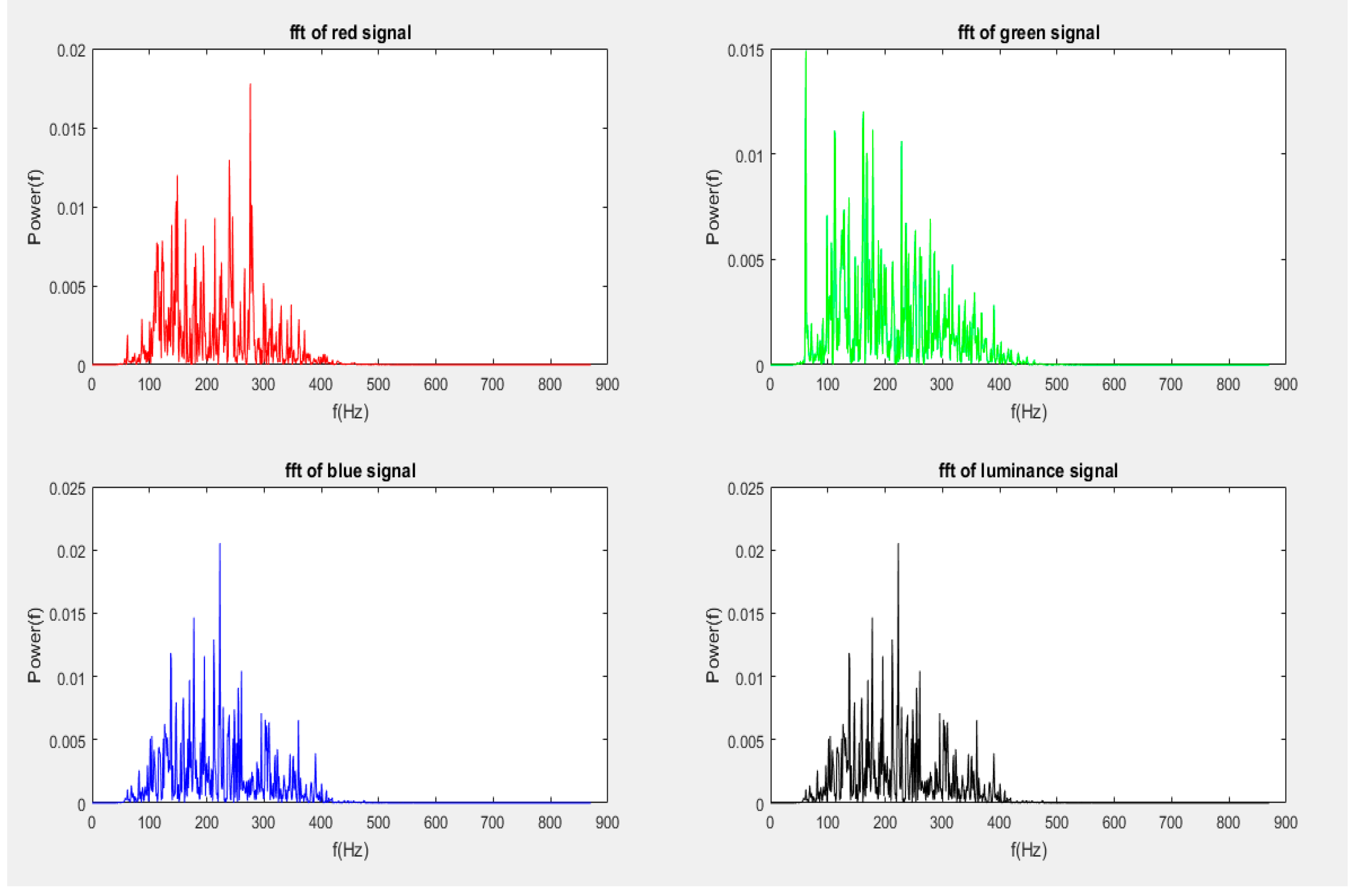

4.6. Spectral Analysis

- spurious peaks produced by the low-frequency quasi-DC component of the rPPG signal (it is the AC component which contains the signal of interest)

- spurious peaks created by residual noise left after the denoising of the pre-processing stage
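One common way to keep quasi-DC peaks out of the HR estimate is to search for the spectral maximum only inside a plausible heart-rate band. The sketch below illustrates that idea and is not the implementation used in this work: the function name `hr_from_fft` and the default band of 40 to 240 bpm are assumptions chosen for illustration.

```python
import numpy as np


def hr_from_fft(signal, fs, band_bpm=(40.0, 240.0)):
    """Return the HR estimate in bpm as the strongest spectral peak inside band_bpm.

    signal   : 1-D sequence of rPPG samples
    fs       : sampling rate in Hz (the video frame rate)
    band_bpm : (low, high) search band in beats per minute (assumed values)
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                                   # remove the constant offset
    spectrum = np.abs(np.fft.rfft(x)) ** 2             # power at each frequency bin
    freqs_bpm = np.fft.rfftfreq(x.size, d=1.0 / fs) * 60.0
    in_band = (freqs_bpm >= band_bpm[0]) & (freqs_bpm <= band_bpm[1])
    if not in_band.any():
        raise ValueError("no frequency bins fall inside the requested band")
    # Bins outside the band are masked so that they can never win the argmax.
    peak = int(np.argmax(np.where(in_band, spectrum, -np.inf)))
    return float(freqs_bpm[peak])
```

With a slow, strong drift added to a weaker 1.5 Hz pulse, an unrestricted search returns the drift frequency, whereas the band-limited search returns about 90 bpm. Peaks from residual noise that fall inside the band are not removed by this step.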

4.6.1. Fast Fourier Transform (FFT)

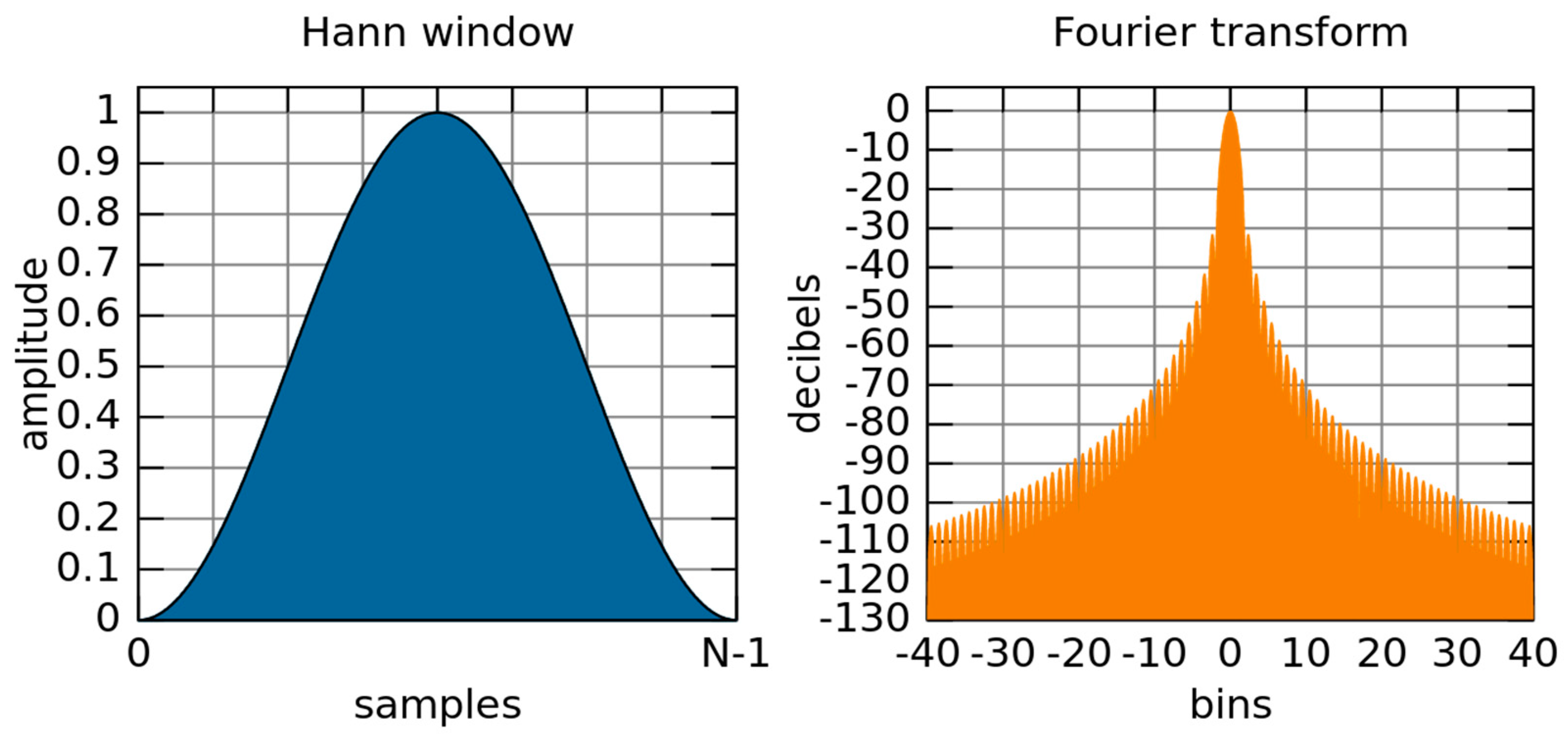

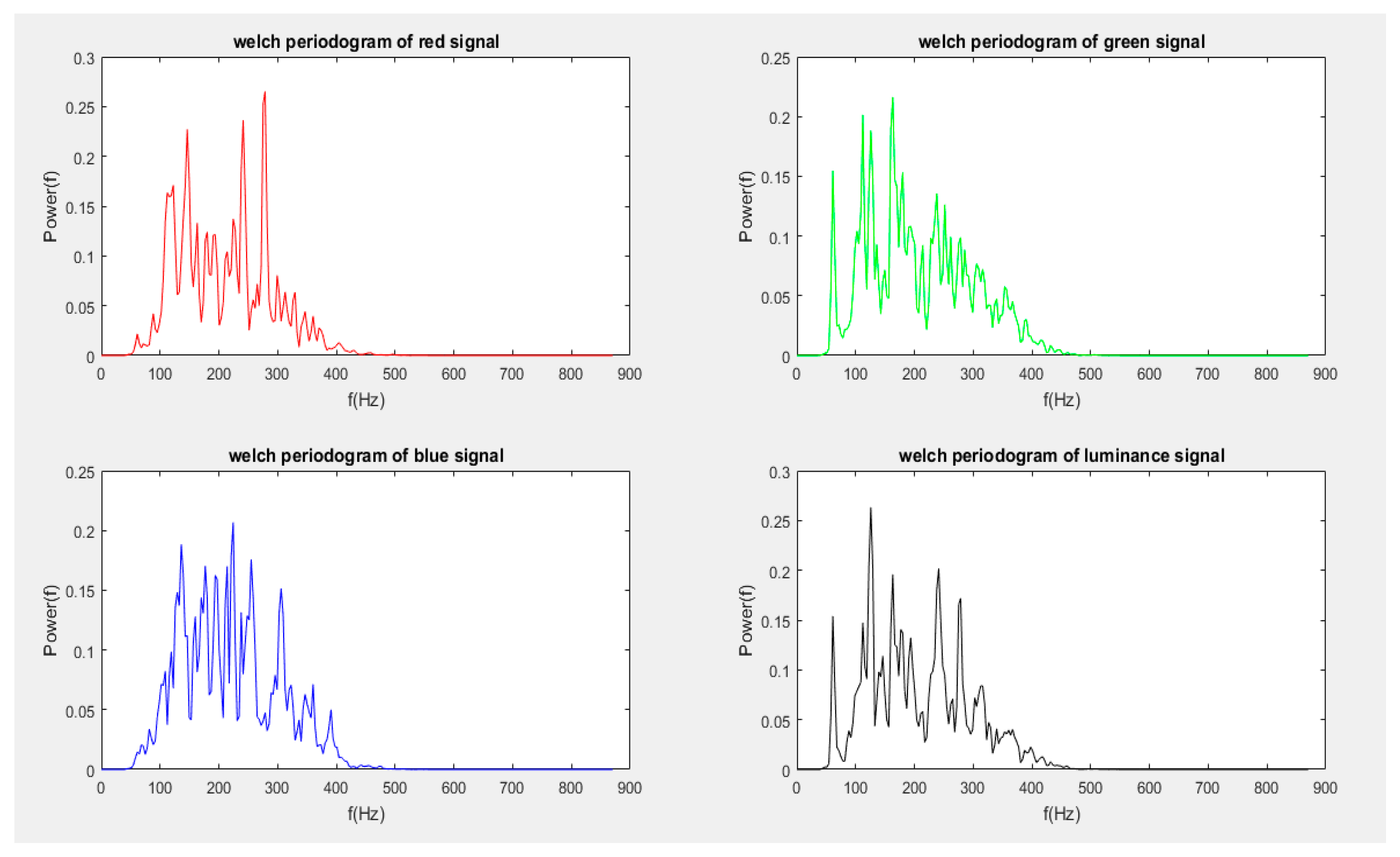

4.6.2. Welch Periodogram

- splitting of signal into overlapping segments

- windowing of overlapped segments in the time domain

- computing of periodograms for individual segments by FFT, followed by squaring of the magnitude

- averaging of individual periodograms (resulting in the reduction of the noise/variance in the power of the frequency components)
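The four steps above can be sketched directly with NumPy. This is a minimal illustration under stated assumptions, not the implementation used in this work: the function name `welch_periodogram`, the Hann window, the segment length of 256 samples and the 50% overlap are all choices made for the example.

```python
import numpy as np


def welch_periodogram(signal, fs, seg_len=256, overlap=0.5):
    """Averaged periodogram of overlapping, windowed segments.

    Returns (freqs_hz, psd). seg_len and overlap are assumed defaults.
    """
    x = np.asarray(signal, dtype=float)
    if x.size < seg_len:
        raise ValueError("signal is shorter than one segment")
    step = max(1, int(seg_len * (1.0 - overlap)))      # hop between segment starts
    window = np.hanning(seg_len)                       # time-domain window
    scale = fs * np.sum(window ** 2)                   # constant factor; does not move the peak

    periodograms = []
    for start in range(0, x.size - seg_len + 1, step): # 1. overlapping segments
        seg = x[start:start + seg_len]
        seg = (seg - seg.mean()) * window              # 2. windowing
        power = np.abs(np.fft.rfft(seg)) ** 2 / scale  # 3. FFT, then squared magnitude
        periodograms.append(power)

    psd = np.mean(periodograms, axis=0)                # 4. averaging reduces variance
    freqs = np.fft.rfftfreq(seg_len, d=1.0 / fs)
    return freqs, psd
```

The trade-off is visible in the parameters: shorter segments give more periodograms to average and therefore a smoother estimate, but the frequency resolution `fs / seg_len` becomes coarser, which limits how finely the HR peak can be located.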

4.6.3. Summary Autocorrelation Function (SACF)

4.7. Heart Rate Determination

4.8. Color Models and Spaces

4.8.1. RGB Color Model

4.8.2. YCbCr Color Model

5. Results

- results for self-captured videos

- results for COHFACE videos

5.1. Self-Captured Videos

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mean Error | 1.13 | 0.95 | 0.75 | 0.63 | 1.13 | 1.19 | 0.62 | 0.50 |
| RMSE | 124.40 | 105.00 | 93.67 | 76.16 | 122.60 | 126.70 | 83.33 | 71.08 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mean Error | 0.02 | 0.02 | 0.02 | 0.02 | 0.02 | 0.02 | 0.02 | 0.02 |
| RMSE | 2.54 | 2.49 | 2.54 | 2.49 | 2.59 | 2.49 | 2.49 | 2.42 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mean Error | 0.57 | 0.48 | 0.39 | 0.39 | 0.55 | 0.61 | 0.25 | 0.22 |
| RMSE | 78.55 | 69.55 | 61.24 | 60.59 | 77.46 | 84.30 | 49.28 | 45.45 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mean Error | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 |
| RMSE | 3.41 | 3.70 | 3.43 | 3.57 | 3.34 | 3.37 | 3.42 | 3.76 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mean SNR | 0.08 | 0.09 | 0.09 | 0.11 | 0.08 | 0.09 | 0.14 | 0.16 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mean SNR | 0.12 | 0.12 | 0.16 | 0.16 | 0.12 | 0.13 | 0.22 | 0.23 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| r | -0.15 | -0.03 | -0.09 | -0.06 | -0.28 | -0.35 | -0.22 | -0.13 |
| p | 0.29 | 0.84 | 0.56 | 0.68 | 0.05 | 0.01 | 0.13 | 0.35 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| r | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 |
| p | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| r | 0.03 | 0.09 | 0.08 | 0.20 | 0.18 | 0.03 | 0.36 | 0.47 |
| p | 0.82 | 0.56 | 0.59 | 0.16 | 0.22 | 0.84 | 0.01 | 0.00 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| r | 0.99 | 0.98 | 0.99 | 0.98 | 0.99 | 0.99 | 0.99 | 0.98 |
| p | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| r | -0.50 | -0.55 | -0.47 | -0.56 | -0.54 | -0.60 | -0.63 | -0.69 |
| p | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| r | -0.45 | -0.43 | -0.44 | -0.46 | -0.48 | -0.43 | -0.45 | -0.52 |
| p | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

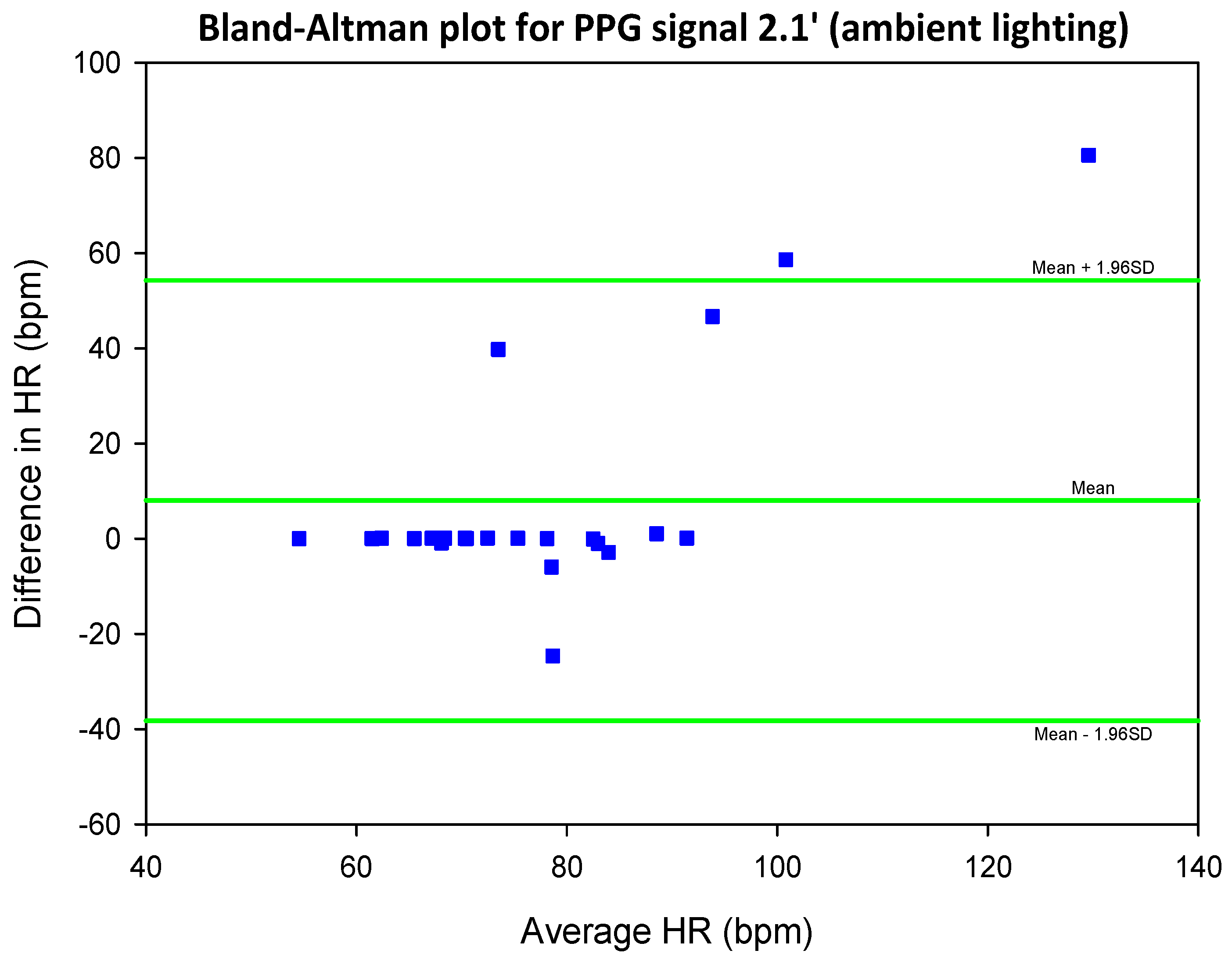

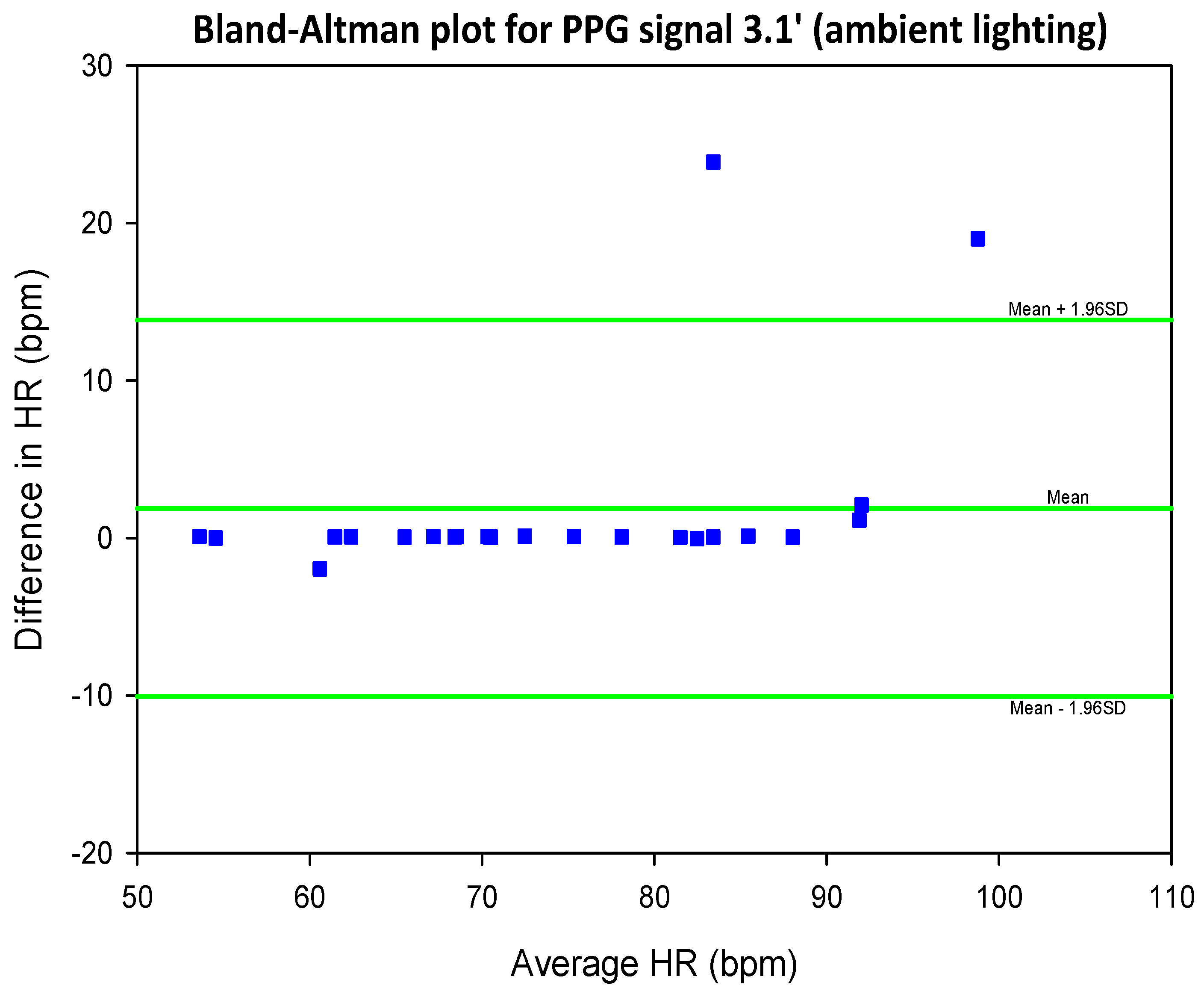

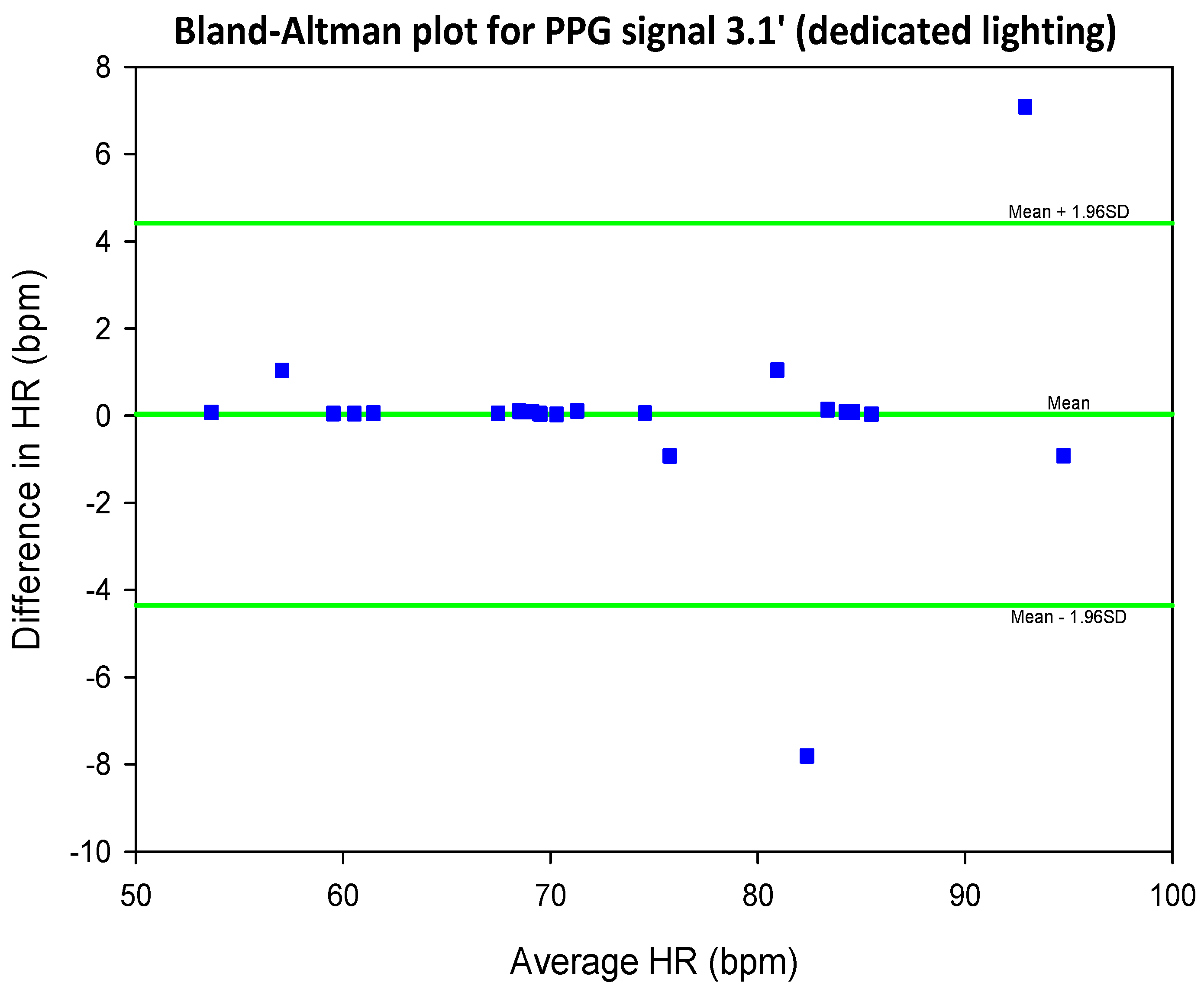

5.2. COHFACE Database Videos

| | SIG1′ FFT | SIG1′ Welch | SIG2′ FFT | SIG2′ Welch | SIG3′ FFT | SIG3′ Welch | SIG4′ FFT | SIG4′ Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mean Error | 0.08 | 0.15 | 0.15 | 0.12 | 0.03 | 0.09 | 0.08 | 0.10 |
| RMSE | 14.88 | 24.37 | 24.46 | 18.14 | 6.27 | 14.85 | 16.27 | 16.32 |

| | SIG1′ FFT | SIG1′ Welch | SIG2′ FFT | SIG2′ Welch | SIG3′ FFT | SIG3′ Welch | SIG4′ FFT | SIG4′ Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mean Error | 0.01 | 0.02 | 0.02 | 0.09 | 0.01 | 0.02 | 0.00 | 0.01 |
| RMSE | 1.71 | 3.12 | 5.41 | 13.66 | 2.19 | 1.73 | 0.32 | 1.05 |

| | SIG1′ FFT | SIG1′ Welch | SIG2′ FFT | SIG2′ Welch | SIG3′ FFT | SIG3′ Welch | SIG4′ FFT | SIG4′ Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mean SNR | 0.27 | 0.28 | 0.26 | 0.27 | 0.35 | 0.35 | 0.36 | 0.36 |

| | SIG1′ FFT | SIG1′ Welch | SIG2′ FFT | SIG2′ Welch | SIG3′ FFT | SIG3′ Welch | SIG4′ FFT | SIG4′ Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mean SNR | 0.55 | 0.55 | 0.43 | 0.43 | 0.68 | 0.68 | 0.63 | 0.62 |

| | SIG1′ FFT | SIG1′ Welch | SIG2′ FFT | SIG2′ Welch | SIG3′ FFT | SIG3′ Welch | SIG4′ FFT | SIG4′ Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| r | 0.39 | 0.69 | 0.39 | 0.26 | 0.90 | 0.53 | 0.39 | 0.33 |
| p | 0.06 | 0.00 | 0.06 | 0.22 | 0.00 | 0.01 | 0.06 | 0.12 |

| | SIG1′ FFT | SIG1′ Welch | SIG2′ FFT | SIG2′ Welch | SIG3′ FFT | SIG3′ Welch | SIG4′ FFT | SIG4′ Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| r | 0.99 | 0.96 | 0.93 | 0.55 | 0.98 | 0.99 | 1.00 | 1.00 |
| p | 0.00 | 0.00 | 0.00 | 0.01 | 0.00 | 0.00 | 0.00 | 0.00 |

| | SIG1′ FFT | SIG1′ Welch | SIG2′ FFT | SIG2′ Welch | SIG3′ FFT | SIG3′ Welch | SIG4′ FFT | SIG4′ Welch |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| r | 0.64 | 0.68 | 0.56 | 0.39 | 0.93 | 0.70 | 0.62 | 0.59 |
| p | 0.00 | 0.00 | 0.00 | 0.01 | 0.00 | 0.00 | 0.00 | 0.00 |

6. Discussion

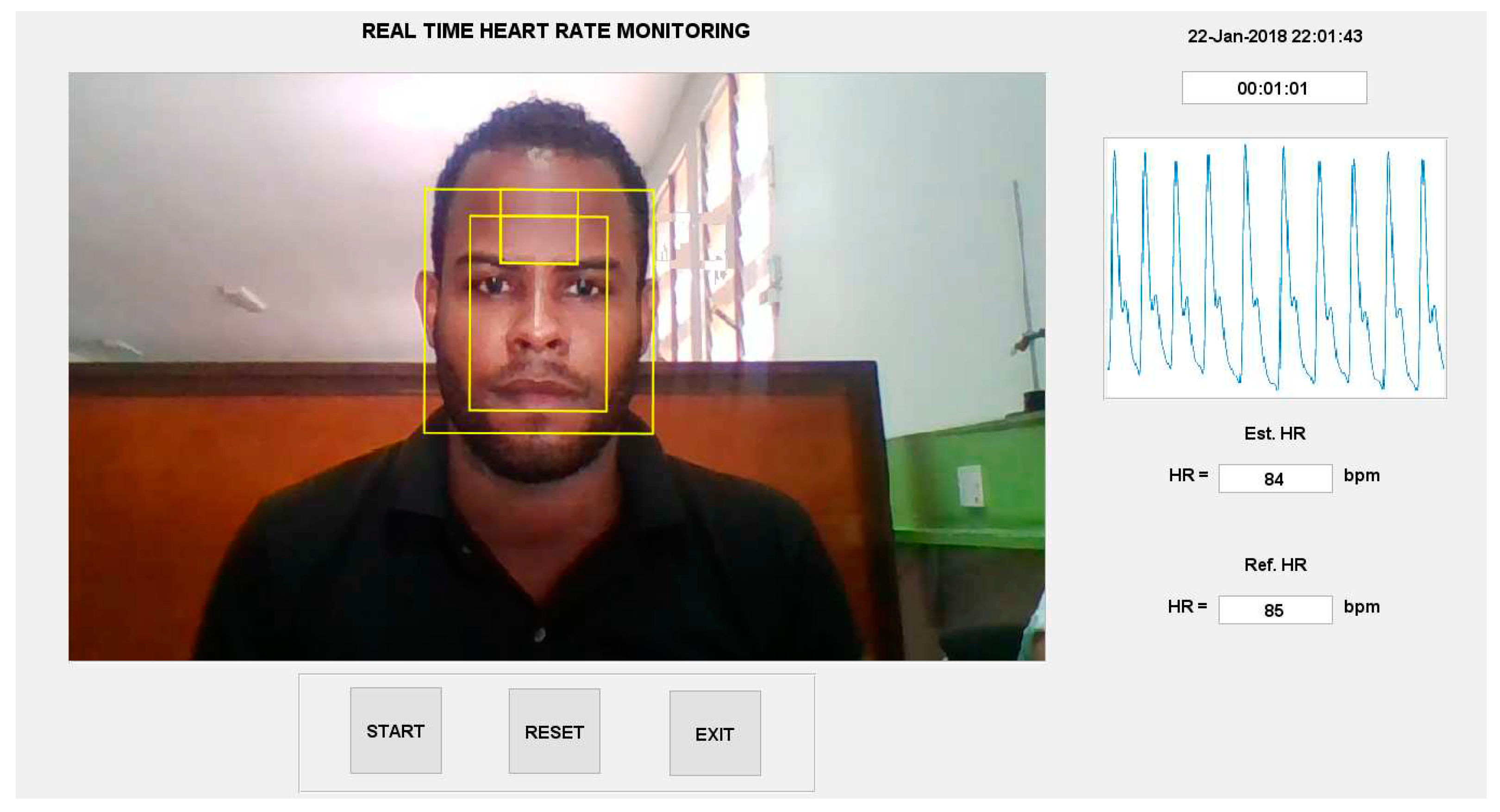

6.1. Implement an Algorithmic Framework for HR Estimation by Video-Based Reflectance Photoplethysmography

6.2. Investigate the Effect of Presence/Absence of Dedicated Lighting on the SNR of rPPG Signals

6.3. Investigate the Effect of Choice/Size of Region of Interest (ROI) on the SNR of rPPG Signals

6.4. Compare the SNR of Green rPPG Signals with That of rPPG Signals Based on Luminance

6.5. Investigate the Viability of the Summary Autocorrelation Function (SACF) for Improving the SNR of rPPG Signals

6.6. Implement the HR Estimation Algorithm into a GUI

- live video capture and processing,

- a timer, and

- a continuous display of the ground-truth pulse oximeter signal

6.7. Prepare a Laboratory Practical Centred on HR Estimation by rPPG

- To provide students with an introduction to the MATLAB IDE

- To give students experience in extracting a clinically relevant biosignal (rPPG) using a video-based method

- To provide students with an introduction to signal processing and signal processing algorithms such as the FFT

- To provide students with the opportunity to perform simple statistical analyses on data

7. Conclusions and Further Work

7.1. Conclusions

7.2. Further Work

Acknowledgements

Dedication

List of Acronyms

| Acronym | Expansion |
| --- | --- |
| AC | Alternating Current |
| AVI | Audio Video Interleaved |
| bpm | beats per minute |
| BR | Breathing Rate |
| BVP | Blood Volume Pulse |
| CCD | Charge-Coupled Device |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| COHFACE | COntactless Heartbeat detection for trustworthy FACE Biometrics |
| COG | Cyan, Orange and Green |
| DC | Direct Current |
| DFT | Discrete Fourier Transform |
| ECG | Electrocardiograph, Electrocardiography, Electrocardiogram |
| FFT | Fast Fourier Transform |
| fps | frames per second |
| FWHM | Full Width at Half Maximum |
| GUI | Graphical User Interface |
| HDF5 | Hierarchical Data Format 5 |
| HeNe | Helium-Neon |
| HR | Heart Rate |
| HRV | Heart Rate Variability |
| ICA | Independent Component Analysis |
| ICU | Intensive Care Unit |
| IDE | Integrated Development Environment |
| JADE | Joint Approximate Diagonalization of Eigen-matrices |
| KLT | Kanade-Lucas-Tomasi |
| LED | Light-Emitting Diode |
| MATLAB | Matrix Laboratory |
| MP4 | MPEG-4 Part 14 |
| mW | milliwatt |
| PCA | Principal Component Analysis |
| PPG | Photoplethysmography |
| RGB | Red, Green and Blue |
| RMSE | Root-Mean-Square Error |
| rPPG | Reflectance Photoplethysmography |
| SACF | Summary Autocorrelation Function |
| SNR | Signal-to-Noise Ratio |
| SPA | Smoothness Priors Approach |
| SaO2 | arterial Oxygen Saturation |
| YCbCr | Luminance, blue-difference Chroma, and red-difference Chroma |

Appendix A. Raw Results

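Heart rate estimate (bpm) by signal and spectral method, with the reference HR in the last column:

| Video | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch | Ref. HR |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |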

| 1 | 83.60 | 84.96 | 83.60 | 207.30 | 83.60 | 234.49 | 83.60 | 84.96 | 85 |

| 2 | 209.50 | 207.30 | 101.84 | 101.95 | 101.84 | 207.30 | 87.29 | 88.36 | 88 |

| 3 | 74.68 | 173.32 | 174.68 | 81.56 | 174.68 | 173.32 | 174.68 | 84.96 | 86 |

| 4 | 83.36 | 108.75 | 106.63 | 108.75 | 170.61 | 108.75 | 83.36 | 84.96 | 85 |

| 5 | 19.42 | 220.90 | 224.21 | 241.29 | 247.20 | 248.09 | 115.94 | 84.96 | 86 |

| 6 | 45.66 | 149.53 | 103.90 | 129.14 | 239.83 | 241.29 | 149.53 | 149.53 | 83 |

| 7 | 89.40 | 91.41 | 135.60 | 112.50 | 90.40 | 91.41 | 89.40 | 98.44 | 86 |

| 8 | 13.40 | 169.92 | 170.91 | 169.92 | 130.36 | 129.14 | 183.46 | 183.52 | 97 |

| 9 | 14.89 | 116.02 | 115.90 | 116.02 | 184.43 | 116.02 | 180.40 | 179.30 | 97 |

| 10 | 17.14 | 217.50 | 241.37 | 241.29 | 217.14 | 217.50 | 237.49 | 237.89 | 94 |

| 11 | 121.92 | 122.34 | 176.54 | 122.34 | 199.94 | 200.51 | 92.66 | 95.16 | 95 |

| 12 | 114.38 | 115.55 | 114.38 | 115.55 | 114.38 | 115.55 | 100.81 | 101.95 | 100 |

| 13 | 148.87 | 142.73 | 94.73 | 142.73 | 147.90 | 142.73 | 98.60 | 98.55 | 100 |

| 14 | 118.00 | 251.48 | 118.00 | 115.55 | 132.51 | 251.48 | 193.44 | 193.71 | 97 |

| 15 | 99.04 | 173.32 | 99.04 | 98.55 | 173.81 | 173.32 | 99.04 | 98.55 | 98 |

| 16 | 194.41 | 163.13 | 194.41 | 163.13 | 213.85 | 214.10 | 103.04 | 101.95 | 106 |

| 17 | 202.19 | 241.29 | 189.55 | 142.73 | 202.19 | 305.86 | 105.96 | 105.35 | 107 |

| 18 | 173.61 | 173.32 | 173.61 | 173.32 | 113.48 | 112.15 | 101.84 | 101.95 | 104 |

| 19 | 248.71 | 251.48 | 150.00 | 149.53 | 248.71 | 125.74 | 210.97 | 207.30 | 107 |

| 20 | 100.50 | 101.95 | 100.50 | 101.95 | 100.50 | 101.95 | 149.75 | 105.47 | 104 |

| 21 | 152.68 | 152.93 | 140.20 | 180.12 | 152.68 | 152.93 | 178.61 | 98.55 | 99 |

| 22 | 181.94 | 183.52 | 249.68 | 129.14 | 182.90 | 183.52 | 96.77 | 95.16 | 100 |

| 23 | 204.88 | 146.13 | 148.56 | 146.13 | 204.88 | 203.91 | 102.92 | 98.55 | 100 |

| 24 | 315.66 | 129.14 | 128.96 | 227.70 | 315.66 | 200.51 | 100.09 | 98.55 | 101 |

| 25 | 181.57 | 180.12 | 156.33 | 156.33 | 142.73 | 142.73 | 98.07 | 135.94 | 99 |

| 26 | 105.00 | 105.47 | 105.00 | 112.50 | 105.00 | 105.47 | 150.00 | 151.17 | 130 |

| 27 | 174.87 | 176.72 | 174.87 | 81.56 | 175.85 | 176.72 | 107.84 | 159.73 | 111 |

| 28 | 137.50 | 139.34 | 149.12 | 139.34 | 137.50 | 139.34 | 137.50 | 139.34 | 143 |

| 29 | 238.02 | 237.89 | 238.02 | 237.89 | 238.99 | 237.89 | 123.38 | 122.34 | 127 |

| 30 | 309.81 | 309.26 | 117.88 | 115.55 | 173.42 | 173.32 | 117.88 | 118.95 | 121 |

| 31 | 241.24 | 256.64 | 185.57 | 179.30 | 167.99 | 249.61 | 83.02 | 84.38 | 87 |

| 32 | 103.64 | 94.92 | 103.64 | 172.27 | 103.64 | 80.86 | 91.91 | 91.41 | 83 |

| 33 | 284.00 | 284.77 | 80.30 | 80.86 | 284.98 | 284.77 | 80.30 | 80.86 | 82 |

| 34 | 322.90 | 214.45 | 88.33 | 87.89 | 159.00 | 214.45 | 88.33 | 87.89 | 87 |

| 35 | 79.72 | 94.92 | 79.72 | 80.86 | 126.95 | 126.56 | 126.95 | 161.72 | 81 |

| 36 | 334.13 | 305.86 | 117.58 | 84.38 | 220.47 | 305.86 | 83.29 | 84.38 | 87 |

| 37 | 238.50 | 130.08 | 89.31 | 91.41 | 238.50 | 288.28 | 172.74 | 94.92 | 85 |

| 38 | 240.72 | 168.75 | 312.45 | 168.75 | 240.72 | 232.03 | 240.72 | 239.06 | 83 |

| 39 | 107.67 | 84.96 | 115.36 | 129.14 | 107.67 | 108.75 | 94.21 | 105.35 | 87 |

| 40 | 189.63 | 105.47 | 130.68 | 130.08 | 225.00 | 225.00 | 84.50 | 91.41 | 87 |

| 41 | 241.18 | 172.27 | 169.61 | 158.20 | 241.18 | 239.06 | 235.29 | 235.55 | 84 |

| 42 | 232.48 | 232.03 | 204.03 | 203.91 | 290.35 | 288.28 | 177.55 | 175.78 | 85 |

| 43 | 212.73 | 214.10 | 191.74 | 214.10 | 211.78 | 214.10 | 165.03 | 197.11 | 83 |

| 44 | 272.46 | 274.22 | 283.28 | 284.77 | 354.10 | 281.25 | 240.00 | 239.06 | 81 |

| 45 | 223.30 | 227.70 | 198.69 | 197.11 | 283.85 | 282.07 | 292.37 | 292.27 | 83 |

| 46 | 290.51 | 239.06 | 80.48 | 123.05 | 240.46 | 239.06 | 299.35 | 161.72 | 84 |

| 47 | 314.07 | 158.20 | 159.00 | 158.20 | 326.83 | 326.95 | 180.59 | 179.30 | 83 |

| 48 | 218.27 | 217.97 | 283.85 | 217.97 | 218.27 | 217.97 | 240.78 | 210.94 | 80 |

| 49 | 173.75 | 203.91 | 300.65 | 221.48 | 300.65 | 281.25 | 240.13 | 179.30 | 87 |

| 50 | 233.26 | 234.49 | 322.40 | 81.56 | 233.26 | 234.49 | 233.26 | 146.13 | 83 |

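Heart rate estimate (bpm) by signal and spectral method, with the reference HR in the last column:

| Video | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch | Ref. HR |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |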

| 1 | 83.60 | 84.96 | 83.60 | 84.96 | 83.60 | 84.96 | 83.60 | 84.96 | 85 |

| 2 | 87.29 | 88.36 | 87.29 | 88.36 | 87.29 | 88.36 | 87.29 | 88.36 | 88 |

| 3 | 84.43 | 81.56 | 84.43 | 81.56 | 84.43 | 81.56 | 84.43 | 84.96 | 86 |

| 4 | 83.36 | 84.96 | 83.36 | 84.96 | 83.36 | 84.96 | 83.36 | 84.96 | 85 |

| 5 | 86.23 | 84.96 | 86.23 | 84.96 | 86.23 | 84.96 | 86.23 | 84.96 | 86 |

| 6 | 84.48 | 84.96 | 84.48 | 84.96 | 84.48 | 84.96 | 84.48 | 84.96 | 83 |

| 7 | 81.36 | 80.86 | 81.36 | 80.86 | 81.36 | 80.86 | 81.36 | 80.86 | 86 |

| 8 | 94.63 | 95.16 | 94.63 | 95.16 | 94.63 | 95.16 | 94.63 | 95.16 | 97 |

| 9 | 95.74 | 98.44 | 95.74 | 98.44 | 95.74 | 98.44 | 95.74 | 98.44 | 97 |

| 10 | 92.09 | 91.76 | 92.09 | 91.76 | 92.09 | 91.73 | 92.09 | 91.73 | 94 |

| 11 | 92.66 | 95.16 | 92.66 | 95.16 | 92.66 | 95.16 | 92.66 | 95.16 | 95 |

| 12 | 100.80 | 102.00 | 100.80 | 102.00 | 100.80 | 102.00 | 100.80 | 102.00 | 100 |

| 13 | 94.73 | 95.16 | 94.73 | 95.16 | 94.73 | 95.16 | 98.60 | 98.55 | 100 |

| 14 | 97.69 | 95.16 | 97.69 | 95.16 | 97.69 | 95.16 | 97.69 | 95.16 | 97 |

| 15 | 99.04 | 98.55 | 99.04 | 98.55 | 99.04 | 98.55 | 99.04 | 98.55 | 98 |

| 16 | 103.00 | 102.00 | 103.00 | 102.00 | 103.00 | 102.00 | 103.00 | 102.00 | 106 |

| 17 | 106.00 | 105.40 | 106.00 | 105.40 | 106.00 | 105.40 | 106.00 | 105.40 | 107 |

| 18 | 101.80 | 102.00 | 101.80 | 102.00 | 101.80 | 102.00 | 101.80 | 102.00 | 104 |

| 19 | 106.50 | 105.40 | 106.50 | 105.40 | 106.50 | 105.40 | 106.50 | 105.40 | 107 |

| 20 | 100.50 | 102.00 | 100.50 | 102.00 | 100.50 | 102.00 | 100.50 | 102.00 | 104 |

| 21 | 98.91 | 98.55 | 98.91 | 98.55 | 98.91 | 98.55 | 98.91 | 98.55 | 99 |

| 22 | 96.77 | 98.55 | 96.77 | 98.55 | 96.77 | 98.55 | 96.77 | 95.16 | 100 |

| 23 | 102.90 | 98.55 | 102.90 | 98.55 | 102.90 | 98.55 | 102.90 | 98.55 | 100 |

| 24 | 100.10 | 98.55 | 100.10 | 98.55 | 100.10 | 98.55 | 100.10 | 98.55 | 101 |

| 25 | 98.07 | 98.55 | 98.07 | 98.55 | 98.07 | 98.55 | 98.07 | 98.55 | 99 |

| 26 | 126.00 | 126.60 | 126.00 | 126.60 | 126.00 | 126.60 | 126.00 | 126.60 | 130 |

| 27 | 107.80 | 108.80 | 107.80 | 108.80 | 107.80 | 108.80 | 107.80 | 108.80 | 111 |

| 28 | 137.50 | 139.30 | 137.50 | 139.30 | 137.50 | 139.30 | 137.50 | 139.30 | 143 |

| 29 | 123.40 | 122.30 | 123.40 | 122.30 | 123.40 | 122.30 | 123.40 | 122.30 | 127 |

| 30 | 117.90 | 118.90 | 117.90 | 118.90 | 117.90 | 118.90 | 117.90 | 118.90 | 121 |

| 31 | 83.02 | 84.38 | 83.02 | 84.38 | 83.02 | 84.38 | 83.02 | 84.38 | 87 |

| 32 | 81.15 | 80.86 | 81.15 | 80.86 | 81.15 | 80.86 | 81.15 | 80.86 | 83 |

| 33 | 80.30 | 80.86 | 80.30 | 80.86 | 80.30 | 80.86 | 80.30 | 80.86 | 82 |

| 34 | 88.33 | 87.89 | 88.33 | 87.89 | 88.33 | 87.89 | 88.33 | 87.89 | 87 |

| 35 | 79.72 | 80.86 | 79.72 | 80.86 | 79.72 | 80.86 | 79.72 | 80.86 | 81 |

| 36 | 84.27 | 84.38 | 84.27 | 84.38 | 84.27 | 84.38 | 84.27 | 84.38 | 87 |

| 37 | 80.48 | 77.34 | 80.48 | 77.34 | 80.48 | 77.34 | 80.48 | 77.34 | 85 |

| 38 | 83.52 | 84.38 | 83.52 | 84.38 | 83.52 | 84.38 | 83.52 | 84.38 | 83 |

| 39 | 84.60 | 84.96 | 84.60 | 84.96 | 82.67 | 84.96 | 82.67 | 84.96 | 87 |

| 40 | 84.50 | 84.38 | 84.50 | 84.38 | 84.50 | 84.38 | 84.50 | 84.38 | 87 |

| 41 | 83.33 | 84.38 | 83.33 | 84.38 | 83.33 | 84.38 | 83.33 | 84.38 | 84 |

| 42 | 83.38 | 84.38 | 83.38 | 84.38 | 83.38 | 84.38 | 83.38 | 84.38 | 85 |

| 43 | 79.18 | 81.56 | 79.18 | 81.56 | 79.18 | 81.56 | 79.18 | 81.56 | 83 |

| 44 | 80.66 | 80.86 | 80.66 | 80.86 | 80.66 | 80.86 | 80.66 | 80.86 | 81 |

| 45 | 80.42 | 81.56 | 80.42 | 81.56 | 80.42 | 81.56 | 80.42 | 81.56 | 83 |

| 46 | 80.48 | 80.86 | 80.48 | 80.86 | 80.48 | 80.86 | 80.48 | 80.86 | 84 |

| 47 | 80.48 | 80.86 | 80.48 | 80.86 | 80.48 | 80.86 | 80.48 | 80.86 | 83 |

| 48 | 78.30 | 77.34 | 78.30 | 77.34 | 78.30 | 77.34 | 78.30 | 77.34 | 80 |

| 49 | 88.83 | 87.89 | 88.83 | 87.89 | 88.83 | 87.89 | 88.83 | 87.89 | 87 |

| 50 | 81.55 | 81.56 | 81.55 | 81.56 | 81.55 | 81.56 | 81.55 | 81.56 | 83 |

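Heart rate estimate (bpm) by signal and spectral method, with the reference HR in the last column:

| Video | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch | Ref. HR |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |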

| 1 | 226.96 | 95.16 | 131.91 | 95.16 | 226.96 | 95.16 | 93.11 | 91.76 | 95 |

| 2 | 155.62 | 190.31 | 187.47 | 186.91 | 189.29 | 190.31 | 95.55 | 95.16 | 98 |

| 3 | 279.99 | 98.55 | 96.88 | 98.55 | 153.07 | 98.55 | 96.88 | 98.55 | 100 |

| 4 | 120.20 | 142.73 | 92.09 | 142.73 | 142.50 | 142.73 | 101.78 | 101.95 | 99 |

| 5 | 238.24 | 95.16 | 94.52 | 95.16 | 140.82 | 190.31 | 96.45 | 95.16 | 94 |

| 6 | 91.98 | 91.76 | 91.98 | 91.76 | 91.98 | 91.76 | 183.96 | 95.16 | 91 |

| 7 | 217.01 | 217.50 | 217.01 | 217.50 | 230.64 | 231.09 | 97.32 | 95.16 | 94 |

| 8 | 134.37 | 176.72 | 263.90 | 180.12 | 182.70 | 183.52 | 88.93 | 91.76 | 92 |

| 9 | 210.29 | 210.94 | 189.16 | 158.20 | 86.53 | 210.94 | 101.62 | 214.45 | 94 |

| 10 | 101.15 | 101.95 | 101.15 | 101.95 | 98.23 | 98.55 | 95.32 | 95.16 | 96 |

| 11 | 120.13 | 118.95 | 120.13 | 118.95 | 120.13 | 118.95 | 120.13 | 118.95 | 122 |

| 12 | 287.14 | 285.47 | 121.15 | 118.95 | 286.18 | 285.47 | 114.47 | 115.55 | 119 |

| 13 | 182.50 | 152.93 | 115.87 | 115.55 | 231.74 | 231.09 | 115.87 | 115.55 | 119 |

| 14 | 110.80 | 135.94 | 103.09 | 105.35 | 110.80 | 142.73 | 110.80 | 108.75 | 112 |

| 15 | 118.00 | 217.50 | 79.31 | 217.50 | 241.80 | 241.29 | 109.29 | 108.75 | 113 |

| 16 | 110.51 | 108.75 | 110.51 | 108.75 | 109.54 | 108.75 | 110.51 | 108.75 | 112 |

| 17 | 163.61 | 105.35 | 115.49 | 105.35 | 163.61 | 278.67 | 115.49 | 115.55 | 112 |

| 18 | 115.30 | 116.02 | 115.30 | 116.02 | 115.30 | 116.02 | 117.28 | 116.02 | 113 |

| 19 | 148.23 | 118.95 | 110.45 | 118.95 | 217.98 | 149.53 | 110.45 | 112.15 | 110 |

| 20 | 127.88 | 282.07 | 134.67 | 129.14 | 190.86 | 190.31 | 102.69 | 101.95 | 107 |

| 21 | 141.28 | 142.73 | 267.69 | 271.88 | 152.44 | 149.53 | 152.44 | 149.53 | 146 |

| 22 | 207.05 | 207.30 | 207.05 | 163.13 | 207.05 | 207.30 | 271.21 | 271.88 | 139 |

| 23 | 193.66 | 193.71 | 128.78 | 234.49 | 193.66 | 193.71 | 128.78 | 129.14 | 132 |

| 24 | 236.66 | 237.89 | 236.66 | 234.49 | 236.66 | 237.89 | 236.66 | 234.49 | 124 |

| 25 | 220.04 | 146.13 | 109.54 | 122.34 | 180.30 | 180.12 | 120.20 | 122.34 | 123 |

| 26 | 118.46 | 118.95 | 118.46 | 118.95 | 118.46 | 118.95 | 118.46 | 105.35 | 121 |

| 27 | 224.77 | 115.55 | 117.23 | 115.55 | 117.23 | 115.55 | 118.20 | 118.95 | 121 |

| 28 | 114.64 | 115.55 | 114.64 | 115.55 | 217.62 | 217.50 | 229.28 | 231.09 | 121 |

| 29 | 123.52 | 115.55 | 114.77 | 115.55 | 123.52 | 115.55 | 182.85 | 176.72 | 117 |

| 30 | 189.82 | 190.31 | 111.21 | 190.31 | 238.71 | 186.91 | 111.21 | 112.15 | 115 |

| 31 | 182.05 | 183.52 | 182.05 | 224.30 | 214.63 | 217.50 | 212.71 | 217.50 | 115 |

| 32 | 70.39 | 55.78 | 67.61 | 65.63 | 109.28 | 65.63 | 82.43 | 82.03 | 112 |

| 33 | 219.41 | 217.50 | 219.41 | 217.50 | 217.50 | 217.50 | 217.50 | 217.50 | 113 |

| 34 | 212.94 | 210.70 | 212.94 | 210.70 | 212.94 | 210.70 | 211.03 | 210.70 | 109 |

| 35 | 64.97 | 85.31 | 64.97 | 85.31 | 85.39 | 85.31 | 64.97 | 98.44 | 110 |

| 36 | 257.74 | 258.28 | 257.74 | 258.28 | 257.74 | 258.28 | 86.90 | 78.16 | 82 |

| 37 | 87.53 | 116.02 | 117.72 | 116.02 | 87.53 | 87.89 | 117.72 | 116.02 | 84 |

| 38 | 81.61 | 81.56 | 166.13 | 81.56 | 81.61 | 81.56 | 78.69 | 78.16 | 83 |

| 39 | 157.83 | 149.53 | 152.02 | 149.53 | 182.04 | 149.53 | 232.39 | 78.16 | 81 |

| 40 | 80.59 | 81.56 | 80.59 | 81.56 | 80.59 | 81.56 | 162.15 | 163.13 | 82 |

| 41 | 185.35 | 186.91 | 73.75 | 74.77 | 175.65 | 288.87 | 74.72 | 74.77 | 74 |

| 42 | 253.43 | 163.13 | 162.15 | 163.13 | 149.53 | 149.53 | 80.59 | 81.56 | 80 |

| 43 | 124.08 | 139.34 | 112.45 | 112.15 | 124.08 | 197.11 | 143.47 | 142.73 | 78 |

| 44 | 158.45 | 159.73 | 158.45 | 159.73 | 250.79 | 251.48 | 79.71 | 81.56 | 84 |

| 45 | 83.69 | 81.56 | 83.69 | 81.56 | 83.69 | 84.96 | 83.69 | 84.96 | 83 |

| 46 | 174.87 | 156.33 | 157.39 | 156.33 | 81.61 | 152.93 | 84.52 | 84.96 | 83 |

| 47 | 148.31 | 149.53 | 140.56 | 98.55 | 148.31 | 149.53 | 132.80 | 132.54 | 140 |

| 48 | 124.49 | 122.34 | 124.49 | 122.34 | 124.49 | 122.34 | 124.49 | 135.94 | 127 |

| 49 | 115.55 | 115.55 | 116.52 | 115.55 | 115.55 | 115.55 | 115.55 | 115.55 | 117 |

| 50 | 113.23 | 112.15 | 113.23 | 112.15 | 113.23 | 112.15 | 112.26 | 112.15 | 117 |

| 51 | 114.51 | 112.15 | 114.51 | 112.15 | 114.51 | 115.55 | 114.51 | 115.55 | 118 |

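Heart rate estimate (bpm) by signal and spectral method, with the reference HR in the last column:

| Video | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch | Ref. HR |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |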

| 1 | 96.99 | 95.16 | 96.99 | 95.16 | 96.02 | 95.16 | 93.11 | 91.76 | 95 |

| 2 | 95.55 | 95.16 | 95.55 | 95.16 | 95.55 | 95.16 | 95.55 | 95.16 | 98 |

| 3 | 96.88 | 98.55 | 96.88 | 98.55 | 96.88 | 98.55 | 96.88 | 98.55 | 100 |

| 4 | 92.09 | 91.76 | 92.09 | 91.76 | 92.09 | 91.76 | 92.09 | 91.76 | 99 |

| 5 | 93.56 | 95.16 | 94.52 | 95.16 | 93.56 | 95.16 | 96.45 | 95.16 | 94 |

| 6 | 91.98 | 91.97 | 91.98 | 91.76 | 91.98 | 91.76 | 93.90 | 95.16 | 91 |

| 7 | 87.58 | 98.55 | 87.58 | 98.55 | 87.58 | 98.55 | 97.32 | 95.16 | 94 |

| 8 | 93.77 | 91.76 | 88.93 | 88.36 | 90.87 | 91.76 | 88.93 | 91.76 | 92 |

| 9 | 86.53 | 87.53 | 86.53 | 87.53 | 86.53 | 87.53 | 86.53 | 87.89 | 94 |

| 10 | 101.20 | 102.00 | 101.20 | 102.00 | 98.23 | 98.55 | 95.32 | 95.16 | 96 |

| 11 | 120.10 | 118.90 | 120.10 | 118.90 | 120.10 | 118.90 | 120.10 | 118.90 | 122 |

| 12 | 114.50 | 122.30 | 114.50 | 118.50 | 114.50 | 115.50 | 114.50 | 115.50 | 119 |

| 13 | 115.90 | 115.50 | 115.90 | 115.50 | 115.90 | 115.50 | 115.90 | 115.50 | 119 |

| 14 | 110.80 | 105.40 | 110.80 | 105.40 | 110.80 | 108.80 | 110.80 | 108.80 | 112 |

| 15 | 112.20 | 112.10 | 112.20 | 112.10 | 112.20 | 112.10 | 112.20 | 108.80 | 113 |

| 16 | 110.50 | 108.80 | 110.50 | 108.80 | 109.50 | 108.80 | 110.50 | 108.80 | 112 |

| 17 | 115.50 | 105.40 | 115.50 | 115.50 | 115.50 | 115.50 | 115.50 | 115.50 | 112 |

| 18 | 115.30 | 116.00 | 115.30 | 116.00 | 115.30 | 116.00 | 117.30 | 116.00 | 113 |

| 19 | 110.40 | 105.40 | 110.40 | 108.80 | 110.40 | 105.40 | 110.40 | 112.10 | 110 |

| 20 | 102.70 | 102.00 | 102.70 | 102.00 | 102.70 | 102.00 | 102.70 | 102.00 | 107 |

| 21 | 152.40 | 149.50 | 152.40 | 149.50 | 152.40 | 149.50 | 152.40 | 149.50 | 146 |

| 22 | 135.10 | 135.90 | 135.10 | 135.90 | 135.10 | 135.90 | 135.10 | 135.90 | 139 |

| 23 | 128.80 | 129.10 | 128.80 | 129.10 | 128.80 | 129.10 | 128.80 | 129.10 | 132 |

| 24 | 122.20 | 122.30 | 122.20 | 122.30 | 122.20 | 122.30 | 122.20 | 122.30 | 124 |

| 25 | 120.20 | 122.30 | 120.20 | 122.30 | 120.20 | 122.30 | 120.20 | 122.30 | 123 |

| 26 | 118.50 | 118.90 | 118.50 | 118.90 | 118.50 | 118.90 | 118.50 | 118.90 | 121 |

| 27 | 118.20 | 115.50 | 118.20 | 115.50 | 118.20 | 115.50 | 118.20 | 118.90 | 121 |

| 28 | 114.60 | 115.50 | 114.60 | 115.50 | 114.60 | 115.50 | 114.60 | 112.10 | 121 |

| 29 | 114.80 | 115.50 | 114.80 | 115.50 | 114.80 | 115.50 | 114.80 | 115.50 | 117 |

| 30 | 111.20 | 112.10 | 111.20 | 112.10 | 111.20 | 112.10 | 111.20 | 112.10 | 115 |

| 31 | 113.10 | 115.50 | 113.10 | 115.50 | 113.10 | 115.50 | 113.10 | 115.50 | 115 |

| 32 | 109.30 | 111.60 | 109.30 | 111.60 | 109.30 | 111.60 | 109.30 | 111.60 | 112 |

| 33 | 114.50 | 115.50 | 114.50 | 115.50 | 114.50 | 115.50 | 114.50 | 115.50 | 113 |

| 34 | 109.30 | 108.80 | 109.30 | 108.80 | 109.30 | 108.80 | 109.30 | 108.80 | 109 |

| 35 | 108.60 | 108.30 | 108.60 | 108.30 | 108.60 | 108.30 | 108.60 | 108.30 | 110 |

| 36 | 79.99 | 78.16 | 79.99 | 78.16 | 79.99 | 78.16 | 79.99 | 78.16 | 82 |

| 37 | 87.53 | 87.89 | 87.53 | 87.89 | 87.53 | 87.89 | 87.53 | 84.38 | 84 |

| 38 | 81.61 | 81.56 | 81.61 | 81.56 | 81.61 | 81.56 | 78.69 | 78.16 | 83 |

| 39 | 78.43 | 78.16 | 78.43 | 78.16 | 78.43 | 78.16 | 77.46 | 78.16 | 81 |

| 40 | 80.59 | 81.56 | 80.59 | 81.56 | 80.59 | 81.56 | 80.59 | 81.56 | 82 |

| 41 | 73.75 | 74.77 | 73.75 | 74.77 | 73.75 | 74.77 | 74.72 | 74.77 | 74 |

| 42 | 81.56 | 81.56 | 81.56 | 81.56 | 80.59 | 81.56 | 80.59 | 81.56 | 80 |

| 43 | 73.67 | 74.77 | 73.67 | 74.77 | 73.67 | 74.77 | 73.67 | 74.77 | 78 |

| 44 | 79.71 | 78.16 | 79.71 | 78.16 | 79.71 | 78.16 | 79.71 | 81.56 | 84 |

| 45 | 83.69 | 81.56 | 83.69 | 81.56 | 83.69 | 84.96 | 83.69 | 84.96 | 83 |

| 46 | 81.61 | 81.56 | 81.61 | 81.56 | 81.61 | 81.56 | 81.61 | 94.96 | 83 |

| 47 | 132.80 | 132.50 | 132.80 | 132.50 | 132.80 | 132.50 | 132.80 | 132.50 | 140 |

| 48 | 124.50 | 122.30 | 124.50 | 122.30 | 124.50 | 122.30 | 124.50 | 122.30 | 127 |

| 49 | 115.50 | 115.50 | 115.50 | 115.50 | 115.50 | 115.50 | 115.50 | 115.50 | 117 |

| 50 | 113.20 | 112.10 | 113.20 | 112.10 | 113.20 | 112.10 | 112.30 | 112.10 | 117 |

| 51 | 114.50 | 112.10 | 114.50 | 112.10 | 114.50 | 115.50 | 114.50 | 115.50 | 118 |

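Heart rate estimate (bpm) by signal and spectral method, with the reference HR in the last column:

| Video | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch | Ref. HR |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |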

| 1* | 82.45 | 82.03 | 82.45 | 82.03 | 82.45 | 84.38 | 82.45 | 82.03 | 82.48 |

| 2 | 80.53 | 79.69 | 75.56 | 79.69 | 81.52 | 79.69 | 78.54 | 79.69 | 81.49 |

| 3 | 70.53 | 84.38 | 117.22 | 117.19 | 70.53 | 70.31 | 70.53 | 70.31 | 70.49 |

| 4 | 70.47 | 70.31 | 70.47 | 70.31 | 70.47 | 70.31 | 70.47 | 70.31 | 70.41 |

| 5 | 72.52 | 70.31 | 72.52 | 70.31 | 72.52 | 72.66 | 71.52 | 72.66 | 72.40 |

| 6 | 130.13 | 93.75 | 130.13 | 128.91 | 95.36 | 128.91 | 131.13 | 121.88 | 71.48 |

| 7 | 93.38 | 93.75 | 93.38 | 93.75 | 53.64 | 93.75 | 106.29 | 107.81 | 53.55 |

| 8 | 54.55 | 53.91 | 54.55 | 53.91 | 54.55 | 53.91 | 54.55 | 53.91 | 54.55 |

| 9 | 67.21 | 67.97 | 67.21 | 67.97 | 67.21 | 67.97 | 67.21 | 67.97 | 67.11 |

| 10 | 68.60 | 67.97 | 67.63 | 67.97 | 68.60 | 67.97 | 67.63 | 67.97 | 68.50 |

| 11 | 61.59 | 60.94 | 61.59 | 60.94 | 59.60 | 60.94 | 59.60 | 91.41 | 61.55 |

| 12 | 65.51 | 65.63 | 65.51 | 65.63 | 65.51 | 65.63 | 65.51 | 65.63 | 65.45 |

| 13 | 75.37 | 75.00 | 75.37 | 75.00 | 75.37 | 75.00 | 75.37 | 75.00 | 75.27 |

| 14 | 78.14 | 77.34 | 78.14 | 77.34 | 78.14 | 77.34 | 78.14 | 77.34 | 78.07 |

| 15 | 70.36 | 70.31 | 70.36 | 70.31 | 70.36 | 67.97 | 70.36 | 70.31 | 70.27 |

| 16 | 68.43 | 67.97 | 68.43 | 67.97 | 68.43 | 67.97 | 68.43 | 67.97 | 68.36 |

| 17 | 91.47 | 135.94 | 91.47 | 91.41 | 92.46 | 93.75 | 91.47 | 91.41 | 91.33 |

| 18 | 88.05 | 89.06 | 89.04 | 89.06 | 88.05 | 86.72 | 87.06 | 86.72 | 87.99 |

| 19 | 61.49 | 60.94 | 61.49 | 60.94 | 61.49 | 60.94 | 61.49 | 60.94 | 61.42 |

| 20 | 62.43 | 63.28 | 62.43 | 63.28 | 62.43 | 63.28 | 62.43 | 63.28 | 62.35 |

| 21 | 82.45 | 82.03 | 82.45 | 82.03 | 83.44 | 82.03 | 82.45 | 82.03 | 83.39 |

| 22 | 82.52 | 84.38 | 82.52 | 84.38 | 85.50 | 84.38 | 85.50 | 84.38 | 85.38 |

| 23 | 80.20 | 173.44 | 66.34 | 65.63 | 93.07 | 93.75 | 91.09 | 93.75 | 90.96 |

| 24 | 76.49 | 145.31 | 169.87 | 77.34 | 108.28 | 107.81 | 89.40 | 89.06 | 89.26 |

Heart Rate Estimate (SIG1 to SIG4, FFT and Welch) and Ref. HR

| Video | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch | Ref. HR |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 84.65 | 84.38 | 84.65 | 84.38 | 84.65 | 84.38 | 84.65 | 84.38 | 84.56 |
| 2 | 85.50 | 86.72 | 85.50 | 86.72 | 85.50 | 86.72 | 85.50 | 84.38 | 85.47 |
| 3 | 69.54 | 70.31 | 69.54 | 70.31 | 69.54 | 70.31 | 69.54 | 70.31 | 69.49 |
| 4 | 68.54 | 67.97 | 68.54 | 67.97 | 68.54 | 67.97 | 68.54 | 67.97 | 68.43 |
| 5 | 68.60 | 67.97 | 68.60 | 67.97 | 68.60 | 67.97 | 68.60 | 67.97 | 68.50 |
| 6 | 70.30 | 70.31 | 70.30 | 70.31 | 70.30 | 70.31 | 70.30 | 70.31 | 70.27 |
| 7 | 57.57 | 56.25 | 57.57 | 56.25 | 57.57 | 56.25 | 56.58 | 56.25 | 56.53 |
| 8 | 53.69 | 53.91 | 53.69 | 110.16 | 53.69 | 53.91 | 53.69 | 56.25 | 53.61 |
| 9 | 71.34 | 70.31 | 71.34 | 70.31 | 71.34 | 70.31 | 71.34 | 70.31 | 71.22 |
| 10 | 69.16 | 67.97 | 69.16 | 67.97 | 69.16 | 67.97 | 69.16 | 67.97 | 69.06 |
| 11 | 59.55 | 60.94 | 59.55 | 84.38 | 59.55 | 58.59 | 59.55 | 60.94 | 59.50 |
| 12 | 64.52 | 63.28 | 64.52 | 63.28 | 60.55 | 63.28 | 60.55 | 60.94 | 60.50 |
| 13 | 75.30 | 77.34 | 75.30 | 77.34 | 75.30 | 77.34 | 76.27 | 77.34 | 76.22 |
| 14 | 74.58 | 75.00 | 74.58 | 75.00 | 74.58 | 75.00 | 74.58 | 75.00 | 74.52 |
| 15 | 67.49 | 67.97 | 67.49 | 67.97 | 67.49 | 67.97 | 67.49 | 67.97 | 67.44 |
| 16 | 69.48 | 70.31 | 69.48 | 70.31 | 69.48 | 70.31 | 69.48 | 70.31 | 69.42 |
| 17 | 86.42 | 86.72 | 78.48 | 84.38 | 78.48 | 84.38 | 85.43 | 84.38 | 86.28 |
| 18 | 83.44 | 84.38 | 83.44 | 82.03 | 83.44 | 84.38 | 83.44 | 84.38 | 83.31 |
| 19 | 60.55 | 58.59 | 60.55 | 58.59 | 60.55 | 60.94 | 60.55 | 60.94 | 60.50 |
| 20 | 61.49 | 60.94 | 61.49 | 60.94 | 61.49 | 60.94 | 61.49 | 60.94 | 61.42 |
| 21 | 81.46 | 82.03 | 81.46 | 82.03 | 81.46 | 82.03 | 80.46 | 82.03 | 80.41 |
| 22 | 84.30 | 84.38 | 84.30 | 84.38 | 84.30 | 84.38 | 84.30 | 84.38 | 84.21 |
| 23 | 94.29 | 93.75 | 119.11 | 119.53 | 94.29 | 93.75 | 94.29 | 93.75 | 95.21 |
| 24 | 96.44 | 75.00 | 96.44 | 96.09 | 96.44 | 96.09 | 88.48 | 89.06 | 89.35 |
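Agreement between the estimates and the reference HR in a table like the one above can be summarised with an error measure. The sketch below is only an illustration: it uses the SIG1 (FFT) and Ref. HR values of videos 1 to 5 from that table, the function names are hypothetical, and treating "mean error" as the mean of absolute differences is an assumption rather than the definition used in this work.

```python
import math

def mean_absolute_error(estimates, references):
    """Mean of |estimate - reference| (an assumed reading of 'mean error')."""
    diffs = [abs(e - r) for e, r in zip(estimates, references)]
    return sum(diffs) / len(diffs)

def rmse(estimates, references):
    """Root-mean-square error between estimates and references."""
    squared = [(e - r) ** 2 for e, r in zip(estimates, references)]
    return math.sqrt(sum(squared) / len(squared))

# SIG1 (FFT) estimates and reference HR for videos 1-5 of the table above
sig1_fft = [84.65, 85.50, 69.54, 68.54, 68.60]
ref_hr = [84.56, 85.47, 69.49, 68.43, 68.50]

mae_value = mean_absolute_error(sig1_fft, ref_hr)
rmse_value = rmse(sig1_fft, ref_hr)
print(f"mean absolute error = {mae_value:.3f}, RMSE = {rmse_value:.3f}")
```

Because RMSE squares each difference before averaging, a single outlying estimate (such as a harmonic picked instead of the fundamental) raises it more than it raises the mean absolute error.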

Appendix B. HR Estimation Algorithm

| %STAGE 1: DETECT A FACE |

| % Create a cascade detector object. |

| faceDetector = vision.CascadeObjectDetector(); |

| % Read a video frame and run the face detector. |

| videoFileReader = vision.VideoFileReader(NAME4); |

| videoFrame = step(videoFileReader); |

| videoFrame = step(videoFileReader); |

| bbox = step(faceDetector, videoFrame); |

| fROI = [(bbox(1) + (1/3)*bbox(3)) (bbox(2) + 0.1*bbox(4)) ((1/3)*bbox(3)) (0.2*(bbox(4)))]; |

| bfROI= [(bbox(1) + (1/3)*bbox(3)) (bbox(2) + 0.0*bbox(4)) ((1/3)*bbox(3)) (0.3*(bbox(4)))]; |

| bbROI=[(bbox(1) + (0.2)*bbox(3)) (bbox(2) + 0.1*bbox(4)) ((0.6)*bbox(3)) (0.8*(bbox(4)))]; |

| fROIPoints = bbox2points(fROI(1, :)); |

| bfROIPoints = bbox2points(bfROI(1, :)); |

| bbROIPoints = bbox2points(bbROI(1, :)); |

| redChannel = videoFrame(:, :, 1); % red channel of video frame |

| greenChannel = videoFrame(:, :, 2); % green channel of video frame |

| blueChannel = videoFrame(:, :, 3); % blue channel of video frame |

| YCbCr = rgb2ycbcr(videoFrame); |

| YChannel = YCbCr(:,:,1); |

| x1 = fROIPoints(:,1); |

| y1 = fROIPoints(:,2); |

| x2 = bfROIPoints(:,1); |

| y2 = bfROIPoints(:,2); |

| x3 = bbROIPoints(:,1); |

| y3 = bbROIPoints(:,2); |

| bw1 = poly2mask(x1, y1, size(videoFrame, 1), size(videoFrame, 2)); % binary mask of the polygon with vertices (x1, y1), sized to the video frame |

| bw2 = poly2mask(x2, y2, size(videoFrame, 1), size(videoFrame, 2)); |

| bw3 = poly2mask(x3, y3, size(videoFrame, 1), size(videoFrame, 2)); |

| p = 1; |

| pSIG1(1, p) = mean2(redChannel(logical(bw1))); % forehead ROI raw red signal vector |

| pSIG2(1, p) = mean2(greenChannel(logical(bw1))); % forehead ROI raw green signal vector |

| pSIG3(1, p) = mean2(blueChannel(logical(bw1))); % forehead ROI raw blue signal vector |

| pSIG4(1, p) = mean2(YChannel(logical(bw1))); % forehead ROI raw luminance signal vector |

| pSIG5(1, p) = mean2(greenChannel(logical(bw2))); % bigger forehead ROI raw green signal vector |

| pSIG6(1, p) = mean2(greenChannel(logical(bw3))); % 60%80% bounding box ROI raw green signal vector |

| pSIG9(1, p) = fROIPoints(1,1); % x motion signal from first x coordinate of forehead ROI |

| pSIG10(1, p) = fROIPoints(1,2); % y motion signal from first y coordinate of forehead ROI |

| % Draw the returned bounding box around the detected face. |

| videoFrame = insertShape(videoFrame, 'Rectangle', bbox); |

| videoFrame = insertShape(videoFrame, 'Rectangle', fROI); % insert forehead ROI into video frame |

| videoFrame = insertShape(videoFrame, 'Rectangle', bfROI); |

| videoFrame = insertShape(videoFrame, 'Rectangle', bbROI); |

| %figure; imshow(videoFrame); title('Detected face'); |

| % Convert the first box into a list of 4 points |

| % This is needed to be able to visualize the rotation of the object. |

| bboxPoints = bbox2points(bbox(1, :)); |

| %fROIPoints = bbox2points(fROI(1, :)); % convert fROI to four coordinates of rectangle |

| % This establishes the ROI in first frame; the bbox coordinates are transformed to yield |

| % the coordinates for a ROI at 60% width of bbox and 80% height; assumes that bounding box in |

| % first frame is (unrotated) square/rectangle |

| %STAGE 2: IDENTIFY FACIAL FEATURES TO TRACK |

| % Detect feature points in the face region. |

| points = detectMinEigenFeatures(rgb2gray(videoFrame), 'ROI', bbox); |

| % Display the detected points. |

| %figure, imshow(videoFrame), hold on, title('Detected features'); |

| %plot(points); |

| %STAGE 3: INITIALIZE A TRACKER TO TRACK THE POINTS |

| % Create a point tracker and enable the bidirectional error constraint to |

| % make it more robust in the presence of noise and clutter. |

| pointTracker = vision.PointTracker('MaxBidirectionalError', 2); |

| % Initialize the tracker with the initial point locations and the initial |

| % video frame. |

| points = points.Location; |

| initialize(pointTracker, points, videoFrame); |

| %STAGE 4: INITIALIZE A VIDEO PLAYER TO DISPLAY THE RESULTS |

| videoPlayer = vision.VideoPlayer('Position',... |

| [100 100 [size(videoFrame, 2), size(videoFrame, 1)]+30]); |

| %STAGE 5: TRACK THE FACE |

| % Make a copy of the points to be used for computing the geometric |

| % transformation between the points in the previous and the current frames |

| oldPoints = points; |

| while ~isDone(videoFileReader) |

| % get the next frame |

| videoFrame = step(videoFileReader); |

| % Track the points. Note that some points may be lost. |

| [points, isFound] = step(pointTracker, videoFrame); |

| visiblePoints = points(isFound, :); |

| oldInliers = oldPoints(isFound, :); |

| if size(visiblePoints, 1) >= 2 % need at least 2 points |

| % Estimate the geometric transformation between the old points |

| % and the new points and eliminate outliers |

| [xform, oldInliers, visiblePoints] = estimateGeometricTransform(... |

| oldInliers, visiblePoints, 'similarity', 'MaxDistance', 4); |

| % Apply the transformation to the bounding box points |

| bboxPoints = transformPointsForward(xform, bboxPoints); |

| % Also apply the transformation to the ROI box points and other ROIs |

| fROIPoints = transformPointsForward(xform, fROIPoints); |

| fROIPoints = double(fROIPoints); %change fROIPoints from single to double |

| bfROIPoints = transformPointsForward(xform, bfROIPoints); |

| bfROIPoints = double(bfROIPoints); %change bfROIPoints from single to double |

| bbROIPoints = transformPointsForward(xform, bbROIPoints); |

| bbROIPoints = double(bbROIPoints); %change bbROIPoints from single to double |

| x1 = fROIPoints(:,1); |

| y1 = fROIPoints(:,2); |

| x2 = bfROIPoints(:,1); |

| y2 = bfROIPoints(:,2); |

| x3 = bbROIPoints(:,1); |

| y3 = bbROIPoints(:,2); |

| bw1 = poly2mask(x1, y1, size(videoFrame, 1), size(videoFrame, 2)); |

| bw2 = poly2mask(x2, y2, size(videoFrame, 1), size(videoFrame, 2)); |

| bw3 = poly2mask(x3, y3, size(videoFrame, 1), size(videoFrame, 2)); |

| %bw4 = poly2mask(x4, y4, size(videoFrame, 1), size(videoFrame, 2)); |

| p = p + 1; |

| gr(:,:,p) = fROIPoints; |

| %monitor(:,:,p) = bROIPoints; |

| redChannel = videoFrame(:, :, 1); % red channel of video frame |

| greenChannel = videoFrame(:, :, 2); % green channel of video frame |

| blueChannel = videoFrame(:, :, 3); % blue channel of video frame |

| YCbCr = rgb2ycbcr(videoFrame); |

| YChannel = YCbCr(:,:,1); |

| pSIG1(1, p) = mean2(redChannel(logical(bw1))); %forehead ROI raw red signal |

| pSIG2(1, p) = mean2(greenChannel(logical(bw1))); % forehead ROI raw green signal |

| pSIG3(1, p) = mean2(blueChannel(logical(bw1))); %forehead ROI raw blue signal |

| pSIG4(1, p) = mean2(YChannel(logical(bw1))); %forehead ROI raw luminance signal |

| pSIG5(1, p) = mean2(greenChannel(logical(bw2))); % bforehead ROI raw green signal |

| pSIG6(1, p) = mean2(greenChannel(logical(bw3))); % 60%80% bb box ROI raw green |

| pSIG9(1, p) = fROIPoints(1,1); % x motion signal of forehead ROI |

| pSIG10(1, p) = fROIPoints(1,2); % y motion signal of forehead ROI |

| % Insert a bounding box around the object being tracked |

| bboxPolygon = reshape(bboxPoints', 1, []); |

| videoFrame = insertShape(videoFrame, 'Polygon', bboxPolygon, ... |

| 'LineWidth', 2); |

| % Also insert the fROI box around the object being tracked, and likewise for the other ROIs |

| fROIPolygon = reshape(fROIPoints', 1, []); |

| videoFrame = insertShape(videoFrame, 'Polygon', fROIPolygon, ... |

| 'LineWidth', 2); |

| bfROIPolygon = reshape(bfROIPoints', 1, []); |

| videoFrame = insertShape(videoFrame, 'Polygon', bfROIPolygon, ... |

| 'LineWidth', 2); |

| bbROIPolygon = reshape(bbROIPoints', 1, []); |

| videoFrame = insertShape(videoFrame, 'Polygon', bbROIPolygon, ... |

| 'LineWidth', 2); |

| % Display tracked points |

| videoFrame = insertMarker(videoFrame, visiblePoints, '+', ... |

| 'Color', 'white'); |

| % Reset the points |

| oldPoints = visiblePoints; |

| setPoints(pointTracker, oldPoints); |

| end |

| % Display the annotated video frame using the video player object |

| step(videoPlayer, videoFrame); |

| %break |

| end |

| % Clean up |

| release(videoFileReader); |

| release(videoPlayer); |

| release(pointTracker); |

| % STEP 6: SIGNAL DETRENDING, SPA (smoothness priors approach) |

| pSIG1 = double(pSIG1'); |

| pSIG2 = double(pSIG2'); |

| pSIG3 = double(pSIG3'); |

| pSIG4 = double(pSIG4'); |

| pSIG5 = double(pSIG5'); |

| pSIG6 = double(pSIG6'); |

| pSIG9 = double(pSIG9'); |

| pSIG10 = double(pSIG10'); |

| T = length(pSIG1); |

| lambda = 10; |

| I = speye(T); |

| D2 = spdiags(ones(T-2,1)*[1 -2 1],[0:2],T-2,T); |

| detrendOp = I-inv(I+lambda^2*D2'*D2); % detrending operator, computed once and reused for every signal |

| dpSIG1 = detrendOp*pSIG1; |

| dpSIG2 = detrendOp*pSIG2; |

| dpSIG3 = detrendOp*pSIG3; |

| dpSIG4 = detrendOp*pSIG4; |

| dpSIG5 = detrendOp*pSIG5; |

| dpSIG6 = detrendOp*pSIG6; |

| dpSIG9 = detrendOp*pSIG9; |

| dpSIG10 = detrendOp*pSIG10; |

| % STEP 7: FILTERING |

| fs = 29; |

| [b, a] = butter(4, [0.8 6.0]/(fs/2), 'bandpass'); |

| fpSIG1 = filter(b, a, dpSIG1); |

| fpSIG2 = filter(b, a, dpSIG2); |

| fpSIG3 = filter(b, a, dpSIG3); |

| fpSIG4 = filter(b, a, dpSIG4); |

| fpSIG5 = filter(b, a, dpSIG5); |

| fpSIG6 = filter(b, a, dpSIG6); |

| fpSIG9 = filter(b, a, dpSIG9); |

| fpSIG10 = filter(b, a, dpSIG10); |

| % STEP 8: SIGNAL NORMALIZATION |

| npSIG1 = (fpSIG1 - mean(fpSIG1))/std(fpSIG1); % remember to change these signals back to fSIG |

| npSIG2 = (fpSIG2 - mean(fpSIG2))/std(fpSIG2); |

| npSIG3 = (fpSIG3 - mean(fpSIG3))/std(fpSIG3); |

| npSIG4 = (fpSIG4 - mean(fpSIG4))/std(fpSIG4); |

| npSIG5 = (fpSIG5 - mean(fpSIG5))/std(fpSIG5); |

| npSIG6 = (fpSIG6 - mean(fpSIG6))/std(fpSIG6); |

| npSIG9 = (fpSIG9 - mean(fpSIG9))/std(fpSIG9); |

| npSIG10 = (fpSIG10- mean(fpSIG10))/std(fpSIG10); |

| % STEP 9: FFT OF R, G, and B SIGNALS + DISPLAY OF FFT OF EACH SIGNAL |

| L = length(npSIG1); |

| winvec = hann(L); |

| F1 = fft(npSIG1.*winvec); |

| F2 = fft(npSIG2.*winvec); |

| F3 = fft(npSIG3.*winvec); |

| F4 = fft(npSIG4.*winvec); |

| F5 = fft(npSIG5.*winvec); |

| F6 = fft(npSIG6.*winvec); |

| F9 = fft(npSIG9.*winvec); |

| F10 = fft(npSIG10.*winvec); |

| A2 = abs(F1/L); |

| A1 = A2(1:L/2+1); |

| A1(2:end-1) = 2*A1(2:end-1); |

| B2 = abs(F2/L); |

| B1 = B2(1:L/2+1); |

| B1(2:end-1) = 2*B1(2:end-1); |

| C2 = abs(F3/L); |

| C1 = C2(1:L/2+1); |

| C1(2:end-1) = 2*C1(2:end-1); |

| D2 = abs(F4/L); |

| D1 = D2(1:L/2+1); |

| D1(2:end-1) = 2*D1(2:end-1); |

| E2 = abs(F5/L); |

| E1 = E2(1:L/2+1); |

| E1(2:end-1) = 2*E1(2:end-1); |

| G2 = abs(F6/L); |

| G1 = G2(1:L/2+1); |

| G1(2:end-1) = 2*G1(2:end-1); |

| J2 = abs(F9/L); |

| J1 = J2(1:L/2+1); |

| J1(2:end-1) = 2*J1(2:end-1); |

| K2 = abs(F10/L); |

| K1 = K2(1:L/2+1); |

| K1(2:end-1) = 2*K1(2:end-1); |

| % DISPLAY FFTs (frequency axis f is scaled by 60, i.e. in beats per minute) |

| f = 60*fs*(0:(L/2))/L; |

| figure |

| subplot(2,2,1) |

| plot(f, B1.^2, 'green') |

| title('fft of green signal of forehead ROI') |

| xlabel('f (bpm)') |

| ylabel('Power(f)') |

| subplot(2,2,2) |

| plot(f, D1.^2) |

| title('fft of luminance signal of forehead ROI') |

| xlabel('f (bpm)') |

| ylabel('Power(f)') |

| subplot(2,2,3) |

| plot(f, E1.^2, 'green') |

| title('fft of green signal of bigger forehead ROI') |

| xlabel('f (bpm)') |

| ylabel('Power(f)') |

| subplot(2,2,4) |

| plot(f, G1.^2, 'green') |

| title('fft of green signal of 60%80% bb ROI') |

| xlabel('f (bpm)') |

| ylabel('Power(f)') |

| figure |

| subplot(2,2,1) |

| plot(f, J1.^2) |

| title('fft of x motion signal') |

| xlabel('f (bpm)') |

| ylabel('Power(f)') |

| subplot(2,2,2) |

| plot(f, K1.^2) |

| title('fft of y motion signal') |

| xlabel('f (bpm)') |

| ylabel('Power(f)') |

| %------------------------------WELCH |

| [pxx1, f] = pwelch(npSIG2.*winvec, [], [], [], fs); |

| [pxx2, f] = pwelch(npSIG4.*winvec, [], [], [], fs); |

| [pxx3, f] = pwelch(npSIG5.*winvec, [], [], [], fs); |

| [pxx4, f] = pwelch(npSIG6.*winvec, [], [], [], fs); |

| [pxx7, f] = pwelch(npSIG9.*winvec, [], [], [], fs); |

| [pxx8, f] = pwelch(npSIG10.*winvec, [], [], [], fs); |

| figure |

| subplot(2,2,1) |

| plot(60*f, pxx1, 'green') % 60*f converts Hz to beats per minute |

| title('Welch of green signal of forehead ROI') |

| xlabel('f (bpm)') |

| ylabel('Power(f)') |

| subplot(2,2,2) |

| plot(60*f, pxx2) |

| title('Welch of luminance signal of forehead ROI') |

| xlabel('f (bpm)') |

| ylabel('Power(f)') |

| subplot(2,2,3) |

| plot(60*f, pxx3, 'green') |

| title('Welch of green signal of bigger forehead ROI') |

| xlabel('f (bpm)') |

| ylabel('Power(f)') |

| subplot(2,2,4) |

| plot(60*f, pxx4, 'green') |

| title('Welch of green signal of 60%80% bb ROI') |

| xlabel('f (bpm)') |

| ylabel('Power(f)') |
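The listing above plots the spectra and leaves peak selection to the reader. As a hedged illustration of how a spectrum maps to a heart rate, the following standard-library Python sketch applies a Hann window, computes a one-sided amplitude spectrum with a naive DFT, converts bin indices to beats per minute with the same 60·fs·k/L scaling, and picks the largest peak. The function names are hypothetical, restricting the search to 0.8 to 6.0 Hz (the passband of the Butterworth filter in STEP 7) is an assumption, and the input is a synthetic 1.2 Hz sinusoid rather than a real rPPG signal.

```python
import cmath
import math

def hann(n):
    """Symmetric Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def one_sided_amplitude(x):
    """Naive DFT; amplitudes for bins 0..floor(N/2)."""
    n = len(x)
    amps = []
    for k in range(n // 2 + 1):
        s = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        amps.append(abs(s) / n)
    return amps

def peak_heart_rate(signal, fs, lo_hz=0.8, hi_hz=6.0):
    """Return the frequency (in bpm) of the largest in-band spectral peak."""
    n = len(signal)
    windowed = [s * w for s, w in zip(signal, hann(n))]
    amps = one_sided_amplitude(windowed)
    bpm = [60.0 * fs * k / n for k in range(len(amps))]  # bin index -> bpm
    in_band = [k for k in range(len(amps)) if lo_hz * 60 <= bpm[k] <= hi_hz * 60]
    best = max(in_band, key=lambda k: amps[k])
    return bpm[best]

# Synthetic test signal: 10 s of a 1.2 Hz (72 bpm) sinusoid sampled at 29 fps
fs = 29
n_samples = 290
synthetic = [math.sin(2 * math.pi * 1.2 * t / fs) for t in range(n_samples)]
hr = peak_heart_rate(synthetic, fs)
print(f"estimated HR = {hr:.2f} bpm")
```

The frequency resolution is fs/L in Hz, so a 10 s recording resolves the heart rate only to the nearest 6 bpm; longer recordings give finer bins.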

Appendix C. Laboratory Practical

| ROI Name | ROI Description | Associated Signals | Description of Signal |
|---|---|---|---|
| ROI A | ROI centred on forehead | SIGNAL 1.1 | Green (RGB) signal (FFT) |
| | | SIGNAL 1.2 | Green (RGB) signal (Welch) |
| | | SIGNAL 2.1 | Luminance (YCbCr) signal (FFT) |
| | | SIGNAL 2.2 | Luminance (YCbCr) signal (Welch) |
| ROI B | Forehead ROI 50% larger than ROI A | SIGNAL 3.1 | Green (RGB) signal (FFT) |
| | | SIGNAL 3.2 | Green (RGB) signal (Welch) |
| ROI C | Face ROI comprising the central 60% (width) and central 80% (height) of bbox | SIGNAL 4.1 | Green (RGB) signal (FFT) |
| | | SIGNAL 4.2 | Green (RGB) signal (Welch) |
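The three ROIs in the table can be derived from the face bounding box by simple proportional arithmetic. The Python sketch below restates the fractions used for `fROI`, `bfROI` and `bbROI` in Appendix B; mapping those variables to ROI A, B and C is an inference from the descriptions above, and the function names and the example bounding box are hypothetical.

```python
def sub_roi(bbox, x_off, y_off, w_frac, h_frac):
    """Rectangle [x, y, width, height] placed relative to a bounding box."""
    x, y, w, h = bbox
    return (x + x_off * w, y + y_off * h, w_frac * w, h_frac * h)

def rois_from_bbox(bbox):
    """ROIs as fractions of the face bounding box (values from Appendix B)."""
    return {
        "A": sub_roi(bbox, 1 / 3, 0.1, 1 / 3, 0.2),  # forehead ROI (fROI)
        "B": sub_roi(bbox, 1 / 3, 0.0, 1 / 3, 0.3),  # larger forehead ROI (bfROI)
        "C": sub_roi(bbox, 0.2, 0.1, 0.6, 0.8),      # central 60% x 80% (bbROI)
    }

def area(roi):
    """Area of an [x, y, width, height] rectangle."""
    return roi[2] * roi[3]

# Hypothetical face bounding box: top-left corner (100, 50), 300 x 300 pixels
rois = rois_from_bbox((100, 50, 300, 300))
for name, roi in rois.items():
    print(name, [round(v, 2) for v in roi], "area:", round(area(roi), 1))
```

With these fractions ROI B has the same width as ROI A and 1.5 times its height, which matches the "50% larger" description in the table.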

1. Open the MATLAB IDE by double-clicking the MATLAB shortcut icon on the desktop.

2. Wait for MATLAB to finish initializing, which is indicated by "Ready" appearing at the bottom left corner of the MATLAB window.

3. Sit approximately 0.5 m from the webcam (use a metre rule to measure the distance). Sit still facing the camera and ensure that the field of view of the webcam is centred on your face.

4. Insert the tip of your index finger into the rubber case of the sensor of the pulse oximeter module (already connected to the computer). An IR LED illuminates the finger from the top while a photodetector detects transmitted light at the bottom. Place the hand on a level surface (such as a table top or arm rest) and keep it steady. See Figure 4 for correct placement of the finger in the sensor.

5. Type GUI1 into the MATLAB command window and press the ENTER key. A GUI will appear and will serve as the interface for rPPG signal extraction and the display of FFT results (which will be analysed for heart rate estimation).

6. Initiate video capture (as well as a timer and simultaneous capture of the ground truth pulse oximeter signal) by pressing the START pushbutton at the top of the GUI window.

7. Terminate video capture after 1 min by pressing the STOP pushbutton.

8. Press the SELECT VIDEO pushbutton at the top of the GUI window. This is a browse button which allows you to select the video you just recorded and load it into the GUI for rPPG signal extraction.

9. Press the PROCESS VIDEO pushbutton adjacent to SELECT VIDEO. This initiates processing of the video for rPPG signal extraction, which may take more than 5 min (depending on hardware). The immediate output of this processing is the display of the FFT of rPPG signals 1 – 4 in four labelled panels.

10. The Welch periodograms of signals 1 – 4 can be accessed by pressing the WELCH pushbutton at the bottom of the GUI. To return to the FFT results, press the same pushbutton, which is automatically reconfigured and re-labelled FFT.

11. Determine the heart rate (in bpm) for both the FFT and Welch periodogram results by clicking on the peak of maximum amplitude in the frequency spectrum.

12. An FFT and a Welch periodogram of the pulse oximeter reference signal are obtained by pressing the GROUND TRUTH pushbutton.

13. Record all results in a table like the one in the Recording and presenting your data section below (it is designed to accommodate results from multiple subjects/videos).

- For each of the eight (8) heart rate estimates (one for each rPPG signal), you need a corresponding error and signal-to-noise ratio (SNR) measure. Error values are obtained by clicking the (static) drop-down list titled ERROR at the top of the GUI window (it allows display but no selection).

- SNR values are obtained by clicking the adjacent SNR drop-down list.

- Record Error and SNR values in a table like the one specified in the Recording and presenting your data section.

- Close the GUI by pressing the EXIT pushbutton and repeat steps 3 – 13 of Exercise 1 and 1 – 4 of Exercise 2 with a dedicated light source about 1 metre from the face.

1. In the MATLAB command window, type GUI2. A new GUI will appear. Using this GUI, heart rate is determined directly without the need for manual evaluation of the FFTs.

2. Adopt the sitting posture employed in Exercise 1 and keep the dedicated lighting.

3. Press the START button at the bottom of the GUI window. Video capture will be initiated after 5 s and will yield your HR every 15 s.

4. Let the video run for 5 min. By then you will have 20 estimated heart rate values and 20 corresponding pulse oximeter ground truth values. These values will appear sequentially in labelled static text fields in the GUI window and will also be written automatically to a file.

5. Record your estimated HR and reference HR values in a table like the one specified in the Recording and presenting your data section.
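The count of 20 estimates follows from dividing a 5 min recording into 15 s windows. The Python sketch below shows that arithmetic and the frame ranges each window would cover. It assumes non-overlapping windows and a hypothetical frame rate of 30 fps; how GUI2 actually segments the video is not specified here, and the function name is illustrative.

```python
def window_bounds(duration_s, window_s, fs):
    """Frame index ranges [start, stop) of consecutive non-overlapping windows."""
    n_windows = int(duration_s // window_s)
    frames_per_window = int(round(window_s * fs))
    return [(i * frames_per_window, (i + 1) * frames_per_window)
            for i in range(n_windows)]

# 5 min recording, one HR estimate per 15 s window, assumed 30 fps
bounds = window_bounds(duration_s=300, window_s=15, fs=30)
print(len(bounds), "windows; first:", bounds[0], "last:", bounds[-1])
```

Shorter windows would give more frequent updates but coarser frequency resolution per estimate, since each spectrum is computed from fewer samples.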

Exercise 1: Sample table for recording rPPG HR estimates

| Video | SIG1 FFT | SIG1 Welch | SIG2 FFT | SIG2 Welch | SIG3 FFT | SIG3 Welch | SIG4 FFT | SIG4 Welch | Ref. HR |
|---|---|---|---|---|---|---|---|---|---|
| 1 | | | | | | | | | |
| 2 | | | | | | | | | |
| 3 | | | | | | | | | |
| 4 | | | | | | | | | |
| 5 | | | | | | | | | |
| 6 | | | | | | | | | |
| 7 | | | | | | | | | |
| 8 | | | | | | | | | |
| 9 | | | | | | | | | |
| 10 | | | | | | | | | |

| Exercise 2 |

|

Exercise 3: Sample table for recording estimated HR vs. Ref. HR

| Trial | Est. HR | Ref. HR |
|---|---|---|
| 1 | | |
| 2 | | |
| 3 | | |
| 4 | | |
| 5 | | |
| 6 | | |
| 7 | | |
| 8 | | |
| 9 | | |
| 10 | | |

| Exercise 1 |

|

| Exercise 2 |

|

| Exercise 3 |

|

| Evaluation |

|

- To provide students an introduction to the MATLAB Integrated Development Environment (IDE)

- To provide students experience with extracting a clinically relevant biosignal (rPPG) using a video-based method

- To provide students an introduction to signal processing and signal processing algorithms such as the FFT

- To provide students the opportunity to perform simple statistical analyses on data

Possible Extension Work

1. Investigation of the effect of distance of subject from camera on the SNR of rPPG signals and mean error of heart rate estimates.

2. Investigation of the effect of light intensity (perhaps adjusted by altering distance of light source from subject) on the SNR of rPPG signals and mean error of heart rate estimates.

3. Investigation of whether there is a statistically significant difference between mean error of heart rate estimates and SNR of rPPG signals for resting versus post-exercise rPPG.

4. Investigation of the effect of various kinds of motion on the mean error of heart rate estimates and SNR of rPPG signals.

1. PC with webcam

2. MATLAB software package

3. (Arduino) microcontroller

4. MATLAB support package for Arduino hardware (to allow Arduino output to be processed directly in MATLAB)

5. Pulse oximeter (module) interfaced with laptop via (Arduino) microcontroller

6. Light source

7. Metre rule

| 1 | For convenience of comparison, the lighting conditions – natural and studio – for COHFACE videos shall henceforth be referred to as ambient and dedicated. |

| 2 | The discussion of results focuses on mean error since its meaning is more intuitive than that of RMSE as a measure of agreement. Mean error also has the advantage of being defined relative to a reference. RMSE is, however, still useful as it facilitates comparison with the results of other studies which employ that measure. |

References

- Agashe, G. S., J. Coakley, and P. D. Mannheimer. 2006. “Forehead pulse oximetry: Headband use helps alleviate false low readings likely related to venous pulsation artifact.” Anesthesiology 105 (6):1111-6.

- American Heart Association. 2015. “Target Heart Rates.” American Heart Association, Last Modified Feb 2015, accessed 2 Feb http://www.heart.org/HEARTORG/HealthyLiving/PhysicalActivity/Target-Heart-Rates_UCM_434341_Article.jsp#.WnUqq3cXa00.

- Bal, U. 2015. “Non-contact estimation of heart rate and oxygen saturation using ambient light.” Biomed Opt Express 6 (1):86-97. [CrossRef]

- Balakrishnan, G., F. Durand, and J. Guttag. 2013. “Detecting Pulse from Head Motions in Video.” 2013 IEEE Conference on Computer Vision and Pattern Recognition, 23-28 June 2013.

- Bernstein, Joshua G. W., and Andrew J. Oxenham. 2005. “An autocorrelation model with place dependence to account for the effect of harmonic number on fundamental frequency discrimination.” The Journal of the Acoustical Society of America 117 (6):3816-3831. [CrossRef]

- Borst, C., W. Wieling, J. F. van Brederode, A. Hond, L. G. de Rijk, and A. J. Dunning. 1982. “Mechanisms of initial heart rate response to postural change.” Am J Physiol 243 (5):H676-81. [CrossRef]

- Bouguet, J. Y. 2001. “Pyramidal implementation of the affine Lucas Kanade feature tracker description of the algorithm.” Intel Corporation 1 (2):1-9.

- Brown, Guy, DeLiang Wang, Jacob Benesty, Shoji Makino, and Jingdong Chen. 2006. Separation of Speech by Computational Auditory Scene Analysis.

- Cardoso, J. F. 1999. “High-Order Contrasts for Independent Component Analysis.” Neural Computation 11 (1):157-192. [CrossRef]

- Cennini, G., J. Arguel, K. Aksit, and A. van Leest. 2010. “Heart rate monitoring via remote photoplethysmography with motion artifacts reduction.” Opt Express 18 (5):4867-75. [CrossRef]

- Challoner, A. V., and C. A. Ramsay. 1974. “A photoelectric plethysmograph for the measurement of cutaneous blood flow.” Phys Med Biol 19 (3):317-28. [CrossRef]

- Chan, E. D., M. M. Chan, and M. M. Chan. 2013. “Pulse oximetry: understanding its basic principles facilitates appreciation of its limitations.” Respir Med 107 (6):789-99. [CrossRef]

- Cochran, D. 1988. “A consequence of signal normalization in spectrum analysis.” ICASSP-88., International Conference on Acoustics, Speech, and Signal Processing, 11-14 Apr 1988.

- Cochran, W., J. Cooley, D. Favin, H. Helms, R. Kaenel, W. Lang, G. Maling, D. Nelson, C. Rader, and P. Welch. 1967. “What is the fast Fourier transform?” IEEE Transactions on Audio and Electroacoustics 15 (2):45-55. [CrossRef]

- Crow, Franklin C. 1984. “Summed-area tables for texture mapping.” SIGGRAPH Comput. Graph. 18 (3):207-212. [CrossRef]

- Da Costa, German. 1995. “Optical remote sensing of heartbeats.” Optics Communications 117 (5):395-398. [CrossRef]

- Datcu, Dragos, Marina Cidota, Stephan Lukosch, and Léon Rothkrantz. 2013. Noncontact Automatic Heart Rate Analysis in Visible Spectrum by Specific Face Regions. Vol. 767.

- Dyer, A. R., V. Persky, J. Stamler, O. Paul, R. B. Shekelle, D. M. Berkson, M. Lepper, J. A. Schoenberger, and H. A. Lindberg. 1980. “Heart rate as a prognostic factor for coronary heart disease and mortality: findings in three Chicago epidemiologic studies.” Am J Epidemiol 112 (6):736-49.

- Freund, Yoav, and Robert E. Schapire. 1997. “A Decision-Theoretic Generalization of On-Line Learning and an Application to Boosting.” Journal of Computer and System Sciences 55 (1):119-139. [CrossRef]

- Garbey, M., N. Sun, A. Merla, and I. Pavlidis. 2007. “Contact-Free Measurement of Cardiac Pulse Based on the Analysis of Thermal Imagery.” IEEE Transactions on Biomedical Engineering 54 (8):1418-1426. [CrossRef]

- Garcia, A. G. 2013. “Development of a Non-contact heart rate measurement system.” Master’s Thesis, School of Informatics, University of Edinburgh.

- Gillman, M. W., W. B. Kannel, A. Belanger, and R. B. D’Agostino. 1993. “Influence of heart rate on mortality among persons with hypertension: the Framingham Study.” Am Heart J 125 (4):1148-54.

- Guo, Z., Z. J. Wang, and Z. Shen. 2014. “Physiological parameter monitoring of drivers based on video data and independent vector analysis.” 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 4-9 May 2014.

- Haan, G. de, and V. Jeanne. 2013. “Robust Pulse Rate From Chrominance-Based rPPG.” IEEE Transactions on Biomedical Engineering 60 (10):2878-2886. [CrossRef]

- Hertzman, A. B., and C. R. Spealman. 1937. “Observation on the finger volume pulse recorded photoelectrically.” American Journal of Physiology 119:334-335.

- Heusch, Guillaume, André Anjos, and Sébastien Marcel. 2017. A Reproducible Study on Remote Heart Rate Measurement.

- Hjalmarson, A., E. A. Gilpin, J. Kjekshus, G. Schieman, P. Nicod, H. Henning, and J. Ross, Jr. 1990. “Influence of heart rate on mortality after acute myocardial infarction.” Am J Cardiol 65 (9):547-53. [CrossRef]

- Holton, B. D., K. Mannapperuma, P. J. Lesniewski, and J. C. Thomas. 2013. “Signal recovery in imaging photoplethysmography.” Physiol Meas 34 (11):1499-511. [CrossRef]

- Humphreys, K., T. Ward, and C. Markham. 2007. “Noncontact simultaneous dual wavelength photoplethysmography: a further step toward noncontact pulse oximetry.” Rev Sci Instrum 78 (4):044304. [CrossRef]

- Jiang, W. J., S. C. Gao, P. Wittek, and L. Zhao. 2014. “Real-time quantifying heart beat rate from facial video recording on a smart phone using Kalman filters.” 2014 IEEE 16th International Conference on e-Health Networking, Applications and Services (Healthcom), 15-18 Oct. 2014.

- Allen, John. 2007. “Photoplethysmography and its application in clinical physiological measurement.” Physiological Measurement 28 (3):R1.

- Kannel, W. B., C. Kannel, R. S. Paffenbarger, Jr., and L. A. Cupples. 1987. “Heart rate and cardiovascular mortality: the Framingham Study.” Am Heart J 113 (6):1489-94.

- Kumar, M., A. Veeraraghavan, and A. Sabharwal. 2015. “DistancePPG: Robust non-contact vital signs monitoring using a camera.” Biomed Opt Express 6 (5):1565-88. [CrossRef]

- Kwon, S., H. Kim, and K. S. Park. 2012. “Validation of heart rate extraction using video imaging on a built-in camera system of a smartphone.” Conf Proc IEEE Eng Med Biol Soc 2012:2174-7. [CrossRef]

- Lewandowska, M., J. Rumiński, T. Kocejko, and J. Nowak. 2011. “Measuring pulse rate with a webcam — A non-contact method for evaluating cardiac activity.” 2011 Federated Conference on Computer Science and Information Systems (FedCSIS), 18-21 Sept. 2011.

- Li, X., J. Chen, G. Zhao, and M. Pietikäinen. 2014. “Remote Heart Rate Measurement from Face Videos under Realistic Situations.” 2014 IEEE Conference on Computer Vision and Pattern Recognition, 23-28 June 2014.

- Lienhart, R., and J. Maydt. 2002. “An extended set of Haar-like features for rapid object detection.” Proceedings. International Conference on Image Processing, 2002.

- Lindqvist, A., and M. Lindelow. 2016. “Remote Heart Rate Extraction from Near Infrared Videos: An Approach to Heart Rate Measurements for the Smart Eye Head Tracking System.” Master’s Thesis, Department of Signals and Systems, Chalmers University of Technology.

- Lucas, Bruce D., and Takeo Kanade. 1981. “An iterative image registration technique with an application to stereo vision.” Proceedings of the 7th international joint conference on Artificial intelligence - Volume 2, Vancouver, BC, Canada.

- McDuff, D., S. Gontarek, and R. W. Picard. 2014. “Improvements in remote cardiopulmonary measurement using a five band digital camera.” IEEE Trans Biomed Eng 61 (10):2593-601. [CrossRef]

- Mensink, G. B. M., and H. Hoffmeister. 1997. “The relationship between resting heart rate and all-cause, cardiovascular and cancer mortality.” European Heart Journal 18 (9):1404-1410. [CrossRef]

- Miura, Hideharu, Shuichi Ozawa, Tsubasa Enosaki, Atsushi Kawakubo, Fumika Hosono, Kiyoshi Yamada, and Yasushi Nagata. 2017. “Quality assurance of a gimbaled head swing verification using feature point tracking.” Journal of Applied Clinical Medical Physics 18 (1):49-52. [CrossRef]

- National Heart, Lung and Blood Institute. “Arrhythmia.” Accessed 2 Feb. https://www.nhlbi.nih.gov/health-topics/arrhythmia.

- National Heart, Lung and Blood Institute. “Sudden Cardiac Arrest.” Accessed 2 Feb. https://www.nhlbi.nih.gov/health-topics/sudden-cardiac-arrest.

- Nijboer, J. A., J. C. Dorlas, and H. F. Mahieu. 1981. “Photoelectric plethysmography-some fundamental aspects of the reflection and transmission methods.” Clinical Physics and Physiological Measurement 2 (3):205. [CrossRef]

- Njoum, H., and P. A. Kyriacou. 2013. “Investigation of finger reflectance photoplethysmography in volunteers undergoing a local sympathetic stimulation.” Journal of Physics: Conference Series 450 (1):012012. [CrossRef]

- Papon, M. T. I., I. Ahmad, N. Saquib, and A. Rahman. 2015. “Non-invasive heart rate measuring smartphone applications using on-board cameras: A short survey.” 2015 International Conference on Networking Systems and Security (NSysS), 5-7 Jan. 2015.

- Pelegris, P., K. Banitsas, T. Orbach, and K. Marias. 2010. “A novel method to detect heart beat rate using a mobile phone.” Conf Proc IEEE Eng Med Biol Soc 2010:5488-91. [CrossRef]

- Peng, Rong-Chao, Xiao-Lin Zhou, Wan-Hua Lin, and Yuan-Ting Zhang. 2015. “Extraction of Heart Rate Variability from Smartphone Photoplethysmograms.” Computational and Mathematical Methods in Medicine 2015:11. [CrossRef]

- Persky, V., A. R. Dyer, J. Leonas, J. Stamler, D. M. Berkson, H. A. Lindberg, O. Paul, R. B. Shekelle, M. H. Lepper, and J. A. Schoenberger. 1981. “Heart rate: a risk factor for cancer?” Am J Epidemiol 114 (4):477-87.

- Phua, C. T., G. Lissorgues, B. C. Gooi, and B. Mercier. 2012. “Statistical Validation of Heart Rate Measurement Using Modulated Magnetic Signature of Blood with Respect to Electrocardiogram.” International Journal of Bioscience, Biochemistry and Bioinformatics 2 (2). [CrossRef]

- Poh, M. Z., D. J. McDuff, and R. W. Picard. 2010. “Non-contact, automated cardiac pulse measurements using video imaging and blind source separation.” Opt Express 18 (10):10762-74. [CrossRef]

- Poh, M. Z., D. J. McDuff, and R. W. Picard. 2011. “Advancements in Noncontact, Multiparameter Physiological Measurements Using a Webcam.” IEEE Transactions on Biomedical Engineering 58 (1):7-11. [CrossRef]

- Poynton, Charles. 2012. Digital Video and HD: Algorithms and Interfaces. second ed, Computer Graphics. Boston: Morgan Kaufmann.

- Raghi, E. R., and M. S. Lekshmi. 2016. “Single Channel Speech Separation with Frame-based Summary Autocorrelation Function Analysis.” Procedia Technology 24:1074-1079. [CrossRef]

- Rahman, Hamidur, Mobyen Ahmed, Shahina Begum, and Peter Funk. 2016. Real Time Heart Rate Monitoring from Facial RGB Color Video Using Webcam.

- Rahman, Hamidur, Shaibal Barua, and Shahina Begum. 2015. Intelligent Driver Monitoring Based on Physiological Sensor Signals: Application Using Camera.

- Rahman, Hamidur, Shahina Begum, and Mobyen Ahmed. 2015. Driver Monitoring in the Context of Autonomous Vehicle.

- Sahindrakar, P., G. de Haan, and I. Kirenko. 2011. “Improving motion robustness of contact-less monitoring of heart rate using video analysis.” Master’s Thesis, Department of Mathematics and Computer Science, Eindhoven University of Technology.

- Saquib, N., M. T. I. Papon, I. Ahmad, and A. Rahman. 2015. “Measurement of heart rate using photoplethysmography.” 2015 International Conference on Networking Systems and Security (NSysS), 5-7 Jan. 2015.

- Schmitz, G. 2011. “Video camera based photoplethysmography using ambient light.” Master’s Thesis, Department of Mathematics and Computer Science, Eindhoven University of Technology.

- Severinghaus, J. W. 2007. “Takuo Aoyagi: discovery of pulse oximetry.” Anesth Analg 105 (6 Suppl):S1-4, tables of contents. [CrossRef]

- Severinghaus, J. W., and Y. Honda. 1987. “History of blood gas analysis. VII. Pulse oximetry.” J Clin Monit 3 (2):135-8.

- Shao, D. 2016. “Monitoring Physiological Signals Using Camera.” PhD Thesis, Arizona State University.

- Shao, D., C. Liu, F. Tsow, Y. Yang, Z. Du, R. Iriya, H. Yu, and N. Tao. 2016. “Noncontact Monitoring of Blood Oxygen Saturation Using Camera and Dual-Wavelength Imaging System.” IEEE Transactions on Biomedical Engineering 63 (6):1091-1098. [CrossRef]

- Spodick, D. H. 1993. “Survey of selected cardiologists for an operational definition of normal sinus heart rate.” Am J Cardiol 72 (5):487-8. [CrossRef]

- Takano, C., and Y. Ohta. 2007. “Heart rate measurement based on a time-lapse image.” Med Eng Phys 29 (8):853-7. [CrossRef]

- Tamura, Toshiyo, Yuka Maeda, Masaki Sekine, and Masaki Yoshida. 2014. “Wearable Photoplethysmographic Sensors—Past and Present.” Electronics 3 (2). [CrossRef]

- Tarasenko, V., and D. W. Park. 2016. Detection and tracking over image pyramids using Lucas and Kanade algorithm. Vol. 11.

- Tarassenko, L., M. Villarroel, A. Guazzi, J. Jorge, D. A. Clifton, and C. Pugh. 2014. “Non-contact video-based vital sign monitoring using ambient light and auto-regressive models.” Physiol Meas 35 (5):807-31. [CrossRef]

- Tarvainen, M. P., P. O. Ranta-aho, and P. A. Karjalainen. 2002. “An advanced detrending method with application to HRV analysis.” IEEE Transactions on Biomedical Engineering 49 (2):172-175. [CrossRef]

- Teng, X. F., and Y. T. Zhang. 2004. “The effect of contacting force on photoplethysmographic signals.” Physiological Measurement 25 (5):1323.

- Texas Heart Institute. “Categories of Arrhythmias.” Last modified Aug 2016, accessed 2 Feb. http://www.texasheart.org/HIC/Topics/Cond/arrhycat.cfm.

- Thaulow, E., and J. E. Erikssen. 1991. “How important is heart rate?” J Hypertens Suppl 9 (7):S27-30.

- Tofighi, G., N. A. Afarin, K. Raahemifar, and A. N. Venetsanopoulos. 2014. “Hand Pointing Detection Using Live Histogram Template of Forehead Skin.” Accessed 2 Feb. https://arxiv.org/abs/1407.4898.

- Valentini, M., and G. Parati. 2009. “Variables influencing heart rate.” Prog Cardiovasc Dis 52 (1):11-9. [CrossRef]

- Verkruysse, Wim, Lars O. Svaasand, and J. Stuart Nelson. 2008. “Remote plethysmographic imaging using ambient light.” Optics Express 16 (26):21434-21445. [CrossRef]

- Viola, P., and M. Jones. 2001. “Rapid object detection using a boosted cascade of simple features.” Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, 2001.

- Wieringa, F. P., F. Mastik, and A. F. van der Steen. 2005. “Contactless multiple wavelength photoplethysmographic imaging: a first step toward “SpO2 camera” technology.” Ann Biomed Eng 33 (8):1034-41. [CrossRef]

- Wu, Hao-Yu, Michael Rubinstein, Eugene Shih, John Guttag, Frédo Durand, and William Freeman. 2012. “Eulerian video magnification for revealing subtle changes in the world.” ACM Trans. Graph. 31 (4):1-8. [CrossRef]

- Yu, Sun, Sijung Hu, Vicente Azorin-Peris, Jonathon A. Chambers, Yisheng Zhu, and Stephen E. Greenwald. 2011. “Motion-compensated noncontact imaging photoplethysmography to monitor cardiorespiratory status during exercise.” [CrossRef]

- Zhang, Q., G. q. Xu, M. Wang, Y. Zhou, and W. Feng. 2014. “Webcam based non-contact real-time monitoring for the physiological parameters of drivers.” The 4th Annual IEEE International Conference on Cyber Technology in Automation, Control and Intelligent, 4-7 June 2014.

- Zhao, F., M. Li, Y. Qian, and J. Z. Tsien. 2013. “Remote measurements of heart and respiration rates for telemedicine.” PLoS One 8 (10):e71384. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).