Introduction

AI is a transdisciplinary field that involves the use of computer algorithms to model intelligent behaviour with minimal human intervention, and it is informed by logic, statistics, cognitive psychology, linguistics, decision theory, neuroscience, cybernetics, and computer engineering.1 AI applications are primarily based on machine learning and utilise information retrieval, image and speech recognition, sensor technologies, robotic devices, and cognitive decision support systems. AI is already having a global impact and is rapidly transforming all spheres of modern life, including industry, social media, healthcare and space technology, as well as a wide range of government functions.2–4 The ultimate aim of AI is to create machines capable of performing intellectual tasks as humans do.5

Chat Generative Pre-trained Transformer (ChatGPT) is an artificial intelligence-based application freely available on the internet (https://chat.openai.com/). It is currently one of the most advanced natural language processing (NLP) models; it builds on GPT-3, a model with 175 billion parameters, and was trained on Microsoft Azure's AI supercomputer.6 ChatGPT is a generative AI capable of generating new content during real-time conversations.7 ChatGPT uses AI models trained on a massive amount of text data from before Q4 2021 to respond to user queries.8 It offers conversational responses: it retains the user's input and its own responses within a conversation thread and builds on its previous outputs when answering subsequent queries.

Since its launch by OpenAI in November 2022, ChatGPT has generated enormous excitement globally and continues to dominate tech media headlines owing to its remarkable abilities.9 Through its conversational interface, ChatGPT allows users to perform numerous text-based tasks, such as answering questions on an unprecedented scale, generating code, translating text, and generating bespoke content. As in other sectors, ChatGPT will inevitably affect academia in many ways, and healthcare education is no exception. One of the main concerns about ChatGPT in healthcare education relates to its ability to generate content and answer questions, which may encourage dishonesty in academic work and assessments.

The aims of this study were to investigate how ChatGPT performs on dental students’ assessments and evaluate the implications of this technology on the authenticity, validity and reliability of assessment methods commonly used in undergraduate dental education.

Methods

This was a web-based exploratory study investigating the accuracy of ChatGPT in attempting a range of recognised assessments in undergraduate dental curricula. The free version of ChatGPT (Feb 9 release) was used in this study.

ChatGPT was used to attempt ten items in each of five commonly used question formats: single-best answer (SBA) multiple-choice questions (MCQs), short answer questions (SAQs), short essay questions (SEQs), True/False questions, and fill-in-the-blank items.

A total of 50 items were created de novo by the research team for knowledge-based assessments. Most items (over 80%) were based on clinical vignettes, with a focus on the application of knowledge rather than mere factual recall. The items were based on core clinical topic areas in restorative dentistry, periodontics, fixed prosthodontics, removable prosthodontics, endodontics, pedodontics, orthodontics, preventive dentistry, oral surgery, and oral medicine. For each of these ten clinical subjects, one item was created in each of the five formats.

Written assignments comprising reflective reports based on multisource feedback (MSF) portfolio assignments, research methodology, and critical appraisal of the literature were also prepared.

All assessment items were blueprinted against the learning outcomes for undergraduate dental students identified by the General Dental Council (UK) and benchmarked against the knowledge level expected of newly qualified dental graduates, to ensure that the items were within the scope of undergraduate dental education.10 The assessment items and answer keys/assessment rubrics were reviewed by two blinded external reviewers to evaluate their face validity, accuracy, and suitability for undergraduate dental students.

A user account was created on the ChatGPT website, and the chatbot was asked to respond to each item in turn. A log of every query about individual assessment items and the response(s) generated by ChatGPT was automatically recorded on the account dashboard. All items and ChatGPT responses were exported from the website for assessment.

ChatGPT responses to MCQs, SAQs, SEQs, True/False and fill-in-the-blank items were marked by the research team using the answer key for each item. Responses to written assignments, i.e., MSF reflective reports, research methodology, and critical appraisal of the literature, were evaluated by blinded assessors using a predefined rubric for each type of assignment. A separate assessor was used for each of the three types of written assignment.

Results

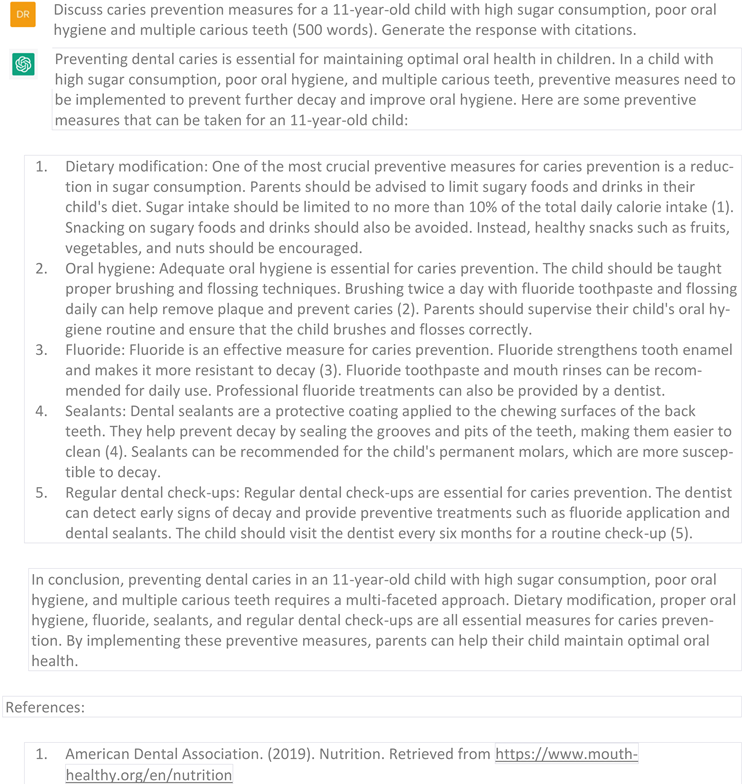

ChatGPT performed at or above a satisfactory level on all types of dental assessment included in this study except critical appraisal of the literature. Exemplars of each type of assessment item and ChatGPT's responses are included in the appendix. For knowledge-based assessments, ChatGPT's mean scores ranged from 70% to 100%. The highest mean score was recorded for True/False items (100%), while the lowest (70%) was observed for short essay questions. ChatGPT's performance on the other formats, i.e., MCQs, SAQs and fill-in-the-blank items, yielded a mean score of 90% each. ChatGPT's performance on knowledge-based assessments and the number of items in each assessment category are summarised in Table 1.

Table 1.

ChatGPT Performance on Knowledge-based Assessments.

| No. | Assessment | Number of Assessment Items (N) | Mean Percentage Score, ChatGPT (%) |
|---|---|---|---|
| 1 | Single-best answer (SBA) multiple-choice questions (MCQs) | 10 | 90 |
| 2 | Fill in the blanks items | 10 | 90 |
| 3 | Short answer questions (SAQs) | 10 | 90 |
| 4 | True/False questions | 10 | 100 |
| 5 | Short essay questions (SEQs) | 10 | 70 |
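Table 1 reports only per-format mean scores. Purely to illustrate how a pooled figure could be derived from those values (it is not a result reported in the text), the sketch below weights each format's mean by its number of items. Because every format contains ten items, the weighted and unweighted means coincide here. The `TABLE_1` dictionary and the `pooled_mean` helper are hypothetical names introduced for this illustration.

```python
# Per-format results transcribed from Table 1: (number of items, mean % score).
TABLE_1 = {
    "Single-best answer MCQs": (10, 90.0),
    "Fill in the blanks items": (10, 90.0),
    "Short answer questions (SAQs)": (10, 90.0),
    "True/False questions": (10, 100.0),
    "Short essay questions (SEQs)": (10, 70.0),
}


def pooled_mean(results: dict[str, tuple[int, float]]) -> float:
    """Item-weighted mean of the per-format mean percentage scores."""
    total_items = sum(n for n, _ in results.values())
    weighted_sum = sum(n * score for n, score in results.values())
    return weighted_sum / total_items


if __name__ == "__main__":
    n_items = sum(n for n, _ in TABLE_1.values())
    print(f"Pooled mean across {n_items} items: {pooled_mean(TABLE_1):.1f}%")
```

Weighting by item count keeps the helper correct if a format were ever assessed with a different number of items than the others.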

The main limitation observed in ChatGPT's performance on these items was that it could only answer text-based questions and could not process image-based questions. The main limitation in responses to SEQs was the limited detail on clinical interventions and follow-up visits, which resulted in lower scores than for the other question formats. Nevertheless, no factually inaccurate information was identified in the responses to any of the SEQ items.

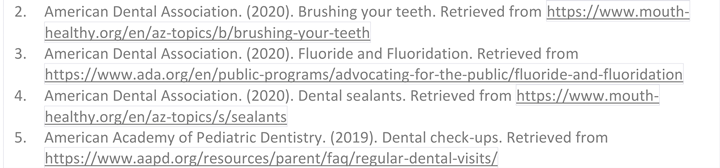

Performance of ChatGPT on MSF assignments, research methodology and critical appraisal of the literature is summarised in Table 2, and exemplars are provided in the appendix.

ChatGPT was able to generate good-quality reflective reports on MSF, and all five reports received an excellent grade. ChatGPT was also able to generate satisfactory research methodologies for five different RCTs. However, deficiencies in the details of sample size calculation, blinding, and assessment of outcome measures were noted consistently in all five attempts. Finally, the lowest grade was observed for critical appraisal of the literature. The key limitations observed consistently across all of ChatGPT's attempts were a low word count (upper limit of 650 words), the fact that most references cited by ChatGPT were more than five years old, and the omission of some key studies based on RCTs. However, some improvement in the quality of the critical appraisal and references could be achieved through conversation with ChatGPT.

Discussion

This study is the first to investigate the impact of generative AI, represented by ChatGPT, on commonly used assessments in dental education. Our results demonstrate the capability of ChatGPT to attempt dental assessments and achieve acceptable grades. Our results are consistent with a few recent studies showing that ChatGPT performed at or near the passing threshold on all three parts of the United States Medical Licensing Examination® (USMLE®) without any additional training or reinforcement.11,12 The findings underscore the need for dental education providers to recognize the impact of rapid technological advances on dental education. This calls for strategies to mitigate inappropriate use of technology while ensuring that students and faculty are able to benefit from it. This is not the first time that education providers have been confronted by challenges posed by innovative technologies. The current generation of academics has already witnessed the internet revolution. Access to information has been transformed by powerful search engines such as Google, web-based applications such as YouTube, and digital flashcards.13 Unlike in the pre-millennium era, teachers and textbooks are no longer students' exclusive sources of information.

One of the main strengths of ChatGPT over traditional web search engines is that it offers an interactive, conversation-style platform and provides a direct response to queries instead of signposting the user to numerous websites. Users can also engage with ChatGPT to dissect the information and question its authenticity and sources. ChatGPT can act as a virtual tutor that is accessible round the clock and free of charge, and its use is likely to increase in higher education, including medical and science education.12,14,15 ChatGPT offers an intelligent learning platform that can scaffold students' learning and adapt and personalise learning content. Use of ChatGPT as a learning platform appears to be a suitable option; the main caveat, as with any web search, is the risk of inaccurate information, so users should cross-check information when in doubt. ChatGPT, and similar platforms that may be rolled out in the future, are likely to evolve further with input from technical experts as well as users.

Assessments are a critical part of dental education and inform decisions regarding student progression through successive stages of a dental programme.16 Quality assurance of assessments is essential for institutional reputation and public confidence. Education providers need to ensure that assessment content is kept secure and that student submissions represent original work. The main limitation of the reflective portfolio reports generated by ChatGPT was that they made little reference to specific learning activities or events. However, it may be argued that a student prepared to use ChatGPT for an assignment could tweak the output to address such limitations. Similarly, ChatGPT may provide a solid foundation for other assignments such as research methodology and critical appraisal, and students may be able to refine these to address any limitations, with or without further help from ChatGPT.

For academic assignments submitted by students, an increasing number of institutions carry out plagiarism checks using software applications such as iThenticate (Turnitin LLC).17 However, plagiarism-checking software is primarily designed to quantify the similarity index between a submission and published works available online and to identify the source. Given that ChatGPT generates new text, routine plagiarism-checking software may not reliably identify ChatGPT outputs.

Detecting AI-generated text is an active area of research. One approach involves using machine learning models to differentiate between AI-written and human-written text.18 A number of AI-based tools have recently been developed to address this problem, such as OpenAI's AI Text Classifier, DetectGPT and GPTZero.19 However, these tools are not always accurate, and misclassification can occur. For instance, some of the outputs generated by ChatGPT in this study were checked using such detection software, but the results were inconclusive. Moreover, as language processing becomes more sophisticated, such detection may become even more difficult in the future.20 Therefore, further research is required to improve the precision of these tools.
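DetectGPT,18 one of the tools mentioned above, works zero-shot: instead of training a classifier, it rewrites a passage several times with small perturbations and compares a language model's log-probability of the original with the mean log-probability of the rewrites. Machine-generated text tends to lie in regions where such perturbations lower the model's log-probability noticeably, so it yields a larger positive gap. The sketch below shows only that scoring arithmetic under stated assumptions: the log-probability values are invented toy numbers, obtaining real values would require running language models (outside this sketch), and the function names and the decision threshold are hypothetical.

```python
import statistics


def perturbation_discrepancy(
    logp_original: float,
    logp_perturbed: list[float],
    normalise: bool = True,
) -> float:
    """DetectGPT-style score: the scoring model's log-probability of a passage
    minus the mean log-probability of its perturbed rewrites.

    If normalise is True, the gap is divided by the standard deviation of the
    perturbed log-probabilities, which requires at least two perturbations.
    """
    if not logp_perturbed:
        raise ValueError("at least one perturbed log-probability is required")
    gap = logp_original - statistics.fmean(logp_perturbed)
    if normalise:
        if len(logp_perturbed) < 2:
            raise ValueError("normalisation needs at least two perturbations")
        spread = statistics.stdev(logp_perturbed)
        if spread > 0:
            gap /= spread
    return gap


def looks_machine_generated(score: float, threshold: float = 1.0) -> bool:
    """Hypothetical decision rule: flag a passage whose score exceeds a
    threshold. A real threshold would need calibration on labelled text."""
    return score > threshold


if __name__ == "__main__":
    # Invented toy log-probabilities, not data from this study.
    perturbed = [-131.0, -128.5, -133.2, -129.8]
    for label, original in [("passage A", -120.0), ("passage B", -130.0)]:
        score = perturbation_discrepancy(original, perturbed)
        print(f"{label}: score={score:.2f}, "
              f"flagged={looks_machine_generated(score)}")
```

A single score of this kind is only one signal; as noted above, detection tools can misclassify text, and the choice of threshold trades false positives against missed detections.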

Knowledge-based assessments are a core component of undergraduate dental curricula and serve to demonstrate that dental graduates have the underpinning scientific knowledge to inform their clinical practice.21–23 Historically, knowledge-based assessments in dental education have been delivered face-to-face in university settings using pen and paper. Although this mode of assessment delivery remains the most common, web-based digital assessment platforms are gaining popularity and allow assessments to be administered online as well as offline. Digital assessment platforms allow secure storage of assessment content, in addition to supporting the design, blueprinting, audit and psychometric analysis of assessments. Following the COVID-19 pandemic, many healthcare institutions have used remote proctoring to deliver online assessments.13 Although face-to-face assessments have returned, remote assessments offer some advantages to dental institutions, especially where resource constraints limit the space and IT equipment available for large groups of students sitting an assessment.

ChatGPT does not pose any threat to knowledge-based assessments delivered face-to-face on a university campus under direct invigilation. During COVID-19, remote online assessments were delivered on a large scale by dental institutions.13,24 However, only a few institutions had the resources to use commercially available platforms designed to proctor students appropriately.25 Most dental institutions, particularly in developing countries, used general-purpose videoconferencing platforms such as Zoom and Webex to deliver assessments remotely, which allowed students to be observed on camera during the assessments. However, these platforms did not allow candidates' internet access to be restricted during assessments. This was mitigated, in part, by creating "non-searchable" questions so that students could not find a quick answer by searching the internet. However, with the availability of chatbots such as ChatGPT, this approach may not work if remote assessments are reintroduced without appropriate proctoring.

The educational value of ChatGPT is promising, and its use in dental education can provide a personalised learning experience that supports the varying learning needs of dental students. Face-to-face invigilated assessments are unlikely to be affected directly by ChatGPT and do not warrant any modification at this stage. However, there is a risk of dishonesty in academic assignments completed by students off campus, and dental educators need to develop appropriate policies to mitigate such risks.

Open access to ChatGPT is a recent phenomenon and represents a learning curve for dental educators. A quick-fix approach is unlikely to be the right way forward; dental educators need to deliberate on, identify and implement measures to address the challenges posed by ChatGPT. Direct invigilation of assignments by moving remote assessments to campus appears to be a possible solution. However, it may not be realistic or appropriate to complete some of these assignments within a timed session. Another option may be to change the format of written assignments to oral presentations followed by questions from assessors.26 However, it would be vital to factor in the resources and faculty time required to assess large numbers of students. Inevitably, dental education will adapt to new technology as it has done in the past, and it is important to avoid knee-jerk reactions. Engaging all stakeholders in dental education, including students, to find sustainable solutions is the way forward. It is imperative that dental educators share their institutional approaches and experiences with colleagues to identify best practices that can be adopted more widely.

Conclusion

ChatGPT is a double-edged sword: while it can be helpful to students and teachers alike, it can also be used to generate assignments and answer assessment questions, which raises concerns about potential cheating and dishonesty in academic work. Notwithstanding their current limitations, generative AI applications have the potential to revolutionize virtual learning. Instead of treating generative AI as a threat, dental educators need to adapt teaching and assessment in dental education for the benefit of learners while mitigating the dishonest use of generative AI applications.

Author Contributions

Conceptualization KA and FT, and NB; Methodology, KA & MD, Software, Resources, NB KA; drafting of manuscript; FT and MD editing of manuscript; All authors have read and agreed to the published version of the manuscript.

Funding

The authors did not receive support from any organization for the submitted work. Open access funding for the publication provided by Qatar National Library.

Institutional Review Board Statement

Not applicable; the manuscript only includes open-source data. Ethics approval/consent to publish are not relevant.

Informed Consent Statement

Not applicable

Data Availability Statement

The data underlying this article will be shared on reasonable request to the corresponding author.

Acknowledgments

The authors would like to acknowledge ChatGPT for generating responses to a wide range of assessment items. The authors' queries and ChatGPT's responses are included in the appendix.

Conflicts of Interest

The authors have no conflicts of interest to declare.

Appendix: Exemplars of author queries and verbatim outputs by GPT

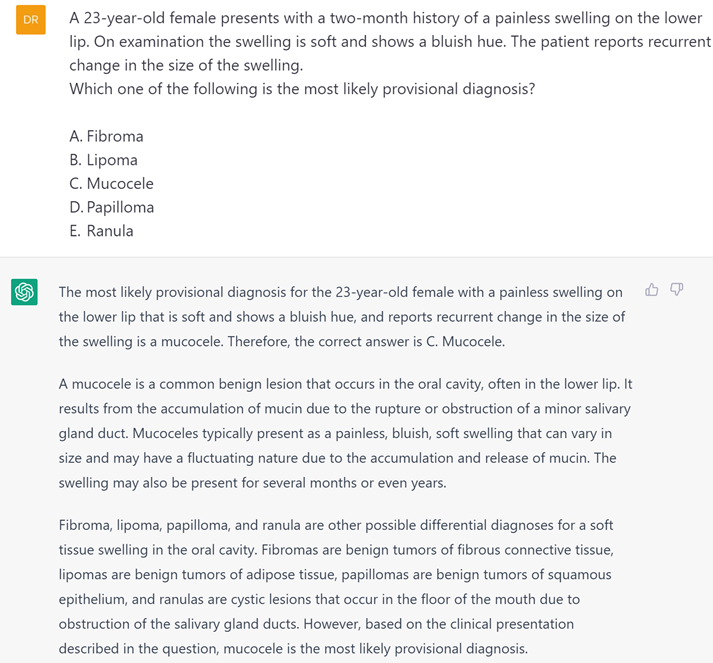

Exemplar 1 Multiple Choice Question (MCQ) - Single-best answer

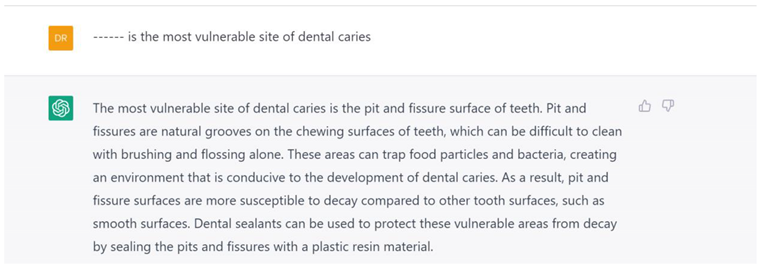

Exemplar 2 Fill in the blanks

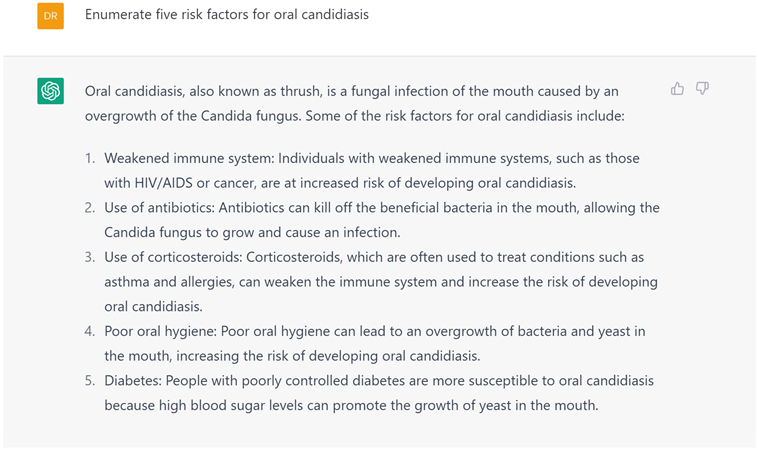

Exemplar 3 Short answer question (SAQ)

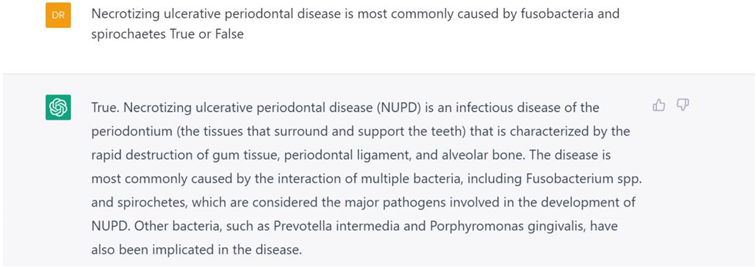

Exemplar 5 Short Essay Question (SEQ)

Exemplar 6 Short Essay Question (SEQ) with Citations

Exemplar 7 Reflective Report on Multisource Feedback

Exemplar 8 Research Proposal

Exemplar 9 Critical Appraisal of Literature

References

- Howard J. Artificial intelligence: Implications for the future of work. American Journal of Industrial Medicine. 2019;62.

- King MR. The future of AI in medicine: a perspective from a Chatbot. Ann Biomed Eng. 2022;1–5.

- Girimonte D, Izzo D. Artificial intelligence for space applications. Intelligent Computing Everywhere. 2007;235–53.

- Sharma GD, Yadav A, Chopra R. Artificial intelligence and effective governance: A review, critique and research agenda. Sustainable Futures. 2020;2:100004.

- Grace K, Salvatier J, Dafoe A, Zhang B, Evans O. Viewpoint: When will AI exceed human performance? Evidence from AI experts. Journal of Artificial Intelligence Research. 2018;62.

- Microsoft teams up with OpenAI to exclusively license GPT-3 language model - The Official Microsoft Blog [Internet]. [cited 2023 Feb 18]. Available online: https://blogs.microsoft.com/blog/2020/09/22/microsoft-teams-up-with-openai-to-exclusively-license-gpt-3-language-model/.

- Qadir J. Engineering Education in the Era of ChatGPT: Promise and Pitfalls of Generative AI for Education. 2022 Feb. Available online: https://www.techrxiv.org/articles/preprint/Engineering_Education_in_the_Era_of_ChatGPT_Promise_and_Pitfalls_of_Generative_AI_for_Education/21789434.

- Model index for researchers - OpenAI API [Internet]. [cited 2023 Feb 23]. Available online: https://platform.openai.com/docs/model-index-for-researchers.

- OpenAI: Everything To Know About The Company Behind ChatGPT [Internet]. [cited 2023 Feb 21]. Available online: https://www.augustman.com/sg/gear/tech/openai-what-to-know-about-the-company-behind-chatgpt/.

- Innes N, Hurst D. GDC learning outcomes for the undergraduate dental curriculum. Evidence-Based Dentistry. 2012;13.

- Kung TH, Cheatham M, Medenilla A, Sillos C, De Leon L, Elepaño C, et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digital Health [Internet]. 2023 Feb;2(2):1–12.

- Gilson A, Safranek CW, Huang T, Socrates V, Chi L, Taylor RA, et al. How Does ChatGPT Perform on the United States Medical Licensing Examination? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med Educ [Internet]. 2023 Feb;9:e45312. Available online: http://www.ncbi.nlm.nih.gov/pubmed/36753318.

- Ali K, Alhaija ESA, Raja M, Zahra D, Brookes ZL, McColl E, et al. Blended learning in undergraduate dental education: a global pilot study. Med Educ Online [Internet]. 2023;28(1):2171700.

- Rudolph J, Tan S, Tan S. ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? Journal of Applied Learning and Teaching. 2023;6(1).

- Qadir J. Engineering Education in the Era of ChatGPT: Promise and Pitfalls of Generative AI for Education. 2022.

- Patel US, Tonni I, Gadbury-Amyot C, van der Vleuten CPM, Escudier M. Assessment in a global context: An international perspective on dental education. European Journal of Dental Education. 2018;22.

- Lapeña JFF. A Dozen Years, A Dozen Roses. Philippine Journal of Otolaryngology-Head and Neck Surgery. 2018;33(2).

- Mitchell E, Lee Y, Khazatsky A, Manning CD, Finn C. DetectGPT: Zero-Shot Machine-Generated Text Detection using Probability Curvature. arXiv preprint arXiv:2301.11305. 2023.

- GPTZero [Internet]. [cited 2023 Feb 23]. Available online: https://gptzero.me/.

- How to Detect AI-Generated Text, According to Researchers | WIRED [Internet]. [cited 2023 Feb 21]. Available online: https://www.wired.com/story/how-to-spot-generative-ai-text-chatgpt/.

- Ali K, Jerreat M, Zahra D, Tredwin C. Correlations between final-year dental students’ performance on knowledge-based and clinical examinations. J Dent Educ. 2017;81(12).

- Zahra D, Bennett J, Belfield L, Ali K, Mcilwaine C, Bruce M, et al. Effect of constant versus variable small-group facilitators on students’ basic science knowledge in an enquiry-based dental curriculum. European Journal of Dental Education. 2019;23(4).

- Ali K, Cockerill J, Bennett JH, Belfield L, Tredwin C. Transfer of basic science knowledge in a problem-based learning curriculum. European Journal of Dental Education. 2020;24(3).

- Jaap A, Dewar A, Duncan C, Fairhurst K, Hope D, Kluth D. Effect of remote online exam delivery on student experience and performance in applied knowledge tests. BMC Med Educ. 2021;21(1).

- Ali K, Barhom N, Duggal MS. Online assessment platforms: What is on offer? European Journal of Dental Education [Internet]. Available online: https://onlinelibrary.wiley.com/doi/abs/10.1111/eje.12807.

- ChatGPT raises uncomfortable questions about teaching and classroom learning | The Straits Times [Internet]. [cited 2023 Feb 21]. Available online: https://www.straitstimes.com/opinion/need-to-review-literacy-assessment-in-the-age-of-chatgpt.

Table 2.

ChatGPT Performance on Written Assignments.

| No. | Assignments | Number of Assessment Items (N) | Average ChatGPT Grade |
|---|---|---|---|
| 1 | Reflective reports on multisource feedback (MSF) | 5 | Excellent |
| 2 | Research methodology | 5 | Satisfactory |
| 3 | Critical appraisal of literature | 5 | Borderline |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).