Submitted: 06 March 2023

Posted: 07 March 2023

Abstract

Keywords:

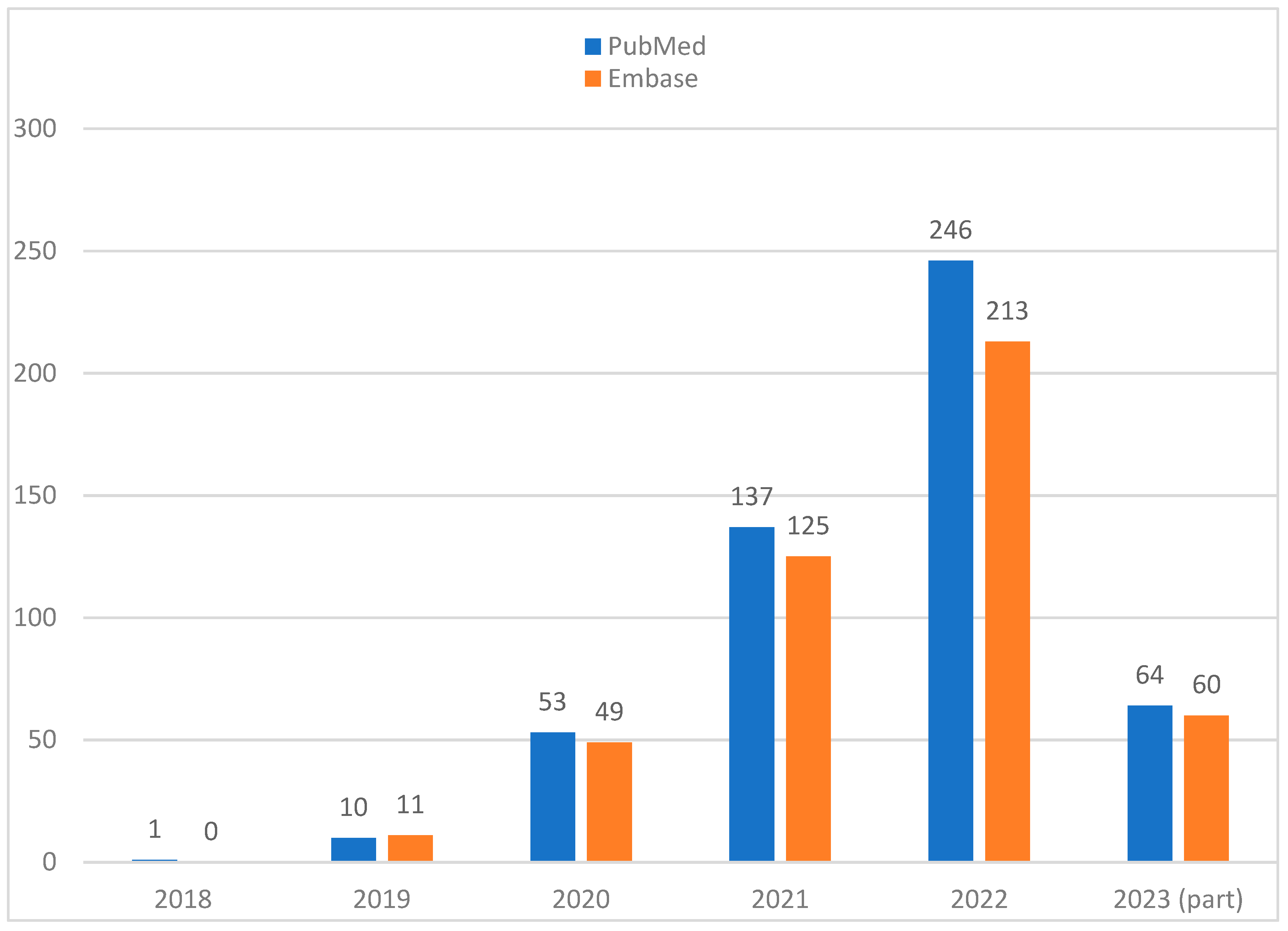

1. Introduction

2. From ‘black box’ to ‘(translucent) glass box’

3. Legal and regulatory compliance

4. Explainability: transparent or post-hoc

5. Privacy and security: a mixed bag

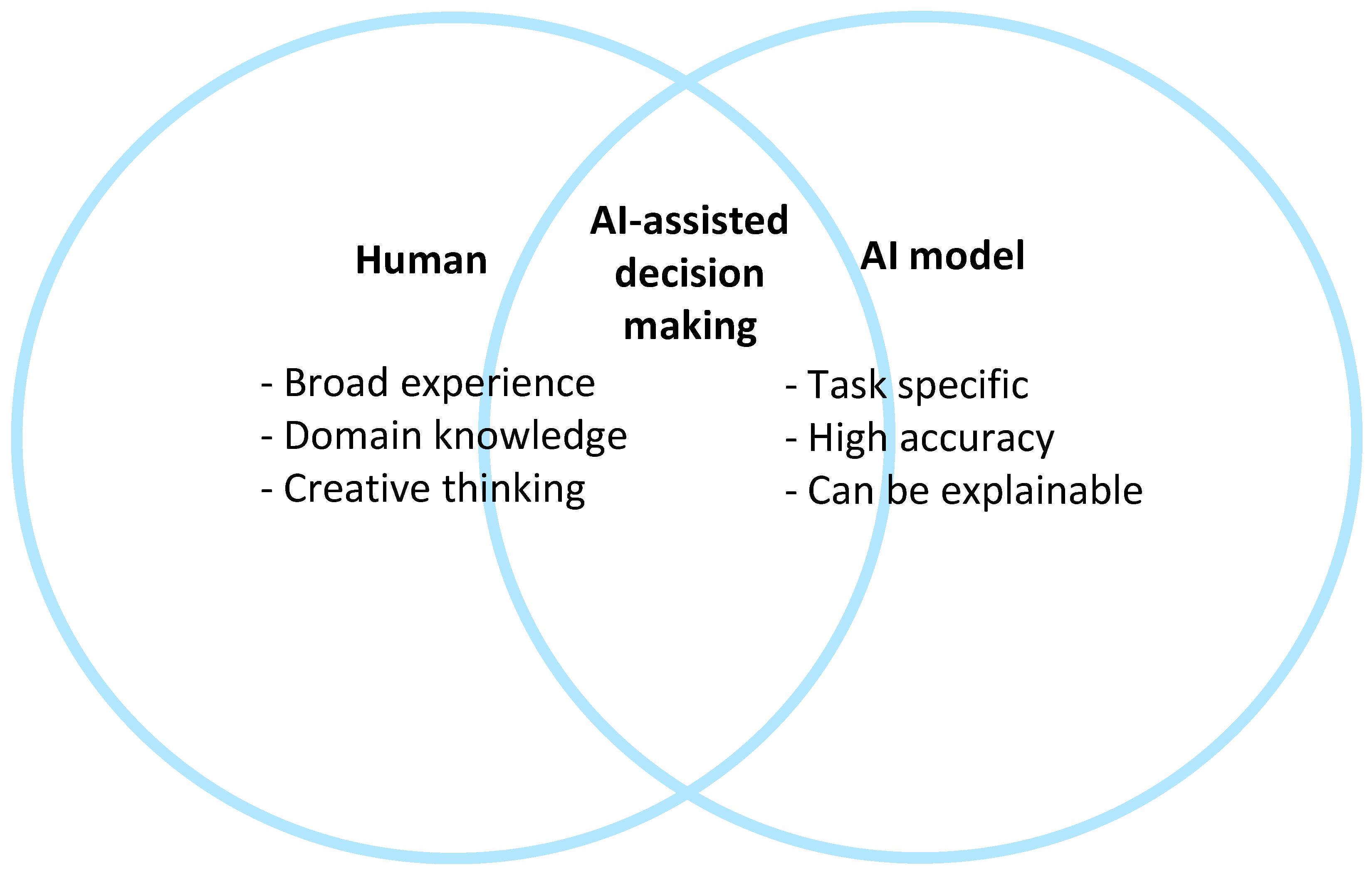

6. Collaboration between humans and AI

7. Do explanations always raise trust?

8. Scientific Explainable Artificial Intelligence (sXAI)

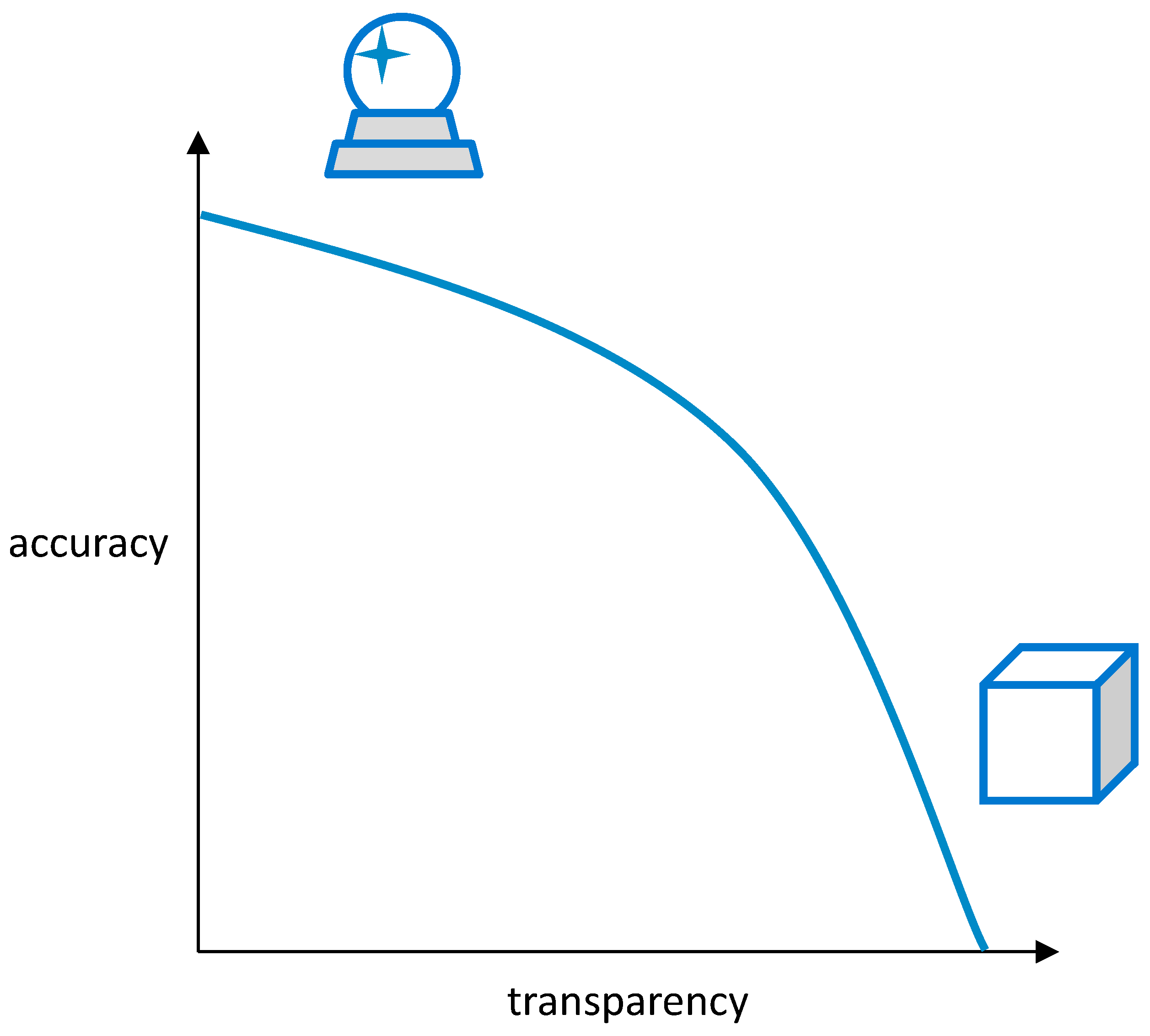

9. ‘Glass box’ vs. ‘crystal ball’: balance between explainability and accuracy/performance

10. How to measure explainability?

11. Increasing complexity in the future

12. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).