Submitted: 04 April 2023

Posted: 04 April 2023


Abstract

Keywords:

1. Introduction

2. Methods

2.1. Participants

2.2. Analyses

2.2.1. Balanced EI (BEI)

2.2.2. Balanced Level EI (BLEI)

2.2.3. Quality EI (QEI)

2.2.4. Unbiased EI (UEI)

3. Results

4. Discussion

5. Limitations

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest
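The outline above names the EI and four variants. Here is a minimal sketch of the base quantity. The EI is the ratio of correctly to incorrectly classified cases, (TP + TN)/(FP + FN), which is the same as Acc/(1 − Acc) [3]. The BEI function rests on an assumption: it takes BEI to be balanced accuracy over balanced inaccuracy, (Sens + Spec)/((1 − Sens) + (1 − Spec)). That expression reduces to (1 + Y)/(1 − Y), where Youden's index is Y = Sens + Spec − 1. The class name, function names and counts are hypothetical, and BLEI, QEI and UEI are not sketched.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Hypothetical container for a 2x2 table (test result vs. reference diagnosis)."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives
    tn: int  # true negatives

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)


def efficiency_index(t: TwoByTwo) -> float:
    """EI: correctly classified over misclassified cases, i.e. Acc / (1 - Acc).

    Returns infinity when there are no misclassifications.
    """
    incorrect = t.fp + t.fn
    return math.inf if incorrect == 0 else (t.tp + t.tn) / incorrect


def balanced_efficiency_index(t: TwoByTwo) -> float:
    """ASSUMED definition: balanced accuracy over balanced inaccuracy,
    (Sens + Spec) / ((1 - Sens) + (1 - Spec))."""
    sens, spec = t.sensitivity, t.specificity
    return (sens + spec) / ((1 - sens) + (1 - spec))


def bei_from_youden(y: float) -> float:
    """The same assumed BEI written via Youden's index Y = Sens + Spec - 1."""
    return (1 + y) / (1 - y)


if __name__ == "__main__":
    # Hypothetical counts for illustration only; not data from this study.
    table = TwoByTwo(tp=40, fp=30, fn=10, tn=70)
    print(f"EI  = {efficiency_index(table):.3f}")
    print(f"BEI = {balanced_efficiency_index(table):.3f}")
```

The expression (1 + Y)/(1 − Y) increases with Y. Under this assumed definition, the cut-off that maximises BEI is therefore the same one that maximises Youden's index.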

References

1. Bolboacă, S.D. Medical diagnostic tests: a review of test anatomy, phases, and statistical treatment of data. Comput. Math. Methods Med. 2019, 2019, 1891569.

2. Larner, A.J. The 2x2 matrix. Contingency, confusion and the metrics of binary classification; Springer: London, 2021.

3. Larner, A.J. Communicating risk: developing an “Efficiency Index” for dementia screening tests. Brain Sci. 2021, 11, 1473.

4. Larner, A.J. Evaluating binary classifiers: extending the Efficiency Index. Neurodegener. Dis. Manag. 2022, 12, 185–194.

5. McNicol, D. A primer of signal detection theory; Lawrence Erlbaum Associates: Mahwah, New Jersey and London, 2005.

6. Kraemer, H.C. Evaluating medical tests. Objective and quantitative guidelines; Sage: Newbury Park, California, 1992.

7. Katz, D.; Baptista, J.; Azen, S.P.; Pike, M.C. Obtaining confidence intervals for the risk ratio in cohort studies. Biometrics 1978, 34, 469–474.

8. Jaeschke, R.; Guyatt, G.; Sackett, D.L. Users’ guides to the medical literature. III. How to use an article about a diagnostic test. B. What are the results and will they help me in caring for my patients? JAMA 1994, 271, 703–707.

9. Glas, A.S.; Lijmer, J.G.; Prins, M.H.; Bonsel, G.J.; Bossuyt, P.M. The diagnostic odds ratio: a single indicator of test performance. J. Clin. Epidemiol. 2003, 56, 1129–1135.

10. Rosenthal, J.A. Qualitative descriptors of strength of association and effect size. J. Soc. Serv. Res. 1996, 21, 37–59.

11. McGee, S. Simplifying likelihood ratios. J. Gen. Intern. Med. 2002, 17, 647–650.

12. Larner, A.J. Cognitive screening in older people using Free-Cog and Mini-Addenbrooke’s Cognitive Examination (MACE). Submitted 2023.

13. Hsieh, S.; McGrory, S.; Leslie, F.; Dawson, K.; Ahmed, S.; Butler, C.R.; Rowe, J.B.; Mioshi, E.; Hodges, J.R. The Mini-Addenbrooke’s Cognitive Examination: a new assessment tool for dementia. Dement. Geriatr. Cogn. Disord. 2015, 39, 1–11.

14. Noel-Storr, A.H.; McCleery, J.M.; Richard, E.; Ritchie, C.W.; Flicker, L.; Cullum, S.J.; Davis, D.; Quinn, T.J.; Hyde, C.; Rutjes, A.W.S.; Smailagic, N.; Marcus, S.; Black, S.; Blennow, K.; Brayne, C.; Fiorivanti, M.; Johnson, J.K.; Köpke, S.; Schneider, L.S.; Simmons, A.; Mattsson, N.; Zetterberg, H.; Bossuyt, P.M.M.; Wilcock, G.; McShane, R. Reporting standards for studies of diagnostic test accuracy in dementia: the STARDdem Initiative. Neurology 2014, 83, 364–373.

15. Larner, A.J. MACE for diagnosis of dementia and MCI: examining cut-offs and predictive values. Diagnostics (Basel) 2019, 9, E51.

16. Larner, A.J. Accuracy of cognitive screening instruments reconsidered: overall, balanced, or unbiased accuracy? Neurodegener. Dis. Manag. 2022, 12, 67–76.

17. Larner, A.J. Applying Kraemer’s Q (positive sign rate): some implications for diagnostic test accuracy study results. Dement. Geriatr. Cogn. Dis. Extra 2019, 9, 389–396.

18. Garrett, C.T.; Sell, S. Summary and perspective: Assessing test effectiveness – the identification of good tumour markers. In Cellular cancer markers; Garrett, C.T., Sell, S., Eds.; Springer, 1995; pp. 455–477.

19. Carter, J.V.; Pan, J.; Rai, S.N.; Galandiuk, S. ROC-ing along: evaluation and interpretation of receiver operating characteristic curves. Surgery 2016, 159, 1638–1645.

20. Hand, D.J.; Christen, P.; Kirielle, N. F*: an interpretable transformation of the F measure. Mach. Learn. 2021, 110, 451–456.

21. Sowjanya, A.M.; Mrudula, O. Effective treatment of imbalanced datasets in health care using modified SMOTE coupled with stacked deep learning algorithms. Appl. Nanosci. 2022, Feb 3, 1–12.

22. Mbizvo, G.K.; Bennett, K.H.; Simpson, C.R.; Duncan, S.E.; Chin, R.F.M.; Larner, A.J. Using Critical Success Index or Gilbert Skill Score as composite measures of positive predictive value and sensitivity in diagnostic accuracy studies: weather forecasting informing epilepsy research. Epilepsia 2023, online ahead of print.

23. Sud, A.; Turnbull, C.; Houlston, R.S.; Horton, R.H.; Hingorani, A.D.; Tzoulaki, I.; Lucassen, A. Realistic expectations are key to realising the benefits of polygenic scores. BMJ 2023, 380, e073149.

24. Larner, A.J. Assessing cognitive screening instruments with the critical success index. Prog. Neurol. Psychiatry 2021, 25(3), 33–37.

25. Roccetti, M.; Delnevo, G.; Casini, L.; Cappiello, G. Is bigger always better? A controversial journey to the center of machine learning design, with uses and misuses of big data for predicting water meter failures. J. Big Data 2019, 6, 70.

| EI value | Qualitative classification: change in probability of diagnosis (after Jaeschke et al. [8]) | Qualitative classification: “effect size” (after Rosenthal [10]) | Semi-quantitative classification: approximate % change in probability of diagnosis (after McGee [11]) |
|---|---|---|---|
| ≤ 0.1 | Very large decrease | - | - |
| 0.1 | Large decrease | - | –45 |
| 0.2 | Large decrease | - | –30 |
| 0.5 | Moderate decrease | - | –15 |
| 1.0 | - | - | 0 |
| ~1.5 | - | Small | - |
| 2.0 | Moderate increase | - | +15 |
| ~2.5 | - | Medium | - |
| ~4 | - | Large | - |
| 5.0 | Moderate increase | - | +30 |
| 10.0 | Large increase | - | +45 |
| ≥ 10.0 | Very large increase | Very large | - |
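The semi-quantitative column follows McGee's rule of thumb for likelihood ratios, applied here to EI values. Under that rule, the change in probability of diagnosis is approximately 0.19 × ln(ratio) [11]. The block below is a minimal sketch, and the function name is hypothetical. McGee framed the approximation for pre-test probabilities of roughly 10–90%, so results outside that range should be read with caution.

```python
import math


def approx_probability_change(ei: float) -> float:
    """McGee-style approximation: change in probability ~ 0.19 * ln(ratio).

    Returned as a fraction, e.g. 0.13 means about +13 percentage points.
    The ratio must be positive for the logarithm to be defined.
    """
    if ei <= 0:
        raise ValueError("EI must be positive")
    return 0.19 * math.log(ei)


if __name__ == "__main__":
    # EI values that appear in the table's semi-quantitative column.
    for ei in (0.1, 0.2, 0.5, 1.0, 2.0, 5.0, 10.0):
        change = 100 * approx_probability_change(ei)
        print(f"EI {ei:>4}: {change:+5.1f} percentage points")
```

For EI values of 2, 5 and 10 the approximation gives about +13, +31 and +44 percentage points, which the table rounds to +15, +30 and +45. Reciprocal values give the same magnitudes with a negative sign.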

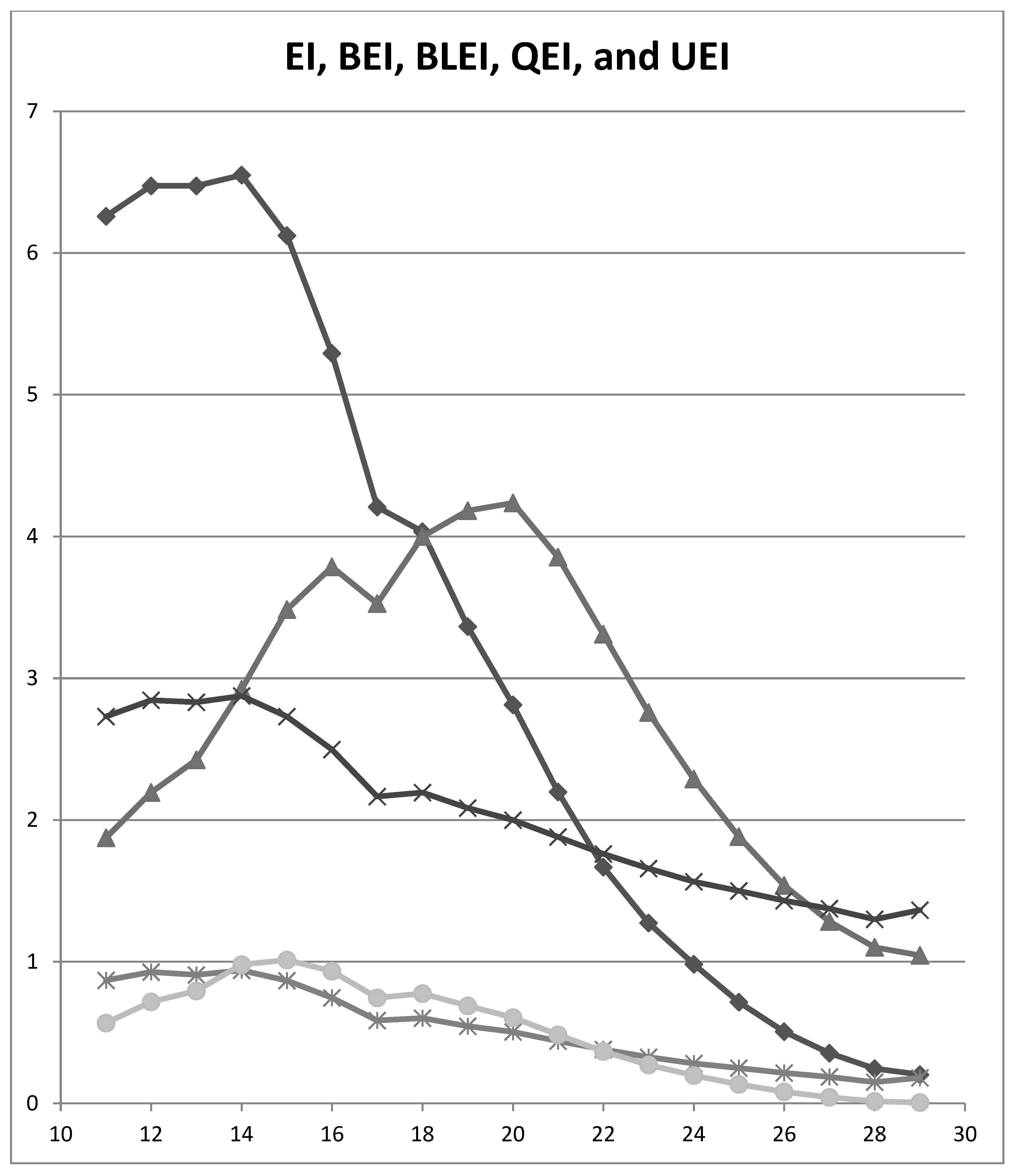

| MACE Cut-off | EI | BEI | BLEI | QEI | UEI |
|---|---|---|---|---|---|
| ≤29/30 | 0.204 | 1.045 | 1.364 | 0.181 | 0.006 |
| ≤28/30 | 0.246 | 1.101 | 1.299 | 0.150 | 0.015 |
| ≤27/30 | 0.355 | 1.283 | 1.374 | 0.186 | 0.043 |
| ≤26/30 | 0.507 | 1.538 | 1.432 | 0.215 | 0.081 |
| ≤25/30 | 0.716 | 1.882 | 1.500 | 0.249 | 0.135 |
| ≤24/30 | 0.982 | 2.289 | 1.564 | 0.282 | 0.198 |
| ≤23/30 | 1.274 | 2.759 | 1.658 | 0.327 | 0.272 |
| ≤22/30 | 1.668 | 3.310 | 1.762 | 0.381 | 0.368 |
| ≤21/30 | 2.199 | 3.854 | 1.880 | 0.440 | 0.484 |
| ≤20/30 | 2.813 | 4.236 | 2.000 | 0.504 | 0.605 |
| ≤19/30 | 3.364 | 4.181 | 2.086 | 0.546 | 0.689 |
| ≤18/30 | 4.033 | 4.000 | 2.194 | 0.602 | 0.776 |
| ≤17/30 | 4.207 | 3.525 | 2.165 | 0.586 | 0.745 |
| ≤16/30 | 5.292 | 3.785 | 2.497 | 0.746 | 0.934 |
| ≤15/30 | 6.123 | 3.484 | 2.731 | 0.866 | 1.012 |
| ≤14/30 | 6.550 | 2.922 | 2.876 | 0.939 | 0.980 |
| ≤13/30 | 6.475 | 2.425 | 2.831 | 0.908 | 0.795 |
| ≤12/30 | 6.475 | 2.195 | 2.846 | 0.927 | 0.718 |
| ≤11/30 | 6.260 | 1.874 | 2.731 | 0.868 | 0.567 |
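Picking out the best-performing cut-off by eye is error-prone. The sketch below uses only the values transcribed from the table above and reports the cut-off at which each index reaches its maximum. Because EI = Acc/(1 − Acc), it also recovers overall accuracy as EI/(1 + EI). Using the maximum of each index as the selection rule is an assumption for illustration, and the names `ROWS`, `best_cutoff` and `accuracy_from_ei` are hypothetical.

```python
# Values transcribed from the MACE cut-off table; column order matches COLUMNS.
COLUMNS = ("EI", "BEI", "BLEI", "QEI", "UEI")
ROWS = {
    "≤29/30": (0.204, 1.045, 1.364, 0.181, 0.006),
    "≤28/30": (0.246, 1.101, 1.299, 0.150, 0.015),
    "≤27/30": (0.355, 1.283, 1.374, 0.186, 0.043),
    "≤26/30": (0.507, 1.538, 1.432, 0.215, 0.081),
    "≤25/30": (0.716, 1.882, 1.500, 0.249, 0.135),
    "≤24/30": (0.982, 2.289, 1.564, 0.282, 0.198),
    "≤23/30": (1.274, 2.759, 1.658, 0.327, 0.272),
    "≤22/30": (1.668, 3.310, 1.762, 0.381, 0.368),
    "≤21/30": (2.199, 3.854, 1.880, 0.440, 0.484),
    "≤20/30": (2.813, 4.236, 2.000, 0.504, 0.605),
    "≤19/30": (3.364, 4.181, 2.086, 0.546, 0.689),
    "≤18/30": (4.033, 4.000, 2.194, 0.602, 0.776),
    "≤17/30": (4.207, 3.525, 2.165, 0.586, 0.745),
    "≤16/30": (5.292, 3.785, 2.497, 0.746, 0.934),
    "≤15/30": (6.123, 3.484, 2.731, 0.866, 1.012),
    "≤14/30": (6.550, 2.922, 2.876, 0.939, 0.980),
    "≤13/30": (6.475, 2.425, 2.831, 0.908, 0.795),
    "≤12/30": (6.475, 2.195, 2.846, 0.927, 0.718),
    "≤11/30": (6.260, 1.874, 2.731, 0.868, 0.567),
}


def best_cutoff(index: str) -> tuple[str, float]:
    """Return (cut-off, value) where the named index is largest (first on ties)."""
    col = COLUMNS.index(index)
    cutoff = max(ROWS, key=lambda c: ROWS[c][col])
    return cutoff, ROWS[cutoff][col]


def accuracy_from_ei(ei: float) -> float:
    """Invert EI = Acc / (1 - Acc) to recover overall accuracy."""
    return ei / (1 + ei)


if __name__ == "__main__":
    for name in COLUMNS:
        cutoff, value = best_cutoff(name)
        print(f"{name:>4}: maximal at MACE {cutoff} ({value:.3f})")
    cutoff, ei = best_cutoff("EI")
    print(f"Overall accuracy at {cutoff}: {accuracy_from_ei(ei):.3f}")
```

On these values, EI, BLEI and QEI peak at ≤14/30, UEI at ≤15/30 and BEI at ≤20/30. The maximal EI of 6.550 corresponds to an overall accuracy of about 0.868.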

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).