Submitted: 28 April 2023

Posted: 29 April 2023


Abstract

Keywords:

1. Introduction

2. Theoretical Background

2.1. Decision Support Systems

2.2. Donor Behaviour

2.3. DSS in NPOs

3. Research Methodology

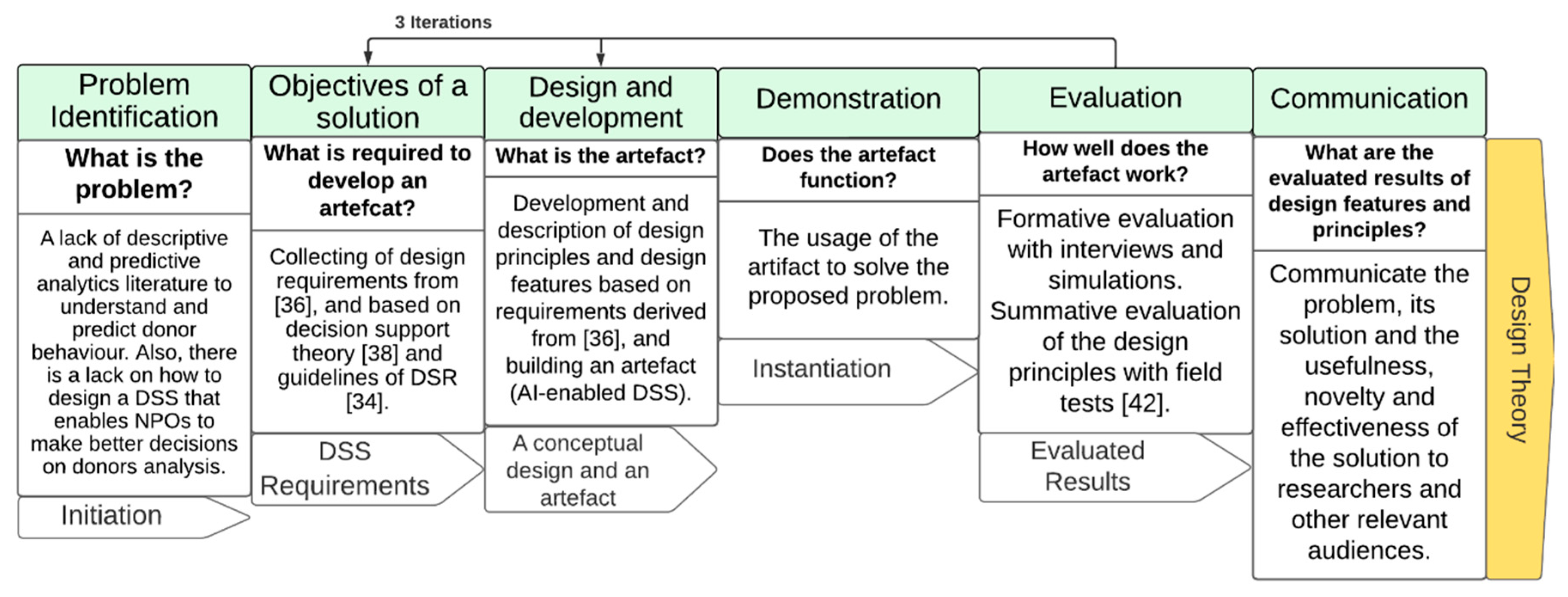

3.1. Research Process Model

3.1.1. Phase 1: Problem Identification

3.1.2. Phase 2: Objectives of a Solution

3.1.3. Phase 3: Design and Development

3.1.4. Phase 4: Demonstration

3.1.5. Phase 5: Evaluation

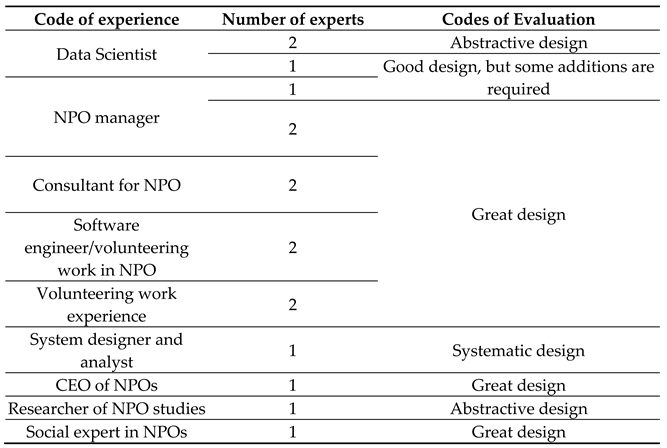

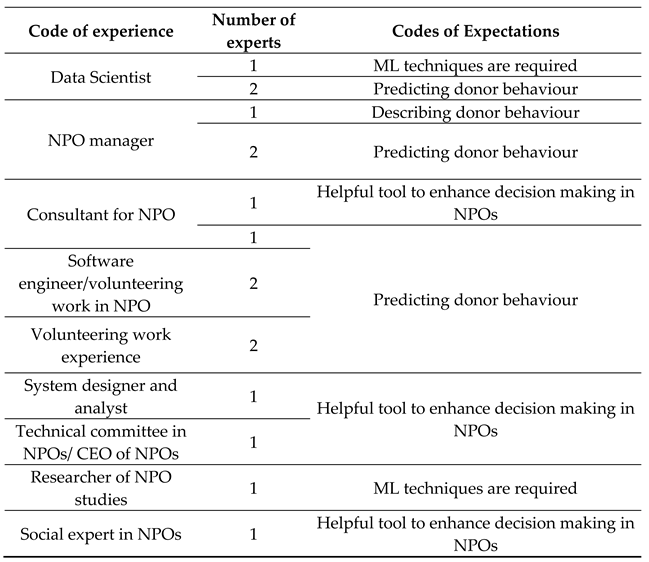

3.2. Data Collection and Interview Analysis for Iteration One Evaluation

- Participants: questions about the experts’ experience working in NPOs.

- Discovery: questions about the experts’ experience with DSS, ML, and data analytics, either in NPOs or in for-profit organisations.

- Dream: questions that collect the experts’ feedback on the conceptual design of the AI-enabled DSS for analysing donor behaviour.

- Design: questions about any additional design requirements, DPs and DFs that could be added to the conceptual design.

- Destiny: questions that gauge the experts’ expectations of the AI-enabled DSS for analysing donor behaviour in NPOs.

1. Working experience:

2. Evaluation of the conceptual design:

3. Additional design requirements, DPs and DFs:

4. Expectations of the AI-enabled DSS:

4. Research Results

- Effectiveness: the system helps users perform actions correctly.

- Efficiency: users can complete tasks quickly by following the simplest path.

- User engagement: users find the system enjoyable to use and relevant to the industry/topic.

- Error tolerance: the system covers a wide variety of user operations and only displays an error when something is truly wrong.

- Ease of learning: new users have no trouble achieving their objectives and are even more successful on subsequent visits.

5. Next Steps and Expected Research Outcomes

5.1. Iteration Two

5.2. Iteration Three

5.3. Design Theory of AI-enabled DSS

6. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. A list of questions in the interviews for iteration 1

| Stage | Script/Questions |
|---|---|
| Introduction (2 minutes) | |
| Participation (5 minutes) | |
| Discovery (5 minutes) | |
| Dream (3 minutes) | |
| Design (3 minutes) | |
| Destiny (3 minutes) | |
| Conclusion | Thank you for your collaboration and participation in this interview. I hope we can speak to you in the future for our second interview of the evaluation. |

| 1 | Iterations two and three are explained in detail in Section 5 of this paper. |

| 2 | Details about the participants’ roles and experience are presented in subsection 3.1.7. |

References

- Anheier, H.K. Nonprofit organizations theory, management, policy; Routledge Taylor & Francis Group: 2005; p. 450.

- Productivity Commission. Contribution of the not for profit sector; Australia, Canberra, 2010; pp. 1–23.

- Centre for Corporate Public Affairs. Impact of the economic downturn on not-for-profit organisation management; 2009; pp. 1–52.

- Farrokhvar, L.; Ansari, A.; Kamali, B. Predictive models for charitable giving using machine learning techniques. PLoS ONE 2018, 13, 1–14.

- Dietz, R.; Keller, B. A Deep Dive into Donor Behaviors and Attitudes; Abila: 2016; p. 22.

- Te, N. Study Helps You Better Understand Donor Behaviors. 2019.

- Sargeant, A.; Jay, E. Fundraising management: Analysis, planning and practice, Third ed.; Routledge Taylor & Francis Group London and New York, 2014; p. 438.

- Shehu, E.; Langmaack, A.C.; Felchle, E.; Clement, M. Profiling donors of blood, money, and time: A simultaneous comparison of the German population. Nonprofit Manage. Leadersh. 2015, 25, 269–295.

- Li, C.; Wu, Y. Understanding Voluntary Intentions within the Theories of Self-Determination and Planned Behavior. Journal of Nonprofit & Public Sector Marketing 2019, 31, 378–389.

- Weinger, A. The Importance of Donor Data and How to Use It Effectively. Philanthropy News Digest 2019, 2022.

- Dag, A.; Oztekin, A.; Yucel, A.; Bulur, S.; Megahed, F.M. Predicting heart transplantation outcomes through data analytics. Decis Support Syst 2017, 94, 42–52.

- Dunford, L. To Give or Not to Give: Using an Extended Theory of Planned Behavior to Predict Charitable Giving Intent to International Aid Charities; University of Minnesota: 2016; pp. 1–79.

- Hou, Y.; Wang, D. Hacking with NPOs: Collaborative analytics and broker roles in civic data hackathons. Proc. ACM Hum. Comput. Interact. 2017, 1, 1–16.

- Hackler, D.; Saxton, G. The strategic use of information technology by nonprofit organizations: Increasing capacity and untapped potential. Public Adm. Rev. 2007, 67, 474–487.

- LeRoux, K.; Wright, N.S. Does Performance Measurement Improve Strategic Decision Making? Findings From a National Survey of Nonprofit Social Service Agencies. Nonprofit Volunt. Sect. Q. 2010, 39, 571–587.

- Barzanti, L.; Giove, S.; Pezzi, A. A decision support system for non profit organizations. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer Verlag: 2017; Volume 10147 LNAI, pp. 270–280.

- Zeebaree, M.; Aqel, M. A Comparison Study between Intelligent Decision Support Systems and Decision Support Systems. The ISC Int'l Journal of Information Security 2019, 11, 187–194.

- Rhyn, M.; Blohm, I. A Machine Learning Approach for Classifying Textual Data in Crowdsourcing. In Proceedings of the 13th International Conference on Wirtschaftsinformatik (WI), St. Gallen, Switzerland; 2017; pp. 1–15.

- Alsolbi, I.; Agarwal, R.; Narayan, B.; Bharathy, G.; Samarawickrama, M.; Tafavogh, S.; Prasad, M. Analyzing Donors Behaviors in Nonprofit Organizations: A Design Science Research Framework. In Proceedings of the 3rd International Conference on Machine Intelligence and Signal Processing, NIT Arunachal Pradesh, India, 23-25 September 2021.

- Horváth, I. Conceptual design: inside and outside. In Proceedings of the 2nd International Seminar and Workshop on Engineering Design in Integrated Product; 2000; pp. 63–72.

- Walls, J.G.; Widmeyer, G.R.; El Sawy, O.A. Building an information system design theory for vigilant EIS. Information systems research 1992, 3, 36–59.

- Arnott, D.; Pervan, G. A critical analysis of decision support systems research revisited: The rise of design science. Journal of Information Technology 2014, 29, 269–293.

- Bourouis, A.; Feham, M.; Hossain, M.A.; Zhang, L. An intelligent mobile based decision support system for retinal disease diagnosis. Decis Support Syst 2014, 59, 341–350.

- Power, D. Decision Support Systems: A Historical Overview. In Handbook on Decision Support Systems 1 Basic Themes; Springer 2008; pp. 121–140.

- Burstein, F.; Holsapple, C.W. Handbook on Decision Support Systems 1: Basic Themes; Springer-Verlag London Ltd.: Berlin Germany, 2008.

- Arnott, D.; Pervan, G. Design Science in Decision Support Systems Research: An Assessment using the Hevner, March, Park, and Ram Guidelines. Journal of the Association of Information Systems 2012, 13, 923–949.

- Fredriksson, C. Big data creating new knowledge as support in decision-making: practical examples of big data use and consequences of using big data as decision support. J. Decis. Syst. 2018, 27, 1–18.

- Bopp, C.; Harmon, C.; Voida, A. Disempowered by data: Nonprofits, social enterprises, and the consequences of data-driven work. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems, Denver, USA; 2017; pp. 3608–3619.

- Maxwell, N.L.; Rotz, D.; Garcia, C. Data and decision making: same organization, different perceptions; different organizations, different perceptions. Am. J. Eval. 2016, 37, 463–485.

- Korolov, R.; Peabody, J.; Lavoie, A.; Das, S.; Magdon-Ismail, M.; Wallace, W. Predicting charitable donations using social media. Soc. Netw. Analysis Min. 2016, 6.

- Barzanti, L.; Giove, S.; Pezzi, A. A Decision Support System for Non Profit Organizations. In Fuzzy Logic and Soft Computing Applications; Lecture Notes in Computer Science; Springer International Publishing: Cham, 2017; pp. 270–280.

- Rhyn, M.; Leicht, N.; Blohm, I.; Leimeister, J.M. Opening the Black Box: How to Design Intelligent Decision Support Systems for Crowdsourcing. In Proceedings of the 15th International Conference on Wirtschaftsinformatik, Potsdam, Germany; 2020; pp. 1–15.

- Johannesson, P.; Perjons, E. An Introduction to Design Science; 2014; pp. 1–197.

- Hevner, R.A.; March, S.; Park, J.; Ram, S. Design Science in Information Systems Research. Management Information Systems Quarterly 2004, 28, 75–105.

- Peffers, K.; Tuunanen, T.; Rothenberger, M.; Chatterjee, S. A design science research methodology for information systems research. Journal of Management Information Systems 2007, 24, 45–77.

- Meth, H.; Mueller, B.; Maedche, A. Designing a requirement mining system. Journal of the Association for Information Systems 2015, 16, 799–837.

- Gregor, S.; Hevner, A. Positioning and Presenting Design Science Research for Maximum Impact. MIS Quarterly 2013, 37, 337–356.

- Silver, M.S. Decisional Guidance for Computer-Based Decision Support. MIS Quarterly 1991, 15, 105–122.

- Silver, M.S. User perceptions of DSS restrictiveness: An experiment. In Proceedings of the Twenty-First Annual Hawaii International Conference on System Sciences; 1988; pp. 116–124.

- Gregor, S.; Jones, D. The anatomy of a design theory. Journal of the Association for Information Systems 2007, 8, 312–335.

- Đurić, G.; Mitrović, Č.; Vorotović, G.; Blagojevic, I.; Vasić, M. Developing self-modifying code model. Istrazivanja i projektovanja za privredu 2016, 14, 239–247.

- Venable, J.; Pries-Heje, J.; Baskerville, R. FEDS: a framework for evaluation in design science research. European journal of information systems 2016, 25, 77–89.

- Dworkin, S.L. Sample Size Policy for Qualitative Studies Using In-Depth Interviews. Archives of Sexual Behavior 2012, 41, 1319–1320.

- Weston, C.; Gandell, T.; Beauchamp, J.; McAlpine, L.; Wiseman, C.; Beauchamp, C. Analyzing Interview Data: The Development and Evolution of a Coding System. Qualitative Sociology 2001, 24, 381–400.

- Börjesson, A.; Holmberg, L.; Holmström, H.; Nilsson, A. Use of Appreciative Inquiry in Successful Process Improvement. In Proceedings of the Organizational Dynamics of Technology-Based Innovation: Diversifying the Research Agenda, Boston, MA, 2007; pp. 181–196.

- Saldaña, J. Coding and analysis strategies. The Oxford handbook of qualitative research 2014, 581–605.

- Li, C.; Yangyong, Z. The Challenges of Data Quality and Data Quality Assessment in the Big Data Era. Data Science Journal 2015, 2.

- Wang, R.Y.; Strong, D.M. Beyond Accuracy: What Data Quality Means to Data Consumers. Journal of Management Information Systems 1996, 12, 5–33.

- Weibelzahl, S.; Paramythis, A.; Masthoff, J. Evaluation of Adaptive Systems. In Proceedings of the 28th ACM Conference on User Modeling, Adaptation and Personalization, Genoa, Italy, 2020; pp. 394–395.

- Narendra, K.S. Chapter Two - Hierarchical Adaptive Control of Rapidly Time-Varying Systems Using Multiple Models. In Control of Complex Systems, Vamvoudakis, K.G., Jagannathan, S., Eds.; Butterworth-Heinemann: 2016; pp. 33–66.

- Nielsen, J. Usability 101: Introduction to Usability. Available online: www.nngroup.com/articles/usability-101-introduction-to-usability/ (accessed on 23/02/2022).

- Mt, A. The Effect of Usability and Information Quality on Decision Support Information System (DSS). Arts and Social Sciences Journal 2017, 8.

- Isaksen, H.; Iversen, M.; Kaasbøll, J.; Kanjo, C. Design of Tooltips for Data Fields. In Proceedings of Design, User Experience, and Usability: Understanding Users and Contexts, Cham, 2017; pp. 63–78.

- Christie, D.M.; Wayne, D.D.; Joseph, E.U. A methodology for the objective evaluation of the user/system interfaces of the MADAM system using software engineering principles. In Proceedings of the 18th annual Southeast regional conference, Tallahassee, Florida, 1980; pp. 103–109.

- Parsa, I. KDD Cup 1998 Data. In Proceedings of the Second International Knowledge Discovery and Data Mining Tools Competition, New York; 1998.

- Dick, W. Summative evaluation. Instructional design: Principles and applications 1977, 337-348.

- Malasi, A. All you need to know to build your first Shiny app. Available online: https://towardsdatascience.com/all-you-need-to-know-to-build-your-first-shiny-app-653603fd80d9 (accessed on 8 April 2021).

- Dataiku.com. The Dataiku Story. Available online: www.dataiku.com/stories/the-dataiku-story/ (accessed on 8 April 2021).

- Uskov, V.L.; Bakken, J.P.; Putta, P.; Krishnakumar, D.; Ganapathi, K.S. Smart education: predictive analytics of student academic performance using machine learning models in Weka and Dataiku systems. In Smart Education and e-Learning 2021; Springer: 2021; pp. 3–17.

- Vaishnavi, V.; Kuechler, B.; Petter, S. Design Science Research in Information Systems. 2019, 1-62.

| Expert category | Process of analysing donor behaviour | Major challenges | Suggestions |
|---|---|---|---|
| Data scientist in an NPO (5 years) | Occasionally analyses donor data to produce ad hoc analyses. No DSS is used for analysing donor behaviour. | Donor data is not collected regularly. Analysis needs differ from one NPO to another. | Build a DSS that analyses donor behaviour using ML techniques. Creating a design theory would also raise scholars’ awareness of such analytics solutions. |
| Manager of NPO (8 years) | Normally uses Excel sheets to create tables and graphs about donors. | Lack of human and technical resources. Low budget for effective solutions. Decisions take a long time to make. | Using an efficient system helps understand donors more comprehensively through such analysis. |
| Consultant of NPOs | Uses Google analysis to analyse donor data and support decision-making. | Lack of human and technical resources. Lack of knowledge on designing a DSS for analysing donor behaviour. | Relying on ML capabilities to create visualisations that lead to a better understanding of donors and volunteers and enhance decision-making. |

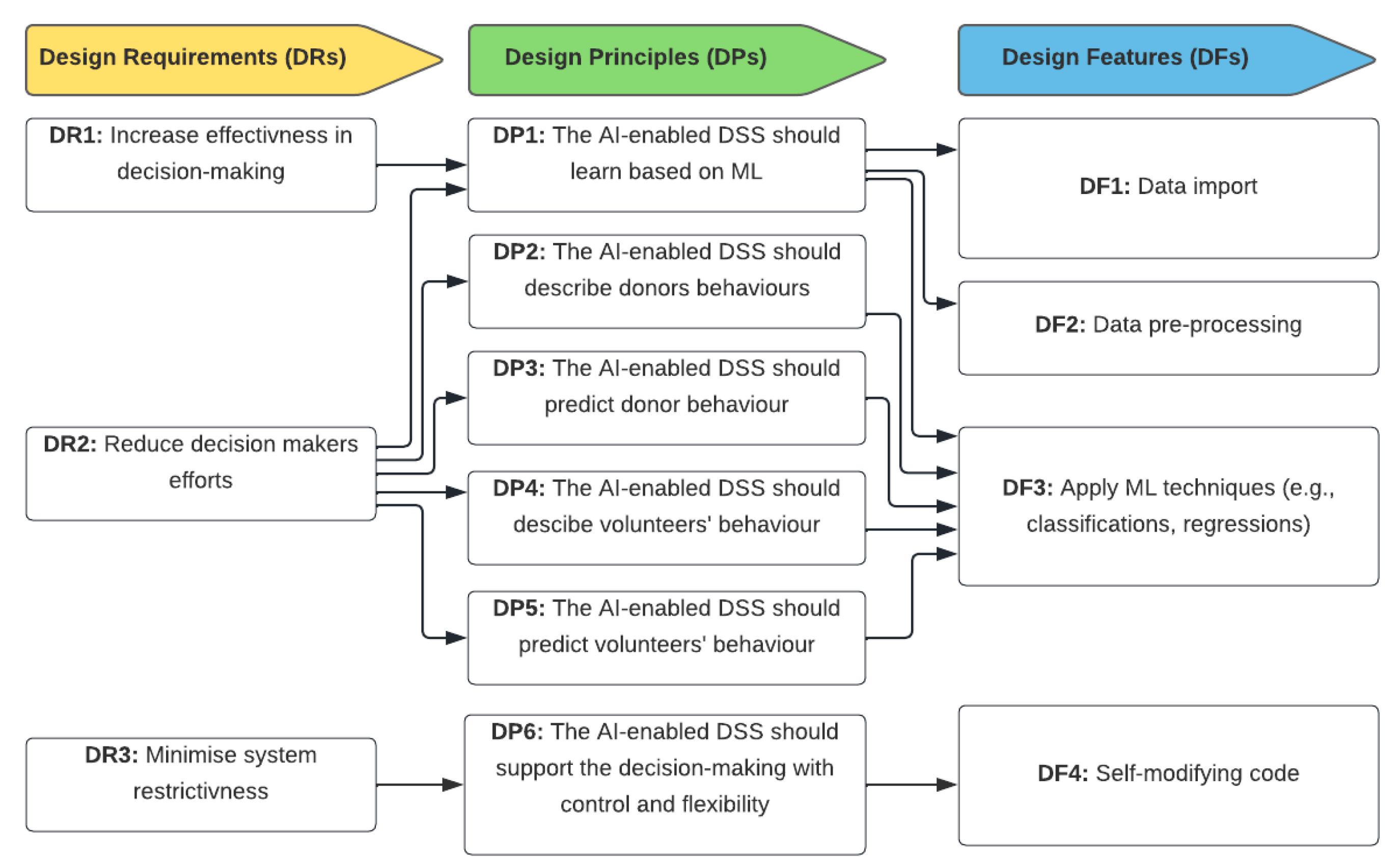

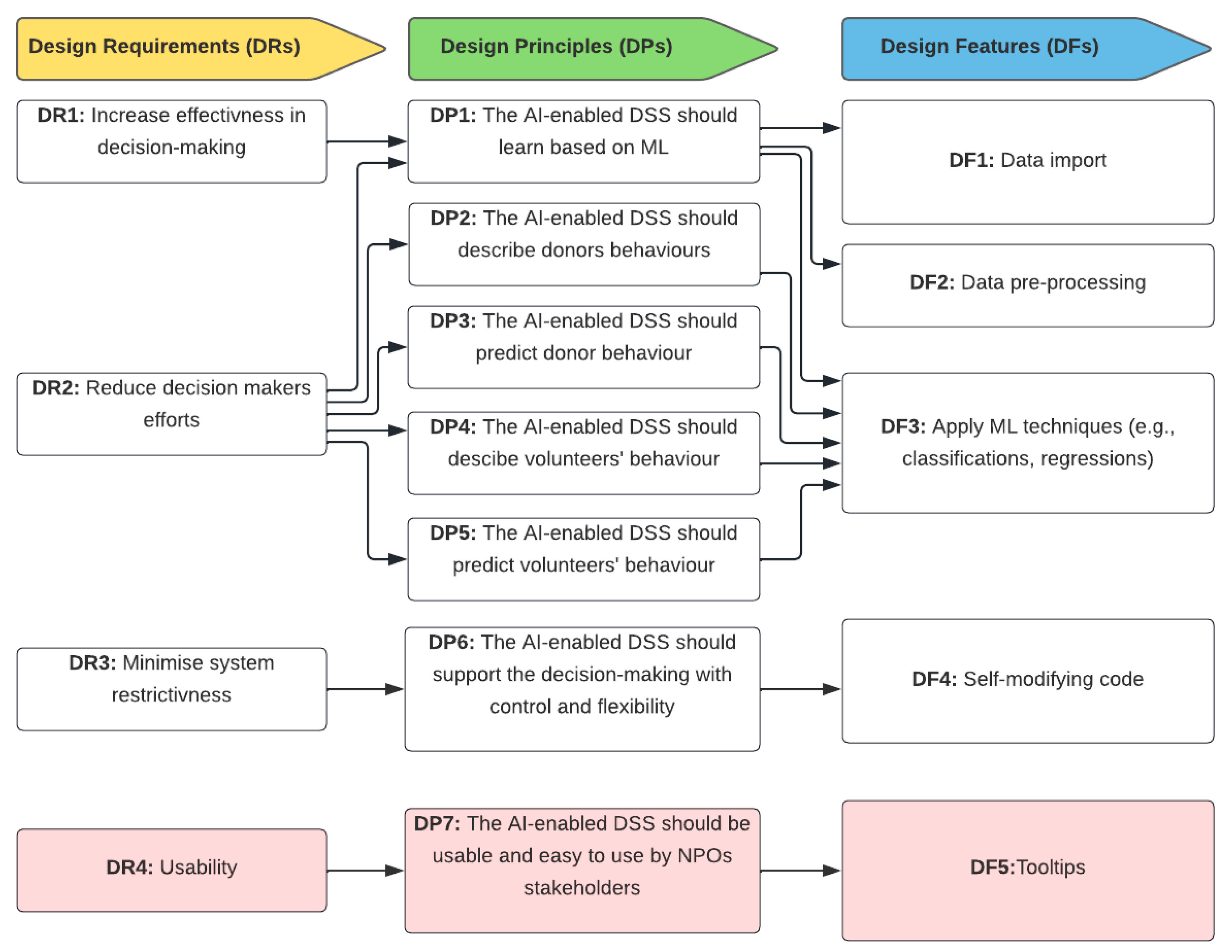

| Design Requirements | Explanations | Justification |
|---|---|---|
| Increase the decision quality by providing high-quality advice. | The system should provide high-quality advice. The process of analysing donor behaviour should be supported by a system that improves the quality of decisions. | Decision makers have various objectives when making a decision [40] and aim to obtain the best possible advice [40]. The AI-enabled DSS should provide high-quality decision support to help NPOs make better decisions about donors and volunteers. |
| Reduce decision maker’s effort. | The system should prepare the decision and present it to the decision maker with the relevant information. For example, the system should provide information (through visualisations) about donors. This type of information can decrease the cognitive effort required of NPO decision makers. | Decision makers strive to minimise their effort when making decisions [36]. When the DSS provides high-quality advice, the effort of decision makers is reduced [40]. |
| Minimise system restrictiveness. | The system should offer several pre-selected decision strategies and give decision makers more flexibility to choose appropriate analytics. | The AI-enabled DSS should provide control and not restrict users [40]. For example, users of DSS in NPOs need to choose the type of analytics (predictive or descriptive). |

| Design principles | Explanation |
|---|---|
| DP1: The AI-enabled DSS should learn based on ML. | The AI-enabled DSS should be designed as an adaptive system [38]. It should have predefined models that are trained on the datasets. ML techniques can then learn from the donor data entered by decision makers in NPOs (who use the AI-enabled DSS) to create effective descriptive and predictive models. |
| DP2: The AI-enabled DSS should describe donor behaviour. | Describing donor behaviour using ML is a key element of the AI-enabled DSS. NPOs may benefit from the results interpreted by the DSS to explain certain factors and information about donors, such as which gender donates most. Most importantly, ML techniques can describe the relevant information about donors and visualise it properly. |
| DP3: The AI-enabled DSS should predict donor behaviour. | The AI-enabled DSS should be able to predict donor behaviour using ML algorithms. Different types of predictive models can generate useful insights for NPO decision makers and support decision-making about donors. For example, the AI-enabled DSS should create a model to predict which age group of previous donors may donate more in the future. |
| DP4: The AI-enabled DSS should describe volunteers’ behaviour. | Describing volunteers’ behaviour using ML is a key element of the AI-enabled DSS. NPOs need to rely on the results interpreted by the DSS to explain certain factors and information about donors. For example, the AI-enabled DSS should create a model to predict who is likely to volunteer in the future. |
| DP5: The AI-enabled DSS should predict volunteers’ behaviour. | The AI-enabled DSS should be able to predict volunteers’ behaviour using ML algorithms. Different types of predictive models can generate useful insights for NPO decision makers and support decision-making about volunteers. Thus, ML techniques can describe the relevant information about volunteers and visualise it properly. |
| DP6: The AI-enabled DSS should support decision-making with control and flexibility. | The AI-enabled DSS should maintain the control level by allowing decision makers in NPOs (who use this system) to choose the type of analysis, predictive or descriptive. Another example is allowing NPO decision makers to print a report or start a new analysis. |
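As a rough illustration of how DP2, DP3 and DP6 could fit together, the following Python sketch lets a user pick the analysis type from a fixed menu. It is only a sketch under stated assumptions: the donor records, the field names (`gender`, `age`, `amount`) and the function names are hypothetical, and a plain least-squares line stands in for whatever predictive model the actual system would use.

```python
from collections import defaultdict

# Hypothetical donor records; field names and values are illustrative only.
DONORS = [
    {"gender": "F", "age": 30, "amount": 50.0},
    {"gender": "M", "age": 40, "amount": 70.0},
    {"gender": "F", "age": 50, "amount": 90.0},
    {"gender": "M", "age": 60, "amount": 110.0},
]


def describe_by_gender(records):
    """Descriptive analysis (DP2): total donated amount per gender."""
    totals = defaultdict(float)
    for record in records:
        totals[record["gender"]] += record["amount"]
    return dict(totals)


def fit_age_model(records):
    """Predictive analysis (DP3): least-squares line amount = slope * age + intercept."""
    n = len(records)
    mean_age = sum(r["age"] for r in records) / n
    mean_amount = sum(r["amount"] for r in records) / n
    sxx = sum((r["age"] - mean_age) ** 2 for r in records)
    sxy = sum((r["age"] - mean_age) * (r["amount"] - mean_amount) for r in records)
    slope = sxy / sxx
    return slope, mean_amount - slope * mean_age


# DP6: the user chooses the type of analysis from a fixed menu (assumed names).
ANALYSES = {"descriptive": describe_by_gender, "predictive": fit_age_model}


def run_analysis(kind, records):
    """Run the analysis the user selected, rejecting unknown choices clearly."""
    if kind not in ANALYSES:
        raise ValueError(f"unknown analysis {kind!r}; choose from {sorted(ANALYSES)}")
    return ANALYSES[kind](records)


if __name__ == "__main__":
    print(run_analysis("descriptive", DONORS))
    print(run_analysis("predictive", DONORS))
```

Keeping the available analyses in a small lookup table is one way to keep the menu easy to extend without restricting the user to a single strategy.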

| Design Features | Explanation |
|---|---|
| DF1: Data import | The AI-enabled DSS should allow donor data to be imported. A guideline should be provided to NPOs on preparing the data and aligning the attributes with the back-end code of the system. This feature allows the user of the AI-enabled DSS to import the data from a spreadsheet containing specified features. Importing the data will be an easy step, with the data loaded automatically via the interface of the AI-enabled DSS. The sources of the data may vary; however, when building the AI-enabled DSS there will be assurance that a guideline about the data, its format, and how it is imported is provided. |
| DF2: Data pre-processing | This feature pre-processes the data to ensure the adequacy of attributes. Meth, et al. [36] described pre-processing features as important. The pre-processing feature uses data pre-processing techniques such as cleaning the data and formatting the dates. |
| DF3: Applying ML techniques (e.g., classifications, regressions, etc.) | The AI-enabled DSS should analyse the imported data using ML techniques. ML techniques provide the means to structure the data, organise patterns and extract useful hidden information. For example, a classification technique can be chosen to classify donors based on their donations (high or low) and provide recommendations: high (high potential to donate in the future) or low (unlikely to donate again). |
| DF4: Self-modifying code | Software systems that have the capacity to independently change in a certain way are referred to as having self-modifying code, programs, or software [41]. The AI-enabled DSS should provide control for the users to maintain the decision-making workflow [32]. For example, enabling the user to choose the type of analysis from a list menu or removing unnecessary tooltips. |
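The flow from DF1 to DF3 could be sketched as follows. This is a minimal, hedged example rather than the system's actual implementation: the CSV column names, the sample rows, the labelled training examples and the choice of a nearest-centroid classifier are all assumptions made for illustration, and the features are left unscaled for brevity.

```python
import csv
import io
from datetime import datetime

# Hypothetical spreadsheet export; column names and values are illustrative only.
RAW_CSV = """donor_id,last_gift_date,gift_count,total_amount
1,03/01/2022,1,20
2,15/02/2022,2,35
3,,4,
4,21/03/2022,9,400
5,30/04/2022,12,520
"""

# Hypothetical labelled history: (gift_count, total_amount) -> donation level.
TRAINING = [
    ((1, 15.0), "low"), ((2, 40.0), "low"), ((3, 60.0), "low"),
    ((8, 350.0), "high"), ((10, 450.0), "high"), ((11, 500.0), "high"),
]


def import_donors(text):
    """DF1: read donor rows from CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def preprocess(rows):
    """DF2: drop incomplete rows, format dates as ISO strings, convert numbers."""
    clean = []
    for row in rows:
        if any(not value for value in row.values()):
            continue  # incomplete record: skipped rather than guessed
        gift_date = datetime.strptime(row["last_gift_date"], "%d/%m/%Y").date()
        clean.append({
            "donor_id": row["donor_id"],
            "last_gift_date": gift_date.isoformat(),
            "gift_count": int(row["gift_count"]),
            "total_amount": float(row["total_amount"]),
        })
    return clean


def fit_centroids(examples):
    """DF3 (training): compute one mean feature vector per label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(centroids, features):
    """DF3 (prediction): pick the label with the nearest centroid."""
    def squared_distance(label):
        return sum((f - c) ** 2 for f, c in zip(features, centroids[label]))
    return min(centroids, key=squared_distance)


donors = preprocess(import_donors(RAW_CSV))
centroids = fit_centroids(TRAINING)
labels = {
    d["donor_id"]: classify(centroids, (d["gift_count"], d["total_amount"]))
    for d in donors
}

if __name__ == "__main__":
    print(labels)
```

In practice the features would likely need scaling and the classifier would be chosen and validated against real donor data; the sketch only shows how the three features hand data to one another.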

| Working experience code | Number of experts | Number of experts on DSS | Length of experience (years) | Number of experts on donor behaviour | Length of experience (years) |
|---|---|---|---|---|---|
| NPO manager | 4 | 1 | 4 | 1 | 10 |
| Data Scientist | 3 | 2 | 6 and 15 | 1 | 8 |
| Consultant for NPO | 2 | 0 | 0 | 2 | 4 and 8 |
| Software engineer | 2 | 1 | 7 | 1 | 2 |
| Volunteering work experience | 2 | 1 | 12 | 0 | 0 |
| Researcher of NPO studies | 1 | 0 | 0 | 1 | 13 |
| Social expert in NPOs | 1 | 0 | 0 | 1 | 5 |
| System designer and analyst | 1 | 1 | 18 | 1 | 13 |
| Total of experts | 16 | 6 | - | 8 | - |

| Code of experience | Number of experts | Additional DR | Additional design requirements | Additional DPs | Additional DFs |
|---|---|---|---|---|---|
| Data Scientist | 3 | Quality of data | - | - | - |
| | | Increasing efficiency | | | |
| NPO manager | 3 | Adaptive system | | | |
| | | Quality of data | | | |
| | | Usability | DSS should be usable for describing or predicting donor behaviour | Tooltips | |
| | | Choice of colours | | | |
| Consultant for NPO | 2 | Quality of data | - | - | |
| | | Security | Performance | | |
| Software engineer/volunteering work in NPO | 2 | Usability | - | Easy to navigate | |
| | | Adaptive systems | - | | |
| Volunteering work experience | 2 | - | DSS should be usable for describing or predicting donor behaviour | Tooltips | |
| System designer and analyst | 1 | | | | |
| Technical committee in NPOs/CEO of NPOs | 1 | | | | |
| Researcher of NPO studies | 1 | Tooltips | | | |
| Social expert in NPOs | 1 | Flexibility to use | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).