1. Introduction

With the advancement of hyperspectral imaging technology, hyperspectral imaging systems can simultaneously acquire rich spectral information and two-dimensional spatial information of the observed scene, forming a hyperspectral image (HSI) [1,2,3]. An HSI provides tens to hundreds of contiguous spectral bands [4], and this abundance of spectral information greatly enhances the ability to distinguish objects. Owing to its very high spectral resolution, HSI is widely used in disaster monitoring, vegetation classification, precision agriculture and medical diagnosis [1,2,5].

HSI classification, a central task in hyperspectral image analysis, has long received considerable attention from researchers. Its goal is to assign a class label to each pixel in the image [6]. Early HSI classification methods relied mainly on traditional machine learning algorithms [7], which consist of two stages: manual feature engineering and classification [8]. Feature engineering uses domain knowledge to transform the data so that the resulting features are better suited to the subsequent classifier; commonly used methods include dimensionality reduction techniques such as principal component analysis (PCA) and independent component analysis (ICA).

Typical classifiers include the support vector machine (SVM) [9], random forest (RF) [10] and k-nearest neighbor (KNN) [11]. These machine learning approaches focus only on the spectral information of the HSI. Relying on spectral information alone ignores the spatial context of each pixel, which limits the achievable classification accuracy, and such methods have gradually been superseded.

Following the success of deep learning in areas such as computer vision, many deep learning approaches have also been adopted for hyperspectral image classification [12]. Among them, convolutional neural networks (CNNs) [13] have become popular owing to their excellent performance. Deep learning methods represented by CNNs have replaced traditional machine learning-based HSI classification methods and have become a research hotspot [14].

1D-CNN [15] and 2D-CNN models were the first deep learning methods applied to hyperspectral image classification, and their performance surpassed that of traditional machine learning methods; however, they do not make full use of the joint spatial and spectral information. The 3D-CNN model [16] was therefore proposed, which extracts spatial-spectral features simultaneously and obtains better classification results, but at a large computational cost. To extract richer features at lower cost, a hybrid spectral CNN (HybridSN) [16] was proposed, which combines 3D-CNN and 2D-CNN to exploit the spatial-spectral features of HSI with a smaller computational burden than 3D-CNN.

Attention mechanisms were proposed to find correlations within the data, highlight important features and suppress irrelevant noise. Li et al. proposed a double-branch dual-attention network (DBDA) [17], which uses two branches to extract spectral and spatial features and adds attention mechanisms to obtain better classification results. Capturing richer features generally requires deeper networks, but deeper networks increase computational complexity and make training more difficult. Zhong et al. introduced residual connections into the 3D-CNN model [18], constructing a spectral residual module and a spatial residual module, and achieved more satisfactory classification results.

Although CNN-based methods have achieved good classification results, they still have limitations. First, CNNs are designed for Euclidean data: a traditional CNN can only convolve regular rectangular regions, so it has difficulty capturing complex topological information. Second, a CNN cannot capture and exploit the relationships between different pixels or regions of a hyperspectral image; it extracts only detailed features within a small local region, whereas the structural features and dependencies between nodes may provide useful information for classification [19,20].

To model the relationships between objects, graph convolutional networks (GCNs) have developed rapidly in recent years. GCNs are designed to process graph-structured data, whereas CNNs process Euclidean data such as images, which are regular grids. On a regular grid, a CNN kernel performs the same operation wherever it is placed in the image, a property known as translation invariance. Graph-structured data, by contrast, are non-Euclidean: the graph is irregular, and nodes have varying numbers of neighbors with no fixed ordering, so standard CNNs cannot be applied to it directly. Graph convolution was designed for this situation. Its key contribution is to define a convolution-like operation that does not rely on translation invariance, which does not hold on non-Euclidean data, so that features can be extracted directly from the graph structure.

Kipf et al. proposed the GCN model [21], which operates on non-Euclidean data and extracts the structural relationships between nodes [19]. Several researchers have applied GCNs to hyperspectral classification [22], and studies have shown that classification results depend not only on spectral information but also on the spatial structure of the pixels [20,23]. By treating each pixel or superpixel in the HSI as a graph node, the image can be converted into graph-structured data, and a GCN can then capture the spatial structure of the image and provide more effective information for classification. Hong et al. [20] proposed miniGCN and constructed an end-to-end fusion network that trains in mini-batches on sampled subgraphs and achieves good classification results. Wan et al. proposed MDGCN [24]; unlike common GCNs that operate on a fixed graph, MDGCN updates the graph structure dynamically, so that graph updating and feature learning benefit each other. In addition, computational cost makes it impractical to treat every pixel of an HSI as a graph node, so hyperspectral images are usually first segmented into superpixels. Using superpixel segmentation to construct the graph significantly reduces the complexity of model training. However, it introduces another problem: pixels within a superpixel tend to receive the same label, so classification maps often show over-smoothed boundaries and lose local detail. This problem limits further improvement of classification performance and affects the analysis of the results.

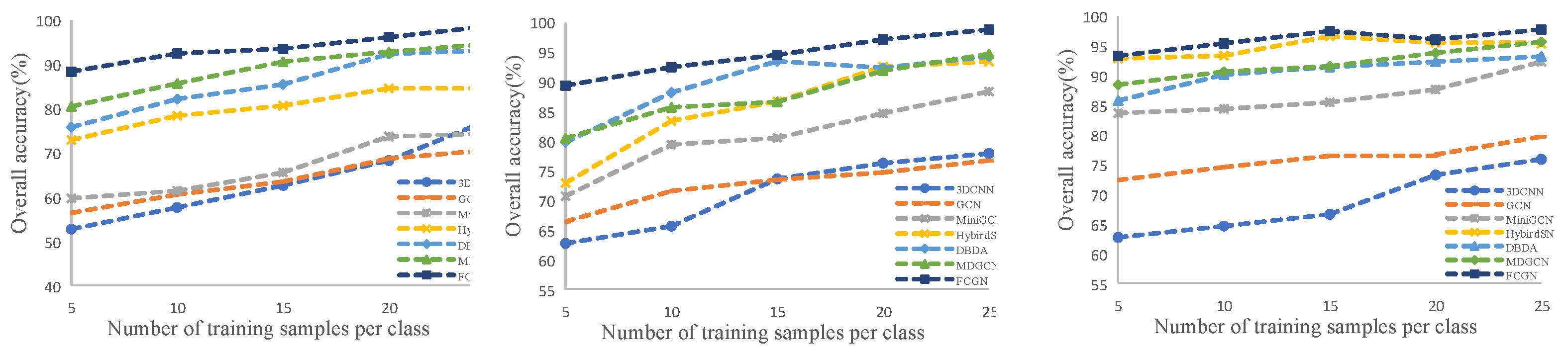

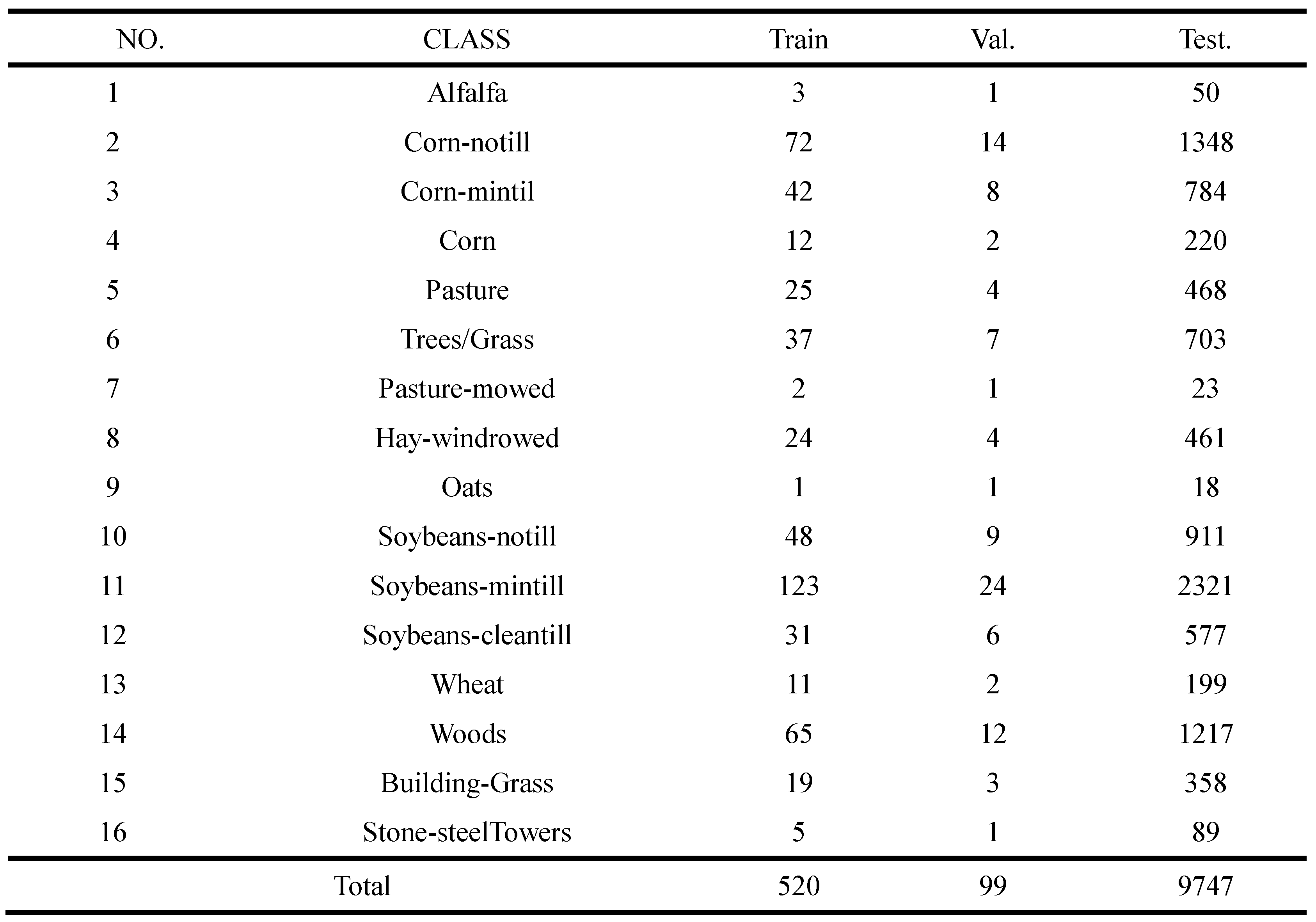

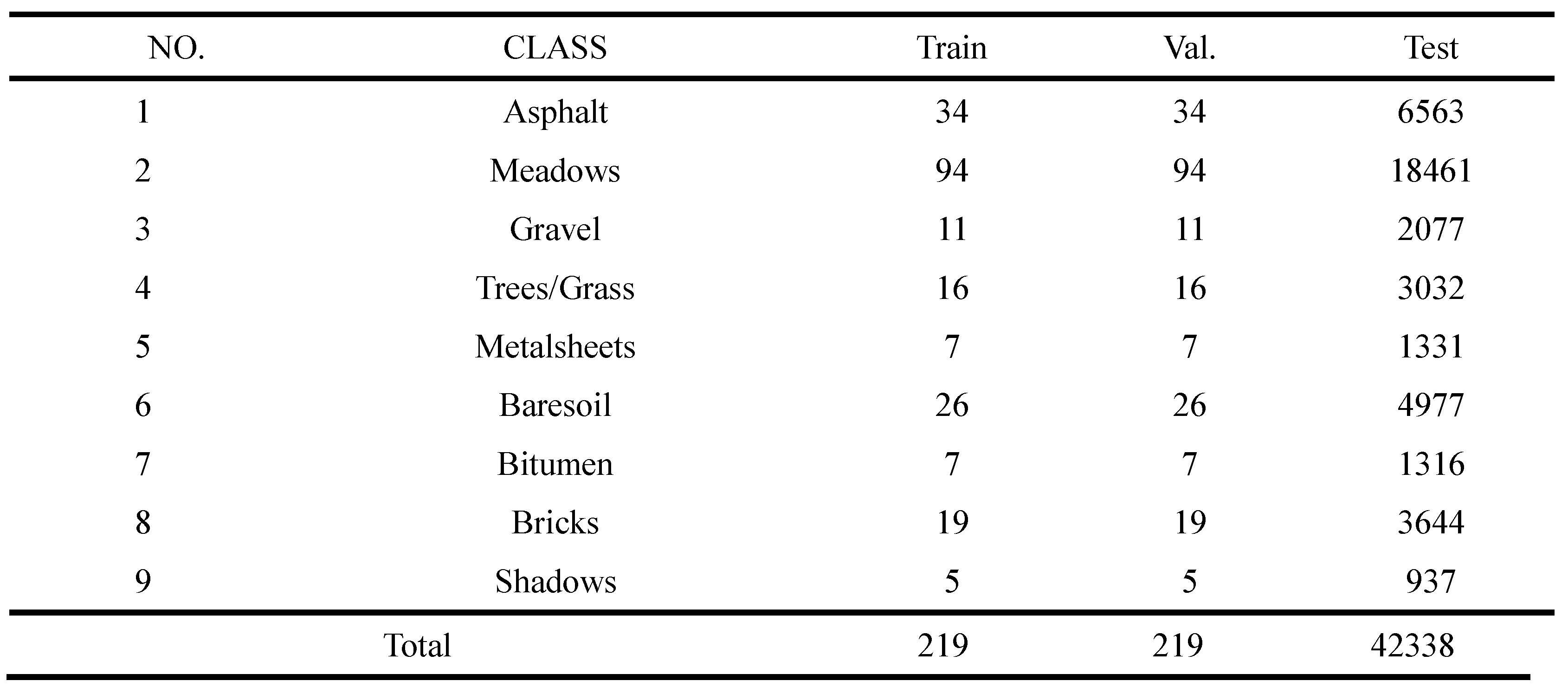

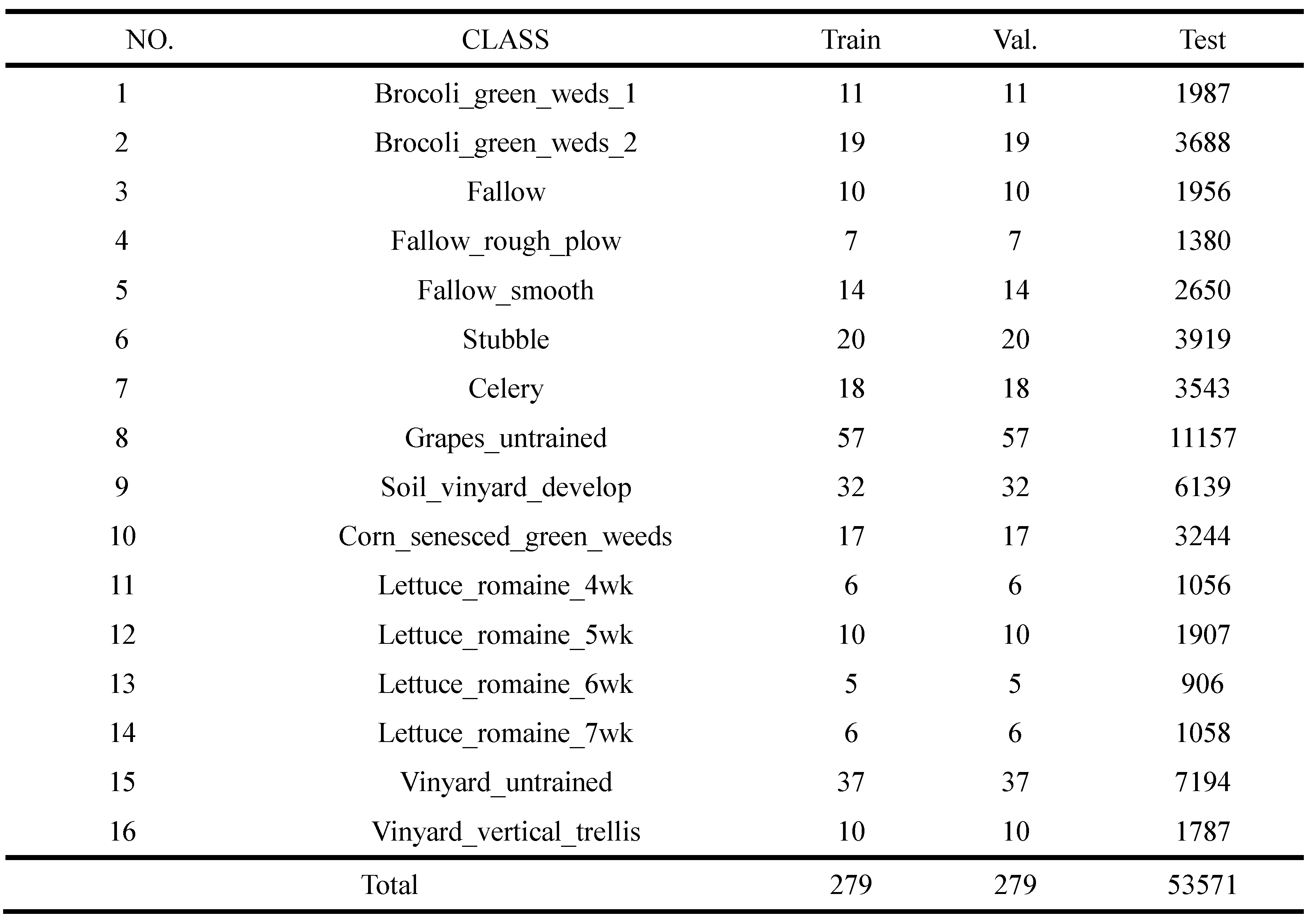

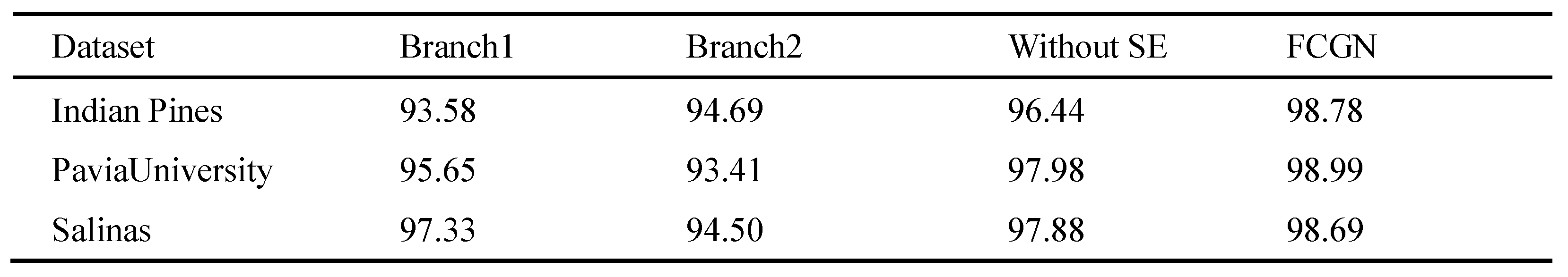

To obtain relational features from the HSI while solving the loss of detail caused by superpixel segmentation, and inspired by [25], we designed a feature fusion network of CNN and GCN (FCGN) consisting of two branches: a GCN branch and a CNN branch. The GCN branch applies superpixel segmentation, which aggregates similar pixels into superpixels that are then treated as graph nodes. Graph convolution processes the data by aggregating the features of each node and its neighboring nodes, which captures the structural features and dependencies between nodes and thus yields better node representations. Compared with the CNN branch, the superpixel-based GCN branch can capture longer-range structural information, while the CNN branch extracts pixel-level features of the HSI and performs fine classification of local regions. Finally, the features acquired by the two branches are fused so that their complementary strengths yield richer image features. In addition, an attention mechanism and depthwise separable convolution [26] are used to further improve the classification results and reduce the number of network parameters.

2. Methodology

This section presents the proposed FCGN for HSI classification, including its overall structure and the function of each module in the network.

2.1. General Framework

To solve the loss of local detail in classification maps caused by superpixel segmentation, we propose a feature fusion network of CNN and GCN (FCGN). As shown in Figure 1, the framework contains a spectral dimension reduction module (see Section 2.2 for details), a graph convolutional branch (see Section 2.3 for details), a convolutional branch (see Section 2.4 for details), a feature fusion module and a softmax classifier. Note that the features extracted by convolutional neural networks differ from those extracted by graph convolutional networks. Feature fusion lets these different features complement each other, yielding more robust and accurate results; this is why fusing the features of the two branches can give better classification results than either branch alone.
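As a rough illustration of the fusion module and softmax classifier, the sketch below assumes, as the concluding section indicates, that fusion is a simple channel-wise concatenation of the per-pixel CNN features and the GCN features decoded back to pixels. The linear layer before the softmax, the function name `fuse_and_classify`, the feature sizes and the random weights are all hypothetical; this is a sketch, not the authors' implementation.

```python
import numpy as np

def fuse_and_classify(cnn_feat, gcn_feat, weight, bias):
    """Concatenate per-pixel features of both branches, then linear layer + softmax.

    cnn_feat: (H, W, C1) pixel-level features from the CNN branch
    gcn_feat: (H, W, C2) superpixel-level features already decoded back to pixels
    weight:   (C1 + C2, num_classes) hypothetical classifier weights
    bias:     (num_classes,)
    """
    fused = np.concatenate([cnn_feat, gcn_feat], axis=-1)  # (H, W, C1 + C2)
    logits = fused @ weight + bias                         # (H, W, num_classes)
    logits = logits - logits.max(axis=-1, keepdims=True)   # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=-1, keepdims=True)             # softmax over classes
    return probs, probs.argmax(axis=-1)                    # probabilities, label map


rng = np.random.default_rng(0)
H, W, C1, C2, n_classes = 4, 5, 8, 6, 3                    # toy sizes (hypothetical)
probs, labels = fuse_and_classify(
    rng.normal(size=(H, W, C1)),
    rng.normal(size=(H, W, C2)),
    rng.normal(size=(C1 + C2, n_classes)),
    np.zeros(n_classes),
)
print(probs.shape, labels.shape)  # (4, 5, 3) (4, 5)
```

Concatenation keeps both feature sets intact and leaves it to the classifier to weight them, which is the simplest way to combine features whose channel counts differ.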

The original HSI is first processed by the spectral dimension reduction module, which transforms the spectral information and reduces the feature dimensionality. Next, a convolutional neural network extracts detailed features within local regions. Because CNN-based methods may overfit when they have many parameters and few training samples, as is typical for HSI, we use depthwise separable convolution to reduce the number of parameters and improve robustness. To further improve the model, we add the SE attention module [27] to the convolutional branch. The SE module computes a weight for each channel, and each channel of the original feature map (a two-dimensional matrix) is multiplied by its corresponding weight. A graph convolutional network extracts superpixel-level contextual features; in this branch, a graph encoder and a graph decoder convert between pixel-level and superpixel-level features (see Section 2.5 for details). Next, the features acquired by the two branches are fused so that their complementary strengths yield richer image features. Finally, the softmax classifier outputs the label of each pixel.
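To make the parameter saving of depthwise separable convolution concrete, the NumPy sketch below implements a depthwise convolution (one k×k filter per channel) followed by a pointwise 1×1 convolution, checks that the result equals a standard convolution whose kernel factorizes as `dw[i, j, c] * pw[c, o]`, and counts weights. The kernel size 5 and the 64 input/output channels in the count are hypothetical values chosen for illustration, not settings reported for FCGN.

```python
import numpy as np

def depthwise_conv2d(x, dw):
    """Per-channel k x k convolution, 'same' zero padding. x: (H, W, C), dw: (k, k, C)."""
    k = dw.shape[0]
    p = k // 2
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))
    H, W, C = x.shape
    out = np.zeros((H, W, C))
    for i in range(k):
        for j in range(k):
            out += xp[i:i + H, j:j + W, :] * dw[i, j, :]  # no mixing across channels
    return out

def pointwise_conv2d(x, pw):
    """1 x 1 convolution: mixes channels independently at every pixel. pw: (C_in, C_out)."""
    return x @ pw

def standard_conv2d(x, w):
    """Full k x k convolution, 'same' zero padding. w: (k, k, C_in, C_out)."""
    k = w.shape[0]
    p = k // 2
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))
    H, W, _ = x.shape
    out = np.zeros((H, W, w.shape[3]))
    for i in range(k):
        for j in range(k):
            out += xp[i:i + H, j:j + W, :] @ w[i, j]
    return out

def param_counts(k, c_in, c_out):
    """Weights (biases ignored): standard conv vs. depthwise separable conv."""
    return k * k * c_in * c_out, k * k * c_in + c_in * c_out


rng = np.random.default_rng(0)
x = rng.normal(size=(6, 7, 4))
dw = rng.normal(size=(3, 3, 4))
pw = rng.normal(size=(4, 5))
dsc = pointwise_conv2d(depthwise_conv2d(x, dw), pw)
# Equivalent standard conv with the rank-factorized kernel w[i, j, c, o] = dw[i, j, c] * pw[c, o]
full = standard_conv2d(x, dw[:, :, :, None] * pw[None, None, :, :])
print(np.allclose(dsc, full))    # True
print(param_counts(5, 64, 64))   # (102400, 5696)
```

In this example the separable version needs about 5.6% of the weights (1/64 + 1/25), which is the kind of reduction that helps against overfitting when labeled HSI samples are scarce.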

2.2. Spectral dimension reduction module

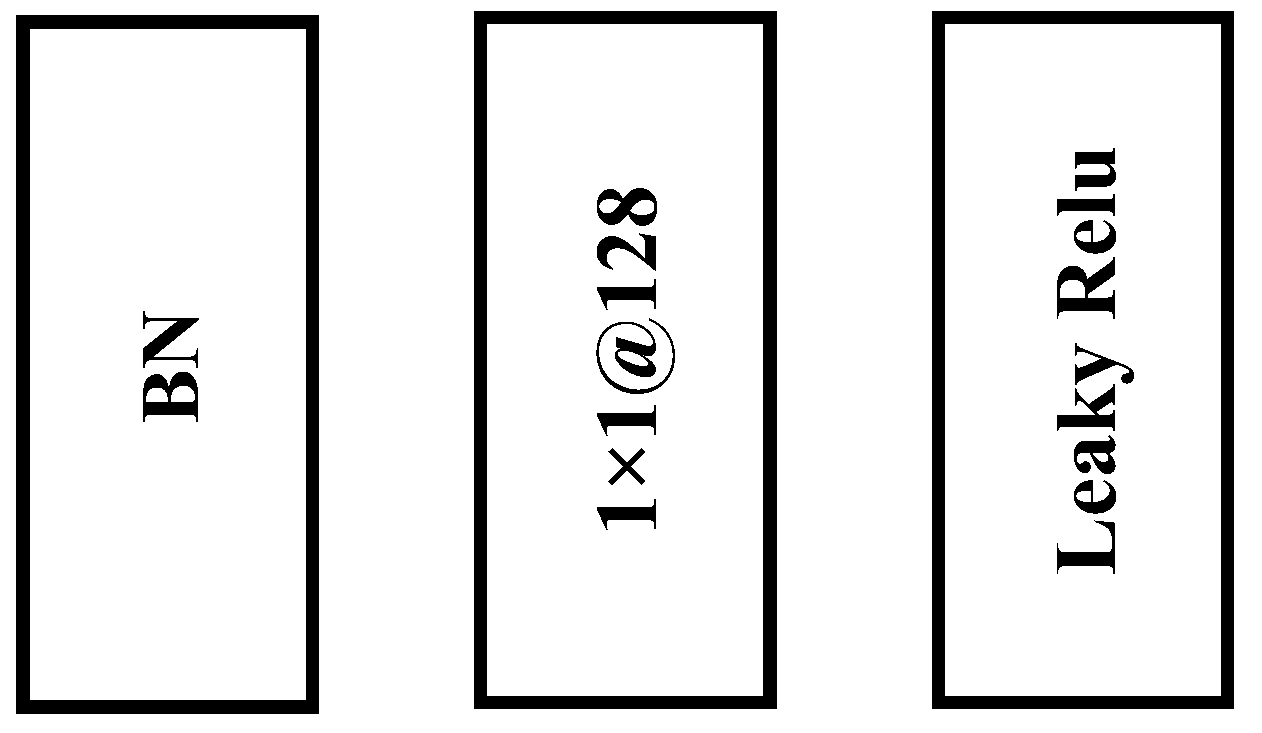

Original hyperspectral images contain a large amount of redundant information, so a dimension reduction module can significantly reduce the computational cost without a significant loss of performance. A 1×1 convolutional layer followed by a nonlinear activation can remove useless spectral information and add nonlinearity, and it is commonly used as a dimension reduction module to reduce computational cost. As shown in Figure 2, in FCGN the hyperspectral image is first processed by two 1×1 convolutional blocks. Each block contains a BN layer, a 1×1 convolutional layer and an activation function layer. The BN layer speeds up the convergence of the network, and the activation layer increases the nonlinearity of the network, improving its expressiveness. The activation function in this module is Leaky ReLU.

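Each 1×1 convolutional block can be written as

$$\tilde{\mathbf{X}} = \mathrm{BN}(\mathbf{X}), \qquad \mathbf{O}_{x,y} = \sigma\left(\mathbf{W}\tilde{\mathbf{X}}_{x,y} + \mathbf{b}\right) \tag{1}$$

where $\mathbf{X}$ denotes the input feature map, $\tilde{\mathbf{X}}$ denotes the batch-normalized input feature map, $\mathbf{O}$ denotes the output feature map, and $\tilde{\mathbf{X}}_{x,y}$ and $\mathbf{O}_{x,y}$ are the feature vectors in row $x$, column $y$. $\mathbf{W}$ denotes the $n$ convolution kernels of size 1×1, applied identically at every position $(x, y)$, $\mathbf{b}$ denotes the bias, and $n$ is the number of convolution kernels, i.e. the number of output channels. $\sigma(\cdot)$ represents the Leaky ReLU activation function.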
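A minimal NumPy sketch of the two 1×1 convolutional blocks (BN, 1×1 convolution, Leaky ReLU) is given below. It assumes training-mode batch statistics computed over all pixels of one image, a Leaky ReLU slope of 0.01 and 128 output channels per block; the slope, channel counts and toy image size are hypothetical, since they are not stated here.

```python
import numpy as np

def batch_norm(x, gamma, beta, eps=1e-5):
    """Normalize each channel over all pixels (training-mode statistics). x: (H, W, C)."""
    mean = x.mean(axis=(0, 1), keepdims=True)
    var = x.var(axis=(0, 1), keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def leaky_relu(x, slope=0.01):
    """Leaky ReLU; the slope 0.01 is an assumed value."""
    return np.where(x > 0, x, slope * x)

def conv1x1_block(x, w, b, gamma, beta):
    """BN -> 1x1 convolution -> Leaky ReLU. w: (C_in, n), b: (n,)."""
    x_bn = batch_norm(x, gamma, beta)
    return leaky_relu(x_bn @ w + b)  # a 1x1 conv is a per-pixel matrix product over channels


rng = np.random.default_rng(0)
H, W, bands = 10, 12, 200            # toy HSI size (hypothetical)
n1, n2 = 128, 128                    # output channels of the two blocks (hypothetical)
hsi = rng.normal(size=(H, W, bands))
h = conv1x1_block(hsi, rng.normal(size=(bands, n1)) * 0.05, np.zeros(n1),
                  np.ones(bands), np.zeros(bands))
out = conv1x1_block(h, rng.normal(size=(n1, n2)) * 0.05, np.zeros(n2),
                    np.ones(n1), np.zeros(n1))
print(out.shape)  # (10, 12, 128)
```

Because the kernel is 1×1, each pixel's spectrum is transformed independently, so the spectral dimension shrinks (here from 200 to 128) without mixing neighboring pixels.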

2.3. Graph Convolution Branch

Numerous studies have shown that combining different features of an image can effectively improve classification accuracy. Traditional CNN models convolve only regular image regions with kernels of fixed size and weights, so they cannot capture the global or structural features of the image, and deeper networks are often needed to alleviate this problem. However, as the network deepens, the risk of overfitting increases, which is especially problematic for data with few training samples, such as HSI.

Therefore, we construct a GCN branch based on superpixel segmentation to obtain structural features. Unlike a CNN, a GCN operates on graph-structured data, and the GCN branch can extract the structural features and dependencies between the nodes of the image. These features differ from the local neighborhood spatial features extracted by the CNN branch, and fusing the features extracted by the two branches enhances the performance of the network. A graph is a non-Euclidean structure that can be defined as $\mathcal{G} = (\mathcal{V}, \mathcal{E})$, where $\mathcal{V}$ is the set of nodes and $\mathcal{E}$ is the set of edges. $\mathcal{V}$ and $\mathcal{E}$ are usually encoded into an adjacency matrix $\mathbf{A}$ and a degree matrix $\mathbf{D}$: $\mathbf{A}$ records the connections between nodes (here, between pixels or superpixels of the hyperspectral image), and $\mathbf{D}$ is a diagonal matrix whose entries give the number of edges associated with each node, $D_{ii} = \sum_j A_{ij}$.

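Because the degree of each node in a graph differs, a GCN cannot use a local convolution kernel of the same size for all nodes as a CNN does. Since convolution in the spatial domain is equivalent to multiplication in the frequency domain, researchers used spectral graph theory to implement convolution on graphs and proposed frequency-domain (spectral) graph convolution [28]. The Laplacian matrix of the graph is defined as $\mathbf{L} = \mathbf{D} - \mathbf{A}$, and the symmetric normalized Laplacian matrix is defined as

$$\mathbf{L}_{\mathrm{sym}} = \mathbf{I}_N - \mathbf{D}^{-1/2}\mathbf{A}\mathbf{D}^{-1/2} = \mathbf{U}\boldsymbol{\Lambda}\mathbf{U}^{\top} \tag{2}$$

where $\mathbf{I}_N$ is the identity matrix, $N$ is the number of nodes and $\boldsymbol{\Lambda}$ is the diagonal matrix of eigenvalues. The graph convolution of a signal $\mathbf{x}$ with a filter $g_\theta$ can then be expressed by equation (3):

$$g_\theta \star \mathbf{x} = \mathbf{U} g_\theta \mathbf{U}^{\top}\mathbf{x} \tag{3}$$

where $\mathbf{U}$ is the orthogonal matrix whose columns are the eigenvectors of $\mathbf{L}_{\mathrm{sym}}$, and $g_\theta = \mathrm{diag}(\theta)$ is a diagonal matrix of the parameters $\theta \in \mathbb{R}^N$ to be learned. This is the general form of graph convolution, but equation (3) is computationally expensive: multiplication with the eigenvector matrix $\mathbf{U}$ costs $O(N^2)$, and the eigendecomposition of the Laplacian must be computed first. Hammond et al. [29] showed that the filter can be well approximated by a truncated expansion in Chebyshev polynomials $T_k$, as in equation (4):

$$g_{\theta'} \star \mathbf{x} \approx \sum_{k=0}^{K} \theta'_k T_k(\tilde{\mathbf{L}})\mathbf{x} \tag{4}$$

where $\tilde{\mathbf{L}} = \frac{2}{\lambda_{\max}}\mathbf{L}_{\mathrm{sym}} - \mathbf{I}_N$, $\lambda_{\max}$ is the largest eigenvalue of $\mathbf{L}_{\mathrm{sym}}$, and $\theta' \in \mathbb{R}^{K+1}$ is the vector of Chebyshev coefficients. To reduce the computational effort further, [30] keeps only the terms up to $K = 1$ and approximates $\lambda_{\max} \approx 2$, which gives

$$g_{\theta'} \star \mathbf{x} \approx \theta'_0\mathbf{x} + \theta'_1\left(\mathbf{L}_{\mathrm{sym}} - \mathbf{I}_N\right)\mathbf{x} = \theta'_0\mathbf{x} - \theta'_1\mathbf{D}^{-1/2}\mathbf{A}\mathbf{D}^{-1/2}\mathbf{x} \tag{5}$$

Constraining a single parameter $\theta = \theta'_0 = -\theta'_1$ turns this into $\theta\left(\mathbf{I}_N + \mathbf{D}^{-1/2}\mathbf{A}\mathbf{D}^{-1/2}\right)\mathbf{x}$. In addition, a renormalization (self-normalization) step is introduced:

$$\mathbf{I}_N + \mathbf{D}^{-1/2}\mathbf{A}\mathbf{D}^{-1/2} \rightarrow \tilde{\mathbf{D}}^{-1/2}\tilde{\mathbf{A}}\tilde{\mathbf{D}}^{-1/2} \tag{6}$$

where $\tilde{\mathbf{A}} = \mathbf{A} + \mathbf{I}_N$ and $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$. Finally, the graph convolution layer is

$$\mathbf{H}^{(l+1)} = \sigma\left(\tilde{\mathbf{D}}^{-1/2}\tilde{\mathbf{A}}\tilde{\mathbf{D}}^{-1/2}\mathbf{H}^{(l)}\mathbf{W}^{(l)}\right) \tag{7}$$

where $\mathbf{H}^{(l)}$ is the node feature matrix of the $l$-th layer, $\mathbf{W}^{(l)}$ is the learnable weight matrix of that layer and $\sigma(\cdot)$ is an activation function.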
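The NumPy sketch below checks the first-order approximation numerically and then applies one graph convolution layer. With $K = 1$ and $\lambda_{\max} \approx 2$ the spectral filter response is $\theta'_0 + \theta'_1(\lambda - 1)$, and applying it through the eigendecomposition gives the same result as the eigendecomposition-free form; the layer then uses the self-normalized propagation matrix built from $\mathbf{A} + \mathbf{I}_N$. The ring-shaped toy graph, the coefficient values and the ReLU activation are assumptions for illustration only.

```python
import numpy as np

def sym_norm_adj(A):
    """D^{-1/2} A D^{-1/2} for a symmetric adjacency matrix without isolated nodes."""
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    return d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]

def gcn_layer(H, A, W):
    """One graph convolution layer with self-loops; ReLU is an assumed activation."""
    A_hat = sym_norm_adj(A + np.eye(len(A)))   # D~^{-1/2} (A + I) D~^{-1/2}
    return np.maximum(A_hat @ H @ W, 0.0)

# Toy graph: 5 nodes on a ring (hypothetical).
N = 5
A = np.zeros((N, N))
for i in range(N):
    A[i, (i + 1) % N] = A[(i + 1) % N, i] = 1.0

rng = np.random.default_rng(0)
x = rng.normal(size=N)
theta0, theta1 = 0.7, -0.3                   # hypothetical Chebyshev coefficients

# Spectral route: filter response theta0 + theta1 * (lambda - 1) applied via the eigenbasis.
L_sym = np.eye(N) - sym_norm_adj(A)
lam, U = np.linalg.eigh(L_sym)
spectral = U @ np.diag(theta0 + theta1 * (lam - 1.0)) @ U.T @ x
# Spatial route: the same filter without any eigendecomposition.
spatial = theta0 * x - theta1 * sym_norm_adj(A) @ x
print(np.allclose(spectral, spatial))        # True

H1 = gcn_layer(rng.normal(size=(N, 3)), A, rng.normal(size=(3, 2)))
print(H1.shape)                              # (5, 2)
```

The agreement of the two routes is why the first-order model avoids the $O(N^2)$ multiplication with $\mathbf{U}$: with a sparse adjacency matrix, each layer only needs neighbor aggregation.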

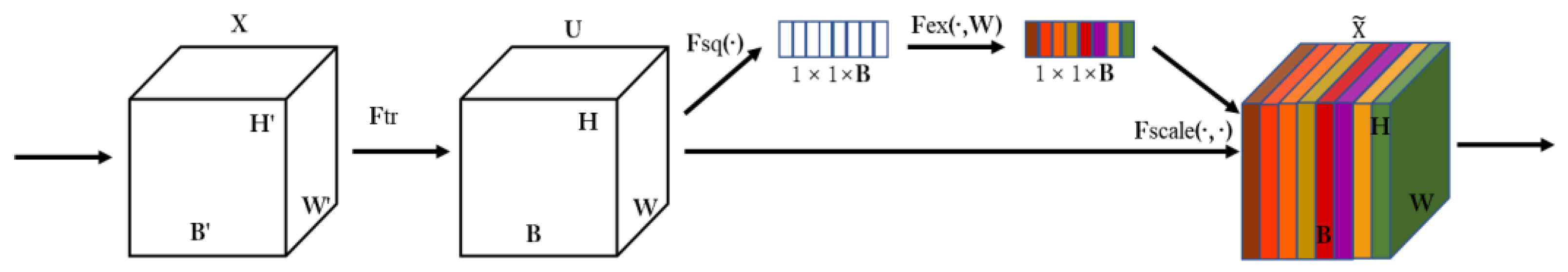

2.4. SE Attention Mechanism

An attention mechanism can select key information from the input images and improve the accuracy of the model at limited computational cost. We therefore apply an attention mechanism to the convolutional branch; for simplicity, we chose the squeeze-and-excitation (SE) attention mechanism. As shown in Figure 3, the SE module consists of three steps. First, the squeeze operation compresses the features along the spatial dimensions, turning an H×W×B feature map into a 1×1×B descriptor. Second, the excitation operation generates a weight for each feature channel through learnable parameters W, implemented as two fully connected layers followed by a sigmoid. Finally, the weights output by the excitation step are treated as the importance of each feature channel, and each channel of the original feature map is multiplied by its weight, rescaling the original features along the channel dimension so as to highlight the important features.
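The squeeze, excitation and scale steps of the SE module can be sketched in NumPy as follows. The sketch assumes the usual SE layout of a bottleneck fully connected layer with ReLU followed by a second fully connected layer with sigmoid, omits biases, and uses a hypothetical reduction ratio r = 4; the feature sizes and random weights are placeholders.

```python
import numpy as np

def se_block(x, w1, w2):
    """Squeeze-and-excitation on an (H, W, B) feature map.

    w1: (B, B // r) and w2: (B // r, B) are the two fully connected layers;
    biases are omitted and the reduction ratio r is an assumption.
    """
    z = x.mean(axis=(0, 1))               # squeeze: global average pooling -> (B,)
    s = np.maximum(z @ w1, 0.0)           # excitation, FC 1 + ReLU -> (B // r,)
    s = 1.0 / (1.0 + np.exp(-(s @ w2)))   # excitation, FC 2 + sigmoid -> (B,), in (0, 1)
    return x * s, s                       # scale: multiply each channel by its weight


rng = np.random.default_rng(0)
H, W, B, r = 8, 8, 16, 4                  # toy sizes (hypothetical)
x = rng.normal(size=(H, W, B))
y, weights = se_block(x, rng.normal(size=(B, B // r)), rng.normal(size=(B // r, B)))
print(y.shape, weights.shape)             # (8, 8, 16) (16,)
```

The module adds only 2B²/r weights, which is why it is a cheap way to reweight channels in the convolutional branch.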

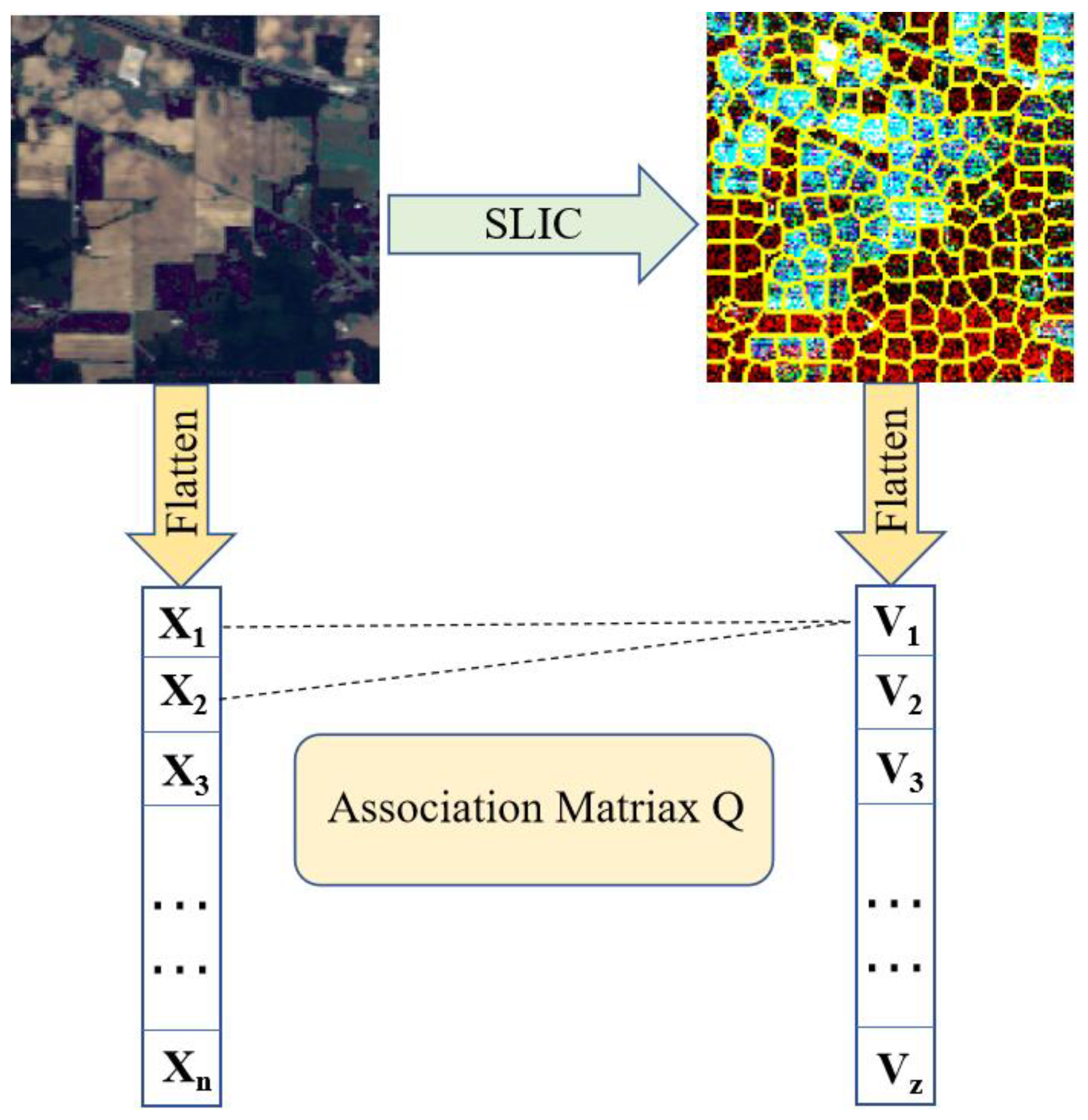

2.5. Superpixel segmentation and feature conversion module

A GCN can only be applied to graph-structured data, so to apply a GCN to a hyperspectral image, the image must first be converted into a graph. The simplest method is to treat each pixel of the image as a node of the graph, but this leads to a huge computational cost, because a dense adjacency matrix grows quadratically with the number of nodes. Superpixel segmentation is therefore commonly applied to the HSI first.

Common superpixel segmentation algorithms include SLIC [31], QuickShift [32] and Mean-Shift [33]. SLIC forms superpixels by assigning image pixels to the nearest cluster centers based on the spatial distance and color difference between pixels; it is computationally simple and produces good results compared with other segmentation methods.

The main parameter of the SLIC algorithm is the number of superpixels K (a compactness weight that balances color and spatial distance can also be set). First, suppose an image with M pixels is to be partitioned into K superpixels, so that each superpixel contains about M/K pixels. Assuming that the superpixels are approximately square and uniformly distributed, the side length of each superpixel, which is also the interval between the initial seed points (cluster centers) on a regular grid, is S = sqrt(M/K).

Second, to prevent seed points from falling on noisy pixels or edges of the image, which would degrade the segmentation, the gradient is computed in the 3×3 neighborhood of each seed point and the seed point is moved to the position with the lowest gradient in that neighborhood.

Finally, the cluster centers are updated iteratively. For each cluster center, the pixels in the 2S×2S region around it are traversed, and each pixel is assigned to the nearest cluster center in terms of a combined color and spatial distance, which completes one iteration. The centroid of each superpixel is then recalculated and the process is repeated; convergence is usually reached within 10 iterations.

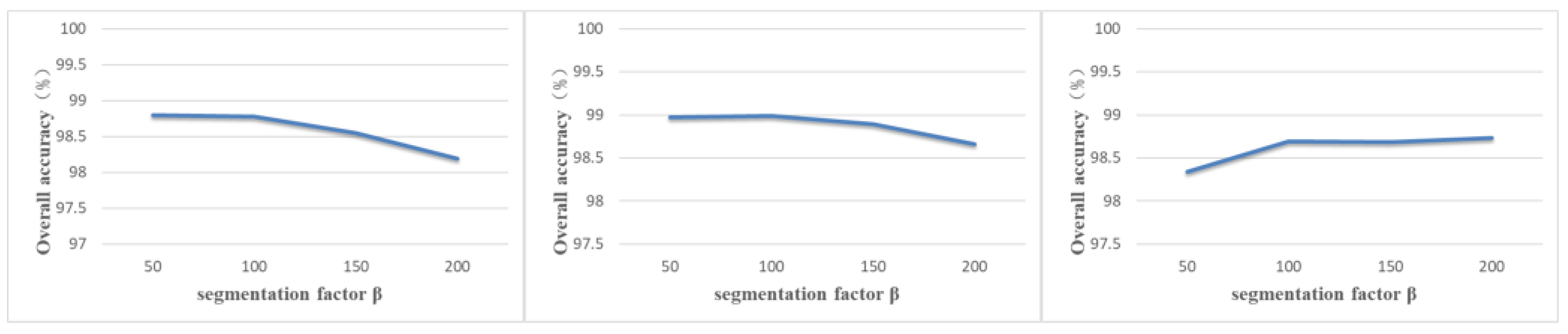

In this paper, the number of superpixels differs between datasets and depends on the total number of pixels in each dataset: it is set to $Z = (H \times W)/\beta$, where H and W are the height and width of the image and $\beta$ is a segmentation factor that controls the number of superpixels, set to 100 in this paper.
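For readers who want to reproduce the segmentation step, the following is a simplified pure-NumPy sketch of SLIC for a multiband image, combined with the rule of roughly H×W/100 superpixels (segmentation factor 100). It follows the steps above (grid seeding with interval S, moving seeds to the lowest gradient in a 3×3 window, assignment within a 2S×2S window, center update), but it is our own illustration: it uses squared distances, an assumed compactness of 10 and no connectivity post-processing, and the names `num_superpixels` and `slic` are hypothetical. In practice a library implementation such as scikit-image's `skimage.segmentation.slic` would normally be used.

```python
import numpy as np

def num_superpixels(H, W, beta=100):
    """Z = (H * W) / beta, rounded down and kept at least 1."""
    return max((H * W) // beta, 1)

def slic(img, n_segments, compactness=10.0, n_iter=10):
    """Simplified SLIC for an (H, W, B) image; returns an (H, W) integer label map."""
    img = np.asarray(img, dtype=float)
    H, W, _ = img.shape
    S = max(int(np.sqrt(H * W / n_segments)), 1)        # grid interval S = sqrt(M / K)

    # Step 1: place seed points on a regular grid with interval S.
    ys, xs = np.meshgrid(np.arange(S // 2, H, S), np.arange(S // 2, W, S), indexing="ij")
    centers = np.stack([ys.ravel(), xs.ravel()], axis=1).astype(float)

    # Step 2: move each seed to the lowest-gradient pixel in its 3x3 neighborhood.
    gy, gx = np.gradient(img, axis=(0, 1))
    grad = (gy ** 2 + gx ** 2).sum(axis=2)
    for n, (cy, cx) in enumerate(centers.astype(int)):
        y0, x0 = max(cy - 1, 0), max(cx - 1, 0)
        win = grad[y0:cy + 2, x0:cx + 2]
        dy, dx = np.unravel_index(np.argmin(win), win.shape)
        centers[n] = (y0 + dy, x0 + dx)
    feats = img[centers[:, 0].astype(int), centers[:, 1].astype(int)]  # seed spectra

    yy, xx = np.mgrid[0:H, 0:W]
    labels = np.zeros((H, W), dtype=int)
    for _ in range(n_iter):
        dist = np.full((H, W), np.inf)
        # Step 3: search only the 2S x 2S window around each center.
        for k in range(len(centers)):
            cy, cx = centers[k]
            y0, y1 = int(max(cy - S, 0)), int(min(cy + S + 1, H))
            x0, x1 = int(max(cx - S, 0)), int(min(cx + S + 1, W))
            d_spec = ((img[y0:y1, x0:x1] - feats[k]) ** 2).sum(axis=2)
            d_xy = (yy[y0:y1, x0:x1] - cy) ** 2 + (xx[y0:y1, x0:x1] - cx) ** 2
            d = d_spec + (compactness / S) ** 2 * d_xy   # combined spectral-spatial distance
            closer = d < dist[y0:y1, x0:x1]
            dist[y0:y1, x0:x1][closer] = d[closer]
            labels[y0:y1, x0:x1][closer] = k
        # Step 4: move each center to the mean position and spectrum of its pixels.
        for k in range(len(centers)):
            mask = labels == k
            if mask.any():
                centers[k] = (yy[mask].mean(), xx[mask].mean())
                feats[k] = img[mask].mean(axis=0)
    return labels


rng = np.random.default_rng(0)
img = rng.random((30, 30, 4))        # toy 30 x 30 image with 4 bands (hypothetical)
Z = num_superpixels(30, 30)          # 900 // 100 = 9
labels = slic(img, Z)
print(Z, labels.shape)               # 9 (30, 30)
```

Restricting the search to a 2S×2S window is what keeps each iteration linear in the number of pixels rather than proportional to pixels times superpixels.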

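Note that each superpixel contains a different number of pixels and the data structures of the two branches differ, so the features of the CNN branch and the GCN branch cannot be fused directly. Inspired by [25], we apply a data transformation module that allows the features obtained from the GCN branch to be fused with those from the CNN branch, as shown in Figure 4.

Let $\bar{\mathbf{X}} = \mathrm{Flatten}(\mathbf{X}) \in \mathbb{R}^{HW \times B}$ denote the HSI flattened along its spatial dimensions, $\bar{\mathbf{X}}_i$ denote the i-th pixel in $\bar{\mathbf{X}}$, and $\mathbf{V}_j$ denote the average radiance of the pixels contained in superpixel $S_j$. Let $\mathbf{Q} \in \mathbb{R}^{HW \times Z}$ be the association matrix between pixels and superpixels, where Z denotes the number of superpixels. Then

$$Q_{i,j} = \begin{cases} 1, & \text{if } \bar{\mathbf{X}}_i \in S_j \\ 0, & \text{otherwise} \end{cases} \tag{8}$$

where $Q_{i,j}$ denotes the value of the association matrix $\mathbf{Q}$ at position $(i, j)$. Finally, the feature conversion process can be represented by

$$\mathbf{V} = \mathrm{Encoder}(\mathbf{X}; \mathbf{Q}) = \hat{\mathbf{Q}}^{\top}\mathrm{Flatten}(\mathbf{X}) \tag{9}$$

$$\mathbf{X}' = \mathrm{Decoder}(\mathbf{V}; \mathbf{Q}) = \mathrm{Reshape}(\mathbf{Q}\mathbf{V}) \tag{10}$$

where $\hat{\mathbf{Q}}$ denotes $\mathbf{Q}$ normalized by column, $\mathrm{Reshape}(\cdot)$ denotes restoring the spatial dimensions of the flattened data, $\mathbf{V}$ denotes the node features of the superpixels, and $\mathbf{X}'$ denotes the features converted back to the Euclidean domain. In summary, the graph encoder projects features from the image space to the graph space, and the graph decoder assigns node features back to the pixels.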
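The graph encoder and decoder reduce to two matrix products with the association matrix, as the following NumPy sketch shows. It builds the pixel-to-superpixel matrix from a label map, averages pixel features into node features with the column-normalized matrix, and copies node features back to their pixels. The helper names and the 4×4 toy image split into four 2×2 superpixels are hypothetical, and the sketch assumes every superpixel contains at least one pixel.

```python
import numpy as np

def association_matrix(labels, Z):
    """Q in {0, 1}^(HW x Z): Q[i, j] = 1 iff flattened pixel i lies in superpixel j."""
    flat = labels.ravel()
    Q = np.zeros((flat.size, Z))
    Q[np.arange(flat.size), flat] = 1.0
    return Q

def graph_encoder(X, Q):
    """Pixels -> superpixel nodes: column-normalized Q, transposed, times Flatten(X)."""
    Q_hat = Q / Q.sum(axis=0, keepdims=True)   # assumes every superpixel has >= 1 pixel
    return Q_hat.T @ X.reshape(-1, X.shape[-1])

def graph_decoder(V, Q, H, W):
    """Superpixel nodes -> pixels: Reshape(Q V); each pixel receives its node's feature."""
    return (Q @ V).reshape(H, W, -1)


# Toy 4 x 4 image with 2 bands, split into four 2 x 2 superpixels (hypothetical).
labels = np.repeat(np.repeat(np.arange(4).reshape(2, 2), 2, axis=0), 2, axis=1)
X = np.arange(32, dtype=float).reshape(4, 4, 2)
Q = association_matrix(labels, Z=4)
V = graph_encoder(X, Q)              # (4, 2): mean spectrum of each superpixel
X_back = graph_decoder(V, Q, 4, 4)   # (4, 4, 2)
print(V[0])                          # [5. 6.]
```

Because the decoder gives every pixel in a superpixel the same feature, the decoded GCN output is piecewise constant, which is exactly the loss of local detail that the pixel-level CNN branch is meant to compensate for.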

5. Conclusions

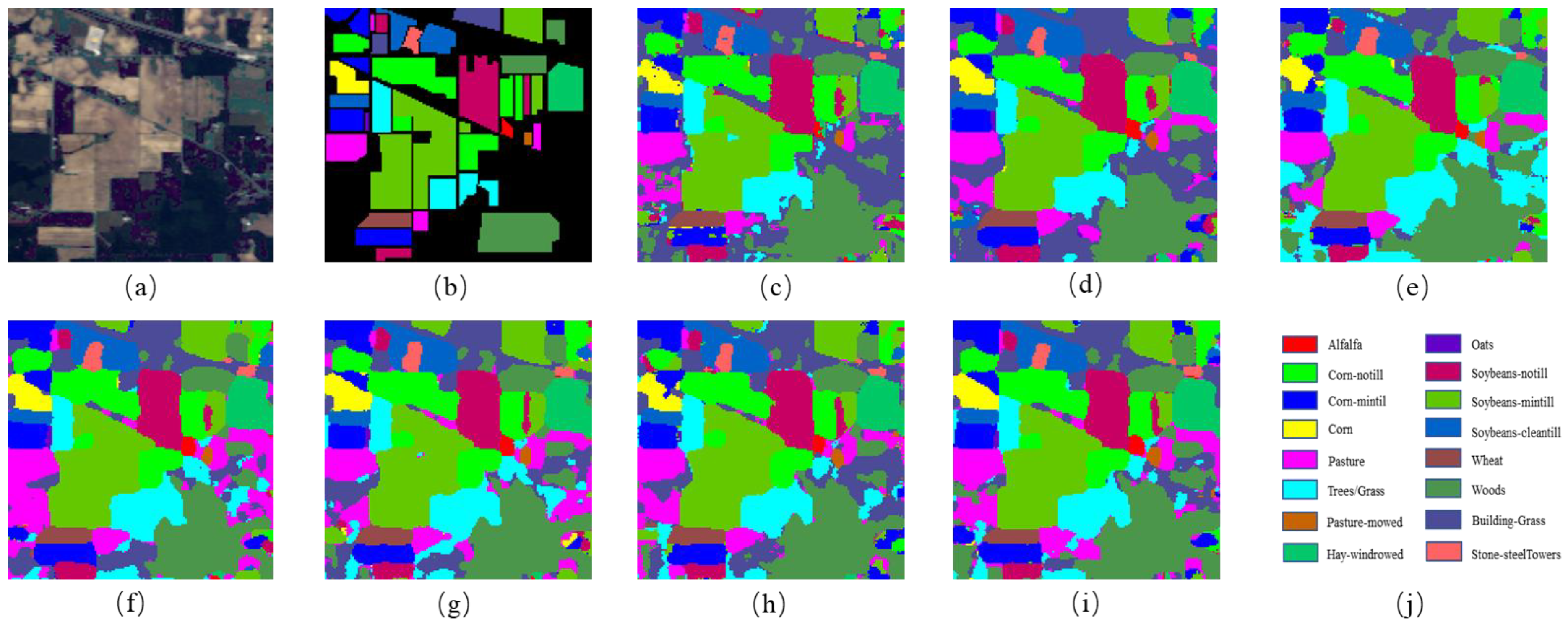

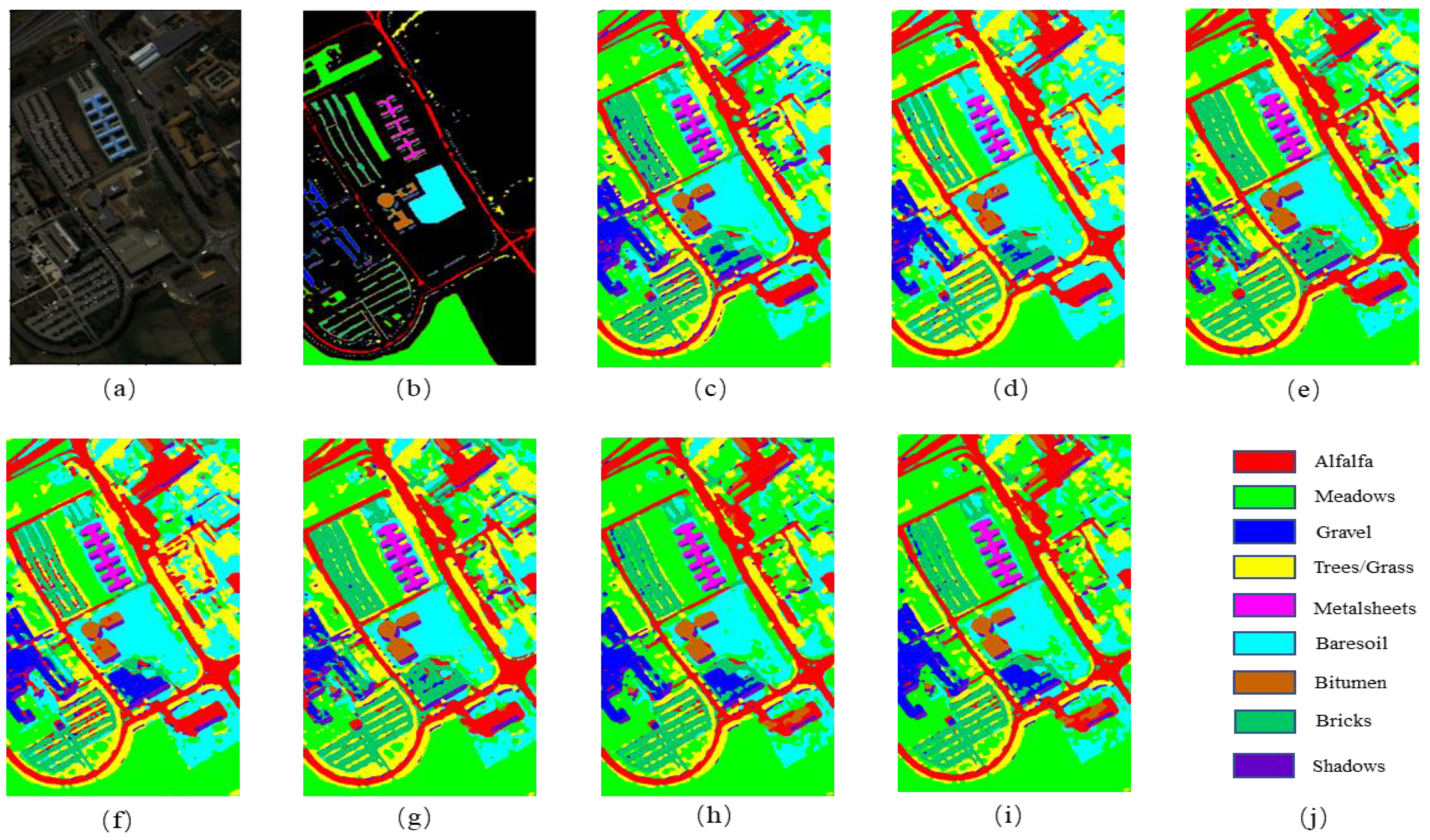

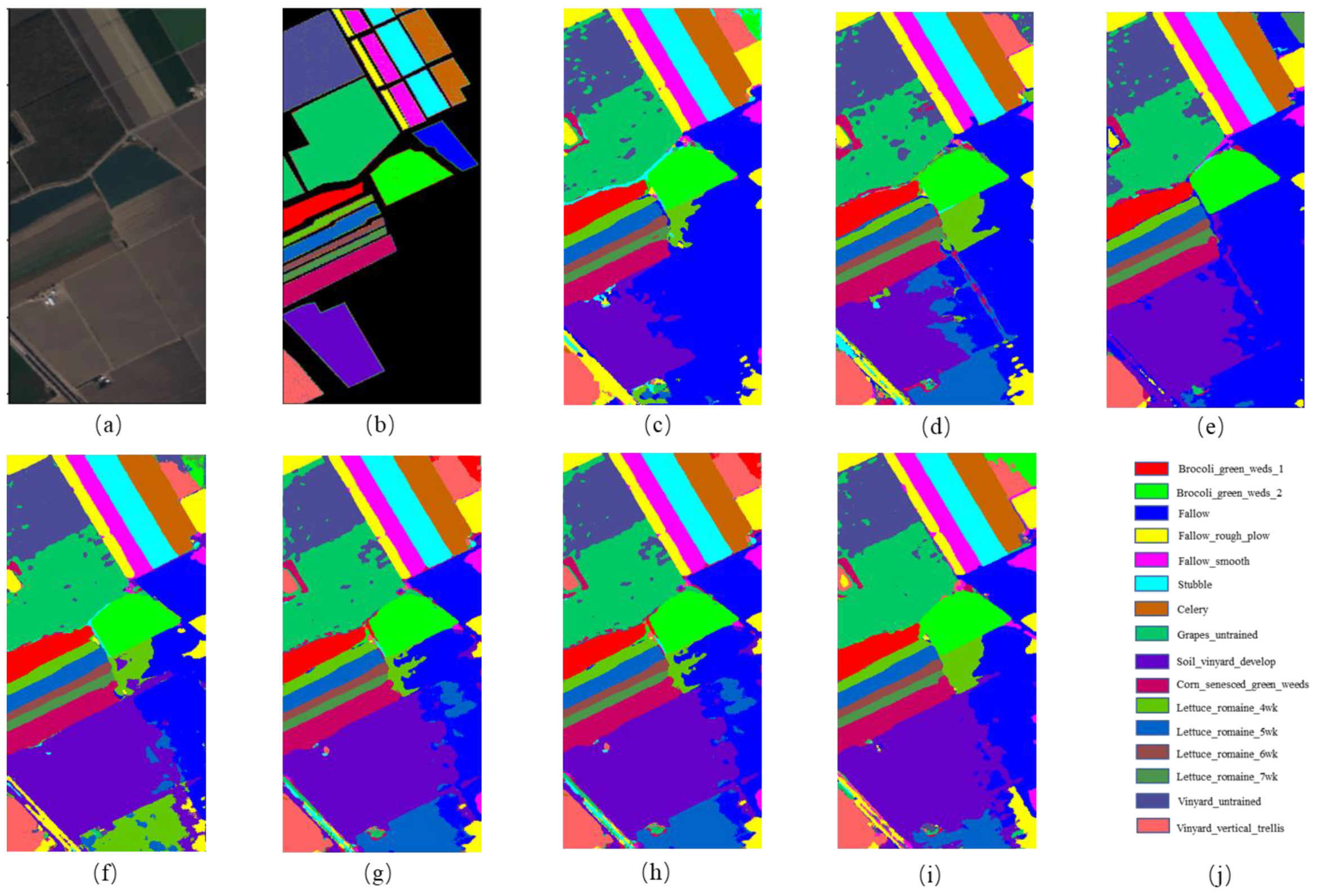

In recent years, GCNs have been applied to hyperspectral image classification by virtue of their ability to process graph-structured data. Compared with CNNs, GCNs can extract structural features and capture the relationships between nodes in irregular image regions. To reduce computational complexity, superpixel segmentation is often performed on the HSI first; however, the pixels within each superpixel node then share similar features, resulting in a lack of local detail in the classification map. To solve this problem, this paper proposes FCGN, a new hyperspectral image classification algorithm that fuses the features of a superpixel-based graph convolutional network with those of an attention-based convolutional network: the GCN extracts superpixel-level features, the attention-based CNN extracts local detail features, and the complementary features are combined to improve the classification results. To verify the effectiveness of the algorithm, experiments were conducted on three datasets and compared with several state-of-the-art algorithms, and the results showed that FCGN achieved better classification performance. FCGN still has some shortcomings. It does not consider the differences between neighboring nodes when constructing the graph, which may limit the ability of the model, and it uses only a simple feature concatenation (splicing) method for fusion. Graph construction and new fusion mechanisms will therefore be explored in future research.

Author Contributions

Conceptualization, L.G.; methodology, L.G.; software, L.G.; validation, L.G., S.X. and C.H.; formal analysis, L.G.; investigation, L.G.; resources, L.G., S.X. and C.H.; data curation, L.G.; writing—original draft preparation, L.G.; writing—review and editing, L.G. and Y.Y.; visualization, L.G. and S.X.; supervision, C.H. and Y.Y.; project administration, C.H.; funding acquisition, C.H. and Y.Y. All authors have read and agreed to the published version of the manuscript.