Submitted: 18 May 2023

Posted: 19 May 2023


Abstract

Keywords:

1. Introduction

2. Introduction and Preprocessing of the Dataset

2.1 Brief Introduction to Ground-Penetrating Radar

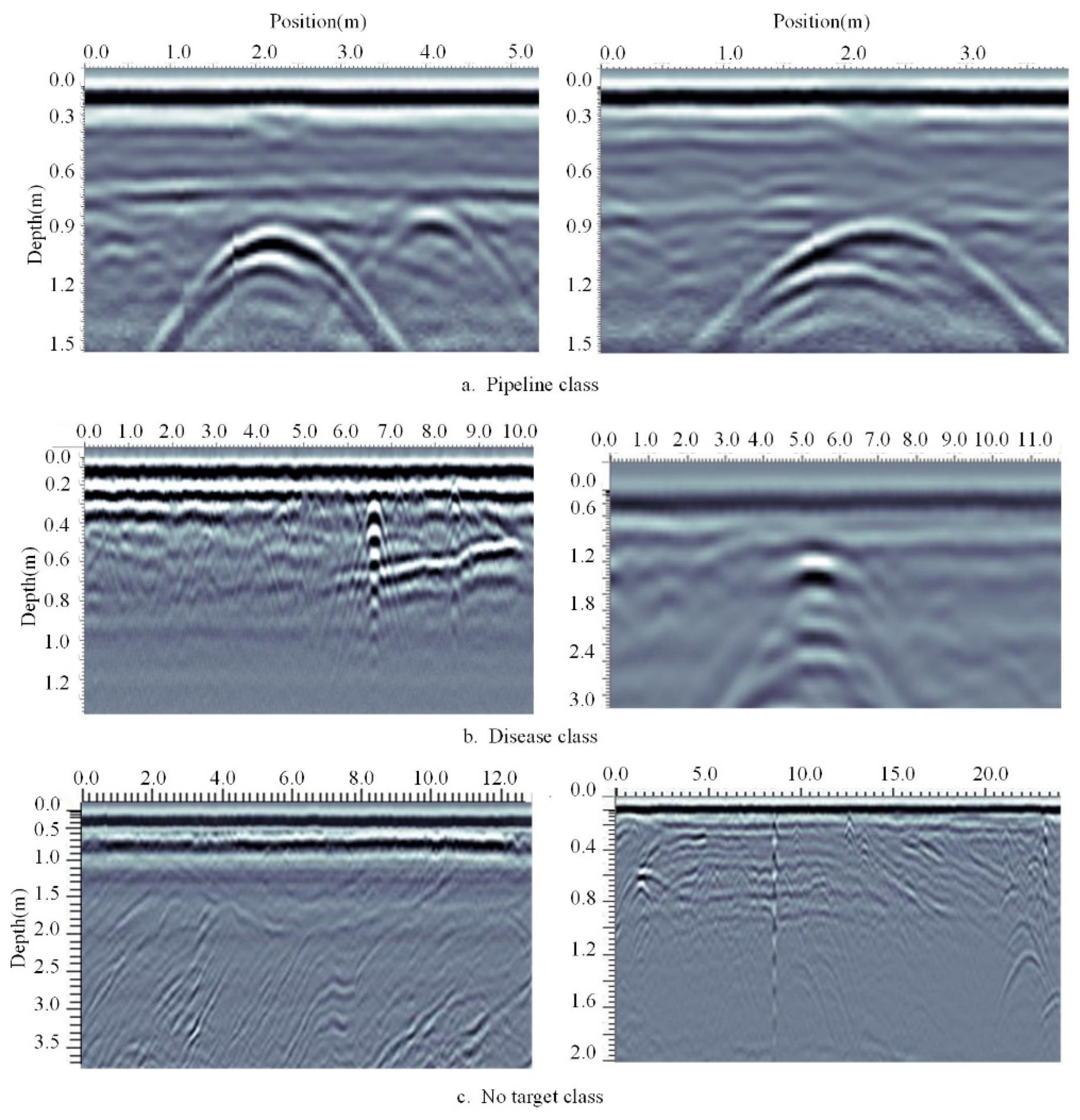

2.2 Real Experimental Data

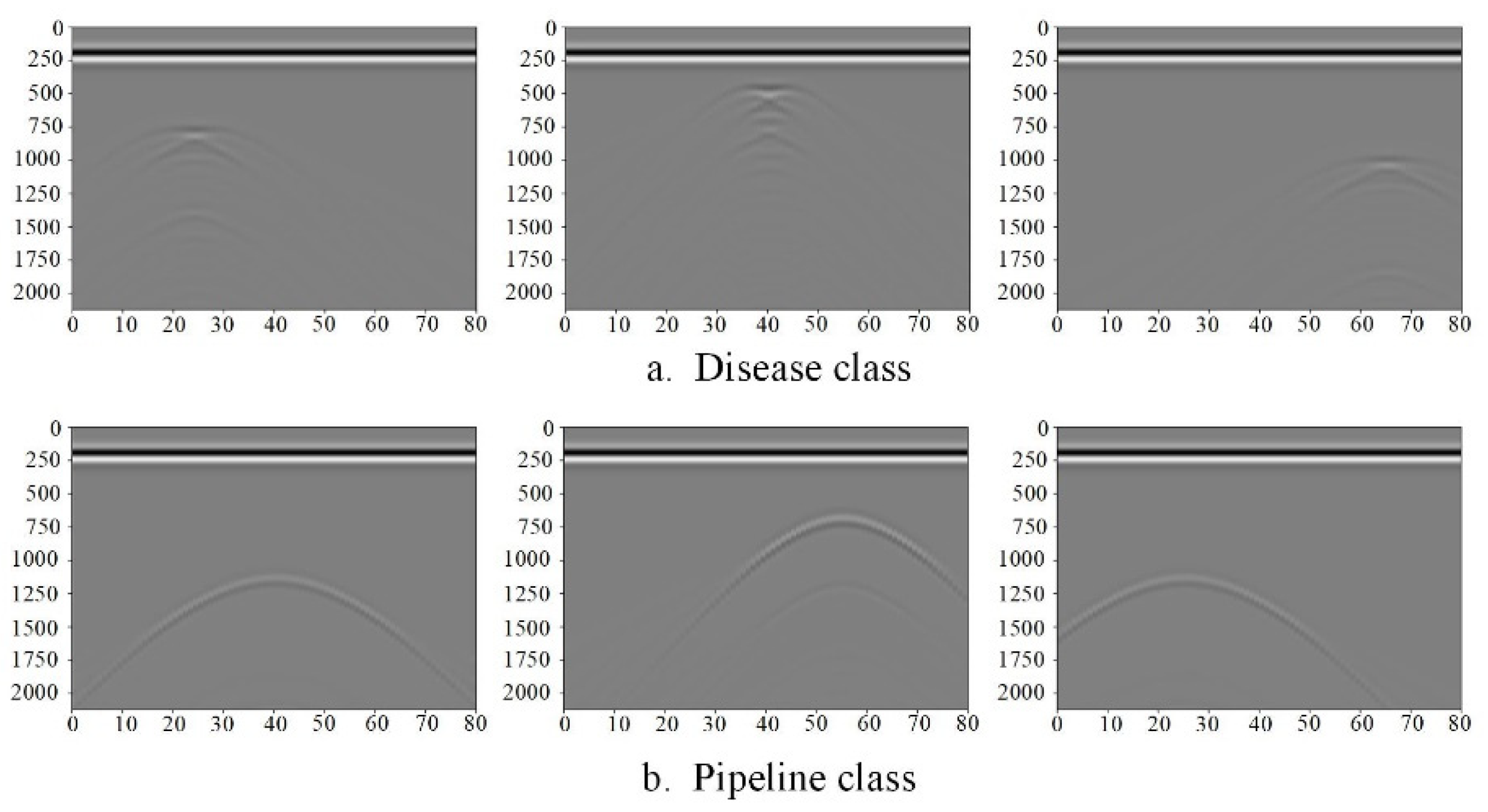

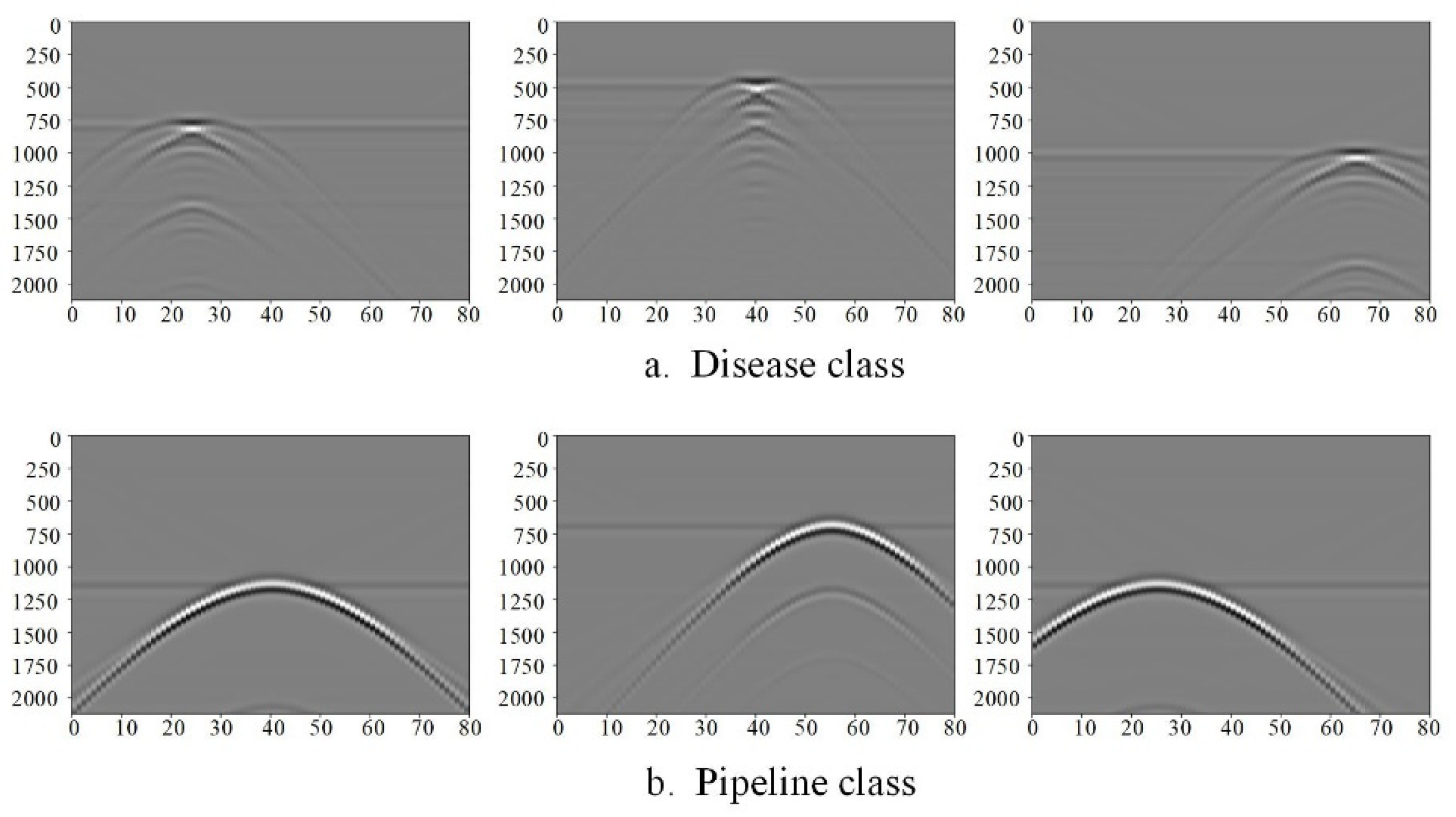

2.3 gprMax Simulation Data

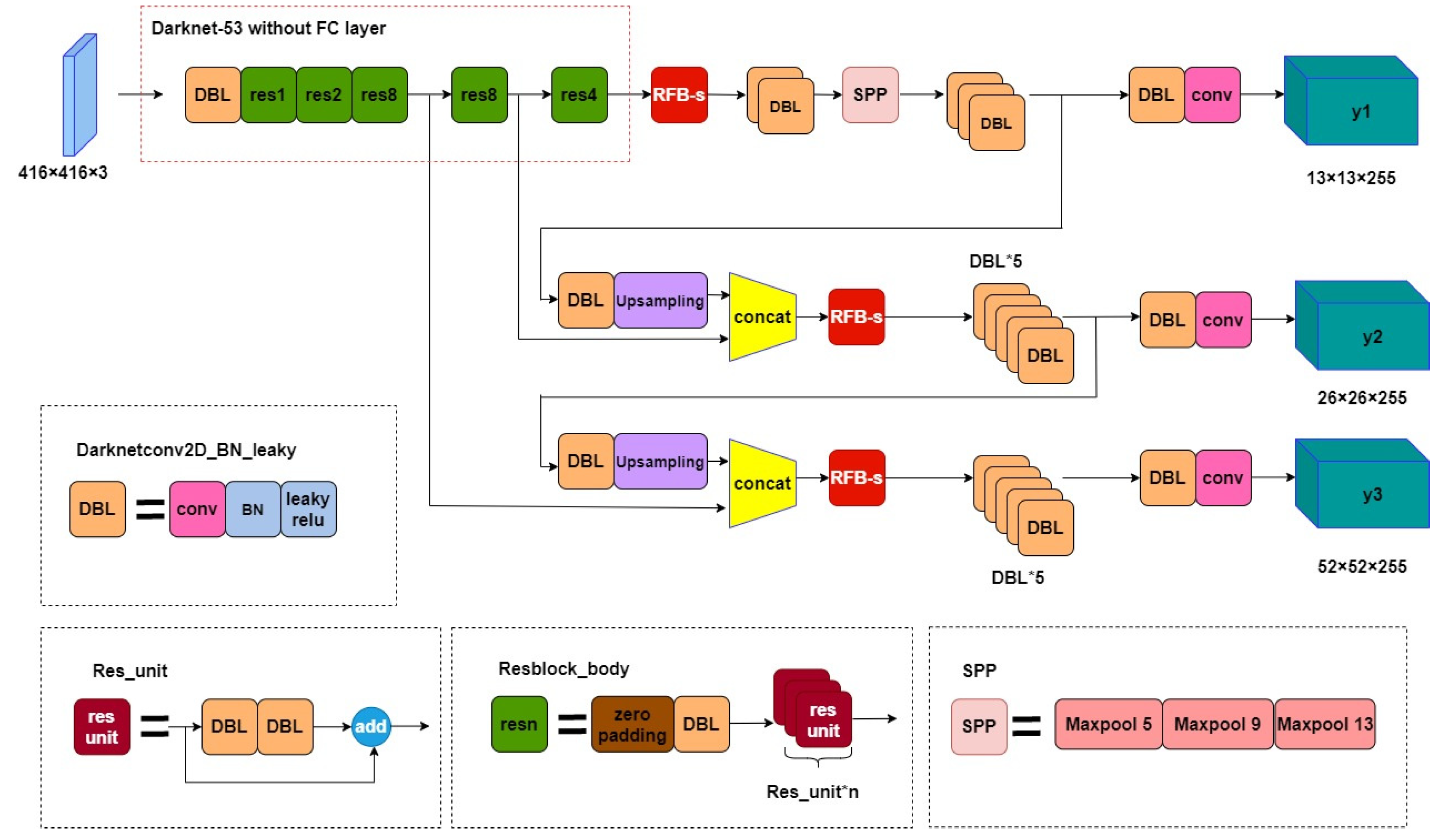

3. Introduction and Improvement of the YOLOv3-SPP Algorithm

3.1 YOLOv3-SPP
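In the widely used YOLOv3-SPP configuration, the spatial pyramid pooling (SPP) block applies stride-1 max pooling with kernel sizes 5, 9 and 13 in parallel, using "same" padding so the spatial size is preserved, and concatenates the pooled maps with the input along the channel axis, which quadruples the channel count. The pure-Python sketch below illustrates that operation on small 2-D lists. It assumes this standard configuration rather than reproducing the authors' code, and the function names `max_pool_same` and `spp` are hypothetical.

```python
def max_pool_same(fmap, k):
    """Stride-1 max pooling with 'same' padding (pad = k // 2) on one 2-D channel.

    Out-of-bounds positions are skipped, which is equivalent to padding with -inf.
    """
    h, w = len(fmap), len(fmap[0])
    r = k // 2
    return [
        [
            max(
                fmap[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def spp(channels, kernels=(5, 9, 13)):
    """SPP block: concatenate the input channels with pooled copies for each kernel size.

    `channels` is a list of 2-D lists (C x H x W); the result has
    C * (1 + len(kernels)) channels, all with the same H x W.
    """
    pooled = [max_pool_same(c, k) for k in kernels for c in channels]
    return list(channels) + pooled
```

Because the padding keeps every pooled map at the input's height and width, the maps can be stacked directly; a real network performs the same operation on tensors with a deep learning framework.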

3.2 Improved YOLOv3-SPP

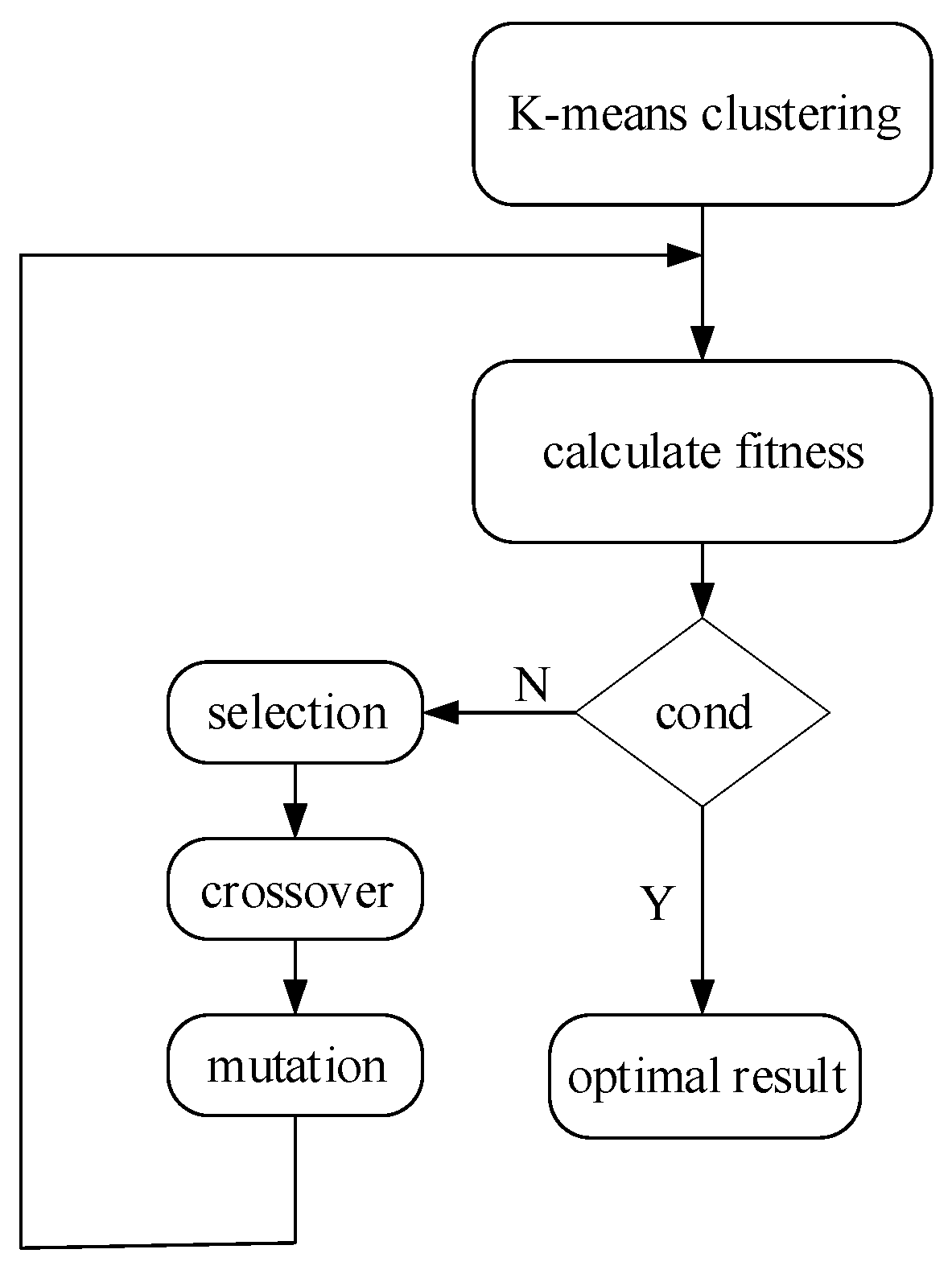

3.2.1. Optimizing the Anchor Box Values

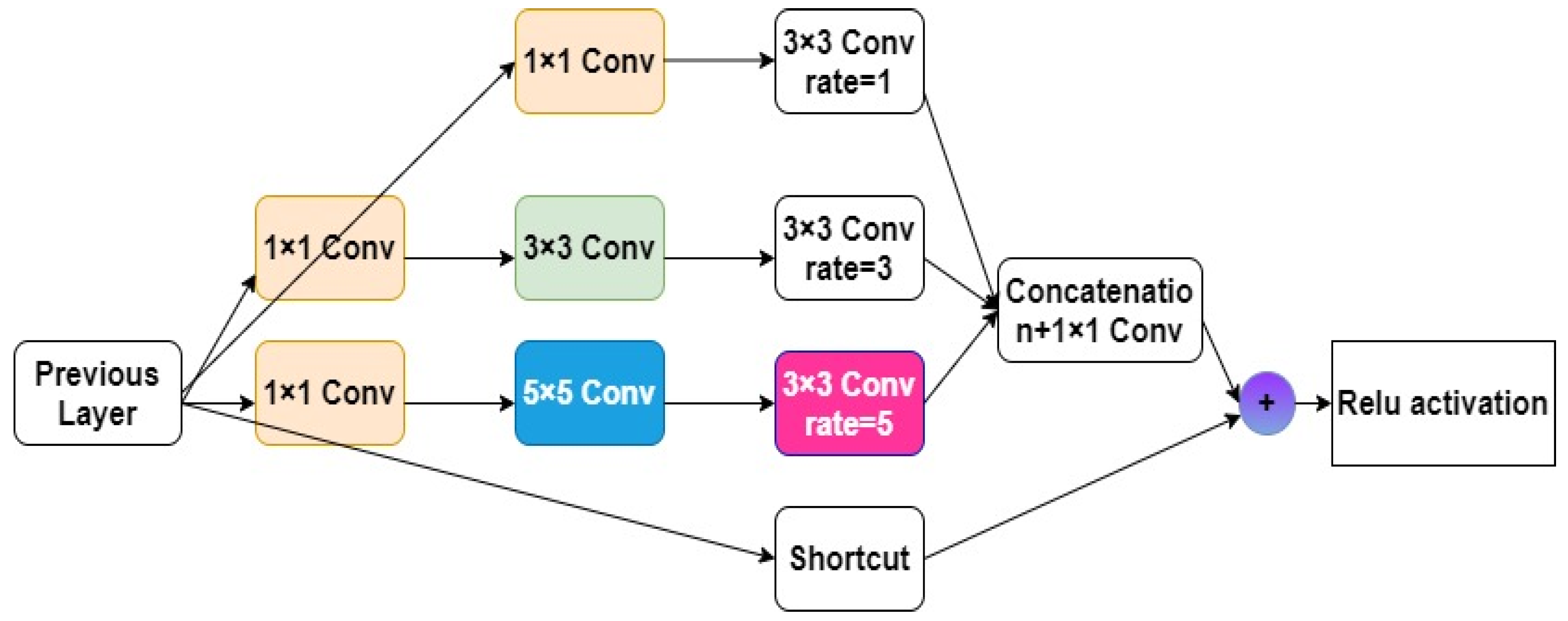

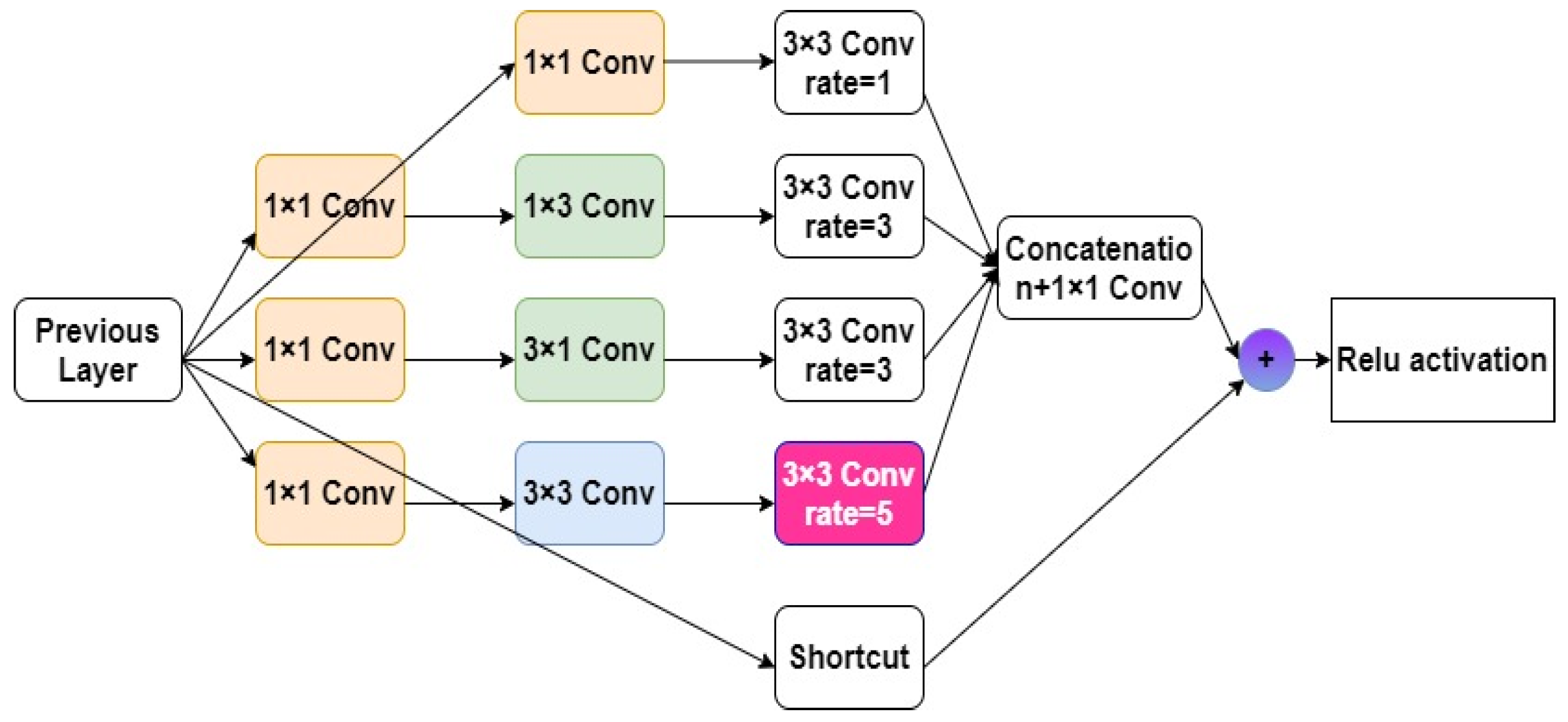
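Anchor sizes for YOLO-family detectors are commonly optimized by clustering the (width, height) pairs of the training boxes with k-means, using d = 1 − IoU as the distance instead of Euclidean distance so that large and small boxes are treated comparably. The sketch below shows that standard procedure. It is an assumption, not necessarily the authors' exact procedure (which may use a different initialization or a further refinement step), and the helper names and toy box sizes are hypothetical.

```python
import random


def iou_wh(box, anchor):
    """IoU of two boxes described only by (width, height), aligned at a shared corner."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - inter
    return inter / union


def kmeans_anchors(boxes, k, iters=100, seed=0):
    """Cluster (w, h) pairs into k anchors with k-means, using d = 1 - IoU as the distance."""
    rng = random.Random(seed)
    anchors = rng.sample(boxes, k)  # random initial centroids drawn from the data
    for _ in range(iters):
        # Assignment step: nearest anchor = highest IoU (smallest 1 - IoU).
        clusters = [[] for _ in range(k)]
        for b in boxes:
            best = max(range(k), key=lambda i: iou_wh(b, anchors[i]))
            clusters[best].append(b)
        # Update step: mean width and height of each cluster (keep the old anchor if empty).
        new_anchors = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else anchors[i]
            for i, c in enumerate(clusters)
        ]
        if new_anchors == anchors:  # converged: assignments no longer change the centroids
            break
        anchors = new_anchors
    return sorted(anchors, key=lambda a: a[0] * a[1])  # ordered from small to large area


def avg_iou(boxes, anchors):
    """Mean best-anchor IoU over all boxes, a common measure of how well anchors fit."""
    return sum(max(iou_wh(b, a) for a in anchors) for b in boxes) / len(boxes)


boxes = [(10, 12), (11, 13), (50, 60), (52, 58), (120, 100), (118, 105)]  # hypothetical sizes
anchors = kmeans_anchors(boxes, 3)
print(anchors, avg_iou(boxes, anchors))
```

YOLOv3 uses nine anchors, three per detection scale, so in practice `k` would be 9 and the sorted anchors would be split into three groups by size.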

3.2.2. RFB and RFB-s Modules

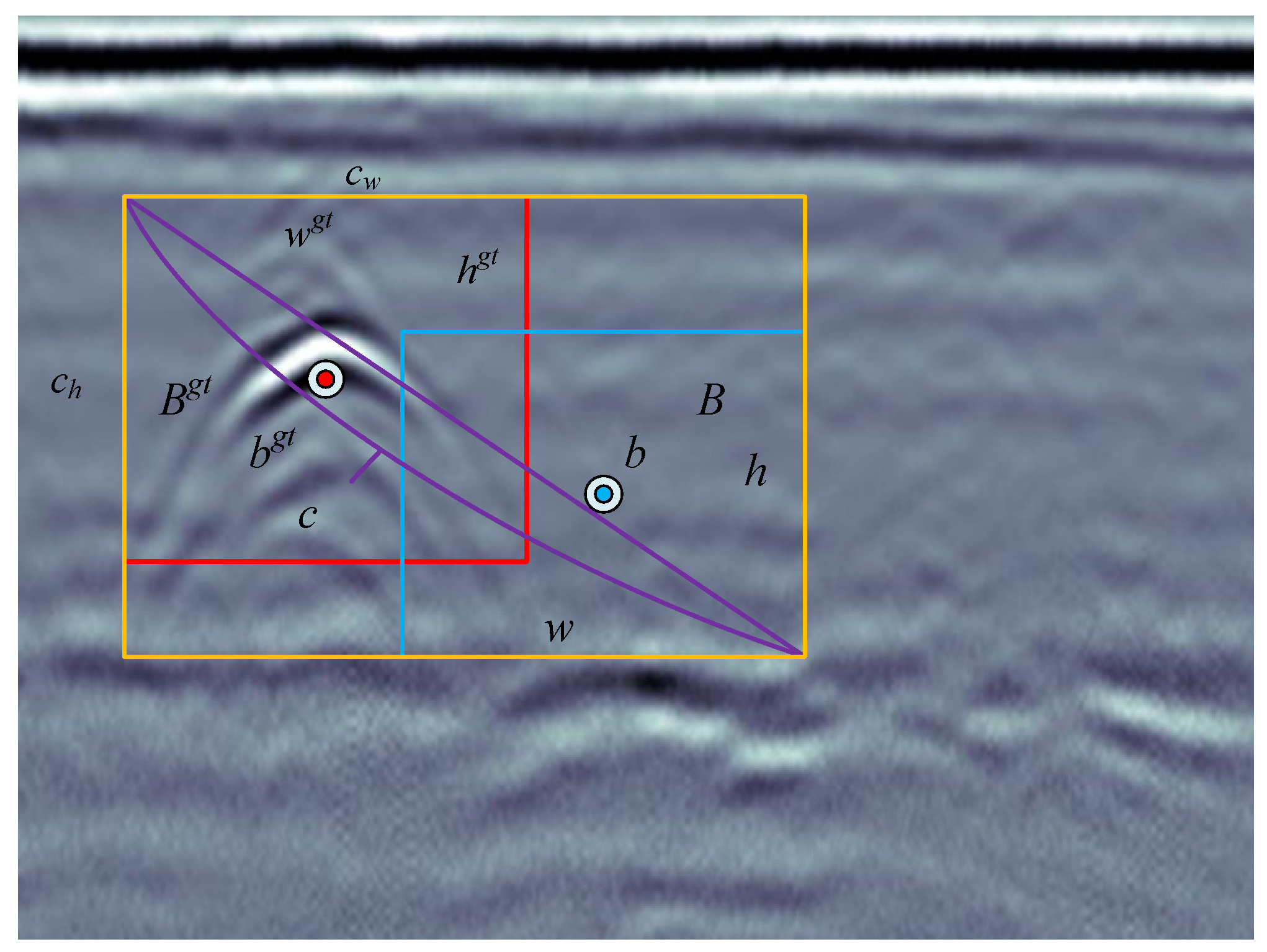

3.2.3. Introducing the EIoU Loss Function
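EIoU extends the IoU loss with three penalty terms: the squared distance between the box centres normalized by the squared diagonal c² of the smallest enclosing box, and the squared width and height differences normalized by that enclosing box's squared width C_w² and height C_h², i.e. L = 1 − IoU + ρ²(b, b^gt)/c² + ρ²(w, w^gt)/C_w² + ρ²(h, h^gt)/C_h². The scalar sketch below computes this for a single pair of boxes in (x1, y1, x2, y2) format. It omits the focal weighting of Focal-EIoU and the batching a training framework would need, and the function name `eiou_loss` is hypothetical.

```python
def eiou_loss(pred, target, eps=1e-9):
    """EIoU loss for two boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Plain IoU term.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Smallest enclosing box: width C_w, height C_h, squared diagonal c^2.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2

    # Squared Euclidean distance between the two box centres.
    rho2 = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 + ((py1 + py2) / 2 - (ty1 + ty2) / 2) ** 2

    return (
        1 - iou
        + rho2 / (c2 + eps)                 # centre-distance penalty
        + (pw - tw) ** 2 / (cw ** 2 + eps)  # width penalty
        + (ph - th) ** 2 / (ch ** 2 + eps)  # height penalty
    )
```

When a predicted box has the same aspect ratio as its target but a different size, the aspect-ratio penalty of CIoU vanishes while the separate width and height terms of EIoU do not; this is the motivation usually given for EIoU.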

3.3 Model Evaluation Metrics
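The comparison tables in this document report mAP (%) and FPS. As an illustration of the accuracy metric, the sketch below computes per-class average precision (AP) as the area under the precision-recall curve with all-point interpolation (the PASCAL VOC 2010+ convention) and then averages AP over classes to obtain mAP. It assumes detections have already been matched to ground truth at an IoU threshold such as 0.5; the threshold and interpolation scheme actually used by the authors are assumptions here, as are the function names.

```python
def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class.

    scores: confidence of each detection of this class
    is_tp:  True if the detection matched a not-yet-matched ground-truth box
            (e.g. at IoU >= 0.5), else False
    num_gt: number of ground-truth boxes of this class
    """
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for i in order:  # walk detections from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for j in range(len(precisions) - 2, -1, -1):
        precisions[j] = max(precisions[j], precisions[j + 1])
    # Area under the step-wise precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class):
    """mAP = mean of per-class AP; per_class is a list of (scores, is_tp, num_gt) tuples."""
    aps = [average_precision(*c) for c in per_class]
    return sum(aps) / len(aps)
```

For example, three detections scored 0.9, 0.8 and 0.7, of which the first and third are true positives against two ground-truth boxes, give AP = 0.5 × 1 + 0.5 × 2/3 ≈ 0.833; multiplying mAP by 100 gives the percentage form used in the tables.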

4. Analysis of Experimental Results

4.1. Experimental Environment

4.2. Model Parameter Settings and Training

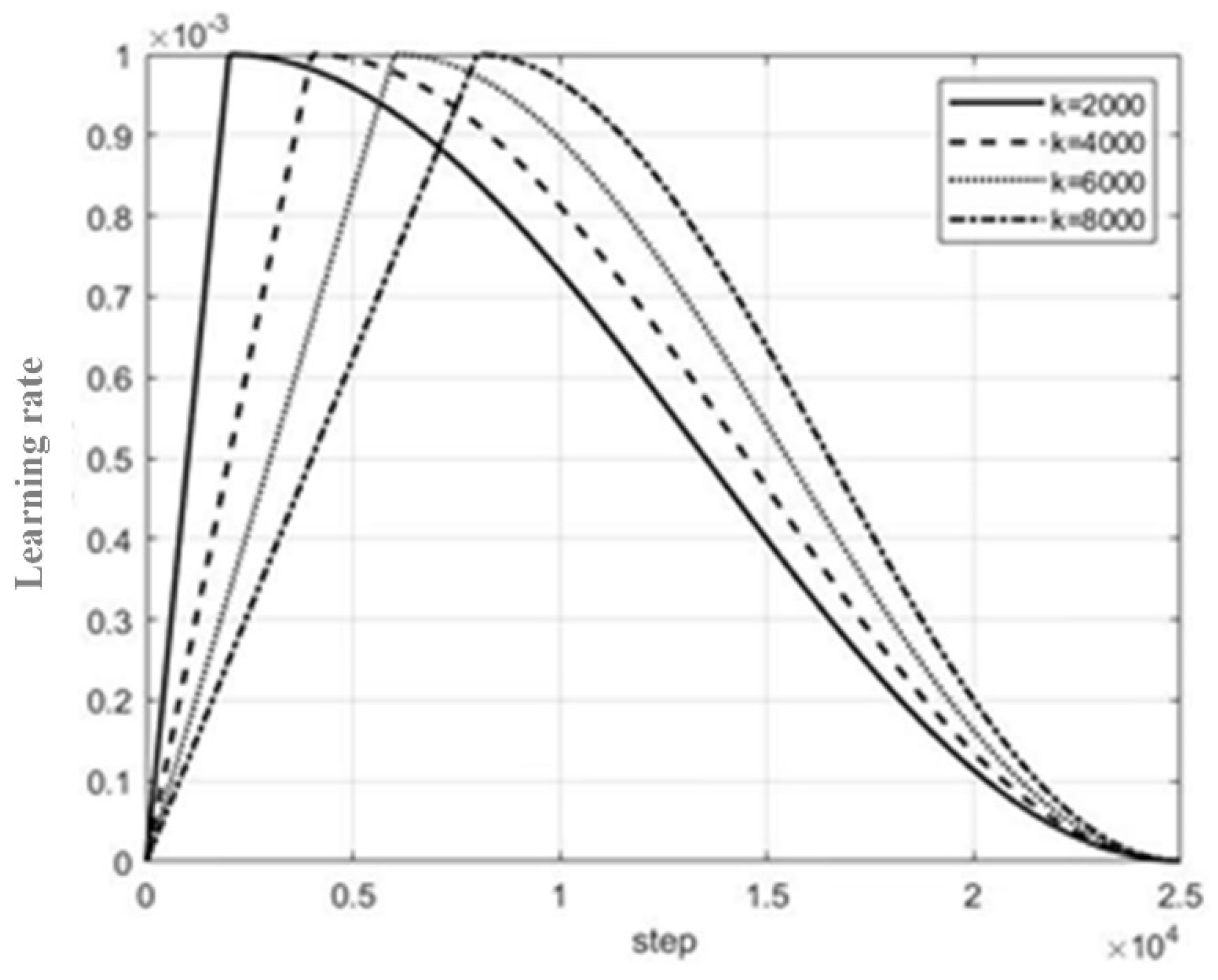

4.3. Ablation Experiments

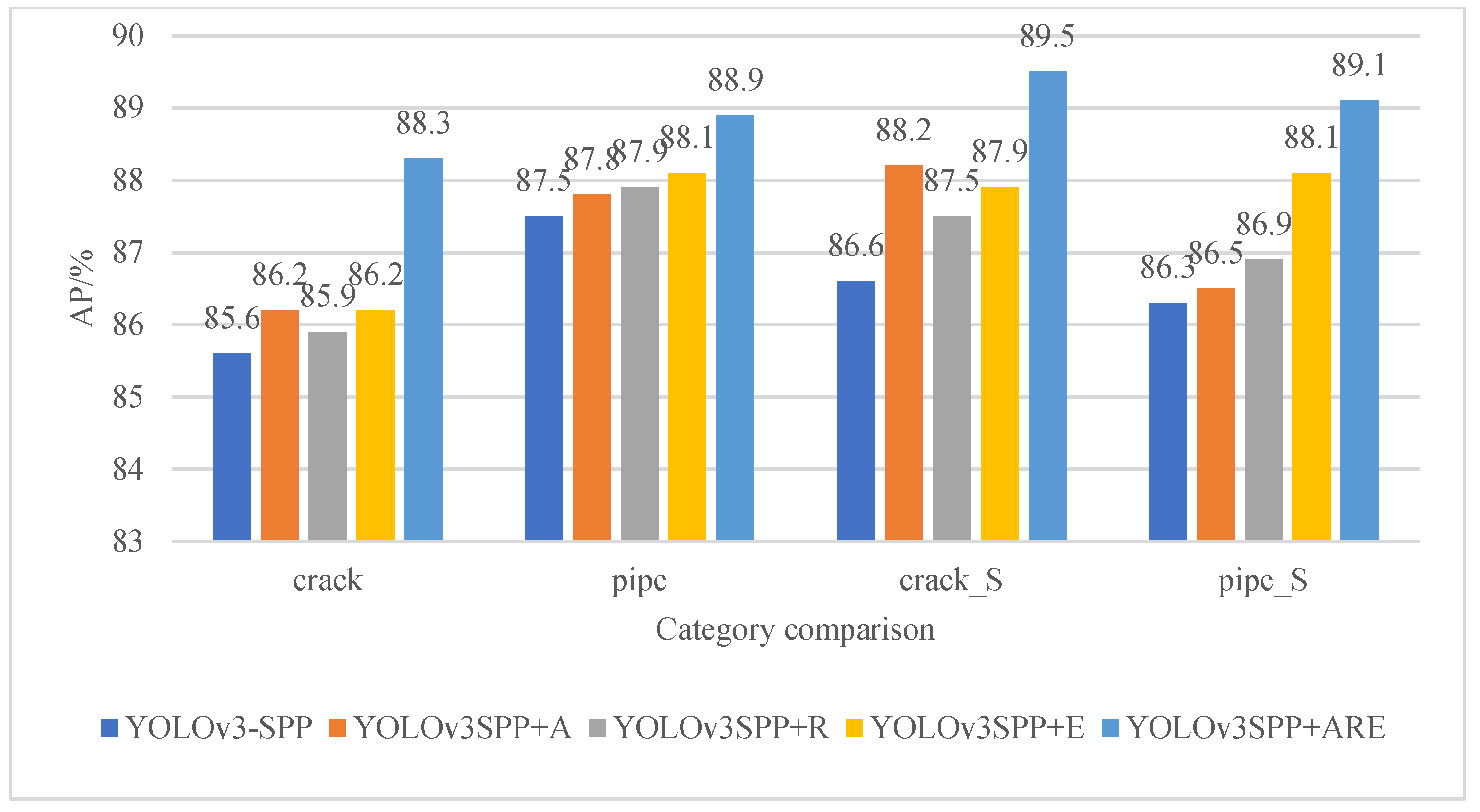

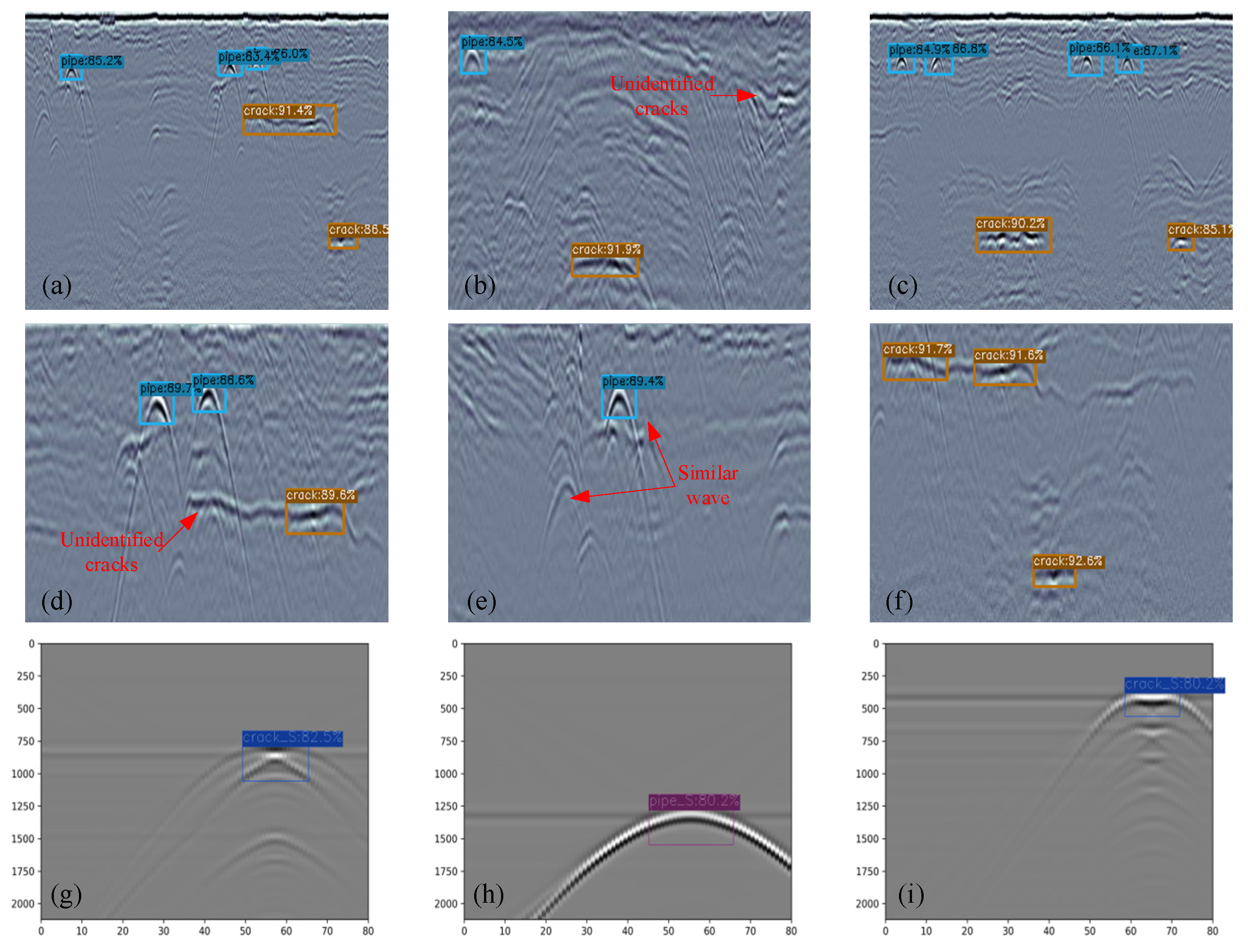

4.4. Comparative Analysis of Models

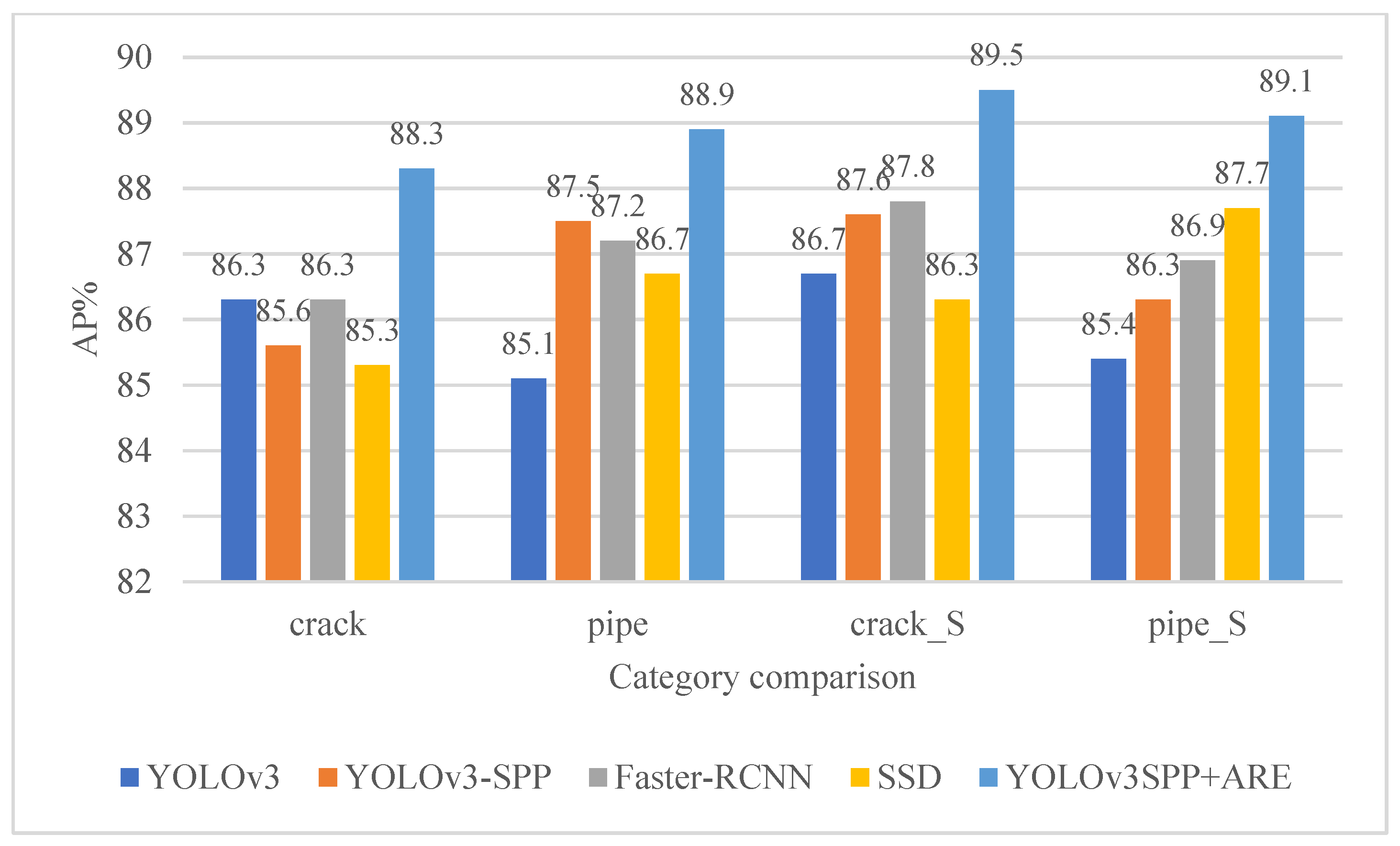

5. Conclusion


| Models | mAP (%) | FPS (frames/s) |
|---|---|---|
| YOLOv3-SPP | 86.5 | 67 |
| YOLOv3SPP+A | 87.2 | 67 |
| YOLOv3SPP+R | 87.1 | 60 |
| YOLOv3SPP+E | 87.6 | 67 |
| YOLOv3SPP+ARE | 89.0 | 60 |

A = optimized anchor boxes; R = RFB and RFB-s modules; E = EIoU loss function.

| Models | mAP (%) | FPS (frames/s) |
|---|---|---|
| YOLOv3 | 85.9 | 72 |
| YOLOv3-SPP | 86.8 | 67 |
| Faster R-CNN | 87.1 | 17 |
| SSD | 86.5 | 40 |
| YOLOv3SPP+ARE | 89.0 | 60 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).