1. Introduction

The last several decades have seen a significant amount of tunnelling construction, which has raised concerns about the need to improve the approaches currently used to supervise civil and mining projects, and to inspect and monitor them in general. Underground tunnels are often large and complicated structures that need specialized inspectors to carry out non-destructive inspection and evaluation procedures. These inspections and assessments are carried out on-site using visual and physical measurement methods, the choice of which depends on the type of tunnel and the material of its structure, and they are intended to ensure that the tunnel remains in good condition [1,2]. For efficient underground tunnel modelling, 3D photogrammetric modelling of inaccessible underground tunnels with unmanned aerial vehicles (UAVs), or drones, is a novel and promising method that has the potential to significantly increase the efficiency and accuracy of tunnel mapping and inspection. UAV photogrammetry [3,4,5,6,7,8,9] not only combines aerial and terrestrial photogrammetry to open up multiple applications in the close-range domain, but also offers low-cost alternatives to conventional manned aerial photogrammetry [10,11,12,13,14].

UAVs have become powerful tools for both practitioners and researchers, and their use in a wide range of research and engineering applications has grown substantially in recent years. UAVs are mostly used for image capture [15] because they save time, are low cost, require minimal fieldwork, and provide high precision. Moreover, UAV technology is commonly employed in geotechnical, tunnelling, and mining projects, mainly for mapping and 3D reconstruction [16,17,18].

According to Rossini et al. [16] and Salvini et al. [19], UAV photogrammetry is mostly applied to mapping, surveying, and other technical tasks. UAV photogrammetry-based 3D models have high precision and can be used for highly precise measurements; as a result, the approach is widely employed to depict the true state of a site. UAV-based 3D photogrammetric modelling of inaccessible underground tunnels is a technique for constructing 3D representations of inaccessible areas using UAVs. UAVs equipped with cameras can be used to rapidly examine and collect visual data from inaccessible post-event regions [20,21]. The Phantom 4 Pro is one of the most commonly used drones for 3D modelling. The drone captures many high-resolution images of the inaccessible tunnel, and photogrammetry software such as Agisoft PhotoScan is then used to generate a detailed 3D model of the location. Photogrammetry is the practice of measuring objects using photographs; it often produces a map, a drawing, or a three-dimensional model of a real-world object or land mass [22]. To build 3D maps with aerial photogrammetry, the camera is usually mounted on the drone and aimed vertically towards the ground, whereas to build 3D representations of monuments and statues, the camera is positioned horizontally. While the UAV flies along an autonomously planned route defined by waypoints, overlapping images (80 to 90% overlap) of the ground or object are captured. Achieving 80 to 90% overlap between photographs consistently by manual piloting would be difficult, so UAVs equipped with waypoint navigation are required.
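The link between forward overlap and waypoint spacing can be made concrete with a small calculation. The sketch below assumes a straight flight line and a known image footprint along the flight direction (the 3 m footprint in the usage lines is a hypothetical value, not one reported here); each new exposure may then advance only by the non-overlapping part of the footprint.

```python
import math


def capture_spacing(footprint_m: float, overlap: float) -> float:
    """Distance between consecutive exposures for a given forward overlap.

    footprint_m: length of the imaged area along the flight direction (m).
    overlap: forward overlap as a fraction, e.g. 0.8 for 80 %.
    """
    if footprint_m <= 0:
        raise ValueError("footprint must be positive")
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    # Only the non-overlapping part of the footprint is new coverage.
    return footprint_m * (1.0 - overlap)


def waypoints(length_m: float, spacing_m: float) -> list[float]:
    """Capture positions (m) along a straight line, including both ends."""
    n_intervals = math.ceil(length_m / spacing_m)
    # Clamp the last waypoint to the end of the line.
    return [min(i * spacing_m, length_m) for i in range(n_intervals + 1)]


if __name__ == "__main__":
    # Hypothetical 3 m footprint: 80 % overlap -> 0.6 m, 90 % -> 0.3 m.
    for overlap in (0.8, 0.9):
        print(overlap, round(capture_spacing(3.0, overlap), 3))
    print(len(waypoints(10.0, 0.5)))  # 21 capture points over 10 m
```

Raising the overlap from 80% to 90% halves the spacing and therefore roughly doubles the number of images, which is why consistent waypoint navigation matters more than manual piloting at these overlaps.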

Therefore, 3D photogrammetric modelling of inaccessible locations such as underground tunnels is an efficient way to produce high-resolution and accurate representations [23]. In recent years, the technology and techniques for generating digital models with photogrammetry have evolved significantly, enabling users to measure distances, gather spatial data from the ground, and map points of interest (POIs). UAV photogrammetry has emerged as a viable alternative to traditional surveying methods and has the potential to drastically reduce the time and cost required to map difficult terrain [1,17,23,24,25,26]. To create a realistic 3D model of an underground tunnel, researchers can fly UAVs equipped with high-resolution cameras through the tunnel to collect data from many viewpoints. The resulting 3D model may be used for several purposes, including construction, maintenance, and safety assessments [25].

The purpose of this study is to develop an accurate and reliable 3D modelling process based on UAV photogrammetry, and to evaluate the practicability of modelling and mapping inaccessible underground tunnels using images recorded by UAVs. To achieve this objective, several requirements must be addressed: capturing digital imagery from a UAV with appropriate lighting and camera settings; flying the UAV without crashing by using an obstacle detection system; creating 3D photogrammetric models from still frames extracted from video; and examining different features within the digital model. The overall goal is to develop a safer and more efficient method for modelling inaccessible underground tunnels, while also generating a more complete dataset for use in the construction and modelling of future tunnels.

The rest of the paper is organized as follows. Section 2 presents the materials and methods, including background on the study areas, the materials, and Experiments I and II. Section 3 describes the proposed drone system that can work in inaccessible underground tunnels. Section 4 presents the results obtained with the UAV and photogrammetric techniques. Section 5 discusses the importance of UAV-based 3D photogrammetry in inaccessible tunnels, and Section 6 presents the key conclusions of the research and their implications.

2. Materials and Methods

Photogrammetry was selected for modelling inaccessible underground tunnels because it is more lightweight and affordable than laser-based techniques. A 3D photogrammetric model can be built in various ways, for example from 2D images, 360-degree panoramic images, or frames extracted from videos, and each approach has its own advantages and disadvantages.

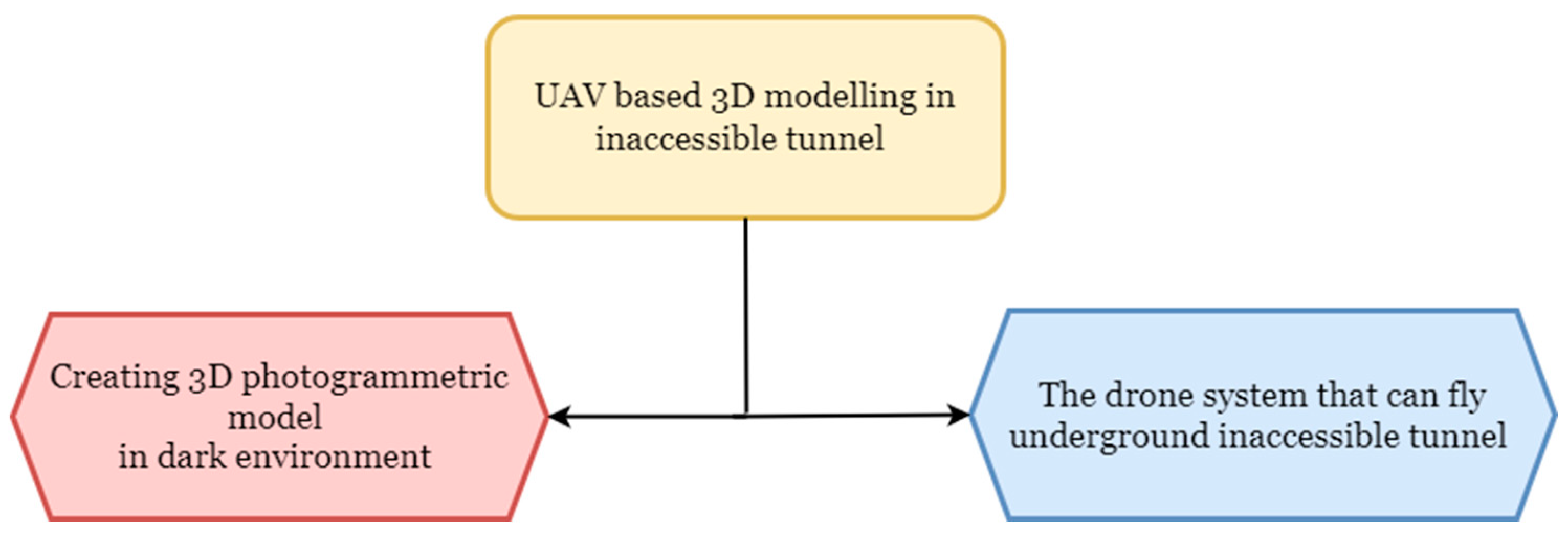

The UAV-based 3D photogrammetric modelling system consists of two subsystems.

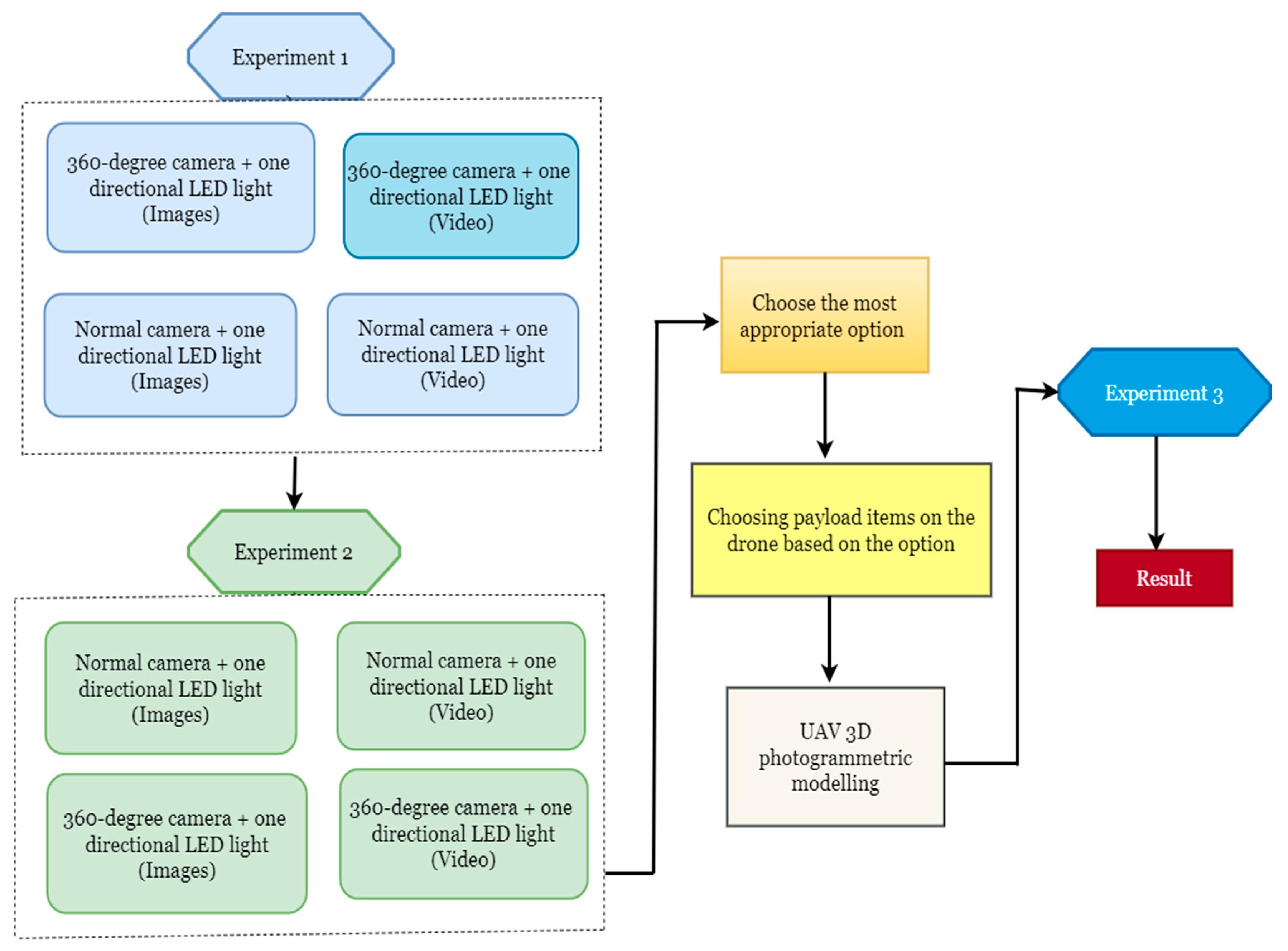

Figure 1 shows the first subsystem: the techniques for creating a 3D photogrammetric model in a dark environment. In this subsystem, appropriate photographing tools must be selected to create the best possible 3D model in the dark, because many factors influence the accuracy of photogrammetry, such as image resolution, lighting conditions, and camera calibration. The second subsystem is a drone system that can fly underground in harsh environments. Combining these two subsystems yields the UAV-based 3D photogrammetric modelling system.

Figure 2 shows the proposed flow diagram of the UAV 3D photogrammetric mapping system. The proposed workflow involves several stages: data acquisition, data processing, system development, and evaluation.

Firstly, data acquisition is performed with various equipment, such as different types of cameras and light sources, to capture multiple photographs and videos of the dark underground tunnel. These images and videos are then processed to create a 3D model of the tunnel; the processing includes georeferencing and the use of photogrammetry techniques for image stitching and 3D modelling.

Secondly, a UAV system is developed by attaching light sources and a drone cage to the Phantom 4 Pro V2.0. Once the UAV system has been developed, the most suitable photographing method is integrated with it to gather additional data and create a more detailed 3D model of the object.

Thirdly, the UAV system is evaluated to identify any technical problems or issues that need to be addressed. This process includes testing the UAV flight performance, evaluating the accuracy of the data collected by the 3D scanning system, and identifying any areas where the UAV system could be improved.

Lastly, the UAV is flown through the tunnel to gather data with the 3D scanning system and create a 3D model of the tunnel. The accuracy of the 3D model is then assessed by comparing it with ground-truth measurements.

2.1. Study area

Three experiments were conducted for three different purposes. The first experiment aimed to build a 3D model using only a one-directional light source and to evaluate the accuracy of the 3D photogrammetric model; it was conducted in a concrete tunnel referred to in this research as “pedestrian tunnel I”. The second aimed to build a 3D model of an underground sewer tunnel using two different light sources, one-directional and omnidirectional. Water leakage in this tunnel has caused cracks in the concrete walls, significantly affecting its long-term sustainability and structural integrity; the problem has developed over the considerable time that has elapsed since the tunnel was constructed. Consequently, the interior of the tunnel needs to be inspected. However, because the tunnel is long and narrow, travelling through it on foot is difficult for inspectors and incurs considerable time and cost.

Figure 3.

Underground sewer tunnel.


The last experiment aimed to build a 3D model using a UAV and to evaluate the accuracy of the 3D photogrammetric model; it was conducted in a tunnel referred to in this research as “pedestrian tunnel II”.

Table 1.

Study area’s location.

| Study area | Purpose | Dimensions (m) |
|---|---|---|
| Pedestrian tunnel I | Modelling using one light source | H: 2.72, W: 3.26, L: 30 |
| Sewer tunnel | Modelling using two different light sources | H: 2.5, W: 2.5 |
| Pedestrian tunnel II | Mapping and modelling using a UAV | H: 4, W: 3.5, L: 37.5 |

2.2. Creating a 3D photogrammetric model in a dark environment

Materials

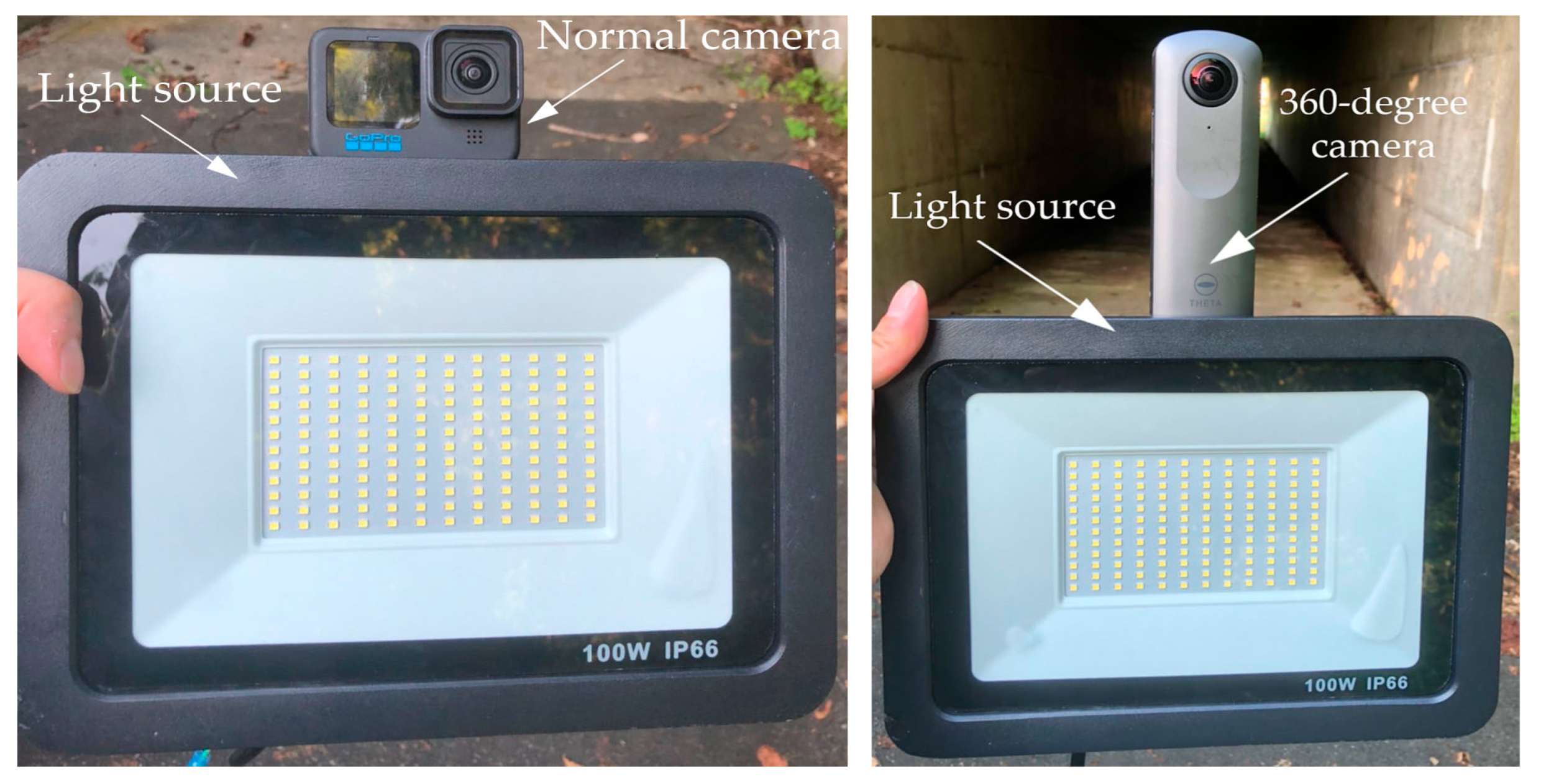

Figure 4.

Equipment for creating 3D models in a dark environment: 1. Ground control point; 2. Normal camera (GoPro Black Hero 10); 3. 360-degree camera (Ricoh Theta 360); 4. Normal LED light (10,000 lumens); 5. Omnidirectional LED light (Tube 10000 360-degree LED, 10,000 lumens); 6. Anker 100 W portable battery.

Ground control points: ground control points (GCPs) are points with known coordinates. They were used to georeference the reconstructed three-dimensional model and to scale it to its true size.

Cameras: a normal camera (GoPro Black Hero 10) and a 360-degree camera (Ricoh Theta 360) were tested for taking images. Both cameras were mounted on an artificial light source.

Light source: underground tunnels have poor lighting, so illumination is required to take photos, and the lighting conditions strongly affect the accuracy of a photogrammetric model. Two light sources were tested in the experiments. The first was a one-directional LED light, used for taking photos with both the normal camera and the 360-degree camera. The second was an omnidirectional LED light, used for taking photos with the 360-degree camera.

Portable battery: an Anker portable battery was used to power the LED light sources.

Software: Agisoft Metashape Professional 1.8.4 was used to create the 3D photogrammetric models.

2.2.1. Experiment I in the pedestrian tunnel

As mentioned above, the purpose of this experiment was to successfully create a 3D photogrammetric model of a dark underground area using a normal camera, a 360-degree camera, and a 10,000-lumen one-directional LED light.

Figure 5.

Equipment for creating a 3D model in a dark environment (Experiment I).

Two different set-ups were used to take images in the dark environment, yielding four data sets for 3D photogrammetric reconstruction:

- images taken with the normal camera;
- video recorded with the normal camera;
- 360-degree images taken with the 360-degree camera;
- 360-degree video recorded with the 360-degree camera.

However, no 3D model could be successfully created from the 360-degree images captured with the normal LED light source.

The experiment was conducted at night in an underground pedestrian tunnel. The ground control points (GCPs) used in the tunnel were printed with the marker tool of the Agisoft Metashape software, so that they could be detected automatically in the images with the software's marker-detection function; this not only saves time but also reduces manual work. At least three GCPs were placed on the walls so as to cover the X, Y, and Z axes, and their coordinates were determined with a tape measure.

Table 2.

Coordinates of the Ground Control Points.

| GCP | X (cm) | Y (cm) | Z (cm) |
|---|---|---|---|
| GCP1 | 150 | 0 | 180 |
| GCP2 | 635 | 0 | 180 |
| GCP4 | 144.4 | 321 | 90 |
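Because the GCP coordinates in Table 2 were measured with a tape measure, the distances between GCPs follow directly from them, and a model can be scaled by comparing those distances with the same distances measured in model units. The sketch below illustrates this idea with a single least-squares scale factor. It is a simplification (photogrammetry software typically estimates a full similarity transformation from the markers), and the model-unit distances in the usage lines are synthetic values generated for illustration, not data from this study.

```python
import math
from itertools import combinations

# GCP coordinates from Table 2, in centimetres.
GCPS_CM = {
    "GCP1": (150.0, 0.0, 180.0),
    "GCP2": (635.0, 0.0, 180.0),
    "GCP4": (144.4, 321.0, 90.0),
}


def pairwise_distances(points: dict[str, tuple[float, float, float]]) -> dict[tuple[str, str], float]:
    """Euclidean distance between every pair of named 3D points."""
    return {
        (a, b): math.dist(points[a], points[b])
        for a, b in combinations(sorted(points), 2)
    }


def scale_factor(real: dict, model: dict) -> float:
    """Least-squares scale s minimising sum((real - s * model)**2) over shared pairs."""
    pairs = real.keys() & model.keys()
    num = sum(real[p] * model[p] for p in pairs)
    den = sum(model[p] ** 2 for p in pairs)
    return num / den


if __name__ == "__main__":
    real = pairwise_distances(GCPS_CM)
    for pair, d in real.items():
        print(pair, f"{d:.2f} cm")
    # Synthetic model distances: a model at 1/40 of true size (illustrative only).
    model = {pair: d / 40.0 for pair, d in real.items()}
    print(f"scale = {scale_factor(real, model):.3f}")
```

With more than three GCPs, the least-squares form averages out tape-measure errors instead of relying on a single pair.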

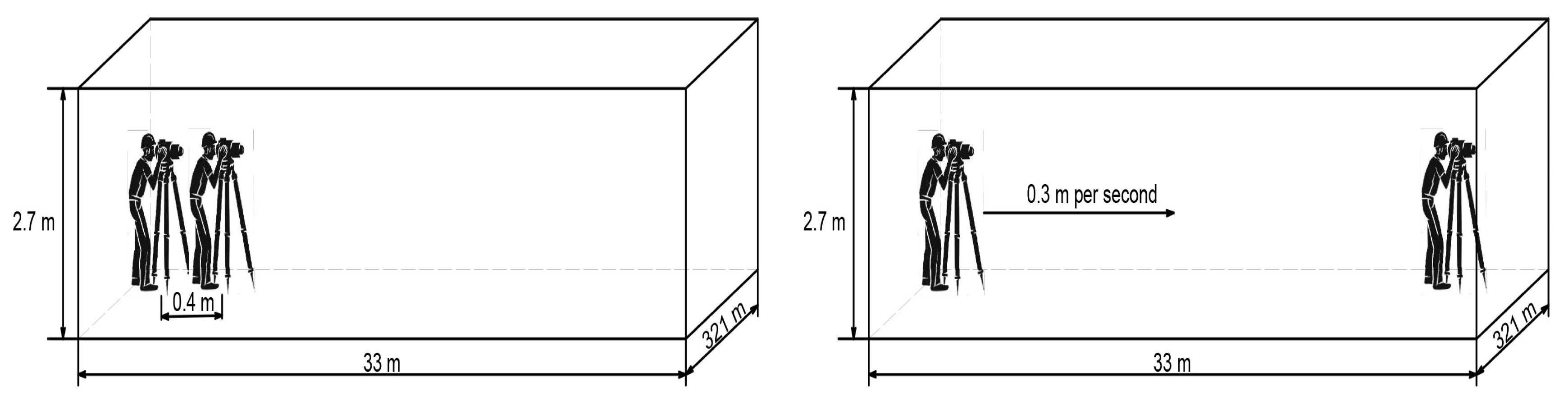

When taking photos with the normal camera, 84 photos were taken along a total of 33 m of tunnel at 40 cm intervals to ensure overlap (Figure 6). The video was recorded while moving at 0.3 m/s (Figure 7), and the dataset was created by extracting frames from the recordings. The same procedure was followed for the 360-degree camera.
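The capture numbers above can be checked with simple arithmetic: 33 m at 40 cm spacing gives 83 intervals and therefore 84 camera positions, and for video recorded at 0.3 m/s, one frame has to be kept every 0.4 / 0.3 ≈ 1.33 s to obtain the same spacing. The sketch below assumes a constant walking speed and a 30 fps recording rate; the frame rate is an assumption, as it is not stated in the text.

```python
import math


def photo_count(length_m: float, spacing_m: float) -> int:
    """Number of camera positions needed to cover a line, including both ends."""
    return math.ceil(length_m / spacing_m) + 1


def frame_step(spacing_m: float, speed_m_s: float, fps: float) -> int:
    """Keep every n-th video frame so that kept frames are about spacing_m apart."""
    seconds_per_sample = spacing_m / speed_m_s
    return max(1, round(seconds_per_sample * fps))


def frames_to_extract(length_m: float, speed_m_s: float, fps: float, spacing_m: float) -> list[int]:
    """Indices of the frames to extract from a recording of the whole tunnel."""
    total_frames = round(length_m / speed_m_s * fps)
    return list(range(0, total_frames, frame_step(spacing_m, speed_m_s, fps)))


if __name__ == "__main__":
    print(photo_count(33.0, 0.4))   # 84 positions, as in Experiment I
    print(frame_step(0.4, 0.3, 30))  # keep one frame in 40 (assumed 30 fps)
    print(len(frames_to_extract(33.0, 0.3, 30, 0.4)))
```

Extracting a fixed frame step only gives even spacing if the camera moves at a steady speed; in practice, blurred frames may also need to be skipped.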

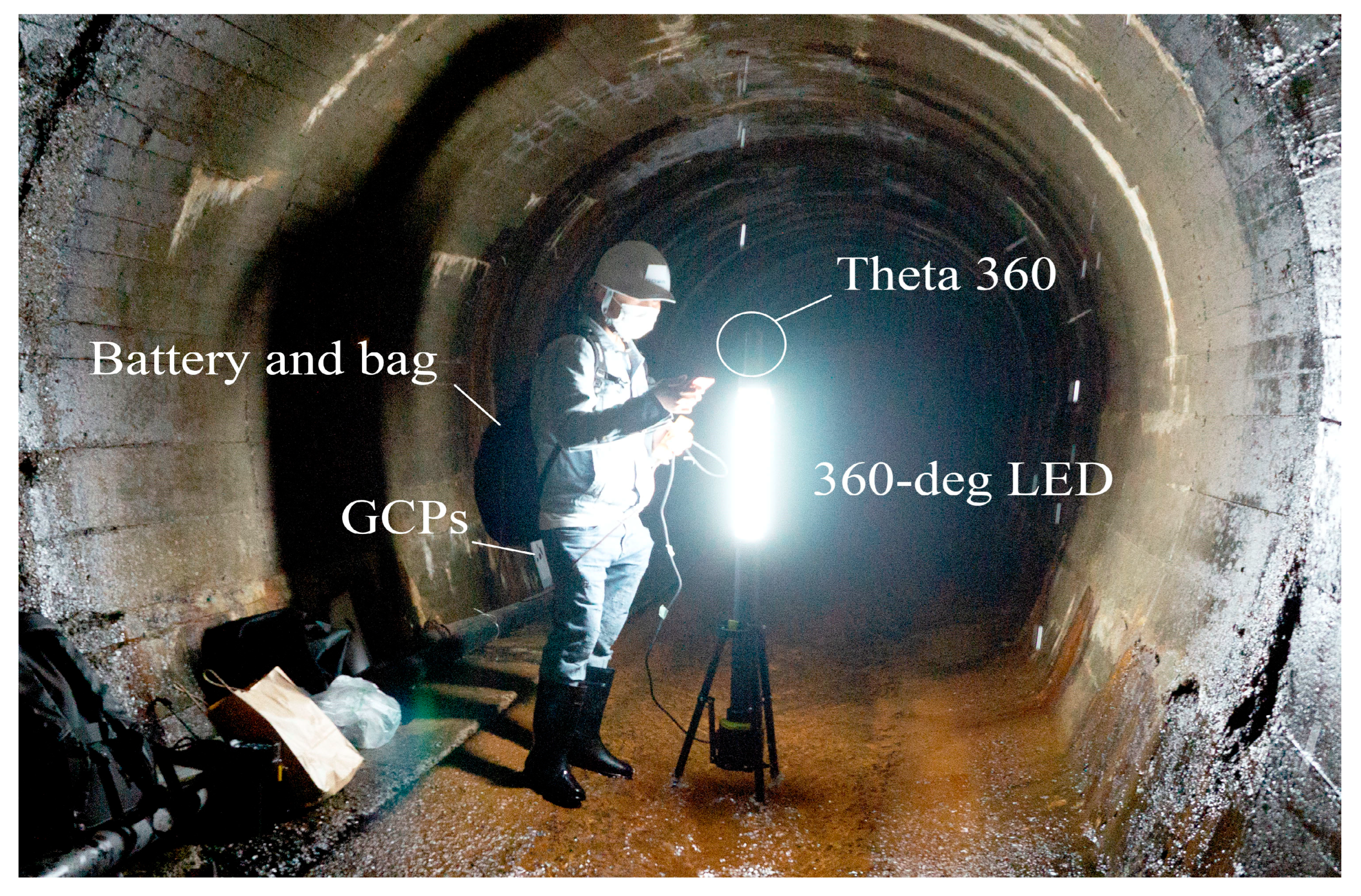

2.2.2. Experiment II at the underground sewer tunnel

The purpose of this experiment was to understand the effect of an omnidirectional light source on the accuracy of a 3D model created from panoramic images taken with a 360-degree camera.

First, three GCPs were placed on the walls and the distances between them were measured. Next, the omnidirectional LED was connected to the battery in the bag, the 360-degree camera was mounted on the light, and pictures were taken at 40 cm intervals. The camera was then switched to video mode, and video was recorded while moving at 0.3 m/s.

Figure 8.

Preparation for an experiment to take photos and video.


After this, the normal camera was mounted on the one-directional light, the light was connected to the battery, and photos were taken along the tunnel.

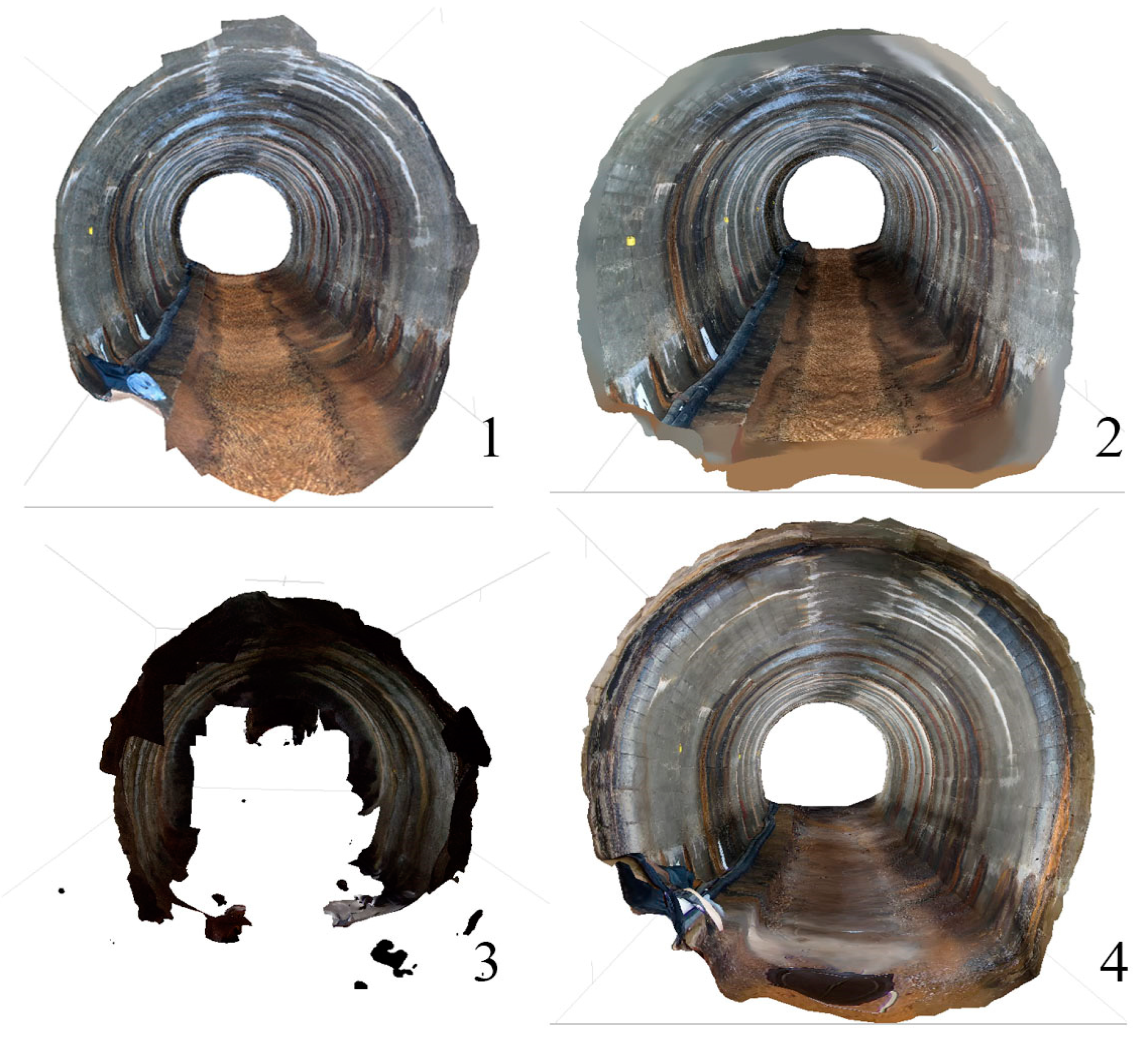

As a result of the experiment, four models were built (Figure 9) and compared to determine their accuracy. They were reconstructed from images taken with the normal camera and the one-directional light source, video recorded with the normal camera and the one-directional light source, 360-degree images taken with the 360-degree camera and the omnidirectional light source, and 360-degree video recorded with the 360-degree camera and the omnidirectional light source.

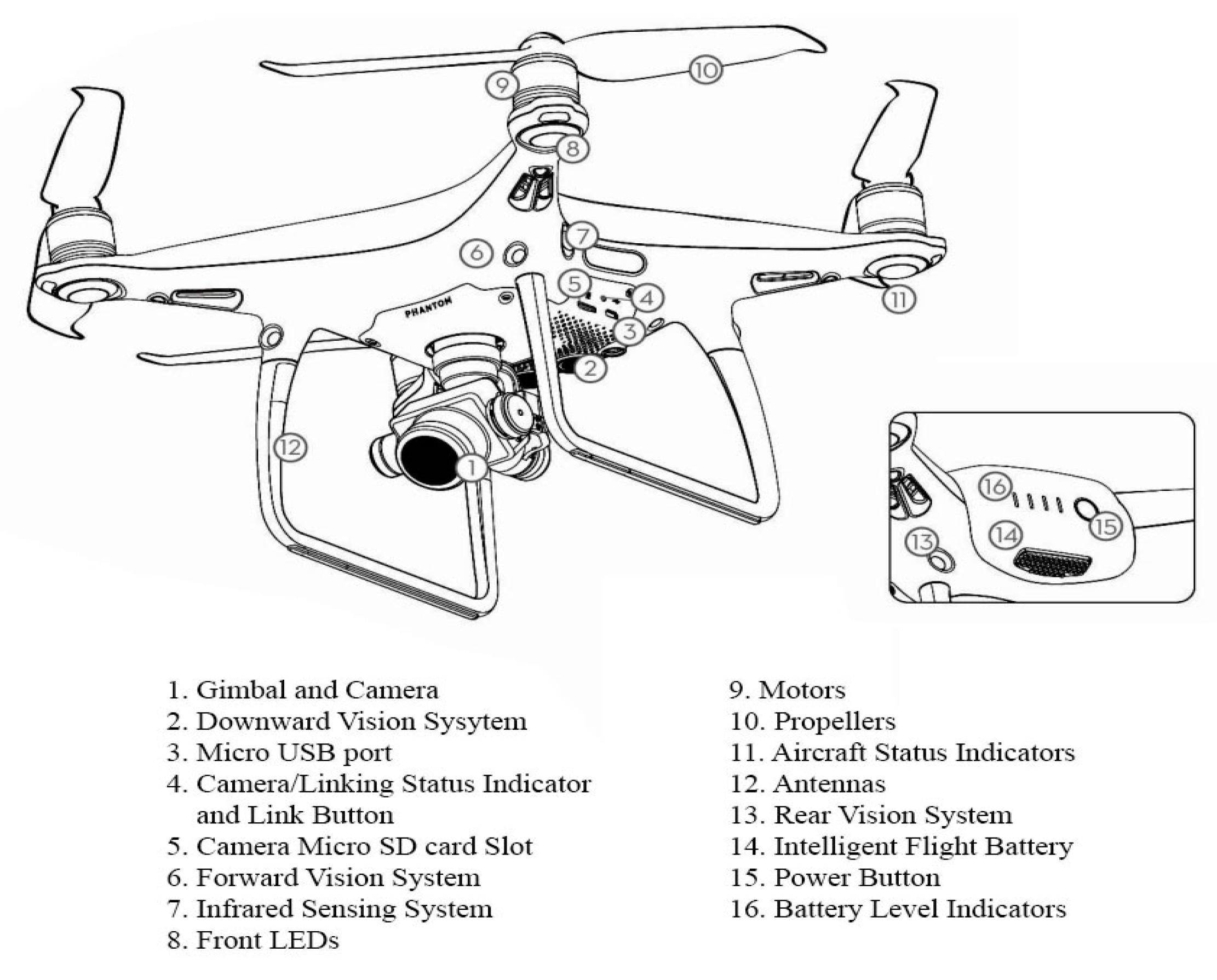

3. Developing a drone system that can work in inaccessible underground tunnels

3.1. Drone system

For this research, the DJI Phantom 4 Pro V2.0 drone was selected. The features that supported this choice are listed below.

Vision system and infrared sensing system: the main components of the Vision System are located on the front, rear, and bottom of the Phantom 4 Pro V2.0 and include three stereo vision sensors (items 1, 2, and 3 in Figure 10) and two ultrasonic sensors (item 4). The Vision System uses ultrasound and image data to help the aircraft maintain its current position, enabling precise hovering indoors or in environments where a GPS signal is not available. It also constantly scans for obstacles, allowing the Phantom 4 Pro to avoid them by flying over or around them, or by hovering.

Figure 10.

Main components of the drone.

The Infrared Sensing System consists of two 3D infrared modules (item 5 in Figure 10), one on each side of the aircraft. These modules scan for obstacles on both sides of the aircraft and are active in certain flight modes.

Camera: the DJI Phantom 4 Pro V2.0 camera can capture 4K video and 20-megapixel still photos. It is equipped with a 1-inch CMOS sensor, which helps produce clear images in low light, and a mechanical shutter, which avoids rolling-shutter distortion when the camera is moving. The camera also has a wide dynamic range, allowing it to capture a wide range of tonal detail in a single image. In addition, it is mounted on a 3-axis gimbal for smooth, stable footage and offers a variety of shooting modes.
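To relate this camera to the level of detail achievable in a tunnel, the ground sampling distance (GSD, the size of one pixel on the imaged surface) can be estimated from the sensor geometry with a pinhole model. The sketch below assumes the commonly published Phantom 4 Pro figures of a 13.2 × 8.8 mm sensor, an 8.8 mm focal length, and 5472-pixel-wide images (these are not given in the text), together with a hypothetical 1.5 m camera-to-wall distance; with those values, the diagonal field of view comes out at about 84 degrees.

```python
import math

# Assumed Phantom 4 Pro camera geometry (published spec values, not from the text).
SENSOR_W_MM = 13.2
SENSOR_H_MM = 8.8
FOCAL_MM = 8.8
IMAGE_W_PX = 5472


def gsd_mm_per_px(distance_m: float) -> float:
    """Size of one pixel on a surface at distance_m, in millimetres (pinhole model)."""
    return distance_m * 1000.0 * SENSOR_W_MM / (FOCAL_MM * IMAGE_W_PX)


def footprint_m(distance_m: float) -> tuple[float, float]:
    """Width and height (m) of the area covered by one image at distance_m."""
    return (distance_m * SENSOR_W_MM / FOCAL_MM, distance_m * SENSOR_H_MM / FOCAL_MM)


def diagonal_fov_deg() -> float:
    """Diagonal field of view implied by the sensor size and focal length."""
    diagonal_mm = math.hypot(SENSOR_W_MM, SENSOR_H_MM)
    return math.degrees(2.0 * math.atan(diagonal_mm / (2.0 * FOCAL_MM)))


if __name__ == "__main__":
    d = 1.5  # hypothetical camera-to-wall distance in metres
    w, h = footprint_m(d)
    print(f"GSD at {d} m: {gsd_mm_per_px(d):.2f} mm/px")
    print(f"footprint: {w:.2f} m x {h:.2f} m")
    print(f"diagonal FOV: {diagonal_fov_deg():.1f} deg")
```

At sub-millimetre GSD, the achievable accuracy in a dark tunnel is likely to be limited more by lighting, motion blur, and GCP measurement than by pixel size.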

Accessories: accessories for the DJI Phantom 4 Pro V2.0, such as propeller guards, headlights, cages, and strobe lights, are more widely available than those for other DJI drones. They can be obtained from DJI and other retailers, as well as from online marketplaces such as Amazon and eBay.

3.2. Artificial Light (Imalent MS03 LED)

A 3D model of the dark underground tunnel was successfully created using a 10,000-lumen LED light. Therefore, the light source mounted on the drone should have an output of more than 10,000 lumens while remaining lightweight. For this reason, the IMALENT MS03 was selected; it is marketed as the brightest EDC (everyday carry) flashlight, with an output of 13,000 lumens at maximum performance. Two of these LED lights were mounted on the drone to provide illumination when images were taken.

Table 3.

Specification of the Imalent MS03 LED.

| Item | Specification |
|---|---|
| Brand | IMALENT |
| Product code | MS03 |
| LED type | 3 × CREE XHP70 2nd-generation LEDs |
| Batteries required | 1 × rechargeable 4000 mAh 21700 Li-ion battery |
| Luminous flux | Up to 13,000 lumens |
| Intensity | 26,320 cd (max.) |
| Distance | 324 m (max.) |
| Operating modes, output, and runtime | Turbo: 13,000~2,000 lumens, runtime 45 s + 70 min; High: 8,000~2,000 lumens, runtime 1 min + 72 min; Middle II: 3,000 lumens, runtime 1 h 15 min; Middle I: 1,300 lumens, runtime 2 h; Middle low: 800 lumens, runtime 3 h 40 min; Low: 150 lumens, runtime 27 h |
| Weight | 187 g (battery included) |
| Waterproof | IPX-8 standard (submersible to 2 m) |

3.3. Short range LED light

Too much brightness in a photograph used for photogrammetry can cause overexposure, producing washed-out or white areas in the image. This makes it difficult to measure features accurately and can lead to errors in the photogrammetric measurements. Therefore, a short-range LED light was used in the experiment when the drone was close to the object.
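The need for a weaker light at close range follows from the inverse-square law: on the beam axis, and far enough from the lamp to treat it as a point source, the illuminance is E = I / d². The sketch below applies this to the maximum intensity of 26,320 cd listed in Table 3 for one MS03. It ignores beam spread, wall reflectance, and the second lamp, so the numbers are only indicative.

```python
import math

MS03_MAX_INTENSITY_CD = 26_320.0  # maximum intensity of one lamp, from Table 3


def illuminance_lux(intensity_cd: float, distance_m: float) -> float:
    """On-axis illuminance from a point source (inverse-square law)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return intensity_cd / distance_m ** 2


def distance_for_lux(intensity_cd: float, target_lux: float) -> float:
    """Distance at which the on-axis illuminance drops to target_lux."""
    return math.sqrt(intensity_cd / target_lux)


if __name__ == "__main__":
    for d in (2.0, 1.0, 0.5):
        print(f"{d} m: {illuminance_lux(MS03_MAX_INTENSITY_CD, d):,.0f} lx")
```

Halving the distance from 1 m to 0.5 m quadruples the illuminance, from about 26,000 lx to over 100,000 lx, which illustrates why a close approach with the main lights can overexpose the image.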

Figure 12.

Small Ring mini-LED light.


3.4. Drone cage

The cage surrounds the camera, arms, and other sensitive components of the Phantom 4 Pro V2.0, providing additional protection against collisions and crashes in GPS-denied environments. Such cages are often used when a drone is flown in tight or enclosed spaces, or in environments where the risk of damage is high.

Table 4.

Specification of the cage.

| Item | Specification |
|---|---|
| Maker | SKYPIX |
| Size | 61 × 61 × 11.5 cm |
| Weight | 281 g |
| Material | Aerospace-grade carbon fiber tube |
| Impact on flight time | Roughly 20-25% reduction, depending on flying style |
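The practical cost of the cage and lights can be estimated by adding up the payload from Tables 3 and 4 and applying the cage's quoted 20 to 25% flight-time reduction. The sketch assumes DJI's nominal maximum flight time of about 30 minutes for the Phantom 4 Pro V2.0 (a manufacturer figure, not stated in the text) and does not model the extra weight of the two lights or the ring light, so real endurance is likely to be shorter.

```python
# Masses in grams from Tables 3 and 4.
LIGHT_G = 187.0            # one IMALENT MS03, battery included
CAGE_G = 281.0             # SKYPIX cage
NOMINAL_FLIGHT_MIN = 30.0  # assumed DJI nominal max flight time (no payload)


def added_mass_g(n_lights: int = 2) -> float:
    """Payload added by the cage and n_lights lamps (mounts and ring light ignored)."""
    return CAGE_G + n_lights * LIGHT_G


def flight_time_range_min(reduction_low: float = 0.20,
                          reduction_high: float = 0.25) -> tuple[float, float]:
    """Flight time after the cage's quoted reduction, as (worst, best) minutes."""
    return (NOMINAL_FLIGHT_MIN * (1.0 - reduction_high),
            NOMINAL_FLIGHT_MIN * (1.0 - reduction_low))


if __name__ == "__main__":
    print(f"added payload: {added_mass_g():.0f} g")
    worst, best = flight_time_range_min()
    print(f"estimated flight time with cage only: {worst:.1f} to {best:.1f} min")
```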

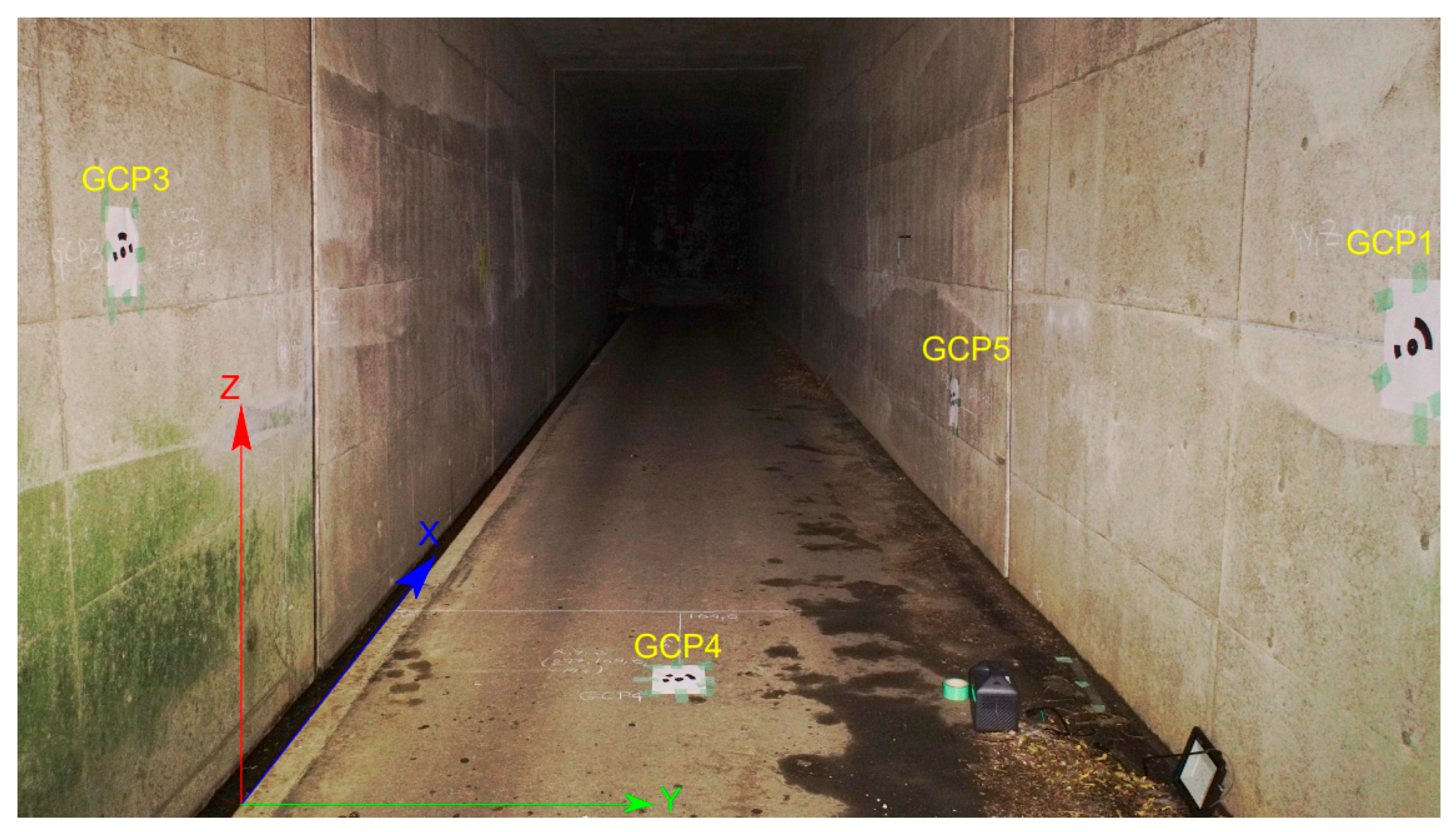

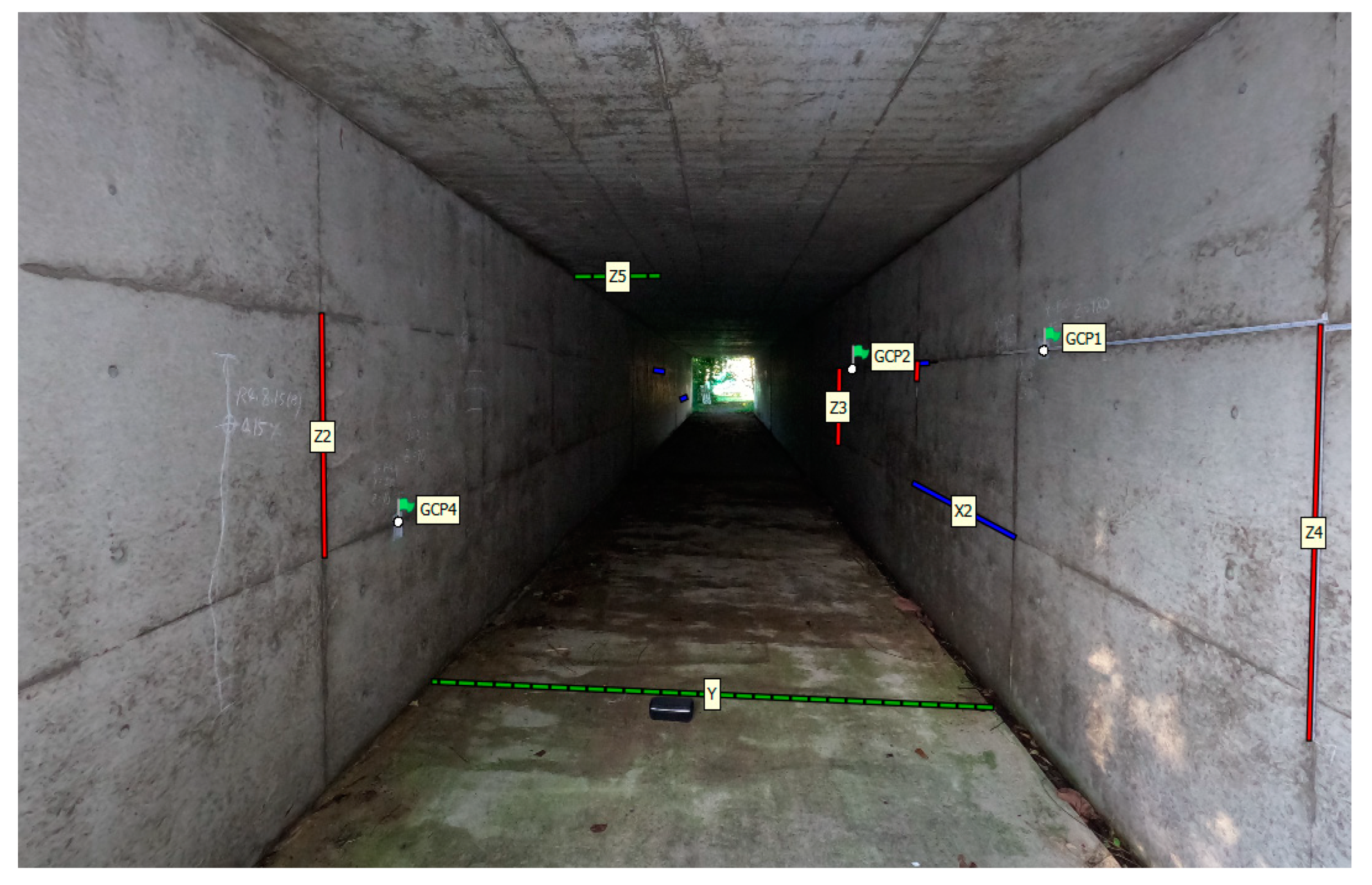

3.5. Experiment at the pedestrian tunnel II

The main objectives of this experiment were to create a 3D model from images captured by a drone system able to operate in the challenging environment of an underground tunnel, and to evaluate the accuracy of the generated model. First, a coordinate origin was established as a reference. Four ground control points (GCPs) were then positioned on a wall, and their coordinates were measured with a tape measure. The distances between the GCPs were also measured and recorded. The acquired data are presented in Table 5.

Figure 14.

Placement of ground control points.

Figure 15.

X, Y, and Z axes marked on the wall.

Table 5.

Ground control points and three coordinates.

| GCP | X (cm) | Y (cm) | Z (cm) |
|---|---|---|---|
| GCP1 | 99 | 0 | 0 |
| GCP3 | 222 | 351 | 25.5 |
| GCP4 | 277 | 164.6 | -144 |
| GCP5 | 528 | 0 | -73 |

After the GCPs were secured to the wall, the drone was flown in ATTI (attitude) mode, and photos were taken with 85% overlap (corresponding to intervals of 40 to 50 cm) so that the GCPs were captured clearly. In total, 80 photos were taken along the 37.6 m tunnel.

Figure 16.

Flying drone and taking imagery.


3.6. Technical challenges

3.6.1. Weight and balance: adding a payload to a drone changes its weight distribution, which affects its stability. An improperly balanced payload caused the drone to fly unevenly and made it more difficult to control. Therefore, balance was carefully considered when mounting payloads on the drone.
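Balancing the payload amounts to keeping the combined centre of mass close to the drone's own centre. The sketch below computes the combined centre of mass from point masses. The drone mass of 1375 g is the manufacturer's published take-off weight (an assumption, not from the text), the 187 g lamp mass is from Table 3, and the mounting offsets are hypothetical.

```python
from typing import NamedTuple


class Mass(NamedTuple):
    grams: float
    x_cm: float  # forward (+) / backward (-) offset from the drone's centre
    y_cm: float  # right (+) / left (-) offset from the drone's centre


def centre_of_mass(parts: list[Mass]) -> tuple[float, float]:
    """Mass-weighted average position of all parts, in cm."""
    total = sum(p.grams for p in parts)
    x = sum(p.grams * p.x_cm for p in parts) / total
    y = sum(p.grams * p.y_cm for p in parts) / total
    return x, y


if __name__ == "__main__":
    drone = Mass(1375.0, 0.0, 0.0)  # assumed take-off weight, centred
    left = Mass(187.0, 0.0, -8.0)   # hypothetical mount positions
    right = Mass(187.0, 0.0, 8.0)
    print("symmetric lights:", centre_of_mass([drone, left, right]))
    print("one light only:  ", centre_of_mass([drone, right]))
```

Mounting the two lamps symmetrically keeps the centre of mass on the drone's axis, whereas a single lamp 8 cm off-centre shifts it by almost 1 cm, which the motors must then compensate for continuously.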

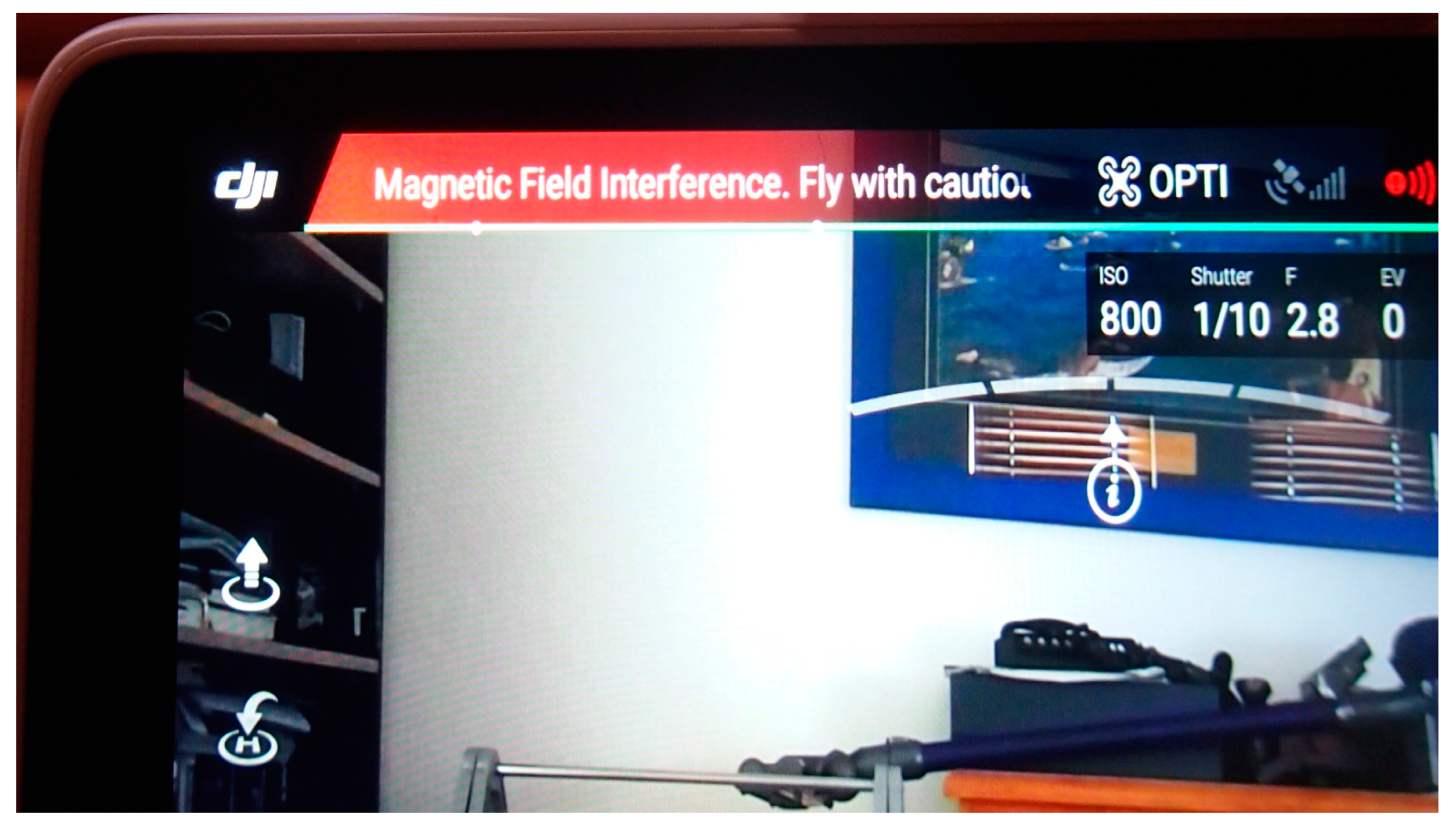

3.6.2. Magnetic field problem: attaching a metallic LED light to the drone was observed to potentially interfere with the magnetic field measured by the drone's compass. The metal components of the LED light may generate their own magnetic field, which may disturb the magnetic field sensed by the drone.

Therefore, the metallic light was wrapped in aluminum foil, a paramagnetic material, in an attempt to isolate its magnetic field.

Figure 17.

Magnetic field interference.


Figure 18.

Wrapping the light in aluminum foil.


3.6.3. Stabilization problem: GPS on the DJI Phantom 4 Pro V2.0 improves hovering stability by allowing the drone to determine its precise location and altitude. The flight controller uses this information to make real-time adjustments to the drone's position and movements to hold a steady hover, and to compensate for wind so that the drone can maintain its position even in gusty conditions. This GPS stabilization is a more advanced form of stabilization than optical flow stabilization alone. By combining data from GPS and its other sensors, the Phantom 4 Pro V2.0 can maintain a very stable hover, which is especially useful when capturing aerial photos or videos because it keeps the camera steady and reduces the risk of blur.

In the experiment, there was no GPS signal in the tunnel, so the drone had more difficulty maintaining a stable hover and determining its precise location and altitude. However, the drone is equipped with other sensors, such as an accelerometer, a barometer, and vision sensors, which provide some level of stabilization and position control where the GPS signal is weak or lost.

Figure 19.

The drone in the ATTI mode.


In the absence of a GPS signal, the drone was switched to ATTI (attitude) mode, which uses data from its internal sensors to maintain its stability. This mode is less precise and stable than GPS mode: the drone cannot hold its position as well, especially in windy conditions, and autonomous flights cannot be performed as smoothly.

4. Results

4.1. Results and assessment of the experiment in pedestrian tunnel I

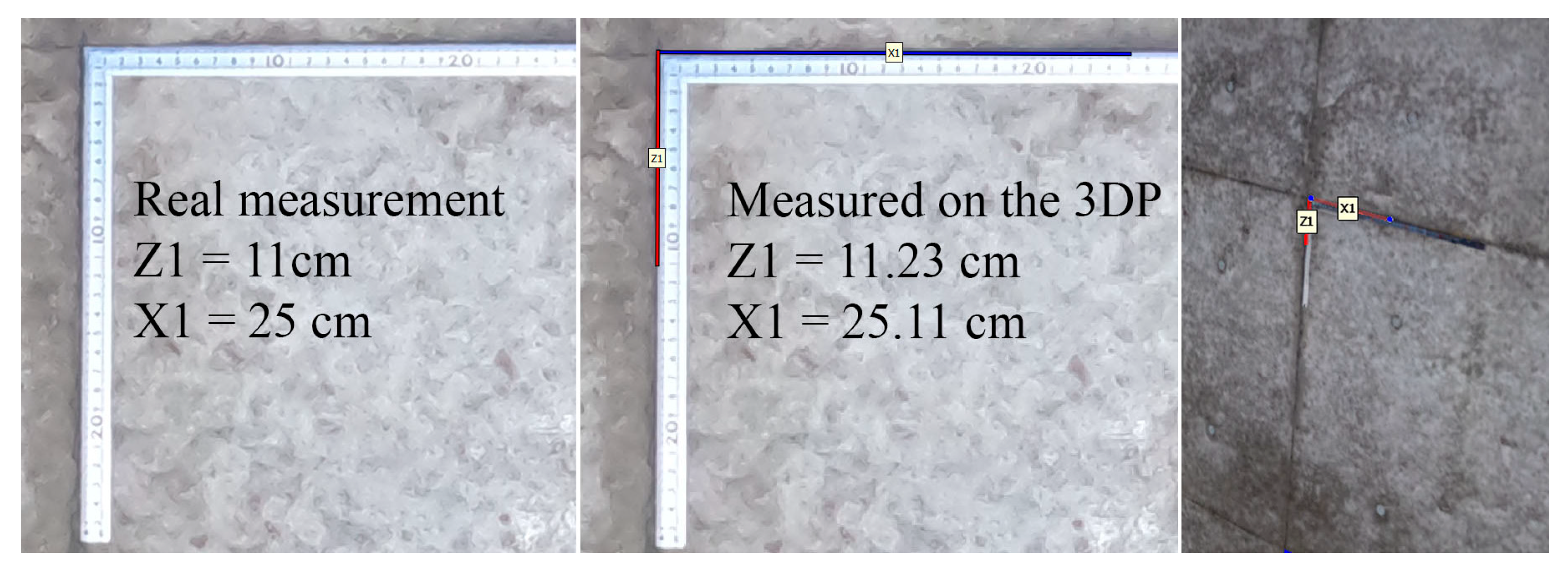

To evaluate the accuracy of the 3D models (also referred to as digital twins), measurements taken on the models were compared with actual measurements of the real-world objects they represent.

Figure 20.

Measurement on the real object.


Figure 21.

Measurements on the 3D model.


The blue, green, and red lines represent the X, Y, and Z axes, respectively. These lines were measured in the tunnel with a tape measure and a ruler.

Figure 22.

Comparison of real measurement and measurement on the 3D photogrammetric model.


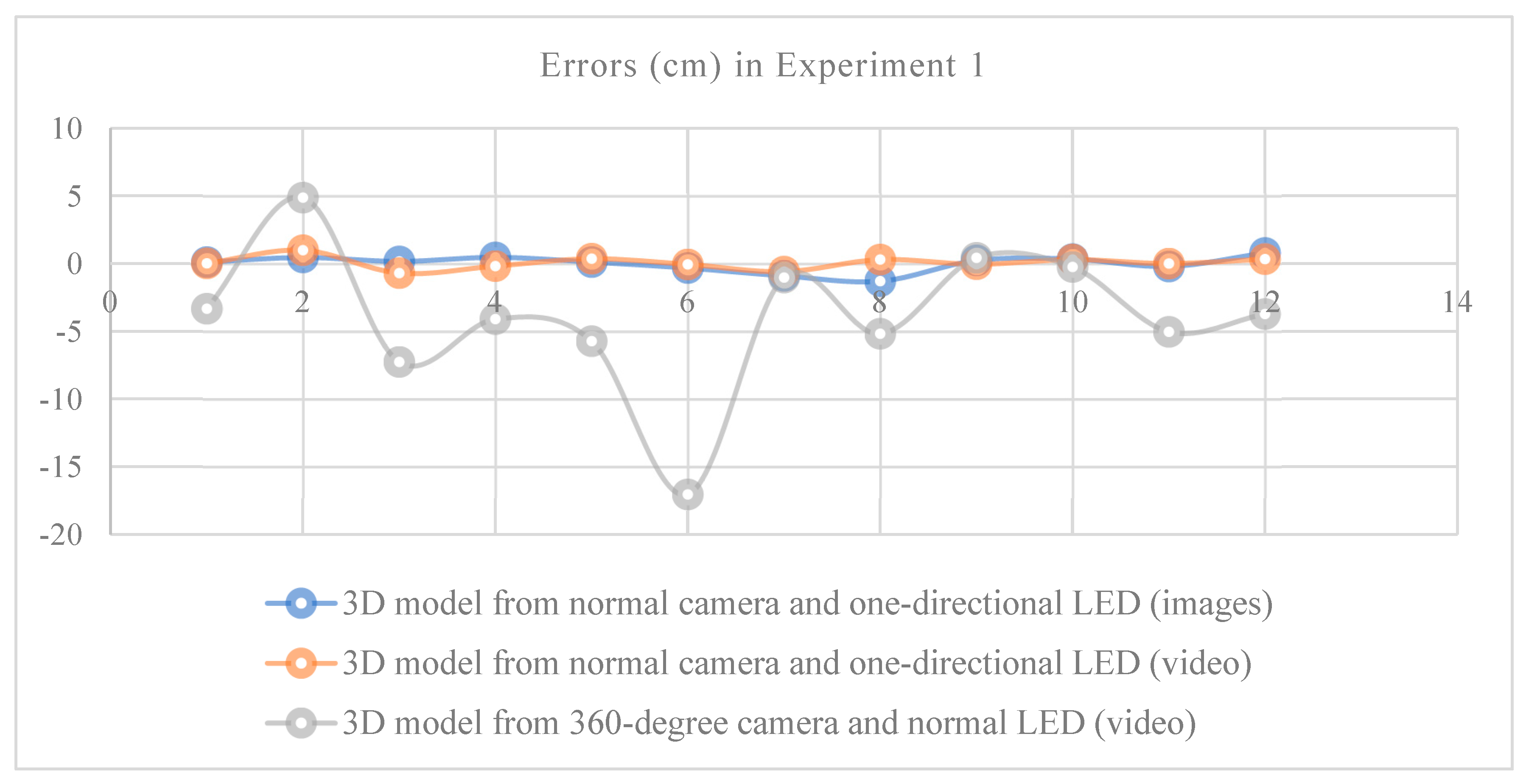

A total of 12 locations were measured, and the errors were determined by comparing the real object with the 3D photogrammetric model. The overall results are shown in the graph. The errors of the 3D models built from normal-camera images and from normal-camera video, both with the normal LED light source, were mostly within 10 mm (with a maximum absolute error of 13 mm), whereas the errors of the 3D model built from 360-degree camera video with the normal LED light source were considerably higher, reaching about 17 cm. This is attributed to the heavy shading in the photos taken with the 360-degree camera under the one-directional LED light, which introduced errors into the reconstructed 3D model.

Table 6.

Comparison of measurements on the 3D model and the real object (3D model from photos taken with the normal camera).

| Axis | Measured at real site (cm) | Measured on 3D model (cm) | Error (cm) |
|---|---|---|---|
| X1 | 25 | 25.111 | 0.111 |
| X2 | 180 | 180.457 | 0.457 |
| X3 | 180 | 180.165 | 0.165 |
| X4 | 180 | 180.467 | 0.467 |
| Y1 | 311 | 311.117 | 0.117 |
| Y2 | 90 | 89.675 | -0.325 |
| Y3 | 90 | 89.1 | -0.9 |
| Y4 | 90 | 88.7 | -1.3 |
| Z1 | 11 | 11.235 | 0.235 |
| Z2 | 90 | 90.333 | 0.333 |
| Z3 | 90 | 89.794 | -0.206 |
| Z4 | 100 | 100.793 | 0.793 |

Table 7.

Comparison of measurements on the 3D model and the real object (3D model from videos recorded with the normal camera).

| Axis | Measured at real site (cm) | Measured on 3D model (cm) | Error (cm) |
|---|---|---|---|
| X1 | 10 | 10.02 | 0.018 |
| X2 | 200 | 200.99 | 0.998 |
| X3 | 180 | 179.3 | -0.7 |
| X4 | 180 | 179.82 | -0.18 |
| Y1 | 311 | 311.36 | 0.361 |
| Y2 | 90 | 89.94 | -0.052 |
| Y4 | 90 | 89.41 | -0.59 |
| Y5 | 90 | 90.3 | 0.3 |
| Z1 | 10 | 9.952 | -0.048 |
| Z2 | 90 | 90.304 | 0.304 |
| Z3 | 90 | 90.007 | 0.007 |
| Z4 | 90 | 90.337 | 0.337 |

Table 8.

Comparison of measurements on the 3D model and the real object (3D model from videos recorded with the 360-degree camera).

| Axis | Measured at real site (cm) | Measured on 3D model (cm) | Error (cm) |
|---|---|---|---|
| X1 | 180 | 176.674 | -3.326 |
| X2 | 200 | 204.876 | 4.876 |
| X3 | 180 | 172.743 | -7.257 |
| X4 | 180 | 175.9 | -4.1 |
| Y1 | 90 | 84.282 | -5.718 |
| Y2 | 321 | 303.956 | -17.044 |
| Y4 | 90 | 88.97 | -1.03 |
| Y5 | 90 | 84.82 | -5.18 |
| Z1 | 90 | 90.427 | 0.427 |
| Z2 | 90 | 89.756 | -0.244 |
| Z3 | 90 | 84.97 | -5.03 |
| Z4 | 90 | 86.28 | -3.72 |
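The error columns of Tables 6 to 8 can be summarised with the usual accuracy measures: mean absolute error (MAE), root mean square error (RMSE), and maximum absolute error. The sketch below recomputes them from the tabulated errors (in cm), which makes explicit how much larger the errors of the 360-degree video model are than those of the two normal-camera models.

```python
import math

# Error columns (cm) copied from Tables 6, 7 and 8 (Experiment I).
ERRORS_CM = {
    "normal camera, photos (Table 6)": [0.111, 0.457, 0.165, 0.467, 0.117, -0.325,
                                        -0.9, -1.3, 0.235, 0.333, -0.206, 0.793],
    "normal camera, video (Table 7)": [0.018, 0.998, -0.7, -0.18, 0.361, -0.052,
                                       -0.59, 0.3, -0.048, 0.304, 0.007, 0.337],
    "360-degree camera, video (Table 8)": [-3.326, 4.876, -7.257, -4.1, -5.718, -17.044,
                                           -1.03, -5.18, 0.427, -0.244, -5.03, -3.72],
}


def mae(errors):
    """Mean absolute error."""
    return sum(abs(e) for e in errors) / len(errors)


def rmse(errors):
    """Root mean square error."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def max_abs(errors):
    """Largest absolute error."""
    return max(abs(e) for e in errors)


if __name__ == "__main__":
    for name, errs in ERRORS_CM.items():
        print(f"{name}: MAE {mae(errs):.2f} cm, RMSE {rmse(errs):.2f} cm, "
              f"max {max_abs(errs):.2f} cm")
```

RMSE weights large deviations more heavily than MAE, so a single outlier such as Y2 in Table 8 shows up clearly in it.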

Figure 23.

Results of Experiment I.

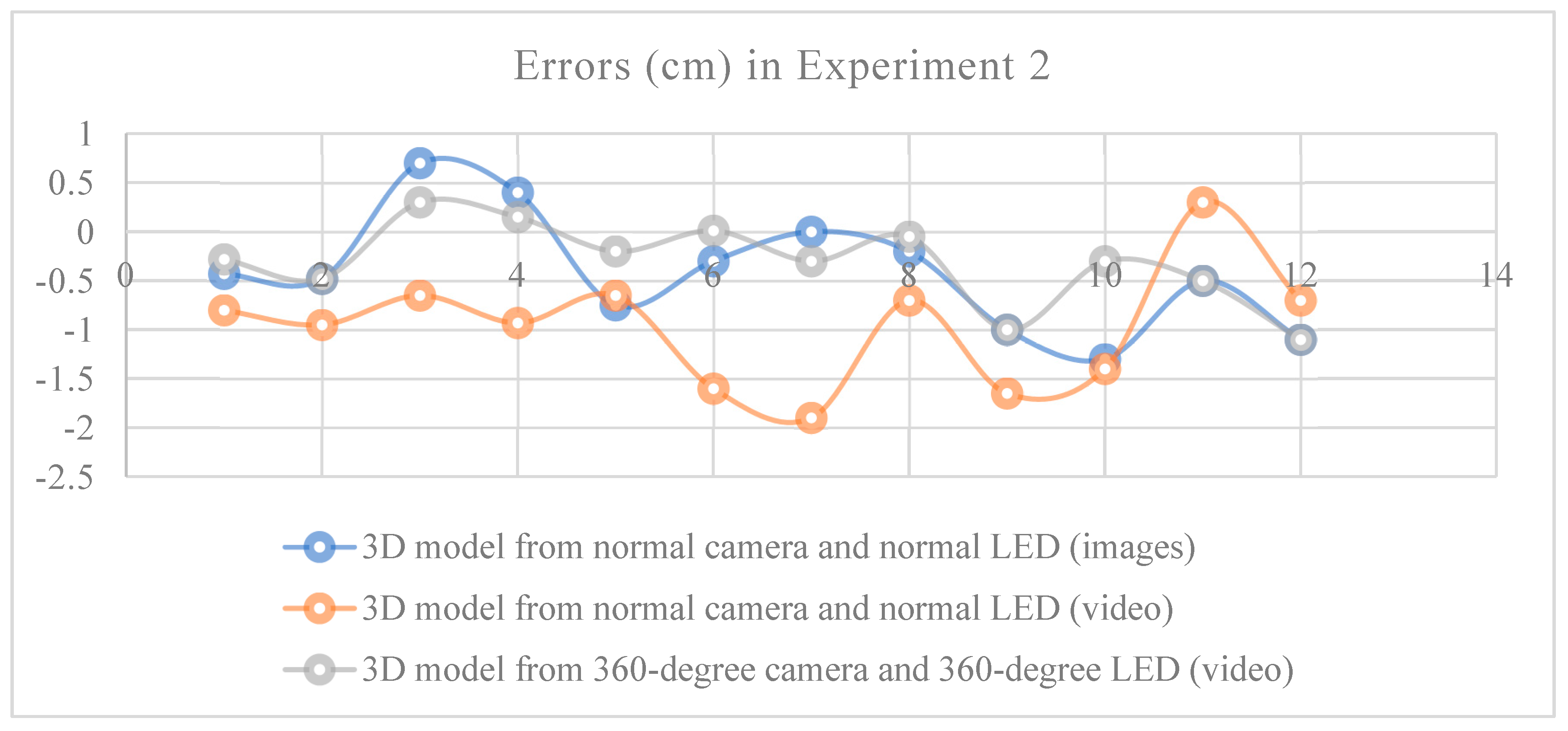

4.2. Results of the experiment in the underground sewer tunnel

Because the error of the 3D model created with the 360-degree camera and the normal LED light source was large, the light source was changed to an omnidirectional light to create a better model, and new pictures were taken for 3D reconstruction. As a result, the error of this model was reduced, becoming lower than that of the models created with the normal camera and the normal LED light source.

Table 9.

Comparison of measurements on the 3D model and the real object (3D model from photos taken with the normal camera).

| Axis | Measured at real site (cm) | Measured on 3D model (cm) | Error (cm) |
|---|---|---|---|
| X1 | 22 | 21.57 | -0.43 |
| X2 | 22 | 21.52 | -0.48 |
| X3 | 22 | 22.7 | 0.7 |
| X4 | 22 | 22.4 | 0.4 |
| Z1 | 29 | 28.25 | -0.75 |
| Z2 | 29 | 28.7 | -0.3 |
| Z3 | 29 | 29 | 0 |
| Z4 | 29 | 28.8 | -0.2 |
| Y1 | 205 | 204 | -1 |
| Y2 | 205 | 203.7 | -1.3 |
| Y3 | 205 | 204.5 | -0.5 |
| Y4 | 205 | 203.9 | -1.1 |

Table 10.

Comparison of measurements on the 3D model and the real object (3D model from videos recorded with the normal camera).

| Axis | Measured at real site (cm) | Measured on 3D model (cm) | Error (cm) |
|---|---|---|---|
| X1 | 22 | 21.2 | -0.8 |
| X2 | 22 | 21.05 | -0.95 |
| X3 | 22 | 21.35 | -0.65 |
| X4 | 22 | 21.07 | -0.93 |
| Z1 | 29 | 28.35 | -0.65 |
| Z2 | 29 | 27.4 | -1.6 |
| Z3 | 29 | 27.1 | -1.9 |
| Z4 | 29 | 28.3 | -0.7 |
| Y1 | 205 | 203.35 | -1.65 |
| Y2 | 205 | 203.6 | -1.4 |
| Y3 | 205 | 205.3 | 0.3 |
| Y4 | 205 | 204.3 | -0.7 |

Table 11.

Comparison of measurements on the 3D model and the real object (3D model from videos recorded with the 360-degree camera).

| Axis | Measured at real site (cm) | Measured on 3D model (cm) | Error (cm) |
|---|---|---|---|
| X1 | 22 | 21.72 | -0.28 |
| X2 | 22 | 21.52 | -0.48 |
| X3 | 22 | 22.3 | 0.3 |
| X4 | 22 | 22.15 | 0.15 |
| Z1 | 29 | 28.8 | -0.2 |
| Z2 | 29 | 29.01 | 0.01 |
| Z3 | 29 | 28.7 | -0.3 |
| Z4 | 29 | 28.95 | -0.05 |
| Y1 | 205 | 204 | -1 |
| Y2 | 205 | 204.7 | -0.3 |
| Y3 | 205 | 204.5 | -0.5 |
| Y4 | 205 | 203.9 | -1.1 |
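The claim in this subsection that the omnidirectional light improved the 360-degree model can be checked by ranking the three sewer-tunnel models by the mean absolute error of their tabulated errors (Tables 9 to 11, in cm). The sketch below does only that; with twelve checks per model, it gives an indication rather than a statistical test.

```python
from statistics import fmean

# Error columns (cm) copied from Tables 9, 10 and 11 (Experiment II).
ERRORS_CM = {
    "normal camera, photos (Table 9)": [-0.43, -0.48, 0.7, 0.4, -0.75, -0.3,
                                        0.0, -0.2, -1.0, -1.3, -0.5, -1.1],
    "normal camera, video (Table 10)": [-0.8, -0.95, -0.65, -0.93, -0.65, -1.6,
                                        -1.9, -0.7, -1.65, -1.4, 0.3, -0.7],
    "360-degree camera, video (Table 11)": [-0.28, -0.48, 0.3, 0.15, -0.2, 0.01,
                                            -0.3, -0.05, -1.0, -0.3, -0.5, -1.1],
}


def rank_by_mae(errors_by_model: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Models sorted from lowest to highest mean absolute error."""
    scores = {name: fmean(abs(e) for e in errs) for name, errs in errors_by_model.items()}
    return sorted(scores.items(), key=lambda item: item[1])


if __name__ == "__main__":
    for name, score in rank_by_mae(ERRORS_CM):
        print(f"{score:.2f} cm  {name}")
```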

Figure 24.

Results of Experiment II.

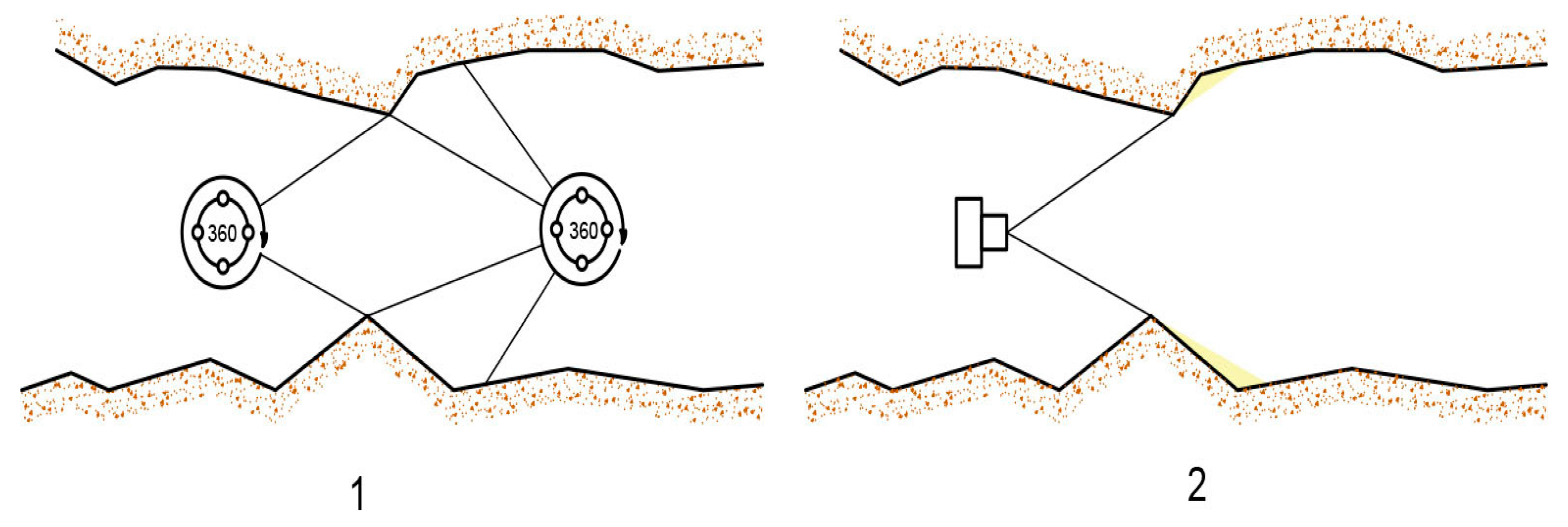

4.3. Significance of using a 360-degree camera for mapping and modelling

When a 360-degree camera is used to capture images around an obstacle, the shadowed region behind the obstacle is captured in the resulting 3D model. Conversely, with a GoPro camera, which has a more limited field of view, the shadowed region falls outside the camera's field of view and therefore cannot be captured in the resulting 3D model.

As Figure 25 shows, if the images are taken with a normal camera, the shadowed area is not captured; if they are taken with a 360-degree camera, the shadowed area on the other side of the obstacle can be captured.

Figure 25.

Comparison of obstacles in the models: 1. 360-degree camera; 2. normal camera.

Figure 26.

Comparison of one-directional camera and 360-degree camera photography: 1. 360-degree camera; 2. normal camera.

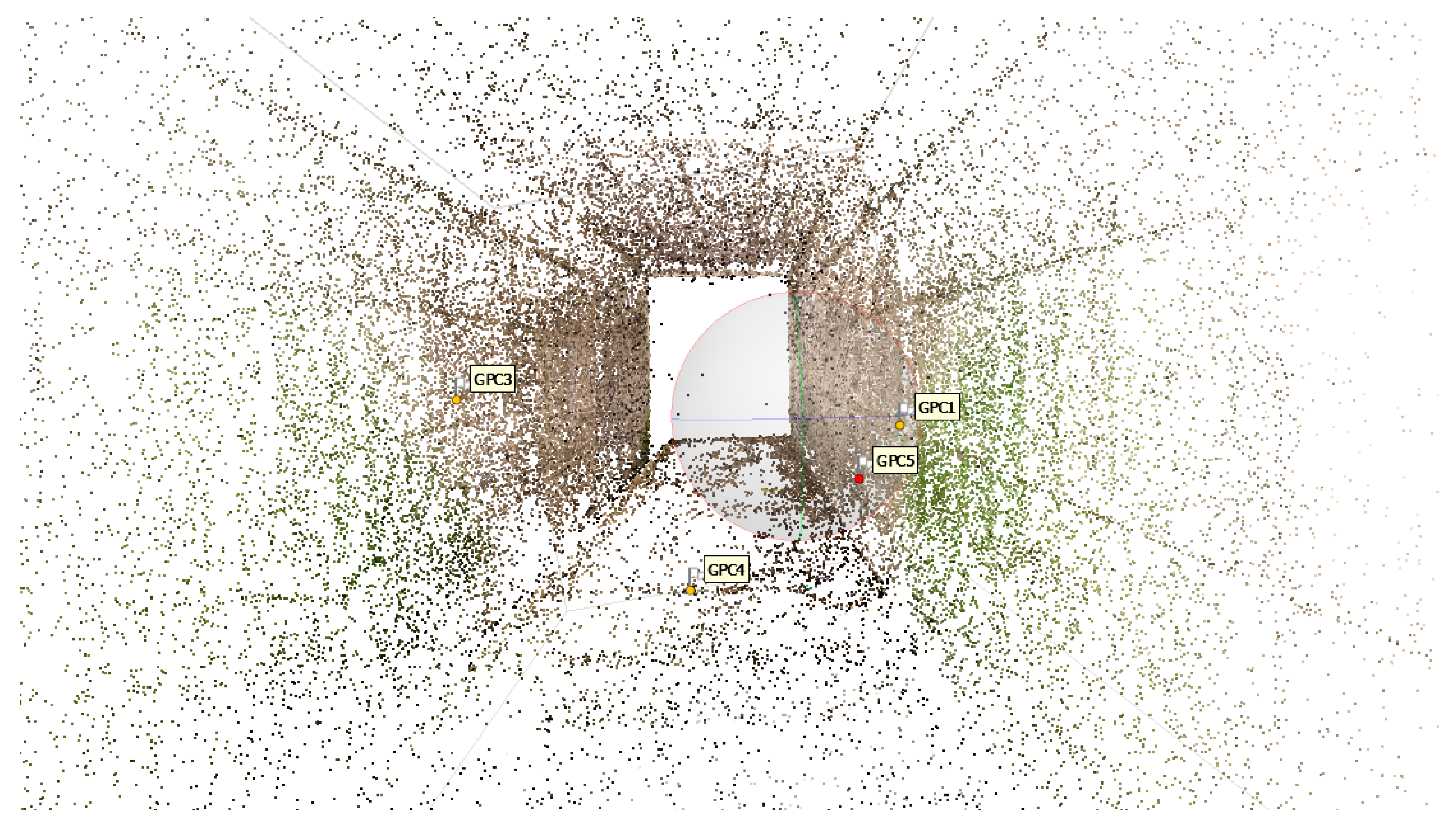

4.4. Assessment of the accuracy of the 3D model created by the UAV system

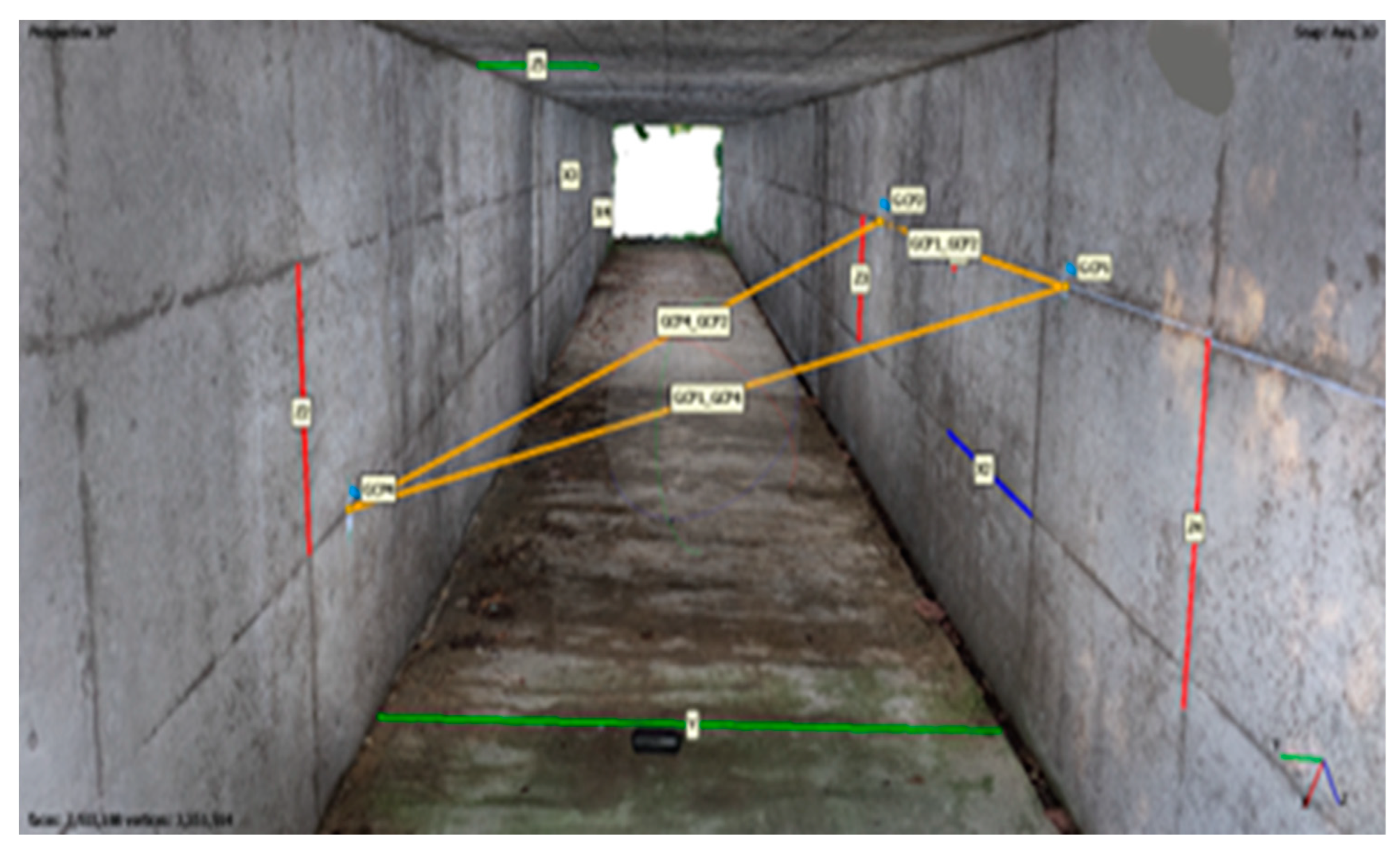

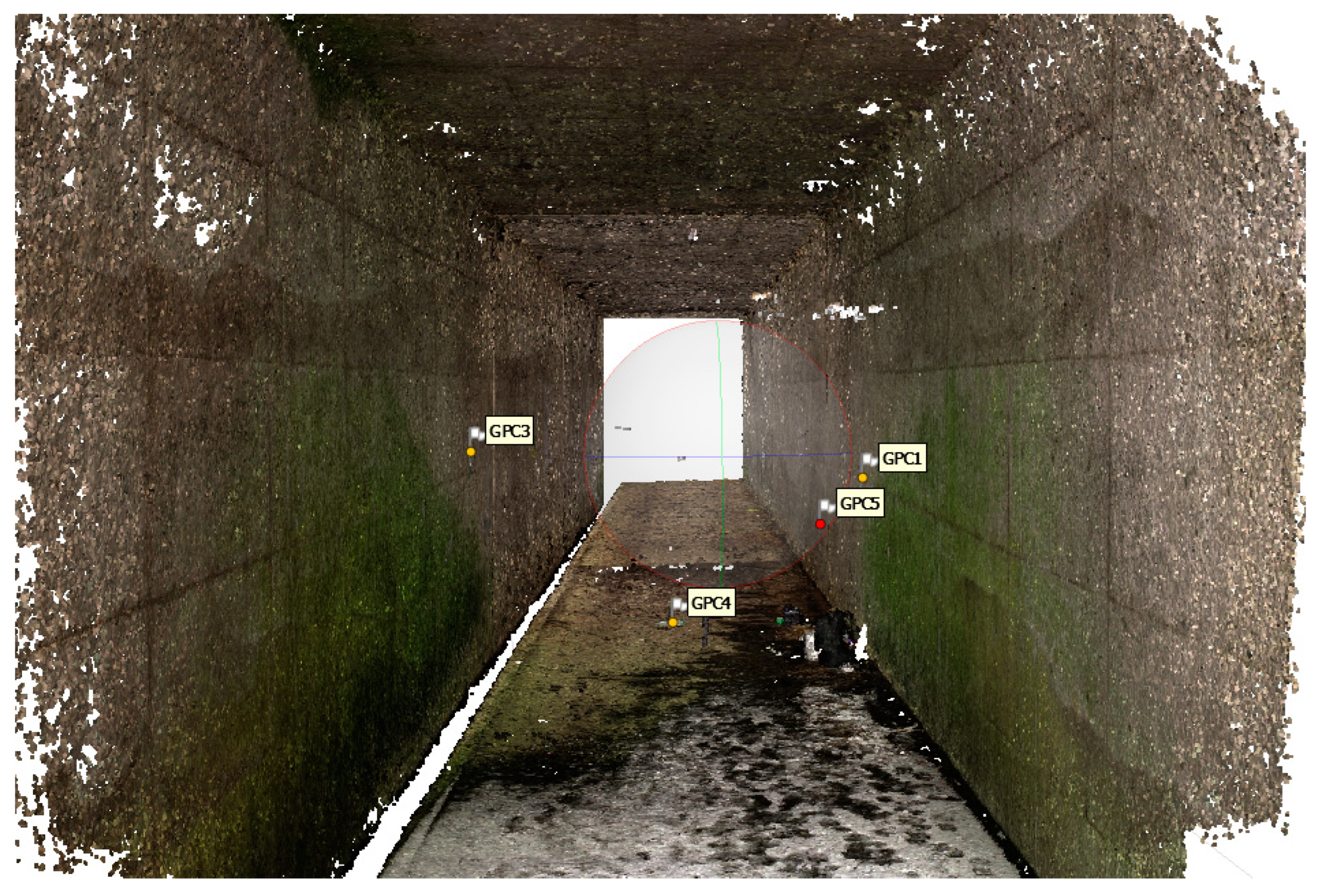

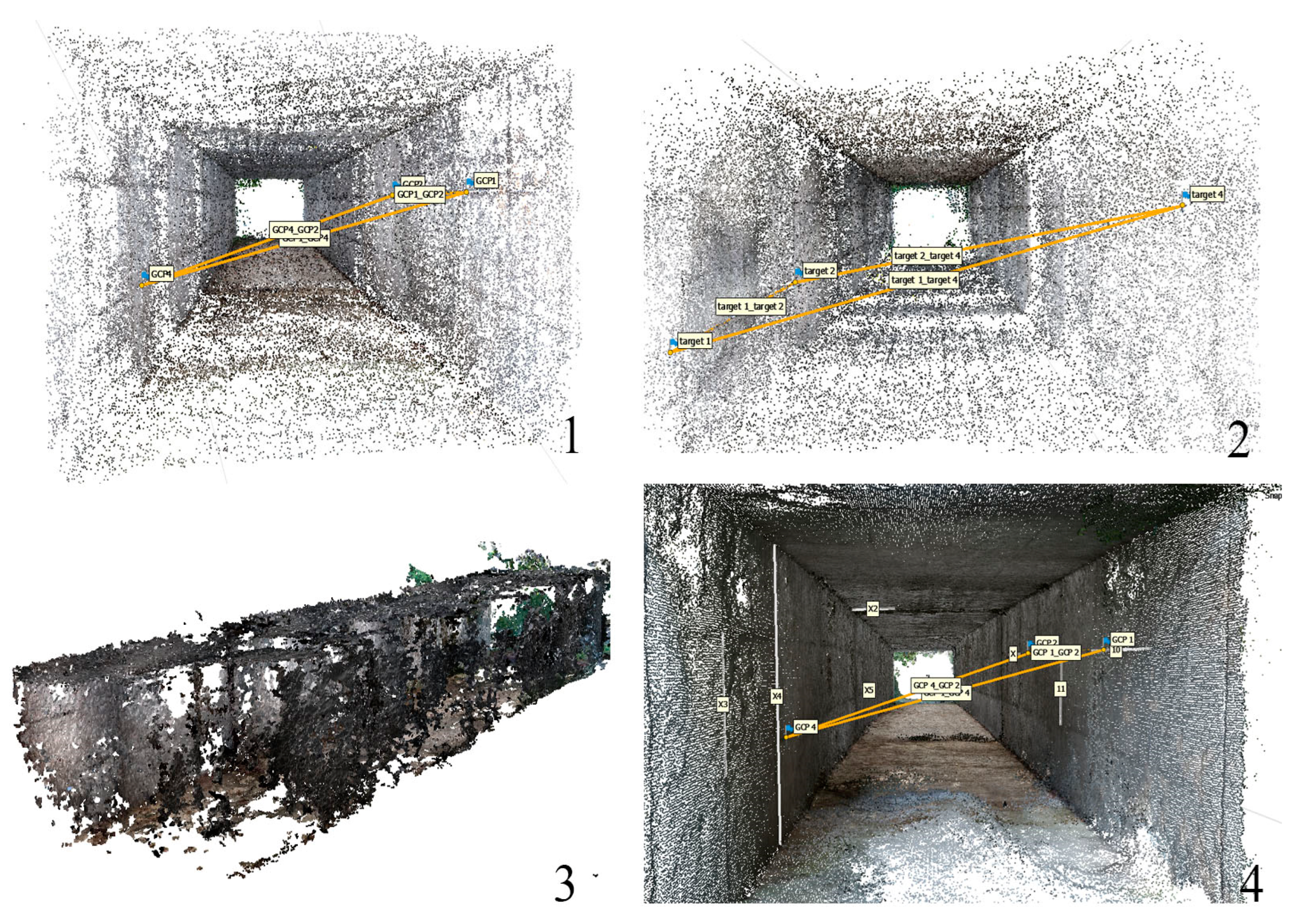

After the images had been captured successfully, the 3D model was created using Agisoft Metashape Pro 1.8.4 and scaled to its true size using ground control points (GCPs).
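Metashape carries out the scaling internally. Purely as an illustration of the underlying idea, the Python sketch below estimates a single uniform scale factor s by least squares, so that s times each model distance best matches the corresponding tape-measured distance, which gives s = Σ(d_real · d_model) / Σ(d_model²). This is not necessarily how Metashape computes its transformation; the GCP coordinates and distances are hypothetical, and full georeferencing would also solve for rotation and translation.

```python
import math

Point = tuple[float, float, float]


def estimate_scale(model_pts: dict[str, Point],
                   real_dists: dict[tuple[str, str], float]) -> float:
    """Least-squares uniform scale s minimising sum((d_real - s * d_model) ** 2)."""
    num = 0.0
    den = 0.0
    for (a, b), d_real in real_dists.items():
        d_model = math.dist(model_pts[a], model_pts[b])
        num += d_real * d_model
        den += d_model * d_model
    return num / den


def apply_scale(model_pts: dict[str, Point], s: float) -> dict[str, Point]:
    """Scale every model coordinate about the origin by s."""
    return {name: (s * x, s * y, s * z) for name, (x, y, z) in model_pts.items()}


# Hypothetical unscaled model coordinates (arbitrary units) and tape-measured distances (cm).
model_pts = {"GCP1": (0.0, 0.0, 0.0), "GCP2": (1.0, 0.0, 0.0), "GCP3": (0.0, 2.0, 0.0)}
real_dists = {("GCP1", "GCP2"): 150.0, ("GCP1", "GCP3"): 300.0}

s = estimate_scale(model_pts, real_dists)
scaled = apply_scale(model_pts, s)
for (a, b), d_real in real_dists.items():
    residual = math.dist(scaled[a], scaled[b]) - d_real
    print(f"{a}-{b}: residual {residual:+.3f} cm")
```

Because distances are invariant to rotation and translation, fitting the scale from inter-GCP distances separates it cleanly from the orientation of the model.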

Figure 27.

Three-dimensional point cloud.

Figure 28.

Three-dimensional dense point cloud.

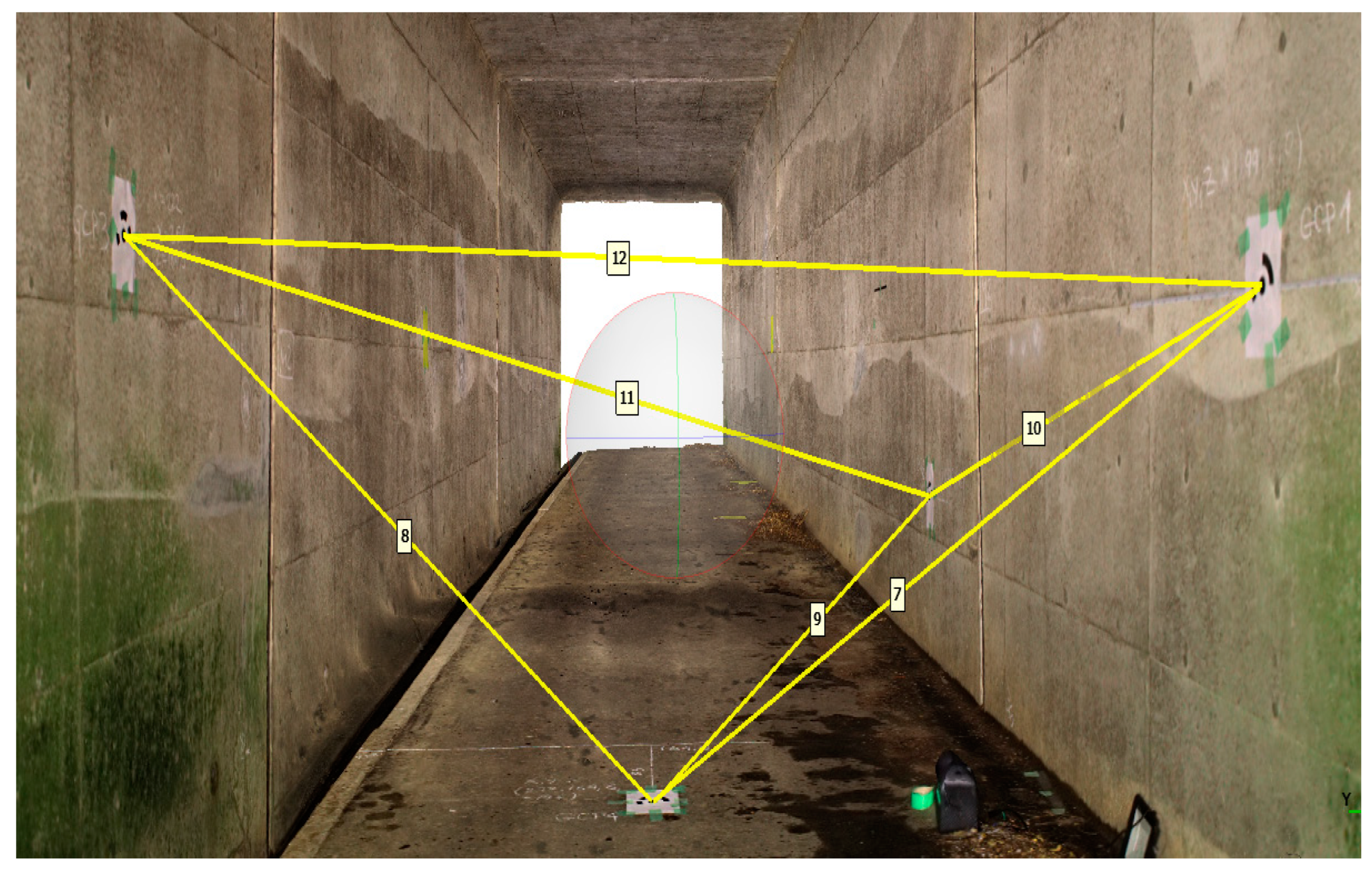

The yellow lines represent the measurements taken in the tunnel. These lines can be used to assess the accuracy of the 3D model in three-dimensional space.

Figure 29.

Three-dimensional measurement in the tunnel.

Table 12.

Measurements and errors in three-dimensional space.

| № | GCPs | Distance between GCPs on the real site (cm) | Distance measured on the 3D model (cm) | Error (cm) |
|---|---|---|---|---|
| 1 | GCP1-GCP3 | 372.80 | 372.356 | -0.44 |
| 2 | GCP1-GCP4 | 281.98 | 283.21 | 1.23 |
| 3 | GCP1-GCP5 | 435.17 | 436.028 | 0.86 |
| 4 | GCP3-GCP4 | 257.88 | 258.691 | 0.81 |
| 5 | GCP3-GCP5 | 475.96 | 479.37 | 3.41 |
| 6 | GCP4-GCP5 | 308.44 | 311.862 | 3.42 |

Table 13.

Accuracy in three-dimensional space: average error, maximum error, and root mean square deviation.

| Metric | Value (cm) |
|---|---|
| Average error | 1.70 |
| Max error | 3.42 |
| Root mean square deviation | 2.10 |

Table 12 compares distances between GCPs measured on the real site with the same distances measured on the 3D model. The errors range from a few millimeters to about 3.4 cm: four of the six distances agree to within about 1.2 cm, whereas the GCP3-GCP5 and GCP4-GCP5 distances deviate by about 3.4 cm. Apart from these two distances, the errors appear to be within an acceptable range for most applications.
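A minimal sketch of how the summary statistics can be derived from the per-distance errors in Table 12, assuming that "average error" means the mean absolute error and that all values are in centimeters. The function name `error_summary` is illustrative only.

```python
import math


def error_summary(errors_cm: list[float]) -> tuple[float, float, float]:
    """Return (mean absolute error, root mean square error, maximum absolute error)."""
    n = len(errors_cm)
    mae = sum(abs(e) for e in errors_cm) / n
    rmse = math.sqrt(sum(e * e for e in errors_cm) / n)
    max_abs = max(abs(e) for e in errors_cm)
    return mae, rmse, max_abs


# Signed errors (3D model minus real site, cm) for the six GCP-to-GCP distances in Table 12.
table12_errors = [-0.44, 1.23, 0.86, 0.81, 3.41, 3.42]
mae, rmse, max_abs = error_summary(table12_errors)
print(f"mean |error| = {mae:.2f} cm, RMSE = {rmse:.2f} cm, max |error| = {max_abs:.2f} cm")
```

Applied to the per-axis errors in Table 14, the same function reproduces the average errors and RMSE values listed in Table 15, which suggests that "average error" there also denotes the mean absolute error.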

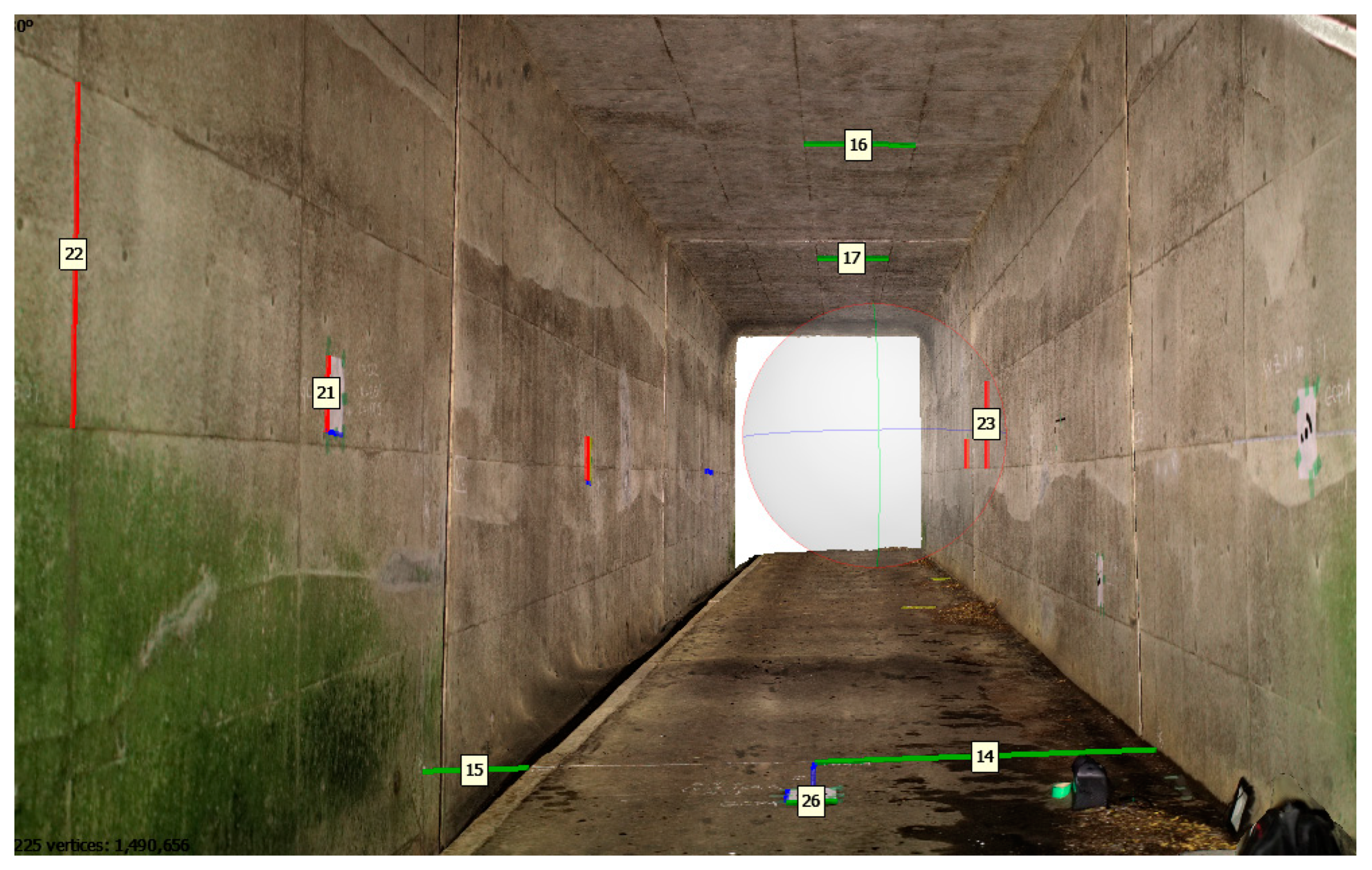

As mentioned above, the blue, green, and red lines represent the X, Y, and Z axes, respectively. These lines were measured in the tunnel with a tape measure and used to assess the accuracy of the 3D model along the X, Y, and Z axes (two-dimensional measurements).

Figure 30.

Two-dimensional measurement on the tunnel (X: blue, Y: green, Z: red).

Table 14.

Measurements and errors on each axis.

| Axis and line number | Shape number | Measured on the real site (cm) | Measured on the 3D model (cm) | Error (cm) |
|---|---|---|---|---|
| X1 | 13 | 83 | 82.45 | -0.55 |
| X2 | 16 | 21 | 21.8 | 0.80 |
| X3 | 19 | 25.7 | 25.8 | 0.10 |
| X4 | 20 | 25.7 | 26.4 | 0.70 |
| X5 | 24 | 180 | 180.5 | 0.50 |
| Y1 | 14 | 164.6 | 164.745 | 0.14 |
| Y2 | 26 | 21 | 21.37 | 0.37 |
| Y3 | 16 | 90 | 91.05 | 1.05 |
| Y4 | 17 | 90 | 90.827 | 0.83 |
| Y5 | 15 | 50 | 50.9 | 0.90 |
| Z1 | 17 | 36.3 | 36.8 | 0.50 |
| Z2 | 18 | 36.3 | 36.49 | 0.20 |
| Z3 | 21 | 29.7 | 29.5 | -0.20 |
| Z4 | 22 | 90 | 90.3 | 0.30 |
| Z5 | 23 | 90 | 91.3 | 1.30 |

Table 15.

Average error, RMSE, and maximum error on each axis.

| Axis | Average error (cm) | RMSE (cm) | Max error (cm) |
|---|---|---|---|
| x | 0.53 | 0.58 | 0.80 |
| y | 0.66 | 0.74 | 1.05 |
| z | 0.50 | 0.65 | 1.30 |

5. Discussion

UAVs have become powerful tools for both practitioners and researchers. In recent years, the use of UAVs has grown substantially across a wide range of research and engineering applications. UAVs are mostly used for image capture [15] because they save time, are low-cost, require minimal fieldwork, and offer high precision. Moreover, UAV technology is commonly employed in geotechnical, tunneling, and mining projects, mainly for mapping and 3D reconstruction [16,17,18]. According to Rossini et al. [16] and Salvini et al. [19], UAV photogrammetry is mostly applied to mapping, surveying, and other technical applications. 3D models derived from UAV photogrammetry are highly precise and can be used to conduct accurate measurements; as a result, the approach has been widely used to depict the true state of a site. UAV-based 3D photogrammetric modelling of inaccessible underground tunnels applies this technique to construct 3D representations of inaccessible areas. The purpose of this study is to develop an accurate and reliable 3D modelling process using UAV photogrammetry and thereby to evaluate the practicability of modelling and mapping inaccessible underground tunnels from images recorded by UAVs.

The main limitations of this research relate to weight and balance, magnetic interference, and stabilization.

Weight and balance: Adding a payload to the drone changed its weight distribution, which affected its stability. An improperly balanced payload caused the drone to fly unevenly and made it more difficult to control, so the balance was carefully considered when mounting payloads on the drone.
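The balance check described above amounts to keeping the combined center of gravity close to the airframe's own center of gravity. The following Python sketch computes the mass-weighted center of gravity of the drone plus its payloads. All masses and mounting offsets are hypothetical placeholders, not values measured in this study, and the counterweight is only one illustrative way of recentering the load.

```python
Vec3 = tuple[float, float, float]


def combined_cg(components: list[tuple[float, Vec3]]) -> tuple[float, Vec3]:
    """Return the total mass and the mass-weighted center of gravity of all components."""
    total = sum(mass for mass, _ in components)
    cg = tuple(sum(mass * pos[i] for mass, pos in components) / total for i in range(3))
    return total, cg


# Hypothetical values (grams, millimeters); the airframe CG is taken as the origin.
drone = (1400.0, (0.0, 0.0, 0.0))
light = (200.0, (80.0, 0.0, -30.0))             # light mounted forward and below
counterweight = (100.0, (-160.0, 0.0, -30.0))   # hypothetical rear counterweight

print("drone + light:", combined_cg([drone, light]))
print("drone + light + counterweight:", combined_cg([drone, light, counterweight]))
```

A forward shift of the center of gravity forces the rear motors to work harder to keep the drone level, which is one reason an unbalanced payload makes the aircraft harder to control.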

Magnetic field problem: Attaching a metallic LED light to the drone can disturb the magnetic field measured by the drone's compass. Ferromagnetic parts and current-carrying wiring in the light can produce or distort a local magnetic field, which may interfere with the drone's heading estimation. To reduce this effect, the metallic light was wrapped in aluminum foil. Aluminum is only weakly paramagnetic, so such foil is more effective against high-frequency electromagnetic interference than against static magnetic fields.

Stabilization problem: The GPS on the DJI Phantom 4 Pro V2.0 improves the drone's hovering stability by allowing it to determine its position. In GPS mode, the flight controller uses this position to make real-time adjustments that hold the drone in place and compensate for wind; this GPS stabilization is more robust than optical-flow stabilization alone. By combining GPS data with its other sensors, the Phantom 4 Pro V2.0 can maintain a stable hover even in windy conditions, keeping the camera steady and reducing the risk of motion blur in photos and videos. Inside an underground tunnel, however, GPS signals are generally unavailable, so the drone cannot rely on GPS stabilization and must hold its position with its other sensors, which makes stable hovering more difficult.

6. Conclusions

For mapping the inaccessible underground tunnel, several 3D models were first created in a dark underground environment using two types of camera (normal and 360-degree) and two types of light source (one-directional and omni-directional). Of the 8 models, 6 were created successfully and 2 failed; both failed models were created from images taken with the 360-degree camera. In the first experiment, the error of the model created from 360-degree video under one-directional light was extremely high, whereas in the second experiment, when the light source was replaced by an omni-directional one, the error became comparatively low. It can therefore be concluded that, when a 360-degree camera is used, one-directional illumination degrades the accuracy of the model.

To develop a UAV-based 3D photogrammetric mapping system for inaccessible underground tunnels, the light source and camera mounted on the UAV were selected based on the combinations that had produced successful 3D models in the dark underground environment. According to the above results, the 3D model created from video captured by the 360-degree camera under omni-directional (360-degree) light was the most accurate. However, a 360-degree camera requires the UAV to carry an omni-directional light source, and mounting such a light source on the UAV affects its flight balance and aerodynamics. Therefore, a normal camera and a normal light source were mounted on the UAV. The light source needed an output of more than 10,000 lumens and the camera a resolution of at least 20 MP; the 20 MP camera of the DJI Phantom 4 Pro V2.0 met this requirement. The maximum error of the 3D model produced from the UAV imagery was 3.42 cm, which is slightly larger than the acceptable error. Because the target was an inaccessible tunnel, many factors influenced the accuracy; if flight balance, the image acquisition procedure, and illumination are managed well, such tunnels can be mapped with high accuracy.
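One way to see why camera resolution matters is the ground sampling distance (GSD), the size of one image pixel projected onto the tunnel wall: GSD = sensor width × distance / (focal length × image width in pixels). The Python sketch below evaluates this relation. The sensor width (13.2 mm), focal length (8.8 mm), and image width (5472 px) are assumed nominal values for a 1-inch 20 MP camera of the kind fitted to the Phantom 4 Pro; the 1.5 m camera-to-wall distance and the 4000 px comparison sensor are hypothetical.

```python
def ground_sampling_distance_mm(sensor_width_mm: float, focal_length_mm: float,
                               image_width_px: int, distance_mm: float) -> float:
    """Size of one image pixel projected onto a surface at the given distance (mm per pixel)."""
    return sensor_width_mm * distance_mm / (focal_length_mm * image_width_px)


# Assumed nominal camera values; check them against the manufacturer's specification.
SENSOR_WIDTH_MM = 13.2
FOCAL_LENGTH_MM = 8.8
IMAGE_WIDTH_PX = 5472

distance_mm = 1500.0  # hypothetical camera-to-wall distance of 1.5 m

gsd_20mp = ground_sampling_distance_mm(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, IMAGE_WIDTH_PX, distance_mm)
# Hypothetical lower-resolution sensor with the same optics but only 4000 px across.
gsd_low = ground_sampling_distance_mm(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, 4000, distance_mm)

print(f"20 MP camera: {gsd_20mp:.3f} mm/px; 4000 px camera: {gsd_low:.3f} mm/px")
```

A smaller GSD means finer detail on the tunnel surface for the same flight distance, which helps feature matching in poorly textured, dimly lit tunnels.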

Author Contributions

Conceptualization, B.B.; methodology, B.B.; software, B.B.; validation, B.B.; formal analysis, Y.F.; investigation, B.S.; resources, H.I. and T.A.; data curation, N.O.; writing—original draft preparation, B.B.; writing—review and editing, Y.F. and B.B.; visualization, Y.K. and T.A.; supervision, Y.K. and T.A.; project administration, T.A.; funding acquisition, H.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We thank the anonymous reviewers and members of the editorial team for their comments and contributions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Toriumi, F.Y.; Bittencourt, T.N.; Futai, M.M. UAV-Based Inspection of Bridge and Tunnel Structures: An Application Review. Rev. IBRACON Estrut. Mater. 2023, 16, e16103.

- Chmelina, K.; Gaich, A.; Keuschnig, M.; Delleske, R.; Galler, R.; Wenighofer, R. Drone Based Deformation Monitoring at the Tunnel Project Zentrum Am Berg.

- Colica, E.; D’Amico, S.; Iannucci, R.; Martino, S.; Gauci, A.; Galone, L.; Galea, P.; Paciello, A. Using Unmanned Aerial Vehicle Photogrammetry for Digital Geological Surveys: Case Study of Selmun Promontory, Northern of Malta. Environ. Earth Sci. 2021, 80, 551.

- Drummond, C.D.; Harley, M.D.; Turner, I.L.; Matheen, A.N.A.; Glamore, W.C. UAV Applications to Coastal Engineering. New Zealand 2015.

- Giordan, D.; Adams, M.S.; Aicardi, I.; Alicandro, M.; Allasia, P.; Baldo, M.; De Berardinis, P.; Dominici, D.; Godone, D.; Hobbs, P.; et al. The Use of Unmanned Aerial Vehicles (UAVs) for Engineering Geology Applications. Bull. Eng. Geol. Environ. 2020, 79, 3437–3481.

- Zan, W.; Zhang, W.; Wang, N.; Zhao, C.; Yang, Q.; Li, H. Stability Analysis of Complex Terrain Slope Based on Multi-Source Point Cloud Fusion. J. Mt. Sci. 2022, 19, 2703–2714.

- Tsunetaka, H.; Hotta, N.; Hayakawa, Y.S.; Imaizumi, F. Spatial Accuracy Assessment of Unmanned Aerial Vehicle-Based Structures from Motion Multi-View Stereo Photogrammetry for Geomorphic Observations in Initiation Zones of Debris Flows, Ohya Landslide, Japan. Prog. Earth Planet. Sci. 2020, 7, 24.

- Aleshin, I.M.; Soloviev, A.A.; Aleshin, M.I.; Sidorov, R.V.; Solovieva, E.N.; Kholodkov, K.I. Prospects of Using Unmanned Aerial Vehicles in Geomagnetic Surveys. Seism. Instr. 2020, 56, 522–530.

- Xu, Q.; Li, W.; Ju, Y.; Dong, X.; Peng, D. Multitemporal UAV-Based Photogrammetry for Landslide Detection and Monitoring in a Large Area: A Case Study in the Heifangtai Terrace in the Loess Plateau of China. J. Mt. Sci. 2020, 17, 1826–1839.

- Liu, C.; Liu, X.; Peng, X.; Wang, E.; Wang, S. Application of 3D-DDA Integrated with Unmanned Aerial Vehicle–Laser Scanner (UAV-LS) Photogrammetry for Stability Analysis of a Blocky Rock Mass Slope. Landslides 2019, 16, 1645–1661.

- Shahriari, H.; Honarmand, M.; Mirzaei, S.; Padró, J.-C. Application of UAV Photogrammetry Products for the Exploration of Dimension Stone Deposits: A Case Study of the Majestic Rose Quarry, Kerman Province, Iran. Arab. J. Geosci. 2022, 15, 1650.

- Cho, J.; Lee, J.; Lee, B. Application of UAV Photogrammetry to Slope-Displacement Measurement. KSCE J. Civ. Eng. 2022, 26, 1904–1913.

- Wang, S.; Ahmed, Z.; Hashmi, M.Z.; Pengyu, W. Cliff Face Rock Slope Stability Analysis Based on Unmanned Arial Vehicle (UAV) Photogrammetry. Geomech. Geophys. Geo-energ. Geo-resour. 2019, 5, 333–344.

- Sha, G.; Xiping, Y.; Shu, G.; Milong, Y.; Lin, H.; Rui, B. Local 3D Scene Fine Detection Analysis of Circular Landform on the Southern Edge of Dinosaur Valley. Arab. J. Geosci. 2021, 14, 1840.

- Rango, A.; Laliberte, A.; Steele, C.; Herrick, J.E.; Bestelmeyer, B.; Schmugge, T.; Roanhorse, A.; Jenkins, V. Research Article: Using Unmanned Aerial Vehicles for Rangelands: Current Applications and Future Potentials. Environ. Pract. 2006, 8, 159–168.

- Rossini, M.; Di Mauro, B.; Garzonio, R.; Baccolo, G.; Cavallini, G.; Mattavelli, M.; De Amicis, M.; Colombo, R. Rapid Melting Dynamics of an Alpine Glacier with Repeated UAV Photogrammetry. Geomorphology 2018, 304, 159–172.

- Saratsis, G.; Xiroudakis, G.; Exadaktylos, G.; Papaconstantinou, A.; Lazos, I. Use of UAV Images in 3D Modelling of Waste Material Stock-Piles in an Abandoned Mixed Sulphide Mine in Mathiatis, Cyprus. Mining 2023, 3, 79–95.

- Tong, X.; Liu, X.; Chen, P.; Liu, S.; Luan, K.; Li, L.; Liu, S.; Liu, X.; Xie, H.; Jin, Y.; et al. Integration of UAV-Based Photogrammetry and Terrestrial Laser Scanning for the Three-Dimensional Mapping and Monitoring of Open-Pit Mine Areas. Remote Sens. 2015, 7, 6635–6662.

- Salvini, R.; Mastrorocco, G.; Esposito, G.; Di Bartolo, S.; Coggan, J.; Vanneschi, C. Use of a Remotely Piloted Aircraft System for Hazard Assessment in a Rocky Mining Area (Lucca, Italy). Nat. Hazards Earth Syst. Sci. 2018, 18, 287–302.

- Yu, R.; Li, P.; Shan, J.; Zhu, H. Structural State Estimation of Earthquake-Damaged Building Structures by Using UAV Photogrammetry and Point Cloud Segmentation. Measurement 2022, 202, 111858.

- Ismail, A.; Ahmad Safuan, A.R.; Sa’ari, R.; Wahid Rasib, A.; Mustaffar, M.; Asnida Abdullah, R.; Kassim, A.; Mohd Yusof, N.; Abd Rahaman, N.; Kalatehjari, R. Application of Combined Terrestrial Laser Scanning and Unmanned Aerial Vehicle Digital Photogrammetry Method in High Rock Slope Stability Analysis: A Case Study. Measurement 2022, 195, 111161.

- Nebiker, S.; Annen, A.; Scherrer, M.; Oesch, D. A Light-Weight Multispectral Sensor for Micro UAV – Opportunities for Very High Resolution Airborne Remote Sensing. 2008.

- Barrile, V.; Bilotta, G.; Nunnari, A. 3D Modeling with Photogrammetry by UAVs and Model Quality Verification. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2017, IV-4/W4, 129–134.

- Nebiker, S.; Annen, A.; Scherrer, M.; Oesch, D. A Light-Weight Multispectral Sensor for Micro UAV – Opportunities for Very High Resolution Airborne Remote Sensing. 2008.

- Shaharizuan, H. Surveying with Photogrammetric Unmanned Aerial Vehicles (UAV) – an Industrial Breakthrough.

- Ćwiąkała, P.; Gruszczyński, W.; Stoch, T.; Puniach, E.; Mrocheń, D.; Matwij, W.; Matwij, K.; Nędzka, M.; Sopata, P.; Wójcik, A. UAV Applications for Determination of Land Deformations Caused by Underground Mining. Remote Sens. 2020, 12, 1733.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions, or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).