Submitted:

21 May 2023

Posted:

23 May 2023


Abstract

Keywords:

1. Introduction

2. Reviews for the development of literature on solar PV power forecasting models

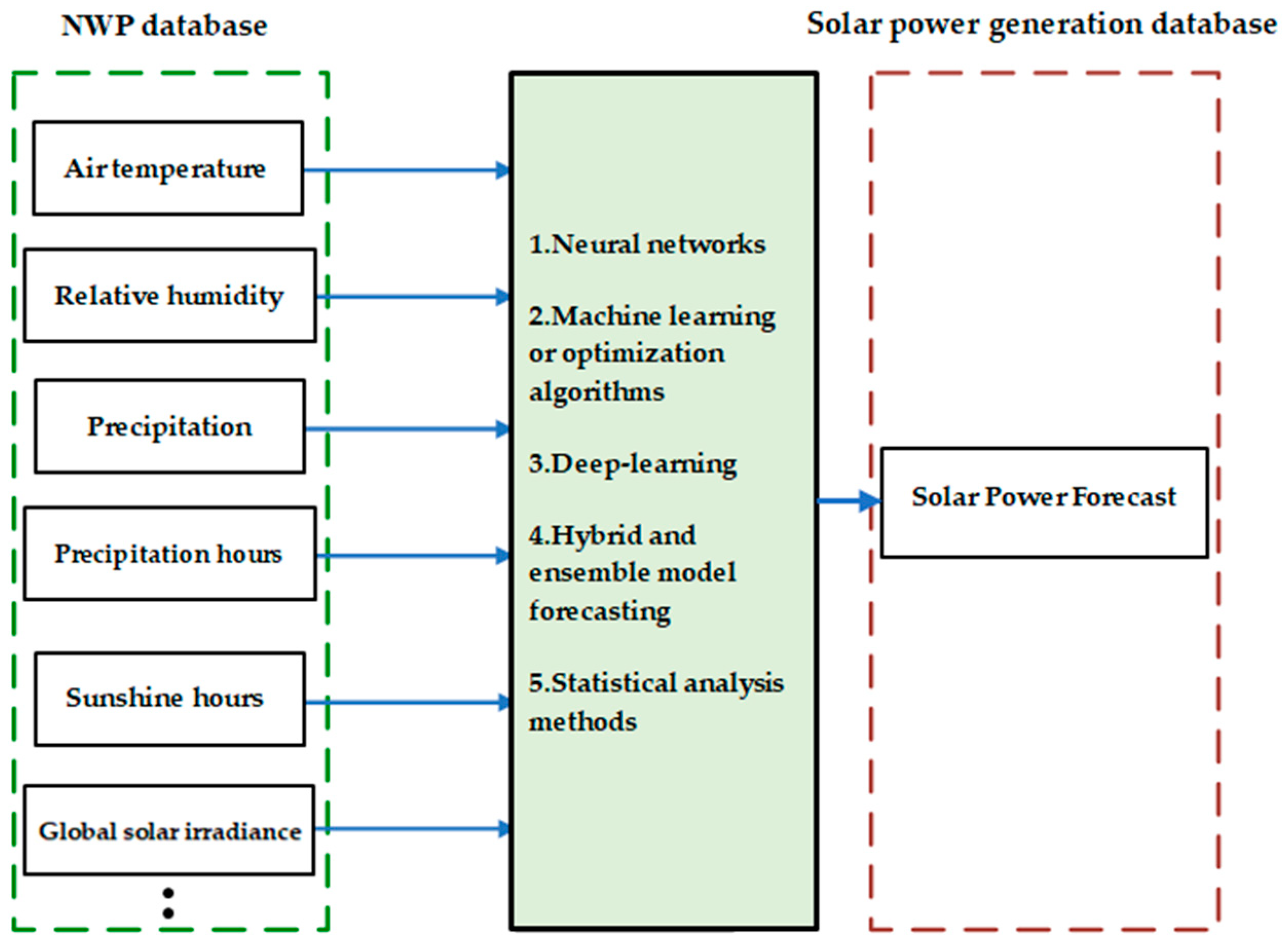

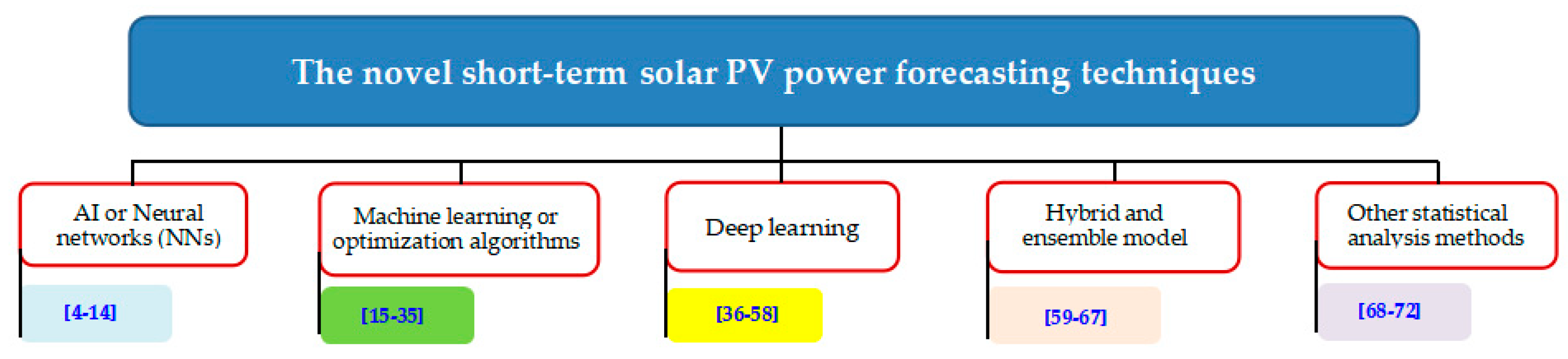

2.1. Forecasting techniques

2.2. Literature classification based on methods

2.3. Summary of forecasting techniques

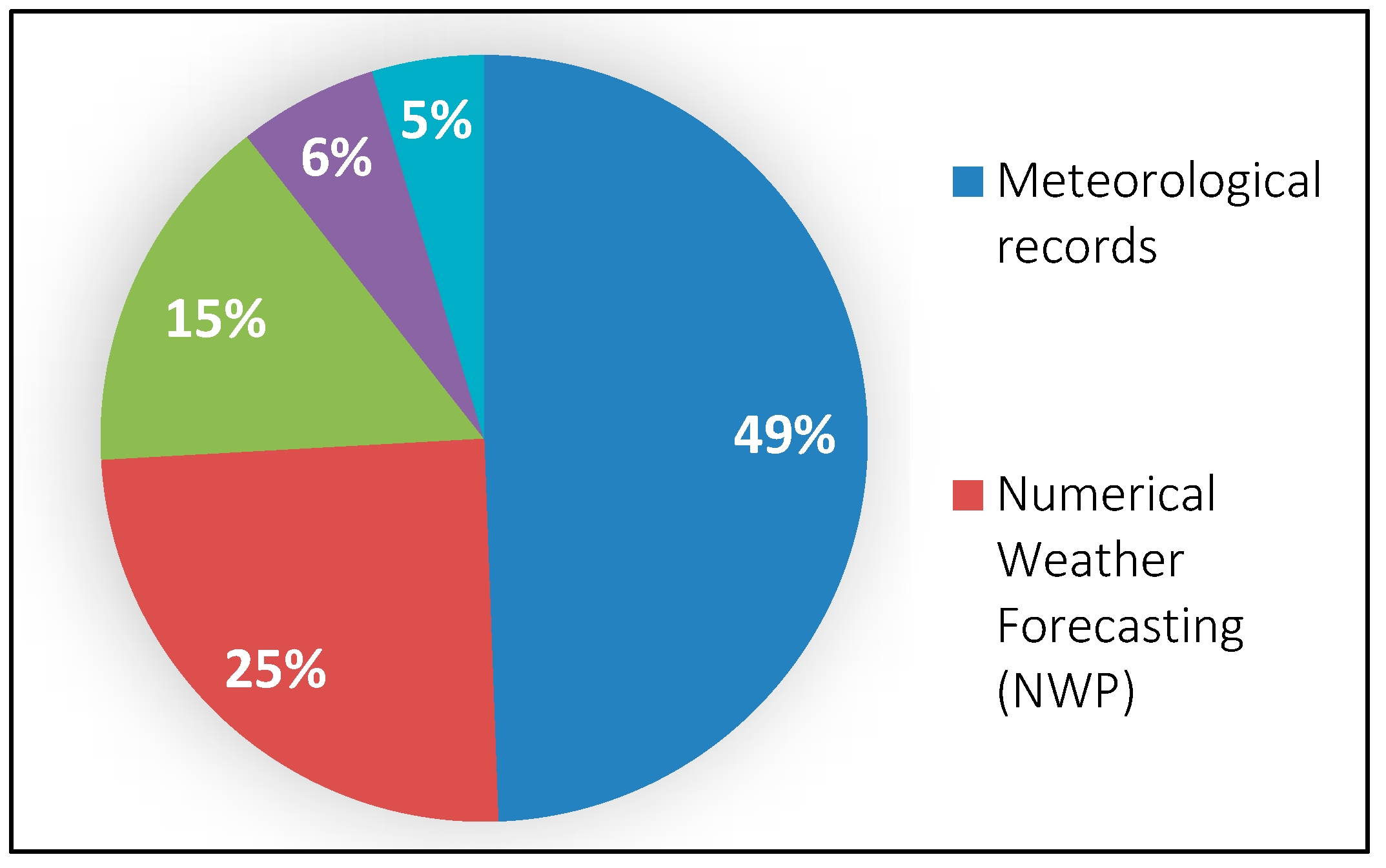

2.3.1. Distribution of input data for the reviewed works

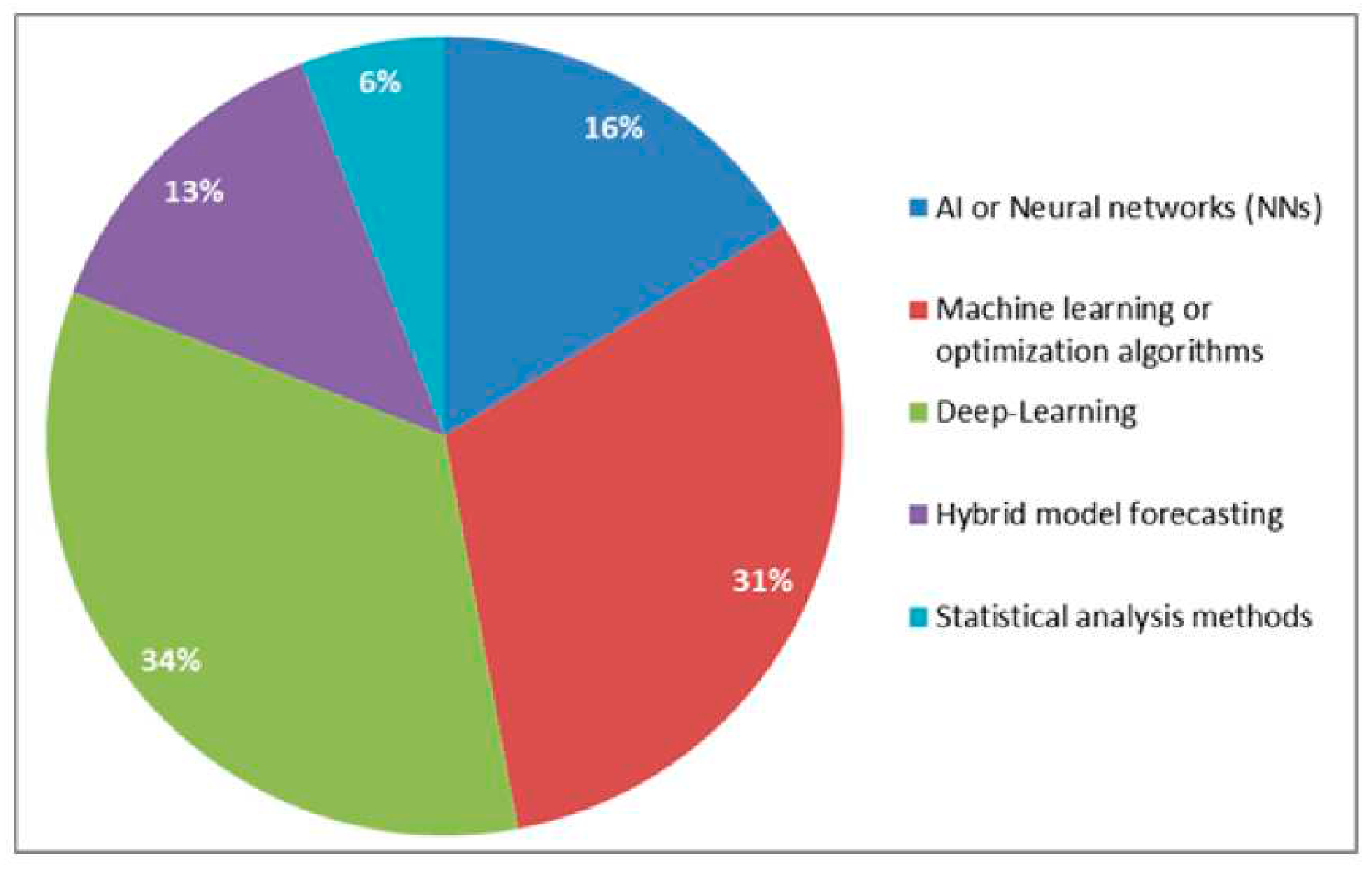

2.3.2. Distribution of forecasting methods for the reviewed works

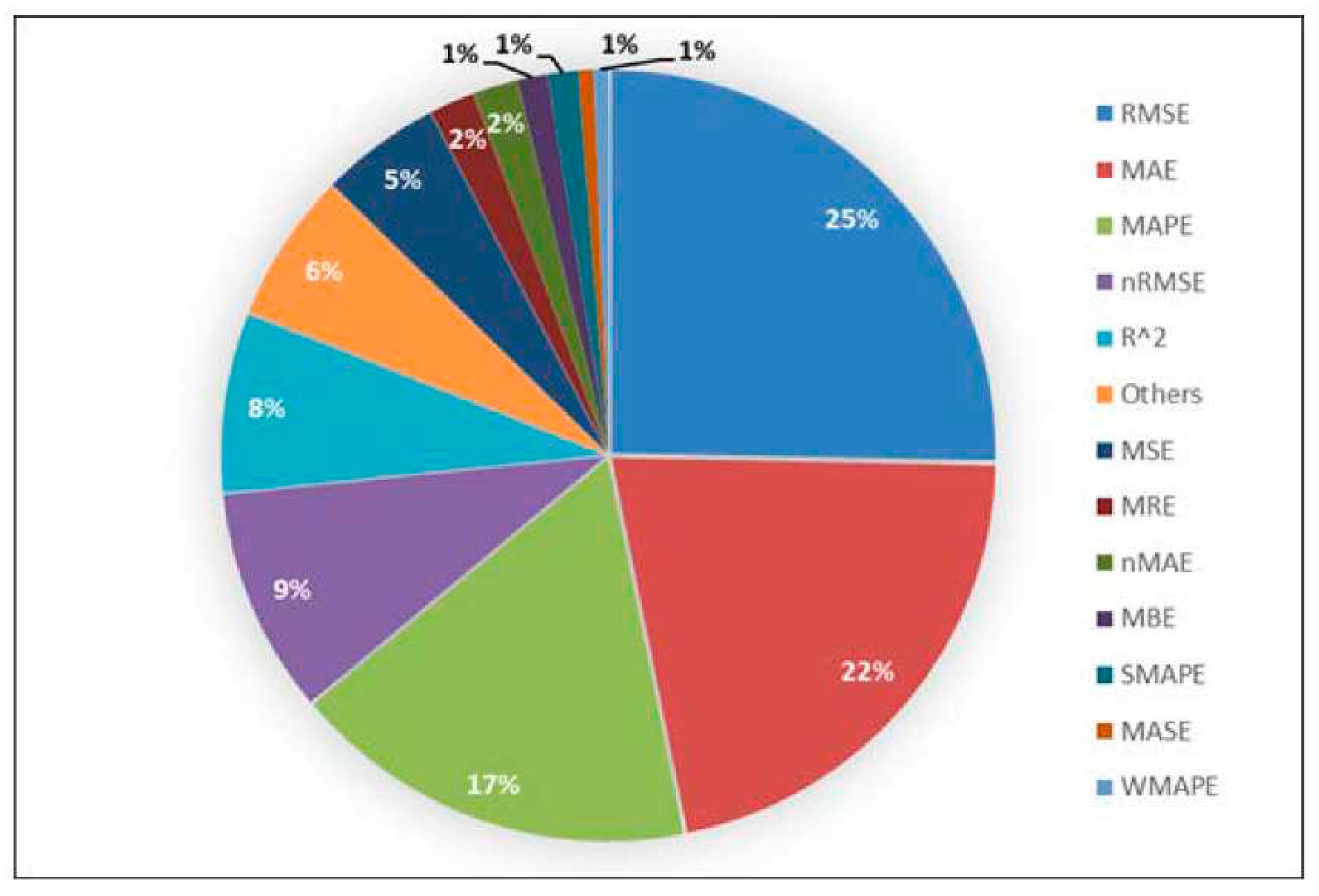

2.3.3. Statistical metrics for the reviewed works

2.4. Scientific contributions and comparison of reviewed works

3. The state-of-the-art approaches for short-term solar PV power forecasting

3.1. Insolation prediction for solar PV power generation

3.2. Data mining technique

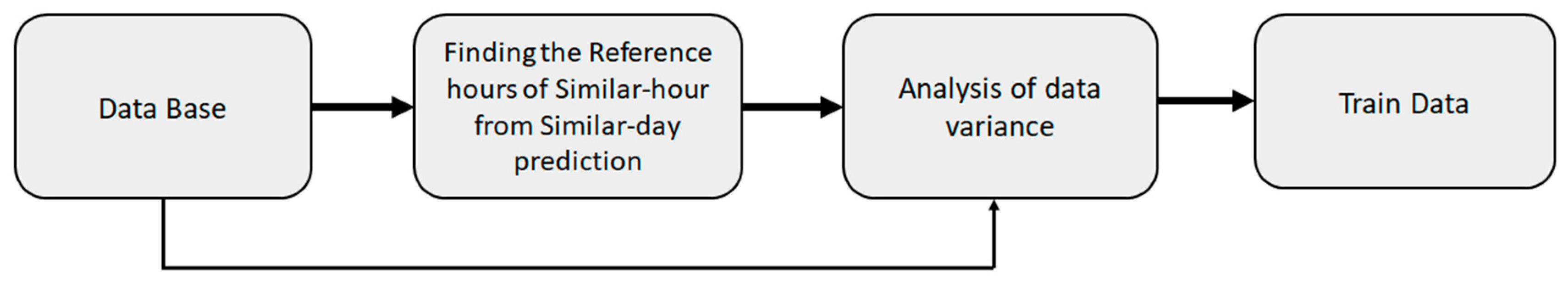

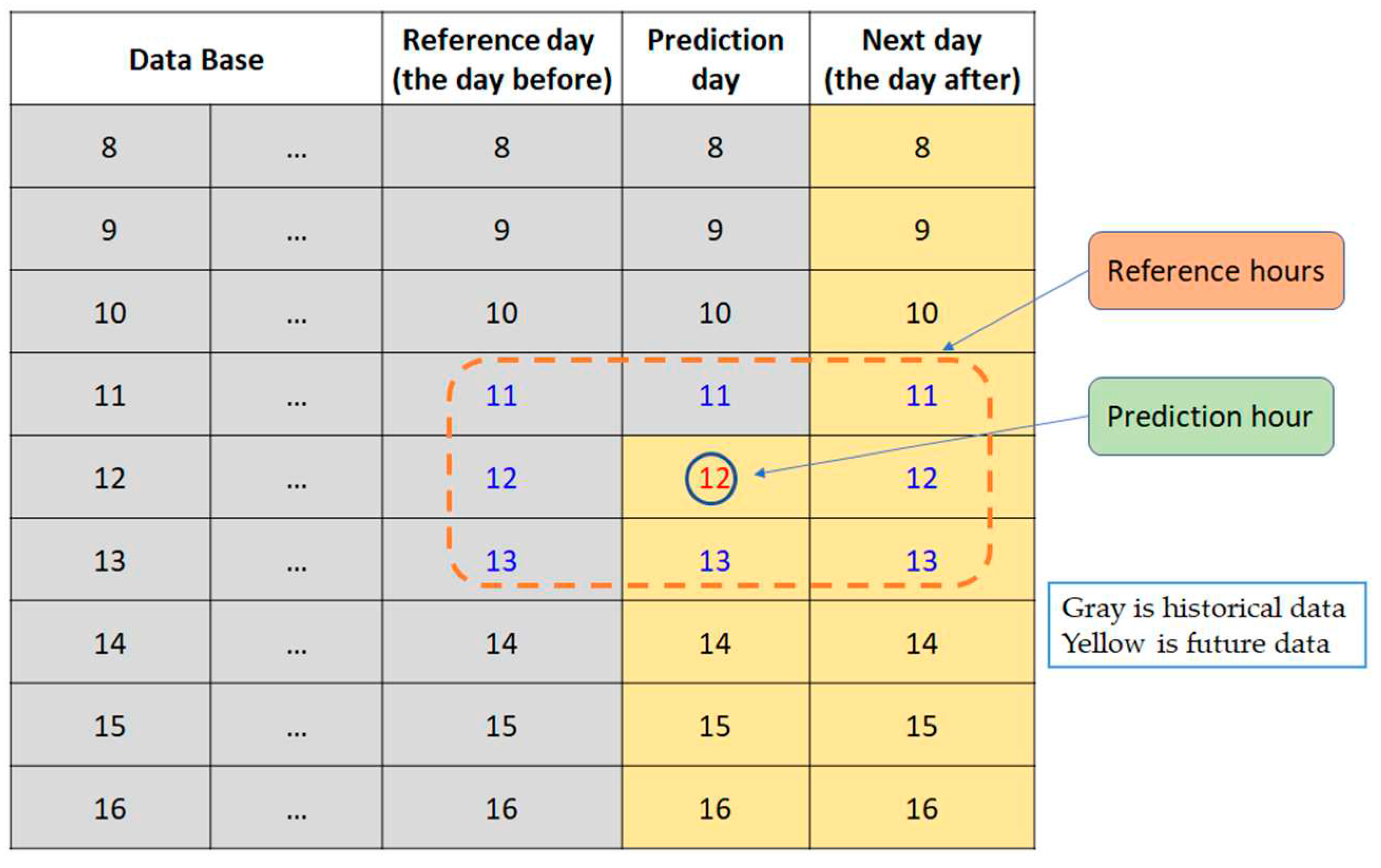

3.3. Hourly-similarity (HS) based method

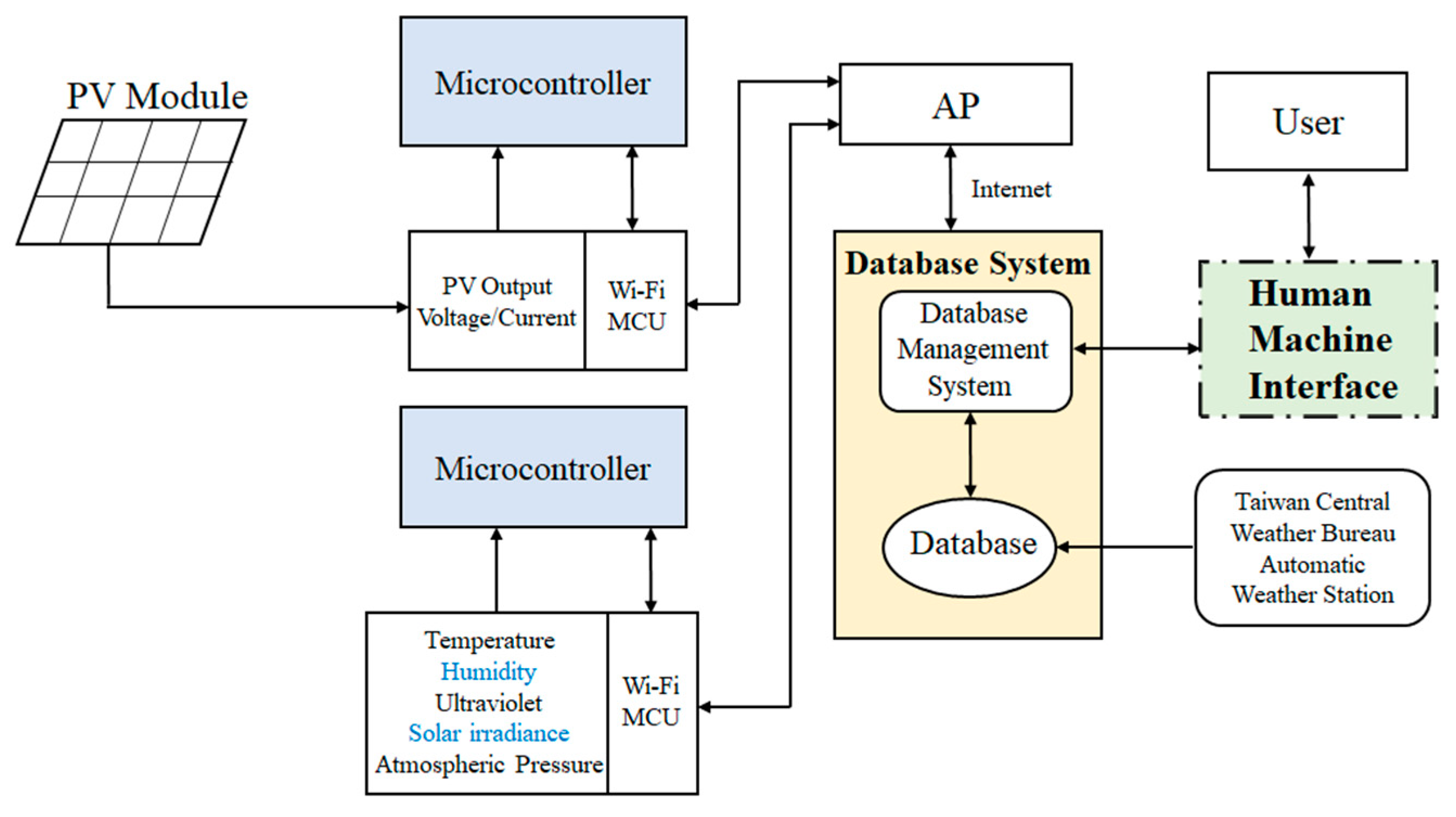

3.4. Internet of Things (IoT) technology

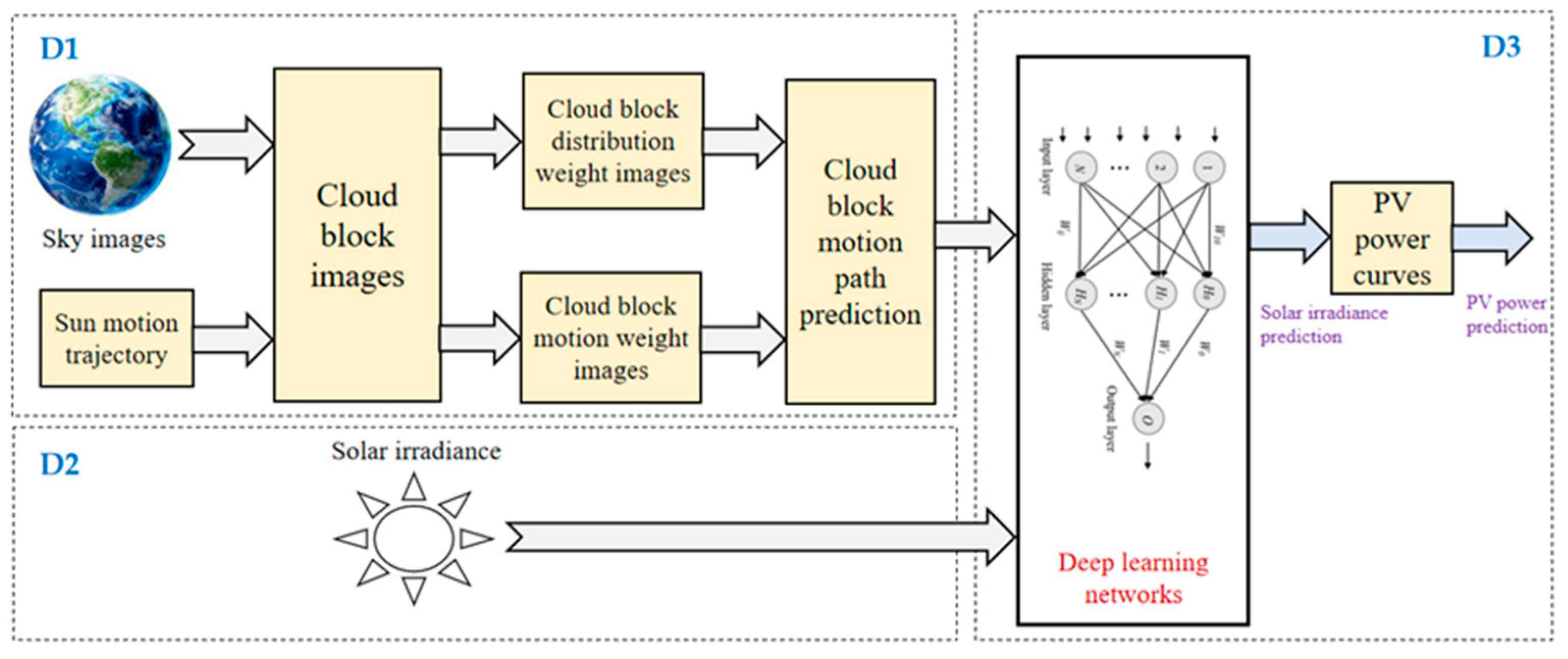

3.5. Sky image-based methods

4. Future studies and development

5. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| PV | Photovoltaic |

| AI | Artificial intelligence |

| ANFIS | Adaptive-Network-based Fuzzy Inference Systems |

| ANN | Artificial neural network |

| BNN | Backpropagation neural network |

| CNN | Convolutional neural network |

| RNN | Recurrent neural network |

| LSTM | Long short-term memory |

| CLSTM | Convolutional long short-term memory |

| SVM | Support vector machine |

| SVR | Support Vector Regression |

| GBDT | Gradient boosting decision tree |

| ELM | Extreme Learning Machine |

| GHI | Global horizontal irradiance |

| ABL | Adaptive boosting Learning |

| TOB | Transparent Open Box |

| FOS-ELM | Extreme learning machine with a forgetting mechanism |

| ResAttGRU | Multibranch attentive gated recurrent residual network |

| BMA | Bayesian model averaging |

| Rec_LSTM | Recursive long short-term memory network |

| STVAR | Spatio-temporal autoregressive model |

| GPR | Gaussian process regression |

| MK-RVFLN | Multi-kernel random vector functional link neural network |

| GRU | Gated recurrent unit (a variant of LSTM) |

| Conv LSTM | Convolutional long short-term memory |

| MFFNN | Multi-layer feedforward neural network |

| MVO | Multiverse optimization |

| GA | Genetic algorithm |

| MLP | Multilayer perceptron |

| VMD | Variational mode decomposition |

| RVM | Relevance Vector Machine |

| CMAES | Covariance matrix adaptation evolution strategy |

| XGB | Extreme Gradient Boosting |

| MARS | Multivariate adaptive regression splines |

| MC-WT-CBiLSTM | Multichannel, wavelet transform combining convolutional neural network and bidirectional long short-term memory |

| NARX-CVM | Nonlinear autoregressive with exogenous inputs and corrective vector multiplier |

| LSTM-SVR-BO | Long short-term memory-Support vector regression-Bayesian Optimization |

| GBRT-Med-KDE | Gradient boosting regression tree-Median-Kernel density estimation |

| TG-A-CNN-LSTM | Theory-guided and attention-based CNN-LSTM |

| HMM | Hidden Markov model |

| SBFMs | Similarity-based forecasting models |

| KF | Kalman filtering |

| QRA | Quantile regression averaging |

| MRE | Mean relative error |

| MAE | Mean absolute error |

| MASE | Mean absolute scaled error |

| WMAPE | Weighted mean absolute percentage error |

| MBE | Mean bias error |

| MSE | Mean squared error |

| RMSE | Root mean squared error |

| MAPE | Mean absolute percentage error |

| SMAPE | Symmetric mean absolute percentage error |

| nMAE | Normalized mean absolute error |

| nMBE | Normalized mean bias error |

| nRMSE | Normalized root mean squared error |

| R2 | Coefficient of determination |
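
The error metrics abbreviated above are reported throughout the reviewed works, but their exact definitions are not given in this list and normalization conventions differ between papers. The following is a minimal sketch of the most common textbook forms, not the definitions used by any particular reviewed work. It assumes that nMAE and nRMSE are normalized by the mean of the observations (other works normalize by installed capacity or by the observed range), that MAPE skips zero observations such as night-time output, and that MASE is scaled by a one-step naive forecast on the same series. The function name `forecast_metrics` and its helpers are hypothetical.

```python
import math


def _mean(values):
    """Arithmetic mean; returns NaN for an empty sequence."""
    values = list(values)
    return sum(values) / len(values) if values else float("nan")


def _ratio(num, den):
    """Safe division; returns NaN when the denominator is zero."""
    return num / den if den else float("nan")


def forecast_metrics(actual, predicted):
    """Common point-forecast error metrics for two equal-length series."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    n = len(actual)
    errors = [p - a for a, p in zip(actual, predicted)]
    mean_actual = sum(actual) / n

    mae = _mean(abs(e) for e in errors)
    mbe = _mean(errors)  # positive value means over-forecasting
    mse = _mean(e * e for e in errors)
    rmse = math.sqrt(mse)

    # Assumption: zero observations are skipped to avoid division by zero.
    mape = 100 * _mean(abs(e / a) for a, e in zip(actual, errors) if a != 0)
    smape = 100 * _mean(
        2 * abs(p - a) / (abs(a) + abs(p))
        for a, p in zip(actual, predicted)
        if abs(a) + abs(p) > 0
    )
    wmape = 100 * _ratio(sum(abs(e) for e in errors), sum(abs(a) for a in actual))

    # Assumption: MASE is scaled by the MAE of a one-step naive forecast.
    naive_mae = _mean(abs(actual[t] - actual[t - 1]) for t in range(1, n))

    ss_res = sum(e * e for e in errors)
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)

    return {
        "MAE": mae,
        "MBE": mbe,
        "MSE": mse,
        "RMSE": rmse,
        "MAPE": mape,
        "SMAPE": smape,
        "WMAPE": wmape,
        "MASE": _ratio(mae, naive_mae),
        # Assumption: normalization by the mean observation, in percent.
        "nMAE": 100 * _ratio(mae, mean_actual),
        "nMBE": 100 * _ratio(mbe, mean_actual),
        "nRMSE": 100 * _ratio(rmse, mean_actual),
        "R2": 1 - _ratio(ss_res, ss_tot),
    }


if __name__ == "__main__":
    observed = [10.0, 20.0, 30.0, 40.0]   # illustrative values only
    forecast = [12.0, 18.0, 33.0, 37.0]
    for name, value in forecast_metrics(observed, forecast).items():
        print(f"{name}: {value:.4f}")
```

Because the normalization basis changes the reported value, nRMSE or nMAE figures from different works are only comparable when they share the same denominator. MRE is omitted here because its definition varies too widely between works to pick one form.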

References

- International Renewable Energy Agency. Renewable Capacity Statistics. 2020.

- Gonçalves, G.L.; Abrahão, R.; Rotella Junior, P.; Rocha, L.C.S. Economic Feasibility of Conventional and Building-Integrated Photovoltaics Implementation in Brazil. Energies 2022, 15, 6707. [Google Scholar] [CrossRef]

- Grazioli, G.; Chlela, S.; Selosse, S.; Maizi, N. The Multi-Facets of Increasing the Renewable Energy Integration in Power Systems. Energies 2022, 15, 6795. [Google Scholar] [CrossRef]

- Moreira, M.O.; Kaizer, B.M.; Ohishi, T.; Bonatto, B.D.; de Souza, A.C.Z.; Balestrassi, P.P. Multivariate Strategy Using Artificial Neural Networks for Seasonal Photovoltaic Generation Forecasting. Energies 2022, 16, 369. [Google Scholar] [CrossRef]

- Gutiérrez, L.; Patiño, J.; Duque-Grisales, E. A Comparison of the Performance of Supervised Learning Algorithms for Solar Power Prediction. Energies 2021, 14, 4424. [Google Scholar] [CrossRef]

- Lateko, A.A.H.; Yang, H.-T.; Huang, C.-M.; Aprillia, H.; Hsu, C.-Y.; Zhong, J.-L.; Phương, N.H. Stacking Ensemble Method with the RNN Meta-Learner for Short-Term PV Power Forecasting. Energies 2021, 14, 4733. [Google Scholar] [CrossRef]

- Bhatti, A.R.; Awan, A.B.; Alharbi, W.; Salam, Z.; Bin Humayd, A.S.; P., P.R.; Bhattacharya, K. An Improved Approach to Enhance Training Performance of ANN and the Prediction of PV Power for Any Time-Span without the Presence of Real-Time Weather Data. Sustainability 2021, 13, 11893. [Google Scholar] [CrossRef]

- Bozkurt, H.; Macit, R.; Çelik, A.; Teke, A. Evaluation of artificial neural network methods to forecast short-term solar power generation: a case study in Eastern Mediterranean Region. Turk. J. Electr. Eng. Comput. Sci. 2022, 30, 2013–2030. [Google Scholar] [CrossRef]

- Hu, K.; Wang, L.; Li, W.; Cao, S.; Shen, Y. Forecasting of solar radiation in photovoltaic power station based on ground-based cloud images and BP neural network. IET Gener. Transm. Distrib. 2021, 16, 333–350. [Google Scholar] [CrossRef]

- Erduman, A. A smart short-term solar power output prediction by artificial neural network. Electr. Eng. 2020, 102, 1441–1449. [Google Scholar] [CrossRef]

- Kim, J.; Lee, S.-H.; Chong, K.T. A Study of Neural Network Framework for Power Generation Prediction of a Solar Power Plant. Energies 2022, 15, 8582. [Google Scholar] [CrossRef]

- Gutiérrez, L.; Patiño, J.; Duque-Grisales, E. A Comparison of the Performance of Supervised Learning Algorithms for Solar Power Prediction. Energies 2021, 14, 4424. [Google Scholar] [CrossRef]

- Moreno, G.; Martin, P.; Santos, C.; Rodriguez, F.J.; Santiso, E. A Day-Ahead Irradiance Forecasting Strategy for the Integration of Photovoltaic Systems in Virtual Power Plants. IEEE Access 2020, 8, 204226–204240. [Google Scholar] [CrossRef]

- Lima, M.A.F.; Carvalho, P.C.; Fernández-Ramírez, L.M.; Braga, A.P. Improving solar forecasting using Deep Learning and Portfolio Theory integration. Energy 2020, 195, 117016. [Google Scholar] [CrossRef]

- Akhter, M.N.; Mekhilef, S.; Mokhlis, H.; Almohaimeed, Z.M.; Muhammad, M.A.; Khairuddin, A.S.M.; Akram, R.; Hussain, M.M. An Hour-Ahead PV Power Forecasting Method Based on an RNN-LSTM Model for Three Different PV Plants. Energies 2022, 15, 2243. [Google Scholar] [CrossRef]

- Meng, M.; Song, C. Daily Photovoltaic Power Generation Forecasting Model Based on Random Forest Algorithm for North China in Winter. Sustainability 2020, 12, 2247. [Google Scholar] [CrossRef]

- Alzahrani, A. Short-Term Solar Irradiance Prediction Based on Adaptive Extreme Learning Machine and Weather Data. Sensors 2022, 22, 8218. [Google Scholar] [CrossRef]

- Wood, D.A. Hourly-averaged solar plus wind power generation for Germany 2016: Long-term prediction, short-term forecasting, data mining and outlier analysis. Sustain. Cities Soc. 2020, 60, 102227. [Google Scholar] [CrossRef]

- Radovan, A.; Šunde, V.; Kučak, D.; Ban, A. Solar Irradiance Forecast Based on Cloud Movement Prediction. Energies 2021, 14, 3775. [Google Scholar] [CrossRef]

- Babbar, S.M.; Lau, C.Y.; Thang, K.F. Long Term Solar Power Generation Prediction using Adaboost as a Hybrid of Linear and Non-linear Machine Learning Model. Int. J. Adv. Comput. Sci. Appl. 2021, 12. [Google Scholar] [CrossRef]

- Ramkumar, G.; Sahoo, S.; Amirthalakshmi, T.M.; Ramesh, S.; Prabu, R.T.; Kasirajan, K.; Samrot, A.V.; Ranjith, A. A Short-Term Solar Photovoltaic Power Optimized Prediction Interval Model Based on FOS-ELM Algorithm. Int. J. Photoenergy 2021, 2021, 1–12. [Google Scholar] [CrossRef]

- Lateko, A.A.H.; Yang, H.-T.; Huang, C.-M. Short-Term PV Power Forecasting Using a Regression-Based Ensemble Method. Energies 2022, 15, 4171. [Google Scholar] [CrossRef]

- Mohana, M.; Saidi, A.S.; Alelyani, S.; Alshayeb, M.J.; Basha, S.; Anqi, A.E. Small-Scale Solar Photovoltaic Power Prediction for Residential Load in Saudi Arabia Using Machine Learning. Energies 2021, 14, 6759. [Google Scholar] [CrossRef]

- Mehazzem, F.; André, M.; Calif, R. Efficient Output Photovoltaic Power Prediction Based on MPPT Fuzzy Logic Technique and Solar Spatio-Temporal Forecasting Approach in a Tropical Insular Region. Energies 2022, 15, 8671. [Google Scholar] [CrossRef]

- Zazoum, B. Solar photovoltaic power prediction using different machine learning methods. Energy Rep. 2021, 8, 19–25. [Google Scholar] [CrossRef]

- Majumder, I.; Dash, P.K.; Bisoi, R. Short-term solar power prediction using multi-kernel-based random vector functional link with water cycle algorithm-based parameter optimization. Neural Comput. Appl. 2019, 32, 8011–8029. [Google Scholar] [CrossRef]

- Liu, Y. Short-Term Prediction Method of Solar Photovoltaic Power Generation Based on Machine Learning in Smart Grid. Math. Probl. Eng. 2022, 2022, 1–10. [Google Scholar] [CrossRef]

- Krechowicz, M.; Krechowicz, A.; Lichołai, L.; Pawelec, A.; Piotrowski, J.Z.; Stępień, A. Reduction of the Risk of Inaccurate Prediction of Electricity Generation from PV Farms Using Machine Learning. Energies 2022, 15, 4006. [Google Scholar] [CrossRef]

- Das, U.K.; Tey, K.S.; Bin Idris, M.Y.I.; Mekhilef, S.; Seyedmahmoudian, M.; Stojcevski, A.; Horan, B. Optimized Support Vector Regression-Based Model for Solar Power Generation Forecasting on the Basis of Online Weather Reports. IEEE Access 2022, 10, 15594–15604. [Google Scholar] [CrossRef]

- Nejati, M.; Amjady, N. A New Solar Power Prediction Method Based on Feature Clustering and Hybrid-Classification-Regression Forecasting. IEEE Trans. Sustain. Energy 2021, 13, 1188–1198. [Google Scholar] [CrossRef]

- Yan, J.; Hu, L.; Zhen, Z.; Wang, F.; Qiu, G.; Li, Y.; Yao, L.; Shafie-Khah, M.; Catalao, J.P.S. Frequency-Domain Decomposition and Deep Learning Based Solar PV Power Ultra-Short-Term Forecasting Model. IEEE Trans. Ind. Appl. 2021, 57, 3282–3295. [Google Scholar] [CrossRef]

- Cheng, L.; Zang, H.; Wei, Z.; Ding, T.; Xu, R.; Sun, G. Short-term Solar Power Prediction Learning Directly from Satellite Images With Regions of Interest. IEEE Trans. Sustain. Energy 2021, 13, 629–639. [Google Scholar] [CrossRef]

- Sheng, H.; Ray, B.; Chen, K.; Cheng, Y. Solar Power Forecasting Based on Domain Adaptive Learning. IEEE Access 2020, 8, 198580–198590. [Google Scholar] [CrossRef]

- Ziyabari, S.; Du, L.; Biswas, S.K. Multibranch Attentive Gated ResNet for Short-Term Spatio-Temporal Solar Irradiance Forecasting. IEEE Trans. Ind. Appl. 2021, 58, 28–38. [Google Scholar] [CrossRef]

- Doubleday, K.; Jascourt, S.; Kleiber, W.; Hodge, B.-M. Probabilistic Solar Power Forecasting Using Bayesian Model Averaging. IEEE Trans. Sustain. Energy 2020, 12, 325–337. [Google Scholar] [CrossRef]

- Blazakis, K.; Katsigiannis, Y.; Stavrakakis, G. One-Day-Ahead Solar Irradiation and Windspeed Forecasting with Advanced Deep Learning Techniques. Energies 2022, 15, 4361. [Google Scholar] [CrossRef]

- Malakar, S.; Goswami, S.; Ganguli, B.; Chakrabarti, A.; Roy, S.S.; Boopathi, K.; Rangaraj, A.G. Deep-Learning-Based Adaptive Model for Solar Forecasting Using Clustering. Energies 2022, 15, 3568. [Google Scholar] [CrossRef]

- Michael, N.E.; Mishra, M.; Hasan, S.; Al-Durra, A. Short-Term Solar Power Predicting Model Based on Multi-Step CNN Stacked LSTM Technique. Energies 2022, 15, 2150. [Google Scholar] [CrossRef]

- Mishra, M.; Dash, P.B.; Nayak, J.; Naik, B.; Swain, S.K. Deep learning and wavelet transform integrated approach for short-term solar PV power prediction. Measurement: Journal of the International Measurement Confederation 2020, 166, 15.

- Zhu, T.; Guo, Y.; Li, Z.; Wang, C. Solar Radiation Prediction Based on Convolution Neural Network and Long Short-Term Memory. Energies 2021, 14, 8498. [Google Scholar] [CrossRef]

- Wentz, V.H.; Maciel, J.N.; Ledesma, J.J.G.; Junior, O.H.A. Solar Irradiance Forecasting to Short-Term PV Power: Accuracy Comparison of ANN and LSTM Models. Energies 2022, 15, 2457. [Google Scholar] [CrossRef]

- Fraihat, H.; Almbaideen, A.A.; Al-Odienat, A.; Al-Naami, B.; De Fazio, R.; Visconti, P. Solar Radiation Forecasting by Pearson Correlation Using LSTM Neural Network and ANFIS Method: Application in the West-Central Jordan. Future Internet 2022, 14, 79. [Google Scholar] [CrossRef]

- Cheng, L.; Zang, H.; Wei, Z.; Zhang, F.; Sun, G. Evaluation of opaque deep-learning solar power forecast models towards power-grid applications. Renew. Energy 2022, 198, 960–972. [Google Scholar] [CrossRef]

- Chang, R.; Bai, L.; Hsu, C.-H. Solar power generation prediction based on deep learning. Sustainable Energy Technologies and Assessments 2021, 47, 101354. [CrossRef]

- Wang, F.; Li, J.; Zhen, Z.; Wang, C.; Ren, H.; Ma, H.; Zhang, W.; Huang, L. Cloud Feature Extraction and Fluctuation Pattern Recognition Based Ultrashort-Term Regional PV Power Forecasting. IEEE Trans. Ind. Appl. 2022, 58, 6752–6767. [Google Scholar] [CrossRef]

- Zhang, X.; Huo, K.; Liu, Y.; Li, X. Direction of arrival estimation via joint sparse bayesian learning for bi-static passive radar. IEEE Access 2019, 7, 72979–72993. [Google Scholar] [CrossRef]

- Suresh, V.; Aksan, F.; Janik, P.; Sikorski, T.; Revathi, B.S. Probabilistic LSTM-Autoencoder Based Hour-Ahead Solar Power Forecasting Model for Intra-Day Electricity Market Participation: A Polish Case Study. IEEE Access 2022, 10, 110628–110638. [Google Scholar] [CrossRef]

- Fu, Y.; Chai, H.; Zhen, Z.; Wang, F.; Xu, X.; Li, K.; Shafie-Khah, M.; Dehghanian, P.; Catalao, J.P.S. Sky Image Prediction Model Based on Convolutional Auto-Encoder for Minutely Solar PV Power Forecasting. IEEE Trans. Ind. Appl. 2021, 57, 3272–3281. [Google Scholar] [CrossRef]

- Hossain, M.S.; Mahmood, H. Short-Term Photovoltaic Power Forecasting Using an LSTM Neural Network and Synthetic Weather Forecast. IEEE Access 2020, 8, 172524–172533. [Google Scholar] [CrossRef]

- Li, Q.; Xu, Y.; Chew, B.S.H.; Ding, H.; Zhao, G. An Integrated Missing-Data Tolerant Model for Probabilistic PV Power Generation Forecasting. IEEE Trans. Power Syst. 2022, 37, 4447–4459. [Google Scholar] [CrossRef]

- Prado-Rujas, I.-I.; Garcia-Dopico, A.; Serrano, E.; Perez, M.S. A Flexible and Robust Deep Learning-Based System for Solar Irradiance Forecasting. IEEE Access 2021, 9, 12348–12361. [Google Scholar] [CrossRef]

- Li, G.; Xie, S.; Wang, B.; Xin, J.; Li, Y.; Du, S. Photovoltaic Power Forecasting With a Hybrid Deep Learning Approach. IEEE Access 2020, 8, 175871–175880. [Google Scholar] [CrossRef]

- Cheng, L.; Zang, H.; Wei, Z.; Ding, T.; Sun, G. Solar Power Prediction Based on Satellite Measurements – A Graphical Learning Method for Tracking Cloud Motion. IEEE Trans. Power Syst. 2021, 37, 2335–2345. [Google Scholar] [CrossRef]

- Obiora, C.N.; Hasan, A.N.; Ali, A.; Alajarmeh, N. Forecasting Hourly Solar Radiation Using Artificial Intelligence Techniques. IEEE Can. J. Electr. Comput. Eng. 2021, 44, 497–508. [Google Scholar] [CrossRef]

- Elsaraiti, M.; Merabet, A. Solar Power Forecasting Using Deep Learning Techniques. IEEE Access 2022, 10, 31692–31698. [Google Scholar] [CrossRef]

- Ahmed, R.; Sreeram, V.; Togneri, R.; Datta, A.; Arif, M.D. Computationally expedient Photovoltaic power Forecasting: A LSTM ensemble method augmented with adaptive weighting and data segmentation technique. Energy Convers. Manag. 2022, 258, 115563. [Google Scholar] [CrossRef]

- Talaat, M.; Said, T.; Essa, M.A.; Hatata, A. Integrated MFFNN-MVO approach for PV solar power forecasting considering thermal effects and environmental conditions. Int. J. Electr. Power Energy Syst. 2021, 135, 107570. [Google Scholar] [CrossRef]

- Jebli, I.; Belouadha, F.-Z.; Kabbaj, M.I.; Tilioua, A. Prediction of solar energy guided by pearson correlation using machine learning. Energy 2021, 224, 120109. [Google Scholar] [CrossRef]

- Ma, Y.; Zhang, X.; Zhen, Z.; Mei, S. Ultra-short-term Photovoltaic Power Prediction Method Based on Modified Clear-sky Model. Automation of Electric Power Systems 2021, 45, 44–51.

- Goliatt, L.; Yaseen, Z.M. Development of a hybrid computational intelligent model for daily global solar radiation prediction. Expert Syst. Appl. 2023, 212. [Google Scholar] [CrossRef]

- Ghimire, S.; Deo, R.C.; Casillas-Pérez, D.; Salcedo-Sanz, S.; Sharma, E.; Ali, M. Deep learning CNN-LSTM-MLP hybrid fusion model for feature optimizations and daily solar radiation prediction. Measurement 2022, 202. [Google Scholar] [CrossRef]

- Pi, M.; Jin, N.; Chen, D.; Lou, B. Short-Term Solar Irradiance Prediction Based on Multichannel LSTM Neural Networks Using Edge-Based IoT System. Wirel. Commun. Mob. Comput. 2022, 2022, 1–11. [Google Scholar] [CrossRef]

- Rangel-Heras, E.; Angeles-Camacho, C.; Cadenas-Calderón, E.; Campos-Amezcua, R. Short-Term Forecasting of Energy Production for a Photovoltaic System Using a NARX-CVM Hybrid Model. Energies 2022, 15, 2842. [Google Scholar] [CrossRef]

- Meng, F.; Zou, Q.; Zhang, Z.; Wang, B.; Ma, H.; Abdullah, H.M.; Almalaq, A.; Mohamed, M.A. An intelligent hybrid wavelet-adversarial deep model for accurate prediction of solar power generation. Energy Rep. 2021, 7, 2155–2164. [Google Scholar] [CrossRef]

- Wang, L.; Mao, M.; Xie, J.; Liao, Z.; Zhang, H.; Li, H. Accurate solar PV power prediction interval method based on frequency-domain decomposition and LSTM model. Energy 2023, 262. [Google Scholar] [CrossRef]

- Zhang, Y.; Hu, T. Ensemble Interval Prediction for Solar Photovoltaic Power Generation. Energies 2022, 15, 7193. [Google Scholar] [CrossRef]

- Du, J.; Zheng, J.; Liang, Y.; Liao, Q.; Wang, B.; Sun, X.; Zhang, H.; Azaza, M.; Yan, J. A theory-guided deep-learning method for predicting power generation of multi-region photovoltaic plants. Eng. Appl. Artif. Intell. 2023, 118. [Google Scholar] [CrossRef]

- Ghasvarian Jahromi, K.; Gharavian, D.; Mahdiani, H. A novel method for day-ahead solar power prediction based on hidden Markov model and cosine similarity. Soft Computing 2020, 24, 4991–5004. [CrossRef]

- Sangrody, H.; Zhou, N.; Zhang, Z. Similarity-Based Models for Day-Ahead Solar PV Generation Forecasting. IEEE Access 2020, 8, 104469–104478. [Google Scholar] [CrossRef]

- Suksamosorn, S.; Hoonchareon, N.; Songsiri, J. Post-Processing of NWP Forecasts Using Kalman Filtering With Operational Constraints for Day-Ahead Solar Power Forecasting in Thailand. IEEE Access 2021, 9, 105409–105423. [Google Scholar] [CrossRef]

- Mutavhatsindi, T.; Sigauke, C.; Mbuvha, R. Forecasting Hourly Global Horizontal Solar Irradiance in South Africa Using Machine Learning Models. IEEE Access 2020, 8, 198872–198885. [Google Scholar] [CrossRef]

- Maciel, J.N.; Ledesma, J.J.G.; Junior, O.H.A. Forecasting Solar Power Output Generation: A Systematic Review with the Proknow-C. IEEE Lat. Am. Trans. 2021, 19, 612–624. [Google Scholar] [CrossRef]

- Rajagukguk, R.A.; Ramadhan, R.A.; Lee, H.-J. A Review on Deep Learning Models for Forecasting Time Series Data of Solar Irradiance and Photovoltaic Power. Energies 2020, 13, 6623. [Google Scholar] [CrossRef]

- Gupta, P.; Singh, R. PV Power Forecasting Based On Data Driven Models: A Review. International Journal of Sustainable Engineering 2021, 14, 1733–1755. [CrossRef]

- Wu, Y.-K.; Huang, C.-L.; Phan, Q.-T.; Li, Y.-Y. Completed Review of Various Solar Power Forecasting Techniques Considering Different Viewpoints. Energies 2022, 15, 3320. [Google Scholar] [CrossRef]

- Cesar, L.B.; e Silva, R.A.; Callejo, M. .M.; Cira, C.-I. Review on Spatio-Temporal Solar Forecasting Methods Driven by In Situ Measurements or Their Combination with Satellite and Numerical Weather Prediction (NWP) Estimates. Energies 2022, 15, 4341. [Google Scholar] [CrossRef]

- Sudharshan, K.; Naveen, C.; Vishnuram, P.; Kasagani, D.V.S.K.R.; Nastasi, B. Systematic Review on Impact of Different Irradiance Forecasting Techniques for Solar Energy Prediction. Energies 2022, 15, 6267. [Google Scholar] [CrossRef]

- Radzi, P.N.L.M.; Akhter, M.N.; Mekhilef, S.; Shah, N.M. Review on the Application of Photovoltaic Forecasting Using Machine Learning for Very Short- to Long-Term Forecasting. Sustainability 2023, 15, 2942. [Google Scholar] [CrossRef]

- Tu, C.-S.; Tsai, W.-C.; Hong, C.-M.; Lin, W.-M. Short-Term Solar Power Forecasting via General Regression Neural Network with Grey Wolf Optimization. Energies 2022, 15, 6624. [Google Scholar] [CrossRef]

- Lotfi, M.; Javadi, M.; Osório, G.J.; Monteiro, C.; Catalão, J.P.S. A Novel Ensemble Algorithm for Solar Power Forecasting Based on Kernel Density Estimation. Energies 2020, 13, 216. [Google Scholar] [CrossRef]

- Prasad, A.A.; Kay, M. Assessment of Simulated Solar Irradiance on Days of High Intermittency Using WRF-Solar. Energies 2020, 13, 385. [Google Scholar] [CrossRef]

- Alkahtani, H.; Aldhyani, T.H.H.; Alsubari, S.N. Application of Artificial Intelligence Model Solar Radiation Prediction for Renewable Energy Systems. Sustainability 2023, 15, 6973. [Google Scholar] [CrossRef]

- Benti, N.E.; Chaka, M.D.; Semie, A.G. Forecasting Renewable Energy Generation with Machine Learning and Deep Learning: Current Advances and Future Prospects. Sustainability 2023, 15, 7087. [Google Scholar] [CrossRef]

- López-Cuesta, M.; Aler-Mur, R.; Galván-León, I.M.; Rodríguez-Benítez, F.J.; Pozo-Vázquez, A.D. Improving Solar Radiation Nowcasts by Blending Data-Driven, Satellite-Images-Based and All-Sky-Imagers-Based Models Using Machine Learning Techniques. Remote Sens. 2023, 15, 2328. [Google Scholar] [CrossRef]

- Wei, Y.; Zhang, H.; Dai, J.; Zhu, R.; Qiu, L.; Dong, Y.; Fang, S. Deep Belief Network with Swarm Spider Optimization Method for Renewable Energy Power Forecasting. Processes 2023, 11, 1001. [Google Scholar] [CrossRef]

- Moreno, G.; Santos, C.; Martín, P.; Rodríguez, F.J.; Peña, R.; Vuksanovic, B. Intra-Day Solar Power Forecasting Strategy for Managing Virtual Power Plants. Sensors 2021, 21, 5648. [Google Scholar] [CrossRef] [PubMed]

- Dhimish, M.; Lazaridis, P.I. Approximating Shading Ratio Using the Total-Sky Imaging System: An Application for Photovoltaic Systems. Energies 2022, 15, 8201. [Google Scholar] [CrossRef]

- Alzahrani, A. Short-Term Solar Irradiance Prediction Based on Adaptive Extreme Learning Machine and Weather Data. Sensors 2022, 22, 8218. [Google Scholar] [CrossRef] [PubMed]

- Crisosto, C.; Hofmann, M.; Mubarak, R.; Seckmeyer, G. One-Hour Prediction of the Global Solar Irradiance from All-Sky Images Using Artificial Neural Networks. Energies 2018, 11, 2906. [Google Scholar] [CrossRef]

- Wang, Z.; Wang, L.; Huang, C.; Luo, X. A Hybrid Ensemble Learning Model for Short-Term Solar Irradiance Forecasting Using Historical Observations and Sky Images. IEEE Trans. Ind. Appl. 2023, 59, 2041–2049.

- El Alani, O.; Abraim, M.; Ghennioui, H.; Ghennioui, A.; Ikenbi, I.; Dahr, F.-E. Short term solar irradiance forecasting using sky images based on a hybrid CNN–MLP model. Energy Rep. 2021, 7, 888–900. [Google Scholar] [CrossRef]

- da Rocha, B.; Fernandes, E.d.M.; dos Santos, C.A.C.; Diniz, J.M.T.; Junior, W.F.A. Development of a Real-Time Surface Solar Radiation Measurement System Based on the Internet of Things (IoT). Sensors 2021, 21, 3836. [Google Scholar] [CrossRef]

- Zhou, H.; Liu, Q.; Yan, K.; Du, Y. Deep Learning Enhanced Solar Energy Forecasting with AI-Driven IoT. Wirel. Commun. Mob. Comput. 2021, 2021, 1–11. [Google Scholar] [CrossRef]

- Pi, M.; Jin, N.; Chen, D.; Lou, B. Short-Term Solar Irradiance Prediction Based on Multichannel LSTM Neural Networks Using Edge-Based IoT System. Wireless Communications and Mobile Computing 2022, 2022. [Google Scholar] [CrossRef]

- Dissawa, L.H.; Godaliyadda, R.I.; Ekanayake, P.B.; Agalgaonkar, A.P.; Robinson, D.; Ekanayake, J.B.; Perera, S. Sky Image-Based Localized, Short-Term Solar Irradiance Forecasting for Multiple PV Sites via Cloud Motion Tracking. Int. J. Photoenergy 2021, 2021. [Google Scholar] [CrossRef]

- Song, S.; Yang, Z.; Goh, H.; Huang, Q.; Li, G. A novel sky image-based solar irradiance nowcasting model with convolutional block attention mechanism. Energy Reports 2022, 8, 125–132. [Google Scholar] [CrossRef]

- Kong, W.; Jia, Y.; Dong, Z.Y.; Meng, K.; Chai, S. Hybrid approaches based on deep whole-sky-image learning to photovoltaic generation forecasting. Appl. Energy 2020, 280, 115875. [Google Scholar] [CrossRef]

| Ref | Method | Model type | Parameter Used | Accuracy |

|---|---|---|---|---|

| [4] | AI or Neural networks (NNs) | Principal component analysis (PCA), artificial neural networks (ANN) with the outputs using Mixture DOE (MDOE) | Instantaneous temperature (°C), instantaneous humidity (%), instantaneous precipitation (mm), instantaneous pressure (hPa), wind speed (m/s), wind direction (°), wind gust (m/s), and radiation (kJ/m2) | MAPE=10.45%, SD=7.34 for summer; MAPE=9.29%, SD=7.23 for autumn; MAPE=9.11%, SD=5.55 for winter; MAPE=6.75%, SD=6.47 for spring |

| [5] | AI or Neural networks (NNs) | Artificial neural networks (ANN) | Relative humidity, solar radiation, temperature, wind speed | RMSE=86.466, MAE=8.409 |

| [6] | AI or Neural networks (NNs) | Recurrent neural network (RNN) | Temperature, humidity, wind speed | MRE=3.87%, MAE=7.75 kW, nRMSE=5.69% |

| [7] | AI or Neural networks (NNs) | Artificial neural network (ANN) | National Renewable Energy Laboratory irradiance, temperature, wind speed, wind pressure | MAPE=1.8%, MSE=3.19×10e−10 |

| [8] | AI or Neural networks (NNs) | Feedforward backpropagation neural network (FFBPNN) method | Daily average temperature, daily average humidity, daily average wind speed, daily total sunshine duration, and daily average Global solar irradiation (GSI) | MAPE=7.066%, nMAE=3.629%, nRMSE= 4.673%, and MAE =5.256% |

| [9] | AI or Neural networks (NNs) | BP neural network | Cloud-based images, historical data of solar radiation | MAE=46.1 W, MAPE=7.8% |

| [10] | AI or Neural networks (NNs) | Artificial neural network (ANN) | Radiation, temperature, wind speed, and humidity | Classification accuracy=97.53% |

| [11] | AI or Neural networks (NNs) | Neural Network Prediction Model | Temperature, wind speed, wind direction, humidity, total cloud amount, insolation | MAPE=12.94% |

| [12] | AI or Neural networks (NNs) | Artificial Neural Network | Relative humidity, solar radiation, temperature and wind speed | RMSE=86.466(W) MAE=8.409 |

| [13] | AI or Neural networks (NNs) | Similar day-based and ANN-based approaches | Extra-terrestrial radiation, cloud cover factor, temperature | MAPE=21.37%, nRMSE=30.99% |

| [14] | AI or Neural networks (NNs) | AI methods based on the Portfolio Theory (PT) | Solar irradiance, air temperature | MAPE=4.52% |

| [15] | Machine learning or optimization algorithms | RNN-LSTM Model | Solar radiation, module temperature, and ambient temperature | RNN-LSTM (p-si) RMSE=26.85 RNN-LSTM (m-si) RMSE=19.78 R2=0.9943 |

| [16] | Machine learning or optimization algorithms | Gradient boosting decision tree (GBDT) | Temperature (°C), atmospheric pressure (kPa), relative humidity (%), wind speed (m/s), total solar radiation (0.01 MJ/m2) | MAE=6.02 MWh, MAPE=3.30%, RMSE=6.73 MWh |

| [17] | Machine learning or optimization algorithms | Adaptive extreme learning machine model | Global horizontal irradiance (GHI), temperature, relative humidity | MAE=0.2444, MSE=0.1727, RMSE=0.3012 |

| [18] | Machine learning or optimization algorithms | Transparent Open Box (TOB) machine-learning method | Solar radiation, wind velocity and air pressure | RMSE = 1175 MW and R2 = 0.9804; RMSE = 1632 MW and R2 = 0.9609 |

| [19] | Machine learning or optimization algorithms | Clouds and Sun Detection Algorithm | Image acquisition, image processing | Sun coverage between 5 and 6 s. Standard error level in range of 10–20%. |

| [20] | Machine learning or optimization algorithms | Adaptive boosting Learning Model | Solar power (MW), solar irradiance (W/m2) and model temperature (K) | RMSE=25.77, MAE=30.28 |

| [21] | Machine learning or optimization algorithms | Extreme learning machine with a forgetting mechanism (FOS-ELM) | PV Data, Weather data, Noise variance | nRMSE=0.952, MAPE=1.549 |

| [22] | Machine learning or optimization algorithms | Regression-Based Ensemble Method | Irradiance, temperature, precipitation, humidity, wind speed | MRE=4.362%, MAE=87.242 kW, and R2=0.933 |

| [23] | Machine learning or optimization algorithms | Machine learning (ML)-based | Ambient temperature, relative humidity, wind speed, wind direction, solar irradiation, and precipitation | MSE=0.15 |

| [24] | Machine learning or optimization algorithms | Spatio-temporal autoregressive model (STVAR) | Global horizontal irradiance (GHI) | rMAE (%)=13.13, rMBE (%)=-2.99, rRMSE (%)=21.8 |

| [25] | Machine learning or optimization algorithms | Support vector machine (SVM) and Gaussian process regression (GPR) models | Solar PV panel temperature, ambient temperature, solar flux, time of the day, and relative humidity | RMSE=7.967, MAE=5.302, and R2=0.98 |

| [26] | Machine learning or optimization algorithms | Multi-kernel random vector functional link neural network (MK-RVFLN) | Historical solar power data | MAPE (%)=2.29, RMSE (MW)=0.738, MAE (MW)=0.343 |

| [27] | Machine learning or optimization algorithms | An adaptive k-means and GRU machine learning model | Temperature, dew time, humidity, wind speed, wind direction, azimuth angle, visibility, pressure, wind-chill index, calorific value, precipitation, weather type | RMSE=8.15, MAPE=0.04% |

| [28] | Machine learning or optimization algorithms | Random forest regression | More than 20 items, including global horizontal irradiation, relative humidity, ambient air temperature, cloud cover, and electricity generation | R2=0.94, MAE=5.12 kWh, RMSE=34.59 kWh |

| [29] | Machine learning or optimization algorithms | Support Vector Regression-Based Model | Power, hourly Standard Solar Irradiance (SSI), Online Weather Condition (OWC), Cloud Cover (CC) | nRMSE=2.841%, MAPE=10.776% |

| [30] | Machine learning or optimization algorithms | Hybrid-classification-regression forecasting engine | Forecasted/lagged values of weather parameters, lagged solar power values, and calendar data | MAE= 0.078 MAPE=14.1 MSE=0.014 |

| [31] | Machine learning or optimization algorithms | Frequency-Domain Decomposition and Convolutional neural network (CNN) | PV power data | MAPE=0.1778 RMSE= 1.1757 R2=0.9438 |

| [32] | Machine learning or optimization algorithms | Regions of interest (ROIs) | Precise cloud distribution information | nRMSE= 5.573 nMAE= 2.362 MASE= 0.644 |

| [33] | Machine learning or optimization algorithms | Adaptive learning neural networks | Solar irradiation, temperature, wind speed, and humidity | RMSE=143.7483 W/m2, MAE=67.2620 W/m2, MBE=4.5844 W/m2 |

| [34] | Machine learning or optimization algorithms | A novel multibranch attentive gated recurrent residual network (ResAttGRU) | Clear sky index, solar irradiance | RMSE=0.049 W/m2, MAE=0.031 W/m2, R2=0.99 |

| [35] | Machine learning or optimization algorithms | Bayesian model averaging (BMA) | Numerical weather prediction (NWP) | Skill score (SS) of at least 12% |

| [36] | Deep-Learning | The encoder–decoder LSTM network | Air temperature (°C), relative humidity (%), global irradiance on the horizontal plane (W/m2), beam/direct irradiance, diffuse irradiance on the horizontal plane, extraterrestrial irradiation | MAPE (%)=39.47% RMSE (W/m2)=99.22% MAE (W/m2)=67.69% nRMSE=0.27 |

| [37] | Deep-Learning | Deep-Learning-Based Adaptive Model | Temperature, dew point, wind speed, and cloud cover. | nRMSE=0.3058 |

| [38] | Deep-Learning | Multistep CNN-Stacked LSTM Model | Solar irradiance, plane of array (POA) irradiance | nRMSE = 0.11 RMSE = 0.36 |

| [39] | Deep-Learning | LSTM-dropout Model | (a) cloudy index (b) visibility (c) temperature (d) dew point (e) humidity (f) wind speed (g) atmospheric pressure (h) altimeter (i) solar output power. | RMSE = 0.01 MAE=0.0756 MAPE=0.05711 R2=0.90668 |

| [40] | Deep-Learning | SCNN–LSTM model | Direct normal irradiance (DNI), solar zenith angle, relative humidity, and air mass | nRMSE=23.47% Forecast skill= 24.51% |

| [41] | Deep-Learning | Artificial neural network (ANN) and Long Short-Term Memory (LSTM) network models | Air temperature, relative humidity, atmospheric pressure, wind speed, wind direction, maximum wind speed, precipitation (rain), month, hour, minute, Global Horizontal Irradiance (GHI) | MAPE = 19.5% |

| [42] | Deep-Learning | LSTM and ANFIS learning models | Direct and diffuse short-wave radiation, evapo-transpiration, vapor pressure deficit at 2 m, relative humidity, sunshine duration, and soil temperature | RMSE=0.04–0.8 MSE=0.0016–0.64 MAE =0.034–0.86 |

| [43] | Deep-Learning | Opaque deep-learning solar forecast models | Total column liquid water, Total column ice water, Surface pressure, Relative humidity, Total cloud cover, U&V wind component, temperature, Surface solar radiation downwards, Surface thermal radiation downwards, Top net solar radiation, Total precipitation. | MAE=0.050 ± 0.002 RMSE=0.098 ± 0.003 |

| [44] | Deep-Learning | VM-based forecast models | Solar radiation and temperature | Accuracy factor increase 27%. |

| [45] | Deep-Learning | A fluctuation pattern prediction (FPP)-LSTM model (FPR-LSTM) | Cloud distribution features and historical power data, used as inputs for ultrashort-term power prediction | RMSE=6.675% MAE=4.768% COR=0.9055 |

| [46] | Deep-Learning | Long Short-Term Memory (LSTM) network | PV inverter, energy meter, data logger, weather data acquisition | RMSE= 0.512 |

| [47] | Deep-Learning | Long Short-Term Memory (LSTM) network | Samples, time steps and features | RMSE= 15.59 kW MAE= 8.36 kW |

| [48] | Deep-Learning | Convolutional autoencoder (CAE) based sky image prediction models | Precise cloud distribution information | SSIM=1.012 MSE=0.712 |

| [49] | Deep-Learning | Long short-term memory (LSTM) neural network | Temperature, relative humidity, wind speed and precipitable water. The approximate numerical solar irradiance | RMSE= 0.71 MW MAE= 0.36 MW MAPE=22.31% |

| [50] | Deep-Learning | Recursive long short-term memory network (Rec-LSTM) | General weather information | nRMSE= 15.25% WMAPE=68.47% |

| [51] | Deep-Learning | Convolutional long short-term memory (Conv-LSTM) | Multi-point regional data consolidation; 17 sensors were laid on the island of Oahu (Hawaii), covering an area of roughly 1 km2, from March 2010 to October 2011. | RMSE never increases more than 15% |

| [52] | Deep-Learning | Convolutional neural network (CNN) and LSTM recurrent neural network | General weather information | RMSE= 2.095MW MAE= 1.028MW |

| [53] | Deep-Learning | Spatial-temporal graph neural network (GNN) | Precise cloud distribution information | RMSE= 6.945k MAE= 3.565k MAPE=1.286% |

| [54] | Deep-Learning | Time-series long short-term memory (LSTM) network, convolutional LSTM (ConvLSTM) | Historical hourly solar radiation | nRMSE= 4.05% |

| [55] | Deep-Learning | Long Short Term Memory (LSTM) | Mean solar radiation and air temperature for a region | RMSE= 317.4 MAE=236.35 MAPE=2.17 |

| [56] | Deep-Learning | Long Short-Term Memory (LSTM) | Weather temperature (°C), global horizontal radiation (W/m2), PV power history data | MAPE=6.02 |

| [57] | Deep-Learning | The multilayer feedforward neural network (MFFNN) with multiverse optimization (MVO) | Wind speed, solar irradiance, ambient temperature. | nRMSE=5.95E-03 MSE=2.16E-05 MAE=9.44E-05 R2=0.994045813 |

| [58] | Deep-Learning | Multilayer Perceptron (MLP) | Temperature, humidity, wind speed, wind direction, pressure, solar radiation, solar energy | MAE=0.03 (J/m2) MSE=0.006 (J/m2) RMSE=0.08 (J/m2) |

| [59] | Hybrid model forecasting | VMD-LSTM-RVM Model | power history data | MAPE%=5.12 RMSE(kW)=4.80 |

| [60] | Hybrid model forecasting | Covariance Matrix Adaptive Evolution Strategies (CMAES) with Extreme Gradient Boosting (XGB) and Multi-Adaptive Regression Splines (MARS) models | Wind velocity, maximum and minimum weather humidity, maximum and minimum weather temperature, vapor pressure deficit and evaporation | RMSE=4.9% |

| [61] | Hybrid model forecasting | CNN-LSTM-MLP hybrid fusion model | Temperature, rainfall, evaporation, vapour pressure, relative humidity | 𝑟 ≈ 0.930, RMSE ≈ 2.338 MJm−2day−1, MAE ≈ 1.69 MJm−2day−1 |

| [62] | Hybrid model forecasting | MC-WT-CBiLSTM depth model | Global level irradiance and temperature | MAE=18.13 RMSE=27.98 R2=0.99 SMAPE=10.97 MAPE=15.63 |

| [63] | Hybrid model forecasting | NARX-CVM Hybrid Model | Temperature, solar radiation, relative humidity, wind speed, and pressure | Forecasting skills =34% |

| [64] | Hybrid model forecasting | Hybrid wavelet-adversarial deep model | Global horizontal irradiance (GHI) | RMSE=0.0895, MAPE=0.0531 |

| [65] | Hybrid model forecasting | Hybrid LSTM-SVR-BO model. | PV power history data | RMSE(MW)=9.321, MAE(MW)=4.588, AbsDEV(%)=0.174 |

| [66] | Hybrid model forecasting | GBRT-Med-KDE Model | Wind speed, temperature (Celsius), and relative humidity. | MAE=0.05, RMSE=0.08, R2(%)=99.75, MAPE=0.055, SMAPE=0.028. |

| [67] | Hybrid model forecasting | Theory-guided and attention-based CNN-LSTM (TG-A-CNN-LSTM) | Neglect the meteorological data, such as temperature and wind speed. | RMSE=11.07 MAE = 4.98 R2 =0.94 |

| [68] | Other statistical analysis methods | Hidden Markov model (HMM) | Solar historical data | nMAE=2.84, nRMSE=6.05, MAPE=13.46 and Correlation coefficient= 0.975. |

| [69] | Other statistical analysis methods | Similarity-based forecasting models (SBFMs) | Temperature, humidity, dew point, and wind speed | RMSE= 15.3% MAE=826.2W MRE=10.8% |

| [70] | Other statistical analysis methods | Kalman filtering (KF) | Irradiance, temperature, relative humidity, and the solar zenith angle | RMSE= 156.42(39.88%) nRMSE= 12.71% |

| [71] | Other statistical analysis methods | Quantile regression averaging (QRA) | Temperature, wind speed, relative humidity, barometric pressure, wind direction standard deviation, rainfall | RMSE= 88.600 MAE= 52.034 |
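
The error metrics reported in the table above (MAE, RMSE, MAPE, nRMSE, forecast skill) are not normalized consistently across the reviewed works, so the values are not directly comparable. As a reading aid, the following is a minimal sketch of the commonly used definitions. The function names are illustrative, and two choices are assumptions rather than conventions taken from the reviewed papers: nRMSE is divided by a caller-supplied normalizer (mean, range, or installed capacity all appear in the literature), and forecast skill is computed as 1 − RMSE_model / RMSE_reference against a reference such as persistence.

```python
import math


def mae(actual, forecast):
    """Mean absolute error, in the units of the data."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual, forecast):
    """Root mean square error, in the units of the data."""
    mse = sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)
    return math.sqrt(mse)


def mape(actual, forecast):
    """Mean absolute percentage error (%).

    Samples with a zero actual value (e.g. night-time PV output) are
    skipped here; this is one possible convention, assumed for the sketch.
    """
    pairs = [(a, f) for a, f in zip(actual, forecast) if a != 0]
    return 100.0 * sum(abs((a - f) / a) for a, f in pairs) / len(pairs)


def nrmse(actual, forecast, normalizer):
    """RMSE divided by a caller-chosen normalizer, expressed in %."""
    return 100.0 * rmse(actual, forecast) / normalizer


def forecast_skill(rmse_model, rmse_reference):
    """Skill relative to a reference forecast (assumed: 1 - ratio of RMSEs)."""
    return 1.0 - rmse_model / rmse_reference


if __name__ == "__main__":
    # Made-up illustrative values, not data from any reviewed work.
    actual = [10.0, 20.0, 30.0, 40.0]
    forecast = [12.0, 18.0, 33.0, 36.0]
    mean_actual = sum(actual) / len(actual)
    print("MAE  :", mae(actual, forecast))
    print("RMSE :", rmse(actual, forecast))
    print("MAPE :", mape(actual, forecast))
    print("nRMSE:", nrmse(actual, forecast, mean_actual))
```

When comparing two rows of the table, it helps to check first whether both report the same metric with the same normalizer and the same units (for example, RMSE in MW versus RMSE in W/m2).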

| Work | Date of publication and Location | Main contribution | Advantages | Disadvantages |

|---|---|---|---|---|

| [4] | December, 2022 | The versatility of the proposed approach allows the choice of parameters in a systematic way and reduces the search space and the number of experimental simulations, saving computational resources and time without losing statistical reliability | -The approach separates the data into seasons of the year and considers multiple climatic variables for each period. -The dimensionality reduction of climate variables is performed through PCA. | Increasing dimensions of the input vector. |

| [5] | August, 2021 | To accommodate uncertain weather, a daily clustering method based on statistical features such as the daily average, maximum, and standard deviation of PV power is applied to the data sets. | -The forecasting results of ANN, DNN, SVR, LSTM, and CNN were combined by the RNN meta-learner to construct the ensemble model. -Higher stability | -Time-consuming; -Complex computation process; -Increasing dimensions of the input vector |

| [6] | March, 2020 | -Forecasting errors are relatively large in unusual weather conditions. -The forecasting precision can be improved by enlarging training samples, performing subdivision, and imposing manual intervention. | Reducing the risk of over-fitting by balancing decision trees | -Increasing dimensions of the input vector; -Adjusting the parameters of abnormal weather |

| [7] | October, 2021 | -The proposed model requires only the set of dates specifying forecasting period as the input for prediction purposes. -Being able to predict PV power for different time spans rather than only for a fixed period in the presence of the historical weather data. | -A simplified application of the already trained ANN is introduced -Photovoltaic (PV) output can be predicted without the real-time current weather data. | -Increasing dimensions of the input vector -Statistics of daily solar energy over the years |

| [8] | June, 2020 | It is more appropriate for meteorological data that belong to a specific region to obtain an accurate forecasting model and results. | Being a promising alternative for accurate power forecasting of the actual PV power plants. | The chaotic nature of meteorological parameters causes a decrease in ANN model forecast accuracy. |

| [9] | September, 2021 | 1. By calculating the displacement vector of the cloud on the ground-based cloud images, the moving trajectory of the cloud can be estimated, so as to accurately predict the occurrence of sun occlusion. 2. A new ultra-short-term solar radiation prediction model is designed, and especially suitable for the prediction of sudden change of solar radiation near the surface in cloudy weather conditions. Its time scale is 5 min. | -Combined with the features of ground-based cloud images, the prediction accuracy is greatly improved. -By the digital image processing, 13 features that affect the solar radiation near the surface are extracted from the ground-based cloud images. | In cloudy weather conditions, the ultra-short-term forecasting is very difficult because there are no rules for clouds to block the sun. |

| [10] | March, 2020 | In power plant determination studies, which regions are more appropriate can be determined by using the proposed model. | Extra costs for installation and measurement can be eliminated. | Long mathematical processes |

| [11] | November, 2022 | The amount of input and calculation of the neural network model is reduced to streamline the hidden layer stage, establishing a power generation prediction model capable of fast and accurate prediction. | To create a predictive model including non-linearly related variables. | Improvements in the prediction accuracy of performance. |

| [12] | July, 2021 | Supervised learning algorithms are employed to predict the power generated from meteorological variables since renewable power generation systems present a wide variation due to meteorological conditions. | The actual electricity generation values in the PV installation were compared with the predictions made by different methods (ANN, KNN, LR and SVM). | This database size limits the prediction horizon of the models. |

| [13] | November, 2020 | The similar hour-based approach and the hybrid method have demonstrated better performance than widely employed forecasting techniques | The outputs of both forecasting methods are dynamically weighted, according to the type of the day (sunny, cloudy and overcast) and the MAE. | Increasing dimensions of the input vector |

| [14] | January, 2020 | PT takes advantage of diversified forecast assets: when one of the assets shows prediction errors, these are offset by another asset. | Integration of AI methods in a new adaptive topology based on the Portfolio Theory (PT) is proposed hereby to improve solar forecasts. | -Increasing dimensions of the input vector -Multi-method evaluation |

| [15] | March, 2022 | -A deep learning algorithm (RNN-LSTM) is proposed for hour-ahead forecasting of output PV power for three independent PV plants on yearly basis for a four-year period. -Annual hour-ahead forecasting of PV output power using SVR, GPR and ANN -Different LSTM structures are also investigated with RNN to determine the most feasible structure | -ANFIS is performed to compare with the proposed technique (RNN-LSTM). -Better forecasting accuracy and performance | It is difficult to adjust the LSTM parameters, and determine whether it converges. |

| [16] | October, 2021 | Predicting PV power for different time spans in the presence of the historical weather data. | The proposed model requires only the set of dates specifying forecasting period and multiple inputs. | The modeling would take a longer time due to large amount of historical data. |

| [17] | October, 2022 | The extreme learning machine method uses approximated sigmoid and hyper-tangent functions to ensure faster computational time and more straightforward microcontroller implementation. | Feed Forward Neural Network based PSO completes the search when the optimal weight is calculated. | Using PSO to select the parameters of adaptive extreme learning machine will make the computation time longer. |

| [18] | June, 2020 | Offering potential to assist system operators and regulators in better planning solar and wind power contributions to power supply networks. | Using a longer time period of prior data could lead to further improvements for TOB’s short-term forecasting accuracy. | More work is required on larger datasets (i.e., for multiple years) to confirm that. |

| [19] | June, 2021 | Decreasing the predicted amount of generated energy to prevent overly optimistic predictions from affecting the stability of a virtual power plant. | Improving accuracy and resolution of irradiance prediction for the next hour interval. | It is necessary to obtain a curve of the percentage of the sun not covered by clouds for the next hour. |

| [20] | November, 2021 | Bringing originality to predicting solar power generation 10 days ahead. | The models are trained with an appropriate ratio of training and testing data to obtain the best forecast accuracy with minimal error. | An individual model would not be enough to achieve high accuracy. |

| [21] | November, 2021 | Assisting the energy dispatching unit list producing strategies while also providing temporal and spatial compensation and integrated power regulation, which are crucial for the stability and security of energy systems and also their continuous optimization. | The FOS-ELM approach may expand accuracy while also reducing the training time. | The level of uncertainty in PV generation is strongly related to the chaotic nature of weather schemes. |

| [22] | June, 2022 | -Utilizing a new PV forecasting structure that incorporates K-means clustering, RF models, and the regression-based method with LASSO and Ridge regularization to increase forecasting accuracy. -Determining the five optimal sets of weight coefficients and which model predictors are significant. | The proposed ensemble forecasting strategies are much more accurate than single forecasting models. | Increasing the accuracy by recalculating the weight of the individual prediction model for each new input sample. |

| [23] | October, 2021 | Seven well-known machine learning algorithms were successfully applied to solar PV system data from Abha to predict the generated power. | The prediction error of the algorithms was relatively low. | RF was the worst in terms of MSE. |

| [24] | November, 2022 | STVAR model showed a good predictive performance for a time scale from 5 min to 1 h. | The design and component sizing of PV power plants. | The strength and limits of the irradiance forecasting model (STVAR model) are consequently imposed on the final PV forecasting results. |

| [25] | November, 2021 | Machine learning (ML) models can be utilized as rapid tool for predicting the suitable performance of the power of any solar PV panel. | Approving the high reliability and accuracy of Matern 5/2 GPR model. | -Squared exponential GPR exhibited poor performance due to the complex relationship between the dielectric permittivity and the input parameters. -Cubic SVM exhibited poor performance due to the complex relationship between the input parameters and the PV panel power |

| [26] | June, 2019 | -The randomness of the RVFLN technique is mitigated for fast learning and accurate forecasting by implementing the kernel matrix -Different kernel functions are investigated to achieve better prediction accuracy and two best kernel functions are combined together to attain more actual solar power prediction -The randomly selected kernel parameters are optimally tuned by an efficient optimization technique, thereby imparting a more accurate shorter time interval solar power prediction. | Reducing computational time and complexity of the model. | MK-RVFLN is the choice of parameters which affects the accuracy of the prediction technique. |

| [27] | September, 2022 | The adaptive k-means is used to cluster the initial training set and the power on the forecast day. | GRU network has better effect, better robustness, and less error. | Increasing dimensions of the input vector |

| [28] | May, 2022 | Horizontal global irradiation and water saturation deficit have a strong proportional relationship. | Development of seven machine learning models for the prediction of PV power generation. | Increasing dimensions of the input vector |

| [29] | February, 2022 | PSO-based algorithm is adapted for the selection of dominant SVR-based model parameters and improvement of performance. | Reaching better performance of the forecast algorithm. | Using algorithm bar parameters will lead to longer operation time. |

| [30] | April, 2022 | A new solar power prediction method, composed of a feature selecting/clustering approach and a hybrid classification-regression forecasting engine | -The forecasting computation is faster by using two subsets. Each of these two subsets is separately trained by a forecasting engine. -The final solar power prediction is obtained by a relevancy-based combination of these two forecasts. | -Increasing dimensions of the input vector -The internal parameters of the subset need to be well selected. |

| [31] | August, 2021 | Raw data is subtracted from the correlation between the decomposition components and raw data to obtain the optimal frequency demarcation points for decomposition components. | A CNN is used to forecast the low-frequency and high-frequency components, and the final forecasting result is obtained by addition reconstruction. | Use of FFT for data preprocessing is less applicable than the general data pre-processing method |

| [32] | January, 2022 | An end-to-end short-term forecasting model is proposed to take satellite images as inputs, learning the cloud motion characteristics from stacked optical flow maps. | -Better performance of the forecast algorithm. -Sky image technology with cloud motion | -The extremely large sizes of satellite images can lead to a heavy computational burden. -Complex computation process. |

| [33] | November, 2020 | Unlike existing adaptive iterative methods, the proposed approach does not rely on the labels of the test data in the updating process. | As weather changes, the model can dynamically adjust its structure to adapt to the latest weather conditions. | -Complex computation process; -Parameter adjustment required |

| [34] | February, 2022 | The proposed multibranch ResAttGRU is capable of modeling data at various resolutions, extracting hierarchical features, and capturing short- and long-term dependencies. | Accelerating the learning process and reducing overfitting by leveraging shared representations as the auxiliary information. | -Complex computation process; -Parameter adjustment required |

| [35] | January, 2021 | -BMA’s mixture-model approach mitigates underdispersion of the raw ensemble to significantly improve forecast calibration. -Consistently outperforming an ensemble model output statistics (EMOS) parametric approach from the literature. | -BMA is a kernel dressing technique for NWP ensembles -A weighted sum of member-specific probability density functions. | -Increasing dimensions of the input vector |

| [36] | June, 2022 | -A series of experiments applying advanced deep-learning-based forecasting techniques were conducted, achieving high statistical accuracy forecasts. -Solar irradiation data were categorized by each month during the year, resulting in a monthly time-series dataset, which is more significant for high-performance forecasting. -A walk-forward validation forecast strategy in combination with a recursive multistep and a multiple-output forecast strategy was implemented | -Use of fixed-sized internal representation in the core of the model -Significantly improving short-term solar irradiation forecasts. | More LSTM parameter settings need to be adjusted |

| [37] | May, 2022 | -The proposed model showed promising forecasting performance compared to benchmark models such as convolutional neural network (CNN)-LSTM and nonclustering-based site-specific LSTMs. -The model achieved less forecasting error for solar stations having significant solar variability. | -The performance of CB-LSTM was robust under differing conditions. -CB-LSTM achieved better forecasting performance than that of M-LSTM and ST-LSTM for all climatic zones and regions. | -High error in terms of NRMSE in Idukki station. |

| [38] | March, 2022 | The proposed stacked architecture and the incorporation of drop-out layers are helpful for accuracy improvement in the PV prediction model. | The multi-step CNN-stacked LSTM with drop-out deep learning method for improved effectiveness as compared to other traditional solar irradiance forecasting. | -Complex structure and hardware requirement. |

| [39] | July, 2020 | Validating the performance of the proposed approach through a detailed comparative analysis with several other contemporary ML approaches such as linear regression (LR), ridge regression (RR), least absolute shrinkage and selection operator (LASSO) and elastic net (ENET) methods | -Outperforming with respect to all selected performance criteria. -Effectively confirming the likelihood and practicality of the proposed model. | Failure to achieve the accuracy of the proposed WT-LSTM-dropout model. |

| [40] | December, 2021 | -A Siamese CNN was developed to automatically extract the features of continuous total sky images, where the Siamese structure reduced the model training time by sharing part parameters of the model; -SCNN-LSTM was used to effectively fuse the time-series features of images and meteorological data to improve the DNI prediction accuracy. | The prediction accuracy was improved by comparing to other models. | It is required to improve the prediction accuracy, especially under partly cloudy or cloudy days. |

| [41] | March, 2022 | -Optimizing the prediction performance of the ANN and LSTM models to improve the accuracy rates of these models. | The ANN and LSTM models using the reduced Input Set demonstrated the same prediction accuracy as the seven exogenous variables in the complete Input Set. | -It requires a larger amount of training data -Higher computational cost and training time for the models. |

| [42] | March, 2022 | -The parameters of solar radiation, direct short-wave radiation, diffuse short-wave radiation, and temperature always have a very high degree of influence on solar radiation forecasting. -Evapotranspiration, sunshine duration and humidity showed a remarkable influence in west-central Jordan -Other parameters like cloud cover, snowfall amount, wind speed, and total precipitation amount have no influence in Jordan on the solar radiation prediction. | -Abilities of adaptation -Nonlinearity -Rapid learning | Too many (twenty-four) solar radiation meteorological parameters (inputs to the ML or DL algorithms). |

| [43] | August, 2022 | -These simple improvements can ensure higher accuracy and stability of opaque models. -The LSTM-AE model is proposed as the benchmark of deep-learning solar forecast. -Comprehensive evaluation studies are conducted to evaluate different forecast performances. | The proposed deep-learning AE model is recommended as an efficient method for day-ahead NWP-based PV power forecast due to the highest accuracy. | The day-ahead forecast accuracy will decrease sharply for each model without NWP and its improvement using deep-learning is quite limited. |

| [44] | June, 2021 | -The proposed TESDL short-term prediction algorithm has excellent capacity and robustness for generalization -Achieving an outstanding predictive efficiency. | The costs for control, initial hardware part costs, and extended-lasting maintenance of potential PV farms could be minimized by the proposed TESDL algorithm. | Mismatch losses pose a significant problem since the performance in the worst circumstances of the entire PV array is calculated by the lowest powered solar panel. |

| [45] | September, 2022 | FPP model based on convolutional neural network is used to predict future PV power fluctuation patterns with historical satellite images as input. | Reaching better performance of the forecast algorithm. | -Complex computation process; -Use of cloud computing |

| [46] | January, 2021 | The network forecasting results can successfully approximate the expected outputs and the intra-hour ramping is well captured. | Reaching better performance of the forecast algorithm. | It is difficult to adjust the LSTM parameters and determine whether it converges. |

| [47] | October, 2022 | It was observed that the LSTM-Autoencoder model was the best performing one in terms of reliability for the investigated models. | -Data normalization -Reaching better performance of the forecast algorithm. | It is difficult to adjust the LSTM parameters, and determine if it converges. |

| [48] | August, 2021 | Precise cloud distribution information is mainly achieved by ground-based total sky image. | -Particle image velocimetry and Fourier phase correlation theory are introduced to build the benchmark models. -Sky image technology | -The features of 3-D CAE models could not be found well. -Increasing dimensions of the input vector |

| [49] | October, 2020 | This highlights the significance of the proposed synthetic forecast, and promotes a more efficient utilization of the publicly available type of sky forecast to achieve a more reliable PV generation prediction. | The performance of the proposed model is investigated using different intraday horizon lengths in different seasons. | -Complex computation process |

| [50] | November, 2022 | Proposing an integrated missing-data tolerant model for probabilistic PV power generation forecasting. | -Dealing with data missing scenarios at both offline and online stages. -Data tolerance | -Increasing dimensions of the input vector -Computing time is too long |

| [51] | January, 2021 | Several Artificial Neural Networks are trained as a basis for predicting solar irradiance on several locations at the same time. | A family of deep learning models for solar irradiance forecasting comply with the aforementioned features, i.e. flexibility and robustness. | -Increasing dimensions of the input vector |

| [52] | September, 2020 | The CNN model is leveraged to discover the nonlinear features and invariant structures in the previous output power data, thereby facilitating the prediction of PV power. | -CNN was used to preprocess the data -Reaching better performance of the forecast algorithm. | -Increasing dimensions of the input vector -Computing time is too long |

| [53] | May, 2022 | By simulating the cloud motion using bi-directional extrapolation, a directed graph is generated representing the pixel values from multiple frames of historical images. | GNN is more flexible for varying sizes of input in order to be able to handle dynamic ROIs. | Increasing dimensions of the input vector |

| [54] | December, 2021 | The performance of forecasting models depends largely on the quality of the training data, the size of data, the meteorological condition of the location where the data were obtained, and the duration or horizon of measured solar irradiance. | -Ten-year dataset is a great improvement in the accuracy of the solar irradiance forecast techniques. -It is considered the best result obtained in this work. | -Complex computation process; -Parameter adjustment required |

| [55] | March, 2022 | A deep learning technique based on the Long Short-Term Memory (LSTM) algorithm is evaluated with respect to its ability to forecast solar power data. | Focusing on research and development in multiple models to arrive at predictions with high suitability. | -Complex computation process; -Parameter adjustment required |

| [56] | March, 2022 | -The comparison is then used to minimize uncertainty by implementing grid search technique. -Comparing the effects of different data segmentations (three-months to one-day) | -Varying time-horizons (14-days to 5-mins) to compare the effects of seasonal and periodic variations on time-series data and PV output forecast. | It is difficult to adjust the LSTM parameters, and determine whether it converges. |

| [57] | August, 2021 | The numbers of neurons in the hidden layers, weights, and biases of the proposed ANNs were optimized with MVO and GA. | The multilayer feedforward neural network (MFFNN) was used to investigate its accuracy through the results obtained from MFFNN-MVO and the MFFNN-GA models. | -Increasing dimensions of the input vector |

| [58] | February, 2021 | -The relevance of the studied models was evaluated for real-time and short-term solar energy forecasting to ensure optimized management and security requirements by using an integral solution based on a single tool and an appropriate predictive model. | ANN has shown good performance for both real-time and short-term predictions. | -Increasing dimensions of the input vector |

| [59] | June, 2021 | The prediction model has higher prediction accuracy and relatively small overall fluctuations. | VMD decomposition technology is used to decompose the PV power sequence to reduce the complexity and non-stationarity of the raw data. | Prediction error and fluctuation are large. |

| [60] | August, 2022 | -It can serve as an alternative tool to provide reliable predictions. -Providing a promising method for predicting daily solar radiation as evidenced by the performance at the stations analyzed. | The interchangeability of optimization algorithms and machine learning models. | -Computational complexity |

| [61] | August, 2022 | -Development of a new hybrid DL model, which processes the input data with a sequential application of Slime Mould Algorithm (SMA) for feature selection, CNN, LSTM network, CNN and a final processing with a MLP -Overcoming the shortcomings mentioned above to obtain a more accurate GSR prediction. | A novel DL-based hybrid model that overcomes the above limitations and produces accurate GSR predictions. | -Incorporating different design of predictor data decomposition methods. -Complex computation process |

| [62] | January, 2022 | The various methods combined with the MC-WT-CBiLSTM model have the effect of improving the prediction ability. | -The wavelet transform preprocessing step effectively reduces the data complexity -Improving the prediction ability of the multichannel CNN-BiLSTM model. | -The generalization ability of most forecasting methods is poor -Good results are achieved only in a small range. |

| [63] | April, 2022 | The development methodology in this work can be applied anywhere. | -The forecasting skills of the hybrid model are about 34% against the NAR model. -About 42% against the Persistence model. | A forecasting model should not include redundant or irrelevant variables to avoid spurious results. |

| [64] | April, 2021 | Proposing a three-phase adaptive modification solution for DA to increase the algorithm capabilities in both the local and global searches. | The proposed hybrid deep model is equipped with a powerful decomposition mechanism, which helps to provide simpler signals with less complexity. | The negative effect of long-time windows on the prediction results. |

| [65] | September, 2022 | -A comparative test is conducted in multiple time dimensions to better reflect the accuracy of experimental results -Verifying the superiority of the proposed method. | The prediction accuracy and prediction stability are improved by about 15% on average compared to the other prediction models. | BO algorithm is used for tuning parameters, which also increases the time cost for training models. |

| [66] | September, 2022 | Proposing an ensemble interval prediction for solar power generation that obtains prediction intervals with higher quality than other methods. | Obtaining more reliable and stable interval prediction results. | The KDE method takes a longer total computational time than other methods. |

| [67] | November, 2022 | -In the training process, data mismatch and boundary constraint are incorporated into the loss function. -The positive constraint is utilized to restrict the output of the model. | The performance of prediction models with sparse data is tested to illustrate the stability and robustness of TG-A-CNN-LSTM. | It is difficult to adjust the LSTM parameters and determine whether it converges. |

| [68] | July, 2020 | -Providing better accuracy than other examined methods -Working with a better computational cost. | Outperforming other examined methods in terms of accuracy and computational time. | Prediction accuracy can be increased with other new effective techniques. |

| [69] | June, 2020 | Similarity-based forecasting models (SBFMs) are advocated to forecast PV power in high temporal resolution using low temporal resolution weather variables. | The PV power generation forecasting for the next day with five-minute temporal resolution can significantly yield accurate results. | -Increasing dimensions of the input vector |

| [70] | August, 2021 | Being generalized to find the optimal prediction given that the available measurements are mapped by an affine transformation. | WRF forecasts of irradiance, temperature, relative humidity, and the solar zenith angle were selected as highly relevant inputs of the model. | -Complex computation process; -Parameter adjustment required |

| [71] | November, 2020 | Forecast combination of machine learning models is done using convex combination and quantile regression averaging (QRA). | It is found that the predictive performance is significant on the Diebold-Mariano and Giacomini-White tests. | -Increasing dimensions of the input vector |
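Two quantities recur in the table above: the forecast skill of a model relative to a reference such as persistence ([63]) and the convex combination of forecasts ([71]). The sketch below illustrates both under stated assumptions. It is not taken from any of the reviewed works. The skill definition used here, 1 - RMSE_model / RMSE_reference, is one common convention and may differ from the one used in [63]. The grid search over the blending weight is a simple stand-in for the fitting procedures in [71]. All function names are hypothetical.

```python
import math


def rmse(actual, predicted):
    """Root-mean-square error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def skill_score(actual, predicted, reference):
    """Skill relative to a reference forecast: 1 - RMSE_model / RMSE_reference.

    Assumed convention: 0 means no better than the reference, 1 means perfect.
    """
    reference_error = rmse(actual, reference)
    if reference_error == 0:
        raise ValueError("reference forecast is perfect; skill is undefined")
    return 1.0 - rmse(actual, predicted) / reference_error


def convex_combination(forecast_a, forecast_b, weight):
    """Blend two forecasts with weights `weight` and `1 - weight`."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return [weight * a + (1.0 - weight) * b
            for a, b in zip(forecast_a, forecast_b)]


def best_weight(actual, forecast_a, forecast_b, steps=100):
    """Grid-search the blending weight that minimizes RMSE on the given data."""
    candidates = [i / steps for i in range(steps + 1)]
    return min(
        candidates,
        key=lambda w: rmse(actual, convex_combination(forecast_a, forecast_b, w)),
    )


if __name__ == "__main__":
    # Illustrative values only, not measurements from any reviewed study.
    measured = [0.0, 1.2, 2.5, 3.1, 2.0, 0.4]
    persistence = [0.0, 0.0, 1.2, 2.5, 3.1, 2.0]  # previous value carried forward
    model_a = [0.1, 1.0, 2.6, 2.9, 2.2, 0.5]
    model_b = [0.0, 1.5, 2.2, 3.3, 1.7, 0.6]

    w = best_weight(measured, model_a, model_b)
    blended = convex_combination(model_a, model_b, w)
    print("weight on model_a:", w)
    print("skill vs persistence:", skill_score(measured, blended, persistence))
```

In practice the weight would be fitted on a training window and the skill evaluated on held-out data. Fitting and scoring on the same samples, as the demo does for brevity, overstates the benefit of the combination.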

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).