Submitted: 12 May 2023

Posted: 23 May 2023

Abstract

Keywords:

1. Introduction

2. Data model

3. Proposed approach

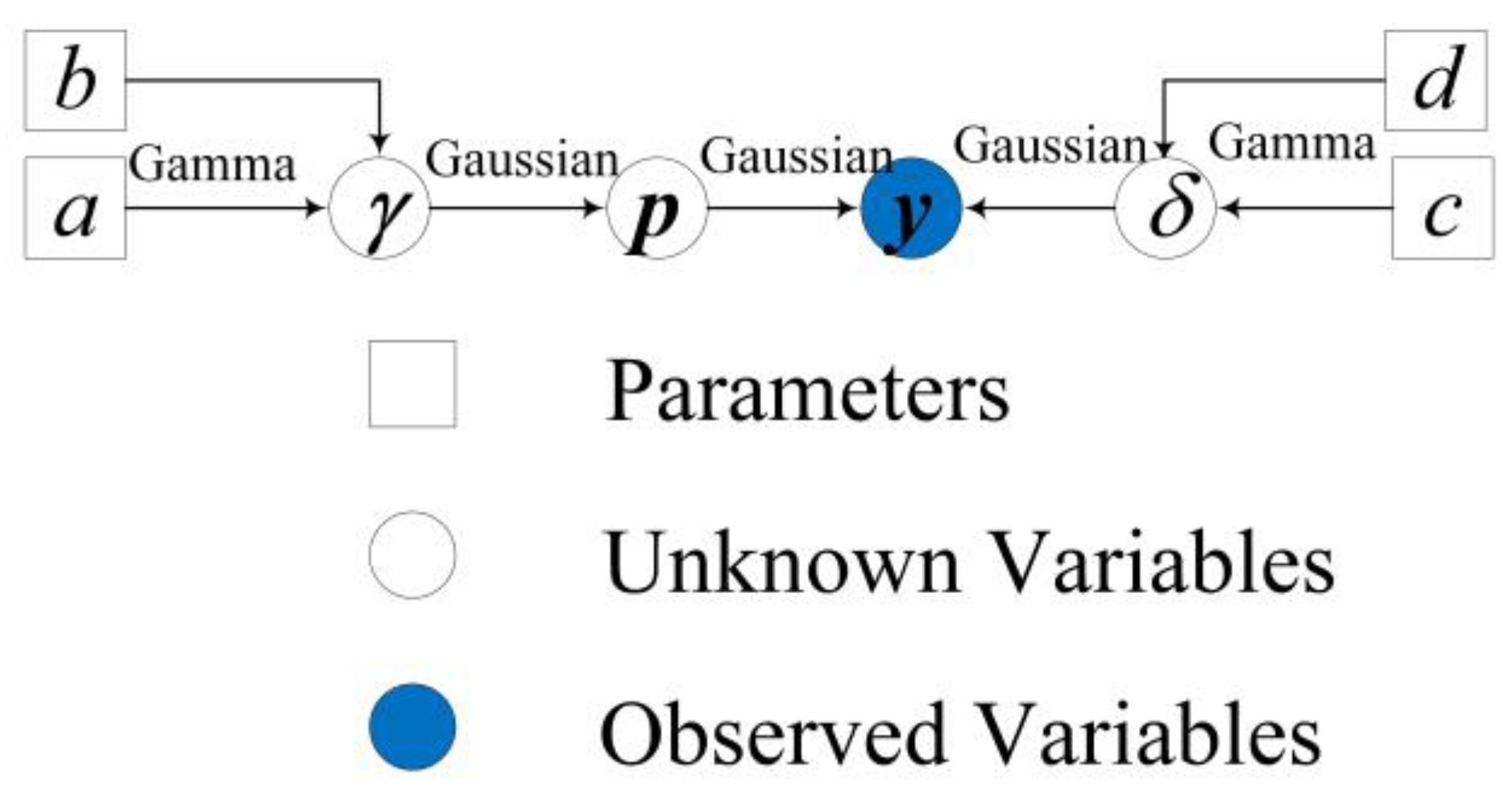

3.1. Bayesian Model

3.2. Variational Bayesian inference

3.3. Computational Complexity

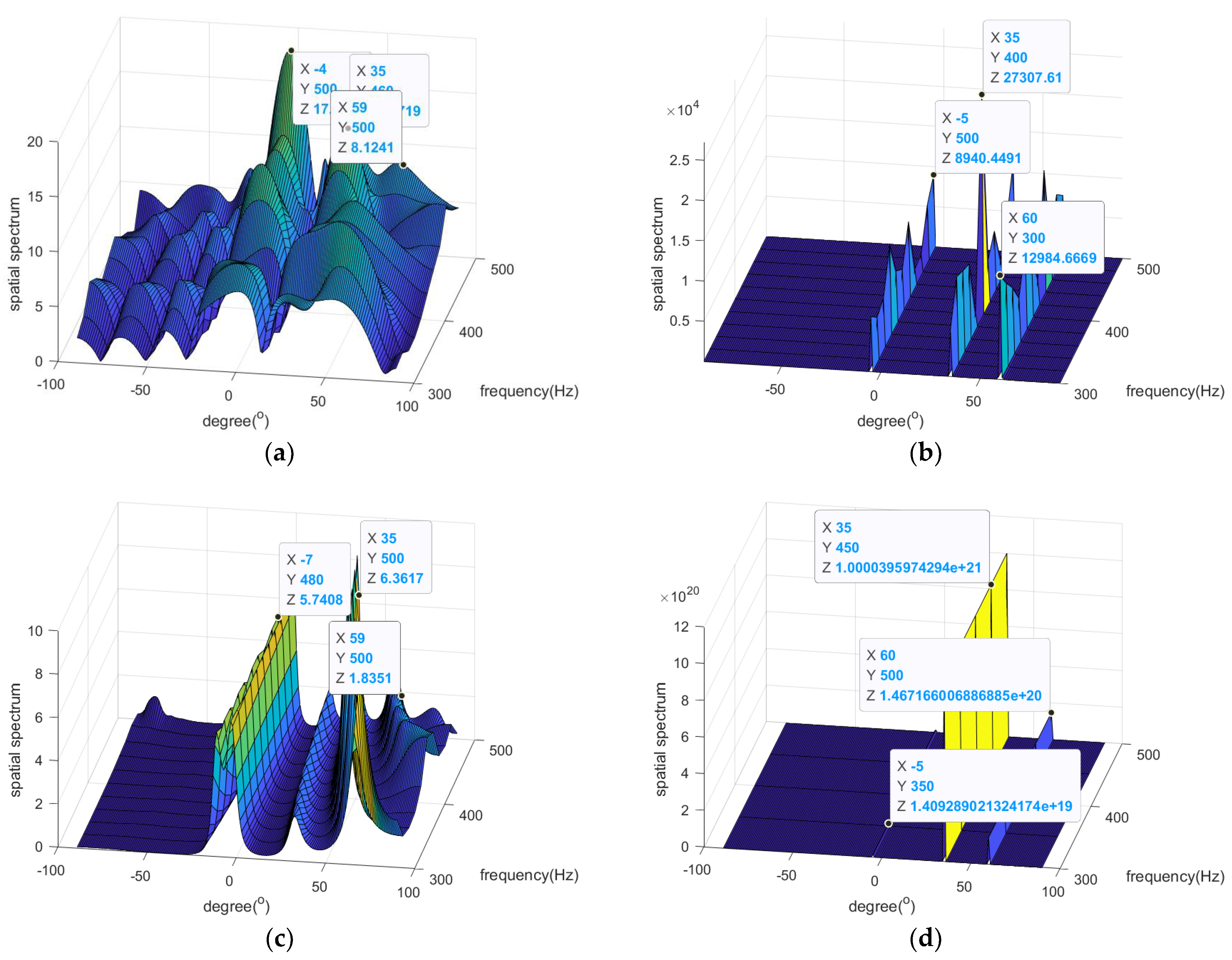

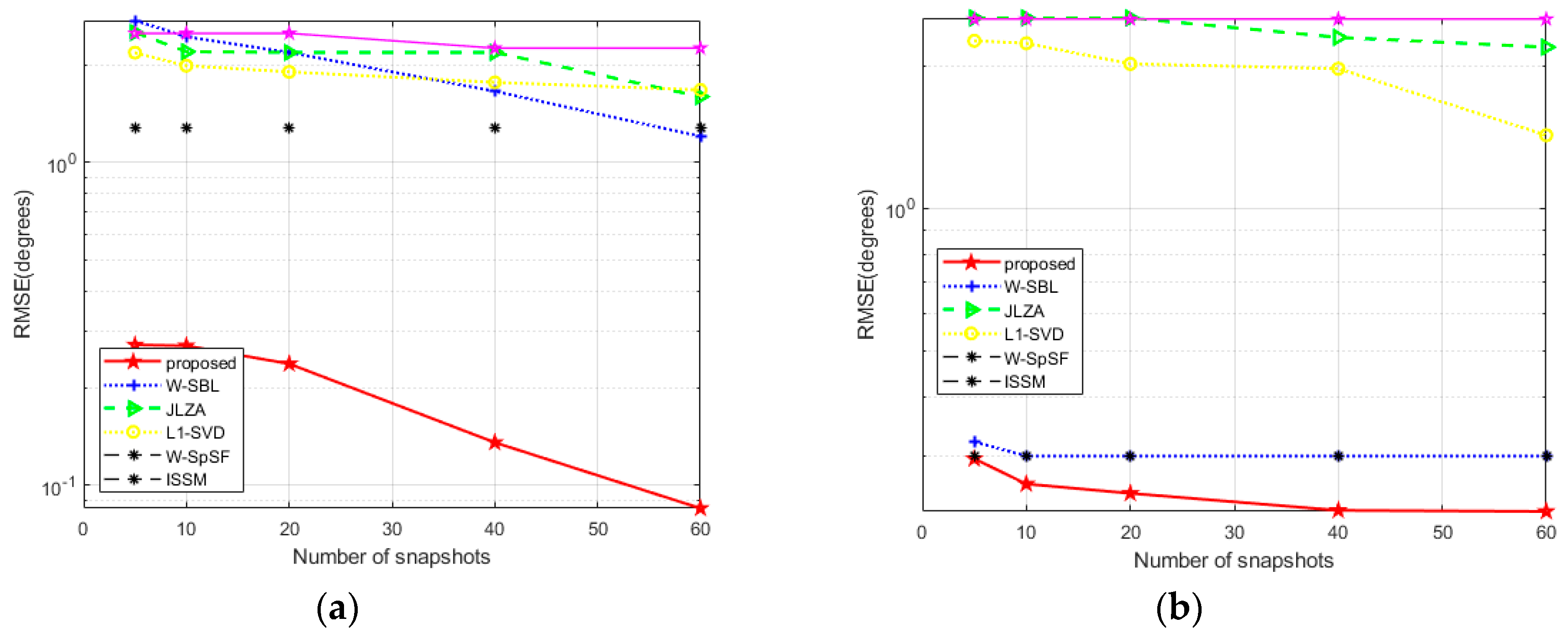

4. Numerical Simulation

5. Conclusions

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).