1. Introduction

Mobile communication devices such as smartphones and tablets are available to most of the global population, and the number of smartphone subscriptions is expected to reach about 7.145 billion by 2024 [1]. The growing number of validated smartphone applications in general otorhinolaryngology, and especially in voice assessment and the management of voice disorders, is continuously monitored in the literature [2,3,4,5,6]. Advances in smartphone technology and microphone quality offer an affordable and accessible alternative to the studio microphones traditionally used for speech analysis, thus providing an effective tool for assessing, detecting, and caring for voice disorders [7,8,9].

The combination of variables in smartphone hardware and software may lead to differences between voice quality measures. Whether acoustic voice features recorded using smartphones sufficiently match the current gold standard, a studio microphone, for remote monitoring and clinical assessment remains uncertain [7,10,11], and the literature on this matter is still divided. Several studies found that smartphone voice recordings and the acoustic voice quality parameters derived from them are comparable to those obtained with standard studio microphones [8,12,13,14]. On the other hand, results for some other acoustic voice quality parameters are discouraging [15]. Two recent studies found that none of the studied smartphones could replace the professional microphone in a voice recording for evaluating the six parameters analyzed, except for f0 and jitter. Moreover, passing a voice signal through a telecom channel induced filter and noise effects that significantly impacted common acoustic voice quality measures [16,17].

Nowadays, multiparametric models for voice quality assessment are generally accepted to be more reliable and valid than single-parameter measures because they demonstrate stronger correlations with auditory-perceptual voice evaluation and are more representative of daily use patterns. For example, the Acoustic Voice Quality Index (AVQI), developed by Maryn et al. in 2010, is a six-variable acoustic model for multiparametric measurement that evaluates the voiced parts of both a continuous speech fragment and a sustained vowel [a:] [18,19].

Multiple studies across different languages have attested to the reliability of the AVQI as a clinical voice quality evaluation tool. High consistency, concurrent validity, test–retest reliability, high sensitivity to voice quality changes through voice therapy, utility in discriminating across the perceptual levels of dysphonia severity, and adequate diagnostic accuracy with good discriminatory power in differentiating between normal and abnormal voice qualities were observed [19,20,21,22,23,24,25,26]. It is noteworthy that several studies have reported that gender and age do not affect the overall AVQI value, which supports further generalization of this objective and quantitative voice quality measurement [26,27,28,29]. Therefore, the AVQI is now recognized around the globe as a multiparametric construct of voice quality assessment for clinical and research applications [30,31,32].

Several previous studies have demonstrated the suitability of smartphone voice recordings, made either in acoustically treated sound-proof rooms or in ordinary user environments, for estimating the AVQI [9,11,14,26,33,34]. However, only a few studies in the literature provide data about AVQI implementation in different applications for mobile communication devices [9,26,35].

The study by Grillo et al. in 2020 presented the VoiceEvalU8 application, which provides an automatic option for the reliable calculation of several acoustic voice measures and the AVQI on iOS and Android smartphones using Praat source code and algorithms [35]. A user-friendly application/graphical user interface for the Kannada-speaking population was proposed by Shabnam et al. in 2022. That application provides a simplified output of AVQI cut-off values to depict the AVQI-based severity of dysphonia in a form comprehensible to patients with voice disorders and to health professionals [26]. The multilingual “VoiceScreen” application developed by Uloza et al. allows voice recording in clinical settings, automatically extracts acoustic voice features, estimates the AVQI, and displays the result alongside a recommendation to the user [9]. However, the “VoiceScreen” application runs only on the iOS operating system, which limits its usability to iPhones and other iOS devices.

The results of the studies mentioned above allowed us to presume that voice recordings captured with different smartphones are feasible for the estimation of the AVQI. Consequently, the current research was designed to answer the following questions regarding a smartphone-based “VoiceScreen” application for AVQI estimation: (1) are the average AVQI values estimated by different smartphones consistent and comparable; and (2) is the diagnostic accuracy of the AVQI estimated by different smartphones sufficient to differentiate normal and pathological voices? We hypothesize that using different smartphones for voice recordings and estimation of the AVQI will be feasible for quantitative voice assessment.

Therefore, the present study aimed to develop a universal-platform-based (UPB) application suitable for different smartphones for the estimation of the AVQI and to evaluate its reliability in AVQI measurements and normal/pathological voice differentiation.

2. Materials and Methods

All subjects gave their informed consent for inclusion before they participated in the study. The study was conducted in accordance with the Declaration of Helsinki of 1975, and the protocol was approved by the Kaunas Regional Ethics Committee for Biomedical Research (2022-04-20 No. BE-2-49).

The study group consisted of 135 adult individuals, 58 men and 77 women. The mean age of the study group was 42.9 (SD 15.26) years. They were all examined at the Department of Otolaryngology of the Lithuanian University of Health Sciences, Kaunas, Lithuania.

The pathological voice subgroup consisted of 86 patients, 42 men and 44 women, with a mean age of 50.8 years (SD 14.3). They presented with a relatively common and clinically discriminative group of laryngeal diseases and related voice disturbances, i.e., benign and malignant mass lesions of the vocal folds and unilateral paralysis of the vocal fold. The normal voice subgroup consisted of 49 selected healthy volunteers, 16 men and 33 women, with a mean age of 31.69 years (SD 9.89). This subgroup was collected following three criteria to define a vocally healthy subject: (1) all selected subjects considered their voice as normal and had no actual voice complaints and no history of chronic laryngeal diseases or voice disorders; (2) no pathological alterations in the larynx of the healthy subjects were found during video laryngoscopy; and (3) all these voice samples were evaluated as normal voices by otolaryngologists working in the field of voice. Demographic data of the study group and diagnoses of the pathological voice subgroup are presented in Table 1.

No correlations between the subject’s age, sex, and AVQI measurements were found in a previous study [27]. Therefore, in the present study, the control and patient groups were considered suitable for AVQI-related data analysis despite these groups not being matched by sex and age.

Original Voice Recordings

Voice samples from each subject were recorded in a T-series sound-proof room for hearing testing (T-room, CATegner AB, Bromma, Sweden) using a studio oral cardioid AKG Perception 220 microphone (AKG Acoustics, Vienna, Austria). The microphone was placed at a 10.0 cm distance from the mouth, keeping a 90° microphone-to-mouth angle. Each participant was asked to complete two vocal tasks, which were digitally recorded. The tasks consisted of (1) sustaining phonation of the vowel sound [a:] for at least 4 s duration and (2) reading a phonetically balanced text segment in Lithuanian “Turėjo senelė žilą oželį” (“The granny had a small grey goat”). The participants completed both vocal tasks at a personally comfortable loudness and pitch. All voice recordings were captured with Audacity recording software (https://www.audacityteam.org/) at a sampling frequency of 44.1 kHz and exported in a 16-bit depth lossless “wav” audio file format to the computer’s hard disk drive (HDD).

Auditory-Perceptual Evaluation

Five experienced physicians-laryngologists, who were all native Lithuanians, served as the rater panel. Blind to all relevant information regarding the subject (i.e., identity, age, gender, diagnosis, and disposition of the voice samples), they performed auditory-perceptual evaluations to quantify the vocal deviation, judging the voice samples into four ordinal severity classes of Grade from the GRBAS scale (i.e., 0 = normal, 1 = slight, 2 = moderate, 3 = severe dysphonia) [36]. A detailed description of the auditory-perceptual evaluation is presented elsewhere [21].

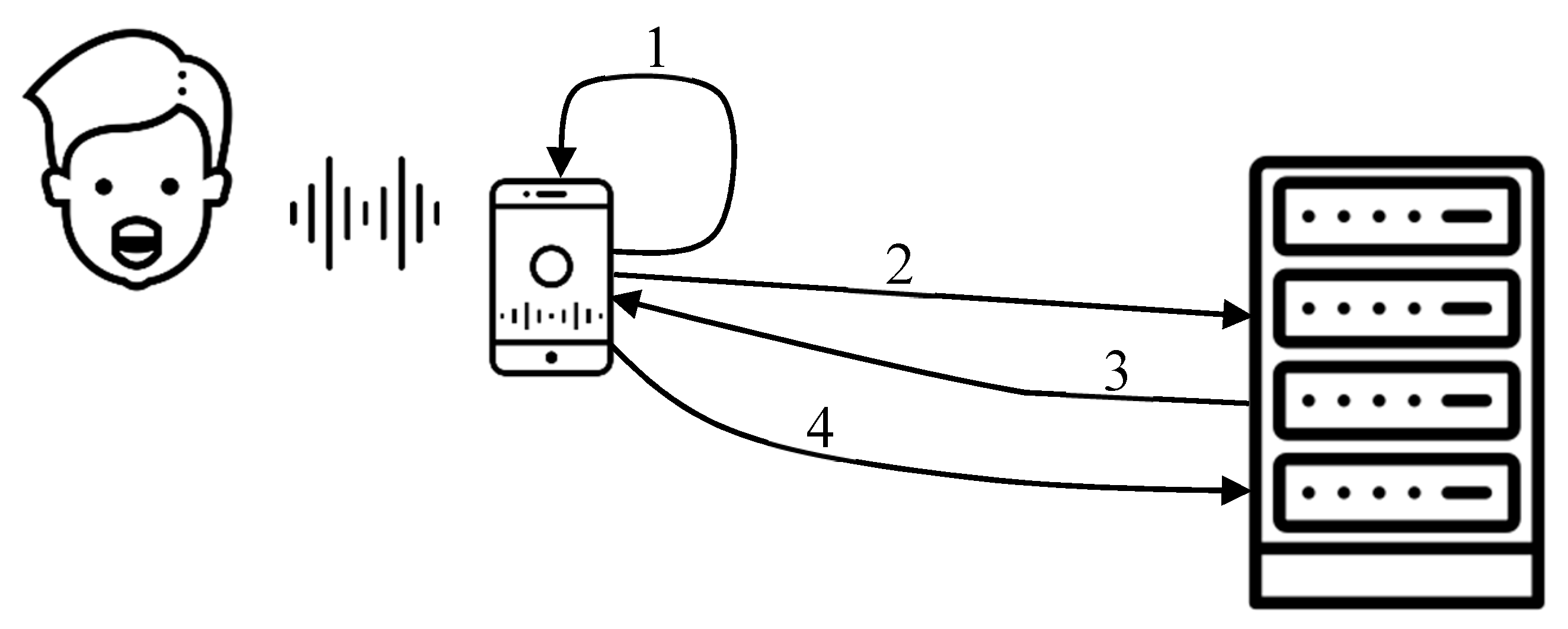

Transmitting Studio Microphone Voice Recordings to Smartphones

To reproduce the technical differences between studio and smartphone microphones without introducing other sources of variation, the already recorded flat-frequency audio was filtered (equalized) using data from the smartphone frequency response curves. The filtered result represents audio recorded with the selected smartphone. With this method, the only variable affected is the frequency response; other variables, i.e., room reflections, distance to the microphone, directionality, and user loudness, are kept constant. Ableton DAW (digital audio workstation) was used as the editing environment, and the VST (Virtual Studio Technology) plugin MFreeformEqualizer by MeldaProduction (https://www.meldaproduction.com/MFreeformEqualizer/features) was used to import the frequency response datasets and equalize the frequencies according to the required frequency response. The MFreeformEqualizer filter quality was set to extreme (the highest available), with 0% curve smoothing. All the audio files were then re-exported as 44100 Hz 16-bit wav files.

With this method, the digital voice recordings obtained with a studio microphone were directly transmitted to different smartphones, avoiding the surrounding environment’s impact.
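The equalization step can be illustrated with a minimal sketch. It is not the MFreeformEqualizer algorithm; it only shows the general idea of scaling each frequency component of a recording by a gain read from a frequency response curve. The function names and the response curve values are hypothetical, and a plain discrete Fourier transform is used for self-containment (a practical implementation would rely on an FFT library).

```python
import cmath
import math


def gain_db_at(freq_hz, curve):
    """Interpolate a response curve [(Hz, dB), ...] on a log-frequency axis."""
    if freq_hz <= curve[0][0]:
        return curve[0][1]
    if freq_hz >= curve[-1][0]:
        return curve[-1][1]
    for (f_lo, g_lo), (f_hi, g_hi) in zip(curve, curve[1:]):
        if f_lo <= freq_hz <= f_hi:
            t = (math.log10(freq_hz) - math.log10(f_lo)) / (
                math.log10(f_hi) - math.log10(f_lo)
            )
            return g_lo + t * (g_hi - g_lo)


def equalize(signal, fs, curve):
    """Apply the response curve to a signal sampled at fs Hz."""
    n = len(signal)
    # Forward DFT (O(n^2), acceptable only for short illustrative signals).
    spectrum = [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]
    for k in range(n):
        # Bins above n/2 mirror the negative frequencies of a real signal.
        freq = min(k, n - k) * fs / n
        spectrum[k] *= 10 ** (gain_db_at(freq, curve) / 20)
    # Inverse DFT; the imaginary part is numerical noise for a real input.
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
        for t in range(n)
    ]


# Hypothetical response curve: flat up to 1 kHz, 20 dB attenuation from 2 kHz.
example_curve = [(100.0, 0.0), (1000.0, 0.0), (2000.0, -20.0), (4000.0, -20.0)]
```

Because only the gain per frequency is changed, every other property of the original recording (timing, level of the unaffected bands, room acoustics) is carried over unchanged, which is the point of the method described above.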

AVQI Estimation

For AVQI calculations, the signal processing of the voice samples was done in the Praat software (version 5.3.57; https://www.fon.hum.uva.nl/praat/). Only voiced parts of the continuous speech were manually extracted and concatenated to the medial 3 s of sustained [a] phonation. The voice samples were concatenated for auditory-perceptual judgment in the following order: text segment, a 2-second pause, followed by the 3-second sustained vowel /a/ segment. This chain of signals was used for acoustic analysis with the AVQI script version 02.02 developed for the program Praat (https://www.vvl.be/documenten-en-paginas/praat-script-avqi-v0203?download=AcousticVoiceQualityIndexv.02.03.txt).
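The ordering of the signal chain described above can be sketched as follows. This is an illustrative outline only, not the Praat procedure used in the study; the function names are hypothetical, samples are plain lists of floats, and the extraction of voiced speech segments is assumed to have been done already.

```python
def medial_segment(samples, fs, seconds=3.0):
    """Return the central `seconds` of a sustained-vowel recording."""
    n = int(round(seconds * fs))
    if len(samples) < n:
        raise ValueError("vowel recording is shorter than the requested segment")
    start = (len(samples) - n) // 2
    return list(samples[start:start + n])


def build_chain(voiced_speech, vowel, fs, pause_s=2.0, vowel_s=3.0):
    """Concatenate text segment, silent pause, and medial vowel segment."""
    pause = [0.0] * int(round(pause_s * fs))
    return list(voiced_speech) + pause + medial_segment(vowel, fs, vowel_s)
```

For a 44.1 kHz recording, `build_chain(speech, vowel, 44100)` would yield the voiced speech followed by 88,200 silent samples and the central 132,300 samples of the vowel.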

Statistical Analysis

Statistical analysis was performed using IBM SPSS Statistics for Windows, version 20.0 (Armonk, NY: IBM Corp.) and MedCalc Version 20.118 (Ostend, BE: MedCalc Software Ltd.). The chosen level of statistical significance was 0.05.

The normality of the data distribution was assessed by applying the Shapiro-Wilk test and calculating the coefficients of skewness and kurtosis. Student’s t-test was used to test the equality of means in normally distributed data [37]. An analysis of variance (ANOVA) was employed to determine if there were significant differences between the multiple means of the independent groups [38]. Cronbach’s alpha was used to measure the internal consistency of measures [39]. Pearson’s correlation coefficient was applied to assess the linear relationship between variables obtained from continuous scales. Spearman’s correlation coefficient was used to determine the relationship in ordinal results. Receiver operating characteristic (ROC) curves were used to obtain the optimal sensitivity and specificity at optimal AVQI cut-off points. The “area under the ROC curve” (AUC) served to calculate the possible discriminatory accuracy of the AVQI performed with a studio microphone and different smartphones. A pairwise comparison of ROC curves, as described by DeLong et al., was used to determine if there was a statistically significant difference between two or more variables when categorizing normal/pathological voice [40].
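As an illustration of the ROC-based quantities named above, the following minimal sketch computes the AUC and a cut-off that maximizes the Youden index. It does not reproduce the SPSS or MedCalc procedures used in the study; the function names are hypothetical, and it assumes that higher AVQI scores indicate worse voice quality, so a score at or above the cut-off is classified as pathological.

```python
def roc_auc(scores_pathological, scores_normal):
    """AUC as the probability that a pathological score exceeds a normal one.

    Ties count as one half (the Mann-Whitney formulation of the AUC).
    """
    wins = 0.0
    for p in scores_pathological:
        for q in scores_normal:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(scores_pathological) * len(scores_normal))


def youden_cutoff(scores_pathological, scores_normal):
    """Return (cut-off, Youden J, sensitivity, specificity) with maximal J."""
    best = None
    for cut in sorted(set(scores_pathological) | set(scores_normal)):
        sens = sum(s >= cut for s in scores_pathological) / len(scores_pathological)
        spec = sum(s < cut for s in scores_normal) / len(scores_normal)
        j = sens + spec - 1
        if best is None or j > best[1]:
            best = (cut, j, sens, spec)
    return best
```

The Youden index J = sensitivity + specificity − 1 weighs both error types equally; a different weighting would shift the selected cut-off.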

3. Results

Raters’ Perceptual Evaluation Outcomes

The rater panel demonstrated excellent inter-rater agreement (Cronbach’s α = 0.967) with a mean intra-class correlation coefficient of 0.967 between five raters (from 0.961 to 0.973).

AVQI Evaluation Outcomes

An individual smartphone AVQI evaluation displayed excellent agreement by achieving a Cronbach’s alpha of 0.984. The inter-smartphone AVQI measurements’ reliability was excellent, with an average Intra-class Correlation Coefficient (ICC) of 0.983 (ranging from 0.979 to 0.987).
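For readers unfamiliar with the agreement statistic reported here, a minimal sketch of Cronbach’s alpha is given below. It is not the SPSS routine used in the study; the function name and the input layout (one list of values per rater or device, aligned by subject) are assumptions made for illustration.

```python
from statistics import variance


def cronbach_alpha(measurements):
    """Cronbach's alpha for k raters/devices measuring the same subjects.

    measurements: list of k lists, each holding one value per subject,
    with subjects in the same order in every list.
    """
    k = len(measurements)
    # Sum of the sample variances of the individual raters/devices.
    item_variances = sum(variance(values) for values in measurements)
    # Sample variance of the per-subject totals across raters/devices.
    totals = [sum(per_subject) for per_subject in zip(*measurements)]
    return k / (k - 1) * (1 - item_variances / variance(totals))
```

Alpha approaches 1 when the devices rank and scale the subjects almost identically, which is the situation the values above describe.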

The mean AVQI scores provided by different smartphones and a studio microphone are presented in Table 2.

As shown in Table 2, the one-way ANOVA did not detect statistically significant differences between the mean AVQI scores obtained with different smartphones (F = 0.759; p = 0.58). Further Bonferroni analysis reaffirmed the lack of difference between the AVQI scores obtained from different smartphones (p = 1.0; Bonferroni-adjusted threshold for a statistically significant difference, p = 0.01). The mean AVQI differences ranged from 0.01 to 0.4 points when comparing different smartphones.
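The F statistic of a one-way ANOVA can be sketched as below. This is a generic illustration rather than the SPSS computation used in the study; the function name is hypothetical, and the p-value, which requires the F distribution, is deliberately not computed.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of independent groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual values around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df_between = k - 1
    df_within = n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within
```

An F value below 1, as reported above, means the spread between device means is smaller than the spread of scores within each device.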

Almost perfect direct linear correlations were observed between the AVQI results obtained with a studio microphone and different smartphones. Pearson’s correlation coefficients ranged from 0.987 to 0.991 and are presented in Table 3.

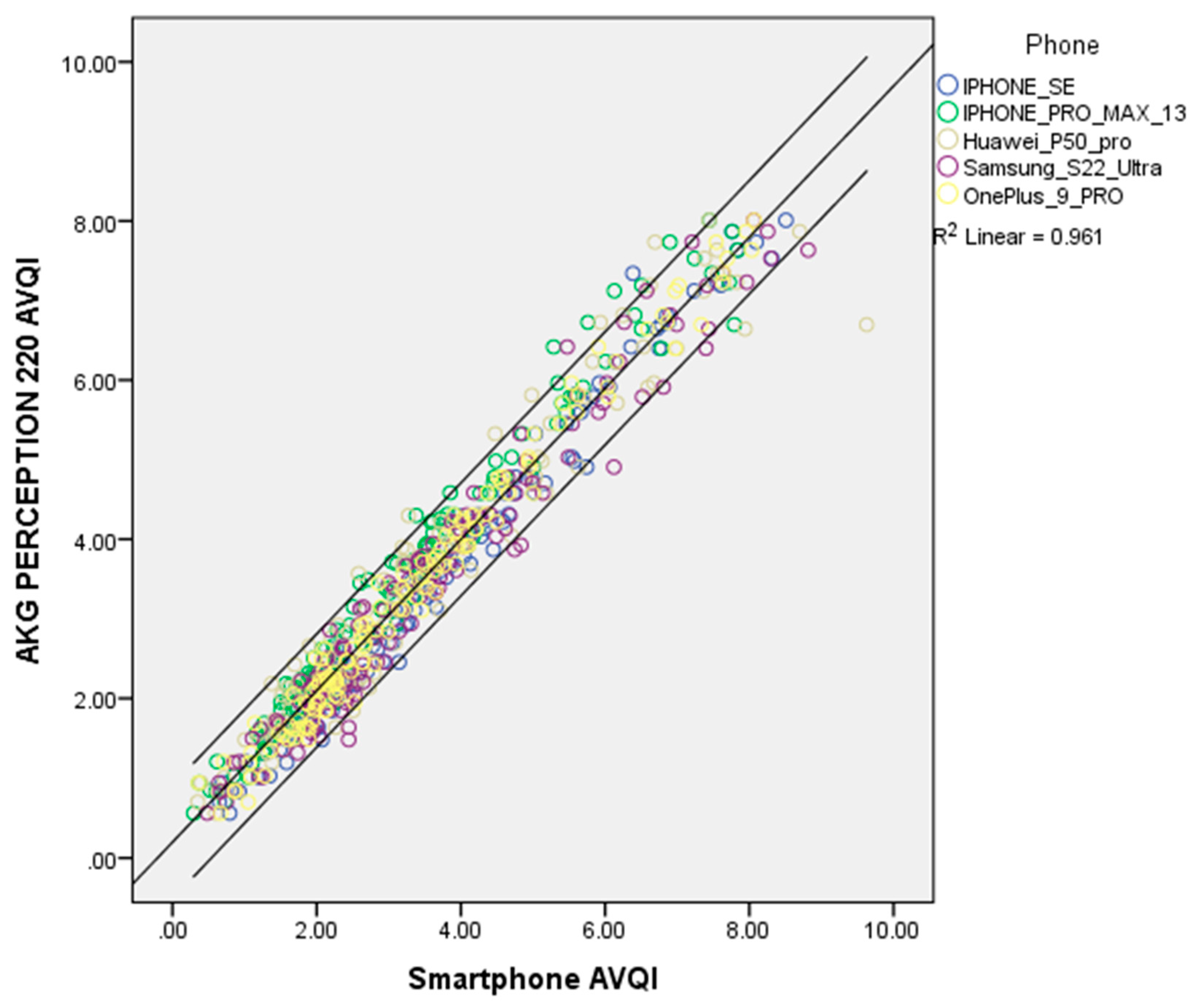

The relationships between the AVQI scores obtained with a studio microphone and different smartphones are graphically presented in Figure 2.

As demonstrated in Figure 2, the AVQI results obtained with different smartphones closely resemble the AVQI results obtained with a studio microphone, with very few data points outside of the 95% confidence interval (R² = 0.961). Therefore, the AVQI scores obtained with smartphones can be considered directly compatible with those obtained with the reference studio microphone.
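The correlation measures used in this comparison can be sketched in a few lines. The function name is hypothetical, and the snippet only illustrates the definition of Pearson’s r for paired AVQI scores (for example, studio microphone versus one smartphone); for a simple linear fit between two variables, the coefficient of determination equals r squared.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / math.sqrt(s_xx * s_yy)


def r_squared(x, y):
    """Coefficient of determination of a simple linear fit of y on x."""
    return pearson_r(x, y) ** 2
```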

The Normal vs. Pathological Voice Diagnostic Accuracy of the AVQI Using Different Smartphones

First, the ROC curves of AVQI obtained from a studio microphone and different smartphone voice recordings were inspected visually to identify optimum cut-off scores according to general interpretation guidelines [41]. All of the ROC curves were visually almost identical and occupied the largest part of the graph, clearly revealing their respectable power to discriminate between normal and pathological voices (Figure 3).

Second, the AUC analysis revealed a high level of precision of the AVQI in discriminating between normal and pathological voices, with all AUC values exceeding the suggested threshold of AUC = 0.800. The results of the ROC statistical analysis are presented in Table 4.

As demonstrated in Table 4, the ROC analysis determined the optimal AVQI cut-off values for distinguishing between normal and pathological voices for each smartphone. All employed microphones passed the proposed 0.8 AUC threshold and revealed an acceptable Youden-index value.

Third, a pairwise comparison of the significance of the differences between the AUCs revealed in the present study is presented in Table 5.

As shown in Table 5, a comparison of the AUCs of the dependent ROC curves (AVQI measurements obtained from a studio microphone and different smartphones), according to the test of DeLong et al., confirmed no statistically significant differences between the AUCs (p > 0.05). The largest observed difference between the AUCs was only 0.028. These results confirm that the diagnostic accuracy of the AVQI in differentiating normal vs. pathological voice is compatible when using voice recordings from a studio microphone and different smartphones.

4. Discussion

In the present study, the novel UPB “VoiceScreen” application for the estimation of the AVQI and detection of voice deteriorations in patients with various voice disorders and healthy controls was tested for the first time simultaneously with different smartphones. The AVQI was chosen for voice quality assessment because of several favorable features of this multiparametric measurement: the lower vulnerability of the AVQI to environmental noise compared to other complex acoustic markers; the robustness of the AVQI regarding the interaction between acoustic voice quality measurements and room acoustics; and the absence of significant within-subject differences for both women and men when comparing the AVQI across different voice analysis programs [11,14,42]. Another essential attribute of the AVQI is that the freely available program Praat is the only software that estimates it, which eliminates the impact of possible software differences on AVQI computation.

In the present study, the ANOVA did not detect statistically significant differences between the mean AVQI scores obtained with different smartphones (F = 0.759; p = 0.58). Moreover, the mean AVQI differences ranged from 0.01 to 0.4 points when comparing the AVQI estimated with different smartphones, thus establishing a low level of variability. This is consistent with the value of 0.54 for the absolute retest difference of AVQI values proposed by Barsties and Maryn in 2013 [19,43]. Consequently, the differences between AVQI measurements with different smartphones were considered neither statistically nor clinically significant, justifying the possibility of practical use of the UPB “VoiceScreen” app.

The correlation analysis showed that all AVQI measurements were highly correlated (Pearson’s r ranged from 0.987 to 0.991) across the devices used in the present study. This concurs with the literature data on the high correlation between acoustic voice features derived from studio microphones and smartphones, examined both for control participants and for synthesized voice data [7,12,13,14].

Furthermore, analysis of the results revealed that the AVQI showed a remarkable ability to discriminate between normal and pathological voices as determined by auditory-perceptual judgment. The ROC analysis determined the optimal AVQI cut-off values for distinguishing between normal and pathological voices for each smartphone used. A remarkable precision of AVQI in discriminating between normal and pathological voices was yielded (AUC 0.834 – 0.862), resulting in an acceptable balance between sensitivity and specificity. These findings suggest that AVQI is a reliable tool in differentiating normal/pathological voice independently of the voice recordings from tested studio microphone and different smartphones. The comparison of the AUCs-dependent ROC curves (AVQI measurements obtained from studio microphone and different smartphones) demonstrated no statistically significant differences between the AUCs (p > 0.05), with the largest revealed difference between the AUCs of only 0.028. These results confirm compatible results of the AVQI diagnostic accuracy in differentiating normal vs. pathological voice when using voice recordings from studio microphone and different smartphones and present remarkable importance from a practical point of view.

Several limitations of the present study have to be considered. Despite the encouraging results of the AVQI measurements, some individual discrepancies between AVQI results obtained with different smartphones still exist. Therefore, further research in a wide diversity of voice pathologies, including functional voice disorders, is needed to ensure the maximum comparability of acoustic voice features derived from voice recordings obtained with mobile communication devices and reference studio microphones. Moreover, it is also essential to evaluate the impact of the voice recording environment on the AVQI estimation in real clinical settings by performing simultaneous voice recordings with different smartphones, so that the results and improvements can be employed in healthcare applications.

Summarizing the results of the previous and present studies allows for the presumption that the performance of the novel UPB “VoiceScreen” app using different smartphones represents an adequate and compatible performance of AVQI estimation.

However, it is important to note that due to existing differences in recording conditions, microphones, hardware, and software, the results of acoustic voice quality measures may differ between recording systems [11]. Therefore, using the UPB “VoiceScreen” app with some caution is advisable. For voice screening purposes, it is more reliable to perform AVQI measurements using the same device, especially when performing repeated measurements. Moreover, this advice should be considered when comparing data of acoustic voice analysis between different voice recording systems, i.e., different smartphones or other mobile communication devices, and when using them for diagnostic purposes or monitoring voice treatment outcomes.

5. Conclusions

The UPB “VoiceScreen” app represents an accurate and robust tool for voice quality measurement and normal vs. pathological voice screening purposes, demonstrating the potential to be used by patients and clinicians for voice assessment employing both iOS and Android smartphones.

Funding

This project has received funding from European Regional Development Fund (project No 13.1.1-LMT-K-718-05-0027) under grant agreement with the Research Council of Lithuania (LMTLT). Funded as European Union’s measure in response to COVID-19 pandemic.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki of 1975, and the protocol was approved by the Kaunas Regional Ethics Committee for Biomedical Research (2022-04-20 No. BE-2-49).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mobile network subscriptions worldwide 2028. Available online: https://www.statista.com/statistics/330695/number-of-smartphone-users-worldwide/ (accessed on 3 April 2023).

- Casale, M.; Costantino, A.; Rinaldi, V.; Forte, A.; Grimaldi, M.; Sabatino, L.; Oliveto, G.; Aloise, F.; Pontari, D.; Salvinelli, F. Mobile applications in otolaryngology for patients: An update. Laryngoscope Investigative Otolaryngology 2018, 3, 434.

- Eleonora, M.C.T.; Lonigro, A.; Gelardi, M.; Kim, B.; Cassano, M. Mobile Applications in Otolaryngology: A Systematic Review of the Literature, Apple App Store and the Google Play Store. Ann Otol Rhinol Laryngol 2021, 130, 78–91.

- Grillo, E.U.; Wolfberg, J. An Assessment of Different Praat Versions for Acoustic Measures Analyzed Automatically by VoiceEvalU8 and Manually by Two Raters. Journal of Voice 2023, 37, 17–25.

- Boogers, L.S.; Chen, B.S.J.; Coerts, M.J.; Rinkel, R.N.P.M.; Hannema, S.E. Mobile Phone Applications Voice Tools and Voice Pitch Analyzer Validated With LingWAVES to Measure Voice Frequency. Journal of Voice 2022.

- Kojima, T.; Hasebe, K.; Fujimura, S.; Okanoue, Y.; Kagoshima, H.; Taguchi, A.; Yamamoto, H.; Shoji, K.; Hori, R. A New iPhone Application for Voice Quality Assessment Based on the GRBAS Scale. Laryngoscope 2021, 131, 580–582.

- Fahed, V.S.; Doheny, E.P.; Busse, M.; Hoblyn, J.; Lowery, M.M. Comparison of Acoustic Voice Features Derived From Mobile Devices and Studio Microphone Recordings. Journal of Voice 2022.

- Awan, S.N.; Shaikh, M.A.; Awan, J.A.; Abdalla, I.; Lim, K.O.; Misono, S. Smartphone Recordings are Comparable to “Gold Standard” Recordings for Acoustic Measurements of Voice. Journal of Voice 2023.

- Uloza, V.; Ulozaite-Staniene, N.; Petrauskas, T. An iOS-based VoiceScreen application: feasibility for use in clinical settings-a pilot study. Eur Arch Otorhinolaryngol 2023, 280, 277–284.

- Munnings, A.J. The Current State and Future Possibilities of Mobile Phone “Voice Analyser” Applications, in Relation to Otorhinolaryngology. Journal of Voice 2020, 34, 527–532.

- Maryn, Y.; Ysenbaert, F.; Zarowski, A.; Vanspauwen, R. Mobile Communication Devices, Ambient Noise, and Acoustic Voice Measures. Journal of Voice 2017, 31, 248.e11–248.e23.

- Kardous, C.A.; Shaw, P.B. Evaluation of smartphone sound measurement applications. J Acoust Soc Am 2014, 135, EL186–EL192.

- Manfredi, C.; Lebacq, J.; Cantarella, G.; Schoentgen, J.; Orlandi, S.; Bandini, A.; DeJonckere, P.H. Smartphones Offer New Opportunities in Clinical Voice Research. Journal of Voice 2017, 31, 111.e1–111.e7.

- Grillo, E.U.; Brosious, J.N.; Sorrell, S.L.; Anand, S. Influence of Smartphones and Software on Acoustic Voice Measures. Int J Telerehabil 2016, 8, 9–14.

- Schaeffler, F.; Jannetts, S.; Beck, J.M. Reliability of clinical voice parameters captured with smartphones – measurements of added noise and spectral tilt. Proceedings of the 20th Annual Conference of the International Speech Communication Association INTERSPEECH, Graz, Austria 2019, 2523–2527. Available online: https://eresearch.qmu.ac.uk/handle/20.500.12289/10013 (accessed on 3 April 2023).

- Marsano-Cornejo, M.; Roco-Videla, Á. Comparison of the Acoustic Parameters Obtained With Different Smartphones and a Professional Microphone. Acta Otorrinolaringol Esp (Engl Ed) 2022, 73, 51–55.

- Pommée, T.; Morsomme, D. Voice Quality in Telephone Interviews: A preliminary Acoustic Investigation. Journal of Voice 2022.

- Maryn, Y.; De Bodt, M.; Roy, N. The Acoustic Voice Quality Index: Toward improved treatment outcomes assessment in voice disorders. J Commun Disord 2010, 43, 161–174.

- Barsties, B.; Maryn, Y. [The Acoustic Voice Quality Index. Toward expanded measurement of dysphonia severity in German subjects]. HNO 2012, 60, 715–720.

- Hosokawa, K.; Barsties, B.; Iwahashi, T.; Iwahashi, M.; Kato, C.; Iwaki, S.; Sasai, H.; Miyauchi, A.; Matsushiro, N.; Inohara, H.; Ogawa, M.; Maryn, Y. Validation of the Acoustic Voice Quality Index in the Japanese Language. Journal of Voice 2017, 31, 260.e1–260.e9. Available online: https://www.sciencedirect.com/science/article/pii/S0892199716300789.

- Uloza, V.; Petrauskas, T.; Padervinskis, E.; Ulozaitė, N.; Barsties, B.; Maryn, Y. Validation of the Acoustic Voice Quality Index in the Lithuanian Language. Journal of Voice 2017, 31, 257.e1–257.e11. Available online: https://www.sciencedirect.com/science/article/pii/S0892199716300716.

- Kankare, E.; Barsties V Latoszek, B.; Maryn, Y.; Asikainen, M.; Rorarius, E.; Vilpas, S.; Ilomäki, I.; Tyrmi, J.; Rantala, L.; Laukkanen, A. The acoustic voice quality index version 02.02 in the Finnish-speaking population. Logoped Phoniatr Vocol 2020, 45, 49–56.

- Englert, M.; Lopes, L.; Vieira, V.; Behlau, M. Accuracy of Acoustic Voice Quality Index and Its Isolated Acoustic Measures to Discriminate the Severity of Voice Disorders. Journal of Voice 2022, 36, 582.e1–582.e10. Available online: https://www.sciencedirect.com/science/article/pii/S0892199720302939.

- Yeşilli-Puzella, G.; Tadıhan-Özkan, E.; Maryn, Y. Validation and Test-Retest Reliability of Acoustic Voice Quality Index Version 02.06 in the Turkish Language. Journal of Voice 2022, 36, 736.e25–736.e32. Available online: https://www.sciencedirect.com/science/article/pii/S0892199720303222.

- Englert, M.; Latoszek, B.B.v.; Behlau, M. Exploring The Validity of Acoustic Measurements and Other Voice Assessments. Journal of Voice 2022.

- Shabnam, S.; Pushpavathi, M.; Gopi Sankar, R.; Sridharan, K.V.; Vasanthalakshmi, M.S. A Comprehensive Application for Grading Severity of Voice Based on Acoustic Voice Quality Index v.02.03. Journal of Voice 2022.

- Barsties v. Latoszek, B.; Ulozaitė-Stanienė, N.; Maryn, Y.; Petrauskas, T.; Uloza, V. The Influence of Gender and Age on the Acoustic Voice Quality Index and Dysphonia Severity Index: A Normative Study. Journal of Voice 2019, 33, 340–345. Available online: https://www.sciencedirect.com/science/article/pii/S089219971730468X.

- Batthyany, C.; Maryn, Y.; Trauwaen, I.; Caelenberghe, E.; van Dinther, J.; Zarowski, A.; Wuyts, F. A case of specificity: How does the acoustic voice quality index perform in normophonic subjects? Applied Sciences (Switzerland) 2019.

- Jayakumar, T.; Benoy, J.J.; Yasin, H.M. Effect of Age and Gender on Acoustic Voice Quality Index Across Lifespan: A Cross-sectional Study in Indian Population. Journal of Voice 2022, 36, 436.e1–436.e8. Available online: https://www.sciencedirect.com/science/article/pii/S0892199720301995.

- Jayakumar, T.; Benoy, J.J. Acoustic Voice Quality Index (AVQI) in the Measurement of Voice Quality: A Systematic Review and Meta-Analysis. Journal of Voice 2022.

- Batthyany, C.; Latoszek, B.B.V.; Maryn, Y. Meta-Analysis on the Validity of the Acoustic Voice Quality Index. Journal of Voice 2022.

- Saeedi, S.; Aghajanzadeh, M.; Khatoonabadi, A.R. A Literature Review of Voice Indices Available for Voice Assessment. JRSR 2022, 9, 151–155.

- Grillo, E.U.; Wolfberg, J. An Assessment of Different Praat Versions for Acoustic Measures Analyzed Automatically by VoiceEvalU8 and Manually by Two Raters. Journal of Voice 2020, 37, 17–25.

- Uloza, V.; Ulozaitė-Stanienė, N.; Petrauskas, T.; Kregždytė, R. Accuracy of Acoustic Voice Quality Index Captured With a Smartphone - Measurements With Added Ambient Noise. J Voice 2021, S0892–S0894.

- Grillo, E.U.; Wolfberg, J. An Assessment of Different Praat Versions for Acoustic Measures Analyzed Automatically by VoiceEvalU8 and Manually by Two Raters. J Voice 2023, 37, 17–25.

- Dejonckere, P.H.; Bradley, P.; Clemente, P.; Cornut, G.; Crevier-Buchman, L.; Friedrich, G.; Van De Heyning, P.; Remacle, M.; Woisard, V. A basic protocol for functional assessment of voice pathology, especially for investigating the efficacy of (phonosurgical) treatments and evaluating new assessment techniques. Guideline elaborated by the Committee on Phoniatrics of the European Laryngological Society (ELS). Eur Arch Otorhinolaryngol 2001, 258, 77–82.

- Senn, S.; Richardson, W. The first t-test. Statist Med 1994, 13, 785–803.

- McHugh, M.L. Multiple comparison analysis testing in ANOVA. Biochem Med (Zagreb) 2011, 21, 203–209.

- Cho, E. Making Reliability Reliable: A Systematic Approach to Reliability Coefficients. Organ Res Methods 2016, 19, 651–682.

- Hanley, J.A.; McNeil, B.J. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 1982, 143, 29–36.

- Dollaghan, C.A. The handbook for evidence-based practice in communication disorders, Paul H. Brookes Publishing Co: Baltimore, MD, US, 2007; pp. xi, 169.

- Bottalico, P.; Codino, J.; Cantor-Cutiva, L.C.; Marks, K.; Nudelman, C.J.; Skeffington, J.; Shrivastav, R.; Jackson-Menaldi, M.C.; Hunter, E.J.; Rubin, A.D. Reproducibility of Voice Parameters: The Effect of Room Acoustics and Microphones. Journal of Voice 2020, 34, 320–334. Available online: https://www.sciencedirect.com/science/article/pii/S0892199718304338.

- Lehnert, B.; Herold, J.; Blaurock, M.; Busch, C. Reliability of the Acoustic Voice Quality Index AVQI and the Acoustic Breathiness Index (ABI) when wearing CoViD-19 protective masks. Eur Arch Otorhinolaryngol 2022, 279, 4617–4621.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).