Submitted: 06 June 2023

Posted: 08 June 2023

Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. The GARCH Model

2.2. The fGARCH Model

2.3. The True Parameter Recovery Measure

2.4. Simulation Design

2.4.1. Aim of the Simulation Study

2.4.2. State the Research Questions

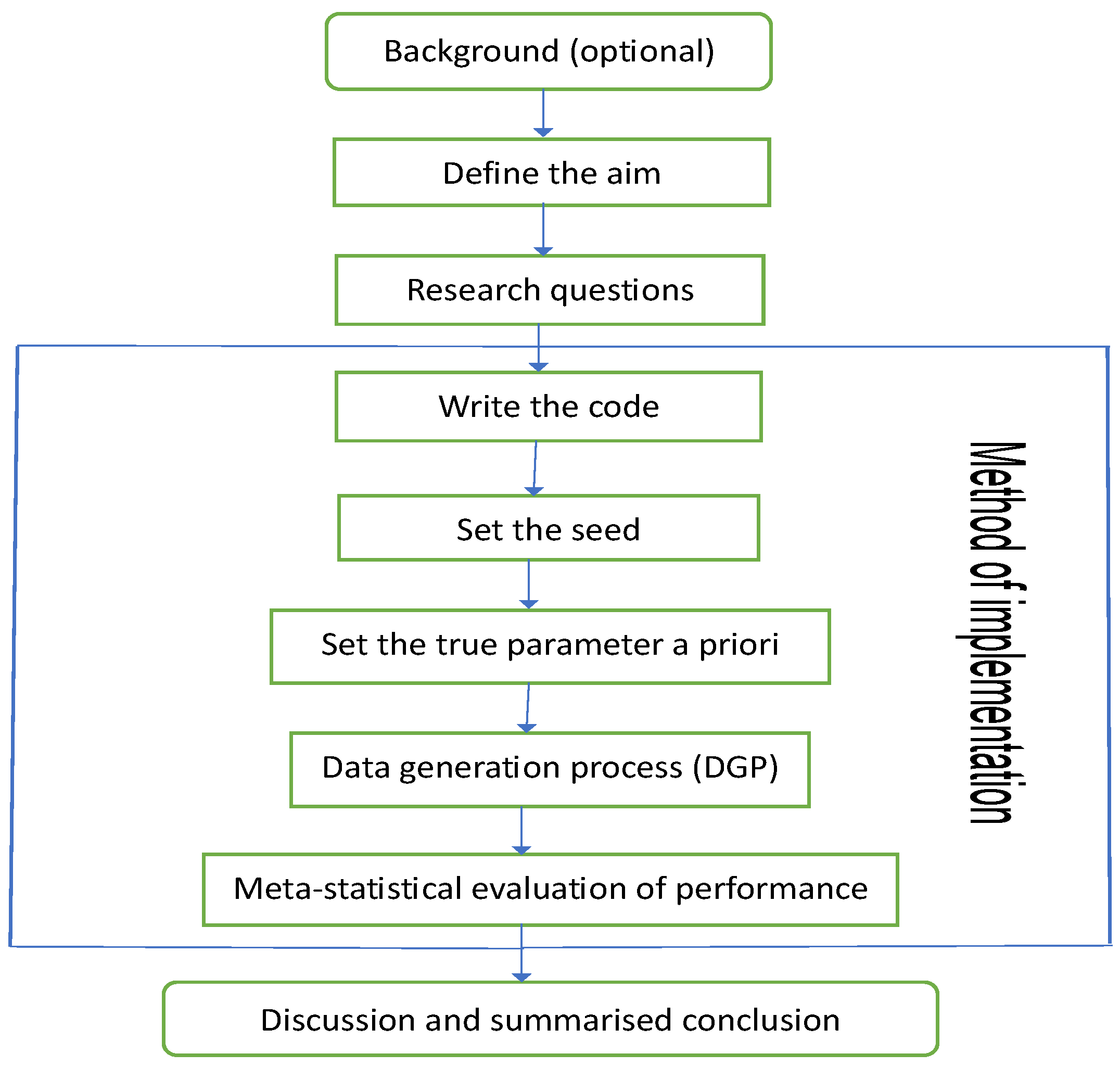

2.4.3. Method of Implementation

- Write the code: Carrying out a proper simulation experiment that mirrors real-life situations can be demanding and computationally intensive, hence readable, syntactically correct computer code must be ensured. MCS code must be well organised to avoid difficulties during debugging. It is safer to start with small pieces of code, become familiar with them and ensure they run properly, debugging any errors, before embarking on more intensive and complex ones. Code must be written efficiently and flexibly, and be well arranged for readability.

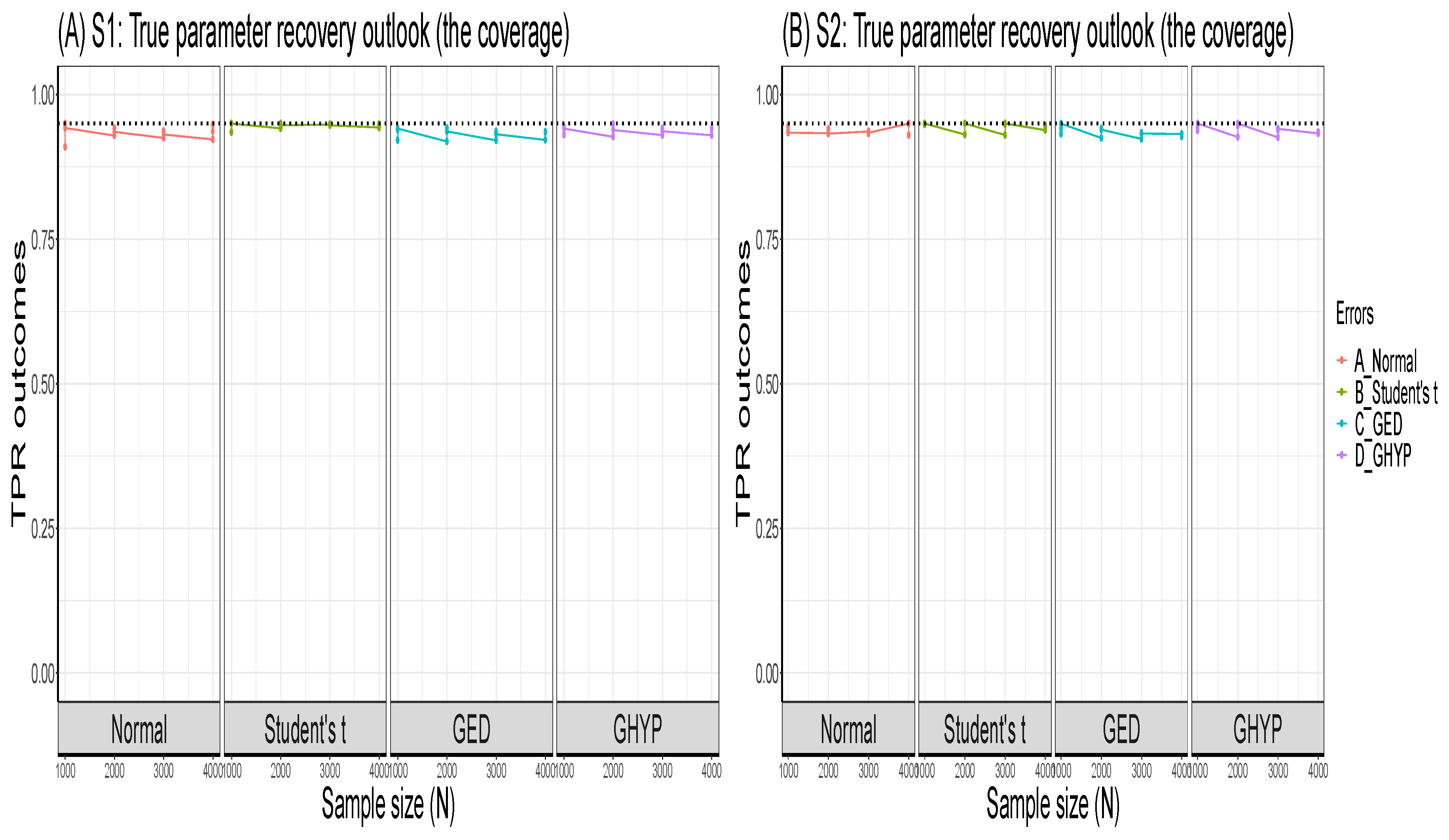

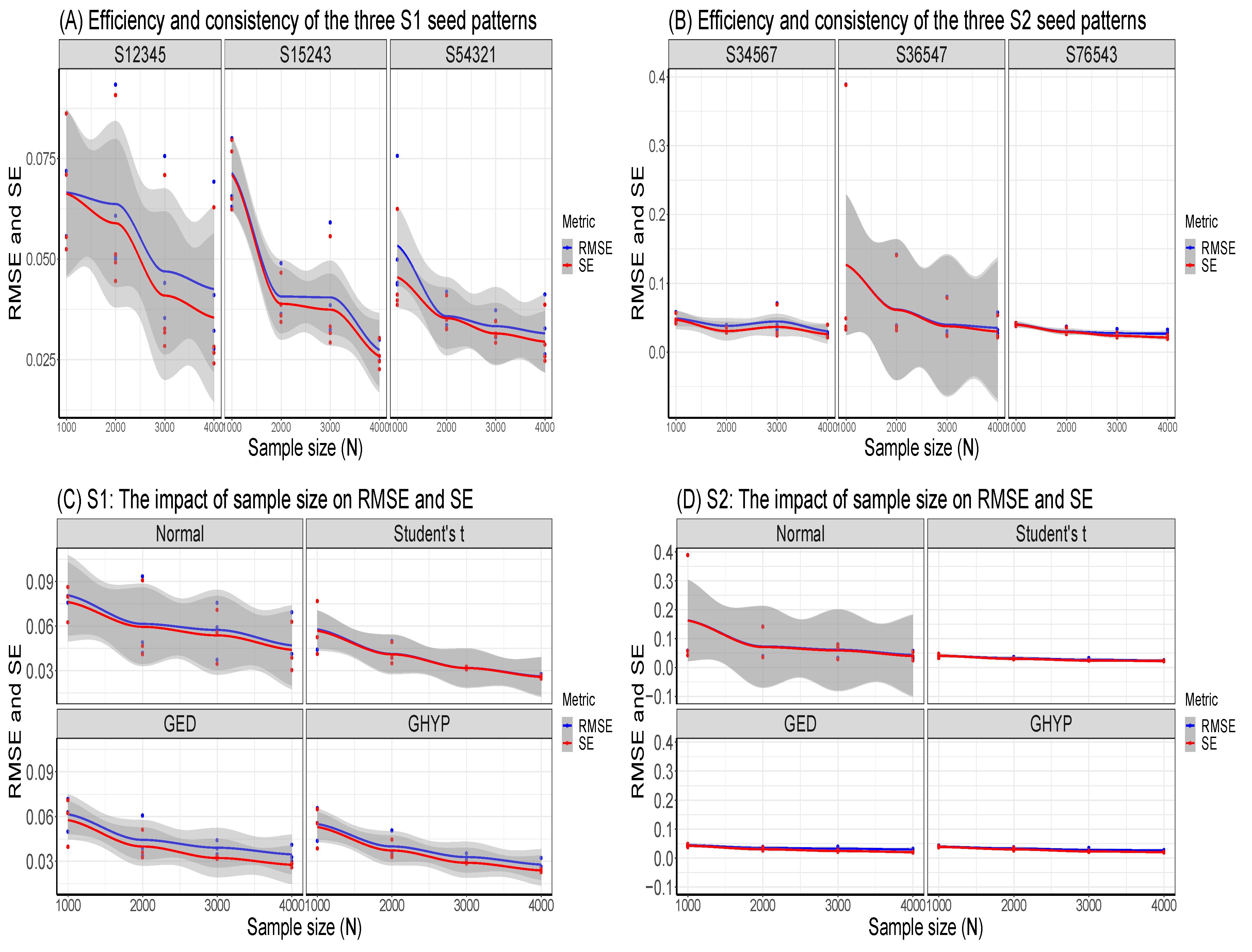

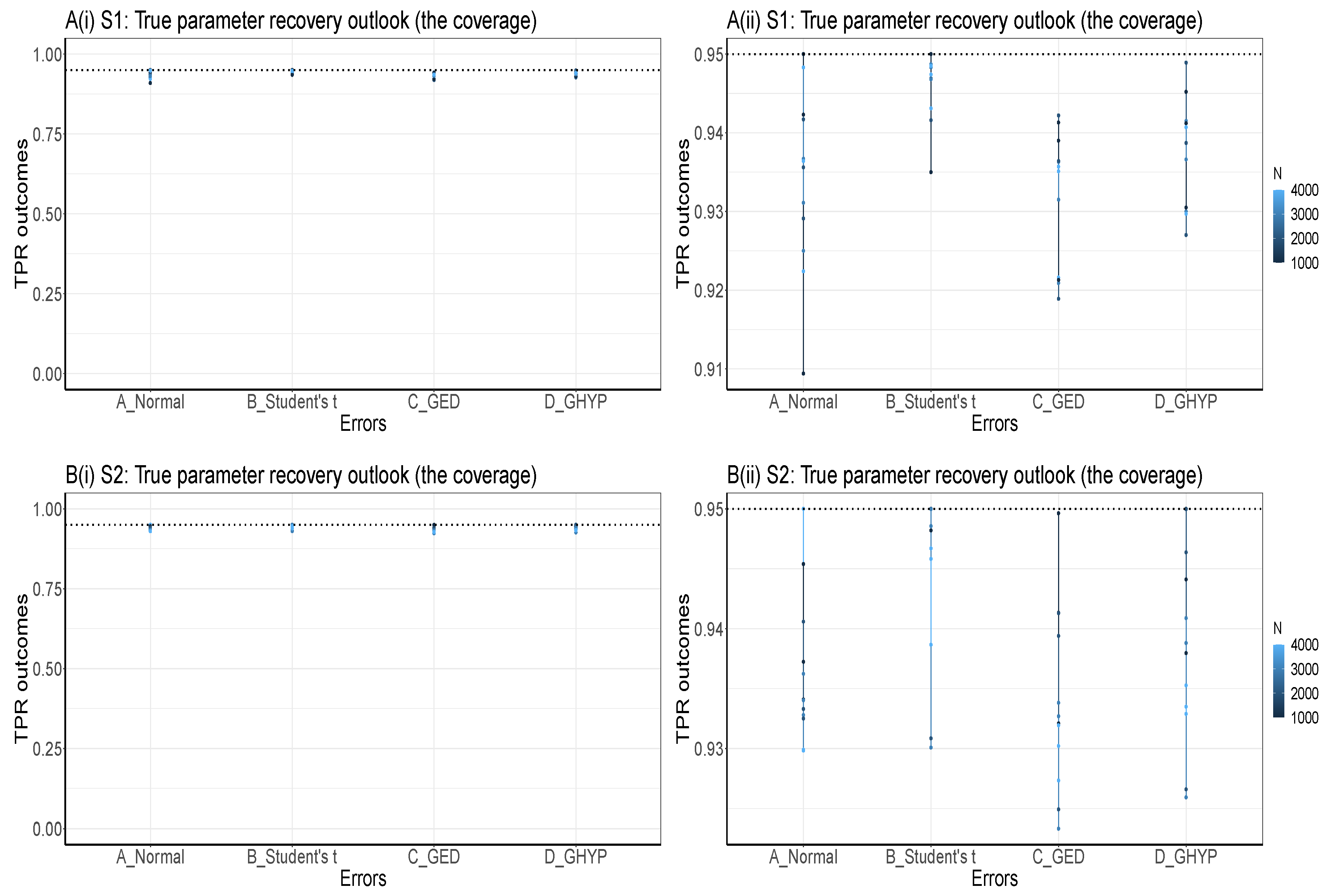

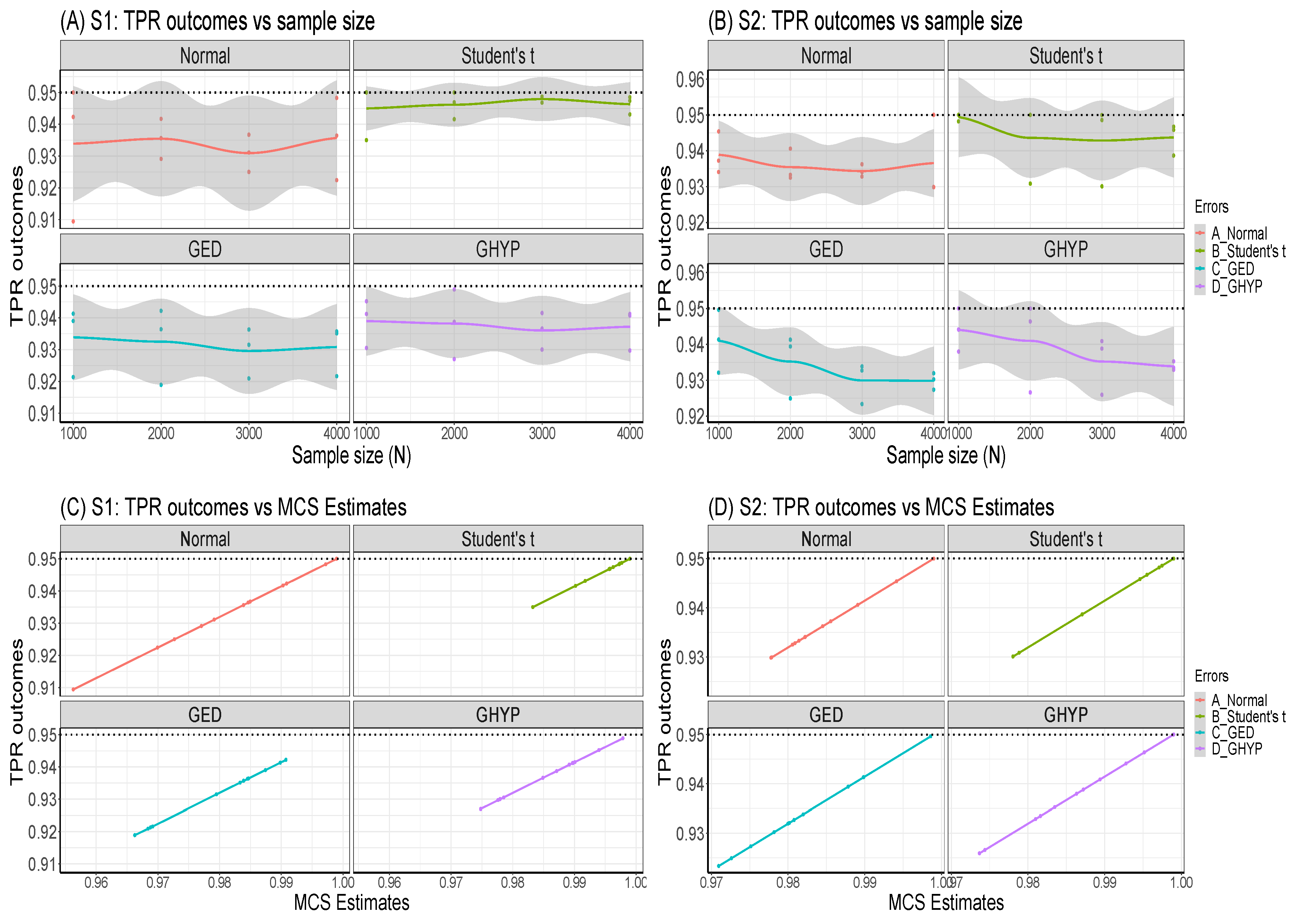

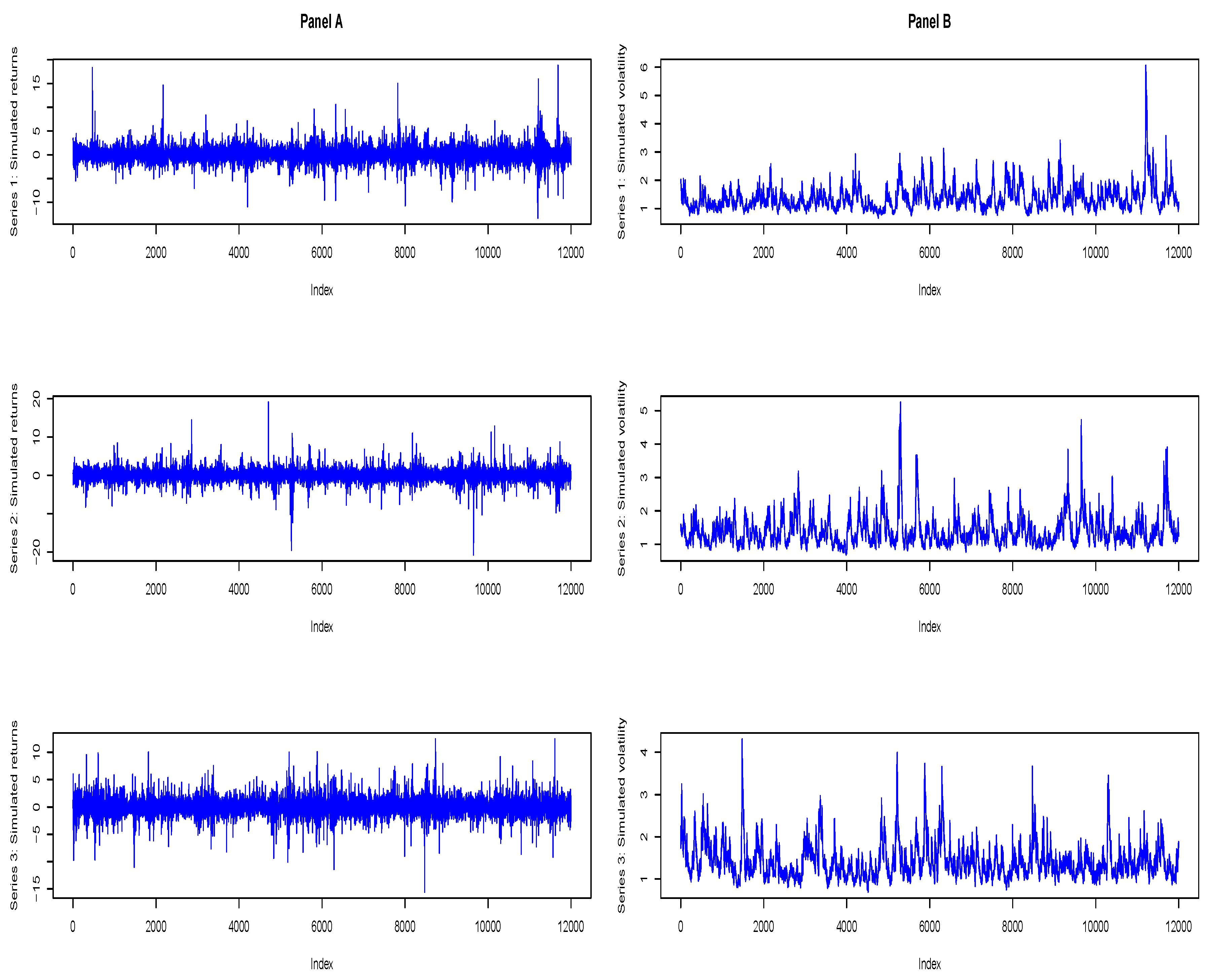

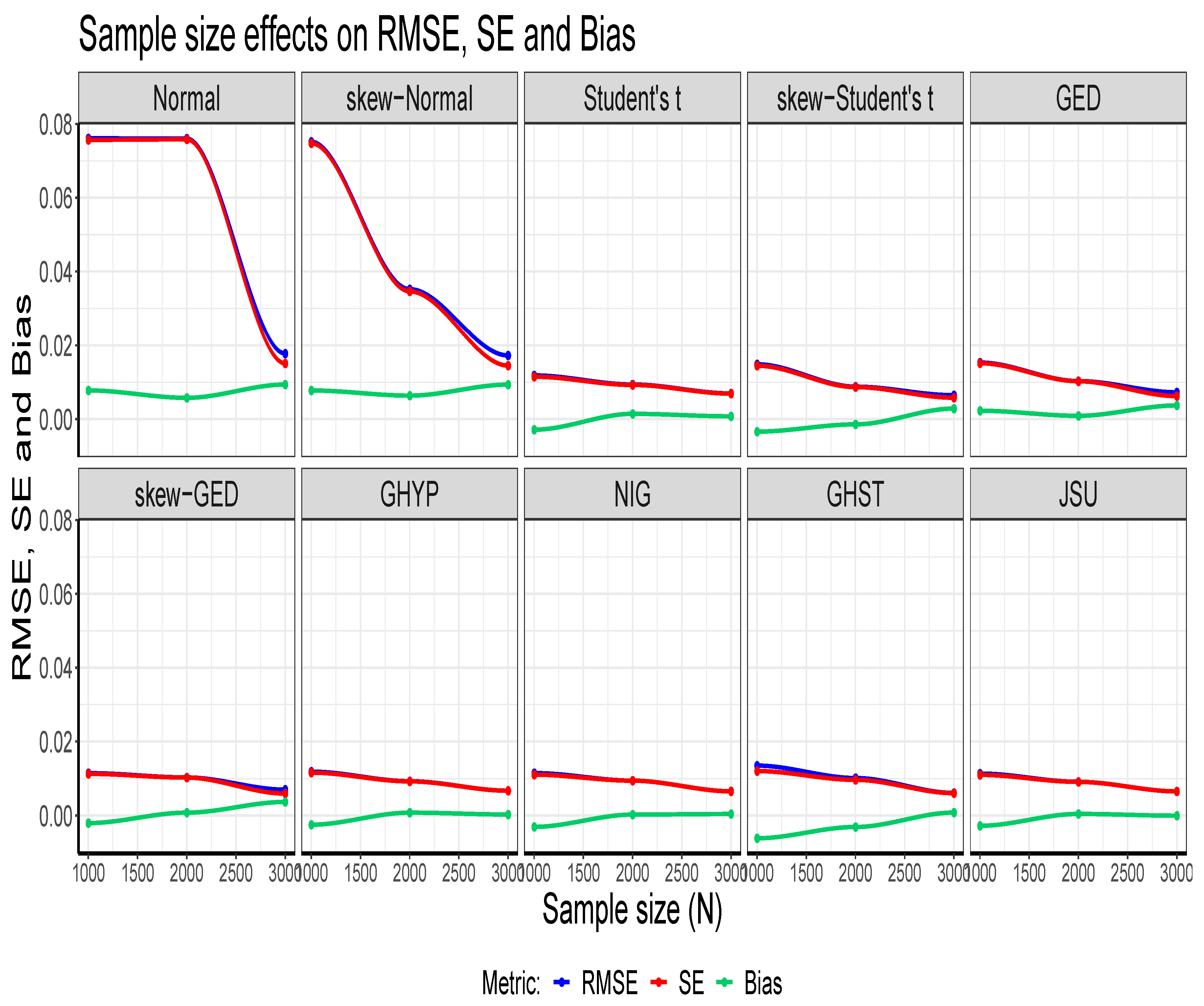

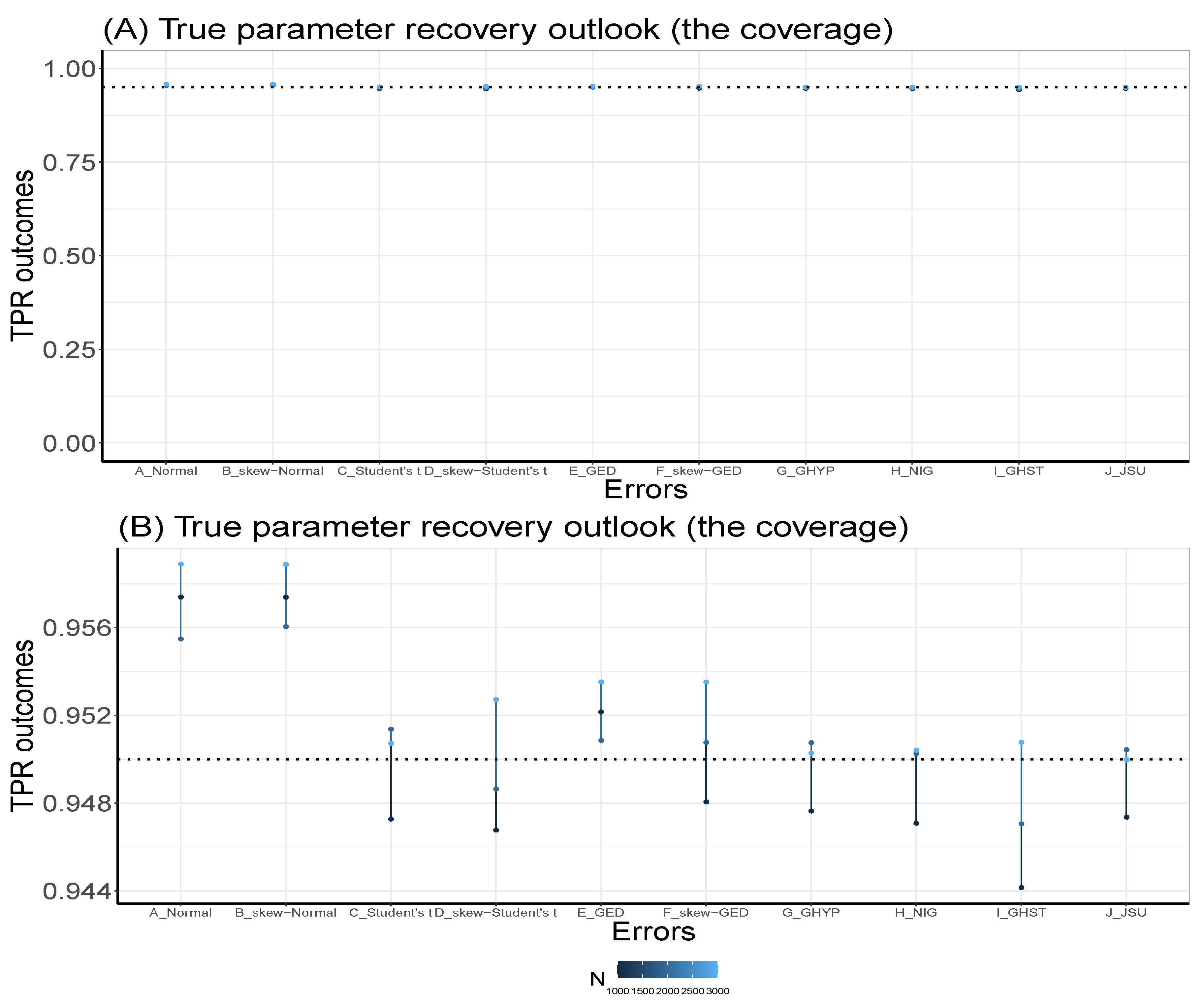

- Set the seed: Simulation code generates a different sequence of random numbers each time it is run unless a seed is set [43]. Setting a seed initialises the random number generator [4] and ensures reproducibility, so that the same result is obtained on different runs of the simulation process [44]. The seed needs to be set only once for each simulation, at the start of the simulation session [2,4], and it is better to use the same seed values throughout the process [2].

Now, through the GARCH model, this study carries out an MCS experiment to ascertain whether the pattern or arrangement of the seed values affects the efficiency and consistency properties of the estimators. Two sets of seeds are used for the experiment, each containing three different patterns of seed values. The first set is S1 = {12345, 54321, 15243}, while the second set is S2 = {34567, 76543, 36547}. In each set, the seed values are first arranged in ascending order, then in reverse order, and finally in a mixed order. The simulation uses GARCH(1,1)-Student’s t, with 3 degrees of freedom, as the true model under four assumed error distributions: the Normal, Student’s t, Generalised Error Distribution (GED) and Generalised Hyperbolic (GHYP) distributions. Details on these error distributions can be found in [24,45]. The true parameter values used are (μ, ω, α, β) = (0.0678, 0.0867, 0.0931, 0.9059), obtained by fitting GARCH(1,1)-Student’s t to the SA bond return data.

Using each of the seed patterns in turn, simulated datasets of sample size N = 12000, each replicated 1000 times, are generated from these parameter values. However, because the effect of initial values in the data generating process may lead to size distortion [46], the first N = {11000, 10000, 9000, 8000} observations of the generated 12000 are discarded in turn to circumvent such distortion. That is, only the last N = {1000, 2000, 3000, 4000} observations are used under each of the four assumed error distributions, as shown in Table A1, Appendix A. These trimming steps follow the simulation structure of Feng and Shi [28] (see footnote 2). An observation-driven process like the GARCH can be size distorted with regard to its kurtosis, where strong size distortion may result from high kurtosis [47].

Extracts of the RMSE and SE outcomes for the GARCH volatility persistence estimator are shown in Table A1. For S1 in Panel A of the table, as N tends to its peak, the RMSE ranked from lowest to highest under the four error distribution assumptions is Student’s t, GHYP, GED and Normal, while the SE ranked from lowest to highest is GHYP, Student’s t, GED and Normal, for the three arrangements of seed values. For S2 in Panel B of the table, as N reaches its peak at 4000, the RMSE ranked from lowest to highest is Student’s t, GHYP, GED and Normal, while the SE ranked from lowest to highest is GHYP, GED, Student’s t and Normal, for the three patterns of seed values. Hence, efficiency and precision in terms of RMSE and SE are the same as the sample size N becomes larger under the three seeds, regardless of the arrangement of the seed values under S2, as also observed under S1. In addition, the consistency patterns of the estimator under the seed values in S1 are roughly the same; this also applies to the seed values in S2. The outcomes are displayed as trend lines within the 95% confidence intervals in Figure 2 for the three seed values of S1 in Panel A and S2 in Panel B, where the efficiency and consistency outcomes are roughly the same as N increases.

To summarise, this study observes that, as N → ∞, the pattern or arrangement of the seed values does not affect the estimator’s overall consistency and efficiency properties, although this may depend on the quality of the model used. The seed is primarily used to ensure reproducibility. Panels C and D of the figure further reveal that the RMSE/SE → 0 as N → ∞ for the four error distributions in S1 and S2.

Table A1 further shows that the MCS estimator considerably recovers the true parameter at the 95% nominal recovery level, where some of the estimates even recover the complete true value (0.9990) with TPR outcomes of 95%. These recovery outcomes can be seen in the visual plots of Figure 3 (or as shown in Panels A and B of Figure A1, Appendix B), where Panels A(i) and B(i) reveal that the MCS estimates perform quite well in recovering the true parameter, as shown by the closeness of the TPR outcomes to the 95% (i.e., 0.95) nominal recovery level for S1 and S2, respectively. The bunched-up TPR outcomes in Panels A(i) and B(i) are clearly spread out in Panels A(ii) and B(ii) for S1 and S2, respectively. From these recovery outputs, two distinct features can be observed. First, the TPR results do not depend on the sample size, as shown in Panels A and B of Figure 4 for S1 and S2, which is a feature of coverage probability (see [11]); second, the closer (farther) the MCS estimate is to zero, the smaller (larger) the TPR outcome, as revealed in Panels C and D of the figure.
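The reproducibility role of the seed described above can be illustrated with a minimal sketch. It uses Python's standard `random` module rather than the R session used in the study, and the helper name `draw_innovations` is hypothetical; only the seed values are taken from the text.

```python
import random


def draw_innovations(seed, n):
    """Return n standard-normal draws from a generator initialised with `seed`.

    Hypothetical helper for illustration; the study itself sets seeds in R.
    """
    rng = random.Random(seed)  # private generator, global state is untouched
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


# Seed set S1 from the text: ascending, reversed and mixed patterns.
S1 = [12345, 54321, 15243]

runs = {seed: draw_innovations(seed, 5) for seed in S1}
same_seed_repeat = draw_innovations(12345, 5)

# Same seed, same sequence: this is what makes a simulation reproducible.
reproducible = runs[12345] == same_seed_repeat
# A different seed gives a different sequence of random numbers.
distinct = runs[12345] != runs[54321]

print(reproducible, distinct)
```

The sketch only shows that a seed fixes the random sequence; whether the seed pattern affects estimator properties is the empirical question addressed by the experiment in the text.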

- Next, simulated observations are generated using the true sampling distribution or the true model, given one or more sets of fixed parameters. Generation of simulated datasets through the GARCH model is carried out using the R package "rugarch". Random data generation with this package can be implemented using either of two approaches. The first approach carries out the data generating simulation directly on a fitted object "fit" using the ugarchsim function [4,24]. The second approach uses the ugarchpath function, which enables simulation of a desired number of volatility paths through different parameter combinations [4,24].

The simulation or data generating process can be run once or replicated multiple times. This study carries out another MCS investigation through the GARCH model to determine the effect (on the outcomes) of running a given GARCH simulation once or replicating it multiple times. That is, for a given sample size and seed value, the outcome of running the simulation once is compared with that of running it with different numbers of replications, such as 2500, 1000 and 300. This MCS experiment uses GARCH(1,1)-Student’s t, with 3 degrees of freedom, as the true model under four assumed error distributions: the Normal, Student’s t, GED and GHYP. However, any non-Normal error distribution (apart from the Student’s t used here) can also be used with the GARCH(1,1) model as the true model. The GARCH(1,1)-Student’s t fitted to the SA bond return data yields the true parameter values (μ, ω, α, β) = (0.0678, 0.0867, 0.0931, 0.9059).

Using these parameter values, datasets of sample size N = 12000 are generated in each of the four distinct simulations (i.e., simulations with 1, 2500, 1000 and 300 replicates). After the necessary trimming in each simulation, to avoid the initial-values effect, the last N = {1000, 2000, 3000} observations of the generated 12000 are used in turn under the four assumed innovation distributions. That is, datasets of these three sample sizes are generated consecutively, first simulated once and then replicated L = {2500, 1000, 300} times. From the modelling outputs, it is observed that the log-likelihood (llk), RMSE, SE and bias outcomes of the α̂, β̂ and α̂ + β̂ estimators for each simulation under the four assumed errors are the same for the three sample-size datasets with the same seed value, regardless of whether the simulation is run once or replicated multiple times. For brevity, this study only displays the outcomes of the experiment under the assumed GED error for each run in Table 1. However, increasing the number of replications may reduce sampling uncertainty in meta-statistics [6].
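The data generating and trimming steps described above can be sketched as follows. This is a plain-Python illustration, not the rugarch implementation. The function name `simulate_garch11` is hypothetical, and reading the four true values as (mean, intercept, ARCH coefficient, GARCH coefficient) is an assumption. The Student's t innovations are rescaled to unit variance, which is also an assumption about the standardisation.

```python
import math
import random


def simulate_garch11(n_total, n_keep, mu, omega, alpha, beta, df, seed):
    """Simulate n_total GARCH(1,1) returns and keep only the last n_keep.

    Hypothetical sketch: innovations are Student's t with `df` degrees of
    freedom, rescaled to unit variance (requires df > 2 and alpha + beta < 1).
    """
    rng = random.Random(seed)
    scale = math.sqrt((df - 2.0) / df)      # gives the t draws unit variance
    sigma2 = omega / (1.0 - alpha - beta)   # start at the unconditional variance
    returns = []
    for _ in range(n_total):
        chi2 = rng.gammavariate(df / 2.0, 2.0)            # chi-square(df) draw
        z = scale * rng.gauss(0.0, 1.0) / math.sqrt(chi2 / df)
        eps = math.sqrt(sigma2) * z
        returns.append(mu + eps)
        sigma2 = omega + alpha * eps ** 2 + beta * sigma2  # variance recursion
    return returns[-n_keep:]                # discard the burn-in observations


# True values from the text, in the assumed order (mu, omega, alpha, beta).
params = dict(mu=0.0678, omega=0.0867, alpha=0.0931, beta=0.9059, df=3)

last_1000 = simulate_garch11(12000, 1000, seed=12345, **params)
last_2000 = simulate_garch11(12000, 2000, seed=12345, **params)
print(len(last_1000), len(last_2000))
```

With the same seed, the last 1000 observations are exactly the tail of the last 2000, so the trimmed samples are nested subsets of one generated path.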

- The generated (simulated) data are analysed, and the estimates from them are evaluated using classic methods through meta-statistics to derive relevant information about the estimators. Meta-statistics (see [6]) are performance measures or metrics for assessing the modelling outputs by judging the closeness between an estimate and the true parameter. A few of the frequently used meta-statistical summaries, as described below, include bias, root mean square error (RMSE) and standard error (SE). For more meta-statistics, see [2,6,50].

Bias

Standard Error

RMSE
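As a hedged illustration of the three meta-statistics named above, the sketch below computes them from a set of replicated estimates using their textbook definitions. The function name `meta_statistics` and the input values are hypothetical. Taking SE as the (population) standard deviation of the estimates across replications is an assumption, since the study's own formulas are not reproduced in this section.

```python
import math
import statistics


def meta_statistics(estimates, true_value):
    """Bias, SE and RMSE of replicated estimates around a known true value."""
    bias = statistics.fmean(estimates) - true_value
    se = statistics.pstdev(estimates)  # spread of the estimates (assumed definition)
    rmse = math.sqrt(statistics.fmean([(e - true_value) ** 2 for e in estimates]))
    return {"bias": bias, "se": se, "rmse": rmse}


# Hypothetical persistence estimates from four replications (illustration only).
hypothetical_estimates = [0.98, 0.97, 1.01, 0.99]
summary = meta_statistics(hypothetical_estimates, true_value=0.9990)

# Under these definitions, RMSE^2 = bias^2 + SE^2.
identity_gap = summary["rmse"] ** 2 - (summary["bias"] ** 2 + summary["se"] ** 2)

# The same relation links reported values in Table 1 (N = 1000, first estimator):
# bias 0.0328 and SE 0.0383 combine to the reported RMSE of 0.0504.
table_check = round(math.hypot(0.0328, 0.0383), 4)
print(summary, table_check)
```

The decomposition shows why an estimator can have a small SE and still a large RMSE: any systematic bias enters the RMSE directly.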

2.4.4. Discussion and Summary

3. Results: Simulation and Empirical

3.1. Practical Illustrations of the Simulation Design: Application to Bond Return Data

3.1.1. The Background

3.1.2. Aim of the Simulation Study

3.1.3. Research Questions

- Which among the assumed error distributions is the most appropriate from the fGARCH process simulation for volatility estimation?

- Financial data are fat-tailed [76], i.e., non-Normal. Hence, will the combined volatility estimator of the most suitable error assumption still be consistent under departure from the Normal assumption?

- What type of consistency (i.e., strong, weak or inconsistent), in terms of RMSE and SE, does the fGARCH estimator exhibit?

- How well does the MCS estimator recover the true parameter?

3.1.4. Method of Implementation

3.2. Empirical Verification

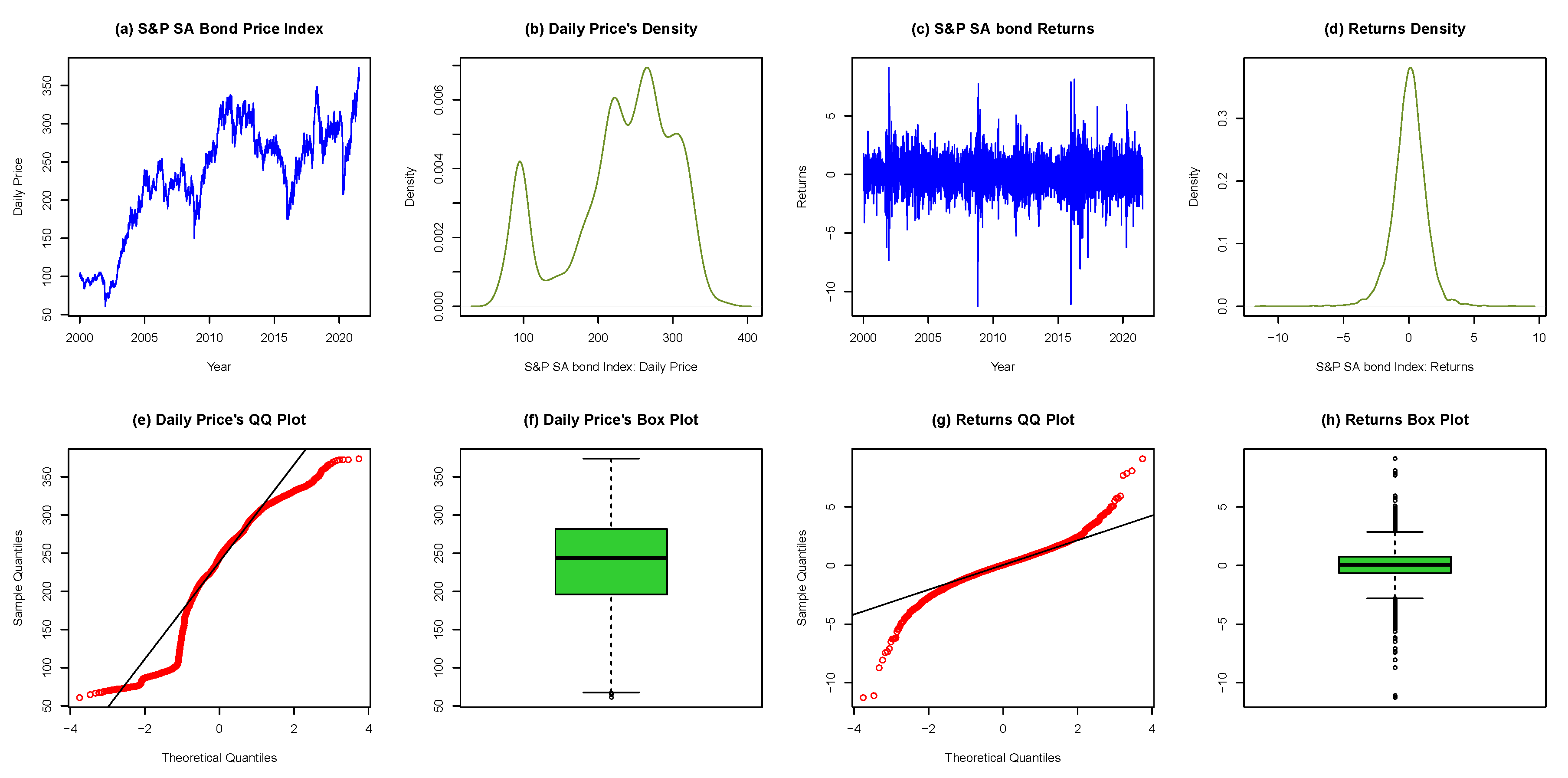

3.2.1. Exploratory Data Analysis

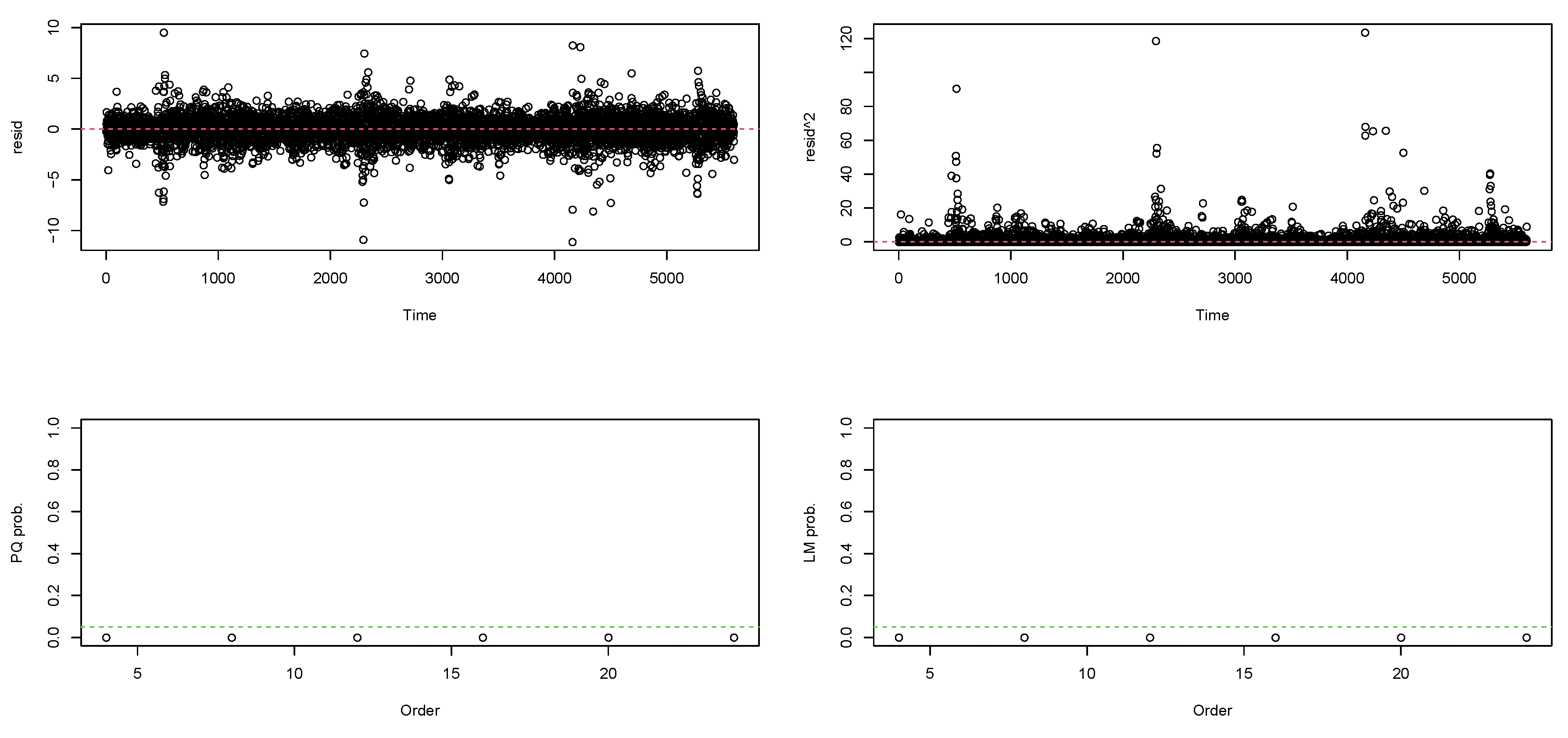

3.2.2. Tests for Serial Correlation and Heteroscedasticity

3.2.3. Selection of the Most Suitable Error Distribution

4. Discussion and Summarised Conclusion

5. Conclusions

5.1. Limitations in the Study

5.2. Future Research Interest

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| MCS | Monte Carlo simulation |

| SA | South Africa |

| GARCH | Generalised Autoregressive Conditional Heteroscedasticity |

| ugarchsim | Univariate GARCH Simulation |

| ugarchpath | Univariate GARCH Path Simulation |

| ARCH | Autoregressive Conditional Heteroscedasticity |

| TPR | True Parameter Recovery |

| S&P | Standard & Poor |

| ARMA | Autoregressive Moving Average |

| ARIMA | Autoregressive Integrated Moving Average |

| i.i.d. | Independent and identically distributed |

| MLE | Maximum likelihood estimation |

| QMLE | Quasi-maximum likelihood estimation |

| fGARCH | family GARCH |

| sGARCH | simple GARCH |

| AVGARCH | Absolute Value GARCH |

| GJR GARCH | Glosten-Jagannathan-Runkle GARCH |

| TGARCH | Threshold GARCH |

| NGARCH | Nonlinear ARCH |

| NAGARCH | Nonlinear Asymmetric GARCH |

| EGARCH | Exponential GARCH |

| apARCH | Asymmetric Power ARCH |

| CGARCH | Component GARCH |

| MCGARCH | Multiplicative Component GARCH |

| α + β | Persistence |

| DGP | Data generation process |

| RMSE | Root mean square error |

| SE | Standard error |

| GED | Generalised Error Distribution |

| GHYP | Generalised Hyperbolic |

| NIG | Normal Inverse Gaussian |

| GHST | Generalised Hyperbolic Skew-Student’s t |

| JSU | Johnson’s reparametrised SU |

| llk | log-likelihood |

| EDA | Exploratory Data Analysis |

| Q-Q | Quantile-Quantile |

| LM | Lagrange Multiplier |

| PQ | Portmanteau-Q |

| WLB | Weighted Ljung-Box |

| AIC | Akaike information criterion |

| BIC | Bayesian information criterion |

| HQIC | Hannan-Quinn information criterion |

| SIC | Shibata information criterion |

| AP-GoF | Adjusted Pearson Goodness-of-Fit |

| p-value | Probability value |

| GAS | Generalised Autoregressive Score |

Appendix A. Outcomes of different patterns of seed values for sets S1 and S2

| Panel A (S1) | | Seed: 12345 | | | | Seed: 54321 | | | | Seed: 15243 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Error distribution | N | Persistence estimate | RMSE | SE | TPR (95%) | Persistence estimate | RMSE | SE | TPR (95%) | Persistence estimate | RMSE | SE | TPR (95%) |
| Normal | 1000 | 0.9990 | 0.0862 | 0.0862 | 95.00% | 0.9563 | 0.0757 | 0.0625 | 90.94% | 0.9909 | 0.0801 | 0.0796 | 94.23% |
| | 2000 | 0.9771 | 0.0934 | 0.0908 | 92.91% | 0.9903 | 0.0419 | 0.0410 | 94.17% | 0.9839 | 0.0490 | 0.0466 | 93.56% |
| | 3000 | 0.9727 | 0.0756 | 0.0709 | 92.50% | 0.9850 | 0.0373 | 0.0345 | 93.67% | 0.9791 | 0.0591 | 0.0557 | 93.11% |
| | 4000 | 0.9700 | 0.0693 | 0.0629 | 92.24% | 0.9846 | 0.0412 | 0.0387 | 93.64% | 0.9972 | 0.0304 | 0.0303 | 94.83% |
| Student’s t | 1000 | 0.9990 | 0.0525 | 0.0525 | 95.00% | 0.9833 | 0.0441 | 0.0412 | 93.50% | 0.9990 | 0.0768 | 0.0768 | 95.00% |
| | 2000 | 0.9902 | 0.0500 | 0.0492 | 94.16% | 0.9990 | 0.0349 | 0.0349 | 95.00% | 0.9958 | 0.0388 | 0.0386 | 94.69% |
| | 3000 | 0.9973 | 0.0327 | 0.0327 | 94.83% | 0.9977 | 0.0308 | 0.0308 | 94.87% | 0.9956 | 0.0318 | 0.0316 | 94.68% |
| | 4000 | 0.9918 | 0.0277 | 0.0267 | 94.31% | 0.9974 | 0.0258 | 0.0258 | 94.85% | 0.9963 | 0.0247 | 0.0246 | 94.74% |
| GED | 1000 | 0.9875 | 0.0719 | 0.0710 | 93.90% | 0.9688 | 0.0499 | 0.0397 | 92.13% | 0.9899 | 0.0630 | 0.0624 | 94.13% |
| | 2000 | 0.9663 | 0.0608 | 0.0512 | 91.89% | 0.9908 | 0.0336 | 0.0326 | 94.22% | 0.9847 | 0.0387 | 0.0360 | 93.64% |
| | 3000 | 0.9684 | 0.0441 | 0.0317 | 92.09% | 0.9846 | 0.0347 | 0.0315 | 93.63% | 0.9795 | 0.0385 | 0.0333 | 93.15% |
| | 4000 | 0.9692 | 0.0410 | 0.0282 | 92.16% | 0.9833 | 0.0328 | 0.0288 | 93.51% | 0.9839 | 0.0300 | 0.0259 | 93.57% |
| GHYP | 1000 | 0.9940 | 0.0557 | 0.0555 | 94.52% | 0.9785 | 0.0437 | 0.0386 | 93.05% | 0.9897 | 0.0657 | 0.0650 | 94.12% |
| | 2000 | 0.9748 | 0.0507 | 0.0446 | 92.70% | 0.9979 | 0.0328 | 0.0328 | 94.89% | 0.9871 | 0.0364 | 0.0344 | 93.87% |
| | 3000 | 0.9780 | 0.0353 | 0.0284 | 93.00% | 0.9901 | 0.0305 | 0.0292 | 94.15% | 0.9849 | 0.0325 | 0.0293 | 93.66% |
| | 4000 | 0.9776 | 0.0322 | 0.0241 | 92.97% | 0.9898 | 0.0263 | 0.0247 | 94.12% | 0.9892 | 0.0247 | 0.0226 | 94.07% |

| Panel B (S2) | | Seed: 34567 | | | | Seed: 76543 | | | | Seed: 36547 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Error distribution | N | Persistence estimate | RMSE | SE | TPR (95%) | Persistence estimate | RMSE | SE | TPR (95%) | Persistence estimate | RMSE | SE | TPR (95%) |
| Normal | 1000 | 0.9856 | 0.0583 | 0.0568 | 93.72% | 0.9942 | 0.0424 | 0.0421 | 94.54% | 0.9823 | 0.3888 | 0.3884 | 93.41% |
| | 2000 | 0.9814 | 0.0396 | 0.0354 | 93.33% | 0.9891 | 0.0370 | 0.0357 | 94.06% | 0.9806 | 0.1419 | 0.1407 | 93.25% |
| | 3000 | 0.9845 | 0.0708 | 0.0693 | 93.62% | 0.9809 | 0.0334 | 0.0281 | 93.28% | 0.9822 | 0.0805 | 0.0787 | 93.40% |
| | 4000 | 0.9990 | 0.0397 | 0.0397 | 95.00% | 0.9778 | 0.0326 | 0.0248 | 92.98% | 0.9779 | 0.0575 | 0.0535 | 92.99% |
| Student’s t | 1000 | 0.9971 | 0.0474 | 0.0474 | 94.82% | 0.9990 | 0.0422 | 0.0422 | 95.00% | 0.9990 | 0.0329 | 0.0329 | 95.00% |
| | 2000 | 0.9789 | 0.0364 | 0.0303 | 93.08% | 0.9990 | 0.0281 | 0.0281 | 95.00% | 0.9990 | 0.0315 | 0.0315 | 95.00% |
| | 3000 | 0.9781 | 0.0326 | 0.0249 | 93.01% | 0.9975 | 0.0237 | 0.0236 | 94.86% | 0.9990 | 0.0234 | 0.0234 | 95.00% |
| | 4000 | 0.9871 | 0.0253 | 0.0223 | 93.87% | 0.9955 | 0.0218 | 0.0215 | 94.67% | 0.9946 | 0.0238 | 0.0234 | 94.58% |
| GED | 1000 | 0.9802 | 0.0463 | 0.0423 | 93.21% | 0.9899 | 0.0389 | 0.0378 | 94.13% | 0.9986 | 0.0490 | 0.0490 | 94.96% |
| | 2000 | 0.9726 | 0.0386 | 0.0282 | 92.49% | 0.9898 | 0.0280 | 0.0265 | 94.13% | 0.9879 | 0.0388 | 0.0371 | 93.94% |
| | 3000 | 0.9710 | 0.0398 | 0.0282 | 92.33% | 0.9820 | 0.0276 | 0.0218 | 93.38% | 0.9808 | 0.0303 | 0.0243 | 93.27% |
| | 4000 | 0.9800 | 0.0285 | 0.0213 | 93.19% | 0.9782 | 0.0284 | 0.0194 | 93.02% | 0.9752 | 0.0321 | 0.0215 | 92.73% |
| GHYP | 1000 | 0.9863 | 0.0436 | 0.0417 | 93.80% | 0.9928 | 0.0383 | 0.0378 | 94.41% | 0.9990 | 0.0370 | 0.0370 | 95.00% |
| | 2000 | 0.9744 | 0.0377 | 0.0285 | 92.66% | 0.9952 | 0.0265 | 0.0262 | 94.64% | 0.9990 | 0.0358 | 0.0358 | 95.00% |
| | 3000 | 0.9737 | 0.0351 | 0.0242 | 92.59% | 0.9872 | 0.0243 | 0.0213 | 93.88% | 0.9894 | 0.0256 | 0.0237 | 94.09% |
| | 4000 | 0.9810 | 0.0278 | 0.0212 | 93.29% | 0.9835 | 0.0246 | 0.0192 | 93.53% | 0.9816 | 0.0275 | 0.0213 | 93.35% |
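The TPR values tabulated above appear numerically consistent with scaling the 95% nominal level by the ratio of the estimate to the true persistence value 0.9990. That relation is inferred here from the table entries alone; it is an assumption, not a formula quoted from the study, and the function name `tpr_percent` is hypothetical. A minimal sketch under that assumption:

```python
def tpr_percent(estimate, true_value, nominal=0.95):
    """Assumed TPR relation: nominal level scaled by estimate / true value.

    Inferred from the tabulated values for illustration only.
    """
    return 100.0 * nominal * estimate / true_value


TRUE_PERSISTENCE = 0.9990  # true value quoted in the text

# (estimate, reported TPR in %) pairs copied from the Normal rows, N = 1000.
reported = [(0.9990, 95.00), (0.9563, 90.94), (0.9909, 94.23)]

gaps = [abs(tpr_percent(est, TRUE_PERSISTENCE) - tpr) for est, tpr in reported]
print([round(g, 4) for g in gaps])
```

Under this reading, an estimate equal to the true value gives exactly the nominal 95%, and estimates closer to zero give smaller TPR values, in line with the second feature noted in the text.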

Appendix B. Further Visual Illustrations of S1 and S2 TPR Outcomes

References

1. De Silva, H.; McMurran, G.M.; Miller, M.N. Diversification and the volatility risk premium. In Factor Investing; Jurczenko, E., Ed.; Elsevier, 2017; chapter 14, pp. 365–387.

2. Morris, T.P.; White, I.R.; Crowther, M.J. Using simulation studies to evaluate statistical methods. Statistics in Medicine 2019, 38, 2074–2102.

3. Hallgren, K.A. Conducting simulation studies in the R programming environment. Tutorials in Quantitative Methods for Psychology 2013, 9, 43–60.

4. Ghalanos, A. rugarch: Univariate GARCH models. R package version 1.4-7, 2022.

5. Ardia, D.; Boudt, K.; Catania, L. Generalized autoregressive score models in R: The GAS package. Journal of Statistical Software 2019, 88, 1–28.

6. Chalmers, R.P.; Adkins, M.C. Writing effective and reliable Monte Carlo simulations with the SimDesign package. The Quantitative Methods for Psychology 2020, 16, 248–280.

7. Wickham, H.; Averick, M.; Bryan, J.; Chang, W.; McGowan, L.; François, R.; Grolemund, G.; Hayes, A.; Henry, L.; Hester, J.; Kuhn, M.; Pedersen, T.; Miller, E.; Bache, S.; Müller, K.; Ooms, J.; Robinson, D.; Seidel, D.; Spinu, V.; Takahashi, K.; Vaughan, D.; Wilke, C.; Woo, K.; Yutani, H. Welcome to the Tidyverse. Journal of Open Source Software 2019, 4, 1–6.

8. Bratley, P.; Fox, B.L.; Schrage, L.E. A guide to simulation, second ed.; Springer Science & Business Media: New York, 2011; pp. 1–397.

9. Jones, O.; Maillardet, R.; Robinson, A. Introduction to scientific programming and simulation using R, second ed.; Chapman & Hall/CRC: Florida, 2014; pp. 1–573.

10. Kleijnen, J.P. Design and analysis of simulation experiments, second ed.; Vol. 230, Springer: New York, 2015; pp. 1–322.

11. Hilary, T. Descriptive statistics for research, 2002.

12. Datastream. Thomson Reuters Datastream. [Online]. Available at: Subscription Service (Accessed: June 2021), 2021.

13. Bollerslev, T. Generalized autoregressive conditional heteroskedasticity. Journal of Econometrics 1986, 31, 307–327.

14. Engle, R.F. Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom inflation. Econometrica 1982, 50, 987–1008.

15. Kim, S.Y.; Huh, D.; Zhou, Z.; Mun, E.Y. A comparison of Bayesian to maximum likelihood estimation for latent growth models in the presence of a binary outcome. International Journal of Behavioral Development 2020, 44, 447–457.

16. McNeil, A.J.; Frey, R. Estimation of tail-related risk measures for heteroscedastic financial time series: An extreme value approach. Journal of Empirical Finance 2000, 7, 271–300.

17. Smith, R.L. Statistics of extremes, with applications in environment, insurance, and finance. Extreme values in finance, telecommunications, and the environment, 2003; 20–97.

18. Maciel, L.d.S.; Ballini, R. Value-at-risk modeling and forecasting with range-based volatility models: Empirical evidence. Revista Contabilidade e Financas 2017, 28, 361–376.

19. Nelson, D.B. Conditional heteroskedasticity in asset returns: A new approach. Econometrica 1991, 59, 347–370.

20. Samiev, S. GARCH (1,1) with exogenous covariate for EUR/SEK exchange rate volatility: On the effects of global volatility shock on volatility. Master of science in economics, Umea University, 2012.

21. Zivot, E. Practical issues in the analysis of univariate GARCH models. In Handbook of financial time series; Springer: Berlin, Heidelberg, 2009; pp. 113–155.

22. Fan, J.; Qi, L.; Xiu, D. Quasi-maximum likelihood estimation of GARCH models with heavy-tailed likelihoods. Journal of Business and Economic Statistics 2014, 32, 178–191.

23. Engle, R.F.; Bollerslev, T. Modelling the persistence of conditional variances. Econometric Reviews 1986, 5, 1–50.

24. Ghalanos, A. Introduction to the rugarch package. (Version 1.3-8), 2018.

25. Chou, R.Y. Volatility persistence and stock valuations: Some empirical evidence using GARCH. Journal of Applied Econometrics 1988, 3, 279–294.

26. Francq, C.; Zakoïan, J.M. Maximum likelihood estimation of pure GARCH and ARMA-GARCH processes. Bernoulli 2004, 10, 605–637.

27. Bollerslev, T.; Wooldridge, J.M. Quasi-maximum likelihood estimation and inference in dynamic models with time-varying covariances. Econometric Reviews 1992, 11, 143–172.

28. Feng, L.; Shi, Y. A simulation study on the distributions of disturbances in the GARCH model. Cogent Economics and Finance 2017, 5, 1355503.

29. Hentschel, L. All in the family: Nesting symmetric and asymmetric GARCH models. Journal of Financial Economics 1995, 39, 71–104.

30. Taylor, S.J. Modelling financial time series, second ed.; World Scientific Publishing Co. Pte. Ltd., 1986; pp. 1–297.

31. Schwert, G.W. Stock volatility and the crash of ’87. The Review of Financial Studies 1990, 3, 77–102.

32. Glosten, L.R.; Jagannathan, R.; Runkle, D.E. On the relation between the expected value and the volatility of the nominal excess return on stocks. The Journal of Finance 1993, 48, 1779–1801.

33. Zakoian, J.M. Threshold heteroscedastic models. Journal of Economic Dynamics and Control 1994, 18, 931–955.

34. Higgins, M.L.; Bera, A.K. A class of nonlinear ARCH models. International Economic Review 1992, 33, 137–158.

35. Engle, R.F.; Ng, V.K. Measuring and testing the impact of news on volatility. The Journal of Finance 1993, 48, 1749–1778.

36. Ding, Z.; Granger, C.W.; Engle, R.F. A long memory property of stock market returns and a new model. Journal of Empirical Finance 1993, 1, 83–106.

37. Geweke, J. Comment on: Modelling the persistence of conditional variances. Econometric Reviews 1986, 5, 57–61.

38. Pantula, S.G. Comment: Modelling the persistence of conditional variances. Econometric Reviews 1986, 5, 71–74.

39. Chalmers, P. Introduction to Monte Carlo simulations with applications in R using the SimDesign package 2019. pp. 1–46.

40. Zeileis, A.; Grothendieck, G. zoo: S3 infrastructure for regular and irregular time series. Journal of Statistical Software 2005, 14, 1–27.

41. Qiu, D. aTSA: Alternative time series analysis, 2015.

42. Hyndman, R.J.; Khandakar, Y. Automatic time series forecasting: The forecast package for R. Journal of Statistical Software 2008, 27, 1–22.

43. Danielsson, J. Financial risk forecasting: the theory and practice of forecasting market risk with implementation in R and Matlab; John Wiley & Sons: Chichester, West Sussex, 2011; pp. 1–274.

44. Foote, W.G. Financial engineering analytics: A practice manual using R, 2018.

45. Barndorff-Nielsen, O.E.; Mikosch, T.; Resnick, S. Levy processes: Theory and applications; Birkhauser Science, Springer Nature, Global publisher: London, 2013.

46. Su, J.J. On the oversized problem of Dickey-Fuller-type tests with GARCH errors. Communications in Statistics: Simulation and Computation 2011, 40, 1364–1372.

47. Silvennoinen, A.; Teräsvirta, T. Testing constancy of unconditional variance in volatility models by misspecification and specification tests. Studies in Nonlinear Dynamics and Econometrics 2016, 20, 347–364.

48. Mooney, C.Z. Monte Carlo simulation, first ed.; Vol. 116, SAGE Publications: Thousand Oaks, CA, 1997.

49. Koopman, S.J.; Lucas, A.; Zamojski, M. Dynamic term structure models with score driven time varying parameters: estimation and forecasting. NBP Working Paper No. 258.

50. Sigal, M.J.; Chalmers, P.R. Play it again: Teaching statistics with Monte Carlo simulation. Journal of Statistics Education 2016, 24, 136–156.

51. Harwell, M. A strategy for using bias and RMSE as outcomes in Monte Carlo studies in statistics. Journal of Modern Applied Statistical Methods 2018, 17, 1–16.

52. Yuan, K.H.; Tong, X.; Zhang, Z. Bias and efficiency for SEM with missing data and auxiliary variables: Two-stage robust method versus two-stage ML. Structural Equation Modeling: A Multidisciplinary Journal 2015, 22, 178–192.

53. Wang, C.; Gerlach, R.; Chen, Q. A semi-parametric realized joint value-at-risk and expected shortfall regression framework. arXiv preprint arXiv:1807.02422 2018, pp. 1–39.

54. Gilli, M.; Maringer, D.; Schumann, E. Generating random numbers. In Numerical Methods and Optimization in Finance; Academic Press, 2019; pp. 103–132.

55. Bollerslev, T. A conditionally heteroskedastic time series model for speculative prices and rates of return. The Review of Economics and Statistics 1987, 69, 542–547.

56. Creal, D.; Koopman, S.J.; Lucas, A. Generalized autoregressive score models with applications. Journal of Applied Econometrics 2013, 28, 777–795.

57. Buccheri, G.; Bormetti, G.; Corsi, F.; Lillo, F. A score-driven conditional correlation model for noisy and asynchronous data: An application to high-frequency covariance dynamics. Journal of Business and Economic Statistics 2021, 39, 920–936.

58. Engle, R.F.; Lee, G.G.J. A permanent and transitory component model of stock return volatility. In Cointegration, Causality, and Forecasting: A Festschrift in Honor of Clive W.J. Granger; Oxford University Press, 1999; pp. 475–497.

59. Engle, R.F.; Sokalska, M.E. Forecasting intraday volatility in the US equity market. Multiplicative component GARCH. Journal of Financial Econometrics 2012, 10, 54–83.

60. Javed, F.; Mantalos, P. GARCH-type models and performance of information criteria. Communications in Statistics: Simulation and Computation 2013, 42, 1917–1933.

61. Eling, M. Fitting asset returns to skewed distributions: Are the skew-normal and skew-student good models? Insurance: Mathematics and Economics 2014, 59, 45–56.

62. Lee, Y.H.; Pai, T.Y. REIT volatility prediction for skew-GED distribution of the GARCH model. Expert Syst. Appl. 2010, 37, 4737–4741.

63. Ashour, S.K.; Abdel-hameed, M.A. Approximate skew normal distribution. Journal of Advanced Research 2010, 1, 341–350.

64. Pourahmadi, M. Construction of skew-normal random variables: Are they linear combinations of normal and half-normal? J. Stat. Theory Appl. 2007, 3, 314–328.

65. Azzalini, A.; Capitanio, A. Distributions generated by perturbation of symmetry with emphasis on a multivariate skew t-distribution. Journal of the Royal Statistical Society. Series B: Statistical Methodology 2003, 65, 367–389.

66. Branco, M.D.; Dey, D.K. A general class of multivariate skew-elliptical distributions. Journal of Multivariate Analysis 2001, 79, 99–113.

67. Azzalini, A. A class of distributions which includes the normal ones. Scandinavian Journal of Statistics 1985, 12, 171–178.

68. Francq, C.; Thieu, L.Q. QML inference for volatility models with covariates. Econometric Theory 2019, 35, 37–72.

69. Hoga, Y. Extremal dependence-based specification testing of time series. Journal of Business and Economic Statistics, 2022.

70. Lin, C.H.; Shen, S.S. Can the student-t distribution provide accurate value at risk? Journal of Risk Finance 2006, 7, 292–300.

71. Duda, M.; Schmidt, H. Evaluation of various approaches to value at risk. PhD thesis, Lund University, 2009.

72. Heracleous, M.S. Sample kurtosis, GARCH-t and the degrees of freedom issue. EUR Working Papers, 2007; 1–22.

73. Zivot, E. Univariate GARCH, 2013.

74. White, H. Maximum likelihood estimation of misspecified models. Econometrica 1982, 50, 1–25.

75. Wuertz, D.; Yohan, C.; Setz, T.; Maechler, M.; Boudt, C.; Chausse, P.; Miklovac, M.; Boshnakov, G.N. Rmetrics - Autoregressive conditional heteroskedastic modelling, 2022.

76. Li, Q. Three essays on stock market volatility. Doctor of philosophy thesis, Utah State University, 2008.

77. Chib, S. Monte Carlo methods and Bayesian computation: Overview, 2015.

78. Fisher, T.J.; Gallagher, C.M. New weighted portmanteau statistics for time series goodness of fit testing. Journal of the American Statistical Association 2012, 107, 777–787.

| 1 | Coverage probability is the probability that a confidence interval of estimates contains or covers the true parameter value [11]. |

| 2 | This study only follows the authors’ trimming steps for initial values effect. The other trimming by the authors for "simulation bias" (where some initial numbers of replications are further discarded after the initial value effect adjustment) are not used here because it is observed that it sometimes distorts the estimators’ consistency. |

| True $\alpha$ | True $\beta$ | N | llk | RMSE ($\hat{\alpha}$) | Bias ($\hat{\alpha}$) | SE ($\hat{\alpha}$) | RMSE ($\hat{\beta}$) | Bias ($\hat{\beta}$) | SE ($\hat{\beta}$) | RMSE ($\hat{\alpha}+\hat{\beta}$) | Bias ($\hat{\alpha}+\hat{\beta}$) | SE ($\hat{\alpha}+\hat{\beta}$) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Panel A: Simulation run once | | | | | | | | | | | | |
| 0.0931 | 0.9059 | 1000 | -2020.5 | 0.0504 | 0.0328 | 0.0383 | 0.0551 | -0.0443 | 0.0327 | 0.0719 | -0.0115 | 0.0710 |
| | | 2000 | -3813.8 | 0.0246 | 0.0046 | 0.0241 | 0.0462 | -0.0374 | 0.0271 | 0.0608 | -0.0327 | 0.0512 |
| | | 3000 | -5734.2 | 0.0156 | -0.0037 | 0.0152 | 0.0316 | -0.0269 | 0.0166 | 0.0441 | -0.0306 | 0.0317 |
| Panel B: Simulation run with 2500 replications | | | | | | | | | | | | |
| 0.0931 | 0.9059 | 1000 | -2020.5 | 0.0504 | 0.0328 | 0.0383 | 0.0551 | -0.0443 | 0.0327 | 0.0719 | -0.0115 | 0.0710 |
| | | 2000 | -3813.8 | 0.0246 | 0.0046 | 0.0241 | 0.0462 | -0.0374 | 0.0271 | 0.0608 | -0.0327 | 0.0512 |
| | | 3000 | -5734.2 | 0.0156 | -0.0037 | 0.0152 | 0.0316 | -0.0269 | 0.0166 | 0.0441 | -0.0306 | 0.0317 |
| Panel C: Simulation run with 1000 replications | | | | | | | | | | | | |
| 0.0931 | 0.9059 | 1000 | -2020.5 | 0.0504 | 0.0328 | 0.0383 | 0.0551 | -0.0443 | 0.0327 | 0.0719 | -0.0115 | 0.0710 |
| | | 2000 | -3813.8 | 0.0246 | 0.0046 | 0.0241 | 0.0462 | -0.0374 | 0.0271 | 0.0608 | -0.0327 | 0.0512 |
| | | 3000 | -5734.2 | 0.0156 | -0.0037 | 0.0152 | 0.0316 | -0.0269 | 0.0166 | 0.0441 | -0.0306 | 0.0317 |
| Panel D: Simulation run with 300 replications | | | | | | | | | | | | |
| 0.0931 | 0.9059 | 1000 | -2020.5 | 0.0504 | 0.0328 | 0.0383 | 0.0551 | -0.0443 | 0.0327 | 0.0719 | -0.0115 | 0.0710 |
| | | 2000 | -3813.8 | 0.0246 | 0.0046 | 0.0241 | 0.0462 | -0.0374 | 0.0271 | 0.0608 | -0.0327 | 0.0512 |
| | | 3000 | -5734.2 | 0.0156 | -0.0037 | 0.0152 | 0.0316 | -0.0269 | 0.0166 | 0.0441 | -0.0306 | 0.0317 |
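The RMSE, Bias and SE columns in the table above are linked by the standard decomposition RMSE² = Bias² + SE². The short Python check below applies it to the N = 1000 row; it is a sketch for reading the table, with the triples copied from that row and the variable names chosen here for illustration.

```python
import math

# (RMSE, Bias, SE) triples copied from the N = 1000 row of the table above,
# in the order the three column groups appear.
groups_n1000 = [
    (0.0504, 0.0328, 0.0383),
    (0.0551, -0.0443, 0.0327),
    (0.0719, -0.0115, 0.0710),
]

# RMSE implied by the decomposition RMSE^2 = Bias^2 + SE^2.
implied_rmse = [math.sqrt(bias ** 2 + se ** 2) for _, bias, se in groups_n1000]

for (rmse, bias, se), implied in zip(groups_n1000, implied_rmse):
    print(f"reported RMSE = {rmse:.4f}, implied RMSE = {implied:.4f}")
```

The reported and implied values agree to within rounding. The bias in the third group also equals the sum of the biases in the first two, which is what one would expect if the third group refers to the sum of the two estimated coefficients.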

| Panel | N | $\hat{\alpha}$ | $\hat{\beta}$ | $\hat{\alpha}+\hat{\beta}$ | llk | RMSE ($\hat{\alpha}$) | Bias ($\hat{\alpha}$) | SE ($\hat{\alpha}$) | RMSE ($\hat{\beta}$) | Bias ($\hat{\beta}$) | SE ($\hat{\beta}$) | RMSE ($\hat{\alpha}+\hat{\beta}$) | Bias ($\hat{\alpha}+\hat{\beta}$) | SE ($\hat{\alpha}+\hat{\beta}$) | TPR (95%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Panel A: Normal | 8000 | 0.0835 | 0.9234 | 1.0069 | -13860.4 | 0.0390 | 0.0087 | 0.0380 | 0.0377 | -0.0009 | 0.0377 | 0.0761 | 0.0078 | 0.0757 | 95.74% |
| | 9000 | 0.0790 | 0.9259 | 1.0049 | -15490.9 | 0.0297 | 0.0042 | 0.0294 | 0.0465 | 0.0015 | 0.0464 | 0.0760 | 0.0058 | 0.0758 | 95.55% |
| | 10000 | 0.0803 | 0.9281 | 1.0085 | -17081.0 | 0.0088 | 0.0055 | 0.0069 | 0.0091 | 0.0038 | 0.0082 | 0.0178 | 0.0094 | 0.0151 | 95.89% |
| Panel B: skew-Normal | 8000 | 0.0834 | 0.9235 | 1.0069 | -13860.4 | 0.0385 | 0.0086 | 0.0375 | 0.0372 | -0.0009 | 0.0372 | 0.0752 | 0.0078 | 0.0748 | 95.74% |
| | 9000 | 0.0792 | 0.9262 | 1.0055 | -15490.6 | 0.0138 | 0.0044 | 0.0131 | 0.0216 | 0.0019 | 0.0215 | 0.0352 | 0.0064 | 0.0346 | 95.61% |
| | 10000 | 0.0801 | 0.9284 | 1.0085 | -17080.2 | 0.0085 | 0.0053 | 0.0066 | 0.0089 | 0.0041 | 0.0079 | 0.0173 | 0.0094 | 0.0145 | 95.89% |
| Panel C: Student’s t | 8000 | 0.0736 | 0.9226 | 0.9963 | -13337.1 | 0.0060 | -0.0012 | 0.0058 | 0.0059 | -0.0017 | 0.0056 | 0.0118 | -0.0029 | 0.0115 | 94.73% |
| | 9000 | 0.0727 | 0.9279 | 1.0006 | -14912.0 | 0.0054 | -0.0021 | 0.0050 | 0.0056 | 0.0036 | 0.0043 | 0.0094 | 0.0014 | 0.0093 | 95.14% |
| | 10000 | 0.0735 | 0.9263 | 0.9999 | -16428.3 | 0.0043 | -0.0013 | 0.0041 | 0.0035 | 0.0020 | 0.0028 | 0.0070 | 0.0008 | 0.0069 | 95.07% |
| Panel D: skew-Student’s t | 8000 | 0.0732 | 0.9225 | 0.9957 | -13337.1 | 0.0084 | -0.0016 | 0.0083 | 0.0064 | -0.0018 | 0.0062 | 0.0149 | -0.0034 | 0.0145 | 94.68% |
| | 9000 | 0.0715 | 0.9262 | 0.9977 | -14912.2 | 0.0061 | -0.0033 | 0.0051 | 0.0040 | 0.0019 | 0.0036 | 0.0088 | -0.0014 | 0.0087 | 94.87% |
| | 10000 | 0.0743 | 0.9277 | 1.0020 | -16428.4 | 0.0035 | -0.0005 | 0.0034 | 0.0041 | 0.0034 | 0.0024 | 0.0065 | 0.0029 | 0.0058 | 95.27% |
| Panel E: GED | 8000 | 0.0770 | 0.9244 | 1.0014 | -13386.3 | 0.0079 | 0.0022 | 0.0076 | 0.0076 | 0.0001 | 0.0076 | 0.0153 | 0.0023 | 0.0152 | 95.22% |
| | 9000 | 0.0734 | 0.9266 | 1.0000 | -14966.3 | 0.0056 | -0.0014 | 0.0054 | 0.0053 | 0.0023 | 0.0048 | 0.0103 | 0.0009 | 0.0103 | 95.09% |
| | 10000 | 0.0753 | 0.9275 | 1.0028 | -16492.3 | 0.0036 | 0.0005 | 0.0035 | 0.0042 | 0.0032 | 0.0027 | 0.0073 | 0.0037 | 0.0062 | 95.35% |
| Panel F: skew-GED | 8000 | 0.0750 | 0.9221 | 0.9971 | -13386.2 | 0.0059 | 0.0002 | 0.0059 | 0.0059 | -0.0022 | 0.0054 | 0.0115 | -0.0020 | 0.0113 | 94.81% |
| | 9000 | 0.0734 | 0.9265 | 0.9999 | -14966.0 | 0.0055 | -0.0014 | 0.0054 | 0.0054 | 0.0022 | 0.0049 | 0.0103 | 0.0008 | 0.0103 | 95.08% |
| | 10000 | 0.0753 | 0.9275 | 1.0028 | -16492.3 | 0.0035 | 0.0006 | 0.0035 | 0.0040 | 0.0031 | 0.0025 | 0.0070 | 0.0037 | 0.0060 | 95.35% |
| Panel G: GHYP | 8000 | 0.0732 | 0.9234 | 0.9966 | -13336.3 | 0.0065 | -0.0016 | 0.0063 | 0.0054 | -0.0009 | 0.0053 | 0.0119 | -0.0025 | 0.0116 | 94.76% |
| | 9000 | 0.0720 | 0.9279 | 0.9999 | -14911.4 | 0.0057 | -0.0028 | 0.0050 | 0.0056 | 0.0036 | 0.0043 | 0.0093 | 0.0008 | 0.0093 | 95.08% |
| | 10000 | 0.0729 | 0.9265 | 0.9994 | -16427.7 | 0.0045 | -0.0019 | 0.0040 | 0.0035 | 0.0022 | 0.0027 | 0.0067 | 0.0003 | 0.0067 | 95.03% |
| Panel H: NIG | 8000 | 0.0731 | 0.9229 | 0.9961 | -13343.3 | 0.0058 | -0.0017 | 0.0056 | 0.0057 | -0.0014 | 0.0055 | 0.0115 | -0.0031 | 0.0111 | 94.71% |
| | 9000 | 0.0719 | 0.9275 | 0.9994 | -14919.7 | 0.0059 | -0.0029 | 0.0052 | 0.0053 | 0.0031 | 0.0043 | 0.0095 | 0.0003 | 0.0095 | 95.03% |
| | 10000 | 0.0729 | 0.9266 | 0.9995 | -16438.1 | 0.0045 | -0.0019 | 0.0041 | 0.0034 | 0.0023 | 0.0025 | 0.0066 | 0.0004 | 0.0066 | 95.04% |
| Panel I: GHST | 8000 | 0.0711 | 0.9218 | 0.9930 | -13435.0 | 0.0067 | -0.0036 | 0.0056 | 0.0070 | -0.0025 | 0.0065 | 0.0135 | -0.0062 | 0.0121 | 94.42% |
| | 9000 | 0.0699 | 0.9261 | 0.9960 | -15027.3 | 0.0071 | -0.0049 | 0.0051 | 0.0049 | 0.0018 | 0.0046 | 0.0102 | -0.0031 | 0.0097 | 94.71% |
| | 10000 | 0.0734 | 0.9266 | 0.9999 | -16569.1 | 0.0038 | -0.0014 | 0.0035 | 0.0034 | 0.0022 | 0.0026 | 0.0061 | 0.0008 | 0.0061 | 95.08% |
| Panel J: JSU | 8000 | 0.0731 | 0.9232 | 0.9963 | -13337.1 | 0.0057 | -0.0017 | 0.0055 | 0.0057 | -0.0011 | 0.0055 | 0.0114 | -0.0028 | 0.0110 | 94.74% |
| | 9000 | 0.0719 | 0.9277 | 0.9996 | -14912.4 | 0.0057 | -0.0029 | 0.0050 | 0.0053 | 0.0033 | 0.0042 | 0.0091 | 0.0005 | 0.0091 | 95.04% |
| | 10000 | 0.0727 | 0.9264 | 0.9991 | -16429.3 | 0.0045 | -0.0020 | 0.0040 | 0.0033 | 0.0020 | 0.0026 | 0.0066 | 0.0000 | 0.0066 | 95.00% |

| | Panel A: Normal | Panel B: skew-Normal | Panel C: Student’s t | Panel D: skew-Student’s t | Panel E: GED |
|---|---|---|---|---|---|
| | 0.0164 | 0.0078 | 0.0387 | 0.0177 | 0.0378 |
| | 0.0323 | 0.0278 | 0.0297 | 0.0270 | 0.0311 |
| | 0.0701 | 0.0670 | 0.0690 | 0.0661 | 0.0700 |
| | 0.9093 | 0.9170 | 0.9188 | 0.9236 | 0.9137 |
| | 0.2504 | 0.2344 | 0.3499 | 0.3445 | 0.2879 |
| | 0.2245 | 0.2209 | 0.0729 | 0.0943 | 0.1445 |
| = | 1.4550 | 1.4233 | 1.2362 | 1.2058 | 1.3436 |
| Persistence | 0.9794 | 0.9825 | 0.9764 | 0.9792 | 0.9762 |
| WLB (5) | 0.3227 | 0.8383 | 0.9103 | 1.6060 | 1.3361 |
| p-value (5) | (1.0000) | (1.0000) | (1.0000) | (0.9955) | (0.9995) |
| ARCH LM statistic (7) | 3.0979 | 3.1854 | 3.8897 | 4.1266 | 3.4264 |
| p-value (7) | (0.4953) | (0.4793) | (0.3627) | (0.3287) | (0.4369) |
| AP-GoF | 87.2 | 64.56 | 42.32 | 18.48 | 53.68 |
| p-value | (0.0000) | (0.0000) | (0.0016) | (0.4908) | (0.0000) |
| Log-likelihood | -8909.189 | -8886.553 | -8803.012 | -8790.528 | -8825.745 |
| AIC | 3.1862 | 3.1785 | 3.1486 | 3.1445 | 3.1568 |
| BIC | 3.1969 | 3.1903 | 3.1605 | 3.1576 | 3.1686 |
| SIC | 3.1862 | 3.1785 | 3.1486 | 3.1445 | 3.1567 |
| HQIC | 3.1899 | 3.1826 | 3.1528 | 3.1491 | 3.1609 |
| Run-time (seconds) | 4.3245 | 6.6636 | 7.6463 | 11.9177 | 9.1407 |

| | Panel F: skew-GED | Panel G: GHYP | Panel H: NIG | Panel I: GHST | Panel J: JSU |
|---|---|---|---|---|---|
| | 0.0157 | 0.0156 | 0.0155 | -0.0062 | 0.0159 |
| | 0.0273 | 0.0267 | 0.0261 | 0.0251 | 0.0265 |
| | 0.0665 | 0.0661 | 0.0657 | 0.0650 | 0.0658 |
| | 0.9206 | 0.9241 | 0.9246 | 0.9284 | 0.9243 |
| | 0.2823 | 0.3370 | 0.3341 | 0.3202 | 0.3378 |
| | 0.1592 | 0.0942 | 0.0964 | 0.1163 | 0.0949 |
| = | 1.3048 | 1.2086 | 1.2171 | 1.1942 | 1.2102 |
| Persistence | 0.9797 | 0.9795 | 0.9800 | 0.9826 | 0.9796 |
| WLB (5) | 2.5350 | 1.5990 | 1.8260 | 2.5920 | 1.7170 |
| p-value (5) | (0.7599) | (0.9957) | (0.9822) | (0.7277) | (0.9906) |
| ARCH LM statistic (7) | 3.6331 | 4.0705 | 4.0249 | 4.2354 | 4.0750 |
| p-value (7) | (0.4026) | (0.3365) | (0.3430) | (0.3139) | (0.3359) |
| AP-GoF | 46.18 | 17.01 | 22.23 | 29.37 | 21.66 |
| p-value | (0.0005) | (0.5890) | (0.2730) | (0.0604) | (0.3013) |
| Log-likelihood | -8810.111 | -8790.079 | -8793.107 | -8800.387 | -8791.112 |
| AIC | 3.1515 | 3.1447 | 3.1454 | 3.1480 | 3.1447 |
| BIC | 3.1646 | 3.1589 | 3.1585 | 3.1611 | 3.1578 |
| SIC | 3.1515 | 3.1447 | 3.1454 | 3.1480 | 3.1447 |
| HQIC | 3.1561 | 3.1497 | 3.1500 | 3.1526 | 3.1493 |
| Run-time (seconds) | 19.0058 | 49.9461 | 20.7803 | 16.8525 | 10.6434 |
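The information criteria in the table above are on a per-observation scale (values near 3.15 rather than in the thousands), which is consistent with dividing each criterion by the sample size. The Python sketch below shows one such set of formulas. Several points are assumptions rather than statements from the paper: SIC is read as the Shibata criterion because its values track AIC so closely, the function name `information_criteria` is hypothetical, and `n_obs = 5598` and `n_params = 9` are not reported in this section but were back-solved so that the output matches the Panel A (Normal) column to within rounding.

```python
import math


def information_criteria(loglik, n_obs, n_params):
    """Per-observation AIC, BIC, Shibata (SIC) and Hannan-Quinn (HQIC)."""
    base = -2.0 * loglik / n_obs
    return {
        "AIC": base + 2.0 * n_params / n_obs,
        "BIC": base + n_params * math.log(n_obs) / n_obs,
        "SIC": base + math.log((n_obs + 2.0 * n_params) / n_obs),
        "HQIC": base + 2.0 * n_params * math.log(math.log(n_obs)) / n_obs,
    }


# Log-likelihood taken from the Panel A (Normal) column of the table above;
# n_obs and n_params are back-solved, illustrative assumptions.
ic = information_criteria(loglik=-8909.189, n_obs=5598, n_params=9)

for name, value in ic.items():
    print(f"{name}: {value:.4f}")
```

Because all four criteria share the same likelihood term, they differ only in how heavily they penalise the number of parameters; smaller values indicate a preferred fit.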

| | Panel A: Normal | Panel B: skew-Normal | Panel C: Student’s t | Panel D: skew-Student’s t | Panel E: GED |
|---|---|---|---|---|---|
| Log-likelihood | -8910.136 | -8887.475 | -8803.200 | -8790.782 | -8826.007 |
| AIC | 3.1862 | 3.1784 | 3.1483 | 3.1443 | 3.1565 |
| BIC | 3.1957 | 3.1891 | 3.1590 | 3.1561 | 3.1671 |
| SIC | 3.1862 | 3.1784 | 3.1483 | 3.1443 | 3.1565 |
| HQIC | 3.1895 | 3.1822 | 3.1521 | 3.1484 | 3.1602 |

| | Panel F: skew-GED | Panel G: GHYP | Panel H: NIG | Panel I: GHST | Panel J: JSU |
|---|---|---|---|---|---|
| Log-likelihood | -8810.472 | -8790.315 | -8793.329 | -8802.039 | -8791.340 |
| AIC | 3.1513 | 3.1444 | 3.1452 | 3.1483 | 3.1445 |
| BIC | 3.1631 | 3.1575 | 3.1570 | 3.1601 | 3.1563 |
| SIC | 3.1513 | 3.1444 | 3.1452 | 3.1483 | 3.1445 |
| HQIC | 3.1554 | 3.1490 | 3.1493 | 3.1524 | 3.1486 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).