1. Introduction

Land cover change detection (LCCD) with remote sensing images compares bi-temporal images to extract land cover change [1]. LCCD with remote sensing images can capture large-scale land changes on the Earth's surface promptly, and the resulting land cover change information plays a vital role in landslide and earthquake inventory mapping [2,3,4], environmental quality assessment [5,6,7], natural resource monitoring [8,9], urban development decision-making [10,11,12], crop yield estimation [13], and other applications [14]. Therefore, LCCD with remote sensing images is attractive in practical applications [15,16,17,18].

Over the past decades, various LCCD methods have been developed. According to the type of bi-temporal images used, these methods can be divided into two major groups [19]: change detection with homogeneous remote sensing images (Homo-CD) and change detection with heterogeneous remote sensing images (Hete-CD). Each group is reviewed as follows.

In Homo-CD, the images used for LCCD are homogeneous data acquired by the same remote sensors, with the same spatial-spectral resolution and the same reflectance signatures [14]. Therefore, the images can be compared directly to measure the change magnitude and obtain land cover change information. Researchers have proposed many methods for Homo-CD. One of the most popular categories is contextual information-based methods [20], such as the sliding window-based approach [21], the mathematical model-based method [22], and the adaptive region-based approach [23,24]. In addition, deep learning methods have been widely used for Homo-CD, such as convolutional neural networks [25,26,27] and fully convolutional Siamese networks [28,29]. To cover more ground targets and utilize deep features, many deep learning networks have been constructed with multiscale convolution [30,31] and deep feature fusion [32,33]. Moreover, change trend detection with multitemporal images has become popular in recent years [15,34,35]. Although various change detection methods have been proposed and their excellent performance demonstrates their feasibility and advantages for Homo-CD, two major flaws remain: training deep learning neural networks requires many samples, and preparing a large number of training samples is labor-intensive and time-consuming.

Moreover, even with the rapid development of remote sensing techniques, homogeneous images may be unavailable in many application scenarios. For example, post-event optical satellite images are usually unavailable for detecting land cover change caused by a wildfire or flood at night, whereas pre-event optical images are easily obtained from satellites. In addition, the quality of optical satellite images is easily affected by weather [36,37]. Therefore, to overcome the limitations of Homo-CD in practical applications, Hete-CD has been proposed and has become popular in recent years [38].

Compared with Homo-CD, Hete-CD uses heterogeneous remote sensing images (HRSIs). HRSIs can compensate for the limitations of homogeneous images. For example, an optical image reflects the appearance of ground targets through visible, near-infrared, and short-wave infrared sensors, so illumination and atmospheric conditions strongly affect its quality. In contrast, a Synthetic Aperture Radar (SAR) image represents the physical scattering of ground targets, and the appearance of a target in a SAR image depends on its surface roughness and the wavelength of the microwave energy. Therefore, when an optical image is available before a night flood or landslide, a SAR image is an alternative after the disaster because it is independent of visible light and weather conditions [39]. This makes it possible to assess the damage caused by natural disasters immediately with optical and SAR images in urgent cases. These advantages of Hete-CD increase the corresponding demand in practical engineering.

In recent years, various methods have been proposed for Hete-CD. The most popular approach explores features shared by the bi-temporal HRSIs and measures the similarity between these shared features. Wan et al. explored statistical features from multisensor images for LCCD [40]. Lei et al. extracted the adaptive local structure from HRSIs for Hete-CD [41]. Sun et al. improved the adaptive structure feature based on sparse theory for Hete-CD [42]. In addition, image regression [43,44,45], graph representation theory [46,47,48,49,50], and pixel transformation [51] are feasible for exploring shared features to achieve change detection with HRSIs. Although many traditional methods and their applications have demonstrated the feasibility and advantages of using HRSIs in LCCD, these algorithms are usually complex, and their optimal parameters require trial-and-error experiments.

In addition, deep learning techniques have been widely used to explore deep features from HRSIs and make them comparable for change detection. Zhan et al. proposed a log-based transformation feature learning network for Hete-CD [52]. Wu et al. developed a commonality autoencoder for learning common features for Hete-CD [53], and Niu et al. presented a conditional adversarial network for Hete-CD [54]. Although some applications have indicated that deep learning techniques can learn comparable deep features from HRSIs, deep learning-based Hete-CD methods usually require a large number of training samples.

In particular, the challenges of Hete-CD can be summarized as follows: 1) bi-temporal HRSIs cannot be compared directly to detect change; 2) achieving satisfactory performance with very few training samples is challenging for many existing deep learning approaches; 3) labeling training samples for deep learning-based methods is necessary but time-consuming and labor-intensive. To address these challenges, we propose a novel framework for change detection with HRSIs that starts from a very small initial sample set. The major contributions of the proposed framework are briefly summarized as follows:

A novel deep learning framework is designed for Hete-CD. In particular, we demonstrate that this simple framework improves detection accuracy with very small initial samples. A simple deep learning framework with competitive performance is attractive for practical engineering.

A non-parametric sample-enhanced algorithm is proposed and embedded into a neural network. It explores potential samples around each initial sample in a non-parametric, iterative manner. Although this idea is verified for Hete-CD with HRSIs in this study, it may be useful for other supervised remote sensing applications, such as land cover classification, scene classification, and Homo-CD.

The remainder of this paper is organized as follows. Section 2 presents the details of the proposed framework. Section 3 presents the experiments and the related discussion. The conclusion is given in Section 4.

2. Methods

In this section, we describe the proposed framework for Hete-CD in detail, including an overview of the framework, the backbone of the proposed neural network, the non-parametric sample-enhanced algorithm, and the accuracy assessment.

2.1. Overview

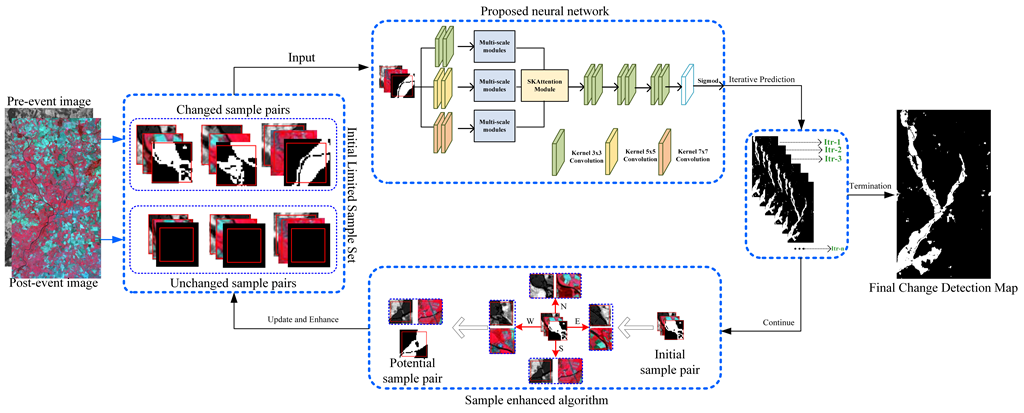

The motivation of this study is to achieve satisfactory change detection performance with very small samples for Hete-CD. A novel framework is designed to achieve this objective. As shown in Figure 1, the proposed framework has three major parts.

The overview indicates that the proposed framework generates change detection maps iteratively, and the final detection map is output when the iteration terminates. The framework is initialized with a very small sample set, which is amplified by the proposed non-parametric sample-enhanced algorithm in each iteration. The termination condition of the framework is detailed as follows.
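The iterative procedure can be summarized as a control loop. The following Python sketch is illustrative only: `run_framework`, `train_and_predict`, `enhance`, and `converged` are hypothetical names, the neural network and the sample-enhanced algorithm are passed in as callables, and skipping duplicate samples is an assumption not stated in the text. Classes returned by `converged` are treated as satisfying the termination condition (1), so their samples are no longer enhanced, and the loop stops once both classes satisfy it.

```python
from typing import Callable, List, Set, Tuple

import numpy as np

# Hypothetical sample record: (row, col, label) with label 1 = changed, 0 = unchanged.
Sample = Tuple[int, int, int]


def run_framework(
    initial: List[Sample],
    train_and_predict: Callable[[List[Sample]], np.ndarray],
    enhance: Callable[[List[Sample]], List[Sample]],
    converged: Callable[[np.ndarray, np.ndarray, np.ndarray], Set[int]],
    max_iter: int = 50,
) -> Tuple[np.ndarray, List[Sample]]:
    """Iterate: train -> predict -> check stopping rule -> enhance samples."""
    samples = list(initial)
    maps: List[np.ndarray] = []
    for _ in range(max_iter):
        # Train on the current sample set and predict one binary change map.
        maps.append(train_and_predict(samples))
        frozen: Set[int] = set()
        if len(maps) >= 3:
            # Classes whose matched ratio is stable over three adjacent maps.
            frozen = converged(maps[-3], maps[-2], maps[-1])
            if {0, 1} <= frozen:
                break  # both classes satisfy the stopping rule
        # Enhance only classes that have not converged; skip duplicates (assumption).
        for s in enhance(samples):
            if s[2] not in frozen and s not in samples:
                samples.append(s)
    return maps[-1], samples
```

Passing the components as callables keeps the loop independent of any particular network or labeling rule, so each part can be swapped or tested separately.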

To clarify this concept, the matched pixels for the changed and unchanged classes across three adjacent iterations are used to define the termination condition in (1). In (1), the class index takes the values 0 and 1, which denote the unchanged and changed classes, respectively. The two matched ratios in (1) are calculated by (2) and (3), respectively; they indicate the similarity between the detection maps from the (k-1)th and kth iterations and between those from the kth and (k+1)th iterations. In addition, the tolerance in (1) is a very small constant, fixed at 0.0001 in the proposed framework. The training samples are adjusted dynamically during the iterations. For example, if the changed class satisfies (1), the corresponding changed samples are not enhanced in the next iteration, and (1) is checked again in each iteration. Therefore, according to (1), the iteration of the proposed framework terminates when the difference between the matched ratios among the (k-1)th, kth, and (k+1)th detection maps is less than the tolerance for both the unchanged and changed classes.
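A minimal Python sketch of the per-class check in (1) follows. As a stand-in for (2) and (3), it assumes that the matched ratio between two binary detection maps is the share of pixels labeled with a class in the earlier map that keep that label in the later map; only the tolerance of 0.0001 and the comparison of adjacent iterations come from the text. `matched_ratio` and `converged_classes` are hypothetical helper names.

```python
import numpy as np

EPS = 1e-4  # tolerance stated in the text (0.0001)


def matched_ratio(prev_map: np.ndarray, next_map: np.ndarray, cls: int) -> float:
    """Assumed matched ratio: share of pixels labeled `cls` in prev_map
    that keep the label `cls` in next_map."""
    prev_c = prev_map == cls
    total = int(prev_c.sum())
    if total == 0:
        return 1.0  # assumption: an absent class counts as fully matched
    matched = int(np.logical_and(prev_c, next_map == cls).sum())
    return matched / total


def converged_classes(m_prev, m_cur, m_next, eps: float = EPS) -> set:
    """Classes (0 = unchanged, 1 = changed) whose matched ratio is stable."""
    done = set()
    for cls in (0, 1):
        r_prev = matched_ratio(m_prev, m_cur, cls)  # (k-1)th vs kth map
        r_next = matched_ratio(m_cur, m_next, cls)  # kth vs (k+1)th map
        if abs(r_prev - r_next) < eps:
            done.add(cls)
    return done
```

For example, if the unchanged class still changes between iterations while the changed class has stabilized, only class 1 is returned, so only unchanged samples would continue to be enhanced.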

Beyond the overview, the two major parts of the framework, the proposed deep learning neural network and the non-parametric sample-enhanced algorithm, are detailed as follows.

2.2. Proposed deep-learning neural network

Intuitively, different ground targets appear at different sizes in remote sensing images, and distinguishing a target, such as a lake or a building, requires an appropriate scale from the viewpoint of human vision [55,56]. Therefore, as shown in Figure 1-(a), three aspects are considered in the proposed neural network. First, the bi-temporal images are concatenated into one image and fed into three parallel branches. Each branch contains two convolution layers and one multiscale module, and each branch uses a different convolution kernel size. The purpose of this design is to learn multiscale features that describe targets of various sizes. Second, to guide the module to learn an appropriate scale for a specific target, a selective kernel (SK) attention module [57] is used to adaptively adjust its receptive field size based on multiple scales of the input information. Then, six convolutional layers with a

kernel size are used after the SK attention module to further smooth the detection noise. Finally, the output of the proposed neural network is activated by the Sigmoid function.
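The fuse-and-select idea behind selective kernel attention [57] can be illustrated with NumPy. This is a sketch, not the proposed network: it assumes three branch feature maps of equal shape, uses placeholder weights (`w_reduce`, `w_select`, hypothetical names) instead of learned ones, and omits the batch normalization of the original SK design. Branch features are summed, globally average-pooled, compressed by a ReLU layer, and mapped to per-branch channel logits; a softmax across branches then decides how much each scale contributes to each channel.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = 0) -> np.ndarray:
    e = np.exp(x - x.max(axis=axis, keepdims=True))  # subtract max for stability
    return e / e.sum(axis=axis, keepdims=True)


def sk_fuse(branches: np.ndarray, w_reduce: np.ndarray, w_select: np.ndarray):
    """Selective-kernel style fusion of multiscale branch features.

    branches: (M, C, H, W), one feature map per branch (e.g. per kernel size)
    w_reduce: (d, C) weights of the squeeze layer (placeholder values here)
    w_select: (M, C, d) per-branch weights producing channel attention logits
    """
    u = branches.sum(axis=0)            # fuse: element-wise sum, (C, H, W)
    s = u.mean(axis=(1, 2))             # global average pooling, (C,)
    z = np.maximum(w_reduce @ s, 0.0)   # compact descriptor with ReLU, (d,)
    logits = w_select @ z               # (M, C): one logit per branch and channel
    attn = softmax(logits, axis=0)      # select: softmax across branches
    v = (attn[:, :, None, None] * branches).sum(axis=0)  # weighted sum, (C, H, W)
    return v, attn
```

Because the attention weights sum to one across branches for every channel, the output stays on the same scale as the inputs; if all branches are identical, the output equals that shared branch.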

In addition, the proposed neural network was trained for 20 epochs in each iteration of the framework and then used to predict one change detection map. When the training samples were enhanced, the network was trained again with the new sample set. In the training process, the widely used cross-entropy loss function was adopted, and was used for prediction.
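For a network that ends with a Sigmoid activation, the cross-entropy loss becomes binary cross-entropy on per-pixel change probabilities. A minimal NumPy sketch follows; the clipping constant and the 0.5 decision threshold in the hypothetical `predict` helper are assumptions, not values given in the text.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def bce_loss(logits: np.ndarray, labels: np.ndarray, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between sigmoid probabilities and 0/1 labels."""
    p = np.clip(sigmoid(logits), eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(labels * np.log(p) + (1 - labels) * np.log(1 - p)))


def predict(logits: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Binary change map (1 = changed); the threshold is an assumption."""
    return (sigmoid(logits) >= threshold).astype(np.uint8)
```

With all-zero logits every probability is 0.5, so the loss equals ln 2 regardless of the labels, a quick sanity check for an untrained output layer.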

2.3. Non-parametric sample-enhanced algorithm

Labeling training samples is well known to be time-consuming and labor-intensive, yet sufficient samples are required to train a deep learning model [58]. Therefore, a non-parametric sample-enhanced algorithm is proposed and embedded in the framework. Its details are as follows.

Two image blocks, $X$ and $Y$, taken from the pre-event and post-event images, respectively, constitute a known sample. The neighboring image blocks around the known sample position $(i,j)$ are selected as potential training samples, and each potential sample overlaps the known sample by one quarter. In this study, the correlation between $X$ and $Y$ is measured by the Pearson correlation coefficient (PCC):

$$\rho(X,Y)=\frac{E\left[(x-\mu_X)(y-\mu_Y)\right]}{\sigma_X\,\sigma_Y}$$

where $E[\cdot]$ denotes the expectation; $x$ and $\mu_X$ represent a pixel within the spatial domain of $X$ and the mean gray value of these pixels, respectively; $\sigma_X$ is the standard deviation of the pixels within $X$; and $y$, $\mu_Y$, and $\sigma_Y$ are defined analogously for $Y$.

The PCC reflects the linear correlation between the pixels of paired samples from the HRSIs. Based on this definition, the labels of the potential samples around a known sample pair are assigned by two rules. When the label of the known sample is “changed” and the correlation between a pair of neighboring potential samples is less than or equal to that of the known sample pair, the neighboring pair is labeled “changed.” Conversely, when the label of the known sample is “unchanged” and the correlation between a pair of neighboring potential samples is greater than or equal to that of the known sample pair, the neighboring pair is labeled “unchanged.” In this way, the very small initial sample set is gradually amplified during the iterations, and the enhanced sample set is reused to train the proposed neural network to improve the detection performance.

These PCC-based rules explore potential samples around each known sample under the following intuitive assumptions: (1) PCC can measure the correlation between two datasets from different modalities; (2) around a sample with a changed label, a lower correlation between a pair of heterogeneous image blocks indicates a higher possibility of change between them; (3) around a sample with an unchanged label, a higher correlation between a pair of heterogeneous image blocks indicates a higher possibility of no change between them.
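The PCC-based labeling rules can be sketched in Python as follows. The neighbor layout is an assumption: shifting a square block of even size by half its side in both directions gives exactly a one-quarter overlap, so the hypothetical `quarter_overlap_neighbors` returns the four diagonal neighbors. The helper names, the top-left-corner convention for (i, j), single-band gray-value blocks, and treating a constant block as uncorrelated are likewise illustrative choices rather than details from the text.

```python
import numpy as np


def pcc(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation E[(x - mu_x)(y - mu_y)] / (sigma_x * sigma_y)."""
    a = a.astype(float).ravel()
    b = b.astype(float).ravel()
    denom = a.std() * b.std()  # population standard deviations
    if denom == 0:
        return 0.0  # assumption: a constant block is treated as uncorrelated
    return float(np.mean((a - a.mean()) * (b - b.mean())) / denom)


def quarter_overlap_neighbors(i, j, size, shape):
    """Assumed layout: blocks shifted by half a block diagonally, each
    overlapping one quarter of the known block whose top-left corner is (i, j)."""
    h = size // 2
    corners = []
    for di in (-h, h):
        for dj in (-h, h):
            r, c = i + di, j + dj
            if r >= 0 and c >= 0 and r + size <= shape[0] and c + size <= shape[1]:
                corners.append((r, c))
    return corners


def enhance(pre, post, known, size):
    """Explore new (row, col, label) samples; label 1 = changed, 0 = unchanged."""
    new = []
    for i, j, label in known:
        ref = pcc(pre[i:i + size, j:j + size], post[i:i + size, j:j + size])
        for r, c in quarter_overlap_neighbors(i, j, size, pre.shape):
            rho = pcc(pre[r:r + size, c:c + size], post[r:r + size, c:c + size])
            if label == 1 and rho <= ref:    # changed: correlation not higher
                new.append((r, c, 1))
            elif label == 0 and rho >= ref:  # unchanged: correlation not lower
                new.append((r, c, 0))
    return new
```

Neighbors that satisfy neither rule are simply not added, so a known sample may yield anywhere from zero to four new samples per iteration.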

2.4. Accuracy assessment

To evaluate the performance quantitatively, nine widely used evaluation indicators are adopted in this study; they are summarized in Table 1. Four variables are defined to clarify these indicators: true positive (TP) is the number of correctly detected changed pixels, true negative (TN) is the number of correctly detected unchanged pixels, false positive (FP) is the number of unchanged pixels incorrectly detected as changed, and false negative (FN) is the number of changed pixels incorrectly detected as unchanged.

Table 1.

Quantitative accuracy evaluation indicators for each experiment.


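| Evaluation indicator | Formula | Definition |
|---|---|---|
| False alarm (FA) | $FA = \frac{FP}{TN + FP}$ | FA is the ratio of falsely detected changed pixels to the unchanged pixels in the ground truth. |
| Missed alarm (MA) | $MA = \frac{FN}{TP + FN}$ | MA is the ratio of falsely detected unchanged pixels to the changed pixels in the ground truth. |
| Total error (TE) | $TE = \frac{FP + FN}{N}$ | TE is the ratio of the sum of falsely detected changed and unchanged pixels to the total pixels of the ground truth map. |
| Overall accuracy (OA) | $OA = \frac{TP + TN}{N}$ | OA is the ratio of correctly detected pixels to the total pixels of the ground truth map. |
| Average accuracy (AA) | $AA = \frac{1}{2}\left(\frac{TP}{TP + FN} + \frac{TN}{TN + FP}\right)$ | AA is the mean of the correctly detected changed and unchanged ratios. |
| Kappa coefficient (Ka) | $Ka = \frac{OA - P_e}{1 - P_e}$, $P_e = \frac{(TP + FP)(TP + FN) + (FN + TN)(FP + TN)}{N^2}$ | Ka reflects the reliability of the detection map by measuring the inter-rater agreement between the detection map and the ground truth for the changed and unchanged classes. |
| Precision (Pr) | $Pr = \frac{TP}{TP + FP}$ | Pr is the ratio of correctly detected changed pixels to all pixels detected as changed in the detection map. |
| Recall (Re) | $Re = \frac{TP}{TP + FN}$ | Re is the ratio of correctly detected changed pixels to all changed pixels in the ground truth map. |
| F1-score (F1) | $F1 = \frac{2 \cdot Pr \cdot Re}{Pr + Re}$ | F1 is the harmonic mean of precision and recall. |

In these formulas, $N = TP + TN + FP + FN$ is the total number of pixels.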
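The nine indicators listed above can be computed from a binary detection map and its ground truth. In this sketch, `confusion_counts` and `change_metrics` are hypothetical helper names, 1 denotes changed and 0 unchanged, and no denominator is assumed to be zero.

```python
import numpy as np


def confusion_counts(pred: np.ndarray, gt: np.ndarray):
    """TP, TN, FP, FN for binary maps where 1 = changed and 0 = unchanged."""
    pred = pred.astype(bool)
    gt = gt.astype(bool)
    tp = int(np.sum(pred & gt))
    tn = int(np.sum(~pred & ~gt))
    fp = int(np.sum(pred & ~gt))
    fn = int(np.sum(~pred & gt))
    return tp, tn, fp, fn


def change_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """FA, MA, TE, OA, AA, Ka, Pr, Re, and F1 (assumes nonzero denominators)."""
    n = tp + tn + fp + fn
    pr = tp / (tp + fp)
    re = tp / (tp + fn)
    oa = (tp + tn) / n
    # Expected chance agreement used by the kappa coefficient.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    return {
        "FA": fp / (tn + fp),
        "MA": fn / (tp + fn),
        "TE": (fp + fn) / n,
        "OA": oa,
        "AA": (tp / (tp + fn) + tn / (tn + fp)) / 2,
        "Ka": (oa - pe) / (1 - pe),
        "Pr": pr,
        "Re": re,
        "F1": 2 * pr * re / (pr + re),
    }
```

For example, with TP = 40, TN = 50, FP = 5, and FN = 5, OA is 0.9 and TE is 0.1, while kappa is lower (about 0.80) because it discounts the agreement expected by chance.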

3. Experiments

Two experiments are designed to verify the performance and superiority of the proposed framework for Hete-CD. The experiments are performed on four pairs of real HRSIs and are described in the following subsections.

3.1. Dataset Description

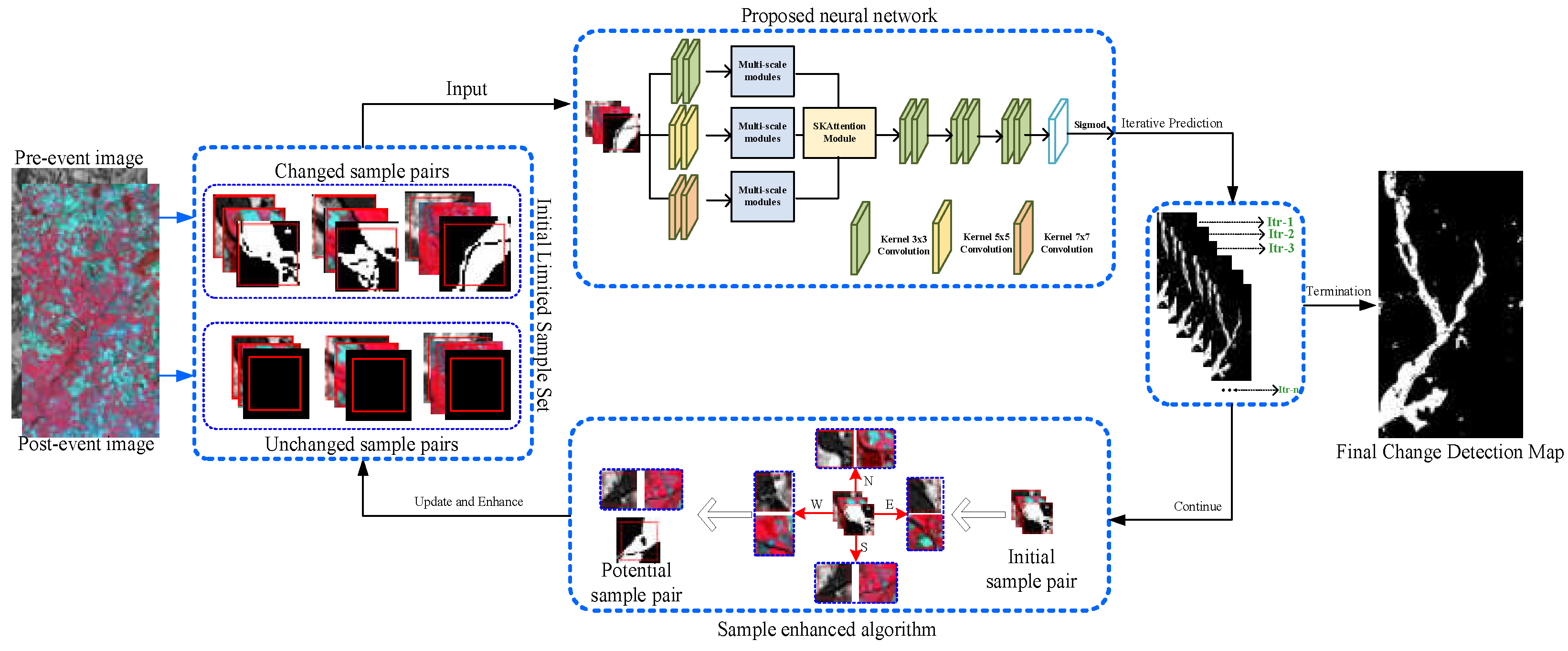

Four pairs of HRSIs are used in the experiments. As shown in Figure 2, they cover water body change, building construction, flood, and wildfire events. Each image pair is described as follows:

Dataset-1: This dataset consists of two optical images acquired from different sources. The pre-event image was acquired by Landsat-5 in September 1995 and has a size of pixels with 30 meters/pixel. The post-event image was acquired in July 1996 and clipped from Google Earth; its size is pixels with 30 meters per pixel. This type of HRSI pair is easily available. Here, the pair is used to detect the expansion area of a lake in Sardinia, Italy.

Dataset-2: This dataset contains a pre-event SAR image acquired by the Radarsat-2 satellite in June 2008 and a post-event optical image extracted from Google Earth in September 2012. The sizes of the HRSIs are pixels and pixels, respectively, with 8 meters per pixel. The change event is building construction in Shuguang Village, China.

Dataset-3: This dataset includes a SPOT Xs-ERS image and a SPOT image as the pre-event and post-event images, respectively. The pre-event and post-event images were acquired in October 1999 and October 2000, before and after a flood over Gloucester, U.K. In particular, the ERS image reflects the roughness of the ground before the flood, and the multispectral SPOT HRV image describes the color of the ground during the flood. The detection task aims to identify the changed areas by comparing the bi-temporal heterogeneous images.

Dataset-4: This dataset is composed of two images acquired by different sensors (Landsat-5 TM and Landsat-8) over Bastrop County, Texas, USA, for a wildfire event on September 4, 2011. The bi-temporal images have a size of pixels with a resolution of 30 meters/pixel. The modality difference lies in the band composition: the pre-event image was acquired by Landsat-5 and composed of bands 4-3-2, whereas the post-event image was obtained by Landsat-8 and composed of bands 5-4-3.

3.2. Experimental Setup

In the experiments, nine state-of-the-art change detection methods, including three traditional methods and six deep learning methods, are selected for comparison. Details are as follows:

(i) In the first part of the experiments, three recent and highly cited related methods, namely, adaptive graph and structure cycle consistency (AGSCC) [45], graph-based image regression and MRF segmentation (GIR-MRF) [50], and sparse constrained adaptive structure consistency (SCASC) [59], are compared with the proposed framework. In the comparisons, the parameters of the selected methods [45,50,59] are the same as those used in the original studies. For the proposed framework, 12 pairs of samples (six unchanged pairs and six changed pairs) are randomly selected from the ground reference map to initialize the framework.

(ii) The second part of the experiments verifies the advantages and feasibility of the proposed framework by comparing it with state-of-the-art deep learning methods, including fully convolutional Siamese difference (FC-Siam-diff) [28], crosswalk detection network (CDNet) [56], feature difference convolutional neural network (FDCNN) [26], deeply supervised image fusion network (DSIFN) [60], cross-layer convolutional neural network (CLNet) [27], and multiscale fully convolutional network (MFCN) [30]. The following parameters are set for each network: learning rate = 0.0001, batch size = 3, and epochs = 20. In addition, to ensure a fair comparison, all the selected deep learning methods use the same training samples, which are randomly clipped from the ground reference map. Moreover, the number of training samples for these deep learning methods is close to the number of samples at the end of the iterations of the proposed framework.

3.3. Experimental Results

The experimental results for the four pairs of HRSIs were obtained with the above parameter settings and are detailed as follows:

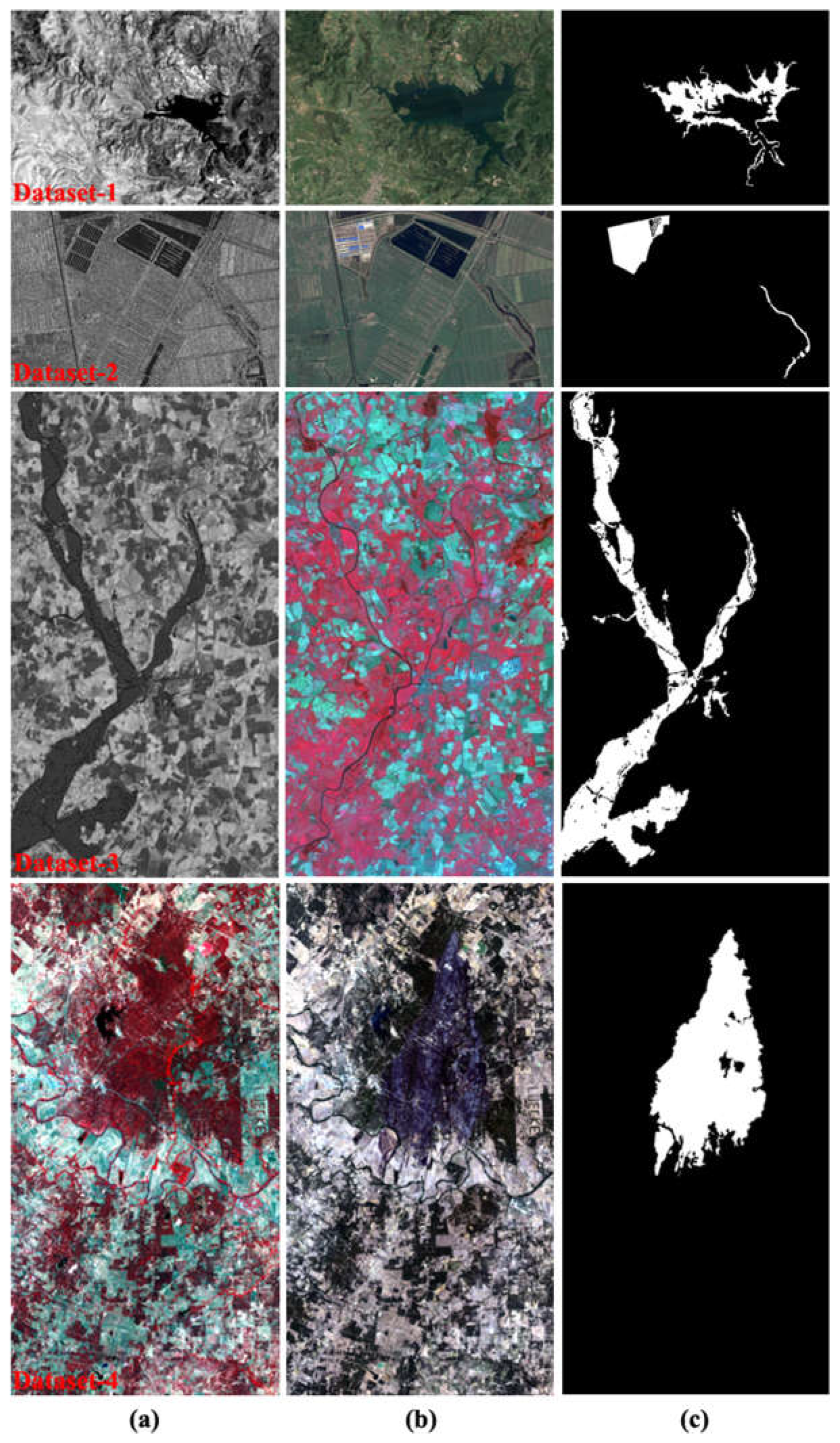

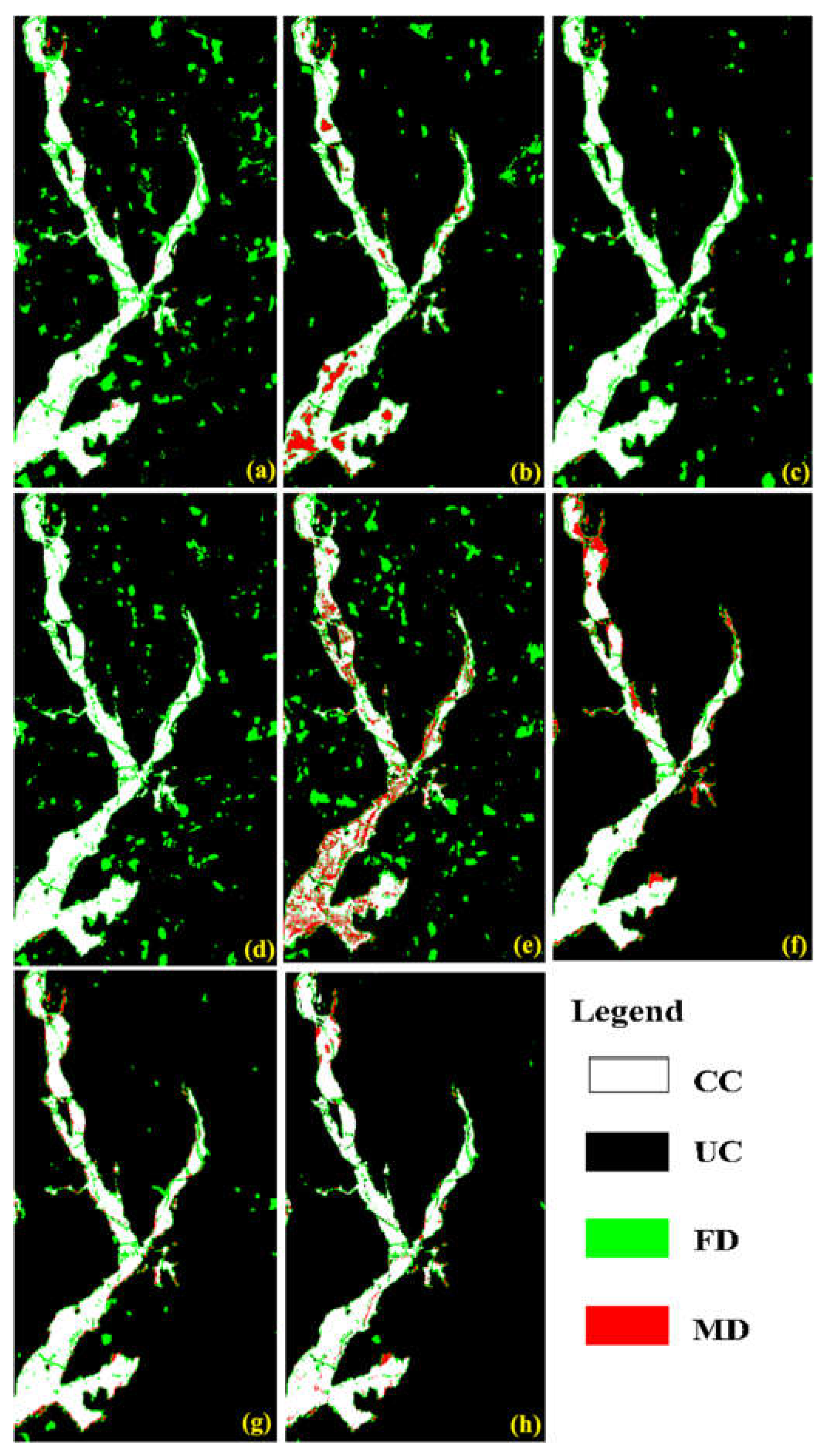

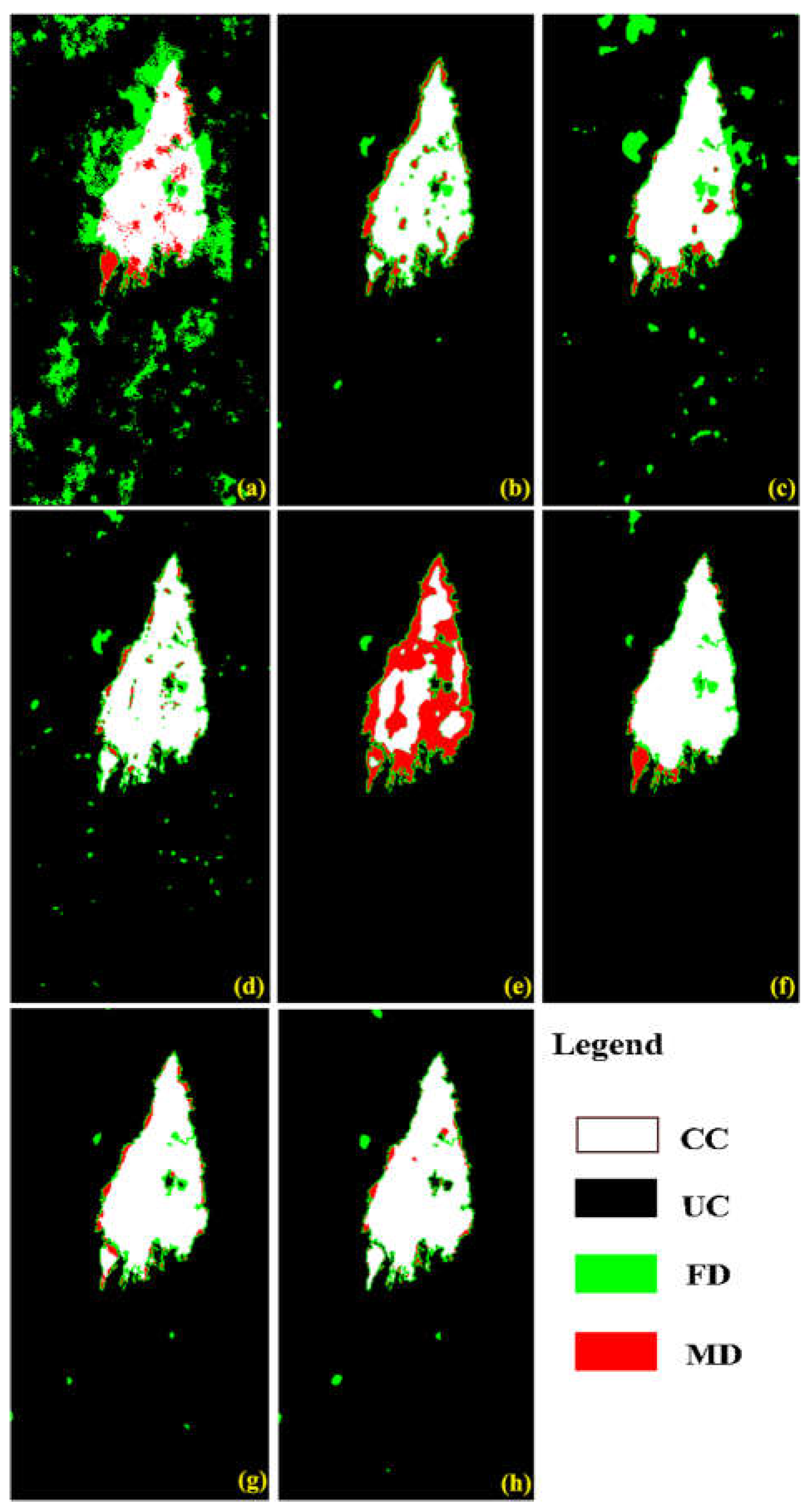

(i) Comparisons with traditional methods: The detection maps of AGSCC [45], GIR-MRF [50], and SCASC [59] are presented in Figures 3-(a), -(b), and -(c), respectively. Compared with these results, Figure 3-(d) shows that the proposed framework achieved the detection performance with the fewest false alarm (green) and missed alarm (red) pixels. The corresponding quantitative results in Table 2 further support the conclusion drawn from the visual comparisons. For example, for the four datasets, the proposed framework achieved OA values of 99.04%, 98.75%, 96.77%, and 97.92%, respectively, which are the best among AGSCC [45], GIR-MRF [50], SCASC [59], and the proposed framework.

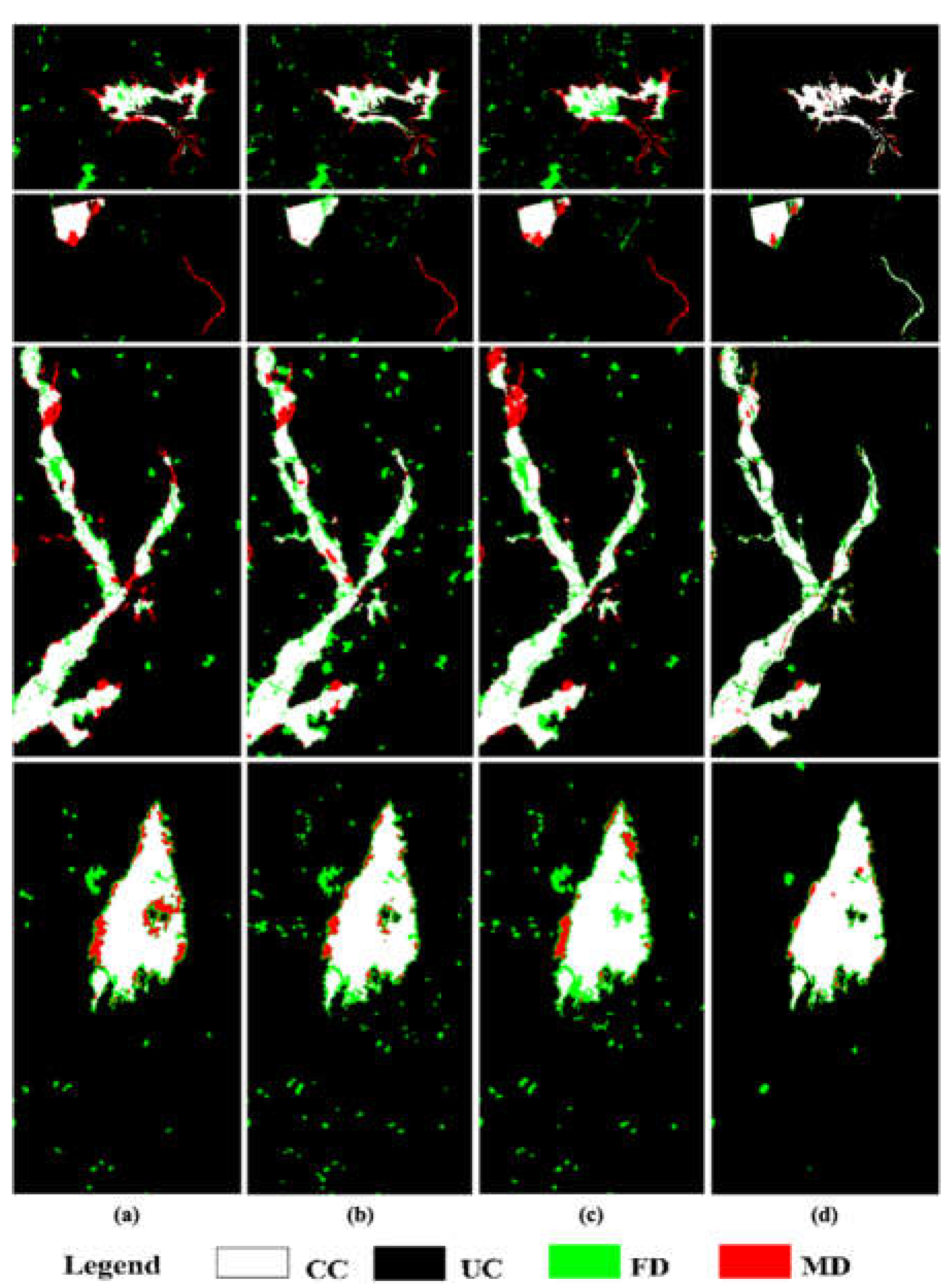

(ii) Comparisons with deep learning methods: We further verify the robustness of the proposed framework by comparing it with state-of-the-art deep learning methods. The detection maps presented in Figure 4, Figure 5, Figure 6, and Figure 7 were obtained by the different deep learning methods for the four datasets. These visual comparisons indicate that the proposed framework achieved better performance with fewer false alarms and missed alarms. Moreover, even when the sample-enhanced algorithm is removed from the proposed framework, as denoted by “Proposed-” in Table 3, Table 4, Table 5, and Table 6, an improvement over the other approaches is still achieved on the same datasets. This indicates that the multiscale information extraction and selective kernel attention in the proposed neural network complement each other and contribute to higher accuracy. The quantitative comparisons in Table 3, Table 4, Table 5, and Table 6 further support the visually observed conclusion.

3.4. Discussion and Analysis

To promote the wide use and further understanding of the proposed framework, two aspects of its training samples are discussed and analyzed as follows:

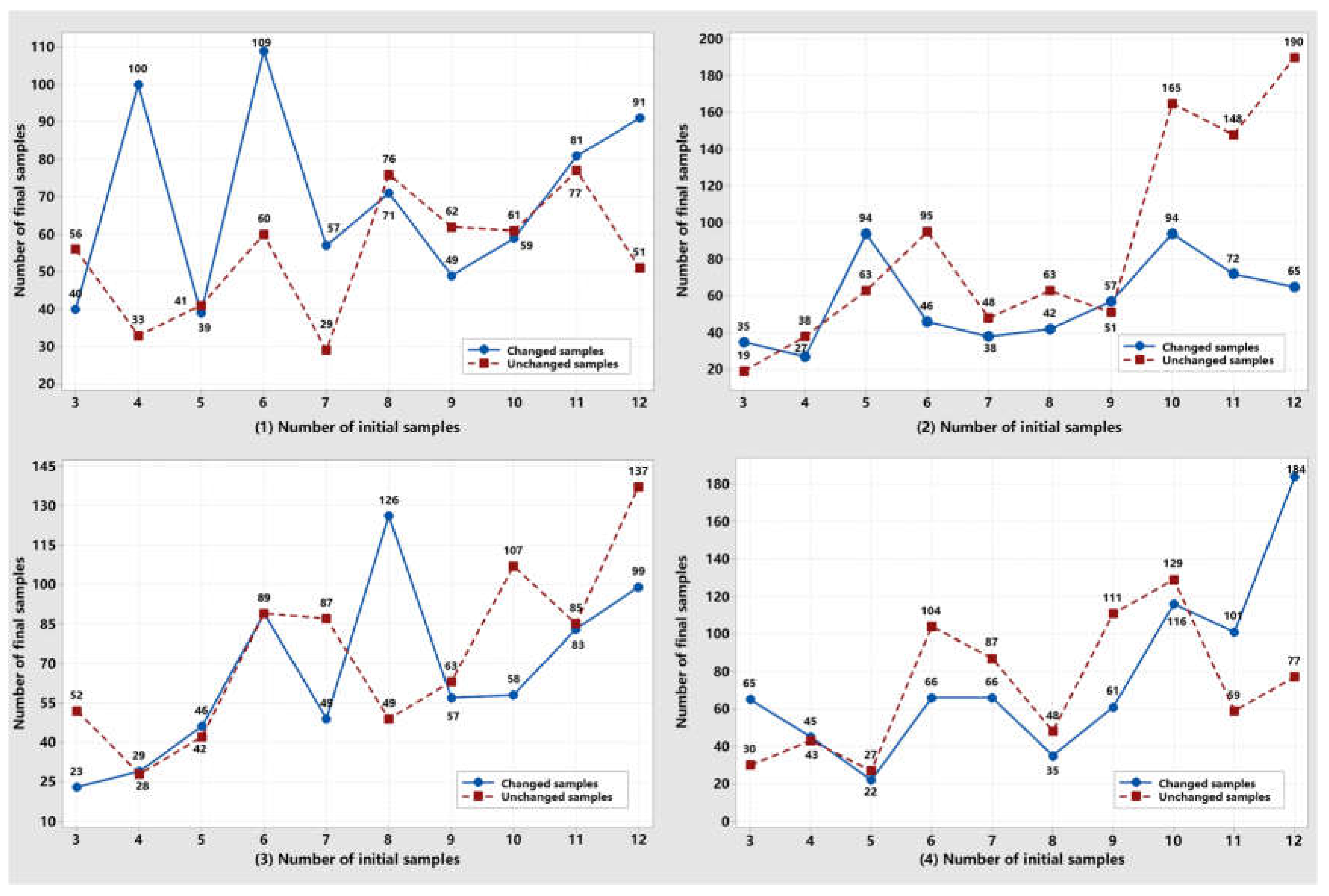

(i) Relationship between the initial and final samples: As stated in Section 2, the proposed framework is initialized with very few training samples, which are amplified at each iteration. Therefore, observing the relationship between the initial and final samples helps in understanding how the proposed framework balances the unchanged and changed classes.

Figure 8 indicates that the numbers of samples for the changed and unchanged classes are equal at initialization. When the iteration of the proposed framework terminates, the numbers of samples for the unchanged and changed classes have been adjusted automatically and differ, because the two classes cover areas of different sizes in an image scene. Detecting them with unequal samples is beneficial for balancing their detection accuracies. In addition, Figure 8 also shows that the relationship between the initial and final samples is nonlinear. For example, for Dataset-1, when the initial samples for the unchanged class increased from three to four pairs, its final samples decreased from 56 pairs to 33 pairs. Moreover, this relationship differs across datasets. Therefore, choosing a suitable number of samples to initialize the proposed framework may require trial-and-error experiments in practical applications.

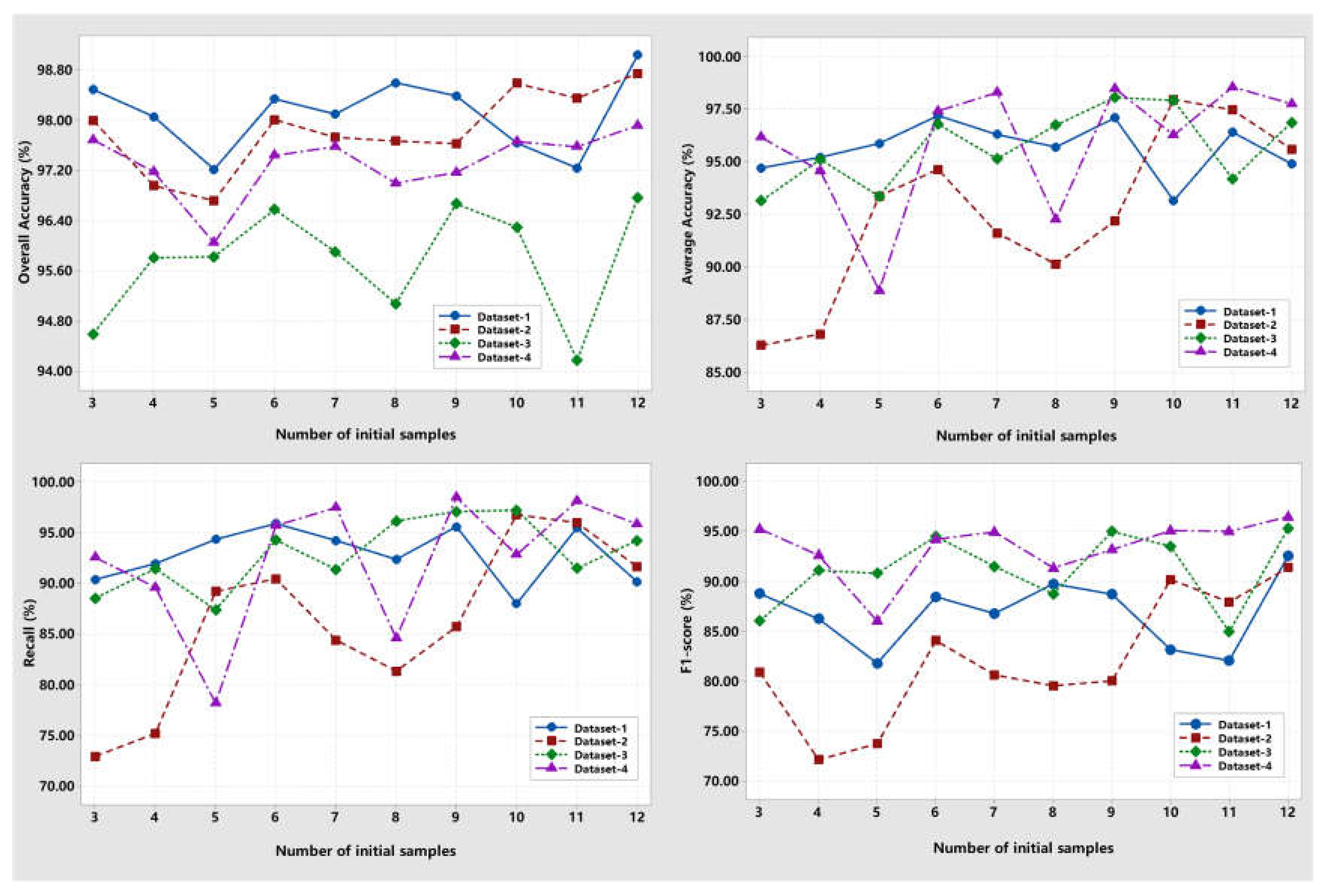

(ii) Relationship between the initial samples and detection accuracies: As shown in Figure 9, for some datasets the detection accuracies initially decreased as the number of initial samples increased, and then increased and stabilized with small variations. For example, the OA for Dataset-2 and Dataset-4 decreased when the initial samples increased from three pairs to four pairs, and then it increased and fluctuated within [96.72%, 98.75%] and [96.05%, 97.92%], respectively. A possible reason is that some explored samples may be assigned wrong labels, which negatively affects the learning performance. However, as the number of initial training samples increases, the wrongly labeled samples become a minority in the total enhanced sample set. Therefore, this uncertain relationship may cause variations in detection accuracy when training the proposed framework with the enhanced sample set.

4. Conclusion

In this study, a novel deep learning-based framework is proposed to achieve change detection with heterogeneous remote sensing images when training samples are very limited. To achieve satisfactory detection performance with very small initial training samples, the proposed framework couples a novel multiscale neural network and a novel non-parametric sample-enhanced algorithm in an iterative process. The advantages of the proposed framework are summarized as follows:

(i) Advanced detection accuracies are obtained with the proposed framework. The comparative results on four pairs of real HRSIs indicate that the proposed framework outperforms three traditional cognate methods (AGSCC [45], GIR-MRF [50], and SCASC [59]) and six state-of-the-art deep learning methods (FC-Siam-diff [28], CDNet [61], FDCNN [26], DSIFN [60], CLNet [27], and MFCN [30]) in terms of visual performance and nine quantitative evaluation metrics.

(ii) Training deep learning neural networks iteratively with a non-parametric sample-enhanced algorithm is effective in improving detection performance with very limited initial samples. This work is the first to couple a non-parametric sample-enhanced algorithm with a deep learning neural network so that the network is trained iteratively with the enhanced training samples from each iteration. Experimental results and comparisons demonstrate the feasibility and effectiveness of the proposed sample-enhanced algorithm and training strategy.

(iii) A simple, robust, and non-parametric framework is preferable for practical engineering applications. Apart from the very small set of initial training samples, the proposed framework has no parameters that require careful tuning, so it can be easily applied in practical engineering.

Despite these advantages, applications of the proposed framework to large areas and other types of change events should be further investigated. In future work, we will collect more types of HRSIs and consider multiclass change detection.