1. Attentional networks and Vigilance

1.1. What is the ANTI-Vea task?

One of the most widely acclaimed approaches to understanding human attention is the integrative model developed by Michael I. Posner (Petersen and Posner 2012; Posner and Petersen 1990; Posner and Dehaene 1994; Posner 1994). According to Posner, attention should be considered a system that carries out three different functions: alertness (or selection in time), orienting (or selection in perception), and executive control (or selection at the response level), all of which play an important role in the overall coordination of behavior. These attentional functions are supported by three neural networks (Fernandez-Duque and Posner 2001): a network involving frontal and parietal regions of the right hemisphere, modulated by noradrenergic release, for alertness; a posterior network (frontal eye fields, parietal cortex, and several subcortical structures), modulated by cholinergic innervation, for orienting (Corbetta 1998); and two anterior circuits (the fronto-parietal and cingulo-opercular systems), modulated by dopaminergic activity, for executive control.

Following the original Attention Network Test (ANT; Fan et al. 2002), several adapted versions of the ANT have been developed to improve the assessment of the three attentional functions (for a review, see de Souza Almeida, Faria-Jr, and Klein 2021). For example, the ANT for Interactions (ANTI; Callejas, Lupiáñez, and Tudela 2004) measures not only how each network works but also how the networks interact with each other. The subsequent version, the ANTI-Vigilance (ANTI-V; Roca et al. 2011), was developed to add a direct measure of the maintenance of attention over time-on-task, that is, vigilance. Further adaptations have incorporated new components or adjusted the tasks to specific populations, such as children (e.g., Child ANT, Rueda et al. 2004; ANTI-Birds, Casagrande et al. 2022) or people with visual impairments (Auditory ANT, Johnston, Hennessey, and Leitão 2019; Roberts, Summerfield, and Hall 2006). The origins and evolution of these tasks are reviewed in more detail by de Souza Almeida, Faria-Jr, and Klein (2021).

Since these tasks came into widespread use in attention research, our team has been among the most active contributors to this line of work. We are currently disseminating the latest version, developed over the last five years: the ANT for Interactions and Vigilance–executive and arousal components (ANTI-Vea; Luna et al. 2018). The ANTI-Vea assesses the performance of the attentional networks and their interactions while also providing two independent measures of vigilance, in line with other well-known tasks: the Sustained Attention to Response Task (SART; Robertson et al. 1997) and the Mackworth Clock Test (MCT; Mackworth 1948) for executive vigilance (EV), and the Psychomotor Vigilance Test (PVT; Dinges and Powell 1985) for arousal vigilance (AV). The platform we have built for administering the ANTI-Vea and its embedded subtasks is user-friendly, and the data, although complex and yielding multiple measures, can be easily analyzed with the guide and resources provided. This tutorial presents a detailed description of the ANTI-Vea-UGR platform, first introducing the theoretical and methodological basis of the ANTI-Vea and then giving step-by-step guidance on the use of the online task and its resources for data analysis.

1.2. ANTI-Vea relevance. Dissociation between executive and arousal vigilance

When vigilance is measured, the behavioral pattern usually observed is a decrease in performance across time-on-task (Al-Shargie et al. 2019; Doran, Van Dongen, and Dinges 2001; Mackworth 1950; Tiwari, Singh, and Singh 2009). Theoretical and empirical research has proposed a dissociation between two well-differentiated components of this ability: (a) EV, the capacity to monitor and detect critical signals that occur rarely over a long period of time; and (b) AV, the ability to maintain a fast response to any stimulus in the environment (see Luna et al. 2018). Accordingly, whereas the EV decrement has been observed as a gradual loss in hit rate in the MCT and the SART (See et al. 1995; Thomson, Besner, and Smilek 2016), the AV decrement has been reported as a progressive increase in the mean and variability of reaction time (RT) in the PVT (Basner, Mollicone, and Dinges 2011; Lamond et al. 2008; Loh et al. 2004). These behavioral patterns constitute the so-called vigilance decrement.

The relevance of the ANTI-Vea lies in allowing a simultaneous (yet independent) assessment of the EV and AV components in a single experimental session. In this task, the EV component is assessed with a signal-detection task similar to the MCT (Mackworth 1948), in which participants have to detect a vertical displacement of the central arrow. The AV component, in turn, is assessed with a reaction time task akin to the PVT (Dinges and Powell 1985), in which participants must stop a countdown as quickly as possible. The EV decrement is observed as a decrease in both hits and false alarms (FAs), which reflects an increase in response bias (i.e., a more conservative criterion) rather than a loss of sensitivity¹ (in line with Thomson, Besner, and Smilek 2016), whereas the AV decrement is characterized by an increase in the mean and variability of RT.

2. What does ANTI-Vea measure?

2.1. ANTI-Vea design

The standard ANTI-Vea combines three classic attentional and vigilance paradigms in three different types of trials. This makes it possible to measure the functioning of the three attentional networks and their interactions (ANTI trials) while simultaneously testing the decrement in executive and arousal vigilance across time-on-task (EV and AV trials, respectively). The three types of trials are presented in random order within each block.

The largest proportion of trials (ANTI trials, 60%) is similar to those used in the ANTI: they are based on a classic flanker task (Eriksen and Eriksen 1974) but also incorporate manipulations of attentional orienting (as in a spatial cueing paradigm; Posner 1980) and alertness (an auditory tone as a warning signal). The remaining trials are evenly split between the two vigilance paradigms. As described above, to assess the EV component, participants have to detect a large vertical displacement of the central arrow (EV trials, 20%), whereas to assess the AV component, participants must stop a countdown as quickly as possible (AV trials, 20%).

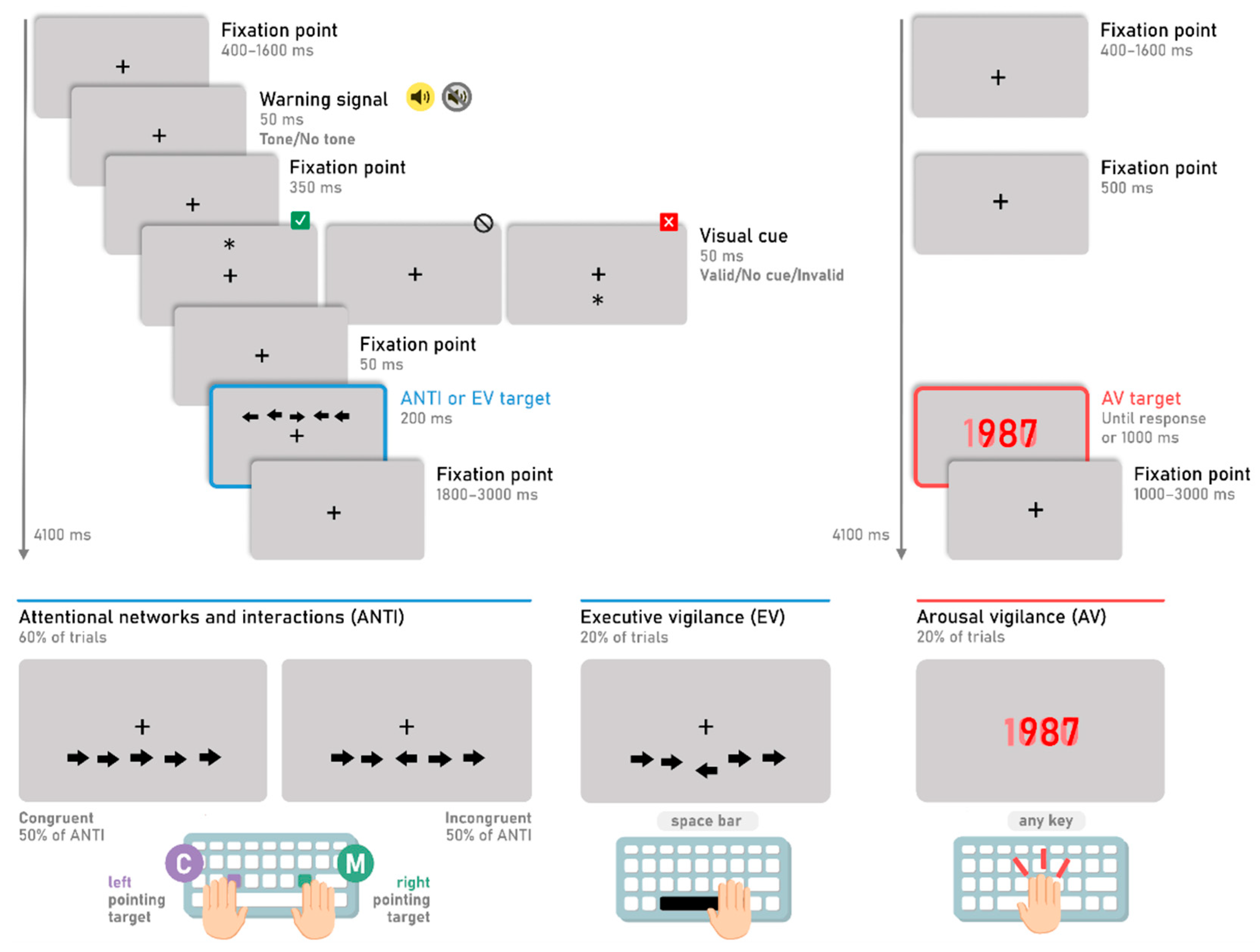

The general procedure is represented in Figure 1. While participants keep their eyes on a black fixation point (“+”) that remains at the center of the screen the whole time, a horizontal string of five black arrows appears for 200 ms either above or below the fixation point. Participants have to indicate the direction in which the central arrow (i.e., the target) points, ignoring the direction of the surrounding arrows (i.e., the distractors or flankers), by pressing the corresponding key: “M” with the right hand if the target points rightwards, and “C” with the left hand if it points leftwards. There are two conditions for the ANTI trials: one in which all five arrows point in the same direction (congruent trials, 50%) and another in which the central arrow points in the opposite direction to the flankers (incongruent trials, 50%). RT and percentage of errors in both conditions allow executive control (also referred to as “cognitive control” or “executive attention”) to be assessed. In half of the ANTI trials, an auditory warning signal (2000 Hz, 50 ms) is presented 500 ms before the target (tone vs. no tone condition). This warning signal tests phasic alertness. The orienting network is assessed with a non-predictive visual cue (a black asterisk, “*”) that is presented for 50 ms either above or below the fixation point, 100 ms before the target. This cue can appear at the same location as the target (valid trials, one third of ANTI trials) or at the opposite location (invalid trials, one third of ANTI trials), and it is absent in the remaining third of ANTI trials (no cue condition). A valid cue, presented where the arrows subsequently appear, makes it easier for participants to respond to the target direction, and the auditory warning signal increases this facilitation effect, as shown in various studies (e.g., Callejas et al. 2005; Roca et al. 2011). In contrast, in invalid trials, participants have to reorient their attention to the location of the flankers and the target, which results in longer RTs compared to the control condition (no cue).

The EV trials (i.e., the trials of the signal-detection subtask of the ANTI-Vea) follow the same procedure and stimulus presentation as the ANTI trials. However, the target appears vertically displaced (either upwards or downwards) from its central position, out of alignment with the flankers. To generate some noise, the five arrows in the ANTI trials and the flanking arrows in the EV trials can be randomly displaced, horizontally and vertically, by ± 2 px from their central positions (see Figure 1). In the EV trials, the substantial displacement of the target is only vertical and fixed at ± 8 px (see Figure 1). To complete the EV subtask correctly, participants are instructed to remain vigilant at all times, to detect the large vertical displacement of the target, and to press the space bar when they detect it, regardless of the target’s direction. The target displacement thus constitutes the infrequent critical signal of the signal-detection task in the ANTI-Vea. If participants correctly detect the target’s displacement in an EV trial, the response is categorized as a hit; if the displacement is not detected (i.e., the space bar is not pressed), the response is categorized as a miss. Importantly, the ANTI trials serve a dual purpose in the ANTI-Vea. On the one hand, as explained above, they measure the independence and interactions of the classic attentional networks; on the other hand, and critically, they serve as the ‘noise events’ of the signal-detection task, since in these trials the target is not substantially displaced from its central position relative to the distractors. Therefore, if participants press the space bar in an ANTI trial, the response is categorized as a FA (i.e., an incorrect detection of the infrequent critical signal).
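The mapping from trial type and space-bar response to signal-detection outcomes can be summarized in a few lines. This Python sketch assumes trial types are coded as in the raw data file ("ANTI", "EV", "AV"); the function name is hypothetical, and "correct_rejection" (an ANTI trial without a space-bar press) is the conventional signal-detection name for the remaining outcome. Keep in mind that the core FA index uses only the subset of ANTI trials flagged in the FA difficult column.

```python
from collections import Counter
from typing import Optional


def classify_response(trial_type: str, pressed_space: bool) -> Optional[str]:
    """Signal-detection outcome of one ANTI-Vea trial.

    EV trials carry the critical signal (large target displacement) and
    ANTI trials are the noise events; AV trials lie outside the
    signal-detection subtask, so None is returned for them.
    """
    if trial_type == "EV":
        return "hit" if pressed_space else "miss"
    if trial_type == "ANTI":
        return "false_alarm" if pressed_space else "correct_rejection"
    if trial_type == "AV":
        return None
    raise ValueError(f"unknown trial type: {trial_type!r}")


# Hypothetical sequence of (trial type, space bar pressed?) pairs.
trials = [("ANTI", False), ("EV", True), ("ANTI", True), ("AV", True), ("EV", False)]
print(Counter(classify_response(t, s) for t, s in trials))
```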

Lastly, in the AV trials (i.e., the trials that mimic the PVT), no warning signal, visual cue, or flankers are presented. Instead, the fixation point remains on the screen until a red millisecond countdown appears at the center of the screen, counting down from 1000 to 0 unless a response is made first. Participants are instructed to remain vigilant at all times and, every time the countdown appears, to stop it as fast as possible by pressing any key on the keyboard. AV is thus evaluated with the mean and variability of the RT to the countdown.

All three types of trials have the same timing, with a total duration of 4,100 ms each. Each trial starts with the fixation point at the center of the screen for a random duration of 400 to 1,600 ms, continues with a maximum response time of 2,000 ms, and ends with the fixation point until the total trial duration is reached. The variable initial fixation prevents participants from predicting when the target will appear, and the random order of trial types prevents them from predicting which type of trial will be presented. All stimuli and instructions are presented on a gray background.
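The timing figures can be checked with simple arithmetic. The Python sketch below assumes, as an inference rather than a documented specification, that the 500 ms warning-to-target interval sits between the initial random fixation and the target onset; under that reading, the longest fixation (1,600 ms) plus 500 ms plus the 2,000 ms response window adds up exactly to the 4,100 ms trial. The same constant reproduces the per-block and total durations given for the experimental blocks. The function and event names are illustrative.

```python
TRIAL_MS = 4100            # every trial lasts 4,100 ms
RESPONSE_WINDOW_MS = 2000  # maximum response time after target onset
TONE_LEAD_MS = 500         # tone onset precedes the target by 500 ms
CUE_LEAD_MS = 100          # cue onset precedes the target by 100 ms
TRIALS_PER_BLOCK = 80


def trial_timeline(fixation_ms: int) -> dict[str, int]:
    """Event onsets (ms from trial start) for one ANTI/EV trial.

    Assumption: the tone-to-target interval follows the random initial
    fixation, so target onset = fixation + 500 ms.
    """
    if not 400 <= fixation_ms <= 1600:
        raise ValueError("initial fixation must last 400-1600 ms")
    target = fixation_ms + TONE_LEAD_MS
    window_end = target + RESPONSE_WINDOW_MS
    return {
        "tone_onset": fixation_ms,          # tone trials only
        "cue_onset": target - CUE_LEAD_MS,  # cued trials only
        "target_onset": target,
        "response_window_end": window_end,
        "final_fixation_ms": TRIAL_MS - window_end,
    }


def as_min_s(ms: int) -> str:
    """Format a duration in ms as 'M min S s'."""
    minutes, seconds = divmod(ms // 1000, 60)
    return f"{minutes} min {seconds} s"


block_ms = TRIALS_PER_BLOCK * TRIAL_MS  # 328,000 ms
for n_blocks in (1, 4, 6):
    print(n_blocks, "block(s):", as_min_s(n_blocks * block_ms))
# 1 block(s): 5 min 28 s
# 4 block(s): 21 min 52 s
# 6 block(s): 32 min 48 s
```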

The standard ANTI-Vea includes a four-block practice phase in which instructions and visual feedback are provided so that participants can gradually become familiar with each type of trial. In the first practice block, 16 ANTI trials are presented after the instructions. The second block consists of 32 randomized trials, half of which are EV trials. The third block contains 16 ANTI, 16 EV, and 16 AV randomized trials. Finally, the last block includes 40 randomized trials (24 ANTI, 8 EV, and 8 AV) with no visual feedback.

Once participants complete the practice phase, six consecutive experimental blocks are run, without pauses or visual feedback. The experimental blocks take 32 min 48 s in total in the standard six-block format (5 min 28 s per block), or 21 min 52 s in the four-block version that is sometimes used. Each experimental block includes 80 pseudo-randomized trials (48 ANTI, 16 EV, and 16 AV). The 48 ANTI trials per block follow this factorial design: Warning signal (no tone/tone) × Visual cue (invalid/no cue/valid) × Congruency (congruent/incongruent) × Target direction (left/right) × Stimulus position relative to the fixation point (up/down). The last two factors are usually not considered in statistical analyses and are included only to control stimulus presentation. For the EV trials, one factor is added, namely target displacement direction (upwards/downwards), so the 16 EV trials per block are randomly drawn from 96 possible combinations. For a better understanding of what the experimental phase looks like, a video is available on the website (direct link at https://videopress.com/v/0hmK7b0Q).
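The factorial structure of an experimental block can be sketched as follows: crossing the five ANTI factors yields 2 × 3 × 2 × 2 × 2 = 48 conditions, each presented once, and adding the displacement direction yields the 96 possible EV trials from which 16 are drawn. This Python sketch is a simplified illustration, not the platform's code: a plain random shuffle stands in for the platform's pseudo-randomization, whose constraints are not specified, and the dictionary keys and level labels are hypothetical.

```python
import itertools
import random
from collections import Counter

# Levels of the ANTI factors (labels are illustrative).
FACTORS = {
    "warning": ("no_tone", "tone"),
    "cue": ("invalid", "no_cue", "valid"),
    "congruency": ("congruent", "incongruent"),
    "target": ("left", "right"),
    "position": ("up", "down"),
}
DISPLACEMENT = ("upwards", "downwards")  # extra factor in EV trials


def anti_conditions() -> list[dict]:
    """All 2 x 3 x 2 x 2 x 2 = 48 combinations of the ANTI factors."""
    names = list(FACTORS)
    return [dict(zip(names, combo)) for combo in itertools.product(*FACTORS.values())]


def make_block(rng: random.Random) -> list[dict]:
    """One experimental block: 48 ANTI + 16 EV + 16 AV = 80 trials."""
    anti = [{"type": "ANTI", **cond} for cond in anti_conditions()]
    ev_pool = [{"type": "EV", **cond, "displacement": d}
               for cond in anti_conditions() for d in DISPLACEMENT]  # 96 options
    ev = rng.sample(ev_pool, 16)
    av = [{"type": "AV"} for _ in range(16)]
    block = anti + ev + av
    rng.shuffle(block)  # simplification of the platform's pseudo-randomization
    return block


block = make_block(random.Random(2024))
print(len(block), Counter(trial["type"] for trial in block))
```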

In some versions of the task, or when certain additional parameters are used (see section 5.1. Features and options), other types of trials are added (also randomly within each block). In the ANTI-Vea-D version, 8 additional trials per block include a salient image of a cartoon character, to measure distraction by irrelevant but salient information. Similarly, a variable number of thought probes (TPs) can be added to each block to measure mind wandering across time-on-task. In these trials, participants answer the question “Where was your attention just before the appearance of this question?” by moving the cursor along a continuous scale ranging from “completely on-task” (extreme left, coded as -1) to “completely off-task” (extreme right, coded as 1). Either 4, 8, or 12 TPs per block can be selected. TP trials are pseudo-randomized so that at least 5 ANTI-Vea trials occur between consecutive TPs.
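One way to satisfy the spacing constraint on thought probes is sketched below in Python. It is a hypothetical illustration, not the platform's algorithm: probe positions are drawn with a stars-and-bars transformation, so every placement with at least `min_gap` task trials between consecutive probes is equally likely. As an additional assumption of this sketch, a probe never opens a block (each probe follows at least one task trial).

```python
import random


def place_probes(n_trials: int, n_probes: int, min_gap: int,
                 rng: random.Random) -> list[int]:
    """For each thought probe, return how many task trials precede it.

    Consecutive probes are separated by at least `min_gap` task trials.
    Assumption: every probe follows at least one task trial.
    """
    if n_probes == 0:
        return []
    slack = n_trials - 1 - (n_probes - 1) * min_gap
    if slack < 0:
        raise ValueError("too many probes for this block length")
    # Stars and bars: sorted distinct draws minus their rank give a uniformly
    # random non-decreasing sequence of offsets in [0, slack].
    draws = sorted(rng.sample(range(slack + n_probes), n_probes))
    offsets = [d - i for i, d in enumerate(draws)]
    # Spreading the offsets apart by min_gap enforces the spacing constraint.
    return [1 + off + i * min_gap for i, off in enumerate(offsets)]


def interleave(trials: list, positions: list[int], probe="TP") -> list:
    """Insert a probe marker after trials[:p] for each position p."""
    sequence, start = [], 0
    for p in positions:
        sequence.extend(trials[start:p])
        sequence.append(probe)
        start = p
    sequence.extend(trials[start:])
    return sequence


rng = random.Random(7)
positions = place_probes(n_trials=80, n_probes=12, min_gap=5, rng=rng)
block = interleave(list(range(80)), positions)
print(positions)
```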

2.2. ANTI-Vea indexes

The complex structure and multiple manipulations present in the ANTI-Vea allow a wide variety of attentional functioning indexes to be obtained. The core indexes of the ANTI-Vea comprise 8 attentional network scores (ANTI) and 10 vigilance scores (EV and AV). These core indexes are described in Table 1.

ANTI scores include both the mean RT and the error rate for the overall ANTI trials, as well as the phasic alertness, orienting, and congruency (i.e., executive control) effects. For RT in ANTI trials, incorrect trials and RTs below 200 ms or above 1500 ms are usually filtered out, following Luna, Roca, et al. (2021). Vigilance scores include both overall performance indexes and their decrement slopes across task blocks. The EV measures are the percentages of hits and FAs, whereas the AV scores are the mean RT, the standard deviation (SD) of RT, and the percentage of lapses. Note that FAs are computed only on a subset of ANTI trials: those in which the random noise places the target more than 2 px from at least one of its two adjacent flankers (referred to as the FA difficult column in the trial dataset). Restricting FAs to these harder trials avoids a floor effect, which allows a decreasing trend of FAs across blocks to emerge (Luna, Roca, et al. 2021). The analytical rationale for computing FAs on this subset of ANTI trials is reviewed in detail in Luna, Barttfeld, et al. (2021).
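As a rough guide to how these scores are defined, the Python sketch below computes them from trial-level records. It is not the platform's R code: the field names (type, block, rt, correct, tone, cue, congruency, pressed_space, fa_difficult) are hypothetical; the network effects use the conventional subtractions (no tone − tone, invalid − valid, incongruent − congruent); and the 600 ms AV lapse threshold, as well as counting a missing response as a lapse, are assumptions to be checked against Table 1 and the official analysis script. Decrement slopes are ordinary least-squares slopes of each per-block score against block number.

```python
from statistics import mean, stdev

RT_MIN, RT_MAX = 200, 1500  # ANTI RT filter described in the text
LAPSE_MS = 600              # assumption: AV lapse threshold


def pct(flags) -> float:
    """Percentage of truthy values in an iterable."""
    flags = list(flags)
    return 100 * sum(bool(f) for f in flags) / len(flags)


def slope(ys: list[float]) -> float:
    """Least-squares slope of ys against block numbers 1..n."""
    xs = range(1, len(ys) + 1)
    mx, my = mean(xs), mean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def anti_scores(trials: list[dict]) -> dict[str, float]:
    anti = [t for t in trials if t["type"] == "ANTI"]
    kept = [t for t in anti if t["correct"] and RT_MIN <= t["rt"] <= RT_MAX]

    def mean_rt(**cond) -> float:
        return mean(t["rt"] for t in kept if all(t[k] == v for k, v in cond.items()))

    return {
        "mean_rt": mean(t["rt"] for t in kept),
        "error_pct": pct(not t["correct"] for t in anti),
        "alertness": mean_rt(tone=False) - mean_rt(tone=True),
        "orienting": mean_rt(cue="invalid") - mean_rt(cue="valid"),
        "congruency": mean_rt(congruency="incongruent") - mean_rt(congruency="congruent"),
    }


def vigilance_scores(trials: list[dict]) -> dict[str, float]:
    ev = [t for t in trials if t["type"] == "EV"]
    noise = [t for t in trials if t["type"] == "ANTI" and t["fa_difficult"]]
    av = [t for t in trials if t["type"] == "AV"]
    av_rts = [t["rt"] for t in av if t["rt"] is not None]
    return {
        "hits_pct": pct(t["pressed_space"] for t in ev),
        "fa_pct": pct(t["pressed_space"] for t in noise),
        "av_mean_rt": mean(av_rts),
        "av_sd_rt": stdev(av_rts),
        # Assumption: no response or RT >= LAPSE_MS counts as a lapse.
        "lapses_pct": pct(t["rt"] is None or t["rt"] >= LAPSE_MS for t in av),
    }


def decrement_slopes(trials: list[dict]) -> dict[str, float]:
    """Slope across blocks of every vigilance score."""
    blocks = sorted({t["block"] for t in trials})
    per_block = [vigilance_scores([t for t in trials if t["block"] == b]) for b in blocks]
    return {key: slope([scores[key] for scores in per_block]) for key in per_block[0]}
```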

3. Reliability of the measures

Table 2 summarizes the internal consistency of the ANTI-Vea core indexes. A recent study by Luna, Roca, et al. (2021) provides consistent evidence that the ANTI-Vea (administered either in the lab or online) is roughly as reliable as the ANT (MacLeod et al. 2010) and the ANTI-V (Roca et al. 2018) for measuring the classic attentional networks. As for EV and AV, while most of the overall scores (i.e., average performance across the entire task) showed acceptable internal consistency (i.e., split-half correlations corrected with the Spearman-Brown prophecy formula > .75) in both lab and online settings (Luna, Roca, et al. 2021), the vigilance decrement scores (i.e., the linear slopes of each vigilance outcome across the six blocks of the task) are substantially less reliable (Cásedas, Cebolla, and Lupiáñez 2022; Coll-Martín, Carretero-Dios, and Lupiáñez 2021; Luna et al. 2022). Even so, these decrement measures are reliable enough to achieve satisfactory statistical power with large samples (Coll-Martín, Carretero-Dios, and Lupiáñez 2023), which our online platform makes easier to collect.
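The Spearman-Brown correction steps a half-test correlation r up to full-test length: r_SB = 2r / (1 + r). For instance, a raw split-half correlation of .60 corresponds to a corrected value of .75. The Python sketch below illustrates an odd-even split followed by this correction; the odd-even split is only one common way of halving the trials, and the cited studies may have split trials differently. Function names are illustrative.

```python
from statistics import mean


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equally long sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def spearman_brown(r_half: float) -> float:
    """Project a half-test correlation to full-test length."""
    return 2 * r_half / (1 + r_half)


def split_half_reliability(scores_by_participant: list[list[float]]) -> float:
    """Odd-even split of each participant's trial scores (e.g., RTs),
    correlated across participants and Spearman-Brown corrected."""
    odd = [mean(trials[0::2]) for trials in scores_by_participant]
    even = [mean(trials[1::2]) for trials in scores_by_participant]
    return spearman_brown(pearson_r(odd, even))


print(round(spearman_brown(0.60), 2))  # 0.75
```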

Previous studies have also shown that the ANTI-Vea is suitable for repeated sessions, supporting the stability of the task’s scores. In Sanchis et al. (2020), participants completed the ANTI-Vea in the lab in six repeated sessions. Although some EV and AV scores were modulated by the experimental manipulations (i.e., caffeine intake and exercise intensity), most of the task’s scores were not modulated across the experimental sessions. To specifically assess the stability of the online ANTI-Vea, we have conducted a pre-registered study in which 20 participants completed the online task across ten repeated sessions (https://osf.io/vh2g9/; unpublished data). Preliminary analyses showed that the main effects of phasic alertness and executive control were not modulated across sessions. Most importantly, neither the drop in hits for EV nor the increase in mean RT for AV was modulated across sessions. Interestingly, as observed by Ishigami and Klein (2010) for the ANT and ANTI tasks, the split-half reliability of the online ANTI-Vea scores increases with the number of sessions.

4. How? Online version

Having explained what the ANTI-Vea is and what it measures, and having shown that its measures are reliable, we now describe the characteristics of the platform our team has developed, the contexts in which the ANTI-Vea can be applied, and, above all, how to collect, analyze, and interpret the data. Note that the versions of the task currently on the platform can only be administered on a computer.

The ANTI-Vea-UGR platform (https://anti-vea.ugr.es) is a research resource offered free of charge to researchers interested in investigating attention. It was built with JavaScript ES5, HTML5, CSS3, and AngularJS. The platform allows researchers to collect data in the laboratory or online with the available task versions. Researchers can also download the scripts of these attentional tasks in different programming languages to adapt or modify the existing versions. Although this is not typical, researchers can also choose to administer the online version of the task in a lab setting, or to send participants the offline (i.e., downloaded) version of the task so that they can run it outside the lab.

It is possible to run the complete ANTI-Vea task with ANTI, EV, and AV trials, or to present all stimuli while participants respond only to specific trial types (ANTI, EV, or AV as single tasks, or EV and AV as a dual task). Thus, in one version participants respond only to ANTI trials, which provides only the main measures of the three attentional networks. Similarly, in other versions all trial types are presented but participants respond only to EV trials (SART) or only to AV trials (PVT), which provides measures of EV or AV, respectively. In addition, the same task versions are available with only the corresponding trial type presented (ANTI-Only, PVT-Only, SART-Only-Go, SART-Only-NoGo). Two SART versions are provided, depending on whether participants respond to all trials except the displaced-arrow trials (SART-Only-NoGo) or only to the displaced-arrow trials (SART-Only-Go). Note that the SART and PVT tasks provided on the ANTI-Vea-UGR platform are adapted from the original tasks and run with the specific parameters of the ANTI-Vea.

Furthermore, it is possible to run an ANTI-Vea version with 8 additional trials per block in which a salient image of a cartoon character is added to measure irrelevant distraction (ANTI-Vea-D). The addition of the salient image does not seem to affect the measurement of the other attentional indexes of the ANTI-Vea (Coll-Martín, Carretero-Dios, and Lupiáñez 2021, 2023).

Finally, the tasks can be run with the standard parameters (presented in Table 3) or with some variations of these parameters (e.g., without practice, with more or fewer blocks of trials, with varying degrees of difficulty, and with longer or shorter stimulus duration).

Although experimental conditions (e.g., environmental noise, luminosity, or the device on which the task is run) cannot be controlled as well as in the laboratory (Luna, Roca, et al. 2021), this platform addresses the growing need for online administration of tasks and self-report measures to collect large samples (Germine et al. 2012). Online administration has clear advantages and is easy to use in applied contexts. In terms of time and cost-efficiency, the online version of the task is far less expensive (e.g., there is no need for laboratory infrastructure or for a person to explain the task individually to each participant), and it allows data to be collected from participants anywhere in the world. It should be emphasized that the online version of the ANTI-Vea has been shown to be as reliable as the lab version in assessing the main effects, interactions, and independence of the classic attentional components, along with the overall performance and decrement in EV and AV (Cásedas, Cebolla, and Lupiáñez 2022; Coll-Martín, Carretero-Dios, and Lupiáñez 2021; Luna, Roca, et al. 2021).

In the studies conducted by our research group, the procedure is carried out under the same conditions for all participants: individually in an experimental room, with headphones, on the same device, and under the same conditions of luminosity, distance from the screen, etc. When participants perform the task online, they can do so from home or from any suitable place of their choice, as long as they have a good internet connection. To reduce distractions, the online version of the ANTI-Vea includes additional instructions at the beginning. Participants are warned that the task will be displayed in full screen, that it is important to complete it without interruptions or pauses, and that any other entertainment devices (TV or radio) must be turned off. The instructions also recommend setting the device volume to 75% and turning off cell phones or setting them to silent mode. The experimenter may also monitor the session by video call and give instructions to ensure that participants understand and perform the task correctly. Group sessions can also be conducted with the ANTI-Vea platform, as the online server supports multiple simultaneous sessions.

5. Online version. Multiple versions in different languages

The standard ANTI-Vea and its versions on the platform can be run in six languages: Spanish, English, German, French, Italian, and Polish. This is a remarkable feature, as it allows users to study attentional functioning within and across different countries and cultures. We are open to incorporating additional languages to extend free access to attention assessment to diverse populations.

5.1. Features and options

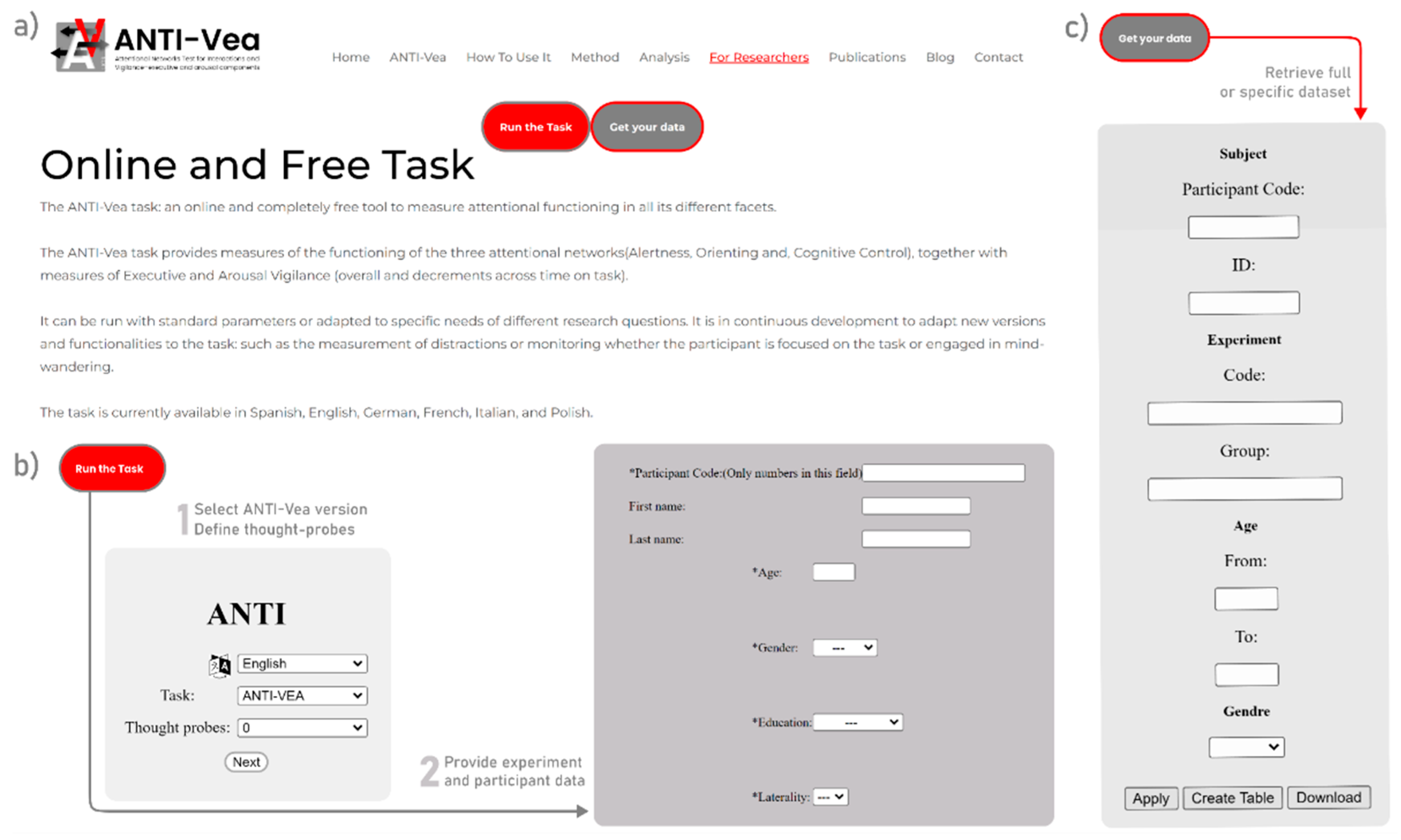

On the website, you can find specific sections describing different features of the task. The Home section (Figure 2.a) presents a brief description of the task, as well as statistics on website visits, years in use, number of participants, published papers related to the task, available languages of the task, and countries using the tool worldwide. The webpage also offers more detailed information about the task and the different ANTI-Vea versions. There is also a How to use it section and other useful menu items such as ANTI-Vea Method, Analysis, For Researchers, Publications, Blog, and Contact.

The “How to use it” section of the website provides all the information needed to collect data with the task, analyze it, and interpret it. To collect data with the online ANTI-Vea or any of its versions (whether you use the online website inside or outside the lab), first click on the red “Run the task” button. This brings you to the online website for data collection (Figure 2.b). There, you (or the participants) select the instruction language, the version of the task to be run (default: standard ANTI-Vea), and the number of thought probes to present during the task (default: no thought probes). After clicking on “Next”, the participant’s details have to be entered: Participant Code (only numbers are allowed in this field), First and Last Name (both optional and not visible when you download the data), Age, Gender (“Male”/“Female”), Education (“No education”/“Primary”/“Secondary”/“High School”/“Universitary”), and Laterality (“Left handed”/“Right handed”/“Ambidextrous”). The required fields are marked with an asterisk (“*”). On the next page, you have to enter the Experiment and Group codes. Although these fields are optional, it is very important to provide a code, because it will later be used to download the data for your specific experiment.

With the “Settings” button you can change the parameters of the task procedure, namely Noise, Difficulty, Stimuli Duration, and Number of Blocks, all with the same values as those shown for the link options (see Table 3; Stimuli Duration, however, is offered here with a limited set of discrete options). In this Settings window, you can also uncheck the “Do practice blocks” option to run the task without practice (only the instructions are presented, to remind participants of the response keys). This is useful in studies that manipulate a within-participant variable (e.g., exercise level, time of day, caffeine intake). In this case, participants can first perform the whole task in a familiarization session and then perform it as many times as your within-participant conditions require, without the practice blocks (for an example of this procedure, see Sanchis et al. 2020).

5.2. Data collection and data protection. Specific know-how information about use of the task in research

In the For Researchers section, we provide a system that allows each user to create a customized, unique link through which each participant accesses the task. Table 3 shows all the setting parameters that can be customized to fit the task to your experimental procedure. Note that this is an advanced option for the data collection process. It is useful when the experimenter does not want to give participants control over the task settings. For example, the experimenter may want to ensure that all participants correctly enter their code (unique for each participant) and the name of the experiment (the same for all participants in a given study), and that they perform the practice trials plus just four experimental blocks.

As for data protection, note that providing identifiable information (i.e., first and last name) is optional, and this information is never available for download. Furthermore, the platform's server is managed by the University of Granada, a public institution that adheres to data protection policies and maintains strict ethical guidelines in line with standard academic practice. Researchers can request the removal of their participants' data or study information from our database at any time, and participants have the right to request access to or deletion of their data. To ensure that participants understand how their data will be collected and treated, their rights during and after participation, the study's objectives, and any other relevant aspects, researchers must provide them with detailed information on these points, and participants must sign an informed consent form confirming their understanding and agreement before taking part.

5.3. Exporting data. Specific know-how information and tools about use and management of the task data in research

Once the data has been collected, click on the gray “Get your data” button (located right next to the red “Run the task” button mentioned above) to download your raw data file in CSV format. There, enter the details you used during data collection (typically, the Experiment Code) and click on the “Download” button (Figure 2.c).

In the raw data file, each row contains the information for a single trial of the task. The first columns show the participant’s details entered at the beginning (i.e., Participant Code, Age, Gender, Education, Laterality, Experiment, and Experiment Group). Some extra details are provided, such as the Subject ID (an identifier automatically generated by the system for each participant²), the Session Number (automatically generated by the system based on the Subject ID), and the Session Date (yyyy-mm-dd hh:mm:ss). These are followed by the Noise and Difficulty task settings. The next consecutive columns are the ones used during the analysis: Trial time (the time elapsed from the start of the task until the trial begins, in milliseconds), Block (the block number: 0 for practice and 1 to n for the experimental blocks), Trial number (1 to 16, 32, 48, and 40 for the four practice blocks, respectively, and 1 to 80 for the experimental blocks in the standard ANTI-Vea), Trial type (ANTI, EV, or AV in the standard ANTI-Vea), Reaction time (in milliseconds), Correct answer (C, M, Space, or Any), Answer (the key the participant pressed), Accuracy (0 or 1 for incorrect and correct trials, respectively), FA Total (1 if the participant committed a FA and 0 otherwise), and FA difficult (same as FA Total but only computed when the target is more than 2 px from at least one of its two adjacent flankers). The next columns describe the stimulus characteristics relevant to ANTI or EV trials (i.e., they are not interpretable in AV trials): Target (the direction the target arrow points; Right or Left), Flankers (the direction the distractors point; Right or Left), Congruency (Congruent or Incongruous), Cue position (Up or Down), Arrows position (Up or Down), Validity (Valid, Invalid, or No_cue), and Tone (Yes or No).
Finally, after some columns with the coordinates of the arrows and others describing characteristics of some subtasks, there is the Task Version column (ANTI_VEA, ANTI, SART, PVT, SART-PVT, ANTI-Only, SART-Only-Go, SART-Only-NoGo, PVT-Only, or ANTI-VEA-D).

6. Analyzing data. Scripts and tools for analysis of data

In the Analysis section, you will find instructions and tools to analyze your data. What follows describes the standard procedure; researchers may choose alternative analytic strategies depending on their specific research aims. Starting from the raw dataset downloaded from the “Get your data” section, the analysis typically begins with a pre-processing phase in which practice trials are removed and participants with incomplete experimental blocks, minimization of the task (i.e., unintentional exits from the full-screen display mode leading to incorrectly registered trials), or poor performance are identified. Because the raw data are available, exclusion thresholds can be chosen according to the characteristics of each particular study (type of participants, design, resource constraints, etc.). In adult community samples, we recommend excluding participants with incomplete blocks or with more than 25% errors in ANTI trials, following Luna, Roca, et al. (2021). Once the data has been processed, the main analysis consists of obtaining each participant's score on the different indexes of the task.
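The recommended exclusion steps can be sketched in Python as follows. This is a hypothetical illustration rather than the Shiny app's code: the row keys (session_id, block, trial_type, accuracy) are stand-ins for the corresponding raw-data columns, the defaults assume the standard six blocks of 80 trials, and the check for task minimization is left out because it relies on columns not modeled here.

```python
from collections import defaultdict

MAX_ANTI_ERROR_PCT = 25.0  # recommended cut-off for adult community samples


def preprocess(rows: list[dict], n_blocks: int = 6,
               trials_per_block: int = 80) -> tuple[list[dict], list]:
    """Drop practice trials and exclude sessions that are incomplete or
    exceed the ANTI error cut-off. Returns (kept_rows, excluded_sessions)."""
    by_session = defaultdict(list)
    for r in rows:
        trials = by_session[r["session_id"]]  # registers every session
        if r["block"] != 0:                    # block 0 = practice
            trials.append(r)

    kept, excluded = [], []
    for session, trials in by_session.items():
        per_block = defaultdict(int)
        for r in trials:
            per_block[r["block"]] += 1
        complete = (set(per_block) == set(range(1, n_blocks + 1))
                    and all(n == trials_per_block for n in per_block.values()))
        anti = [r for r in trials if r["trial_type"] == "ANTI"]
        error_pct = (100 * sum(r["accuracy"] == 0 for r in anti) / len(anti)
                     if anti else 100.0)
        if complete and error_pct <= MAX_ANTI_ERROR_PCT:
            kept.extend(trials)
        else:
            excluded.append(session)
    return kept, excluded
```

In practice, the same filters are exposed in the Shiny app through its Minimal Blocks Completed and Validity Performance options; the sketch only shows the underlying logic.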

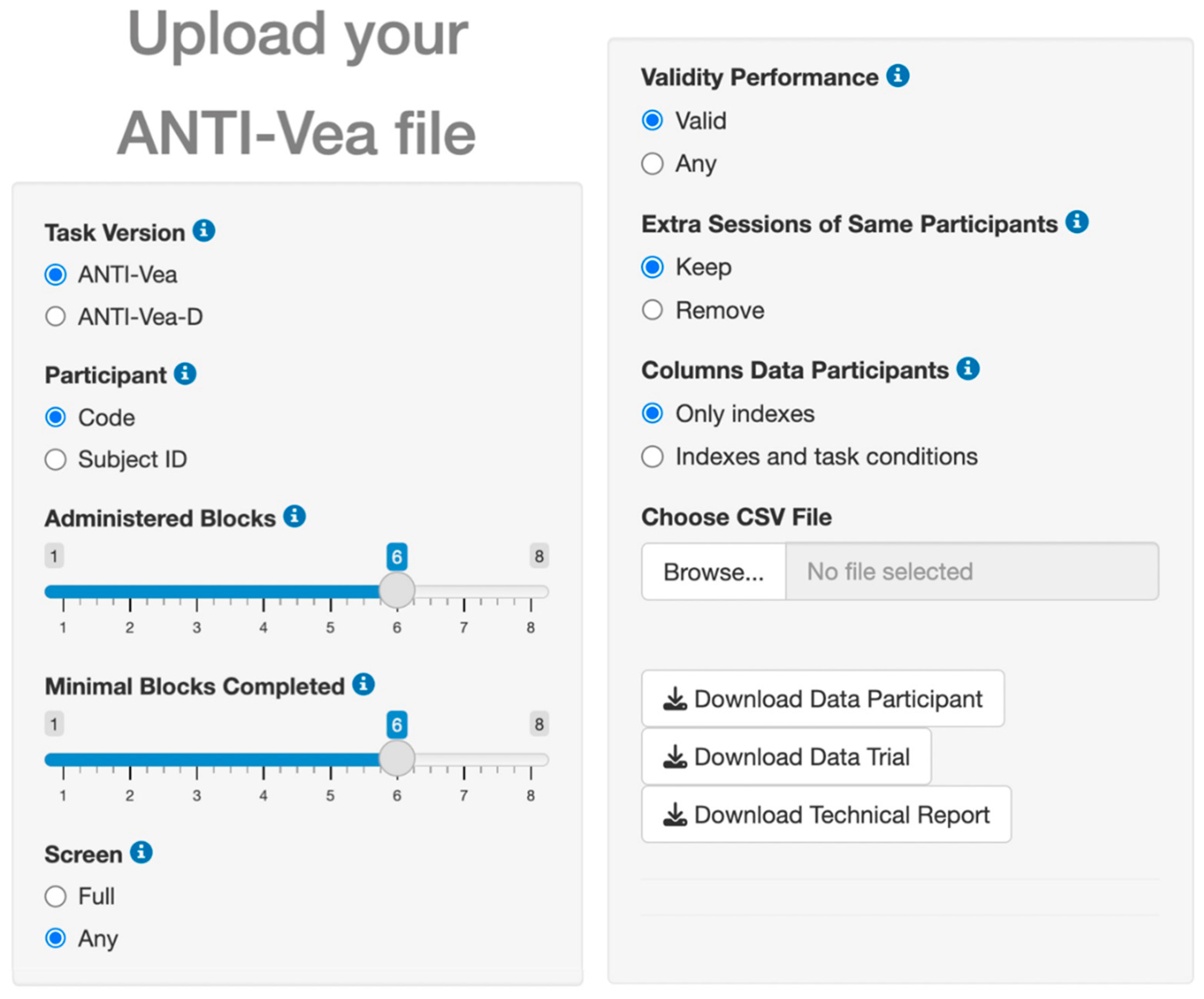

To support and facilitate the computation of the ANTI-Vea core indexes described in Table 1, we have developed R code that is implemented in a Shiny app embedded in the Analysis section of the website (Figure 3). This app transforms a raw ANTI-Vea dataset into two clean, processed datasets, Data Participant and Data Trial, both in CSV format. In Data Participant, each row contains the information of one task session, with columns including general information about the session (date of the session, noise, difficulty, trials and blocks completed, validity of the performance, etc.) as well as the scores of the ANTI-Vea core indexes in that session. Data Trial has the same structure as the raw dataset (i.e., trial-level rows), with additional columns related to the session. No programming knowledge is required: the user only needs to select the desired options for the following parameters: Task Version, Participant (column used to identify each participant), Administered Blocks per session, Minimal Blocks Completed (sessions with fewer completed blocks are removed), Screen (remove [Full] or retain [Any] sessions in which the participant minimized the screen), Validity Performance (remove [Valid] or retain [Any] sessions with poor performance), Extra Sessions of the Same Participant, and Columns shown in the Data Participant file related to task indexes. The website includes sample CSV files for Data Participant and Data Trial, as well as their corresponding codebooks to ensure that they are correctly interpreted.
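To make the step from trial-level rows to a participant-level score concrete, here is a minimal Python sketch of one difference score, the congruency (flanker) effect, computed as mean RT in incongruent minus congruent trials. It is an illustration under stated assumptions, not the Shiny app’s algorithm: the exact definitions of the core indexes are those in Table 1, and the restriction to correct ANTI trials from experimental blocks, the column names, and the function name `congruency_effect` are all assumptions.

```python
from collections import defaultdict
from statistics import mean


def congruency_effect(rows):
    """Per-participant congruency effect: mean RT (Incongruous) - mean RT (Congruent).

    Assumes trial-level dicts with 'Participant Code', 'Block', 'Trial type',
    'Accuracy', 'Congruency', and 'Reaction time' keys. Only correct ANTI
    trials from experimental blocks (Block != 0) are used.
    """
    rts = defaultdict(lambda: {"Congruent": [], "Incongruous": []})
    for r in rows:
        # Skip practice trials, non-ANTI trials, and errors.
        if int(r["Block"]) == 0 or r["Trial type"] != "ANTI" or int(r["Accuracy"]) != 1:
            continue
        cond = r["Congruency"]
        if cond in ("Congruent", "Incongruous"):
            rts[r["Participant Code"]][cond].append(float(r["Reaction time"]))

    # Participants lacking either condition get no score.
    return {
        pid: mean(c["Incongruous"]) - mean(c["Congruent"])
        for pid, c in rts.items()
        if c["Congruent"] and c["Incongruous"]
    }
```

Other difference scores would follow the same pattern with a different pair of conditions (e.g., levels of Validity or Tone).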

Finally, the Shiny app includes the option to download a technical report (PDF format) of the whole analysis procedure and summary statistics of the task indices (see the website for a sample report).

For those with some programming skills, the R code underlying the Shiny app (default settings) is openly available on the website. This format can help users better understand the code and modify the analysis flow (e.g., applying different filters or computing new indexes). Indeed, beyond the ANTI-Vea core indexes, several other outcomes of the task are worth considering. First, the conditions that are manipulated to obtain the effects of the three attentional networks and the slopes of the vigilance decrement can be analyzed separately for a more detailed analysis (e.g., comparing congruent and incongruent conditions between two groups via a 2 × 2 mixed ANOVA). Analyzing the conditions separately also makes it possible to check whether the task manipulation worked, although this can also be checked with a one-sample t-test on the difference scores or slopes from the ANTI-Vea core indexes. Second, new indexes have been or may be derived from the core ones, such as the slope of cognitive control (Luna et al. 2022), mean and variability of RT in EV trials (Sanchis et al. 2020), scores from Signal Detection Theory (SDT; i.e., sensitivity and response criterion; Luna et al. 2018), sequential effects such as post-error slowing and the Gratton effect (Román-Caballero, Martín-Arévalo, and Lupiáñez 2021), scores from psychometric-curve analysis (i.e., scale, shift, and lapse rate; Román-Caballero, Martín-Arévalo, and Lupiáñez 2023), between-block variability of vigilance scores, and scores from the diffusion decision model (i.e., drift rate, boundary separation, starting point, and non-decision component). We are in the process of implementing these additional scores in the R code, and suggestions for new additions are welcome.
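For the SDT scores mentioned above, a common way to obtain sensitivity (d') and response criterion (c) from EV hits and FAs is sketched below in Python. The log-linear correction (adding 0.5 to each count and 1 to each total) is one standard way of avoiding infinite z-scores when a rate is 0 or 1; whether the ANTI-Vea R code applies this or another correction is an assumption, as is the function name `sdt_scores`.

```python
from statistics import NormalDist


def sdt_scores(hits, n_signal, false_alarms, n_noise):
    """Return (d_prime, c) for one participant (or one block).

    hits / n_signal: correct detections among signal trials (e.g., EV trials).
    false_alarms / n_noise: detection responses among non-signal trials
    (e.g., the ANTI trials in which FAs are counted).
    A log-linear correction keeps both rates strictly between 0 and 1.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hit_rate = (hits + 0.5) / (n_signal + 1)
    fa_rate = (false_alarms + 0.5) / (n_noise + 1)
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))  # larger c = more conservative
    return d_prime, criterion
```

Computed per block, a rising c alongside a roughly stable d' would correspond to a shift in response bias without a loss of sensitivity.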

7. Discussion. Summary of published research with ANTI-Vea

The aim of the present tutorial was to provide a detailed, step-by-step user guide to the ANTI-Vea-UGR online platform (https://anti-vea.ugr.es/index.php), enabling researchers worldwide to collect, download, and analyze data using the ANTI-Vea task (Luna et al. 2018) and its adapted versions.

The ANTI-Vea is the latest version in the family of Attention Network Tests for measuring the functioning and interactions of the three attentional networks described by Posner and Petersen (1990). It combines different paradigms to assess phasic alertness, orienting, and executive control together: the typical flanker paradigm (Eriksen and Eriksen 1974), the spatial cueing task (Posner 1980), and the auditory tone used in the ANTI (Callejas, Lupiáñez, and Tudela 2004). Moreover, one of the most remarkable contributions of the ANTI-Vea is the theoretical distinction between two components of vigilance: EV, which refers to the ability to monitor and detect critical signals that rarely occur over a long period of time, and AV, understood as the capacity to maintain a fast response to any stimulus in the environment (Luna et al. 2018). Both components had previously been described and tested separately, with the MCT (Mackworth 1948) and the SART (Robertson et al. 1997) for EV, and with the PVT (Dinges and Powell 1985) for AV. The ANTI-Vea, however, assesses both vigilance components together in a single session.

The vigilance decrement manifests as an increase in the mean and variability of RT in AV trials, in which participants have to stop a millisecond countdown as quickly as possible. In contrast, in EV trials, where participants have to detect the infrequent vertical displacement of the central arrow (the target), the results show no substantial loss of sensitivity to these infrequent stimuli (see Note 2). Rather, participants’ response bias increases (i.e., they adopt a more conservative response criterion), in line with the review by Thomson, Besner, and Smilek (2016). This interpretation could be challenged if FAs showed a floor effect, which is frequently observed in simple signal detection tasks such as the SART (Luna, Roca, et al. 2021). To avoid this floor effect in the ANTI-Vea, which is a more complex task, FAs are only computed in those ANTI trials in which an FA response is more likely to be observed (Luna, Barttfeld, et al. 2021).
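The decrements described above are summarized in the ANTI-Vea as slopes across blocks. As a minimal sketch, assuming an ordinary least-squares slope of a per-block score on block number (the exact computation in the ANTI-Vea R code may differ), the hypothetical function `decrement_slope` below returns that slope. Under this convention, a positive slope for mean AV RT, or a negative slope for EV hit rate, would indicate a decrement.

```python
def decrement_slope(block_scores):
    """OLS slope of a per-block score (e.g., mean AV RT or EV hit rate) on block number.

    block_scores[i] is the score in experimental block i + 1.
    """
    n = len(block_scores)
    if n < 2:
        raise ValueError("at least two blocks are needed to estimate a slope")
    xs = range(1, n + 1)
    x_bar = (n + 1) / 2                # mean of 1..n
    y_bar = sum(block_scores) / n
    # Covariance and variance terms of the least-squares slope.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, block_scores))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx
```

Slopes computed this way per participant could then enter the one-sample t-test against zero mentioned in the Analysis section.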

A number of studies have used this task since its implementation (Cásedas, Cebolla, and Lupiáñez 2022; Coll-Martín, Carretero-Dios, and Lupiáñez 2021; Feltmate, Hurst, and Klein 2020; Hemmerich et al. 2023; Román-Caballero, Martín-Arévalo, and Lupiáñez 2021). Furthermore, the ANTI-Vea itself, as well as some studies that have used it, have been featured in various outreach reports, which can be found in the “Blog” section of the website.

When participants perform this task online on their own, some potential difficulties may cast doubt on the validity of the obtained data. Lighting conditions, distance to the screen, environmental noise, and device features (operating system, screen size, etc.) may vary between participants. In addition, participants may not understand the instructions and may not perform the task properly. Nevertheless, vigilance has been successfully assessed in other online studies in which experimental conditions were not controlled as tightly as in typical laboratory studies (Claypoole et al. 2018; Fortenbaugh et al. 2015; Sadeh, Dan, and Bar-Haim 2011). Indeed, Luna, Roca, et al. (2021) concluded that the online ANTI-Vea was as effective as the standard laboratory ANTI-Vea in assessing the functioning and interactions of the classical attentional components, along with EV and AV decrements. Researchers who wish to monitor the conditions under which participants perform the task can, at the cost of some additional time, hold a video call beforehand to explain all the necessary conditions, or keep the video call open while participants complete the task online from their home or another place that meets the desired experimental conditions (to ensure they perform it correctly). Several studies (e.g., Cásedas, Cebolla, and Lupiáñez 2022) have used the online ANTI-Vea to reach large samples of participants from remote places and countries. In short, this platform allows research teams to investigate human attention in a simpler, cheaper, and more accessible way.

Thanks to the versatility of our online platform, the task and its different sub-versions can be used to study variations in attentional functioning across populations, such as individuals with attention-deficit/hyperactivity disorder symptoms (e.g., Coll-Martín, Carretero-Dios, and Lupiáñez 2021; Coll-Martín, Carretero-Dios, and Lupiáñez 2023; Coll-Martín, Sonuga-Barke et al. 2023), athletes (Huertas et al. 2019), and musicians (Román-Caballero, Martín-Arévalo, and Lupiáñez 2021), as well as under multiple conditions (e.g., caffeine intake and exercise intensity; Sanchis et al. 2020) or states (e.g., fatigued, relaxed, mindful, excited; see, for instance, Feltmate, Hurst, and Klein 2020).

In summary, the online ANTI-Vea task can be run with standard parameters or adapted to the specific needs of different research questions. The platform is under continuous development, with new versions and functionalities being added to the task, such as the measurement of distraction or monitoring whether participants are focused on the task or mind-wandering.

8. Conclusions

In conclusion, the ANTI-Vea-UGR platform provides a rigorous, accessible, and free assessment of attentional functioning, encompassing the three attentional networks and the two vigilance components. These functions, grounded in influential theoretical frameworks and extensive empirical research, are measured with reasonable reliability by the ANTI-Vea, the main task of the platform. The resources for online data collection, available in different languages, and for data analysis through a user-friendly app facilitate task administration by different researchers and in diverse contexts and populations. Finally, the platform’s free nature aligns with open science principles, and it is supported by a public institution that ensures proper data protection. We therefore encourage researchers to take advantage of this resource to advance the study of attention across different areas.

Author Contributions

Conceptualization, J.L., E.M-A., F.B., A.M. and F.G.L.; methodology, J.L., E.M-A., F.G.L., T.C-M. and R.R-C.; software, M.R.M-C.; validation, T.C-M., R.R-C., L.C., F.G.L. and M.J.A.; formal analysis, J.L., L.C., T.C-M., R.R-C., F.G.L. and M.J.A.; investigation, T.C-M., R.R-C., L.C., F.G.L. and M.J.A.; resources, F.B. and M.R.M-C.; data curation, M.R.M-C.; writing—original draft preparation, L.T., P.C.M-S., T.C-M., R.R-C., K.H., G.M., F.B., M.J.A., J.L., M.C.C. and F.G.L.; writing—review and editing, J.L., E.M-A., F.G.L., T.C-M., R.R-C., L.C., M.J.A., K.H., L.T., P.C.M-S., G.M., A.M., F.B., M.R.M-C. and M.C.C.; visualization, T.C-M., R.R-C., G.M. and K.H.; supervision, J.L., E.M-A. and F.G.L.; project administration, J.L., E.M-A., M.C.C. and F.G.L.; funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

Research reported in this paper was supported by the Spanish MCIN/AEI/10.13039/501100011033/ through grant number PID2020-114790GB-I00, and by research project P20-00693, funded by the Consejería de Universidad, Investigación e Innovación de la Junta de Andalucía and by FEDER, a way of making Europe, to Juan Lupiáñez.

Acknowledgments

This paper is part of the doctoral dissertation by Tao Coll-Martín under the supervision of Juan Lupiáñez and Hugo Carretero-Dios. T.C.-M. was funded by a predoctoral fellowship (FPU17/06169) from the Spanish Ministry of Education, Culture, and Sport.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the tasks and the tool, in the writing of the manuscript, or in the decision to publish the paper.

References

- Al-Shargie, Tariq, Mir, Alawar, Babiloni, and Al-Nashash. 2019. “Vigilance Decrement and Enhancement Techniques: A Review.” Brain Sciences 9 (8): 178. [CrossRef]

- Basner, Mathias, Daniel Mollicone, and David F. Dinges. 2011. “Validity and Sensitivity of a Brief Psychomotor Vigilance Test (PVT-B) to Total and Partial Sleep Deprivation.” Acta Astronautica 69 (11–12): 949–59. [CrossRef]

- Callejas, Alicia, Juan Lupiáñez, María Jesús Funes, and Pío Tudela. 2005. “Modulations among the Alerting, Orienting and Executive Control Networks.” Experimental Brain Research 167 (1): 27–37. [CrossRef]

- Callejas, Alicia, Juan Lupiáñez, and Pío Tudela. 2004. “The Three Attentional Networks: On Their Independence and Interactions.” Brain and Cognition 54 (3): 225–27. [CrossRef]

- Casagrande, Maria, Andrea Marotta, Diana Martella, Elisa Volpari, Francesca Agostini, Francesca Favieri, Giuseppe Forte, et al. 2022. “Assessing the Three Attentional Networks in Children from Three to Six Years: A Child-Friendly Version of the Attentional Network Test for Interaction.” Behavior Research Methods 54 (3): 1403–15. [CrossRef]

- Cásedas, Luis, Ausiàs Cebolla, and Juan Lupiáñez. 2022. “Individual Differences in Dispositional Mindfulness Predict Attentional Networks and Vigilance Performance.” Mindfulness 13 (March): 967–81. [CrossRef]

- Claypoole, Victoria L., Alexis R. Neigel, Nicholas W. Fraulini, Gabriella M. Hancock, and James L. Szalma. 2018. “Can Vigilance Tasks Be Administered Online? A Replication and Discussion.” Journal of Experimental Psychology: Human Perception and Performance 44 (9): 1348–55. [CrossRef]

- Coll-Martín, Tao, Edmund J. S. Sonuga-Barke, Hugo Carretero-Dios, and Juan Lupiáñez. 2023. “Are attentional processes differentially related to symptoms of ADHD in childhood versus adulthood? Evidence from a multi-sample of community adults.” Unpublished manuscript, last modified 13 June. Microsoft Word file.

- Coll-Martín, Tao, Hugo Carretero-Dios, and Juan Lupiáñez. 2021. “Attentional Networks, Vigilance, and Distraction as a Function of Attention-deficit/Hyperactivity Disorder Symptoms in an Adult Community Sample.” British Journal of Psychology 112 (4): 1053–79. [CrossRef]

- Coll-Martín, Tao, Hugo Carretero-Dios, and Juan Lupiáñez. 2023. “Attention-Deficit/Hyperactivity Disorder Symptoms as Function of Arousal and Executive Vigilance: Testing the Halperin and Schulz’s Neurodevelopmental Model in an Adult Community Sample.” Available online: https://psyarxiv.com/jzk7b/ (accessed on 13 June 2016).

- Corbetta, Maurizio. 1998. “Frontoparietal Cortical Networks for Directing Attention and the Eye to Visual Locations: Identical, Independent, or Overlapping Neural Systems?” Proceedings of the National Academy of Sciences of the United States of America 95 (3): 831–38.

- Dinges, David F., and John W. Powell. 1985. “Microcomputer Analyses of Performance on a Portable, Simple Visual RT Task during Sustained Operations.” Behavior Research Methods, Instruments, & Computers 17 (6): 652–55. [CrossRef]

- Doran, S. M., H. P. Van Dongen, and David F. Dinges. 2001. “Sustained Attention Performance during Sleep Deprivation: Evidence of State Instability.” Archives Italiennes de Biologie 139 (3): 253–67. [CrossRef]

- Eriksen, Barbara A., and Charles W. Eriksen. 1974. “Effects of Noise Letters upon the Identification of a Target Letter in a Nonsearch Task.” Perception & Psychophysics 16 (1): 143–49. [CrossRef]

- Fan, J., Bruce D. McCandliss, Tobias Sommer, Amir Raz, and Michael I. Posner. 2002. “Testing the Efficiency and Independence of Attentional Networks.” Journal of Cognitive Neuroscience 14 (3): 340–47. [CrossRef]

- Feltmate, Brett B. T., Austin J. Hurst, and Raymond M. Klein. 2020. “Effects of Fatigue on Attention and Vigilance as Measured with a Modified Attention Network Test.” Experimental Brain Research 238 (11): 2507–19. [CrossRef]

- Fernandez-Duque, Diego, and Michael I. Posner. 2001. “Brain Imaging of Attentional Networks in Normal and Pathological States.” Journal of Clinical and Experimental Neuropsychology 23 (1): 74–93. [CrossRef]

- Fortenbaugh, Francesca C., Joseph Degutis, Laura Germine, Jeremy B. Wilmer, Mallory Grosso, Kathryn Russo, and Michael Esterman. 2015. “Sustained Attention across the Life Span in a Sample of 10,000: Dissociating Ability and Strategy.” Psychological Science 26 (9): 1497–1510. [CrossRef]

- Germine, Laura, Ken Nakayama, Bradley C. Duchaine, Christopher F. Chabris, Garga Chatterjee, and Jeremy B. Wilmer. 2012. “Is the Web as Good as the Lab? Comparable Performance from Web and Lab in Cognitive/Perceptual Experiments.” Psychonomic Bulletin & Review 19 (5): 847–57. [CrossRef]

- Hemmerich, Klara, Juan Lupiáñez, Fernando G. Luna, and Elisa Martín-Arévalo. 2023. “The Mitigation of the Executive Vigilance Decrement via HD-TDCS over the Right Posterior Parietal Cortex and Its Association with Neural Oscillations.” Cerebral Cortex bhac540 (January): 1–11. [CrossRef]

- Huertas, Florentino, Rafael Ballester, Honorato José Gines, Abdel Karim Hamidi, Consuelo Moratal, and Juan Lupiáñez. 2019. “Relative Age Effect in the Sport Environment. Role of Physical Fitness and Cognitive Function in Youth Soccer Players.” International Journal of Environmental Research and Public Health 16 (16): 2837. [CrossRef]

- Ishigami, Yoko, and Raymond M. Klein. 2010. “Repeated Measurement of the Components of Attention Using Two Versions of the Attention Network Test (ANT): Stability, Isolability, Robustness, and Reliability.” Journal of Neuroscience Methods 190 (1): 117–28. [CrossRef]

- Johnston, Samantha-Kaye, Neville W. Hennessey, and Suze Leitão. 2019. “Determinants of Assessing Efficiency within Auditory Attention Networks.” The Journal of General Psychology 146 (2): 134–69. [CrossRef]

- Lamond, Nicole, Sarah M. Jay, Jillian Dorrian, Sally A. Ferguson, Gregory D. Roach, and Drew Dawson. 2008. “The Sensitivity of a Palm-Based Psychomotor Vigilance Task to Severe Sleep Loss.” Behavior Research Methods 40 (1): 347–52. [CrossRef]

- Loh, Sylvia, Nicole Lamond, Jill Dorrian, Gregory Roach, and Drew Dawson. 2004. “The Validity of Psychomotor Vigilance Tasks of Less than 10-Minute Duration.” Behavior Research Methods, Instruments, & Computers 36 (2): 339–46. [CrossRef]

- Luna, Fernando Gabriel, Pablo Barttfeld, Elisa Martín-Arévalo, and Juan Lupiáñez. 2021. “The ANTI-Vea Task: Analyzing the Executive and Arousal Vigilance Decrements While Measuring the Three Attentional Networks.” Psicológica 42 (1): 1–26. [CrossRef]

- Luna, Fernando Gabriel, Julián Marino, Javier Roca, and Juan Lupiáñez. 2018. “Executive and Arousal Vigilance Decrement in the Context of the Attentional Networks: The ANTI-Vea Task.” Journal of Neuroscience Methods 306 (August): 77–87. [CrossRef]

- Luna, Fernando Gabriel, Javier Roca, Elisa Martín-Arévalo, and Juan Lupiáñez. 2021. “Measuring Attention and Vigilance in the Laboratory vs. Online: The Split-Half Reliability of the ANTI-Vea.” Behavior Research Methods 53 (3): 1124–47. [CrossRef]

- Luna, Fernando Gabriel, Miriam Tortajada, Elisa Martín-Arévalo, Fabiano Botta, and Juan Lupiáñez. 2022. “A Vigilance Decrement Comes along with an Executive Control Decrement: Testing the Resource-Control Theory.” Psychonomic Bulletin & Review 29 (April): 1831–43. [CrossRef]

- Mackworth, N. H. 1948. “The Breakdown of Vigilance during Prolonged Visual Search.” Quarterly Journal of Experimental Psychology 1 (1): 6–21. [CrossRef]

- Mackworth, N. H. 1950. “Researches on the Measurement of Human Performance.” Journal of the American Medical Association 144 (17): 1526. [CrossRef]

- MacLeod, Jeffrey W., Michael A. Lawrence, Meghan M. McConnell, Gail A. Eskes, Raymond M. Klein, and David I. Shore. 2010. “Appraising the ANT: Psychometric and Theoretical Considerations of the Attention Network Test.” Neuropsychology 24 (5): 637–51. [CrossRef]

- Petersen, Steven E., and Michael I. Posner. 2012. “The Attention System of the Human Brain: 20 Years After.” Annual Review of Neuroscience 35 (1): 73–89. [CrossRef]

- Posner, Michael I. 1980. “Orienting of Attention.” The Quarterly Journal of Experimental Psychology 32 (1): 3–25. [CrossRef]

- Posner, Michael I. 1994. “Attention: The Mechanisms of Consciousness.” Proceedings of the National Academy of Sciences 91 (16): 7398–7403. [CrossRef]

- Posner, Michael I., and Stanislas Dehaene. 1994. “Attentional Networks.” Trends in Neurosciences 17 (2): 75–79. [CrossRef]

- Posner, Michael I., and Steven E. Petersen. 1990. “The Attention System of the Human Brain.” Annual Review of Neuroscience 13: 25–42. [CrossRef]

- Roberts, Katherine L., A. Quentin Summerfield, and Deborah A. Hall. 2006. “Presentation Modality Influences Behavioral Measures of Alerting, Orienting, and Executive Control.” Journal of the International Neuropsychological Society 12 (04). [CrossRef]

- Robertson, Ian H., Tom Manly, Jackie Andrade, Bart T. Baddeley, and Jenny Yiend. 1997. “‘Oops!’: Performance Correlates of Everyday Attentional Failures in Traumatic Brain Injured and Normal Subjects.” Neuropsychologia 35 (6): 747–58. [CrossRef]

- Roca, Javier, Cándida Castro, María Fernanda López-Ramón, and Juan Lupiáñez. 2011. “Measuring Vigilance While Assessing the Functioning of the Three Attentional Networks: The ANTI-Vigilance Task.” Journal of Neuroscience Methods 198 (2): 312–24. [CrossRef]

- Roca, Javier, Pedro García-Fernández, Cándida Castro, and Juan Lupiáñez. 2018. “The Moderating Effects of Vigilance on Other Components of Attentional Functioning.” Journal of Neuroscience Methods 308 (October): 151–61. [CrossRef]

- Román-Caballero, Rafael, Elisa Martín-Arévalo, and Juan Lupiáñez. 2021. “Attentional Networks Functioning and Vigilance in Expert Musicians and Non-Musicians.” Psychological Research 85 (3): 1121–35. [CrossRef]

- Román-Caballero, Rafael, Elisa Martín-Arévalo, and Juan Lupiáñez. 2023. “Changes in Response Criterion and Lapse Rate as General Mechanisms of Vigilance Decrement: Commentary on McCarley and Yamani (2021).” Psychological Science 34 (1): 132–36. [CrossRef]

- Rueda, M. Rosario, J. Fan, Bruce D. McCandliss, Jessica D. Halparin, Dana B. Gruber, Lisha Pappert Lercari, and Michael I. Posner. 2004. “Development of Attentional Networks in Childhood.” Neuropsychologia 42 (8): 1029–40. [CrossRef]

- Sadeh, Avi, Orrie Dan, and Yair Bar-Haim. 2011. “Online Assessment of Sustained Attention Following Sleep Restriction.” Sleep Medicine 12 (3): 257–61. [CrossRef]

- Sanchis, Carlos, Esther Blasco, Fernando Gabriel Luna, and Juan Lupiáñez. 2020. “Effects of Caffeine Intake and Exercise Intensity on Executive and Arousal Vigilance.” Scientific Reports 10 (1): 8393. [CrossRef]

- See, Judi E., Steven R. Howe, Joel S. Warm, and William N. Dember. 1995. “Meta-Analysis of the Sensitivity Decrement in Vigilance.” Psychological Bulletin 117 (2): 230–49. [CrossRef]

- Souza Almeida, Rafael de, Aydamari Faria-Jr, and Raymond M. Klein. 2021. “On the Origins and Evolution of the Attention Network Tests.” Neuroscience & Biobehavioral Reviews 126 (March): 560–72. [CrossRef]

- Thomson, David R., Derek Besner, and Daniel Smilek. 2016. “A Critical Examination of the Evidence for Sensitivity Loss in Modern Vigilance Tasks.” Psychological Review 123 (1): 70–83. [CrossRef]

- Tiwari, T., A. L. Singh, and I. L. Singh. 2009. “Task Demand and Workload: Effects on Vigilance Performance and Stress.” Journal of the Indian Academy of Applied Psychology 35 (2): 265–75.

Notes

1. In order for the system to assign the same Subject ID to two or more different sessions, the codes for Participant, Name, Experiment, and Experiment Group must match (in older versions, the access cookies also need to match). Therefore, in studies where participants perform multiple sessions of the task, it is recommended that the experimenter distinguish their participants by using the Participant Code instead of the Subject ID.

2. Technically, a decrement in sensitivity across the blocks of the ANTI-Vea has also been observed. However, compared to the change in response bias, that effect is substantially smaller and likely due to a floor effect in FAs (Luna, Roca, et al. 2021) or other artifacts (Román-Caballero, Martín-Arévalo, and Lupiáñez 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).