1. Introduction

Finite-time thermodynamics (FTT) has been developed by placing realistic limits on irreversible processes through quantities such as power, efficiency, and dissipation. FTT can be considered an extension of classical equilibrium thermodynamics (CET) that seeks thermodynamic models closer to the real world than those provided by CET. These models account for the irreversibilities of the system [5][9]. The approach incorporates the constraints of finite-time operation, constraints on system variables, and generic models for the sources of irreversibility, and thus of entropy production, such as finite-rate heat transfer, friction, and heat leakage, among others [4]. Moreover, an extremum of a thermodynamically significant quantity is calculated, for example by minimizing entropy production or by maximizing energy or availability, power, or efficiency [4]. The pioneering work in FTT is that of Curzon and Ahlborn [4][5], in which the fundamental limits of a power plant were studied with an endoreversible engine model. This model consists of an endoreversible Carnot cycle whose only irreversible processes are the heat exchanges between the thermal reservoirs and the working substance.

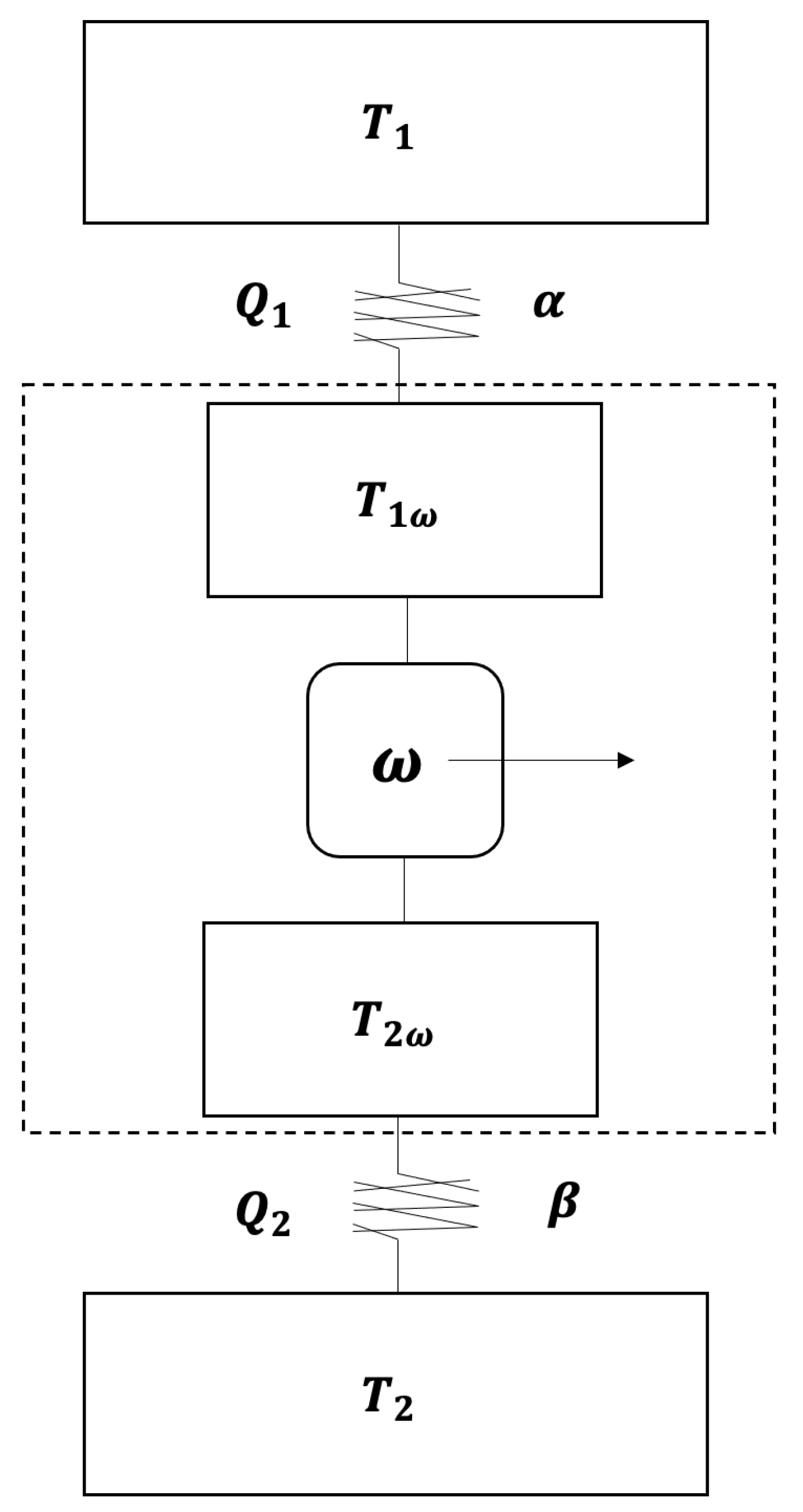

The thermal engine consists of two thermal reservoirs, one hot and one cold, and two irreversible components, the thermal resistances, which carry the heat flows to and from a reversible Carnot engine. The Carnot engine operates between two intermediate temperatures that lie between the reservoir temperatures. The model assumes linear heat transfer through the two irreversible components, each characterized by a thermal conductance (see Figure 1, scheme proposed by De Vos) [10].
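The endoreversible engine described above can be illustrated with a minimal numerical sketch. It assumes linear heat transfer on both sides and an internally reversible Carnot core, and it searches for the warm-side working temperature that maximizes power. The symbol names (`t_h`, `t_c`, `g_h`, `g_c`, `t_hw`, `t_cw`) and the numerical values are hypothetical choices made for this illustration and are not taken from the paper.

```python
import math


def endoreversible_power(t_hw, t_h, t_c, g_h, g_c):
    """Return (power, efficiency) for a given warm-side working temperature t_hw.

    t_h, t_c : hot and cold reservoir temperatures (K)
    g_h, g_c : thermal conductances of the two irreversible links (W/K)
    """
    q_h = g_h * (t_h - t_hw)      # linear heat flow into the Carnot core
    s = q_h / t_hw                # entropy flow through the reversible core
    if s >= g_c:                  # the cold link cannot reject this entropy flow
        return 0.0, 0.0
    # Endoreversibility: q_c / t_cw = s, with q_c = g_c * (t_cw - t_c)
    t_cw = t_c / (1.0 - s / g_c)
    eta = 1.0 - t_cw / t_hw       # Carnot efficiency of the inner cycle
    if eta <= 0.0:
        return 0.0, 0.0
    return q_h * eta, eta


def maximum_power_point(t_h, t_c, g_h, g_c, steps=20000):
    """Grid search over t_hw for the operating point of maximum power."""
    best = (0.0, 0.0)
    for i in range(1, steps):
        t_hw = t_c + (t_h - t_c) * i / steps
        candidate = endoreversible_power(t_hw, t_h, t_c, g_h, g_c)
        if candidate[0] > best[0]:
            best = candidate
    return best


# Hypothetical reservoir temperatures and conductances, for illustration only.
power, eta = maximum_power_point(600.0, 300.0, 1.0, 1.0)
print(f"efficiency at maximum power: {eta:.4f}")
print(f"1 - sqrt(T_c / T_h):         {1.0 - math.sqrt(300.0 / 600.0):.4f}")
```

Under these assumptions, the efficiency found at maximum power should agree with the Curzon and Ahlborn expression 1 - sqrt(T_c/T_h), independently of the conductances chosen.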

A problem that finite-time thermodynamics addresses efficiently is the so-called faint young Sun paradox proposed by Sagan and Mullen [2]. The paradox poses a difficulty for understanding the early stages of planet Earth, since the Sun’s luminosity about 4.5 Gyr ago was around 70-80 percent of its present value [1][2][3]. Such a luminosity implies a terrestrial temperature below the freezing point of water. The planet’s surface temperature is known to be controlled by the solar radiation it receives and by its interaction with the gases in the atmosphere. Assuming a blackbody radiative balance between the young Sun and the Earth results in a surface temperature T = 255 K, low enough to keep most of the planet’s surface frozen until about 1-2 Gyr ago [2]. However, several studies, together with sedimentary records, suggest an average surface temperature compatible with liquid water for almost the entire history of the planet [2]. To resolve the paradox, the first hypothesis adopted is that solar radiation has increased over the Sun’s lifetime owing to the increase in density of the solar core [2]. According to Gough [2], the luminosity of the young Sun was about 30% lower than its present value. Gough’s expression, Equation (1), describes the evolution of the Sun’s luminosity in terms of the present luminosity and the present age of the Sun, and it determines the average solar radiation received by the planet. Gough’s luminosity equation is expressed as follows:

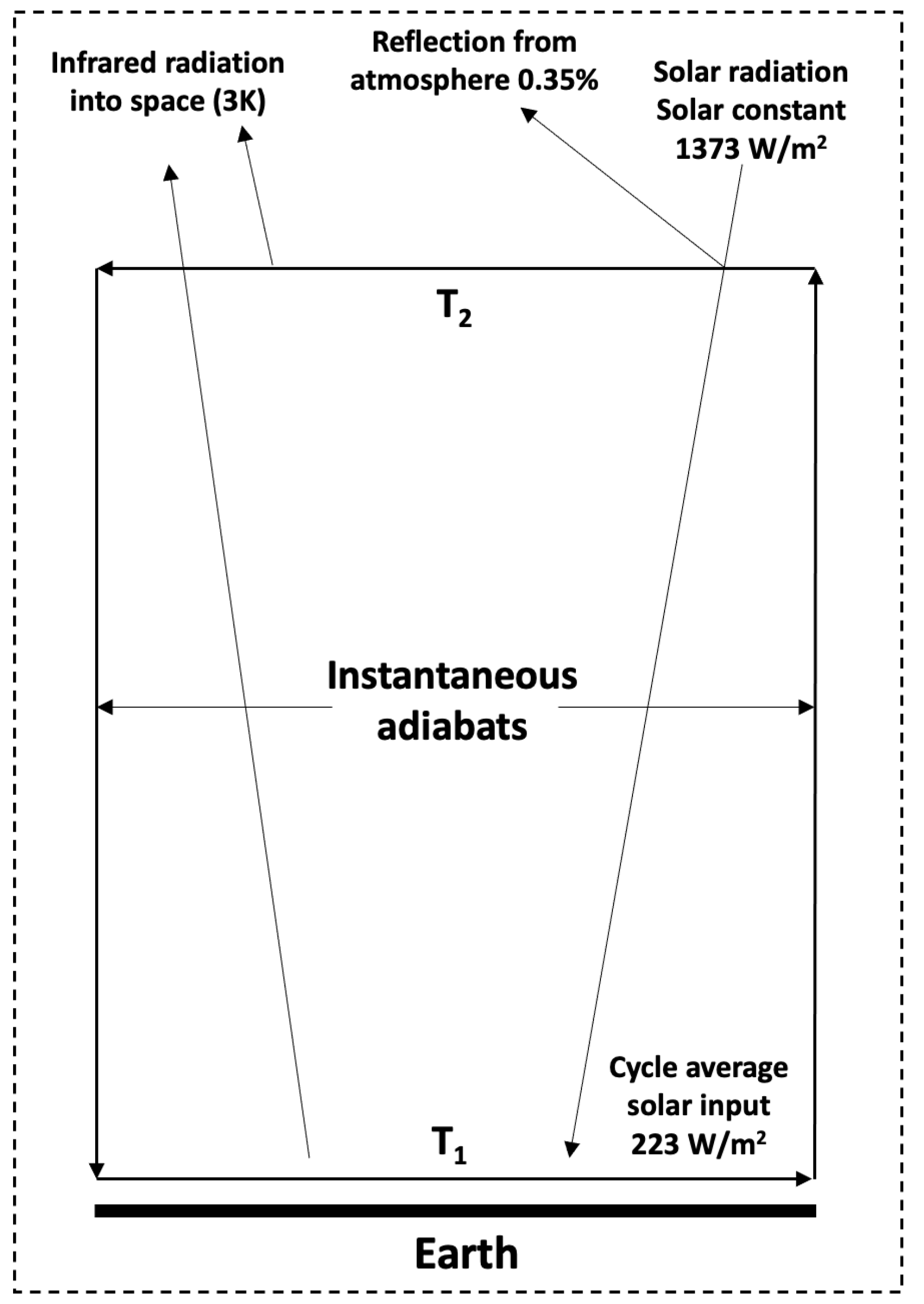
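As a rough numerical illustration of the two ingredients discussed above, the sketch below evaluates a luminosity law and a blackbody radiative balance. It assumes the commonly quoted form of Gough’s law, L(t) = L_now / [1 + (2/5)(1 - t/t_now)]; the equation in the image above remains the authoritative one. The present solar age (4.57 Gyr), solar constant (1361 W/m²), and albedo (0.30) are assumed values chosen for this example, not values taken from the paper.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def gough_luminosity_ratio(t_gyr, t_now_gyr=4.57):
    """L(t) / L_now, with t measured from the formation of the Sun (Gyr).

    Assumed form of Gough's law; t_now_gyr is an assumed present solar age.
    """
    return 1.0 / (1.0 + 0.4 * (1.0 - t_gyr / t_now_gyr))


def equilibrium_temperature(solar_constant, albedo):
    """Blackbody radiative-balance temperature (K) of a rotating planet."""
    absorbed = solar_constant * (1.0 - albedo) / 4.0  # W/m^2 averaged over the sphere
    return (absorbed / SIGMA) ** 0.25


S_NOW = 1361.0   # assumed present-day solar constant, W/m^2
ALBEDO = 0.30    # assumed planetary albedo

ratio_young = gough_luminosity_ratio(0.0)
print(f"young-Sun luminosity ratio: {ratio_young:.3f}")
print(f"present-day balance:  {equilibrium_temperature(S_NOW, ALBEDO):.1f} K")
print(f"young-Sun balance:    {equilibrium_temperature(S_NOW * ratio_young, ALBEDO):.1f} K")
```

Under these assumed inputs, the present-day balance already falls near 255 K, below the freezing point of water, and the reduced early luminosity lowers the value further. The gap between such balance temperatures and the geological evidence for liquid water is what the albedo and greenhouse terms of the model are meant to close.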

On this basis, the thermal equilibrium of the solar system’s planets depends on the incident solar flux, the planet’s albedo, and the greenhouse effect. The thermal balance of a planet and a correct estimate of its temperature therefore follow from the physical characteristics of its atmosphere. The Finite-Time Thermodynamics (FTT) approach was introduced by Curzon and Ahlborn in 1975, who modeled a Carnot cycle with finite heat transfer between the heat reservoirs and the working substance under a maximum-power operating regime [4]. Subsequently, FTT has been extended to other operating regimes such as efficient power, the ecological function, and others. Models built with the FTT approach yield operating levels closer to those of real-world power converters. In 1989, Gordon and Zarmi (GZ) proposed an atmospheric convection model to calculate the temperature of the lowest layer of the Earth’s atmosphere and an upper limit on the average wind power [5]. The GZ model consists of a convection cell modeled as an endoreversible Carnot cycle and two thermal reservoirs external to the working substance (such as air). De Vos and Flater [6] considered the dissipation of wind energy in the endoreversible model and obtained an upper limit for the efficiency of the conversion of solar energy into wind energy, assuming the atmospheric "heat engine" operates at maximum power [6]. Van der Wel, in turn, obtained an improved upper bound on this efficiency with another endoreversible model based on convective Hadley cells [7][8]. GZ-type models were used to propose a possible solution to the faint young Sun paradox, initially presented by Carl Sagan and George Mullen in 1972 [1][2,3]. In this work, the GZ model and the Gough model of the evolution of the solar constant are applied to study possible future scenarios of Earth’s temperature using different objective functions such as maximum power, efficient power, and the ecological function.

Thus, the present work studies how the planet’s surface temperature responds to the increase in greenhouse gases, treating the atmosphere as an engine working in a finite-time thermodynamic regime. We chose this methodology because of its good results in reproducing the climate of several past geologic eras. The endoreversible engine model can therefore be adapted to forecast the temperatures resulting from climate change in the coming years.

The rest of the manuscript is structured as follows. Section 2 reviews the literature on climate change models based on different approaches. Section 3 describes the preliminary foundations of Finite-Time Thermodynamics. Section 4 outlines the methods related to the proposed endoreversible model, and Section 5 describes the proposed model and its peculiarities. Section 6 presents the experimental results, Section 7 discusses the outcomes and findings, and Section 8 gives the conclusions and future work.

2. Related work

Global warming caused by human activities is one of the most significant challenges of the present time. Classical approaches to climate change have treated the climate as a complex system, using tools such as differential equations and chaos theory. Nevertheless, the large amount of data now available makes it possible to use Artificial Intelligence techniques, which are more straightforward than those of complexity science, to predict future scenarios under climate change.

According to Houghton [23], global warming arises in a climate system where several variables are responsible for raising global average temperatures. Most of these effects are related to the radiative balance of the planetary atmosphere: water vapor feedback, cloud-radiation feedback, and ocean-circulation feedback. All of them, in turn, act through the albedo and the greenhouse effect. Therefore, forecasting global warming requires a set of characteristics that affect the global emission of greenhouse gases. These gases have increased notably because of human activity. Projections of the change in global average temperature for the present century are in the range of 0.15 °C to 0.6 °C per decade. Understanding this problem allows us to assess the impacts on humans and ecosystems and their adaptive capacity [23].

One of the main effects of global warming is the melting of ice bodies on the Earth. Arctic sea ice is one of the leading indicators of the increase in average temperature. Ice concentration and sea-level rise have been studied with various approaches, among which Deep Learning techniques are widely used to predict how the ice concentration changes as the average temperature increases [24]. Just as the melting of the Arctic ice reveals the effect of climate change, all oceans are experiencing significant warming and rising sea levels. It is therefore necessary to build diagnostic and prognostic models to elucidate these increases and their risks, since they are associated with other adverse events such as the propagation of cyclones, lack of rain, and the growth and spread of diseases. According to several authors, combining machine learning and deep learning techniques can give fairly accurate predictions for the future [25,26,27,28].

In the study led by Balsher Singh Sidhu of the University of British Columbia [18], machine learning is used to understand the impact of climate change on different types of crops, taking climate-yield relationships into account. The authors compared the usual linear regression (LR) technique with boosted regression trees (BRTs) for estimating yield under climate change from historical data. Their conclusions suggest that interpreting results based on a single model can bias the information obtained.

On the other hand, because of the high economic and social impacts of climate change, it is essential to understand its causes and to identify the patterns in the available data in order to make correct predictions. According to Zheng [19], the first challenge in addressing the climate change problem is to build a reliable model, based on experimental data, of the relationship between temperature and the atmospheric concentration of gases such as carbon dioxide (CO₂), nitrous oxide (N₂O), and methane (CH₄). Zheng’s study used several learning techniques, such as linear regression, lasso, support vector machines, and random forest, to build an accurate model that identifies the atmospheric changes that raise temperature, which are dominated by the increase in CO₂ owing to its higher concentration among greenhouse gases.

According to several authors, a reliable model combined with the temperature data set and machine learning prediction tools will give a better understanding of the phenomenon and thus a forecast good enough to face the risks of climate change. The thermal equilibrium model was studied by De Vos and Flater, among others [8], who treated the planet as a converter of solar radiation in order to examine its average temperature. The temperature follows from the balance between the radiation emitted by the planet’s surface and the irradiance reaching it. This analysis takes into account the physical characteristics of the atmosphere, such as the greenhouse effect and the albedo [4,6,8]. Thus, the total flux Q appears as shown in Equation 2.

It is the first thermodynamic model that allows a dynamic study of the different layers of the atmosphere, the lowest of which corresponds to the temperature at the planetary surface. The model can be used to analyze scenarios in which the greenhouse gas concentrations and the albedo are modified. Its feasibility was tested in the study of geological eras, where several authors used it to address the faint young Sun paradox [1,2]. In this work, the solar converter is analyzed under the finite-time thermodynamics regime with parameters updated to the present day, considering the increase of CO₂, the main greenhouse gas [19], and its relationship with the albedo. In addition, energy dissipation in the system is included to obtain realistic results for the present day.

6. Experimental Results

Possible future scenarios for the growth of greenhouse gases must first be determined. The atmospheric concentration of most of these gases has increased significantly since the 1970s because of industrial activities. According to the Mauna Loa laboratory in Hawaii [14][29], the data show a marked rise in CO₂, described by an empirical formula for the concentration over a given interval [14]. The expression obtained by Wubbles concerning trace gas trends and their potential role in climate change is therefore valid for this methodology [14].

According to Equation 47, the albedo and the greenhouse effect are related. For the Earth, the greenhouse effect can be defined in terms of the surface emission and the outgoing radiation F [2]. Moreover, according to Wubbles and different experimental measurements, the concentration of greenhouse gases rises over time. With all these characteristics, the natural average temperature and its possible evolution in the coming years can be determined with reasonable accuracy.

To test the GZ model with dissipation developed in this work, it was solved numerically for parameter values associated with each year. The reference data are a compilation by Berkeley Earth of the temperature of the Earth’s surface. The experimentally measured temperatures were compared against our theoretically calculated temperatures, so that a forecasting technique could later be used to estimate future temperatures.

6.1. Data pre-processing

To analyze the complexity of climate change, the land and ocean temperatures of the planet are measured. The data used here are a compilation provided by Berkeley Earth. Other widely used datasets are NOAA’s MLOST Land-Ocean Surface Temperature and GISTEMP from NASA [20][21][22]. The Berkeley compilation records the Land Average Temperature with dates in the format yyyy/mm/dd. Each date was therefore split into year, month, and day, the temperature of each month was taken, and the mean temperature per year was computed. From 1975 to 2015, the correlation between the year and the Land Average Temperature is 0.89 [20][21][22].
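The pre-processing steps above can be sketched in a few lines of standard-library Python: split each date string, average the monthly values per year, and compute the Pearson correlation between year and yearly mean. The function names and the sample records are hypothetical; the numbers are made-up placeholders used only to exercise the code and are not Berkeley Earth values.

```python
import math
from collections import defaultdict


def yearly_means(records):
    """Average monthly temperatures per year.

    records: iterable of (date_string, temperature), date_string as 'yyyy/mm/dd'.
    """
    buckets = defaultdict(list)
    for date_string, temperature in records:
        year, _month, _day = date_string.split("/")
        buckets[int(year)].append(temperature)
    return {year: sum(vals) / len(vals) for year, vals in sorted(buckets.items())}


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Made-up placeholder records (two months per year), not real measurements.
sample = [
    ("1975/01/01", 2.0), ("1975/07/01", 14.0),
    ("1976/01/01", 2.4), ("1976/07/01", 14.2),
    ("1977/01/01", 2.5), ("1977/07/01", 14.5),
]
means = yearly_means(sample)
r = pearson(list(means.keys()), list(means.values()))
print(means)
print(f"correlation between year and yearly mean: {r:.3f}")
```

Applied to the full 1975 to 2015 series, the same two functions would be expected to reproduce the kind of year-temperature correlation reported in the text.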

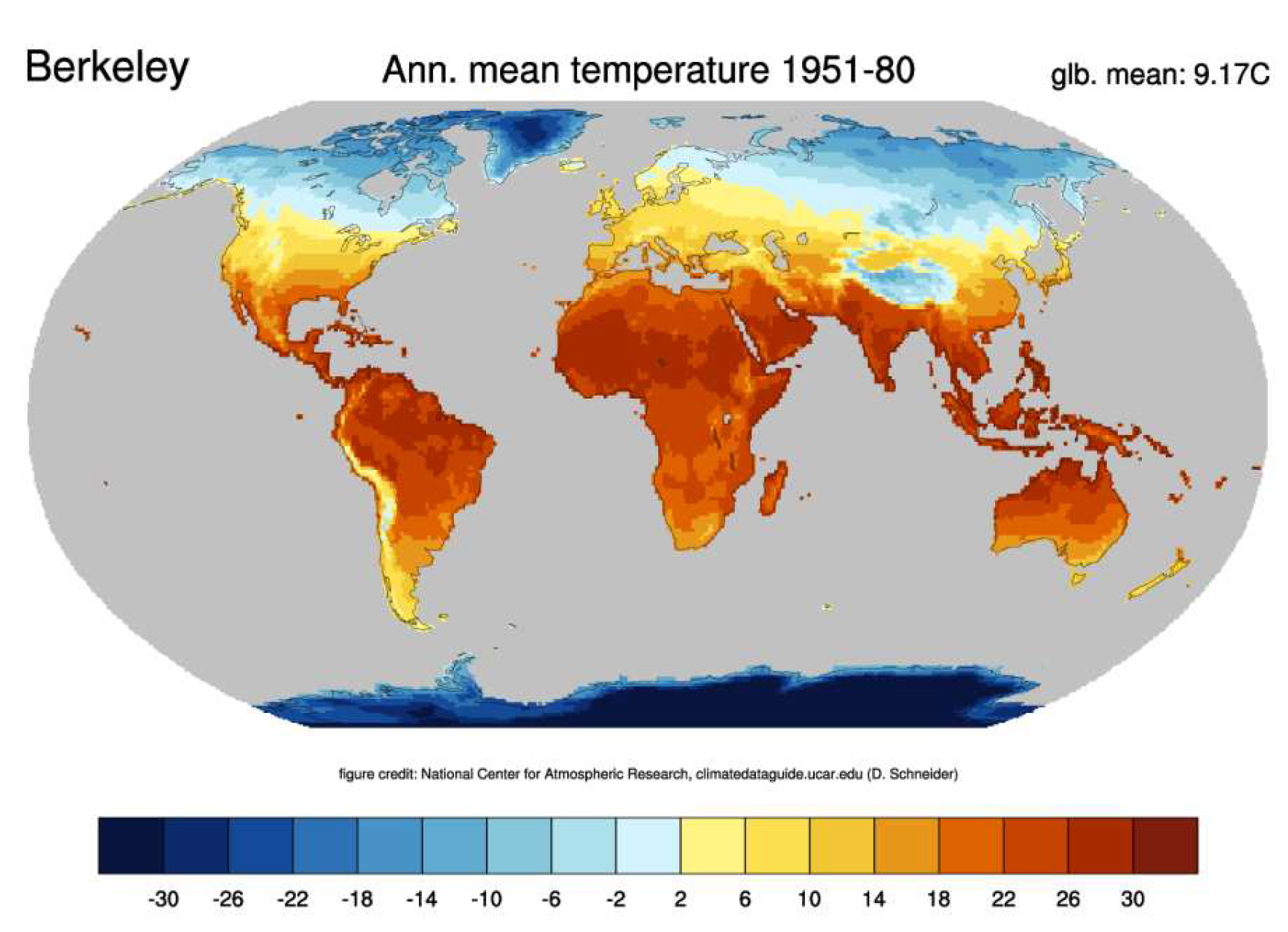

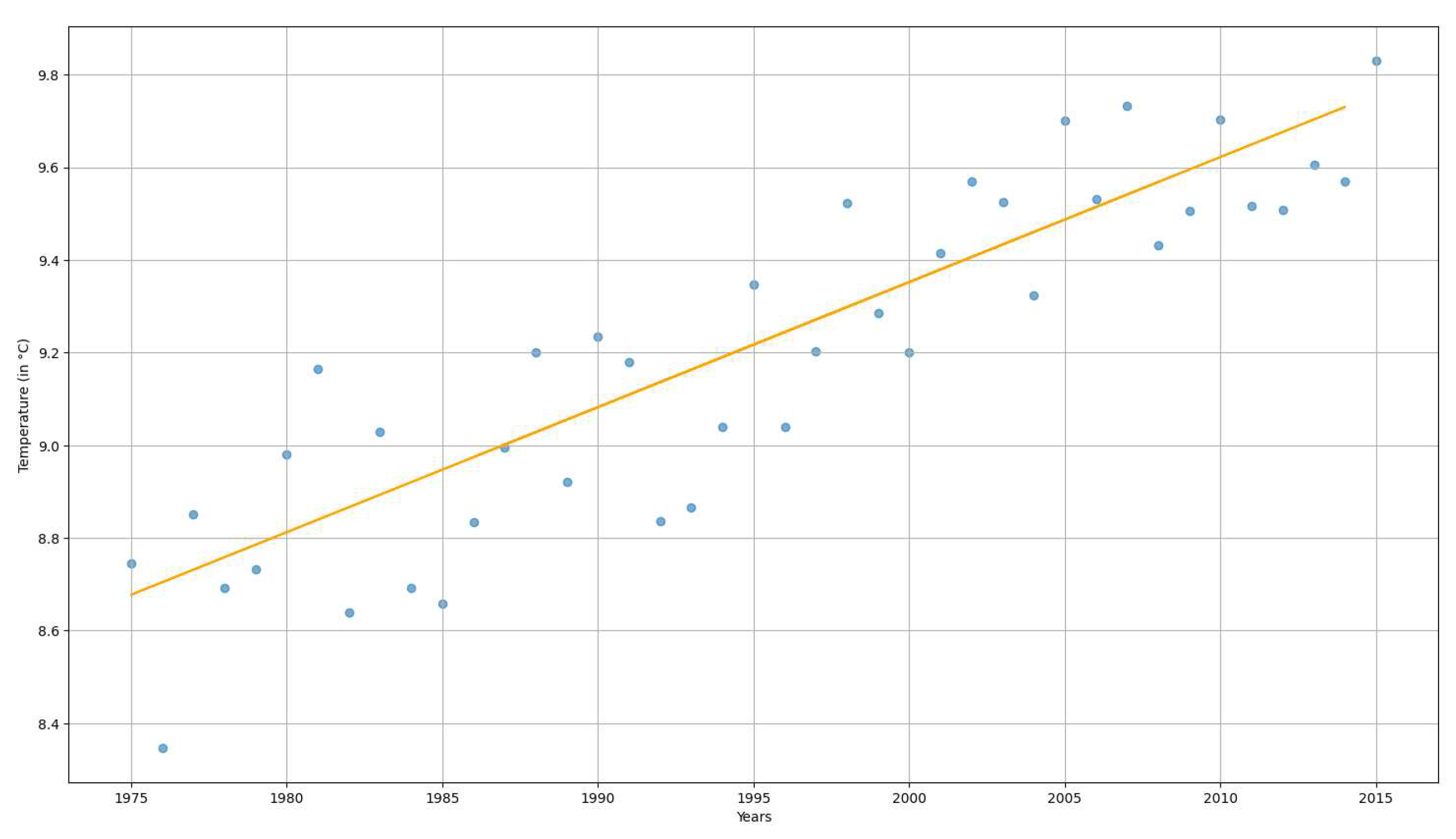

Figure 3 shows the climatology of the average annual land temperature between 1951 and 1980 from the Berkeley Earth data, with a global mean of 9.17 °C. In our work, the mean observed temperature of each year is compared with the temperature obtained from the theoretical model.

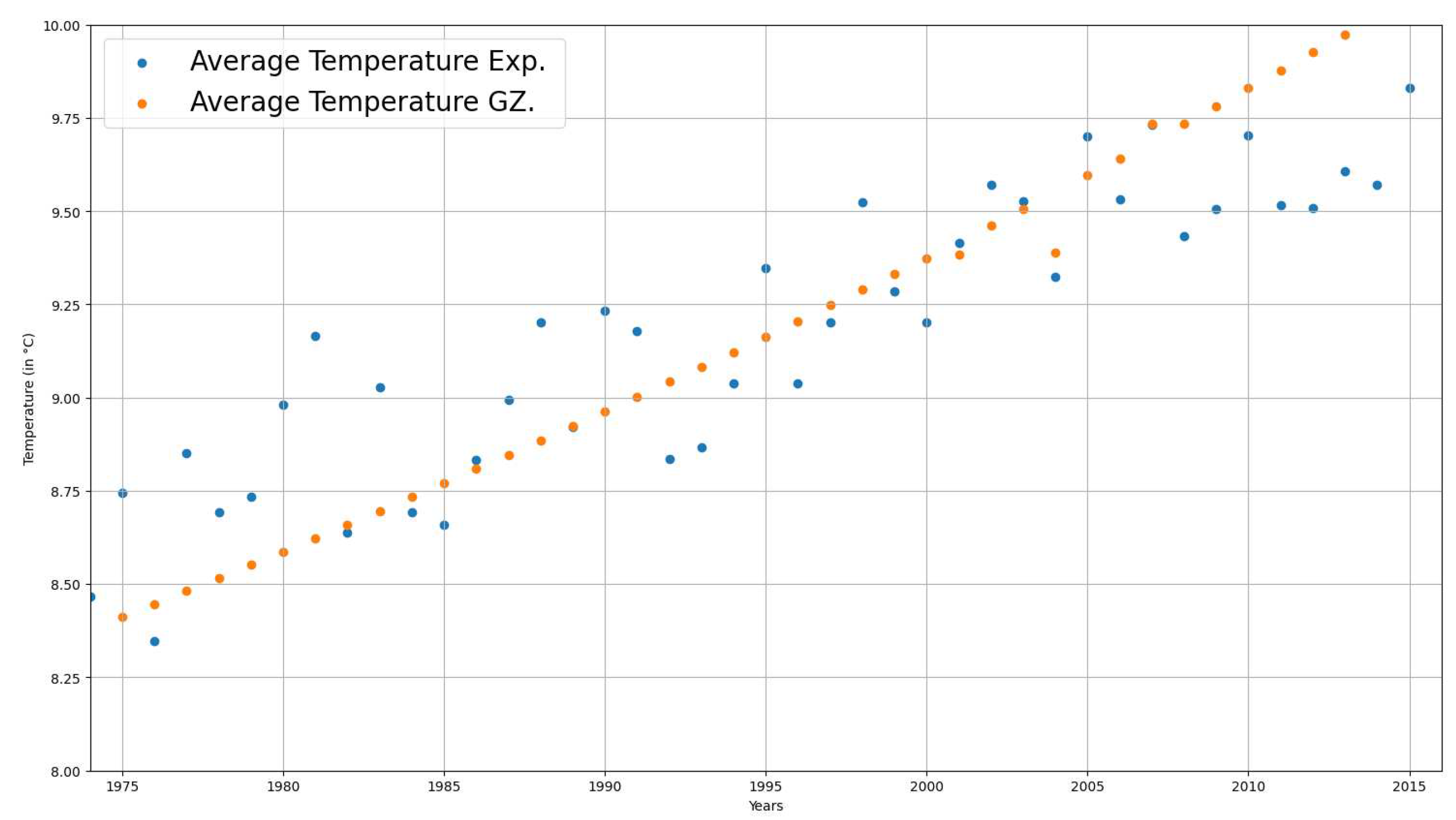

The observed data and the surface temperatures obtained from the model in Equation 50, developed in this work, are shown in Table 1. All temperatures are given in degrees Celsius.

The temperature increase due to greenhouse gas growth has been analyzed from 1975 onward. This starting year was chosen because of the significant increase in the concentration of CO₂ shown by the empirical expression of Wubbles in Equation 51. The observational temperature variables of the Berkeley database show a high correlation, equal to 0.89, between the year and the Land Average Temperature. A linear regression model is therefore sufficient in this case to predict future temperatures. The following plot (Figure 4, average temperatures observed and calculated by the GZ-type model) shows the relationship between the measured average temperature per year and the temperature of the modified GZ model.

Figure 5 (average temperatures observed since 1975, with linear regression) shows that a linear regression fits the evolution of the temperature since 1975 closely. This type of modeling makes it possible to infer how the temperature will change toward the year 2100.
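The extrapolation step can be illustrated with an ordinary least-squares line fitted to yearly means and evaluated at a future year. This is only a sketch of the technique: the function name is hypothetical, and the temperatures are synthetic placeholders lying on an exact line, not the values of Table 1.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic placeholder series (degrees Celsius), not observed data.
years = [1975, 1985, 1995, 2005, 2015]
temps = [8.7, 9.0, 9.3, 9.6, 9.9]

slope, intercept = fit_line(years, temps)
warming_per_decade = 10.0 * slope          # convert from per year to per decade
forecast_2100 = slope * 2100 + intercept   # linear extrapolation to 2100

print(f"warming rate: {warming_per_decade:.2f} °C per decade")
print(f"extrapolated temperature in 2100: {forecast_2100:.2f} °C")
```

Expressing the fitted slope per decade makes it directly comparable with the 0.15 °C to 0.6 °C per decade range quoted from Houghton [23].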

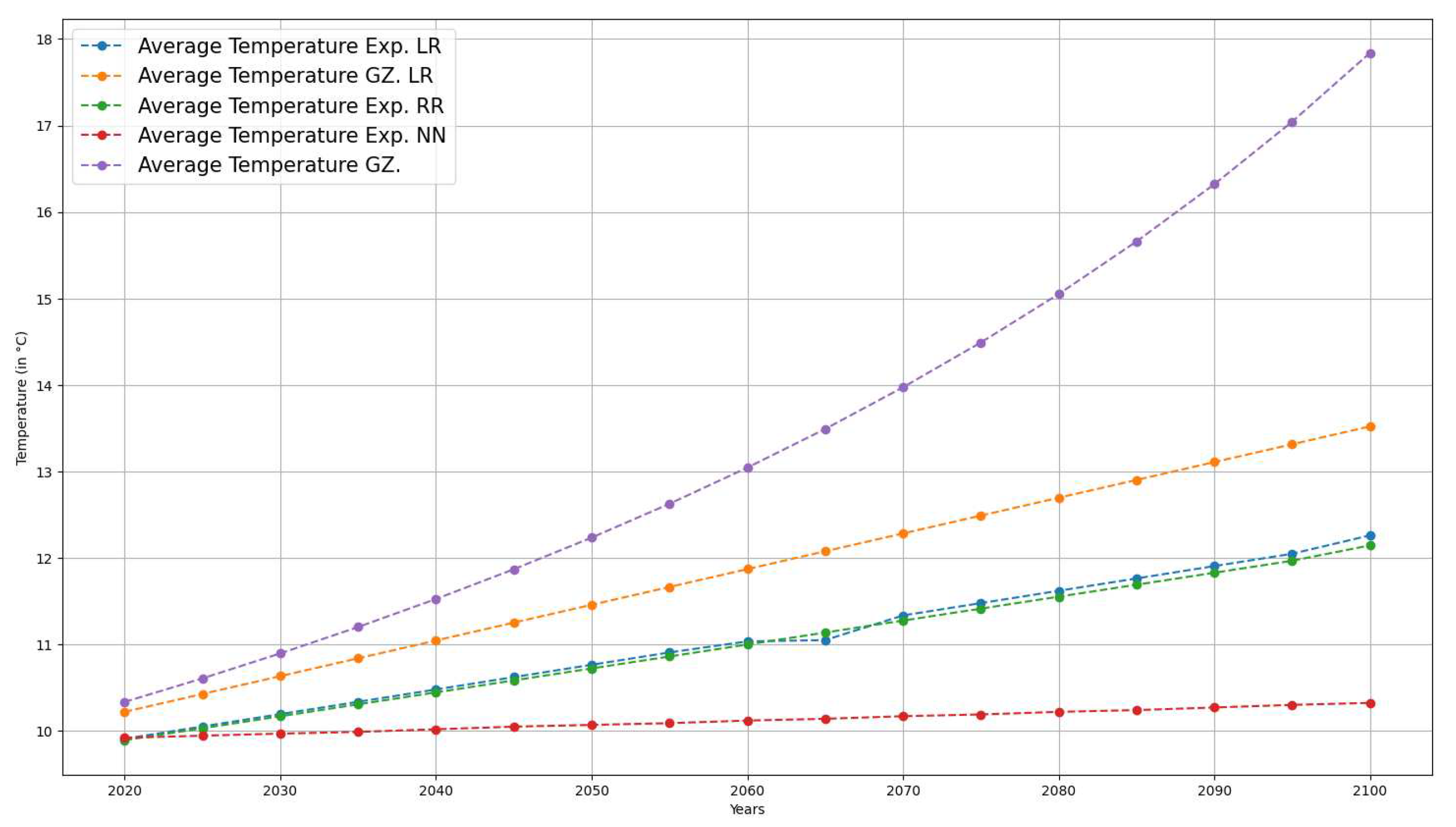

On the other hand, Table 2 presents the predicted future temperatures obtained with Linear Regression (LR), Ridge Regression (RR), and Artificial Neural Networks (ANN). The ANN has five layers: an input layer with a linear activation function, three layers with the rectified linear unit (ReLU) activation function, and an output layer with a linear activation function. All techniques were applied to the observed temperatures and to the model temperatures used in the present work. The third column shows the temperatures calculated directly from our Gordon and Zarmi model (GZM), without applying a linear regression, so that the physical characteristics of the atmosphere determine the theoretical temperature reached. Table 2 covers the entire prediction from 2016 up to 2100.
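To make the difference between LR and RR concrete, the sketch below fits a one-variable ridge regression in closed form. It assumes the usual formulation with an unpenalized intercept, so the slope is shrunk by adding the penalty to the sum of squared deviations of the years. The function name, the penalty value, and the data are hypothetical placeholders; the paper does not state which penalty was used.

```python
def fit_ridge_line(xs, ys, penalty):
    """One-variable ridge regression with an unpenalized intercept.

    With penalty = 0 this reduces to ordinary least squares.
    """
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / (sxx + penalty)      # the penalty shrinks the slope toward zero
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic placeholder series (degrees Celsius), not observed data.
ridge_years = [1975, 1985, 1995, 2005, 2015]
ridge_temps = [8.7, 9.0, 9.3, 9.6, 9.9]

ols_slope, ols_intercept = fit_ridge_line(ridge_years, ridge_temps, penalty=0.0)
rr_slope, rr_intercept = fit_ridge_line(ridge_years, ridge_temps, penalty=1000.0)

print(f"LR slope: {ols_slope:.4f} °C per year")
print(f"RR slope: {rr_slope:.4f} °C per year (hypothetical penalty of 1000)")
```

Because the ridge slope is never larger in magnitude than the least-squares slope, an RR forecast extrapolated to 2100 grows no faster than the LR forecast from the same data. The ANN of Table 2 is not sketched here, since its layer widths and training settings are not given in this section.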

Moreover, Figure 6 shows the evolution of the surface temperature according to the predictions of the model proposed in our work (GZM) and according to the predictions from the experimental data. Both temperature series were forecasted using machine learning techniques.

A correlation analysis of the temperatures obtained with the machine learning techniques, namely Linear Regression (LR), Ridge Regression (RR), and Artificial Neural Networks (ANN), and with the proposed endoreversible model (GZM) shows that the GZM model is well suited to a linear relationship (see Figure 7).

7. Discussion

In this analysis of climate change, an endoreversible model of the Gordon and Zarmi type was developed. Unlike other finite-time thermodynamic studies of the atmosphere, the model was adjusted so that it yields realistic results when applied to the present climate. When the model is used as in the climatic analyses of geological eras reported in other works, its results do not correspond to the observed current temperatures. According to Levario, Valencia, and Arias [9], a correct thermodynamic optimization of power plants must consider the variations of the system. The modeling was therefore performed considering those variations, namely the change in luminosity per year, the increase in greenhouse gas, and its relationship with the terrestrial albedo, and adapting them to our model of winds at maximum power. In this way, Equations 45 to 51 complete the system used to calculate climate change due to atmospheric conditions and to the anthropogenic increase in greenhouse gases.

Table 1 shows an increase in the average temperature of the Earth’s surface from 1975 to 2015, both in the observational (experimental) data and in the theoretical model developed in our work. In both cases, the rise in temperature is related to the increase in greenhouse gases in the atmosphere.

Figure 2 shows the differences between the points obtained experimentally (observations and laboratory measurements) and the model proposed in our work. In Figure 3 and the correlation analysis, the experimental points, in blue, show a strongly linear trend, so linear or ridge regression is a suitable technique for predicting the temperature increase.

On the other hand, the points of our GZM model appear to show the same linear trend. In Table 2, two predictions were therefore compared: one obtained by linear regression (LR) and one obtained directly from our model (GZM). The two analyses differ. The difference arises because the temperature observations are only recorded points in a vector, whereas the model retains the physical information of the atmosphere and the thermodynamic variables of the system. As a result, the model gives larger mean temperature increases than those obtained from an analysis of the experimental points alone.

Moreover, Figure 3 plots the predictions made from the experimental data and from the GZM model. In the future scenarios forecast by GZM, the average temperature is higher than that obtained by applying the machine learning techniques to the observed temperatures. Nevertheless, the rate of temperature increase lies within the per-decade range reported in [23]. The plot shows that the temperature predicted by the ANN, LR, and RR grows more gradually than in our proposed model. GZM modeling retains the atmosphere’s physical characteristics, such as entropic relationships, radiation conditions, and irradiance. This helps it represent more realistic behavior than the other forecasts, which provide only a linear-type regression and do not consider the evolution of the physical parameters caused by alterations in the Earth’s atmosphere.

8. Conclusion and Future Work

In this article, we proposed a new finite-time thermodynamics approach to predict changes in the temperature of the lowest layer of the atmosphere, which corresponds to the average surface temperature. The proposed approach considers the evolution of the albedo and greenhouse gases, the change in luminosity per year, and the system’s dissipation under maximum-power conditions.

Thus, the increase in temperature is linked to physical conditions such as irradiance and radiation. Moreover, the comparison with different machine learning techniques showed a rise in temperature with every method, as did our model. Nevertheless, machine learning algorithms do not preserve atmospheric information over the period studied. Their forecasts may therefore be biased, because they are trained only on experimental data and do not consider the variables that drive climate change. The agreement between our model and the experimental data supports the validity of the model. In addition, according to Houghton [23], the projections of global average temperature change are in the range of 0.15 °C to 0.6 °C per decade, which is consistent with the values obtained.

Our future work is oriented toward developing other thermodynamic regimes, such as the ecological and efficient power regimes, and assessing them with approaches based on machine learning. The present proposal studies the atmosphere as a wind engine working at maximum power, the most commonly used regime. Studying other regimes will allow us to analyze the whole spectrum of our wind-engine model and thus cover all cases of global warming. All theoretical predictions will be compared against experimental data in order to face climate change in the best way.

Author Contributions

Conceptualization, S.V.-R. and M.T.-R.; methodology, R.Q.; software, C.G.S.-M. and K.T.C.; validation, S.V.-R. and M.T.-R.; formal analysis, R.Q. and S.V.-R.; investigation, M.T.-R.; resources, K.T.C.; data curation, C.G.S.-M; writing—original draft preparation, S.V.-R.; writing—review and editing, M.T.-R.; visualization, K.T.C.; supervision, M.T.-R.; project administration, C.G.S.-M.; funding acquisition, K.T.C. All authors have read and agreed to the published version of the manuscript.