Submitted: 27 June 2023

Posted: 28 June 2023


Abstract

Keywords:

1. Introduction

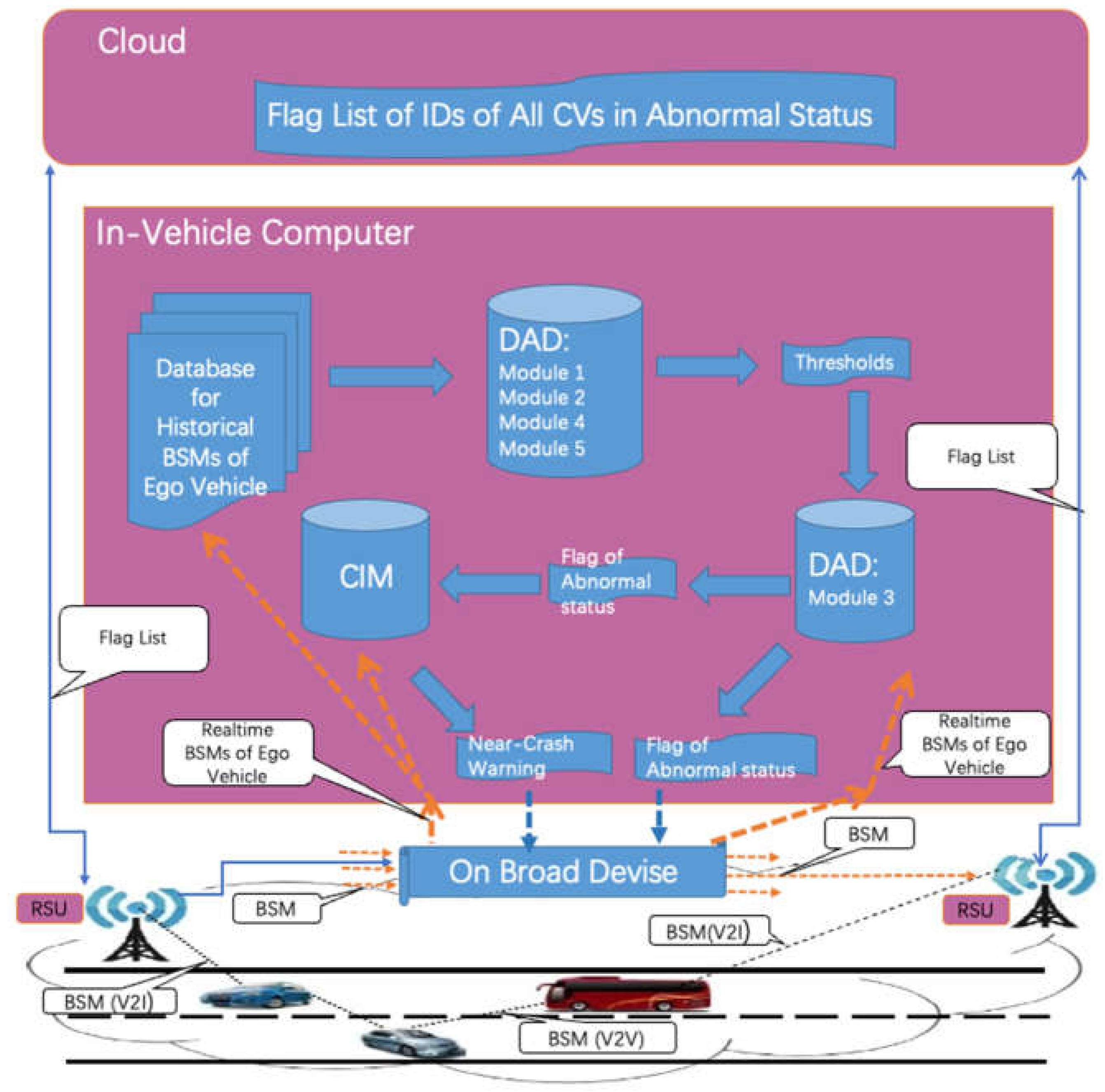

2. Materials and Methods

2.1. Data Description

2.1.1. BSM Data

2.1.2. SHRP2 Data

2.2. Methods

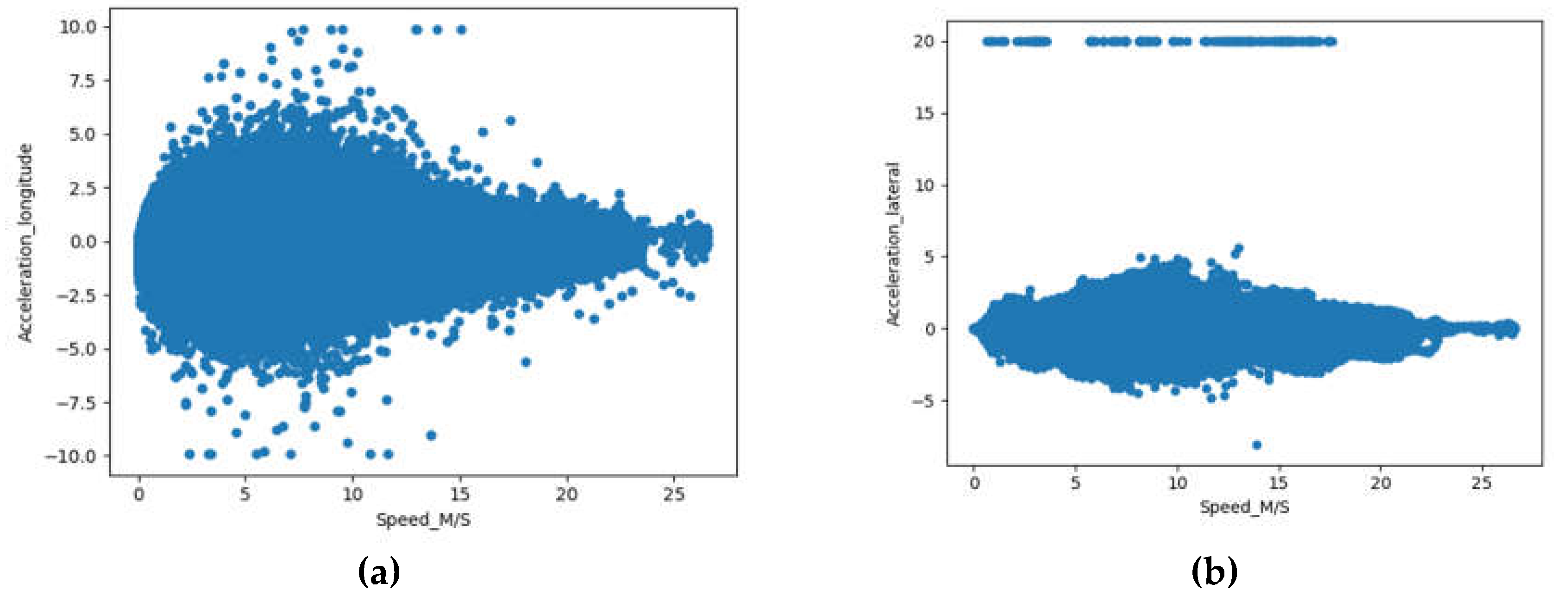

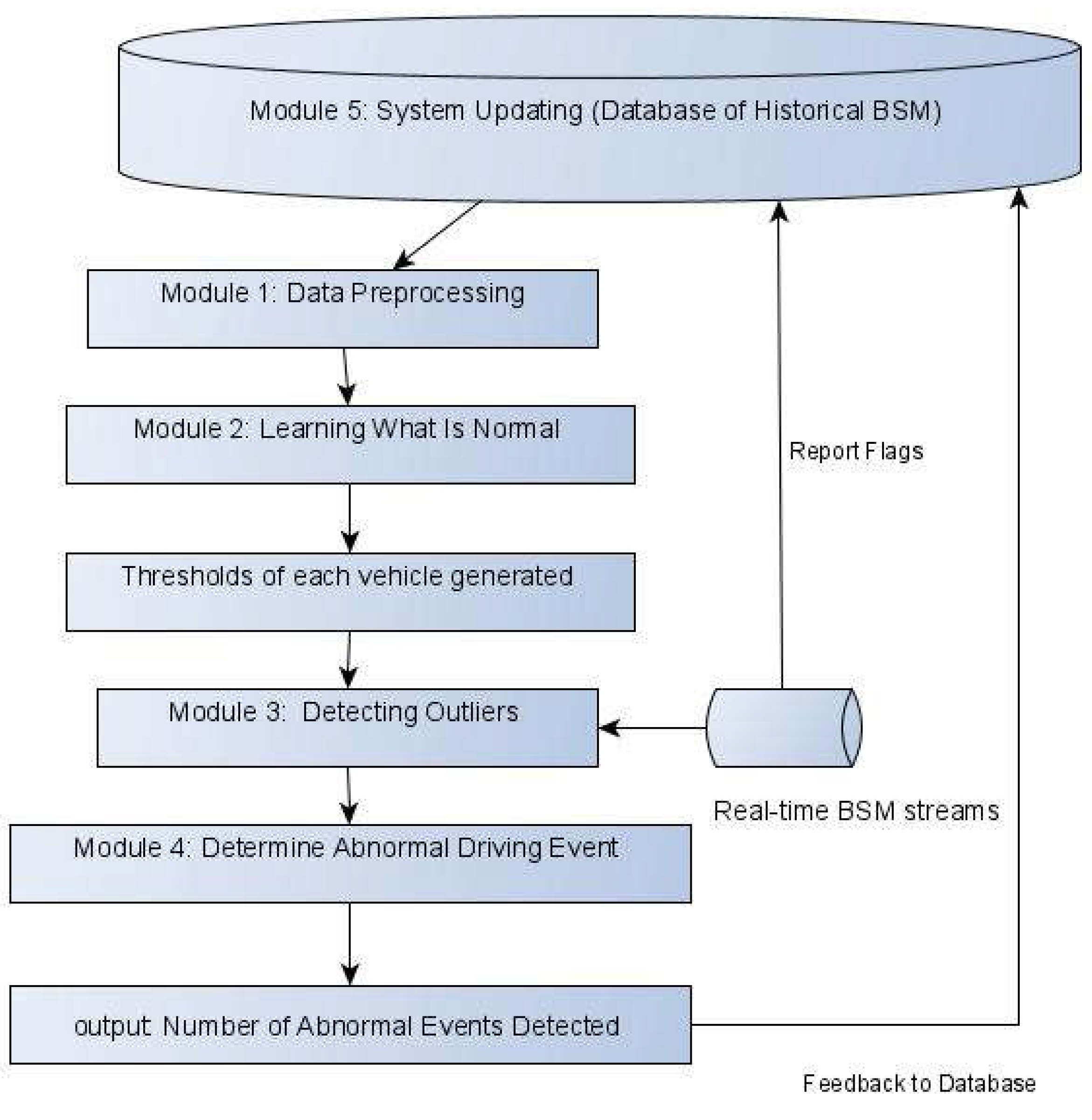

Module 1: Data Preprocessing and Selecting KPIs

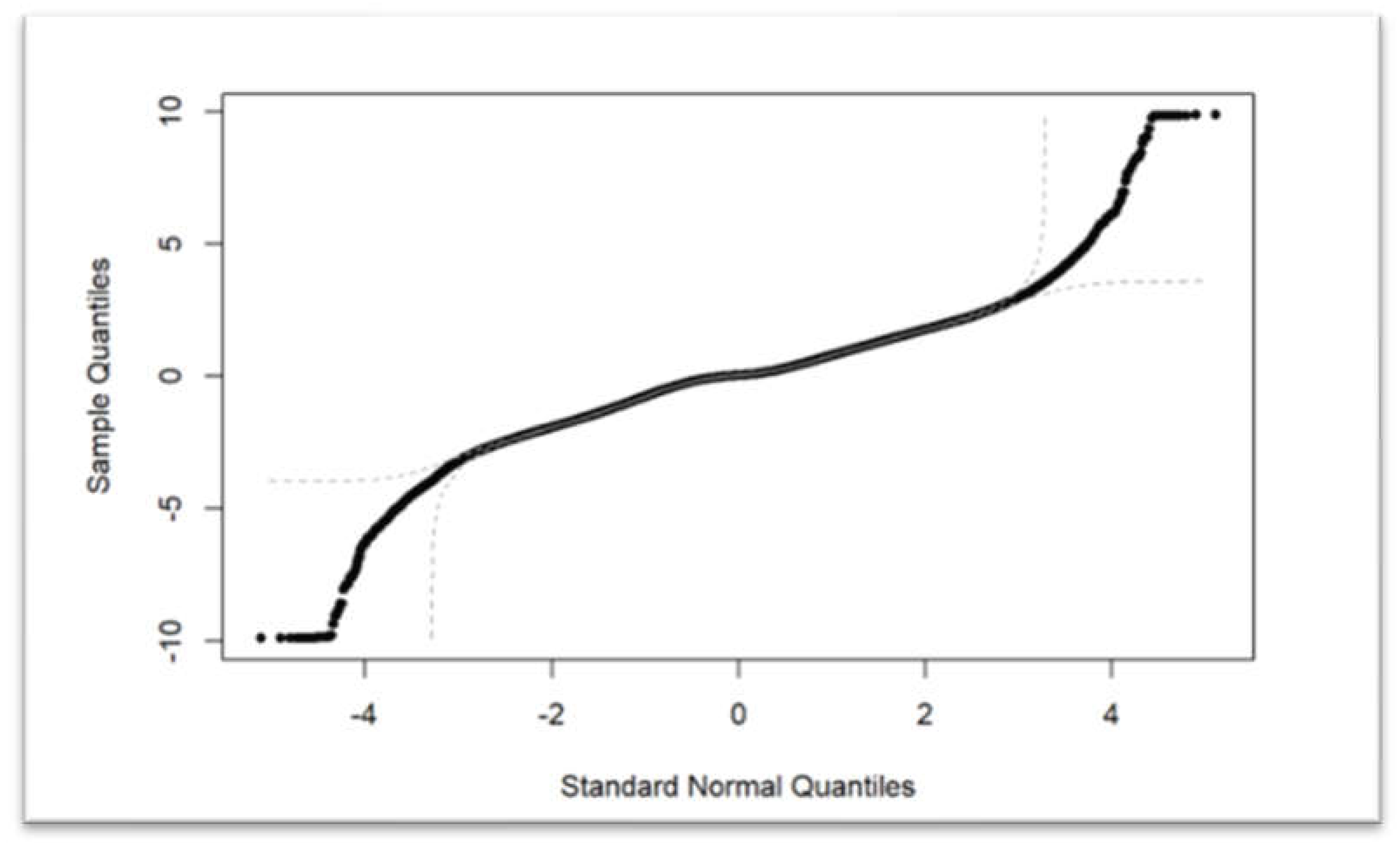

Module 2: Learning What Is Normal

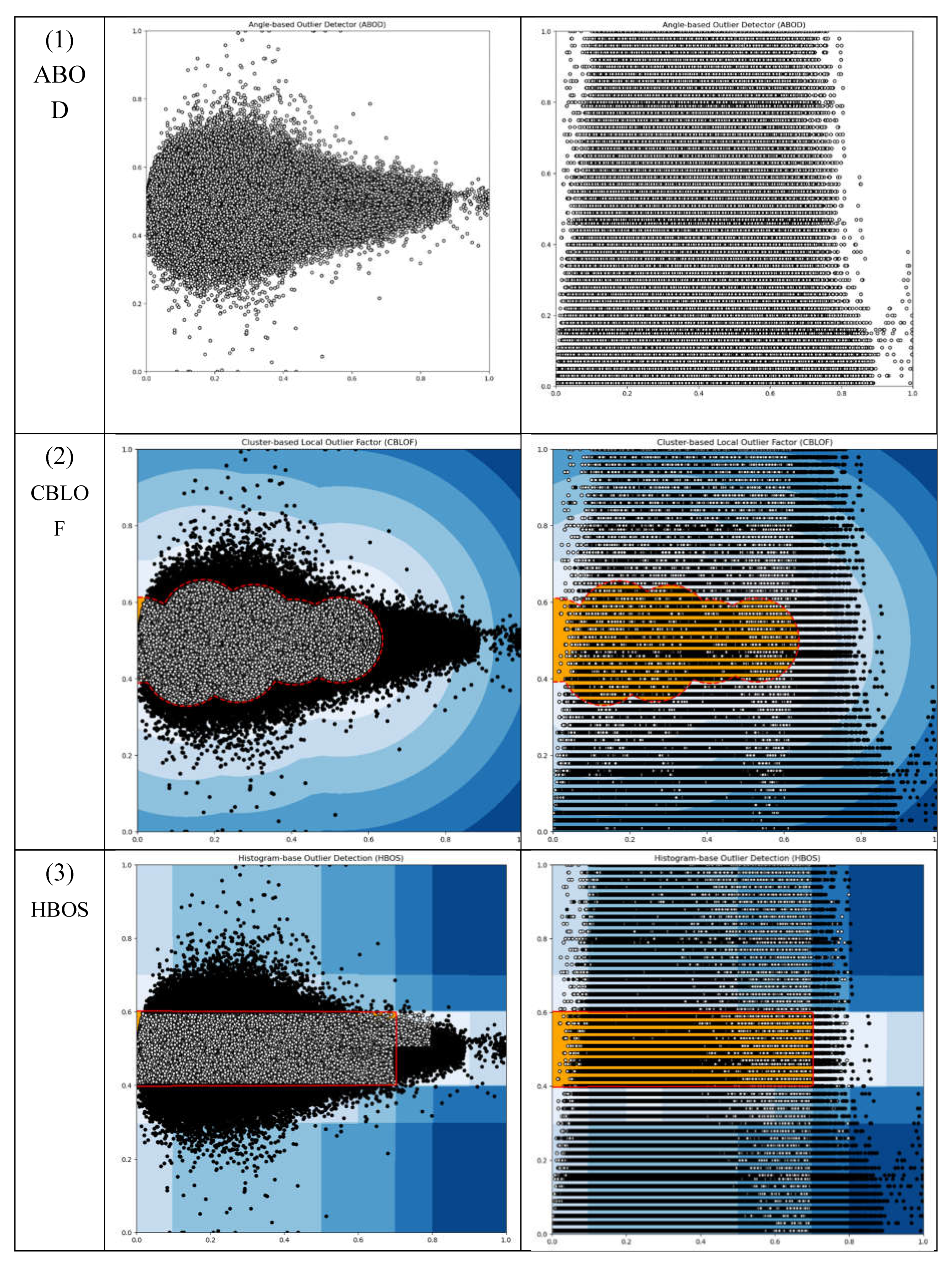

Module 3: Detecting Outliers

Module 4: Determining Abnormal Driving Events

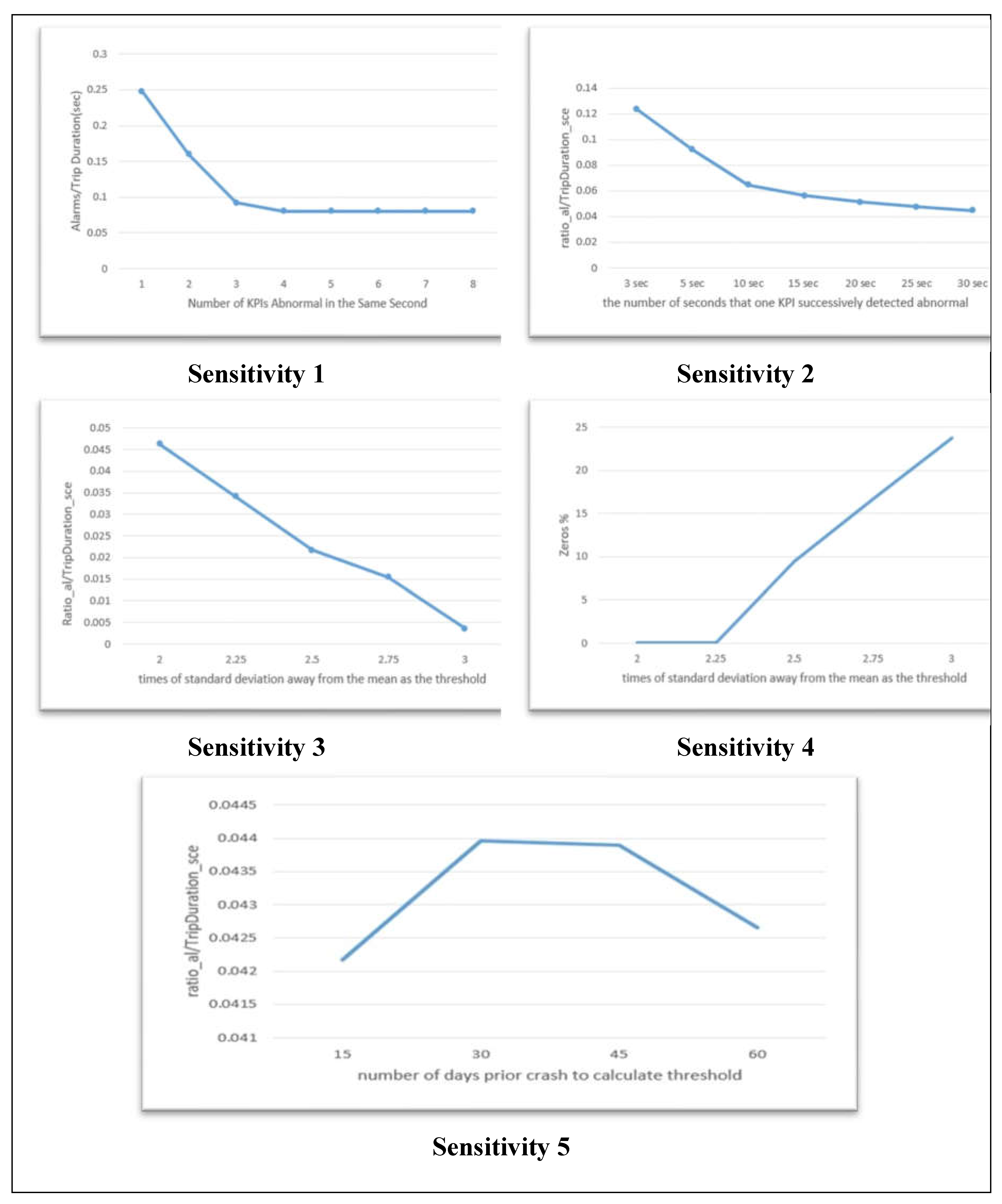

1) The number of KPIs identified as outliers in the same second is greater than or equal to a threshold.

2) Within a set time window, more than one KPI is identified as an outlier in a row.
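The two conditions above can be sketched as a per-second check. This is a minimal illustration, not the authors' implementation: `min_kpis` and `window_s` are hypothetical names for the two thresholds whose symbols are not given here, a second is flagged when *either* condition holds (the combination rule is an assumption), and condition 2 is read as "a run of consecutive outlier seconds, looked at no further back than the window, that lasts at least two seconds and involves more than one distinct KPI".

```python
from typing import List, Set


def abnormal_seconds(flags: List[Set[str]], min_kpis: int, window_s: int) -> List[int]:
    """Return the seconds t flagged as part of an abnormal driving event.

    flags[t] holds the names of the KPIs detected as outliers at second t.
    min_kpis and window_s are hypothetical names for the two thresholds.
    A second is flagged when either condition holds (OR is an assumption).
    """
    events = []
    for t, now in enumerate(flags):
        # Condition 1: at least min_kpis KPIs are outliers in the same second.
        cond1 = len(now) >= min_kpis

        # Condition 2 (assumed reading): walking back from t through consecutive
        # flagged seconds, at most window_s seconds, the run lasts at least
        # 2 seconds and involves more than one distinct KPI.
        run_kpis: Set[str] = set()
        run_len = 0
        s = t
        while s >= 0 and t - s < window_s and flags[s]:
            run_kpis |= flags[s]
            run_len += 1
            s -= 1
        cond2 = run_len >= 2 and len(run_kpis) > 1

        if cond1 or cond2:
            events.append(t)
    return events
```

Under this reading, the same KPI repeating in consecutive seconds does not trigger condition 2 on its own; at least two different KPIs must be involved.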

Module 5: System Updating

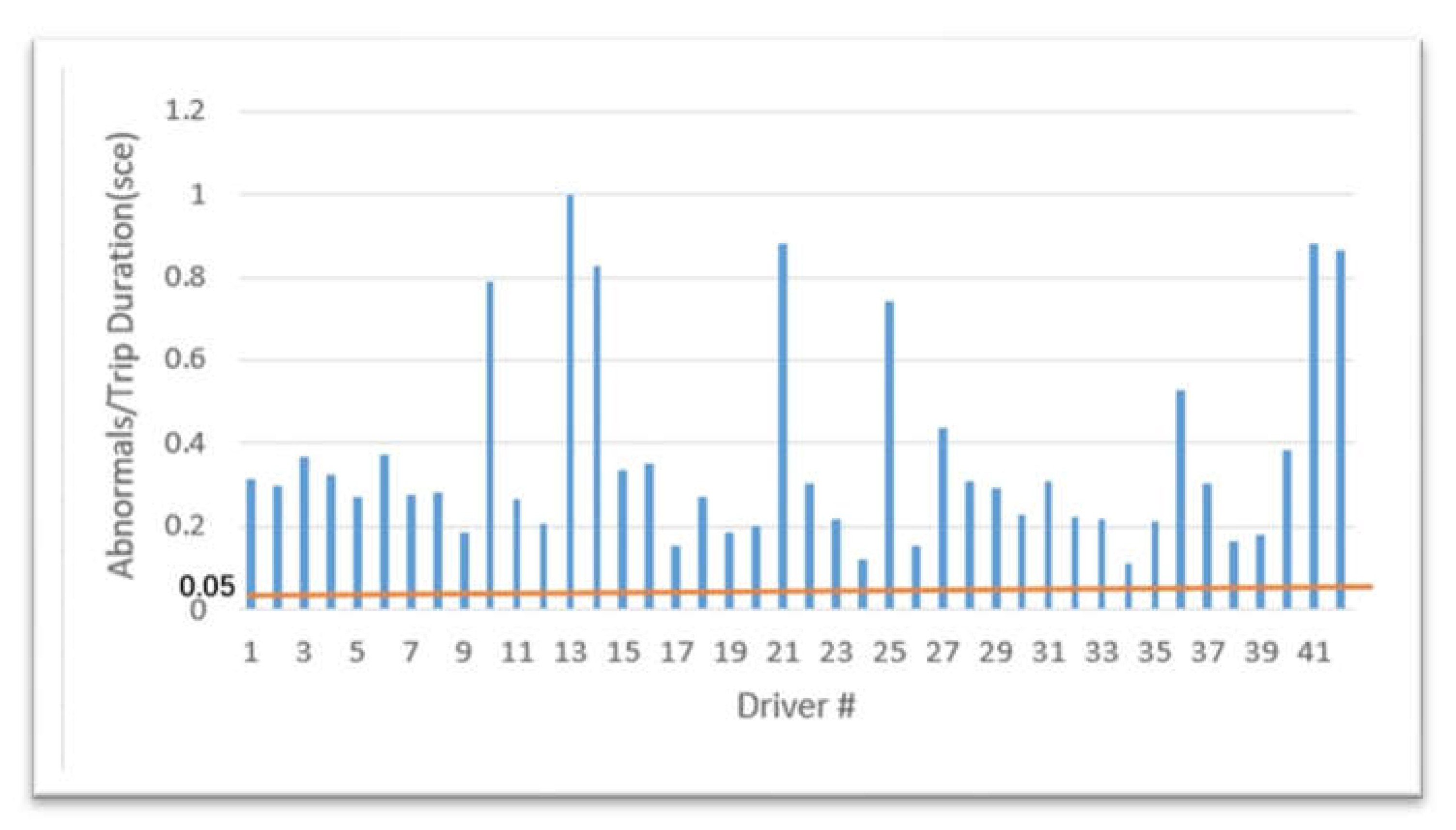

3. Model Evaluation

4. Discussion

5. Conclusions


| Attribute Name | Type | Units | Description |
|---|---|---|---|
| DevID | Integer | None | Test vehicle ID assigned by the CV program |
| EpochT | Integer | seconds | Epoch time: the number of seconds since January 1, 1970, Greenwich Mean Time (GMT) |
| Latitude | Float | Degrees | Current latitude of the test vehicle |
| Longitude | Float | Degrees | Current longitude of the test vehicle |
| Elevation | Float | Meters | Current elevation of the test vehicle according to GPS |
| Speed | Real | m/sec | Test vehicle speed |
| Heading | Real | Degrees | Test vehicle heading/direction |
| Ax | Real | m/sec^2 | Longitudinal acceleration |
| Ay | Real | m/sec^2 | Lateral acceleration |
| Az | Real | m/sec^2 | Vertical acceleration |
| Yawrate | Real | Deg/sec | Vehicle yaw rate |
| R | Real | m | Radius |
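The jerk KPIs reported later are not BSM attributes themselves; they have to be derived from the acceleration fields. A minimal sketch, assuming jerk is taken as the finite difference of `Ax` and `Ay` between consecutive records of the same `DevID` ordered by `EpochT` (the source does not state how the authors computed it). `BsmRecord`, `jerk_kpis`, and `split_sign` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from itertools import groupby
from typing import Dict, List, Tuple


@dataclass
class BsmRecord:
    dev_id: int   # DevID: test vehicle ID
    epoch_t: int  # EpochT: seconds since January 1, 1970 (GMT)
    ax: float     # Ax: longitudinal acceleration, m/sec^2
    ay: float     # Ay: lateral acceleration, m/sec^2


def jerk_kpis(records: List[BsmRecord]) -> Dict[int, List[dict]]:
    """Finite-difference jerk (m/sec^3) for each vehicle, in time order."""
    result: Dict[int, List[dict]] = {}
    ordered = sorted(records, key=lambda r: (r.dev_id, r.epoch_t))
    for dev_id, group in groupby(ordered, key=lambda r: r.dev_id):
        rows = list(group)
        kpis = []
        for prev, cur in zip(rows, rows[1:]):
            dt = cur.epoch_t - prev.epoch_t
            if dt <= 0:
                # Repeated timestamp: no usable time step, so skip this pair.
                continue
            kpis.append({
                "epoch_t": cur.epoch_t,
                "jerk_lon": (cur.ax - prev.ax) / dt,
                "jerk_lat": (cur.ay - prev.ay) / dt,
            })
        result[dev_id] = kpis
    return result


def split_sign(values: List[float]) -> Tuple[List[float], List[float]]:
    """Separate positive and negative values; exact zeros go to neither part (an assumption)."""
    positive = [v for v in values if v > 0]
    negative = [v for v in values if v < 0]
    return positive, negative
```

Because `EpochT` is an integer number of seconds, several records can share a timestamp; the sketch skips those pairs, so a finer time stamp would be needed to resolve sub-second changes in acceleration.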

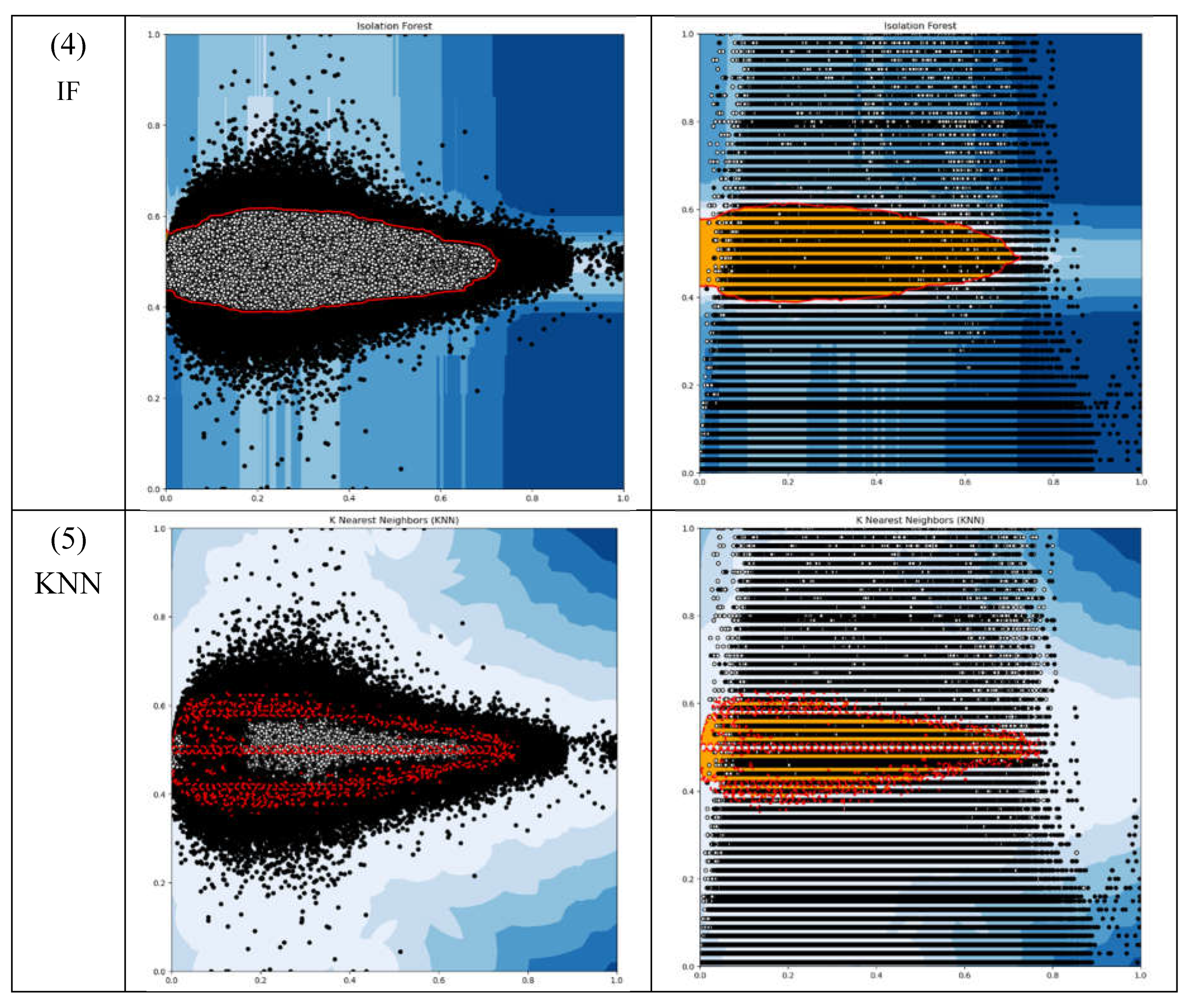

| Algorithm Name | Total Instances | Outliers | Threshold | |||||
|---|---|---|---|---|---|---|---|---|
| Longitudinal | Longitudinal Percentage | Lateral | Lateral Percentage | Longitudinal | Lateral | |||
| 1 | ABOD | 3166950 | 0 | 0 | nan | nan | 0 | 0 |
| 2 | CBLOF | 3166950 | 158345 | 158344 | -0.11175434977913001 | -0.10919071757369557 | 158345 | 158344 |
| 3 | HBOS | 3166950 | 153140 | 135949 | -1.9078634333717992 | 0.2580508062387792 | 153140 | 135949 |
| 4 | IF | 3166950 | 158348 | 0 158335 | -2.0801210125544493e-17 | 0.0 | 158348 | 0 158335 |
| 5 | KNN | 3166950 | 142490 | 142490 | 0.0001503609022556196 | 0.000505561180569658 | 142490 | 142490 |
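The outlier counts can be read as shares of the 3,166,950 instances: for example, 158,345 / 3,166,950 ≈ 5.00% for CBLOF and 142,490 / 3,166,950 ≈ 4.50% for KNN. The sketch below computes these shares from the first outlier-count column (labelled Longitudinal). It also shows a contamination-style rule that flags the highest-scoring share of instances. This is an assumption offered only to illustrate how a score threshold can fix the outlier share; the source does not say how the thresholds in the table were set, and `top_share_threshold` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

TOTAL_INSTANCES = 3_166_950

# First outlier-count column (labelled "Longitudinal") of the table above.
LONGITUDINAL_OUTLIERS: Dict[str, int] = {
    "ABOD": 0,
    "CBLOF": 158_345,
    "HBOS": 153_140,
    "IF": 158_348,
    "KNN": 142_490,
}


def outlier_share(count: int, total: int = TOTAL_INSTANCES) -> float:
    """Percentage of instances flagged as outliers."""
    return 100.0 * count / total


def top_share_threshold(scores: List[float], contamination: float) -> Tuple[float, List[bool]]:
    """Hypothetical contamination-style rule: flag the highest-scoring share of instances.

    Scores tied with the threshold are also flagged, so slightly more than
    contamination * len(scores) instances can be returned.
    """
    n_flag = round(len(scores) * contamination)
    if n_flag == 0:
        return float("inf"), [False] * len(scores)
    threshold = sorted(scores, reverse=True)[n_flag - 1]
    return threshold, [s >= threshold for s in scores]


for name, count in LONGITUDINAL_OUTLIERS.items():
    print(f"{name}: {outlier_share(count):.2f}%")
```

The loop prints 0.00% for ABOD, 5.00% for CBLOF and IF, 4.84% for HBOS, and 4.50% for KNN.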

| KPI | Measure | Speed bin 5 | Speed bin 6 | Speed bin 7 | Speed bin 8 |
|---|---|---|---|---|---|
| Acceleration-longitudinal_positive | Mean | 1.192235 | 1.337538 | 1.32516 | 1.398614 |
| | Std | 0.806321 | 0.839056 | 0.804345 | 0.804976 |
| Acceleration-longitudinal_negative | Mean | -1.04187 | -1.14423 | -1.1853 | -1.20188 |
| | Std | 0.753567 | 0.74688 | 0.771699 | 0.786363 |
| Acceleration-lateral_positive | Mean | 0.069047 | 0.085095 | 0.096859 | 0.120503 |
| | Std | 0.431809 | 0.085095 | 0.096859 | 0.120503 |
| Acceleration-lateral_negative | Mean | -0.02688 | -0.03648 | -0.05113 | -0.06153 |
| | Std | 0.040362 | 0.07236 | 0.132858 | 0.170901 |
| Jerk-longitudinal_positive | Mean | 0.824624 | 0.802729 | 0.692773 | 0.62276 |
| | Std | 0.696375 | 0.680028 | 0.612652 | 0.605413 |
| Jerk-longitudinal_negative | Mean | -0.42201 | -0.46223 | -0.39244 | -0.40045 |
| | Std | 0.433433 | 0.487027 | 0.401976 | 0.395484 |
| Jerk-lateral_positive | Mean | 0.035219 | 0.050722 | 0.043935 | 0.054583 |
| | Std | 0.286576 | 0.237766 | 0.083867 | 0.110184 |
| Jerk-lateral_negative | Mean | -0.05251 | -0.03598 | -0.04478 | -0.05237 |
| | Std | 0.582464 | 0.064078 | 0.084626 | 0.126077 |
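The table reports, for each speed bin, a mean and standard deviation computed separately for the positive and negative parts of every KPI. A minimal sketch of how such a speed-dependent baseline could be learned and then used to flag outliers is shown below. It assumes a fixed bin width (`BIN_WIDTH`, hypothetical, since the bin edges are not stated) and a one-sided mean ± k·std rule with a hypothetical multiplier `k`; the source does not confirm that the outlier-detection module uses this rule, and all function names are illustrative.

```python
import statistics
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

BIN_WIDTH = 5.0  # hypothetical speed-bin width (m/sec); the source does not state the bin edges

Baseline = Dict[Tuple[int, str], Tuple[float, float]]


def speed_bin(speed: float, width: float = BIN_WIDTH) -> int:
    return int(speed // width)


def sign_of(value: float) -> Optional[str]:
    if value > 0:
        return "positive"
    if value < 0:
        return "negative"
    return None  # exact zeros belong to neither part


def learn_baseline(samples: List[Tuple[float, float]]) -> Baseline:
    """samples are (speed, kpi_value) pairs for one KPI; returns (mean, std) per (bin, sign)."""
    groups: Dict[Tuple[int, str], List[float]] = defaultdict(list)
    for speed, value in samples:
        sign = sign_of(value)
        if sign is not None:
            groups[(speed_bin(speed), sign)].append(value)
    # Population standard deviation, so a group with one value still gets std 0.0.
    return {key: (statistics.mean(v), statistics.pstdev(v)) for key, v in groups.items()}


def is_outlier(speed: float, value: float, baseline: Baseline, k: float = 2.0) -> bool:
    """One-sided check: positive values above mean + k*std, negative values below mean - k*std."""
    sign = sign_of(value)
    stats = baseline.get((speed_bin(speed), sign)) if sign else None
    if stats is None:
        return False  # zero value or no baseline for this speed bin
    mean, std = stats
    if sign == "positive":
        return value > mean + k * std
    return value < mean - k * std
```

Splitting by sign keeps hard braking and hard acceleration from averaging each other out, which matches the separate positive and negative rows in the table.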

| Parameter | Test Value | Initial Value | Determined Value |
|---|---|---|---|
| | 1, 2, 3, 4, 5, 6, 7, 8 | 2 | 3 |
| | 3, 5, 10, 15, 20, 30 | 5 | 10 |
| | 2, 2.25, 2.5, 2.75, 3 | 2 | 2 |
| | 15, 30, 45, 60 | 30 | 30 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).