1. Introduction

Mice are one of the animal models in the biology and medical fields. It has been used for many years and has many advantages, including similarity to humans in many physiological functions and many methods of functional intervention through genetic modification. Researchers conducted various experiments on mice and observed the experimental phenomena of mice for biological and medical study, such as gene identification [

1], cell classification [

2] and protein prediction [

3]. Among the in vivo and in vitro experiments, mice behavior analysis is an essential topic and plays key roles in the medicine, neuroscience, biology, genetics, and educational psychology field. For example, researchers study behavioral patterns of mice to investigate the effect of a gene mutation, understand the efficacy of potential pharmacological therapies, or uncover the neural underpinnings of behavior for further treatment of mental disorders. Nowadays, mice behavior analysis has become a common approach in a wide range of biomedical research fields.

In the early stages of research, traditional behavioral analysis approaches allow for quantification of behavior by tracking the animal’s position in space, such as three-chamber assay [

4], open-field arena [

5] and water maze [

6]. However, with the development of technologies, traditional approaches face challenges in emphasizing important details of behavior involving subtle actions [

7]. Fine-grained behavioral feature data cannot be obtained through visual observation or subjective evaluation. Traditional approaches are time-consuming on high-precision feature computation work, and the results are also variable [

8]. A novel, automated, quantifiable approach for extracting fine-grained behavioral features is essential. Along with the development of the artificial intelligence (AI) field, AI can learn from large amounts of data and extract quantitative features automatically. “AI-empowered” has become a research and application trend today. Researchers also have applied AI to mice behavior analysis by analyzing the video or video frame data, such as by machine learning methods [

9] and by deep learning methods [

10]. AI empowers mice behavior analysis and makes some creative research possible now.

Recently, with the rapid spread of ChatGPT [

11], a more convenient and intelligent AI system has become a popular trend in the AI field. Compared with traditional AI studies, a GPT-integrated system can fulfill various objectives, such as translation, Q&A, dialogue, and text generation. However, there are no AI systems like MiceGPT for biology-related researchers. The Researchers must choose appropriate methods from a wide range of AI approaches to accomplish their research. This not only fails to demonstrate the convenience of AI but also increases their extra study tasks, which reduces research efficiency. Therefore, biology-related researchers require a system like

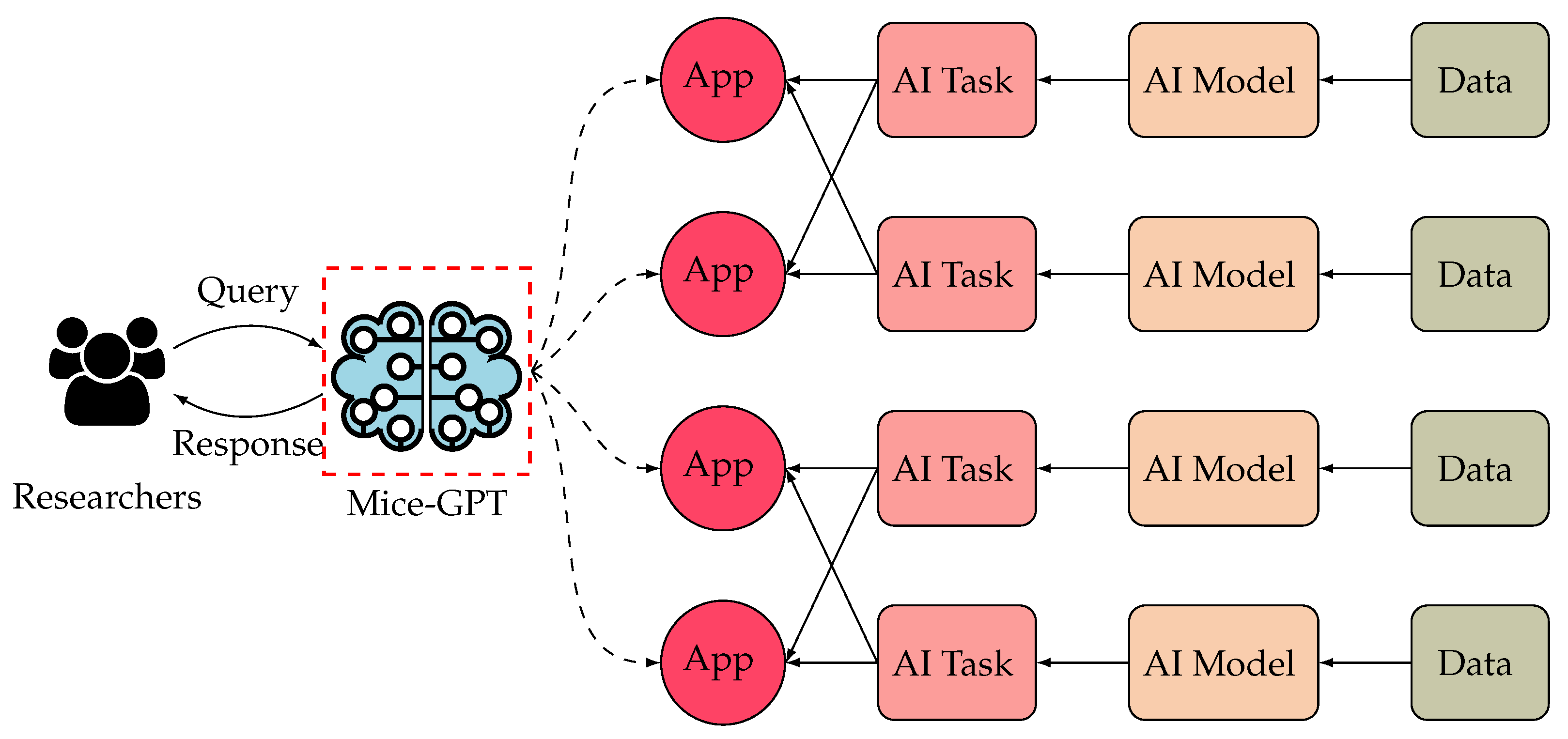

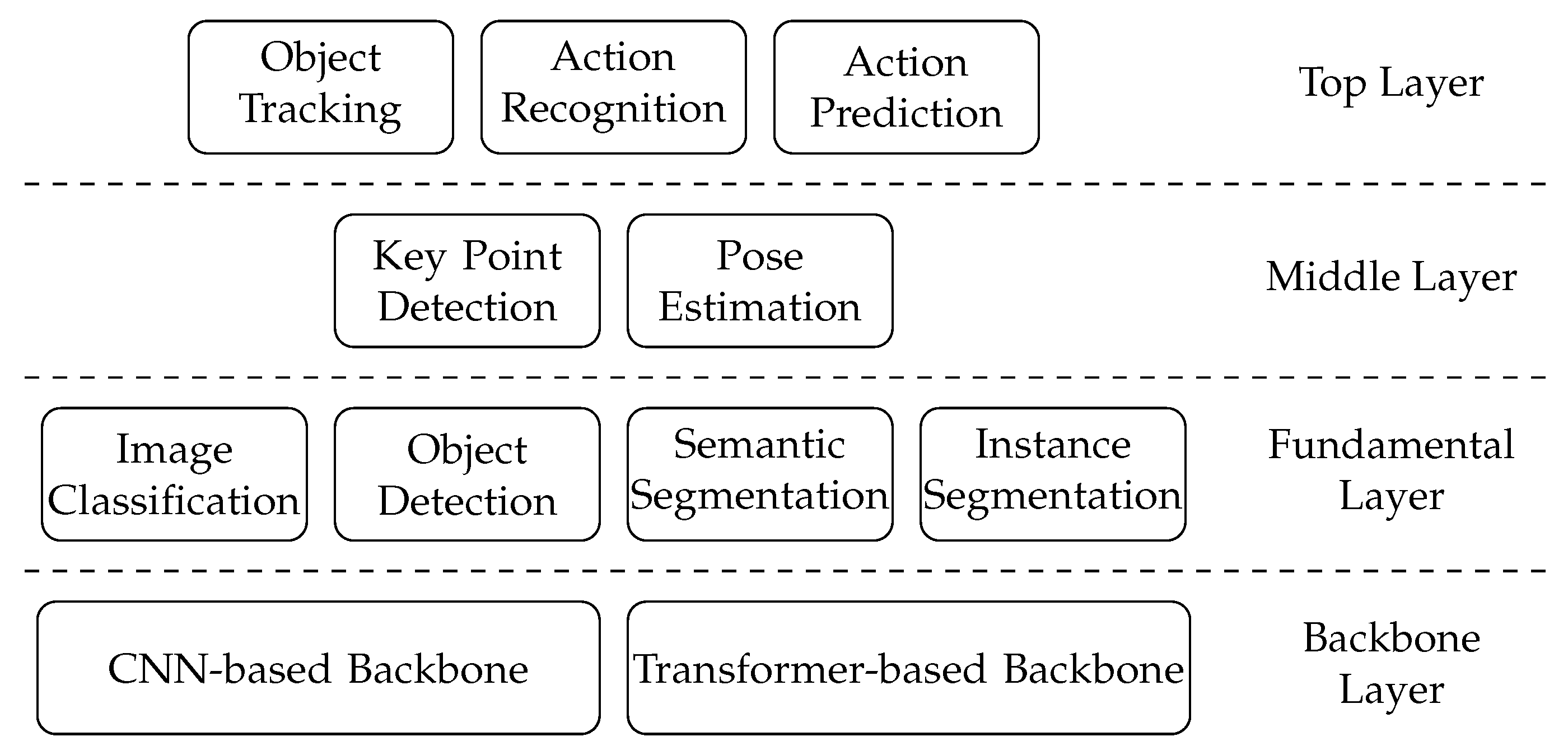

“MiceGPT”, shown in

Figure 1, in which it contains diverse mice behavior analysis apps combined with lots of state-of-arts AI models. Researchers can input their query requirements of analysis in the system, and MiceGPT can automatically classify the queries into specific applications in AI methods, and divide the application into the AI task, which is trained by different state-of-the-art AI models with mice behavior data, and finally response the query results to the researchers. So, can a “MiceGPT” be true? Concretely, what applications of mice behavior analysis can “MiceGPT” support? What tasks can the applications be divided into? What AI models can empower the tasks? We want to answer these questions in this paper.

This paper aims to make a survey to answer the above questions. Based on

Figure 1, we summarize the applications of mice behavior analysis, classify the applications into several well-known tasks of the AI field, and propose state-of-the-art AI-empowered approaches to solve the tasks. Finally, we propose our prospect architecture on “MiceGPT” with the content of the survey. We also propose two improved MiceGPT architectures with state-of-the-art Natural Language Processing (NLP) and AI generation technologies.

The rest of the paper is as follows:

Section 2 introduces our motivations for this survey.

Section 3 summarizes all the applications on the mice behavior analysis and proposes the relationship between applications and AI tasks.

Section 4 summarizes the suitable AI-empowered task approaches.

Section 5 introduces the iteration of MiceGPT’s architecture.

Section 6 concludes the paper.

2. Research Questions

This section introduces the main research questions of the survey. We first retrieve the AI-based papers of mice behavior analysis to ensure that all the studies in this survey are all AI-based. The paper starts with a general question of "Can MiceGPT be true", which is subsequently divided into four Research Questions (RQs) based on

Figure 1. The paper answers the RQs through literature surveys and makes summaries. Research questions include:

RQ1: What applications can AI empowers in the mice behavior analysis studies? (Answered in

Section 3)

RQ2: How to taxonomize the applications into AI tasks? (Answered in

Section 3)

RQ3: What AI methods can be used for executing AI tasks? (Answered in

Section 4)

RQ4: How can MiceGPT trains the AI methods, classify the AI tasks, and identify the applications? (Answered in

Section 5)

3. Applications

In this section, we conduct a preliminary search about mice behavior with AI approaches using the Google Scholar and SCI Expanded library with the keywords “mice behavior AND machine learning AND deep learning”. In Google Scholar, the keywords are chiefly matched in the body of papers instead of the abstract, and the search results contain the patents and research reports. They are not our main focus. In the SCI Expanded library, we search the same keywords in the title, abstract, and keywords. The search scope is “Article AND Meetings.” The initial number of retrieved documents amounted to around 85 publications. We selected 26 papers as state-of-the-art works, according to the following rules:

Including studies whose data are videos or video frames;

Including studies that have exact application goals instead of technical goals;

Excluding studies that focus on machine learning instead of deep learning;

In the end, we obtained 26 related papers and grouped them into four applications. This section summarizes the state-of-art AI-empowered mice behavior research on applications to summarize and taxonomize AI-empowered mice behavior analysis applications for the further study of MiceGPT.

3.1. Disease Detection

Changes in daily human behavior (e.g., food intake, sleep, and activity patterns) can often reflect symptoms of several diseases. Mice disease models [

12,

13] are a valuable resource in studying the diseases [

14]. However, these studies require long and systematic observations of disease-carrying mice, which requires much labor work and is subject to human error. Fortunately, AI can be a powerful tool for diagnosing disease in mice [

15,

16,

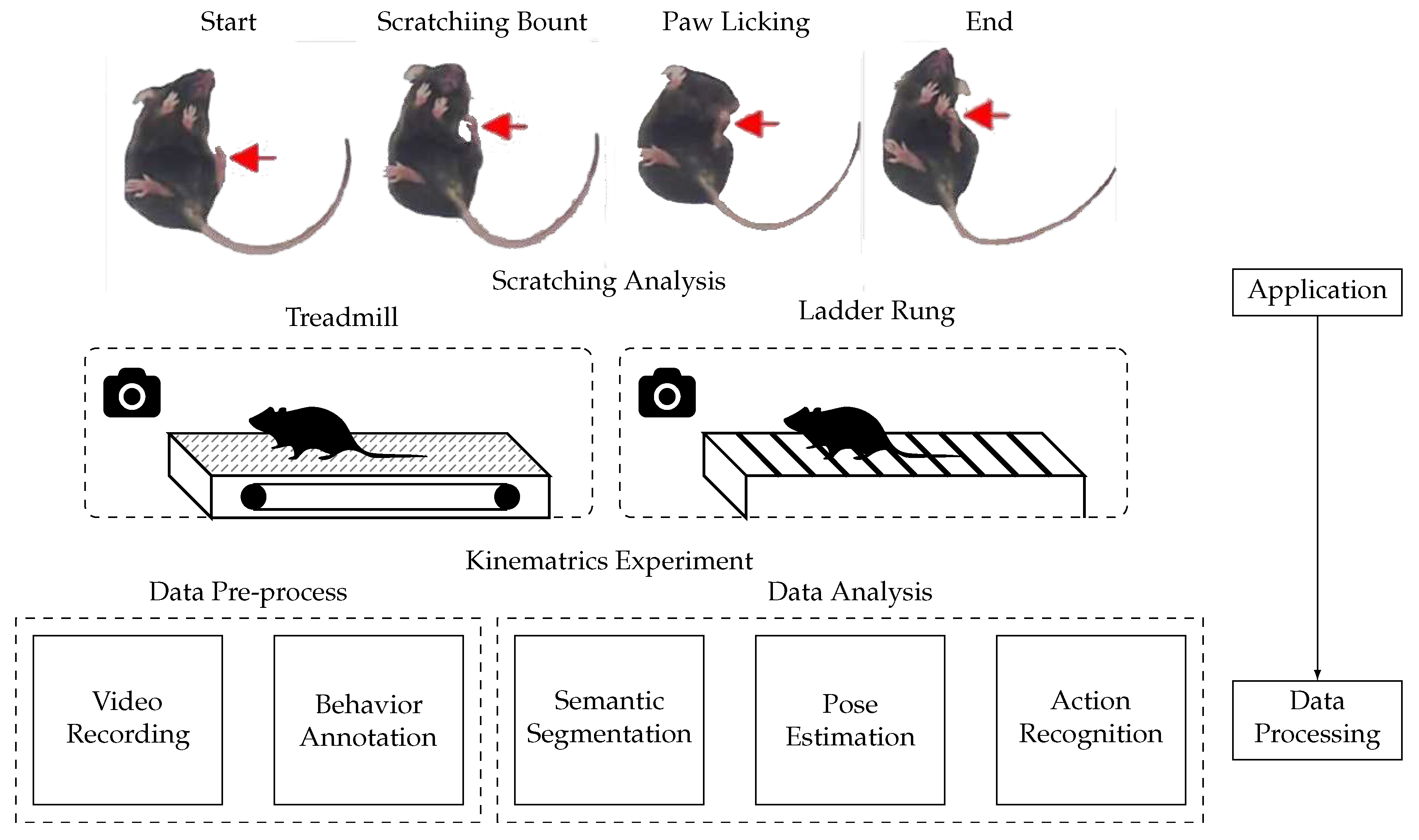

17]. As shown in

Figure 2, mice behaviors, such as scraching and gait, are recorded as video data with high-speed cameras. AI methods, such as semantic segmentation, pose estimation, and action recognition, diagnose disease in mice through the video data. AI provides new insights into the pathophysiology and treatment of diseases.

Most of the existing studies on AI-empowered mice disease detection are based on video data, while a few are based on text data. Yu et al. [

18] record the mice behavior from the bottom of a mouse videotaping box with a camera. Compared to the top or side views, the bottom view can clearly capture the key body parts involved in scratching behavior. Weber et al. [

19] customize a free-walking runway with two mirrors that allow 3D recording of the mice from the lateral/side and down perspectives. Aljovic et al. [

20] film all videos with a GoPro 8 camera positioned parallel to and at a fixed distance and angle from the treadmill and ladder. Alexandrov et al. [

9] generate a large, content-rich behavioral data set using a series of HET Htt CAG-repeat-KI mice with a range of CAG repeat lengths, assessed at different ages.

Weber et al. [

19] reveal gait abnormalities and motor deficits in rodents after a focal ischemic stroke with key point detection and pose estimation based on deep learning. They provide a comprehensive 3D gait analysis of mice. They further refined the widely used ladder rung test using deep learning and compared its performance to human annotators. The results show that deep learning-based motion tracking with comprehensive post-analysis provides accurate and sensitive data to describe the complex recovery of rodents following a stroke.

Yu et al. [

18] develop a new system, Scratch-AID (Automatic Itch Detection), based on image classification and action recognition. The system could automatically identify and quantify mice scratching behavior with high accuracy. They design a CRNN (Convolutional Recurrent Neural Network) by combining CNN (Convolutional Neural Network) and RNN (Recurrent Neural Network). The CNN extracts static features, and the RNN extracts dynamic features. Finally, a classifier combines the features extracted by both CNN and RNN and generates the prediction output (scratching or non-scratching). The best-trained network achieves 97.6% recall and 96.9% precision on test videos.

Sakamoto et al. [

16] develop an accurate automated prediction method for black mice with image classification and action recognition. Same with Yu et.al’s research [

18], they also used CRNN. The CRNN outputs a decimal value between zero and one for pre-processed images. They define an image whose value is more than 0.5 as “scratching”. They set a posterior filter that removes the predictions for nine or fewer frames, which could easily be wrong, to improve the predictive performance. The results show that the established CRNN and posterior filter successfully predicted the scratching behavior in black mice.

Aljovic et al. [

20] develop an open-source computational “toolbox” with pose estimation and image classification functions. The toolbox can be applied to neurological conditions affecting the brain and spinal cord. The toolbox is based upon pose estimation obtained from DeepLabCut [

21]. It can be used for automated kinematic parameter computation, automated footfall detection, and kinematic data analysis with random forest classification and principal component analysis. The results show that the automated comprehensive analysis could delineate the specific parameters of the locomotor function that are best suited to track injuries of the brain or spinal cord or are sensitive enough to predict disease onset during the prodromal phase of a multiple sclerosis model.

Alexandrov et al. [

9] use a computational method based on SVMs (Support Vector Machines) to analyze the large-scale phenotypic information generated by the three systems. They select the phenotypes that best-distinguished mice with CAG repeats of different lengths. The final model, which incorporates about 200 behavioral features, accurately predicts the CAG-repeat length of a blinded mouse line. The results demonstrate the potential to predict underlying disease mutations by measuring subtle variations at the level of behavioral phenotypes.

3.2. External Stimuli Effective Assessment

External stimuli effective assessment is a basic experiment approach for mice. Compared with the mice without the stimuli, researchers make external stimuli on the specific mice organ to analyze the stimuli effect by analyzing mice behaviors. The types of external stimuli are various, such as medicine [

22], artificial stimulus [

23], and genetic alteration [

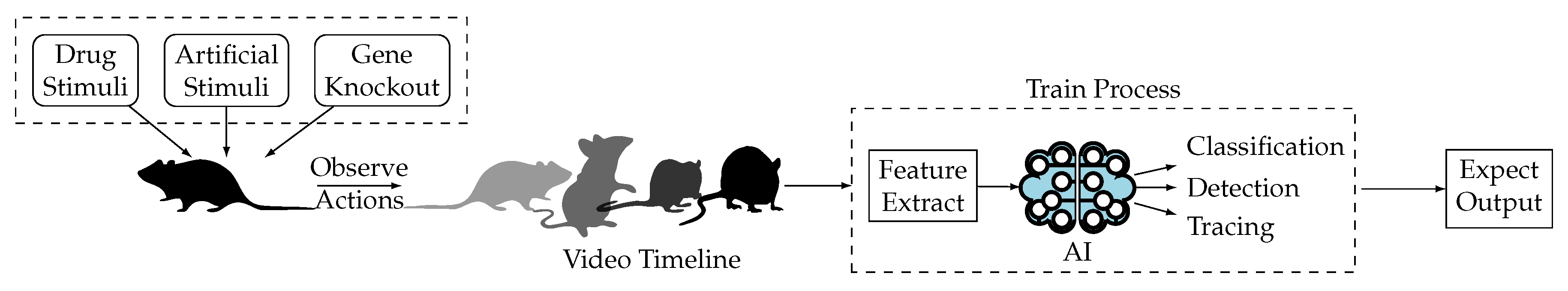

24]. Due to the high-speed behavior of mice, traditional approaches cannot exactly obtain the video frames with the complete organ. With the development of AI technology, researchers apply the AI-empowered approaches to evaluate the external stimuli effect on mice automatically, which presents the basic research steps in

Figure 3. Researchers make various external stimuli on the mice, such as drug stimuli, artificial stimuli and gene knockout to observe the actions of mice. With the AI techniques empowering, the AI models extract the features from the video timeline, and make classification, detection and tracing tasks by the train process. Then the researchers can get the expected outputs from the AI models. Studying external stimuli effect in mice can contribute to exploring disease treatment and neuroscience. They generally focus on the detection, classification, segmentation, and tracing tasks.

Current AI-empowered studies on external stimuli effective assessment adopt video data as the training and testing data. Wotton et al. [

25] collect video data of mice behavior in response to a hind paw formalin injection. Kathote et al. [

26] record the bottom view videos of the mice behavior with acetazolamide and baclofen. Vidal et al. [

27] create a video database including the behavioral data of 8 different white-haired mice collected multiple times at different times. Abdus-Saboor et al. [

28] use high-speed videography to record sub-second, full-body move videos. Marks et al. [

29] collect raw video frames in complex environments directly. Torabi et al. [

30] collect neonatal (10-days-old) rat pup video recordings using standard locomotor-derived kinematic measures. Martins et al. [

31] collected videos of the tail suspension test (TST) in a controlled environment. Wang et al. [

32] collect mice behavior with an overhead camera during video recording.

Wotton et al. [

25] aim to make key point detection and licking action recognition of mice and propose an automated rating system for rapid, yet clinically relevant nociception assays in the formalin assay. They take advantage of the key point detection by DeepLabCut [

21] with a pre-trained ResNet50 [

33], and use the GentleBoost classifier to identify the behavior of licking of each frame. The results show that the automated system easily scores over 80 videos and reveals strain differences in both response timing and amplitude.

Vidal et al. [

27] focus on automating the prediction of the grimace scale on white-furred mice by AI-empowered object detection, semantic segmentation, and image classification. They create a video database including the behavioral data of 8 different white-haired mice collected multiple times, use YOLO to detect frames that provide a stable frontal face of the mice, and propose a Dilated CNN to segment the mice eyes region and a Grimace Scale Prediction Network to classify the grimace scale into dilatation, activation, and dropout. The results show that this process is possible to differentiate among the pain scale of the mice.

Abdus-Saboor et al. [

28] analyze sub-second behavioral features following hind paw stimulation with both noxious and innocuous stimuli to assess pain sensation in mice by AI-empowered action recognition. They apply four mechanical stimuli to the plantar surface of a randomly chosen hind paw of fully acclimated mice, apply machine learning to make classifies withdrawal action behaviors as a probability of being pain-like, and obtain the probability by regression analysis. The results indicated that a sensitive pain sensation assessment could be feasibly achieved based on the calibration of the animal’s own behavior.

Kathote et al. [

26] develop an AI-empowered pose estimation method to quantify Glucose transporter 1 deficiency syndrome mice behavior to infer potential therapeutic value on cancer. They make automation of pose estimation by deep neural networks to analyze more subtle changes that the drugs may potentially cause, use K-means to cluster and select usable frames, and train these frames for automated tracking of body parts in the recorded videos. The results indicate that this in vivo approach can estimate preclinical suitability from the perspective of G1D locomotion.

Marks et al. [

29] propose a novel deep learning architecture to study brain function, the effects of pharmacological interventions, and genetic alterations by quantification of behaviors. The architecture consists of four neural networks. It made instance segmentation to find the mask and bounding box for each animal by SegNet. Based on the segmentation, the architecture can make key point detection by PoseNet, object tracing by IdNet, and action recognition by BehaveNet based on different types of input data. The results show that the architecture successfully recognized multiple behaviors of freely moving individual mice and socially interacting non-human primates in three dimensions.

Torabi et al. [

30] study the effect of maternal nicotine exposure before conception on 10-day-old rat pup motor behavior and propose a deep neural network by action recognition. They train the model for classifying the videos into maternal preconception nicotine exposed groups and control them. The results suggest novel findings that maternal preconception nicotine exposure delays and alters offspring motor development.

Martins et al. [

31] develop a novel computerized approach, based on AI and video analysis of the experimentation procedure, to standardize the TST by object detection and action classification. They propose a CNN network to detect the bounding boxes of the rear paws in the videos. Based on this, they apply some machine learning techniques to classify the movement status of the rodent, such as SVMs, decision trees, and kNNs (k-nearest neighbors). The results show that the CNN achieved 87.7% success in the paw identification problem, and the classifier achieved 95% accuracy in classifying the animal’s mobility states.

Wang et al. [

32] seek to develop a hybrid machine learning workflow to understand the brain more by accurate and effective quantification of animal behavior. They use DeepLabCut to trace the mice body key points and detect the mice behavior during a video period by random forest and hidden Markov model models. The results show that the workflow represented a balanced approach for improving the depth and reliability of machine learning classifiers in chemosensory and other behavioral contexts.

3.3. Social Behavior Analysis

The study of social behavior in mice [

34,

35] holds significant importance in the field of medicine. By gaining a deep understanding of the neurobiological basis of social behavior in mice, people can unravel the mechanisms underlying social behavioral disorders and provide clues for the diagnosis and treatment of related diseases [

36,

37]. Additionally, research has revealed the impact of social stress and stress on social behavior in mice, highlighting the interaction between stress and social behavior and offering new strategies for treating stress-related disorders. The study of social behavior in mice also contributes to exploring the influence of social interaction on health, providing important clues to understanding the association between social isolation and health issues. Social behavior analysis in mice generally includes object detection, key point detection, post estimation, action recognition, and other tasks, as shown in

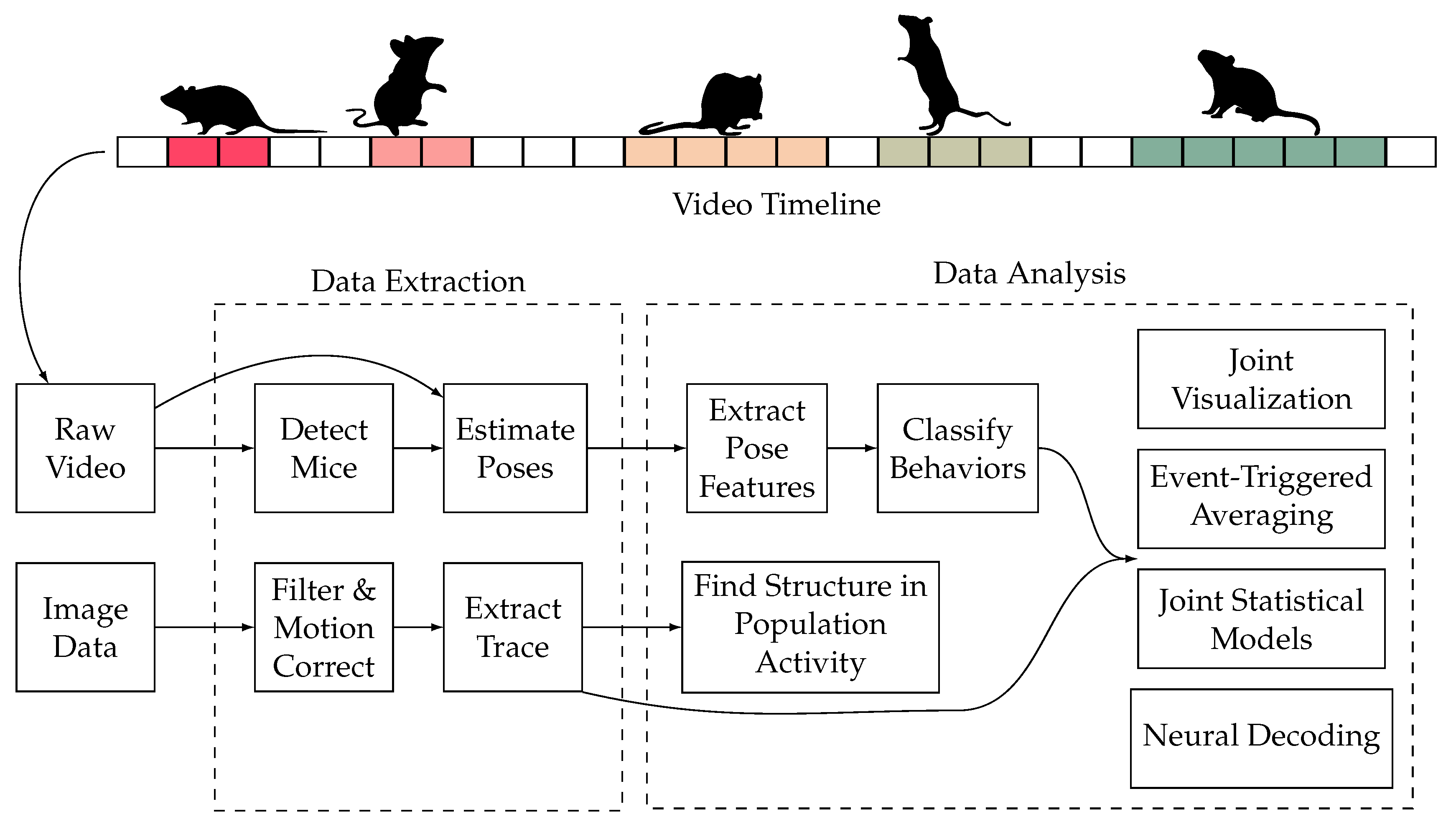

Figure 4. The process mainly consists of two steps: data extraction and data analysis. In the data extraction step, the researchers estimate the posture of the mice in the video data and extract the mouse trajectories from the image data. In the data analysis step, they extract the pose features and classify behaviors. Finally, the results are visualized.

Current studies of social behavior in mice are vision-based, which means they study the behaviors of mice by analyzing the video data of mice activity. Video data are classified as single-view or multi-view. The studies that rely on analyzing single-view video recordings [

38,

39] can be ambiguous when the basic information about the behavior is occluded. Multi-view video can provide more behavioral information about mice, which is easier to identify their behavioral characteristics. Therefore, multi-view video recordings for mouse observations are increasingly receiving much attention [

40,

41,

42,

43].

Segalin et al. [

38] introduce the Mouse Action Recognition System (MARS), an automated pose estimation and behavior quantification pipeline in pairs of freely interacting mice. MARS achieves human-level performance in pose estimation and behavior classification. Moreover, it uses computer vision to track and detect the pose of the mice and the XGBoost [

44] algorithm to classify their behavior. The authors also provide custom Python code to train novel behavior classifiers.

Agbele et al. [

39] present a system that uses local binary patterns and cascade AdaBoost [

45] classifier to detect and classify mice behavioral movement in videos with minimal supervision, helping animal behaviorists in their research by providing a non-invasive and non-intrusive way to study mice behavior. The developed cascade AdaBoost algorithm was able to detect eight different mice movements.

Winters et al. [

46] present a new automated method for assessing maternal care in laboratory mice using machine learning algorithms and aim to improve the reliability and reproducibility of the pup retrieval test performance assessment. The results show that the proposed automated procedure was able to estimate retrieval success with an accuracy of 86.7%. They bred primiparous c57bl/6JRJ mice and housed them in groups for time-controlled breeding in standard type II cages. They use the puppy retrieval test to evaluate puppy-oriented maternal care in laboratory mice. Automatic tracking of dams and one pup is established in DeepLabCut, and “maternal approach”, “handling” and "digging" for automatic behavioral classification are established in simple behavior analysis.

Jiang et al. [

40] propose a novel multi-view latent-attention and dynamic discriminative model for identifying social behaviors from various views. The proposed model outperforms other state-of-the-art technologies and effectively solves the imbalanced data problem. The model jointly learns view-specific and view-shared sub-structures, where the former captures the unique dynamics of each view while the latter encodes the interaction between the views. Additionally, a multi-view latent-attention variational autoencoder model is introduced in learning the acquired features, enabling them to learn discriminative features in each view. Also, the graphical model models the correlation between the neighboring labels, which has shown superior performance in recognizing mouse behaviors in a long video recording.

Hong et al. [

47] present a new integrated hardware and software system for automatically estimating pose and classifying social behaviors involving close and dynamic interactions between two mice. The experiment proves that their integrated approach allows for rapid, automated measurement of social behaviors and allows the ability to develop new, objective behavioral metrics. They design a hardware setup and software to produce an accurate representation and segmentation of inaccurately represented segments. Then they develop a computer vision tool that extracts a representation of the location and body pose (orientation, posture, etc.) of individual animals and use the representation to train a supervised machine learning algorithm to detect specific social behaviors.

Burgos-Artizzu et al. [

42] present a novel method for analyzing social behavior in continuous videos by segmenting them into action “bouts” using a temporal context model that combines features from spatio-temporal energy and agent trajectories. The method is tested on a dataset of videos of interacting pairs of mice, reaching a mean recognition rate of 61.2% compared to the expert’s agreement rate of about 70%. The authors find that their novel trajectory features, used in a discriminative framework, are more informative than widely used spatio-temporal features. Furthermore, temporal context plays an important role in action recognition of continuous videos. The authors compare their method with other approaches and show that their approach outperforms them regarding recognition rate.

Tanas et al. [

48] discuss using multidimensional analysis to evaluate the behavioral phenotype of mice with Angelman syndrome and wild-type littermates. The approach was able to predict the genotype of mice based on their behavioral profile with high accuracy and detect behavioral improvement as a function of treatment in Angelman syndrome model mice. They define multidimensional analysis as the multi-step process of (a) reducing the dimensionality of large behavioral datasets using principal component analysis, (b) clustering data in principal component space using k-means clustering, and (c) assessing whether behaviorally defined clusters align with animal genotype.

3.4. Neurobehavioral Assessment

Neurology is a major research direction of biology and medical science. Traditional approaches measure the representational mice behavior information for the neurologic study, such as dynamic weight-bearing test [

49], metabolic parameters test [

50] and grip strength test [

51]. However, some micro features of mice behaviors can promote the research of neurology, which can not be discovered by manual observation. Therefore, researchers apply AI methods in analyzing certain mice behaviors to study mice’s nervous systems further. To make the neurobehavioral assessment, researchers commonly collect the video of mice behavior, then transfer the video data into image frames, and make AI models for training the images for classification, segmentation, key point detection, and context action prediction.

In the neurobehavioral assessment, all the studies collect video data and divide videos into image frames to train AI models. Ren et al. [

52], Jiang et al. [

53], and Tong et al. [

54] collected mice action behavior videos. Geuther et al. [

10] collected the mice sleep behavior videos. Cai et al. [

55] recorded the mice freezing behavior videos. Jhuang et al. [

56] provided software and an extensive manually annotated video database for data training and testing. Lara-Dona et al. [

57] collected the mice pupil behavior videos of both eyes.

Ren et al. [

52] find that automated annotation of mice behavior could help study the neuroscience of long-term memory in mice. Then they treat the annotation task as a per-frame image classification problem and fine-tune a powerful CNN network pre-trained on ImageNet for recognizing annotate animal behaviors automatically to save human annotation costs. The results show that the powerful CNN can provides more accurate annotations than alternate automatic methods.

Cai et al. [

55] study the reward & punishment mechanism of dopamine neurons by mice freezing behaviors, and eliminate the need for human scoring by pre-trained ResNet. They further train on the pixel-by-pixel intensity difference between consecutive pairs of frames and classify each frame into a certain behavior classification. The results show that each classifier achieved optimal training within 50 training epochs and yielded 92–96% accuracy.

Jhuang et. al [

56] aime to make the neurobehavioural analysis of mice phenotypes and classify every frame of a video sequence by semantic segmentation and image classification, even for those frames that are ambiguous and difficult to categorize. They first made the semantic segmentation to get the foreground mask by the background subtraction procedure. Then they train and test a multiclass SVM model on single isolated frames to recognize high-quality unambiguous behavior. The results show that their model can lead to 93% accuracy, which is significantly higher than the performance of a representative computer vision system.

Lara-Dona et al. [

57] analyze the changes in pupil diameter by semantic segmentation, which reflects neural activity in the locus coeruleus. They built up the SOLOv2 to segment mice pupils from each photo frame, and output the range of mice pupils. The results confirm a high accuracy that makes the system suitable for real-time pupil size tracking.

Geuther et al. [

10] treat the nerve signals and the mice behavior videos to analyze mice’s sleep quality. They segment the mice mask from the video and use the human expert-scored EEG/EMG data to train a visual classifier, and finally make action recognition, which classified each 10s video into categories, such as wake, sleep NREM, and sleep REM. The results show that their classifier can reach the overall accuracy of 0.92 ± 0.05, which can replace the manual classification.

Tong et al. [

54] apply both segmentation and key point detection in their study. They aim to analyze optomotor response to evaluate animals’ visual function and nervous system. They use binarization to make the semantic segmentation of mouse contour and propose a powerful CNN network to detect the position of the mouse’s nose and track the orientation of the mouse’s head. The results show that their CNN network can achieve a recognition rate of 94.89%.

Jiang et al. [

53] propose a hybrid deep learning architecture with a novel hidden Markov model algorithm to describe the temporal characteristics of mice behaviors by action prediction. The architecture contains an unsupervised layer and a supervised layer. The unsupervised layer relies on an advanced spatial-temporal segment Fisher vector encoding both visual and contextual features, and the supervised layer is trained to estimate the state-dependent observation probabilities of the hidden Markov model. The results show that the accuracy of their architecture can get 96.5% on average.

3.5. AI Tasks Taxonomy

After summarizing the behavior analysis applications in mice, we also summarized the AI tasks during behavior analysis in mice, as shown in

Table 1. The table also summarizes the data types and characteristics of the study.

To summarize the behavior analysis applications in mice, we first read the collected literature and classified them according to their research purposes and applications. We found that most of the studies could be grouped into 4 categories, namely disease detection, external stimuli effective assessment, social behavior analysis, and neurobehavioral assessment, in which we introduce 4, 8, 6, and 7 literature, respectively. In addition, in the mice behavior analysis studies, the AI-empowered mice behavior applications can divide into multiple AI tasks because of different applications and research methods. We summarized nine tasks in total. We also summarize the characteristics of the data analyzed in the studies. Almost all of them analyze video data, which are broadly classified as having single-view and multiple-view, i.e., whether the data were collected from a single camera or multiple cameras.

4. AI-empowered Approaches

In this section, we focus on the techniques behind mice behavior analysis in biology fields. We first build up an AI pyramid according to the AI task’s dependency relationship. Then, we introduce several general backbones, namely the fundamental architectures of AI models. In the last, we introduce the AI models in each AI task. Noted that, except some models used to couple with mice video data are introduced, we also introduce some state-of-art approaches used for human-related recognition.

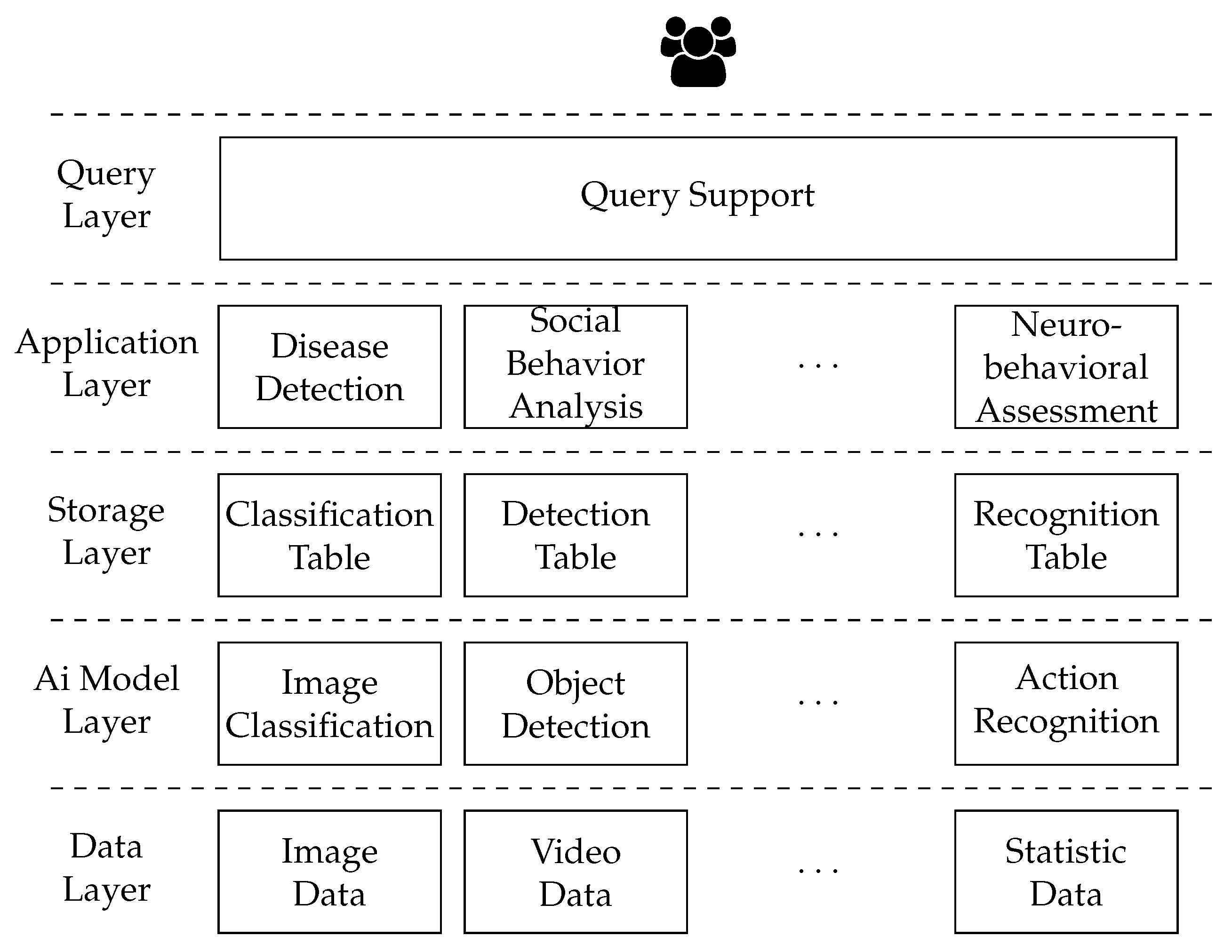

4.1. AI Pyramid

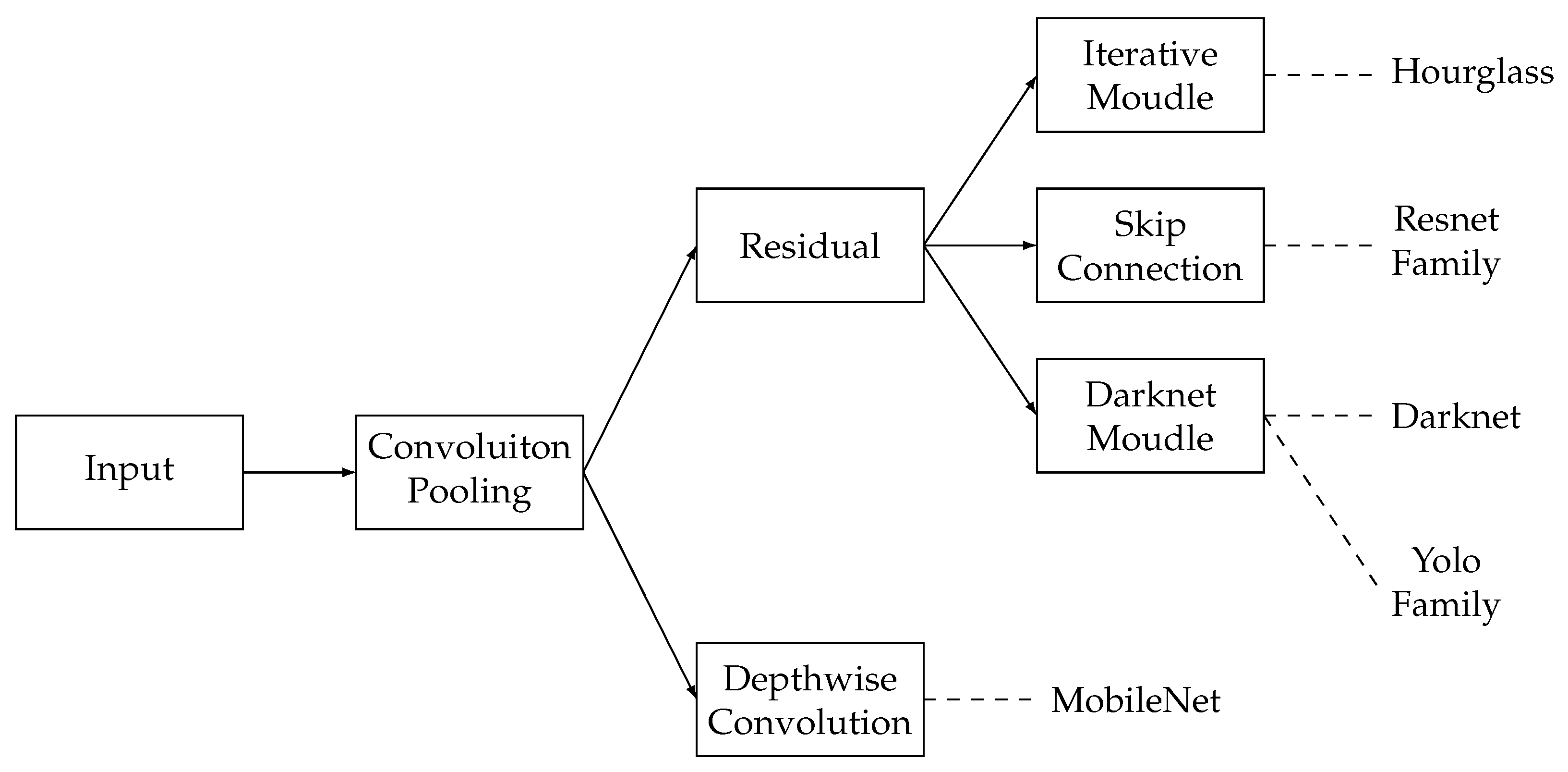

The architecture of AI tasks is organized as

Figure 5. It is a “pyramid” structure including four layers: top layer, middle layer, fundamental layer, and backbone layer. The topper layers may take advantage of the techniques of the lower layers.

The backbone layer contains the backbone models and networks. The backbone is the major network of a model. It helps abstract the features of images or videos and generate the feature map for the following network structure. Researchers mainly use the pre-trained backbone and fine-tune it for their study. The common backbones include CNNs, such as ResNet, ResNeXt, DarkNet, MobileNet, Yolo, HourGlass, and Transformers.

The fundamental layer contains the basic AI tasks, including image classification, object detection, semantic segmentation, and instance segmentation. These tasks are atomic and can not be further divided into other AI tasks and take advantage of backbone networks from the backbone layer. For example, object detection can select YoloV5 as the backbone network.

The middle layer contains key point detection and poses estimation. Both tasks may need support from the fundamental layer. For example, the key point detection model may combine the semantic and instance segmentation as the first step, and apply the object detection as the final step. Also, the tasks of the middle layer may apply to the backbone networks from the backbone layer, such as DarkNet and MobileNet.

The top layer tasks may integrate both the middle and fundamental layers’ tasks in the model. For example, the action prediction can combine the key point detection(Middle layer) and the semantic segmentation(Fundamental layer) tasks. The task of the top layer can also integrate the backbone network into its model.

4.2. Backbone

The backbone is the major architecture of the AI models. It helps to extract the modular structure of image features and transform the images into high-dimensional feature representations. Existing known backbones for mice behavior analysis can be categorized into two categories: CNN-based and Transformer-based.

In CNN-based backbones, the backbones contain multiple convolutional layers and pooling layers. The convolutional layers help extract the features of images. The pooling layers reduce the number of parameters and improve the robustness of features. Common CNN-based backbones include DarkNet, ResNet, MobileNet, HourGlass, and YoloV5. The dependency and relationship of these CNN-based backbones are shown in

Figure 6. The CNN-based backbones are all based on convolutions and poolings. In detail, the MobileNet requires depthwise convolution to achieve lightweight, and others require residual techniques to improve performance. In the residual techniques, HourGlass, ResNet family, and DarkNet family can be categorized by the iterative module, skip connection, and the darknet module and the darknet module can be furtherly dividied into DarkNet and Yolo families.

DarkNet [

58] is a lightweight CNN network. The structure of the backbone adopts multiple convolution layers and downsampling layers. A Batch Normalization layer and a Leaky ReLU layer follow each convolution layer. In detail, it contains an input layer, 19 convolutional layers, 2 upsampling layers, a fully connected layer, 26 batch normalization layers, 19 leaky ReLU layers, and 5 max pooling layers.

ResNet50 [

59] is a typical backbone in the Resnet family, which is a deep residual network. It has 50 layers in total and avoids the problem of disappearing gradients. In detail, it contains an input layer, a 7*7 convolutional layer, a pooling layer, 16 residual blocks (3 convolutional layers in each block), a global average pooling layer, a fully connected layer, and a softmax layer.

MobileNet [

60] is a lightweight convolutional neural network proposed by Google. It can make rapid image classification and object detection on mobile devices. It has an input layer, 13 convolutional layers, 13 depthwise separable convolutional layers, a global average layer, a fully connected layer, and a softmax layer.

HourGlass [

61] is a CNN-based backbone for human pose estimation. It consists of 4 HourGlass modules. Each module contains an input layer, a convolutional layer (64 filters, 7*7 kernel size, stride 2), some residual blocks (64 filters, 3*3 kernel size), a max pooling layer (2*2 kernel size, stride 2), an Hourglass (recursive), some residual blocks (128 filters, 3*3 kernel size), an upsampling layer (2*2 kernel size, nearest-neighbor interpolation), some residual blocks (64 filters, 3*3 kernel size), a convolutional layer (specific filters, 1*1 kernel size), and an output layer.

YoloV5 [

62] is the scaled-YoloV4 in fact. contains a convolutional layer, a feature pyramid layer, and a detection head. The convolutional layer takes the CSPNet as the backbone, including 9 convolutional layers. The feature pyramid layer applies a spatial pyramid pooling module and fuses multi-scale feature maps to improve the detection ability of micro targets. The detection head has three branches for detecting targets of any size. Each branch has a convolutional layer and an output layer.

Recently, the transformer-based backbone has become a popular backbone architecture in computer vision tasks. However, it hasn’t been used in the mice behavior analysis. Considering its state-of-art performance and accuracy. It is essential to utilize the transformer-based in the biology field. Transformer is mainly applied in Sequence-to-Sequence tasks, such as translation and speech recognition. It contains an input embedding layer, some encoder layers, and some decoder layers (each includes three sub-layers, Multi-head Self-attention, multi-head attention, and feedforward neural network). However, in the transformer-based, the encoders are mostly used as a backbone, such as ViT [

63] and Swin [

64].

ViT is proposed by Google Brain in 2020. It aims to apply a transformer to the computer vision field. ViT contains four layers: patch embedding layer, transformer encoder layer, global average pooling layer, and the fully connection layer. The patch embedding layer divides the image into pieces of fixed size and maps them into a vector. The transformer encoder layers help to extract the features of the vector. The global average pooling layer and the fully connection layer are used for the feature presentation and the output presentation.

Swin is proposed by Microsoft Research Asia in 2021. It has three parts: Swin transformer block for extracting the local feature, stage segmentation for dividing the image into multiple sub-figures, and the cross-stage connection for transmitting the features among different parts.

4.3. Fundamental Layer Tasks

The fundamental layer tasks mainly make basic image analysis. The goal of these tasks is to extract information about objects or features from images or videos, such as their location, size, shape, and category.

4.3.1. Image Classification

Image Classification is a fundamental task in the field of computer vision. Its goal is to assign a label to an input image from a predefined set of categories. The training methods of image classification can be divided into supervised learning, unsupervised learning, semi-supervised learning, self-supervised learning, and weakly supervised learning. Supervised learning is the model learning labeled data, learning a mapping relationship between data and labels. Unsupervised learning is learning completely unlabeled data from which models learn patterns. Semi-supervised learning is data that includes both labeled and unlabeled parts. Self-supervised model learning is also learning unlabeled data. The difference is that these unlabeled data can be labeled by learning.

Ren et al. [

52] used the supervised learning training model. They take a pre-trained CNN trained on ImageNet and fine-tune it for their rodent behavior classification task. They use

,

,

P,

D,

C to represent a convolutional layer with

k filters (

), a fully-connected layer with

k neurons (

), a down-sampling max-pooling layer (

P) with kernel size 3 and stride 2, a dropout layer (

D), and a soft-max classifier (

C). They transfer AlexNet into use by replacing its last 1000-dimensional classification layer with a 5-dimensional classification layer. The AlexNet network architecture is:

C96(11)-

P-

C256(5)-

P-

C384(3)-

C384(3)-

C256(3)-

PF4096-

D-

F4096-

D-

C. They also transferred C3D, which simultaneously learns spatial and temporal features by performing 3D convolutions, and has been shown to outperform alternate 2D CNNs for video classification tasks. The C3D network architecture is

C64-

P-

C128-

P-

C256-

C256-

P-

C512-

C512-

P-

C512

C512-

P-

F4096-

D-

F4096-

D-

C.

Cai et al. [

55] also use the supervised learning. They develop an analysis pipeline based on a CNN model to identify freezing behavior in mice. The CNN is initialized on the pre-trained ResNet18 architecture and further trained on `difference images,’ the pixel-by-pixel intensity difference between consecutive pairs of frames. The rationale for inputting different images to the CNN was to capture frame-by-frame motion. Each difference image is human-labeled as 1 or 0 to signify `freeze’ or `no freeze,’ and the network learned to predict labels for new difference images. The CNN allowes accurate and automated classification of freezing behavior throughout the duration of their experiments with minimal labor and enables them to determine that the precise temporal relationship between dopamine neuron activity and freezing behavior depends on the VTA subregion.

At present, the image classification of mice is basically supervised learning. It is worth noting that labeling data usually takes a lot of manpower and material resources, and there are a lot of unlabeled data in real life. Although supervised learning is the most commonly used method in image classification, other training methods have their applications, particularly when large amounts of labeled data are unavailable or when labeling is costly. Most of the current popular image classification methods combine supervised and unsupervised learning. The following introduces the current advanced image classification algorithms. The summary of image classification is shown in

Table 2.

Du et al. [

65] propose a novel semi-supervised efficient contrastive learning classification method for esophageal disease. They use pre-trained ResNet50 as the CNN backbone. First, they propose an efficient contrastive pair generation module to generate efficient contrastive pairs. Then, an unsupervised visual feature representation containing the general feature of esophageal gastroscopic images is learned by unsupervised efficient contrastive learning. Finally, they transfer the feature representation to the downstream esophageal disease classification task. The experimental results have demonstrated that the classification accuracy is 92.57%. The proposed method can reduce the reliance on large labeled datasets and the burden of data annotation.

Xue et al. [

66] propose a generative self-supervised pretraining and few-shot land cover classification method for multimodal remote sensing data. The approach contains two stages: generative self-supervised pretraining and few-shot land cover classification. In the pretraining procedure, local multiview observed images are divided into image patches, which are masked randomly, and unmasked patches are embedded for the encoder to learn high-level feature representations. After the self-supervised pretraining process, the learned spatial features are normalized and combined with corresponding spectral information. These are employed as an input of the lightweight SVM for classification. The transformer structure is employed as the backbone.

Li et al. [

67] present a self-supervised learning framework for retinal disease diagnosis that reduces the annotation efforts by learning the visual features from the unlabeled images. The framework is based on ResNet18. The workflow of the overall architecture of the self-supervised method involves randomly sampling images from the training dataset, applying random data augmentation twice to generate rotated images, assigning rotation labels to each image, and utilizing a feature embedding network to map the input to a high-level feature vector that is decoupled into two parts: rotation-related and rotation-invariant features. The experimental results demonstrate that with a large amount of unlabeled data available, the proposed method could surpass the supervised baseline for pathologic myopia and is very close to the supervised baseline for age-related macular degeneration, showing the potential benefit of the method in clinical practice.

Taleb et al. [

68] propose using self-supervised learning methods to learn from unlabeled data for dental caries classification. The backbone of the methods is CNNs. They train with three self-supervised algorithms on a large corpus of unlabeled dental images, which contain 38K bitewing radiographs. They then apply the learned neural network representations on tooth-level dental caries classification, using labels extracted from electronic health records. The experimental results demonstrate improved caries classification performance and label efficiency.

Table 2.

Summary of Studies on Image Classification.

Table 2.

Summary of Studies on Image Classification.

| |

Architecture |

Type |

Category |

Dataset |

Performance |

| [52] |

AlexNet,C3D |

Mice |

Supervised learning |

Private |

The model not only provides more accurate annotations than alternate automatic methods, but also provides reliable annotations that can replace human annotations for neuroscience experiments. |

| [55] |

ResNet18 |

Mice |

Supervised learning |

Private |

The CNN allows accurate and automated classification of freezing behavior throughout the duration of our experiments with minimal labor |

| [65] |

ResNet50 |

Stomach |

Semi-supervised learning |

Private,Kvasir [69] |

The classification accuracy is 92.57%, which is better than that of the other state-of-the-art semi-supervised methods and is also higher than the classification method based on transfer learning by 2.28%. |

| [66] |

Transformer |

Remote sensing |

Self-supervised learning |

Private |

The generative self-supervised model achieves superior performance in terms of feature learning and land cover classification, especially in the small sample classification case. |

| [67] |

ResNet18 |

Retina |

Self-supervised learning |

Ichallenge-AMD dataset [70], Ichallenge-PM dataset [71] |

The method outperforms other self-supervised feature learning methods (around 4.2% area under the curve and can surpass the supervised baseline for pathologic myopia |

| [68] |

ResNet18 |

Dental caries |

Self-supervised learning |

Private |

Using as few as 18 annotations can produce 45% sensitivity, which is comparable to human-level diagnostic performance |

4.3.2. Object Detection

Object detection aims to solve the problem of identifying and positioning the set goal. Its solutions can be classified into two categories: one-stage and two-stage. The two-stage method splits the object detection task into a location task and a classification task. A series of candidate boxes as samples are generated through the region propose networks (RPN) first, and then classification regression is carried out through the network. The one-stage method directly regresses the distribution probability and position coordinates of the target instead of the RPN. It obtains the location information and target categories over the backbone network. The major processes of the two methods are shown in

Figure 7.

Existing mice behavior analysis studies apply one-stage and two-stage methods. For the one-stage method, Vidal et al. [

27] applied YoloV3 trained on the Open Images datasets to detect the mouse faces. Their modified YOLO model is trained for 100 epochs on the corpus. For the first 50 epochs, the entire model is frozen except for the output layer. Then, they unfreeze all the parameters in the model, training the model for another 50 epochs.

For the two-stage method, Martins et al. [

31] apply Inspection ResNetV2 with Faster R-CNN to detect the rear paws of mice. They apply Faster R-CNN to locate the rear paws by RPN networks and obtain the region of interest (ROIs). Then, the extracted ROIs are integrated with the feature map, and classification and box regression are carried out by the Inspection ResNetV2. Besides, Segalin et al. [

38] also applied Inspection ResNetV2 with ImageNet pre-trained weights to detect the mice location. In their study, the network model computes a short list of up to

K possible object detectors proposal (bounding boxes) and associate confidence scores denoting the likelihood of that box containing a target object, in this case, the black or white mice. During training, their network model seeks to optimize the location and maximize confidence scores of predicted bounding boxes that best match the ground truth, while minimizing confidence scores of those that do not match the ground truth. The bounding box location was encoded as the coordinates of the box’s upper-left and lower-right corners, normalized with respect to the image dimensions. Finally, the network output is the confidence score scaled between 0 (lowest) and 1 (highest).

With the development of deep learning, state-of-the-art object detection techniques can be further divided into anchor-based and anchor-free methods. The anchor is used for label allocation. In the anchor-based method, boxes of different sizes and aspect ratios are preset either manually or by clustering methods, which can cover the whole image. It can be applied in both one-stage and two-stage methods. The anchor-free method can be divided into two sub-methods. The first one determines the object’s center and the predictions for the four borders (called center-based). The second one locates to multiple predefined or self-learning key points and then constrains the spatial range of the object (called key point-based). The state-of-the-art studies on object detection apply the anchor-based and anchor-free mode, which are summarized in

Table 3.

Hu et al. [

72] propose a one-stage anchor-free network for improving the detection accuracy of the one-stage method. The whole network takes a point cloud input and voxelized it. They apply AFDet as the backbone, which has two stages, and each stage has a convolutional layer and three blocks. To fully explore the potential of the single-stage framework, they apply the self-calibrated convolutions for each block. Besides, they devise an intersection over union (IoU) aware confidence score prediction as the anchor-free head of the network. The head belongs to the key point-based anchor-free method. The authors devise a keypoint prediction sub-head as auxiliary supervision in the detection head. They add another heatmap that predicts 4 corners and the center of every object in bird’s eye view during training.

Li et al. [

73] propose a two-stage anchor-based network to make the first-stage recognition more effective at locating insignificant small defects with high similarity on the steel surface. The network structure contains input, backbone, neck, and output parts. The input terminal mainly contains the preprocessing of the data, including mosaic data augmentation and adaptive image filling. In the neck network, the feature pyramid structures of feature pyramid network (FPN) and pixel aggregation network (PAN) were used. The FPN structure conveys strong semantic features from the top feature maps into the lower feature maps. At the same time, the PAN structure conveys strong localization features from lower feature maps into higher feature maps. The head output is mainly used to predict targets of different sizes on feature maps. The backbone is YoloV5 with improved feature extraction capability of the backbone network for steel defects. They remove the Conv and C3 layer that obtained 1/32 scale feature information in the original YOLOv5, and replace it with a Conv and C3 layer that extracted feature information at a 1/24 scale. Besides, they embed an efficient channel attention network mechanism into the backbone network and connect it in parallel to the C3 module.

Sun et al. [

74] present a simple yet efficient framework to address the computational bottlenecks and achieve efficient one-stage VOD. They proposed two modules to achieve an efficient one-stage video object detector called the location prior network and the size prior network. The location prior network has two steps. First, the foreground region selection is guided by the detected bounding boxes from the previous frame. Second, the partial feature aggregation enhances the selected foreground pixels using attention modules. Besides, the authors apply an attention mechanism in the one-stage method and solve the bottlenecks, including efficiency and detection heads on low feature levels. The input of the attention is foreground pixels on the current frame and the reference frames.

Zhou et al. [

75] propose an anchor-based two-stage model called TS4Net for rotating object detection solely. Benefiting from the ARM and TS4, the TS4Net can achieve superior performance with one preset horizontal anchor. The architecture of TS4Net adopts the vanilla one-stage detector RetinaNet as the baseline model. In the RetinaNet, two parallel fully convolutional networks are connected after FPN to perform the classification and regression tasks, respectively. It can also add an extra IoU prediction head to train jointly with the classification head and regression head, which improves the detection performance during inference. To select the positive samples from the horizontal anchors with large IoU values, authors adopted an ARM cascade network including a two-stage cascade network, which is stacked by four convolutional layers with 3*3 convolution kernels as classification and regression networks in the first stage. Besides, the authors propose the two-stage sample selective strategy. The first stage of ARM refines the horizontal anchors to high-quality rotated anchors, and then the second stage adjusts the rotating anchor to a more accurate prediction box.

Recently, Zhou et al. [

76] propose a state-of-the-art two-stage study on video object detection field with transformer technique. They propose an end-to-end model based on spatial-temporal transformer architecture, improving the efficiency of the detection transformer and deformable DETR. The model started from a ResNet backbone extracting features of multiple frames, Then, a series of shared spatial transformer encoders produce the feature memories, which are linked and fed into the temporal deformable transformer encoder, and the spatial transformer decoder decodes the spatial object queries. Next, the model used a temporal query encoder to model the relations of different queries and aggregate these queries supporting the object query of the current frame. Both the temporal object query and the temporal feature memories are fed into the temporal deformable transformer decoder to learn the temporal contexts across different frames. The input is video frames, and the output is the shared weights.

4.3.3. Semantic Segmentation

Semantic segmentation is a computer vision task that assigns each pixel in an image to a specific semantic category. In mice behavior analysis studies, by applying semantic segmentation to mice behavior video data, it can be used for behavior recognition and tracking, spatial localization and trajectory analysis, environmental interaction, behavioral context association, disease model, and drug effect evaluation. The application of semantic segmentation in mice behavior analysis research can achieve fine classification and quantification of behavior, provide more comprehensive and accurate behavioral characterization, and promote a deeper understanding of mouse behavior patterns and biological mechanisms.

Vidal et al. [

27] propose a machine-learning approach to automate the prediction of the grimace scale on white-furred mice, which is used to understand the suffering of a mouse in the presence of interventions. The approach involves face detection, landmark region extraction, and expression recognition. For eye region extraction and grimace pain prediction, a novel structure based on a dilated convolutional network is proposed. Dilated convolutional neural networks [

77] were proposed as effective tools to perform semantic segmentation.

Wu et al. [

78] propose a boosting semantic segmentation framework that performs state-of-the-art segmenting of somata and vessels in the mouse brain. The proposed framework consists of a CNN for multilabel semantic segmentation, a fusion module combining the annotated labels and the corresponding predictions from the CNN, and a boosting algorithm to update the sample weights sequentially. It improves the quality of the annotated labels for deep learning-based segmentation tasks.

Geuther et al. [

10] propose a machine learning-based visual classification of sleep in mice, which provides a path to high-throughput studies of sleep. The authors collect synchronized high-resolution video and EEG/EMG data in 16 male C57BL/6J mice, extract features from the video that are time and frequency-based, and use the human expert-scored EEG/EMG data to train a visual classifier. When processing the video data, they apply a segmentation neural network architecture [

79] to produce mice masks.

Existing semantic segmentation methods are divided into four categories according to different network architectures: CNN-based architectures, transformer-based architectures, multi-layer perception-based (MLP-based) architectures, and others.

In the CNN-based architecture, the deep network has a strong representation ability of semantic information, and the shallow network contains rich spatial detail information. Zhang et al. [

80] proposed an EncNet model, which designed a context encoding module to capture global semantic information and calculated the scaling factor of the feature graph based on the coding information to highlight the information categories that need to be emphasized. Some of the most important works include the DeepLap family proposed by Chen et al. [

81] and the densely connected atrous spatial pyramid pooling (DenseASPP) proposed by Yang et al. [

82]. They all use dilated convolution to replace the original down-sampling method and expand the receptive field to obtain more context information without increasing the number of parameters and calculations.

Transformer is a deep neural network based on self-attention. In the recent two years, transformer structure and its variants have been successfully applied to segmentation. Zheng et al. [

83] first performed semantic segmentation based on the transformer and constructed a segmentation transformer network to extract global semantic information. Inspired by the segmentation transformer network, trudel et al. [

84] design a pure transformer model, named Segmenter, to apply to semantic segmentation tasks. The model leverages pre-trained models for image classification and fine-tunes them on moderate-sized datasets available for semantic segmentation. Segmenter outperforms the state-of-the-art on both ADE20K and Pascal Context datasets and is competitive on Cityscapes.

MLP-based architecture is simple in design since it abandons convolution and self-attention. The performance in many visual tasks is comparable to the CNN-based and Transformer-based architectures. Yu et al. [

85] propose a novel pure MLP architecture, spatial-shift MLP (S2-MLP), which only contains channel-mixing MLP. The proposed S2-MLP attains higher recognition accuracy than MLP-mixer when training on the ImageNet-1K dataset.

Table 4.

Summary of Studies on Semantic Segmentation.

Table 4.

Summary of Studies on Semantic Segmentation.

| Reference |

Architecture |

Type |

Category |

Dataset |

Performance |

| [27] |

YoloV3 |

Mice |

CNN-based |

Open Images dataset |

Achieves a

performance of 97.2% in terms of accuracy |

| [78] |

DCNN based on U-Net |

Mice |

CNN-based |

MOST dataset |

Improves the network

performance by about 3–10% |

| [10] |

- |

Mice |

- |

Private |

Achieves an overall accuracy of 0.92 ± 0.05 (mean ± SD) |

| [80] |

Context Encoding Network based on ResNet |

Semantic segmentation framework |

CNN-based |

CIFAR-10 dataset |

Achieves an error rate of 3.45% |

| [81] |

DCNN (VGG-16 or ResNet-101) |

Semantic image segmentation model |

CNN-based |

PASCAL VOC 2012, PASCAL-Context, PASCALPerson-Part, and Cityscapes dataset |

Reaching 79.7 percent mIOU |

| [82] |

DenseASPP, consists of a base network followed by a cascade of atrous convolution layers |

Semantic image segmentation in autonomous driving |

CNN-based |

Cityscapes dataset |

Achieve state-of-the-art performance |

| [83] |

Transformer |

Segmentation model |

Transformer-based |

ADE20K, Pascal Context, and Cityscapes dataset |

Achieves new state of the art on ADE20K (50.28% mIoU), Pascal Context (55.83% mIoU) and competitive results on Cityscapes |

| [84] |

Vision Transformer |

Segmentation model |

Transformer-based |

ADE20K, Pascal Context, and Cityscapes dataset |

Outperforms the state

of the art on both ADE20K and Pascal Context datasets and

is competitive on Cityscapes |

| [85] |

Spatial-shift MLP (S2-MLP), containing only channel-mixing MLPs |

Segmentation model |

MLP-based |

ImageNet-1K dataset |

Attains considerably higher recognition

accuracy than MLP-mixer on ImageNet-1K dataset. |

4.3.4. Instance Segmentation

Instance segmentation is a computer vision technique that involves identifying and delineating individual objects within an image. Unlike semantic segmentation, which assigns a single label to each pixel in an image, instance segmentation identifies different objects within an image and assigns each object a unique label. The methods of instance segmentation can be divided into three categories: top-down, bottom-up, and one-stage. In mice behavior studies, instance segmentation can be used to track mouse movement trajectories and postures, allowing for the analysis of activity patterns and behavioral characteristics. Instance segmentation provides researchers with accurate and efficient data analysis tools to promote the development and progress of mouse research, whose studies are summarized in

Table 5.

Marks et al. [

29] use top-down methods. They propose SIPEC:SegNet, which is based on the Mask R-CNN architecture, to segment instances of animals. SIPEC:SegNet is optimized for analyzing multiple animals. They further apply transfer learning onto the weights of the Mask R-CNN ResNet-backbone pre-trained on the Microsoft Common Objects in Context (COCO) dataset. Moreover, they apply image augmentation to increase network robustness against invariances (for example, rotational invariance) and therefore increase generalizability. The experimental results demonstrate that SIPEC:SegNet achieved a mean average precision of 1.0 ± 0 (mean ± s.e.m.). For single-mouse videos, the model achieves 95% of its mean peak performance (MAP of 0.95 ± 0.05) using as few as a total of three labeled frames for training. SIPEC:SegNet could robustly segment animals despite occlusions, multiple scales, and rapid movement, and enable tracking of animal identities within a session.

Although instance segmentation can play a significant role in mice behavior recognition, there are not many studies on mice behavior that utilize instance segmentation. The following introduces some popular instance segmentation methods of the above three method categories, which can provide a reference for the subsequent research on mice behavior.

Shen et al. [

86] propose a parallel detection and segmentation, a framework to learn instance segmentation with only image-level labels. The framework draws inspiration from both top-down and bottom-up instance segmentation approaches. The detection module is the same as the typical design of any weakly supervised object detection. In contrast, the segmentation module leverages self-supervised learning to model class-agnostic foreground extraction, followed by self-training to refine class-specific segmentation. The paper further proposes an instance-activation correlation module to improve the coherence between detection and segmentation branches. The experimental results demonstrate that the proposed method outperforms baselines and achieves state-of-the-art results on PASCAL VOC and COCO.

Korfhage et al. [

87] present a CNN architecture based on Mask R-CNN for cell detection and segmentation (top-down) that incorporates previously learned nucleus features. A novel fusion of feature pyramids for nucleus detection and segmentation with feature pyramids for cell detection and segmentation is used to improve performance on a microscopic image dataset created by the authors and provided for public use, containing both nucleus and cell signals. The proposed feature pyramid fusion architecture clearly outperforms a state-of-the-art Mask R-CNN approach for cell detection and segmentation with relative mean average precision improvements of up to 23.88% and 23.17%, respectively. No post-processing was carried out in the experiments when compared to other methods to ensure a fair comparison.

Zhou et al. [

88] propose a bottom-up regime to learn category-level human semantic segmentation and multi-person pose estimation in a joint and end-to-end manner. They adopt ResNet-101 [

33] as the backbone. The proposed method exploits structural information over different human granularities and eases the difficulty of person partitioning. A dense-to-sparse projection field is learned and progressively improved over the network feature pyramid for robustness. By formulating joint association as maximum-weight bipartite matching, a differentiable solution is developed to exploit projected gradient descent and Dykstra’s cyclic projection algorithm. This makes the method end-to-end trainable and allows back-propagating the grouping error to supervise multi-granularity human representation learning directly. Experiments on three instance-aware human parsing datasets show that the proposed model outperforms other bottom-up alternatives with much more efficient inference.

Wang et al. [

89] propose a framework called segmenting objects by locations (SOLO), which is based on ResNet-50. SOLO is a one-stage, end-to-end instance segmentation method that can perform detection and segmentation simultaneously with high efficiency and accuracy. The main idea of the SOLO is to transform the instance segmentation problem into a dense prediction problem. Specifically, SOLO divides the image into a set of position-sensitive small grids and predicts the object category and instance segmentation mask in each grid. In this way, each pixel can be assigned to an instance, and the object edge can be accurately segmented. The experimental results demonstrated that the proposed SOLO framework achieves state-of-the-art results for the instance segmentation task in terms of both speed and accuracy while being considerably simpler than the existing methods.

Li et al. [

90] propose PaFPN-SOLO, a SOLO-based image instance segmentation algorithm. They enhanced the ResNet backbone by incorporating a Non-local operation, effectively preserving more feature information from the image during the extraction process. In addition, they employ a method known as bottom-up path augmentation. This method was designed to extract more precise positional information from the lower feature layers. This dual improvement not only boosted the network model’s ability to localize the feature structure but also reduced the distance over which information needed to propagate between feature layers. When the modified algorithm was tested on two datasets, COCO2017 and Cityscapes, it produced significantly improved segmentation results. The average segmentation accuracy on these datasets reached 56% and 47.3% respectively, marking an increase of 4.4% and 7.4% over the performance of the original SOLO network.

Table 5.

Summary of Studies on Instance Segmentation.

Table 5.

Summary of Studies on Instance Segmentation.

| Reference |

Architecture |

Type |

Category |

Dataset |

Performance |

| [29] |

Mask R-CNN |

Mice |

Top-down method |

Private |

SIPEC successfully recognizes multiple behaviours of freely moving individual mice as well as socially interacting non-human primates in three dimensions |

| [86] |

PDSL framework |

- |

Top-down method |

PASCAL VOC 2012 [91], MS COCO [92] |

PDSL framework outperforms baselines and achieves state-of-the-art results on PASCAL VOC and MS COCO. |

| [87] |

Mask R-CNN |

Cell |

Top-down method |

Private |

The proposed architecture clearly outperforms a state-of-the-art Mask R-CNN approach for cell detection and segmentation with relative mean average precision improvements ofup to23.88% and 23.17%, respectively. |

| [88] |

ResNet101 |

Human |

Bottom-up method |

MHPv2 [93], DensePose-COCO [94], PASCAL-Person-Part [95] |

Experiments on three instance-aware human parsing datasets show that the proposed model outperforms other bottom-up alternatives with much more efficient inference. |

| [89] |

ResNet50 |

- |

Top-down method |

LVIS [96] |

The proposed framework achieves state-of-the-art results for instance segmentation in terms of both speed and accuracy, while being considerably simpler than the existing methods. |

| [90] |

ResNet |

- |

Bottom-up method |

COCO2017, Cityscapes [97] |

The average segmentation accuracy on COCO2017 and Cityscapes reached 56% and 47.3% respectively, marking an increase of 4.4% and 7.4% over the performance of the original SOLO network. |

4.4. Middle Layer Tasks

The middle-layer tasks mainly focus on pose estimation in both humans and other animals. They can be used in motion recognition, human-computer interaction, and motion capture applications. To make the estimation more accurate, they need higher accuracy and real-time than other tasks, so they also cost more computing resources than other tasks.

4.4.1. Key Point Detection

Key point detection is a major technology of deep learning. It is a basic task in computer vision. It is the pre-task of human action recognition and action prediction. In the mice behavior analysis studies, the key point detection also contains fundamental techniques, such as object detection and semantic segmentation. The input is an image, and the output is the expected key points. Normally, key point detection can be categorized into 2D and 3D detection, and all the studies of mice key point detection apply 2D detection methods.

Tong et al. [

54] make key point detection based on the semantic segmentation of the mice contour. They proposed a CNN architecture to detect the snout point of the mice. The CNN contains four convolutional layers, an average pooling layer after the convolutional layers, a flattened layer, and three fully connected layers. The input of CNN is an area near snouts, and the output is the snout point position.

Wotton et al. [

25], Weber et al. [

19], Winters et al. [

46], and Aljovic et al. [

20] all make key point detection for body-part detection. Wotton et al. [

25] propose a ResNet50-based CNN to learn specific features and the skipping function to minimize information loss. Weber et al. [

19], Aljovic et al. [

20], and Winters et al. [

46] make the key point for detecting distinct body parts of mice. They proposed a ResNet-50 from the DeepLabCut by manually labeling 120 frames selected using k-means clustering from multiple videos of different mice. The former one detects the body parts, including the head, right front toe, left front toe, center front, right back toe, left back toe, center back, and tail base. The middle one detected 14 body parts configuration for the mother and pup together. The latter labels six body parts (toe, MTP joint, ankle, knee, hip, and iliac crest) in 450 image frames, and trained for 400,000 iterations.

Besides mice, key point detection is mostly applied in humans. Human key point detection can be categorized into single-person and multi-person detection. The multi-person detection algorithms can be further divided into Top-Down and Bottom-Up two parts. All the studies are summarized in

Table 6.

Wen et al. [

98] make multi-person key point detection based on the pre-trained network and SHNet. The pre-trained network was used for object detection. SHNet is used for keypoint detection. It consisted of four stages and the attention mechanism. The first stage consists of four remaining units, which are the same as ResNet50 and are composed of a bottleneck with a width of 64, followed by a 3*3 convolution feature graph whose width is reduced to 4. The second, third, and fourth stages contained 1, 4, and 3 communicative blocks. Besides, the model required paying more attention to the channel features with the largest amount of information and suppressing unimportant channel features. The attention mechanism contains information input, calculation of attention distribution, and calculation of weight average of input information. The input is the vector of each image, and the output is the weights of each feature.

Gong et al. [

99] propose a retrained AlphaPose model to make multi-person key point detection in the upper human body. The AlphaPose method detects human key points based on the regional multiplayer pose estimation (RMPE) framework proposed by the AlphaPose method, containing three components: symmetric spatial transformation network (SSTN), parametric pose non-maximum suppression (NMS) and pose guided proposals generator (PGPG). The SSTN network consists of a spatial transformation network (STN), single-person pose estimation (SPPE), and spatial de-transformer network (SDTN). STN is used to acquire high-quality human proposals and exclude inaccurate input frames. SPPE is used to estimate the pose of the input human candidates. SDTN maps the pose estimated by SPPE back to the original image coordinates and adjusts the input frames to make the detected frames more accurate. The AlphaPose model can detect 17 human upper body key points.

Zang et al. [

100] propose a lightweight multi-stage attention network (LMANet) to detect the key points of a single person at night. LMANet contains a backbone network and some subnets for identifying key points that are not obvious or hidden through the characteristics of different receptive fields and the association between key points. The backbone network is pruned MobileNet. The input of the backbone is 334*384. The first layer is a 3*3 convolution, and layer 2 to layer 6 are the classic bottleneck structure. The expected output is 12*12. For the subnets, there are 2 subnets, each of which contains only 2 bottlenecks. The input is 48*48, and the output is 12*12, which is the spatial attention module in the revised feature representation. Besides, the second bottleneck and the fourth bottleneck of the LMANet backbone network have added the channel attention mechanism, which is used to enhance the local features of each feature map at the spatial level. The attention module can get a refined output after the two-stage networks, and finally obtain a heatmap of 14 key points of the human body.

Hong et al. [

101] proposed a PGNet for single-person key-point detection. PGNet consists of three main components: Pipeline Guidance Strategy (PGS), Cross-Distance-IoU Loss (CIoU), and Cascaded Fusion Feature Model (CFFM). The backbone network in PGNet is ResNet-50, which is divided into 5 stages using CFFM. The feature-guided network after the image is convolved is used to extract key-point features, while CFFM is utilized to extract high-level and low-level features from the conv1-5 layers of ResNet-50. The middle three layers of CFFM are specifically used to avoid consuming a large amount of spatial information during convolution calculations. The feature-guided network combines traditional data parallelism with model parallelism enhanced with pipelining, which partitions the layers of the object being trained into multiple stages. After feature extraction, a convolution operation is used to fuse the features of the two branches, which completes the key points.

4.4.2. Pose Estimation

Quantifying mice behaviors from videos or images remains a challenging problem, where pose estimation plays an important role in describing mice behaviors. Although deep learning-based methods have made promising advances in human pose estimation, they cannot be directly applied to pose estimation of mice due to different physiological natures. Particularly, since the mouse body is highly deformable, it is a challenge to accurately locate different keypoints on the mouse body. The mice pose estimation can be divided into 2D and 3D.

Zhou et al. [