1. Introduction

Futures contracts have long been used in finance to harness the wisdom of the crowd and make predictions about the future value of an asset by exploiting people’s aggregate expectations. Prediction markets are one of the most recent forms of futures and, although they were originally meant only to forecast the outcomes of important political events, nowadays they are used in a number of different contexts. For instance, alongside public markets that allow betting on political or sports events, there exist private prediction markets that are used by companies such as Google, Intel, and General Electric to gather people’s beliefs about business activities such as sales forecasts or the likelihood of a team meeting certain performance goals [1,2]. Although prediction markets are often heralded as an effective mechanism for making highly accurate predictions [3,4], it has been shown that they are prone to bias [5] and manipulation [6], and that these phenomena can spread to financial markets with dreadful consequences [6,7].

To better understand prediction markets and consequently account for these adverse events, thereby limiting their impact outside the prediction market itself, there is a need for models that realistically reproduce the underlying processes that drive price and opinion formation. This can be achieved by building adequately complex frameworks that can be validated against real-world data, that is, models that are simple enough to be understood and controlled, but complex enough to allow realistic and complex dynamics to emerge from simple interactions between agents. Despite the large number of existing models of prediction markets [5,8,9,10], there is little work on modelling prediction market exchange platforms, arguably the most important type of prediction market.

To address this gap, in this paper we propose a model that matches the empirical properties (often referred to as stylized facts) of historical price and volume time series of political prediction markets. To achieve this, we consider a social network where agents possess an opinion about the probability of a given event occurring, and either buy or sell contracts on a prediction market exchange based on their opinion. To model the opinion dynamics process, we use the Deffuant model [11]. Opinion dynamics have long been a topic to which physicists have applied statistical physics tools to better understand human interactions and the complex phenomena that emerge from them (see, for example, [11,12,13,14,15] for seminal opinion dynamics models and [16] for a thorough review of the topic). Opinion dynamics models with binary opinions have also been used to model agents in the stock market [17,18,19,20]. Among the many models proposed to describe opinion diffusion in social networks, we choose the Deffuant model, which has three features that make it especially suitable to represent the underlying opinion propagation process that determines prices in prediction markets. First, the Deffuant model considers continuous opinions bounded by arbitrary values. This makes it well suited to describe the diffusion of opinions about the probability of an event occurring, as the opinion can be bounded between 0 and 1 and take any value in this range. Second, since it has only two free parameters, the Deffuant model has the merit of being extremely simple, which allows us to gain deeper insight into what drives price properties in prediction markets. This also guarantees a good degree of realism without having to make assumptions about other parameters in the model, which would otherwise have to be fine-tuned, a common (and often necessary) practice for models of financial markets [21,22]. Third, as in other bounded confidence models of opinion diffusion, in the Deffuant model only people with similar opinions update their beliefs after interacting, which allows for the possibility of not reaching consensus at equilibrium. This property enables us to analyze how the similarity of our results to historical data depends on the number of opinion clusters that coexist at equilibrium.

This paper makes three important contributions. First, we introduce a model of prediction markets that uses opinion dynamics in social networks as its underlying mechanism. This model has the merit of being particularly simple, since it has only two free parameters, while being highly robust, as it is capable of generating price time series that match the stylized facts of historical data for any combination of the two free parameters. Second, we show that our model reproduces historical data best when opinions are heterogeneous but revolve around a single opinion cluster. This suggests that participants in prediction markets tend to have a similar, yet not identical, view of the outcome, especially in markets that have a limited duration. Third, our results support the presence of Deffuant-like opinion dynamics. This contribution helps tackle an important challenge posed by Sobkowicz, who argued that opinion dynamics models are often disconnected from the real world and lack empirical validation [23]. Since his paper, the explosive growth of social media has offered means of compelling validation [24,25]. However, the availability and popularity of such vast data sets have resulted in most opinion dynamics models being validated only on social media data, potentially introducing a bias. In this paper, we use market data to provide evidence that continuous opinion diffusion models, and specifically the Deffuant model, are compatible with the exchange of opinions in prediction markets. To achieve this, we use data from PredictIt, a political prediction market exchange platform, and show that the Deffuant model provides an excellent representation of the underlying opinion diffusion process in social networks when opinions are scalar.

The remainder of the paper is organized as follows. In Section 2 we define the model of opinion formation and market exchange. In Section 3 we explain the experimental setting we used to run agent-based simulations of our model and discuss our findings, showing that our model provides a qualitatively good description of historical price and volume time series even in the worst-case scenario. Finally, we conclude with a short discussion and outline future work in Section 4.

2. Model

In this section we describe the price formation model and argue why it is appropriate to represent prediction markets. We start by describing how prediction markets work, also defining normalized prices and true probabilities, and then we describe the model dynamics in detail.

Prediction markets are time-limited markets in which contracts are traded on the outcomes of a given event, $E_i$, where $i$ denotes the $i$-th event and $E_i$ can take only two values: $E_i = 1$ if $i$ occurs, and $E_i = 0$ otherwise. Markets for which multiple options are available, e.g., “Who will win the presidential election?”, can be seen as markets in which there are $N$ events, where $N$ is the number of candidates running for president. The payoff of a contract on the $i$-th event is 1 if the corresponding event occurs and 0 otherwise. Let us denote with $p_i$ the price of the contract on the event $E_i$. Since, in prediction markets, $\sum_i p_i = 1 + \tau$, where $\tau$ is the turnaround (e.g., spread, bookmaker fees, etc.), for our analysis we consider normalized prices $\pi_i = p_i / \sum_j p_j$, which give a better representation of the corresponding realization probabilities. Also, if a prediction market is completely efficient, the normalized price of a security reflects exactly the probability of the corresponding outcome, i.e., $\pi_i = \theta_i$, where $\theta_i$ is referred to as the true probability.
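As a minimal illustration of the normalization above, the following Python sketch rescales a set of contract prices by their sum, which removes the turnaround. The function name `normalized_prices` and the example prices are hypothetical and serve only to show that normalized prices sum to one.

```python
def normalized_prices(prices):
    """Rescale contract prices so that they sum to one.

    With a turnaround tau > 0 the raw prices sum to 1 + tau; dividing each
    price by the total removes the turnaround.
    """
    total = sum(prices)
    if total <= 0:
        raise ValueError("prices must have a positive sum")
    return [p / total for p in prices]


# Hypothetical three-candidate market whose prices sum to 1.10 (tau = 0.10).
pi = normalized_prices([0.55, 0.35, 0.20])
print([round(x, 3) for x in pi])  # [0.5, 0.318, 0.182]
```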

To model the opinion diffusion process we follow the Deffuant model [11]. We start by considering a population of $N$ agents who belong to an undirected, unweighted social network $G$. Without loss of generality, we assume that there is only one event with two possible outcomes, $E = 1$ and $E = 0$. Then, agent $j$ possesses an opinion $x_j \in [0, 1]$, which can take any real value in this interval and corresponds to the subjective probability the agent attaches to the outcome $E = 1$. The opinion update process is iterative: at each time step, agents may discuss the event with their neighbors and update their opinion. To model this process, in every round an agent $i$ is randomly chosen from the network to discuss with an agent $j$, which is, in turn, randomly chosen among agent $i$’s neighbors. If their opinions are too different, they refuse to update their beliefs. More precisely, the agent pair $(i, j)$ interacts only if $|x_i - x_j| < d$, where $d$ is the threshold of this process and can take any real value between 0 and 1. If the agent pair interacts, they update their opinions as follows:

$$x_i \leftarrow x_i + \mu\,(x_j - x_i), \qquad x_j \leftarrow x_j + \mu\,(x_i - x_j),$$

where $\mu$ is the convergence parameter, and $\mu \in [0, 0.5]$.

In this model, $d$ represents the open-mindedness of agents, who discuss with, or listen to, other agents only if their opinions are sufficiently close. In this paper we follow the basic Deffuant model and consider $d$ constant among all agents, but there exist other versions of this model in which agents have heterogeneous open-mindedness [26,27]. In general, $d$ affects the number of clusters at equilibrium (i.e., the number of opinions that coexist), while $\mu$ drives the convergence time [11].
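The pairwise interaction rule of the Deffuant model can be sketched in Python as follows. This is a minimal sketch, not the authors’ implementation; the adjacency-list representation (`neighbors`) and the function name `deffuant_step` are assumptions made for illustration, with $d$ the confidence threshold and $\mu$ the convergence parameter.

```python
import random


def deffuant_step(opinions, neighbors, d, mu, rng=random):
    """Apply one Deffuant interaction in place; return True if opinions changed.

    opinions:  list of floats in [0, 1]
    neighbors: list of neighbor-index lists (hypothetical adjacency format)
    d:         confidence threshold in [0, 1]
    mu:        convergence parameter in [0, 0.5]
    """
    i = rng.randrange(len(opinions))   # pick a random agent
    if not neighbors[i]:
        return False                   # isolated agent: nobody to talk to
    j = rng.choice(neighbors[i])       # pick one of its neighbors
    xi, xj = opinions[i], opinions[j]
    if abs(xi - xj) >= d:
        return False                   # opinions too far apart: no update
    # Both agents move toward each other using their pre-interaction opinions.
    opinions[i] = xi + mu * (xj - xi)
    opinions[j] = xj + mu * (xi - xj)
    return True


# Usage: five agents on a complete graph with d = 1 converge to their mean opinion.
rng = random.Random(0)
n = 5
opinions = [rng.random() for _ in range(n)]
initial_mean = sum(opinions) / n
neighbors = [[j for j in range(n) if j != i] for i in range(n)]
for _ in range(1000):
    deffuant_step(opinions, neighbors, d=1.0, mu=0.5, rng=rng)
```

Because both agents move toward each other by the same amount, each interaction conserves the sum of opinions; with $\mu = 0.5$ the two agents meet at the midpoint of their previous opinions.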

To reflect the temporal volume dynamics observed by Restocchi et al. [28], each agent participates in the market on day $t$ with a probability $p(t)$ that depends on the duration $T$ of the market (in days), on the number of days $t$ elapsed from the beginning of the market, and on a scaling parameter $\alpha$ estimated on the same dataset [28]. If agent $i$ is chosen to participate in the market, they can either buy or short sell a contract whose payoff is 1 if $E = 1$ and 0 otherwise. For the sake of simplicity, we assume that there exists only one event, with two possible outcomes. Since agent $i$ believes that $P(E = 1) = x_i$, they will buy a contract only if the current price $p_t < x_i$, and sell (or short sell) it if $p_t > x_i$. They will neither buy nor sell if $p_t = x_i$. Following influential agent-based models of financial markets, we assume that price is purely driven by excess demand. Agents can only buy or sell at the current trading price, and contracts are created whenever necessary. The demand of agent $i$, $D_{i,t}$, is proportional to the signed distance between their opinion and the price:

$$D_{i,t} \propto x_{i,t} - p_t.$$

That is, the more mispriced the agent believes the contract is, the more they will trade. The excess demand ($ED$) is simply the sum of each agent’s demand, multiplied by a noise term $\eta_t$, as follows:

$$ED_t = \eta_t \sum_i D_{i,t},$$

where the noise term $\eta_t$ is specified following [29]. We model the noise as multiplicative rather than additive because an additive noise term would require a quenching term to ensure that the price does not go beyond its boundaries too often. To ensure that this decision does not affect the model’s robustness, we ran extensive simulations with an additive noise term and a quenching term in the excess demand equation, and found that, for suitable values of the quenching term, the two models return qualitatively similar results. However, adding such a quenching term would require additional calibration and optimization, with the risk of overfitting our model; a multiplicative noise term avoids this issue. Importantly, below we show that the empirical properties of the time series obtained from our simulations depend heavily on $d$ and $\mu$, suggesting that the noise term has little impact on the emerging properties of the model. At each trading round, the price is updated depending only on $ED_t$. Since prices are bounded between 0 and 1, they are set to 0 or 1 if they become less than 0 or greater than 1, respectively, following an update. Therefore, $p_{t+1}$ takes the following values:

$$p_{t+1} = \begin{cases} 0 & \text{if } p_t + ED_t \le 0, \\ p_t + ED_t & \text{if } 0 < p_t + ED_t < 1, \\ 1 & \text{if } p_t + ED_t \ge 1, \end{cases}$$

where, without loss of generality, we have included the boundary case $p_t + ED_t = 0$ in the first case (i.e., $\le$).
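A single trading round can be sketched as below. The sketch assumes a unit proportionality constant in the demand, an excess demand equal to the noise term times the summed demand of the participating agents, and an additive price update clipped to $[0, 1]$. The distribution of the noise term follows [29] and is not reproduced here, so the caller supplies it; the uniform draw in the usage lines is only a placeholder. The function name `trading_round` is hypothetical.

```python
import random


def trading_round(price, opinions, participants, eta):
    """Return the price after one trading round (sketch under stated assumptions).

    Assumptions (hypothetical, not taken from the source):
    - demand D_i = x_i - p with a unit proportionality constant;
    - excess demand ED = eta * sum of D_i over participating agents;
    - p_next = p + ED, clipped to [0, 1].
    """
    # Positive demand means the agent buys, negative means it sells.
    demand = [opinions[i] - price for i in participants]
    excess_demand = eta * sum(demand)
    new_price = price + excess_demand
    if new_price <= 0.0:
        return 0.0  # the boundary case p + ED = 0 falls into this branch
    if new_price >= 1.0:
        return 1.0
    return new_price


rng = random.Random(1)
opinions = [rng.random() for _ in range(1000)]
participants = rng.sample(range(1000), 20)  # hypothetical daily participants
eta = rng.uniform(0.0, 0.01)  # placeholder noise, NOT the distribution used in [29]
price = trading_round(0.5, opinions, participants, eta)
```

The three branches mirror the case analysis of the price update; an agent whose opinion equals the current price contributes zero demand, so it neither buys nor sells.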

3. Results

In this section we describe the experimental setting we use to run agent-based simulations of the model, and the results we obtained from such experiments. The simulations are run with all possible combinations of $d$ and $\mu$, which are the only two free parameters in our model, within the ranges $d \in [0, 1]$ and $\mu \in [0, 0.5]$, which represent the whole possible space for the Deffuant model, with a precision of 0.02 units. Our results show that this model provides an accurate and robust description of prediction markets: even under the worst-performing conditions, the synthetic time series produced by our simulations capture, at least to some degree, the emerging properties of prediction markets, such as volatility clustering and absence of autocorrelation of returns [30].
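The parameter sweep can be enumerated on an integer grid, which avoids the floating-point drift that accumulates when repeatedly adding 0.02. This is a sketch assuming $d \in [0, 1]$ and $\mu \in [0, 0.5]$ with both interval endpoints included; the function name `parameter_grid` is hypothetical.

```python
def parameter_grid(step=0.02, d_max=1.0, mu_max=0.5):
    """List every (d, mu) pair on a regular grid, endpoints included."""
    n_d = round(d_max / step)    # number of steps along d
    n_mu = round(mu_max / step)  # number of steps along mu
    # Multiply integer indices by the step instead of accumulating floats.
    return [(round(a * step, 10), round(b * step, 10))
            for a in range(n_d + 1)
            for b in range(n_mu + 1)]


grid = parameter_grid()
print(len(grid))  # 1326
```

This yields 51 values of $d$ and 26 values of $\mu$, i.e., 1326 parameter pairs, each of which is then simulated.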

We tested the model on three different network topologies: a random network, a scale-free network, and a complete graph. We show results obtained on a complete graph (i.e., any agent is free to exchange opinions with any other agent), since we find that these replicate historical data more accurately. However, we do not find any significant difference in the qualitative behaviour of the results, suggesting that (i) price formation does not heavily depend on the network structure and (ii) our model is robust to topological changes. Results on the other two network topologies are shown in Appendix A.

Although it has been demonstrated that, at least under some circumstances, diffusion processes display finite-size effects [14,31], we decided to run experiments with 1000 agents to reflect the scarce liquidity prediction markets usually exhibit [28], with a median of approximately 300 trades per day per market. In fact, if any finite-size effect exists, it must also be displayed by real prediction markets; therefore, by keeping the number of participants low, we can capture such an effect in our simulations. We leave a detailed analysis of the relation between network size and price formation in prediction markets to future work. The choice of using Barabási-Albert networks for our model is motivated by the fact that social networks exhibit hubs and that network topology does not have an influence on the equilibria of the Deffuant model [32].

To specify the other parameters of the simulations, we follow [28,30], who provide a comprehensive quantitative analysis of the empirical properties of prediction markets using the same data set from PredictIt. Specifically, for each combination of $d$ and $\mu$, we run 3385 simulations, which is the number of markets used by Restocchi et al. [28,30] in their analysis, and the duration $T$ of each market is randomly drawn from the empirical distribution of durations observed among these markets. In this way, our simulations produce time series that are directly comparable with historical data on prediction markets. We initialised our agents with a uniform opinion distribution with an average of 0.5, for three reasons. First, these are the initial conditions used in the paper that introduced the Deffuant model, and they are the most widely used in practice. Second, we believe that a uniform distribution with an average of 0.5 constitutes the most “uninformative prior” and, as such, should not introduce a bias in our model; other assumptions, especially those with a different average opinion, could change the results. Given that the initial opinion distribution cannot be calibrated with the existing dataset, we decided to remove what would have been another free parameter of the model. Third, for the sake of simplicity, we assume that the true probability is equal to 0.5 and is constant throughout the market. This assumption reflects the fact that, on PredictIt and other prediction market exchange platforms, it is possible to bet both on an event and on its opposite (i.e., one can buy and sell contracts on the event “Will $E$ happen?” but also on the event “Will $E$ not happen?”).
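The initialization described above can be sketched as follows: opinions are drawn uniformly from $[0, 1)$, so their expected average is 0.5, and market durations are resampled from an empirical list. The list of durations below is hypothetical (in the paper, durations come from the PredictIt markets analysed in [28,30]), and resampling with replacement is an assumption about how durations are “randomly drawn from the empirical distribution”. Function names are illustrative.

```python
import random

N_AGENTS = 1000
N_MARKETS = 3385  # number of PredictIt markets analysed in [28,30]


def initial_opinions(n_agents, rng):
    """Draw opinions uniformly from [0, 1), so their expected mean is 0.5."""
    return [rng.random() for _ in range(n_agents)]


def sample_durations(empirical_durations, n_markets, rng):
    """Resample market durations (in days) with replacement from an empirical list."""
    return rng.choices(empirical_durations, k=n_markets)


rng = random.Random(42)
opinions = initial_opinions(N_AGENTS, rng)
# Hypothetical durations; the real ones come from the PredictIt data set.
durations = sample_durations([30, 60, 90, 180, 365], N_MARKETS, rng)
```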

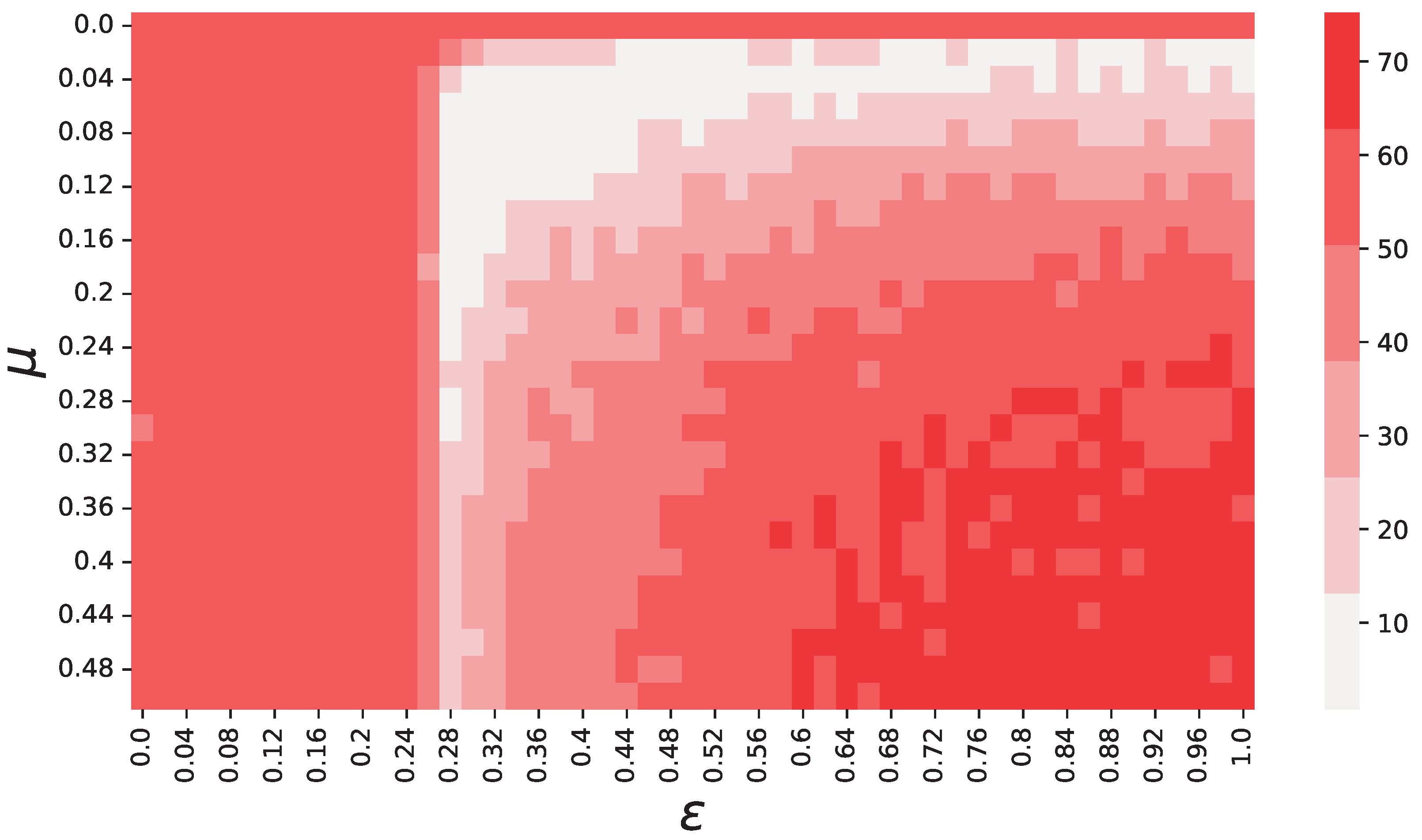

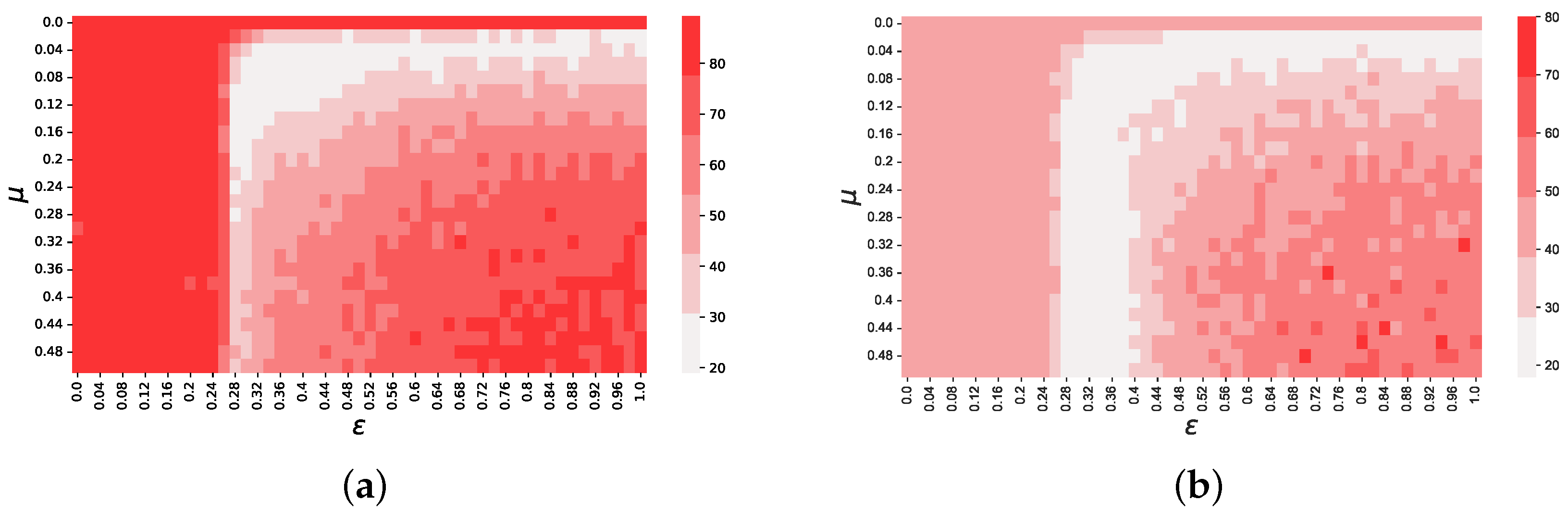

Figure 1.

Objective function values (see Equation (5)) depending on $d$ and $\mu$. These results show that there is a region of the $(d, \mu)$ plane where the objective function $f$ reaches its minima. Each color used in this figure represents an interval of 12.5 for $f$. We chose to discretize the colors to smooth our results over noise and to make the regions easily recognizable.


To find the optimal values for the pair

,

, we follow [

33] and define the following objective function:

where

and

represent the kurtosis value of the distribution of the returns of all 3385 markets, for simulated and historical data, respectively. For the second term, rather than using the value of ARCH(1), i.e., the first autoregressive term of the time series, as suggested by Gilli and Winker, we use the value of the scaling parameter

that describes the power-law decay of the autocorrelation function of absolute returns. Since the two terms in (

5) can significantly differ in magnitude, to ensure that no component in the objective function outweighs the other, Gilli and Winker suggest that the second term, in our case

, is multiplied by a constant

that rescales its magnitude. For our data set, Restocchi et al. [

30] found that

and

, from which we derive

. We choose to use

, instead of the first-order autocorrelation term

a, because we believe this gives the calibration a better accuracy. Specifically, we also tried to calibrate the model by using two different functions, namely

, where

, and

, and found that

exhibits a qualitatively similar behavior to

f, without adding information. Also, we found that by using

, as suggested by Gilli and Winker, reduces the sensitivity of the objective function to

and

, and generates two regions of local minima in which values are not significantly different. These results are shown by

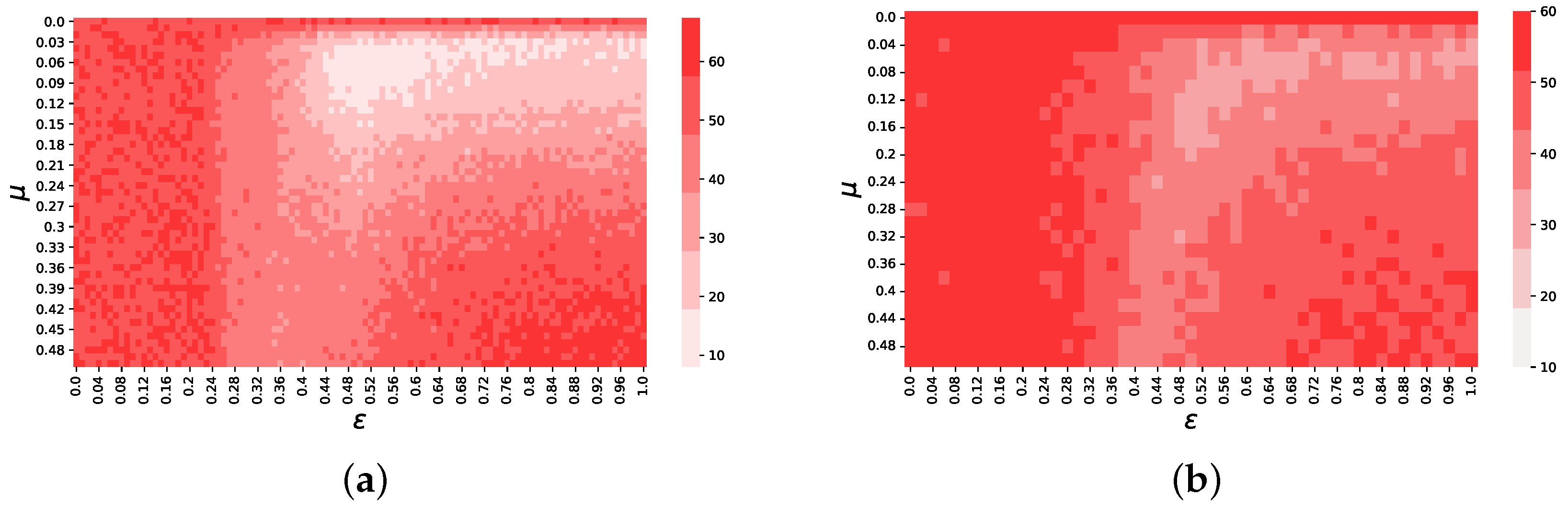

Figure 2.
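A minimal sketch of the calibration objective is shown below. It assumes that the objective uses absolute differences, that $k$ is the (non-excess) fourth standardized moment of the pooled returns, and that the rescaling constant $w$ is supplied by the caller rather than derived here; these are assumptions, not the paper’s stated formulas. The function names `kurtosis` and `objective` are hypothetical.

```python
def kurtosis(xs):
    """Fourth standardized moment of a sample (equals 3 for a Gaussian)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n  # population variance
    m4 = sum((x - mean) ** 4 for x in xs) / n  # fourth central moment
    if m2 == 0:
        raise ValueError("kurtosis is undefined for a constant sample")
    return m4 / m2 ** 2


def objective(k_sim, beta_sim, k_hist, beta_hist, w):
    """Gilli-Winker-style objective: kurtosis gap plus rescaled beta gap.

    Assumes absolute differences; w rescales the beta term so that
    neither term dominates.
    """
    return abs(k_sim - k_hist) + w * abs(beta_sim - beta_hist)
```

In a calibration loop, `objective` would be evaluated once per $(d, \mu)$ pair of the parameter grid, using statistics pooled over all simulated markets, and the pair with the lowest value would be retained.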

The objective function values computed from our simulations for each pair $(d, \mu)$ are displayed by the heatmap in Figure 1. This figure clearly shows a single region where $f$ is significantly lower than in the rest of the parameter space. This region also includes the global minimum.

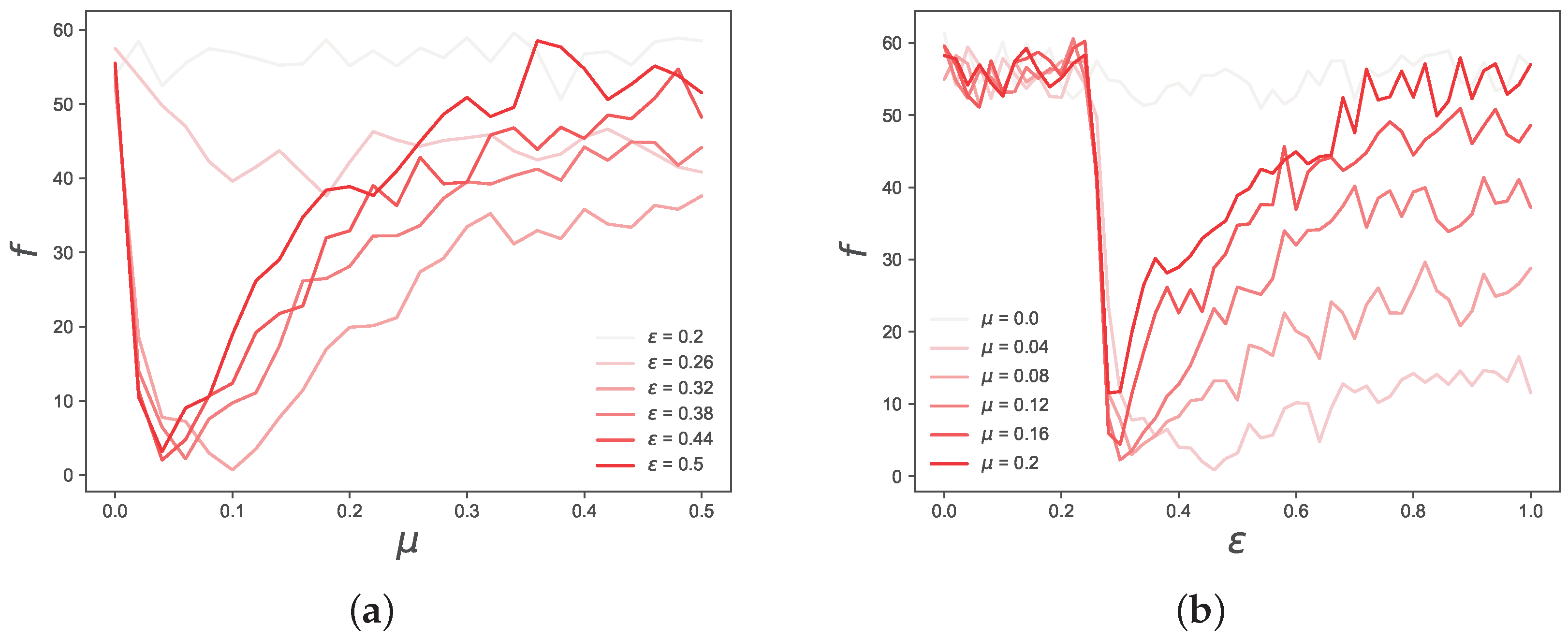

It is also interesting to note that $f$ displays a regular behavior when varying $d$ and $\mu$. To better visualize this, in Figure 3 we cut the objective function space into slices and show the behavior of $f$ as a function of each parameter separately, for a few values of the other parameter around its optimal value. These plots reveal two regularities. First, the right-hand side plot shows a sharp transition from high to low values of $f$ within a narrow parameter range, and this behavior is largely independent of the other parameter. Second, the left-hand side plot shows that $f$ always has a minimum within a narrow parameter range but, depending on the other parameter, this minimum is more or less pronounced, and for some values it almost disappears.

These results suggest that both

and

have a significant impact on the objective function, i.e., both parameters contribute in shaping the statistical properties of the time series generated by the model. This is expected, since, within short time horizons, they both contribute towards the emergence of consensus, and the speed at which this happens. For instance, for high values of

and

, consensus is reached too soon, and the generated time series become less accurate, as suggested by the high value of

f in the region

. However, our results suggest that

has a greater impact on

f than

. Specifically, we observe that there is one region, delimited by

, for which the objective function value becomes particularly high. Interestingly, this is the same value of

below which consensus on a single opinion is not reached in the Deffuant model, and two or more opinions coexist at equilibrium [

11], suggesting a relation between the accuracy of our model and whether there coexist one or more opinion clusters in the underlying opinion dynamics model. These results suggest that the quantitative accuracy at reproducing

and

heavily depends on whether there is a single opinion cluster existing at equilibrium, and on the time it takes for opinions to converge. Further evidence is represented by the results shown in

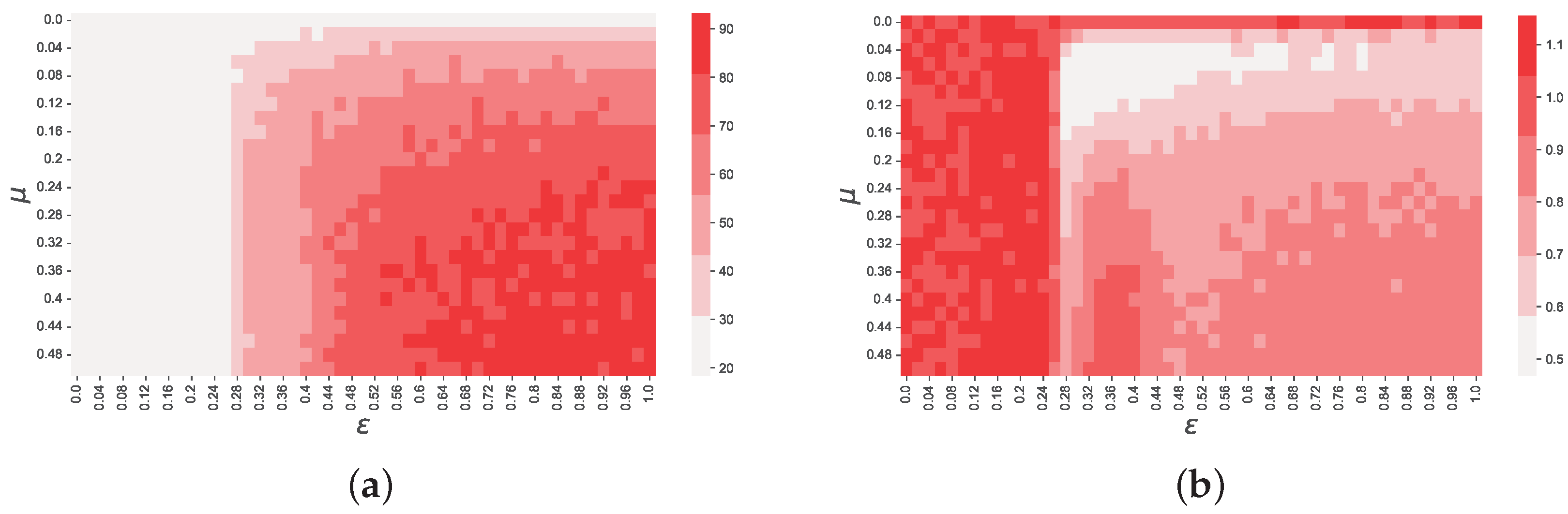

Figure 4, in which we show the dependence of

k and

(

Figure 4b) on

and

.

The results shown in Figure 4a suggest that the kurtosis of the distribution of raw returns is affected by both $d$ and $\mu$, but loses its dependence on $\mu$ for low values of $d$, that is, in the region where multiple opinions coexist at equilibrium. However, the dependence on $\mu$ for higher values of $d$ suggests that, when only one opinion exists at equilibrium, the kurtosis depends strongly on the time it takes to reach consensus. Not surprisingly, the shorter the time to reach consensus, the higher the kurtosis: once consensus is reached, only a few agents trade, and those who trade have low absolute values of demand, since their opinion is close to the mean. Similarly, Figure 4b shows that $\beta$ seems to depend mostly on $d$, but exhibits a far sharper phase transition than $k$, with its value decreasing significantly across the transition. Also, Figure 4b shows that, similarly to $k$, in the single-cluster region $\beta$ depends mainly on $\mu$, and its value becomes larger the faster opinions converge.

These results are further evidence that the accuracy of our model depends on whether there is a single opinion cluster or more, except for the region of high $d$ and $\mu$, where consensus is reached early in the market and little or no trading occurs after a certain point. Finally, Figure 4 shows that, for any pair $(d, \mu)$, the time series generated by our simulations exhibit excess kurtosis and volatility clustering, which are the two main features of prediction market time series we want to replicate. This is important because the optimal values of $d$ and $\mu$ can change significantly depending on the data set used for calibration, but our results suggest that our model is capable of reproducing, at least qualitatively, the empirical properties of prediction markets regardless of the value of its parameters. However, it is important to note that combinations of parameters far from the optimum, although they still show the emergence of the discussed stylized facts, are quantitatively far off from historical data.

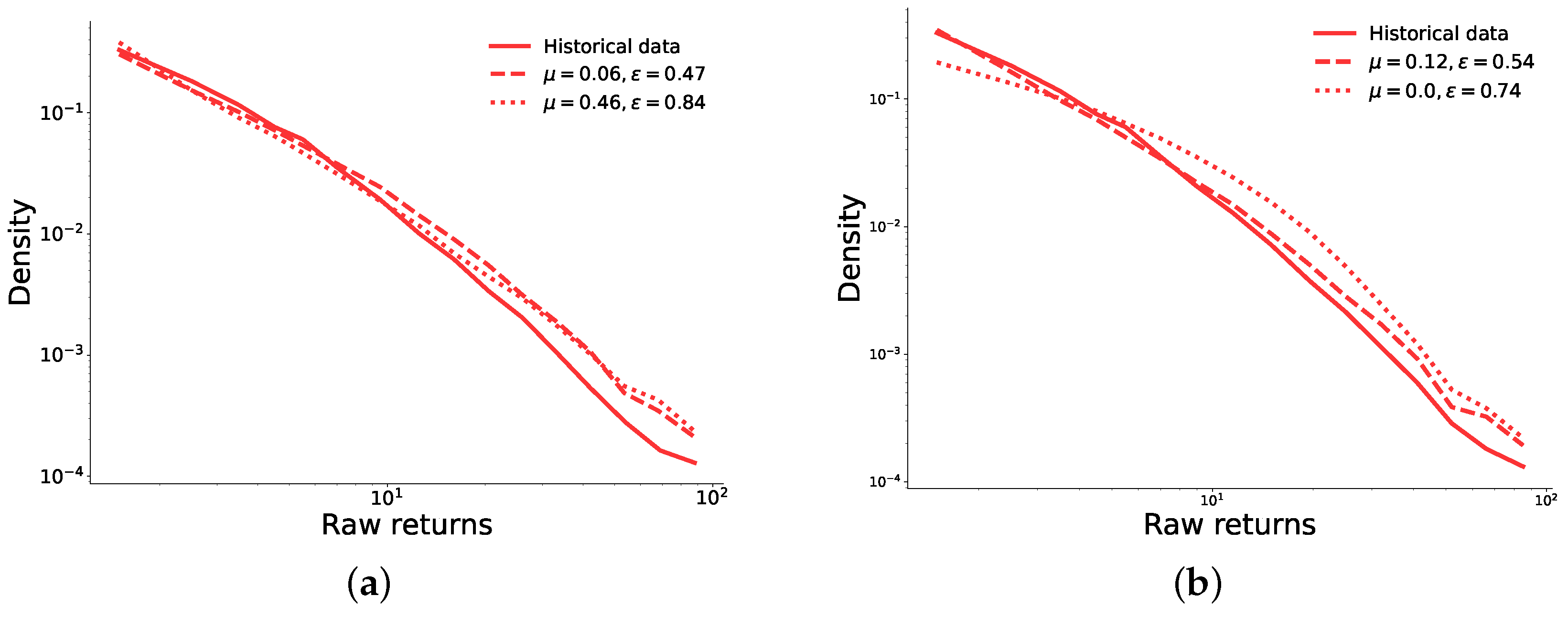

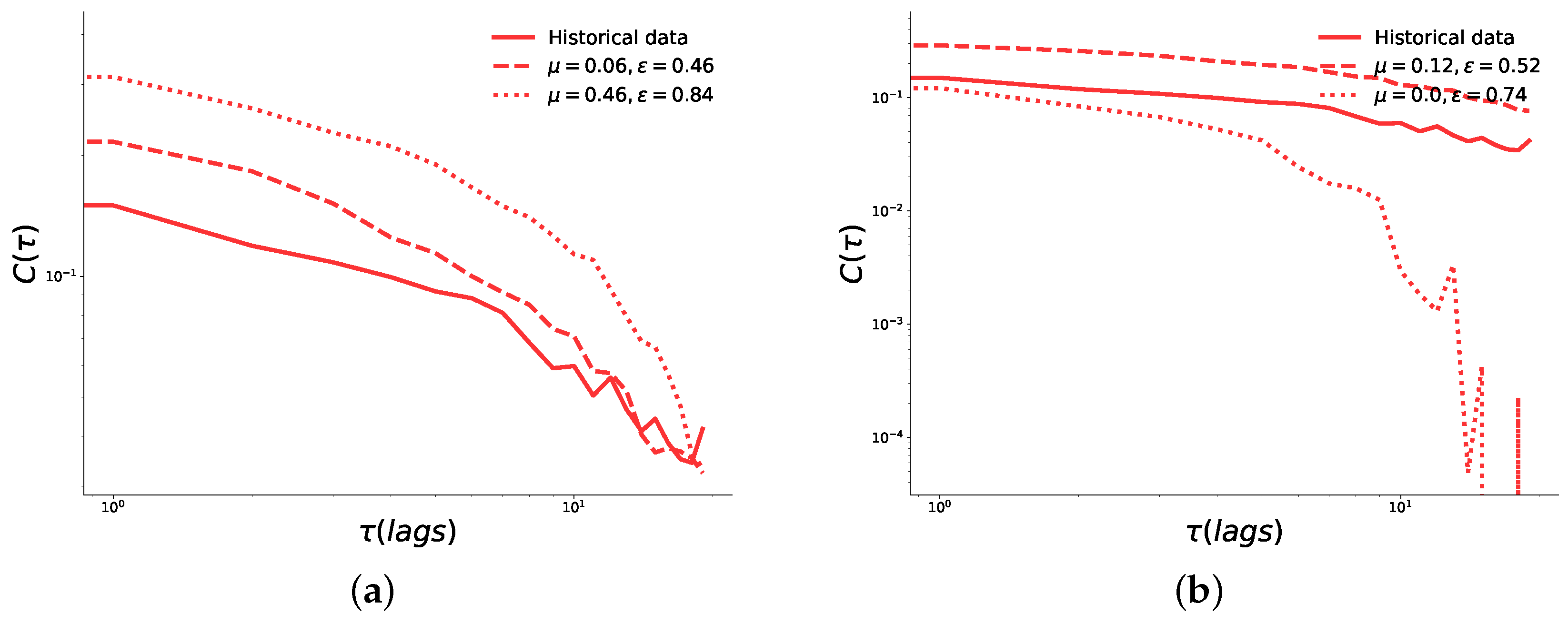

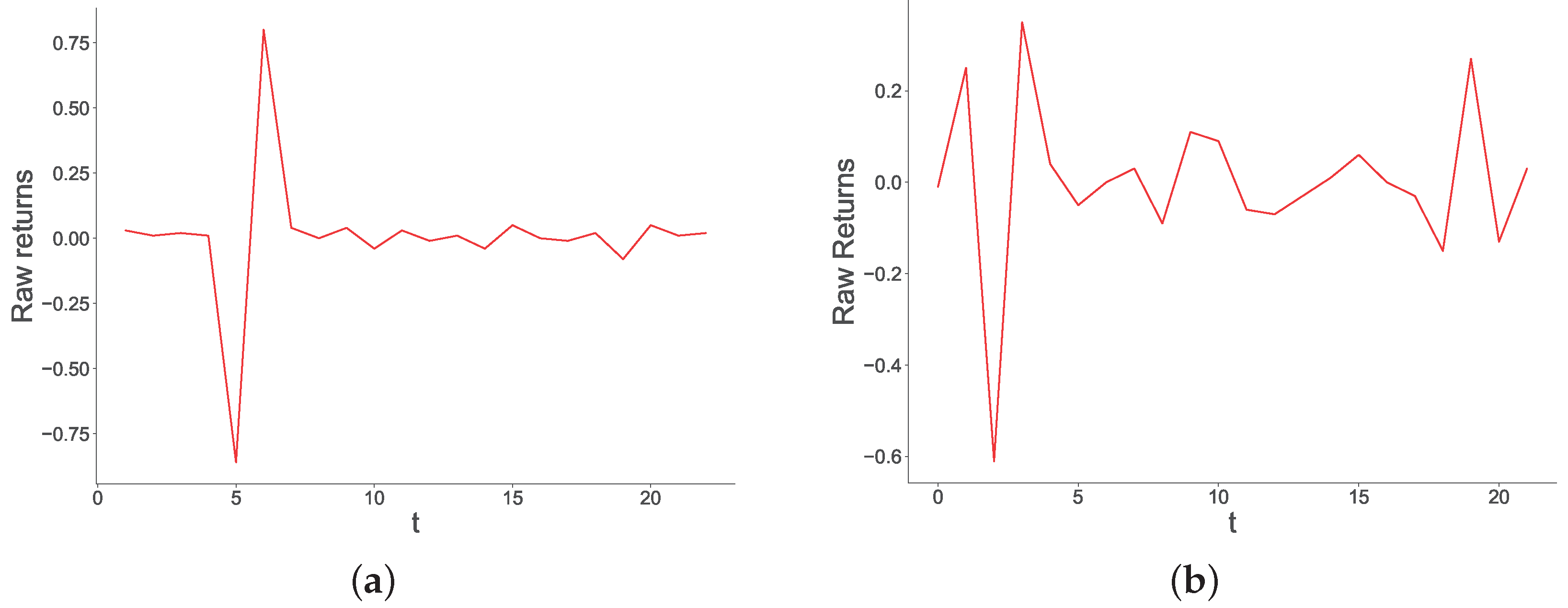

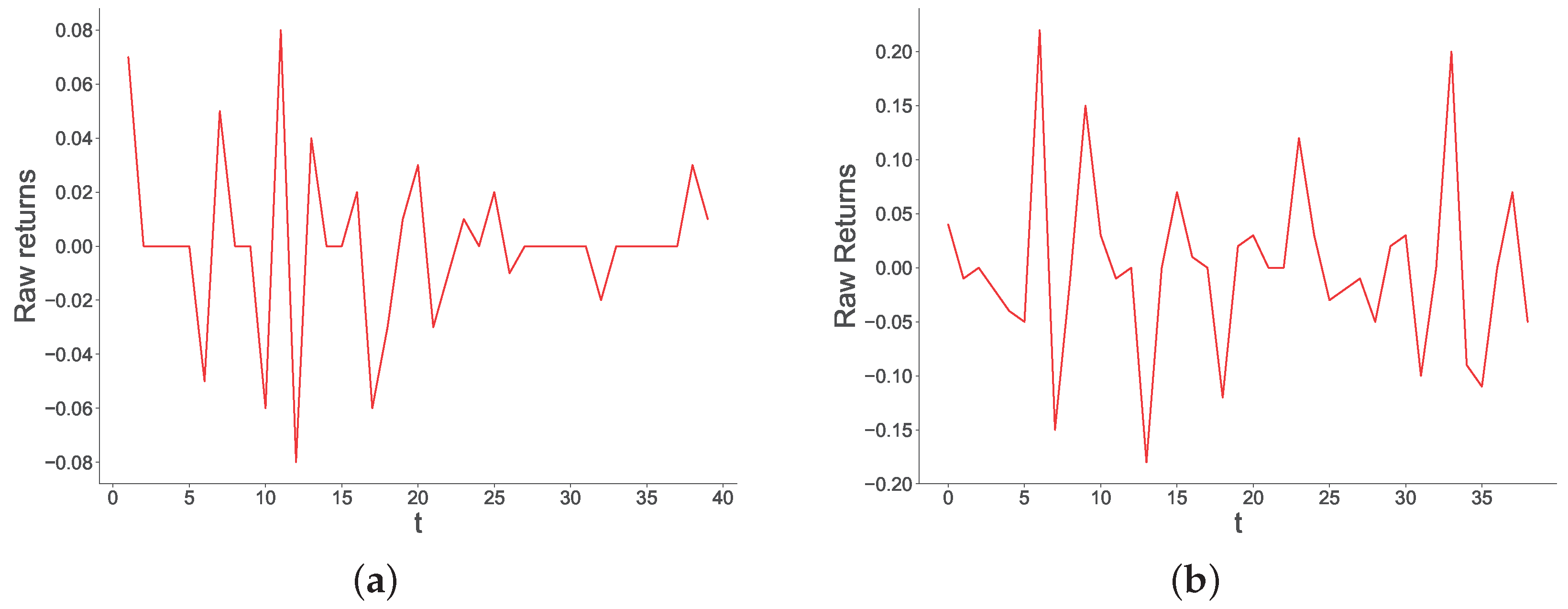

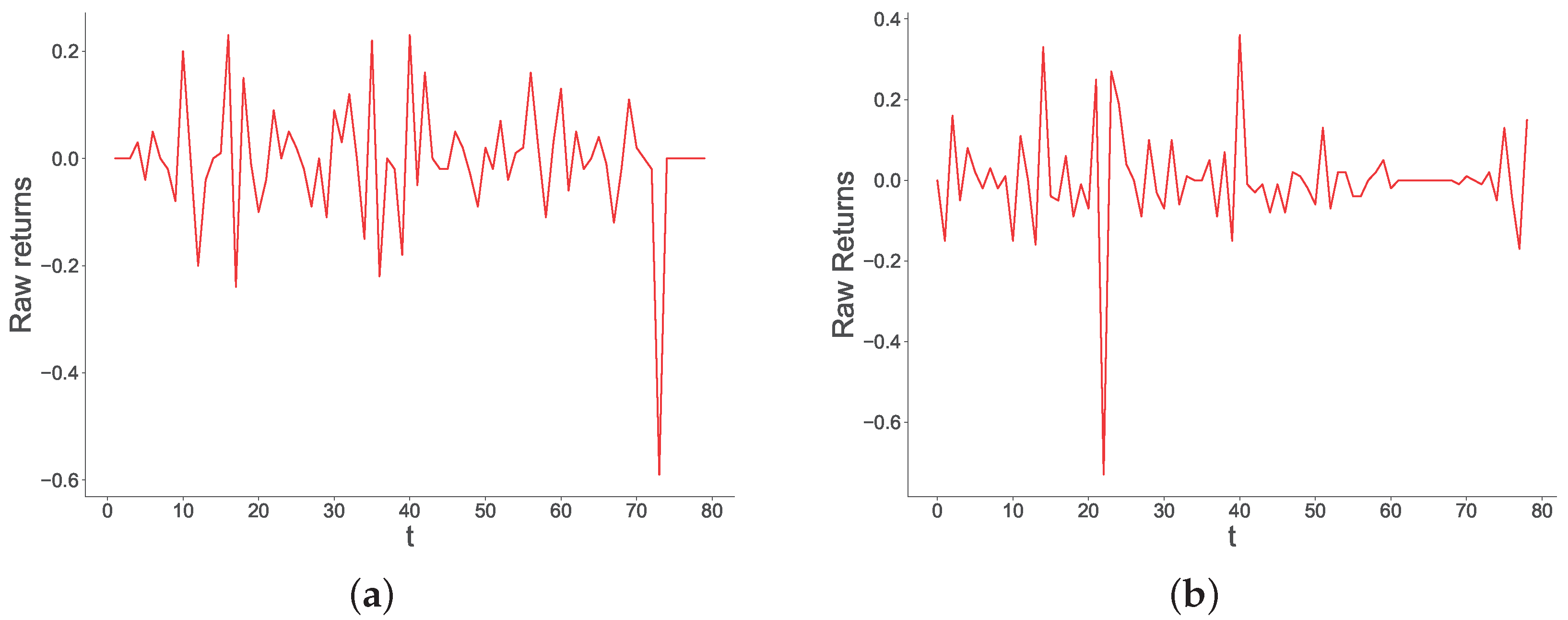

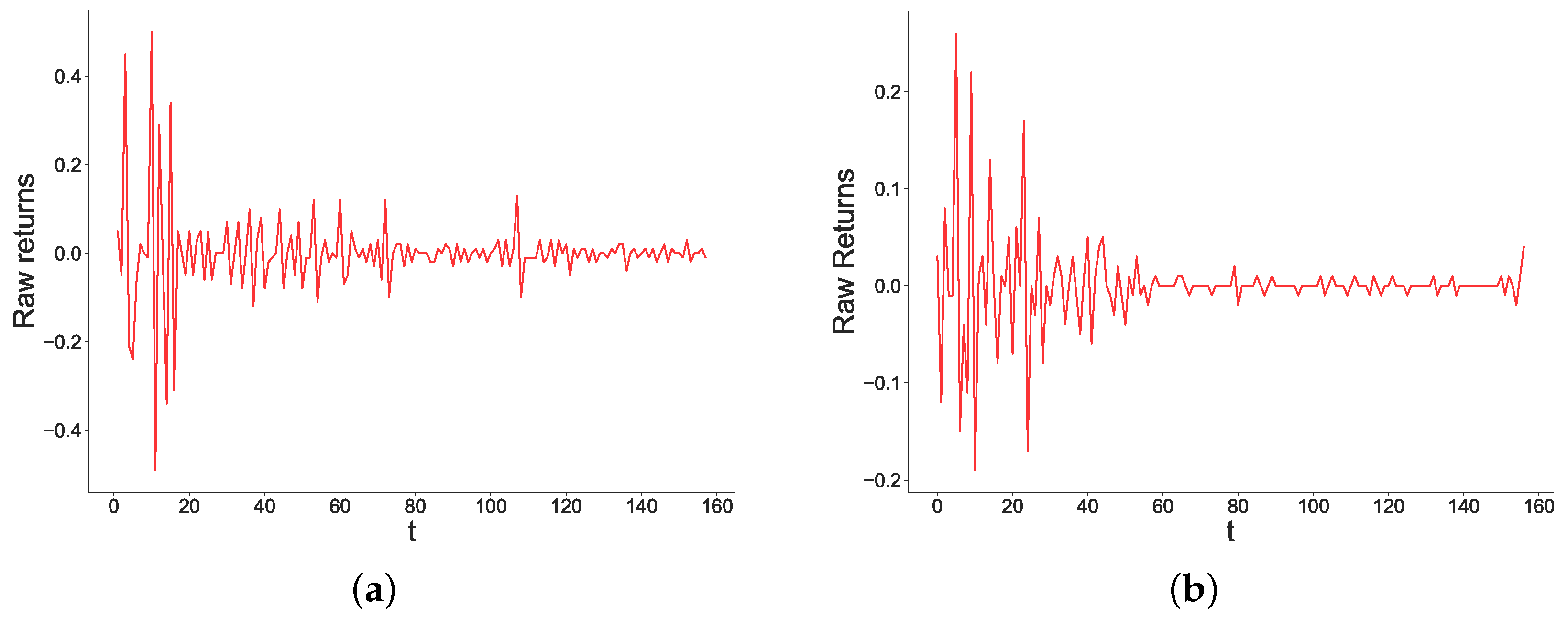

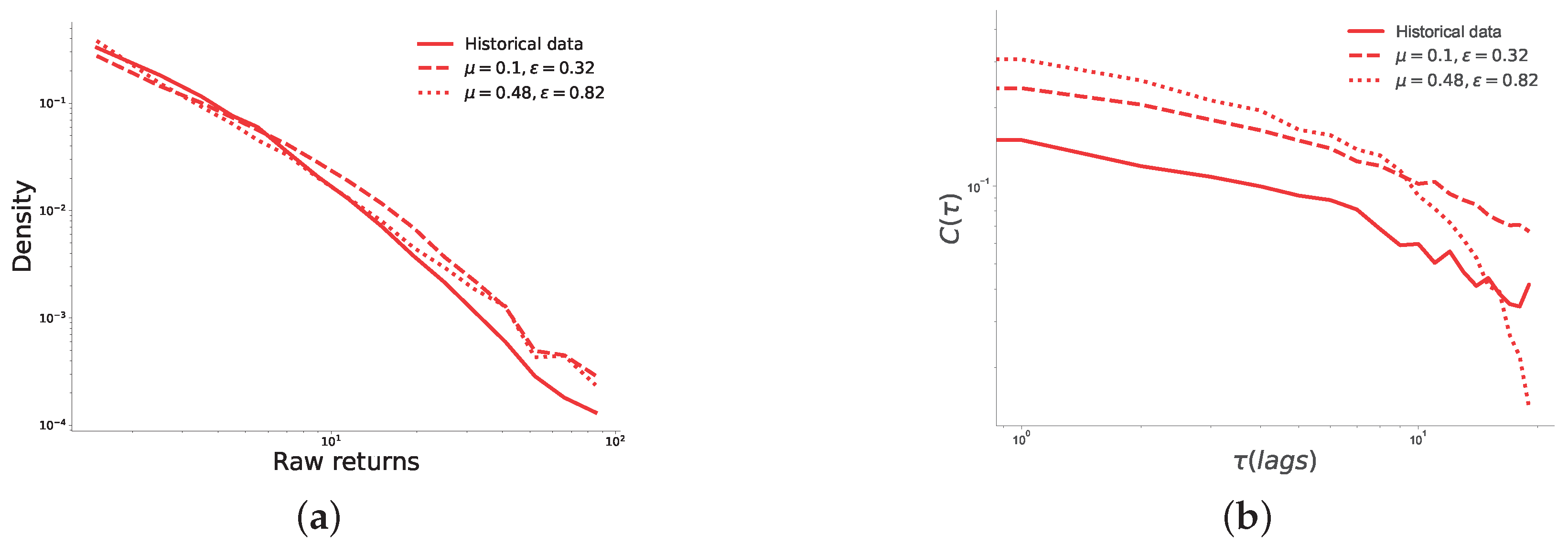

Figure 5 shows two comparisons between historical data and simulation results, obtained with the best and the worst parameter configurations, respectively. The comparisons are based on the autocorrelation of absolute returns and the probability density function of absolute returns, which are commonly used metrics to describe time series in financial markets [30,34]. From this figure we observe that both the best and worst configurations generate distributions of returns that are similar to the historical one, only with slightly heavier tails. Results displayed in Figure 5 also suggest that, whereas time series generated by the best configuration perfectly match the decay slope of the absolute return autocorrelation function, those generated by the worst configuration do not match historical data accurately. Indeed, although even in this case the autocorrelation function exhibits a long tail, its values drop quickly when the lag considered is greater than 10 days. This is because, for the worst-performing pair of values, the underlying opinion dynamics process converges to one opinion cluster far earlier than the end of the market for most market durations. This causes price changes to be very small or zero, in contrast to the beginning of the market, when price changes are larger due to the higher heterogeneity of opinions.
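The two ingredients of this comparison, the sample autocorrelation of absolute returns and the decay exponent $\beta$ of a power law $C(h) \propto h^{-\beta}$, can be sketched as below. Estimating $\beta$ by least squares on log-log axes is one common choice and an assumption here; the source does not state which estimator [30] uses. The function names are hypothetical.

```python
import math


def autocorrelation(xs, lag):
    """Sample autocorrelation of xs at a given non-negative lag."""
    n = len(xs)
    if not 0 <= lag < n:
        raise ValueError("lag must satisfy 0 <= lag < len(xs)")
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs)
    if var == 0:
        raise ValueError("autocorrelation is undefined for a constant series")
    cov = sum((xs[t] - mean) * (xs[t + lag] - mean) for t in range(n - lag))
    return cov / var


def powerlaw_exponent(lags, acf_values):
    """Estimate beta in acf ~ lag**(-beta) by least squares on log-log axes.

    Only points with a positive lag and a strictly positive autocorrelation are used.
    """
    pts = [(math.log(h), math.log(c))
           for h, c in zip(lags, acf_values) if h > 0 and c > 0]
    if len(pts) < 2:
        raise ValueError("need at least two positive points")
    mx = sum(x for x, _ in pts) / len(pts)
    my = sum(y for _, y in pts) / len(pts)
    slope = (sum((x - mx) * (y - my) for x, y in pts)
             / sum((x - mx) ** 2 for x, _ in pts))
    return -slope  # a decaying power law has a negative log-log slope
```

Given a list `returns` of price changes, one would compute `abs_r = [abs(r) for r in returns]`, evaluate `autocorrelation(abs_r, h)` for lags `h = 1, 2, ...`, and pass the results to `powerlaw_exponent`; a quick drop of the autocorrelation beyond a certain lag, as described for the worst configuration, would show up as points falling below the fitted line.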