Submitted: 07 August 2023

Posted: 08 August 2023

Abstract

Keywords:

1. Introduction

2. Theoretical Analysis

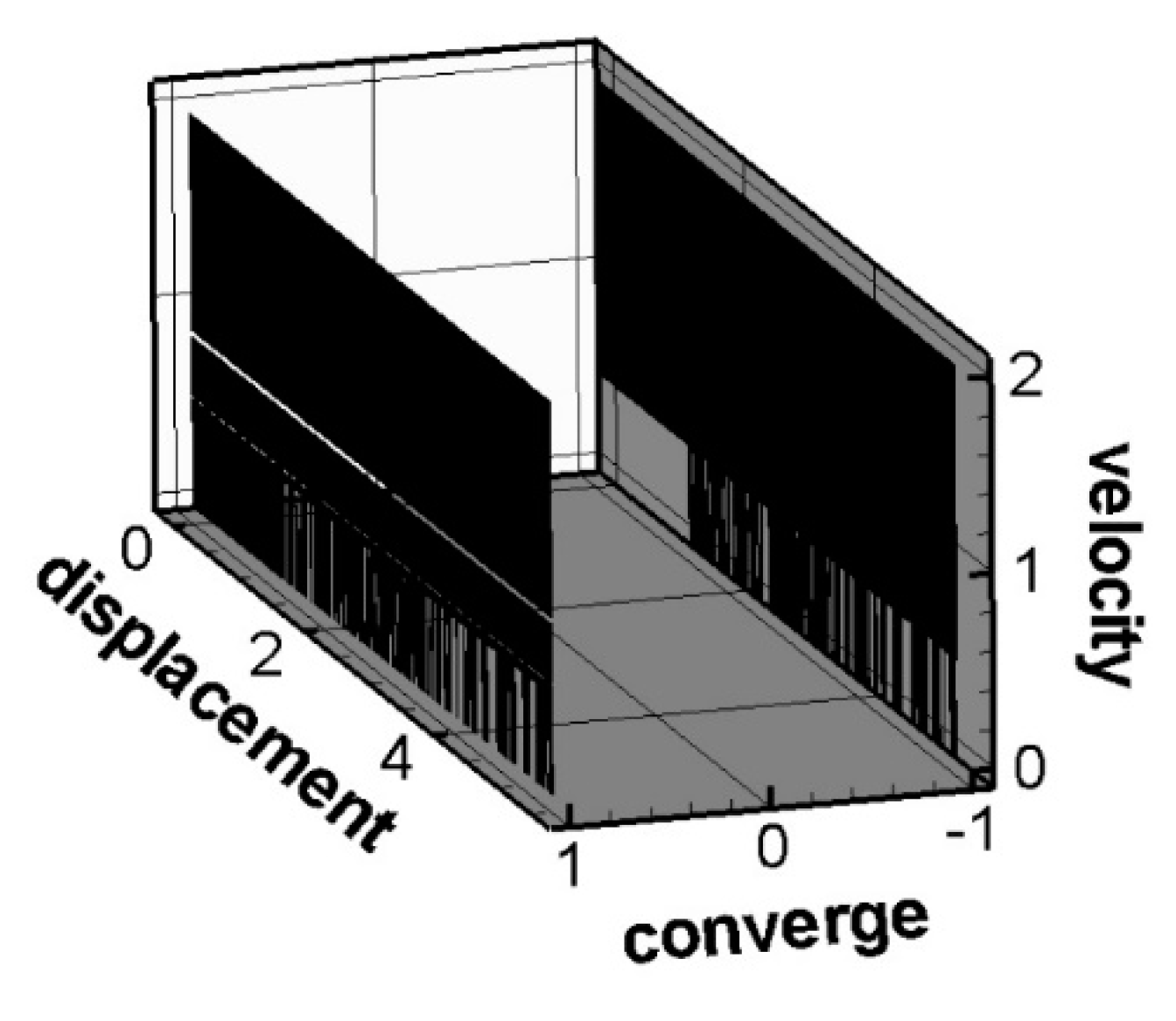

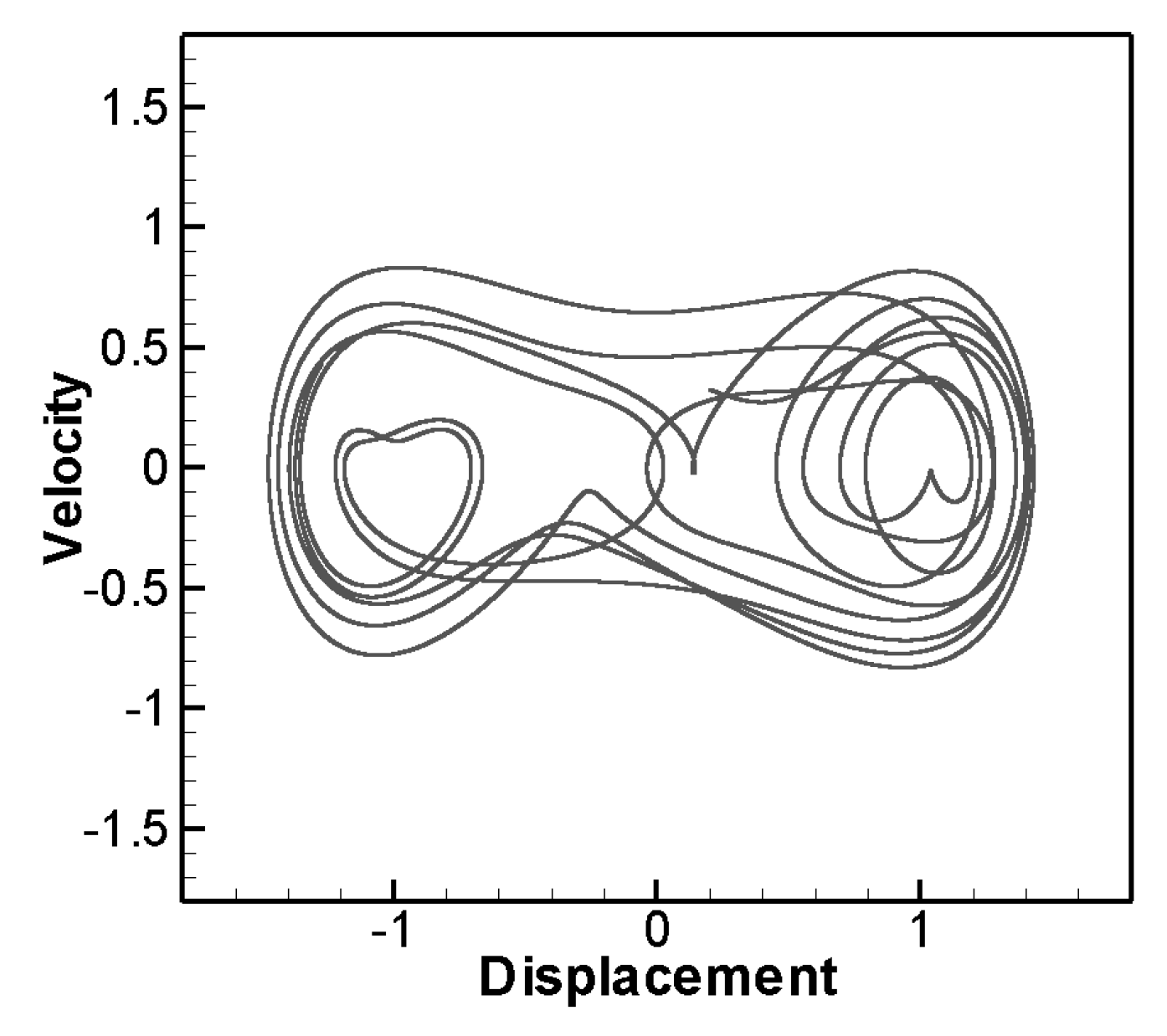

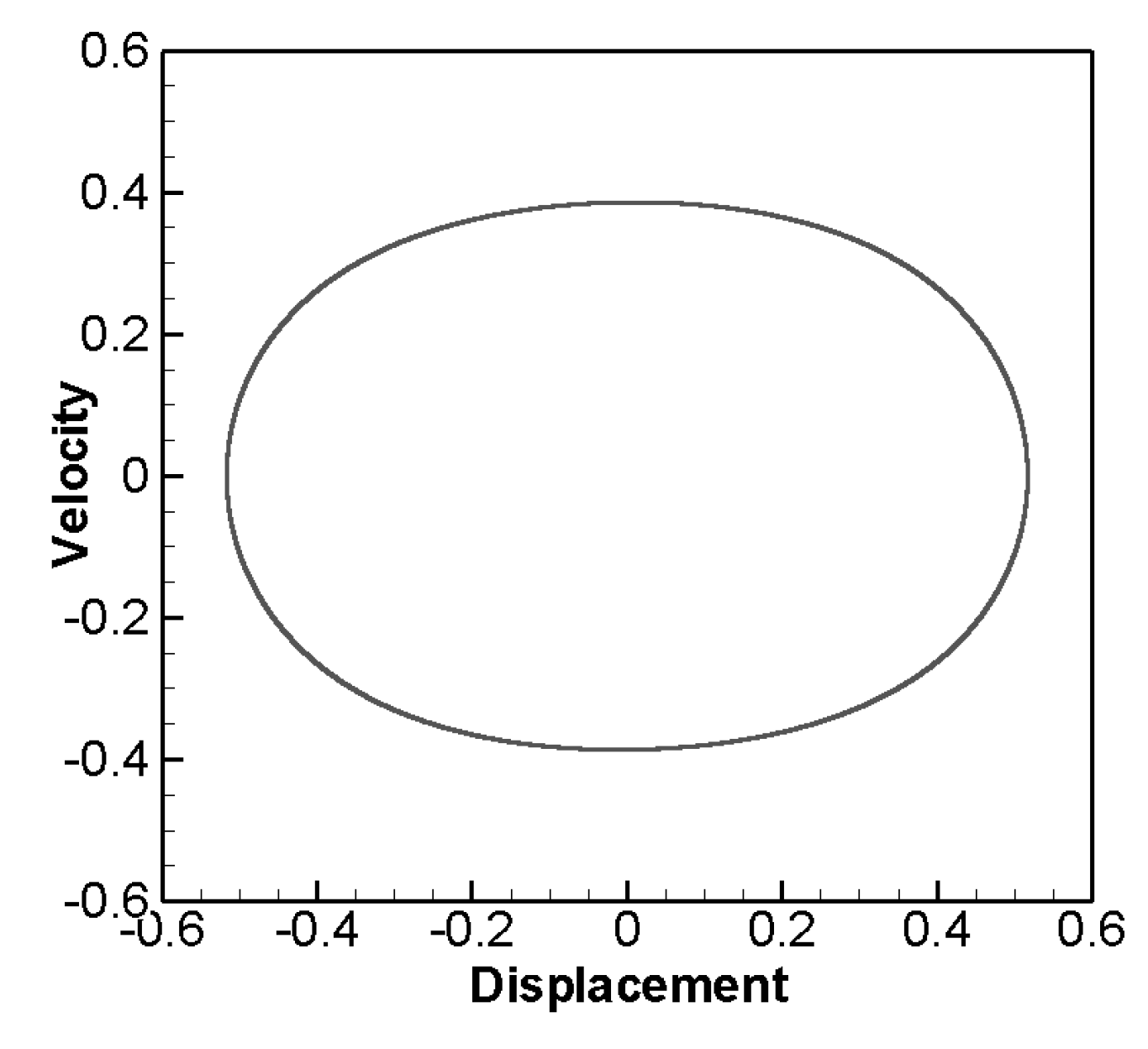

2.1. Duffing equation

2.2. Numerical solution of fourth-order Runge-Kutta method

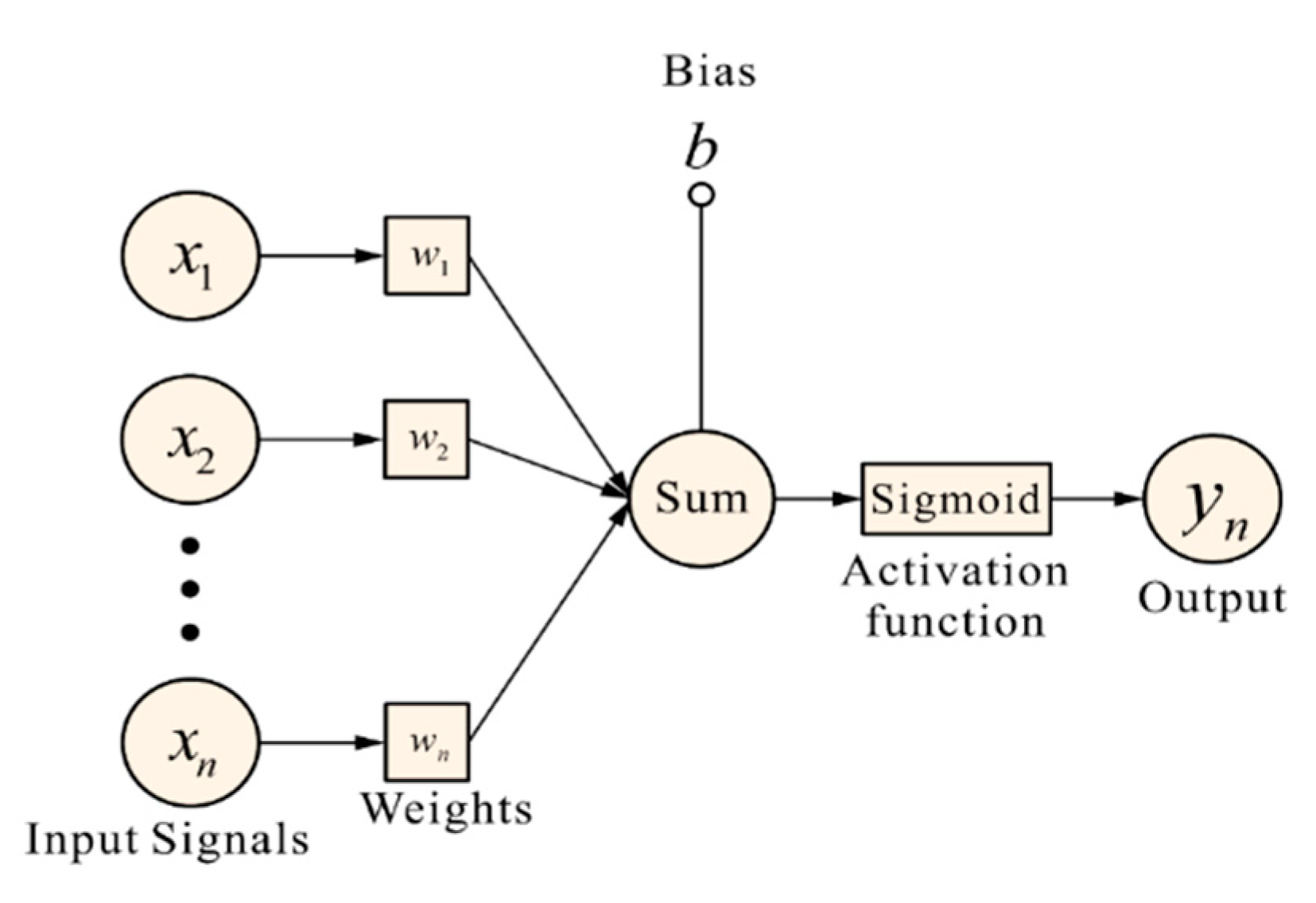
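As a minimal sketch of the fourth-order Runge-Kutta scheme named in this section, the following assumes the Duffing equation in the common form $\ddot{x} + c\dot{x} + kx + \beta x^3 = Q\cos(\omega t)$. The nonlinear coefficient `beta`, the forcing frequency `omega`, the step size `h`, and all function names are illustrative assumptions and are not taken from this study.

```python
import math


def duffing_rhs(t, x, v, c, k, beta, q, omega):
    """Return (dx/dt, dv/dt) for x'' + c x' + k x + beta x^3 = q cos(omega t).

    The equation form and parameter names are assumptions for illustration.
    """
    return v, q * math.cos(omega * t) - c * v - k * x - beta * x ** 3


def rk4_step(t, x, v, h, params):
    """Advance the state (x, v) by one classical RK4 step of size h."""
    k1x, k1v = duffing_rhs(t, x, v, *params)
    k2x, k2v = duffing_rhs(t + h / 2, x + h / 2 * k1x, v + h / 2 * k1v, *params)
    k3x, k3v = duffing_rhs(t + h / 2, x + h / 2 * k2x, v + h / 2 * k2v, *params)
    k4x, k4v = duffing_rhs(t + h, x + h * k3x, v + h * k3v, *params)
    x_next = x + h / 6 * (k1x + 2 * k2x + 2 * k3x + k4x)
    v_next = v + h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
    return x_next, v_next


def integrate(x0, v0, params, h=0.01, steps=1000):
    """Integrate from t = 0 and return the list of (t, x, v) samples.

    params is the tuple (c, k, beta, q, omega).
    """
    t, x, v = 0.0, x0, v0
    history = [(t, x, v)]
    for _ in range(steps):
        x, v = rk4_step(t, x, v, h, params)
        t += h
        history.append((t, x, v))
    return history
```

Setting `c = 0` and `q = 0` in `params` gives the undamped free vibration case; for example, `integrate(1.0, 0.0, (0.0, 1.0, 1.0, 0.0, 1.0))` traces one such trajectory, whose maximum and minimum displacement and velocity could then be read from the returned samples.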

3. Deep Learning

3.1. Database establishment

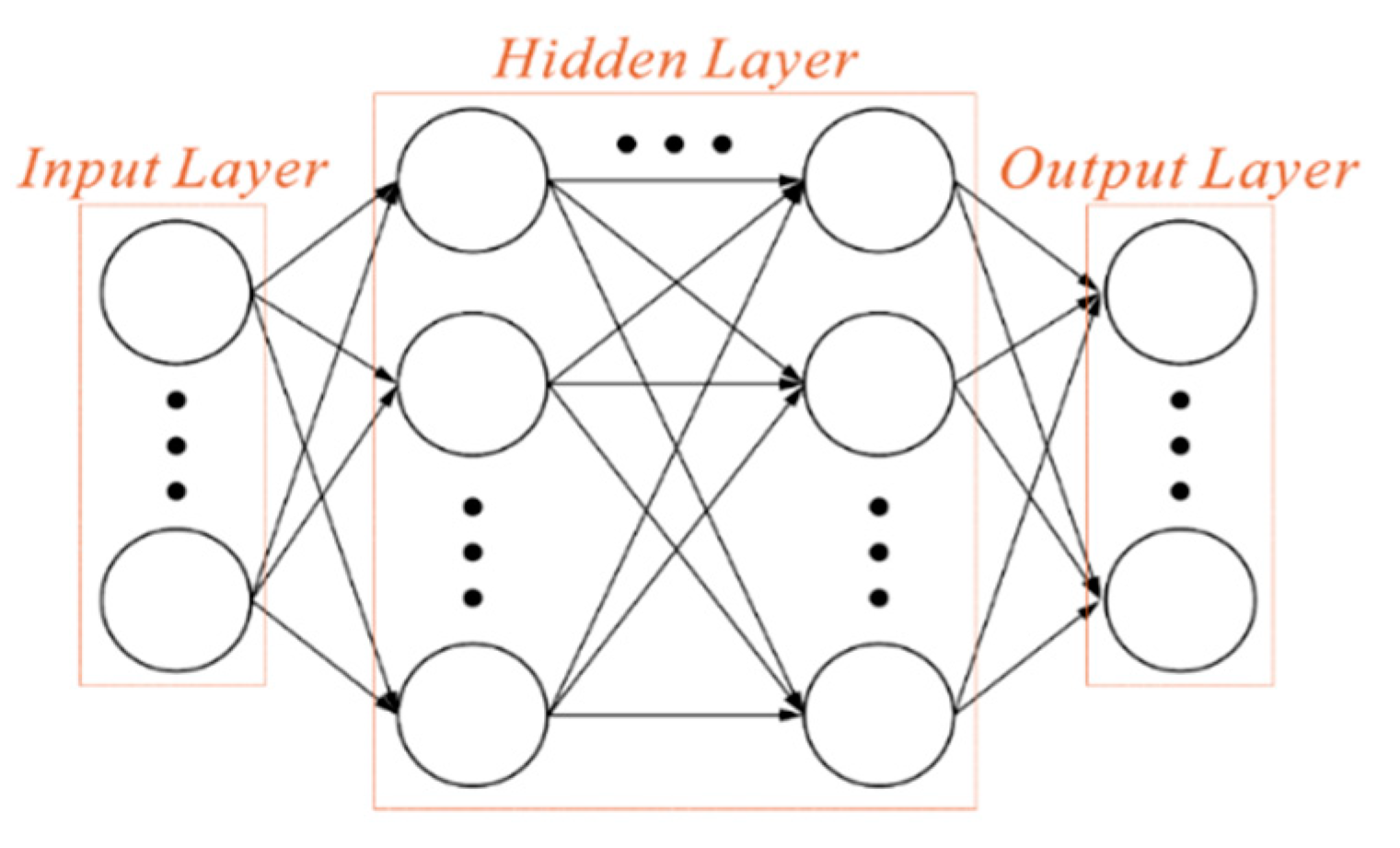

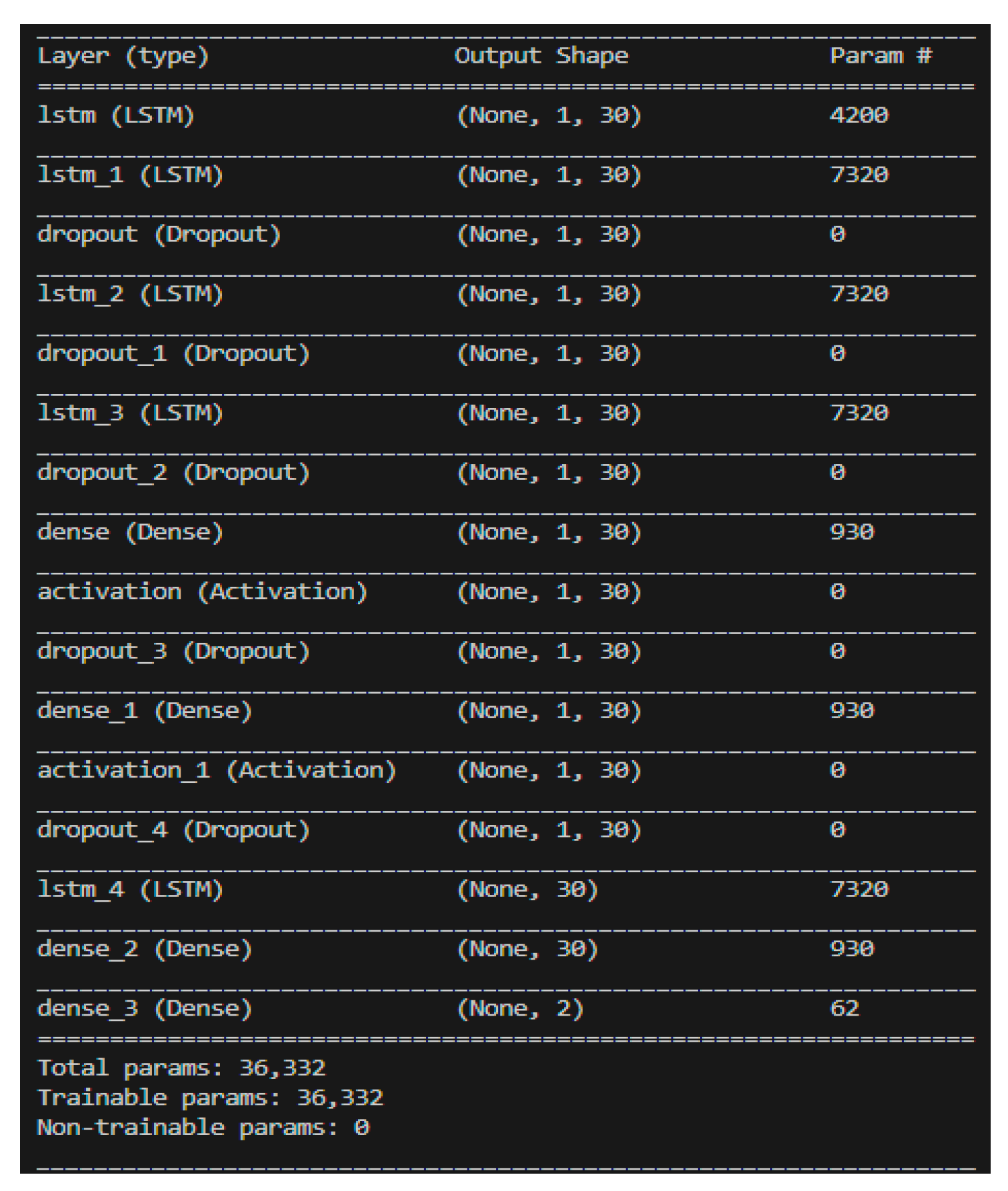

3.2. Deep Neural Network Architecture

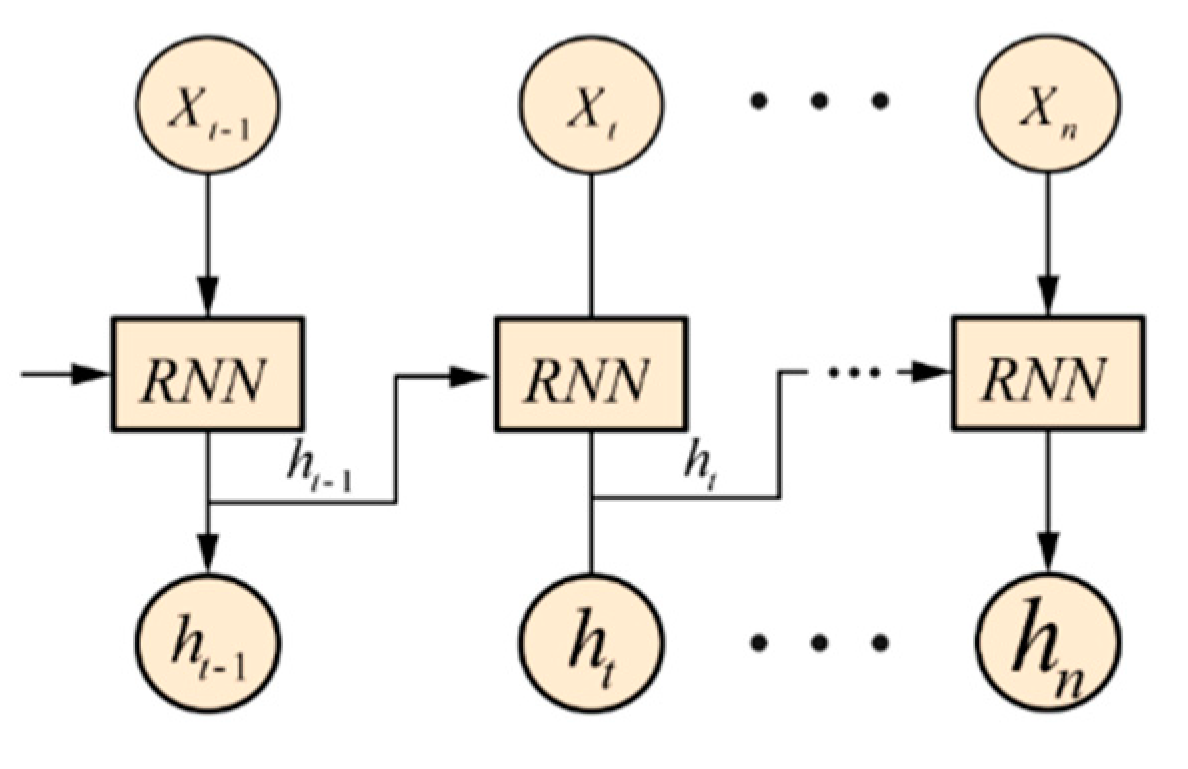

3.3. The basic structure of LSTM
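For orientation, a single time step of a standard LSTM cell (forget, input, and output gates plus a candidate cell state) can be sketched in plain Python as below. This is the textbook formulation, not necessarily the exact variant or library implementation used in this study; the helper names and the `params` layout are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic function used by the three gates."""
    return 1.0 / (1.0 + math.exp(-z))


def affine(weights, bias, vector):
    """Compute weights @ vector + bias for plain Python lists."""
    return [sum(w * a for w, a in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step.

    params maps a gate name to (weights, bias); each weight matrix acts on
    the concatenation [h_prev, x]. This layout is an illustrative assumption.
    """
    z = h_prev + x  # list concatenation of previous hidden state and input
    f = [sigmoid(a) for a in affine(*params["forget"], z)]       # forget gate
    i = [sigmoid(a) for a in affine(*params["input"], z)]        # input gate
    o = [sigmoid(a) for a in affine(*params["output"], z)]       # output gate
    g = [math.tanh(a) for a in affine(*params["candidate"], z)]  # candidate state
    # New cell state: keep part of the old state, add part of the candidate.
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    # New hidden state: gated, squashed view of the cell state.
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c
```

The cell state `c` is what lets the network carry information across many time steps, while the gates decide what to discard, what to write, and what to expose as the hidden state `h`.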

4. Deep learning training analysis

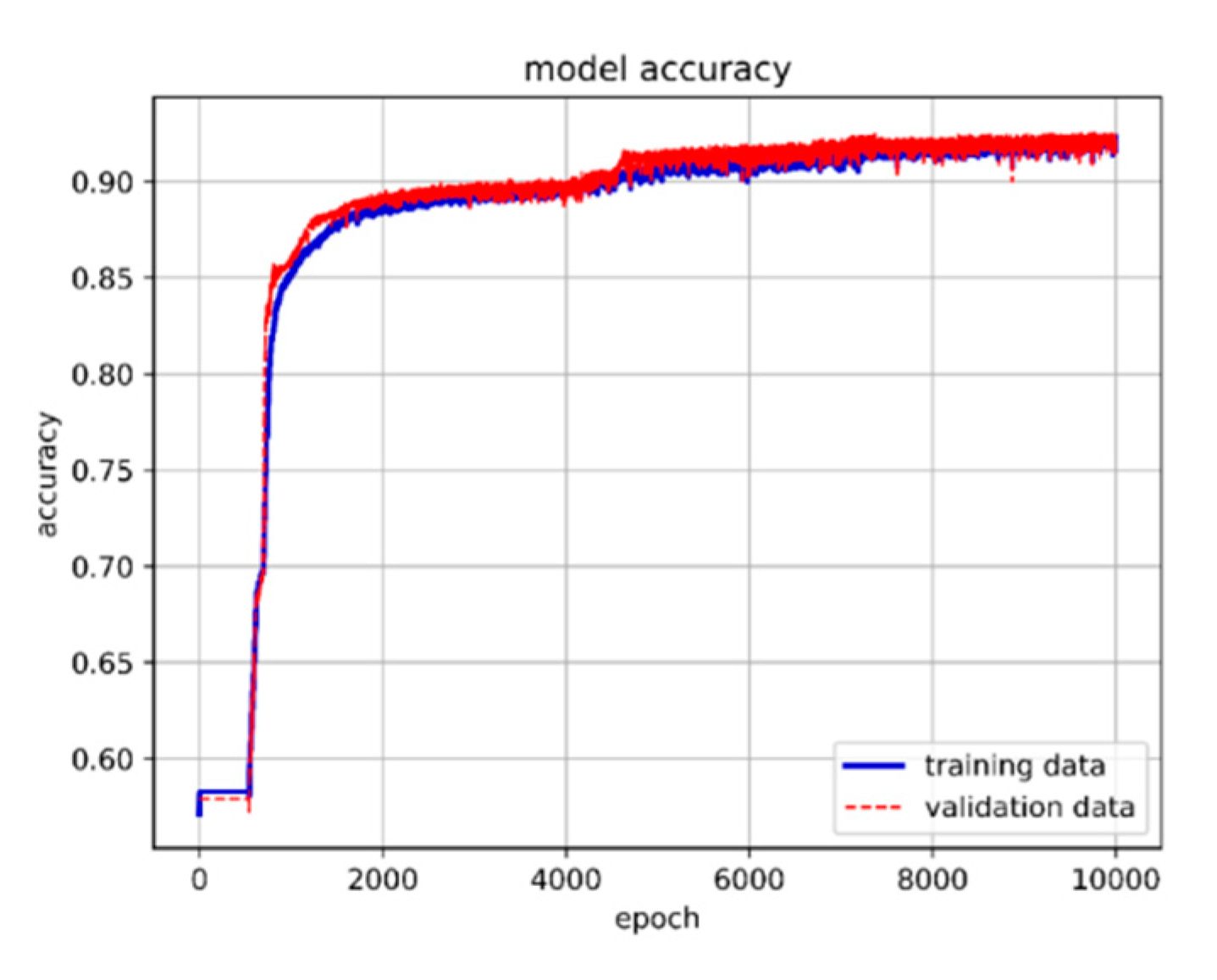

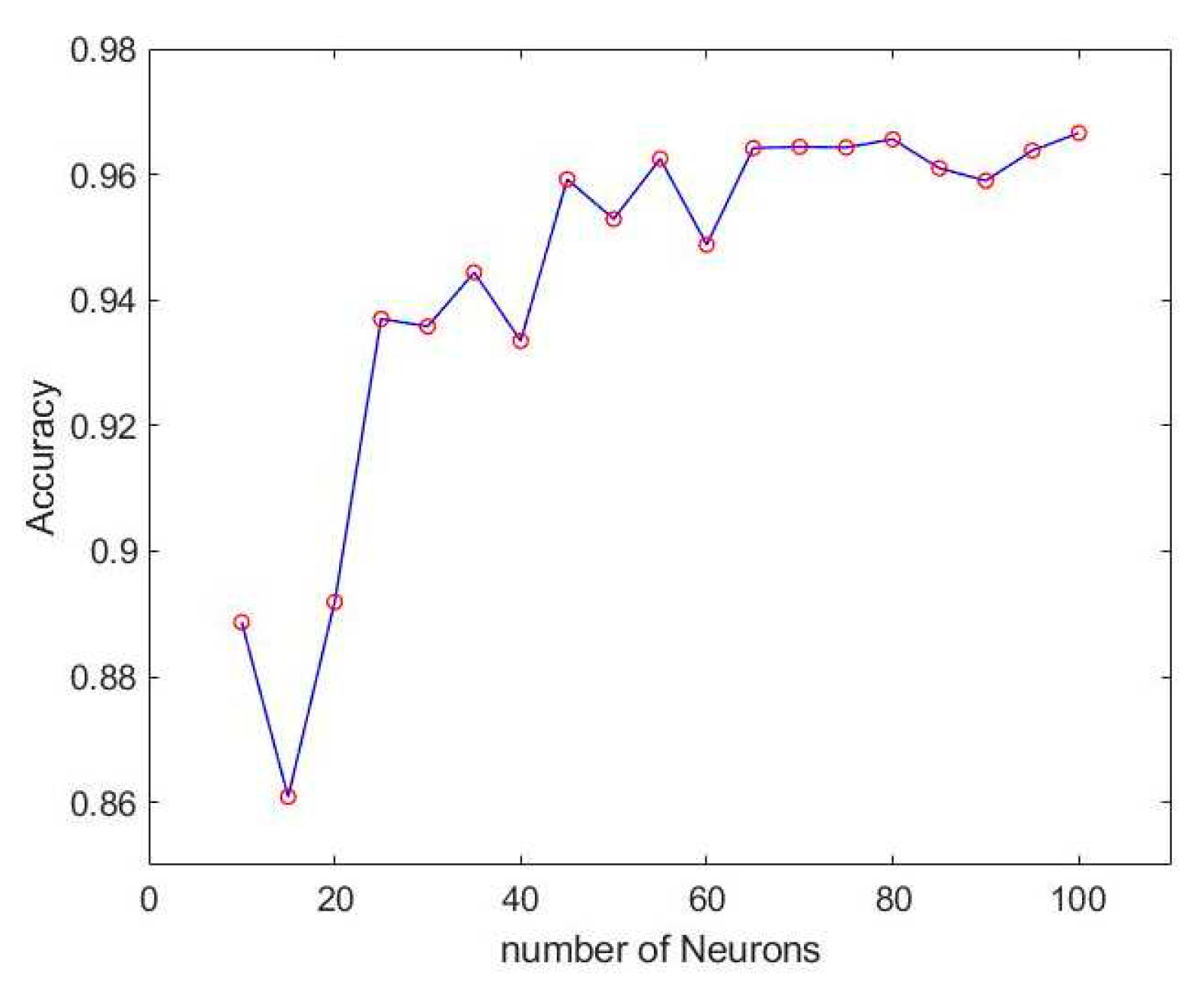

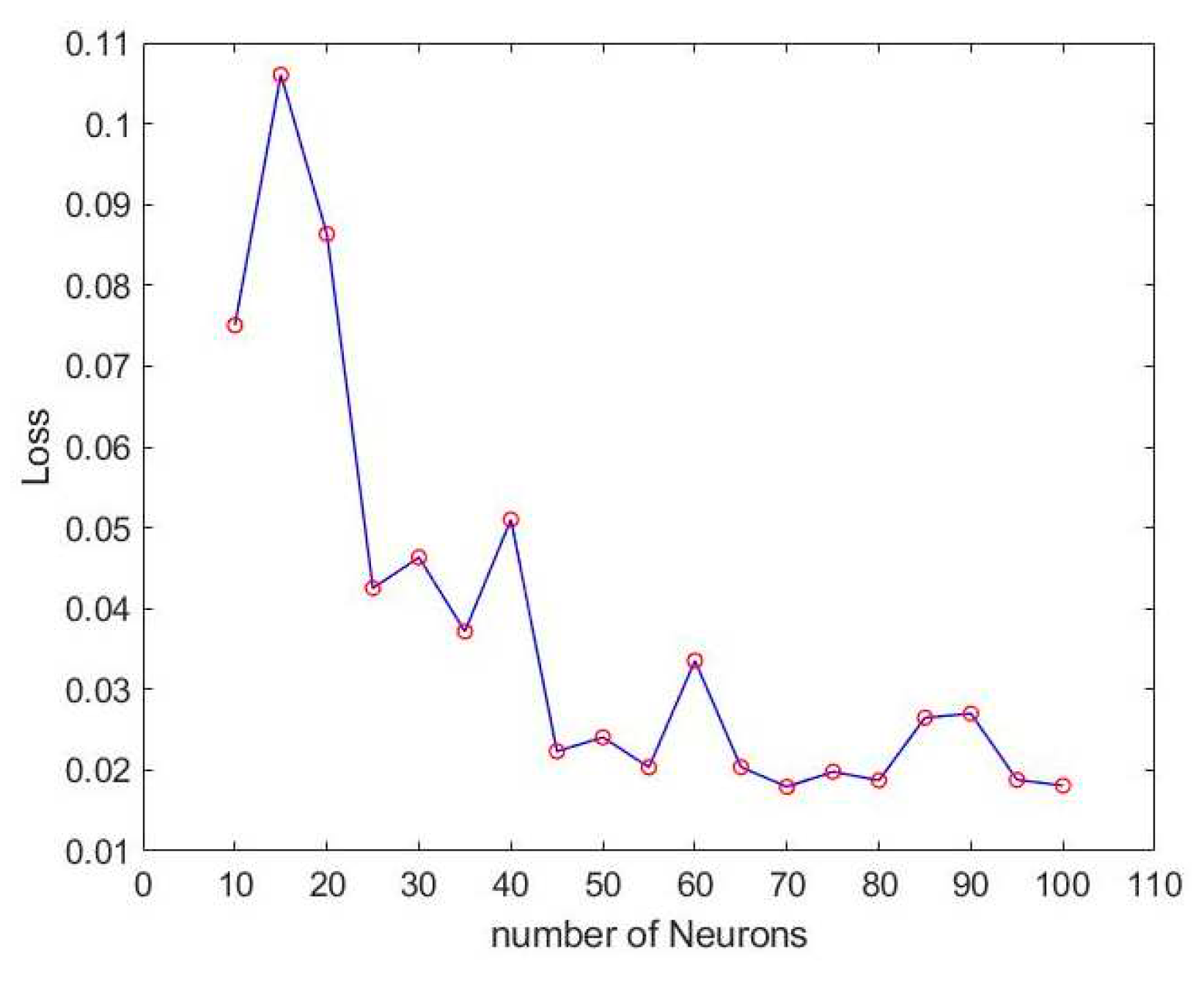

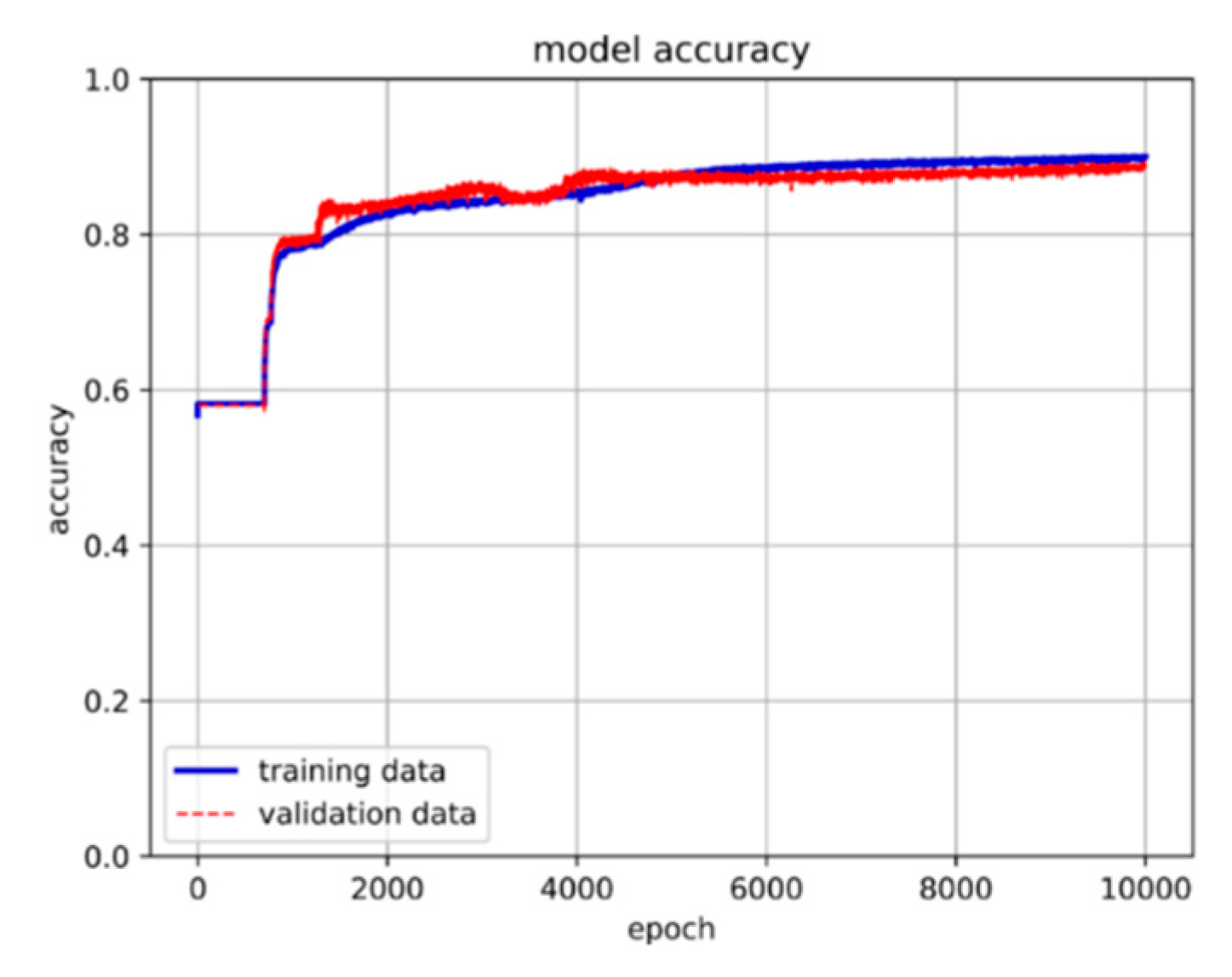

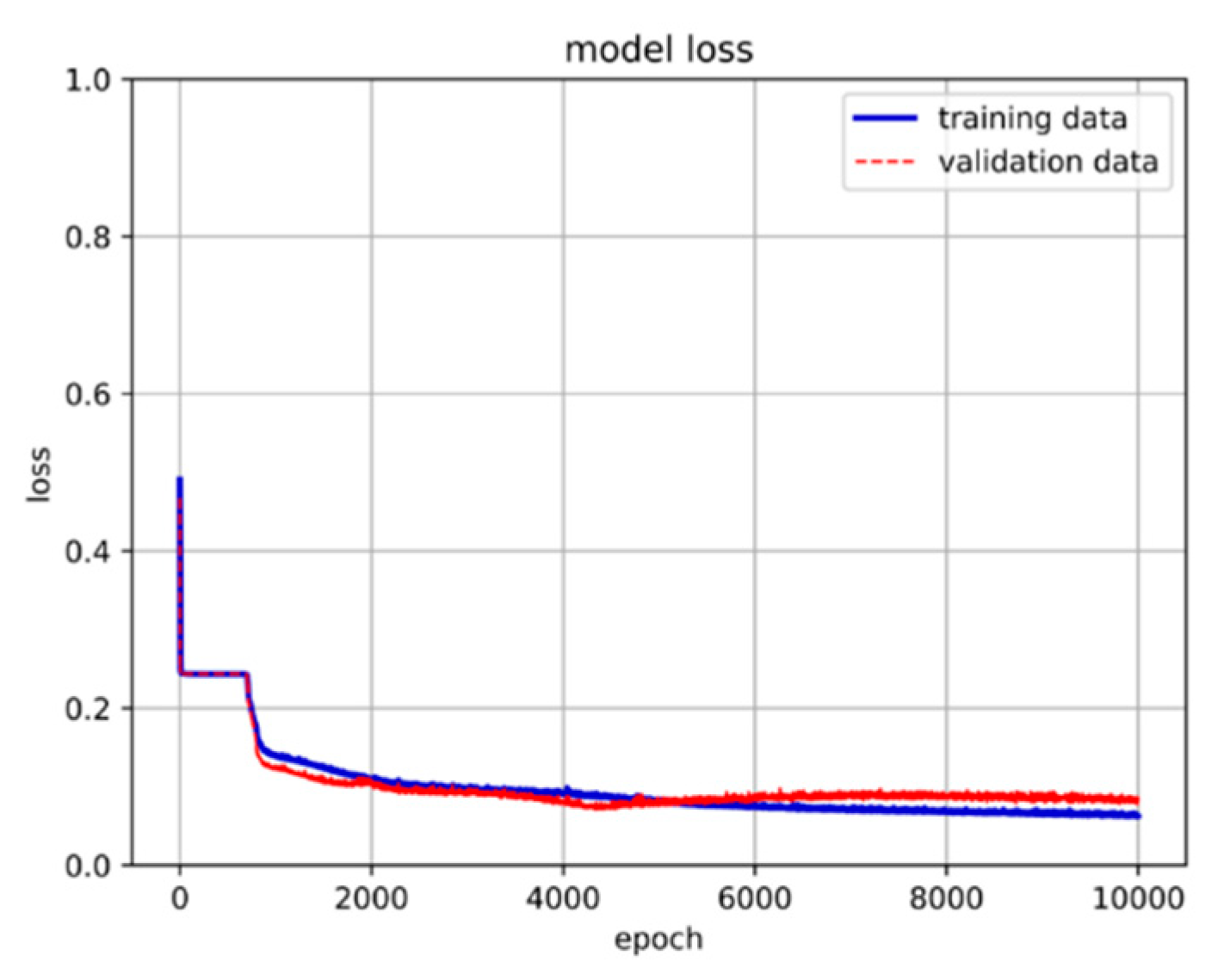

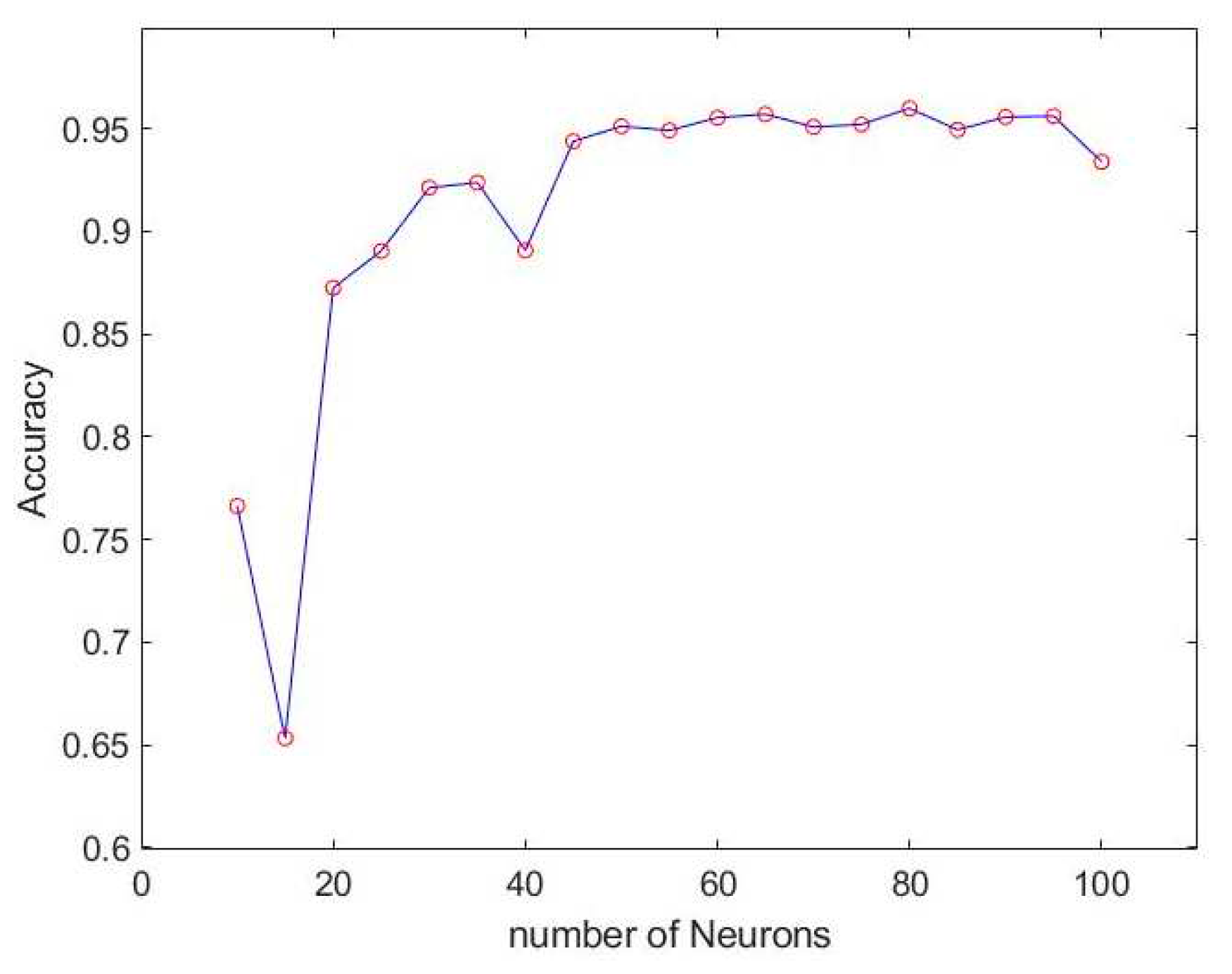

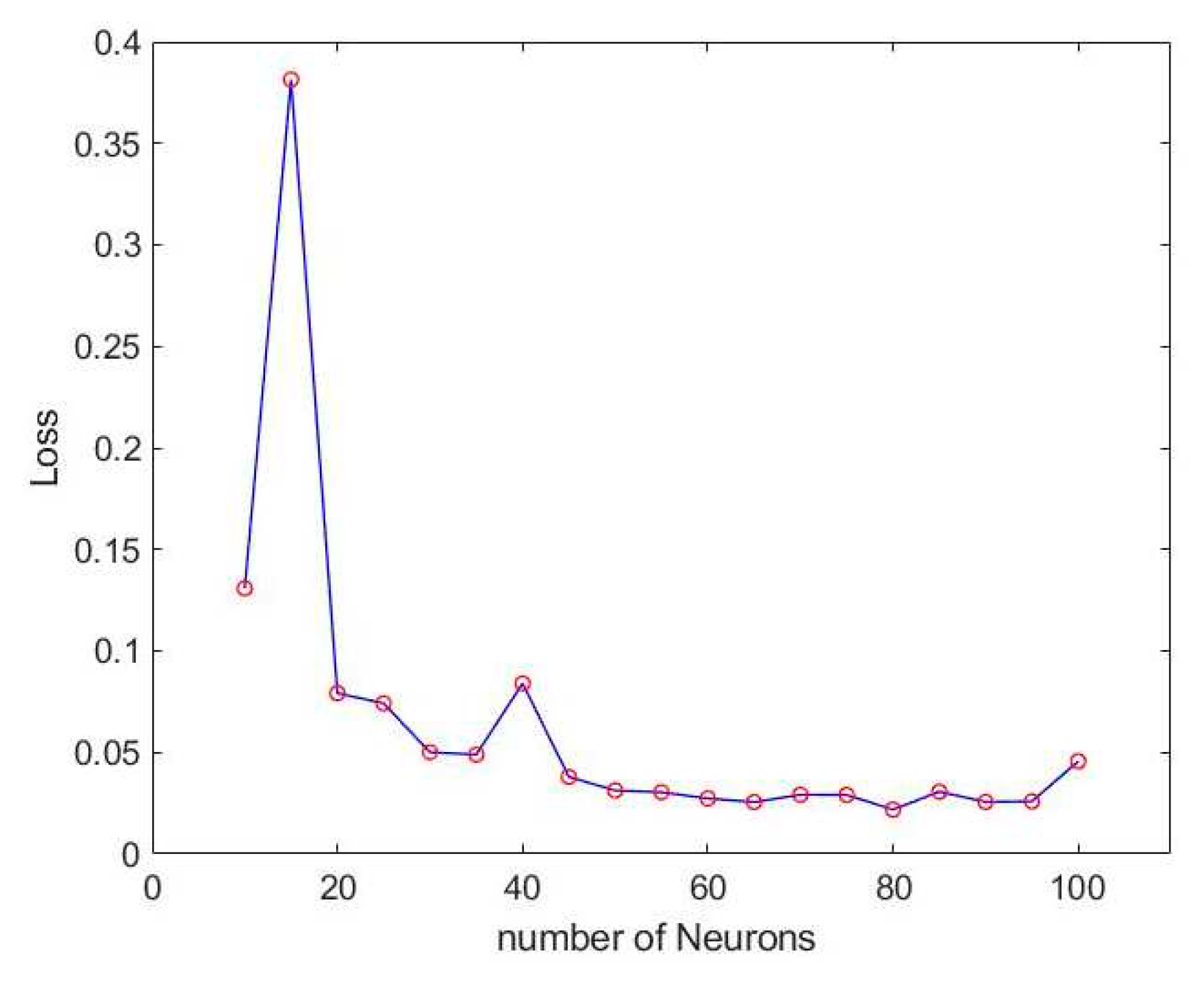

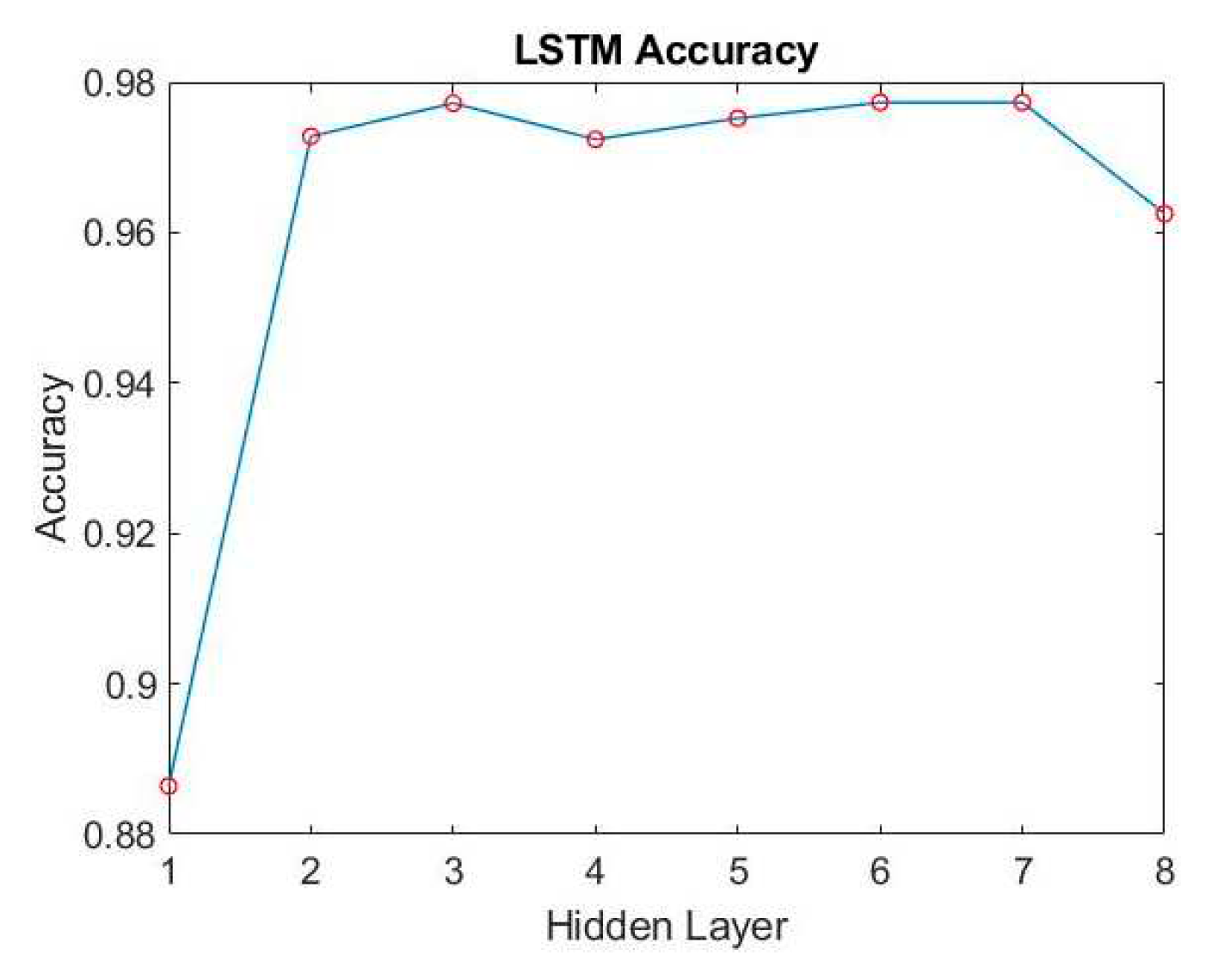

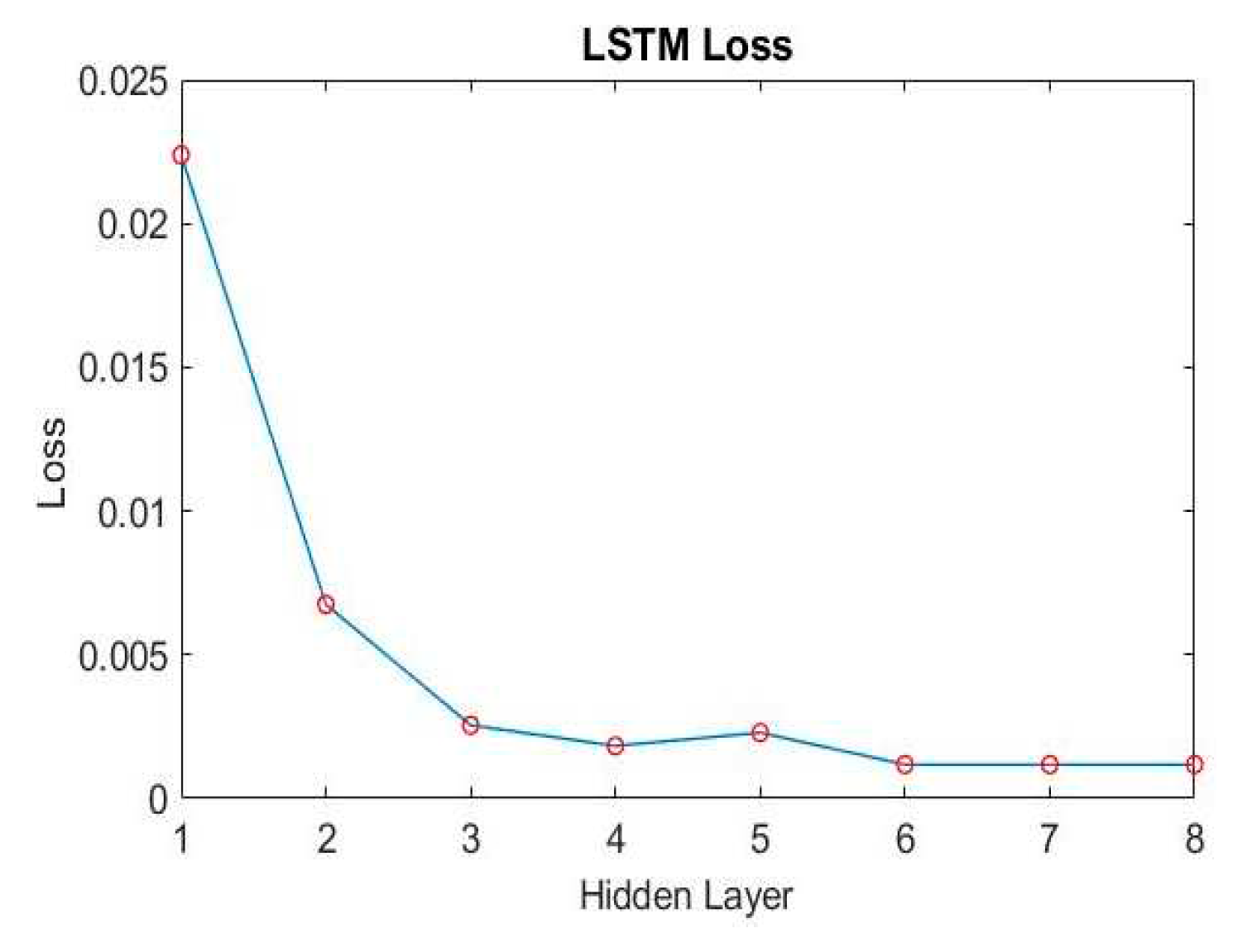

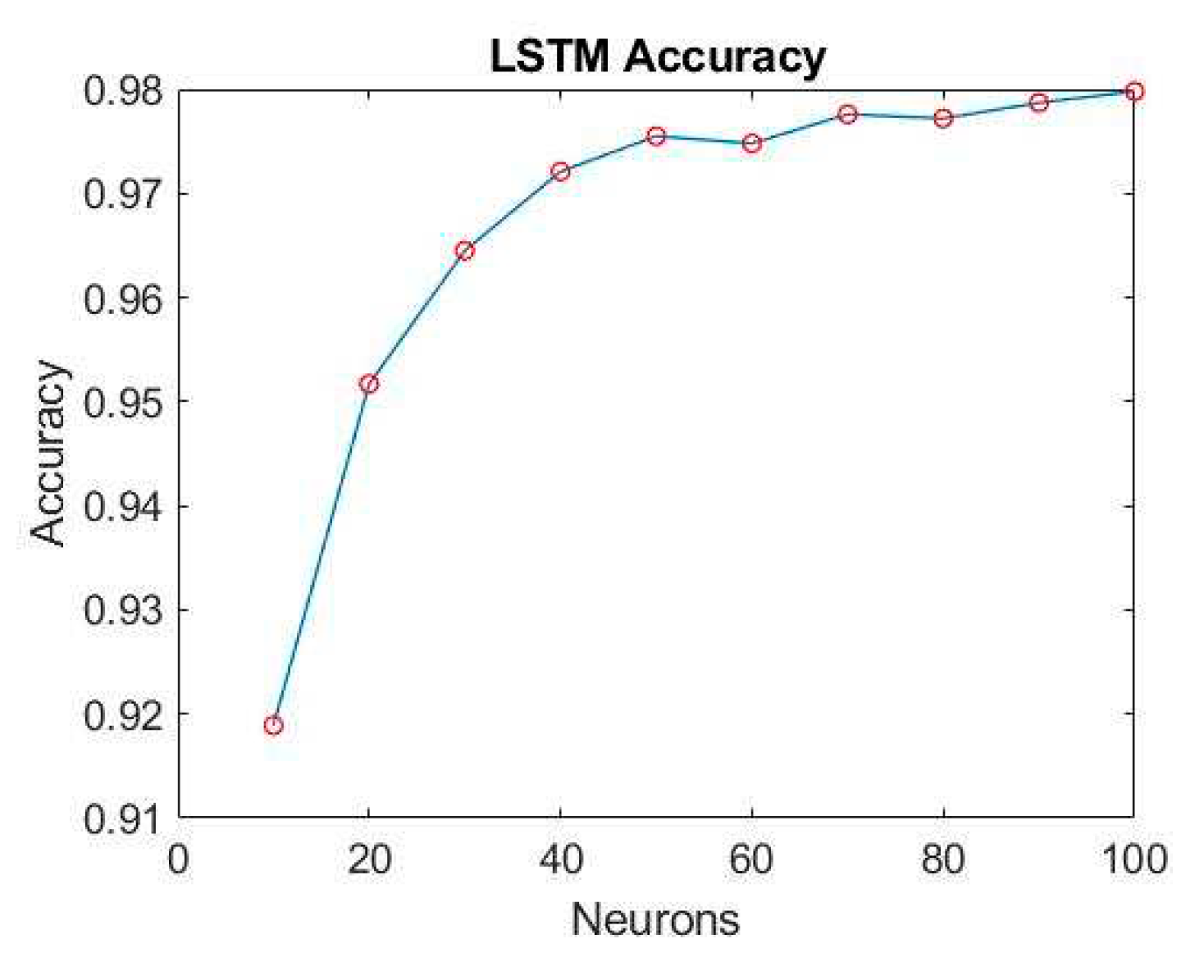

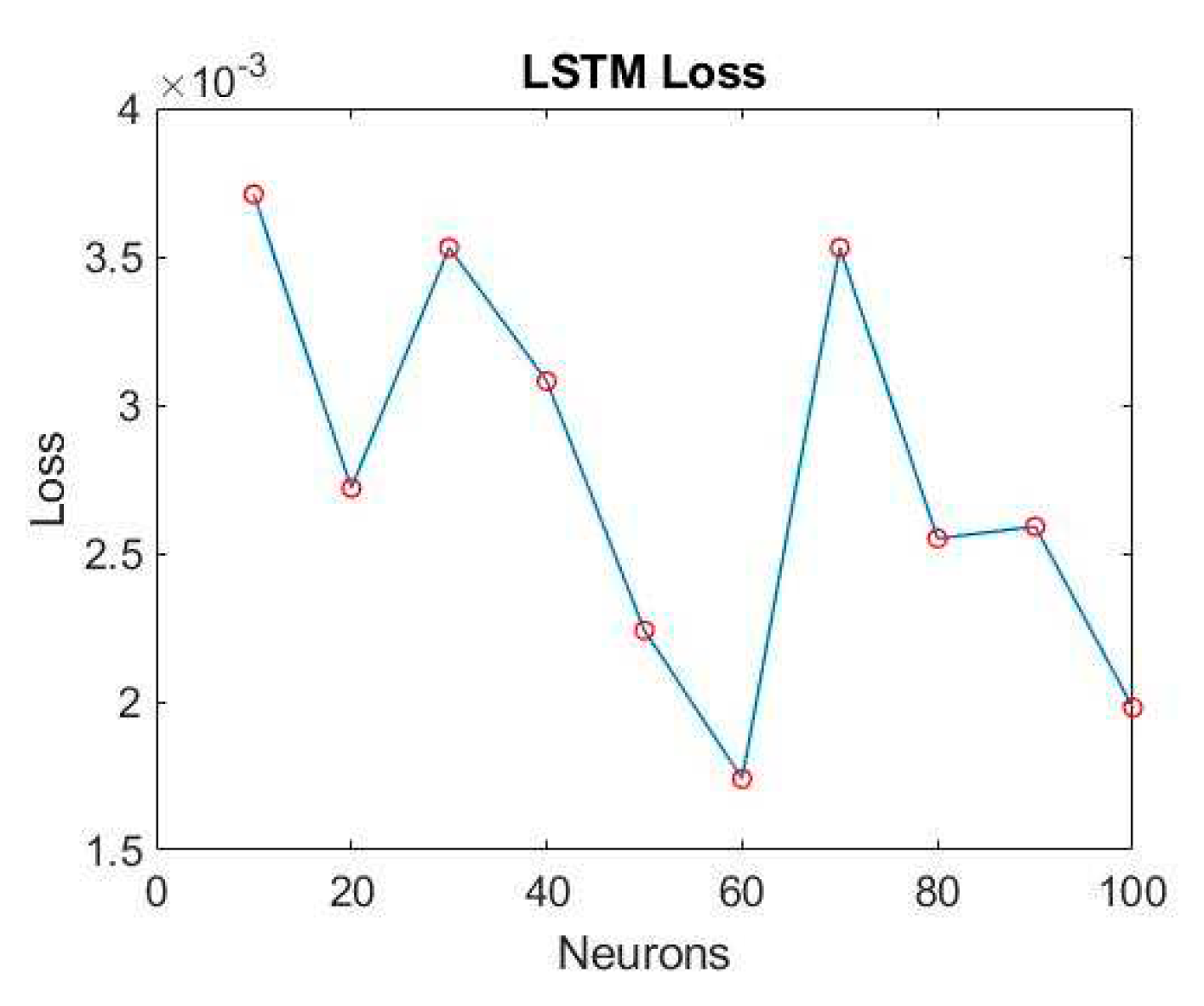

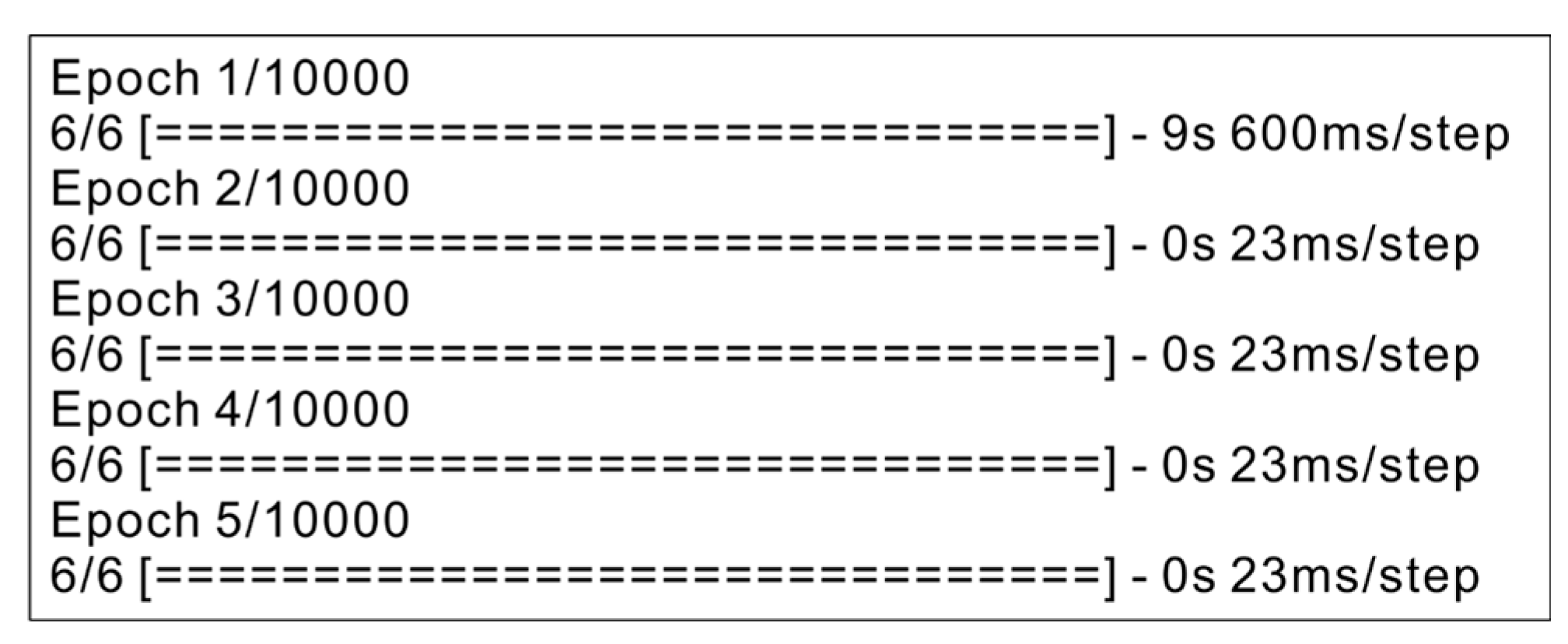

4.1. Duffing equation undamped free vibration type - by LSTM model

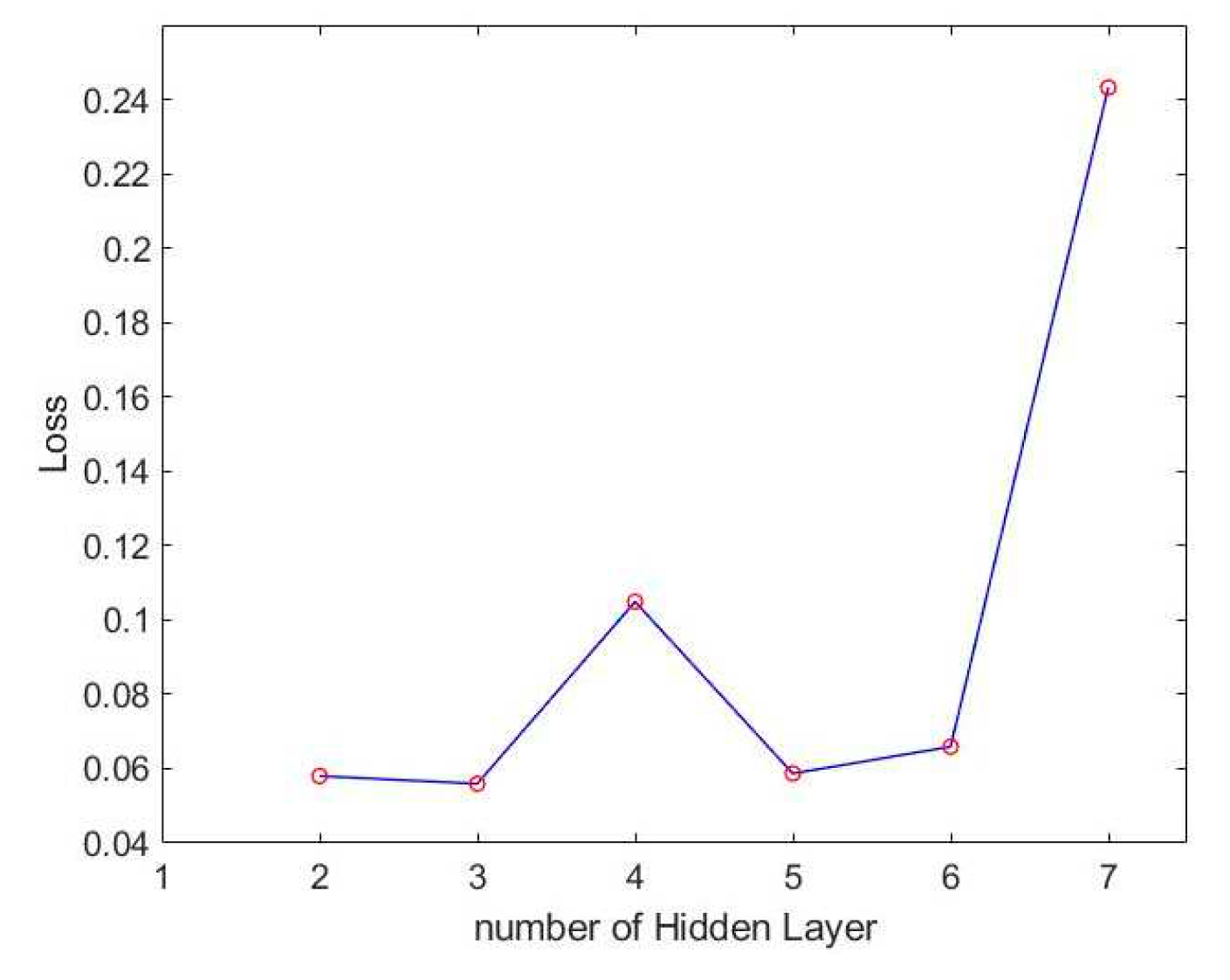

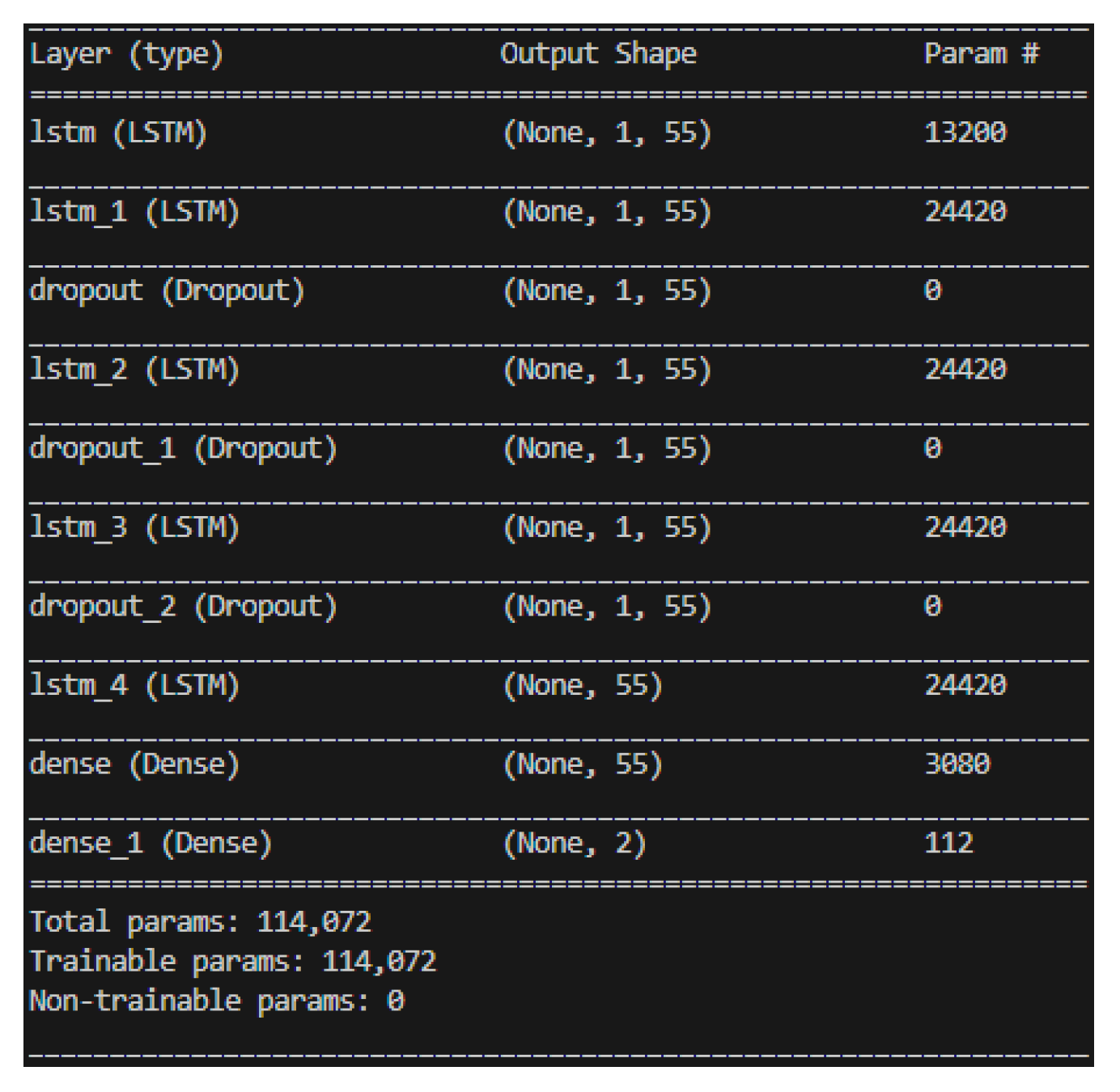

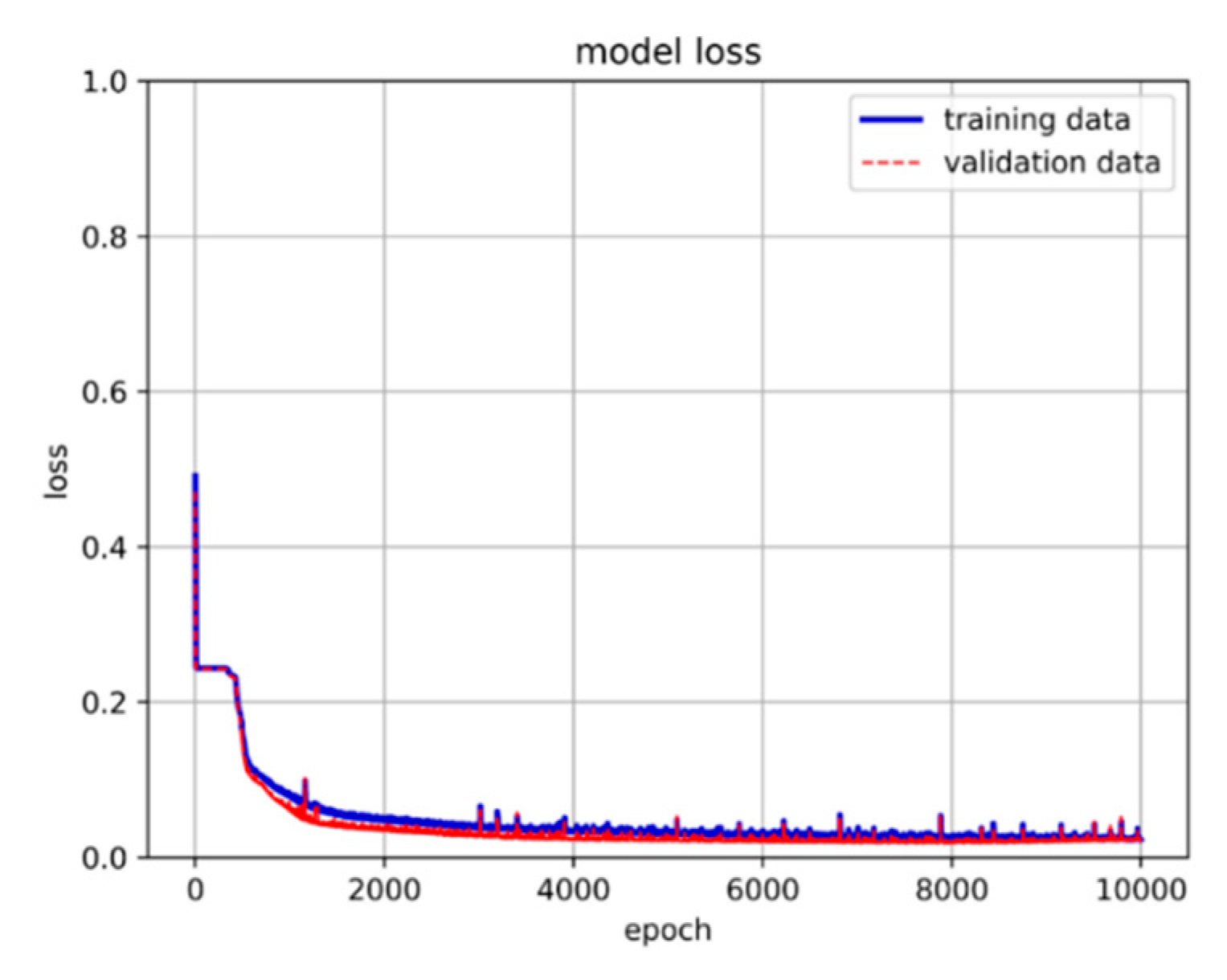

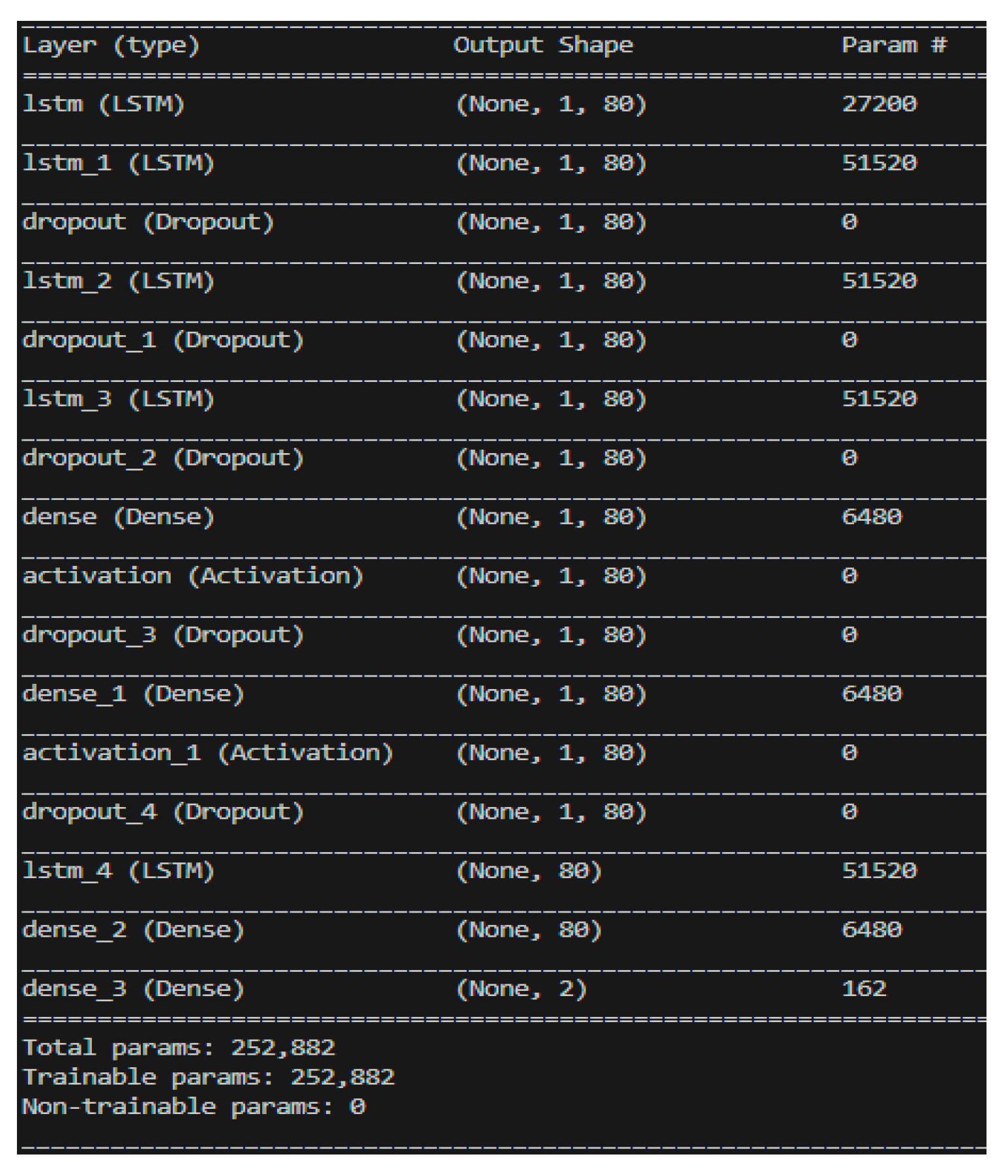

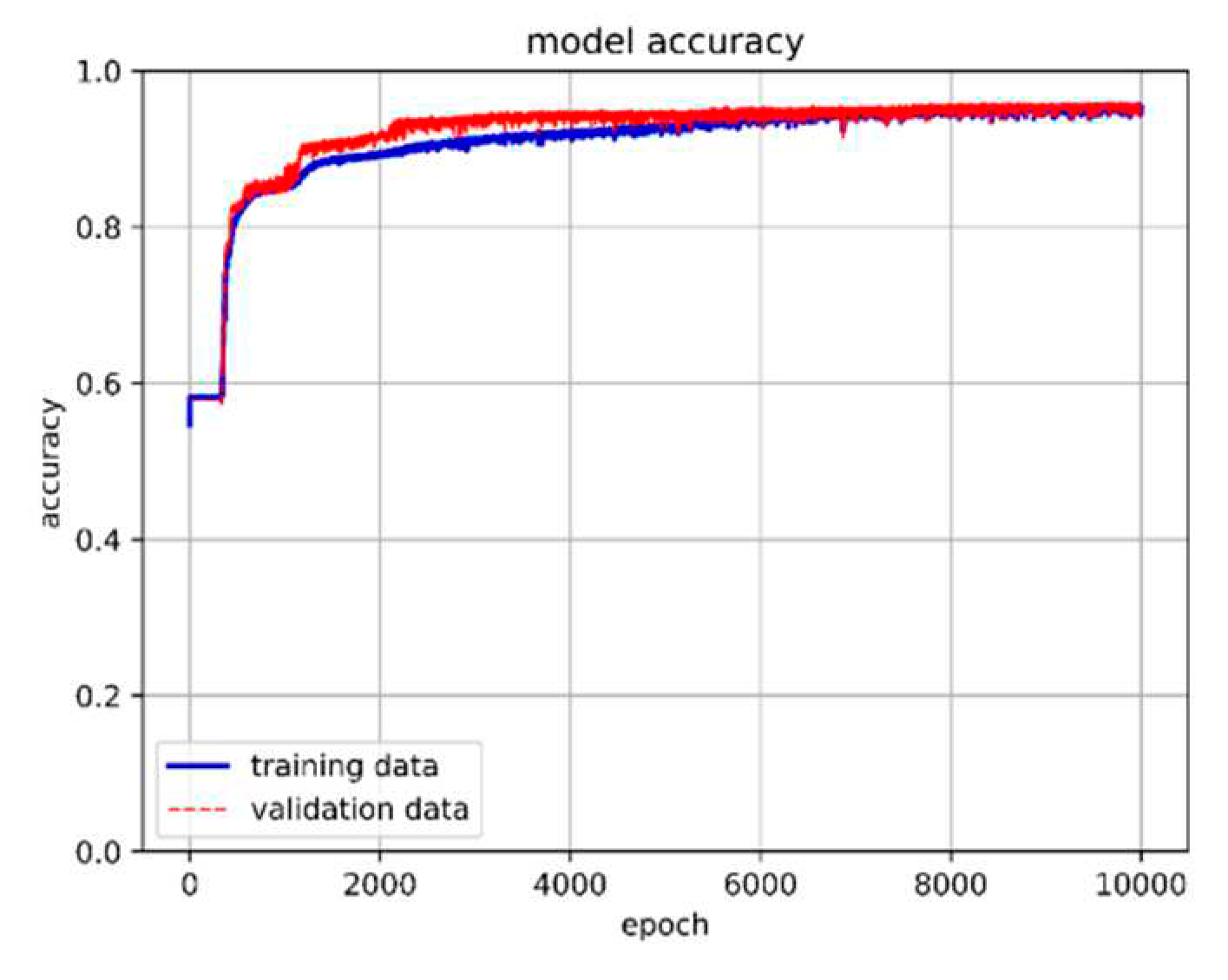

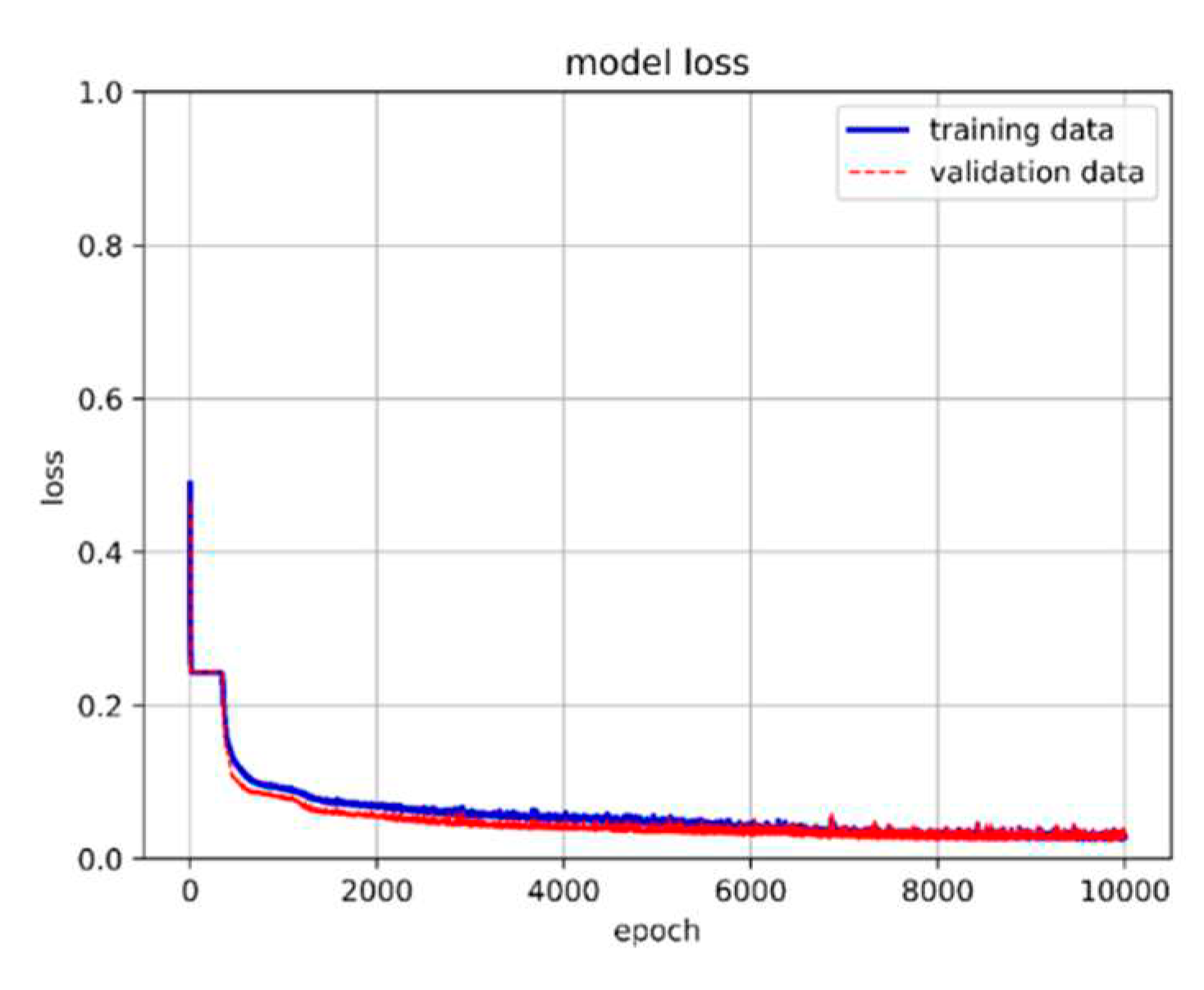

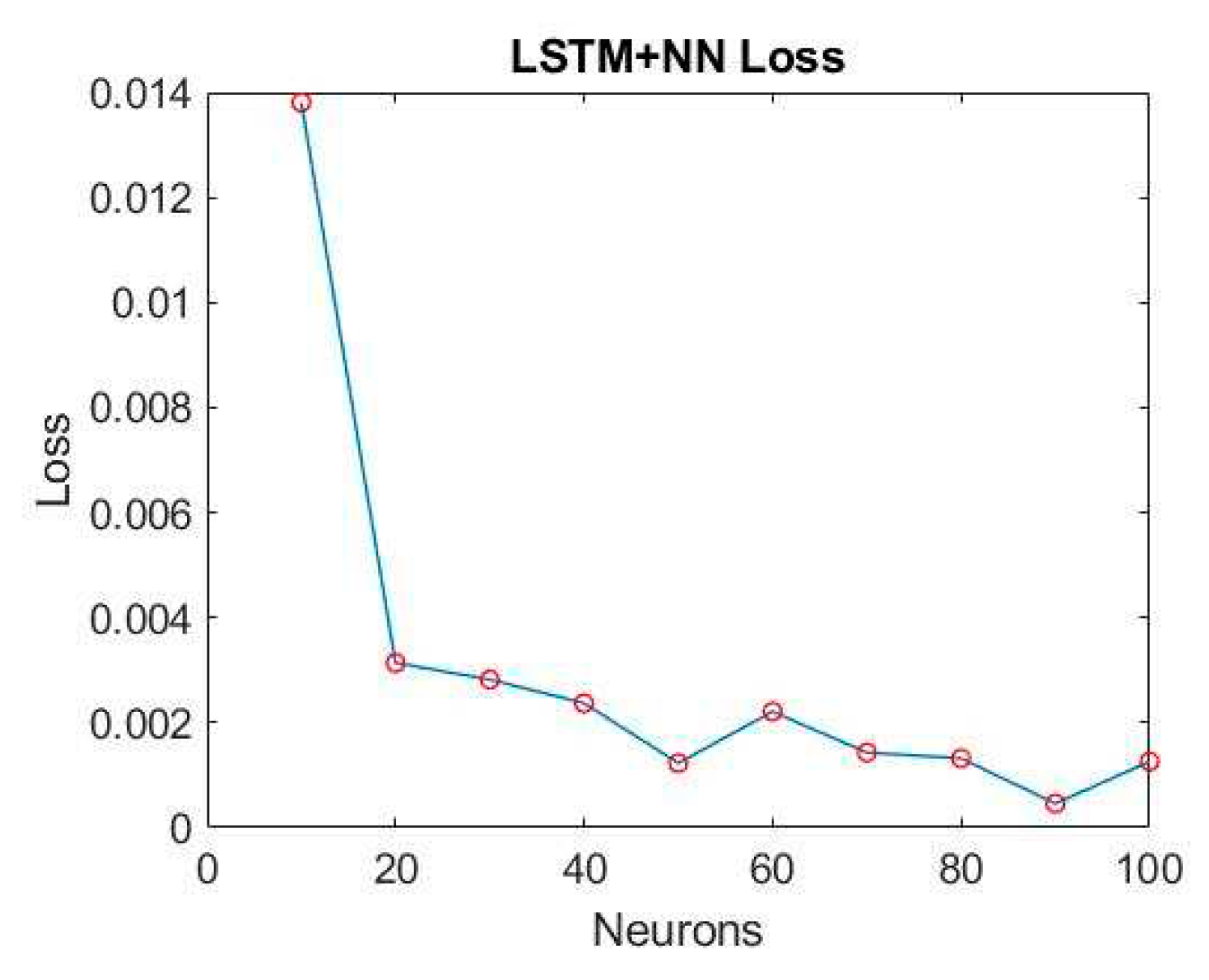

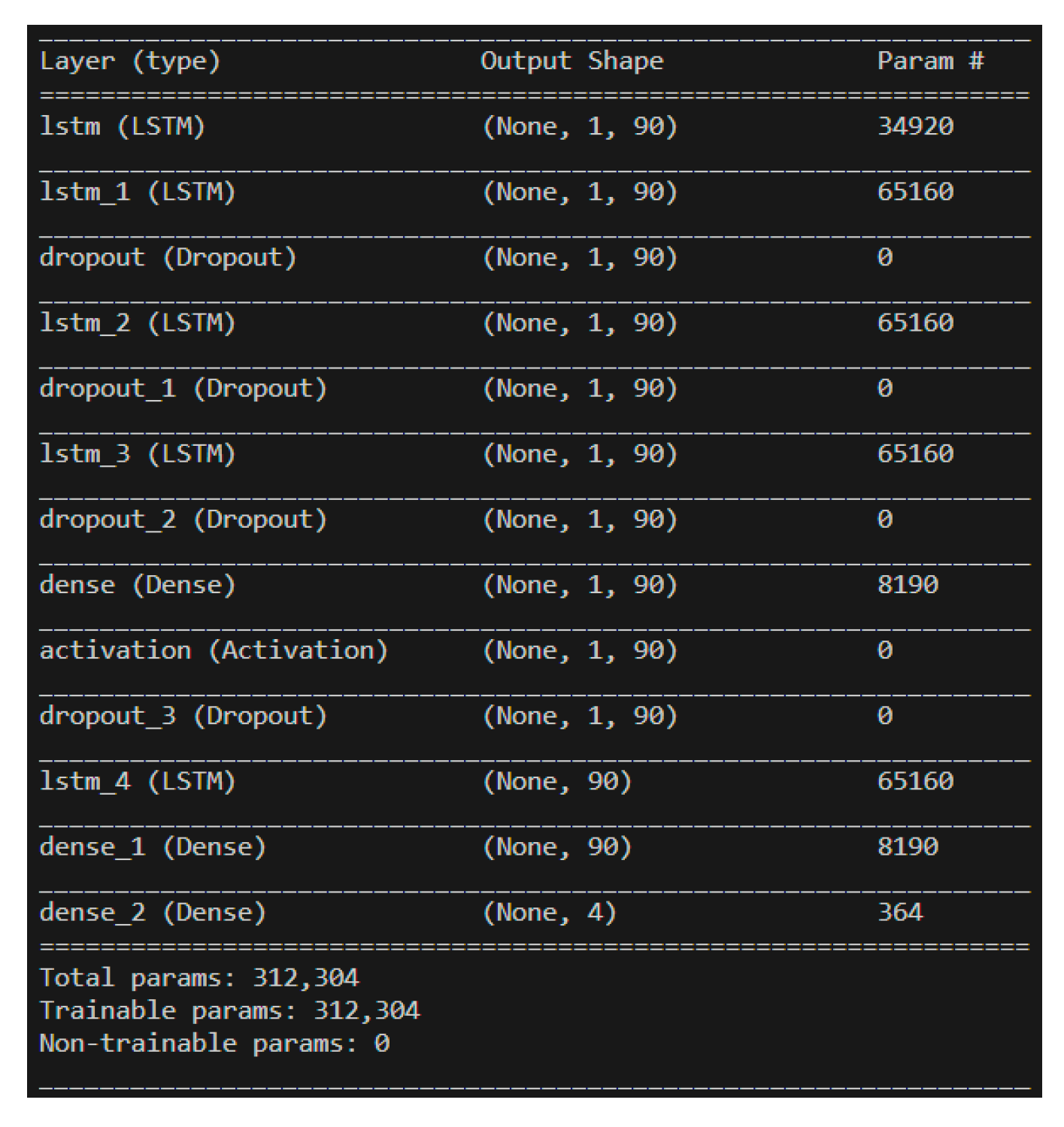

4.2. Duffing equation undamped free vibration type - by LSTM-NN model

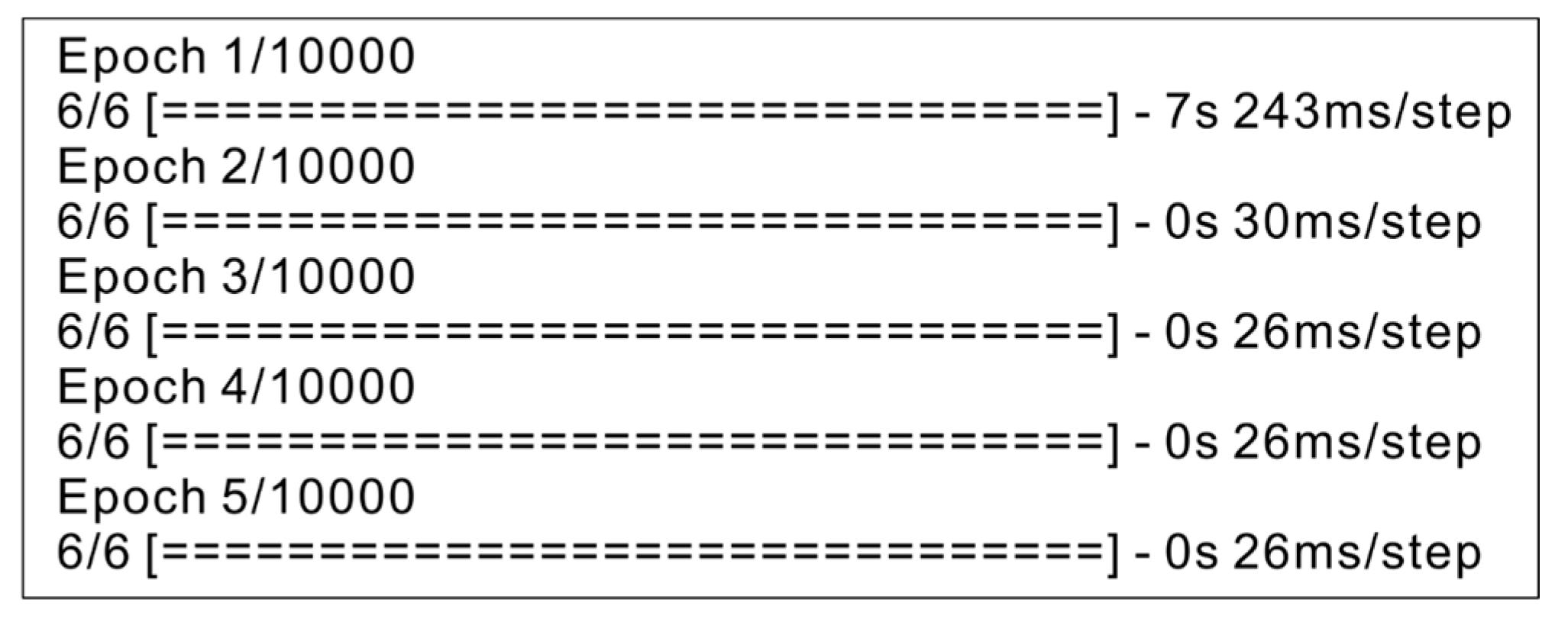

4.3. Duffing equation damped and forced vibration type - by LSTM model

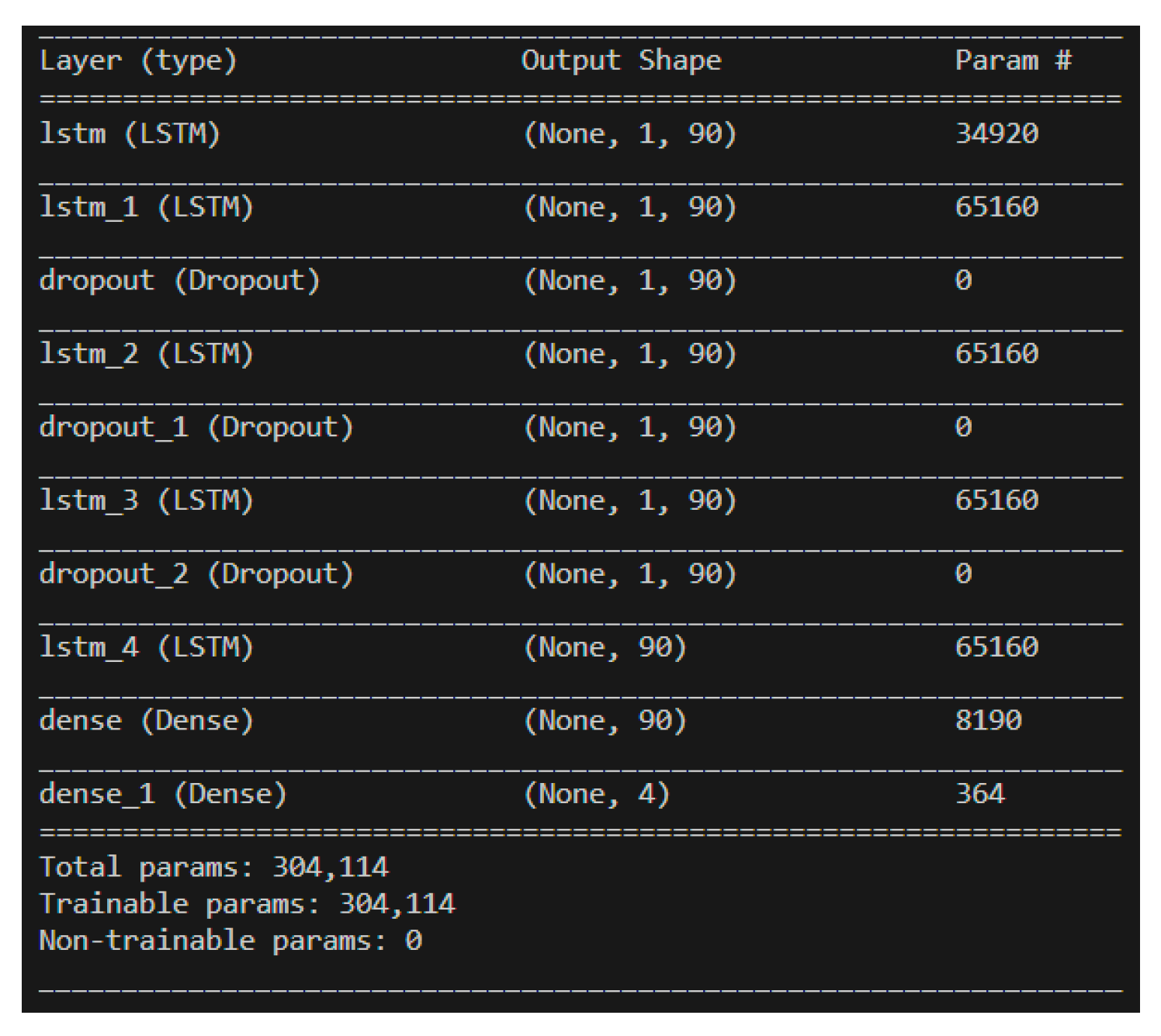

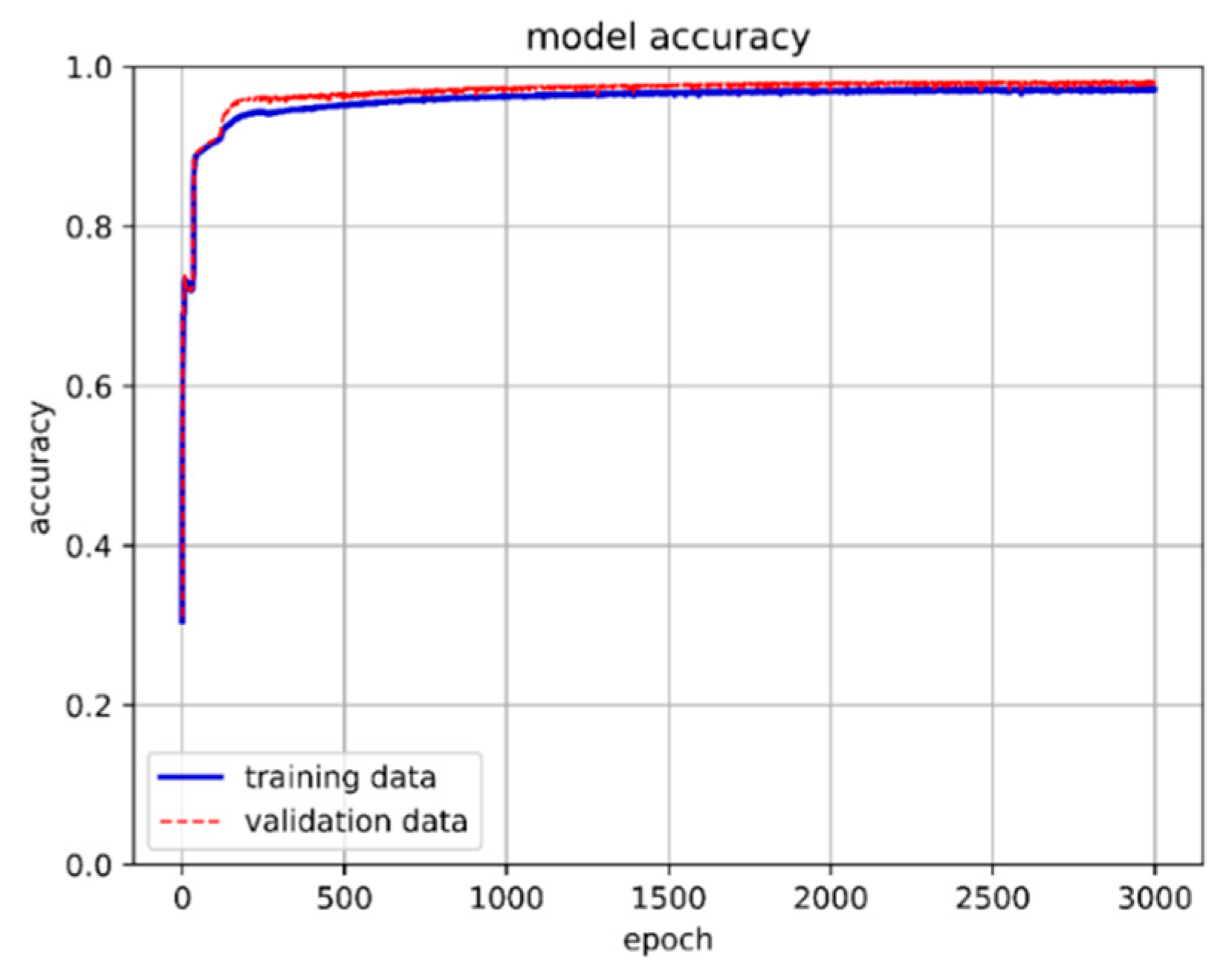

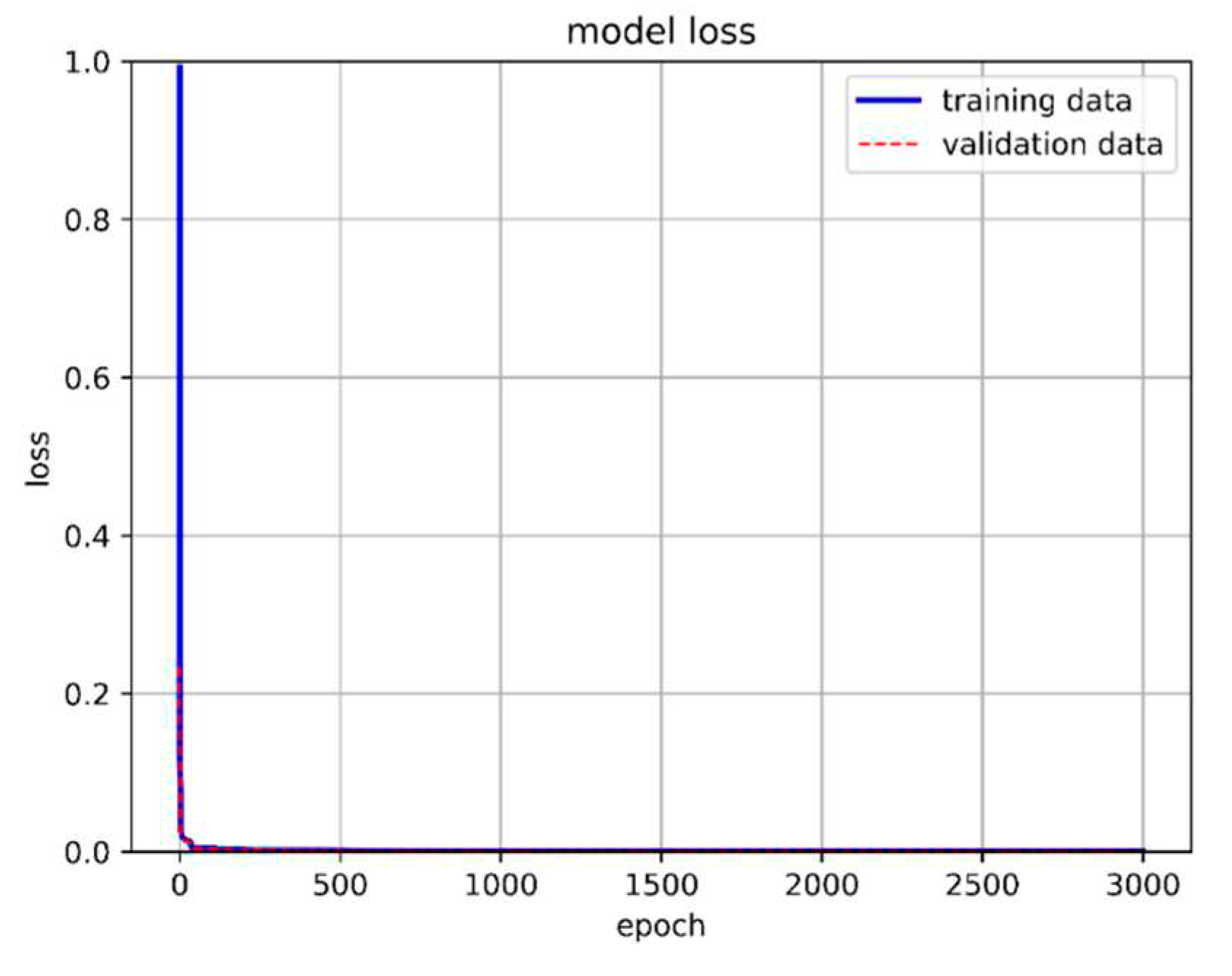

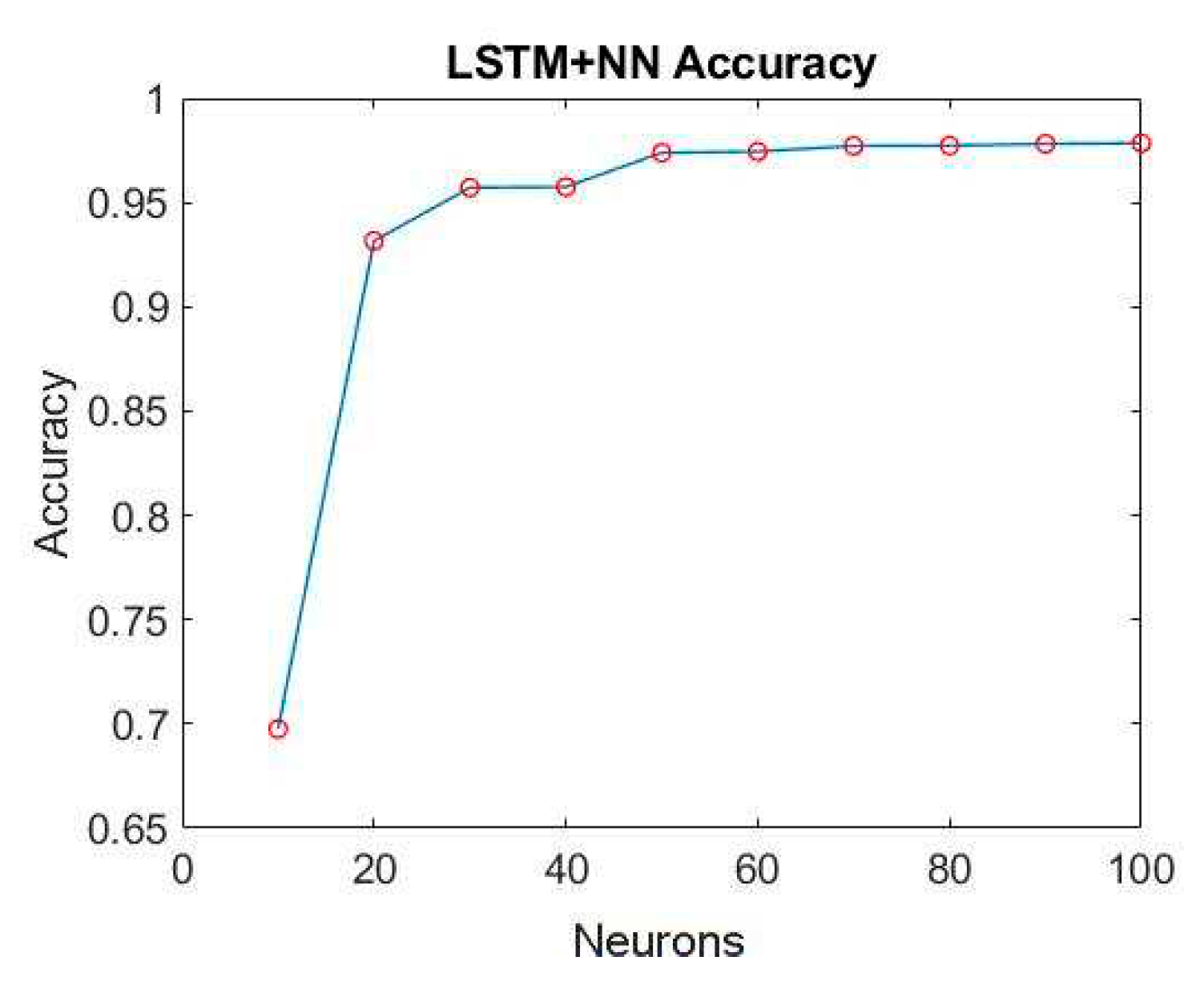

4.4. Duffing equation damped and forced vibration type - by LSTM-NN model

5. Deep learning prediction results and model comparison

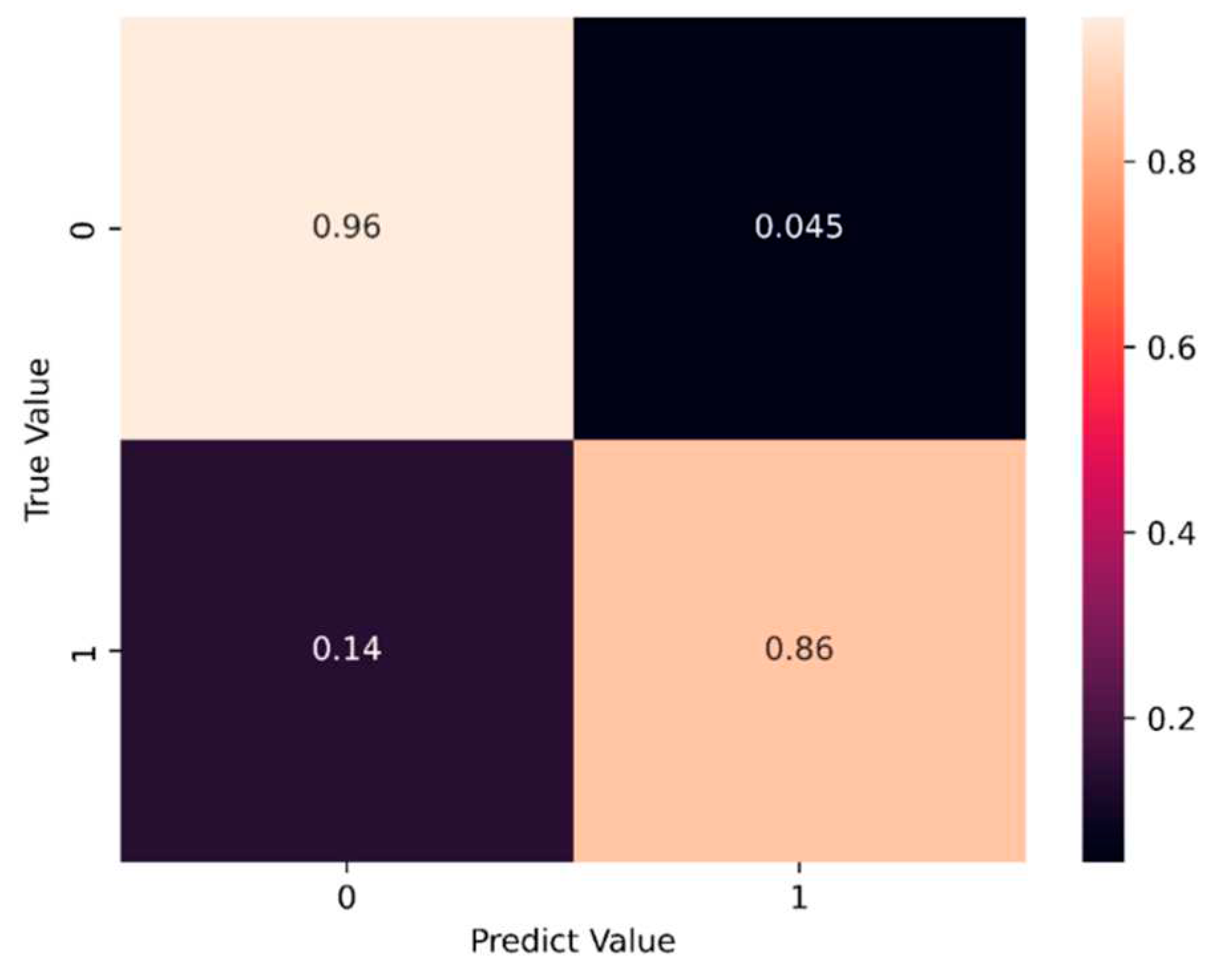

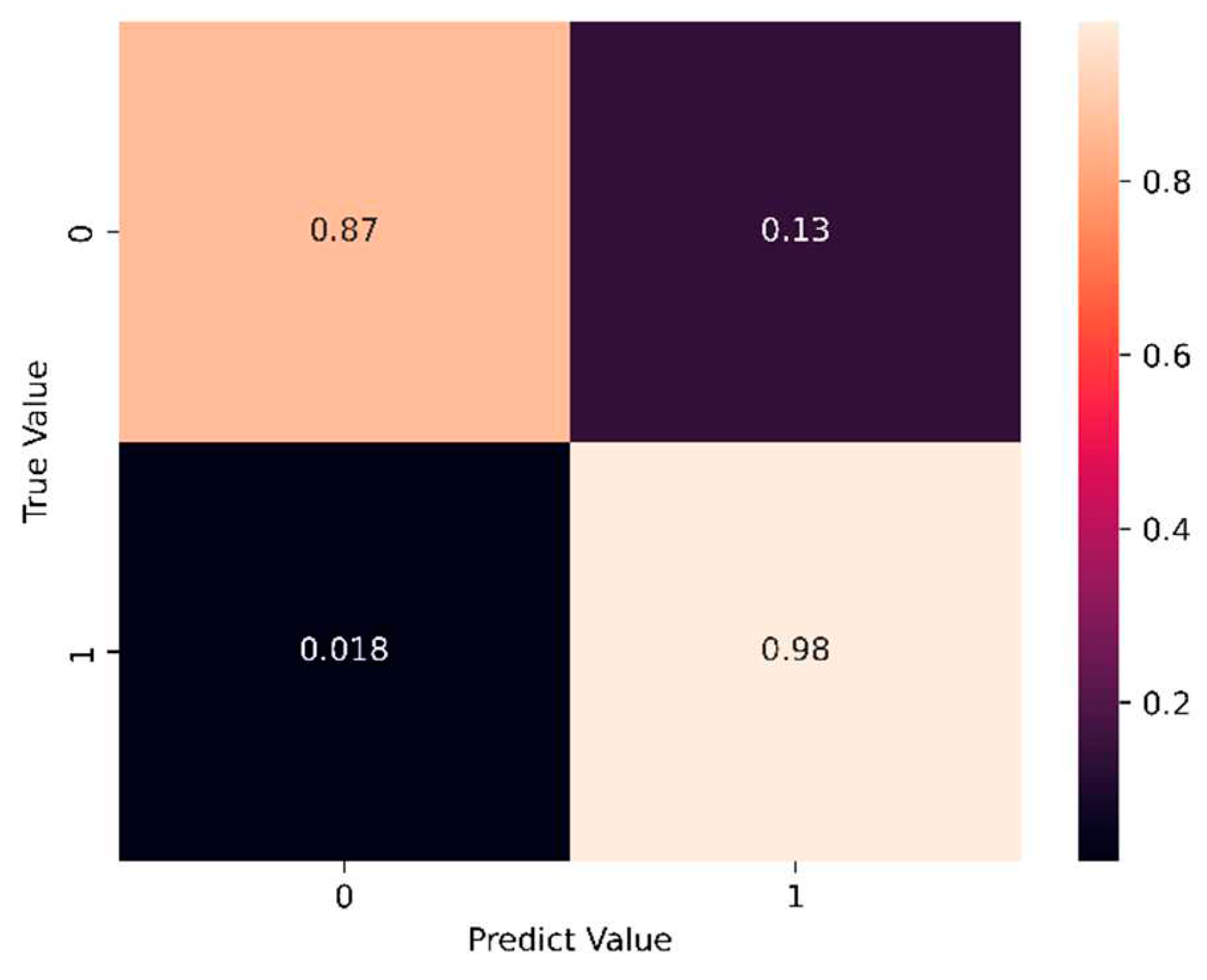

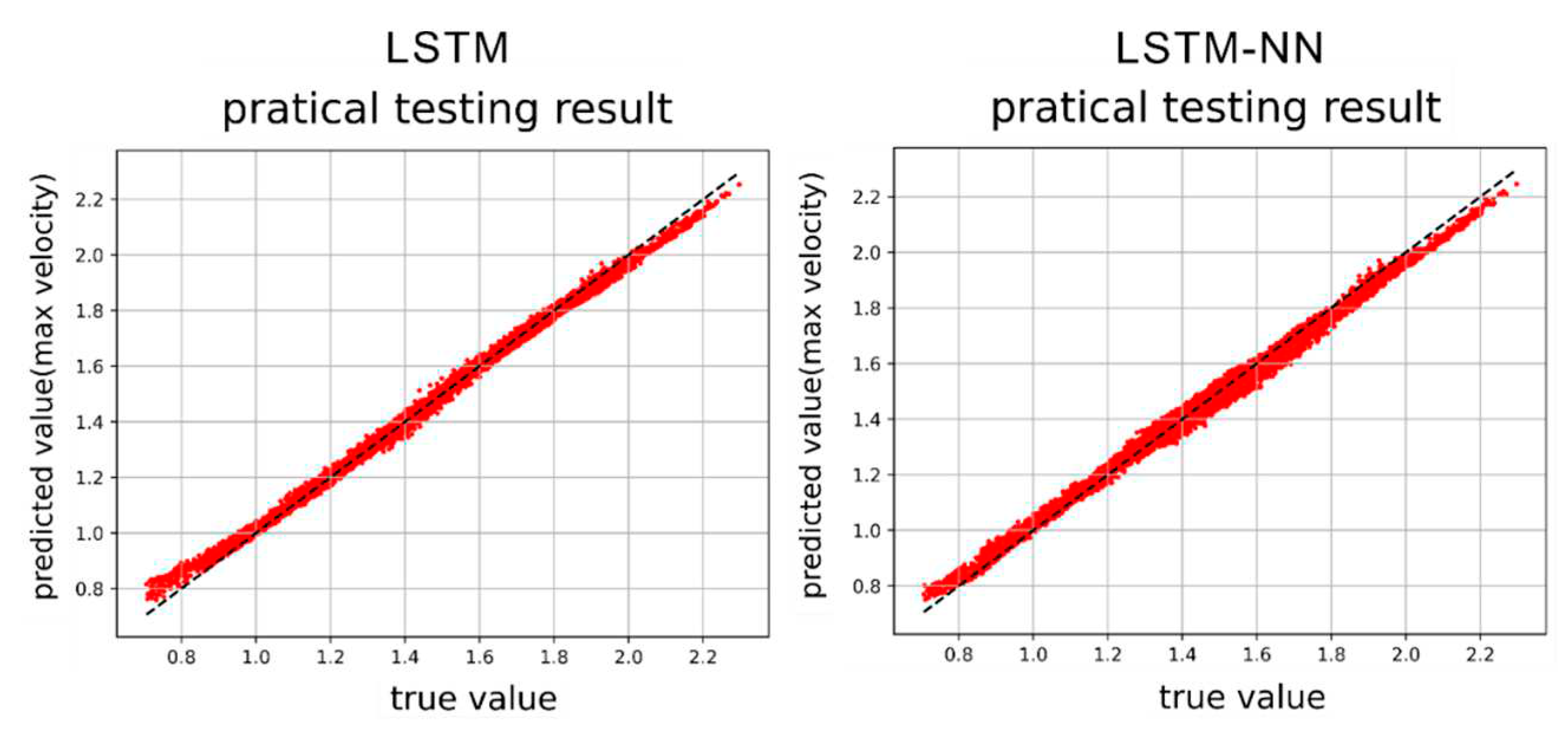

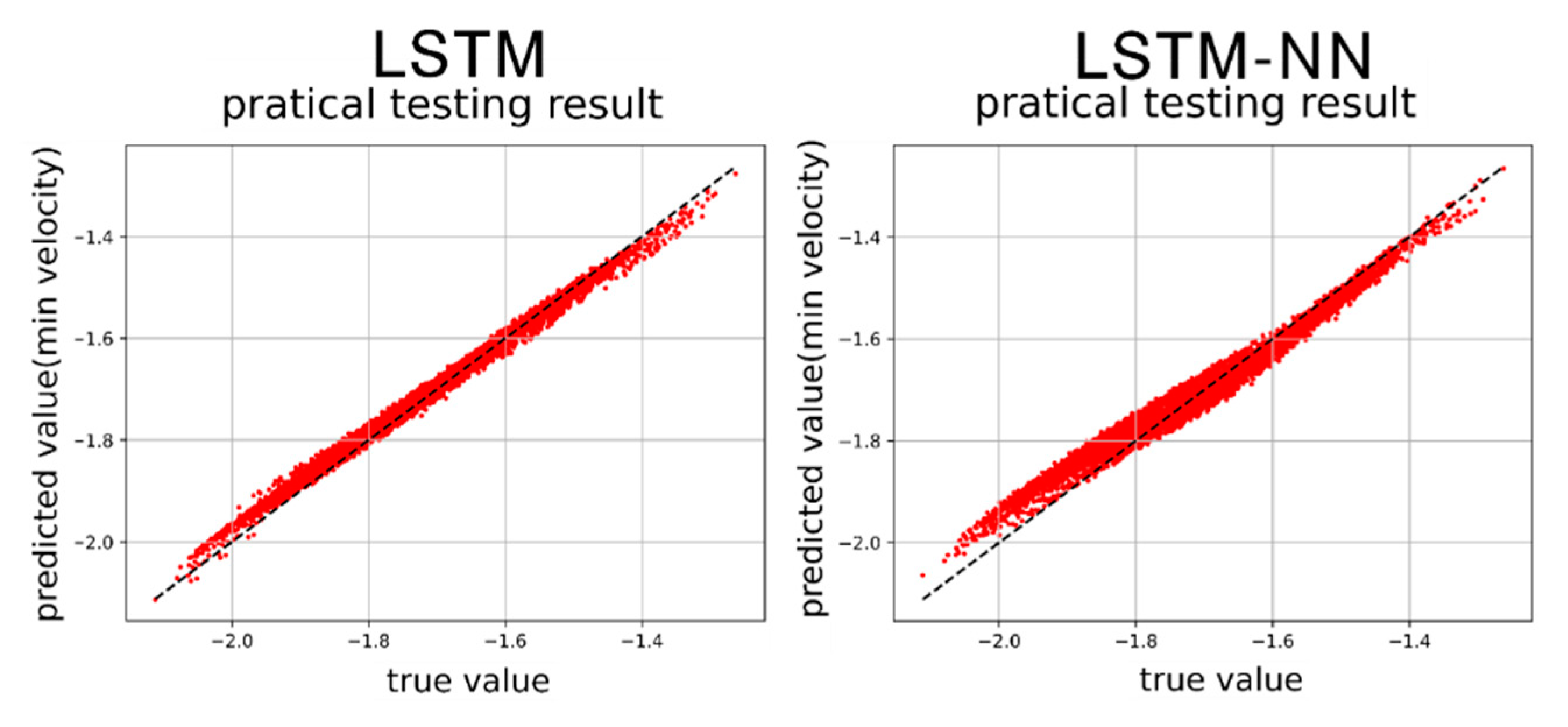

5.1. Duffing equation undamped free vibration type

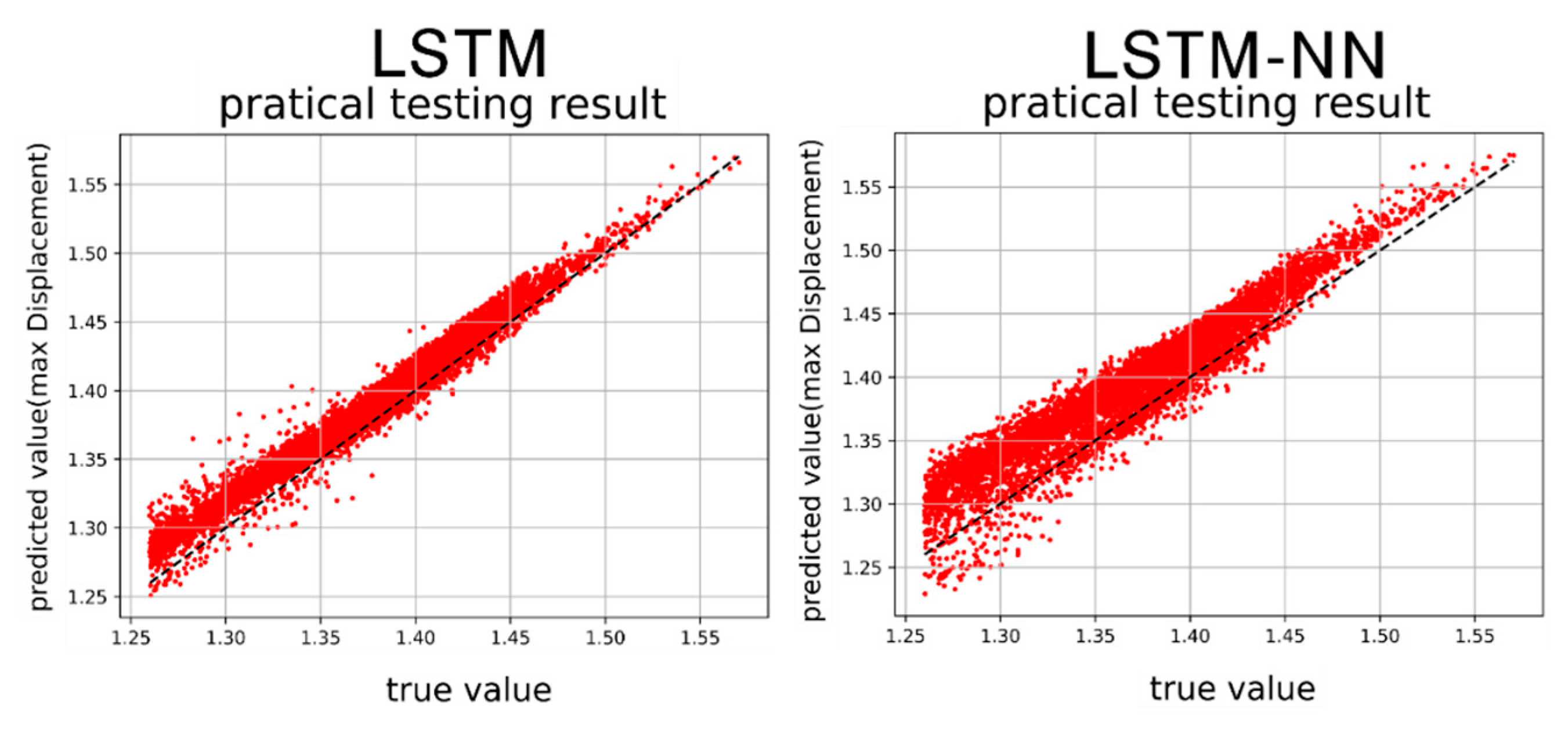

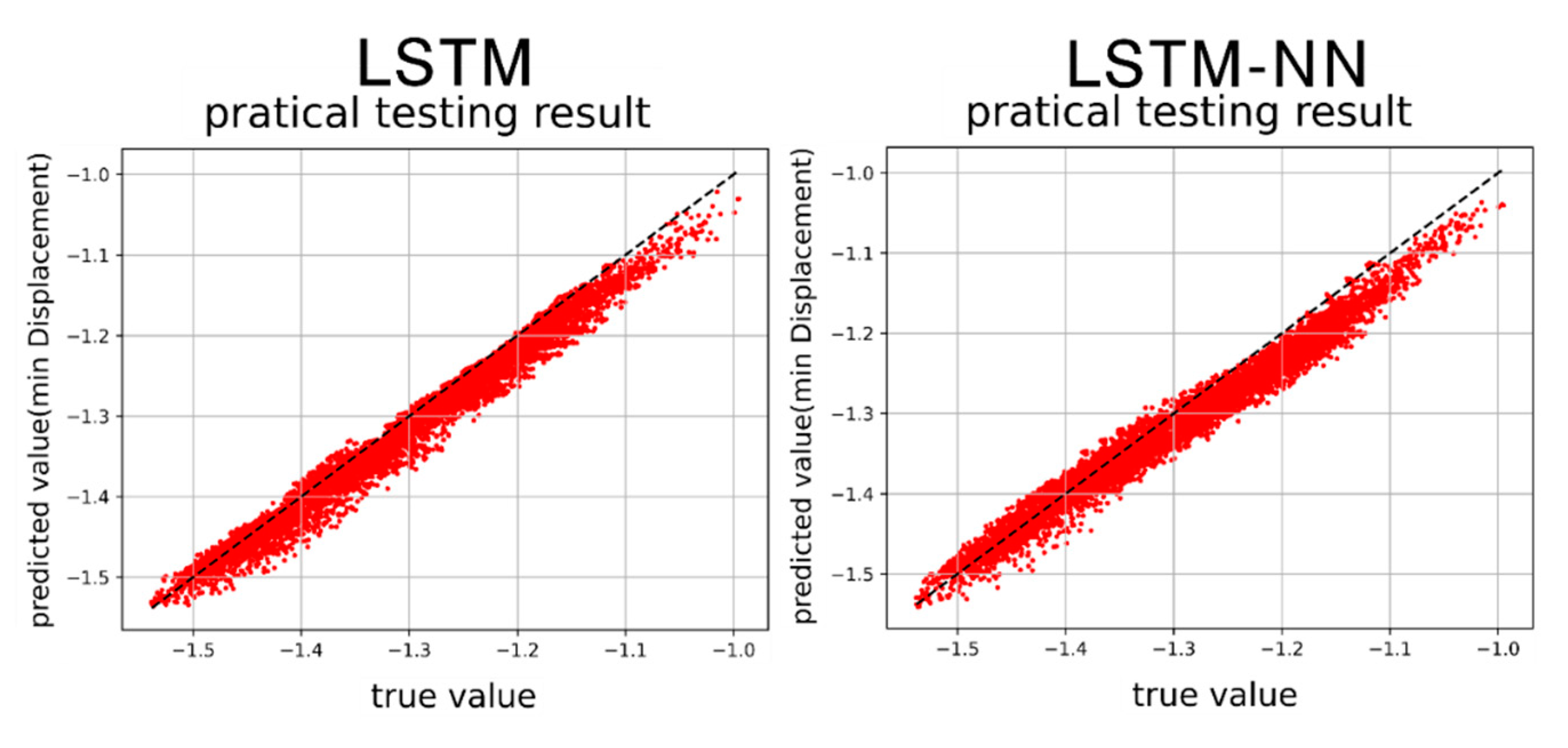

5.2. Duffing equation damped and forced vibration type

6. Conclusions

- When training the LSTM model, careful consideration must be given to the number of hidden layers, neurons, and epochs. While increasing these parameters may improve results on the training set, it also risks overfitting, which shows up as degraded performance on the validation set. Selecting an appropriate number of layers, neurons, and epochs is therefore essential for efficient training and good performance on both the training and validation sets.

- To mitigate overfitting, incorporating a dropout layer into the basic model structure is recommended. Dropout prevents excessive dependence on specific neurons, which improves training stability and reduces over-reliance on the training data.

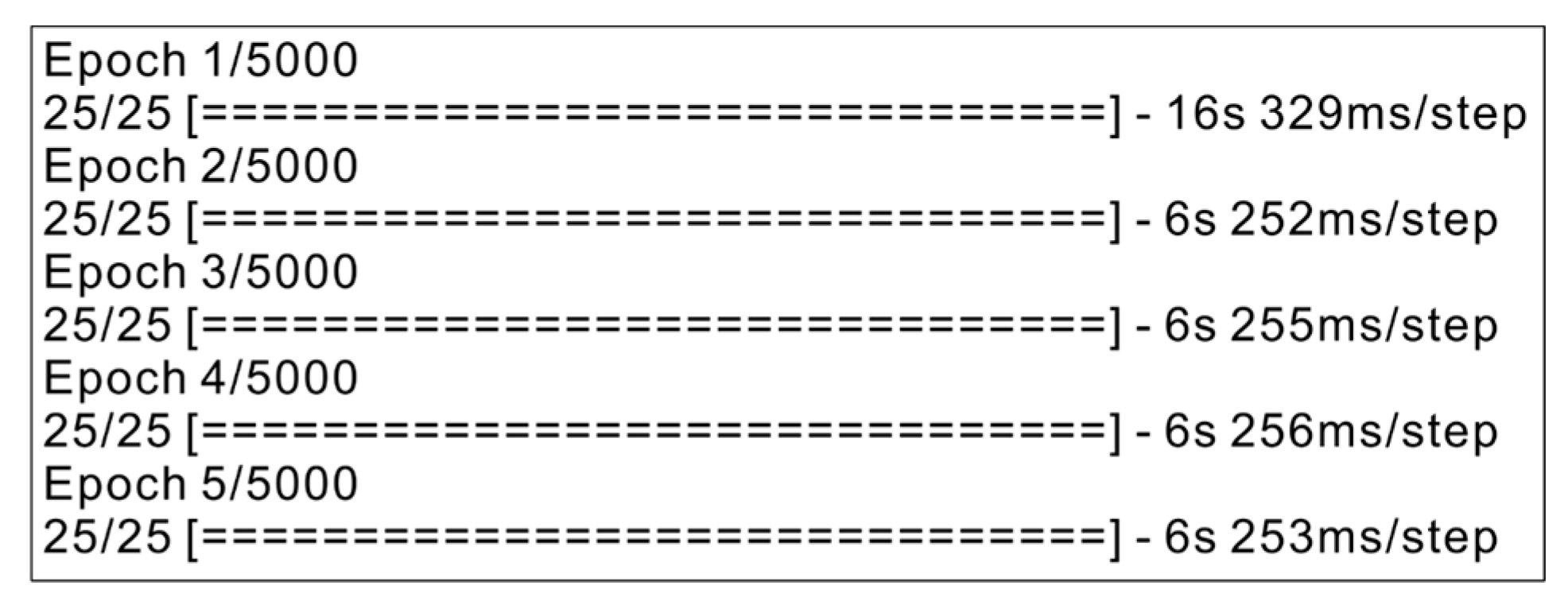

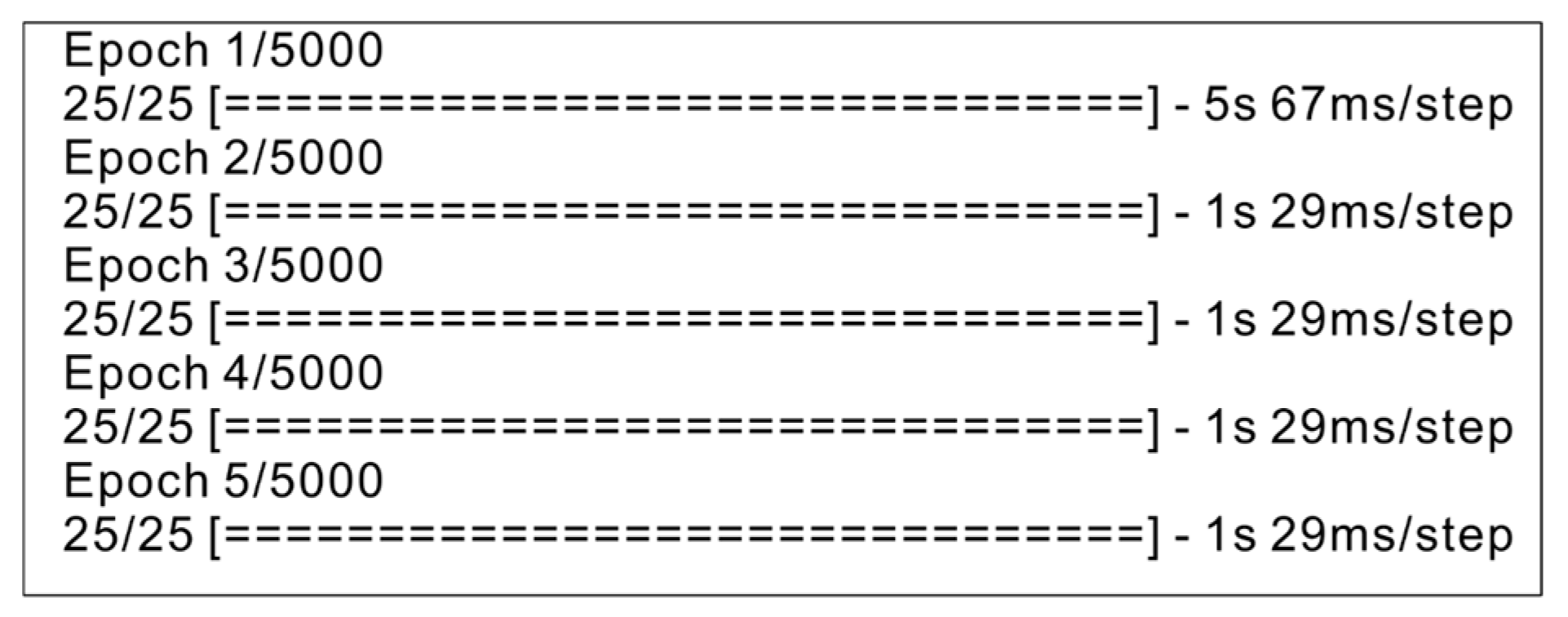
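The dropout mechanism mentioned in the conclusions can be illustrated with a minimal sketch of inverted dropout on a list of activations. The function name, the `rate` parameter, and the use of Python's `random` module are assumptions made for illustration; the study's own models would rely on the dropout layer of whichever deep learning framework was used.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Apply inverted dropout to a list of activations.

    During training each activation is zeroed with probability `rate`, and the
    survivors are scaled by 1 / (1 - rate) so the expected value is unchanged.
    At inference time the activations pass through untouched.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Because a different random subset of neurons is silenced on every training step, no single neuron can be relied on exclusively, which is the co-adaptation effect that dropout is meant to suppress.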

- Training the LSTM model on a GPU can significantly reduce training time. GPUs are equipped with more cores and higher memory bandwidth, which allows many matrix operations to run in parallel and makes them well suited to the extensive computations involved in deep learning models.

- In this study, an LSTM model was trained on a dataset of convergence results for the undamped, unforced Duffing equation. It achieved an accuracy of 95%, although the accuracy fluctuated. To address this, neural network hidden layers were added to the LSTM model, creating the LSTM-NN model. This modification raised the accuracy to 96% and reduced the fluctuations, but the additional hidden layers lengthened the training time.

- In this study, the LSTM model was also trained on a dataset of convergence results for the Duffing equation with damping and external forcing, focusing on single-cycle solutions. It achieved an accuracy of 97.5% with fast training. Adding hidden layers of neurons (LSTM-NN) raised the training accuracy to 98% with lower loss, but the larger number of hidden layers prolonged the training time.

- The LSTM model established in this study can predict the convergence result of the Duffing equation in the special case without damping or external force. Its accuracy is 96% when the predicted value is +1 and 86% when the predicted value is -1. The model with added hidden layers of neurons (LSTM-NN) was 87% accurate on +1 predictions and 98% accurate on -1 predictions. The LSTM-NN model is therefore more suitable for predicting binary data sets.

- When predicting the convergence range of the single-cycle Duffing equation with damping and external forces, the LSTM model established in this study shows a large deviation in the predicted displacement range but performs better in predicting the velocity range (the case of multi-solution prediction). The model with the added hidden layer of neurons (LSTM-NN) behaves the same way, but its prediction accuracy is lower than that of the plain LSTM model.
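The per-label accuracies quoted above for the +1 and -1 cases can be computed along the following lines. This sketch assumes each figure is the fraction of samples with a given true label that the model predicts correctly; if the figures were instead computed per predicted label, the roles of `y_true` and `y_pred` would be swapped. The function name and the sample data in the usage note are hypothetical.

```python
def per_class_accuracy(y_true, y_pred, classes=(1, -1)):
    """Return {label: fraction of samples with that true label predicted correctly}.

    A label that never occurs in y_true maps to None.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    result = {}
    for label in classes:
        indices = [i for i, y in enumerate(y_true) if y == label]
        if not indices:
            result[label] = None  # class absent from y_true
            continue
        correct = sum(1 for i in indices if y_pred[i] == label)
        result[label] = correct / len(indices)
    return result
```

For example, `per_class_accuracy([1, 1, -1, -1], [1, -1, -1, -1])` scores the two labels separately, which exposes the kind of asymmetry between +1 and -1 predictions that a single overall accuracy would hide.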

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Feng, G.Q. He’s frequency formula to fractal undamped Duffing equation. Journal of Low Frequency Noise, Vibration and Active Control 2021, 40, 1671–1676.

- Rao, S.S. Chapter 13 Nonlinear vibration. In Mechanical Vibrations, 6th ed.; Pearson Education, Inc.: New York, NY, USA, 2017.

- Akhmet, M.U.; Fen, M.O. Chaotic period-doubling and OGY control for the forced Duffing equation. Communications in Nonlinear Science and Numerical Simulation 2012, 17, 1929–1946.

- Hao, K. We analyzed 16,625 papers to figure out where AI is headed next. MIT Technology Review. Available online: https://www.technologyreview.com/2019/01/25/1436/we-analyzed-16625-papers-to-figure-out-where-ai-is-headed-next/ (accessed on 25 January 2019).

- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A fast learning algorithm for deep belief nets. Neural Computation 2006, 18, 1527–1554.

- Hinton, G.E. Learning multiple layers of representation. Trends in Cognitive Sciences 2007, 11, 428–434.

- Hinton, G.E.; Srivastava, N.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R.R. Improving neural networks by preventing co-adaptation of feature detectors. arXiv 2012, arXiv:1207.0580.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2015, arXiv:1412.6980.

- Srivastava, N.; Hinton, G.E.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. Journal of Machine Learning Research 2014, 15, 1929–1958.

- Potdar, K.; Taher, S.P.; Chinmay, D.P. A comparative study of categorical variable encoding techniques for neural network classifiers. International Journal of Computer Applications 2017, 175, 7–9.

- Mathia, K.; Saeks, R. Solving nonlinear equations using Recurrent Neural Networks. In Proceedings of the World Congress on Neural Networks (WCNN’95), Renaissance Hotel, Washington, DC, USA, 17–21 July 1995; Vol. I, pp. 76–80.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Computation 1997, 9, 1735–1780.

- Han, J.; Jentzen, A.; E, W. Solving high-dimensional partial differential equations using deep learning. Proceedings of the National Academy of Sciences 2018, 115, 8505–8510.

- Wang, Y.R.; Wang, Y.J. Flutter speed prediction by using deep learning. Advances in Mechanical Engineering 2021, 13, 11.

- Gawlikowski, J. A survey of uncertainty in deep neural networks. arXiv 2022, arXiv:2107.03342.

- Hua, Y. Deep learning with long short-term memory for time series prediction. IEEE Communications Magazine 2019, 57, 114–119.

- Hwang, K.; Sung, W. Single stream parallelization of generalized LSTM-like RNNs on a GPU. arXiv 2015, arXiv:1503.02852.

- Zheng, B.; Vijaykumar, N.; Pekhimenko, G. Echo: Compiler-based GPU memory footprint reduction for LSTM RNN training. In Proceedings of the 47th Annual International Symposium on Computer Architecture (ISCA), IEEE, Virtual Event, 30 May–3 June 2020; pp. 1089–1102.

- Tariq, S.; Lee, S.; Shin, Y.; Lee, M.S.; Jung, O.; Chung, D.; Woo, S.S. Detecting anomalies in space using multivariate convolutional LSTM with mixtures of probabilistic PCA. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2123–2133.

- Memarzadeh, G.; Keynia, F. Short-term electricity load and price forecasting by a new optimal LSTM-NN based prediction algorithm. Electric Power Systems Research 2021, 192, 106995.

| | Feature | | | | Label |
|---|---|---|---|---|---|
| | Initial Displ., x0 | Initial | Linear Spring Const., k | | |
| Range | [0.010~5.250] | [0.001~2.000] | [-1.72~-0.2] | [0.2~1.72] | [1; -1] |

| | Feature | | | | | |
|---|---|---|---|---|---|---|
| | Initial Displ., x0 | Initial | Damping Coef., c | Linear Spring Const., k | Force, Q | |
| Range | [0.30~1.26] | [0.30~1.26] | [0.03~0.24] | [0.45~1.75] | [0.45~1.75] | [0.30~0.72] |

| | Output data | | | |
|---|---|---|---|---|
| | Max Displ., xmax | Max | Min Displ., xmin | Min |
| Range | [0.38814 ~ 1.75069] | [-1.74609 ~ -0.25300] | [0.30012 ~ -3.95563] | [-3.95583 ~ -0.34118] |

| Configuration | Accuracy (%) | Loss (%) |
|---|---|---|
| 1 LSTM layer & 1 NN layer | 93.20 | 4.32 |
| 1 LSTM layer & 2 NN layers | 82.70 | 15.81 |
| 1 LSTM layer & 3 NN layers | 77.74 | 14.13 |
| 2 LSTM layers & 1 NN layer | 86.67 | 11.35 |
| 2 LSTM layers & 2 NN layers | 87.56 | 8.70 |
| 2 LSTM layers & 3 NN layers | 82.81 | 11.57 |
| 3 LSTM layers & 1 NN layer | 86.78 | 9.87 |
| 3 LSTM layers & 2 NN layers | 90.90 | 6.50 |
| 3 LSTM layers & 3 NN layers | 89.86 | 7.09 |
| 4 LSTM layers & 1 NN layer | 92.74 | 5.20 |
| 4 LSTM layers & 2 NN layers | 91.97 | 4.96 |
| 4 LSTM layers & 3 NN layers | 86.89 | 8.61 |
| 5 LSTM layers & 1 NN layer | 92.64 | 4.68 |
| 5 LSTM layers & 2 NN layers | 58.22 | 24.34 |
| 5 LSTM layers & 3 NN layers | 58.12 | 24.34 |

| Configuration | Accuracy (%) | Loss (%) |
|---|---|---|
| 1 LSTM layer & 1 NN layer | 97.57 | 1.22 |
| 1 LSTM layer & 2 NN layers | 87.47 | 1.90 |
| 1 LSTM layer & 3 NN layers | 87.40 | 1.14 |
| 2 LSTM layers & 1 NN layer | 98.13 | 0.29 |
| 2 LSTM layers & 2 NN layers | 98.00 | 0.87 |
| 2 LSTM layers & 3 NN layers | 98.24 | 0.29 |
| 3 LSTM layers & 1 NN layer | 98.14 | 0.07 |
| 3 LSTM layers & 2 NN layers | 97.58 | 0.34 |
| 3 LSTM layers & 3 NN layers | 95.12 | 0.37 |
| 4 LSTM layers & 1 NN layer | 97.61 | 0.08 |
| 4 LSTM layers & 2 NN layers | 97.10 | 0.12 |
| 4 LSTM layers & 3 NN layers | 97.62 | 0.54 |
| 5 LSTM layers & 1 NN layer | 96.80 | 0.09 |
| 5 LSTM layers & 2 NN layers | 97.37 | 0.13 |
| 5 LSTM layers & 3 NN layers | 97.33 | 0.23 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).