1. Introduction

Non-contact measurement technology for determining the trajectory and attitude changes of rigid moving targets has become a research focus with the rapid advancement of science and technology [1,2,3]. Visual technologies for measuring the pose of a target are widely used to obtain motion parameters. They mainly involve monocular, binocular, and multi-ocular measurements, each with its own advantages and disadvantages. Binocular and multi-ocular measurements suffer from a small field of view and difficulties in stereo matching. Monocular measurements, by contrast, offer a simple structure, a large field of view, strong real-time performance, and good accuracy. Monocular systems are thus widely used to measure the parameters of rigid-body motion [2,3,4,5].

Monocular visual pose measurement falls into two types according to the features selected: measurement of cooperative targets [9,10,11] and measurement of non-cooperative targets [12,13,14,15].

In cooperative target pose measurement, the spatial constraint relationship between the target feature points is controllable. This limits the application scope to some extent, but it reduces the difficulty of feature extraction, improves extraction accuracy, and reduces the complexity of the pose calculation. At present, research on cooperative target measurement methods has focused mainly on cooperative target design, feature extraction, pose calculation methods, and pose calculation errors [16,17,18,19,20].

However, most of these results are based on calibrated camera parameters, and there has been little research on pose estimation for cameras with varying focal lengths. In some practical problems, such as the measurement of multiple rigid-body motion parameters addressed in this article, the internal parameters of the camera must be adjusted frequently, and many factors affect their calibration accuracy. It is difficult to determine the internal parameters accurately in the absence of standard equipment. Research on pose estimation with uncalibrated cameras is therefore also important.

The main methods for solving the pose measurement problem of uncalibrated cameras are:
- the classic two-step method proposed by Tsai [21];
- the direct linear transform (DLT) method [22];
- Zhengyou Zhang's calibration method [23];
- the P4P and P5P methods [24];
- the AFUFPnP method [25].

Each has limitations:
- The two-step method is not suitable for dynamic measurement.
- The P4P and P5P methods are sensitive to noise because they estimate the internal and external parameters from only a few control points.
- Zhang's calibration method requires a planar chessboard to be installed on the measured object to ensure accuracy, which is inconvenient for a moving rigid body.
- The AFUFPnP method is a pose estimation method based on the EPnP [26] and POSIT [27] algorithms. It offers high pose estimation accuracy and calculation efficiency but low execution efficiency.

Against this background, this article builds on previous research on pose measurement. It derives, theoretically, a method for measuring the absolute pose of a moving rigid body and presents a complete implementation of the method. The feasibility and repeatability of the method are validated at different camera positions using a high-speed camera with a variable focal length.

2. Theoretical derivation of the method

2.1. Principle of the absolute pose measurement for a rigid body

As shown in Figure 1, the Body and World rigid bodies move relative to one another in space. For convenience of expression, the following coordinate systems are denoted B, W, and C hereafter:
- the body coordinate system $O_B\text{-}X_BY_BZ_B$ (B);
- the world coordinate system $O_W\text{-}X_WY_WZ_W$ (W);
- the camera coordinate system $O_C\text{-}X_CY_CZ_C$ (C).

We let $P$ and $Q$ denote physical points on the body and world rigid bodies, respectively. Superscripts distinguish the coordinate values of the physical points $P$ and $Q$ in different coordinate systems, and subscripts distinguish different points on a rigid body. For example, $P_3^W$ represents the coordinates of point $P_3$ on the body rigid body in the W system. Lowercase letters represent pixel points; e.g., $p$ represents the pixel point onto which physical point $P$ is projected in the image.

$R$ and $T$ represent the conversion relationships between coordinate systems. The superscript denotes the target coordinate system of the conversion, and the subscript denotes the starting coordinate system. For example, $R_C^W$ is the rotation matrix for conversion from the C system to the W system, and $T_B^W$ is the translation matrix for conversion from the B system to the W system.

The aim of this article is to measure two quantities: the motion pose of the B-system rigid body relative to the W system, and the real-time spatial coordinates of its center of mass P in the W system.

The imaging projection formulas are

Here, the scale factor is the ratio of the moduli of two vectors, and $p^C$ represents the spatial coordinates of pixel point $p$ in the C system.

It follows from (1) and (2) that

The motion pose of the rigid body can be solved by

, if

According to reference [28], the attitude angles of a rigid body are

Here, the three attitude angles are the rotation angles around the Z, Y, and X axes, respectively, with the rotations applied in the order Z, Y, X.
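To make the Z–Y–X convention concrete, the following minimal sketch (Python/NumPy) builds a rotation matrix and recovers the three attitude angles from its elements. Three assumptions apply. The symbols ψ (about Z), θ (about Y), and φ (about X) and the function names are hypothetical. The rotation order is taken to mean $R = R_z(\psi)R_y(\theta)R_x(\varphi)$. The singular case $|\theta| = 90°$ is ignored. The sketch is therefore a standard closed-form extraction and not necessarily identical to the formula in reference [28].

```python
import numpy as np


def rot_x(a):
    """Rotation by angle a (radians) about the X-axis."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):
    """Rotation by angle a (radians) about the Y-axis."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):
    """Rotation by angle a (radians) about the Z-axis."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def zyx_matrix(psi, theta, phi):
    """Assumed convention: R = Rz(psi) @ Ry(theta) @ Rx(phi)."""
    return rot_z(psi) @ rot_y(theta) @ rot_x(phi)


def zyx_angles(R):
    """Recover (psi, theta, phi) in radians; valid while |theta| < 90 degrees."""
    theta = -np.arcsin(np.clip(R[2, 0], -1.0, 1.0))  # R[2, 0] = -sin(theta)
    psi = np.arctan2(R[1, 0], R[0, 0])               # sin(psi)cos(theta), cos(psi)cos(theta)
    phi = np.arctan2(R[2, 1], R[2, 2])               # cos(theta)sin(phi), cos(theta)cos(phi)
    return float(psi), float(theta), float(phi)


# Round trip with a 20-degree rotation about X plus small Z and Y rotations.
psi0, theta0, phi0 = 0.3, -0.2, np.deg2rad(20.0)
R_example = zyx_matrix(psi0, theta0, phi0)
recovered = zyx_angles(R_example)
```

The round trip returns the input angles. The arctangent form is used for ψ and φ because it resolves the correct quadrant, which an arcsine alone cannot.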

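Equations (3) and (4) show what is needed to obtain the position of the center of mass in the W system and the attitude of the B system relative to the W system: the rotation and translation matrices between the W and C systems and between the B and C systems. The W–C matrices are determined from the control points on the W-system rigid body, whereas the B–C matrices are determined from the control points on the B-system rigid body. The absolute pose problem of a moving rigid body is thus transformed into a problem of solving these rotation and translation matrices.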
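One common way to chain the two calibrations is sketched below. Equations (1) to (4) are not reproduced in this text, so the form is an assumption. Suppose the DLT calibration of each control-point group yields an object-to-camera transform: $P^C = R_W^C P^W + T_W^C$ for the world group and $P^C = R_B^C P^B + T_B^C$ for the body group. Eliminating $P^C$ gives $R_B^W = (R_W^C)^{\mathsf T} R_B^C$ and $T_B^W = (R_W^C)^{\mathsf T}(T_B^C - T_W^C)$. A point with known B coordinates, such as the center of mass, can then be located in W. The function names and the synthetic poses are hypothetical.

```python
import numpy as np


def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def body_to_world(R_wc, T_wc, R_bc, T_bc):
    """Compose W->C and B->C extrinsics into the B->W transform.

    R_wc, T_wc: world-to-camera rotation (3x3) and translation (3,).
    R_bc, T_bc: body-to-camera rotation (3x3) and translation (3,).
    """
    R_cw = R_wc.T                  # inverse of a rotation is its transpose
    R_bw = R_cw @ R_bc             # attitude of B relative to W
    T_bw = R_cw @ (T_bc - T_wc)    # origin of B expressed in W
    return R_bw, T_bw


# Synthetic ground truth: body swung 20 degrees about X relative to the world.
R_bw_true = rot_x(np.deg2rad(20.0))
T_bw_true = np.array([0.05, 0.0, -0.03])   # arbitrary offset to exercise the translation term
R_wc = rot_z(0.4)                          # arbitrary camera orientation
T_wc = np.array([0.1, -0.2, 2.21])         # camera roughly 2.21 m away
R_bc = R_wc @ R_bw_true                    # implied body-to-camera extrinsics
T_bc = R_wc @ T_bw_true + T_wc

R_bw, T_bw = body_to_world(R_wc, T_wc, R_bc, T_bc)
p_body = np.array([0.0, 0.15, 0.0])        # hypothetical body point (m)
p_world = R_bw @ p_body + T_bw
```

In the turntable experiment, the B and W origins coincide, so the recovered $T_B^W$ would ideally be zero. The offset above is only there to check the translation algebra.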

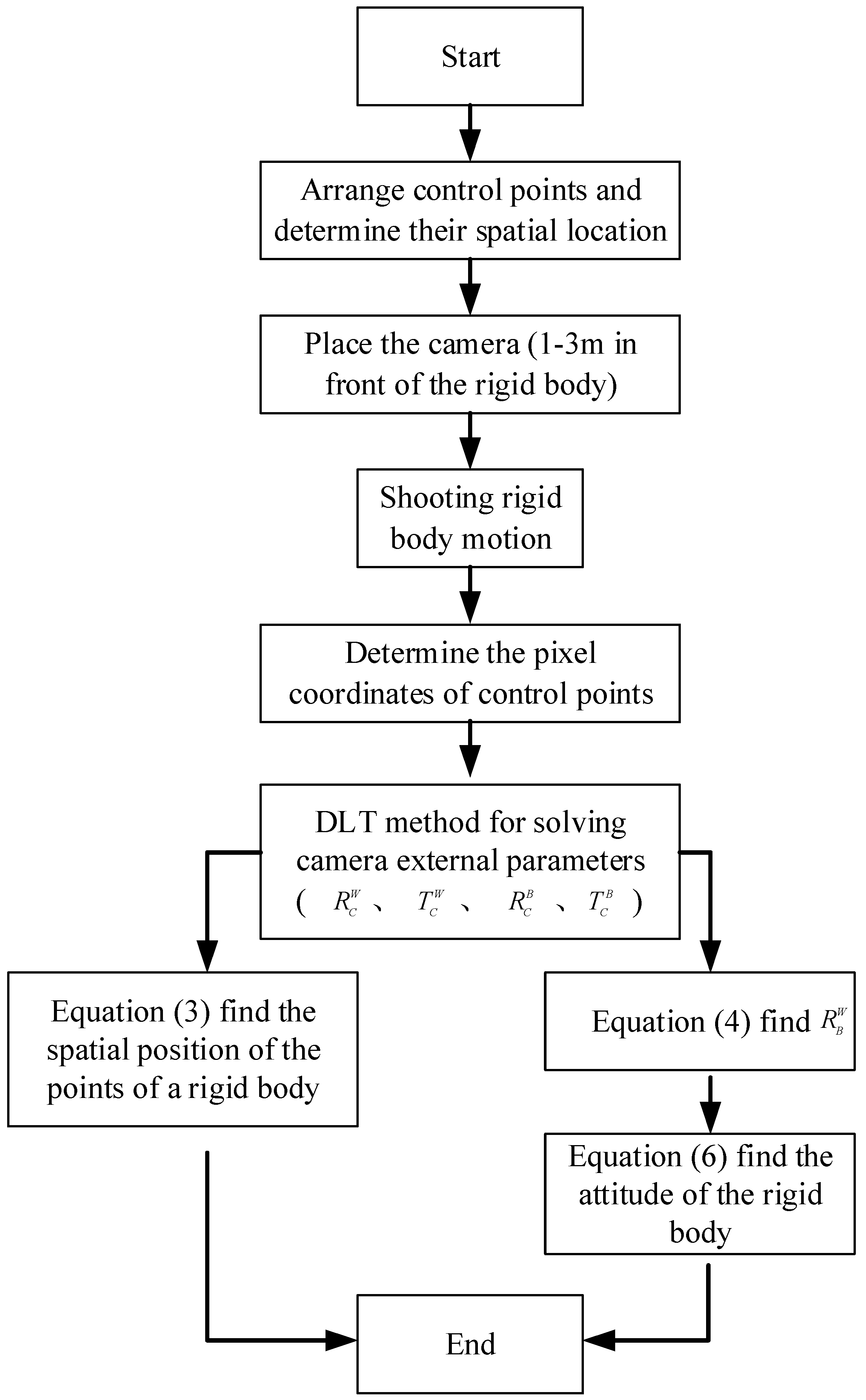

2.2. Implementation steps of the measurement method

The pose parameters of the rigid body are measured in the following steps:
1. A group of at least six non-coplanar control points is arranged on each of the W and B rigid bodies.
2. R and T for the W–C and B–C conversions are calibrated.
3. From these matrices, the coordinates of the B-system control points in the W system and the attitude changes of the B-system rigid body are calculated.
4. The remaining parameters are measured.

2.3. Determination of the rotation and translation matrices

This paper considers a high-speed camera with a variable focal length. To facilitate repeated experiments, the DLT camera calibration method [29] is adopted to obtain the camera rotation and translation matrices, because it is simple to operate and computationally efficient. The DLT method does not require approximate initial values of the interior orientation elements; unlike other space resection algorithms, it does not require initial values to be calculated manually. It is also suitable for calibrating non-metric digital cameras [30].

As shown in Figure 1, N (N ≥ 6) control points are arranged on each of the B and W rigid bodies. Take the control points on the body rigid body as an example. Let the spatial coordinates of the i-th control point be $(X_i, Y_i, Z_i)$ and its pixel coordinates be $(u_i, v_i)$, $i = 1, \dots, N$. It follows from the collinearity equation of the DLT method that

where $L_1, \dots, L_{11}$ denote coefficients representing the camera's internal and external parameters.
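For reference, the standard 11-parameter DLT model is given below; it is assumed here to correspond to equation (7), which is not reproduced in this text. It relates the spatial coordinates $(X_i, Y_i, Z_i)$ of control point $i$ to its pixel coordinates $(u_i, v_i)$:

$$u_i = \frac{L_1X_i + L_2Y_i + L_3Z_i + L_4}{L_9X_i + L_{10}Y_i + L_{11}Z_i + 1},\qquad v_i = \frac{L_5X_i + L_6Y_i + L_7Z_i + L_8}{L_9X_i + L_{10}Y_i + L_{11}Z_i + 1}$$

Multiplying out the common denominator gives two equations per point that are linear in $L_1, \dots, L_{11}$:

$$L_1X_i + L_2Y_i + L_3Z_i + L_4 - u_iX_iL_9 - u_iY_iL_{10} - u_iZ_iL_{11} = u_i$$

$$L_5X_i + L_6Y_i + L_7Z_i + L_8 - v_iX_iL_9 - v_iY_iL_{10} - v_iZ_iL_{11} = v_i$$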

Formula (7) contains 11 unknowns, and each control point provides two equations (one for u and one for v). At least six points, giving 12 equations, are therefore required to determine the 11 values. Because the number of equations then exceeds the number of unknowns, the least squares method is used to solve for L and thus obtain the external parameters of the camera.
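The least-squares step can be sketched in Python/NumPy as follows. The sketch assumes the standard 11-parameter DLT form, in which each control point contributes the two linear equations $L_1X + L_2Y + L_3Z + L_4 - u(L_9X + L_{10}Y + L_{11}Z) = u$ and $L_5X + L_6Y + L_7Z + L_8 - v(L_9X + L_{10}Y + L_{11}Z) = v$. The camera matrix, the eight synthetic control points, and the function names are hypothetical. Recovering the rotation and translation from L, for example by an RQ decomposition of the left 3×3 block, is not shown.

```python
import numpy as np


def dlt_solve(obj_pts, img_pts):
    """Least-squares estimate of the DLT coefficients L1..L11.

    obj_pts: (N, 3) spatial coordinates of non-coplanar control points, N >= 6.
    img_pts: (N, 2) measured pixel coordinates (u, v) of the same points.
    """
    obj_pts = np.asarray(obj_pts, dtype=float)
    img_pts = np.asarray(img_pts, dtype=float)
    n = len(obj_pts)
    if n < 6:
        raise ValueError("the 11-parameter DLT needs at least six control points")
    A = np.zeros((2 * n, 11))
    b = np.zeros(2 * n)
    for i, ((X, Y, Z), (u, v)) in enumerate(zip(obj_pts, img_pts)):
        # u-equation: L1 X + L2 Y + L3 Z + L4 - u (L9 X + L10 Y + L11 Z) = u
        A[2 * i] = [X, Y, Z, 1.0, 0.0, 0.0, 0.0, 0.0, -u * X, -u * Y, -u * Z]
        # v-equation: L5 X + L6 Y + L7 Z + L8 - v (L9 X + L10 Y + L11 Z) = v
        A[2 * i + 1] = [0.0, 0.0, 0.0, 0.0, X, Y, Z, 1.0, -v * X, -v * Y, -v * Z]
        b[2 * i], b[2 * i + 1] = u, v
    L, *_ = np.linalg.lstsq(A, b, rcond=None)
    return L


def dlt_project(L, obj_pts):
    """Map spatial points to pixel coordinates with the DLT model."""
    P = np.append(L, 1.0).reshape(3, 4)          # 3x4 matrix with P[2, 3] = 1
    homog = np.c_[obj_pts, np.ones(len(obj_pts))] @ P.T
    return homog[:, :2] / homog[:, 2:3]


# Synthetic check: hypothetical camera about 2.21 m from eight control points.
rng = np.random.default_rng(0)
pts = rng.uniform(-0.3, 0.3, size=(8, 3))
K = np.array([[1500.0, 0.0, 640.0], [0.0, 1500.0, 400.0], [0.0, 0.0, 1.0]])
c, s = np.cos(0.3), np.sin(0.3)
R = np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])
t = np.array([0.05, -0.02, 2.21])
P_true = K @ np.c_[R, t]
L_true = (P_true / P_true[2, 3]).ravel()[:11]    # normalize so that L12 = 1
pix = dlt_project(L_true, pts)
L_est = dlt_solve(pts, pix)
```

With noise-free pixels, the estimate reproduces the true coefficients. With real pixel measurements, the residual of the least-squares fit reflects measurement noise.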

The linear solution does not fully enforce the constraints on the rotation matrix, so its error can be large. To improve the accuracy, this paper first uses the Gauss–Newton method to solve the 11 L equations together with the six constraint equations of the rotation matrix iteratively. It then applies singular value decomposition (SVD) to the resulting rotation matrix: if $R = U\Sigma V^{\mathsf T}$, the corrected rotation matrix is $\hat{R} = UV^{\mathsf T}$. The rotation matrices for the W–C and B–C conversions are both corrected in this way. After this processing, the estimated attitude matrix strictly satisfies the inherent constraints of a rotation matrix, and the estimation error is effectively reduced.
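The SVD correction can be sketched as follows (Python/NumPy). The determinant check is an addition not stated in the text. It guards against the product $UV^{\mathsf T}$ being a reflection (determinant −1) rather than a rotation. The Gauss–Newton stage is not reproduced, and the noisy input matrix is synthetic.

```python
import numpy as np


def nearest_rotation(R_est):
    """Project a noisy 3x3 estimate onto the closest rotation matrix.

    With R_est = U diag(s) V^T, the closest orthogonal matrix in the
    Frobenius norm is U V^T. The diagonal sign fix (an added safeguard)
    keeps det = +1 so the result is a proper rotation.
    """
    U, _, Vt = np.linalg.svd(R_est)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    return U @ D @ Vt


# Synthetic example: a 20-degree rotation about X corrupted by small noise.
c, s = np.cos(np.deg2rad(20.0)), np.sin(np.deg2rad(20.0))
R_true = np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])
rng = np.random.default_rng(1)
R_noisy = R_true + 0.01 * rng.standard_normal((3, 3))   # no longer orthogonal
R_fixed = nearest_rotation(R_noisy)
```

The corrected matrix satisfies $\hat{R}\hat{R}^{\mathsf T} = I$ and $\det\hat{R} = 1$ exactly (to rounding). Because it is the closest rotation to the noisy estimate, its distance from the true rotation is at most twice the original error.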

3. Experiments and data analysis

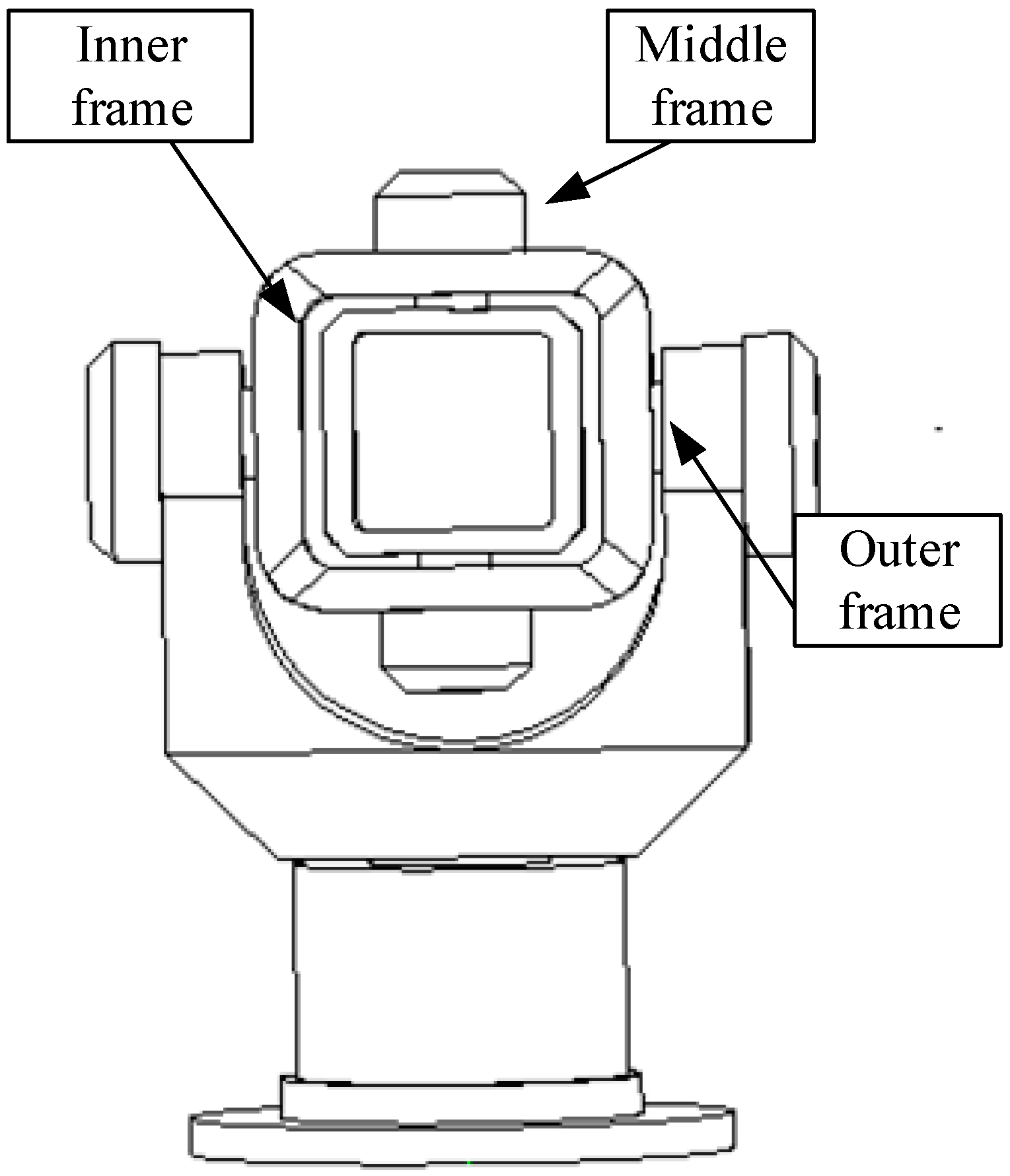

The experimental platform used in this study mainly comprised a three-axis turntable and a high-speed camera. The SGT320E three-axis turntable had an accuracy of 0.0001 degrees. Its mechanical structure consisted of a U-shaped outer frame rotating around the azimuth axis, an O-shaped middle frame rotating around the pitch axis, and an O-shaped inner frame rotating around the roll axis. The outer, middle, and inner frames can all be considered rigid bodies, as shown in

Figure 3. Each axis supported speed, position, and sinusoidal oscillation modes, and the three axes did not interfere with each other, enabling high-precision reference pose measurements. The Phantom M310 high-speed camera, produced by Vision Research in the United States, had adjustable resolution, frame rate, and exposure time.

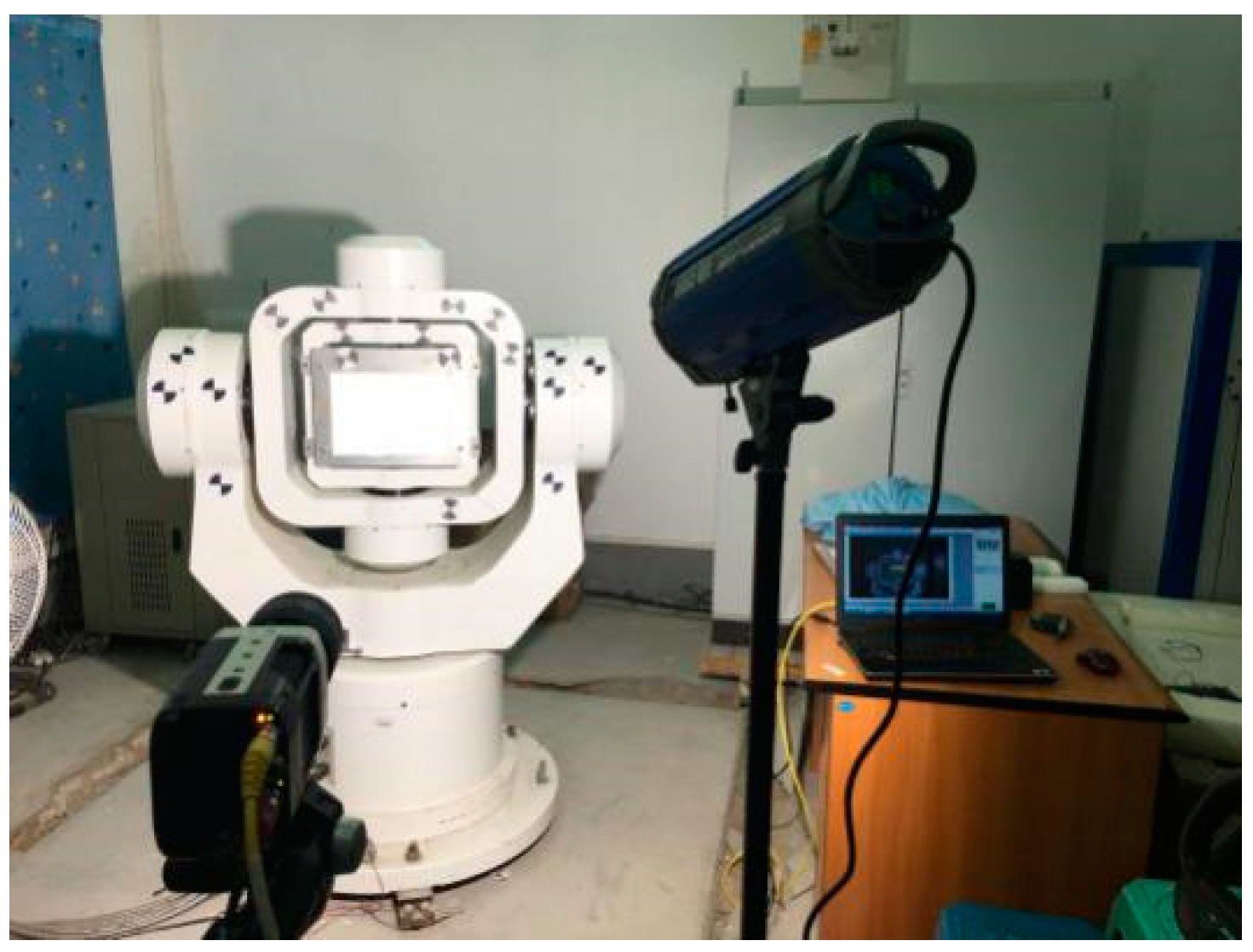

3.1. Establishment of the coordinate system and arrangement of control points

To simplify the arrangement of control points, the inner frame of the turntable was locked to the middle frame so that the two formed the body rigid body, while the outer frame served as the world rigid body. The origins of the B and W systems were both set at the center of the turntable. Given the construction of the turntable, this point was also the center of mass of the body rigid body, as shown in Figure 4.

Figure 5 shows the experimental scene and the actual layout of the marker points. Eight control points were arranged on the body rigid body (i.e., the middle frame), and eight were arranged on the world rigid body (i.e., the outer frame).

As the geometric structure and dimensions of the three-axis turntable were fixed, the three-dimensional coordinates of the control and test points on the middle and outer frames could be measured easily.

3.2. Determination of pose in the turntable measurement

For convenience of experimental verification, the world rigid body (i.e., the outer frame of the turntable) was kept stationary. As only the central axis rotates, the real-time attitude of the body rigid body changes only in the rotation angle about the X-axis, and the other two angles remain zero.

Given the rotation angle of the middle frame of the turntable about the X-axis, the real-time position coordinates of any point on the body rigid body in the W system are

Because the center of mass of the body rigid body lies at the common origin of the B and W systems, equation (8) implies that its coordinates in the W system remain (0, 0, 0) throughout the motion.
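Equation (8) is not reproduced in this text. Assume that the B and W axes coincide when the swing angle is zero, and write the swing angle about the X-axis as $\alpha$ (a hypothetical symbol with an assumed sign convention). The reference coordinates of a body point would then take the form of a pure rotation:

$$P^W = R_x(\alpha)\,P^B,\qquad R_x(\alpha) = \begin{bmatrix} 1 & 0 & 0\\ 0 & \cos\alpha & -\sin\alpha\\ 0 & \sin\alpha & \cos\alpha \end{bmatrix}$$

Under this form, the center of mass $P^B = (0, 0, 0)^{\mathsf T}$ maps to the W origin for every $\alpha$. Any other body point moves on a circle about the X-axis with its X-coordinate unchanged.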

3.3. Experiment on measuring the pose of a rigid body with a camera

The body rigid body was set to swing mode with an amplitude of 20 degrees, a frequency of 0.5 Hz, and a duration of 10 s. To ensure that the entire motion of the turntable was recorded, the high-speed camera was operated at 500 frames/s, and 16.68 s of video was collected. The test scene is shown in Figure 5.
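The reference motion and frame counts implied by these settings can be reproduced with a short sketch (Python/NumPy). It assumes the swing follows a zero-phase sine, α(t) = 20° · sin(2π · 0.5 Hz · t), and that every camera frame during the swing is synchronized with the turntable. Neither assumption is stated in the text, and the body point used below is hypothetical. At 500 frames/s, the 10 s swing spans 5000 frames (five full cycles), and the 16.68 s recording spans 8340 frames.

```python
import numpy as np

FPS = 500.0            # camera frame rate (frames/s), from the experiment
AMPLITUDE_DEG = 20.0   # swing amplitude
FREQ_HZ = 0.5          # swing frequency
SWING_S = 10.0         # swing duration (s)
RECORD_S = 16.68       # length of the recorded video (s)


def swing_angle_deg(t):
    """Assumed reference swing angle about X: a zero-phase sine."""
    return AMPLITUDE_DEG * np.sin(2.0 * np.pi * FREQ_HZ * t)


def rot_x(a_rad):
    c, s = np.cos(a_rad), np.sin(a_rad)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


n_record = round(RECORD_S * FPS)   # frames in the whole video
n_swing = round(SWING_S * FPS)     # frames covering the swing
n_cycles = SWING_S * FREQ_HZ       # full swing cycles
t = np.arange(n_swing) / FPS
alpha = swing_angle_deg(t)

# Reference W-coordinates of a body point and of the center of mass (B origin).
p_body = np.array([0.0, 0.15, 0.0])   # hypothetical point 15 cm from the axis
p_world = np.array([rot_x(np.deg2rad(a)) @ p_body for a in alpha])
com_world = np.array([rot_x(np.deg2rad(a)) @ np.zeros(3) for a in alpha])
```

Comparing camera-derived angles and positions against `alpha`, `p_world`, and `com_world`, frame by frame, gives the per-frame errors from which summary statistics can be computed.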

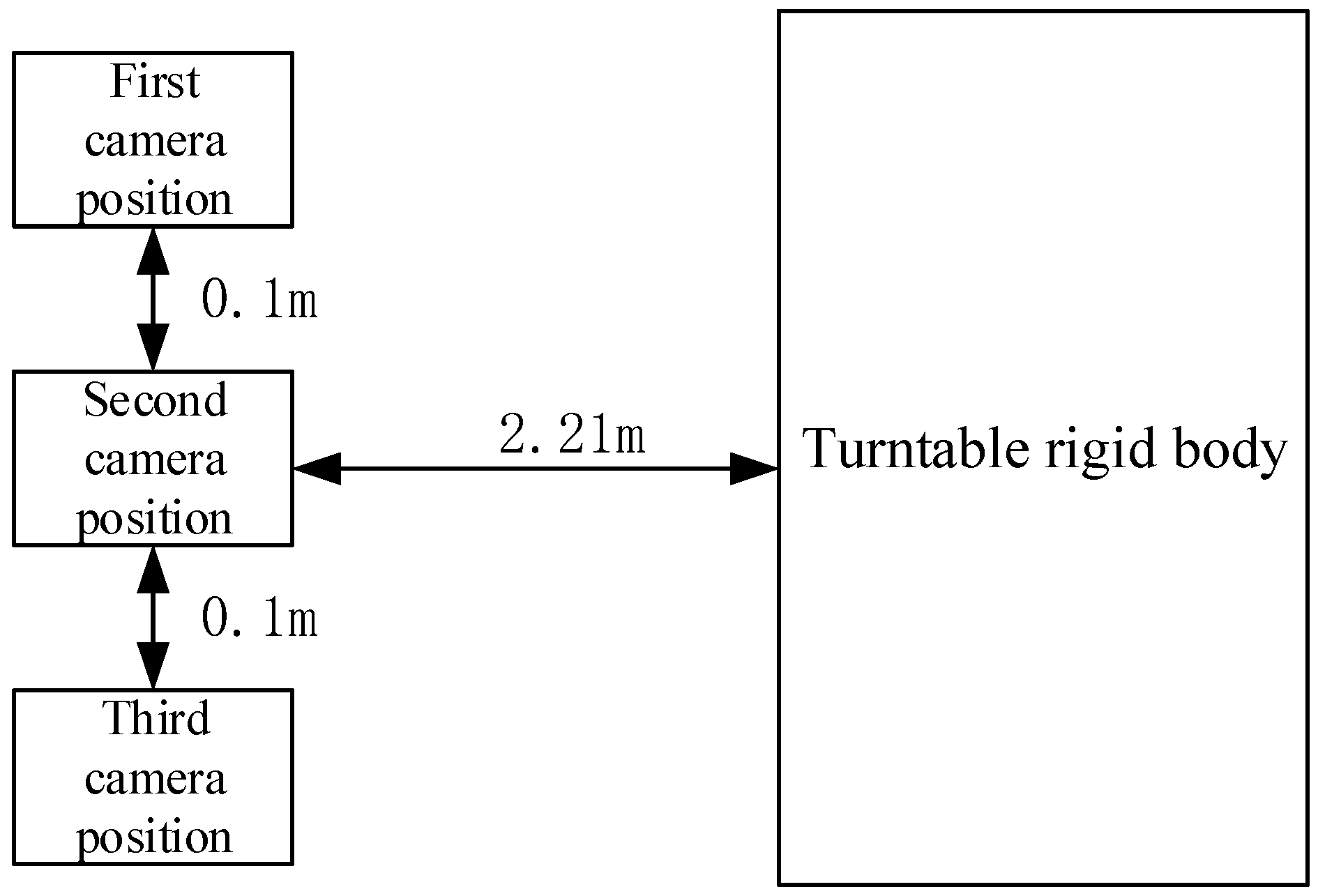

To verify the repeatability of measurements made with the proposed method, the camera was placed at three positions, each 2.21 m from the rigid body. At each position, it measured the swing motion of the central axis of the turntable, as shown in Figure 6.

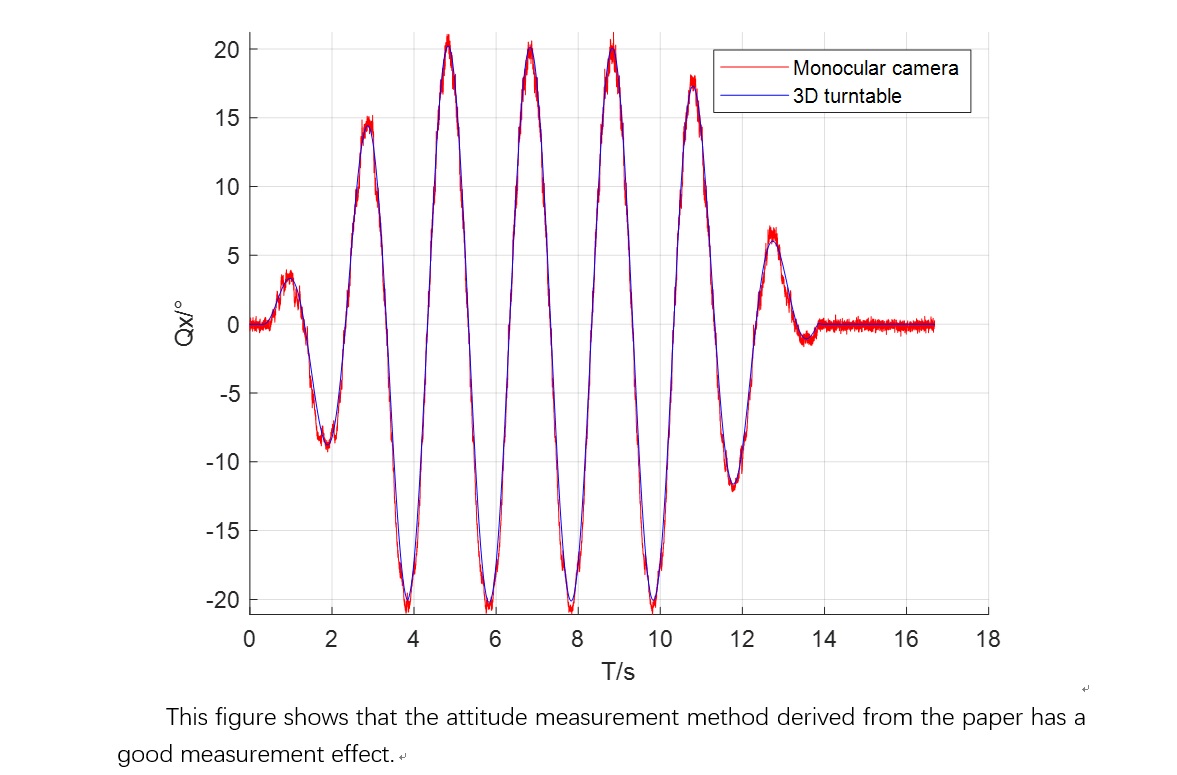

3.3.1. Attitude measurement and analysis

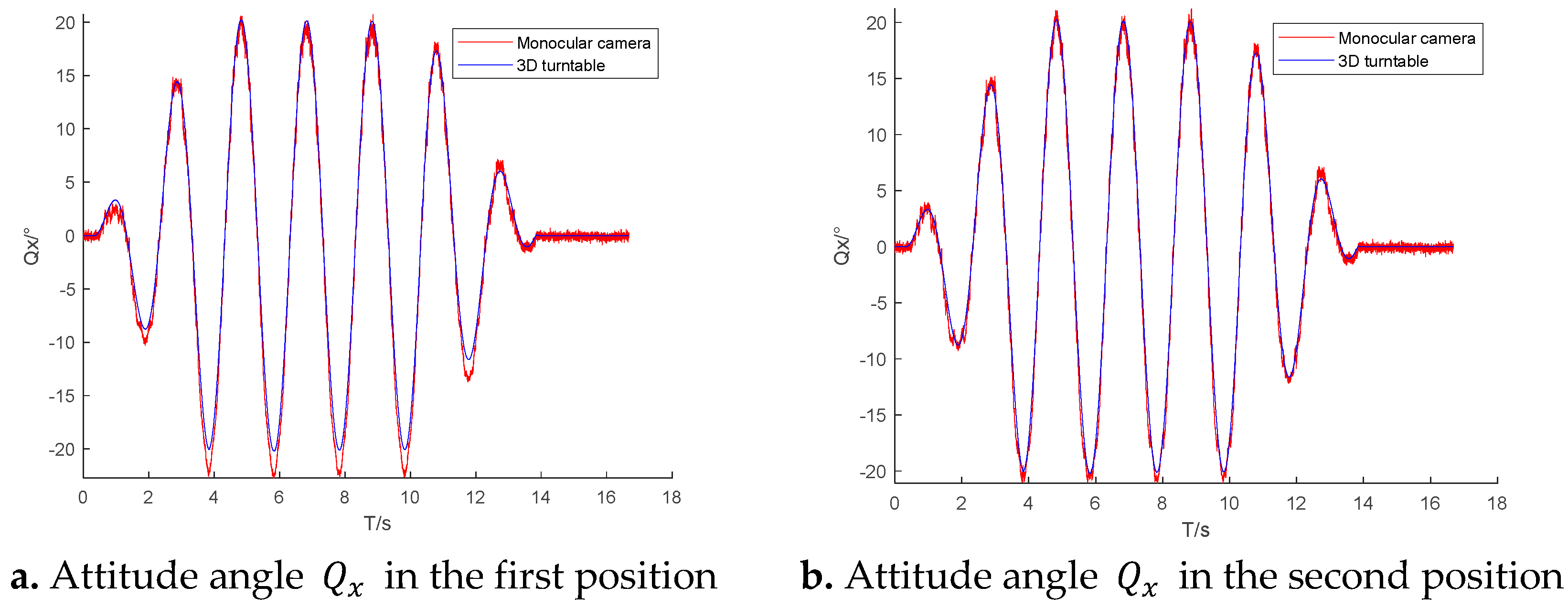

We used the high-speed camera to record video frames of the dynamic changes in the middle and outer frames of the turntable. To minimize the influence of pixel-coordinate extraction on the pose measurements, the pixel coordinates of the control points were determined using TEMA, an advanced motion analysis tool developed by Image Systems AB in Sweden. We applied the DLT method described above and then used formulas (4) and (6) to obtain the attitude of the turntable. Dynamic changes in the attitude of the turntable are shown in Figure 7.

Figure 7 shows the change in the measured attitude angle of the rigid body when the camera is at a distance of 2.21 m.

Figure 7a–c show the real-time change in the attitude angle of the middle frame of the turntable about the X-axis, as measured by the camera at the three positions.

Table 1 compares the mean absolute error and standard deviation of the attitude angle measured at the three camera positions.

Figure 7a–c and Table 1 reveal that the error in the X-axis attitude angle was smallest at the second camera position. The mean absolute errors of the attitude angle measured at the three positions differ by no more than 0.2 degrees.
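The statistics in Table 1, and the comparison across positions, can be computed from per-frame errors as sketched below (Python/NumPy). The text does not state the following choices, so they are assumptions:
- the error is the camera-measured angle minus the turntable reference angle at synchronized frames;
- the standard deviation is the sample standard deviation (ddof = 1) of the signed errors;
- the difference between positions is the range (maximum minus minimum).

The numbers in the example are toy values, not experimental data.

```python
import numpy as np


def error_stats(measured_deg, reference_deg):
    """Mean absolute error and sample standard deviation of the angle error."""
    err = np.asarray(measured_deg, dtype=float) - np.asarray(reference_deg, dtype=float)
    return float(np.mean(np.abs(err))), float(np.std(err, ddof=1))


def spread(per_position_values):
    """Largest difference between camera positions (max minus min)."""
    v = np.asarray(per_position_values, dtype=float)
    return float(v.max() - v.min())


# Toy data (not the experimental measurements): three synchronized frames.
mae, sd = error_stats([1.0, 2.5, -0.5], [1.2, 2.0, -0.5])
# Hypothetical per-position mean absolute errors (degrees).
mae_range = spread([0.81, 0.70, 0.75])
```

Under these assumptions, a claim such as "the mean absolute errors differ by no more than 0.2 degrees" corresponds to `spread(...) <= 0.2` applied to the three per-position values.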

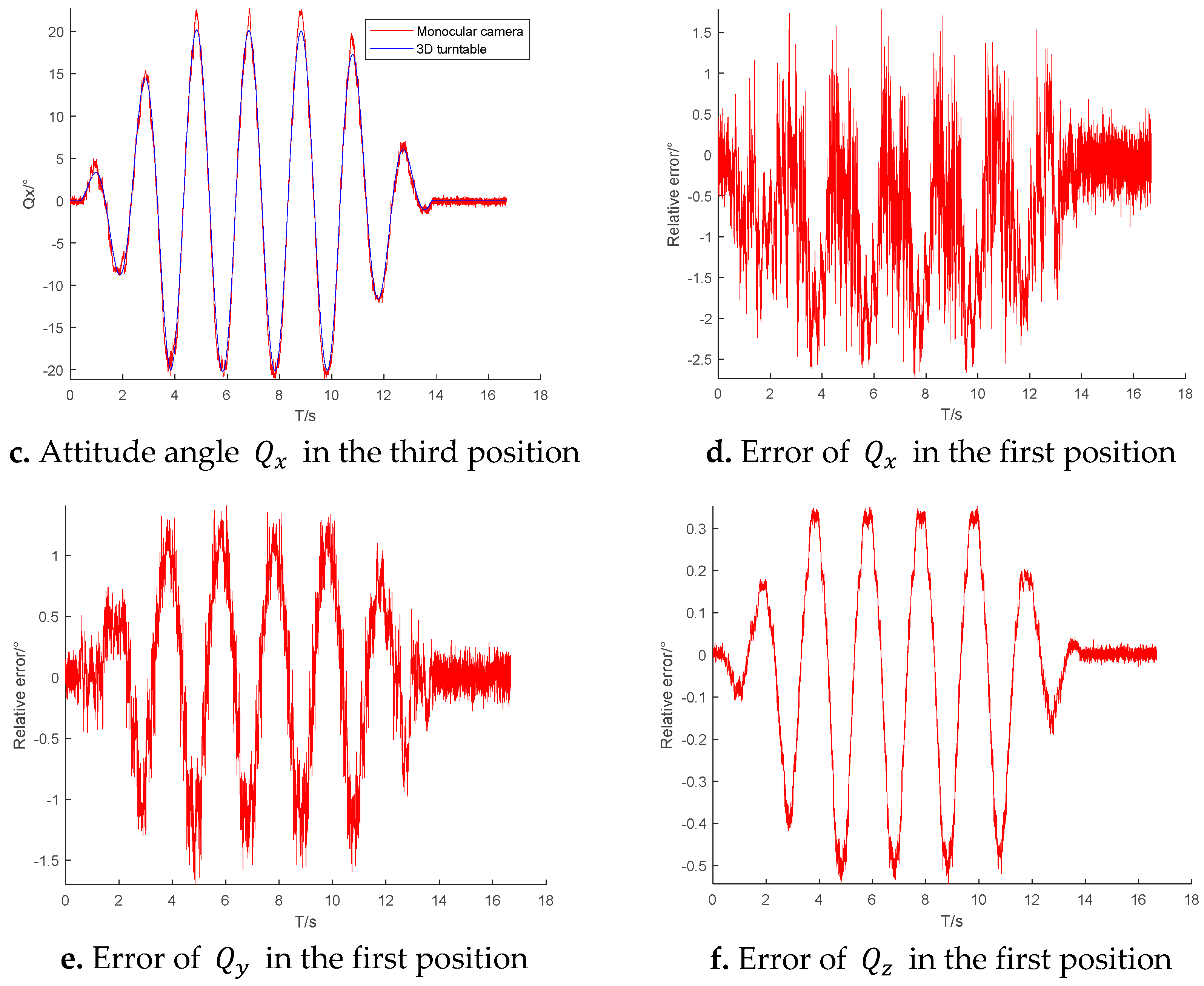

Figure 7d shows the error in the X-axis attitude angle measured at the first camera position. The error increases with the tilt of the rigid body relative to the camera lens. The mean absolute error is 0.8092 degrees, the standard deviation is 0.6623 degrees, and individual errors reach 2.5 degrees.

Figure 7e,f show that, although in theory only the X-axis attitude angle changes, the camera still measures errors in the attitude angles about the Y- and Z-axes. For one of these two angles, the mean absolute error is 0.186 degrees and the standard deviation is 0.641 degrees. For the other, the mean absolute error is −0.032 degrees and the standard deviation is 0.222 degrees.

3.3.2. Measurement and analysis of the position of the center of gravity of the rigid body

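The DLT method described above was used to determine the rotation and translation matrices for the W–C and B–C conversions. The centroid of the rigid body lies at the origin of the B system, with coordinates (0, 0, 0). Its real-time position changes were calculated using formula (3).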

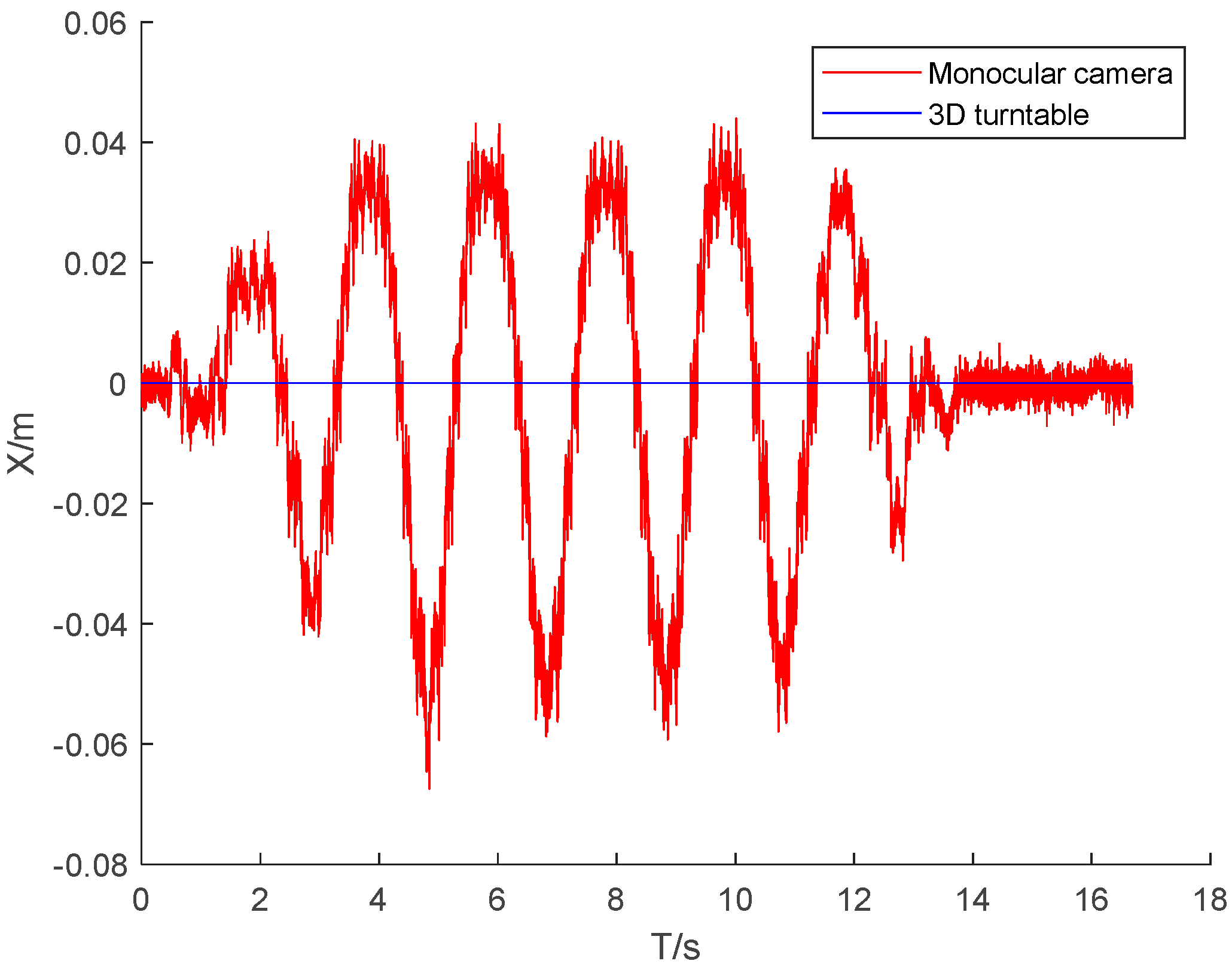

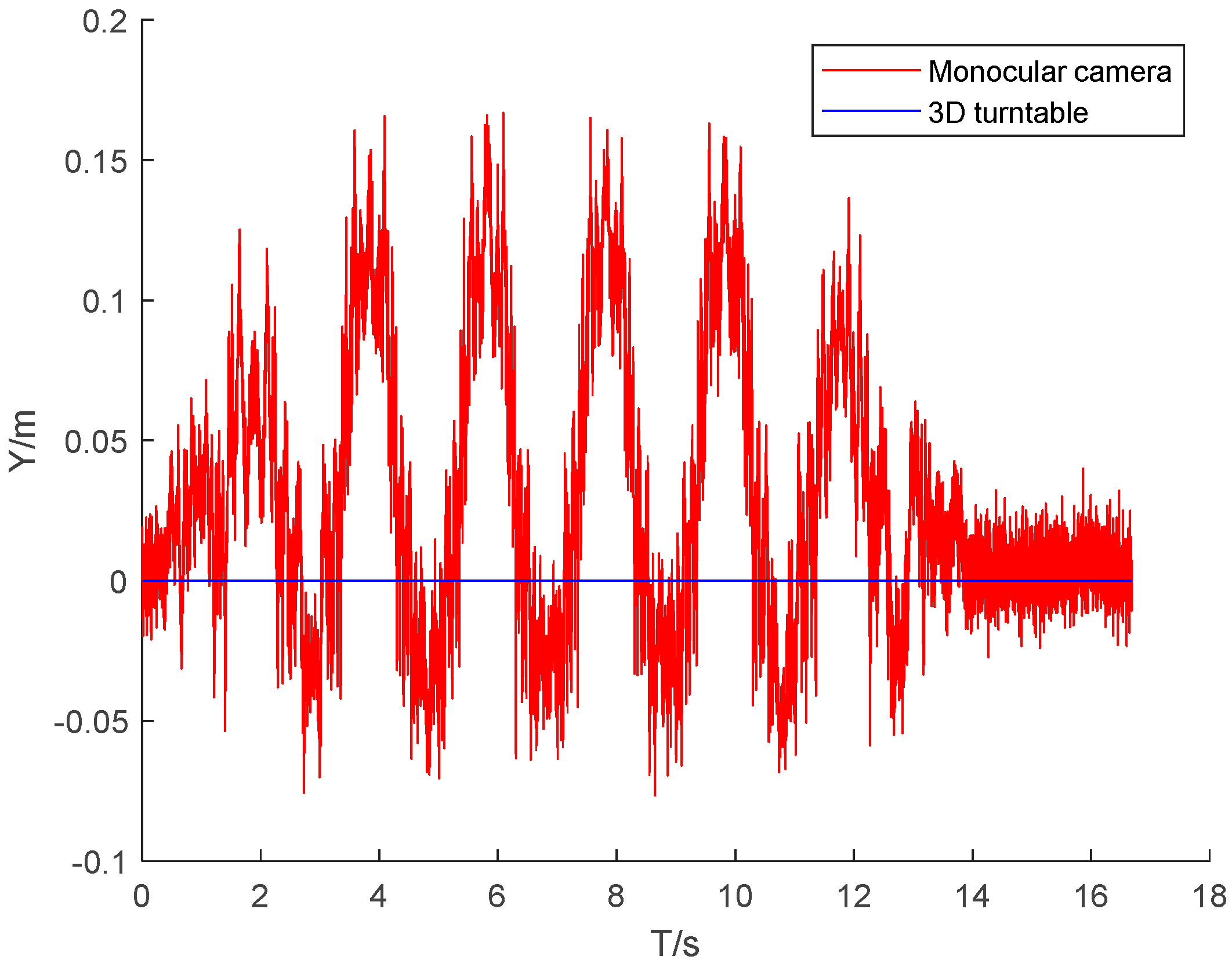

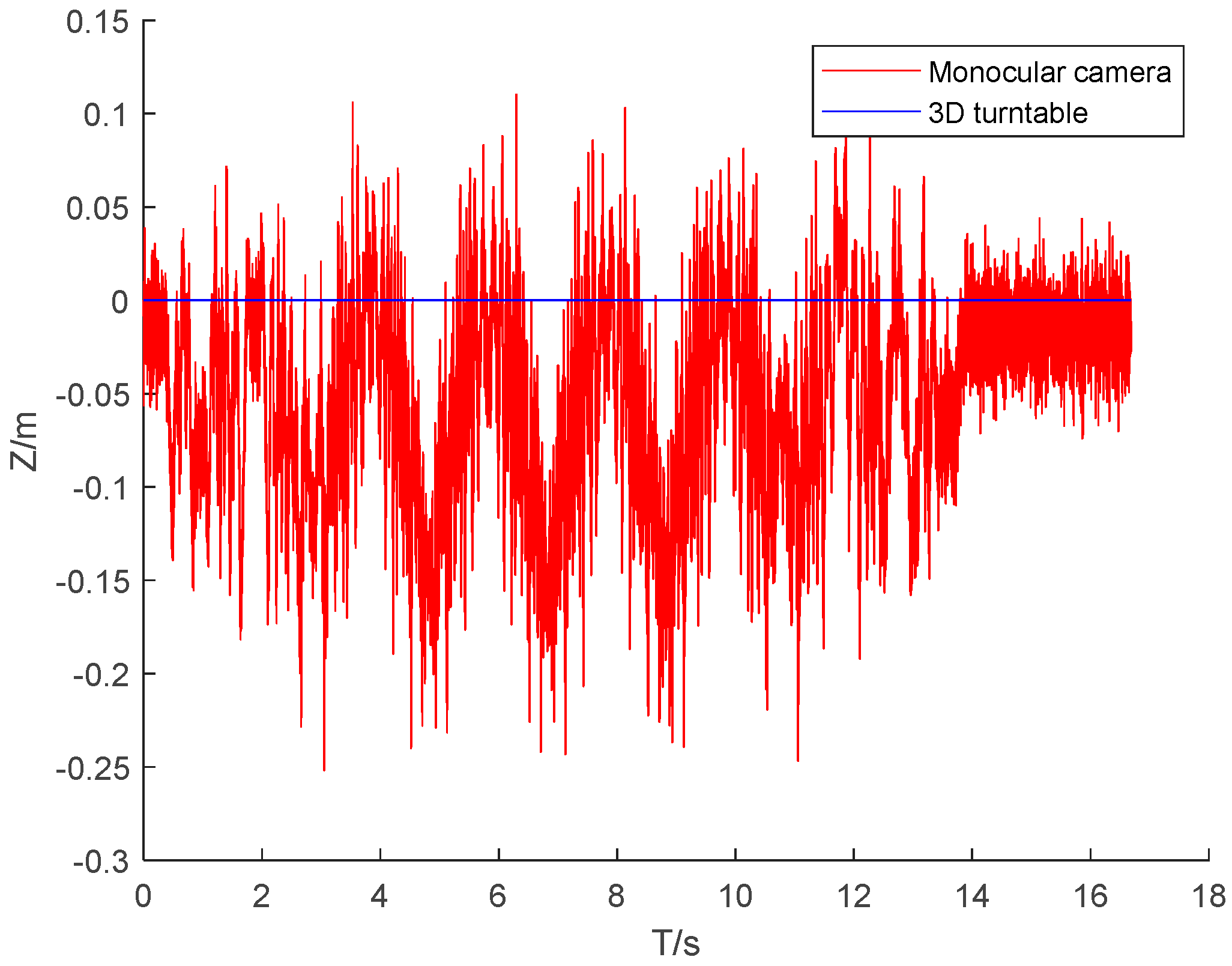

Figures 8, 9, and 10 present the three-dimensional coordinate changes of the body's center of mass as measured by the monocular camera at the first position. The error in the three-dimensional position of the center of mass increased with the tilt of the rigid body relative to the camera lens. This is because, as the tilt angle increased, the non-coplanar control points constrained the pose less strongly and their imaging quality deteriorated.

The error in the X-axis coordinate of the center was much smaller than the errors in the other two coordinates. This is because the swinging motion of the middle frame is a rotation about the X-axis, so all control points provided the strongest constraint along the X-axis throughout the motion.

Table 2 gives the errors in the center-of-mass coordinates measured at the three camera positions. The errors were small at all three positions. Across positions, the maximum deviation of the mean absolute error was 0.53 cm and that of the standard deviation was 0.66 cm. This indicates that the measurement is insensitive to camera position and that repeated measurements can be made.

4. Conclusions

This article systematically derived, from a camera imaging model, a method for measuring the absolute pose of a moving rigid body with a monocular camera. A turntable test verified that the proposed method can measure position and attitude accurately in repeated measurements within the camera's field of view.

For attitude, the mean absolute errors measured at different camera positions differed by no more than 0.2 degrees. At the first camera position, the error in the measured attitude angle increased with the tilt of the rigid body relative to the camera lens: the mean absolute error was 0.8092 degrees, the standard deviation was 0.6623 degrees, and individual errors reached 2.5 degrees.

For the center-of-mass position, the measurements at the three camera positions deviated only slightly: the maximum deviation of the mean absolute error was 0.53 cm, and that of the standard deviation was 0.66 cm. The measurement is thus insensitive to camera placement, and repeated measurements can be made.

The proposed method requires two sets of marker points, which may not be feasible in every application. It is nevertheless suitable for non-professional and low-cost measurement applications.

Author Contributions

Conceptualization, S.G.; methodology, S.G. and Z.Z.; validation, S.G. and L.G.; formal analysis, Z.Z.; investigation, M.W. and L.G.; resources, Z.Z.; writing—original draft preparation, S.G. and M.W.; writing—review and editing, L.G. and Z.Z.; visualization, S.G. and L.G.; supervision, Z.Z. and M.W.; project administration, S.G.; funding acquisition, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Common Key Technology R&D Innovation Team Construction Project of Modern Agriculture of Guangdong Province, China (grant no. 2021KJ129).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Restrictions apply to the availability of the data. Data can be obtained from the first author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wei, Y.; Ding, Z.R.; Huang, H.C.; Yan, C.; Huang, J.X.; Leng, J.X. A non-contact measurement method of ship block using image-based 3D reconstruction technology. Ocean Engineering 2019, 178, 463–475.

- Luo, H.; Zhang, K.; Su, Y.; Zhong, K.; Li, Z.W.; Guo, J.; Guo, C. Monocular vision pose determination-based large rigid-body docking method. Measurement 2022, 204, 112049.

- Wang, W.X. Research on the monocular vision position and attitude parameters measurement system. Master's Thesis, Harbin Institute of Technology, China, 2013.

- Audira, G.; Sampurna, B.P.; Juniardi, S.; Liang, S.T.; Lai, Y.-H.; Hsiao, C.D. A Simple Setup to Perform 3D Locomotion Tracking in Zebrafish by Using a Single Camera. Inventions 2018, 3, 11.

- Chen, X.; Yang, Y.H. A Closed-Form Solution to Single Underwater Camera Calibration Using Triple Wavelength Dispersion and Its Application to Single Camera 3D Reconstruction. IEEE Transactions on Image Processing 2017, 26, 4553–4561.

- Liu, W.; Chen, L.; Ma, X.; et al. Monocular position and pose measurement method for high-speed targets based on colored images. Chinese Journal of Scientific Instrument 2016, 37, 675–682.

- Wang, P.; Sun, X.H.; Sun, C.K. Optimized selection of LED feature points coordinate for pose measurement. Journal of Tianjin University (Natural Science and Engineering Technology Edition) 2018, 51, 315–324.

- Sun, C.; Sun, P.; Wang, P. An improvement of pose measurement method using global control points calibration. PLoS One 2015, 10, e0133905.

- Adachi, T.; Hayashi, N.; Takai, S. Cooperative Target Tracking by Multiagent Camera Sensor Networks via Gaussian Process. IEEE Access 2022, 10, 71717–71727.

- Wang, Y.; Yuan, F.; Jiang, H.; Hu, Y.H. Novel camera calibration based on cooperative target in attitude measurement. Optik 2016, 127, 10457–10466.

- Wang, X.J.; Cao, Y.; Zhou, K. Single camera space pose measurement method for two-dimensional cooperative target. Optical Precision Engineering 2017, 25, 274–280.

- Sun, D.; Hu, L.; Duan, H.; Pei, H. Relative Pose Estimation of Non-Cooperative Space Targets Using a TOF Camera. Remote Sens. 2022, 14, 6100.

- Peng, J.; Xu, W.; Liang, B.; Wu, A.G. Pose Measurement and Motion Estimation of Space Non-Cooperative Targets Based on Laser Radar and Stereo-Vision Fusion. IEEE Sensors Journal 2019, 19, 3008–3019.

- Zhao, L.K.; Zheng, S.Y.; Wang, X.N.; et al. Rigid object position and orientation measurement based on monocular sequence. Journal of Zhejiang University (Engineering Science) 2018, 52, 2372–2381.

- Peng, J.; Xu, W.; Yan, L.; Pan, E.; Liang, B.; Wu, A.G. A Pose Measurement Method of a Space Noncooperative Target Based on Maximum Outer Contour Recognition. IEEE Transactions on Aerospace and Electronic Systems 2020, 56, 512–526.

- Su, J.D.; Qi, X.H.; Duan, X.S. Plane pose measurement method based on monocular vision and checkerboard target. Acta Optica Sinica 2017, 37, 218–228.

- Mo, S.W.; Deng, X.P.; Wang, S.; et al. Moving object detection algorithm based on improved visual background extractor. Acta Optica Sinica 2016, 36, 0615001.

- Chen, Z.K.; Xu, A.; Wang, F.B.; et al. Pose measurement of target based on monocular vision and circle structured light. Journal of Applied Optics 2016, 37, 680–685.

- Li, J.; Besada, J.A.; Bernardos, A.M.; et al. A Novel System for Object Pose Estimation Using Fused Vision and Inertial Data. Information Fusion 2016, 33, 15–28.

- Wang, X.; Yu, H.; Feng, D. Pose estimation in runway end safety area using geometry structure features. Aeronautical Journal 2016, 120, 675–691.

- Ma, X.P.; Li, D.; Yao, X.N.; et al. Camera Calibration of Visual Dispensing System Based on Tsai's Two-step Method. Automation & Instrumentation 2018, 33, 1–4.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Readings in Computer Vision 1987, 24, 726–740.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Transactions on Pattern Analysis and Machine Intelligence 2000, 22, 1330–1334.

- Guo, Y.; Xu, X.H. An analytic solution for the P5P problem with an uncalibrated camera. Chinese Journal of Computers 2007, 30, 1195–1200.

- Cao, F.; Zhang, J.J.; Zhu, Y.K. An accurate algorithm for uncalibrated camera pose estimation. Computer Measurement & Control 2016, 24, 209–212.

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An accurate O(n) solution to the PnP problem. International Journal of Computer Vision 2009, 81, 155–166.

- Li, S.J.; Liu, X.P. High-precision and fast solution of camera pose. Chinese Journal of Image and Graphics 2014, 19, 20–27.

- Li, R.C.; Ye, S.X. A monocular method of pose detection for SDOFA. Optics & Optoelectronic Technology 2019, 17, 56–62.

- Přibyl, B.; Zemčík, P.; Čadík, M. Absolute pose estimation from line correspondences using direct linear transformation. Computer Vision and Image Understanding 2017, 161, 130–144.

- Wang, Q.S. A model based on DLT improved three-dimensional camera calibration algorithm research. Geomatics & Spatial Information Technology 2016, 39, 207–210.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).