1. Introduction

When objects become occluded, the information available to the visual system diminishes, which makes object recognition more difficult [1]. However, humans excel at identifying partially occluded objects, a frequently occurring circumstance in everyday interaction with natural environments [2]. In recent years, with the advent of deep learning, computer vision algorithms have demonstrated the capability to handle simple target recognition [3,4,5]; however, they still fall short of human-level performance in highly occluded object recognition tasks [6]. The neural mechanisms underlying the ability to efficiently recognize objects under high occlusion remain to be fully understood.

Recently, an anatomical model for occluded object recognition was proposed on the basis of the Golin incomplete image recognition task [7], dividing object recognition into two primary stages: visual processing and semantic classification. According to one functional magnetic resonance imaging (fMRI) study, low-level visual regions exhibited more activation in the occluded condition than in the non-occluded one [8]. Another study suggested that low-level visual regions encoded only spatial information about occluded objects, while high-level visual regions were responsible for representing their identities [9].

Although such studies provided insight into object processing under occlusion, they mainly examined information transfer and processing in visual regions of the brain [10,11]. Considering the significant cognitive demands of highly occluded object recognition, the involvement of the prefrontal cortex (PFC) is also to be expected [12,13]. Neurophysiological recordings in rhesus monkeys showed that signals in the PFC and visual cortex can interact to recognize objects under occlusion [14]. Additionally, anatomical research revealed that the PFC receives direct projections from the visual cortex [15] and sends reciprocal projections back [16]. Given these findings, we hypothesize that the interaction between the PFC and occipital lobe could play a crucial role in object recognition when occlusion hampers perceptual judgments.

To test this hypothesis, we conducted an fMRI experiment on object recognition at three degrees of occlusion. Using general linear model (GLM) analysis, multivariate pattern analysis (MVPA), and psychophysiological interaction (PPI) analysis, we characterized the functional brain representation of highly occluded object recognition. These analyses addressed three questions: Do the PFC and occipital regions play a pivotal role in the processing of highly occluded objects? If so, which specific brain regions take part in this process? Do the interactions and contributions of these areas differ significantly across degrees of occlusion?

2. Methods

2.1 Participants

Altogether 66 university students participated in this study, and two subjects were excluded from the final fMRI analysis due to poor imaging quality. Eventually, 64 subjects (32 women, mean age of 22 years, aged between 19 and 26 years) were included for the final analysis. All subjects were right-handed, presented no previous record of psychiatric or neurological disorders, and had refrained from any medication usage prior to the experiment. All subjects completed an informed consent form and an MRI safety screening questionnaire before entering the MRI scanner and received compensation for their participation. The Ethics Committee of Henan Provincial People's Hospital granted approval for all experimental design protocols. All research procedures were in agreement with related standards and regulations.

2.2 Stimuli

The initial experimental stimulus material was sourced from the publicly accessible SynISAR simulation dataset [17], from which two aircraft images were chosen as recognition objects. The object images were adjusted to ensure similarity in size and contrast. To produce an occluded image, black squares of varying sizes were iteratively added at random positions on the image. Following a recent study on occluded object recognition [6], we defined three levels of occlusion: 10% (baseline condition), 70%, and 90%. Instead of using a completely clear target, we used 10% occlusion as the baseline condition to eliminate any potential interference from the black squares. The resulting image set consisted of six conditions: 2 targets × 3 levels of occlusion (Figure 1A). For each condition, fifty sample images were generated by altering the orientation of the aircraft and the position of occlusion.
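The occlusion procedure described above can be sketched as follows. This is a minimal illustration, not the generation script used in the study: the image size, the range of square sizes, and the choice to measure occlusion as a fraction of the whole image (rather than of the aircraft silhouette) are all assumptions, and the function names are hypothetical.

```python
import random


def occlude(height, width, target_fraction, min_side=4, max_side=16, seed=None):
    """Return a boolean mask (True = occluded pixel).

    Black squares with random side length and position are added one by one
    until at least `target_fraction` of the pixels are covered.
    Assumes max_side <= min(height, width).
    """
    rng = random.Random(seed)
    mask = [[False] * width for _ in range(height)]
    covered = 0
    total = height * width
    while covered / total < target_fraction:
        side = rng.randint(min_side, max_side)
        top = rng.randint(0, height - side)
        left = rng.randint(0, width - side)
        for r in range(top, top + side):
            for c in range(left, left + side):
                if not mask[r][c]:  # count overlapping pixels only once
                    mask[r][c] = True
                    covered += 1
    return mask


def occluded_fraction(mask):
    """Fraction of pixels marked as occluded."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


# One mask per occlusion level defined in the text (10%, 70%, 90%).
masks = {level: occlude(64, 64, level, seed=0) for level in (0.10, 0.70, 0.90)}
```

Because overlapping squares are counted only once, the achieved coverage overshoots the target by at most the area of one square.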

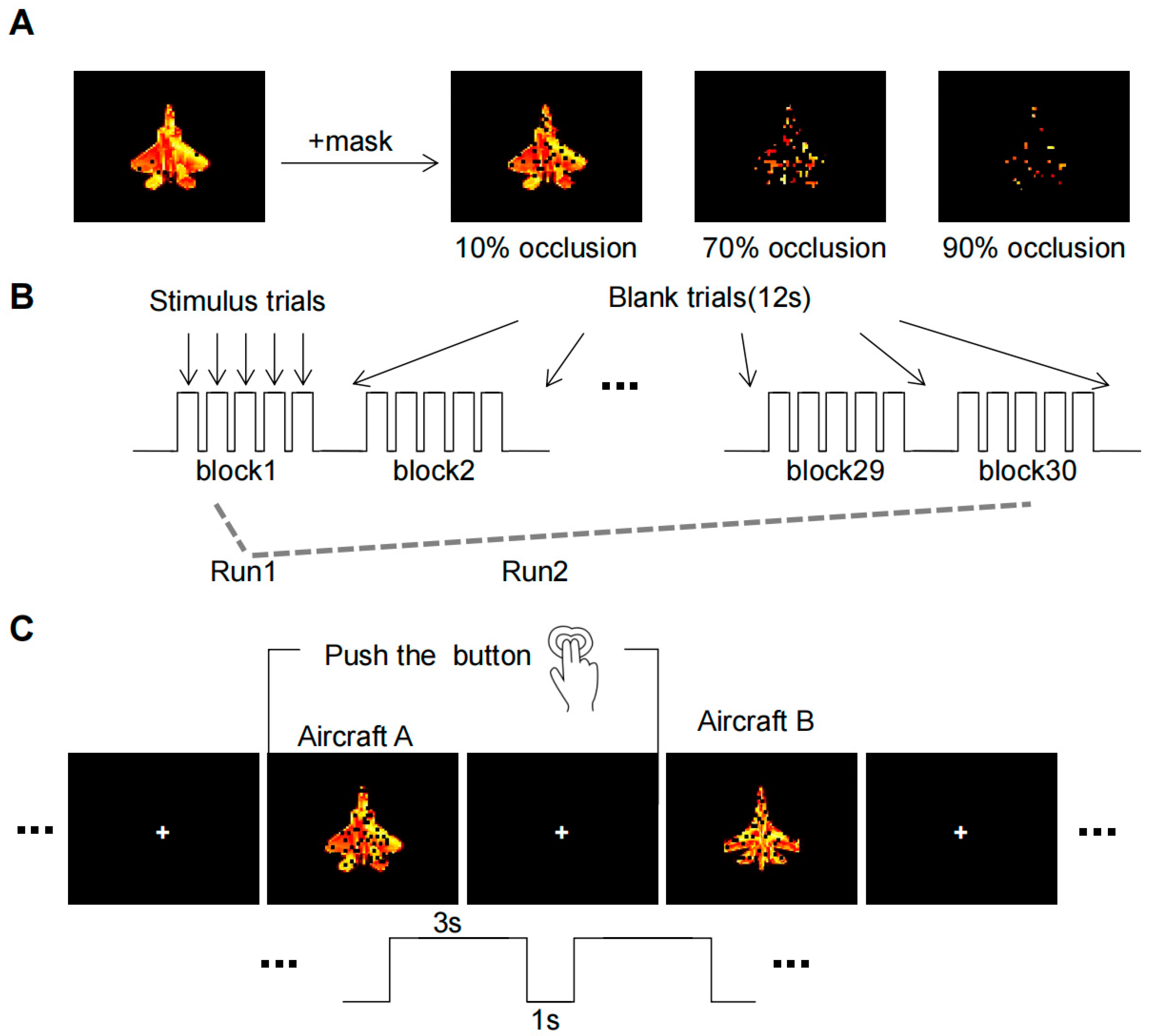

Figure 1.

The fMRI experimental paradigm. (A) Occlusion image set. Aircraft targets undergo three levels of occlusion to generate an image set. (B) The whole process of MRI scans. Each of the three types of occlusion image stimuli (10%, 70%, and 90%) was presented in 10 blocks per run, in random order. (C) Task operations in each trial. Subjects had to make a decision within a 4-second window while the object was displayed and indicate their choice by pressing a button.

2.3 fMRI Experimental Paradigm

Task instructions were given to the subjects before the MRI scan, and they practiced the task on a computer outside the scanner. The practice task had exactly the same user interface as the real experiment, but to avoid early familiarization with the targets, the target images used in practice differed from those in the actual experiment.

The experimental paradigm (Figure 1B) was designed with reference to the Golin incomplete image recognition task [7]. Once the subjects were in the scanner, the session began with anatomical scans followed by functional scans, totaling approximately 50 minutes per subject. Before each of the two experimental runs, the subjects were asked to view two clear aircraft targets and memorize their features. Each run comprised 30 blocks, with 10 blocks for each of the three tasks (10%, 70%, and 90% occlusion) presented in random order. Each block had a single occlusion level and contained five trials, with either aircraft A or aircraft B appearing at random in each trial.

The experiment required a simple binary decision from the subjects, who had to judge whether the viewed image was aircraft A or B. Responses were recorded via button press: the right index finger for aircraft A and the right middle finger for aircraft B (Figure 1C).
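The block structure of one run can be written down compactly. The sketch below is only an illustration of the design as described (30 blocks per run, 10 per occlusion level in random order, five trials per block with a randomly chosen target); the function name, the data layout, and the use of an unconstrained random draw for the target are assumptions, and timing (trial duration, rest periods) is not modeled.

```python
import random

OCCLUSION_LEVELS = (10, 70, 90)  # percent occlusion, as defined in Section 2.2


def build_run(blocks_per_level=10, trials_per_block=5, seed=None):
    """Return a list of blocks for one run.

    Each block has one occlusion level and a list of targets ("A" or "B"),
    one per trial.
    """
    rng = random.Random(seed)
    # 10 blocks for each of the three occlusion levels, shuffled.
    levels = [lvl for lvl in OCCLUSION_LEVELS for _ in range(blocks_per_level)]
    rng.shuffle(levels)
    run = []
    for level in levels:
        targets = [rng.choice("AB") for _ in range(trials_per_block)]
        run.append({"occlusion": level, "targets": targets})
    return run


run = build_run(seed=1)
n_trials = sum(len(block["targets"]) for block in run)  # 30 blocks x 5 trials
```

With two runs per subject this yields the 60 blocks per subject that are used as samples in the MVPA.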

2.4 MRI Data Acquisition

Functional and structural MRI scans were acquired with a standard 64-channel head coil on a 3T Siemens MAGNETOM Prisma scanner. Subjects viewed the projection screen through a mirror system mounted on the head coil. Functional images were acquired with a single-shot gradient-echo echo-planar imaging (EPI) sequence with the following parameters: TR of 2000 ms, TE of 30 ms, flip angle of 76°, a 96 × 96 matrix, field of view (FOV) of 192 × 192 mm², and a voxel size of 2 × 2 × 2 mm³. Each EPI volume included 64 transversal slices (2 mm thick with no gap) acquired in interleaved order, and each run comprised 504 functional volumes. To aid localization of activation, we obtained high-resolution T1-weighted magnetization-prepared rapid gradient-echo (MP-RAGE) 3-D images using a pulse sequence with TR of 2300 ms, TE of 2.26 ms, flip angle of 8°, a 256 × 256 matrix, FOV of 256 × 256 mm², a voxel size of 1 × 1 × 1 mm³, and 192 contiguous axial slices, each 1 mm thick.

2.5 MRI Data Preprocessing

We used Statistical Parametric Mapping software (SPM12; Wellcome Department of Cognitive Neurology, London, UK) to preprocess and statistically analyze the functional images. During preprocessing, the EPI data were corrected for slice timing and for head movement relative to the median functional volume. Subsequently, the data were co-registered and normalized to the standard Montreal Neurological Institute (MNI, McGill University, Montreal, Quebec, Canada) coordinate space. Lastly, we applied spatial smoothing with a Gaussian kernel with a full width at half maximum (FWHM) of 6 mm.
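For readers who want to reproduce the smoothing step outside SPM, it may help to convert the FWHM to the standard deviation of the Gaussian, using FWHM = 2·sqrt(2·ln 2)·σ. The short sketch below does this conversion; expressing σ in voxels assumes the 2 mm isotropic acquisition voxel size from Section 2.4, which may differ from the voxel size written out after normalization.

```python
import math


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian full width at half maximum to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


sigma_mm = fwhm_to_sigma(6.0)  # about 2.55 mm for the 6 mm kernel
sigma_vox = sigma_mm / 2.0     # about 1.27 voxels, assuming 2 mm voxels
```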

2.6 GLM Data Analysis

GLM analysis was conducted in SPM12 to identify regions activated by the occlusion stimuli, using two approaches: first, all three tasks were modelled together; second, each task was modelled separately to discern the effects across tasks. Contrast images for the main effects of the three tasks were generated for each participant and entered into a second-level analysis for population-level inference. The statistical threshold was set at p < 0.05, family-wise error (FWE) corrected.

To visualize and further analyze the occlusion-level-dependent response, a region of interest (ROI) analysis was performed. Based on the GLM results above, the occipital lobes and the dACC were chosen as ROIs, and the MarsBaR toolbox was used to extract their mean beta values. The extracted betas were then subjected to repeated-measures t-tests to identify regional responses that differed significantly between occlusion levels.
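The pairwise comparison of ROI betas between two occlusion levels amounts to a paired t-test across subjects. The following sketch computes the paired t statistic and its degrees of freedom with the Python standard library only; it is an illustration under the assumption that each subject contributes one mean beta per condition, the numbers are made up, and no p-value is computed because that would require a t-distribution routine from a statistics package.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for two matched samples."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two samples of equal length >= 2")
    diffs = [x - y for x, y in zip(a, b)]
    standard_error = stdev(diffs) / math.sqrt(len(diffs))
    return mean(diffs) / standard_error, len(diffs) - 1


# Hypothetical mean betas of one ROI for four subjects (not study data).
betas_90 = [1.0, 2.0, 3.0, 4.0]
betas_10 = [0.5, 1.0, 2.5, 2.0]
t_value, dof = paired_t(betas_90, betas_10)
```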

2.7 Multivariate Pattern Analysis

Classification of the three occluded object recognition tasks was carried out across subjects using the Python-based PyMVPA toolbox [18]. The GLM analysis was repeated for each subject to generate an spmT file for each block, yielding 3840 samples in total (64 subjects × 60 blocks). Based on the GLM results above, the bilateral occipital regions (inferior occipital gyrus, IOG; middle occipital gyrus, MOG; and occipital fusiform gyrus, OFG) and the dACC in the AAL90 atlas were used as templates to segment each spmT file, and the mean beta value of each region was extracted to form a 7 × 1 feature vector (six occipital regions plus the dACC). The beta values were normalized (µ = 0, σ = 1) prior to MVPA.

We trained a Support Vector Machine (SVM) classifier with a Radial Basis Function (RBF) kernel to classify the three occluded object recognition tasks using leave-one-subject-out cross-validation, in which the model was trained on the data from all but one subject and tested on the data of the held-out subject. To compare the contributions of the dACC and the occipital lobe, we carried out an ablation experiment in which the dACC feature was excluded and classification used feature vectors consisting only of the betas of the occipital regions. Classifier accuracy was computed as the proportion of correctly classified samples relative to the total number of samples.
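The cross-validation scheme can be illustrated with a small, dependency-free sketch. Note the substitutions: a nearest-centroid classifier stands in for the RBF-kernel SVM, and the code does not use PyMVPA, so it shows only the leave-one-subject-out logic, the feature normalization, and the accuracy computation. All function names and the toy data are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def zscore_columns(rows):
    """Scale every feature column to mean 0 and standard deviation 1."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) or 1.0 for c in cols]  # guard against constant columns
    return [[(v - m) / s for v, m, s in zip(row, mus, sds)] for row in rows]


def fit_centroids(X, y):
    """Stand-in classifier: one mean feature vector per class."""
    groups = defaultdict(list)
    for row, label in zip(X, y):
        groups[label].append(row)
    return {lab: [mean(c) for c in zip(*rows)] for lab, rows in groups.items()}


def predict(centroids, row):
    """Return the label of the closest class centroid (squared Euclidean)."""
    def dist2(c):
        return sum((a - b) ** 2 for a, b in zip(row, c))
    return min(centroids, key=lambda lab: dist2(centroids[lab]))


def leave_one_subject_out(X, y, subjects):
    """Train on all subjects but one, test on the held-out one, pool accuracy."""
    correct = 0
    for held_out in sorted(set(subjects)):
        train = [i for i, s in enumerate(subjects) if s != held_out]
        test = [i for i, s in enumerate(subjects) if s == held_out]
        model = fit_centroids([X[i] for i in train], [y[i] for i in train])
        correct += sum(predict(model, X[i]) == y[i] for i in test)
    return correct / len(y)


# Toy data: 3 subjects x 3 blocks, 2 features (the study uses 7 per block).
X = [[0.0, 0.1], [5.0, 5.2], [10.0, 9.9],
     [0.2, 0.0], [5.1, 4.9], [9.8, 10.1],
     [0.1, 0.2], [4.9, 5.0], [10.2, 10.0]]
y = [10, 70, 90] * 3
subjects = [1, 1, 1, 2, 2, 2, 3, 3, 3]
accuracy = leave_one_subject_out(zscore_columns(X), y, subjects)
```

Under this layout, the ablation described above corresponds to dropping the dACC column from every row before calling `leave_one_subject_out`. Normalizing over all samples before splitting follows the order described in the text; estimating the scaling on the training subjects only would be the stricter variant.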

2.8 gPPI Data Analysis

To determine how the functional connectivity (FC) between the dACC and the occipital lobe varies with occlusion level, we employed the generalized form of the PPI (gPPI) method [19], an approach well suited to examining FC in block-design experiments [20]. Based on the whole-brain activation results, we selected the bilateral occipital regions (IOG, MOG, and OFG) and the dACC as ROIs (Figure 6B). For each participant, the ROI time series were extracted as the first eigenvariate of a 6 mm radius sphere centered on the peak (Table 1). At the individual participant level, the model included: (1) a psychological variable representing the three occlusion levels; (2) a physiological variable of the ROIs; and (3) the PPI term, the cross product of the first two regressors (Figure 6A). To obtain FC values between the dACC and the occipital lobes, we computed regression coefficients for the PPI terms of the two relevant ROIs (Figure 6C). Subsequently, for each participant and each of the three tasks, we averaged the FC values between the dACC and the six occipital ROIs. Finally, paired t-tests were conducted on the averaged FC values between the dACC and occipital lobes.
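The two ingredients named above, the interaction regressor and the per-condition regression slope, can be sketched in a few lines. This is a deliberately simplified illustration, not the gPPI pipeline: the actual method forms the interaction on the deconvolved (neural-level) signal, reconvolves it with the haemodynamic response function, and estimates all regressors jointly in one GLM, none of which is done here. Function names and the toy time series are hypothetical.

```python
from statistics import mean


def ppi_regressor(psych, physio):
    """Interaction term: element-wise product of a condition indicator
    (psychological variable) and the seed time series (physiological variable)."""
    return [p * s for p, s in zip(psych, physio)]


def slope(x, y):
    """Least-squares slope of y regressed on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx


def condition_slopes(seed_ts, target_ts, condition):
    """Slope of the target ROI on the seed ROI, separately for each condition."""
    result = {}
    for level in sorted(set(condition)):
        idx = [i for i, c in enumerate(condition) if c == level]
        result[level] = slope([seed_ts[i] for i in idx],
                              [target_ts[i] for i in idx])
    return result


# Toy example: coupling is twice as strong under 90% as under 10% occlusion.
seed = [0.0, 1.0, 2.0, 3.0, 0.0, 1.0, 2.0, 3.0]      # e.g. dACC signal
target = [0.0, 1.0, 2.0, 3.0, 0.0, 2.0, 4.0, 6.0]    # e.g. left IOG signal
condition = [10, 10, 10, 10, 90, 90, 90, 90]
slopes = condition_slopes(seed, target, condition)
```

A steeper slope in one condition than in another is what a positive PPI effect looks like in this simplified picture.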

3. Results

3.1 Behavioral Data

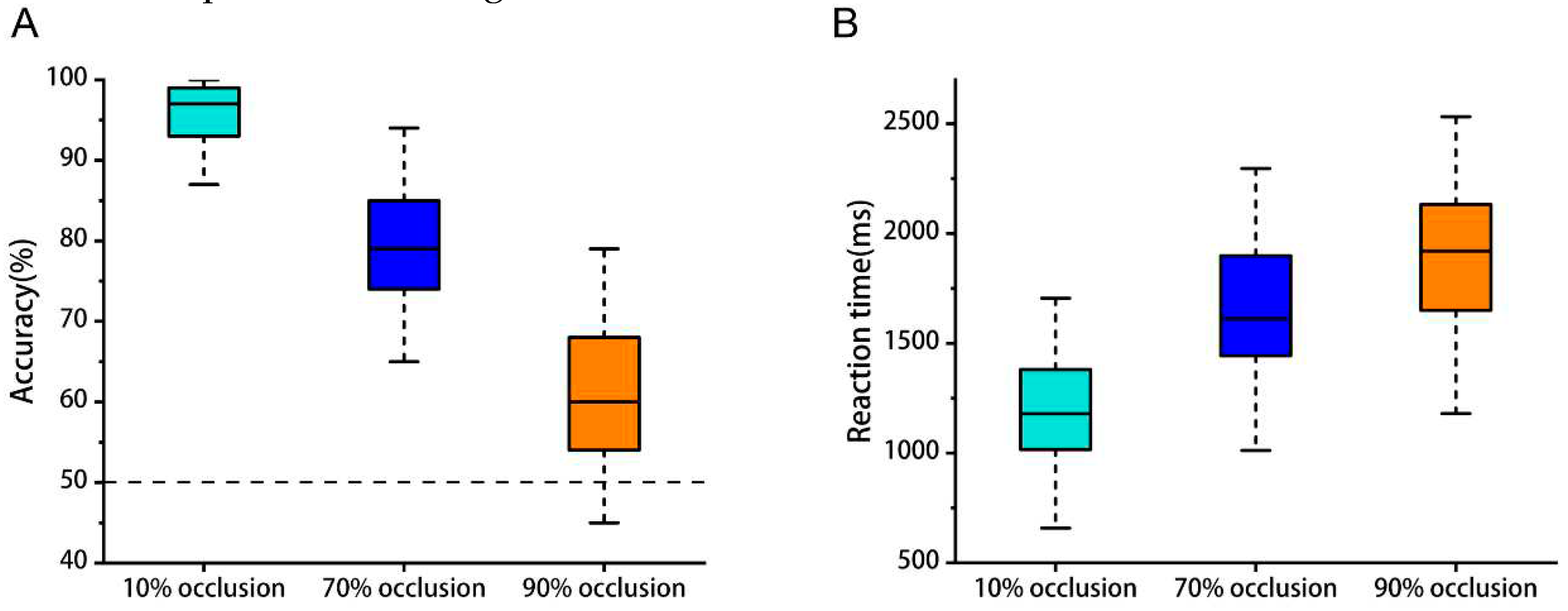

For the object recognition tasks, average recognition accuracy at occlusion levels of 10%, 70%, and 90% was 96%, 79%, and 62%, respectively (Figure 2A). The average response times were 1.23 s, 1.65 s, and 1.89 s, respectively (Figure 2B). Performance differed significantly across occlusion levels in both accuracy (p < 0.001) and reaction time (p < 0.001): accuracy decreased and reaction time increased as the degree of occlusion rose.

Figure 2.

Behavioral results. (A) Mean accuracy for object recognition tasks over three occlusion degrees. p < 0.001. (B) Mean reaction time for object recognition tasks over three occlusion degrees. p < 0.001.

3.2 GLM Analysis Results

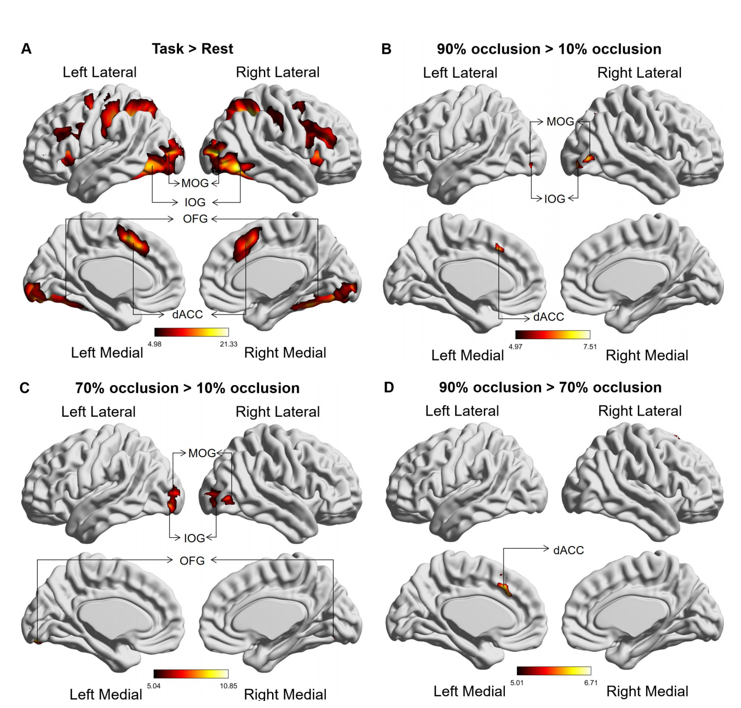

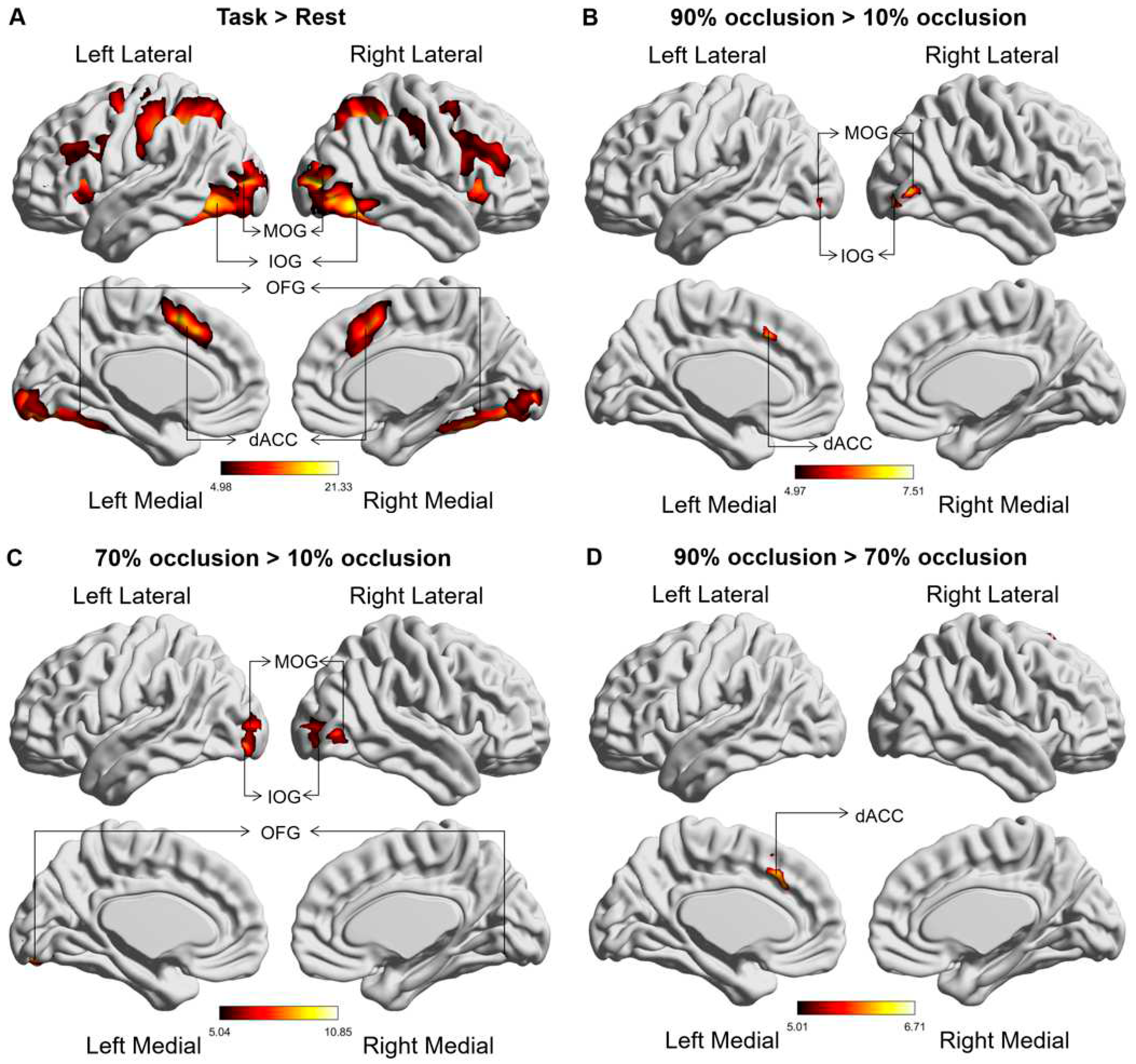

Brain activation maps comparing the occluded object recognition task with the resting state showed activation in the dACC, along with the occipital, parietal, and frontal lobes, during the tasks (Figure 3A). Tasks with high levels of occlusion (70% and 90%) elicited stronger activation in the dACC and occipital lobe (IOG, MOG, and OFG) than the task with a low level of occlusion (10%) (Figure 3B-C). Notably, dACC activation continued to strengthen as the occlusion level increased from 70% to 90% (Figure 3D). Table 1 provides the MNI coordinates of the differentially activated brain regions.

Figure 3.

Global brain activation of the group analysis. (A) Brain activation maps comparing the occluded object recognition task with the resting state. (B) The variation in activated brain regions between tasks at 90% occlusion and 10% occlusion. (C) The variation in activated brain regions between tasks at 70% occlusion and 10% occlusion. (D) The variation in activated brain regions between tasks at 90% occlusion and 70% occlusion. The colour bar represents the t value. The abbreviations used are as follows: IOG - Inferior Occipital Gyrus, MOG - Middle Occipital Gyrus, OFG - Occipital Fusiform Gyrus, dACC - Dorsal Anterior Cingulate Cortex.

Table 1.

Variations in brain activation regions across diverse occlusion object recognition tasks.

| Anatomical regions and BA | Cluster size (voxels) | t-score (FWE, p < 0.05) | x | y | z |
|---|---|---|---|---|---|
| 90% occlusion > 10% occlusion | | | | | |
| L Inferior Occipital Gyrus (BA18) | 67 | 6.3 | -30 | -84 | -6 |
| R Inferior Occipital Gyrus (BA18) | 97 | 7.21 | 39 | -84 | -6 |
| L Middle Occipital Gyrus (BA19) | 47 | 5.71 | -27 | -87 | 12 |
| R Middle Occipital Gyrus (BA19) | 149 | 7.13 | 45 | -78 | 0 |
| L Dorsal anterior cingulate cortex (BA32) | 42 | 5.9 | -6 | 18 | 42 |
| 70% occlusion > 10% occlusion | | | | | |
| R Middle Occipital Gyrus (BA19) | 149 | 7.13 | -24 | -87 | -12 |
| R Inferior Occipital Gyrus (BA18) | 128 | 7.12 | 34 | -78 | -8 |
| L Middle Occipital Gyrus (BA19) | 222 | 8.75 | -24 | -89 | 9 |
| R Middle Occipital Gyrus (BA19) | 166 | 8.32 | 45 | -75 | 0 |
| L Occipital Fusiform gyrus (BA37) | 50 | 6.12 | -30 | -69 | -9 |
| R Occipital Fusiform gyrus (BA37) | 62 | 9.45 | 30 | -81 | -6 |
| 90% occlusion > 70% occlusion | | | | | |
| L Dorsal anterior cingulate cortex (BA32) | 32 | 5.27 | -30 | 48 | 12 |

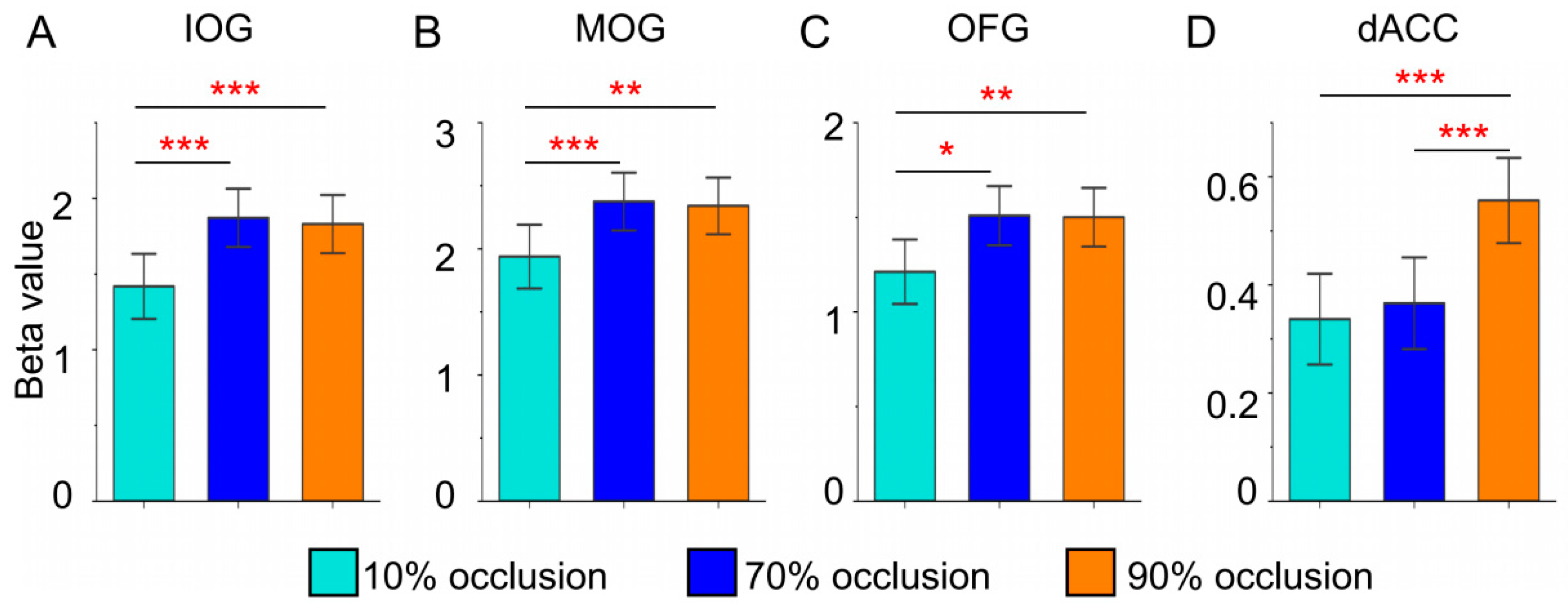

To obtain a clearer representation and deeper analysis of brain responses to the different tasks, we performed an ROI analysis. Figure 4 shows the beta values of brain regions that exhibited notable main effects in the GLM analysis. Within the occipital lobe, tasks involving 70% and 90% occlusion induced stronger activation (Figure 4A-C). Notably, only the task with 90% occlusion triggered intensified activation in the dACC (Figure 4D).

Figure 4.

Brain regional (IOG, MOG, OFG and dACC) responses (betas) to three types of occluded objects. * = p < 0.05, ** = p < 0.01, *** = p < 0.001.

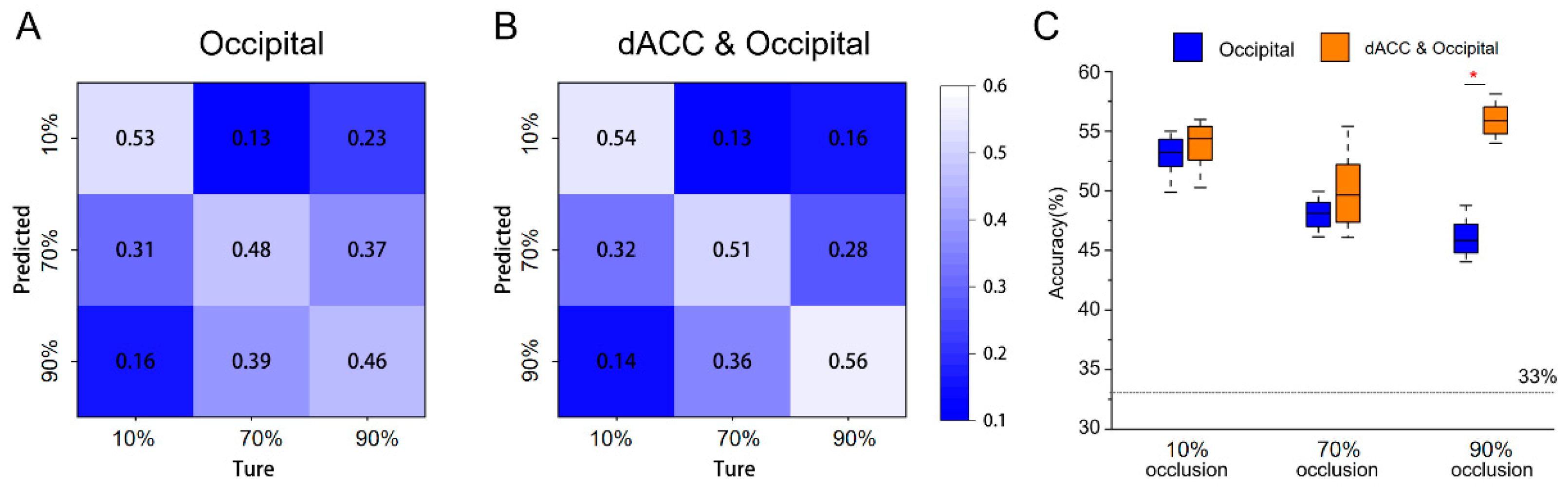

3.3 Multivariate Pattern Analysis Results

Variations in brain activation in the dACC and the occipital lobe were observed across all three tasks, which led us to investigate whether their activation patterns could discriminate between the tasks. We first used features from the bilateral occipital lobes for classification, resulting in an average accuracy of 49%, with the confusion matrix indicating that each task's classification accuracy surpassed 46% (Figure 5A). We then added beta values from the dACC as features, and the average classification accuracy increased to 53.7%, exceeding 51% for each task (Figure 5B). The increase in classification accuracy was mainly due to the 90% occlusion task (t = 10%, p < 0.05) (Figure 5C).

Figure 5.

The results of multivariate pattern analysis. (A) Confusion matrix for classifying three types of occlusion image stimuli (taking only occipital brain activation as features). (B) Confusion matrix for classifying three types of occlusion image stimuli (taking both occipital and dACC brain activation as features). (C) Differences in accuracy between the above two classification methods (baseline= 33%). * = p < 0.05.

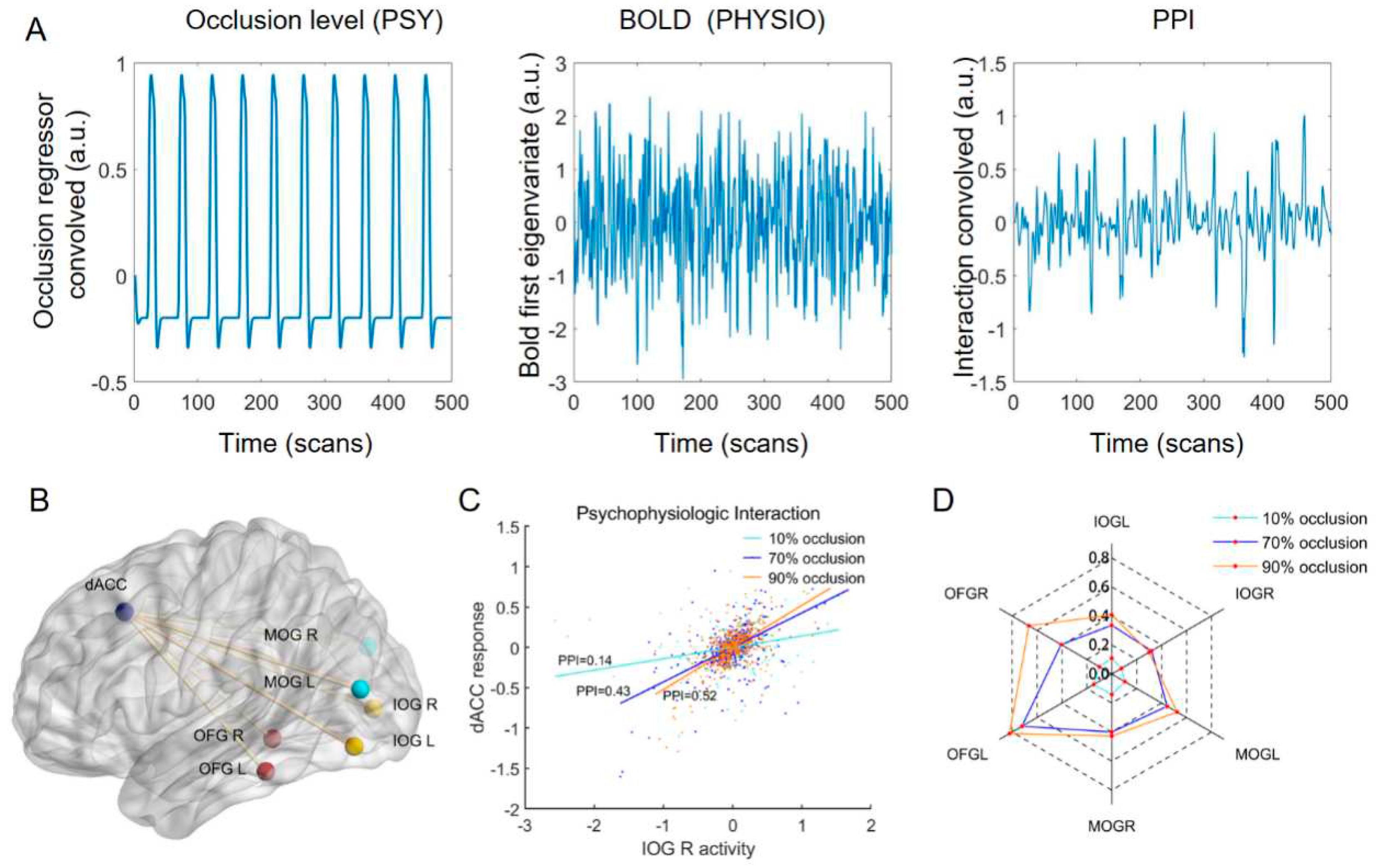

3.4 Functional Connectivity: gPPI Analysis Results

To investigate the interplay between the dACC and occipital lobe, we calculated the FC values between them using the gPPI method. The slope of each straight line indicates the PPI value between the dACC and the left IOG for subject 1 in one of the three tasks (Figure 6C). Similarly, we calculated the PPI values between the dACC and the remaining occipital ROIs (Figure 6B) for each subject in the three tasks. Group-level analysis revealed that the PPI values between the dACC and occipital lobe increased with increasing occlusion level (Figure 6D), and these differences were statistically significant (p < 0.05).

Figure 6.

PPI results. (A) Representative example of the regressors used for the PPI analyses, including the psychological regressor (occlusion level; left subplot), physiological regressor (BOLD first eigenvariate of the ROIs; middle subplot), and their interaction (right subplot). (B) ROIs for the PPI analysis. (C) Results of PPI analysis for subject 1 (example). The slopes of the three straight lines represent the PPI values between dACC and left IOG in the three tasks. (D) PPI value between the dACC and each occipital ROI in three tasks (group effects).

4. Discussion

To assess the role of the PFC in the recognition of partially occluded objects, we studied the functional representation of the dACC and occipital lobe while humans recognized objects at different occlusion levels. By combining behavioral data, GLM analysis, MVPA, and PPI analysis, we were able to highlight the essential function of the dACC and occipital lobe in recognizing highly occluded objects.

The distinctive contribution of our study, in contrast to previous research, is that it investigated the involvement of both the PFC and occipital regions in recognizing occluded objects, whereas previous studies were limited to the role of visual areas. Furthermore, we provided additional evidence using MVPA that the dACC plays a crucial role in successfully recognizing highly occluded objects. Compared with the baseline task (10% occlusion), the more heavily occluded conditions (70% and 90% occlusion) elicited stronger activation and FC in the dACC and occipital lobe, which provides evidence for the significance of these brain regions in processing incomplete information.

4.1 The Activation of the dACC and Occipital Lobe under Different Occluded Conditions

Our findings indicated that stimuli with higher levels of occlusion (70% and 90%) elicited stronger activation of the occipital lobe than the baseline task with only 10% occlusion (Figure 3B-C, Figure 4A-C), which is consistent with reports on the effect of occlusion degree on visual areas [11,21]. In highly occluded situations, the reduction of available visual information mainly interfered with bottom-up processes, so that heightened activation of the visual areas was required to recognize occluded objects [22].

However, compared with 70% occlusion, 90% occlusion did not elicit further heightened activation in the occipital lobe but did elicit stronger activation in the dACC (Figure 3D, Figure 4D), which may be explained by the S-type activation theory in neural cytology [23]. We suggest that the occipital lobe reaches the limit of its visual information processing at an occlusion level of 70%, so that heightened dACC activation is needed to meet the demands of the 90% occlusion task. This inference is also supported by the MVPA results (Figure 5), which showed that dACC features played a significant role in distinguishing the 90% occlusion task from the other tasks.

4.2 The FC between the dACC and Occipital Lobe

Previous research on task-state FC demonstrated an overall increase in across-network FC during cognitive tasks [24]. It was also observed that enhancement of across-network FC in specific brain regions was related to task difficulty [25]. The dACC and occipital lobe, which belong to distinct brain networks, were activated together during highly occluded tasks (Figure 3), and these brain regions may constitute the key network for occluded object recognition. To reveal their interaction, we performed a PPI analysis, which showed that the FC between the dACC and occipital lobe consistently intensified as the degree of occlusion increased from 10% to 90% (Figure 6D). As reported in earlier research, the human brain transitions into a state of higher global integration to solve more complex tasks [26]. Analogously, the amplified FC between the dACC and occipital lobe may promote information exchange, facilitating the processing of highly occluded tasks.

4.3 The Role of the dACC in Decision-Making Processes under High Occlusion

To perform the occluded object recognition task in this study, the test stimulus on the screen must be compared with the reference stimulus held in memory. Given the function of the PFC in working memory, it is a plausible neural site for such a comparison [27]. The results suggest that the heightened activation and FC of the dACC for highly occluded objects might align with the dACC's decision-making role [28]. Subjects in this experimental paradigm were required to scrutinize an occluded image that appeared on the screen and then make a decision by pressing a button. In the highly occluded tasks, subjects took more time to decide (Figure 2B) and achieved lower accuracy (Figure 2A). Decision-making becomes more difficult when the visual cortex receives less information as a result of occlusion [29]. In this case, the dACC might amplify weak signals to facilitate decision-making by increasing its own activation and its FC with visual areas. In this regard, our results are consistent with the dACC engaging in decision-making tasks under uncertainty [30,31]. Therefore, the decision-making role of the dACC under uncertain conditions might be a key reason why humans can efficiently identify highly occluded targets.

5. Conclusion

In summary, unlike prior research that primarily focused on visual regions, this study investigated the involvement of both the PFC and the occipital lobe in occluded object recognition tasks. Our results indicate that heightened activation of, and FC between, the dACC and the occipital lobe support the recognition of highly occluded objects. These findings provide insight into the neural mechanisms of highly occluded object recognition, enhancing our understanding of how the brain processes incomplete visual information.

Author Contributions

Bao Li: Methodology, Data acquisition, Data analysis, Writing - Original Draft. Chi Zhang, Long Cao, and Linyuan Wang: Investigation, Data preprocessing, Supervision, Writing - Review & Editing. Panpan Chen, Tianyuan Liu, and Hui Gao: Data acquisition. Bin Yan: Resources, Writing - Review & Editing, Funding acquisition. Li Tong: Conceptualization, Methodology, Investigation, Resources, Data Curation, Project administration, Funding acquisition.

Funding

This research was funded by the STI2030-Major Projects (Grant No. 2022ZD0208500) and the National Natural Science Foundation of China (Grant No. 82071884, Grant No. 62106285).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of Henan Provincial People's Hospital.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- DICARLO J J, COX D D. Untangling invariant object recognition[J/OL]. Trends in Cognitive Sciences, 2007, 11(8): 333-341. [CrossRef]

- THIELEN J, BOSCH S E, VAN LEEUWEN T M, et al. Neuroimaging Findings on Amodal Completion: A Review[J/OL]. i-Perception, 2019, 10(2): 204166951984004. [CrossRef]

- WEN H, SHI J, CHEN W, et al. Deep Residual Network Predicts Cortical Representation and Organization of Visual Features for Rapid Categorization[J/OL]. Scientific Reports, 2018, 8(1): 3752. [CrossRef]

- CADIEU C F, HONG H, YAMINS D L, et al. Deep neural networks rival the representation of primate IT cortex for core visual object recognition[J]. PLoS computational biology, 2014, 10(12): e1003963.

- YAMINS D L, HONG H, CADIEU C F, et al. Performance-optimized hierarchical models predict neural responses in higher visual cortex[J]. Proceedings of the National Academy of Sciences, 2014, 111(23): 8619-8624.

- RAJAEI K, MOHSENZADEH Y, EBRAHIMPOUR R, et al. Beyond core object recognition: Recurrent processes account for object recognition under occlusion[J/OL]. PLOS Computational Biology, 2019, 15(5): e1007001. [CrossRef]

- FLOREZ J F, M. Michael S. Gazzaniga, George R. Mangun (eds): The Cognitive Neurosciences, 5th edition[J/OL]. Minds and Machines, 2015, 25(3): 281-284. [CrossRef]

- BAN H, YAMAMOTO H, HANAKAWA T, et al. Topographic Representation of an Occluded Object and the Effects of Spatiotemporal Context in Human Early Visual Areas[J/OL]. The Journal of Neuroscience, 2013, 33(43): 16992-17007. [CrossRef]

- ERLIKHMAN G, CAPLOVITZ G P. Decoding information about dynamically occluded objects in visual cortex[J/OL]. NeuroImage, 2017, 146: 778-788. [CrossRef]

- DE HAAS B, SCHWARZKOPF D S. Spatially selective responses to Kanizsa and occlusion stimuli in human visual cortex[J/OL]. Scientific Reports, 2018, 8(1): 611. [CrossRef]

- HEGDÉ J, FANG F, MURRAY S O, et al. Preferential responses to occluded objects in the human visual cortex[J/OL]. Journal of Vision, 2008, 8(4): 16. [CrossRef]

- JIANG Y, KANWISHER N. Common Neural Mechanisms for Response Selection and Perceptual Processing[J/OL]. Journal of Cognitive Neuroscience, 2003, 15(8): 1095-1110. [CrossRef]

- LIEBE S, HOERZER G M, LOGOTHETIS N K, et al. Theta coupling between V4 and prefrontal cortex predicts visual short-term memory performance[J/OL]. Nature Neuroscience, 2012, 15(3): 456-462. [CrossRef]

- FYALL A M, EL-SHAMAYLEH Y, CHOI H, et al. Dynamic representation of partially occluded objects in primate prefrontal and visual cortex[J/OL]. eLife, 2017, 6: e25784. [CrossRef]

- UNGERLEIDER L G, GALKIN T W, DESIMONE R, et al. Cortical Connections of Area V4 in the Macaque[J/OL]. Cerebral Cortex, 2008, 18(3): 477-499. [CrossRef]

- NINOMIYA T, SAWAMURA H, INOUE K ichi, et al. Segregated Pathways Carrying Frontally Derived Top-Down Signals to Visual Areas MT and V4 in Macaques[J/OL]. The Journal of Neuroscience, 2012, 32(20): 6851-6858. [CrossRef]

- KONDAVEETI H K, VATSAVAYI V K. Abridged Shape Matrix Representation for the Recognition of Aircraft Targets from 2D ISAR Imagery[C/OL]//Advances in Computational Sciences and Technology: Vol. 10. 2017: 1103[2023-04-24]. http://ripublication.com/acst17/acstv10n5_41.pdf.

- HANKE M, HALCHENKO Y O, SEDERBERG P B, et al. PyMVPA: A python toolbox for multivariate pattern analysis of fMRI data[J/OL]. Neuroinformatics, 2009, 7(1): 37-53. [CrossRef]

- MCLAREN D G, RIES M L, XU G, et al. A generalized form of context-dependent psychophysiological interactions (gPPI): A comparison to standard approaches[J/OL]. NeuroImage, 2012, 61(4): 1277-1286. [CrossRef]

- CISLER J M, BUSH K, STEELE J S. A comparison of statistical methods for detecting context-modulated functional connectivity in fMRI[J/OL]. NeuroImage, 2014, 84: 1042-1052. [CrossRef]

- STOLL S, FINLAYSON N J, SCHWARZKOPF D S. Topographic signatures of global object perception in human visual cortex[J/OL]. NeuroImage, 2020, 220: 116926. [CrossRef]

- AO J, KE Q, EHINGER K A. Image Amodal Completion: A Survey[M/OL]. arXiv, 2022[2023-03-27]. http://arxiv.org/abs/2207.02062.

- ITO T, BRINCAT S L, SIEGEL M, et al. Task-evoked activity quenches neural correlations and variability across cortical areas[J/OL]. PLOS Computational Biology, 2020, 16(8): e1007983. [CrossRef]

- BETTI V, DELLA PENNA S, DE PASQUALE F, et al. Natural Scenes Viewing Alters the Dynamics of Functional Connectivity in the Human Brain[J/OL]. Neuron, 2013, 79(4): 782-797. [CrossRef]

- KWON S, WATANABE M, FISCHER E, et al. Attention reorganizes connectivity across networks in a frequency specific manner[J/OL]. NeuroImage, 2017, 144: 217-226. [CrossRef]

- SHINE J M, BISSETT P G, BELL P T, et al. The Dynamics of Functional Brain Networks: Integrated Network States during Cognitive Task Performance[J/OL]. Neuron, 2016, 92(2): 544-554. [CrossRef]

- ROMO R, SALINAS E. Flutter Discrimination: neural codes, perception, memory and decision making[J/OL]. Nature Reviews Neuroscience, 2003, 4(3): 203-218. [CrossRef]

- WLAD M, FRICK A, ENGMAN J, et al. Dorsal anterior cingulate cortex activity during cognitive challenge in social anxiety disorder[J/OL]. Behavioural Brain Research, 2023, 442: 114304. [CrossRef]

- KOSAI Y, EL-SHAMAYLEH Y, FYALL A M, et al. The role of visual area V4 in the discrimination of partially occluded shapes[J/OL]. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience, 2014, 34(25): 8570-8584. [CrossRef]

- MONOSOV I, E. Anterior cingulate is a source of valence-specific information about value and uncertainty[J/OL]. Nature Communications, 2017, 8(1): 134. [CrossRef]

- SHENHAV A, COHEN J D, BOTVINICK M M. Dorsal anterior cingulate cortex and the value of control[J/OL]. Nature Neuroscience, 2016, 19(10): 1286-1291. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).