1. Introduction

1.1. Observing night sky

Since 2022, the James Webb Space Telescope has consistently unveiled stunning images of unprecedented clarity, showcasing galaxies and nebulae. The media play a crucial role in sharing this remarkable astronomical news with the world. However, a question remains: do most people realize that they need only look upwards at night to marvel at the deep sky? While it is theoretically possible for anyone to observe nearby targets such as the Andromeda Galaxy (M31) and the Orion Nebula (M42) with a simple pair of binoculars, the road to stargazing is not without its challenges [1]. Among them, we can mention:

- Optimal weather conditions, such as clear, cloud-free skies, are essential. Moreover, a low level of light pollution is crucial for a rewarding experience [2].

- Even under ideal conditions, direct observation through a telescope eyepiece can be disappointing because the observed targets lack contrast and colour [1]. Observing them requires a period of conditioning in total darkness so that the eyes become accustomed to night observation.

- Astronomy is an outdoor pursuit, and enduring the cold and dampness can test the patience of even the most curious observers.

- People with limited physical abilities (poor eyesight, disability, etc.) might not be able to use the equipment comfortably.

- The eyes need a certain amount of time to adapt to the darkness before proper observation is possible, and the very numerous satellite constellations can be dazzling (especially when they are put into orbit) [3].

Beyond being a simple hobby, astronomy is one of the few domains in which novices can make discoveries independently and contribute to the scientific community [4, 5].

1.2. Going beyond the limits with Electronically Assisted Astronomy

Nowadays, Electronically Assisted Astronomy (EAA) is increasingly used by astronomers to observe deep sky objects (nebulae, galaxies, star clusters) and even comets. By capturing images directly from a camera coupled to a telescope and applying lightweight image processing, they generate enhanced images of targets in real time, directly on their screens (laptop, tablet, smartphone). EAA produces beautifully detailed images even in places heavily affected by light pollution and poor weather conditions. Depending on the hardware used, it is even possible to view images captured by an outdoor installation while sitting in the warmth in front of a screen.

In practice, hundreds of targets can be observed with EAA: open star clusters, globular clusters, galaxies, nebulae and even comets. Most of them are documented in well-known astronomical catalogues (Messier, New General Catalogue, Index Catalogue, Sharpless, Barnard) and in many books and software packages [6]. These lists of deep sky objects were established at a time when light pollution was not a problem.

Unfortunately, EAA is not easy for beginners to practise, and a strong technical background is needed [7]. Carried out in the conventional manner, EAA requires managing complex hardware (motorized tracking mount, refractor or reflector, dedicated cameras, light pollution filters, etc.) and software (such as SharpCap or AstroDMX). These requirements are barriers that discourage people from engaging in EAA. Above all, they make it difficult to organise stargazing sessions.

This paper presents an approach to combine smart telescopes usage and Artificial Intelligence to organize attractive and original EAA sessions.

2. Methodology to assist observational astronomy

In the context of the MILAN research project (MachIne Learning for AstroNomy), funded by the Luxembourg National Research Fund, we participated in various events open to the public. We describe here the tools and the methodology followed during the project to go further in astronomical observations and to share knowledge easily with the public.

2.1. Stargazing with smart telescopes

Recent years have also seen the emergence of smart telescopes, which make sky observation accessible to almost anyone. These ingenious instruments are used to gather images of deep sky objects, with smartphones and tablets serving as display devices (Figure 1). This evolution has also opened up large market opportunities: several manufacturers around the world have started to design and develop this type of product, with prices ranging from 500 to 4500 EUR. Requiring no prior knowledge and a setup time of less than five minutes, such telescopes allow users to capture and share instant images of star clusters, galaxies and nebulae. Even the professional scientific community is taking advantage of these new tools to study astronomical events (e.g. asteroid occultations, exoplanet transits, eclipses) with simultaneous observations coming from hundreds of smart instruments [8].

During public outreach events or when working hands-on with non-experts, the automation of repetitive tasks with smart telescopes provides a streamlined approach to covering a diverse array of topics during live EAA sessions. These sessions enable the real-time streaming of various deep sky objects, all while using accessible language to describe their characteristics such as apparent size, physical composition, and distance [9].

Furthermore, these sessions offer the opportunity to illustrate and expound upon the process of selecting celestial targets based on their seasonal visibility and sky positioning. Practical implementation involves linking these intelligent telescopes to multiple devices concurrently, facilitating the organization of interactive observation sessions. In these sessions, each participant can effortlessly partake in viewing the captured astronomical images.

2.2. Demystifying concepts behind astrophotography

Stargazing with a smart telescope makes it possible to observe the deep sky, but it also facilitates the communication of advanced concepts to the most curious people. As a first example, participants can better understand how the image acquisition process works by visually observing the impact of capture settings such as exposure time and gain (equivalent to ISO in classical photography). For instance, increasing these parameters directly increases the quantity of signal received on the image. As a second example, it is visually obvious that the quality of the image shown on the screen increases as the station accumulates data (Figure 2), which opens a discussion of the basics of image processing in astronomy (signal-to-noise ratio, image alignment and stacking). Finally, participants can retrieve the captured images (raw, unprocessed, or final) for further exercises in image processing, providing an opportunity to learn new techniques and make the most of the captured data through dedicated software. For example, Siril can be used to stack the raw images, while Photoshop or GIMP can be used to make the final images more attractive [7].
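The effect of accumulating data on the signal-to-noise ratio can be illustrated with a small simulation. The sketch below is not the processing performed by a smart telescope: it assumes a constant true signal, purely Gaussian noise and frames that are already aligned, and all numeric values (`true_signal`, `noise_sigma`, frame size) are arbitrary assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical scene: a uniform faint patch observed through noisy sub-exposures.
true_signal = 10.0    # arbitrary units per frame (assumed value)
noise_sigma = 20.0    # per-frame Gaussian noise level (assumed value)
shape = (64, 64)


def simulate_frame():
    """Return one noisy sub-exposure of the same, already aligned, field."""
    return true_signal + rng.normal(0.0, noise_sigma, size=shape)


def stack(n_frames):
    """Average n_frames sub-exposures (simple mean stacking)."""
    return np.mean([simulate_frame() for _ in range(n_frames)], axis=0)


def snr(image):
    """Estimate the signal-to-noise ratio as mean / standard deviation."""
    return image.mean() / image.std()


snr_single = snr(stack(1))
snr_stacked = snr(stack(100))
print(f"SNR of a single frame:       {snr_single:.2f}")
print(f"SNR of a 100-frame stack:    {snr_stacked:.2f}")
print(f"Improvement factor:          {snr_stacked / snr_single:.1f}")
```

Under these assumptions, averaging N frames leaves the signal unchanged and divides the noise by roughly the square root of N, which is why the live image visibly improves as the session goes on.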

One fundamental aspect remains to be addressed in order to make the most of the observations: explaining and understanding what is observed.

2.3. Enriching observations with eXplainable Artificial Intelligence

How can one visually recognize a spiral galaxy, a globular cluster, or a planetary nebula? For people with a minimal knowledge of astronomy, a simple observation may be enough. But what about a novice? How can these very different objects be compared visually? To tackle the subject in an original way, we used Artificial Intelligence (AI). AI has become widespread thanks to its state-of-the-art performance in multiple domains such as medicine, finance, social media and energy. However, the lack of transparency of AI models' decisions greatly hampers their development and deployment because of ethical, assurance and trust concerns. This has driven a rise in demand for AI solutions that are accurate, trustworthy and interpretable. eXplainable AI (XAI) is a recent field of research that aims to tackle issues of transparency and trustworthiness, i.e. understanding why an AI makes a certain decision rather than another and, when the decision disagrees with a human's, the reasons behind the disagreement [10]. For image processing, deep neural networks are favoured for their peak performance, but they are black boxes that require additional mathematical frameworks to explain their decisions after they have been developed, i.e. post hoc.

To prepare the outreach events, we followed a pedagogical approach supported by XAI; the combination of astronomy and AI has already been applied in [9]. We study how Computer Vision (CV) models see and recognize objects in pictures. We use XAI techniques to look into black-box machine learning models and deep neural networks, offering a more intuitive understanding of basic and advanced AI concepts and, simultaneously, of astronomy concepts.

Two XAI approaches can be followed [10]: explaining the AI model's entire decision-making process, or explaining a single prediction by offering insight into which features are most important for the investigated output. While the first is useful for data scientists who want to test the robustness of the AI model, the second is more interesting in our case because it helps to distinguish the differences between deep sky objects.

During the MILAN project, we captured thousands of astronomical RGB images with a Stellina smart telescope over one year and published the data [11]. We also developed numerous Deep Learning approaches to process and evaluate astronomical images [12, 13].

Here, we reused these data to train several Deep Learning models to simply detect the presence of celestial objects. The following steps were applied:

- We built a set of 5000 RGB images with a resolution of 224x224 pixels by applying random crops.

- We manually classified these images into two distinct groups: images with and images without deep sky objects (we made sure that the two groups were evenly balanced). Images containing only stars were classified as images without deep sky objects.

- We split the images into three sets: training, validation and test (80%, 10%, 10%).

- We developed a Python prototype to train a ResNet50 model to learn this binary classification.

- In this prototype, basic image processing tasks were performed with popular CV packages such as OpenCV and scikit-image, while the ResNet50 model was designed with TensorFlow. To optimize hardware usage (both CPU and GPU) during specific image processing tasks, the Numba framework was used.

- The Python prototype was executed on a high-performance infrastructure with the following hardware features: 40 cores with 128 GB RAM (Intel(R) Xeon(R) Silver 4210 CPU @ 2.20GHz) and an NVIDIA Tesla V100-PCIE-32GB.

- The following hyper-parameters were used during training: Adam optimizer, learning rate of 0.001, 50 epochs, 32 images per batch.

- We obtained ready-to-use ResNet50 models with an accuracy of 85% on the validation set that can be applied to astronomical images.

- VGG16 and MobileNetV2 architectures were also tested, with broadly similar results.
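The data preparation and model definition steps listed above can be sketched as follows. This is a minimal illustration rather than the MILAN prototype itself: the function names, the random seed, the synthetic input frame, the choice of training from scratch (`weights=None`) and the single sigmoid output are all assumptions; only the patch size, the split ratios and the hyper-parameters come from the text. TensorFlow is imported lazily so that the data preparation part runs without it.

```python
import numpy as np

PATCH = 224                 # crop size stated in the text
SPLIT = (0.8, 0.1, 0.1)     # training / validation / test fractions
HYPERPARAMS = {"learning_rate": 1e-3, "epochs": 50, "batch_size": 32}

rng = np.random.default_rng(seed=42)   # seed is an arbitrary assumption


def random_crop(image, size=PATCH):
    """Cut one random size x size patch out of an H x W x 3 image."""
    h, w = image.shape[:2]
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    return image[top:top + size, left:left + size]


def split_indices(n, fractions=SPLIT):
    """Shuffle n sample indices and split them into train / validation / test."""
    order = rng.permutation(n)
    n_train = round(fractions[0] * n)
    n_val = round(fractions[1] * n)
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]


def build_model():
    """Binary ResNet50 classifier; a sketch that assumes TensorFlow is installed."""
    import tensorflow as tf  # lazy import: only needed when the model is built

    backbone = tf.keras.applications.ResNet50(
        weights=None, include_top=False, pooling="avg",
        input_shape=(PATCH, PATCH, 3))
    output = tf.keras.layers.Dense(1, activation="sigmoid")(backbone.output)
    model = tf.keras.Model(backbone.input, output)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(
            learning_rate=HYPERPARAMS["learning_rate"]),
        loss="binary_crossentropy",
        metrics=["accuracy"])
    return model


# Synthetic frame standing in for a real capture (hypothetical dimensions).
frame = rng.integers(0, 256, size=(1000, 1500, 3), dtype=np.uint8)
patch = random_crop(frame)
train_idx, val_idx, test_idx = split_indices(5000)
print(patch.shape, len(train_idx), len(val_idx), len(test_idx))
```

Training would then call `build_model().fit(...)` with `epochs=HYPERPARAMS["epochs"]` and `batch_size=HYPERPARAMS["batch_size"]` on the labelled patches.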

We built a pipeline to analyze the ResNet50 model output with XRAI [14], a technique that provides good results on galaxy images [15]. In practice, we used the saliency Python package and analyzed the output of the last convolution layer. As a result, we obtain a heatmap showing the zones of interest in the classified images.
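Once an attribution map is available, a common way to turn it into zones of interest is to keep only its most salient pixels. The sketch below assumes the XRAI attributions have already been computed and stored as a 2D array; it uses a synthetic map instead of a real one, and the function name and the percentage kept are hypothetical choices, not values from the study.

```python
import numpy as np


def top_regions_mask(attributions, keep_percent=30.0):
    """Return a boolean mask selecting the keep_percent% most salient pixels."""
    threshold = np.percentile(attributions, 100.0 - keep_percent)
    return attributions >= threshold


# Synthetic 224x224 attribution map: weak background plus one salient blob.
rng = np.random.default_rng(seed=1)
heatmap = rng.random((224, 224)) * 0.1
heatmap[80:120, 100:150] += 1.0   # hypothetical "galaxy" region

mask = top_regions_mask(heatmap, keep_percent=5.0)
print(f"{mask.mean():.1%} of the pixels are kept")
```

Overlaying `mask` on the input patch gives the kind of visual explanation that can be shown to participants next to the original image.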

3. Discussion

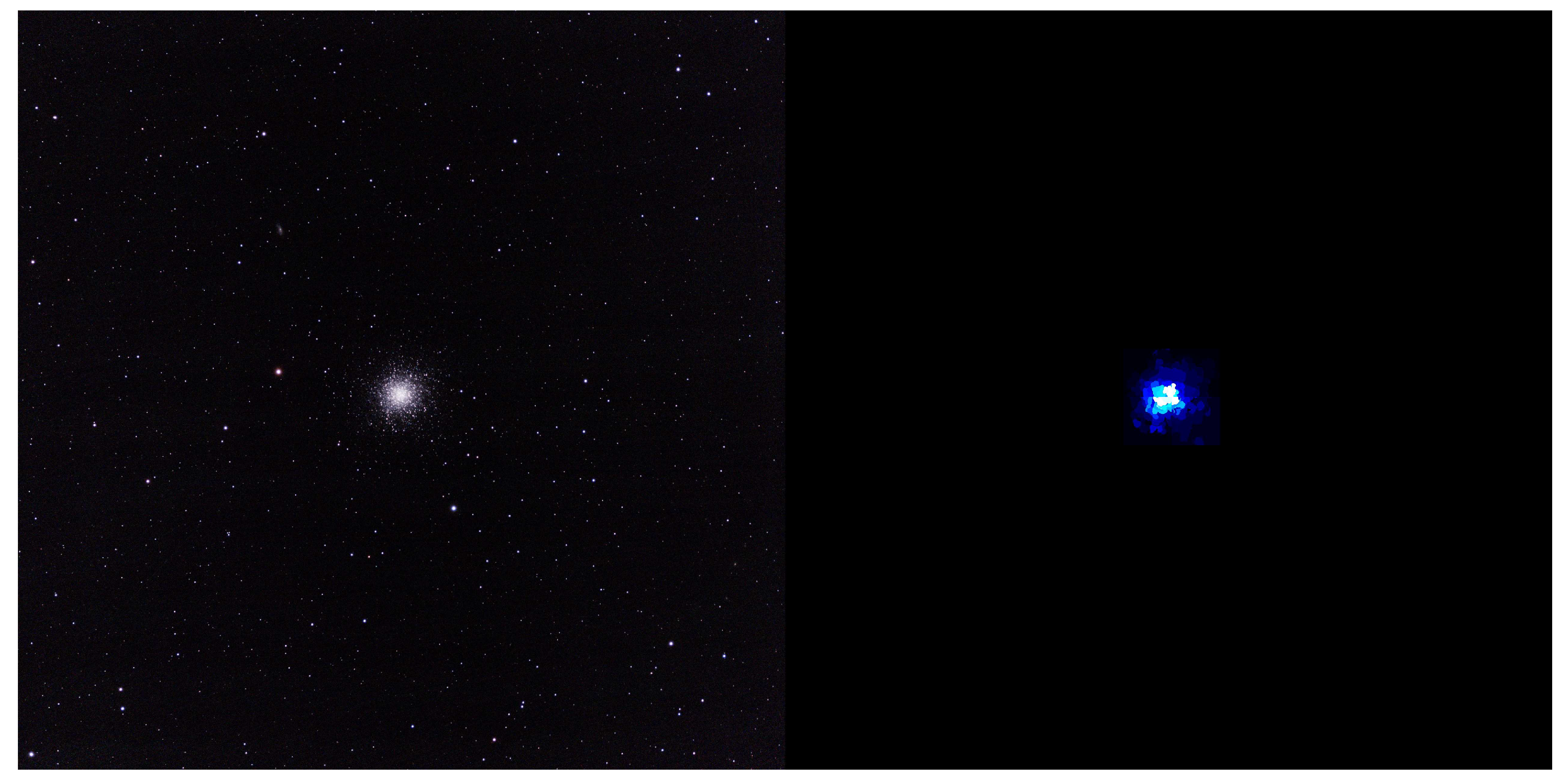

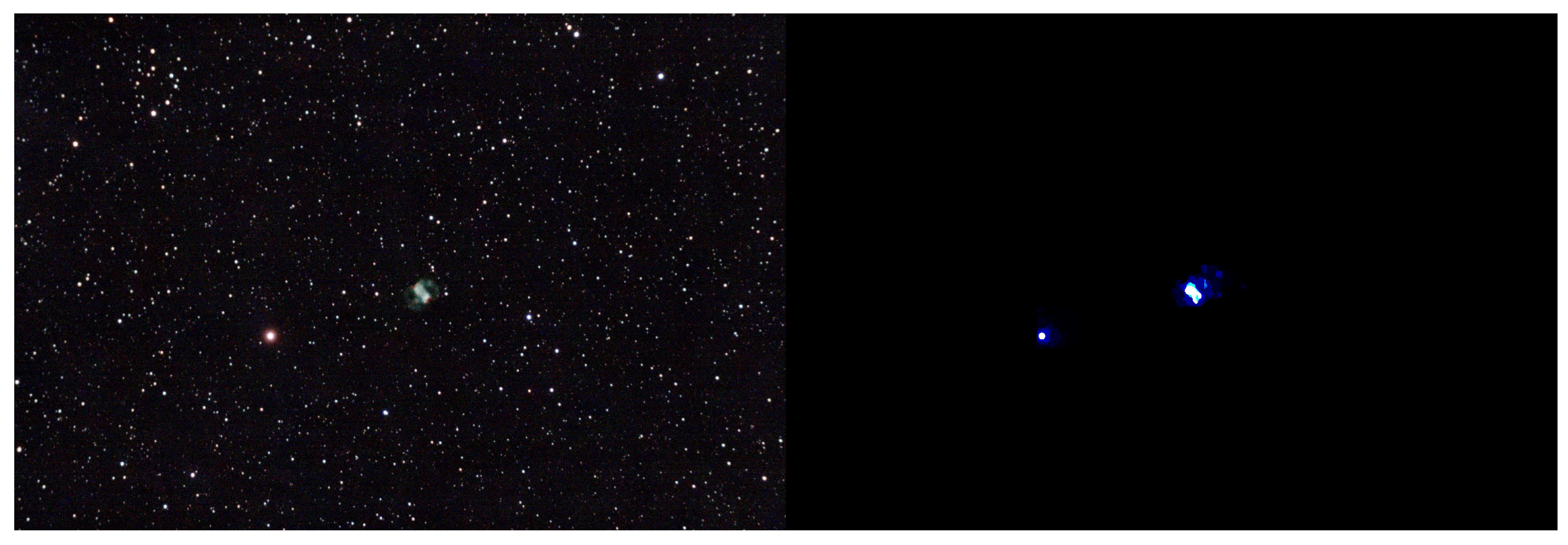

3.1. XAI pipeline

Interestingly, applying XRAI to the ResNet50 output also makes it possible to detect the position of objects in the images, even though the model was trained only to detect their presence (this is a side effect of justifying the result). If an image is classified as containing a deep sky object, the heatmap produced by XRAI localizes the zones of the image that led to this classification (Figure 3, Figure 4, Figure 5, Figure 6). The pipeline has guessed where the galaxies, nebulae and globular clusters are, while mostly ignoring the stars. Sometimes, the XRAI heatmap highlights large stars or stars with a strong halo. This reveals how difficult it is for the ResNet50 model to distinguish these large stars from small galaxies, which could be addressed by improving the training dataset. (Note that this is the primary role of XAI: to enable the construction of more accurate and robust models.)
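To make the localization side effect concrete, a thresholded heatmap can be converted into a bounding box. The sketch below is an illustrative assumption, not the authors' pipeline: it works on a synthetic heatmap, uses an arbitrary threshold, and returns a single box enclosing all salient pixels (separating several objects would require an additional connected-component step).

```python
import numpy as np


def bounding_box(mask):
    """Return (top, left, bottom, right) enclosing the True pixels, or None."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return None   # nothing salient in this patch
    return int(rows[0]), int(cols[0]), int(rows[-1]) + 1, int(cols[-1]) + 1


# Synthetic heatmap with one salient rectangle (hypothetical object position).
heatmap = np.zeros((224, 224))
heatmap[60:90, 130:170] = 1.0

box = bounding_box(heatmap > 0.5)   # 0.5 is an arbitrary threshold
print(box)
```

A bright star with a strong halo would enlarge such a box in the same way it attracts the heatmap, so the result inherits the limitation discussed above.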

In the literature, state-of-the-art YOLO-based approaches ('You Only Look Once') are specifically dedicated to object detection in images. For example, [16] proposes combining YOLO and data augmentation to detect and classify galaxies in large astronomical surveys. The major drawback of this method is the need to annotate a huge quantity of images to train the underlying model.

Another point concerns the computation time on high-resolution images. Even if training requires a high-performance computing platform to complete in a reasonable time (no more than several hours), XRAI heatmap generation should be possible on ordinary computers with modest capabilities, especially for on-the-fly evaluation of stacked images.

Take the example of a 3584x3584 astronomical image: with no overlap, 256 patches of 224x224 pixels (16 per side) must be evaluated, which may take some time depending on the hardware. To be efficient, one must minimize the number of calculations required. Pragmatically, the following strategies can be applied:

- Decrease the resolution of the image to reduce the number of patches to evaluate.

- Estimate the heatmap for a small relevant subset of patches only, for instance by ignoring patches in which the ResNet50 classifier does not detect a deep sky object.

In our experiments, the second strategy provided better results. Further performance optimizations will be carried out after an in-depth analysis of model execution with dedicated tools [17].
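The patch arithmetic and the second strategy can be sketched as follows. The tiling reproduces the 3584x3584 example from the text; the classifier is replaced by a hypothetical brightness test (`toy_classifier`) standing in for the ResNet50 prediction, and the synthetic image and function names are assumptions made for illustration.

```python
import numpy as np

PATCH = 224


def iter_patches(image, size=PATCH):
    """Yield (top, left, patch) for non-overlapping size x size tiles."""
    h, w = image.shape[:2]
    for top in range(0, h - size + 1, size):
        for left in range(0, w - size + 1, size):
            yield top, left, image[top:top + size, left:left + size]


def patches_to_explain(image, has_object, size=PATCH):
    """Keep only the tiles that the classifier flags as containing an object."""
    return [(top, left)
            for top, left, patch in iter_patches(image, size)
            if has_object(patch)]


def toy_classifier(patch):
    """Hypothetical stand-in for the ResNet50 prediction: flags bright tiles."""
    return patch.mean() > 127


# Synthetic 3584x3584 image with one "object" filling exactly one tile.
image = np.zeros((3584, 3584), dtype=np.uint8)
image[448:672, 896:1120] = 255

total = sum(1 for _ in iter_patches(image))
selected = patches_to_explain(image, toy_classifier)
print(f"{total} patches in total, {len(selected)} selected: {selected}")
```

Only the selected tiles would then be passed to the heatmap computation, which is the expensive step, while the classification of every tile remains comparatively cheap.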

3.2. Outreach events

We took part in several outreach events in the Luxembourg Greater Region to share our ideas and results at stargazing sessions open to the public (Figure 7), including:

- 'Rencontres Astronomique du Centre Ardenne 2022', organized by Observatoire Centre Ardenne (Belgium). Participants were mostly experienced astronomers and scientists.

- 'Expo Sciences 2022', organized by Fondation Jeunes Scientifiques Luxembourg (Luxembourg). Participants were young people with a strong scientific interest.

- 'Journée de la Statistique 2022', organized by Luxembourg Science Center and STATEC (Luxembourg). Participants were of all ages and had a strong interest in mathematics and science.

- 'Robotic Event 2023', organized by École Internationale de Differdange (Luxembourg). Participants were mainly students and teachers.

A huge quantity of images was captured during these events under various conditions (urban and rural environments), and smart telescopes helped us to grab interesting images of deep sky objects.

We ran demonstrations for the event participants immediately after acquiring images with smart telescopes, or even during acquisition, using a simple laptop (no internet connection is required). When we set a date for an outreach event, we obviously have no guarantee regarding the weather conditions. As a fallback option in the event of a cloudy night, we recorded videos showing how observations are made with a smart telescope. These videos also allow us to share our results, including captured images [11], with people who cannot attend the events. Among the topics discussed with the participants, we can mention:

- Observing with both conventional and smart telescopes, to highlight the difference between what is seen (and what sometimes remains invisible) with the naked eye and what can be obtained through captured images. Participants are often surprised by what is visible through the eyepiece (the impressive number of stars), and amazed by the images of nebulae obtained in just a few minutes by smart telescopes.

- Transferring smart telescope images to the laptop (via an FTP server; this feature is provided with the Vespera smart telescope).

- Applying the ResNet50 model to these images and presenting the results to the participants.

- Computing and showing the explanations of the ResNet50 model's behaviour with XRAI heatmaps (giving the opportunity to explain in simple language how AI models are trained and used).

- Explaining why deep sky objects captured by the smart telescope and detected by the XAI pipeline are often invisible when viewed directly with the naked eye or through a telescope eyepiece.

- Describing and comparing the different types of deep sky objects (galaxies, star clusters, nebulae, etc.). We did this through short oral question-and-answer games, which showed that the participants understood the concepts quickly and made the connection with their prior knowledge (sometimes linked to popular culture [18]).

In practice, it is interesting to see that our approach complements what can be done with advanced astrometry. Based on a large database of celestial coordinates, astrometry consists of measuring the positions and movements of stars and deep sky objects, which makes it possible to identify precisely the targets that are present in an image.

During outreach events, the important objective is to demonstrate to participants that observing the night sky is above all fun, and it can also be done easily with smart telescopes.

4. Conclusion and perspectives

This paper presented an approach for using smart telescopes in stargazing sessions with the public, as well as for applying XAI to assist the participants during the observations.

To this end, we developed a Python prototype to train an AI model and used the XRAI technique to generate heatmaps that help interpret the results of the model, while providing a means of automatically detecting deep sky objects in the analysed images.

Through a concrete use case and visual demonstrations of the results, the approach offers another perspective on observational astronomy, while showing in a pragmatic way what XAI can be used for. In the short term, the idea is above all to share knowledge about astronomy, but also about artificial intelligence. In the medium and long term, we hope that this will encourage younger people to become scientists themselves.

In future work, we will continue to enrich the approach to raise public awareness of practical issues such as light pollution and the proliferation of satellite constellations.

Author Contributions

Conceptualization, Olivier Parisot; methodology, Olivier Parisot; writing—original draft preparation, Olivier Parisot, Mahmoud Jaziri; writing—review and editing, Olivier Parisot, Mahmoud Jaziri; visualization, Olivier Parisot; supervision, Olivier Parisot; project administration, Olivier Parisot; funding acquisition, Olivier Parisot. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Luxembourg National Research Fund (FNR), grant reference 15872557.

Institutional Review Board Statement

Not Applicable.

Informed Consent Statement

Not Applicable.

Data Availability Statement

Raw astronomical images used during this study can be found on the following page: 'MILAN Sky Survey, raw images captured with a Stellina observation station' (https://doi.org/10.57828/f5kg-gs25). Additional materials used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| EAA | Electronically Assisted Astronomy |
| AI | Artificial Intelligence |
| XAI | eXplainable Artificial Intelligence |
| CV | Computer Vision |

References

1. Farney, M.N. Looking Up: Observational Astronomy for Everyone. The Physics Teacher 2022, 60, 226–228.

2. Varela Perez, A.M. The increasing effects of light pollution on professional and amateur astronomy. Science 2023, 380, 1136–1140.

3. Levchenko, I.; Xu, S.; Wu, Y.L.; Bazaka, K. Hopes and concerns for astronomy of satellite constellations. Nature Astronomy 2020, 4, 1012–1014.

4. Drechsler, M.; Strottner, X.; Sainty, Y.; Fesen, R.A.; Kimeswenger, S.; Shull, J.M.; Falls, B.; Vergnes, C.; Martino, N.; Walker, S. Discovery of Extensive [O iii] Emission Near M31. Research Notes of the AAS 2023, 7, 1.

5. Popescu, M.M. The impact of citizen scientist observations. Nature Astronomy 2023, pp. 1–2.

6. Steinicke, W. Observing and cataloguing nebulae and star clusters: from Herschel to Dreyer's New General Catalogue; Cambridge University Press, 2010.

7. Parker, G. Making Beautiful Deep-Sky Images; Springer, 2007.

8. Cazeneuve, D.; Marchis, F.; Blaclard, G.; Asencio, J.; Martin, V. Detection of Occultation Events by Machine Learning for the Unistellar Network. AGU Fall Meeting Abstracts, 2021, Vol. 2021, pp. P11B–12.

9. Billingsley, B.; Heyes, J.M.; Lesworth, T.; Sarzi, M. Can a robot be a scientist? Developing students' epistemic insight through a lesson exploring the role of human creativity in astronomy. Physics Education 2022, 58, 015501.

10. Dwivedi, R.; Dave, D.; Naik, H.; Singhal, S.; Omer, R.; Patel, P.; Qian, B.; Wen, Z.; Shah, T.; Morgan, G.; et al. Explainable AI (XAI): Core ideas, techniques, and solutions. ACM Computing Surveys 2023, 55, 1–33.

11. Parisot, O.; Hitzelberger, P.; Bruneau, P.; Krebs, G.; Destruel, C.; Vandame, B. MILAN Sky Survey, a dataset of raw deep sky images captured during one year with a Stellina automated telescope. Data in Brief 2023, 48, 109133.

12. Parisot, O. Amplifier glow reduction, 2023. US Patent App. 18/147,839.

13. Parisot, O.; Bruneau, P.; Hitzelberger, P. Astronomical Images Quality Assessment with Automated Machine Learning. Proceedings of the 12th International Conference on Data Science, Technology and Applications - DATA. INSTICC, SciTePress, 2023, pp. 279–286.

14. Kapishnikov, A.; Bolukbasi, T.; Viégas, F.; Terry, M. XRAI: Better attributions through regions. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 4948–4957.

15. Jaziri, M.; Parisot, O. Explainable AI for Astronomical Images Classification. ERCIM News 2023, 2023.

16. González, R.; Muñoz, R.; Hernández, C. Galaxy detection and identification using deep learning and data augmentation. Astronomy and Computing 2018, 25, 103–109.

17. Jin, Z.; Finkel, H. Analyzing Deep Learning Model Inferences for Image Classification using OpenVINO. IPDPSW 2020, 2020, pp. 908–911.

18. Stanway, E.R. Evidencing the interaction between science fiction enthusiasm and career aspirations in the UK astronomy community, 2022, [arXiv:astro-ph.IM/2208.05825].

Figure 1.

A setup used by the authors for stargazing. On the left, the instrument is an ED refractor (72 mm diameter, 420 mm focal length) on a manual alt-azimuth mount. On the right, the instrument is a Vespera smart telescope: four-lens apochromatic refractor, 50 mm diameter, 200 mm focal length, Sony IMX462 sensor, automated alt-azimuth mount.

Figure 2.

An example of the observation of the Lagoon Nebula during a stargazing session with a Vespera smart telescope. On the left, the image after 10 seconds of capture. On the right, the image after 1200 seconds of capture: the signal is higher and the noise is lower.

Figure 3.

On the left, an image of the Leo Triplet captured with a Vespera smart telescope. On the right, the XRAI heatmap representing what is understood by the ResNet50 model.

Figure 4.

On the left, an image of the Markarian Chain captured with a Vespera smart telescope. On the right, the XRAI heatmap representing what is understood by the ResNet50 model.

Figure 5.

On the left, an image of the Great Cluster in Hercules captured with a Vespera smart telescope. On the right, the XRAI heatmap representing what is understood by the ResNet50 model.

Figure 6.

On the left, an image of the Little Dumbbell Nebula captured with a Vespera smart telescope. On the right, the XRAI heatmap representing what is understood by the ResNet50 model.

Figure 7.

Setup of the stargazing party in Luxembourg at Expo Sciences 2022. A Stellina smart telescope was used to capture deep sky images, while a Dobsonian telescope was used to observe the brightest targets directly through eyepieces.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).