Submitted: 15 September 2023

Posted: 18 September 2023

Abstract

Keywords:

1. Introduction

2. Related Work

2.1. Vehicle Re-Identification

2.2. Attention Mechanism

3. Proposed Method

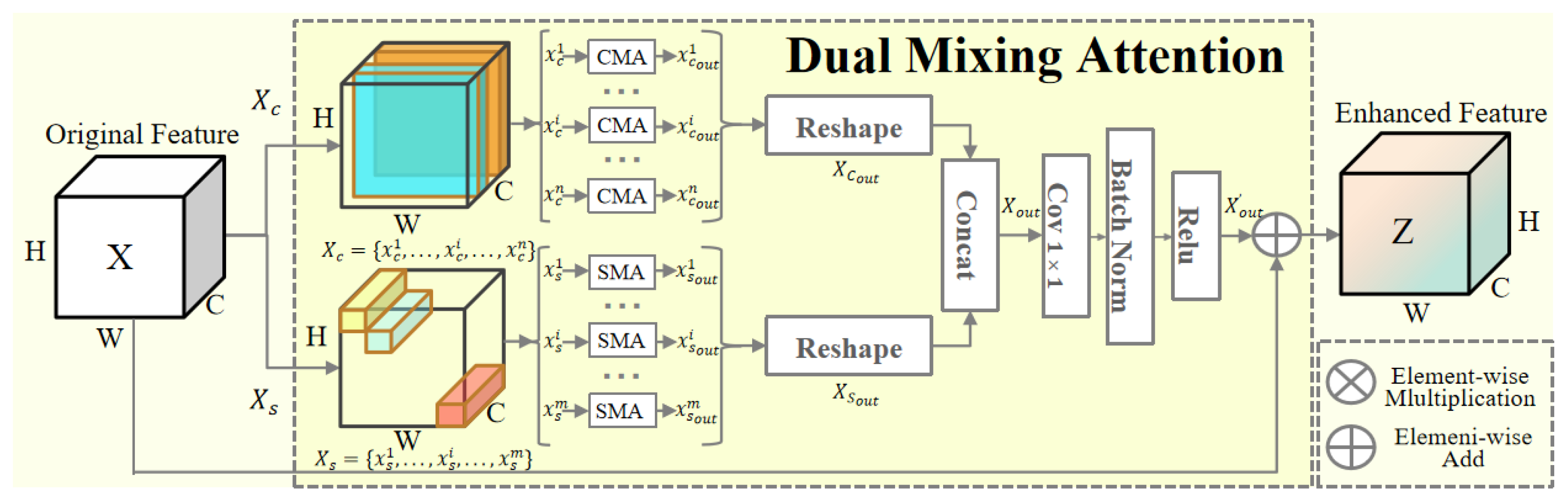

3.1. Dual Mixing Attention Module

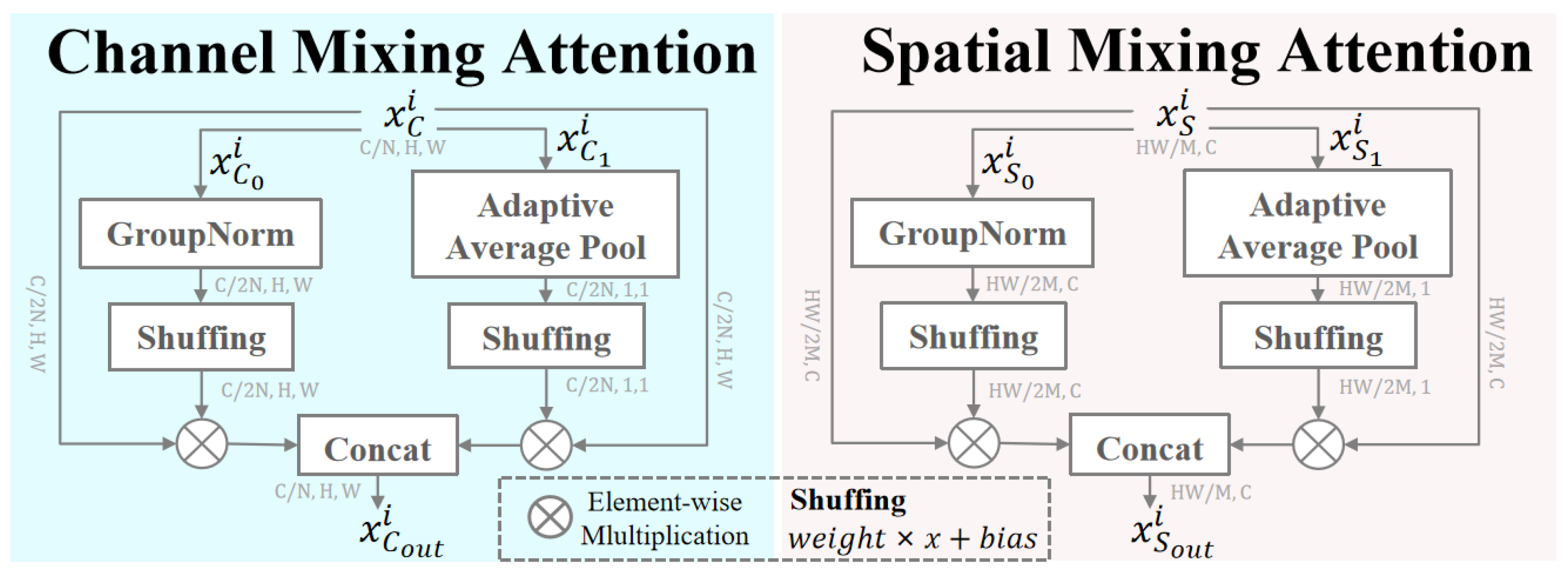

3.2. Channel Mixing Attention

3.3. Spatial Mixing Attention

4. Analysis And Experiments

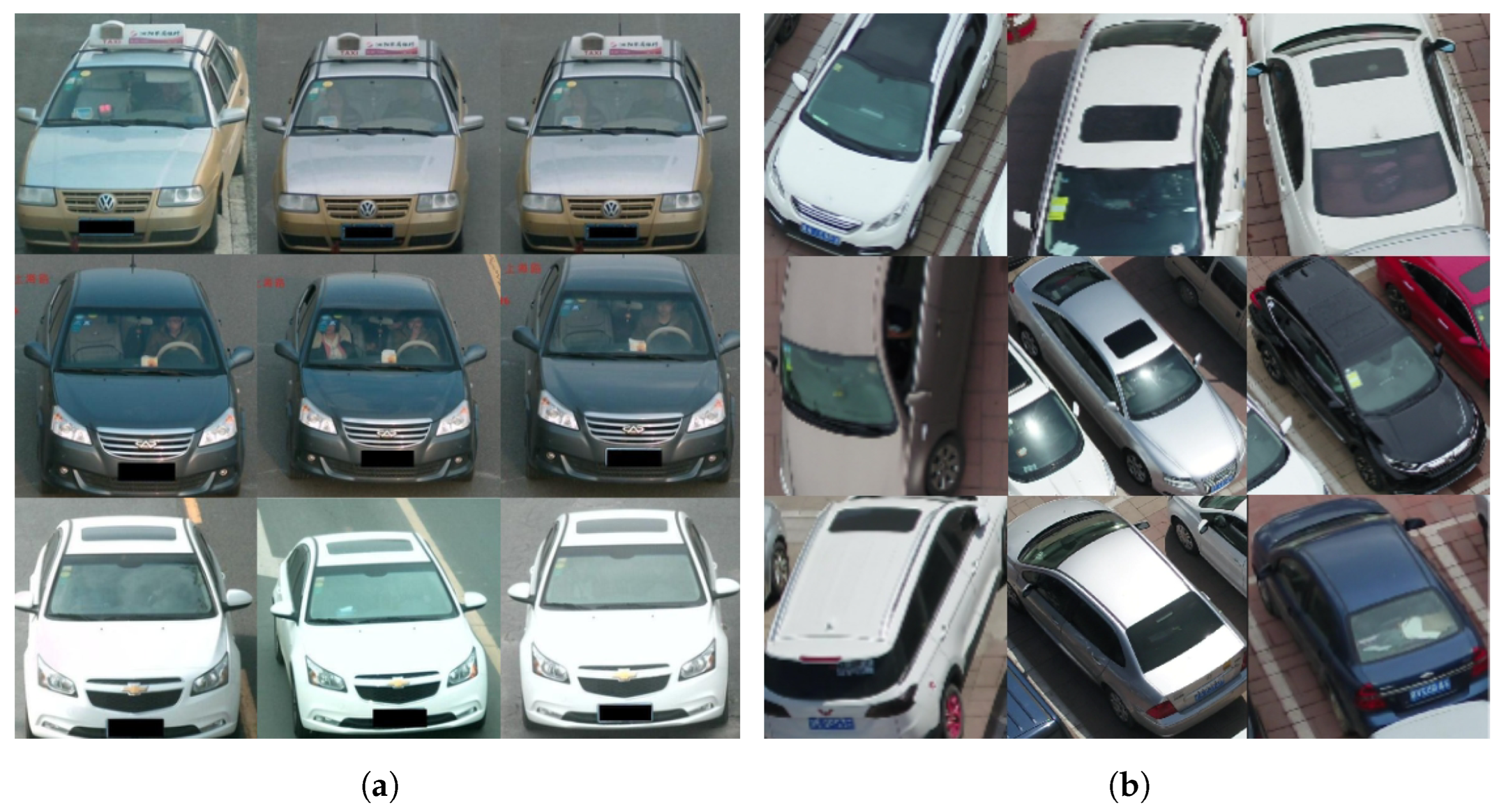

4.1. Datasets

4.2. Implementation Details

4.3. Comparison With State-of-the-Art

4.3.1. Experiments On VeRi-UAV

| Methods | Rank-1 | Rank-5 | Rank-10 |
|---|---|---|---|
| Siamese-Visual [43] | 25.98 | 41.98 | 50.61 |
| VGG CNN M [44] | 28.34 | 39.27 | 43.48 |
| SCAN [40] | 40.49 | 53.74 | 60.55 |
| GoogLeNet [45] | 45.23 | 64.88 | 70.38 |
| RAM [46] | 45.26 | 59.35 | 64.07 |
| CN-Nets [47] | 55.91 | 76.54 | 82.46 |
| TCRL [30] | 56.44 | 77.21 | 82.98 |
| EMRN [38] | 63.47 | 79.84 | 84.66 |
| CANet [3] | 63.68 | 80.73 | 85.40 |
| HPGN [2] | 64.18 | 82.19 | 85.88 |
| HSGNet [11] | 64.22 | 85.31 | 86.36 |
| AM+WTL [48] | 69.11 | 87.23 | 91.64 |
| GiT [37] | 72.48 | 85.83 | 89.61 |
| Baseline | 70.94 | 84.56 | 88.22 |
| Ours | 76.63 | 88.54 | 91.75 |

| Methods | Rank-1 | Rank-5 | Rank-10 | mAP |
|---|---|---|---|---|
| BOW-SIFT [50] | 36.2 | 52.6 | 61.0 | 9.0 |
| LOMO [51] | 69.3 | 77.8 | 82.3 | 34.1 |
| VGGNet [52] | 56.0 | 72.4 | 78.6 | 44.4 |
| ResNet50 [53] | 58.7 | 74.0 | 79.5 | 47.3 |
| VD-CML (VGGNet) [42] | 62.5 | 76.2 | 81.3 | 49.7 |
| VD-CML (ResNet50) [42] | 67.3 | 78.8 | 83.0 | 54.6 |
| TCRL [30] | 77.1 | 79.2 | 84.9 | 58.5 |
| EMRN [38] | 87.6 | 88.9 | 92.4 | 65.9 |
| CANet [3] | 94.4 | 95.0 | 95.8 | 77.9 |
| HPGN [2] | 94.7 | 95.6 | 97.4 | 78.4 |
| HSGNet [11] | 94.8 | 95.7 | 97.6 | 78.5 |
| GiT [37] | 95.3 | 95.9 | 97.9 | 80.3 |
| Baseline | 95.1 | 95.6 | 97.5 | 79.6 |
| Ours | 97.0 | 98.7 | 98.8 | 87.0 |
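
The Rank-k and mAP columns in the tables above are the usual retrieval metrics for re-identification. As a rough illustration only, and not the authors' evaluation code, the sketch below computes both from a query-gallery distance matrix with NumPy. The function name `rank_k_and_map`, its arguments, and the toy data are hypothetical, and the sketch assumes that no gallery samples are filtered out (for example by camera or viewpoint), which real benchmark protocols may do.

```python
import numpy as np


def rank_k_and_map(dist, query_ids, gallery_ids, ks=(1, 5, 10)):
    """Compute Rank-k accuracy and mAP (hypothetical helper, not from the paper).

    dist: (num_query, num_gallery) array of distances, smaller means more similar.
    query_ids / gallery_ids: identity labels of the query and gallery images.
    Assumption: every gallery image is a valid candidate for every query.
    """
    dist = np.asarray(dist, dtype=float)
    query_ids = np.asarray(query_ids)
    gallery_ids = np.asarray(gallery_ids)

    # Sort the gallery for each query from most to least similar.
    order = np.argsort(dist, axis=1, kind="stable")
    matches = gallery_ids[order] == query_ids[:, None]  # (Q, G) boolean hits

    # Drop queries that have no correct match in the gallery at all.
    matches = matches[matches.any(axis=1)]

    # Rank-k: fraction of queries with at least one correct match in the top k.
    rank_k = {k: float(matches[:, :k].any(axis=1).mean()) for k in ks}

    # Average precision per query: mean of precision values at each hit position.
    hits_so_far = np.cumsum(matches, axis=1)
    precision = hits_so_far / np.arange(1, matches.shape[1] + 1)
    ap = (precision * matches).sum(axis=1) / matches.sum(axis=1)

    return rank_k, float(ap.mean())


# Toy example: 2 queries, 4 gallery images (made-up numbers).
toy_dist = [[0.9, 0.1, 0.2, 0.5],
            [0.3, 0.05, 0.4, 0.6]]
toy_rank_k, toy_map = rank_k_and_map(toy_dist, [0, 1], [0, 1, 0, 2])
print(toy_rank_k, toy_map)
```

The function returns fractions in [0, 1], whereas the tables report percentages, so the values would be multiplied by 100 before comparison.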

4.3.2. Experiments On UAV-VeID

4.4. Ablation Experiment And Analysis

4.4.1. The role of Dual Mixing Attention Module

| Methods | Rank-1 | Rank-5 | Rank-10 | mAP |
|---|---|---|---|---|
| Baseline | 95.14 | 95.63 | 97.48 | 79.59 |
| +CMA | 96.34 | 97.25 | 98.03 | 83.63 |
| +SMA | 96.56 | 97.42 | 98.27 | 84.96 |
| Ours | 97.04 | 98.65 | 98.83 | 86.99 |

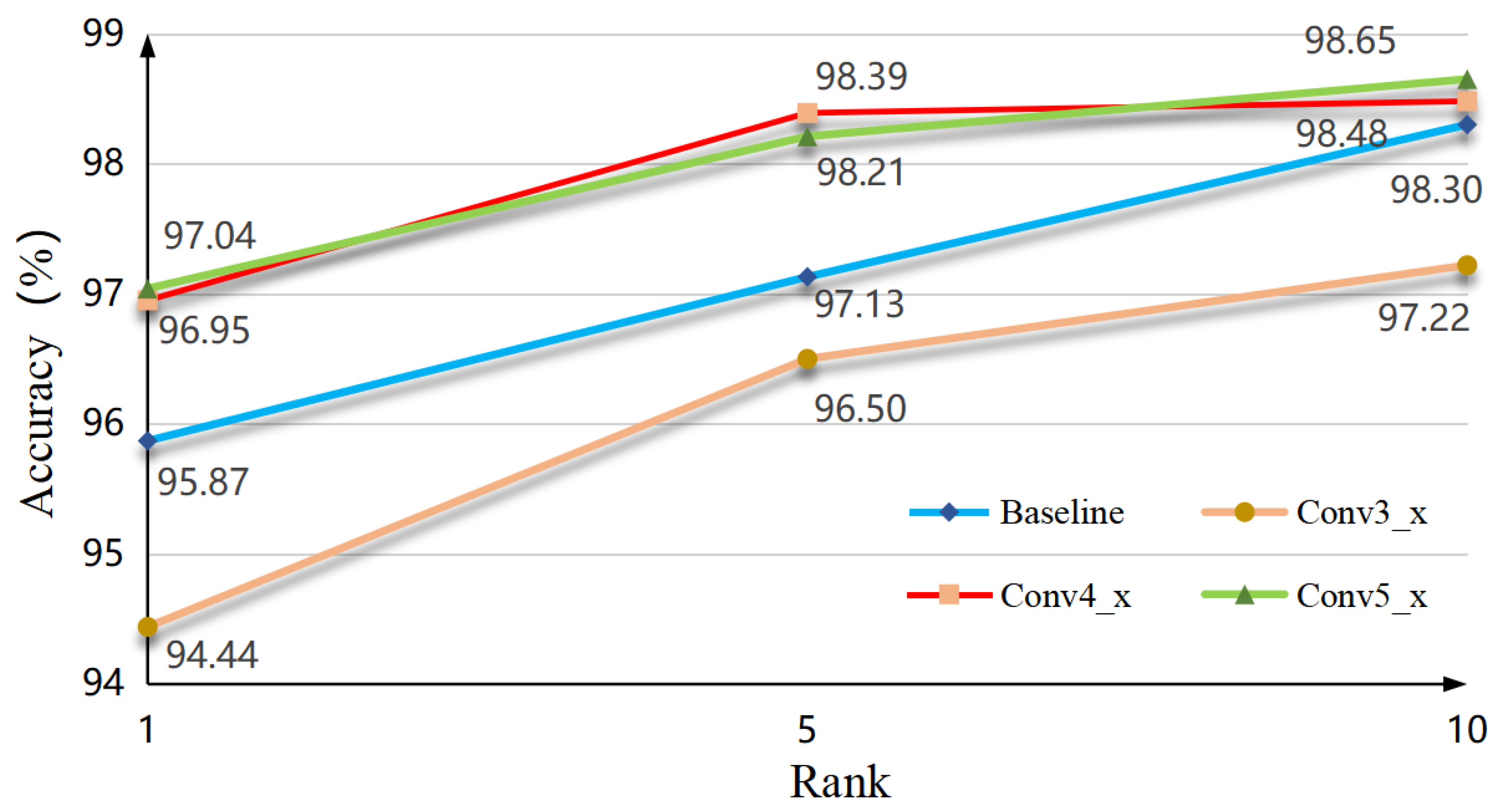

4.4.2. The Effectiveness on Which Stage to Plug the Dual Mixing Attention Module

| No. | Conv3_x | Conv4_x | Conv5_x | Rank-1 | Rank-5 | Rank-10 | mAP |
|---|---|---|---|---|---|---|---|
| 0 | | | | 95.14 | 95.63 | 97.48 | 79.59 |
| 1 | | | | 95.74 | 96.88 | 97.74 | 82.95 |
| 2 | | | | 96.52 | 98.01 | 98.24 | 85.18 |
| 3 | | | | 97.04 | 98.65 | 98.83 | 86.99 |

4.4.3. The Effect of Normalized Strategy in Dual Mixing Attention Module

| Methods | Rank-1 | Rank-5 | Rank-10 | mAP |
|---|---|---|---|---|
| Baseline | 70.94 | 84.56 | 88.22 | 60.04 |
| AAP → AMP | 74.88 | 87.21 | 90.49 | 64.88 |
| GN → IN | 75.98 | 88.03 | 91.25 | 65.62 |
| Ours | 76.63 | 88.54 | 91.75 | 66.22 |

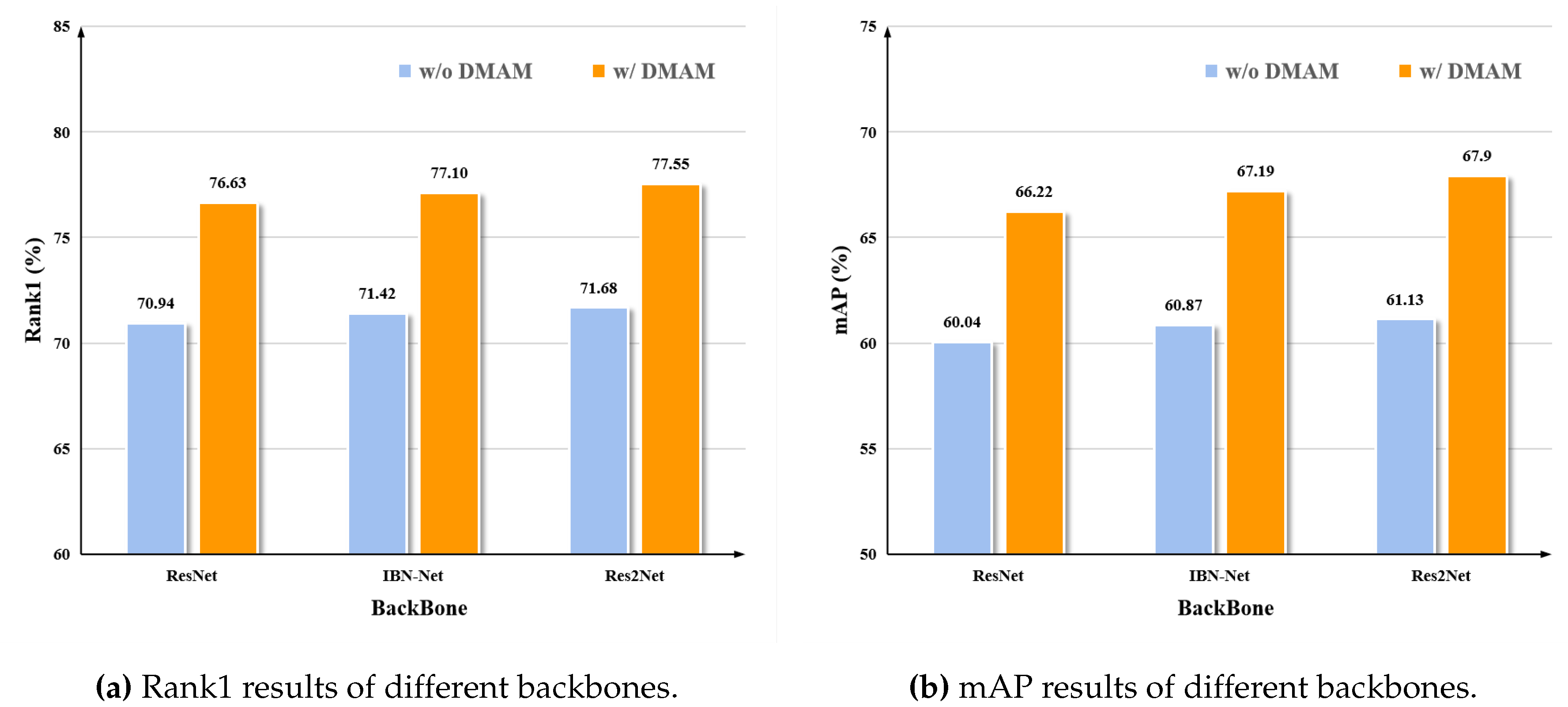

4.4.4. The Universality for Different Backbones

4.4.5. Comparison of Different Attention Modules

4.4.6. Visualization of Model Retrieval Results

5. Conclusions

Funding

Data Availability Statement

References

1. Zheng, Z.; Ruan, T.; Wei, Y.; Yang, Y.; Mei, T. VehicleNet: Learning robust visual representation for vehicle re-identification. IEEE Transactions on Multimedia 2020, 23, 2683–2693.
2. Shen, F.; Zhu, J.; Zhu, X.; Xie, Y.; Huang, J. Exploring spatial significance via hybrid pyramidal graph network for vehicle re-identification. IEEE Transactions on Intelligent Transportation Systems 2021, 23, 8793–8804.
3. Li, M.; Wei, M.; He, X.; Shen, F. Enhancing Part Features via Contrastive Attention Module for Vehicle Re-identification. 2022 IEEE International Conference on Image Processing (ICIP). IEEE, 2022, pp. 1816–1820.
4. Shen, F.; Peng, X.; Wang, L.; Zhang, X.; Shu, M.; Wang, Y. HSGM: A Hierarchical Similarity Graph Module for Object Re-identification. 2022 IEEE International Conference on Multimedia and Expo (ICME). IEEE, 2022, pp. 1–6.
5. Han, K.; Wang, Q.; Zhu, M.; Zhang, X. PVTReID: A Quick Person Re-Identification Based Pyramid Vision Transformer 2023.
6. Chen, Y.; Ke, W.; Sheng, H.; Xiong, Z. Learning More in Vehicle Re-Identification: Joint Local Blur Transformation and Adversarial Network Optimization. Applied Sciences 2022, 12, 7467.
7. Xiong, M.; Gao, Z.; Hu, R.; Chen, J.; He, R.; Cai, H.; Peng, T. A Lightweight Efficient Person Re-Identification Method Based on Multi-Attribute Feature Generation. Applied Sciences 2022, 12, 4921.
8. Qiao, W.; Ren, W.; Zhao, L. Vehicle re-identification in aerial imagery based on normalized virtual Softmax loss. Applied Sciences 2022, 12, 4731.
9. Tahir, M.; Anwar, S. Transformers in pedestrian image retrieval and person re-identification in a multi-camera surveillance system. Applied Sciences 2021, 11, 9197.
10. Li, H.; Lin, X.; Zheng, A.; Li, C.; Luo, B.; He, R.; Hussain, A. Attributes guided feature learning for vehicle re-identification. IEEE Transactions on Emerging Topics in Computational Intelligence 2021, 6, 1211–1221.
11. Shen, F.; Wei, M.; Ren, J. HSGNet: Object Re-identification with Hierarchical Similarity Graph Network. arXiv 2022. arXiv:2211.05486.
12. Huang, P.; Huang, R.; Huang, J.; Yangchen, R.; He, Z.; Li, X.; Chen, J. Deep Feature Fusion with Multiple Granularity for Vehicle Re-identification. CVPR Workshops, 2019, pp. 80–88.
13. Shen, F.; Shu, X.; Du, X.; Tang, J. Pedestrian-specific Bipartite-aware Similarity Learning for Text-based Person Retrieval. Proceedings of the 31st ACM International Conference on Multimedia, 2023.
14. Shen, F.; Lin, L.; Wei, M.; Liu, J.; Zhu, J.; Zeng, H.; Cai, C.; Zheng, L. A large benchmark for fabric image retrieval. 2019 IEEE 4th International Conference on Image, Vision and Computing (ICIVC). IEEE, 2019, pp. 247–251.
15. Zhou, T.; Li, J.; Wang, S.; Tao, R.; Shen, J. Matnet: Motion-attentive transition network for zero-shot video object segmentation. IEEE Transactions on Image Processing 2020, 29, 8326–8338.
16. Zhou, T.; Li, L.; Li, X.; Feng, C.M.; Li, J.; Shao, L. Group-wise learning for weakly supervised semantic segmentation. IEEE Transactions on Image Processing 2021, 31, 799–811.
17. Zhou, T.; Qi, S.; Wang, W.; Shen, J.; Zhu, S.C. Cascaded parsing of human-object interaction recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence 2021, 44, 2827–2840.
18. Wu, H.; Shen, F.; Zhu, J.; Zeng, H.; Zhu, X.; Lei, Z. A sample-proxy dual triplet loss function for object re-identification. IET Image Processing 2022, 16, 3781–3789.
19. Xie, Y.; Shen, F.; Zhu, J.; Zeng, H. Viewpoint robust knowledge distillation for accelerating vehicle re-identification. EURASIP Journal on Advances in Signal Processing 2021, 2021, 1–13.
20. Xu, R.; Shen, F.; Wu, H.; Zhu, J.; Zeng, H. Dual modal meta metric learning for attribute-image person re-identification. 2021 IEEE International Conference on Networking, Sensing and Control (ICNSC). IEEE, 2021, Vol. 1, pp. 1–6.
21. Fu, X.; Shen, F.; Du, X.; Li, Z. Bag of Tricks for “Vision Meet Alage” Object Detection Challenge. 2022 6th International Conference on Universal Village (UV). IEEE, 2022, pp. 1–4.
22. Shen, F.; Wang, Z.; Wang, Z.; Fu, X.; Chen, J.; Du, X.; Tang, J. A Competitive Method for Dog Nose-print Re-identification. arXiv 2022. arXiv:2205.15934.
23. Qiao, C.; Shen, F.; Wang, X.; Wang, R.; Cao, F.; Zhao, S.; Li, C. A Novel Multi-Frequency Coordinated Module for SAR Ship Detection. 2022 IEEE 34th International Conference on Tools with Artificial Intelligence (ICTAI). IEEE, 2022, pp. 804–811.
24. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7132–7141.
25. Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 510–519.
26. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 11534–11542.
27. Zhang, Q.L.; Yang, Y.B. Sa-net: Shuffle attention for deep convolutional neural networks. ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2021, pp. 2235–2239.
28. Park, J.; Woo, S.; Lee, J.Y.; Kweon, I.S. Bam: Bottleneck attention module. arXiv 2018. arXiv:1807.06514.
29. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 3146–3154.
30. Shen, F.; Du, X.; Zhang, L.; Tang, J. Triplet Contrastive Learning for Unsupervised Vehicle Re-identification. arXiv 2023. arXiv:2301.09498.
31. Lin, W.H.; Tong, D. Vehicle re-identification with dynamic time windows for vehicle passage time estimation. IEEE Transactions on Intelligent Transportation Systems 2011, 12, 1057–1063.
32. Kwong, K.; Kavaler, R.; Rajagopal, R.; Varaiya, P. Arterial travel time estimation based on vehicle re-identification using wireless magnetic sensors. Transportation Research Part C: Emerging Technologies 2009, 17, 586–606.
33. Silva, S.M.; Jung, C.R. License plate detection and recognition in unconstrained scenarios. Proceedings of the European conference on computer vision (ECCV), 2018, pp. 580–596.
34. Watcharapinchai, N.; Rujikietgumjorn, S. Approximate license plate string matching for vehicle re-identification. 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS). IEEE, 2017, pp. 1–6.
35. Feris, R.S.; Siddiquie, B.; Petterson, J.; Zhai, Y.; Datta, A.; Brown, L.M.; Pankanti, S. Large-scale vehicle detection, indexing, and search in urban surveillance videos. IEEE Transactions on Multimedia 2011, 14, 28–42.
36. Matei, B.C.; Sawhney, H.S.; Samarasekera, S. Vehicle tracking across nonoverlapping cameras using joint kinematic and appearance features. CVPR 2011. IEEE, 2011, pp. 3465–3472.
37. Shen, F.; Xie, Y.; Zhu, J.; Zhu, X.; Zeng, H. Git: Graph interactive transformer for vehicle re-identification. IEEE Transactions on Image Processing 2023.
38. Shen, F.; Zhu, J.; Zhu, X.; Huang, J.; Zeng, H.; Lei, Z.; Cai, C. An Efficient Multiresolution Network for Vehicle Reidentification. IEEE Internet of Things Journal 2021, 9, 9049–9059.
39. Khorramshahi, P.; Kumar, A.; Peri, N.; Rambhatla, S.S.; Chen, J.C.; Chellappa, R. A dual-path model with adaptive attention for vehicle re-identification. Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 6132–6141.
40. Teng, S.; Liu, X.; Zhang, S.; Huang, Q. Scan: Spatial and channel attention network for vehicle re-identification. Advances in Multimedia Information Processing–PCM 2018: 19th Pacific-Rim Conference on Multimedia, Hefei, China, September 21-22, 2018, Proceedings, Part III 19. Springer, 2018, pp. 350–361.
41. Teng, S.; Zhang, S.; Huang, Q.; Sebe, N. Viewpoint and scale consistency reinforcement for UAV vehicle re-identification. International Journal of Computer Vision 2021, 129, 719–735.
42. Song, Y.; Liu, C.; Zhang, W.; Nie, Z.; Chen, L. View-Decision Based Compound Match Learning for Vehicle Re-identification in UAV Surveillance. 2020 39th Chinese Control Conference (CCC). IEEE, 2020, pp. 6594–6601.
43. Shen, Y.; Xiao, T.; Li, H.; Yi, S.; Wang, X. Learning deep neural networks for vehicle re-id with visual-spatio-temporal path proposals. Proceedings of the IEEE international conference on computer vision, 2017, pp. 1900–1909.
44. Chatfield, K.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Return of the devil in the details: Delving deep into convolutional nets. arXiv 2014. arXiv:1405.3531.
45. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 1–9.
46. Liu, X.; Zhang, S.; Huang, Q.; Gao, W. Ram: a region-aware deep model for vehicle re-identification. 2018 IEEE International Conference on Multimedia and Expo (ICME). IEEE, 2018, pp. 1–6.
47. Yao, H.; Zhang, S.; Zhang, Y.; Li, J.; Tian, Q. One-shot fine-grained instance retrieval. Proceedings of the 25th ACM international conference on Multimedia, 2017, pp. 342–350.
48. Yao, A.; Huang, M.; Qi, J.; Zhong, P. Attention mask-based network with simple color annotation for UAV vehicle re-identification. IEEE Geoscience and Remote Sensing Letters 2021, 19, 1–5.
49. Shen, F.; He, X.; Wei, M.; Xie, Y. A competitive method to vipriors object detection challenge. arXiv 2021. arXiv:2104.09059.
50. Bromley, J.; Guyon, I.; LeCun, Y.; Säckinger, E.; Shah, R. Signature verification using a "siamese" time delay neural network. Advances in neural information processing systems 1993, 6.
51. Liao, S.; Hu, Y.; Zhu, X.; Li, S.Z. Person re-identification by local maximal occurrence representation and metric learning. Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 2197–2206.
52. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014. arXiv:1409.1556.
53. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.
54. Pan, X.; Ge, C.; Lu, R.; Song, S.; Chen, G.; Huang, Z.; Huang, G. On the integration of self-attention and convolution. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 815–825.
55. Li, Y.; Yao, T.; Pan, Y.; Mei, T. Contextual transformer networks for visual recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence 2022, 45, 1489–1500.
56. Liu, H.; Liu, F.; Fan, X.; Huang, D. Polarized self-attention: Towards high-quality pixel-wise regression. arXiv 2021. arXiv:2107.00782.

| Methods | Rank-1 | Rank-5 | Rank-10 | mAP |
|---|---|---|---|---|
| CA [3] | 94.44 | 95.02 | 95.83 | 77.87 |
| SA [2] | 94.72 | 95.57 | 97.43 | 78.42 |
| SA&CA [11] | 94.78 | 95.67 | 97.64 | 78.53 |
| ACmix [54] | 95.07 | 97.31 | 97.76 | 78.52 |
| Cot [55] | 95.87 | 97.31 | 97.76 | 80.30 |
| Psa [56] | 96.59 | 97.85 | 98.12 | 80.70 |
| Ours | 97.04 | 98.65 | 98.83 | 86.99 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).