1. Introduction

In 1982, Ramsay [1] first proposed the definition of functional data, laying a foundation for the development of functional data analysis. In 2005, Ramsay and Silverman gave a detailed introduction to the general methods and steps of functional data analysis, including functional principal component analysis and functional linear regression models, in their book [2]. In 2012, Horváth and Kokoszka [3] focused on inferential methods in functional data analysis.

In 2009, Shin [4] proposed a partial functional linear model (PFLM), which explores the relationship between a scalar response variable and mixed-type predictors. In 2012, Shin and Lee [5] derived the asymptotic prediction rate of the PFLM and compared it with those of other functional regression models.

In 2002, James [6] proposed generalized linear models with functional predictors and applied them to standard missing data problems. In 2005, Müller and Stadtmüller [7] proposed a generalized functional linear regression model in which the response variable is a scalar and the predictor is a random function; they also considered the situation where the link and variance functions are unknown. In 2015, Shang and Cheng [8] proposed a roughness regularization approach for nonparametric inference in generalized functional linear models with a known link function. In 2019, Wong et al. [9] investigated a class of partially linear functional additive models that predict a scalar response from both parametric effects of a multivariate predictor and nonparametric effects of a multivariate functional predictor. In 2021, Xiao et al. [10] proposed a generalized partially functional linear regression model with a binary (0 or 1) response and multiple functional and scalar predictors, and established the asymptotic properties of the estimated coefficients.

In a generalized linear model, the link function may not be known exactly but can sometimes be assumed to have a general ‘parametric’ form. In 1984, Scallan et al. [11] showed how generalized linear models can be extended to fit models with such link functions.

In 1994, Weisberg and Welsh [12] used kernel smoothing to estimate the link function, estimated the regression coefficients given that link function, and alternated between these two steps, which effectively solves the fitting problem when the link function is unknown. However, kernel smoothing can perform poorly near the boundary, so local polynomial fitting, which performs better near the boundary, is used instead.

In 1998, Chiou and Müller [13] considered the case where the link and variance functions are unknown but smooth, and obtained consistency results for the link and variance function estimators as well as the sampling distribution of the regression coefficients. In 2005, Chiou and Müller [14] introduced a flexible marginal modelling approach for statistical inference for clustered and longitudinal data under minimal assumptions, in which the predictors are longitudinal data. Their estimated estimating equation approach is semiparametric, and the proposed semiparametric model is fitted by quasi-likelihood regression. They established the consistency of the estimated link and variance functions and the asymptotic limit distribution of the regression coefficients. Other methods are also available for estimating unknown functions. In 2009, Bai et al. [15] focused on single-index models for longitudinal data and proposed a procedure to estimate the single-index component and the unknown link function by combining penalized splines with quadratic inference functions. In 2012, Pang and Xue [16] extended single-index models to settings with random effects: the link function was estimated with a local linear smoother, and a new set of estimating equations modified for boundary effects was proposed to estimate the index coefficients. In 2017, Yuan and Diao [17] developed sieve maximum likelihood estimation for generalized linear models, in which the unknown link function is assumed to lie in a sieve space, and introduced various sieve methods, including B-spline and P-spline based methods.

In summary, among existing models with an unknown link function, a generalized partially functional regression model that regresses the response on multiple functional and scalar predictors has not been addressed. To fill this gap, we propose a generalized partially functional linear model with an unknown link function. Because the proposed method estimates the form of the link function from the data, it avoids the loss of model accuracy caused by selecting an incorrect link function.

2. Model and Estimation

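The data we observe for the $i$-th subject are $(Y_i, X_{i1}(t), \ldots, X_{ip}(t), \mathbf{Z}_i)$, $i = 1, \ldots, n$. We assume that these data are independent and identically distributed (i.i.d.) copies of $(Y, X_1(t), \ldots, X_p(t), \mathbf{Z})$. Each functional predictor $X_j(t)$, $j = 1, \ldots, p$, is a random curve, where $t \in T$ and $T$ is a bounded interval of $\mathbb{R}$. The scalar predictor vector $\mathbf{Z}$ is a $q$-dimensional random vector. The response $Y$ is a real-valued random variable, which may be binary or a count.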

2.1. Model

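We establish a model for the relationship between the response variable $Y$ and the predictors $X_j(t)$, $j = 1, \ldots, p$, and $\mathbf{Z}$:

$$Y_i = g\left(\sum_{j=1}^{p}\int_T X_{ij}(t)\beta_j(t)\,dt + \mathbf{Z}_i^{T}\boldsymbol{\gamma}\right) + \varepsilon_i, \qquad i = 1, \ldots, n, \tag{1}$$

where $\beta_j(t)$ is the regression coefficient function to be estimated for the functional predictor $X_j(t)$, and $\boldsymbol{\gamma} = (\gamma_1, \ldots, \gamma_q)^{T}$ is a $q$-dimensional vector whose elements are the regression coefficients to be estimated for the scalar predictors $\mathbf{Z}$. Here $\varepsilon_i$ are i.i.d. copies of the random error variable $\varepsilon$, with $E(\varepsilon \mid X_1, \ldots, X_p, \mathbf{Z}) = 0$. Writing $\eta = \sum_{j=1}^{p}\int_T X_j(t)\beta_j(t)\,dt + \mathbf{Z}^{T}\boldsymbol{\gamma}$, the relationship between the response variable $Y$ and $\eta$ is established through $g$, i.e., $E(Y \mid X_1, \ldots, X_p, \mathbf{Z}) = g(\eta)$. The link function $g$ is unknown and needs to be estimated.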

Let $V(\cdot)$ be a variance function satisfying $\operatorname{Var}(Y \mid X_1, \ldots, X_p, \mathbf{Z}) = \sigma^2 V\{g(\eta)\}$ for a constant $\sigma^2 > 0$.

To reduce the dimensionality of the functional predictors $X_j(t)$, we adopt functional principal component analysis (FPCA). First, we standardize the original data by centering, so that $E\{X_j(t)\} = 0$ and $E(\mathbf{Z}) = \mathbf{0}$.

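By the Karhunen–Loève (KL) expansion and Mercer’s theorem, $X_{ij}(t)$ can be expanded as

$$X_{ij}(t) = \sum_{k=1}^{\infty}\xi_{ijk}\,\phi_{jk}(t),$$

where $\xi_{ijk} = \int_T X_{ij}(t)\phi_{jk}(t)\,dt$ are the functional principal component scores and $\phi_{jk}(t)$, $k = 1, 2, \ldots$, are the functional principal components, i.e., the eigenfunctions of the covariance operator of $X_j$. Since the $\phi_{jk}$ form an orthonormal basis of the function space $L^2(T)$, the regression coefficient function $\beta_j(t)$ can be expanded as

$$\beta_j(t) = \sum_{k=1}^{\infty} b_{jk}\,\phi_{jk}(t).$$

Plugging the above two expansions into (1) and using orthonormality, we have

$$Y_i = g\left(\sum_{j=1}^{p}\sum_{k=1}^{m_j}\xi_{ijk}\,b_{jk} + \mathbf{Z}_i^{T}\boldsymbol{\gamma}\right) + \varepsilon_i. \tag{4}$$

In (4), we truncate the expansions at $m_j$, which depends on the sample size $n$ and increases asymptotically with $n$.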
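The dimension-reduction step can be sketched numerically for curves observed on a common grid. This is a minimal sketch, assuming equally spaced observation points and approximating each integral by a Riemann sum on the grid; the function name `fpca_truncate` is hypothetical, and the 85% default threshold is borrowed from the simulation setting rather than from the authors' code.

```python
import numpy as np


def fpca_truncate(curves, t_grid, var_threshold=0.85):
    """Discretized FPCA for curves observed on a common, equally spaced grid.

    curves: array of shape (n, T), one row per subject.
    Returns the truncated scores xi_ik, the eigenfunctions phi_k on the grid,
    the eigenvalues, and the truncation level m.
    """
    n = curves.shape[0]
    dt = t_grid[1] - t_grid[0]                       # quadrature weight for the integrals
    centered = curves - curves.mean(axis=0)          # center so the sample mean curve is 0
    cov = centered.T @ centered / n                  # sample covariance K(s, t) on the grid
    evals, evecs = np.linalg.eigh(cov * dt)          # discretized covariance operator
    order = np.argsort(evals)[::-1]                  # decreasing eigenvalues
    evals = np.clip(evals[order], 0.0, None)         # remove tiny negative round-off
    phi = evecs[:, order] / np.sqrt(dt)              # rescale so that sum(phi_k^2) * dt = 1
    explained = np.cumsum(evals) / evals.sum()
    m = int(np.searchsorted(explained, var_threshold) + 1)  # smallest m reaching the threshold
    scores = centered @ phi[:, :m] * dt              # xi_ik ~ integral of X_i(t) phi_k(t) dt
    return scores, phi[:, :m], evals[:m], m
```

If a coefficient function is expanded in the same estimated eigenfunctions with coefficient vector `b`, the integral of $X_i(t)\beta(t)$ is approximated by `scores @ b`, which is the truncated inner sum appearing in (4).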

2.2. Estimation

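Define the parameter vector $\boldsymbol{\theta} = (\mathbf{b}^{T}, \boldsymbol{\gamma}^{T})^{T}$, where $\mathbf{b} = (b_{11}, \ldots, b_{1m_1}, \ldots, b_{p1}, \ldots, b_{pm_p})^{T}$ collects the truncated basis coefficients of $\beta_1, \ldots, \beta_p$.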

For the estimation of the parameter vector and the link function g, we use an iterative estimation method to obtain the final estimates. Let there exist a constant , with this c and n we can define . The overall iterative process is briefly described below:

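Step 1 Assuming that the link function $g$ is known, we obtain the estimate $\hat{\boldsymbol{\theta}}$ of $\boldsymbol{\theta}$ by solving the estimating equation

$$\sum_{i=1}^{n}\frac{g'(\eta_i)}{V\{g(\eta_i)\}}\{Y_i - g(\eta_i)\}\,\mathbf{W}_i = \mathbf{0}, \tag{5}$$

where $\eta_i = \mathbf{W}_i^{T}\boldsymbol{\theta}$ and $\mathbf{W}_i$ stacks the truncated scores $\xi_{ijk}$ ($j = 1, \ldots, p$, $k = 1, \ldots, m_j$) and the scalar predictors $\mathbf{Z}_i$. The link function $g$ is required to be twice continuously differentiable to ensure the existence of the Hessian matrix, and the variance function $V$ is defined on the range of the link function and is strictly positive.

Let $\mathbf{W} = (\mathbf{W}_1, \ldots, \mathbf{W}_n)^{T}$, $\mathbf{Y} = (Y_1, \ldots, Y_n)^{T}$, $\mathbf{D} = \operatorname{diag}\{g'(\eta_1), \ldots, g'(\eta_n)\}$ and $\boldsymbol{\Sigma} = \operatorname{diag}[V\{g(\eta_1)\}, \ldots, V\{g(\eta_n)\}]$. Then equation (5) can be expressed in matrix form as

$$\mathbf{W}^{T}\mathbf{D}\boldsymbol{\Sigma}^{-1}\{\mathbf{Y} - g(\mathbf{W}\boldsymbol{\theta})\} = \mathbf{0},$$

where $g$ is applied componentwise. We solve it by weighted least squares. A first-order Taylor expansion around a current value $\boldsymbol{\theta}^{(0)}$ gives $g(\mathbf{W}\boldsymbol{\theta}) \approx g(\mathbf{W}\boldsymbol{\theta}^{(0)}) + \mathbf{D}\mathbf{W}(\boldsymbol{\theta} - \boldsymbol{\theta}^{(0)})$, with $\mathbf{D}$ and $\boldsymbol{\Sigma}$ evaluated at $\boldsymbol{\theta}^{(0)}$, and simplification yields the estimate

$$\hat{\boldsymbol{\theta}} = (\mathbf{W}^{T}\mathbf{Q}\mathbf{W})^{-1}\mathbf{W}^{T}\mathbf{Q}\,\mathbf{z},$$

where $\mathbf{Q} = \mathbf{D}^{2}\boldsymbol{\Sigma}^{-1}$ and $\mathbf{z} = \mathbf{W}\boldsymbol{\theta}^{(0)} + \mathbf{D}^{-1}\{\mathbf{Y} - g(\mathbf{W}\boldsymbol{\theta}^{(0)})\}$.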
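Step 1 amounts to iteratively reweighted least squares (Fisher scoring) for the quasi-likelihood score in (5). The sketch below is a minimal illustration under the assumption that the mean function `g`, its derivative `g_prime` and the variance function `V` are supplied as vectorized Python callables and that `W` is the design matrix whose rows stack the truncated scores and scalar predictors; the function name, starting value and stopping rule are hypothetical choices.

```python
import numpy as np


def quasi_likelihood_fit(W, y, g, g_prime, V, theta0, max_iter=50, tol=1e-8):
    """Solve sum_i g'(eta_i) / V(mu_i) * (y_i - mu_i) * W_i = 0 for theta.

    Iteratively reweighted least squares with the mean function g treated as
    known; W is the (n, d) design matrix and eta = W @ theta.
    """
    theta = np.asarray(theta0, dtype=float).copy()
    for _ in range(max_iter):
        eta = W @ theta
        mu = g(eta)
        d = g_prime(eta)
        w = d**2 / V(mu)                  # weights g'(eta)^2 / V(mu)
        z = eta + (y - mu) / d            # working response from a first-order Taylor expansion
        theta_new = np.linalg.solve(W.T @ (W * w[:, None]), W.T @ (w * z))
        if np.max(np.abs(theta_new - theta)) < tol:
            return theta_new
        theta = theta_new
    return theta
```

With the logistic mean function and Bernoulli variance this reduces to the familiar logistic-regression IRLS, which is a convenient sanity check before the link is replaced by a nonparametric estimate.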

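Step 2 By local linear regression, we obtain the estimates $\hat g$ and $\hat g'$ of the link function $g$ and its derivative $g'$.

Let the bandwidth $h$ of the kernel function $K(\cdot)$ converge to zero, and define $K_h(\cdot) = K(\cdot/h)/h$. Since $\hat g$ and $\hat g'$ converge at different rates, their bandwidths should in principle also differ. Let $h_0$ denote the bandwidth for $\hat g$ and $h_1$ the bandwidth for $\hat g'$; in this paper, for simplicity, we choose $h_0 = h_1 = h$. Let the distributions of both the functional predictors $X_j$ and the scalar predictors $\mathbf{Z}$ have compact support, so that the index $\eta$ takes values in a compact set $U$. To simplify the notation, let $\eta_i = \mathbf{W}_i^{T}\boldsymbol{\theta}$, $i = 1, \ldots, n$, where $\mathbf{W}_i$ collects the truncated FPC scores and the scalar predictors of subject $i$. For a fixed $\boldsymbol{\theta}$, we apply local linear regression to obtain initial estimates $\hat g(u)$ and $\hat g'(u)$ of $g$ and $g'$, respectively, by minimizing, at any point $u \in U$, the weighted sum of squares

$$\sum_{i=1}^{n}\{Y_i - a - b(\eta_i - u)\}^{2}K_h(\eta_i - u). \tag{6}$$

Minimizing (6) gives $\hat a$ and $\hat b$, and the estimates are $\hat g(u) = \hat a$ and $\hat g'(u) = \hat b$, where

$$\hat a = \frac{S_2T_0 - S_1T_1}{S_0S_2 - S_1^{2}}, \qquad \hat b = \frac{S_0T_1 - S_1T_0}{S_0S_2 - S_1^{2}},$$

with $S_r = \sum_{i=1}^{n}K_h(\eta_i - u)(\eta_i - u)^{r}$ and $T_r = \sum_{i=1}^{n}K_h(\eta_i - u)(\eta_i - u)^{r}Y_i$.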

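Step 3 Using the method of Step 1, we replace the link function and its derivative by the estimates $\hat g$ and $\hat g'$ obtained in Step 2 and solve the estimating equation (5) for $\boldsymbol{\theta}$. This gives the updated estimate $\hat{\boldsymbol{\theta}}^{(1)}$.

Step 4 Using the method of Step 2, we replace the parameter vector by the estimate $\hat{\boldsymbol{\theta}}^{(1)}$, i.e., we use $\hat\eta_i = \mathbf{W}_i^{T}\hat{\boldsymbol{\theta}}^{(1)}$, and obtain updated estimates $\hat g^{(1)}$ and $\hat g'^{(1)}$ of $g$ and $g'$.

Step 5 Repeat Steps 3 and 4 until convergence, and then stop the iteration.

Step 6 The final estimate $\hat{\boldsymbol{\theta}} = (\hat{\mathbf{b}}^{T}, \hat{\boldsymbol{\gamma}}^{T})^{T}$ of the regression coefficients is taken from the last iteration, which gives $\hat\beta_j(t) = \sum_{k=1}^{m_j}\hat b_{jk}\hat\phi_{jk}(t)$, and the estimate of the link function $g$ is the corresponding $\hat g$.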
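Steps 1 to 6 can be combined into a single alternating loop. The sketch below is a simplified version for a binary response and rests on several assumptions that are ours, not the text's: it takes one Fisher-scoring step for $\boldsymbol{\theta}$ per iteration instead of solving (5) to convergence, evaluates the local linear fit only at the current index values, uses the Bernoulli variance $\mu(1-\mu)$ with fitted means clipped away from 0 and 1, and normalizes $\boldsymbol{\theta}$ to unit length because an unknown link absorbs the scale of the index. The stopping rule (at most 100 iterations, change below 0.01) mirrors the one used in the simulation section; all function names are hypothetical.

```python
import numpy as np


def local_linear_at_data(eta, y, h):
    """Local linear estimates of g(eta_k) and g'(eta_k) at every observed index value,
    using a Gaussian kernel (its normalizing constant cancels in the ratios)."""
    d = eta[None, :] - eta[:, None]                  # d[k, i] = eta_i - eta_k
    w = np.exp(-0.5 * (d / h) ** 2)
    s0, s1, s2 = w.sum(axis=1), (w * d).sum(axis=1), (w * d**2).sum(axis=1)
    t0, t1 = (w * y).sum(axis=1), (w * d * y).sum(axis=1)
    det = s0 * s2 - s1**2
    return (s2 * t0 - s1 * t1) / det, (s0 * t1 - s1 * t0) / det


def fit_unknown_link(W, y, theta0, h, max_iter=100, tol=1e-2, eps=1e-2):
    """Alternate local linear estimation of (g, g') with a quasi-likelihood
    update of theta for a binary response; returns (theta_hat, iterations)."""
    theta = np.asarray(theta0, dtype=float)
    theta = theta / np.linalg.norm(theta)            # assumed normalization ||theta|| = 1
    for it in range(1, max_iter + 1):
        eta = W @ theta
        g_hat, dg_hat = local_linear_at_data(eta, y, h)   # Steps 2 and 4
        mu = np.clip(g_hat, eps, 1.0 - eps)          # keep fitted means inside (0, 1)
        V = mu * (1.0 - mu)                          # assumed Bernoulli variance function
        D = W * dg_hat[:, None]                      # rows: g'(eta_i) * W_i
        A = D.T @ (D / V[:, None])
        step = np.linalg.solve(A, D.T @ ((y - mu) / V))   # one scoring step on (5), Steps 1 and 3
        theta_new = theta + step
        theta_new = theta_new / np.linalg.norm(theta_new)
        if np.max(np.abs(theta_new - theta)) < tol:  # Step 5 stopping rule
            return theta_new, it
        theta = theta_new
    return theta, max_iter
```

Because the index is only identified up to sign under this normalization, estimates from such a loop are best compared with the truth through the absolute inner product.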

3. Asymptotic Properties

In deriving the asymptotics of the estimates of the link function and the regression coefficients , some additional assumptions are required:

- (C1)

There exists for a constant , such that

- (C2)

Let the density function of be strictly positive, and satisfies the first-order Lipschitz condition when .

- (C3)

The kernel function satisfies the first-order Lipschitz condition and is a bounded and continuous symmetric probability density function and satisfies

- (C4)

. Here h is the bandwidth of the kernel function.

- (C5)

For , as .

Remark 1. (C1) is a necessary condition for the asymptotic normality of the estimator. (C2) ensures that , are bounded away from 0 when is close enough to θ. (C3) is a standard assumption on the kernel function. (C4) is a standard assumption on the bandwidth. (C5) controls the growth of the truncation level m so that the estimators converge sufficiently fast.

3.1. Asymptotic Convergence of

Lemma 1.

Let be independent and identically distributed random vectors. Furthermore, assume that for any , there exist , and such that is the joint density function of . Let be a bounded and strictly positive kernel function that satisfies the Lipschitz condition, we have

Proof. See Proposition 4 in Mack and Silverman (1982) [18]. □

Theorem 1.

Assume that (C1)-(C5) hold. Then, for , we have

where , , and for the kernel function, let

Proof.

By expanding

, we obtain when

.

From Lemma 1, it can be proved that for

Taking (8) into (9), we obtain

Then

where

, ⊗ indicates the Kronecker product.

Inverting the matrix

, we get

Let

where

, and

By expanding

, we obtain when

From Lemma 1, combined with (10) and (11), we can prove that

The Taylor expansion of

at

u is

where

,

.

Combining (7) and (12), we obtain

where

Since

, (10) can be transformed into

Substituting this into (13) and combining the result with Theorem 3.3 in Masry and Tjøstheim (1995) [19], we obtain Theorem 1. □

Corollary 1.

If we further refine the condition in assumption (C4) such that , then it follows that

3.2. Asymptotic Convergence of

We first describe the iterative estimation procedure of Section 2.2 in more detail, in preparation for Theorem 2.

- (1)

Solving equation (5) under the assumption that the link function is known. Assume

, then it follows that

Let

, where

and satisfies

,

. Similarly we can obtain

- (2)

Solving

given the link function is unknown by

where

. Similarly we can obtain

where

.

Lemma 2.

If the assumptions (C1)-(C5) hold, we have

Proof. Let

,

. By Theorem 4.1 of Chiou and Müller [20], we know that

and

, then

where A, B and C can be expressed as

□

Lemma 3.

If the assumptions (C1)-(C5) hold, we have

Proof. Combining Theorem 1 and

in Lemma 2, we can prove that

□

Theorem 2.

If we assume that (C1)-(C5) hold, we have

In the case of truncated models for , let be the estimator of , , where . We define . Therefore, we have the following expression:

Furthermore, let , where . Here, I represents a dimensional identity matrix.

Proof. By using the Taylor expansion with a suitable mean value

, we can obtain

Then, by Lemma 2 and Lemma 3, (15) can be rewritten as

By combining the above equation with

we can get

Equation (16) transforms the relationship between

and

in the case of unknown link functions into the relationship between

and

in the case of known link functions. Combining this with Theorem 1 in [10] completes the proof of Theorem 2. □

3.3. Asymptotic Convergence of

Theorem 3.

If we assume that (C1)-(C5) hold, for , then we have

Proof.

The above expression transforms the relationship between

and

g into the relationship between

and

g (i.e., Theorem 1). Therefore, Theorem 3 follows from Theorem 1. □

Corollary 2.

If we further refine the condition in assumption (C4) such that , then it follows that

Remark 2.

Let represent the eigenvalues and eigenvectors of Ω, where

Then, the 95% confidence band for the regression coefficient function can be expressed as

where , , .

4. Simulation

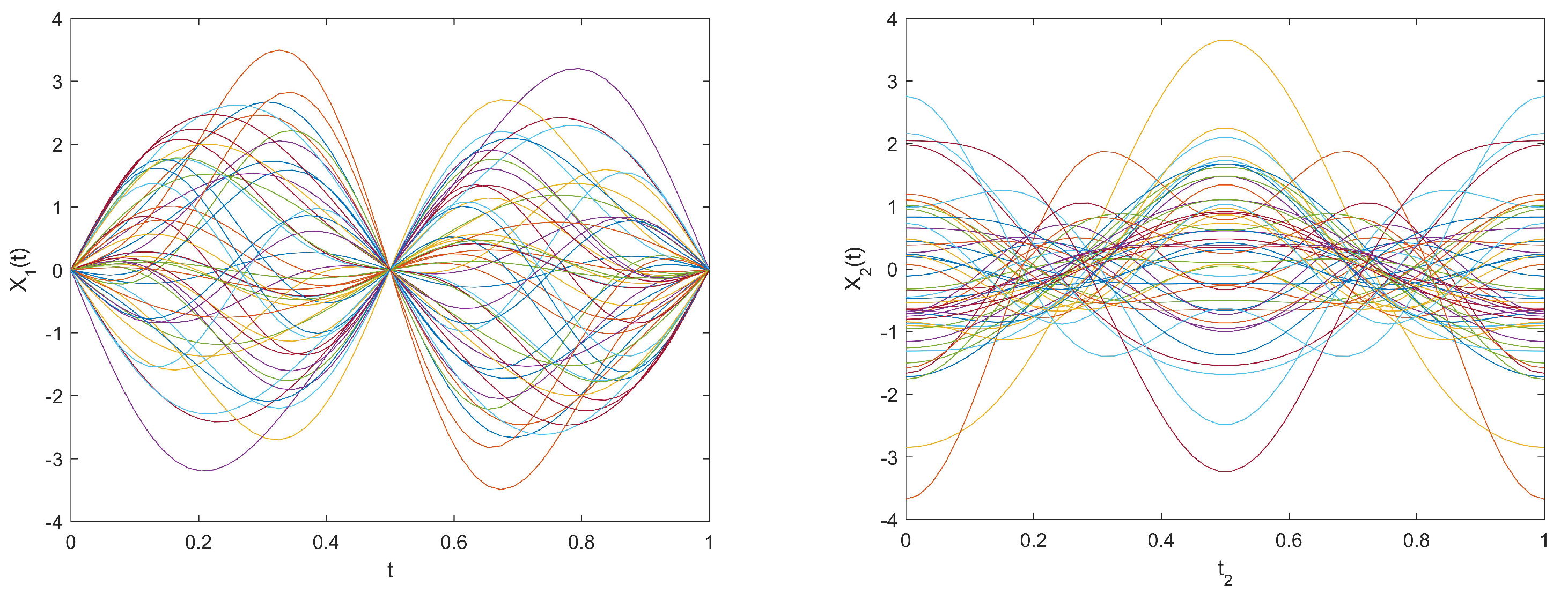

We consider a binary response, two functional predictors and three scalar predictors. The functional predictors $X_1(t)$ and $X_2(t)$ are observed at 50 equally spaced time points on the interval $T$.

The sample sizes are

. Let the score coefficients

for each functional predictor satisfy the following assumptions:

where

.

where

.

We define the orthonormal basis functions

and

,

, which satisfy

Then,

can be represented through Karhunen-Loeve expansion as follows:

Figure 1 shows the 50 trajectories of the two functional predictors

and

.

The scalar predictor

satisfies the following assumption

We assume that the regression coefficient functions of the functional predictors satisfy the following assumption

where

and

. Moreover, we assume that the regression coefficients

of the scalar predictors satisfy

,

,

.

We select the link function as

We generate the binary response $Y$ as a pseudo-random sequence.

We obtain a sample

where

n is the sample size. The numbers of functional principal components that explain 85% of the cumulative variation are

,

, respectively. We run 100 simulations.

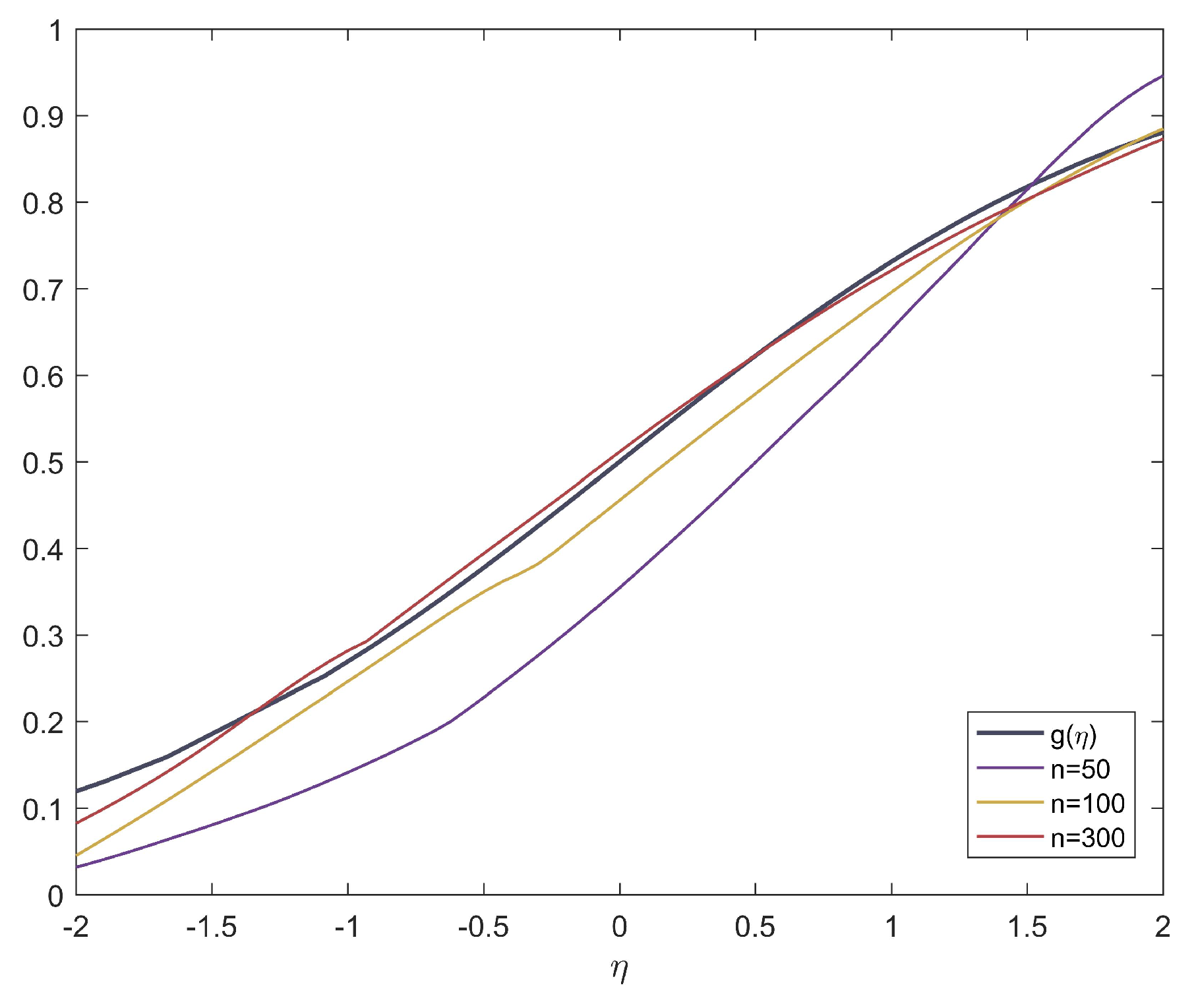

Figure 2 shows the asymptotic behavior of the estimated link function under different sample sizes. The black lines in Figure 2 show the relationship between

and

, where

The additional colored lines in Figure 2 represent the estimated link function

for different sample sizes. These lines are obtained through iterative processes, starting with an initial value of

g set to

. The iterative process continues until one of the following conditions is met: 100 iterations have been performed, or the error in the regression coefficients is less than 0.01. The purpose of these lines is to illustrate the relationship between

and

, where

Since in this case both

and

are in

, we denote the argument of

g and

by

and the x-axis in Figure 2 is denoted by

and is shown in the interval

.

Table 1 presents the estimates of

evaluated through root mean integrated square error (RMISE) under different sample sizes. The RMISE is defined as follows:

where

is the number of simulations. In summary, Figure 2 and Table 1 demonstrate that as the sample size increases, the estimated link function

becomes closer and closer to the true link function

g.
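The RMISE can be approximated on a grid of points in $U$. The sketch below assumes the common definition $\mathrm{RMISE} = \{N^{-1}\sum_{k=1}^{N}\int_U(\hat g_k(u) - g(u))^2\,du\}^{1/2}$, where $N$ is the number of simulation runs and $\hat g_k$ is the estimate from run $k$, and computes each integral with the trapezoidal rule; the function name `rmise` is hypothetical, and the same function applies to the coefficient functions in Table 2.

```python
import numpy as np


def rmise(estimates, truth, u_grid):
    """Root mean integrated squared error over simulation runs.

    estimates: array (N, G), one estimated curve per run on u_grid.
    truth: array (G,), the true curve on the same grid.
    """
    sq_err = (np.asarray(estimates, dtype=float) - np.asarray(truth, dtype=float)) ** 2
    du = np.diff(u_grid)
    # trapezoidal rule for the integral over U, one value per run
    ise = np.sum(0.5 * (sq_err[:, :-1] + sq_err[:, 1:]) * du, axis=1)
    return float(np.sqrt(ise.mean()))
```

For example, three runs that each overestimate the true curve by a constant 0.1 on $U = [0, 1]$ give an RMISE of 0.1.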

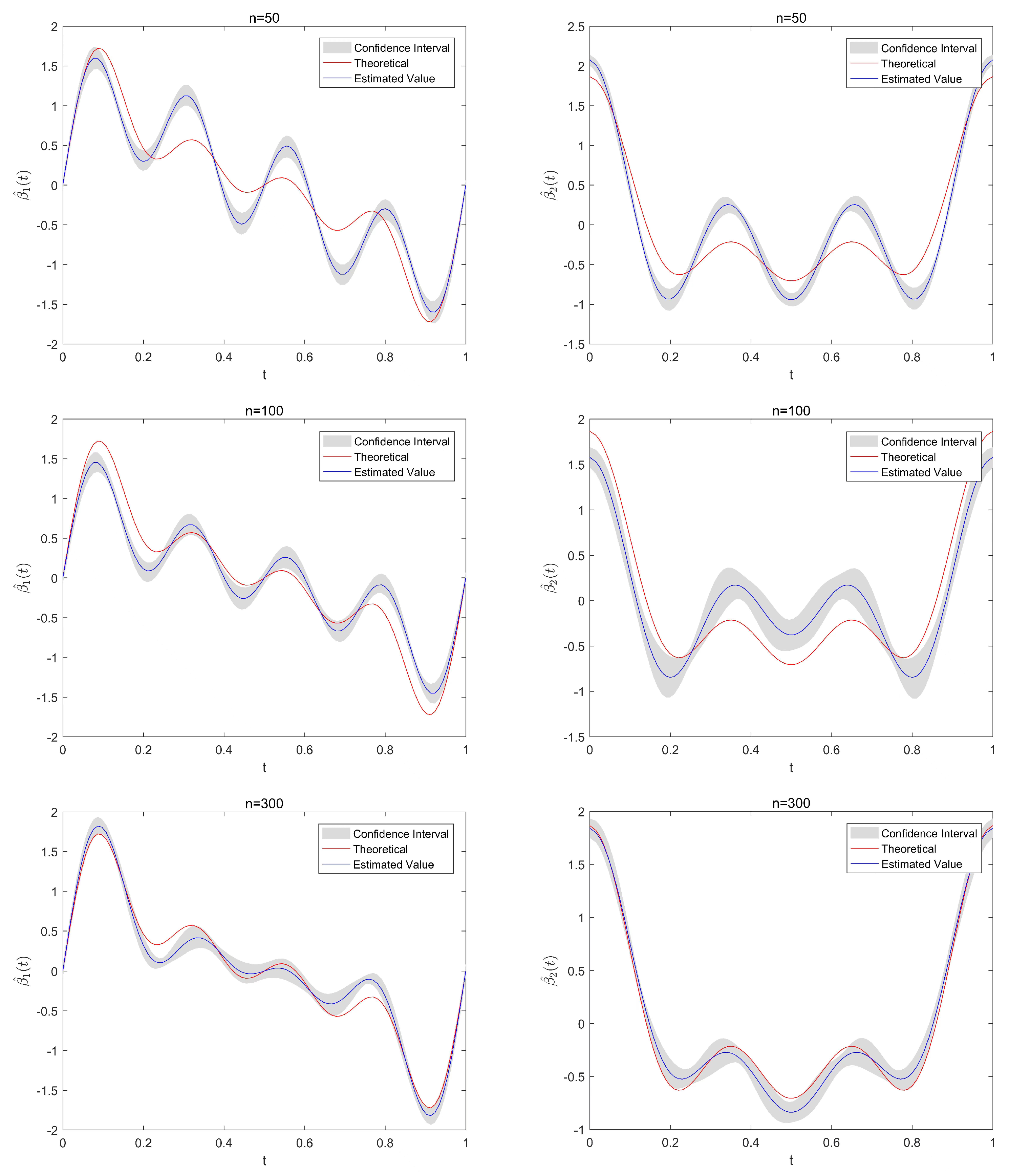

Table 2 shows that both the standard deviation (SD) and the root mean integrated squared error (RMISE) of the estimated regression coefficient functions

and

decrease as the sample size

n increases.

Figure 3 displays the estimated functional regression coefficients

and

, as well as their 95% confidence intervals under different sample sizes. The red curve in the figure represents the theoretical values of

and

, while the blue curve represents the estimated values

and

. The gray shaded area represents the 95% confidence interval of the estimates. It can be seen that as the sample size increases, the estimated values become closer to the true values.

Table 3 presents the estimated scalar regression coefficient

and corresponding standard deviation under different sample sizes. It can be seen that as the sample size

n increases,

becomes closer to the true values

. Moreover, as the sample size

n increases, the standard deviation becomes smaller, indicating that the estimates become more precise.

Table 4 presents the M1 and M2 values for

Y and

for different sample sizes.

, where MAE stands for Mean Absolute Error and MAE=

.

, where MSE stands for Mean Squared Error and MSE =

. By observing the results, we find that as the sample size increases, the values of M1 and M2 become smaller, indicating that the predictive performance of the model improves.

5. Application

As is well known, research on average life expectancy is crucial for social development, health policies, and population management. Studies on average life expectancy can help governments, health departments, and social institutions develop relevant policies and plans to improve people’s quality of life and health conditions. By understanding people’s life expectancy, the efficiency of healthcare systems, the effectiveness of social welfare and public health policies can be evaluated, providing a basis for resource allocation and planning. Additionally, research on average life expectancy can also help people understand population structure and trends, providing references for social-economic development, pension systems, and labor market planning. Therefore, in the application of our proposed model, we investigate factors that influence average life expectancy, including air quality index (AQI), temperature, GDP, and number of beds in hospitals.

In 2012, Huang et al. [21] explored the relationship between temperature and years of life lost (YLL). They found that both high and low temperatures contribute to an increase in YLL, with high temperatures having a greater impact. In 2020, Yang et al. [22] applied a generalized additive model to assess the associations between daily PM2.5 exposure and YLL from respiratory diseases in 96 Chinese cities during 2013–2016. They further estimated the avoidable YLL, the potential gains in life expectancy and the attributable fraction, assuming that daily PM2.5 concentrations decreased to the air quality standards of China and of the World Health Organization. In 2021, Deryugina and Molitor [23] explored the factors influencing life expectancy across the United States. They found that individuals living in areas with severe air pollution, poor water quality and inadequate healthcare facilities generally had shorter life expectancies and poorer health.

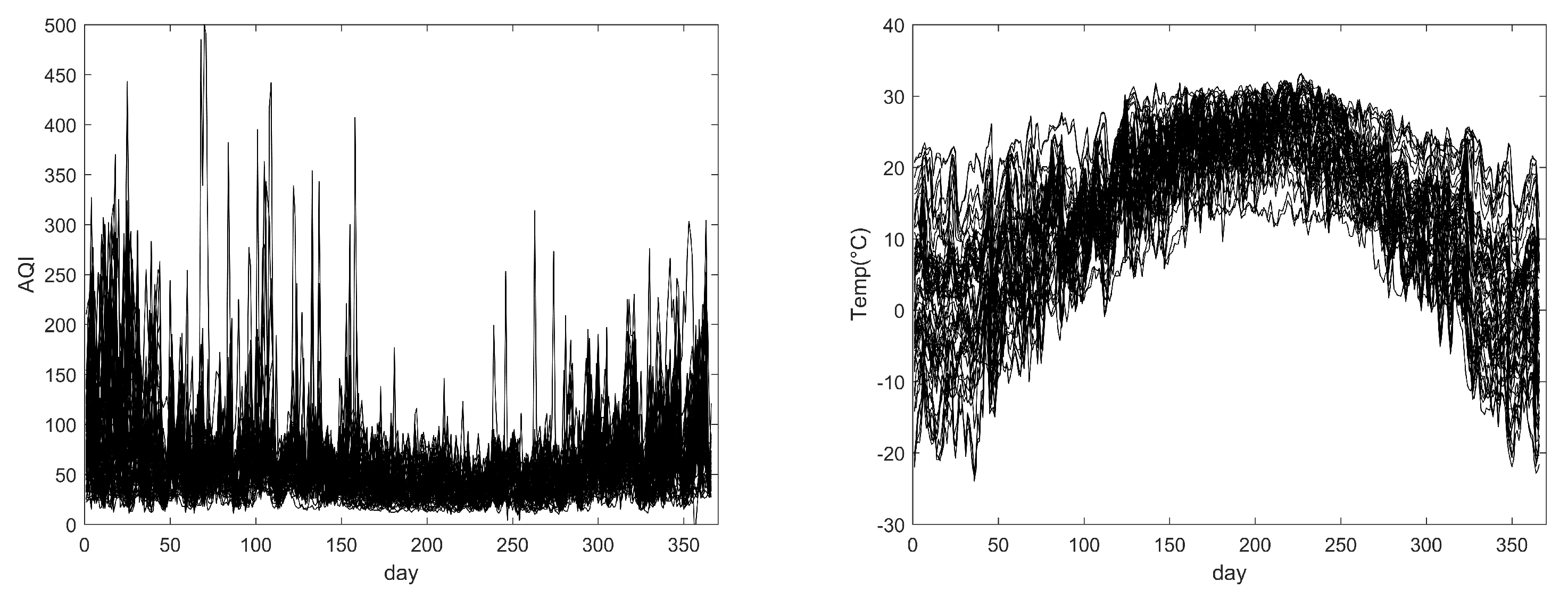

5.1. Data description

We collected average daily temperature (Temp) data for 58 cities in China in 2020 from the National Meteorological Science Data Sharing Service Platform, and average daily Air Quality Index (AQI) data from the National Environmental Monitoring Station. We also collected GDP, the number of hospital beds and life expectancy for each city from local statistical bulletins and government documents. There are two functional predictors, daily AQI and daily temperature from January 1 to December 31, 2020 (366 days), for the 58 cities, and two scalar predictors, GDP and the number of hospital beds for the 58 cities in 2020. The response variable is life expectancy as reported in each city’s government work documents.

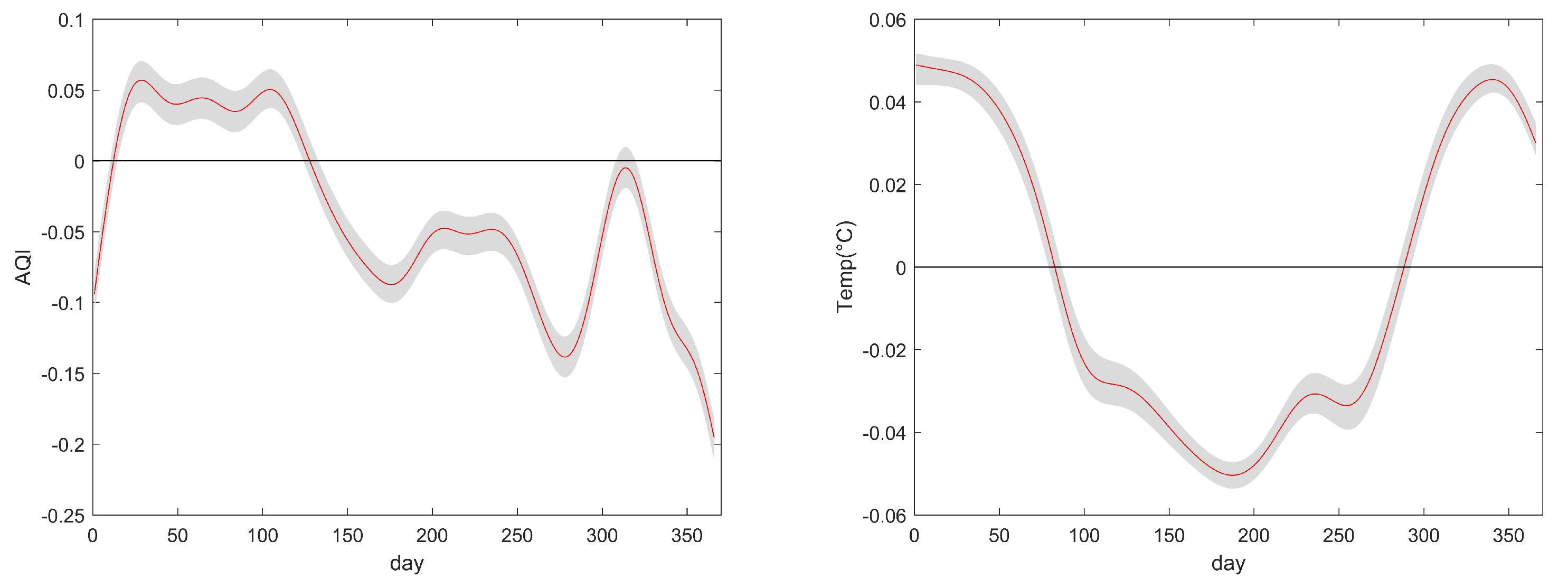

Figure 4 shows the daily AQI and Temp for 58 cities in 2020.

5.2. Data analysis

According to a report released by the National Health Commission, the average life expectancy of Chinese residents in 2020 was 77.9 years. We therefore dichotomize the response: a city whose life expectancy is greater than 77.9 years is coded as 1, and otherwise as 0.

To model the relationship between the response and the predictors with the proposed model, we first center the predictors. Second, we conduct functional principal component analysis (FPCA) on the functional predictors and truncate the AQI and temperature expansions separately, in each case retaining the number of functional principal components that explains 75% of the variation.

5.3. Results analysis

By inputting the data into the generalized partially functional linear model, we obtain the regression coefficient function

for the functional predictors and the regression coefficients

for the scalar predictors. The results for the scalar predictors are shown in Table 5, and those for the functional predictors in Figure 5.

Table 5 presents the estimated values of the regression coefficient

for scalar predictor variables. We can see that both GDP and number of beds in hospitals have a positive relationship with life expectancy, and are significant at the 5% level. This means that when a region has a higher GDP and more hospital beds, the life expectancy in that region is longer. In other words, the better the economic development and medical resources of a region, the longer the life expectancy.

In Figure 5, we see the estimated regression coefficient functions. For AQI, there is an overall negative relationship between AQI and life expectancy: the higher the AQI, the more serious the air pollution and the lower the corresponding life expectancy. However, there is a noticeable positive relationship from February to April, which may be influenced by other external factors. For temperature, the effect on life expectancy shows seasonal changes: in spring, summer and autumn (March to October) the effect is negative, while in winter (November to February) it is positive, and the estimated effect on life expectancy is lowest at the beginning of July. This is consistent with the conclusion of Huang et al. [21].

To confirm the necessity of an unknown link function, we fit the model with the logit link (i.e., $g(\eta) = e^{\eta}/(1 + e^{\eta})$) and compare it with the proposed unknown link function model. Let $\hat Y_i$ denote the predicted value of the response variable. To evaluate the prediction performance of the logit link model and the unknown link model, we use $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}|Y_i - \hat Y_i|$, $\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}(Y_i - \hat Y_i)^2$ and $R^2 = 1 - \sum_{i=1}^{n}(Y_i - \hat Y_i)^2 / \sum_{i=1}^{n}(Y_i - \bar Y)^2$. Smaller MAE and MSE values indicate smaller prediction errors and better performance, and an $R^2$ closer to 1 indicates a stronger ability to explain the response variable. Table 6 shows that the proposed model has smaller MAE and MSE values and a larger $R^2$ value than the logit link model, indicating greater practical value.
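The three comparison criteria are simple to compute from the observed responses and the predictions of either model. The sketch below assumes the usual definitions of MAE, MSE and $R^2 = 1 - \sum_i(Y_i - \hat Y_i)^2/\sum_i(Y_i - \bar Y)^2$; the function name `prediction_metrics` is hypothetical.

```python
import numpy as np


def prediction_metrics(y, y_hat):
    """Return (MAE, MSE, R^2) for observed responses y and predictions y_hat."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    resid = y - y_hat
    mae = np.mean(np.abs(resid))                            # mean absolute error
    mse = np.mean(resid**2)                                 # mean squared error
    r2 = 1.0 - np.sum(resid**2) / np.sum((y - y.mean()) ** 2)  # share of variation explained
    return float(mae), float(mse), float(r2)
```

For instance, `prediction_metrics([1, 0, 1, 1], [0.9, 0.2, 0.6, 0.8])` returns approximately (0.225, 0.0625, 0.667); running it on the predictions of both fitted models gives the kind of comparison summarized in Table 6.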

6. Discussion

This article proposes a generalized partially functional linear model with an unknown link function for a scalar response and predictors that include both functional and scalar components. The method uses functional principal component analysis to reduce the dimensionality of the functional data, estimates the regression coefficients by pseudo-likelihood estimation and the link function by local linear regression, iterates these two steps until convergence, and establishes the asymptotic normality of the estimators. Simulation studies validate the accuracy of the proposed model.

The article also applies the generalized partially functional linear model to average life expectancy. Using daily AQI, temperature, GDP and the number of hospital beds for 58 cities in China in 2020, the study explores the impact of environmental, economic and medical factors on life expectancy. GDP and the number of hospital beds are positively correlated with life expectancy, while AQI has an overall negative correlation. Temperature is negatively correlated with average life expectancy in spring, summer and autumn, and positively correlated in winter. Overall, the study concludes that average life expectancy is higher in areas with better environmental, economic and medical development, which is consistent with our expectations.

However, this model still has limitations, some of which have already appeared in the application. In future research, we will consider interactions between functional predictors to make the model’s results more accurate. For example, the relationship between air quality and temperature needs further consideration. In spring, meteorological conditions are variable, and unfavorable conditions such as temperature inversions and low wind speeds may occur, leading to higher concentrations of air pollutants and poorer air quality. At the same time, during the transition between cold and warm seasons people tend to pay more attention to their health, which may in turn mitigate the impact of AQI.

Author Contributions

W.X.: methodology, software, validation, writing—review, supervision, funding acquisition. S.L.: methodology, software, data curation, writing—original draft. H.L.: writing—review, supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the Yujie Talent Project of North China University of Technology (Grant No. 107051360023XN075-04).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data supporting the results of this study can be obtained from the National Meteorological Science Data Sharing Service Platform, the National Environmental Monitoring Station and local statistical bulletins.

Acknowledgments

The authors would like to thank the referees and the editor for their useful suggestions, which helped us improve the paper.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this work.

References

- Ramsay, J. O. When the data are functions. Psychometrika 1982, 47, 379–396.

- Ramsay, J. O.; Silverman, B. W. Functional Data Analysis, 2nd ed.; Springer: New York, NY, USA, 2005.

- Horváth, L.; Kokoszka, P. Inference for Functional Data with Applications; Springer: New York, NY, USA, 2012.

- Shin, H. Partial functional linear regression. Journal of Statistical Planning and Inference 2009, 139, 3405–3418.

- Shin, H.; Lee, M. H. On prediction rate in partial functional linear regression. Journal of Multivariate Analysis 2012, 103, 93–106.

- James, G. M. Generalized linear models with functional predictors. Journal of the Royal Statistical Society (Series B) 2002, 64, 411–432.

- Müller, H. G.; Stadtmüller, U. Generalized functional linear models. The Annals of Statistics 2005, 33, 774–805.

- Shang, Z. F.; Cheng, G. Nonparametric inference in generalized functional linear models. The Annals of Statistics 2015, 43, 1742–1773.

- Wong, R. K. W.; Li, Y.; Zhu, Z. Y. Partially linear functional additive models for multivariate functional data. Journal of the American Statistical Association 2019, 114, 406–418.

- Xiao, W. W.; Wang, Y. X.; Liu, H. Y. Generalized partially functional linear model. Scientific Reports 2021, 11, 23428.

- Scallan, A.; Gilchrist, R.; Green, M. Fitting parametric link functions in generalized linear models. Computational Statistics and Data Analysis 1984, 2, 37–49.

- Weisberg, S.; Welsh, A. H. Adapting for the missing link. The Annals of Statistics 1994, 22, 1674–1700.

- Chiou, J. M.; Müller, H. G. Quasi-likelihood regression with unknown link and variance functions. Journal of the American Statistical Association 1998, 93, 1376–1387.

- Chiou, J. M.; Müller, H. G. Estimated estimating equations: semiparametric inference for clustered and longitudinal data. Journal of the Royal Statistical Society (Series B) 2005, 67, 531–553.

- Bai, Y.; Fung, W. K.; Zhu, Z. Y. Penalized quadratic inference functions for single-index models with longitudinal data. Journal of Multivariate Analysis 2009, 100, 152–161.

- Pang, Z.; Xue, L. Estimation for the single-index models with random effects. Computational Statistics and Data Analysis 2012, 56, 1837–1853.

- Yuan, M.; Diao, G. Sieve maximum likelihood estimation in generalized linear models with an unknown link function. Wiley Interdisciplinary Reviews: Computational Statistics 2017, 10, e1425.

- Mack, Y. P.; Silverman, B. W. Weak and strong uniform consistency of kernel regression estimates. Probability Theory and Related Fields 1982, 63, 405–415.

- Masry, E.; Tjøstheim, D. Estimation and identification of nonlinear ARCH time series: strong convergence and asymptotic normality. Econometric Theory 1995, 11, 258–289.

- Chiou, J. M.; Müller, H. G. Nonparametric quasi-likelihood. The Annals of Statistics 1999, 27, 36–64.

- Huang, C.; Barnett, A. G.; Wang, X.; Tong, S. The impact of temperature on years of life lost in Brisbane, Australia. Nature Climate Change 2012, 2, 265–270.

- Yang, Y.; Qi, J. L.; Ruan, Z. L.; Yin, P.; Zhang, S. Y.; Liu, J. M.; Liu, Y. N.; Li, R.; Wang, L. J.; Lin, H. L. Changes in life expectancy of respiratory diseases from attaining daily PM2.5 standard in China: a nationwide observational study. The Innovation 2020, 1, 100064.

- Deryugina, T.; Molitor, D. The causal effects of place on health and longevity. Journal of Economic Perspectives 2021, 35, 147–170.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).