Submitted: 21 October 2023

Posted: 23 October 2023

Abstract

Keywords:

1. Introduction

2. Methods

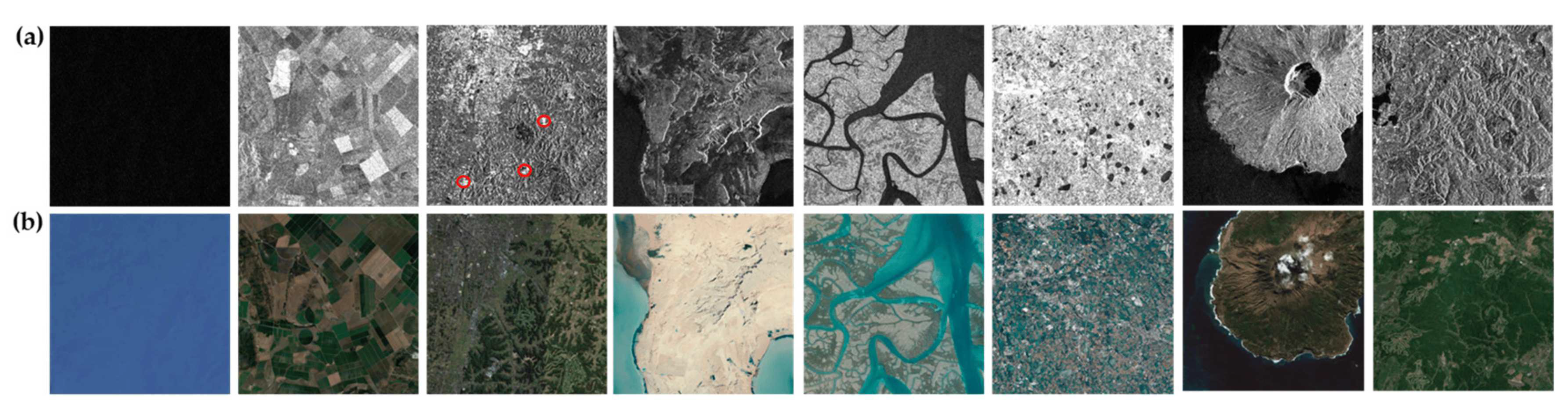

2.1. Analysis of SAR Image Features

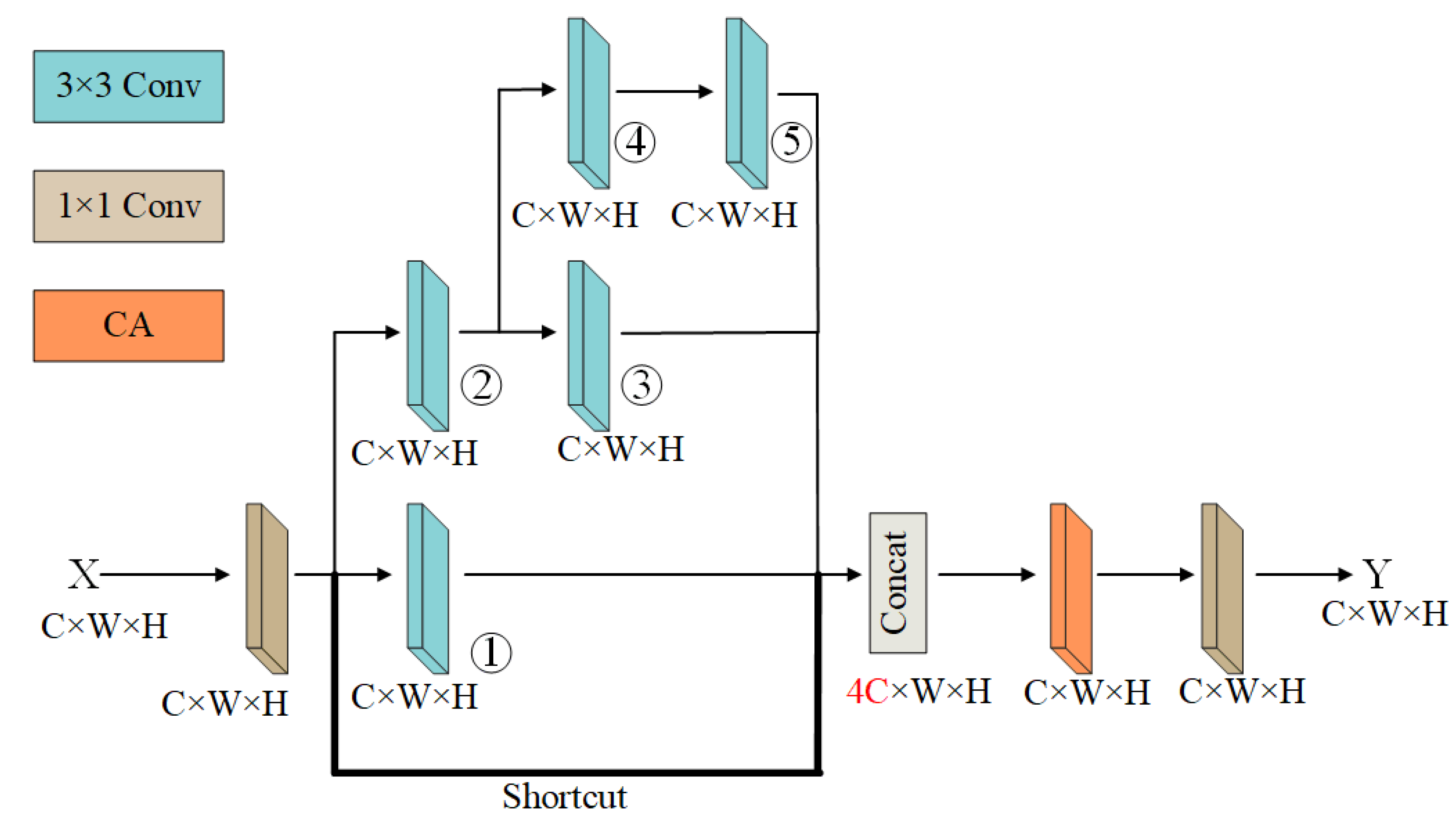

2.2. Improved Convolution Block: AMMRF

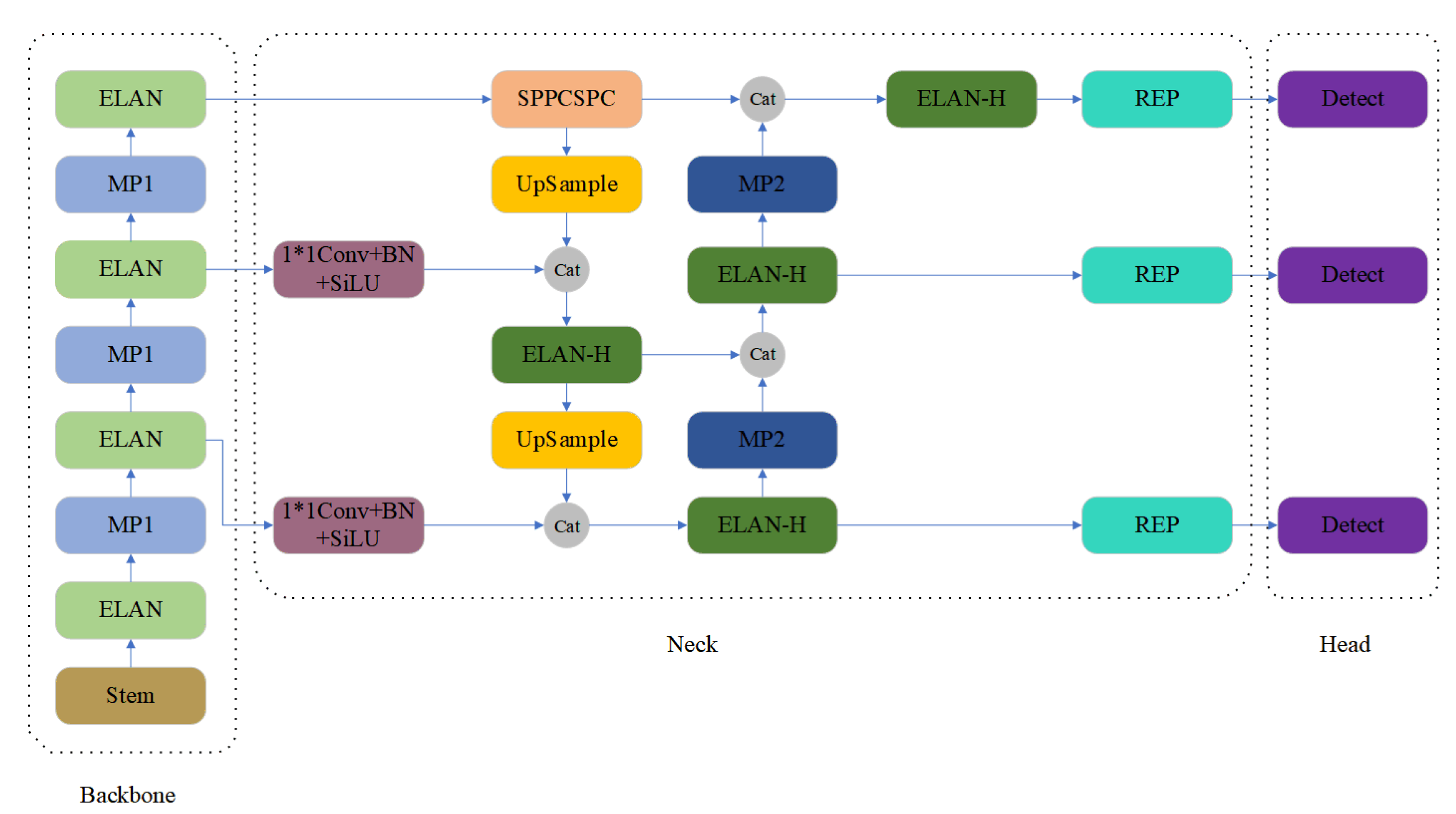

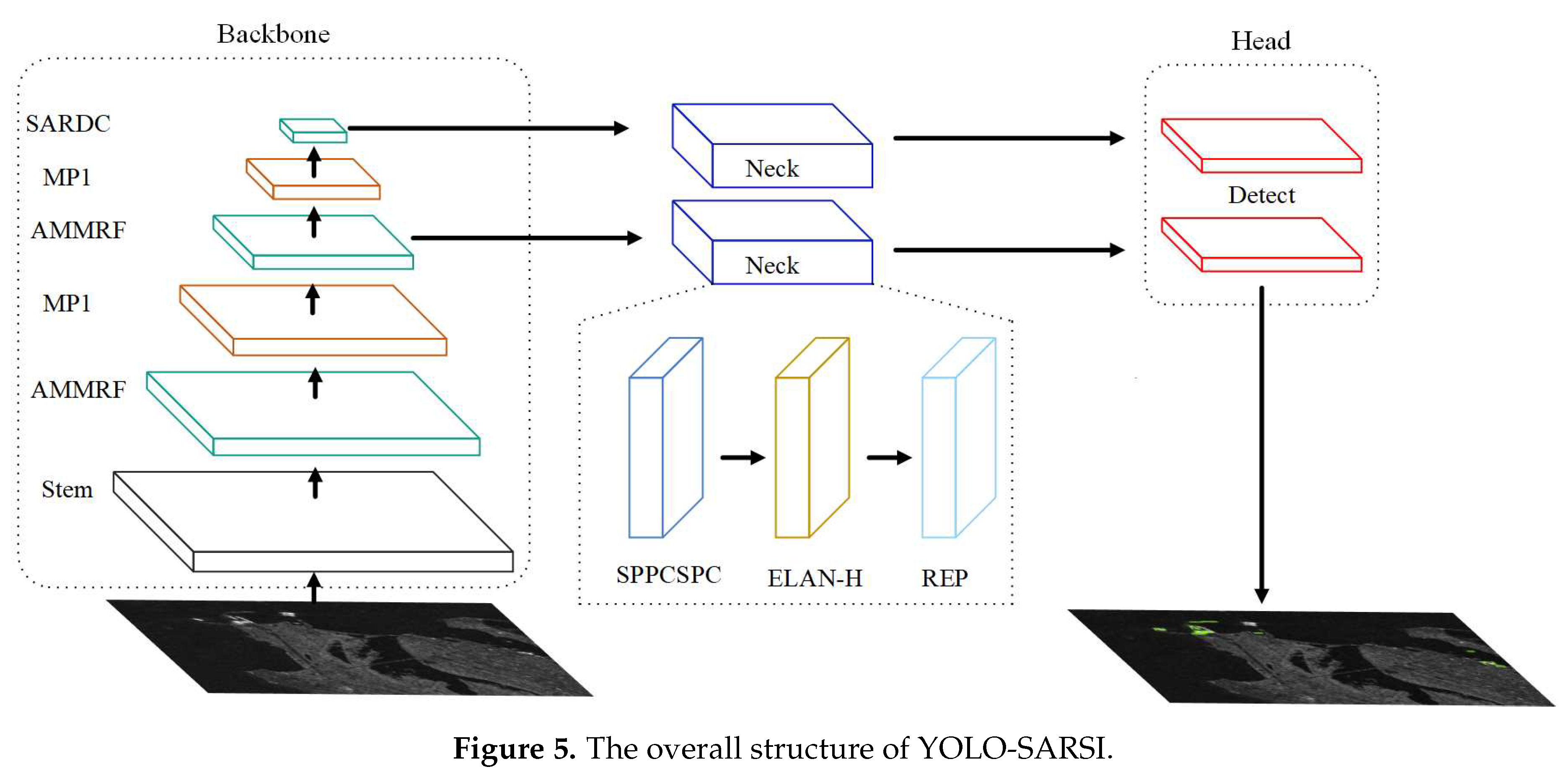

2.3. Network Structure

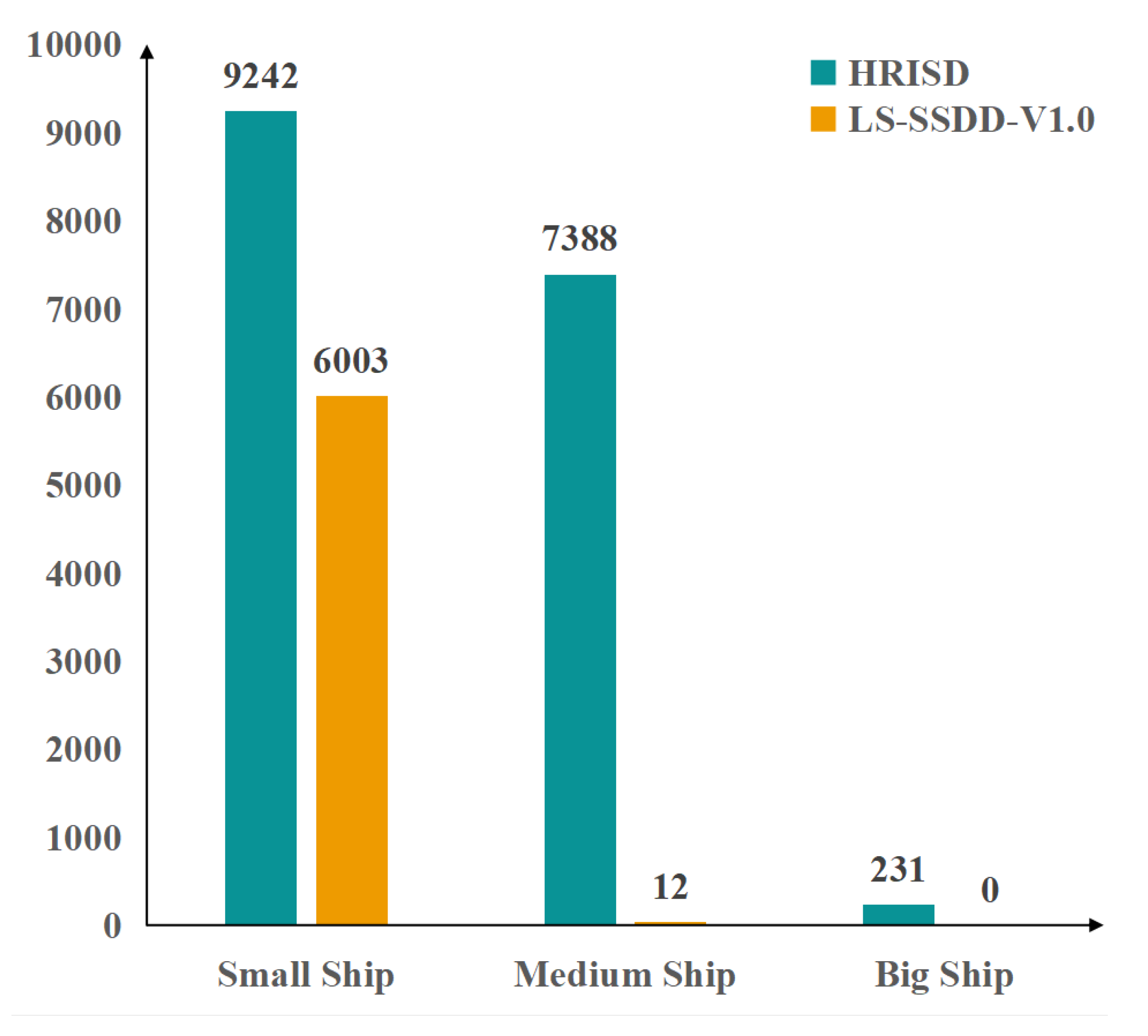

3. Experimental Results

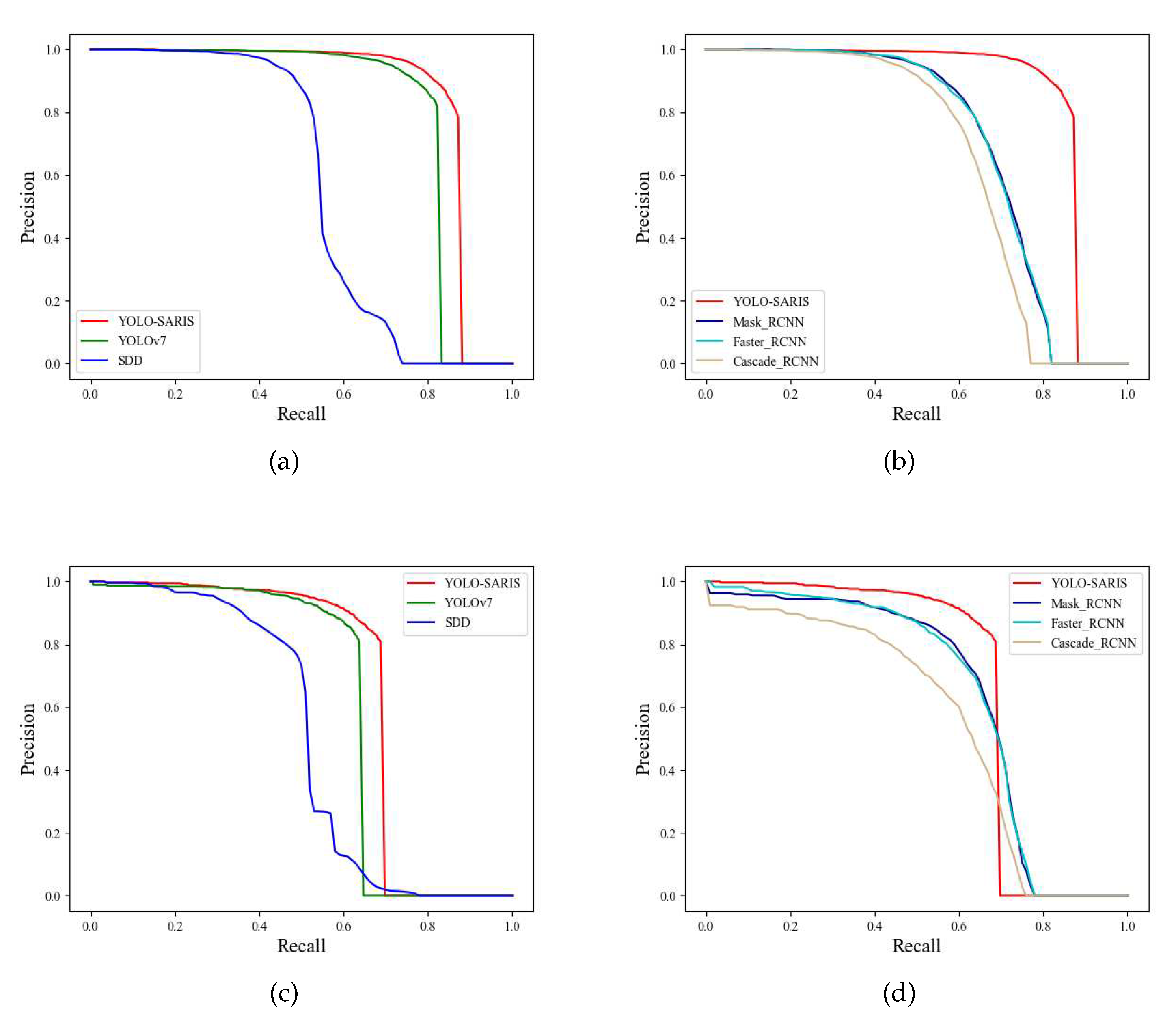

3.1. YOLO-SARSI Recognition Accuracy Evaluation

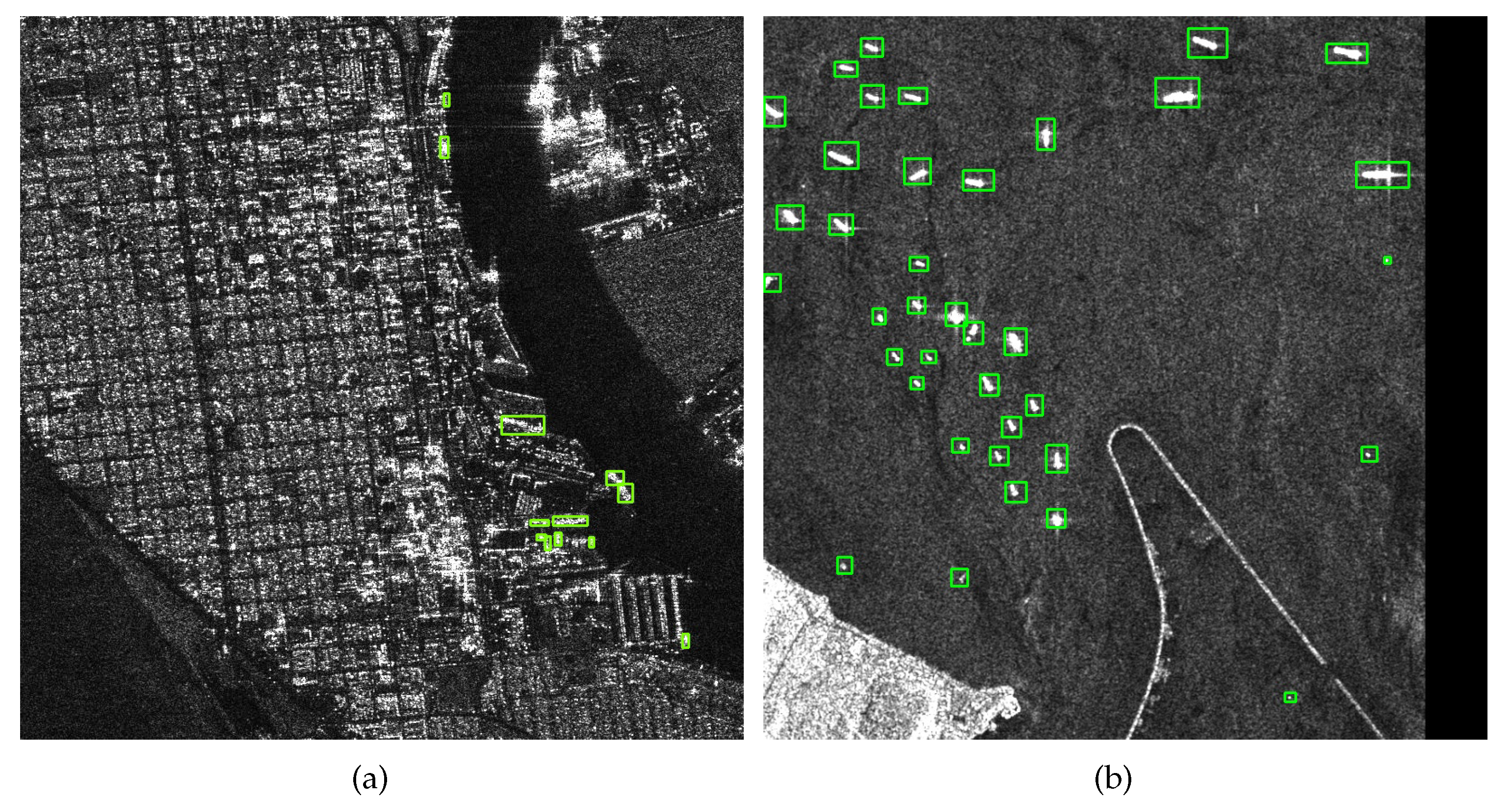

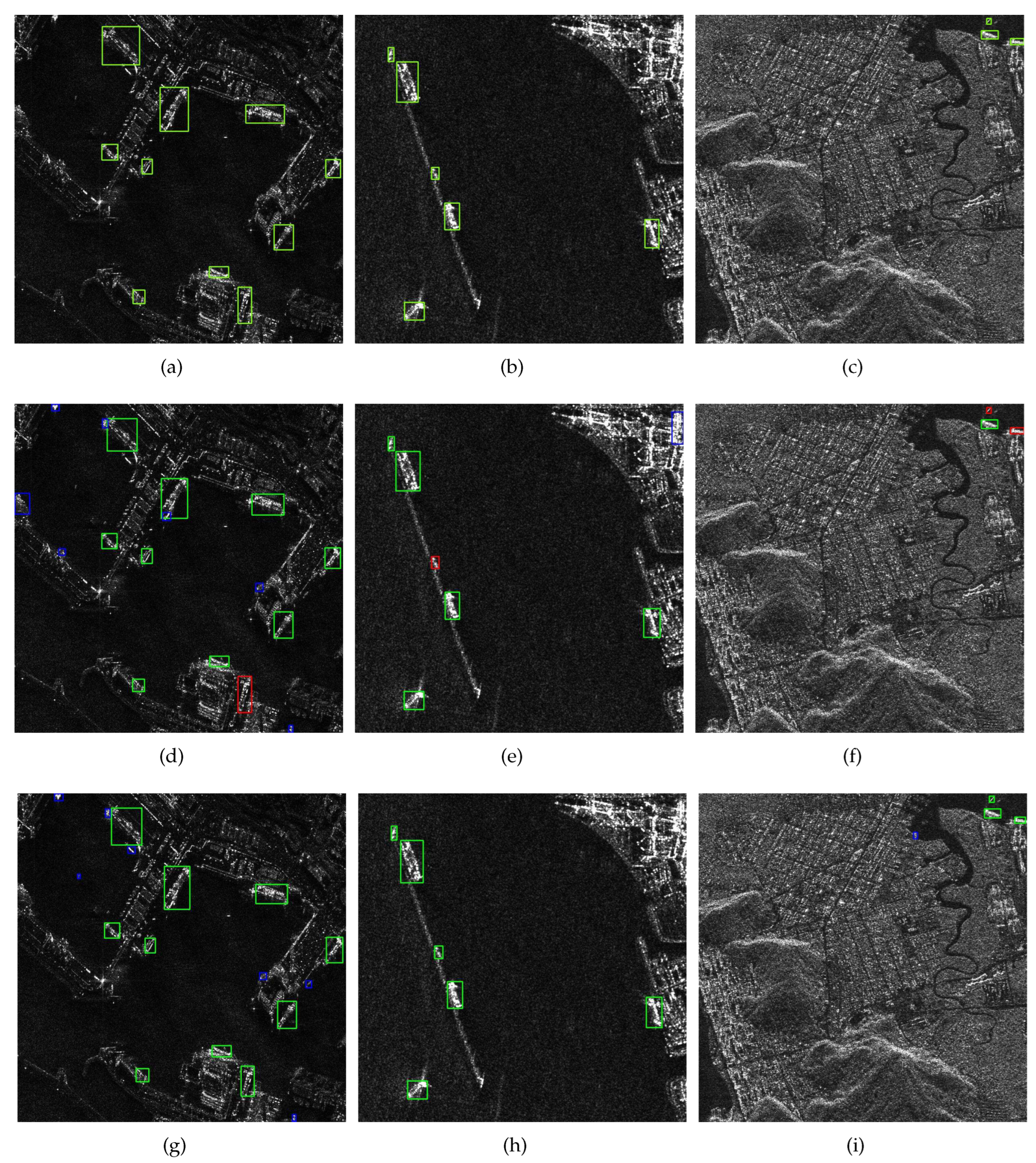

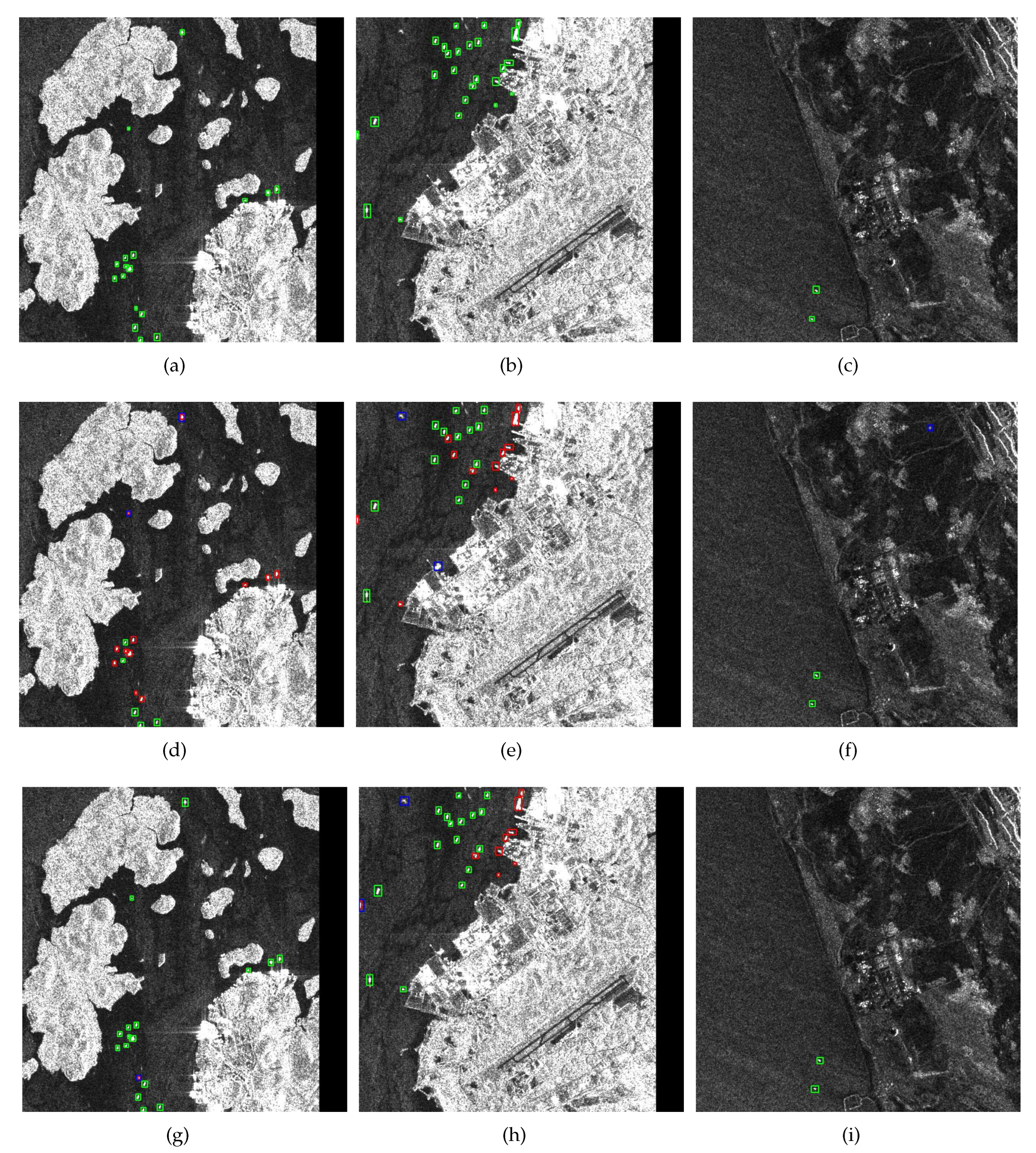

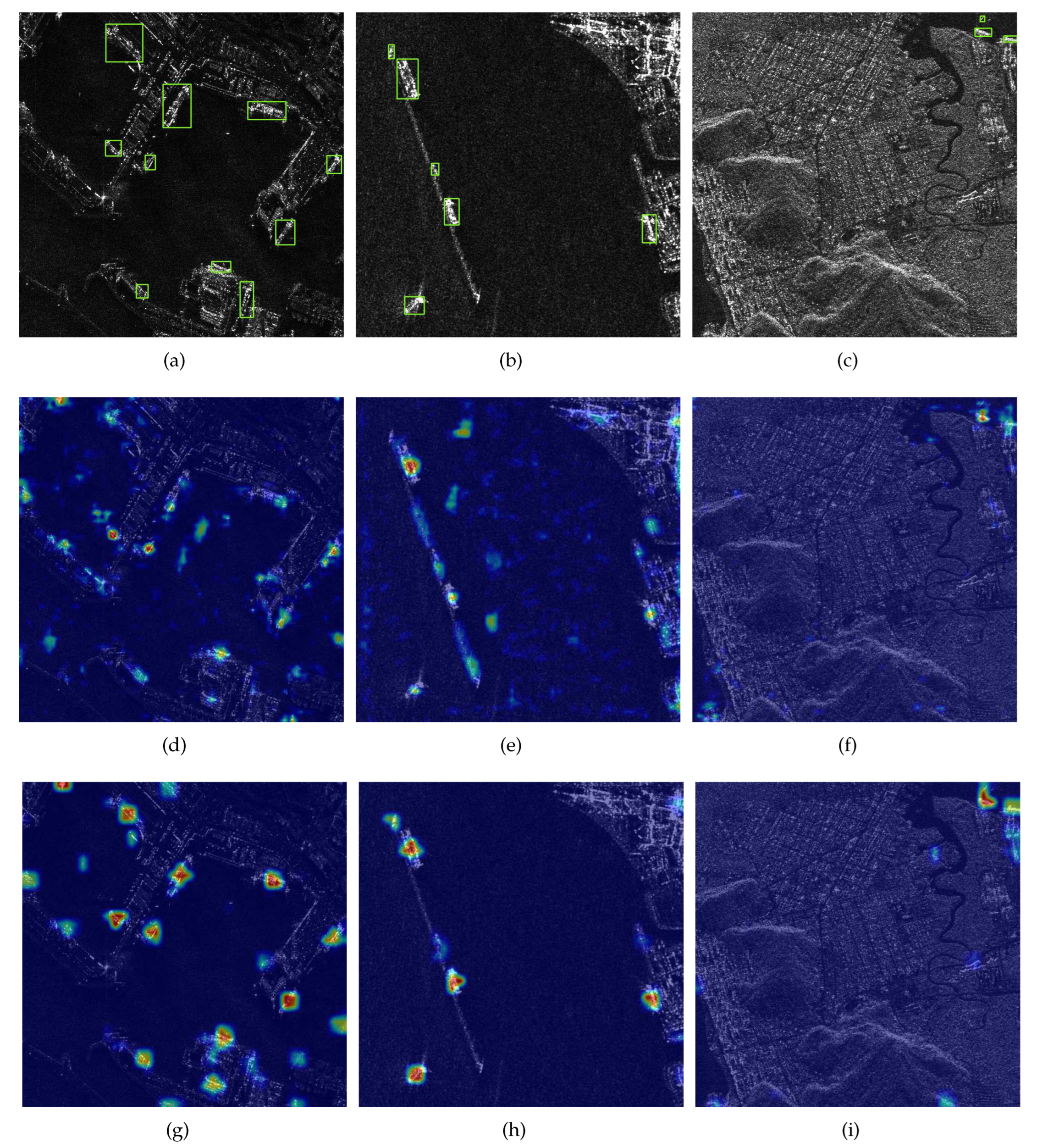

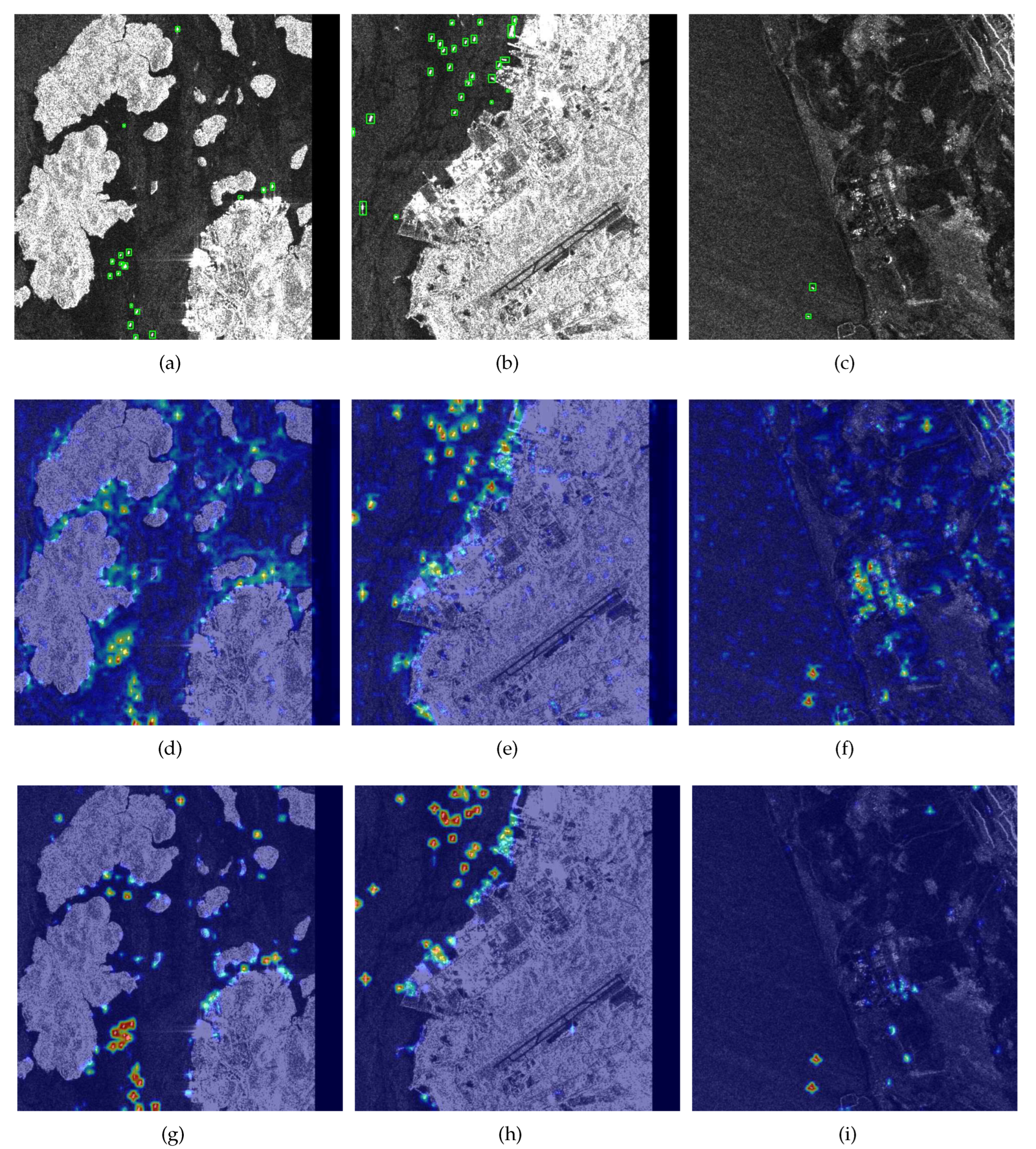

3.2. Instance Testing

4. Conclusions

Author Contributions

Funding

References

- Dai, H.; Du, L.; Wang, Y.; Wang, Z. A Modified CFAR Algorithm Based on Object Proposals for Ship Target Detection in SAR Images. IEEE Geoscience and Remote Sensing Letters 2016, 13, 1925–1929.

- Tang, T.; Xiang, D.; Xie, H. Multiscale salient region detection and salient map generation for synthetic aperture radar image. Journal of Applied Remote Sensing 2014, 8.

- Xiang, D.; Tang, T.; Ni, W.; Zhang, H.; Lei, W. Saliency Map Generation for SAR Images with Bayes Theory and Heterogeneous Clutter Model. Remote Sensing 2017, 9.

- Hwang, S.I.; Ouchi, K. On a Novel Approach Using MLCC and CFAR for the Improvement of Ship Detection by Synthetic Aperture Radar. IEEE Geoscience and Remote Sensing Letters 2010, 7, 391–395.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. 2017, 60.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. CoRR 2015, abs/1512.03385, http://xxx.lanl.gov/abs/1512.03385.

- Kang, M.; Ji, K.; Leng, X.; Lin, Z. Contextual Region-Based Convolutional Neural Network with Multilayer Fusion for SAR Ship Detection. Remote Sensing 2017, 9.

- Jiao, J.; Zhang, Y.; Sun, H.; Yang, X.; Gao, X.; Hong, W.; Fu, K.; Sun, X. A Densely Connected End-to-End Neural Network for Multiscale and Multiscene SAR Ship Detection. IEEE Access 2018, 6, 20881–20892.

- Cui, Z.; Li, Q.; Cao, Z.; Liu, N. Dense Attention Pyramid Networks for Multi-Scale Ship Detection in SAR Images. IEEE Transactions on Geoscience and Remote Sensing 2019, 57, 8983–8997.

- Zhang, T.; Zhang, X. High-Speed Ship Detection in SAR Images Based on a Grid Convolutional Neural Network. Remote Sensing 2019, 11.

- Qu, H.; Shen, L.; Guo, W.; Wang, J. Ships Detection in SAR Images Based on Anchor-Free Model With Mask Guidance Features. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2022, 15, 666–675.

- Sun, B.; Wang, X.; Li, H.; Dong, F.; Wang, Y. Small-Target Ship Detection in SAR Images Based on Densely Connected Deep Neural Network with Attention in Complex Scenes. Applied Intelligence 2022, 53, 4162–4179.

- Gao, S.; Liu, J.M.; Miao, Y.H.; He, Z.J. A High-Effective Implementation of Ship Detector for SAR Images. IEEE Geoscience and Remote Sensing Letters 2022, 19, 1–5.

- Wang, S.; Gao, S.; Zhou, L.; Liu, R.; Zhang, H.; Liu, J.; Jia, Y.; Qian, J. YOLO-SD: Small Ship Detection in SAR Images by Multi-Scale Convolution and Feature Transformer Module. Remote Sensing 2022, 14.

- Fu, J.; Sun, X.; Wang, Z.; Fu, K. An Anchor-Free Method Based on Feature Balancing and Refinement Network for Multiscale Ship Detection in SAR Images. IEEE Transactions on Geoscience and Remote Sensing 2021, 59, 1331–1344.

- Guo, H.; Yang, X.; Wang, N.; Gao, X. A CenterNet++ model for ship detection in SAR images. Pattern Recognition 2021, 112, 107787.

- Zhao, J.; Guo, W.; Zhang, Z.; Yu, W. A coupled convolutional neural network for small and densely clustered ship detection in SAR images. Science China Information Sciences 2018, 62, 1–16.

- Qiu, J.; Chen, C.; Liu, S.; Zeng, B. SlimConv: Reducing Channel Redundancy in Convolutional Neural Networks by Weights Flipping. CoRR 2020, abs/2003.07469, http://xxx.lanl.gov/abs/2003.07469.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors, 2022, http://xxx.lanl.gov/abs/2207.02696.

- Girshick, R. Fast R-CNN. 2015 IEEE International Conference on Computer Vision (ICCV), 2015, pp. 1440–1448.

- Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context, 2015, http://xxx.lanl.gov/abs/1405.0312.

- Everingham, M.; Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vision 2010, 88, 303–338.

- Zhang, T.; Zhang, X.; Ke, X.; Zhan, X.; Shi, J.; Wei, S.; Pan, D.; Li, J.; Su, H.; Zhou, Y.; Kumar, D. LS-SSDD-v1.0: A Deep Learning Dataset Dedicated to Small Ship Detection from Large-Scale Sentinel-1 SAR Images. Remote Sensing 2020, 12.

- Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A High-Resolution SAR Images Dataset for Ship Detection and Instance Segmentation. IEEE Access 2020, 8, 120234–120254.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design, 2021, http://xxx.lanl.gov/abs/2103.02907.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions, 2014, http://xxx.lanl.gov/abs/1409.4842.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017, 2261–2269.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision – ECCV 2016; Springer International Publishing, 2016; pp. 21–37.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 6154–6162.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks, 2016, http://xxx.lanl.gov/abs/1506.01497.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN, 2018, http://xxx.lanl.gov/abs/1703.06870.

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; Zhang, Z.; Cheng, D.; Zhu, C.; Cheng, T.; Zhao, Q.; Li, B.; Lu, X.; Zhu, R.; Wu, Y.; Dai, J.; Wang, J.; Shi, J.; Ouyang, W.; Loy, C.C.; Lin, D. MMDetection: Open MMLab Detection Toolbox and Benchmark, 2019, http://xxx.lanl.gov/abs/1906.07155.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. International Journal of Computer Vision 2019, 128, 336–359.

| Dataset | Model | (%) | (%) | Params (M) |
|---|---|---|---|---|
| HRSID | Cascade R-CNN | 65.1 | 41.2 | 68.93 |
| | Faster R-CNN | 69.8 | 43.6 | 41.12 |
| | Mask R-CNN | 69.9 | 43.9 | 41.12 |
| | SSD 300 | 56.5 | 36.8 | 23.75 |
| | YOLOv7 | 80.9 | 59.6 | 34.79 |
| | YOLO-SARSI | 85.8 | 62.9 | 18.43 |
| LS-SSDD-V1.0 | Cascade R-CNN | 55.4 | 20.1 | 68.93 |
| | Faster R-CNN | 63.4 | 23.9 | 41.12 |
| | Mask R-CNN | 63.3 | 24.1 | 41.12 |
| | SSD 300 | 32.5 | 10.1 | 23.75 |
| | YOLOv7 | 61.6 | 25.0 | 34.79 |
| | YOLO-SARSI | 66.6 | 26.3 | 18.43 |
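The comparison between YOLO-SARSI and its YOLOv7 baseline can be read off the table with a little arithmetic. The following is a minimal sketch, not part of the original work: the names `Row`, `metric_a`, `metric_b`, `find`, and `compare` are hypothetical, and because the two percentage columns carry no metric name in the table, they are referred to only by position.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    dataset: str
    model: str
    metric_a: float   # first "(%)" column (metric name missing in the table)
    metric_b: float   # second "(%)" column (metric name missing in the table)
    params_m: float   # parameter count in millions


# Values copied from the comparison table for the two YOLO-family models.
ROWS = [
    Row("HRSID", "YOLOv7", 80.9, 59.6, 34.79),
    Row("HRSID", "YOLO-SARSI", 85.8, 62.9, 18.43),
    Row("LS-SSDD-V1.0", "YOLOv7", 61.6, 25.0, 34.79),
    Row("LS-SSDD-V1.0", "YOLO-SARSI", 66.6, 26.3, 18.43),
]


def find(dataset: str, model: str) -> Row:
    """Return the table row for one dataset/model pair."""
    for row in ROWS:
        if row.dataset == dataset and row.model == model:
            return row
    raise KeyError((dataset, model))


def compare(dataset: str, model: str = "YOLO-SARSI", baseline: str = "YOLOv7") -> dict:
    """Metric gains in percentage points and parameter reduction in percent."""
    m, b = find(dataset, model), find(dataset, baseline)
    return {
        "metric_a_gain": m.metric_a - b.metric_a,
        "metric_b_gain": m.metric_b - b.metric_b,
        "param_reduction_pct": 100.0 * (1.0 - m.params_m / b.params_m),
    }


if __name__ == "__main__":
    for name in ("HRSID", "LS-SSDD-V1.0"):
        result = compare(name)
        print(name, {key: round(value, 1) for key, value in result.items()})
```

With the table values, YOLO-SARSI gains about 4.9 and 3.3 points over YOLOv7 on HRSID and about 5.0 and 1.3 points on LS-SSDD-V1.0, while using roughly 47% fewer parameters (18.43 M versus 34.79 M).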

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).