Submitted: 13 November 2023

Posted: 14 November 2023


Abstract

Keywords:

1. Introduction

2. Background and Related Work

2.1. Conceptualization and Ontological View

2.2. Intelligence: Classification of environment

- Centralized and Decentralized Environment

The first factor evaluates whether the environment exhibits characteristics of centralization or decentralization, whereas the second factor determines whether the environment is distributed. In an information environment, centralization and decentralization refer to an organization's strategies for governing and disseminating its information resources, its systems, and its decision-making authority. These notions affect how knowledge and data spread throughout an organization. In contrast, "distributed" commonly denotes the spread or allocation of information, data, or computing resources among different locations, systems, or entities [9].

Centralized environments are characterized by the concentration of power, decision-making, and authority in a single individual or geographical location. Every individual entity (i.e., a system component) has only limited autonomy. As [9] states, an entity cannot make decisions independently; it is governed by the authority and influence of the most dominant entity.

A decentralized information environment lacks a central coordinating or governing organization. It consists of many independent entities, which may be situated in the same or in different geographic locations. An autonomous entity is characterized by its capacity to operate independently to accomplish its objectives [10]. In other words, each autonomous entity is itself an information system, and no single entity holds exclusive authority. Each entity in such an environment can perceive and capture the environment's state and develop its own intelligence about it. It can make decisions locally and choose how to use its local intelligence resources to fulfill its own objectives. Because each entity retains this freedom of action, no single node holds complete intelligence.
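The decentralized case can be sketched under simple, hypothetical assumptions: the environment state is a set of (entity, relationship, entity) facts, each autonomous entity holds only the facts it has captured, and a "decision" is a local query over that partial view. The names `AutonomousEntity`, `decide`, and `holds_complete_intelligence`, as well as the example facts, are illustrative only and are not part of the model in this paper.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple

# Hypothetical encoding: a fact is (entity, relationship, entity).
Fact = Tuple[str, str, str]


@dataclass(frozen=True)
class AutonomousEntity:
    name: str
    view: FrozenSet[Fact]  # the part of the environment state this entity captured

    def decide(self, relationship: str) -> List[Fact]:
        # A local decision: only this entity's own captured facts are used;
        # no central authority is consulted.
        return sorted(f for f in self.view if f[1] == relationship)


def holds_complete_intelligence(entity: AutonomousEntity, environment: FrozenSet[Fact]) -> bool:
    # True only if the entity has captured every fact in the environment.
    return entity.view >= environment


ENVIRONMENT = frozenset({
    ("room1", "adjacentTo", "room2"),
    ("room2", "adjacentTo", "room3"),
    ("sensor1", "monitors", "room1"),
})
NODE_A = AutonomousEntity("node-a", frozenset({
    ("room1", "adjacentTo", "room2"),
    ("sensor1", "monitors", "room1"),
}))
NODE_B = AutonomousEntity("node-b", frozenset({("room2", "adjacentTo", "room3")}))

if __name__ == "__main__":
    for node in (NODE_A, NODE_B):
        print(node.name, node.decide("adjacentTo"), holds_complete_intelligence(node, ENVIRONMENT))
```

In this toy setting each node acts only on its own view, and only the union of the views covers the whole environment, which mirrors the claim that no single node holds complete intelligence.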

- Distributed Environment

Here, the environment is categorized according to the second factor. A distributed information environment can encompass systems that are either geographically dispersed or located close together. One distinguishing characteristic of a distributed environment is the collaborative use of multiple entities, nodes, or components that oversee and execute the processing and administration of resources and data. These entities can share a physical space, such as a data center, or be distributed across several geographic locations, such as data centers in different cities or countries [11].

The apparent paradox of a system being both distributed and centralized is resolved by separating location from control. A distributed system consists of multiple software components that are physically spread across various entities (computers) but operate together as a cohesive system. In a distributed system, entities can be geographically far apart and connected by a wide area network, or physically close and linked through a local network [12].

Consider a cloud service provider that offers data storage. In its physical implementation, the data may be replicated and distributed across various devices, taking resource availability and resilience into account (distributed) [13]. Nevertheless, regardless of where the equipment and storage facilities are located, the provider retains centralized management over them (centralized). By contrast, a system that is both decentralized and distributed poses no such apparent paradox. Bitcoin serves as an illustrative case: it is a decentralized system characterized by immutability, meaning that no single entity can alter it, and it functions as a distributed global peer-to-peer network of autonomous computers.
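Because location and control are independent, the apparent paradox disappears once they are modelled as two separate axes. The sketch below assumes, for illustration only, that a system is described by the computers its components run on and by an optional single controlling authority; `System`, `classify`, and the node names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class System:
    name: str
    nodes: Tuple[str, ...]    # computers the components run on (near or far apart)
    authority: Optional[str]  # the single party that controls all nodes, if any


def location(system: System) -> str:
    # Location axis: are the components spread over more than one computer?
    return "distributed" if len(set(system.nodes)) > 1 else "non-distributed"


def control(system: System) -> str:
    # Control axis: does one authority govern every node?
    return "centralized" if system.authority is not None else "decentralized"


def classify(system: System) -> Tuple[str, str]:
    return location(system), control(system)


# Hypothetical examples matching the cloud-storage and Bitcoin cases in the text.
CLOUD_STORAGE = System("cloud storage", ("dc-city-a", "dc-city-b", "dc-city-c"), authority="provider")
BITCOIN = System("Bitcoin", ("peer-1", "peer-2", "peer-3"), authority=None)

if __name__ == "__main__":
    for s in (CLOUD_STORAGE, BITCOIN):
        print(s.name, classify(s))
```

Both example systems land on the "distributed" side of the location axis; they differ only on the control axis.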

-

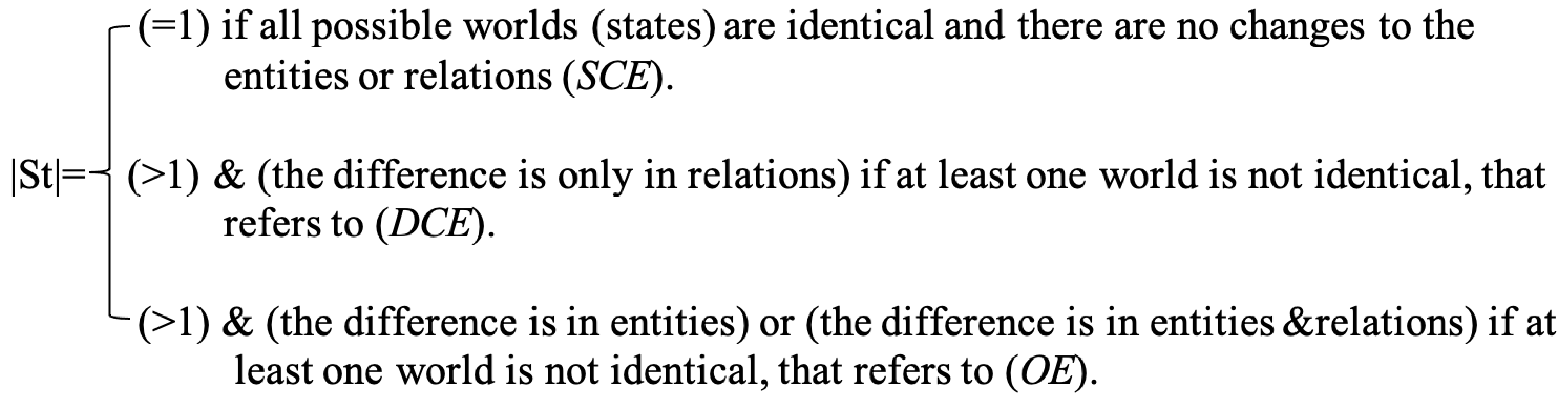

Open and Closed EnvironmentsPrior studies, such as those referenced in [14], indicate that the observable environment may present itself as either closed or open. The genesis of states within these environments is often a consequence of modifications in their fundamental components. As delineated in [14], the classification of environmental states falls into two primary groups: identical and varying. By scrutinizing the observable alterations in entities and their interrelations, one can anticipate the classification of an environment. This predictive capability is crucial for understanding the dynamics of open versus closed systems and their respective states.
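Classifying states as identical or varying from observed changes can be sketched as a comparison of two captured snapshots. The encoding below (entity names plus relationship triples) and the function names `alterations` and `classify_states` are assumptions for illustration; how these state classes map onto open and closed environments follows [14] and is not encoded here.

```python
from typing import Dict, FrozenSet, NamedTuple, Tuple

Relationship = Tuple[str, str, str]  # (entity, relation, entity)


class Snapshot(NamedTuple):
    """A captured state of the observed environment (hypothetical encoding)."""
    entities: FrozenSet[str]
    relationships: FrozenSet[Relationship]


def alterations(before: Snapshot, after: Snapshot) -> Dict[str, frozenset]:
    # Observable changes in entities and in their relationships.
    return {
        "entities_added": after.entities - before.entities,
        "entities_removed": before.entities - after.entities,
        "relationships_added": after.relationships - before.relationships,
        "relationships_removed": before.relationships - after.relationships,
    }


def classify_states(before: Snapshot, after: Snapshot) -> str:
    # "identical" if nothing observable changed, otherwise "varying".
    return "varying" if any(alterations(before, after).values()) else "identical"


S0 = Snapshot(frozenset({"a", "b"}), frozenset({("a", "linkedTo", "b")}))
S1 = Snapshot(
    frozenset({"a", "b", "c"}),
    frozenset({("a", "linkedTo", "b"), ("b", "linkedTo", "c")}),
)
```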

2.3. Collaborative Intelligence (CIn)

- These definitions do not attempt to describe the concept of intelligence itself; therefore, they are compatible with any other definition of intelligence.

- Because the definition includes the term "acting," it requires intelligence to be demonstrated in some behavior. Under this definition, for example, a Wikipedia article would not be deemed intelligent in and of itself, although the people who produced it would be.

- The definition requires that individuals act collectively or, at least, that their activities be connected. Two unrelated people in different cities brewing coffee on the same morning do not constitute collective intelligence, whereas two coffee shop servers working together would. Individuals' actions must be related, but they need not cooperate or share the same goals. For example, market actors buy from and sell to one another, so their actions are connected even though their purposes may differ.

- At the group level, it is typically much more important for an observer to attribute aims to the group as a whole.

3. Levels of Observation in the Information Environment

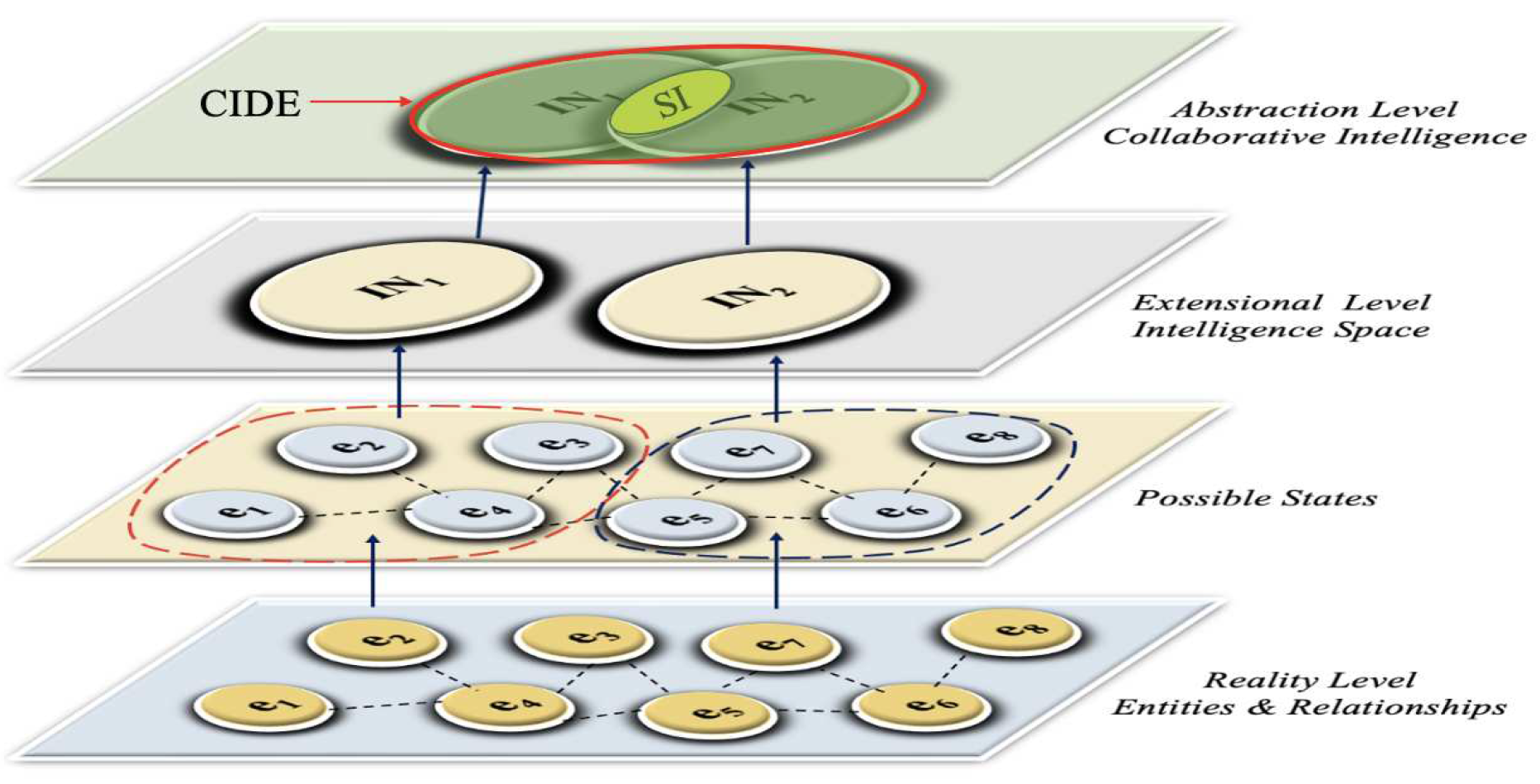

3.1. Reality Level: Existences and co-existences

3.2. Extensional Level: Intelligence and its Forms

3.3. Abstraction Level: collaborative intelligence

4. Intelligence: Definition

5. Intelligence: Ontological view-based model

-

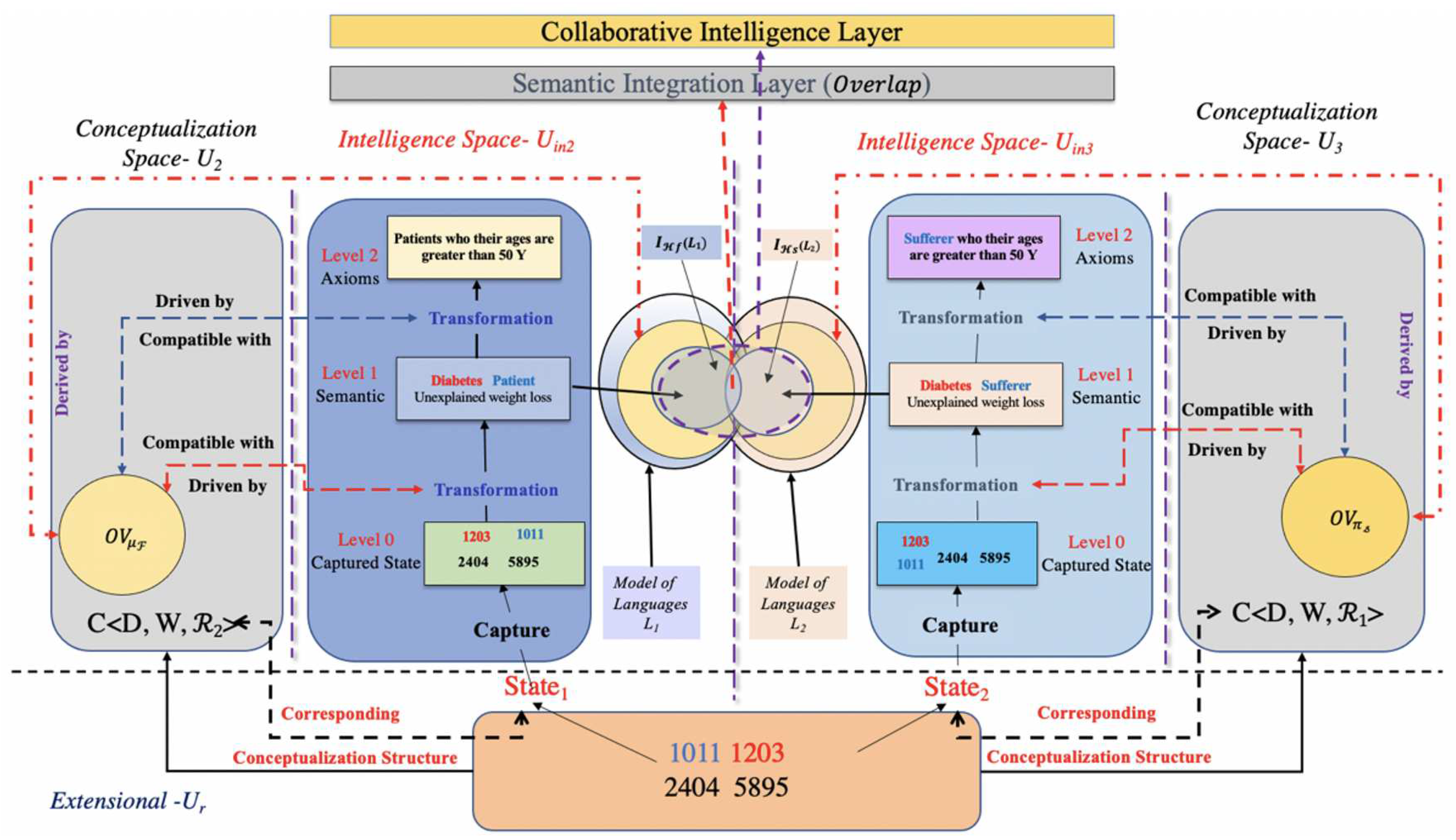

Firstly, it provides the interconnected information on two parts of semantics:

- -

- The connection between the state and the appropriate conceptualization structure.

- -

- The data is associated to the relevant specification through the use of a supporting language.

- Additionally, the association axioms of facilitate the transformation of knowledge in a manner that enables direct reasoning, hence enhancing the actionable form of intelligence.

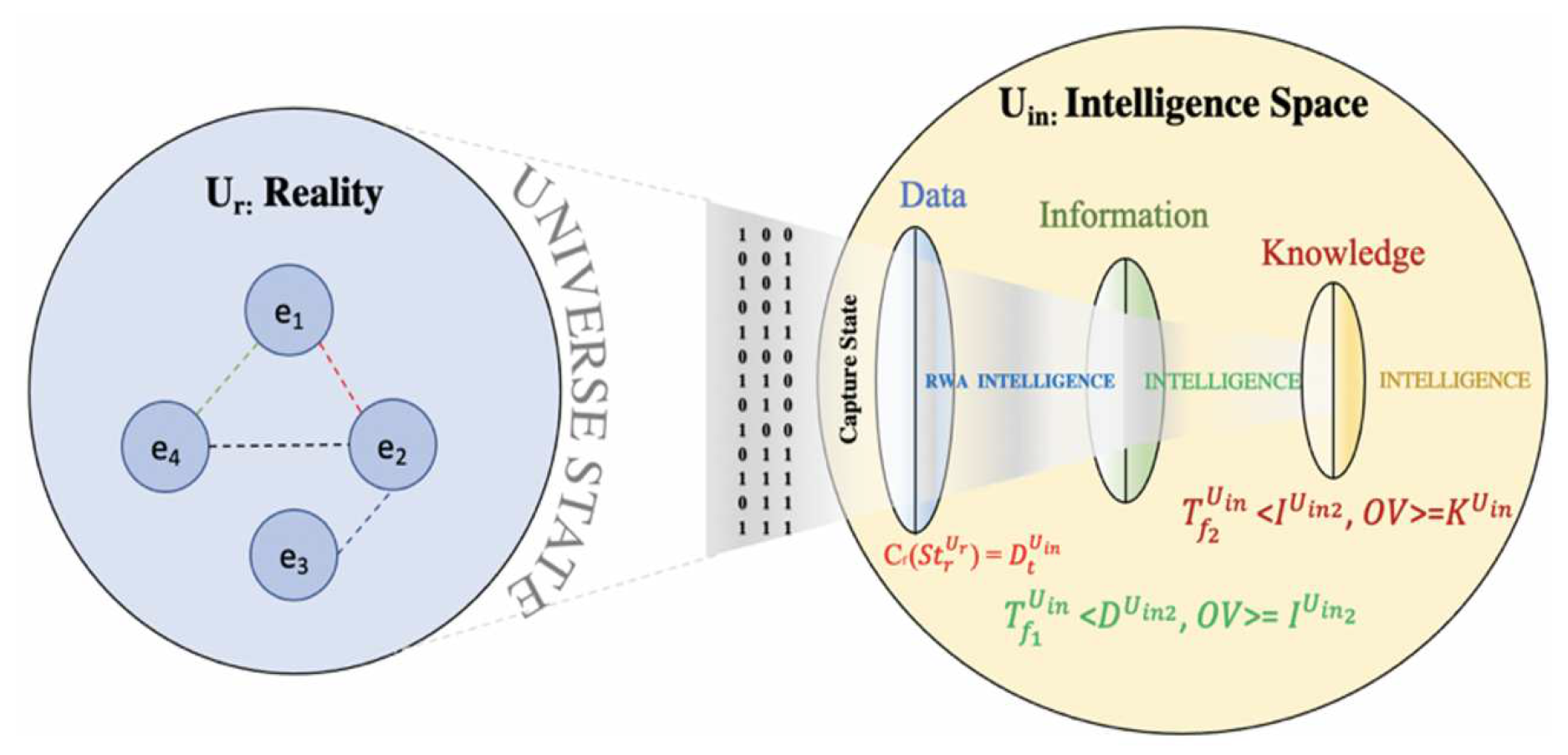

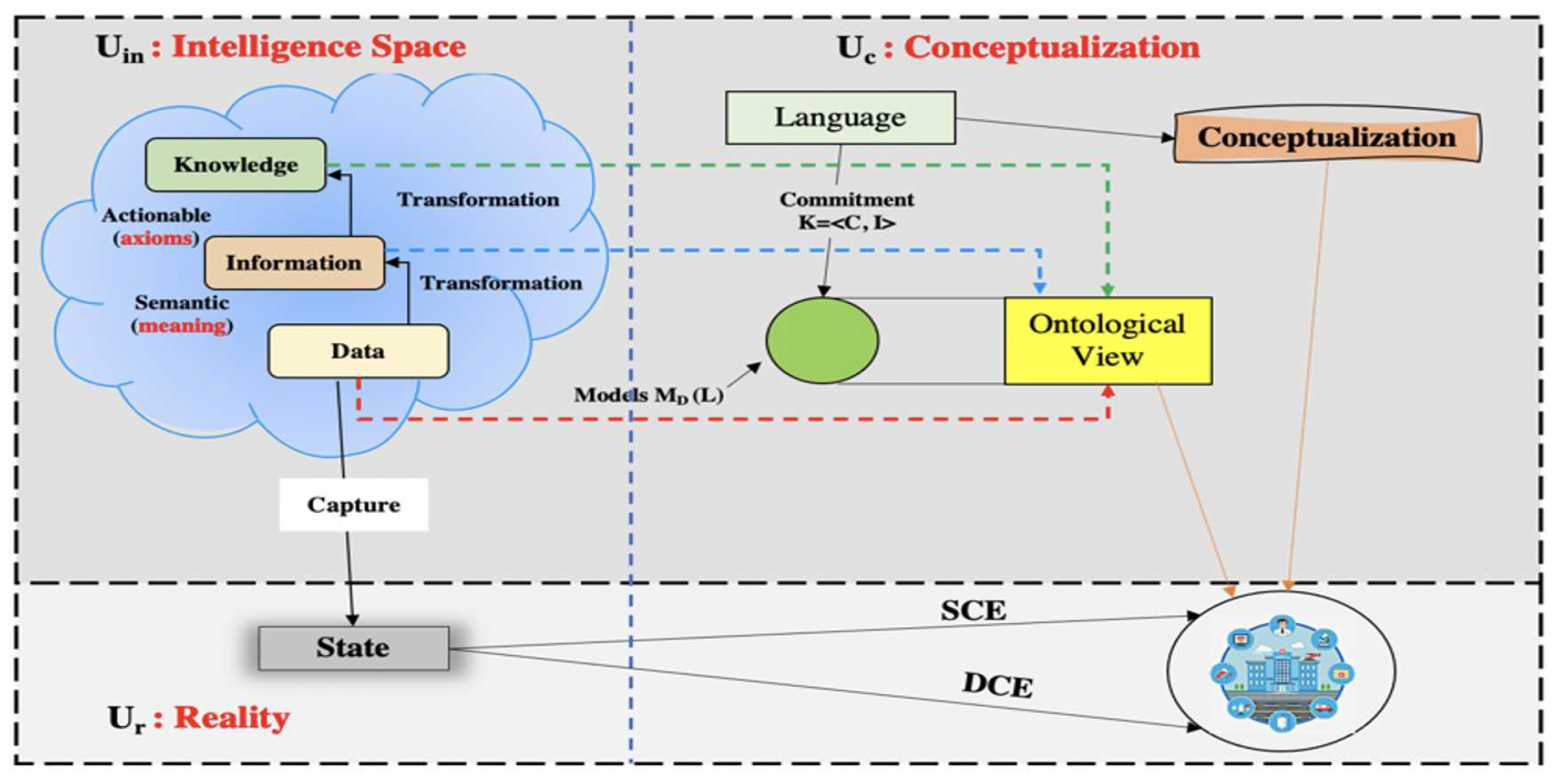

- Captured state: the current snapshot of the environment's structure, represented by entities and relationships.

- Data: the first, raw form of intelligence, obtained by converting the captured state but not yet organized or processed in any way.

- Information: the second form of intelligence, generated by transforming data. Information is data that has been organized, structured, and presented meaningfully.

- Knowledge: the third form of intelligence, formed by transforming information. Knowledge is explicit information retrieved from implicit information by adding rules (axioms).

- Observer Universe (also known as U-Intelligence space): the universe in which the forms of intelligence develop.

- Observed Universe (also known as U-Reality): the universe in which the existences, called "entities", and the coexistences between them, called "relationships", exist. This universe can be divided into two subcategories according to the possible changes to its states (entities and relationships).

- Converting function: the function that converts the captured state into other formats, yielding the first form of intelligence (data). The intelligence space may, however, keep the same formats without any change.

- Transformation function: a function that takes one form of intelligence (e.g., information) as input and transforms it into another form (e.g., knowledge).

- Decentralized Environment: an environment characterized by decentralized control, meaning that there is no single controlling entity or authority. This contrasts with centralized environments, where a single central authority or entity makes decisions. Entities in a decentralized environment are often distinguished by their ability to perceive their environment; they are autonomous and can operate independently and make their own decisions without external direction or control.

6. Forms of Intelligence

-

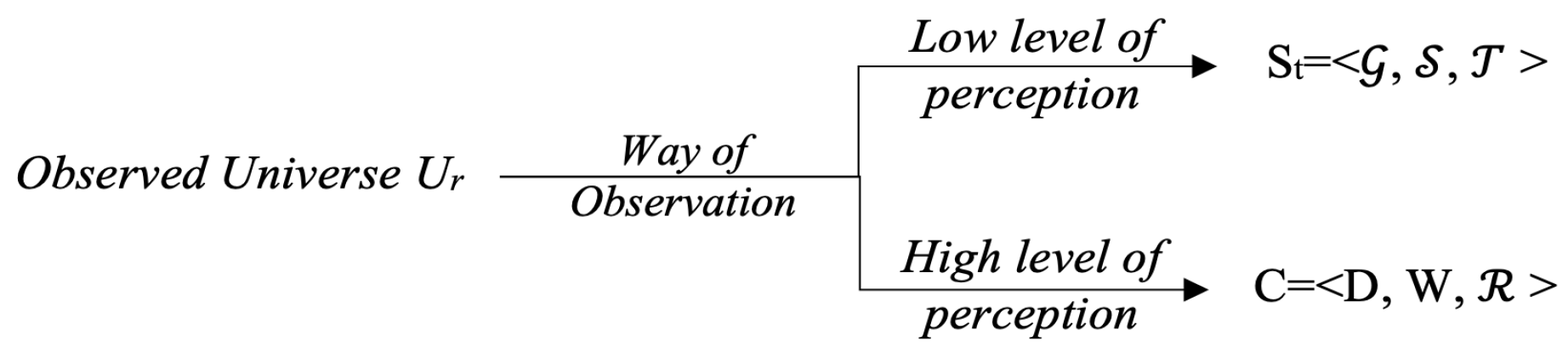

Captured state: To analyze the distinctions between "conceptualization" and "capturing the state of an environment" in the context of observation, it is necessary to comprehend how each concept relates to the act of observing and the outcomes of this observation. The correlation between conceptualization and the representation of the state of an environment is intricately related to the observation process, although its objectives may differ depending on the observation being carried out.: Assuming C =<D, W, >as a conceptualization structure representing entities and relationships within a decentralized environment, with a conceptualization structure defined as an abstract view of the environment, we can infer that this structure provides a higher-level perception of the observed environment.In contrast, the fundamental aspect of the environment’s structure, denoted as St =<, ,>, represents the environment’s actual manifestation. This structure corresponds to a lower-level perception, wherein entities and their relationships coexist within the same framework.Figure 3. shows different ways to observe U-Reality

The state structure St comprises three elements: the domain of the universe of reality, including entities and relationships; the set of possible states; and the set of intensional relations. The constituent elements of the conceptualization structure are defined in [6]. Figure 2 depicts the relationship between the conceptualization structure, which represents a high-level understanding of the universe of reality [6], and the state structure produced by our approach, which represents a low-level understanding of it. Captured states can be classified into two separate structures according to the basic changes that take place in the elements of the reality universe; the captured states themselves, however, are confined to the observer universe. These structures may differ from or resemble one another.

- First form of intelligence (Data): Data is obtained through a converting function that maps the captured state of reality (U-Reality) into this form within the intelligence space universe (U-Intelligence space). The converting function takes the captured state as input and produces data within the intelligence space; the data have the same structure as the captured state.
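A minimal sketch of the converting function, assuming (as in the definition of the captured state) that a state consists of entities and relationships and that "converting" only changes the representation format. `CapturedState`, `Data`, and `convert` are hypothetical names, not notation from this paper, and the three-part state structure is reduced here to its entities and relationships.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

Triple = Tuple[str, str, str]  # (entity, relationship, entity)


@dataclass(frozen=True)
class CapturedState:
    """Snapshot of U-Reality (hypothetical encoding): unordered sets."""
    entities: FrozenSet[str]
    relationships: FrozenSet[Triple]


@dataclass(frozen=True)
class Data:
    """First form of intelligence in the intelligence space: the same
    structure as the captured state, in a different format (ordered tuples)."""
    entities: Tuple[str, ...]
    relationships: Tuple[Triple, ...]


def convert(state: CapturedState) -> Data:
    # Only the format changes; no meaning is added, so the result is raw data.
    return Data(
        entities=tuple(sorted(state.entities)),
        relationships=tuple(sorted(state.relationships)),
    )


STATE = CapturedState(
    entities=frozenset({"sensor1", "room1"}),
    relationships=frozenset({("sensor1", "monitors", "room1")}),
)
DATA = convert(STATE)
```

Keeping the same fields in `Data` as in `CapturedState` reflects the statement that data have the same structure as the captured state.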

- Second form of intelligence (Information): Data alone is insufficient and unintelligible, so meaning must be added to it. Adding semantics derived from an ontological view transforms data within the intelligence space into information. The intelligence space may have a transformation function for this purpose, whose inputs are the data (the first form within the intelligence space) and an ontological view that enables the transformation from data to information, and whose output is information.
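One way to picture the data-to-information transformation, assuming the ontological view can be reduced to a typing of entities (concepts) and of relationships (domain and range), is to attach those types to each data triple and set aside triples the view cannot interpret. The encoding and the names `OntologicalView`, `Statement`, and `to_information` are hypothetical; the ontological view in this paper is richer than this.

```python
from typing import Dict, Iterable, List, NamedTuple, Tuple

Triple = Tuple[str, str, str]


class OntologicalView(NamedTuple):
    """Hypothetical, heavily simplified ontological view."""
    concepts: Dict[str, str]               # entity -> concept
    relations: Dict[str, Tuple[str, str]]  # relation -> (domain concept, range concept)


class Statement(NamedTuple):
    """A data triple with semantics attached: one unit of information."""
    subject: str
    subject_concept: str
    relation: str
    obj: str
    object_concept: str


def to_information(data: Iterable[Triple], view: OntologicalView) -> List[Statement]:
    info = []
    for s, r, o in data:
        if r not in view.relations:
            continue  # the view gives this triple no meaning; it stays raw data
        dom, rng = view.relations[r]
        # Keep only triples whose entities fit the relation's domain and range.
        if view.concepts.get(s) == dom and view.concepts.get(o) == rng:
            info.append(Statement(s, dom, r, o, rng))
    return info


VIEW = OntologicalView(
    concepts={"alice": "Person", "lab1": "Room"},
    relations={"locatedIn": ("Person", "Room")},
)
DATA = [("alice", "locatedIn", "lab1"), ("lab1", "locatedIn", "alice"), ("alice", "owns", "lab1")]
INFO = to_information(DATA, VIEW)
```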

- Third form of intelligence (Knowledge): The intelligence space may be equipped with a function that extracts new, previously implicit information from existing explicit information. The function employs axioms derived from the ontological view (a set of logical formulae). This knowledge enables the intelligence space to make informed decisions and solve problems. The transformation function takes as input the explicit information held by the intelligence space and applies the ontological view to extract the axioms used in deduction. The if-then statement is a common example of deductive reasoning: for instance, if A implies B and B implies C, then A must imply C. Because first-order logic (FOL) is used as the language for specifying data, its deduction rules also apply. Equations (1), (2), and (3) represent the three forms of intelligence (data, information, and knowledge, respectively).
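The deduction step can be illustrated with a naive forward-chaining sketch over ground atoms, a deliberate simplification of full first-order deduction: if-then axioms with variables are applied to the explicit information until nothing new follows. The rule format, the `partOf` transitivity axiom, and the function names `match` and `forward_chain` are hypothetical examples, not axioms from this paper's ontological view.

```python
from typing import Dict, FrozenSet, Iterable, List, Optional, Set, Tuple

Atom = Tuple[str, ...]          # e.g. ("partOf", "wheel", "axle")
Rule = Tuple[List[Atom], Atom]  # (premises, conclusion); terms starting with "?" are variables


def match(pattern: Atom, fact: Atom, env: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Extend the variable bindings in env so that pattern equals fact, or return None."""
    if len(pattern) != len(fact):
        return None
    env = dict(env)
    for p, f in zip(pattern, fact):
        if p.startswith("?"):
            if env.setdefault(p, f) != f:
                return None  # variable already bound to a different value
        elif p != f:
            return None
    return env


def forward_chain(facts: Iterable[Atom], rules: List[Rule]) -> FrozenSet[Atom]:
    """Apply the if-then rules until no new facts appear (a fixpoint)."""
    known: Set[Atom] = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            envs: List[Dict[str, str]] = [{}]
            for premise in premises:
                envs = [e2 for e in envs for f in known
                        if (e2 := match(premise, f, e)) is not None]
            for env in envs:
                new = tuple(env.get(t, t) for t in conclusion)
                if new not in known:
                    known.add(new)
                    changed = True
    return frozenset(known)


# Hypothetical axiom: if x partOf y and y partOf z, then x partOf z.
TRANSITIVE_PART_OF: Rule = ([("partOf", "?x", "?y"), ("partOf", "?y", "?z")], ("partOf", "?x", "?z"))
EXPLICIT = {("partOf", "wheel", "axle"), ("partOf", "axle", "car")}
KNOWLEDGE = forward_chain(EXPLICIT, [TRANSITIVE_PART_OF])
```

Here the fact that the wheel is part of the car, implicit in the two explicit facts, becomes explicit, which is the sense in which knowledge extends information.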

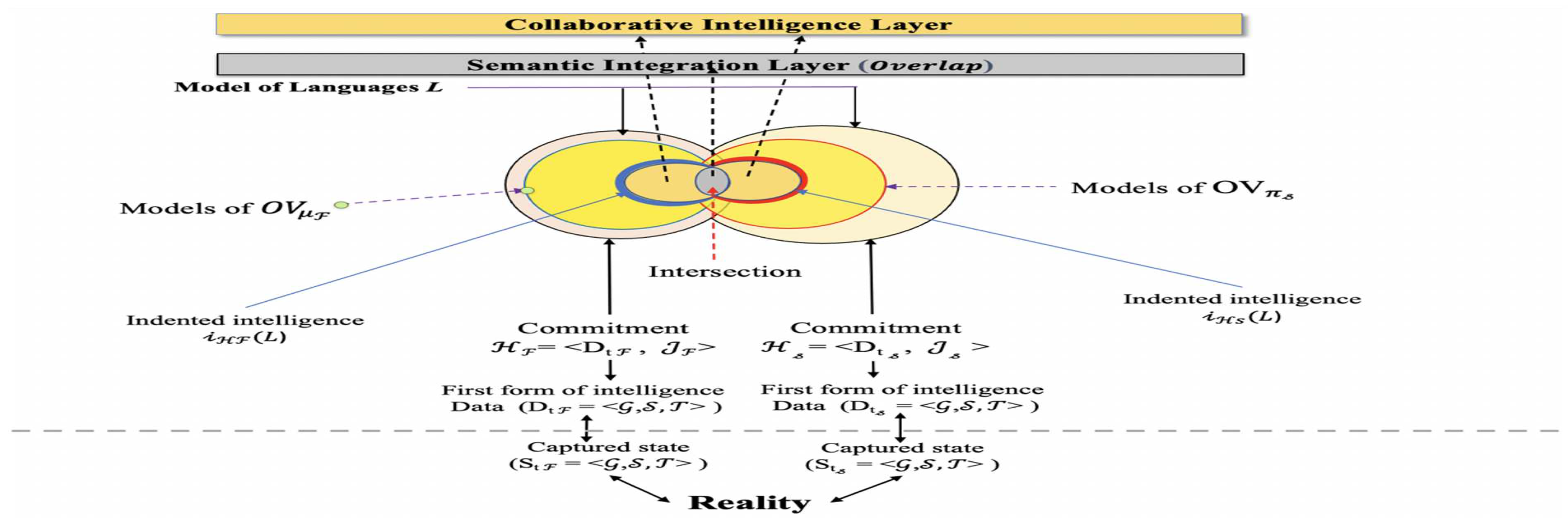

7. Collaborative Intelligence (CIDE): Framework and Definition Aspects

- A set of decentralized intelligences, each developed individually from its own captured state of the same domain of interest, as described in Section 6.

- A semantic integration layer, a key contribution of this framework, which employs an ontological view to find intersections among the distributed intelligences in a decentralized environment.

- A collaborative intelligence layer, which constructs a logical framework (a reasoning system) that makes decision-making more efficient than individual intelligences can achieve in isolation.

7.1. Categories of Overlap among Distributed Intelligences

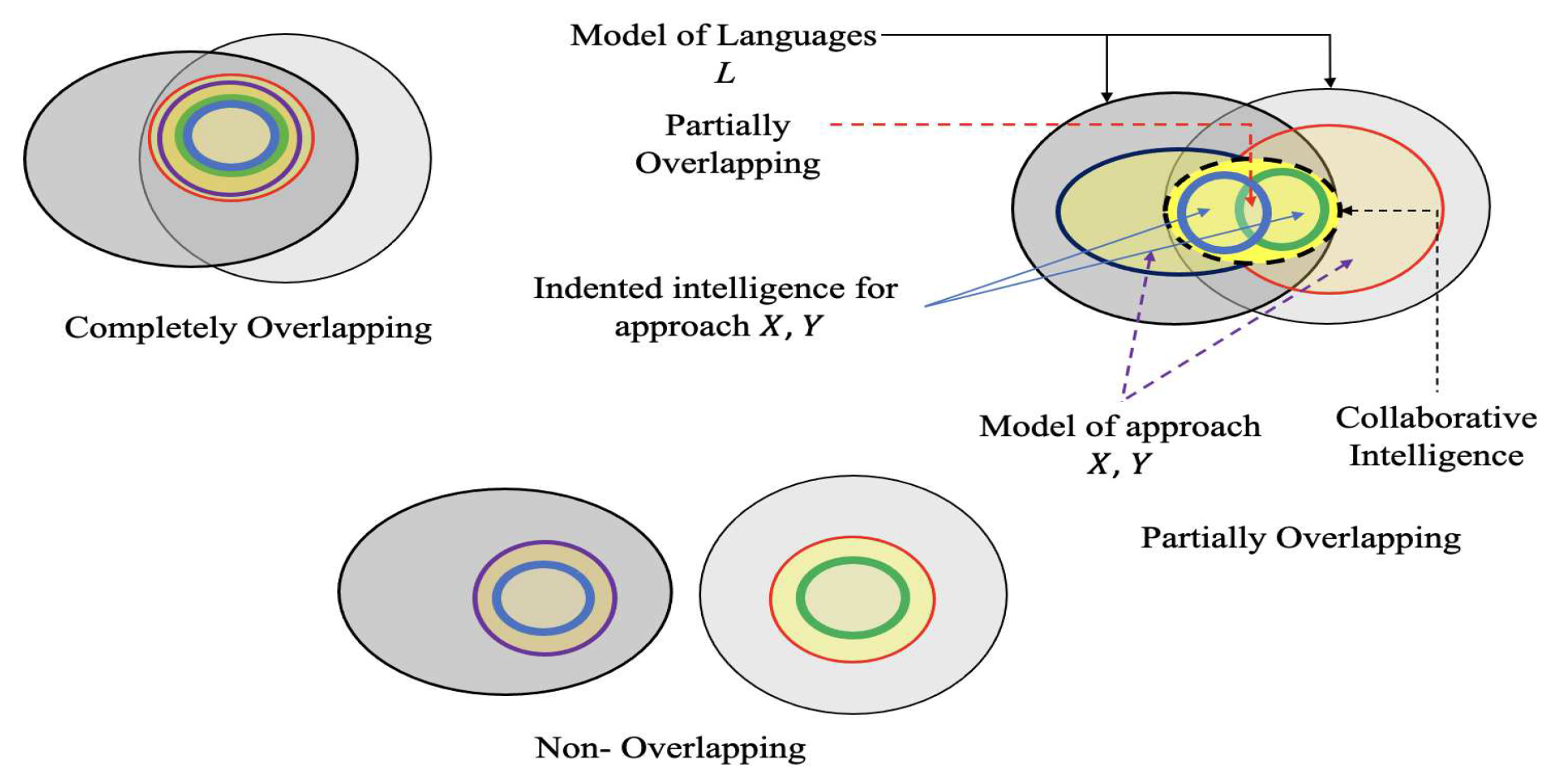

- [Definition 7.1.1 Partial Overlap] Given two sets of intended intelligences, the first pertaining to its language and derived from the first ontological view, and the second pertaining to its language and derived from the second ontological view, the first is Partially Overlapping with the second if and only if the two partially intersect (⋈). In other words, the intersection of the two intended intelligences is non-empty, yet the two sets are not equal.

- [Definition 7.1.2 Completely Overlap] Given two sets of intended intelligences, the first pertaining to its language and derived from the first ontological view, and the second pertaining to its language and derived from the second ontological view, the first is Completely Overlapping (symbolized by ⊡) with the second if and only if the two completely intersect (⋉). In other words, the intersection of the two intended intelligences contains all the elements of both; the two ontological views then overlap either completely or partially.

- [Definition 7.1.3 Non-Overlap] Given two sets of intended intelligences, the first pertaining to its language and derived from the first ontological view, and the second pertaining to its language and derived from the second ontological view, the first is Non-Overlapping (symbolized by ⊠) with the second if and only if the two do not intersect (⋊). In other words, the intersection of the two intended intelligences is empty (∅), and the two ontological views do not overlap.
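Assuming each intended intelligence can be represented as a finite set of ground facts, the three categories reduce to checks on the set intersection. The representation and the names `Overlap` and `classify_overlap` are illustrative assumptions.

```python
from enum import Enum
from typing import AbstractSet, Hashable


class Overlap(Enum):
    PARTIAL = "partial overlap"    # ⋈: non-empty intersection, sets not equal
    COMPLETE = "complete overlap"  # ⊡ / ⋉: intersection equals both sets
    NONE = "non-overlap"           # ⊠ / ⋊: empty intersection


def classify_overlap(first: AbstractSet[Hashable], second: AbstractSet[Hashable]) -> Overlap:
    common = first & second
    if not common:
        return Overlap.NONE
    if common == first and common == second:
        return Overlap.COMPLETE
    return Overlap.PARTIAL


# Hypothetical intended intelligences as sets of ground facts.
I1 = frozenset({("alice", "locatedIn", "lab1"), ("bob", "locatedIn", "lab2")})
I2 = frozenset({("alice", "locatedIn", "lab1"), ("carol", "locatedIn", "lab3")})
I3 = frozenset({("dave", "locatedIn", "lab4")})
```

Note that a strict subset (for example, one fact shared out of two) counts as a partial overlap under Definition 7.1.1, since the intersection is non-empty but the sets are not equal.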

7.2. Foundations of Collaborative Intelligence: A Theoretical Overview

- [Definition 7.2.1 Intended structure] For each possible state s, the intended state of s according to the conceptualization is the structure that pairs the domain with the set of extensions (relative to s) of the conceptual relations. The collection of these structures over all possible states denotes all the intended intelligence structures of the conceptualization.

The definitions in Sections 7.2.2 to 7.2.6 are derived from a framework that aligns with conceptualization and ontology [24]; they have been slightly adjusted to suit the requirements of this research.

- [Definition 7.2.2 Model of Language] For a given ontological view and a logical language L with a vocabulary, a model for L is a structure <S, I>, where S is a state structure and I is an interpretation function that assigns elements of the domain to the constant symbols of the vocabulary and relations to its predicate symbols.

- [Definition 7.2.3 Ontological Commitment] For a given ontological view, an ontological commitment for L is a pair consisting of the data (a triple with the same structure as the captured state) and an intensional interpretation ℑ. This interpretation is a function that assigns elements of the domain to the constant symbols of the vocabulary and conceptual relations to its predicate symbols.

- [Definition 7.2.4 Ontology] Given an ontological view and a language L with an ontological commitment, an ontology for L is a set of axioms designed so that the set of its models approximates, as closely as possible, the set of intended models of L according to that commitment.

- [Definition 7.2.5 Compatible] Given an ontological view, a language L with a vocabulary, and an ontological commitment with intensional interpretation ℑ for L, a model (S, I) is compatible with the commitment if: i) S is one of the intended state structures; ii) for every constant symbol c in the vocabulary, I(c) = ℑ(c), where I is the extensional interpretation and ℑ is the intensional interpretation; and iii) there exists some state s such that, for every predicate symbol v in the vocabulary, I(v) = (ℑ(v))(s), i.e., there exists a conceptual relation ρ such that ℑ(v) = ρ and ρ(s) = I(v).

- [Definition 7.2.6 Intended intelligence (a second form of intelligence)] The set of all models of L that are compatible with the ontological commitment (i.e., information, "Info") is called the set of intended intelligences of L according to that commitment. This set denotes the intelligence intended in this study.
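Definitions 7.2.5 and 7.2.6 can be read operationally. The sketch below assumes finite domains and states, represents ℑ(v) for each predicate as a mapping from states to extensions, and simplifies condition (i) to a domain check; `Model`, `Commitment`, `is_compatible`, `intended_intelligence`, and the example symbols are hypothetical, not this paper's notation.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Hashable, List, Tuple

Extension = FrozenSet[Tuple[Hashable, ...]]  # a relation's extension: a set of tuples


@dataclass
class Model:
    """Extensional model (S, I): a domain plus the interpretation I."""
    domain: FrozenSet[Hashable]
    constants: Dict[str, Hashable]    # I(c) for each constant symbol c
    predicates: Dict[str, Extension]  # I(v) for each predicate symbol v


@dataclass
class Commitment:
    """Hypothetical encoding of an ontological commitment with intensional interpretation ℑ."""
    domain: FrozenSet[Hashable]
    states: Tuple[str, ...]                     # possible states s
    constants: Dict[str, Hashable]              # ℑ(c)
    relations: Dict[str, Dict[str, Extension]]  # ℑ(v) = ρ, with ρ(s) an extension


def is_compatible(m: Model, k: Commitment) -> bool:
    # (i), simplified: the model's structure is built over the committed domain.
    if m.domain != k.domain:
        return False
    # (ii) every constant symbol is interpreted as ℑ prescribes.
    if any(m.constants.get(c) != e for c, e in k.constants.items()):
        return False
    # (iii) a single state s fixes the extension of every predicate: I(v) = ρ(s).
    return any(
        all(m.predicates.get(v) == rho.get(s) for v, rho in k.relations.items())
        for s in k.states
    )


def intended_intelligence(models: List[Model], k: Commitment) -> List[Model]:
    # Definition 7.2.6: the compatible models form the intended intelligence.
    return [m for m in models if is_compatible(m, k)]


K = Commitment(
    domain=frozenset({"alice", "lab1"}),
    states=("s1", "s2"),
    constants={"Alice": "alice"},
    relations={"locatedIn": {"s1": frozenset({("alice", "lab1")}), "s2": frozenset()}},
)
M1 = Model(K.domain, {"Alice": "alice"}, {"locatedIn": frozenset({("alice", "lab1")})})
M2 = Model(K.domain, {"Alice": "lab1"}, {"locatedIn": frozenset({("alice", "lab1")})})
M3 = Model(K.domain, {"Alice": "alice"}, {"locatedIn": frozenset()})
```

Condition (iii) is checked with one quantifier over states wrapped around all predicates, so a model that mixes extensions from different states would be rejected.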

7.3. Overlapped Intended Intelligence Concept

7.4. Advancing Beyond the Overlapped Intended Intelligence Concept

8. Conclusion

References

- Ziegler, M.; Danay, E.; Heene, M.; Asendorpf, J.; Bühner, M. Openness, fluid intelligence, and crystallized intelligence: Toward an integrative model. Journal of Research in Personality 2012, 46, 173–183.

- McCarthy, J. From here to human-level AI. Artificial Intelligence 2007, 171, 1174–1182.

- Yilam, G.; Kumar, D. Machine Learning Prediction of Human Activity Recognition. Ethiopian Journal of Science and Sustainable Development 2018, 5, 20–33.

- Wang, Y. On abstract intelligence: Toward a unifying theory of natural, artificial, machinable, and computational intelligence. International Journal of Software Science and Computational Intelligence (IJSSCI) 2009, 1, 1–17.

- Faggella, D. What is artificial intelligence? An informed definition. EMERJ, 21 December 2018.

- Adhnouss, F.M.A.; El-Asfour, H.M.A.; McIsaac, K.; El-Feghi, I. A Hybrid Approach to Representing Shared Conceptualization in Decentralized AI Systems: Integrating Epistemology, Ontology, and Epistemic Logic. AppliedMath 2023, 3, 601–624.

- Guarino, N.; Oberle, D.; Staab, S. What is an ontology? In Handbook on Ontologies; Springer; pp. 1–17.

- Genesereth, M.R.; Nilsson, N.J. Logical Foundations of Artificial Intelligence; Morgan Kaufmann, 2012.

- King, J.L. Centralized versus decentralized computing: Organizational considerations and management options. ACM Computing Surveys (CSUR) 1983, 15, 319–349.

- Olaru, A.; Pricope, M. Multi-Modal Decentralized Interaction in Multi-Entity Systems. Sensors 2023, 23, 3139.

- IBM. What is distributed computing? 2022. Available online: https://www.ibm.com/docs/en/txseries/8.2?topic=overview-what-is-distributed-computing (accessed on 2 August 2023).

- Tanenbaum, A.S. Distributed Systems: Principles and Paradigms; Pearson Prentice Hall, 2007.

- Smith, D.R. Creation of a Unified Cloud Readiness Assessment Model to Improve Digital Transformation Strategy. International Journal of Data Science and Analysis 2022, 8, 11.

- El-Asfour, H.; Adhnouss, F.; McIsaac, K.; Wahaishi, A.; Aburukba, R.; El-Feghia, I. The Nature of Intelligence and Its Forms: An Ontological-Modeling Approach. International Journal of Computer and Information Engineering 2023, 17, 122–131.

- Gill, Z. User-driven collaborative intelligence: social networks as crowdsourcing ecosystems. In CHI'12 Extended Abstracts on Human Factors in Computing Systems; Association for Computing Machinery, 2012; pp. 161–170.

- Wechsler, D. Concept of collective intelligence. American Psychologist 1971, 26, 904.

- Wheaton, K.J.; Beerbower, M.T. Towards a new definition of intelligence. Stan. L. & Pol'y Rev. 2006, 17, 319.

- Wechsler, D. The measurement and appraisal of adult intelligence. Academic Medicine 1958, 33, 706.

- Zhong, H.; Levalle, R.R.; Moghaddam, M.; Nof, S.Y. Collaborative intelligence: definition and measured impacts on internetworked e-work. Management and Production Engineering Review 2015, 6, 67–78.

- Woolley, A.W.; Aggarwal, I.; Malone, T.W. Collective intelligence in teams and organizations. In Handbook of Collective Intelligence; 2015; pp. 143–168.

- Parsons, S.; Branagan, A. Word Aware 1: Teaching Vocabulary Across the Day, Across the Curriculum; Routledge, 2021.

- Siegler, R.S. The other Alfred Binet. Developmental Psychology 1992, 28, 179.

- Adhnouss, F.; El-Asfour, H.; McIsaac, K.; Wahaishi, A.M.; El-Feghia, I. An Intensional Conceptualization Model for Ontology-Based Semantic Integration. International Journal of Computer and Information Engineering 2023, 17, 106–111.

- Wang, Y.D. Ontology-driven semantic transformation for cooperative information systems. PhD thesis, Faculty of Graduate Studies, University of Western Ontario, 2008.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).