1. Introduction

The outbreak of the COVID-19 pandemic has led to a significant increase in digital competence among undergraduate students. With the transition to online learning and remote work, millions of full-time students were pushed to resume their studies online [1]. In the health sector, the informatics literacy of healthcare workers, including computer skills and an understanding of information systems and informatics principles, emerged as a crucial domain [1]. Edirippulige [2] highlighted challenges in health professionals' confidence within the evolving e-health landscape. Preparing digitally competent health professions graduates is therefore necessary as the healthcare sector undergoes rapid transformation. Platforms such as PubMed, ERIC, and Google Scholar readily yield numerous studies on the digital competence of health professions students before the COVID-19 outbreak [3,4,5]; however, research analyzing the vital digital competencies required in the new normal era is scarce. This gap shows how little is known about the ways the pandemic and post-pandemic scenarios have reshaped digital skills requirements, and it underscores the need to develop and validate a digital competence assessment scale tailored specifically to health professions students. To answer the question “What are the essential constructs and the validity of a digital competence assessment scale for health professions students (DigiCAS-HPS) in the post-COVID-19 new normal era?”, the instrument was developed through a literature review, the design and construction of the dimensions and items of the questionnaire, and finally an assessment of the construct validity and reliability of DigiCAS-HPS.

Literature Review

To establish the fundamental components of DigiCAS-HPS, an extensive literature review was conducted to examine the definition of digital competence and its popular frameworks. According to the European Union framework of key competencies, digital competence is defined as follows: “Digital competence involves the confident, critical and responsible use of, and engagement with, digital technologies for learning, at work, and for participation in society. It includes information and data literacy, communication and collaboration, media literacy, digital content creation (including programming), safety (including digital well-being and competences related to cybersecurity), intellectual property related questions, problem solving and critical thinking” [6]. Furthermore, Khan [7] defined digital competence as the knowledge, skills, attitudes and digital literacy that are needed for developing and managing digital information systems. According to Ferrari [8], digital competence is a “set of knowledge, skills, attitudes, strategies and awareness which are required when ICT and digital media are used to perform tasks, resolve problems, communicate, manage information, collaborate, create and share content, and build knowledge in an effective, efficient and adequate way, in a critical, creative, autonomous, flexible, ethical and a sensible form for work, entertainment, participation, learning, socialization, consumption and empowerment” [8]. Janssen & Stoyanov [9] defined digital competence as an amalgamation of knowledge, skills, and attitudes linked to various purposes such as communication, creative expression, information management, and personal development. The five competences most widely regarded as essential are the ability to communicate via information and communication technology, ease with computer usage, collaboration following digital etiquette, general computer skills, and the capability to extract various information from the internet [9]. All of these definitions involve the cognitive, psychomotor, and affective domains, which are important for developing students’ digital skills [10].

Cognitive domain: involves mental processes such as thinking, analyzing, and acquiring knowledge. It encompasses problem-solving skills, critical thinking abilities, and knowledge application, which are essential for the effective use of digital technologies.

Psychomotor domain: relates to the practical skills required to create, manage, and develop digital content and information systems. It involves hands-on actions and the application of technical skills.

Affective domain: reflects attitudes, emotions, and values associated with using digital technologies. It includes responsible and ethical behavior, collaboration, awareness, and the emotional aspects that influence how individuals engage with digital tools.

The definition of digital competence proposed by Perifanou and Economides [11] is easily understood and covers all three of the above domains: “Digital competence is the person’s knowledge, skills and attitude to ‘efficiently’ access, use, create and share digital resources, communicate and collaborate with others using digital technologies in order to achieve specific goals” [11].

The literature review also highlights several key frameworks that describe the multifaceted concept of digital competence outlined above. Among these, the European Digital Competence Framework for Citizens, also known as DIGCOMP and initially put forth by the European Commission [12], stands as one of the most prominent and widely recognized in Europe. It includes five competence areas: (i) information and data literacy, (ii) communication and collaboration, (iii) digital content creation, (iv) safety, and (v) problem solving. UNESCO has expanded upon this framework by incorporating additional competencies, such as device and software operations, as well as career-related skills [13]. The UK National Standards for Essential Digital Skills introduced the Essential Digital Skills Framework (EDSF) [14], which sets out the digital skills needed for work and life: (i) Using devices and handling information, (ii) Creating and editing, (iii) Communicating, (iv) Transacting, and (v) Being safe and responsible online. The International Association for the Evaluation of Educational Achievement (IEA) introduced the International Computer and Information Literacy Study (ICILS), which encompasses (i) Understanding computer use; (ii) Gathering information; (iii) Producing information; and (iv) Digital communication [15]. In addition, the European Skills, Competences, Qualifications and Occupations (ESCO) classification delineated Digital Competencies [16], and the Irish Government introduced the Digital Skills Framework [17].

These frameworks converge on fundamental domains integral to digital competence: Information Handling, Digital Content Creation, Communication, and Collaboration. Nevertheless, some frameworks contain redundancies or discrepancies in which analogous actions are placed under different domains. For instance, DIGCOMP addresses information management not only in “Information and data literacy” but also in “Communication and collaboration”. Similarly, safeguarding information is dealt with in both “Digital content creation” and “Safety”. The literature review thus shows that digital competence frameworks are intricate and still evolving, and that the construct continues to be refined in response to the changing digital landscape. These frameworks collectively serve as the foundation for shaping the core constructs of DigiCAS-HPS in the post-COVID-19 era. We explored the theoretical underpinnings and dimensions of these frameworks to answer the first research question: What are the essential constructs and the validity of a digital competence assessment scale for health professions students (DigiCAS-HPS) in the post-COVID-19 new normal era? Accordingly, the first objective of the current study is to develop and validate a digital competence scale for health professions students in the post-COVID-19 era. Moreover, previous research has indicated a relationship between online learning outcomes and personal factors such as educational level, gender, and personality traits [18]. Other studies have reported effects of personal factors on students' digital skill components [19]. The present study therefore seeks to examine the potential influence of personal factors, including educational level, gender, and personality traits, on the digital competence constructs of health professions students during the post-COVID-19 new normal era. This study is crucial for health education institutions seeking to reform their programs to fit global digital trends and future technologies, which form the platform for developing digital health, defined by the World Health Organization [20] as the field of knowledge and practice associated with the development and use of digital technologies to improve health. It also identifies inadequacies in training and in improving health professions students’ digital competence to meet the future demands of digital health. The second research question guiding this investigation is “How do personal factors affect the digital skill components of health professions students in the post-COVID-19 new normal era?” The second research objective, formulated from this research question, is to analyze differences in digital competence among health professions students across groups defined by gender, age, field of study, and academic year.

2. Materials and Methods

2.1. Study Design

The present study adopted a mixed-methods approach with a structural relationship model, consisting of qualitative methods in the development of DigiCAS-HPS (phase 1), and quantitative methods in the validation of DigiCAS-HPS (phase 2) for achieving the first research objective of developing a digital competence scale for health professions students. This approach allowed for an in-depth investigation of the complex relationships between the various components of digital competence. In addition, the cross-sectional survey was designed to collect information on personal factors such as age, gender, educational level, academic years, and majors to investigate their impact on digital competence components in the second objective.

2.1.1. Development of DigiCAS-HPS

1. Constructing DigiCAS-HPS framework

The literature review revealed that current digital competence frameworks are built on the fundamental dimensions of Information Handling, Digital Content Creation, Communication, and Collaboration. We drew on the expanded competencies in the UNESCO framework, such as “Device and software operations”, and on “Using devices” from EDSF to establish the dimension of problem solving and device and software operations. Regarding the affective domain, the dimension of digital safety was established by combining "Being safe and responsible online" from EDSF with "Safety" from DIGCOMP. It consists of items that collectively demonstrate attitudes of caution, responsibility, and ethical behavior when using digital technologies. The structure of DigiCAS-HPS includes 5 dimensions: (i) Information and data handling; (ii) Communication and collaboration; (iii) Digital content creation; (iv) Digital safety; and (v) Problem solving and device and software operations.

2. Draft version of DigiCAS-HPS

Items for the digital competency scale were generated by a group consisting of a researcher and two experts in information and communication technology. These items were based on a review of existing literature on digital competence scales [11,21,22] and on an integration of the DIGCOMP, EDSF, and ICILS frameworks, as shown in Table 1. The draft version of the scale comprised 5 personal information items and 37 items measuring digital competence, distributed across 5 dimensions: (i) Information and data handling; (ii) Communication and collaboration; (iii) Digital content creation; (iv) Digital safety; and (v) Problem solving and device and software operations. All measurement items relate to the above-mentioned theoretical framework and reflect the knowledge (cognitive), skills (psychomotor) and attitudes (affective) of students to “effectively” access, use, create and share digital resources, and to communicate and collaborate with others using digital technology [11]. Each item is rated on a five-point Likert scale:

1 point. Very unlikely: I have never done it or cannot do it even with someone's help.

2 points. Unlikely: I cannot do it myself without help.

3 points. Not confirmed: sometimes I can do it myself without help.

4 points. Likely: I can do it on my own and solve problems that arise.

5 points. Very likely: I have mastered it, can solve problems that arise, and can teach others at the same time.

3. Content Validity Assessment of the Draft Version

After setting up a draft version of DigiCAS-HPS, we evaluated the content validity of the tool in two steps: (i) pilot testing and (ii) content validation by experts.

3.1. Pilot Testing

To evaluate the clarity and appropriateness of the scale items, a pilot test was conducted with a group of 10 students, who answered questions about the draft scale such as “Do you find the language used in the scale easy to understand?”. They were also asked whether all essential aspects of digital competence were included in the scale. Any technical problems encountered while completing the scale were noted, items deemed repetitive or redundant were removed, and suggestions for improving the clarity and appropriateness of the scale were recorded.

3.2. Expert Review

A group of six experts in information and communication technology was consulted to review the scale, suggest improvements to the items, and identify any potential issues. The experts assessed content validity on two criteria: (i) relevance, the extent to which an item accurately reflects the sub-dimensions of the digital competencies, and (ii) clarity, the extent to which an item is easily understood. The experts were provided with a semi-structured form and asked to rate each item from 1 to 4 points: (1) the item is not relevant/clear to the measured domain; (2) the item is somewhat relevant/clear to the measured domain; (3) the item is quite relevant/clear to the measured domain; and (4) the item is very relevant/clear to the measured domain. Ratings of one (rewrite as a new item), two (major revision needed) and three (minor revision needed) prompted the experts to provide constructive feedback and suggestions for improving the item. The form also left room for additional comments, enabling further insight into the questionnaire's content. The Item-Content Validity Index (I-CVI) measures the agreement among experts in rating the relevance and clarity of an item and is calculated by dividing the number of experts who rated the item 3 or 4 by the total number of experts. In this study, I-CVI = (number of experts rating the item 3 or 4) / 6. The Scale Content Validity Index (S-CVI) measures the overall content validity of the scale and is calculated as the average of the I-CVI scores across all items [23]. With six expert reviewers, the acceptable cut-off for the CVI is at least 0.83 [24]; the S-CVI for the relevance/clarity of the scale was 0.85.
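The I-CVI and S-CVI calculations described above can be sketched in a few lines of Python. This is a minimal illustration only: the function names and the expert ratings are hypothetical and are not the study's data, and the S-CVI is computed with the averaging method described in the text.

```python
def item_cvi(ratings, agree_min=3):
    """I-CVI: share of experts who rated the item 3 or 4 on the 1-4 scale."""
    return sum(r >= agree_min for r in ratings) / len(ratings)


def scale_cvi_average(ratings_by_item):
    """S-CVI (averaging method): mean of the I-CVI values across all items."""
    values = [item_cvi(r) for r in ratings_by_item]
    return sum(values) / len(values)


# Hypothetical ratings from six experts (assumed for illustration only).
ratings_by_item = [
    [4, 4, 3, 4, 3, 4],  # six of six experts rate 3 or 4
    [4, 3, 4, 2, 4, 3],  # five of six experts rate 3 or 4
    [2, 3, 4, 1, 3, 2],  # three of six experts rate 3 or 4
]

i_cvis = [item_cvi(r) for r in ratings_by_item]
# Items whose I-CVI falls below the 0.83 cut-off are flagged for revision.
needs_revision = [i for i, v in enumerate(i_cvis, start=1) if v < 0.83]
s_cvi = scale_cvi_average(ratings_by_item)
```

With six experts, an item passes the 0.83 cut-off only when at least five of them rate it 3 or 4, since 5/6 ≈ 0.833.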

3.3. Revision

Based on the feedback from the pilot testing and expert review, revisions were made to ensure that the scale was clear and relevant to the sub-dimensions of the measured domains. Items with I-CVI scores below 0.83 were revised based on the feedback. Some minor modifications were made; for example, item 16 (“I consistently share high-quality, original, and valuable content that is beneficial to the wider audience.”) and item 18 (“My online contributions consistently consist of excellent, unique, and valuable posts or articles that benefit the public.”) were merged into an amended item 16: “I post only good, original, unique, and useful posts/articles that are useful to the public”. In addition, five items were deleted, following the recommendations of the group of experts and by decision of the research team.

After the items had been fully edited based on expert feedback and pilot testing, the final draft version of the digital competency scale was produced; it is described in detail in Appendix A. It included 37 items in five dimensions and 15 sub-dimensions as follows:

1. Dimension 1: Information and data handling (8 items).

This dimension assesses an individual's ability to effectively search for, evaluate, and manage digital information and data on the internet. It encompasses skills such as conducting online research, using search engines and databases, selecting appropriate keywords, critically assessing the reliability of sources, and using online storage platforms for file management and sharing. It reflects the competence required to navigate the vast digital landscape for information and knowledge acquisition while ensuring accuracy, reliability, and data security. This dimension is composed of 3 sub-dimensions: (i) Browsing, searching and filtering data, information and digital content; (ii) Evaluating data, information and digital content and (iii) Managing data, information and digital content.

2. Dimension 2: Communication and collaboration (9 items)

This dimension assesses an individual's ability to effectively use digital platforms and tools for communication, collaboration, and sharing information with others. This dimension encompasses skills such as creating and managing social network groups, sharing multimedia content online, submitting assignments through web applications, using blogs and social media for educational purposes, posting relevant and valuable content, considering the societal impact of online communication, and utilizing digital tools for efficient collaboration and discussions. It reflects the competence required for productive and ethical interaction in digital environments, promoting effective teamwork, and leveraging digital tools for academic and social communication. It consists of 4 sub-dimensions: (i) Interacting through digital technologies; (ii) Sharing through digital technologies, (iii) Engaging in citizenship through digital technologies and (iv) Collaborating through digital technologies.

3. Dimension 3: Digital content creation (5 items)

This dimension encompasses skills such as creating multimedia presentations, organizing and summarizing digital information, using graphing programs to draw figures or to report statistics, converting content between formats, and utilizing translation tools.

4. Dimension 4: Digital safety (9 items)

This dimension assesses an individual's ability to safeguard personal and sensitive information in the digital realm. It includes competencies such as assessing the appropriateness of sharing personal information on social media, ensuring the security of online login credentials, protecting against spam and phishing threats, and managing and updating passwords to maintain the privacy and security of personal information. Dimension 4 focuses on the knowledge and skills required to protect both personal and others' data and privacy in digital environments. It includes three sub-dimensions: (i) privacy security, (ii) personal data protection, and (iii) digital responsibility.

5. Dimension 5: Problem solving, device and software operations (6 items).

This dimension assesses an individual's ability to effectively handle various digital resources and troubleshoot common digital problems. It includes competencies such as installing applications and antivirus software, connecting peripheral devices to a computer, setting up online communication platforms, resolving routine digital issues, and engaging with diverse digital media platforms for educational purposes. It consists of 2 sub-dimensions: (i) Using devices and problem solving and (ii) Software operations.

3.4. Sample Size and Data Collection

The total sample comprised 717 health professions students in Dong Thap province, Vietnam, and was randomly split into two subsamples. The first subsample of 366 students was used for Exploratory Factor Analysis (EFA), while the second subsample of 351 students was used for Confirmatory Factor Analysis (CFA) [25]. Data were collected from June to August 2022. For recruitment, a list of all students in the college served as the sampling frame, and participants were selected by random sampling. Inclusion required the student's voluntary acceptance and informed consent. All participants provided voluntary, anonymous consent prior to participating in the study. According to the college's ethics committee, the identity of the respondents could not be determined with certainty and all ethical requirements were met.
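The random split of the 717 respondents into an EFA subsample (366) and a CFA subsample (351) can be illustrated as follows. This is a sketch under stated assumptions: the identifiers, the seed, and the function name are hypothetical, and the original split was not necessarily produced this way.

```python
import random


def split_sample(ids, first_size, seed=0):
    """Shuffle the identifiers reproducibly and cut them into two subsamples."""
    shuffled = list(ids)
    random.Random(seed).shuffle(shuffled)  # local generator, fixed seed
    return shuffled[:first_size], shuffled[first_size:]


# Hypothetical identifiers 1..717 standing in for the respondents.
student_ids = range(1, 718)
efa_ids, cfa_ids = split_sample(student_ids, first_size=366)
```

Using separate subsamples for EFA and CFA means the factor structure is confirmed on respondents who did not contribute to its discovery.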

3.5. Data Analysis

The study used Exploratory Factor Analysis (EFA) and Confirmatory Factor Analysis (CFA) to determine the best factor structure and to evaluate the validity and reliability of the digital competence scale. EFA explores the data and identifies the factors, or latent constructs, that emerge from them. CFA, on the other hand, tests a pre-specified factor structure (the hypothesized structure of the scale) and determines whether that structure fits the data [26].

3.5.1. Exploratory Factor Analysis

Exploratory factor analysis (EFA) was used in the initial phase to identify the underlying latent factor structure of the set of items [26] and to selectively reduce the number of items. There were no missing values in the dataset. To check normality, we examined skewness and kurtosis, which fell within the range of -2 to +2, indicating a normal univariate distribution [27]. Factors were extracted with principal axis factoring, chosen for its ability to handle potentially non-normally distributed data and to recover weak factors [28,29], and were rotated with the Varimax method. The number of factors to extract was determined by retaining those with eigenvalues greater than 1.0. Each extracted factor was named after an examination of its constituent items. Items with factor loadings greater than 0.65 were assigned to the respective extracted factor in the rotated factor matrix [30]. Items falling below this loading threshold on all factors or exhibiting cross-loadings on two or more factors were excluded [31]. In addition to factor loadings, items were deemed acceptable if their communalities fell between 0.25 and 0.4 [32]. Furthermore, each factor was required to comprise a minimum of 3 items; otherwise, it was considered for removal [30].
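The item-retention rules above (loading greater than 0.65, no cross-loading, at least 3 items per factor) can be expressed as a small filter over a rotated loading matrix. The sketch below is illustrative only: the loadings are hypothetical, and it assumes that a "cross-loading" means a loading above the threshold on two or more factors, which the text does not define precisely.

```python
def retain_items(loadings, threshold=0.65, min_items=3):
    """Assign each item to the single factor on which it loads above the threshold.

    loadings: dict mapping item name -> list of rotated loadings (one per factor).
    Items with no salient loading or with two or more salient loadings are dropped;
    factors left with fewer than min_items items are dropped as well.
    """
    assigned = {}
    for item, row in loadings.items():
        salient = [f for f, value in enumerate(row) if abs(value) > threshold]
        if len(salient) == 1:
            assigned.setdefault(salient[0], []).append(item)
    return {f: items for f, items in assigned.items() if len(items) >= min_items}


# Hypothetical rotated loadings on two factors (not the study's results).
loadings = {
    "q1": [0.72, 0.10],
    "q2": [0.70, 0.21],
    "q3": [0.68, 0.05],
    "q4": [0.40, 0.35],  # below the threshold on every factor
    "q5": [0.70, 0.69],  # salient on both factors (cross-loading)
    "q6": [0.12, 0.80],
    "q7": [0.20, 0.75],
}
kept = retain_items(loadings)
```

In this example only the first factor survives, because the second factor is left with two items after filtering.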

To evaluate the suitability of the data for factor analysis, we considered Bartlett’s test of sphericity (BTOS) and the Kaiser-Meyer-Olkin (KMO) measure [33]. A KMO value exceeding 0.5 indicates that factor analysis is appropriate for the dataset and that the sample size is adequate. A BTOS p-value of less than 0.05 indicates that the variables in the population correlation matrix are correlated [32].

Furthermore, we assessed the internal consistency reliability using Cronbach’s alpha coefficient, with a threshold of 0.70 or higher considered acceptable [

28]. These analyses, including EFA, KMO, Bartlett’s test of sphericity, and reliability assessments, were conducted using IBM® SPSS software version 20.0 (SPSS Inc, Chicago, IL, USA).
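For readers who want to see what the reliability coefficient measures, Cronbach's alpha can be computed directly from item scores as k/(k − 1) · (1 − Σ item variances / variance of the total score). The sketch below uses hypothetical responses and is not a substitute for the SPSS output reported in this study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])  # number of items
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical five-point Likert responses from five students on three items.
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
alpha = cronbach_alpha(responses)
is_acceptable = alpha >= 0.70  # threshold used in this study
```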

3.5.2. Confirmatory Factor Analysis (CFA)

CFA, conducted within the Structural Equation Modeling (SEM) framework with Maximum Likelihood parameter estimation, was used to establish the structural validity of the scale. Where the initial model that emerged from the EFA did not fit the data well, we modified the model to achieve the best possible final model. The analyses were performed using IBM® SPSS AMOS 20 software.

1. Modification indices

Model re-specification involved (i) adding a parameter with a large modification index (MI) to the model (e.g., drawing a covariance between two variables); (ii) deleting parameters with non-significant paths from the model; (iii) removing observed variables whose standardized residual covariance (the difference between observed and expected covariance) is greater than 2; and (iv) excluding items with factor loadings lower than 0.6 to achieve unidimensionality.

2. Assessment of validity and reliability

Assessing the goodness of fit: To evaluate the goodness of fit of our model, we employed both absolute and relative fit indices [34]. First, the absolute fit indices included the chi-square statistic, the goodness of fit index (GFI), and the root mean square error of approximation (RMSEA) [35]. The chi-square statistic assesses overall model fit by measuring the disparity between the observed and expected covariance matrices; smaller values indicate a better fit. The established criteria for the chi-square (χ2) test are a non-significant p-value and a χ2/df value of 3 or lower. The GFI evaluates the agreement between the proposed model and the actual covariance matrix, with values exceeding 0.9 generally indicating an acceptable model fit [36,37]. For the RMSEA, values below 0.07 indicate an acceptable fit and values below 0.05 a good fit [28,38]. The associated p-value tests the null hypothesis that RMSEA is ≤ 0.05, with a p-value greater than 0.05 supporting the null hypothesis. Second, the relative fit indices included the Comparative Fit Index (CFI), Tucker-Lewis Index (TLI), and Incremental Fit Index (IFI), with values of 0.95 or higher indicating a good fit [35]. Additionally, the Standardized Root Mean Square Residual (SRMR) was used, with values below 0.08 suggesting a relatively strong fit between the proposed model and the observed data [39].
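The cut-offs listed above can be collected into a simple lookup that flags which indices meet their criterion. This is only a convenience sketch: the function and dictionary names are hypothetical, and the example values are the three-factor model indices reported in Section 4.3.1.

```python
# Cut-offs as stated in this section; each rule returns True when the criterion is met.
FIT_CRITERIA = {
    "chi2_df": lambda v: v <= 3,
    "GFI": lambda v: v > 0.90,
    "RMSEA": lambda v: v < 0.07,
    "CFI": lambda v: v >= 0.95,
    "TLI": lambda v: v >= 0.95,
    "IFI": lambda v: v >= 0.95,
    "SRMR": lambda v: v < 0.08,
}


def assess_fit(indices):
    """Return {index name: criterion met?} for every index that has a stated cut-off."""
    return {
        name: FIT_CRITERIA[name](value)
        for name, value in indices.items()
        if name in FIT_CRITERIA
    }


# Indices reported for the initial three-factor model (Section 4.3.1).
three_factor = {"GFI": 0.847, "RMSEA": 0.069, "CFI": 0.894, "IFI": 0.894, "TLI": 0.883}
result = assess_fit(three_factor)
```

Only the RMSEA falls within its acceptable range here, which is consistent with the decision to modify the model.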

3. Assessment of construct reliability or internal consistency: We employed two widely recognized methods, Cronbach's alpha and composite reliability (CR), to assess internal consistency. These methods gauge the consistency of responses among observed items within a construct. Both the Cronbach's alpha value and CR should surpass 0.70 to ensure robust internal consistency [

28,

40].

4. Assessment of convergent validity: We assessed convergent validity using both composite reliability (CR) and average variance extracted (AVE) [41]. AVE indicates the proportion of variance that is captured by the factor relative to the amount of variance due to measurement error in the observed variables that load on that factor:

$$\mathrm{AVE} = \frac{\sum_{i=1}^{n} \lambda_i^{2}}{\sum_{i=1}^{n} \lambda_i^{2} + \sum_{i=1}^{n} \left(1 - \lambda_i^{2}\right)}$$

where λ_i is the standardized factor loading of item i, 1 − λ_i² is the corresponding error variance, and Σ represents the summation across all items (indicators) that load on the factor.

More simply, AVE is the mean of the squared standardized loadings of the items within a factor and is calculated by the formula:

$$\mathrm{AVE} = \frac{\sum_{i=1}^{n} \lambda_i^{2}}{n}$$

where n stands for the total number of items in a factor. An AVE value greater than 0.50 suggests that over half of the variance in the indicators is attributable to the intended construct [40].

CR quantifies the extent to which the variance in the observed variables is due to the common variance explained by the latent construct, as opposed to measurement error. It measures the consistency of the relationship between the latent construct and its indicators. CR is calculated as follows:

$$\mathrm{CR} = \frac{\left(\sum_{i=1}^{n} \lambda_i\right)^{2}}{\left(\sum_{i=1}^{n} \lambda_i\right)^{2} + \sum_{i=1}^{n} \left(1 - \lambda_i^{2}\right)}$$

A CR value exceeding 0.70 signifies a high level of internal consistency among the indicators.
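As a worked illustration, AVE and CR can be computed from a factor's standardized loadings. The sketch assumes the standard formulas for standardized loadings with uncorrelated errors, so that each item's error variance is 1 − λ²; the loadings are hypothetical and the function names are not from the study.

```python
from math import fsum


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of a factor."""
    return fsum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 divided by itself plus the summed error variances."""
    squared_sum = fsum(loadings) ** 2
    error_variance = fsum(1 - l * l for l in loadings)  # assumes standardized loadings
    return squared_sum / (squared_sum + error_variance)


# Hypothetical factor with five items that all load at 0.70.
loadings = [0.70, 0.70, 0.70, 0.70, 0.70]
ave = average_variance_extracted(loadings)  # 0.49, just under the 0.50 cut-off
cr = composite_reliability(loadings)        # about 0.83, above the 0.70 cut-off
```

The example shows that a factor can pass the CR criterion while falling short of the AVE criterion, because CR grows with the number of items whereas AVE depends only on the average squared loading.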

Assessment of discriminant validity: Discriminant validity, which examines the degree of distinction between overlapping constructs in empirical measurements, was evaluated using multiple methods [42]. This included examining Pearson correlations, which should be less than 0.8 to indicate differentiation between constructs. We also applied the Fornell & Larcker criterion, comparing the square root of the average variance extracted (AVE) for each construct with the correlations between constructs. For discriminant validity to be established, the square root of each construct's AVE should exceed the correlations with other latent constructs [42]. Additionally, the Heterotrait-Monotrait (HTMT) ratio of correlations was used, with values below the threshold of 0.85 indicating distinguishable constructs [43].
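The Fornell & Larcker comparison can be sketched as a check of every inter-construct correlation against the square roots of the two constructs' AVE values. The construct names, AVE values, and correlations below are hypothetical and serve only to show the mechanics of the criterion.

```python
from math import sqrt


def fornell_larcker_violations(ave_by_construct, correlations):
    """Return construct pairs whose correlation is not below both square roots of AVE."""
    violations = []
    for (a, b), r in correlations.items():
        limit = min(sqrt(ave_by_construct[a]), sqrt(ave_by_construct[b]))
        if abs(r) >= limit:
            violations.append((a, b))
    return violations


# Hypothetical AVE values and inter-construct correlations (not the study's results).
ave_by_construct = {"A": 0.52, "B": 0.61, "C": 0.48}
correlations = {("A", "B"): 0.55, ("A", "C"): 0.89, ("B", "C"): 0.72}
violations = fornell_larcker_violations(ave_by_construct, correlations)
```

A pair listed in `violations` shares more variance with another construct than one of the two constructs shares with its own indicators, which is the situation the criterion is designed to detect.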

In summary, we employed a comprehensive array of fit indices and reliability measures to rigorously assess our model's quality, internal consistency, convergent validity, and discriminant validity, ensuring the robustness and credibility of our study's findings.

4. Results

4.1. Participants’ Characteristics

Table 2 provides an overview of the participants’ characteristics. In the total sample, the average age of students was 21.9 years (Standard deviation, SD = 5.7), with a gender distribution of 76 % female students. A significant portion of the student population, specifically 52.2 %, were enrolled in their first year of study. The predominant major of study among participants was Pharmacy, accounting for 56.3 % of the sample. The majority of students (95.2 %) used smartphones as their preferred internet device.

4.2. Results of EFA

The purpose of the EFA was to investigate the factors underlying the items in the draft version of the DigiCAS-HPS (37 items). A Kaiser-Meyer-Olkin (KMO) value of 0.957 and a highly significant Bartlett’s test of sphericity (p < 0.0001) indicated that the sample dataset was well suited to factor analysis, enabling the exploration of its underlying structure. EFA revealed a 3-factor structure involving 26 items that collectively explained 53.53% of the total variance, which is above the 50% threshold [41]. All factor loadings exceeded 0.65, signifying a substantial proportion of shared variance among the items within each factor. The EFA thus refined the scale from 37 items to 26 items, with three distinct factors (F1, F2, and F3) shown in Table 3. The first factor (F1), labeled “Digital information literacy and responsibility,” comprised 13 items. The second factor (F2), denoted as “Digital content creation and software mastery”, consisted of 8 items, and the third factor (F3), titled “Digital safety”, encompassed 5 items.

4.3. Results of CFA

4.3.1. Hypothesis 1: Digital Competence Scale Has a Three-Factor Structure

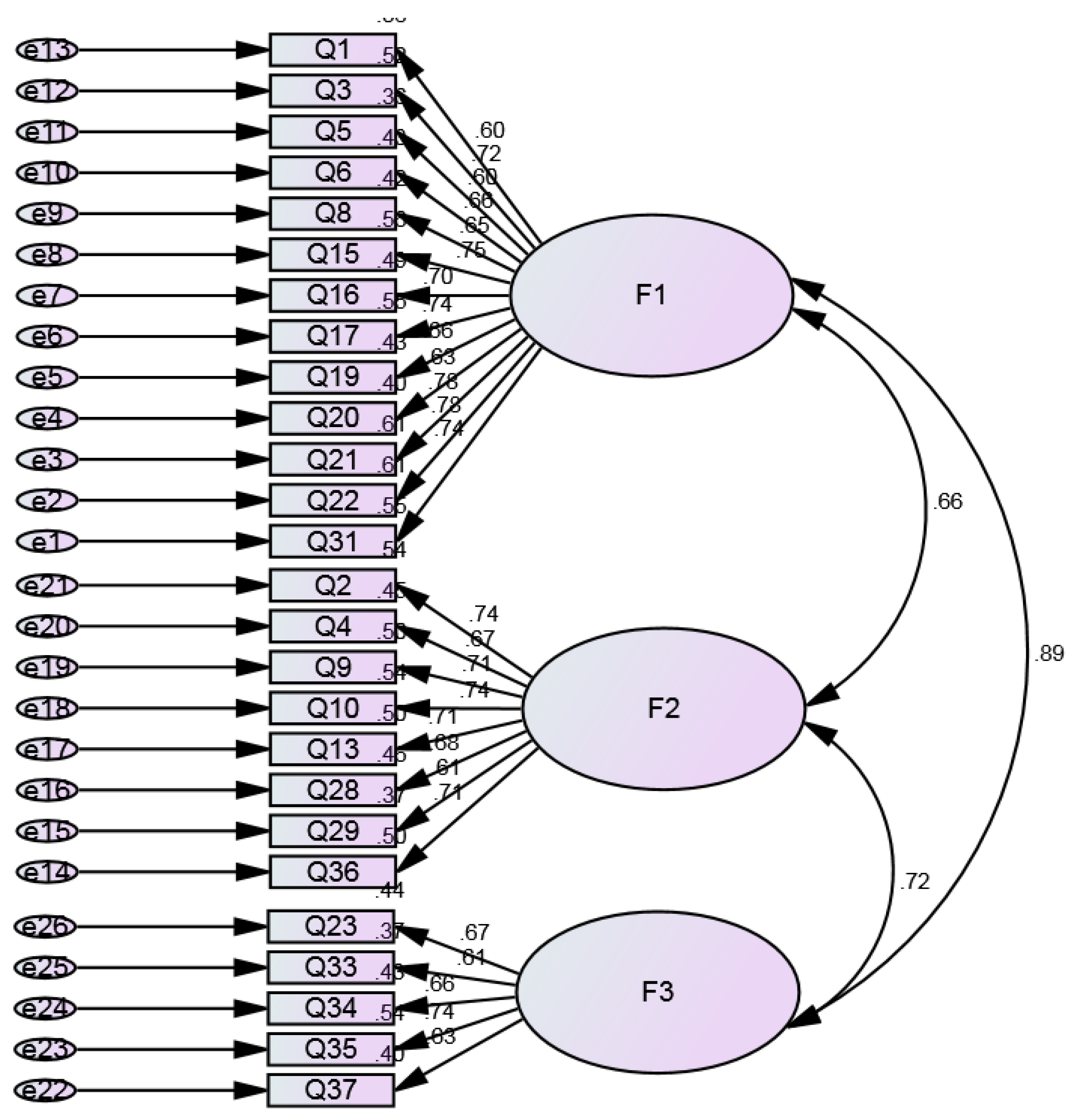

The structure encompassed 3 correlated constructs or factors, comprising 26 items in total: F1 (Digital information literacy and responsibility), F2 (Digital content creation and software mastery), and F3 (Digital safety). The symbols e1 - e26 denote the measurement error terms in the measurement model (Figure 1). CFA was used to test the measurement model for each construct and to evaluate the relationship between latent variables and observed variables as follows:

1. Assessment of construct reliability

Table 3 shows that the overall Cronbach's alpha value is 0.94. The Cronbach's alpha and composite reliability (CR) values of each construct both exceeded 0.7, indicating acceptable reliability of the data and good internal consistency. These high values demonstrate that the observed items within each construct are consistently measuring the same underlying construct.

2. Assessment of convergent and discriminant validity

The average variance extracted (AVE) value for construct F3 is less than 0.5 (Table 3). This indicates that a substantial share of the variance in its indicators is due to measurement error rather than the underlying construct. The items in F3 therefore do not converge well and may not adequately capture the intended construct; they may need revision, removal, or replacement to improve convergent validity.
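The relationship between AVE and composite reliability can be illustrated with a short sketch. It assumes the usual formulas based on standardized loadings: AVE is the mean of the squared loadings, and CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The loadings below are hypothetical and are not the F3 loadings from Table 3; they only show how a construct can pass the CR threshold of 0.7 while its AVE stays below 0.5.

```python
def ave(loadings):
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)

def composite_reliability(loadings):
    """Composite reliability from standardized loadings."""
    s = sum(loadings)
    error = sum(1 - l * l for l in loadings)  # error variance of each item
    return s * s / (s * s + error)

# Hypothetical standardized loadings for a five-item construct.
hypothetical_loadings = [0.70, 0.65, 0.72, 0.68, 0.66]

ave_value = ave(hypothetical_loadings)
cr_value = composite_reliability(hypothetical_loadings)
print(round(ave_value, 3), round(cr_value, 3))
```

Because CR depends on the sum of the loadings while AVE depends on their squares, moderate loadings across several items can yield acceptable reliability without adequate convergent validity.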

In Table 4, the correlation between constructs F1 and F3 (0.891) is greater than 0.85. Correlations this high suggest that the constructs may not be sufficiently distinct from each other.

Furthermore, the discriminant validity test based on the Fornell and Larcker criterion shows that two correlation coefficients, F1-F3 (0.891) and F2-F3 (0.724), are higher than the square roots of the AVE for constructs F1 (0.72) and F3 (0.69) on the diagonal of the table. This finding suggests a lack of discriminant validity and raises concerns about the distinctiveness of each construct in the model.
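The Fornell and Larcker comparison can be written out as a small check. The sketch below uses only the values quoted in the text (square roots of AVE for F1 and F3, and the F1-F3 and F2-F3 correlations); the square root of AVE for F2 is not quoted here, so it is left out. The function name and data layout are illustrative assumptions.

```python
def fornell_larcker_violations(sqrt_ave, correlations):
    """List (construct_a, construct_b, violated_construct) triples where the
    inter-construct correlation exceeds a construct's square root of AVE."""
    violations = []
    for (a, b), r in correlations.items():
        for construct in (a, b):
            # Only compare against constructs whose sqrt(AVE) is known.
            if construct in sqrt_ave and abs(r) > sqrt_ave[construct]:
                violations.append((a, b, construct))
    return violations

# Values quoted in the text for the three-factor model.
sqrt_ave = {"F1": 0.72, "F3": 0.69}
correlations = {("F1", "F3"): 0.891, ("F2", "F3"): 0.724}

result = fornell_larcker_violations(sqrt_ave, correlations)
print(result)
```

Any non-empty result signals that at least one construct shares more variance with another construct than with its own indicators.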

3. Assessment of Goodness-of-fit

Table 5 shows the model fit results for the three-factor structure. The null hypothesis is that the three-factor model fits the observed data perfectly. The absolute fit indices were χ2 = 793.612 (p < 0.0001), Goodness of Fit Index (GFI) = 0.847, and Root Mean Square Error of Approximation (RMSEA) = 0.069 (p < 0.0001). All of these lead to rejection of the null hypothesis of model fit.

The relative fit indices were Adjusted Goodness of Fit Index (AGFI) = 0.819, Comparative Fit Index (CFI) = 0.894, Incremental Fit Index (IFI) = 0.894, Tucker-Lewis Index (TLI) = 0.883, and Normed Fit Index (NFI) = 0.84. These indices show that the model also does not achieve an acceptable fit to the data.

Overall, the analyses of convergent validity, discriminant validity, and model fit indicate that the three-factor structure does not ensure convergent validity, may lack discriminant validity, and is inconsistent with the data. The model was therefore modified on the basis of the modification indices (MIs) to improve fit.

4.3.2. Hypothesis 2: Digital Competence Scale Has a Two-Factor Structure

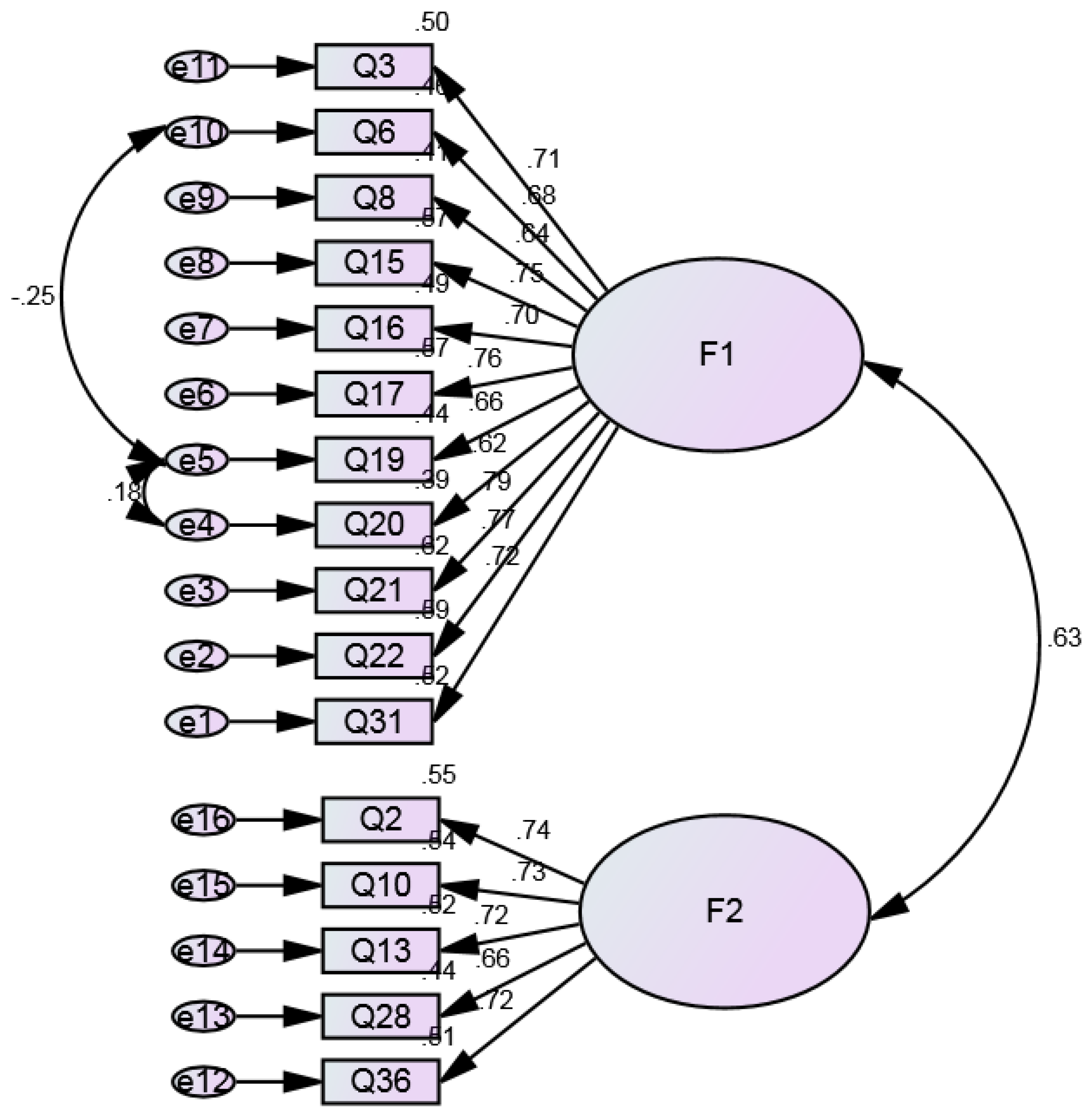

1. Model modifications

The three-factor model was modified through several iterations of re-specification based on standardized residual covariances and large modification indices. Eight items (Q1, Q4, Q5, Q9, Q23, Q29, Q33, and Q34) were deleted because their standardized residual covariances exceeded 2. Covariances between e10 and e5 and between e4 and e5 were added because of large modification indices. Finally, factor F3 was excluded from the model because it did not have at least three items, a common rule of thumb for maintaining construct validity and reliability. After these revisions, the final model consisted of two factors, Digital Communication, Collaboration and Online Security (F1) and Digital Content Development and Software Mastery (F2), with a total of 16 items (Appendix B). The variance estimates for F1 and F2 were 0.164 and 0.360, respectively. Parameter estimates of the final model are shown in Figure 2.

2. Assessment of construct reliability

Table 6 shows that the Cronbach's alpha and CR values of F1, F2, and the overall scale are all greater than 0.7, indicating that the hypothesized two-factor model has strong internal consistency. Items within each factor and across the scale measure the intended constructs consistently.

3. Assessment of convergent and discriminant validity

The results of testing convergent validity and construct reliability show that the AVE values of Factor 1 (F1) and Factor 2 (F2) are 0.50 and 0.51, respectively (Table 6), meaning that at least half of the variance in the items is attributable to the constructs they are intended to measure. Both factors therefore meet the 0.5 criterion for convergent validity. The CR values of F1 and F2 are 0.92 and 0.84, respectively, exceeding the generally accepted threshold of 0.7 and indicating that the items within each factor measure their respective constructs consistently. Overall, these results support the reliability and convergent validity of both factors.

Regarding discriminant validity, the results in Table 7 support the distinction between factors F1 and F2. The correlation between F1 and F2 (0.63) is smaller than the square root of the AVE for both F1 (0.71) and F2 (0.72), satisfying the Fornell and Larcker criterion. In addition, the heterotrait-monotrait (HT/MT) ratio, a further measure of discriminant validity, was 0.63, well below the widely accepted threshold of 0.85. Taken together, these results indicate that F1 and F2 represent distinct, though correlated, dimensions, supporting the overall validity of the measurement model [43].

Table 7. Test of discriminant validity for the two-factor model based on the square root of the AVE for each construct (diagonal), the correlation between constructs (off-diagonal), and the heterotrait-monotrait (HT/MT) ratio.

| | F1 | F2 |
|---|---|---|
| F1 | 0.71 | |
| F2 | 0.63 | 0.72 |
| MT correlation average | 0.49 | 0.51 |

HT correlation average between F1 and F2 = 0.32; HT/MT ratio = 0.32/√(0.49 × 0.51) = 0.63.
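The HT/MT arithmetic in Table 7 can be reproduced with a few lines. The sketch below assumes the usual definition, the average heterotrait correlation divided by the geometric mean of the two average monotrait correlations, and uses the rounded averages printed in the table. With these rounded inputs the ratio evaluates to roughly 0.64; the small gap from the reported 0.63 is presumably due to rounding of the inputs. Either value is well below the 0.85 threshold.

```python
from math import sqrt

def htmt(heterotrait_mean, monotrait_mean_a, monotrait_mean_b):
    """Heterotrait-monotrait ratio from average item correlations."""
    return heterotrait_mean / sqrt(monotrait_mean_a * monotrait_mean_b)

# Rounded averages as printed in Table 7.
ratio = htmt(0.32, 0.49, 0.51)
print(round(ratio, 2), ratio < 0.85)
```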

4. Assessment of goodness-of-fit

Table 5 reveals a favorable model fit to the data. Among the absolute fit indices, the chi-square value normalized by degrees of freedom (χ2/df) is 1.93, below the cutoff of 3, indicating acceptable fit. The chi-square test itself yields a statistically significant result (p < 0.05), which is expected given its sensitivity to large sample sizes [44]; in such cases, the assessment commonly relies on additional fit indices. The Goodness of Fit Index (GFI) of 0.94 and the Root Mean Square Error of Approximation (RMSEA) of 0.05 (p = 0.375) provide evidence that the model fits the observed data well. The relative fit indices point the same way: the Comparative Fit Index (CFI), Incremental Fit Index (IFI), and Tucker-Lewis Index (TLI) all equal 0.96, and the Standardized Root Mean Square Residual (SRMR) is 0.04. The CFI, IFI, TLI, and AGFI values near unity, together with the small SRMR, indicate a very good fit to the data.
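A compact way to read the fit indices is to compare each one against a cutoff. In the sketch below, only the χ2/df < 3 criterion comes from the text; the remaining cutoffs (0.90 for GFI, CFI, IFI, and TLI; 0.08 for RMSEA and SRMR) are commonly cited rules of thumb adopted here as assumptions, and stricter values are also used in the literature. The dictionary names and the `evaluate_fit` helper are hypothetical.

```python
import operator

# Cutoffs are rules of thumb assumed for this sketch;
# only chi2/df < 3 is stated in the text above.
CUTOFFS = {
    "chi2/df": (operator.lt, 3.0),
    "GFI": (operator.ge, 0.90),
    "RMSEA": (operator.le, 0.08),
    "CFI": (operator.ge, 0.90),
    "IFI": (operator.ge, 0.90),
    "TLI": (operator.ge, 0.90),
    "SRMR": (operator.le, 0.08),
}

def evaluate_fit(indices, cutoffs=CUTOFFS):
    """Return {index name: True/False} for every index that has a cutoff."""
    return {
        name: cutoffs[name][0](value, cutoffs[name][1])
        for name, value in indices.items()
        if name in cutoffs
    }

# Values reported in the text for the two-factor model.
two_factor = {"chi2/df": 1.93, "GFI": 0.94, "RMSEA": 0.05,
              "CFI": 0.96, "IFI": 0.96, "TLI": 0.96, "SRMR": 0.04}
# Selected values reported for the three-factor model, for contrast.
three_factor = {"GFI": 0.847, "CFI": 0.894, "IFI": 0.894, "TLI": 0.883}

two_factor_result = evaluate_fit(two_factor)
three_factor_result = evaluate_fit(three_factor)
print(two_factor_result)
print(three_factor_result)
```

Under these assumed cutoffs every listed index passes for the two-factor model and none of the listed indices passes for the three-factor model, which matches the direction of the conclusions drawn in the text.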

In summary, the two-factor scale demonstrates convergent and discriminant validity, construct reliability, and a good fit to the data. Hypothesis 2, which posited a two-factor structure, is therefore supported. The resulting model, designated DigiCAS-HPS, comprises Factor 1 (Digital Communication, Collaboration and Online Security) and Factor 2 (Digital Content Development and Software Mastery) and can serve as a reliable tool for assessing the digital competencies of health professions students in the post-COVID-19 new normal era.

Differences of Digital Competence among Health Professions Students Assessed by DigiCAS-HPS Scale

The Mann-Whitney and Kruskal-Wallis tests were used to test differences in digital competence on the two factors among student groups classified by gender, age, field of study, and academic year. Older students tended to demonstrate higher proficiency in digital content development and software mastery (F2) than younger students, and students nearing graduation also appeared to possess more advanced competencies in this area than first- and second-year students. No statistically significant differences in digital competence were found among the remaining groups (Table 7).

Table 7. Differences in Digital Communication, Collaboration and Online Security (F1) and Digital Content Development and Software Mastery (F2) between student groups defined by demographic and academic characteristics.

| Grouping variable | Statistic | F1 | F2 |
|---|---|---|---|
| Age ¹* | Chi-square | 2.340 | 35.002 |
| | p-value | 0.310 | 0.0001 |
| Academic year ¹* | Chi-square | 0.683 | 15.197 |
| | p-value | 0.711 | 0.001 |
| Field of study ¹ | Chi-square | 2.578 | 1.807 |
| | p-value | 0.461 | 0.613 |
| Gender ² | Z | -0.170 | -1.062 |
| | p-value | 0.865 | 0.288 |
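The p-values in the table can be cross-checked from the reported test statistics. The sketch below assumes asymptotic p-values: the Kruskal-Wallis statistic is referred to a chi-square distribution with (number of groups − 1) degrees of freedom, and the Mann-Whitney Z to a standard normal distribution (two-sided). The degrees of freedom used here (2 for age and academic year, 3 for field of study) are assumptions inferred from the reported p-values and the four majors listed in Appendix B; they are not stated in this section. Only df = 2 and df = 3 are implemented, using closed forms available in the standard library.

```python
from math import erfc, exp, pi, sqrt

def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable (df = 2 or 3 only)."""
    if df == 2:
        return exp(-x / 2)
    if df == 3:
        return erfc(sqrt(x / 2)) + sqrt(2 * x / pi) * exp(-x / 2)
    raise ValueError("only df = 2 or 3 are implemented in this sketch")

def two_sided_normal_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return erfc(abs(z) / sqrt(2))

# Reported statistics for F1 with the assumed degrees of freedom.
p_age_f1 = chi2_sf(2.340, df=2)
p_year_f1 = chi2_sf(0.683, df=2)
p_field_f1 = chi2_sf(2.578, df=3)
p_gender_f1 = two_sided_normal_p(-0.170)

print(round(p_age_f1, 3), round(p_year_f1, 3),
      round(p_field_f1, 3), round(p_gender_f1, 3))
```

Agreement between these values and the tabulated p-values is consistent with the assumed degrees of freedom, although it does not by itself confirm how the groups were defined.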

5. Discussion

The main research question of the present study is: “What are the essential constructs and the validity of a digital competence assessment scale for health professions students (DigiCAS-HPS) in the post-COVID-19 new normal era?” To address this question, a mixed-methods approach was employed, combining qualitative and quantitative methodologies. During the initial qualitative phase, the conceptual framework and items of the instrument were developed through a synthesis of existing literature, including prominent digital competency frameworks such as DIGCOMP, EDSF, IEA, ICILS, ESCO and UNESCO. The framework was intentionally designed to include both cognitive (knowledge) and psychomotor (skill) domains. The cognitive domain involves an individual's knowledge and understanding as applied in real life, such as discussing and exchanging information, exploring the internet for learning, and using licensed software. In contrast, the psychomotor domain covers a person's ability to perform tasks that require training, expertise, or practice [45,46], such as using digital tools for collaboration and resolving group work issues. The integration of these domains is consistent with previous research on digital competencies for university students, which emphasizes the key role of both cognitive and psychomotor aspects [47,48]. Subsequent pilot testing and expert reviews yielded valuable feedback, leading to refinements in the clarity and appropriateness of the scale items.

Quantitatively, both Exploratory Factor Analysis (EFA) and Confirmatory Factor Analysis (CFA) were conducted to elucidate the structure of the scale. Initially, EFA detected a three-factor structure that demonstrated strong internal consistency but did not meet the criteria for convergent validity, discriminant validity, or goodness-of-fit. Subsequent adjustments based on modification indices culminated in a refined two-factor structure comprising Factor 1 (Digital Communication, Collaboration and Online Security) and Factor 2 (Digital Content Development and Software Mastery). This revised model (DigiCAS-HPS) demonstrated convergent and discriminant validity, construct reliability, and a strong fit to data collected from Vietnamese health professions students.

To show the usefulness of the DigiCAS-HPS, it is necessary to analyze how the digital competencies it measures relate to patient care expertise. According to a systematic review of healthcare professionals’ competence in digitalization, "knowledge and skills in digital technology can serve to enhance better patient care" [49]. Kijsanayotin et al. [50] reported that health care professionals need to be skilled in using health information technology (IT) in their daily lives.

Factor 1 (Digital Communication, Collaboration and Online Security) mainly measures discussion and exchange of information, use of digital tools for collaboration, privacy considerations, and engagement with digital media. The ability to discuss and exchange information on web platforms is fundamental in healthcare, where health professionals need to communicate efficiently with colleagues, patients, and interdisciplinary teams. This skill is crucial for telehealth, in which remote communication is central to patient care and telemedicine platforms are used for consultations or remote treatment monitoring [51,52]. During the COVID-19 pandemic in particular, robust in-hospital telehealth systems based on video conferencing addressed the challenge of communicating with patients in respiratory isolation and enabled patients affected by hospital restrictions to connect with their families [53]. Privacy considerations, as covered in the DigiCAS-HPS, play an important role in upholding patient confidentiality and ensuring adherence to healthcare ethics. The effectiveness of telehealth services could be severely compromised if significant privacy and security risks are not managed, and the absence of robust security and privacy safeguards for core telehealth data and systems can erode trust among both healthcare providers and patients [54]. Finally, engaging with diverse digital media platforms enhances understanding of medical subjects, a key requirement for health professions students. This practice gained new prominence in the wake of the COVID-19 pandemic, which compelled training programs to conform to social distancing requirements while upholding the rigorous standards of medical instruction. Of note, social media, with its distinctive and as-yet underexplored potential, is emerging as a promising supplement to formal medical education [55].

Factor 2 of the DigiCAS-HPS, which focuses on digital content development and software mastery, holds significant relevance for health professions students not only during their education but also in their future healthcare careers. It mainly measures competencies in peripheral device connectivity, multimedia-based report creation, information reorganization, anti-virus software usage, and content format conversion. These competencies are relevant to telehealth and digital health practice in several ways. In data management, health professionals often handle vast amounts of paper documents and files related to patient medical records. In developed countries, most health management systems apply bar codes to patient medical record folders and to patient account files to maintain accurate file locator systems [56]. Digital competence in reorganizing, converting, and protecting data is therefore crucial for ensuring patient privacy and providing efficient telehealth services. With regard to cybersecurity, threats increase as telehealth becomes more prevalent [57], and competence in using anti-virus software contributes to safeguarding patient data and maintaining the integrity of telehealth platforms.

Other digital competencies in Factor 2 involve peripheral device connectivity and multimedia-based report creation, both of which are necessary for remote consultations and patient education. In telehealth, health professionals need to ensure that the devices they use, such as webcams and microphones, are connected and functioning correctly, so proficiency in connecting peripheral devices is essential for telehealth consultations. Skills in creating multimedia-based communication are likewise vital for conveying medical information or health messages effectively to patients in remote areas.

In summary, the competencies within the DigiCAS-HPS scale equip health professions students with the digital skills and knowledge necessary for their future roles in healthcare. These competencies not only facilitate their education but also enable them to excel in digital health and telehealth in the post-COVID-19 new normal era, where digital proficiency is central to effective and secure patient care.

After the DigiCAS-HPS was confirmed as a valid measurement model, it was used to assess differences in digital competence across groups of students. Older students exhibited a higher level of proficiency in digital content development and software mastery than their younger counterparts, a finding that underscores the potential impact of age and cumulative experience in shaping digital competence. Students nearing graduation also displayed more advanced competencies in these areas, possibly because digital technologies are increasingly integrated into advanced coursework and clinical practice as students progress in their studies.

The study did not, however, identify statistically significant differences in digital competence by gender or field of study. Within the limits of this study, gender and specific health profession therefore do not appear to be important factors influencing digital competence. The absence of such differences does not diminish the importance of fostering digital competencies across all genders and fields of study in the health sector. Future research could delve deeper into the nuances of digital competence and explore potential variations not addressed in this study.

This research has achieved its goals by methodically designing, refining, and validating the DigiCAS-HPS scale. It addressed the research question, elucidated the internal factor structure of the scale, confirmed its reliability and validity, and examined differences in digital competence among health professions student groups of different ages and academic years.

Limitation

Despite the valuable insights garnered from this study, there are some inherent limitations that warrant consideration. Firstly, the research primarily focused on students from a single university, which may restrict the generalizability of the findings to a broader population of health professions students. In future research, expanding the participant pool to encompass a more diverse selection of universities and educational settings would enhance the questionnaire's applicability and the broader understanding of digital competence among health professions students.

Additionally, the development and validation of the DigiCAS-HPS scale were conducted within the context of the post-COVID-19 new normal era, which represents a distinct period characterized by significant shifts in educational and healthcare practices. Consequently, the generalizability of the scale's application to different contexts or eras should be approached with caution. The rapid evolution of digital technologies and healthcare practices demands a continuous evaluation of digital competence frameworks and scales to ensure their relevance in an ever-changing landscape.

Furthermore, while this study focused on the dimensions of digital competence related to health professions students, the field of digital competence is multifaceted and continually evolving. The DigiCAS-HPS scale primarily addresses certain dimensions and may not encompass the full spectrum of digital competencies required in the health sector. Thus, future research should aim to explore and expand the dimensions of digital competence, keeping pace with the evolving digital landscape in healthcare.

In conclusion, while this study successfully developed and validated the DigiCAS-HPS scale to assess the digital competence of health professions students in the post-COVID-19 new normal era, the aforementioned limitations suggest directions for future research and the ongoing refinement of digital competence assessment tools in the context of healthcare education and practice.

6. Conclusions

The findings of this research underscore the robustness of the two-factor digital competence assessment scale designed specifically for health professions students. This structure, which consists of Digital Communication, Collaboration, and Online Security (Factor 1) and Digital Content Development and Software Mastery (Factor 2), consistently exhibited strong construct validity and reliability, and demonstrated a remarkable fit to the data. These results hold significant implications for health professions student training. They offer a comprehensive and precise tool to evaluate and enhance digital competence, ensuring that students are well-prepared for the dynamic and technology-driven healthcare landscape of today and the future.

Furthermore, within the broader context of digital healthcare and telehealth, this research carries positive implications. Health professions students equipped with robust digital communication, collaboration, online security, content development, and software mastery skills will be better positioned to deliver telehealth services that are both efficient and secure. This not only elevates the quality of patient care but also fosters the expansion and accessibility of telehealth, a mode of healthcare delivery that has become increasingly vital in the post-COVID-19 new normal era. The synergistic relationship between education, digital competence, and the transformation of healthcare delivery will play a critical role in shaping the future of the healthcare industry.

As healthcare education and practice continue to evolve in response to technological advancements and changing societal needs, this research serves as a foundational building block for the development of tailored training programs and the enhancement of digital competencies among health professions students. It offers an evidence-based framework for educators, healthcare institutions, and policymakers to ensure that the healthcare workforce of the future is not only technically proficient but also skilful at leveraging digital tools to provide high-quality, secure, and accessible care.

Author Contributions

Conceptualization, C.N.L., U.T.T.N. and L.M.L.; methodology, C.N.L., U.T.T.N. and L.M.L.; software, C.N.L.; validation, C.N.L. and U.T.T.N.; formal analysis, C.N.L. and L.M.L.; investigation, C.N.L., U.T.T.N. and L.M.L.; resources, C.N.L. and U.T.T.N.; data curation, C.N.L., U.T.T.N. and L.M.L.; writing—original draft preparation, C.N.L.; writing—review and editing, C.N.L.; visualization, U.T.T.N.; supervision, C.N.L.; project administration, C.N.L. and U.T.T.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. This study was conducted without any financial support or sponsorship from external organizations. All aspects of this research, including data collection, analysis, and interpretation, were carried out independently without the influence of funding or financial backing from any external entities.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of Dong Thap Medical College (protocol code No. 01/257/QD-CDYT; date of approval: 1 June 2022) for studies involving humans.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data are available from the corresponding author upon reasonable request.

Acknowledgments

We would like to extend our gratitude to the health professions students at Dong Thap Medical College who generously volunteered for this study. We are also very grateful to the Research Section and lecturers for their collaboration.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Questionnaire assessing health professions students' digital skills in draft version

| # | Digital competence | Self-assessment (1) | (2) | (3) | (4) | (5) |
|---|---|---|---|---|---|---|
| 1 | I can browse, search and filter information, data, and digital content using various search engines (e.g. Google, Yahoo, Bing) and databases, using appropriate keywords and advanced search | | | | | |
| 2 | I can install an application from .exe file or connect peripheral devices such as printers, digital cameras, portable hard drives, etc. to my computer or notebook | | | | | |
| 3 | I can discuss and exchange any issue on the web (Facebook, Zoom, Google meeting, etc.) with friends, classmates, teachers, and other individuals. | | | | | |
| 4 | I can do and submit assignments to teachers through web applications such as Google Apps, Microsoft 365, etc. | | | | | |
| 5 | I encourage myself and others not to illegally download content or otherwise disrespect digital property. | | | | | |
| 6 | I can utilize digital tools such as Microsoft Teams and Zoom to facilitate efficient collaboration in my studies, enabling effective teamwork and communication. | | | | | |
| 7 | I know how to solve some routine problems (e.g. close program, re-start computer, re-install/update program, check internet connection). | | | | | |
| 8 | I am capable of sharing study-related information and documents on platforms like blogs and Facebook. | | | | | |
| 9 | I can use a graphing program to draw figures or to report statistics | | | | | |
| 10 | I can make the report in class with mixed media such as PowerPoint slides, videos, etc. | | | | | |
| 11 | I can find and decide on the best keywords to use for online searches | | | | | |
| 12 | I can select information relevant to the issues from a large number of Google search results | | | | | |
| 13 | I can reorganize the information retrieved from digital media to summarize the answers, e.g. grouping data, tables, etc. | | | | | |
| 14 | When I come across information that is referenced from other sources, I always check the primary source and compare the authenticity and reliability of the information | | | | | |
| 15 | I frequently explore the internet to expand my learning by discovering new knowledge and information. | | | | | |
| 16 | I post only good, original, unique, and useful posts/articles that are useful to the public | | | | | |
| 17 | I engage with diverse digital media platforms, including YouTube, blogs, and slideshares, to enhance my understanding of subjects relevant to my studies. | | | | | |
| 18 | I can collaborate on group reports using social networks like Line, Facebook, Zalo, etc. | | | | | |
| 19 | I consider the appropriateness of disclosing personal information (Profile) on social media before deciding. | | | | | |
| 20 | I generally use licensed software instead of cracked software | | | | | |
| 21 | I use applications such as Zoom, Google Meeting, Microsoft Team, etc. to help and resolve group work issues. | | | | | |
| 22 | I create a group on social networks such as Line, Facebook, Zalo, Viber etc. to follow news and share useful content with others | | | | | |
| 23 | I can effectively distribute pictures, images, videos, and music publicly on the internet. | | | | | |
| 24 | I can search and find groups on a particular topic such as hobbies, music, art, science, etc., on different social media | | | | | |
| 25 | I use storage space on the internet such as Dropbox, GoogleDrive, iCloud, Microsoft Onedrive, etc. to store and share documents, pictures, music, video or other files for private purposes | | | | | |
| 26 | I cite the source of images and articles copied from the website for reporting purposes | | | | | |
| 27 | I always consider the impact on other people or society when sharing and posting images or messages | | | | | |
| 28 | I can install and use anti-virus software on my computer | | | | | |
| 29 | I can evaluate the accuracy of internet information sources by verifying it in another source | | | | | |
| 30 | I can set up and use video calling platforms such as Skype, Zoom, FaceTime | | | | | |
| 31 | I can update and change passwords when personal information needs to be kept safe | | | | | |
| 32 | I can guarantee that online login information is not shared with anyone. | | | | | |
| 33 | I can judge whether a website is safe and reliable. | | | | | |
| 34 | I can delete my account on various social networks and/or electronic services. | | | | | |
| 35 | I can translate content into other languages using translation tools. | | | | | |
| 36 | I can convert content from one format to another format (e.g., Word to PDF) | | | | | |
| 37 | I can protect myself and others from spam and phishing messages | | | | | |

Appendix B

Questionnaire assessing health professions students' digital skills in Dong Thap province, Vietnam

(Final DigiCAS-HPS Model used as Measurement Model)

After online learning for a long time during the COVID-19 outbreak, you can tell about your digital competence by honestly answering the following questions. Your information will be kept confidential, used only for research purposes, and will not affect your work and life. Thank you very much for your cooperation.

Instructions: Read the sentences below and choose the answer that best suits your current digital competence:

- (1) Very unlikely: I have never done it or cannot do it even with someone's help.
- (2) Unlikely: I cannot do it myself without help.
- (3) Not confirmed: Sometimes I can do it myself without help.
- (4) Likely: I can do it on my own and solve problems that arise.
- (5) Very likely: I have mastered it, can solve problems, and can teach others at the same time.

Part I: Demographic information

1. Age:……………..

2. Gender:

□ Female

□ Male

3. Academic year:…………………….

4. Majors:

□ Nursing

□ Pharmacy

□ Physiotherapy

□ Medical Lab Technology

5. Internet device use

□ Desk computer

□ Laptop

□ Smartphone

Part II: Digital competence

| # | Digital competence | Self-assessment (1) | (2) | (3) | (4) | (5) |
|---|---|---|---|---|---|---|
| 1 | Q2. I can install an application from .exe file or connect peripheral devices such as printers, digital cameras, portable hard drives, etc. to my computer or notebook | | | | | |
| 2 | Q3. I can discuss and exchange any issue on the web (Facebook, Zoom, Google meeting, etc.) with friends, classmates, teachers, and other individuals. | | | | | |
| 3 | Q6. I can utilize digital tools such as Microsoft Teams and Zoom to facilitate efficient collaboration in my studies, enabling effective teamwork and communication. | | | | | |
| 4 | Q8. I am capable of sharing study-related information and documents on platforms like blogs and Facebook. | | | | | |
| 5 | Q10. I can make the report in class with mixed media such as PowerPoint slides, videos, etc. | | | | | |
| 6 | Q13. I can reorganize the information retrieved from digital media to summarize the answers, e.g. grouping data, tables, etc. | | | | | |
| 7 | Q15. I frequently explore the Internet to expand my learning by discovering new knowledge and information. | | | | | |
| 8 | Q16. I post only good, original, unique, and useful posts/articles that are useful to the public | | | | | |
| 9 | Q17. I engage with diverse digital media platforms, including YouTube, blogs, and slideshares, to enhance my understanding of subjects relevant to my studies. | | | | | |
| 10 | Q19. I consider the appropriateness of disclosing personal information (Profile) on social media before deciding. | | | | | |
| 11 | Q20. I generally use licensed software instead of cracked software | | | | | |
| 12 | Q21. I use applications such as Zoom, Google Meeting, Microsoft Team, etc. to help and resolve group work issues. | | | | | |
| 13 | Q22. I create a group on social networks such as Line, Facebook, Zalo, Viber etc. to follow news and share useful content with others | | | | | |
| 14 | Q28. I can install and use anti-virus software on my computer | | | | | |
| 15 | Q31. I can update and change passwords when personal information needs to be kept safe | | | | | |
| 16 | Q36. I can convert content from one format to another format (e.g., Word to PDF) | | | | | |

References

- Li, C.; Lalani, F. The COVID-19 pandemic has changed education forever. In World Economic Forum 2020 Apr 29 (Vol. 29).

- Edirippulige, S.; Brooks, P.; Carati, C.; Wade, V.A.; Smith, A.C.; Wickramasinghe, S.; Armfield, N.R. It’s important, but not important enough: eHealth as a curriculum priority in medical education in Australia. J Telemed Telecare. 2018, 24, 697–702.

- Terry, J.; Davies, A.; Williams, C.; Tait, S.; Condon, L. Improving the digital literacy competence of nursing and midwifery students: A qualitative study of the experiences of NICE student champions. Nurse Educ. Pract. 2019, 34, 192–8.

- Evangelinos, G.; Holley, D. Developing a digital competence self-assessment toolkit for nursing students. In European Distance and E-Learning Network Conference Proceedings 2014 Jun 10 (No. 1, pp. 206-212).

- Gürdaş Topkaya, S.; Kaya, N. Nurses' computer literacy and attitudes towards the use of computers in health care. Int J Nurs Pract. 2015, 21, 141–9.

- European Communities. Key competences for lifelong learning: European reference framework. Office for Official Publications of the European Communities Luxembourg. 2007.

- Khan, S.A.; Bhatti, R. Digital competencies for developing and managing digital libraries: An investigation from university librarians in Pakistan. Electron Libr. 2017, 35, 573–97.

- Ferrari, A. Digital competence in practice: An analysis of frameworks. Luxembourg: Publications Office of the European Union; 2012 Sep.

- Janssen, J.; Stoyanov, S. Online consultation on experts’ views on digital competence. 2012.

- Luić, L.; Alić, M. The importance of developing students’ digital skills for the digital transformation of the curriculum. In INTED 2022 Proceedings 2022 (pp. 7207-7215). IATED.

- Perifanou, M.; Economides, A.A. The digital competence actions framework. In: ICERI 2019 Proceedings. IATED Digital Library; 2019.

- European Commission. DigComp Framework 2.2. [Online]. 2020 [cited 2023 Feb 22]. Available from URL: https://joint-research-centre.ec.europa.

- Law, N.W.; Woo, D.J.; de la Torre, J.; Wong, K.W. A global framework of reference on digital literacy skills for indicator 4.4.2. UNESCO Institute for Statistics; 2018.

- UK Department for Education. National standards for essential digital skills. [Online]. 2019 [cited 2023 May 10]. Available from URL: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/796596/National_standards_for_essential_digital_skills.pdf.

- Fraillon, J.; Ainley, J.; Schulz, W.; Duckworth, D.; Friedman, T. IEA international computer and information literacy study 2018 assessment framework. Springer Nature; 2019.

- ESCO. Digital competencies, European Skills, Competences, Qualifications and Occupations. [Online]. 2019 [cited 2023 Jan 15]. Available from URL: http://data.europa.eu/esco/skill/aeecc330-0be9-419f-bddb-5218de926004.

- Towards a National Digital Skills Framework for Irish Higher Education. [Online]. 2015 [cited 2023 Aug 21]. Available from: URL: https://www.teachingandlearning.ie/wp-content/uploads/NF-2016-Towards-a-National-Digital-Skills-Framework-for-Irish-Higher-Education.

- Yu, H.; Liu, P.; Huang, X.; Cao, Y. Teacher online informal learning as a means to innovative teaching during home quarantine in the COVID-19 pandemic. Front. Psychol. 2021, 12, 596582.

- Tømte, C.; Hatlevik, O.E. Gender-differences in self-efficacy ICT related to various ICT-user profiles in Finland and Norway. How do self-efficacy, gender and ICT-user profiles relate to findings from PISA 2006. Comput. Educ. 2011, 57, 1416–24.

- Mariano, B. Towards a global strategy on digital health. Bulletin of the World Health Organization. 2020 Apr 4;98(4):231.

- Evangelinos, G.; Holley, D. Developing a digital competence self-assessment toolkit for nursing students. In: Proceedings of the European Distance and E-Learning Network 2014 Annual Conference. 2014.

- Peart, M.T.; Gutiérrez-Esteban, P.; Cubo-Delgado, S. Development of the digital and socio-civic skills (DIGISOC) questionnaire. Educ Technol Res Dev. 2020, 68, 3327–51.

- Yusoff, M.S.B. ABC of content validation and content validity index calculation. Educ Med J. 2019, 11, 49–54.

- Polit, D.F.; Beck, C.T. The content validity index: are you sure you know what’s being reported? Critique and recommendations. Res Nurs Health 2006, 29, 489–97.

- MacCallum, R.C.; Roznowski, M.; Mar, C.; Reith, J.V. Alternative strategies for cross-validation of covariance structure models. Multivar. Behav. Res. 1994, 29, 1–32.

- DeVellis, R.F. Scale Development: Theory and Applications (Applied Social Research Methods), 4th ed.; SAGE Publications: Los Angeles, CA; 2017; pp. 105–125.

- George, D.; Mallery, P. SPSS for Windows step by step: A simple guide and reference. 7th Ed. Pearson Education. 2003.

- Briggs, N.E.; MacCallum, R.C. Recovery of Weak Common Factors by Maximum Likelihood and Ordinary Least Squares Estimation. 2010 [Cited 2023 January 1]; 3: 171. Available from: https://www.tandfonline.com/loi/hmbr20.

- Cudeck, R. Exploratory factor analysis. In: Handbook of applied multivariate statistics and mathematical modeling. Elsevier; 2000. p. 265–96.

- Stevens, J. Applied multivariate statistics for the social sciences. Hillsdale, NJ: L. Erlbaum Associates Inc.; 1986.

- Cabrera-Nguyen, P. Author Guidelines for Reporting Scale Development and Validation Results in the Journal of the Society for Social Work and Research. J Soc Social Work Res. 2010, 1, 99–103.

- Beavers, A.S.; Lounsbury, J.W.; Richards, J.K.; Huck, S.W.; Skolits, G.J. Practical Considerations for Using Exploratory Factor Analysis in Educational Research. Pract Assessment, Res Eval. 2013 [Cited December 25 2022]; 18. Available from: https://scholarworks.umass.edu/pare/vol18/iss1/6.

- Tabachnick, B.G.; Fidell, L.S.; Ullman, J.B. Using multivariate statistics. Vol. 6. Pearson Boston, MA; 2013.

- Hu, L.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct Equ Model A Multidiscip J 1999, 6, 1–55.

- Brown, T.A.; Moore, M.T. Confirmatory factor analysis. In Handbook of structural equation modeling; 2012; pp. 361–379.

- Malkanthie, A. Structural Equation Modeling with AMOS. 2015 [Cited December 15 2022]. Available from: https://www.researchgate.net/publication/278889068_Structural_Equation_Modeling_with_AMOS.

- MacCallum, R.C.; Browne, M.W.; Sugawara, H.M. Power Analysis and Determination of Sample Size for Covariance Structure Modeling. Psychological Methods. 1996 [Cited January 25 2023]; 1(2):130-149. Available from: http://www.statpower.net/Content/312/Handout/MacCallumBrowneSugawara96.pdf.

- Browne, M.W.; Cudeck, R. Alternative ways of assessing model fit. In Bollen, K.A.; Long, J.S. (Eds.), Testing structural equation models. Newbury Park, CA: Sage; 1993.

- Ben-Shachar, M.S.; Makowski, D.; Lüdecke, D.; Patil, I.; Wiernik, B.M.; Thériault, R. Interpret of CFA / SEM Indices of Goodness of Fit. 2022 [Cited January 05 2023]. Available from: https://easystats.github.io/effectsize/reference/interpret_gfi.html.

- Hair, J.F.; Babin, B.J.; Black, B.; Anderson, R.E.; Tatham, R.L. Multivariate Data Analysis : a global perspective. New Jersey: Pearson Education Inc. 2010.

- Streiner, D.L. Figuring out factors: the use and misuse of factor analysis. Can J Psychiatry 1994, 39, 135–140.

- Kline, R.B. Principles and practice of structural equation modeling. New York, NY Guilford. 2011;14:1497–513.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- Hansen, A.M.; Jeske, D.; Kirsch, W. A chi-square goodness-of-fit test for autoregressive logistic regression models with applications to patient screening. J. Biopharm. Stat. 2015, 25, 89–108.

- Cha, S.E.; Jun, S.J.; Kwon, D.Y.; Kim, H.S.; Kim, S.B.; Kim, J.M.; et al. Measuring achievement of ICT competency for students in Korea. Comput Educ. 2011, 56, 990–1002.

- Suwanroj, T.; Leekitchwatana, P.; Pimdee, P. Investigating digital competencies for undergraduate students at Nakhon Si Thammarat Rajabhat University. In DRLE 2017 The 15th international conference faculty of industrial education and technology King Mongkut’s Institute of Technology Ladkrabang 2017 (Vol. 27, No. 2, pp. 11-19).

- Krasnova, L.A.; Shurygin, V.Y. Development of teachers’ information competency in higher education institution. Astra Salvensis 2017, 5, 307–14.

- Leekitchwatana, P. Development of competency factors for information technology. KKU Res J. 2017, 15, 1101–13.

- Konttila, J.; Siira, H.; Kyngäs, H.; Lahtinen, M.; Elo, S.; Kääriäinen, M.; et al. Healthcare professionals’ competence in digitalisation: A systematic review. J Clin Nurs. 2019, 28, 745–61.

- Kijsanayotin, B.; Pannarunothai, S.; Speedie, S.M. Factors influencing health information technology adoption in Thailand's community health centers: applying the UTAUT model. Int. J. Med. Inform. 2009, 78, 404–16.

- Brecher, D.B. The use of Skype in a community hospital inpatient palliative medicine consultation service. J Palliat Med. 2013, 16, 110–2.

- Ward, M.M.; Jaana, M.; Natafgi, N. Systematic review of telemedicine applications in emergency rooms. Int J Med Inform. 2015, 84, 601–16.

- Fang, H.; Wang, L.; Yang, Y. Human mobility restrictions and the spread of the novel coronavirus (2019-nCoV) in China. J. Public Econ. 2020, 191, 104272.

- Hollander, J.E.; Carr, B.G. Virtually perfect? Telemedicine for COVID-19. N Engl J Med. 2020, 382, 1679–81.

- Katz, V.S.; Jordan, A.B.; Ognyanova, K. Digital inequality, faculty communication, and remote learning experiences during the COVID-19 pandemic: A survey of US undergraduates. PLoS ONE 2021, 16, e0246641.

- Burney, S.M.A.; Mahmood, N.; Abbas, Z. Information and communication technology in healthcare management systems: Prospects for developing countries. Int J Comput Appl. 2010, 4, 27–32.