Submitted: 14 November 2023

Posted: 15 November 2023

Abstract

Keywords:

1. Introduction

1.1. Barriers to AI Acceptance within PC

1.2. Research Aims

- What are the social barriers and challenges hindering the trust and acceptance of AI within PC?

- What are the specific requirements and expectations of different stakeholder levels within the PC domain for AI, considering their computing needs?

- How can the specific requirements be addressed using explainable AI (XAI) techniques at each stakeholder level?

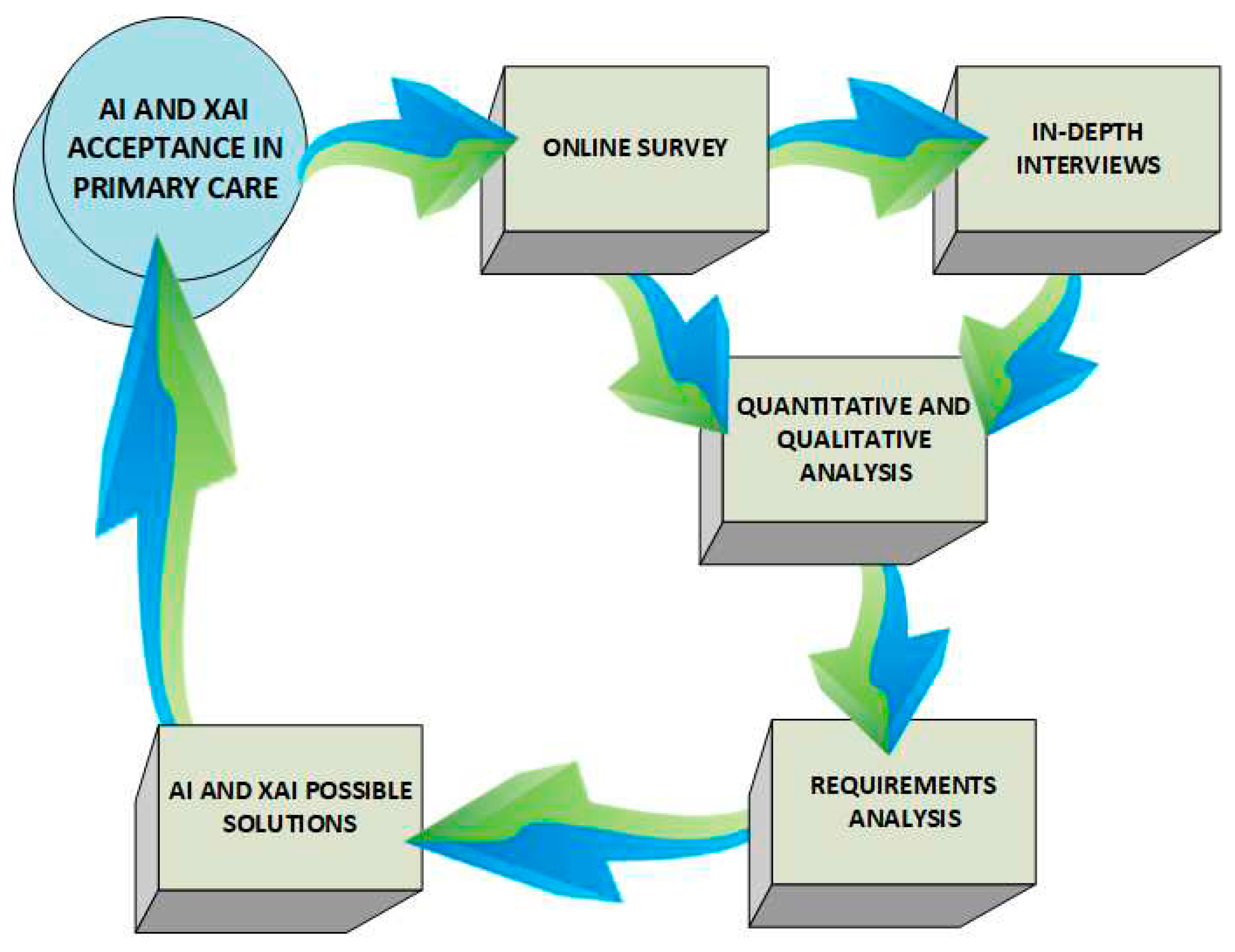

2. Methods

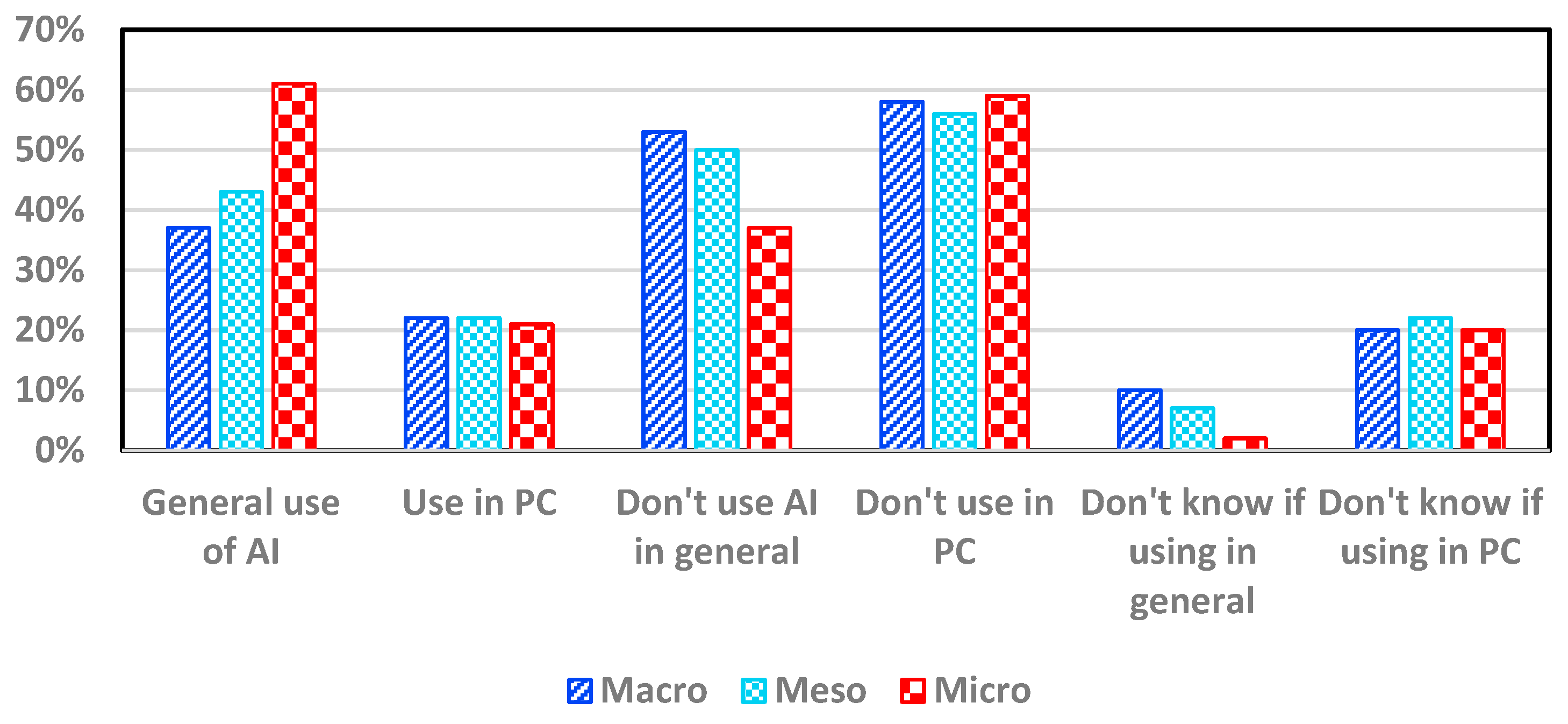

3. Results

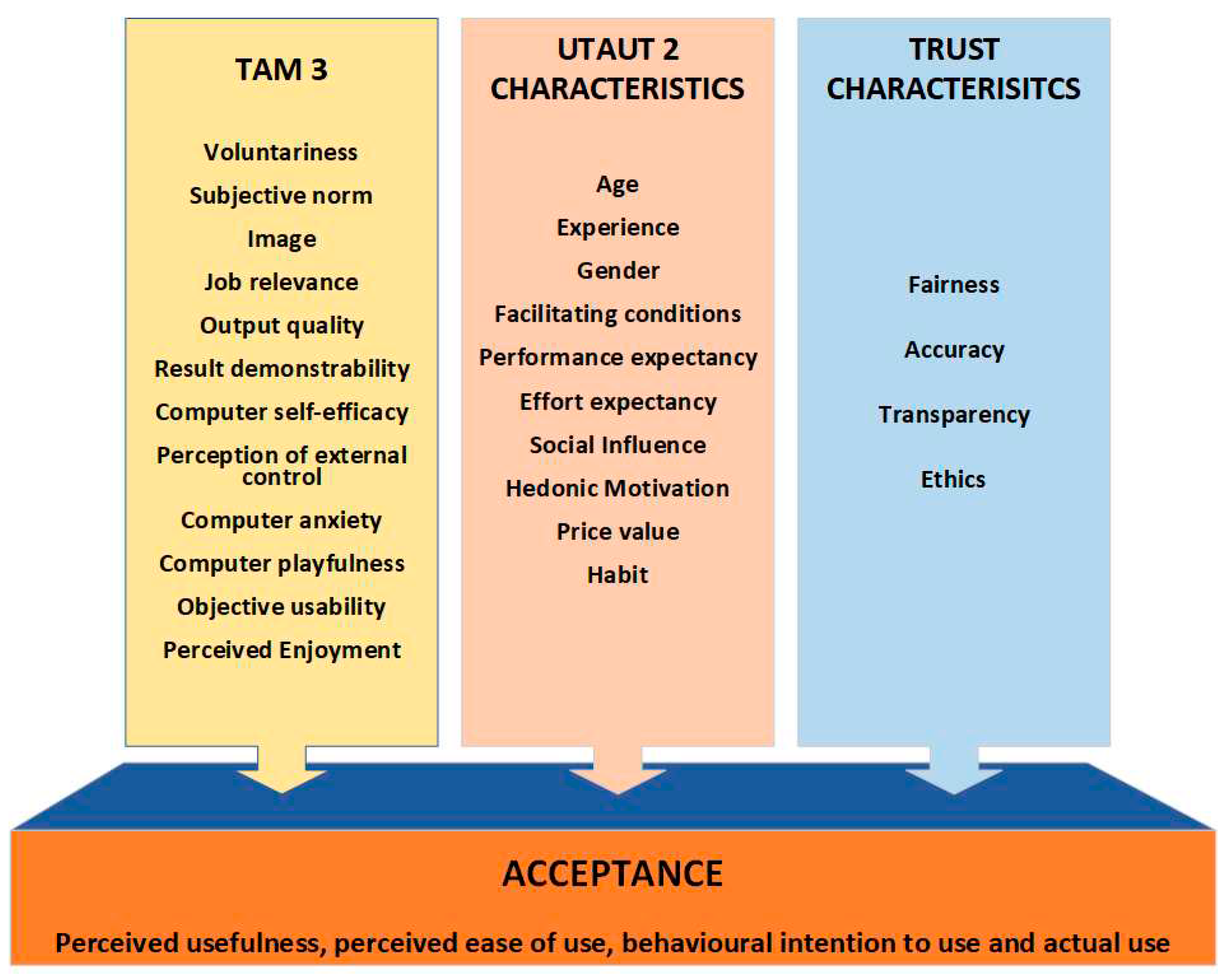

4. Discussion

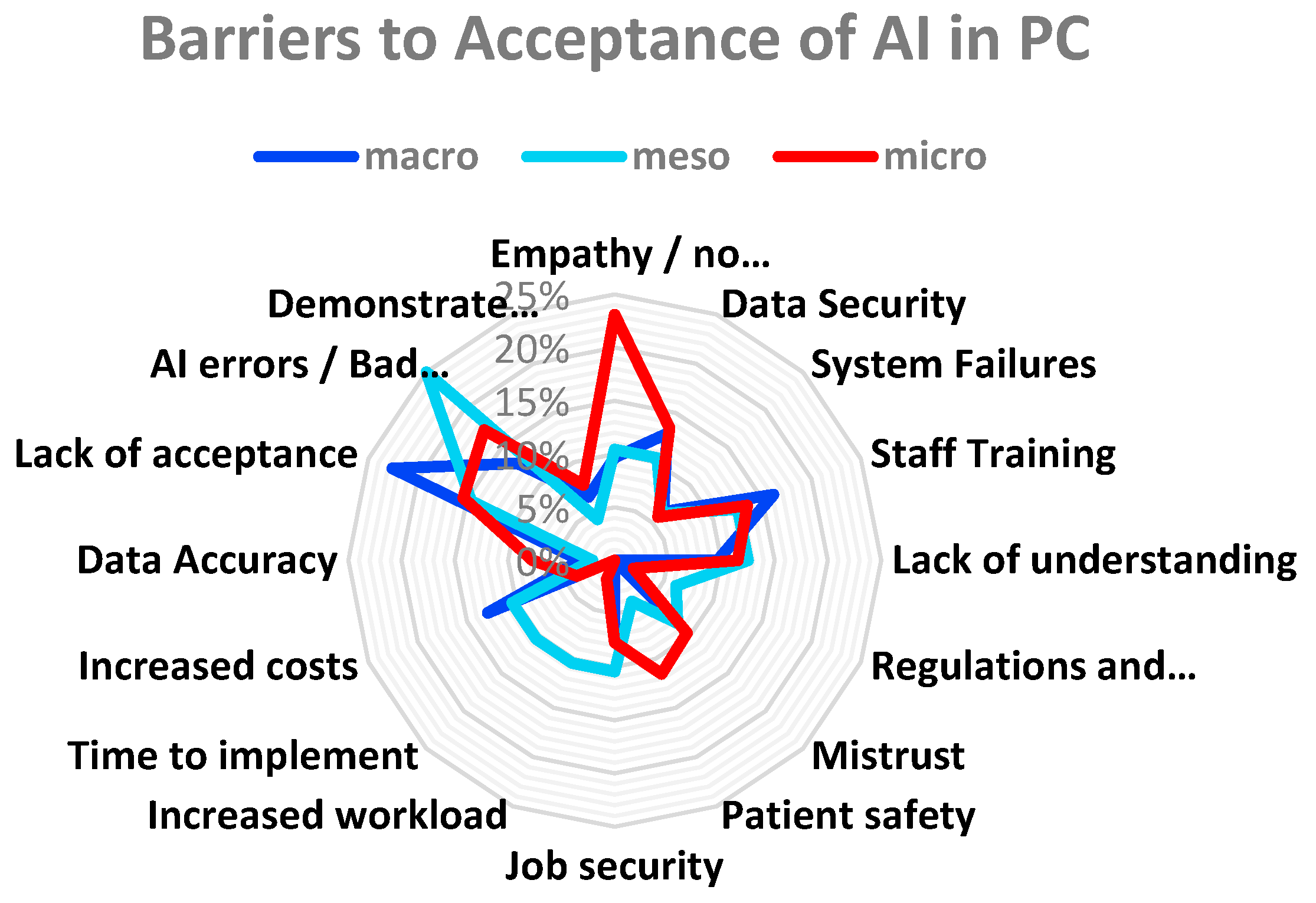

4.1. Research Objective 1: Barriers and Challenges Affecting Trust and Acceptance

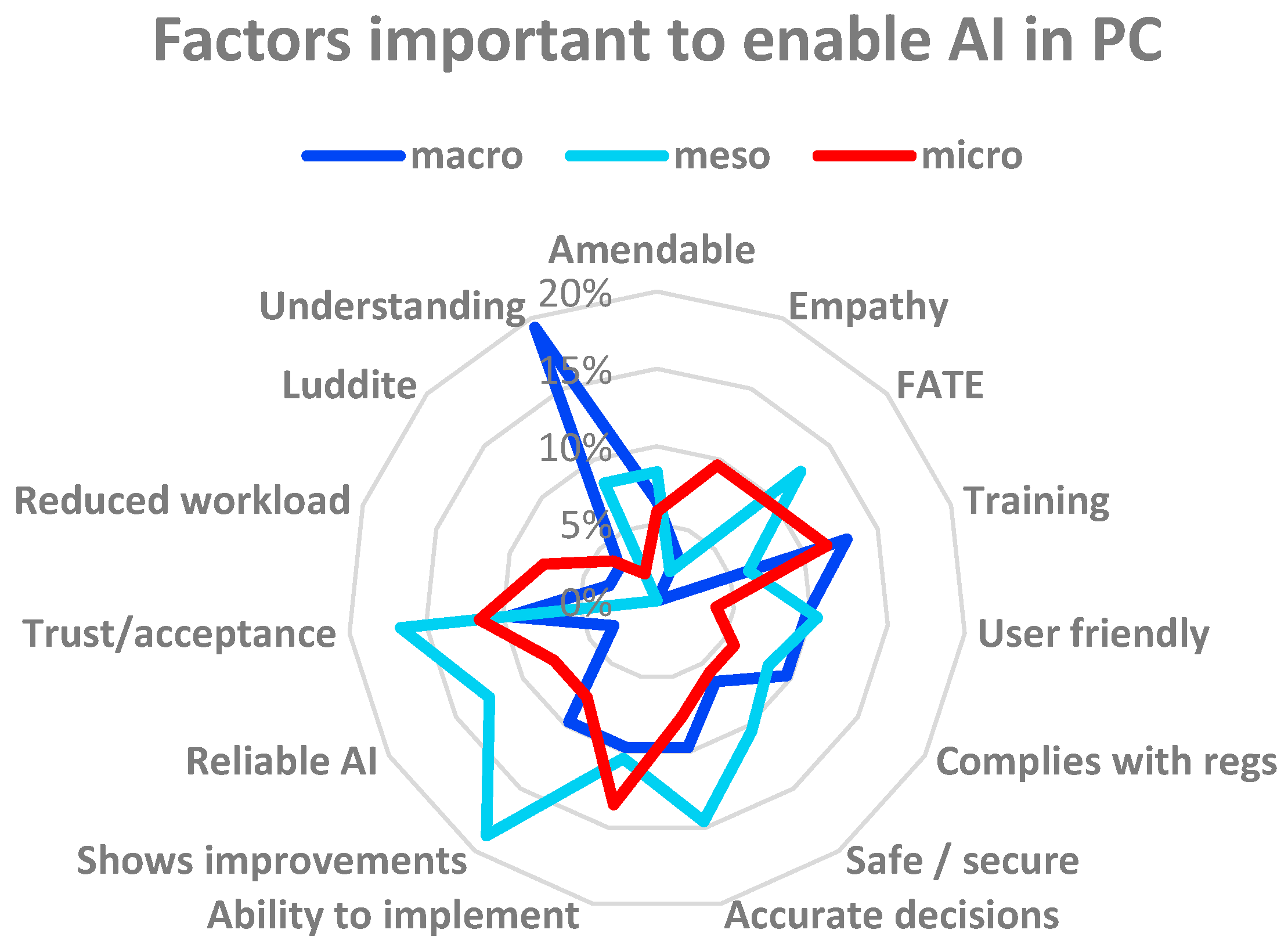

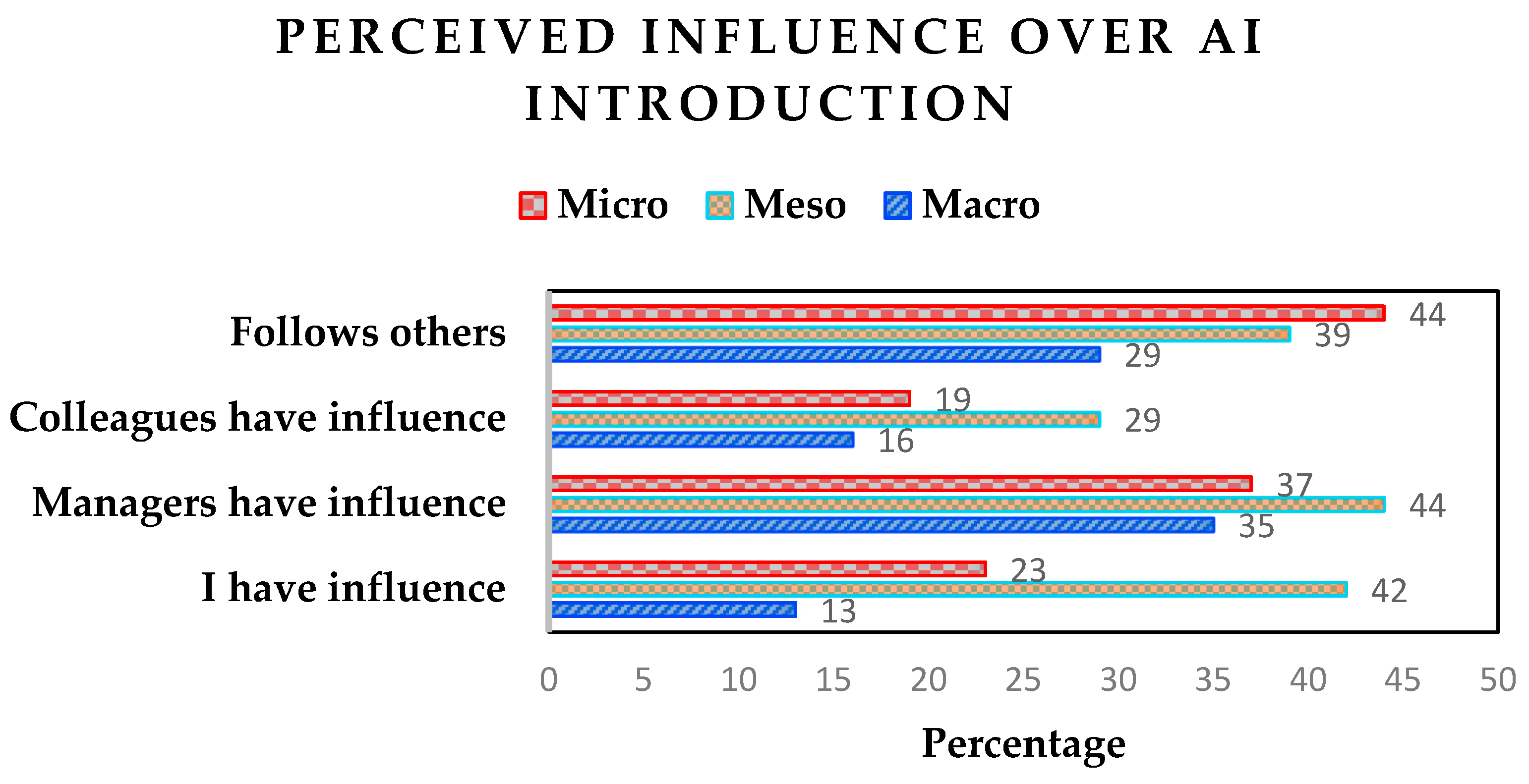

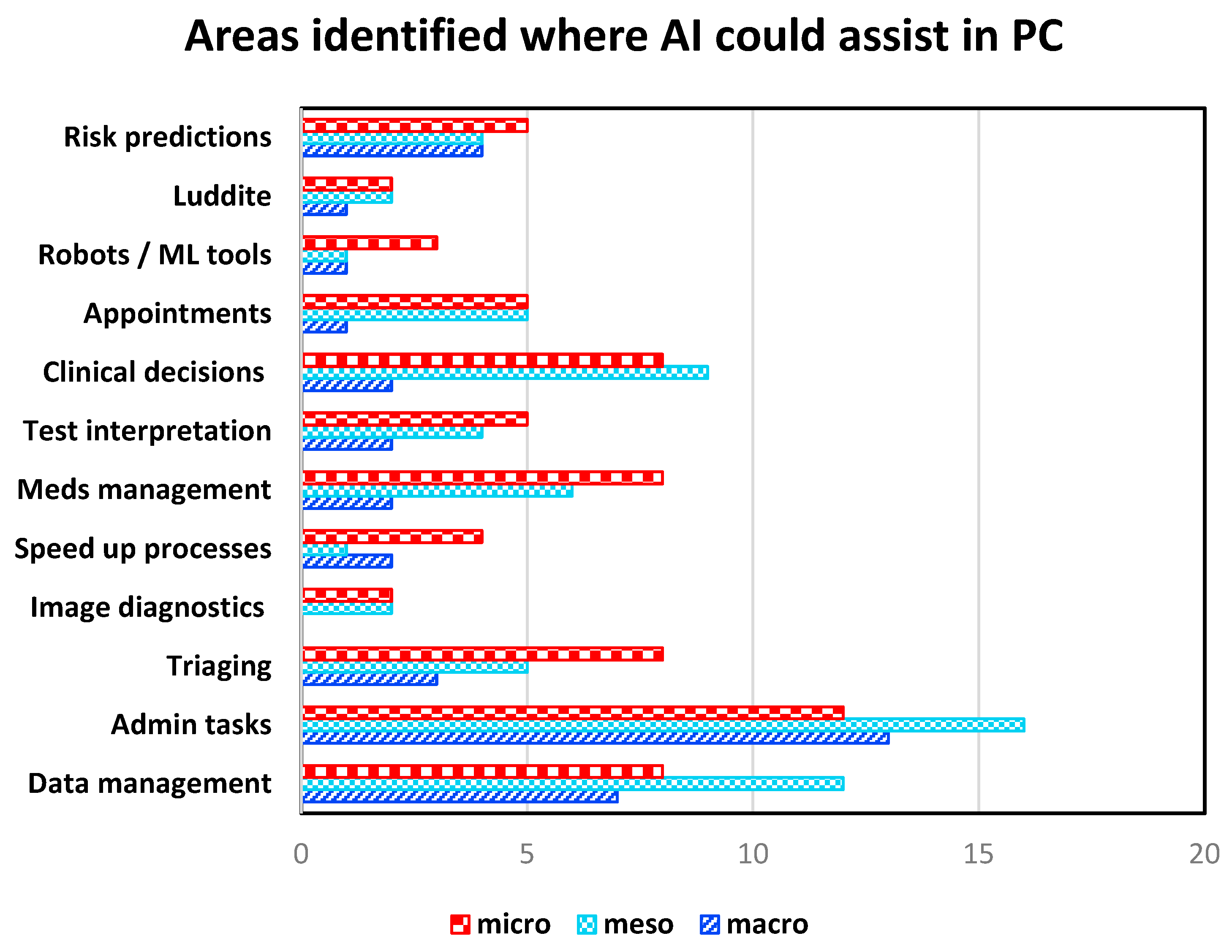

4.2. Research Objective 2: Requirements and Expectations of Different Stakeholder Levels

- GP Connect Access Document – retrieve unstructured documents from a patient’s record.

- GP Connect Access Record: HTML – view a patient’s record with read-only access.

- GP Connect Access Record: Structured – retrieve structured information from a patient’s record.

- National Data Opt-Out – capture patients’ preferences towards the sharing of their data for research purposes.

- Summary Care Record – access an electronic record containing important patient information.
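As an illustration only, the services listed above could be held in a small lookup table, so that a prototype can match a stakeholder's stated data need to a candidate API. The Python sketch below assumes nothing beyond the names and descriptions in the list; the `NhsApi` class, the `CATALOGUE` tuple and the `find_apis` helper are hypothetical names introduced here, and no real NHS endpoint is called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NhsApi:
    """One catalogue entry: the API name and its purpose as described in the list above."""
    name: str
    purpose: str


# Entries copied from the list above; this is not an official or complete catalogue.
CATALOGUE = (
    NhsApi("GP Connect Access Document",
           "retrieve unstructured documents from a patient's record"),
    NhsApi("GP Connect Access Record: HTML",
           "view a patient's record with read-only access"),
    NhsApi("GP Connect Access Record: Structured",
           "retrieve structured information from a patient's record"),
    NhsApi("National Data Opt-Out",
           "capture patients' preferences towards the sharing of their data for research purposes"),
    NhsApi("Summary Care Record",
           "access an electronic record containing important patient information"),
)


def find_apis(keyword: str) -> list[str]:
    """Return the names of APIs whose purpose mentions the keyword (case-insensitive)."""
    needle = keyword.lower()
    return [api.name for api in CATALOGUE if needle in api.purpose.lower()]
```

For example, `find_apis("research")` would return only the National Data Opt-Out entry. Simple substring matching is used purely to keep the sketch short; a real mapping from stakeholder requirements to APIs would need a curated vocabulary.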

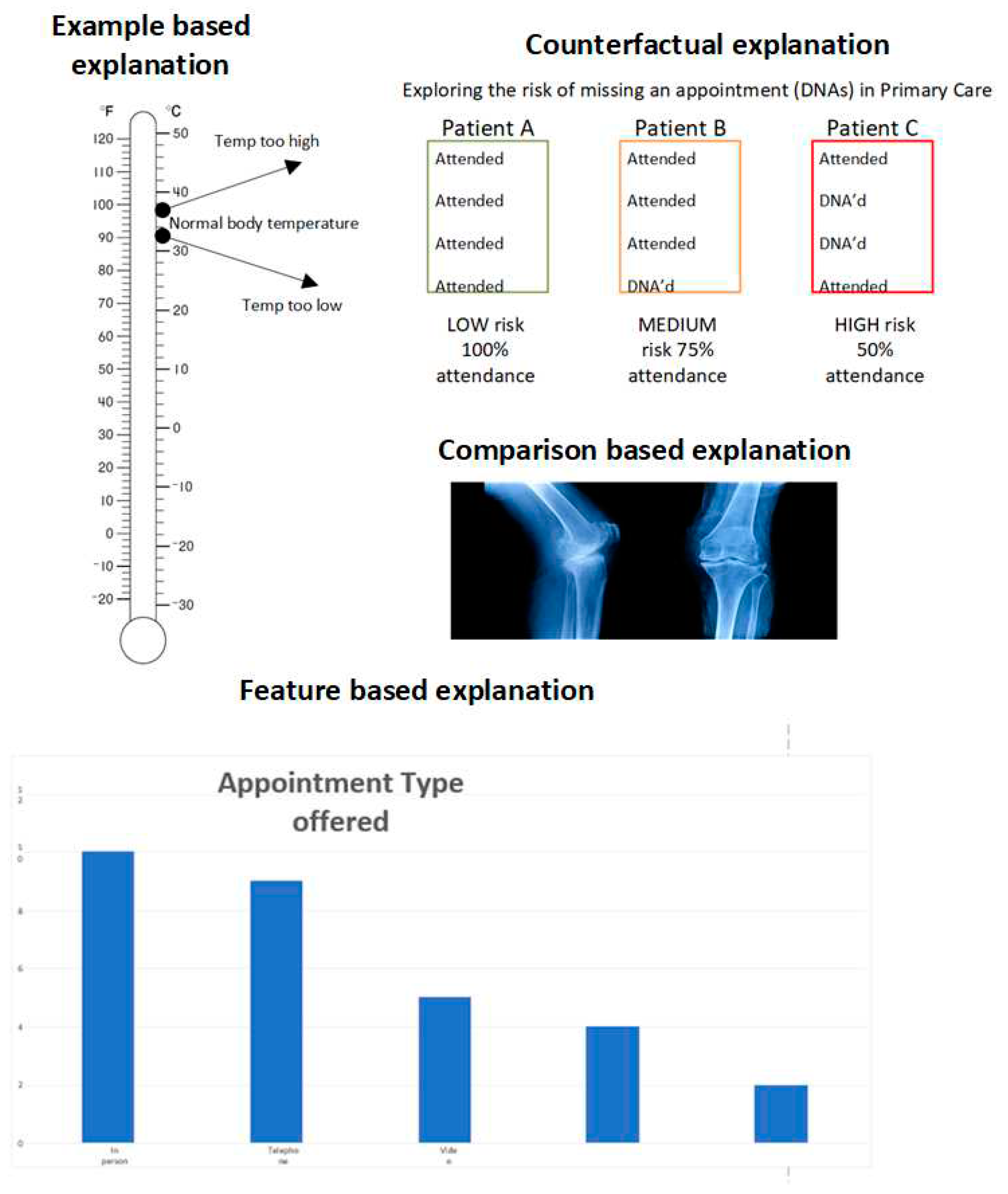

4.3. Research Objective 3: Addressing the Specific Requirements

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| | Macro | Meso | Micro | Population |
|---|---|---|---|---|
| Fairness | 10 | 15 | 16 | 37 |
| Accountability | 4 | 7 | 7 | 22 |
| Transparency | 1 | 2 | 2 | 9 |
| Ethics | 16 | 24 | 27 | 63 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).