1. Introduction

A group of linked diseases collectively referred to as "cancer" occurs when body cells begin to divide uncontrollably and invade nearby tissues [1]. Carcinoma, the most common type of cancer, can be dangerous [2]. Ultraviolet rays, the primary cause of skin cancer, damage the deoxyribonucleic acid (DNA) in skin cells [3]. Genetic defects may also result in cancer. Carcinomas take various forms, including skin cancer, one of the most severe [4]. Although cancer can occur anywhere in the body, skin cancer is the most common category and commonly begins in skin that is frequently exposed to sunlight. Melanomas account for only 4% of skin malignancies but cause 75% of skin-cancer-related deaths [5]. Skin cancer is more serious than other carcinomas in the way it spreads to and invades neighbouring tissues. Over 14 million new cancer cases and 8.2 million cancer deaths were reported worldwide in 2012 [6]. These statistics indicate that cancer is a leading cause of death.

However, patients whose cancer is identified early have a better chance of recovery, because the cure rate for cancer that is detected and treated early can exceed 90% [7]. Dermoscopy is a specialist technique that produces high-resolution, magnified images of the skin by controlling illumination and removing surface skin reflectance [8]. Because of the morphological variation and similar-looking characteristics of skin cancers, it is difficult to diagnose and categorize skin lesions accurately. Skin cancer screening is one method for the early detection of skin cancer and could save many lives [9]. Researchers have traditionally used the ABCD rule to recognize skin lesions [10]. Under this rule, a total dermoscopic score is computed from four traits, where A denotes asymmetry, B border irregularity, C colour variation, and D diameter [11]. Each feature is given a specific weight based on its significance in the feature space, and the resulting score is used to decide whether the lesion is cancerous or benign. Because of the complexity of skin lesions, however, it is difficult for researchers to recognize skin malignancies using these geometrical properties alone [12].
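The weighted scoring described above can be sketched as follows. The weights (1.3, 0.1, 0.5, 0.5), the score ranges, and the 4.75/5.45 cut-offs are assumptions taken from the widely used Total Dermoscopy Score (TDS) of Stolz et al., in which D counts differential structures rather than measuring diameter; the text itself does not state its weights.

```python
# Sketch of an ABCD-style weighted score. The weights, ranges, and cut-offs
# follow the Total Dermoscopy Score (TDS) of Stolz et al. and are assumptions,
# not values taken from this paper.
WEIGHTS = {"A": 1.3, "B": 0.1, "C": 0.5, "D": 0.5}
RANGES = {"A": (0, 2), "B": (0, 8), "C": (1, 6), "D": (1, 5)}


def total_dermoscopy_score(a: int, b: int, c: int, d: int) -> float:
    """Weighted sum of the four ABCD trait scores."""
    scores = {"A": a, "B": b, "C": c, "D": d}
    for trait, value in scores.items():
        low, high = RANGES[trait]
        if not low <= value <= high:
            raise ValueError(f"{trait} score {value} is outside [{low}, {high}]")
    return sum(WEIGHTS[trait] * value for trait, value in scores.items())


def interpret(score: float) -> str:
    """Map a TDS value to the usual three-way reading."""
    if score < 4.75:
        return "benign"
    if score <= 5.45:
        return "suspicious"
    return "likely malignant"


if __name__ == "__main__":
    for traits in [(0, 1, 1, 1), (2, 4, 2, 2), (2, 8, 6, 5)]:
        tds = total_dermoscopy_score(*traits)
        print(traits, round(tds, 2), interpret(tds))
```

The weight on asymmetry dominates, which is why a fixed weighting struggles with lesions whose malignancy shows mainly in colour or structure.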

As computer-aided screening technologies have become more prevalent, researchers have concentrated mainly on the automatic identification and categorization of skin cancer [13]. Texture cues, geometrical aspects, colour features, and combinations of these features in medical images can be used to identify and classify skin cancer conditions [14]. Deep convolutional neural networks (CNNs) have also recently emerged in image-based melanoma diagnosis, where they have demonstrated diagnostic performance comparable to that of dermatologists [15]. Many studies have used CNNs to categorize melanoma skin lesions on various datasets, such as MNIST: HAM10000 [16], the International Skin Imaging Collaboration 2018 (ISIC) [17], the PH2 public database [18], and the International Symposium on Biomedical Imaging (ISBI) [19]. On these datasets, a variety of established CNN models, including ResNet50 [20], ImageNet50 [21], and DenseNet201 [22], alongside other state-of-the-art models, have produced promising results. However, some datasets, such as the Dermatological and Surgical Assistance Program at the Federal University of Espirito Santo (PAD-UFES-20) dataset, remain relatively unexplored for skin lesion identification.

In this research, the PAD-UFES-20 dataset is used together with a new multilayer CNN model to classify and discriminate skin lesions. The proposed model is trained and tested on the PAD-UFES-20 dataset, and the results show an accuracy of 96%, compared with 82% and 79% for the ResNet50 and VGG16 models, respectively. Under the conditions tested, the proposed model outperformed the comparison models. The rest of this paper is organized as follows. Section 2 briefly reviews related work; Section 3 describes the dataset, the preprocessing steps taken to clean it and bring it into a suitable format, and the methods used to build and train the model; Section 4 discusses the results and evaluation metrics of the models; and Section 5 presents the conclusion.

2. Literature Review

Dermoscopy is a non-invasive imaging method for capturing detailed images of skin lesions [23]. The need for accurate and early identification of skin diseases, including melanoma and other types of skin cancer, has spurred the development of computer-aided diagnostic (CAD) systems [24]. Deep learning, in particular CNNs, has shown encouraging results when used to automate the classification of skin lesions from dermoscopy images [25]. Earlier methods for classifying skin lesions relied on hand-crafted features and conventional machine learning techniques [26]. Deep learning, particularly CNNs, transformed skin lesion classification [27]. Well-known CNN architectures such as AlexNet [28], VGGNet [29], and InceptionNet [30] have been adapted and fine-tuned for skin lesion classification tasks.

In [31], CNN models are trained and evaluated on the HAM10000 dataset to recognize and separate various forms of skin malignancies, reaching 90% validation accuracy. In [32], a CNN classification model is trained with data augmentation on a public dataset of skin lesions comprising 6,162 training and 600 testing images, and achieves an accuracy of 89.2%. In [33], a novel prediction algorithm based on a CNN with a model regularizer separates benign and malignant skin lesions; the model is trained on a dataset obtained from the International Skin Imaging Collaboration (ISIC) archive [34] and achieves an overall accuracy of 97.49%. In [35], researchers classify skin lesions with a CNN model on the ISIC dataset, combined with fuzzy C-means and K-means clustering, achieving an accuracy of 98.83%.

In [36], transfer learning is applied with two models, ResNet50 and DenseNet169, trained on the MNIST: HAM10000 dataset, and the resulting deep convolutional neural network achieves an accuracy of 91.2%. In [37], another DCNN model is proposed alongside earlier methods such as border extraction using XOR with regression logic; the PH2 and International Symposium on Biomedical Imaging 2017 datasets are used to train the model, which achieves an accuracy of 97.8%. In [38], a transfer learning strategy is used to enhance the performance of the proposed CNN model on a publicly available Kaggle dataset, resulting in an accuracy of 79.45%. In [39], a CNN model is designed and trained with a medical dataset acquired from Al-Kindi Hospital and Baghdad Medical City to classify skin lesions, obtaining 89% accuracy. In [40], seven types of skin disorders are categorized using a machine learning method based on convolutional neural networks.

In [41], a CNN model trained on the International Skin Imaging Collaboration 2018 dataset achieves improved identification accuracy, averaging 91.07%. In [42], a CNN-based U-Net model is evaluated on the ISIC 2017 and 2018 datasets, segmenting dermoscopic images with accuracies of 94.9% and 95.4%, respectively. In [43], a CNN model that classifies blemishes as moderate skin cancer or acne is developed using images of diverse benign skin cancers and acne cases, yielding a precision of 96.4%. In [44], a dataset of skin cancer dermoscopy images is subjected to several preprocessing steps to reduce noise and enhance image quality, after which a CNN model is used for categorization, achieving an accuracy of 98.38%. In [45], the HAM10000 dataset is used with a multilayer perceptron that handles statistical (hand-crafted) features and a CNN designed to extract non-statistical image features, achieving an accuracy of 98.01%.

Table 1.

A Comprehensive Analysis of CNN Models for Skin Lesion Detection on Different Dermoscopy Datasets


| Ref. | Model | Dataset | Accuracy | Comments |
|---|---|---|---|---|
| [31] | Deep Convolutional Neural Network | HAM10000 | 90% | One of the best-known datasets is used, and the CNN model produces promising results. However, the hyperparameters need careful tuning to increase accuracy. |
| [34] | Convolutional Neural Network (CNN) | International Skin Imaging Collaboration (ISIC) | 97.49% | A CNN model shows relatively good results, but the dataset size needs to be increased. |
| [36] | ResNet50 | MNIST: HAM10000 | 91% | A state-of-the-art model produces reasonable results on the given dataset. However, the dataset needs better preprocessing before training to obtain more accurate results. |
| [37] | Deep Convolutional Neural Network | International Symposium on Biomedical Imaging (ISBI) | 97.8% | A DCNN model is trained on an internationally known dataset. However, the dataset size is reduced, which gives good results but may lead to overfitting. |
| [42] | U-Net | International Skin Imaging Collaboration (ISIC) | 94.9% | A state-of-the-art model shows promising results, but more data preprocessing or augmentation is needed for accurate prediction. |
| SkinLesNet | Proposed Multilayer CNN Model | PAD-UFES-20 | 96% | The proposed model is trained and tested on a dataset that is relatively new compared with those used by other models, and it produces good results in terms of accuracy and precision. However, the dataset size can be increased in future work to obtain more promising results. |

In [46], the PH2 dataset of dermoscopic images is used to build a CNN model to learn and classify skin lesions, achieving a test set accuracy of over 95%. In [47], a six-layer CNN model trained on the ISIC dataset shows promise, with an accuracy of 89.30% in classifying skin lesions. In [48], a state-of-the-art CNN model achieves 97.50% accuracy in separating skin lesions on the ISIC and PH2 datasets. In [49], a distinctive CNN model is trained and tested on three benchmark datasets, PH2, ISIC2016, and ISIC2017, obtaining accuracies of 97.2%, 96.3%, and 99.4%, respectively. In [50], a CNN model trained and tested on the MNIST: HAM10000 dataset with data augmentation and image preparation procedures obtains 95.2% accuracy. In [51], a CNN model trained and tested on the ISIC2019 dataset classifies eight types of skin lesions with a testing accuracy of 94.92%. In [52], a DenseNet201 model is fine-tuned on the HAM10000 dataset to classify skin lesions in dermoscopy images, obtaining a training accuracy of 99.12% and a test accuracy of 86.91%. In [53], a deep convolutional neural network is created and trained on the ISBI 2017 dataset to classify melanoma skin lesions, achieving 87% accuracy on test data.

3. Methodology

We examined several modern convolutional neural network architectures and ultimately chose to train baseline models using the VGG-16 [

54] and ResNet-50 [

55] architectures because of their improved results and confirmed performance on other research and then we compared their performance with the proposed SkinLesNet model. These models use their knowledge of the general features they have learned from sizable datasets like ImageNet [

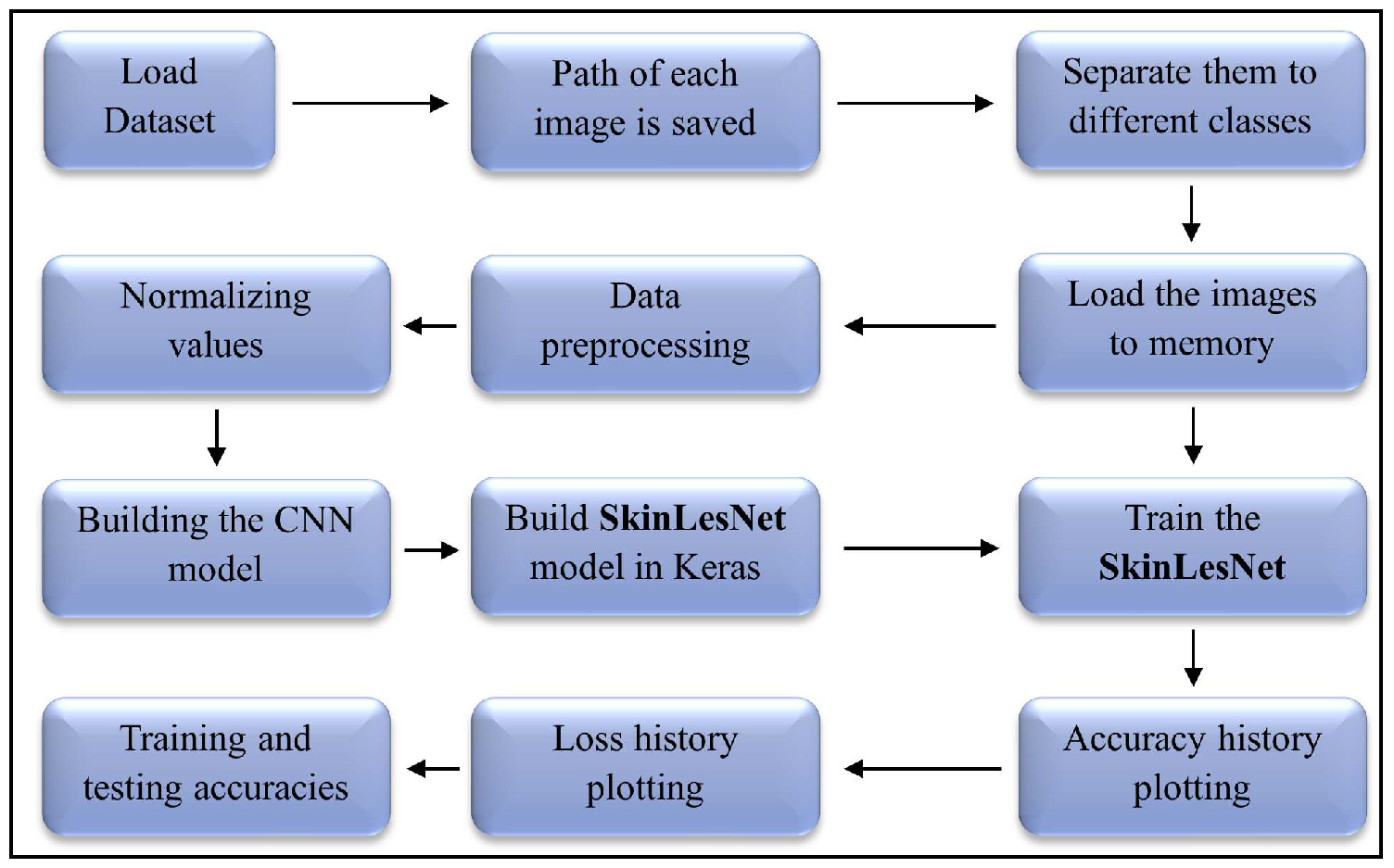

56] for the current task. For developing the SkinLesNet model we have done a comprehensive experiment visualized in the flowchart,

Figure 1. Python 3’s Keras and TensorFlow packages have been used in this computer vision task.

3.1. Dataset and Data Preprocessing

The Programa de Assistência Dermatológica e Cirúrgica (PAD) is a voluntary program at the Federal University of Espirito Santo (UFES) that provides free treatment of skin lesions, mostly to people who cannot afford private medical care. For historical reasons, millions of European immigrants settled in Espirito Santo state in the 19th century. Most of these immigrants and their descendants were not adapted to Brazil's tropical climate. Because skin cancer and other skin lesions are so common in this state, PAD plays a crucial role in helping those affected. Because the images in this collection were taken with different devices, they vary in resolution, size, and lighting.

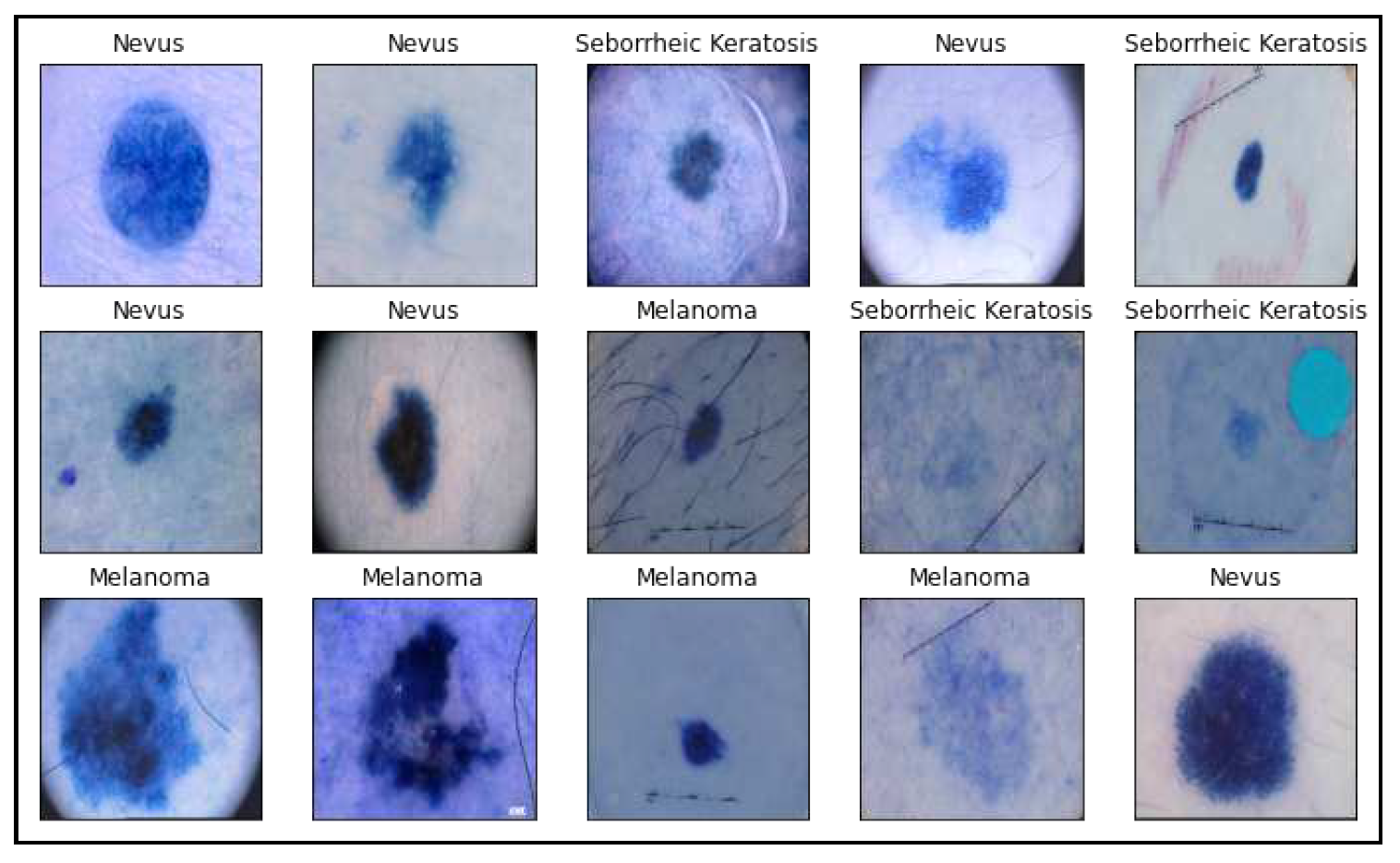

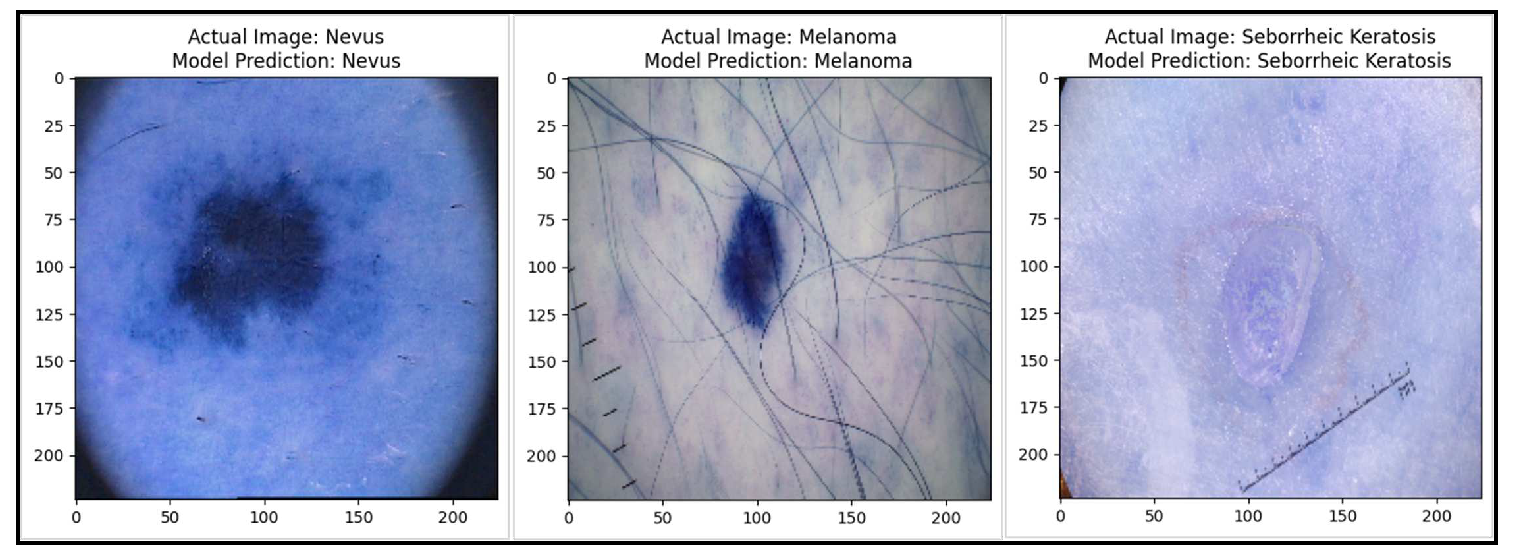

This heterogeneity must be addressed in any system that aims to detect skin cancer accurately from clinical images. The images are stored as Portable Network Graphics (PNG) files. To support future research and the development of new therapies for skin lesions, the PAD-UFES-20 dataset includes clinical images of skin lesions together with the patient medical records associated with each lesion. The collection includes 1314 samples of three different skin lesions: seborrheic keratosis, nevus, and melanoma. Figure 2 shows a few sample images from the collection used in this study.

Figure 2.

Sample images from the PAD-UFES-20 dataset used in this research, showing different skin lesions


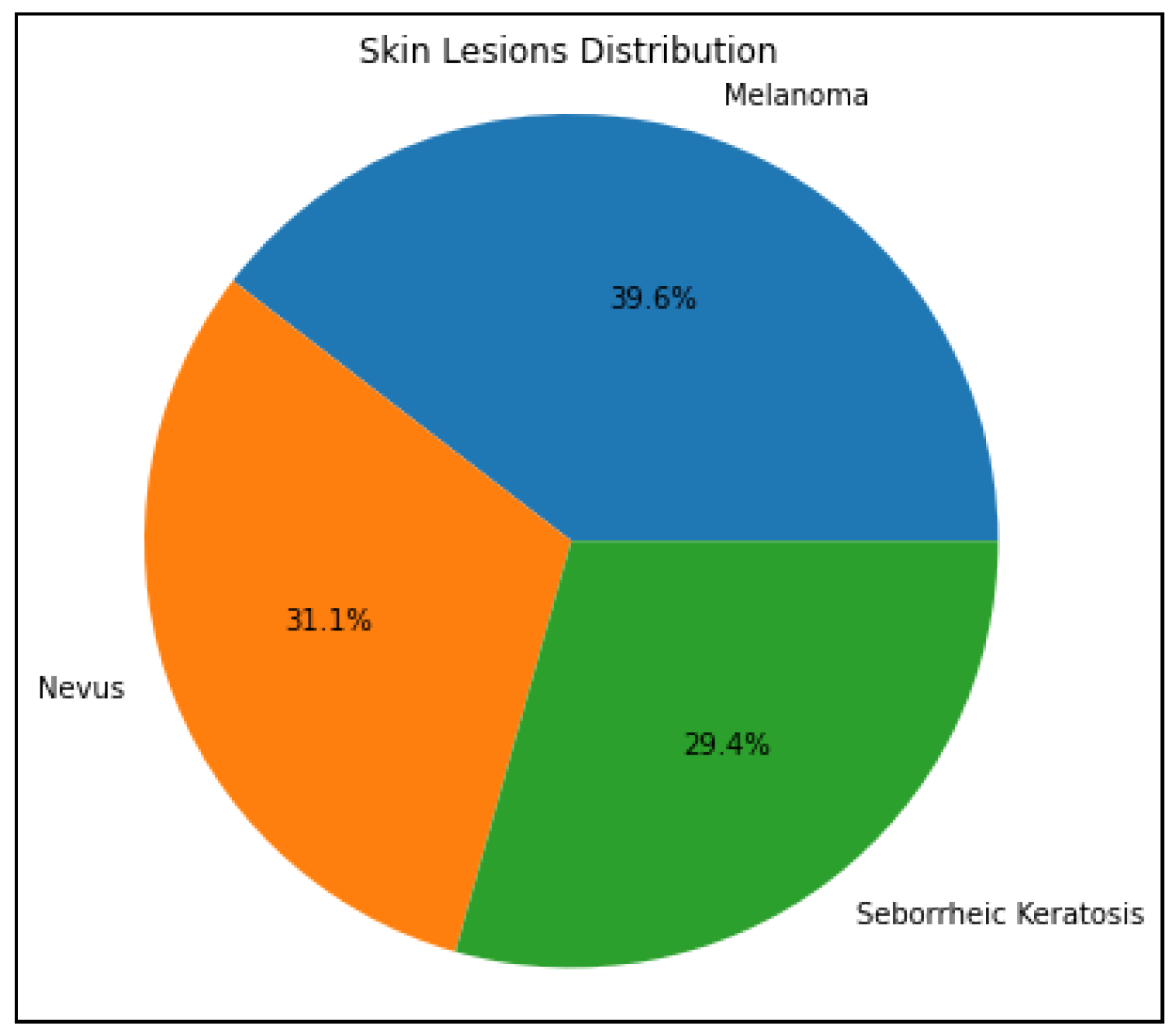

First, every image is resized to a square of 224 × 224 pixels. Standardizing the image size is essential for uniformity and for compatibility with the input size expected by the deep learning model. The preprocessed images and their corresponding labels are then stored in the lesion data list, ready to be loaded into a machine learning model for skin lesion classification. The data list is also used to produce a count plot showing the distribution of images across the three categories (nevus, melanoma, and seborrheic keratosis).
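A minimal sketch of this step, assuming images are already loaded as NumPy arrays of shape (height, width, 3). The nearest-neighbour resize is written in pure NumPy only to keep the example dependency-free (the paper does not say which resize routine was used), and the integer order in the hypothetical CLASS_TO_INDEX mapping is an assumption.

```python
import numpy as np

IMAGE_SIZE = 224
# Hypothetical integer encoding; the paper does not state its label order.
CLASS_NAMES = ["melanoma", "nevus", "seborrheic_keratosis"]
CLASS_TO_INDEX = {name: index for index, name in enumerate(CLASS_NAMES)}


def resize_nearest(image: np.ndarray, size: int = IMAGE_SIZE) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W, C) array to (size, size, C)."""
    height, width = image.shape[:2]
    rows = np.arange(size) * height // size   # source row for each output row
    cols = np.arange(size) * width // size    # source column for each output column
    return image[rows[:, None], cols[None, :]]


def build_lesion_list(samples):
    """Resize every image and pair it with its integer class label.

    samples: iterable of (image_array, class_name) tuples.
    """
    images, labels = [], []
    for image, class_name in samples:
        images.append(resize_nearest(image))
        labels.append(CLASS_TO_INDEX[class_name])
    return np.stack(images), np.array(labels)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    demo = [
        (rng.integers(0, 256, (600, 450, 3), dtype=np.uint8), "nevus"),
        (rng.integers(0, 256, (180, 240, 3), dtype=np.uint8), "melanoma"),
    ]
    X, y = build_lesion_list(demo)
    print(X.shape, y)                                   # (2, 224, 224, 3) [1 0]
    # Per-class counts, i.e. the bar heights of the count plot.
    print(np.bincount(y, minlength=len(CLASS_NAMES)))   # [1 1 0]
```

Images of any original size end up with the same 224 × 224 × 3 shape, which is what allows them to be stacked into one array for the model.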

Figure 3.

A pie chart showing the distribution of each class for the selected skin lesions in the dataset


A pie chart is also used to visualize the distribution of the three categories (nevus, melanoma, and seborrheic keratosis). Each slice represents a class, and its size indicates the proportion of that class among all images in the dataset. This graphic makes it easier to understand the distribution and class balance of the dataset's skin lesion images. The dataset is then split into training and testing sets in an 80:20 ratio, with 80% of the data used for training and 20% for testing. The train_test_split function randomly shuffles the data before splitting so that samples of each class are distributed randomly across the training and testing sets. This split is necessary to assess how well the model generalizes to new data and to detect overfitting.
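The class proportions behind the pie chart and the shuffled 80:20 split can be sketched in NumPy as below. This is a stand-in for scikit-learn's train_test_split(test_size=0.2), which shuffles by default and rounds the test-set size up; the seed value is hypothetical.

```python
import numpy as np


def class_proportions(labels: np.ndarray, num_classes: int = 3) -> np.ndarray:
    """Fraction of images in each class, i.e. the pie-chart slice sizes."""
    counts = np.bincount(labels, minlength=num_classes)
    return counts / counts.sum()


def shuffled_split(X: np.ndarray, y: np.ndarray, test_fraction: float = 0.2, seed: int = 42):
    """Randomly shuffle, then hold out test_fraction of the samples for testing."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(y))
    n_test = int(np.ceil(test_fraction * len(y)))  # round the test size up
    test_idx, train_idx = order[:n_test], order[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]


if __name__ == "__main__":
    y = np.repeat([0, 1, 2], [4, 4, 2])          # toy labels for 10 images
    X = np.arange(len(y))                         # stand-in for image arrays
    print(class_proportions(y))                   # [0.4 0.4 0.2]
    X_train, X_test, y_train, y_test = shuffled_split(X, y)
    print(len(X_train), len(X_test))              # 8 2
```

Plain random shuffling does not guarantee identical class proportions in both splits; passing stratify=y to scikit-learn's train_test_split would preserve them, which matters when one class is much smaller than the others.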

3.2. Dataset Augmentation and Diversity

Apart from our primary dataset, we used two more well-known, publicly available datasets: HAM10000 [57] and ISIC2017 [58]. The HAM10000 ("Human Against Machine with 10000 training images") dataset is a set of 10015 dermatoscopic images of skin lesions. The lesions in the dataset are divided into several categories, such as basal cell carcinoma, squamous cell carcinoma, seborrheic keratosis, melanoma, and nevus (moles) [59]. Melanoma, the deadliest type of skin cancer, is of special interest. The ISIC2017 dataset comes from the International Skin Imaging Collaboration (ISIC), a global initiative to advance the early detection and diagnosis of skin cancer, particularly melanoma. It contains a sizable number of dermatoscopic images of skin lesions divided into several classes, with a particular emphasis on melanoma; further classes may include basal cell carcinoma, seborrheic keratosis, and nevus (moles) [60].

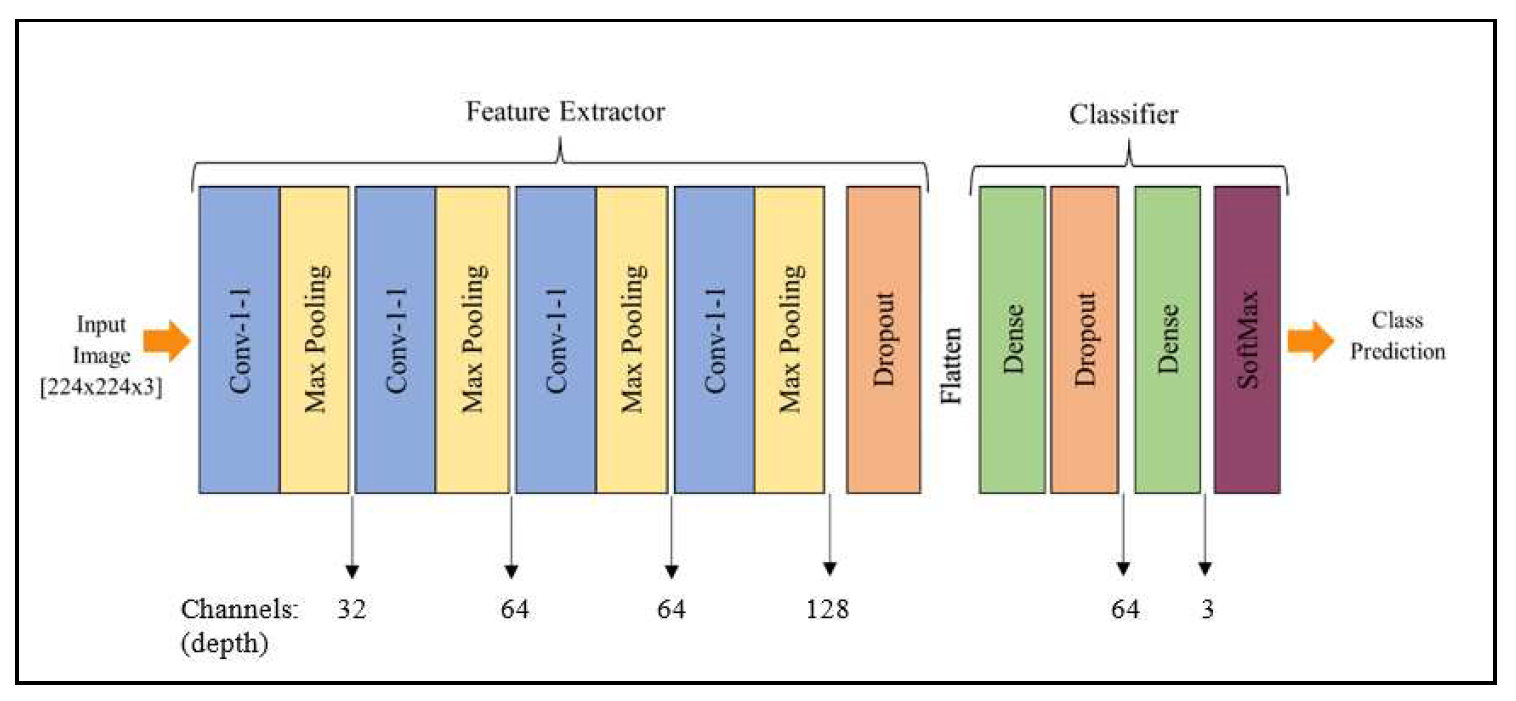

3.3. Proposed Model Architecture

We thoroughly tested numerous layer combinations in our neural network architecture before recommending a four-layer CNN model. The number of convolutional layers, the type and size of filters, the activation functions, and the presence of pooling layers were all varied during these analyses. Our goal was to find the architecture that best balances model complexity and efficiency. To make sure the model could correctly diagnose skin lesions, we evaluated several configurations using accuracy, precision, recall, and F1-score. After extensive testing and analysis, we concluded that the four-layer CNN architecture, named SkinLesNet, gave the most promising results in terms of precision and robustness, supporting its recommendation as the final model for skin lesion classification.

SkinLesNet is a convolutional neural network (CNN) that classifies images of skin lesions into three categories: melanoma, nevus, and seborrheic keratosis. The model begins with an input layer that accepts RGB images with a resolution of 224 × 224 pixels. The first convolutional layer has 32 filters of size 3 × 3 and uses the ReLU activation function to capture image characteristics. It is followed by a max-pooling layer that reduces the spatial dimensions of the output. To learn intricate patterns in the images, the model contains three additional convolutional layers, each with more filters and each followed by its own max-pooling layer. A dropout layer with a rate of 0.5 is then added to reduce overfitting. The output is flattened and passed to a fully connected (dense) hidden layer of 64 neurons with ReLU activation.

A second dropout layer, with a rate of 0.3, precedes the output layer, which consists of three neurons with a softmax activation function that computes the probability of each class. Training adjusts the network to minimize classification errors so that it produces accurate estimates for new, previously unseen images of skin lesions. The "Adam" optimizer is chosen to improve training efficacy, with a learning rate of 0.001 and an exponential moving-average (momentum) coefficient of 0.99. Because the target labels are represented as integers, "sparse_categorical_crossentropy" is used as the loss function. The model's performance is assessed with the "accuracy" metric, which gives the percentage of labels that are predicted correctly during training and evaluation.
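To make the output layer and loss concrete, the NumPy sketch below computes softmax probabilities from raw scores and the sparse categorical cross-entropy for integer labels, which is the quantity Keras's "sparse_categorical_crossentropy" averages over a batch. The clipping constant eps is an illustrative assumption.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row maximum avoids overflow."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=1, keepdims=True)


def sparse_categorical_crossentropy(labels: np.ndarray, probs: np.ndarray, eps: float = 1e-7) -> float:
    """Mean negative log-probability of the true class.

    labels holds integer class indices (0, 1, 2) rather than one-hot vectors.
    """
    true_class_probs = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(np.clip(true_class_probs, eps, 1.0))))


def accuracy(labels: np.ndarray, probs: np.ndarray) -> float:
    """Share of samples whose highest-probability class matches the label."""
    return float(np.mean(probs.argmax(axis=1) == labels))


if __name__ == "__main__":
    logits = np.array([[2.0, 0.5, -1.0],   # confident in class 0
                       [0.0, 0.0, 0.0]])   # no preference
    labels = np.array([0, 2])
    probs = softmax(logits)
    print(probs.sum(axis=1))               # [1. 1.]
    print(sparse_categorical_crossentropy(labels, probs))
    print(accuracy(labels, probs))         # 0.5
```

A uniform prediction over three classes costs ln 3 ≈ 1.10 per sample, so a training loss well below that value indicates the network has learned something beyond guessing.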

With these options, the CNN model is configured for training on the skin lesion dataset. During training, the model analyzes the data, uses the Adam optimizer to adjust its internal parameters, and is evaluated on how well it classifies new skin lesion images. The model's summary function describes the architectural layout of the CNN model described above: a table lists the model's layers, their output shapes, and the total number of trainable parameters, giving a broad overview of the layers and their attributes.
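The shapes and parameter counts that such a summary table reports can be reproduced by hand. The pure-Python sketch below does so for the architecture described in this section under stated assumptions: the filter counts after the first layer (64, 128, 256) are hypothetical, since the text only says each later layer has more filters, and 'valid' padding with 2 × 2 pooling (the Keras defaults) is assumed. With the real filter counts, Keras's model.summary() remains the authoritative source.

```python
# Hand-computed layer summary for a SkinLesNet-like CNN.
# Assumptions: filters after the first layer (64, 128, 256) are hypothetical;
# 3x3 'valid' convolutions and 2x2 max pooling with stride 2 are assumed.
CONV_FILTERS = [32, 64, 128, 256]
KERNEL = 3
POOL = 2
DENSE_UNITS = 64
NUM_CLASSES = 3


def summarize(height: int = 224, width: int = 224, channels: int = 3):
    """Return (rows, total_params); each row is (layer, output_shape, params)."""
    rows, total = [], 0
    for i, filters in enumerate(CONV_FILTERS, start=1):
        height, width = height - KERNEL + 1, width - KERNEL + 1  # 'valid' conv
        params = KERNEL * KERNEL * channels * filters + filters  # weights + biases
        channels = filters
        total += params
        rows.append((f"conv2d_{i}", (height, width, channels), params))
        height, width = (height - POOL) // POOL + 1, (width - POOL) // POOL + 1
        rows.append((f"max_pooling2d_{i}", (height, width, channels), 0))
    rows.append(("dropout_0.5", (height, width, channels), 0))
    flat = height * width * channels
    rows.append(("flatten", (flat,), 0))
    dense_params = flat * DENSE_UNITS + DENSE_UNITS
    rows.append(("dense_relu", (DENSE_UNITS,), dense_params))
    rows.append(("dropout_0.3", (DENSE_UNITS,), 0))
    out_params = DENSE_UNITS * NUM_CLASSES + NUM_CLASSES
    rows.append(("dense_softmax", (NUM_CLASSES,), out_params))
    total += dense_params + out_params
    return rows, total


if __name__ == "__main__":
    rows, total = summarize()
    for name, shape, params in rows:
        print(f"{name:<18}{str(shape):<18}{params:>10,}")
    print(f"Total trainable parameters: {total:,}")
```

Under these assumptions the flattened vector has 36,864 values, and the 64-unit dense layer alone holds about 2.36 million of the roughly 2.75 million trainable parameters.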

Figure 4.

Proposed multilayer CNN model architecture to classify different skin lesion categories


3.4. Model Training

The progress of training can be tracked with various metrics, including training and validation accuracy and loss. These metrics indicate how the model is performing and help in deciding how to adjust its hyperparameters and architecture. In general, training is an iterative, data-driven procedure in which the model learns significant patterns and abstractions from the training data in order to make accurate predictions on new, unseen data. The model is trained with a batch size of 32 for 100 epochs and a validation split of 0.2; the main hyperparameters are listed in Table 2.

Table 2.

Hyperparameters and configurations used to train the proposed multilayer model in this work


| Learning Rate | Batch Size | Epochs | Optimizer | Activation |
|---|---|---|---|---|
| 0.001 | 32 | 100 | Adam | ReLU |
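As a rough worked example of what these settings imply, the sketch below counts samples and gradient updates. It assumes the 1314-image collection described in Section 3.1, a test set rounded up as scikit-learn does, and a Keras-style validation_split that holds out the last 20% of the training arrays with the fitted portion rounded down; the exact rounding in a given Keras version may differ by one sample.

```python
import math


def training_budget(n_samples=1314, test_percent=20, val_percent=20,
                    batch_size=32, epochs=100):
    """Count samples per split and optimizer steps implied by the settings."""
    n_test = math.ceil(n_samples * test_percent / 100)    # 80:20 split, test rounded up
    n_train = n_samples - n_test
    n_fit = n_train * (100 - val_percent) // 100          # assumed floor rounding
    n_val = n_train - n_fit                               # validation_split = 0.2
    steps_per_epoch = math.ceil(n_fit / batch_size)       # last batch may be partial
    return {
        "test": n_test,
        "fit": n_fit,
        "validation": n_val,
        "steps_per_epoch": steps_per_epoch,
        "total_updates": steps_per_epoch * epochs,
    }


if __name__ == "__main__":
    print(training_budget())
    # {'test': 263, 'fit': 840, 'validation': 211, 'steps_per_epoch': 27, 'total_updates': 2700}
```

Under these assumptions, each epoch makes 27 Adam updates (26 full batches of 32 and one batch of 8), or 2700 updates over 100 epochs.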

The model usually converges well during training at a moderate learning rate such as 0.001. A small learning rate lets the model make incremental weight adjustments, which avoids the overshooting or divergence that can occur with larger rates. It also makes the optimization process more precise and controllable, which helps the model generalize better and makes it less prone to overfitting [61]. Smaller batch sizes introduce noise into the gradient estimates, which can act as a form of regularization and help avoid overfitting; this effect can be advantageous when working with limited data. Batch sizes of up to 32 are also memory-efficient and allow deep neural networks to be trained even on computers with limited GPU capacity [62]. Adam [63] may accelerate convergence by adapting the learning rate of each parameter during training.

For each parameter, Adam maintains two exponential moving averages of the gradient: an estimate of its first moment (the mean) and of its second moment (the uncentered variance). Using these moving averages, the optimizer adapts the step size of each parameter to the behaviour of its gradient. Adam is suitable for a wide variety of deep learning problems and is robust to noisy gradients. ReLU adds non-linearity to the model [64], allowing it to recognize complex patterns in the data.
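The two moving averages can be written out as a single update step. The sketch follows the textbook form of Adam (Kingma and Ba) with the Keras default coefficients beta1 = 0.9, beta2 = 0.999, and epsilon = 1e-7; the text's "exponential momentum of 0.99" does not say which coefficient it refers to, so it is not hard-coded here. Keras's own implementation applies epsilon in a slightly different ("epsilon hat") position, so values can differ marginally. A one-line ReLU is included for completeness.

```python
import numpy as np


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad           # moving average of gradients (first moment)
    v = beta2 * v + (1 - beta2) * grad ** 2      # moving average of squared gradients (second moment)
    m_hat = m / (1 - beta1 ** t)                 # bias correction for zero initialisation
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)  # per-parameter step size
    return param, m, v


def relu(x):
    """ReLU non-linearity: max(0, x) element-wise."""
    return np.maximum(x, 0.0)


if __name__ == "__main__":
    # Minimise f(w) = w1^2 + w2^2 from w = (1, -1); the gradient is 2w.
    w = np.array([1.0, -1.0])
    m = np.zeros_like(w)
    v = np.zeros_like(w)
    for t in range(1, 4):
        w, m, v = adam_step(w, 2 * w, m, v, t)
        print(t, w)
```

On the first step each parameter moves by almost exactly the learning rate, whatever the scale of its gradient, which is the per-parameter adaptation described above.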

4. Results and Discussions

This section presents the trained model's performance on unseen data. Key measures such as accuracy, precision, recall, and F1-score reveal the model's strengths and weaknesses. Visualizations, confusion matrices, and comparison with the baseline models give a fuller understanding of the model's capabilities and limitations. Overall, the findings and analysis provide practitioners and researchers in medical image analysis with meaningful evidence that helps bridge the gap between the model's theoretical design and its practical implementation.
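For reference, the four measures can be computed from a confusion matrix as sketched below. Macro averaging (an unweighted mean over the three classes) is an assumption, since the text does not state how per-class values were averaged; scikit-learn's classification_report reports both macro and weighted averages.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes=3):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def macro_metrics(cm):
    """Accuracy plus macro-averaged precision, recall, and F1-score."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)      # column totals: how often each class was predicted
    actual = cm.sum(axis=1)         # row totals: how often each class truly occurs
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
    }


if __name__ == "__main__":
    y_true = [0, 0, 1, 1, 2, 2]
    y_pred = [0, 1, 1, 1, 2, 0]
    cm = confusion_matrix(y_true, y_pred)
    print(cm)
    print(macro_metrics(cm))
```

Because macro averages are computed per class before averaging, a macro F1-score can fall below both the macro precision and the macro recall when a class has very unbalanced precision and recall.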

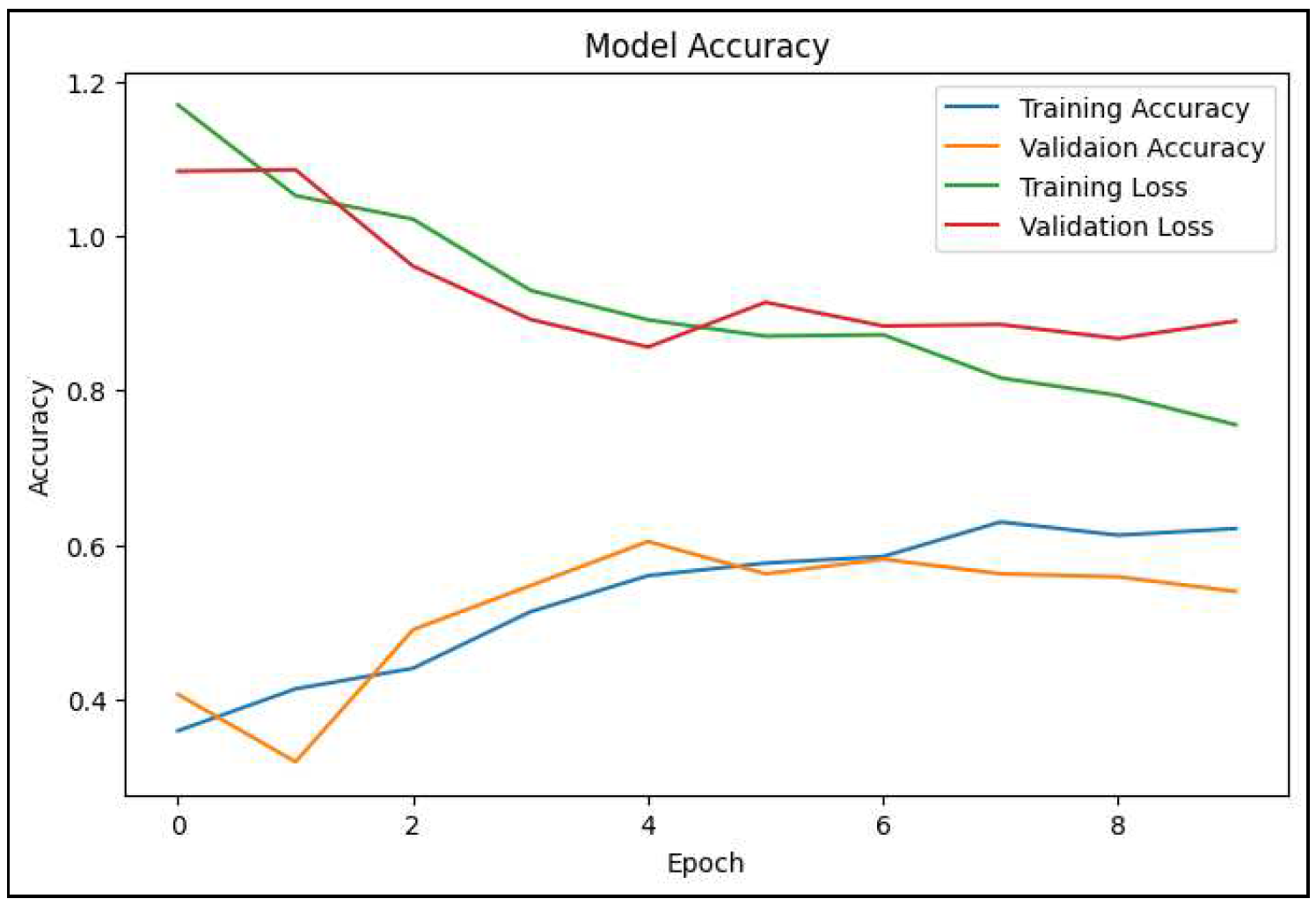

Figure 5.

Line graphs showing the variation in training and validation accuracy and loss of the proposed SkinLesNet model


ResNet50 contains several combinations of convolutional layers with average pooling layers, and several hidden layers with batch normalization. The last layer of the original ResNet50 model is a fully connected (fc) layer with 1000 output features. In this work, that fc layer is replaced with a stack of fc layers in order to fine-tune ResNet50. The first fc layer has 2048 output features and is followed by dropout with a probability of 0.5. The second fc layer is identical to the first and is followed by ReLU and dropout with a probability of 0.5. The final fc layer, intended for three-class classification, has 2048 input features and three output features. For VGG16, the earlier layers are kept frozen and the last block is unfrozen, so that its weights are updated in each epoch.
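The replacement head can be sketched as below. Layer sizes follow the description (2048 to 2048 to 2048 to 3); placing ReLU after both hidden layers and using inverted dropout are assumptions. The backbone output is represented by random 2048-dimensional vectors, standing in for ResNet50's pooled features, and init_head is a hypothetical helper that supplies untrained weights.

```python
import numpy as np

# (in_features, out_features) for the three fc layers described in the text.
HEAD_LAYERS = [(2048, 2048), (2048, 2048), (2048, 3)]


def head_parameter_count(layers=HEAD_LAYERS):
    """Weights plus biases of every fully connected layer."""
    return sum(n_in * n_out + n_out for n_in, n_out in layers)


def init_head(layers=HEAD_LAYERS, seed=0):
    """Small random weights; a stand-in for trained parameters."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0.0, 0.01, (n_in, n_out)), np.zeros(n_out)) for n_in, n_out in layers]


def head_forward(features, params, training=False, drop_rate=0.5, rng=None):
    """fc -> ReLU -> dropout for each hidden layer, then a 3-way fc layer."""
    rng = rng if rng is not None else np.random.default_rng()
    x = features
    for i, (weights, bias) in enumerate(params):
        x = x @ weights + bias
        if i < len(params) - 1:                      # hidden layers only
            x = np.maximum(x, 0.0)                   # ReLU (assumed after both)
            if training:                             # inverted dropout
                keep = rng.random(x.shape) >= drop_rate
                x = x * keep / (1.0 - drop_rate)
    return x                                         # logits for the three classes


if __name__ == "__main__":
    print(f"{head_parameter_count():,}")            # 8,398,851
    params = init_head()
    pooled = np.random.default_rng(1).normal(size=(4, 2048))  # fake ResNet50 features
    print(head_forward(pooled, params).shape)       # (4, 3)
```

Almost all of the roughly 8.4 million new parameters sit in the two 2048 × 2048 layers, so the head alone adds substantial capacity on top of the pretrained backbone.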

The proposed SkinLesNet model is first trained and tested on the PAD-UFES-20 dataset, where it achieves a training accuracy of 96%, with precision, recall, and F1-score of 97%, 92%, and 92%, respectively. The model is then trained and tested on the HAM10000 and ISIC2017 datasets, achieving accuracies of 90% and 92%, respectively. The training accuracies obtained for the ResNet50 and VGG16 models on PAD-UFES-20 are 82% and 79%, respectively. All models are trained and tested on high-definition images so that the performance of each network can be analysed thoroughly. As Tables 3, 4, and 5 show, SkinLesNet outperformed the other state-of-the-art models used in this research on every reported metric for all three datasets.

Table 3.

Performance comparison of SkinLesNet with other state-of-the-art fine-tuned models for the PAD-UFES-20 dataset


| Performance Metrics | VGG-16 | ResNet-50 | SkinLesNet |
|---|---|---|---|
| Accuracy | 79% | 82% | 96% |
| Precision | 80% | 85% | 97% |
| Recall | 75% | 75% | 92% |
| F1-Score | 72% | 75% | 92% |

Table 4.

Performance comparison of SkinLesNet with other state-of-the-art fine-tuned models for the HAM10000 dataset


| Performance Metrics | VGG-16 | ResNet-50 | SkinLesNet |
|---|---|---|---|
| Accuracy | 75% | 80% | 90% |
| Precision | 75% | 80% | 89% |
| Recall | 70% | 72% | 87% |
| F1-Score | 70% | 71% | 85% |

Table 5.

Performance comparison of SkinLesNet with other state-of-the-art fine-tuned models for the ISIC2017 dataset


| Performance Metrics | VGG-16 | ResNet-50 | SkinLesNet |
|---|---|---|---|
| Accuracy | 70% | 75% | 92% |
| Precision | 70% | 75% | 80% |
| Recall | 70% | 65% | 82% |
| F1-Score | 72% | 70% | 75% |

Real World Analysis

As the final step of this work, we tested and evaluated our skin lesion classification model to analyse its real-world performance and its potential to support medical image analysis. After extensive training and optimization, we concentrated on assessing the model on a test dataset, because this step bridges the gap between theoretical capability and practical application. The model is applied to dermoscopy images illustrating diverse skin conditions, with the goal of reliably classifying skin lesions, a task essential to dermatologists' diagnostic work. Before any real-world implementation or deployment, the model's performance was assessed by evaluating its predictions on the test dataset. At this stage, running the model on new images could help identify possible melanomas, nevi, or seborrheic keratoses in patients.
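A minimal sketch of the last step, turning predicted probabilities into readable labels. It assumes the probability rows come from the trained model (for a Keras model, model.predict on a batch of preprocessed 224 × 224 images) and uses a hypothetical class order, which must match the label encoding used during training.

```python
import numpy as np

# Hypothetical order; it must match the label encoding used during training.
CLASS_NAMES = ["melanoma", "nevus", "seborrheic keratosis"]


def decode_predictions(probabilities, class_names=CLASS_NAMES):
    """Return (class name, probability) for the most likely class of each image."""
    probabilities = np.asarray(probabilities, dtype=float)
    best = probabilities.argmax(axis=1)
    return [(class_names[k], float(probabilities[row, k])) for row, k in enumerate(best)]


if __name__ == "__main__":
    example = [[0.10, 0.70, 0.20],   # stand-in for model outputs, one row per image
               [0.60, 0.30, 0.10]]
    for name, p in decode_predictions(example):
        print(f"{name}: {p:.2f}")
```

Reporting the probability alongside the label keeps the model's confidence visible to the clinician reviewing each prediction.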

Figure 6.

Predictions of the proposed model on the test dataset, illustrating real-world analysis


5. Conclusion

Using the strategies described above, we preprocessed the data and trained and optimized a model, resulting in SkinLesNet. The results section shows both the model's accuracy and areas for improvement. This study also analyzes and compares two benchmark architectures, VGG-16 and ResNet-50, for recognizing skin lesions from dermoscopy images. On the core PAD-UFES-20 dataset, SkinLesNet achieves a training accuracy of 96%, compared with 82% for ResNet50 and 79% for VGG16. The model is also trained on two other publicly available datasets, HAM10000 and ISIC2017, achieving accuracies of 90% and 92%, respectively. Under the conditions tested, SkinLesNet therefore outperformed the comparison models. Overall, the proposed model performs well compared with the other state-of-the-art models evaluated; in future work, the dataset could be enlarged or the data augmentation techniques refined.

Git Repository: The proposed SkinLesNet model is publicly available on GitHub in the repository SkinLesNet_Project.

Author Contributions

Conceptualization, Muhammad Azeem; Data curation, Muhammad Azeem; Formal analysis, Muhammad Azeem, Kaveh Kiani; Methodology, Muhammad Azeem; Project administration, Kaveh Kiani and Taha Mansouri; Resources, Kaveh Kiani; Supervision, Kaveh Kiani and Taha Mansouri; Validation, Muhammad Azeem; Writing – original draft, Muhammad Azeem; Writing – review & editing, Muhammad Azeem, Kaveh Kiani and Taha Mansouri.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Khan, D.; Rahman, A.u.; Kumam, P.; Watthayu, W. A Fractional Analysis of Hyperthermia Therapy on Breast Cancer in a Porous Medium along with Radiative Microwave Heating. Fractal and Fractional 2022, 6, 82. [Google Scholar] [CrossRef]

- Abdelrahim, M.; Esmail, A.; Saharia, A.; Abudayyeh, A.; Abdel-Wahab, N.; Diab, A.; Murakami, N.; Kaseb, A.O.; Chang, J.C.; Gaber, A.O.; others. Utilization of immunotherapy for the treatment of hepatocellular carcinoma in the peri-transplant setting: transplant oncology view. Cancers 2022, 14, 1760. [Google Scholar] [CrossRef] [PubMed]

- Abdulfatah, E.; Fine, S.W.; Lotan, T.L.; Mehra, R. De novo neuroendocrine features in prostate cancer. Human pathology 2022, 127, 112–122. [Google Scholar] [CrossRef]

- Allen, N.C.; Martin, A.J.; Snaidr, V.A.; Eggins, R.; Chong, A.H.; Fernandéz-Peñas, P.; Gin, D.; Sidhu, S.; Paddon, V.L.; Banney, L.A.; others. Nicotinamide for Skin-Cancer Chemoprevention in Transplant Recipients. New England Journal of Medicine 2023, 388, 804–812. [Google Scholar] [CrossRef]

- Sharma, G.; Chadha, R. An Optimized Predictive Model Based on Deep Neural Network for Detection of Skin Cancer and Oral Cancer. 2023 2nd International Conference for Innovation in Technology (INOCON). IEEE, 2023, pp. 1–6.

- Li, X.; Zhao, Z.; Zhang, M.; Ling, G.; Zhang, P. Research progress of microneedles in the treatment of melanoma. Journal of Controlled Release 2022, 348, 631–647.

- Durante, G.; Broseghini, E.; Comito, F.; Naddeo, M.; Milani, M.; Salamon, I.; Campione, E.; Dika, E.; Ferracin, M. Circulating microRNA biomarkers in melanoma and non-melanoma skin cancer. Expert Review of Molecular Diagnostics 2022, 22, 305–318.

- Goceri, E. Evaluation of denoising techniques to remove speckle and Gaussian noise from dermoscopy images. Computers in Biology and Medicine 2022, 106474.

- Rajput, G.; Agrawal, S.; Raut, G.; Vishvakarma, S.K. An accurate and noninvasive skin cancer screening based on imaging technique. International Journal of Imaging Systems and Technology 2022, 32, 354–368.

- Monisha, M.; Suresh, A.; Bapu, B.T.; Rashmi, M. Retraction Note: Classification of malignant melanoma and benign skin lesion by using back propagation neural network and ABCD rule, 2023.

- Das, J.B.A.; Mishra, D.; Das, A.; Mohanty, M.N.; Sarangi, A. Skin cancer detection using machine learning techniques with ABCD features. 2022 2nd Odisha International Conference on Electrical Power Engineering, Communication and Computing Technology (ODICON). IEEE, 2022, pp. 1–6.

- Salma, W.; Eltrass, A.S. Automated deep learning approach for classification of malignant melanoma and benign skin lesions. Multimedia Tools and Applications 2022, 81, 32643–32660.

- Malibari, A.A.; Alzahrani, J.S.; Eltahir, M.M.; Malik, V.; Obayya, M.; Al Duhayyim, M.; Neto, A.V.L.; de Albuquerque, V.H.C. Optimal deep neural network-driven computer aided diagnosis model for skin cancer. Computers and Electrical Engineering 2022, 103, 108318.

- Gouda, W.; Sama, N.U.; Al-Waakid, G.; Humayun, M.; Jhanjhi, N.Z. Detection of skin cancer based on skin lesion images using deep learning. Healthcare 2022, 10, 1183.

- Azeem, M.; Javaid, S.; Khalil, R.A.; Fahim, H.; Althobaiti, T.; Alsharif, N.; Saeed, N. Neural Networks for the Detection of COVID-19 and Other Diseases: Prospects and Challenges. Bioengineering 2023, 10, 850.

- Sethanan, K.; Pitakaso, R.; Srichok, T.; Khonjun, S.; Thannipat, P.; Wanram, S.; Boonmee, C.; Gonwirat, S.; Enkvetchakul, P.; Kaewta, C.; others. Double AMIS-ensemble deep learning for skin cancer classification. Expert Systems with Applications 2023, 234, 121047.

- Faheem Saleem, M.; Muhammad Adnan Shah, S.; Nazir, T.; Mehmood, A.; Nawaz, M.; Attique Khan, M.; Kadry, S.; Majumdar, A.; Thinnukool, O. Signet ring cell detection from histological images using deep learning.

- Shahsavari, A.; Khatibi, T.; Ranjbari, S. Skin lesion detection using an ensemble of deep models: SLDED. Multimedia Tools and Applications 2023, 82, 10575–10594.

- Ahmed, M.R.; Fahim, M.A.I.; Islam, A.M.; Islam, S.; Shatabda, S. DOLG-NeXt: Convolutional neural network with deep orthogonal fusion of local and global features for biomedical image segmentation. Neurocomputing 2023, 546, 126362.

- Sharma, A.K.; Nandal, A.; Dhaka, A.; Koundal, D.; Bogatinoska, D.C.; Alyami, H.; others. Enhanced watershed segmentation algorithm-based modified ResNet50 model for brain tumor detection. BioMed Research International 2022, 2022.

- Jin, H.; Kim, E. Helpful or Harmful: Inter-task Association in Continual Learning. European Conference on Computer Vision. Springer, 2022, pp. 519–535.

- Sanghvi, H.A.; Patel, R.H.; Agarwal, A.; Gupta, S.; Sawhney, V.; Pandya, A.S. A deep learning approach for classification of COVID and pneumonia using DenseNet-201. International Journal of Imaging Systems and Technology 2023, 33, 18–38.

- Alexandris, D.; Alevizopoulos, N.; Marinos, L.; Gakiopoulou, C. Dermoscopy and novel non invasive imaging of Cutaneous Metastases. Advances in Cancer Biology-Metastasis 2022, 100078.

- Adla, D.; Reddy, G.V.R.; Nayak, P.; Karuna, G. Deep learning-based computer aided diagnosis model for skin cancer detection and classification. Distributed and Parallel Databases 2022, 40, 717–736.

- Anand, V.; Gupta, S.; Koundal, D.; Singh, K. Fusion of U-Net and CNN model for segmentation and classification of skin lesion from dermoscopy images. Expert Systems with Applications 2023, 213, 119230.

- Bibi, A.; Khan, M.A.; Javed, M.Y.; Tariq, U.; Kang, B.G.; Nam, Y.; Mostafa, R.R.; Sakr, R.H. Skin lesion segmentation and classification using conventional and deep learning based framework. Comput. Mater. Contin 2022, 71, 2477–2495.

- Qian, S.; Ren, K.; Zhang, W.; Ning, H. Skin lesion classification using CNNs with grouping of multi-scale attention and class-specific loss weighting. Computer Methods and Programs in Biomedicine 2022, 226, 107166.

- Ullah, A.; Elahi, H.; Sun, Z.; Khatoon, A.; Ahmad, I. Comparative analysis of AlexNet, ResNet18 and SqueezeNet with diverse modification and arduous implementation. Arabian Journal for Science and Engineering 2022, 1–21.

- Goswami, A.D.; Bhavekar, G.S.; Chafle, P.V. Electrocardiogram signal classification using VGGNet: a neural network based classification model. International Journal of Information Technology 2023, 15, 119–128.

- Qayyum, A.; Mazher, M.; Khan, T.; Razzak, I. Semi-supervised 3D-InceptionNet for segmentation and survival prediction of head and neck primary cancers. Engineering Applications of Artificial Intelligence 2023, 117, 105590.

- Huang, Y.; Huang, C.Y.; Li, X.; Li, K. A Dataset Auditing Method for Collaboratively Trained Machine Learning Models. IEEE Transactions on Medical Imaging 2022.

- Shah, A.; Shah, M.; Pandya, A.; Sushra, R.; Sushra, R.; Mehta, M.; Patel, K.; Patel, K. A Comprehensive Study on Skin Cancer Detection using Artificial Neural Network (ANN) and Convolutional Neural Network (CNN). Clinical eHealth 2023.

- Keerthana, D.; Venugopal, V.; Nath, M.K.; Mishra, M. Hybrid convolutional neural networks with SVM classifier for classification of skin cancer. Biomedical Engineering Advances 2023, 5, 100069.

- Nawaz, M.; Nazir, T.; Masood, M.; Ali, F.; Khan, M.A.; Tariq, U.; Sahar, N.; Damaševičius, R. Melanoma segmentation: A framework of improved DenseNet77 and UNET convolutional neural network. International Journal of Imaging Systems and Technology 2022, 32, 2137–2153.

- Rasel, M.; Obaidellah, U.H.; Kareem, S.A. Convolutional neural network-based skin lesion classification with Variable Nonlinear Activation Functions. IEEE Access 2022, 10, 83398–83414.

- Gururaj, H.; Manju, N.; Nagarjun, A.; Aradhya, V.N.M.; Flammini, F. DeepSkin: A Deep Learning Approach for Skin Cancer Classification. IEEE Access 2023.

- Allugunti, V.R. A machine learning model for skin disease classification using convolution neural network. International Journal of Computing, Programming and Database Management 2022, 3, 141–147.

- Bhargava, M.; Vijayan, K.; Anand, O.; Raina, G. Exploration of transfer learning capability of multilingual models for text classification. Proceedings of the 2023 5th International Conference on Pattern Recognition and Intelligent Systems, 2023, pp. 45–50.

- Ogudo, K.A.; Surendran, R.; Khalaf, O.I. Optimal Artificial Intelligence Based Automated Skin Lesion Detection and Classification Model. Computer Systems Science & Engineering 2023, 44.

- Bala, D.; Abdullah, M.I.; Hossain, M.A.; Islam, M.A.; Rahman, M.A.; Hossain, M.S. SkinNet: An Improved Skin Cancer Classification System Using Convolutional Neural Network. 2022 4th International Conference on Sustainable Technologies for Industry 4.0 (STI). IEEE, 2022, pp. 1–6.

- Ramadan, R.; Aly, S. CU-net: a new improved multi-input color U-net model for skin lesion semantic segmentation. IEEE Access 2022, 10, 15539–15564.

- Kartal, M.S.; Polat, Ö. Segmentation of Skin Lesions using U-Net with EfficientNetB7 Backbone. 2022 Innovations in Intelligent Systems and Applications Conference (ASYU). IEEE, 2022, pp. 1–5.

- Vasudeva, K.; Chandran, S. Classifying Skin Cancer and Acne using CNN. 2023 15th International Conference on Knowledge and Smart Technology (KST). IEEE, 2023, pp. 1–6.

- Jayabharathy, K.; Vijayalakshmi, K. Detection and classification of malignant melanoma and benign skin lesion using CNN. 2022 International Conference on Smart Technologies and Systems for Next Generation Computing (ICSTSN). IEEE, 2022, pp. 1–4.

- Battle, M.L.; Atapour-Abarghouei, A.; McGough, A.S. Siamese Neural Networks for Skin Cancer Classification and New Class Detection using Clinical and Dermoscopic Image Datasets. 2022 IEEE International Conference on Big Data (Big Data). IEEE, 2022, pp. 4346–4355.

- Rasheed, A.; Umar, A.I.; Shirazi, S.H.; Khan, Z.; Nawaz, S.; Shahzad, M. Automatic eczema classification in clinical images based on hybrid deep neural network. Computers in Biology and Medicine 2022, 147, 105807.

- Mohamed, E.H.; Abubakr, A.F.; Abdu, N.; Khalil, M.; Kamal, H.; Youssef, M.; Mohamed, H.; ElSayed, M. A Hybrid Deep Learning Framework for Skin Cancer Classification using Dermoscopy Images and Metadata 2023.

- Bedeir, R.H.; Mahmoud, R.O.; Zayed, H.H. Automated multi-class skin cancer classification through concatenated deep learning models. IAES International Journal of Artificial Intelligence 2022, 11, 764.

- Rastegar, H.; Giveki, D. Designing a new deep convolutional neural network for content-based image retrieval with relevance feedback. Computers and Electrical Engineering 2023, 106, 108593.

- Ghosh, P.; Azam, S.; Quadir, R.; Karim, A.; Shamrat, F.; Bhowmik, S.K.; Jonkman, M.; Hasib, K.M.; Ahmed, K. SkinNet-16: A deep learning approach to identify benign and malignant skin lesions. Frontiers in Oncology 2022, 12, 931141.

- Nigar, N.; Umar, M.; Shahzad, M.K.; Islam, S.; Abalo, D. A deep learning approach based on explainable artificial intelligence for skin lesion classification. IEEE Access 2022, 10, 113715–113725.

- Agyenta, C.; Akanzawon, M. Skin Lesion Classification Based on Convolutional Neural Network. Journal of Applied Science and Technology Trends 2022, 3, 14–19.

- Malo, D.C.; Rahman, M.M.; Mahbub, J.; Khan, M.M. Skin Cancer Detection using Convolutional Neural Network. 2022 IEEE 12th Annual Computing and Communication Workshop and Conference (CCWC). IEEE, 2022, pp. 0169–0176.

- Singh, A.; Pandey, A.; Rakhra, M.; Singh, D.; Singh, G.; Dahiya, O. An Iris Recognition System Using CNN & VGG16 Technique. 2022 10th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions)(ICRITO). IEEE, 2022, pp. 1–6.

- Panthakkan, A.; Anzar, S.; Jamal, S.; Mansoor, W. Concatenated Xception-ResNet50—A novel hybrid approach for accurate skin cancer prediction. Computers in Biology and Medicine 2022, 150, 106170.

- Azizi, S.; Kornblith, S.; Saharia, C.; Norouzi, M.; Fleet, D.J. Synthetic data from diffusion models improves ImageNet classification. arXiv preprint arXiv:2304.08466 2023.

- Zephaniah, B. Comparison Of Keras Applications Prebuilt Model With Extra Densely Connected Neural Layer Accuracy And Stability Using Skin Cancer Dataset Of MNIST: HAM10000. PhD thesis, 2023.

- Alsahafi, Y.S.; Kassem, M.A.; Hosny, K.M. Skin-Net: a novel deep residual network for skin lesions classification using multilevel feature extraction and cross-channel correlation with detection of outlier. Journal of Big Data 2023, 10, 105.

- Alam, T.M.; Shaukat, K.; Khan, W.A.; Hameed, I.A.; Almuqren, L.A.; Raza, M.A.; Aslam, M.; Luo, S. An efficient deep learning-based skin cancer classifier for an imbalanced dataset. Diagnostics 2022, 12, 2115.

- Wang, Z.; Lyu, J.; Luo, W.; Tang, X. Superpixel inpainting for self-supervised skin lesion segmentation from dermoscopic images. 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI). IEEE, 2022, pp. 1–4.

- Tirumala, K.; Markosyan, A.; Zettlemoyer, L.; Aghajanyan, A. Memorization without overfitting: Analyzing the training dynamics of large language models. Advances in Neural Information Processing Systems 2022, 35, 38274–38290.

- Oh, S.; Moon, J.; Kum, S. Application of Deep Learning Model Inference with Batch Size Adjustment. 2022 13th International Conference on Information and Communication Technology Convergence (ICTC). IEEE, 2022, pp. 2146–2148.

- Ogundokun, R.O.; Maskeliunas, R.; Misra, S.; Damaševičius, R. Improved CNN based on batch normalization and adam optimizer. International Conference on Computational Science and Its Applications. Springer, 2022, pp. 593–604.

- Nayef, B.H.; Abdullah, S.N.H.S.; Sulaiman, R.; Alyasseri, Z.A.A. Optimized leaky ReLU for handwritten Arabic character recognition using convolution neural networks. Multimedia Tools and Applications 2022, 1–30.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).