1. Introduction

Since Carl Friedrich Gauss had developed the mathematical method of least squares in 1795, empirical equations fitted to data have become widely used in metrology and various other branches of science and engineering. However, even though knowledge about uncertainties of values calculated from such equations is indispensable, comprehensive, systematic and generally accepted techniques for estimating those uncertainties are still not available, and related fundamental questions continue to be disputed (Feistel et al 2016). However, ”the confidence level of a model’s predictions should be included in every modelling application” (Deletic et al 2012). In particular the handling of systematic errors in fitted data is insufficiently well known.

The motivation for developing the mathematical model presented in this paper arose from metrological problems encountered in the context of a new definition of relative humidity, RH (Lovell-Smith

et al 2016, Feistel and Lovell-Smith 2017). A new, physically justified definition of RH, traceable to the International System of Units, SI, may rely on thermodynamic properties such as chemical potentials, activities, fugacities or virial coefficients in aqueous gaseous, liquid or solid mixtures. Equations for such properties are available from documents of IAPWS

2, in particular as part of the international thermodynamic standard TEOS-10 (IOC et al 2010, IAPWS AN6-16 2016), while equations for the related uncertainty information are not or only incompletely reported for those quantities. For example, virial coefficients of water can be derived from the equation of state, but their uncertainties cannot (Feistel

et al 2015). Systematic methods for estimating such uncertainties from the equations available are missing or lack of general acceptance. An attempt of applying established GUM (2008, 2011) covariance propagation methods to the IAPWS equations resulted in seemingly significantly underestimated uncertainties derived for the properties of interest (Feistel

et al 2016, Lovell-Smith

et al 2017). Among the most likely reasons for this apparent deficiency may be the unclear way of propagating systematic errors in the measurement results that were used for the construction of the fundamental IAPWS equations.

For proper metrological application of IAPWS equations, sufficiently complete and appropriate uncertainty information is indispensable. The method suggested in this paper aims at addressing associated problems that are unresolved by now. While the mathematical formalism proposed here is rather general and may be suitable also for other cases of Generalized Least-Squares (GLS) regression, the focus is here on empirical equations whose adjustable coefficients had been obtained from large sets of various thermodynamic properties of the target substance, in particular, of water, by means of Weighted Least-Squares (WLS) regression. The authors of the original measurement data are typically not involved in the construction of the equations, and any uncertainty or possibly covariance information related to those measurements is restricted to what those authors had provided along with their publications. The aim of this paper is making available lacking estimates for input data correlations, by analysing the combined regression of data belonging to different authors, quantities or experimental setups. This situation is radically different from cases where the experimenters are able include correlation information of their own measurements in uncertainty budgets, such as for the determination of the Boltzmann constant (Gaiser et al 2017). Populating non-diagonal elements of the least-squares weight matrix based on simple assumptions have not led to sufficiently improved uncertainty estimates (Feistel et al 2016, Lovell-Smith et al 2017). The approach developed in this paper goes clearly beyond the model of Davidson and MacKinnon (2004, equation 7.88 therein) which is, to the knowledge of the authors, the so-far most advanced GLS algorithm available.

When an empirical thermodynamic equation of state is constructed for a certain substance such as H2O ice (Feistel and Wagner 2006), measurement results from various origins for various properties of ice are combined in a joint least-squares regression analysis. This way, all different thermodynamic properties become expressed as functions of a single common background set of regression coefficients. The corresponding close mutual mathematical relations between the properties, such as Maxwell relations, enforce internal consistency of the entire input data set; possible systematic errors of particular data groups typically result in conspicuous non-random pattern of their fitting residuals. This paper attempts to exploit this, otherwise commonly ignored pattern for estimating off-diagonal elements of the weight matrix. This approach is conceptionally consistent, as we believe, with recent suggestions of Willink (2013) and de Courtenay and Grégis (2017) for a “frequentist” method of including the systematic error of a single data set in the form of a statistical error revealed be the joint exploitation of related, alternative data sets. “A given systematic error appears like a realization drawn from a population of similar systematic errors of the same kind produced by all the laboratories that were accessible to the user and that were handling the same type of problem” (de Courtenay and Grégis 2017, p 9 therein). For the construction of an empirical thermodynamic potential, the naturally required combination of various thermodynamic properties measured by various methods and authors represents a most suitable option for the application of this concept. Typically, systematic errors remain unnoticed when a series of measurements is carried out with the same equipment and experimental procedure, while random errors usually disclose themselves by scattering values of repeated readings. Published data are often accompanied by uncertainty estimates that cover the scatter range, but hardly ever by covariance information reflecting concealed systematic offsets or trends. Comparison of results from different studies may reveal the existence of systematic deviations between them by discernable structures in graphical presentations.

It is clear that residual patterns may also result from inappropriate choices made for the regression functions, unrelated to possible systematic errors of the background data. However, there are no systematic and rigorous methods available for choosing the best set of regression functions. Here, we rely on the experience and careful data analysis carried out along with the regression procedure, so that trivial mistakes may largely be ruled out as possible reasons for residual structures of the fit. This assumption is reasonable for empirical equations endorsed by IAPWS and other published reference-quality equations.

Uncertainty of input data propagates to uncertainty of regression coefficients, which in turn propagates to any value computed from such an empirical equation. The mathematical framework for this propagation described by the GUM (2008, 2011) can be applied to regression methods in the form of the Generalized Least Squares (GLS) technique (Feistel et al 2016), where a covariance matrix associated with the regression coefficients is derived from the covariance matrix of the input data, whose diagonal elements (variances) express standard uncertainties, while the off-diagonal elements (covariances) may allow for effects of systematic errors (Draper and Smith 1998, Davidson and MacKinnon 1993, 2004, Lovell-Smith et al 2017) but are generally unavailable. Unfortunately, uncertainties of values computed from the empirical equation using this GLS covariance propagation method seem to be systematically and substantially underestimated, at least in part because the off-diagonal elements of the input covariance matrix (Feistel et al 2016) are unknown. This lack of knowledge is a major impediment to the ability to calculate and propagate uncertainty accurately using GLS. However, it may often be possible to populate the off-diagonal elements through analysis of structure in the residual error.

In this paper, an extended approach supporting the GLS method is suggested for including systematic errors in the regression procedure by generalizing the covariance matrix (expressing only random error) to a dispersion matrix (expressing random and systematic error). For this purpose, the stochastic ensemble of randomly varied input data, that was used previously to derive covariance propagation equations (Feistel 2011), is generalized here to include also systematic errors derived from additional sources such as structured residuals of a fit. While regression analyses generally act to reduce not just residual error and its structure, the new approach outlined here attempts to exploit the remaining structure, which represents readily available and relevant additional information.

In

Section 2, the basic relations of the GLS method are summarized in vector-matrix notation. In

Section 3, the term

simulation is used for the regression results that reproduce (with certain deviations) the original measurements. The projector formalism of Aitken (1936) for the description of simulation results and related residuals by the GLS method is generalized here for the propagation of covariance and dispersion matrices. A list of mathematical properties of the projection matrices is reported, including inequalities for the simulation dispersion matrix. In

Section 4, a stochastic ensemble is used as a model for the statistical description of potential errors, and introduced to the GLS weight matrix. For an ensemble describing random and systematic errors, an analytical formula of the weight matrix is given. In particular, to allow for systematic errors in the uncertainty propagation equations, a novel dispersion matrix will be defined as a suitable generalization of the conventional covariance matrix used for GLS regression. In

Section 5, the application of the projector formalism of

Section 3 to the error ensemble of

Section 4 permits a description of the effect of systematic errors on the simulation dispersion matrix of the GLS method. In

Section 6, a simple approach is suggested how systematic errors may be estimated from structured residuals of a fit, and this way included in the dispersion and uncertainty propagation equations of the GLS method. In

Section 7, it is demonstrated that the “error blindness” inherent to regression methods applies also to uncertainties derived from systematic errors by the new dispersion matrix method proposed in this paper.

Section 8 concludes that allowing for systematic errors in the uncertainty propagation of an empirical thermodynamic potential does not necessarily raise the resulting uncertainties to the quantitative level given by the scatter range of the input data. This “error blindness” is immediately visible in a tutorial regression example presented in the Appendix.

2. Generalised Least-Squares Equations

Let a given set of M measurement results, , i = 1,…, M, be observed under the conditions , at which the particular experiment, i, was conducted. For example, y1 may be the density of a given substance measured at the temperature T1 and the pressure p1, i.e., x1 = (T1, p1)T, and y2 may be the heat capacity of the same substance measured at the temperature T2 and the pressure p2, i.e., x2 = (T2, p2)T, etc. In the vector-matrix notation used here, a vector is conventionally a column vector, which is the transpose of a row vector, as indicated the superscript T. A combined standard uncertainty (GUM 2008), , is associated with each measurement result, which is assumed to also include contributions from uncertainties of measuring the conditions xi. This approach is consistent with numerous experimental works where uncertainties are reported of the desired quantity but rarely of the conditions under which the measurement took place. The formalism for propagation of uncertainties of independent variables, if known, to the dependent regression variable is routinely available from standard software packages (Cox and Harris 2006, Saunders 2014).

The measured values are fitted by a set of related empirical functions,

, each of which depends on the same set of

N <

M adjustable parameters,

,

i = 1,…,

N, regarded as the

regression coefficients. The coefficients

a are found by minimising a

generalised least-squares (GLS) expression,

p(

a), of the form (Davidson and MacKinnon 1993, 2004, Draper and Smith 1998, Strutz 2011, Feistel

et al 2016, Lovell-Smith

et al 2017),

with a suitably specified symmetric and positive definite (

M ×

M)

weight matrix W, whose inverse,

is known as the

covariance matrix of the input values,

y, and

is the vector of residual errors of the fit. Without relevant loss of generality, it is sufficient to consider in this paper only functions

f that are linear functions of

a because the validity of linear covariance propagation equations exploited in the following is restricted to a small vicinity of a local minimum of equation (1).

For functions

f linear in

a, and a regular matrix

A, a unique solution of the problem (1) exists for the regression coefficients (Aitken 1936, Davidson and MacKinnon 1993),

Here,

A is the symmetric (

N ×

N)

Gaussian normal matrix of the problem (1),

that is assumed here to be regular,

, and

is the (

N ×

M)

Jacobian (or

sensitivity matrix) of the empirical functions

f with respect to the regression coefficients,

a, evaluated at the matrix of the independent variables,

X, of the input vector,

y. For functions

f linear in

a, the Jacobian

Jf is a matrix independent of the regression coefficients,

a. Without loss of generality, any intercept of

f at

a = 0 may have been subtracted from

y in advance, so that for simplicity of the equations we may consider only intercept-free functions

f of the form

Following the GUM (2011) covariance propagation method, the covariance matrix of the regression coefficients

a is (Feistel

et al 2016)

The minimum which equation (1) takes is known as the

goodness of fit,

p(

a), which can be expressed by means of equation (3),

While the goodness of fit is a clear and easily available indicator for a suitable choice of the regression function and of the weights used for the data points, it only insufficiently reflects possible systematic structures within the distribution of the residuals. Checking the value (8) is therefore only a first necessary step before a more thorough analysis may be performed, such as by graphical inspection of the data scatter.

The purpose of the determined set of empirical regression coefficients

a is that certain functions of interest,

h(

a,

x) may be

predicted for certain (interpolated or extrapolated) independent values,

x, such as the entropy of the substance under study at special

T-

p conditions where no measurements had been made. Following the GUM (2011) covariance propagation method, the symmetric (

L ×

L) covariance matrix,

Uh, of a set of

L predicted quantities,

h, is computed from the covariance matrix,

Ua, equation (7), of the regression coefficients by (Feistel

et al 2016),

where

is the (

N ×

L)

Jacobian (or

sensitivity matrix) of the prediction functions

h with respect to the regression coefficients,

a, evaluated at the independent variables,

x, of the output vector of interest. Note that the functions

h may or may not include some of the empirical functions

f, and the independent variables

x may or may not include some of the points where the input values

y were measured. There is no restriction to the choice of the number

L. The main diagonal elements of the matrix

Uh represent the squares of the propagated combined uncertainties,

uc(

h), of the predicted values,

h.

As the covariance propagation equations are linear in the covariance matrices, equations (4), (7), (9), any coverage factor applied to input uncertainties is accordingly returned by the output uncertainties. “An expanded uncertainty U is obtained by multiplying the combined standard uncertainty uc by a coverage factor k. … The choice of the factor k, which is usually in the range 2 to 3, is based on the coverage probability or level of confidence required of the interval” (GUM 2008 section 3.3.7 therein). Coverage factors may be applied to the standard uncertainty regardless of whether the underlying statistics is Gaussian or not.

From equation (9) it is clear that providing the covariance matrix along with the regression coefficients of an empirical equation will empower users of the equation to determine uncertainties for any properties calculated from that equation, without needing the background data used in the equation’s construction. However, in order to allow for systematic errors in the corresponding propagation equations reported above, more general dispersion matrices will be defined and suggested in section 4, to be used in place of covariance matrices that allow for random errors only.

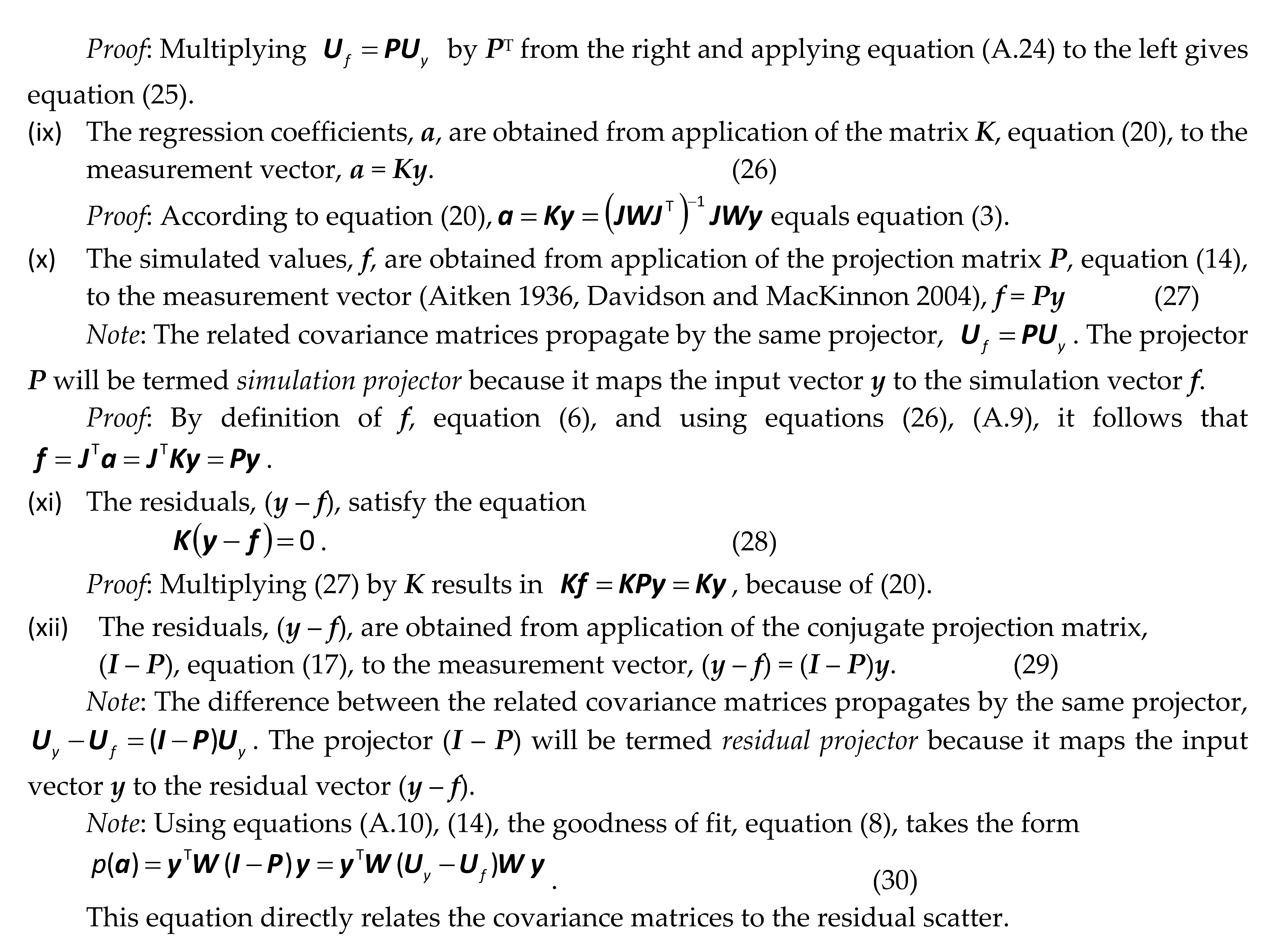

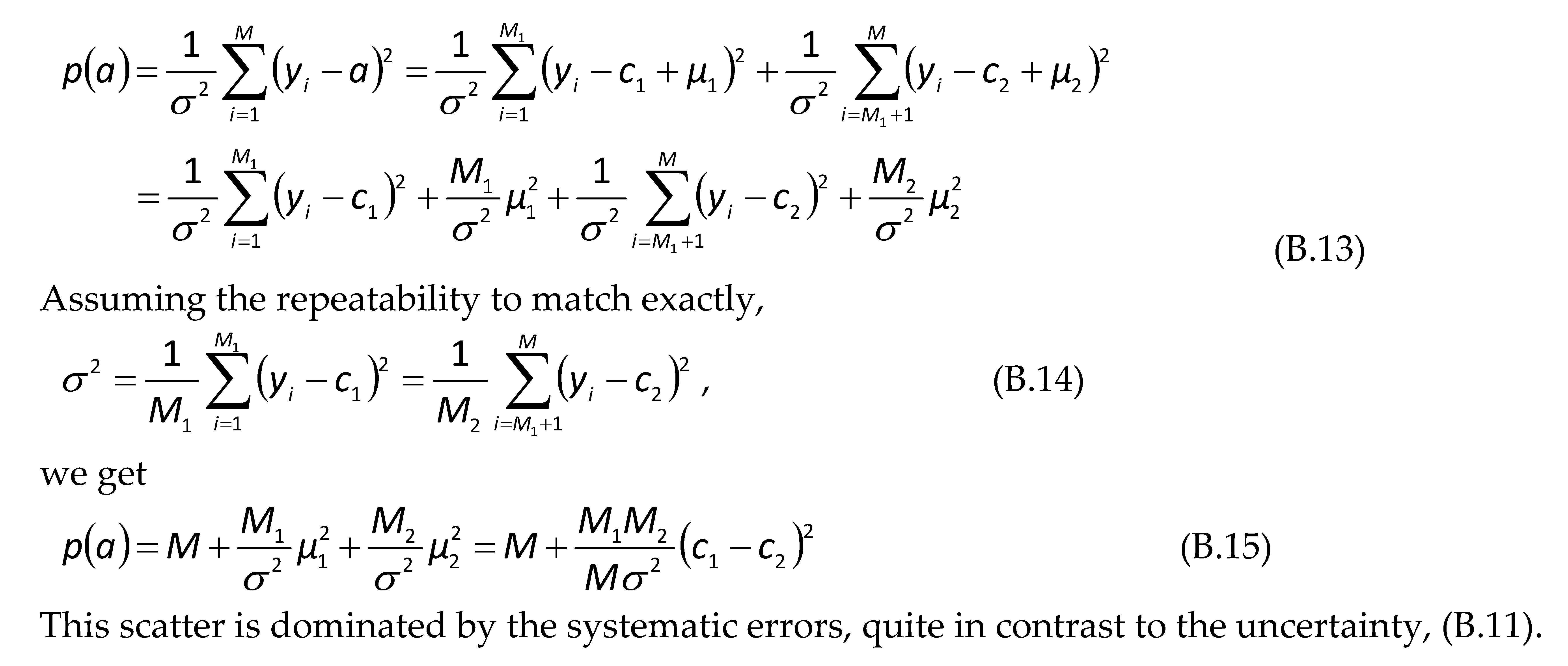

3. Simulation Covariance Equations

The uncertainties of the GLS input data are related to those of the associated GLS results by a linear projector whose properties are derived in this section. For the computation of the residuals, the set of prediction functions h is chosen to match the empirical functions f, both evaluated at the same set of independent variables. This set of functions f is regarded here as the simulation of the original measurements y by the empirical equation. In this case, the Jacobians Jf, equation (5), and Jh, equation (10) are identical matrices,

4. Stochastic Ensemble Equations

In this section, an analytical formula for the GLS weight matrix is derived in terms of random and systematic errors of the measurements. The metrological concepts of uncertainty and covariance have probabilistic backgrounds. Particular measured and calculated quantities may be understood as randomly selected samples drawn from a virtual large set of alternative values that is characterized by corresponding statistical properties (de Courtenay and Grégis 2017). Such sets are considered here, and may be termed stochastic ensembles in this context (Feistel 2011).

“It is understood that the result of the measurement is the best estimate of the value of the measurand, and that all components of uncertainty, including those arising from systematic effects … contribute to the dispersion” (GUM 2008, annex B.2.18 therein). Let a potential measurement vector,

m, be associated with the original measurement results,

y, by

where δ

m is termed the

error of the potential value with respect to “the best estimate of the value of the measurand”, so that the statistical properties of the error distribution define the related dispersion. “Traditionally, an error is viewed as having two components, namely, a

random component and a

systematic component” (GUM 2008, section 3.2.1 therein), where the “systematic error is equal to error minus random error” (GUM 2008, annex B.2.22 therein), that is,

Here, the

systematic error,

µ, is the “mean that would result from an infinite number of measurements of the same measurand carried out under repeatability conditions minus a true value of the measurand” (GUM 2008, annex B.2.22 therein), that is,

Here, the brackets

indicate the statistical average over such „an infinite number of measurements“. The

random error,

ξ, is the “result of a measurement minus the mean that would result from an infinite number of measurements of the same measurand carried out under repeatability conditions” (GUM 2008, annex B.2.21 therein), that is,

Separately for each vector,

m, of repeated potential measurements, a regression analysis can be performed with respect to the same regression functions, resulting in a corresponding statistical ensemble of regression coefficients. Modified by the small error, δ

m, the associated error of the particular regression coefficients,

, results from equation (3),

In turn, by means of the Jacobian (10), the error of

a propagates linearly (GUM 2008, annex E.3.2 therein) to errors of quantities

h derived from the empirical equation,

In contrast to the errors considered so far, GUM (2008, annex E.3.7 therein) “does not classify components of uncertainty as either ‘random’ or ‘systematic’”. GUM (2008, section 3.3.3 therein) “groups uncertainty components into two categories based on their method of evaluation, ‘A’ and ‘B’… These categories apply to uncertainty and are not substitutes for the words ‘random’ and ‘systematic’”. “Uncertainty of measurement is thus an expression of the fact that, for a given measurand and a given result of measurement of it, there is not one value but an infinite number of values dispersed about the result that are consistent with all of the observations and data and one's knowledge of the physical world, and that with varying degrees of credibility can be attributed to the measurand” (GUM 2008, annex D.5.2 therein).

An

uncertainty matrix is a matrix “containing on its diagonal the squares of the standard uncertainties associated with estimates of the components of a … vector quantity, and in its off-diagonal positions the covariances associated with pairs of estimates. … An uncertainty matrix is also known as a

covariance matrix or

variance-covariance matrix“ (GUM 2011, section 3.11 therein). The covariance of random variables

y and

z is defined by (GUM 2008, annex C.3.4 therein)

Applied to the measurements

m, the related covariance matrix is

However, this covariance matrix allows for random errors only and ignores systematic errors. For this reason, deviating from the GUM recommendation (38), we shall consider here the

dispersion matrix,

as a more suitable expression for the combined uncertainties of the measurements

m. The dispersion matrix is a generalization of the covariance matrix and reduces to the latter when systematic errors are absent. Non-diagonal elements, if any, of the covariance matrix, equation (38), result from correlations between random errors rather than from systematic errors, equation (39).

A manifest reason for the preferred presentation of propagation equations for covariance matrices, equation (38), rather than dispersion matrices, (39), within the GUM lies possibly in the fact that measurements are commonly corrected for known systematic errors and only random errors need to propagated to properties derived from the original measurements. In the context of multiple GLS regression, however, a-posteriori corrections of published measurement results are excluded, and systematic errors detected during the regression procedure need to be included in suitable mathematical tools for the uncertainty evaluation of the regression result. In this sense, GUM (2008, section 6.3.1 therein) notes that “occasionally, one may find that a known correction for a systematic effect has not been applied to the reported result of a measurement, but instead an attempt is made to take the effect into account by enlarging the ‘uncertainty’ assigned to the result. This should be avoided; only in very special circumstances should corrections for known significant systematic effects not be applied to the result of a measurement. Evaluating the uncertainty of a measurement result should not be confused with assigning a safety limit to some quantity.” GLS regression seems to be, as we assume here, such a “special circumstance”.

Taking the average,

, over a stochastic ensemble of errors given by equation (35), the dispersion matrix of the regression coefficients evaluates to

This equation (40) is consistent with the GUM covariance propagation equations (2), (7), (4) if the original covariance matrix,

, is now understood as the dispersion matrix,

, and if the errors scatter as given by the dispersion matrix

This relation defines the weight matrix of equation (1) in terms of the stochastic ensemble considered, or vice versa. In turn, as a result of the error propagation equation (36), the ultimately desired dispersion matrices , of a set of derived quantities, h, and , of the prediction functions, f, respectively, are computed from the propagation equations (9) and (12), starting from the dispersion matrix Ua.

Note that only the second moments of the error distribution are relevant here, regardless of any other details of the actual shape, as long as the linearization (e33) is justified by a negligible amount of large errors. This linearization approximation permits general analytical solutions for infinite statistical ensembles to be derived, avoiding the need to obtain particular numerical solutions from finite-size Monte-Carlo sampling on probability distributions that may not be known, as is often required for other uncertainty analyses (Deletic et al 2012).

A simple stochastic ensemble is that of uncorrelated random noise,

Here,

δij is the Kronecker symbol that equals 1 if

i =

j and 0 otherwise. From equation (41) and (42), the weight matrix is found to be diagonal (Feistel 2011),

In this special case, the GLS problem (1) reduces to weighted least squares (WLS). The variance of this ensemble may be identified with the measurement uncertainty, .

The matrix

W of equation (43) can be calculated analytically,

and the associated dispersion matrix of the regression coefficients, equation (7), then equals the covariance matrix

Extending this model, in order to allow systematic errors to be included in the stochastic ensemble, the definition (42) may be generalized to the model of equation (39),

where each potential error value has some systematic mean offset,

µi, that is unaffected by taking the ensemble average, plus a random error. While the average over the random error is zero, see equation (34), the mean value

results from the non-random error contribution. The related combined uncertainties are given by the diagonal elements of

Uy,

Note that neglecting the random-error part of equation (46) would result in a singular matrix Uy for which the inverse W does not exist. A model similar to equation (46) is discussed by Davidson and MacKinnon (1993, p324 therein, and 2004, equation 7.88 therein).

Related to equation (46), the GLS weight matrix follows from equation (41),

where the systematic errors give rise to non-vanishing off-diagonal elements. The inverse matrix of equation (48),

, can be calculated analytically by the Sherman-Morrison-Woodbury formula (Seber and Lee 2003, equation A.9.4 therein). It has the elements

This formula avoids the need for numerical inversion of the typically very large (M × M) matrix (48). It permits the explicit formulation of the GLS problem, equation (1), and the analytical computation of the related normal matrix, , for the systematic-error model (46).

Using the matrix (49), it is instructive to express the least-squares condition, equation (1), in the form

Here,

α(

a) is the angle

4 between the weighted residual vector,

and the vector of weighted systematic errors,

The structure of equation (50) reveals that the GLS fit of the model (48) results in a compromise between minimizing the WLS sum of squared residuals, |r|², that is, adjusting the simulation, f, close to the measurement, y, and minimizing the angle α, that is, adjusting the residuals r close to the errors s, or, in other words, the simulation, f, close to the “corrected” measurement, (y – µ).

The dispersion matrix of the regression coefficients, which is suggested to be reported in conjunction with any empirical equation and its coefficients, is given by equations (7), (4),

Related to the special weight matrix W given by equation (49), the requisite (N × N) inverted matrix Ua is not available in analytical form. As this matrix contains only numerical constants associated with the given equation, the numerical inversion is required only once.

There may be various forms of, or reasons for systematic errors. As well, more complicated statistical properties than those given by equation (46) may be implemented by suitable stochastic ensembles. A key question is how such a matrix may be estimated for practical regression analysis, at least for a model like that of equation (46).

5. Uncertainty of Simulation

Making use of the stochastic ensemble, equation (48), the simulation dispersion matrix is

The uncertainty of the reproduced values consists of two additive contributions, one resulting from the random error, and one from the systematic error. Note that by its definition, equation (14), the projection matrix

P itself is not independent of the stochastic ensemble chosen, namely

Because of equation (25), equation (54) can be reformulated in an explicitly symmetric form,

Let

v be a normalized vector,

vTv = 1. Consider the quadratic form

The second term is the squared vector norm,

In the absence of systematic errors, the main-diagonal elements of the matrix

A, equation (4), consist of sums of

M quadratic terms and will increase with each additional input data point. Consequently, the elements of the matrices

Ua, equation (7), and

Uf, equation (12), are expected to decrease like 1/

M with an increasing number of data points,

M (Davidson and MacKinnon 2004, GUM 2008 annex E.3.5 therein). The prediction uncertainties related to random errors may take values that become the smaller the more input data are included because the simulation covariance is proportional to the inverse normal matrix,

The first term of equation (57) is just the square of a scalar,

The quadratic form, equation (57), is bounded from below by the systematic errors,

If, in particular, v is chosen to have only a single non-zero component, the quadratic form (57) is reduced to the squared uncertainty of a predicted (reproduced) value given by the related main-diagonal element of Uf. So, the uncertainties of simulated values cannot be less than the limit given by the systematic errors in the form of the inequality (61).

The inequality (61) is the main result of this section. Its meaning may be discussed in slightly more detail here. As described in

Section 3, the matrix

P is a projector that maps any

M-dimensional measurement vector to an

N-dimensional subspace spanned by the

N eigenvectors of

P that form the sensitivity matrix

J of the

M predicted values,

f, with respect to the

N regression coefficients,

a. The product

Pµ is the projection of all

M systematic errors

µ of the measurements to that subspace, expressing the partial effect of the systematic errors that is transferred by the

N regression coefficients to the

M predicted values.

Let

be the representation of the systematic-error vector

µ in terms of eigenvectors of

P. Here,

Ji,

i = 1, …,

N, are the eigenvectors belonging to the eigenvalue 1, see equation (19), given by the sensitivity matrix

J, and

Ji’,

i =

N+1, …,

M, are the eigenvectors of

P with respect to the eigenvalue 0, see equation (18), which are eigenvectors to the eigenvalue 1 of the conjugate projector, (

I –

P). The

ci are the corresponding expansion coefficients. Projection by

P reduces the sum (62) to its first

N terms, thus rendering irrelevant the other eigenvectors,

Ji’,

This way, a certain part of the systematic error is suppressed by the smoothing effect of the regression procedure, mathematically represented by the simulation projector

P, and only the part affecting the regression coefficients is carried on. Introducing a vector

c of those

N expansion coefficients, the sum in equation (63) may be written in matrix notation,

Making use of the orthogonality, equation (23), and of equation (21), the vector

c in equation (64) can explicitly be calculated from

µ by the relation,

The matrix

K is available from the computation of the regression coefficients in terms of the input measurements, equation (26),

a =

Ky. Because

µ is the systematic error of the measurement vector

y, the analogy to equation (65),

c =

Kµ, indicates that the vector

c can be understood as the systematic error of the regression coefficients,

a. Similarly, since

f =

Py is the simulation vector,

Pµ in equations (61) and (64) can be understood as the systematic error of the simulation. The quadratic form, equation (61), can be expressed in terms of

c,

If we choose, for example, the vector

v to hold only its first component,

vT = (1, 0, …, 0), the inequality (61) provides the lower bound for the prediction uncertainty at the first measurement point, due to systematic errors, by the expression

Evidently, this lower bound is combined of the joint effects of systematic errors at all data points via equation (65), not solely at the first measurement itself. Estimating those systematic errors from structures discernible in the scatter of regression residuals is the topic of the next section.

6. Estimating Systematic Errors from Regression Residuals

The Type B standard uncertainty “is evaluated by scientific judgement based on all of the available information on the possible variability” (GUM 2008, section 4.3.1 therein) of the input quantity. Given a set of measurement values and their Type A uncertainties, only a diagonal weight matrix, equation (43), is available. After the regression has been carried out by minimizing the related WLS sum, equation (1), by a suitable choice of regressor functions and subsequent determination of the regression coefficients,

a, each measured value,

yi, is then associated with a residual, Δ

yi, of the fit,

This residual may be regarded as the error of the measurement with respect to the fit. This error typically consists of a systematic part,

si, and a random part,

ri,

For reasonably separating the given residuals Δyi in two parts, information additional to the original mathematical problem is required.

The full input data set may be split into groups, each belonging to a certain data source (article, experiment, author, etc.). It may be assumed that some kind of systematic error is characteristic for all values that belong to the same group, and that this common kind of deviation is reflected in discernible structures in the fitting residuals of this group, such as an offset or trend relative to the overall fit covering all groups together. In such a case, the residuals of a selected group may again be fitted separately to an auxiliary empirical function, s(x), that accounts for their systematic error. This fit leaves over a reduced but random residual that accounts for the random error of the group members. The offset, si = s(xi), may then be identified with the systematic error, µi, of the stochastic ensemble, equation (46), while the remaining random error, σi, may be derived from the combined uncertainty, equation (47), or alternatively from the scatter relative to the auxiliary function. With such estimates available for each measurement, an improved dispersion matrix, equation (49), can be constructed for the regression coefficients.

Structure in the residuals is evidence of commonality of error and hence correlation. This error could, at least in part, be corrected, leaving a random scatter of error characterised by the uncertainty in the correction. However, in many cases the residual error structure cannot be corrected, in which case we need to account for this error structure in the uncertainty budget. For example, a linear trend in the residuals of a particular set of data suggests a common error perhaps scaled by one of the measurement data quantities. The scatter of points around the linear trend represents the residual random error for that part of the fitted data curve, and can be quantified as a standard deviation. This approach is covered to some extent by Lovell-Smith et al (2017). It suggests that the systematic error terms in those data points which form a set sharing a trend or auxiliary empirical function, should be considered fully correlated, which is what the simple model (46) assumes.

As a cross-check, the full GLS regression may be repeated with the new weight matrix containing off-diagonal elements for inspecting the residual structure. Also, the uncertainty of the empirical equation derived from GLS may be compared with other uncertainty estimates possibly available from other sources. Iterative adjustments of the systematic-error estimates, µi, may be carried out in order to achieve sufficient agreement. Because the dispersion matrix provides uncertainty estimates also for quantities for which no such estimates were available before, these new estimates will this way become consistent with those available previously.

7. Error blindness

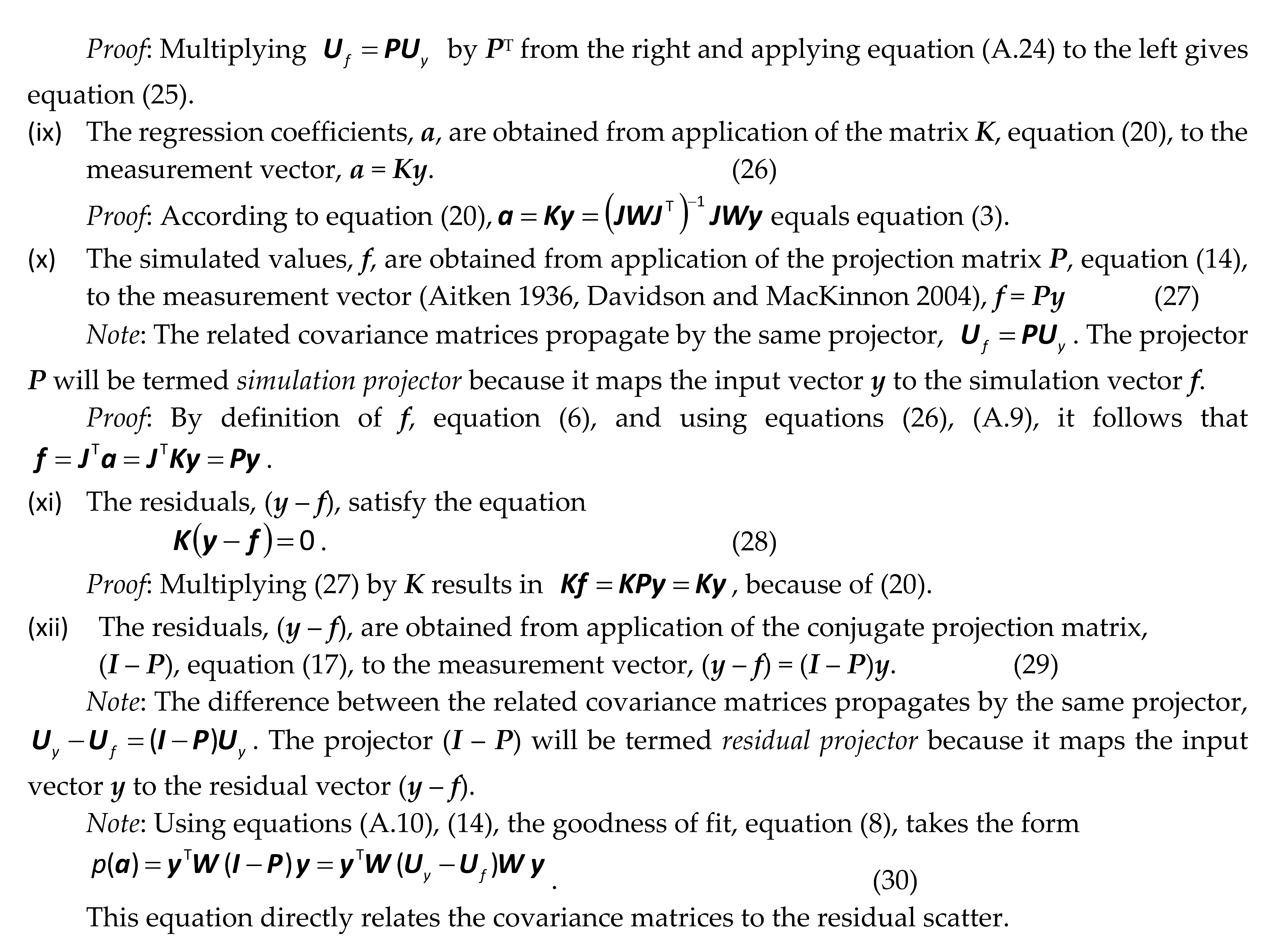

It is possible that errors belonging to a class of data points with certain mathematical properties, regardless of how large those errors may be, do not propagate to related errors of the regression coefficients. In turn, those errors will not be reflected by the dispersion matrix of the regression coefficients, and will consequently neither affect the estimated uncertainties of the regression function nor of any properties derived thereof. This insensitivity will here be termed error blindness of the regression coefficients with respect to that error class. A simple example is the calculation of the arithmetic mean value with respect to systematic offsets of the data groups involved, see Appendix B.

The propagation of errors of the measurements to those of the regression coefficients is governed by equation (35). A regression function is termed

error-blind with respect to a certain error δ

m ≠ 0 if

Here, K is the (N × M) matrix defined by equation (20).

Error-blind regression functions may lead to significantly underestimated uncertainties of quantities computed thereof. If the dispersion matrix of the regression matrix is unable to propagate significant errors of the input data to the output, the reporting of that matrix along with the regression coefficients will represent an insufficient tool for the description of deviations of input data from predicted properties of the corresponding equation.

In a sense, error-blindness is an inherent feature of least-squares regression. It is not difficult to imagine that various different point clouds may be represented by exactly the same regression function. Least-squares fitting is even designed as a method for suppressing noise in data, rendering details of the noise irrelevant for the result. The

M –

N dimensional vector space of

M differential errors d

m that belong to the same regression coefficients

a obeys a homogeneous system of

N linear equations (70) in the form

Thus, in the sense that there are much more error components,

M, in equation (71) than there are restricting conditions,

N, one may even argue that the regression coefficients are “blind to most of the errors”, in particular also to any multiples of the residual vector, equation (28). Systematic errors,

µ, that obey equation (71),

do not raise the final uncertainty, equation (66), despite arbitrarily large residuals.

In the context of this paper, the most relevant questions is what kind of systematic errors contained in the GLS weight matrix (48) may not propagate into the dispersion matrix (53). The governing uncertainty propagation equation is

Writing the weight matrix (49) in compact form,

its multiplication (73) with the Jacobians

J gives

Evidently, systematic errors

µ do not propagate to the dispersion matrix

Ua of the regression coefficients if they fall under the condition

Uncertainty estimates derived from the dispersion matrix for the regression function will be “blind” to any systematic errors with this property, even if those errors may be substantial and significant.

Calculating from equation (74) the product

demonstrates that the condition (76) implies error blindness, equation (72), for

K defined by equation (20) in the special case of simulation,

J =

Jf. Evidently, systematic errors that do not propagate to the dispersion matrix do neither propagate, too, to the regression coefficients.

The decomposition of the regression residuals into systematic and random errors,

may be done as described by equation (69) or in any other suitable way. Independently of that particular separation method applied, by considering the property (28), the multiplication of (78) with the matrix

K always results in

However, if the systematic error µ is identified with its estimate s, Ks = Kµ is the systematic contribution to the uncertainty, equation (65). It follows that the effect of error blindness does not fully suppress the non-statistical effect of systematic error, but reduces its magnitude to that of the residual random error.

8. Summary

When reference-quality empirical equations of state are constructed from large, heterogeneous sets of data for various quantities that had been published by various authors in the course of decades of years, such as the fundamental geophysical equations for air (Lemmon et al 2000), fluid water (Wagner and Pruß 2002), ice (Feistel and Wagner 2005, 2006) or seawater (Feistel 2003, 2008, 2010), the extended requisite set of regression functions involved is typically selected by numerous laborious trial-and-error iterations. A subjectively “best” version is obtained as a suitable compromise between a minimum value of the goodness of fit, equation (8), a minimum number of regression coefficients, minimum mathematical complexity of the optional regression functions, a smooth and reasonably extrapolating overall regression function without spurious oscillations of its first three or four partial derivatives, and well-balanced scatter of uncertainty-weighted residuals in the various regions of the state space and of the various derived quantities. One may hardly imagine that this demanding, complicated process may be satisfactorily accomplished by any simple, fully automatic algorithm.

As a result of such a “best fit”, certain data groups are represented very well, scattering randomly about the regression curve. Other data groups may deviate more systematically with clear trends or offsets. Both situations should properly enter the uncertainty estimates associated with the final regression function. However, only in rare cases such empirical equations of state were published together with a covariance or dispersion matrix of the regression coefficients, such as suggested by the GUM (Feistel et al 2016, Lovell-Smith et al 2017). Rather, uncertainty estimates are reported for only a few selected quantities, in particular for those where measurements actually entered the fit. Those estimates are often obtained from unsystematic and subjective, expert-based assessments. One of the main reasons for this unsatisfactory situation is the fact that, starting from available estimates for systematic and random uncertainties of the input data, there is no formal procedure available for the construction of a reasonable dispersion matrix of the regression coefficients from those input uncertainties. The aim of this paper is to reduce this profound deficiency.

The mathematical formalism developed in this article allows including systematic errors in the weight matrix of a fit, and accordingly in the dispersion matrix of the regression coefficients. The regression procedure suggested here consists of the following steps:

Accepting uncertainties,

ω, such as those reported by authors along with their measurements,

y, a WLS fit with a diagonal weight matrix, equation (44),

is performed. It is common practice that this step may be repeated for optimizing the set of regressor functions and their adjustable coefficients. Finally, from the structure of the residuals, systematic errors,

µ, and the range of random scatter,

, are estimated, as described in section 6. The regressor function values

f’ simulated by the fit, and their scatter (

y –

f’), respectively, are obtained from the projectors

P’ and (

I –

P’), equations (27), (29), applied to the vector of measurements,

y. Here, the prime ‘ added at the variables is used to indicate that these least-squares quantities are tentative ones, belonging to the preparatory first step of the method.

With the same data points and regressor functions as before, the fit is repeated using a GLS weight matrix, equations (48), (49),

The result of this fit is considered the final empirical equation. Consistent with its regression coefficients, their dispersion matrix, equation (53),

is calculated for being reported along with the regression coefficients.

External subsequent users of the published equation may estimate uncertainties (and possibly also dispersion matrices associated with sets of output data) of values

h calculated from the equation (within its range of validity) by equation (9),

The main diagonal elements of the dispersion matrix Uh represent the squared uncertainties of the predicted values, h.

Uncertainties of empirical equation estimated this way, including random and systematic errors of individual data sets, represent the reliability and robustness of the given equation with respect to variation of the input data within their uncertainties. The output data uncertainty, equation (83), should not be confused with, as the GUM is calling it, a safety limit to some quantity. As well, it should not be confused with the likely range of scatter of possible future measurements under reproducibility, or similar, conditions.

Acknowledgement:

The authors are grateful to the referee for critical comments and helpful suggestions. This paper contributes to the tasks of the Joint SCOR/IAPWS/IAPSO Committee on the Properties of Seawater (JCS). Organisations financially supporting this work include the Scientific Committee on Oceanic Research (SCOR), with funding from national SCOR committees, and the International Association for the Properties of Water and Steam (IAPWS), with funding from IAPWS National Committees. Part of this work was funded by the New Zealand Government as part of a contract for the provision of national measurement standards.

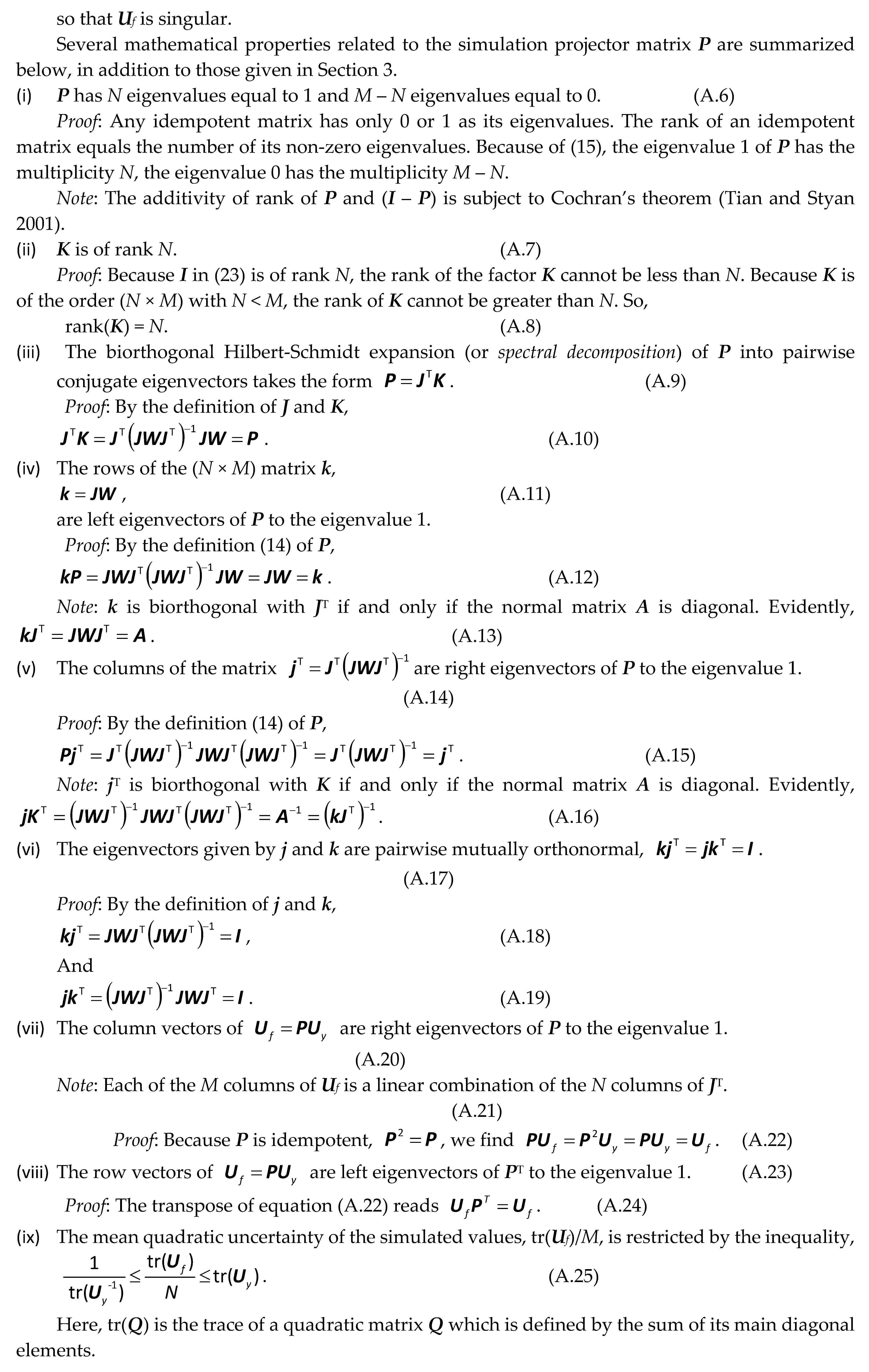

Appendix A: Additional Properties Related to the Simulation Projector P

References

- Aitken A C 1936 IV.—On Least Squares and Linear Combination of Observations Proc. Roy. Soc. Edinburgh 55 42–8. [CrossRef]

- Baksalary O M, Bernstein D S and Trenkler G 2010 On the equality between rank and trace of an idempotent matrix Appl. Math. Comp. 217 4076–80. [CrossRef]

- Coope I D 1994 On matrix trace inequalities and related topics for products of Hermitian matrix J. Math. Anal. Appl. 188 999–1001. [CrossRef]

- de Courtenay N and Grégis F 2017 The evaluation of measurement uncertainties and its epistemological ramifications Stud. Hist. Phil. Sci. in press. [CrossRef]

- Cox M G and Harris P M 2006 Uncertainty evaluation. Software support for metrology, best practice guide No. 6 NPL Report DEM-ES-011 National Physical Laboratory, Teddington, UK. http://publications.npl.co.uk/npl_web/pdf/dem_es11.pdf.

- Davidson R and MacKinnon J G 1993 Estimation and Inference in Econometrics (New York: Oxford University Press).

- Davidson R and MacKinnon J G 2004 Econometric Theory and Methods (New York: Oxford University Press).

- Deletic A, Dotto C B S, McCarthy D T, Kleidorfer M, Freni G, Mannina G, Uhl M, Henrichs M, Fletcher T D, Rauch W, Bertrand-Krajewski J L and Tait S 2012 Assessing uncertainties in urban drainage models Phys. Chem. Earth 42–44 3–10. [CrossRef]

- Draper N R and Smith H 1998 Applied Regression Analysis (New York: Wiley Interscience).

- Feistel R 2003 A new extended Gibbs thermodynamic potential of seawater Progr. Oceanogr. 58/1 43-115. [CrossRef]

- Feistel R 2008 A Gibbs function for seawater thermodynamics for -6 °C to 80 °C and salinity up to 120 g/kg Deep-Sea Res. I 55 1639-71. [CrossRef]

- Feistel R 2011 Extended equation of state for seawater at elevated temperature and salinity Desalination 250 14–8. [CrossRef]

- Feistel R 2011 Stochastic ensembles of thermodynamic potentials Accred. Qual. Assur. 16 225–35. [CrossRef]

- Feistel R and Lovell-Smith J W 2017 Defining relative humidity in terms of water activity. Part 1: definition Metrologia 54 566–76. [CrossRef]

- Feistel R, Lovell-Smith J and Hellmuth O 2015 Virial Approximation of the TEOS-10 Equation for the Fugacity of Water in Humid Air Int. J. Thermophys. 36 44-68. [CrossRef]

- Feistel R, Lovell-Smith J W, Saunders P and Seitz S 2016 Uncertainty of empirical correlation equations Metrologia 53 1079–90. [CrossRef]

- Feistel R and Wagner W 2005 High-pressure thermodynamic Gibbs functions of ice and sea ice J. Marine Res. 63 95-139.

- Feistel R and Wagner W 2006 A new equation of state for H2O ice Ih J. Phys. Chem. Ref. Data 35 1021–47. [CrossRef]

- Gaiser C, Fellmuth B, Haft N, Kuhn A, Thiele-Krivoi B, Zandt T, Fischer J, Jusko O and Sabuga W 2017 Final determination of the Boltzmann constant by dielectric-constant gas thermometry Metrologia 54, 280-9. [CrossRef]

- Gantmacher F R 1959 The Theory of Matrices Vol. 1 (Providence, Rhode Island: AMS Chelsea Publishing). [CrossRef]

- GUM 2008 Evaluation of measurement data - guide to the expression of uncertainty in measurement. JCGM 100:2008. www.bipm.org/en/publications/guides/gum.html.

- GUM 2011 Guide to the expression of uncertainty in measurement, Supplement 2, JCGM 102:2011. www.bipm.org/en/publications/guides/gum.html.

- Horn R A and Johnson C R 1984 Matrix analysis (Cambridge: Cambridge University Press).

- IAPWS AN6-16 2016 Advisory Note No. 6: Relationship between Various IAPWS Documents and the International Thermodynamic Equation of Seawater - 2010 (TEOS-10) (Dresden, Germany: The International Association for the Properties of Water and Steam). http://www.iapws.org.

- IOC, SCOR and IAPSO 2010 The international thermodynamic equation of seawater—2010: Calculation and use of thermodynamic properties Intergovernmental Oceanographic Commission, Manuals and Guides No. 56, UNESCO (English), Paris. http://www.TEOS-10.org.

- Lemmon E W, Jacobsen R T, Penoncello S G and Friend D G 2000 Thermodynamic properties of air and mixtures of nitrogen, argon and oxygen from 60 to 2000 K at pressures to 2000 MPa J. Phys. Chem. Ref. Data 29 331–62. [CrossRef]

- Lovell-Smith J W, Feistel R, Harvey A H, Hellmuth O, Bell S A, Heinonen M and Cooper J R 2016 Metrological challenges for measurements of key climatological observables. Part 4: atmospheric relative humidity Metrologia 53 R40–R59. [CrossRef]

- Lovell-Smith J W, Saunders P and Feistel R 2017 Unleashing empirical equations with “Nonlinear Fitting” and “GUM Tree Calculator” Int. J. Thermophys. in press. [CrossRef]

- Marsaglia G and Styan G P H 1974 Equalities and Inequalities for Ranks of Matrices Linear Multilinear Algebra 2 269–92. [CrossRef]

- Penrose R 1955 A generalized inverse for matrices Math. Proc. Cambridge Phil. Soc. 51 406–13. [CrossRef]

- Saunders P 2014 Nonlinear fitting software instructions version 5.0 Callaghan Innovation Report 18 (5.0) Measurement Standards Laboratory of New Zealand, Lower Hutt, New Zealand.

- Seber G A F and Lee A J 2003 Linear Regression Analysis, 2nd edn (Hoboken, New Jersey: John Wiley).

- Strutz T 2011 Data Fitting and Uncertainty (Wiesbaden: Vieweg + Teubner).

- Tian Y and Styan G P H 2001 Rank equalities for idempotent and involutory matrices Linear Algebra Appl. 335 101–17. [CrossRef]

- Wagner W and Pruß A 2002 The IAPWS formulation 1995 for the thermodynamic properties of ordinary water substance for general and scientific use J. Phys. Chem. Ref. Data 31 387–535. [CrossRef]

- Willink R 2013 Measurement uncertainty and probability (Cambridge: Cambridge University Press).

- Yang X 2000 A Matrix Trace Inequality J. Math. Anal. Appl. 250 372–4.

| 1 |

|

| 2 |

|

| 3 |

The rank of a matrix is the maximum number of linearly independent column vectors (or equivalently, row vectors) that form the matrix (Horn and Johnson 1984). |

| 4 |

The angle α between two vectors r and s is defined by their scalar product, rTs = |r||s|cos α

|

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).