1. Introduction

Natural vegetation restoration following intentional clearing is broadly seen as successful if the previous plant community composition is reinstated and biomass is tracking towards a benchmark [1]. This simplistic view does not consider the decades it can take for a site to regain most of its biodiversity and ecosystem attributes [2,3], nor the myriad factors that can derail restoration, such as weed invasions or changes to the fire regime. Indeed, the path to restoration is dynamic and requires both short- and long-term monitoring. Short-term monitoring must allow managers to intervene, for example by removing weeds, so that long-term success can be achieved. Longer-term monitoring should examine change and trajectory to evaluate success and/or refine the strategies that will lead to success [4].

UAVs (or “drones”) have been hailed as the key to revolutionising spatial ecology for over a decade [5], though some authors temper this view, noting that processing workflows in this space are still nascent [6,7]. Nevertheless, drone imagery offers the finest spatial resolution available today and is usually more cost-effective than very high-resolution satellite imagery when the area of interest (e.g., 10 ha) is much smaller than a satellite scene [8]. Drone captures are useful for informing short-term monitoring of species composition [9]. However, repeat acquisitions require ground crews to be available to pilot the UAV on anniversary dates, which can be logistically challenging.

Sun-synchronous satellites such as Sentinel-2 collect imagery continuously, which avoids the logistical overhead of UAV flights [10] and ensures that imagery is always acquired around anniversary dates. However, the spatial resolution of most research-focussed satellites (c. 10 m) limits the detection of individual plants. Planet’s Dove and Superdove satellites are cubesats flown in multiple large flocks that together image the Earth daily at 3–5 m resolution. This makes them one of the greatest, if not the greatest, sources of data for fine-scale, near-real-time monitoring available today. Compared with Sentinel-2 or Landsat, cubesats are tiny, weighing around 4 kg and orbiting at around 400 km. Imagery is provided in RGB and near-infrared bands at low cost [11].

A practical monitoring system that combines the very high resolution of UAV imagery with the routine acquisition of satellite imagery could meet both the short- and long-term requirements of rehabilitation assessments. Fractional cover mapping uses very high-resolution discrete classifications from drone imagery to derive the fraction of each land cover within pixels of lower-resolution imagery (e.g., Sentinel-2 or Planet). For example, a plant occupying a third of a 10 m pixel would be recorded as 33% cover. Repeat fractional cover mapping provides the consistent assessment of vegetation cover through time that is needed to make ecological management decisions, particularly about the trajectory of rehabilitation [12].
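The aggregation step behind fractional cover can be illustrated with a minimal plain-Python sketch. It assumes the classified UAV raster has already been co-registered to the satellite grid so that each coarse pixel corresponds to an exact `block × block` window of UAV pixels; at c. 3.8 cm UAV resolution a 10 m pixel spans roughly 260 UAV pixels per side, so real workflows usually resample rather than rely on an integer ratio. The function name and class labels are hypothetical.

```python
from collections import Counter


def fractional_cover(class_grid, block, classes):
    """Aggregate a classified fine-resolution grid into coarse-pixel cover fractions.

    class_grid: list of rows of class labels (e.g. a classified UAV mosaic)
    block: number of fine pixels per coarse pixel along each axis (assumed integer)
    classes: the labels whose fractions should be reported
    Returns a list of rows; each coarse pixel is a dict {label: fraction in [0, 1]}.
    Fine pixels left over at the right/bottom edge (incomplete blocks) are ignored.
    """
    n_rows, n_cols = len(class_grid), len(class_grid[0])
    total = block * block
    fractions = []
    for r0 in range(0, n_rows - block + 1, block):
        row = []
        for c0 in range(0, n_cols - block + 1, block):
            counts = Counter(
                class_grid[r][c]
                for r in range(r0, r0 + block)
                for c in range(c0, c0 + block)
            )
            row.append({label: counts[label] / total for label in classes})
        fractions.append(row)
    return fractions


# Hypothetical 3 x 3 block: one plant ("native") covers a third of the coarse pixel.
grid = [
    ["native", "native", "native"],
    ["other", "other", "other"],
    ["other", "other", "other"],
]
print(fractional_cover(grid, 3, ["native", "exotic", "other"]))
```

The resulting per-pixel fractions are what a regression model would then relate to the satellite bands.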

The Water Corporation of Western Australia are permitted to clear native vegetation as part of their operations (e.g., water supply, wastewater, irrigation and drainage). Cleared vegetation must be restored to meet regulatory requirements. Monitoring is required for at least 2 years, but longer monitoring is expected in future before sites are relinquished. Ground-based surveys are not frequent enough to be relied on for timely management intervention. An operational remote sensing approach would assist monitoring of the distribution of native and exotic vegetation and its change over time. Here, we examine three study sites of differing species composition and rehabilitation age to explore the potential of integrating UAV and satellite imagery for ongoing monitoring of vegetation restoration. Our aims are to: (1) determine useful image-derived variables for discriminating between native and exotic species; (2) compare classification performance for native and exotic species; and (3) determine the feasibility of using satellite data and classified UAV images to perform fractional cover mapping for monitoring vegetation cover over time.

4. Discussion

Our results demonstrate the ability of standard drone imagery to detect individual plants and discriminate between broad species classes, as previously shown by Gomez-Sapiens et al. [9]. However, assessing native vegetation recovery, and factors such as weed invasion that can curtail the re-establishment of biodiversity, requires repeat acquisitions. Satellite remote sensing is a more convenient way to add this temporal component [10], but imagery has typically been either too expensive or too coarse to provide useful information at the plot scale [8]. We investigated the applicability of freely available 10 m Sentinel-2 imagery and low-cost 3 m imagery from Planet’s Dove and Superdove satellites for estimating the proportion of exotic and native species within each pixel using fractional cover mapping. When repeated over time, this allows vegetation cover trends, including exotic weed expansion and native vegetation trajectory, to be identified [12].
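As a simple illustration of what a cover "trend" means at the pixel level, a linear slope can be fitted to a pixel's annual cover fractions. This is a minimal sketch under stated assumptions: per-pixel ordinary least squares is a swapped-in technique, not the RGB trend-composite approach of Robinson et al. [55] summarised in Table 3, and the input values are hypothetical.

```python
def linear_trend(years, fractions):
    """Ordinary least-squares slope of cover fraction against year for one pixel.

    Years with no usable image can be passed as None and are skipped.
    Returns the change in cover fraction per year.
    """
    pairs = [(x, y) for x, y in zip(years, fractions) if y is not None]
    if len(pairs) < 2:
        raise ValueError("need at least two observations to fit a trend")
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    return sxy / sxx


# Hypothetical exotic-cover fractions for one pixel (2019 image missing).
years = [2016, 2017, 2018, 2019, 2020]
exotic = [0.10, 0.15, 0.20, None, 0.30]
print(f"{linear_trend(years, exotic):+.3f} per year")
```

A positive slope for the exotic fraction would flag possible weed expansion; a single slope cannot distinguish the recent-recovery patterns that the RGB composite is designed to show.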

We used raw spectral bands, vegetation indices, textural images and vegetation height as inputs to classification, following Wilson et al. [59]. The variables that were important (VIP ≥ 1) at every site comprised spectral bands (n = 4) and vegetation indices (n = 3). Spectral bands and vegetation indices are prevalent in image classification, and our findings are consistent with satellite-based (e.g., [60,61]) and UAV-based (e.g., [16,23]) studies. The red-edge band was not useful at any of the three sites. This is in line with the results of Pu and Cheng [62] for mapping mixed forest, and Darvishzadeh et al. [63] for estimating the leaf area index of structurally different plants with different soil backgrounds and leaf optical properties. However, many other studies have found the red-edge band to be essential for discriminating between species of varying health (e.g., [64,65]) and for mapping mangrove forests (e.g., [66]). Hence, its value in classification appears to depend on how much the reflectance of coexisting or target vegetation varies around 700–750 nm.

Most textural variables (n = 8) were unimportant across sites. Those that were important (n = 6) were mostly helpful only when native and exotic species were generalised into broad-level classes. Studies using pixel-based classification have successfully applied texture imagery to improve classification accuracy (e.g., [34,68,69]). However, these are usually undertaken at a regional scale or consider fewer classes than are observed at local-scale revegetation sites. Like Wilson et al. [59], our findings indicate that textural images may not provide enough information to adequately separate classes at the species level when using high-resolution imagery.
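First-order textural images such as VAR and RNG in Table 2 are statistics of pixel values within a moving window. A minimal sketch computing two of them for a single band, assuming a 3 × 3 window (the window size used in this study is not stated in this section) and shrinking the window at image edges; the band values are hypothetical.

```python
import statistics


def window_texture(band, radius=1):
    """First-order texture (variance and range) in a square moving window.

    band: list of rows of reflectance values for one image band
    radius: window half-width; radius=1 gives a 3 x 3 window
    Pixels near the image edge use only the neighbours inside the image.
    """
    n_rows, n_cols = len(band), len(band[0])
    variance = [[0.0] * n_cols for _ in range(n_rows)]
    value_range = [[0.0] * n_cols for _ in range(n_rows)]
    for r in range(n_rows):
        for c in range(n_cols):
            window = [
                band[rr][cc]
                for rr in range(max(0, r - radius), min(n_rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(n_cols, c + radius + 1))
            ]
            variance[r][c] = statistics.pvariance(window)
            value_range[r][c] = max(window) - min(window)
    return variance, value_range


# Hypothetical band: a single bright pixel in an otherwise uniform patch.
band = [[0.1, 0.1, 0.1],
        [0.1, 0.5, 0.1],
        [0.1, 0.1, 0.1]]
var, rng = window_texture(band)
print(var[1][1], rng[1][1])
```

Because every pixel in a uniform canopy yields near-zero texture, such measures tend to separate structurally different surfaces more readily than spectrally similar species, which fits the broad-level pattern noted above.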

Comparison of Kappa between sites showed that differing species diversity and revegetation age did not considerably influence classification accuracy. For example, the Lancelin and City Beach sites obtained very similar Kappa values (0.72 and 0.71, respectively) despite Lancelin being older with lower species diversity (n = 17) and City Beach being less established (c. 5 years) with higher diversity (n = 22). Increased species diversity can reduce a classifier’s ability to distinguish between large numbers of visually similar classes, lowering Kappa and increasing class confusion (e.g., [70,59]). Assessment of the PA, UA and confusion matrices (see Appendix B) indicated that various native mid-storey shrubs were highly confused at the Lancelin and City Beach sites. At Lancelin, the native shrub M. insulare was highly confused with S. crassifolia, M. cardiophylla and A. lehmanniana, all of which are native shrubs of similar height, spreading growth form and dull green leaf colour [71,72,73,74]. Likewise, at City Beach, confusion occurred between various native shrubs, including S. globulosum, C. quadrifidus, T. retusa and G. preissii, all of which have similar branching and dark green foliage [75,76,77,78]. Exotic species consisted entirely of low-lying herbs and grasses and were also often confused with other exotics. At Lancelin, *G. fruticosus, an erect perennial herb, was misclassified as other common lower-lying herbs represented in the *Lawn Weeds class despite its paler complexion [79], while at the Goegrup site the co-occurring erect annual grasses *A. barbata and *L. ovatus were highly confused [80,81]. Confusion between native and exotic species was minimal, with the exception of A. elegantissima, a rhizomatous perennial grass [82], and *G. fruticosus, which were sometimes confused at Lancelin. Because natives and exotics at these sites are largely separated by structure (mid-storey shrubs versus low-lying herbs and grasses), we suggest including canopy height so that the classifier can exploit this difference [83].
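For reference, PA, UA, overall accuracy and Kappa are all derived from the validation confusion matrix. A minimal sketch of the standard calculations, assuming rows hold reference classes and columns hold classified classes (the orientation used in Appendix B is not stated here); the counts are hypothetical.

```python
def accuracy_metrics(matrix, labels):
    """Overall accuracy, Cohen's kappa, producer's (PA) and user's (UA) accuracy.

    matrix[i][j] counts validation samples whose reference class is labels[i]
    and whose classified class is labels[j] (rows = reference, cols = classified).
    """
    k = len(labels)
    n = sum(sum(row) for row in matrix)
    ref_totals = [sum(matrix[i]) for i in range(k)]
    map_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    observed = sum(matrix[i][i] for i in range(k)) / n
    # Agreement expected by chance from the marginal totals.
    expected = sum(ref_totals[i] * map_totals[i] for i in range(k)) / (n * n)
    kappa = (observed - expected) / (1 - expected)
    # PA: share of reference samples correctly mapped (1 - omission error).
    pa = {labels[i]: matrix[i][i] / ref_totals[i] for i in range(k) if ref_totals[i]}
    # UA: share of mapped samples that are correct (1 - commission error).
    ua = {labels[j]: matrix[j][j] / map_totals[j] for j in range(k) if map_totals[j]}
    return observed, kappa, pa, ua


# Hypothetical two-class validation counts.
cm = [[45, 5],
      [10, 40]]
oa, kappa, pa, ua = accuracy_metrics(cm, ["native", "exotic"])
print(f"OA={oa:.3f} kappa={kappa:.3f} PA={pa} UA={ua}")
```

Low PA for a class signals omission (its pixels are labelled as something else), while low UA signals commission; M. insulare at Lancelin, for instance, has a much lower UA than PA in Table 5.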

Confusion between plant species in species-diverse landscapes is a common issue in machine-learning classification of high-resolution imagery (e.g., [70,84,59]). For pixel-based classification, confusion may occur when subtle variation in spectral reflectance between species, such as the vivid green pigmentation of M. insulare and the greyish-green hue of A. lehmanniana, is too fine to be resolved by the broad bands of multispectral UAV sensors [85]. Studies applying machine learning to classify vegetation have reduced class confusion and improved classification accuracy by grouping confused species into one class (e.g., [70]), into broader plant communities or types (e.g., [86]), or by combining species into native and exotic classes (e.g., [87]). Grouping similar species such as M. insulare, S. crassifolia and M. cardiophylla into a single class may still provide information for ongoing vegetation monitoring while improving classification accuracy. For example, classification accuracy improved substantially at all sites (Kappa ≥ 0.90) when species were aggregated into broad native and exotic classes. Other studies have improved species discrimination by using UAV-mounted hyperspectral sensors to increase spectral resolution (e.g., [23,88]). However, because of the higher cost of hyperspectral sensors and the additional processing required by increased image dimensionality, their uptake remains relatively low compared with multispectral UAV sensors [89].
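Mechanically, grouping removes confusion because errors between species of the same group become correct labels at the broad level. A minimal sketch collapsing a species-level confusion matrix into broad groups; the counts are hypothetical, and note that the broad-level results in Table 5 came from classification with broad-level ROIs rather than from this kind of post-hoc aggregation.

```python
def aggregate_confusion(matrix, labels, group_of):
    """Collapse a species-level confusion matrix into broad groups.

    group_of maps each species label to a broad class (e.g. "native", "exotic").
    Confusion between two species of the same group becomes a correct
    classification at the broad level.
    """
    groups = sorted({group_of[label] for label in labels})
    index = {g: i for i, g in enumerate(groups)}
    broad = [[0] * len(groups) for _ in groups]
    for i, ref in enumerate(labels):
        for j, pred in enumerate(labels):
            broad[index[group_of[ref]]][index[group_of[pred]]] += matrix[i][j]
    return groups, broad


def overall_accuracy(matrix):
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / total


species = ["M. insulare", "S. crassifolia", "*G. fruticosus"]
group_of = {"M. insulare": "native", "S. crassifolia": "native", "*G. fruticosus": "exotic"}
# Hypothetical counts: rows = reference species, columns = classified species.
cm = [[30, 15, 5],
      [10, 35, 5],
      [2, 3, 45]]
groups, broad = aggregate_confusion(cm, species, group_of)
print(groups, broad)
print(f"species OA={overall_accuracy(cm):.3f}, broad OA={overall_accuracy(broad):.3f}")
```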

Noise in the form of the “salt-and-pepper” effect was observed within various canopies and along canopy edges (e.g., A. cyclops and A. rostellifera at City Beach). This phenomenon is often associated with pixel-based classification and is caused by high local spatial heterogeneity between neighbouring pixels within the same land surface feature (e.g., a tree canopy). Because each pixel is classified in isolation, without considering its neighbours, adjacent pixels belonging to the same feature may be assigned to different classes, often reducing species-level classification accuracy [90,91]. While a majority filter can reduce this noise [92], many studies suggest using object-based image analysis (OBIA) to classify UAV imagery, reducing noise by grouping spectrally and spatially similar pixels into objects rather than classifying individual pixels (e.g., Lu et al., 2011; Pérez-Ortiz et al., 2016). OBIA has the potential to reduce noise, although additional choices must be made when generating objects, such as the spectral and spatial detail and the scale of segmentation [95], and even then canopy noise may persist (e.g., [59]).
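A majority filter of the kind mentioned above can be sketched in a few lines. This is a minimal, generic version assuming a square window and a "keep the pixel's own class on ties" rule; it is not necessarily the filter configuration used in [92], and the labels are hypothetical.

```python
from collections import Counter


def majority_filter(labels, radius=1):
    """Replace each pixel's class with the most frequent class in its window.

    labels: list of rows of class labels from a pixel-based classification
    radius: window half-width; radius=1 gives a 3 x 3 window
    If the pixel's own class is tied for most frequent it is kept unchanged.
    """
    n_rows, n_cols = len(labels), len(labels[0])
    smoothed = [row[:] for row in labels]
    for r in range(n_rows):
        for c in range(n_cols):
            counts = Counter(
                labels[rr][cc]
                for rr in range(max(0, r - radius), min(n_rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(n_cols, c + radius + 1))
            )
            best = max(counts.values())
            if counts[labels[r][c]] < best:
                # most_common() lists equally frequent classes in first-seen order.
                smoothed[r][c] = counts.most_common(1)[0][0]
    return smoothed


# Hypothetical classified canopy with one isolated misclassified pixel.
canopy = [["A. cyclops"] * 3,
          ["A. cyclops", "A. rostellifera", "A. cyclops"],
          ["A. cyclops"] * 3]
print(majority_filter(canopy)[1][1])
```

The trade-off is that a larger window removes more speckle but can also erase genuinely small plants, which is one reason OBIA is often preferred.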

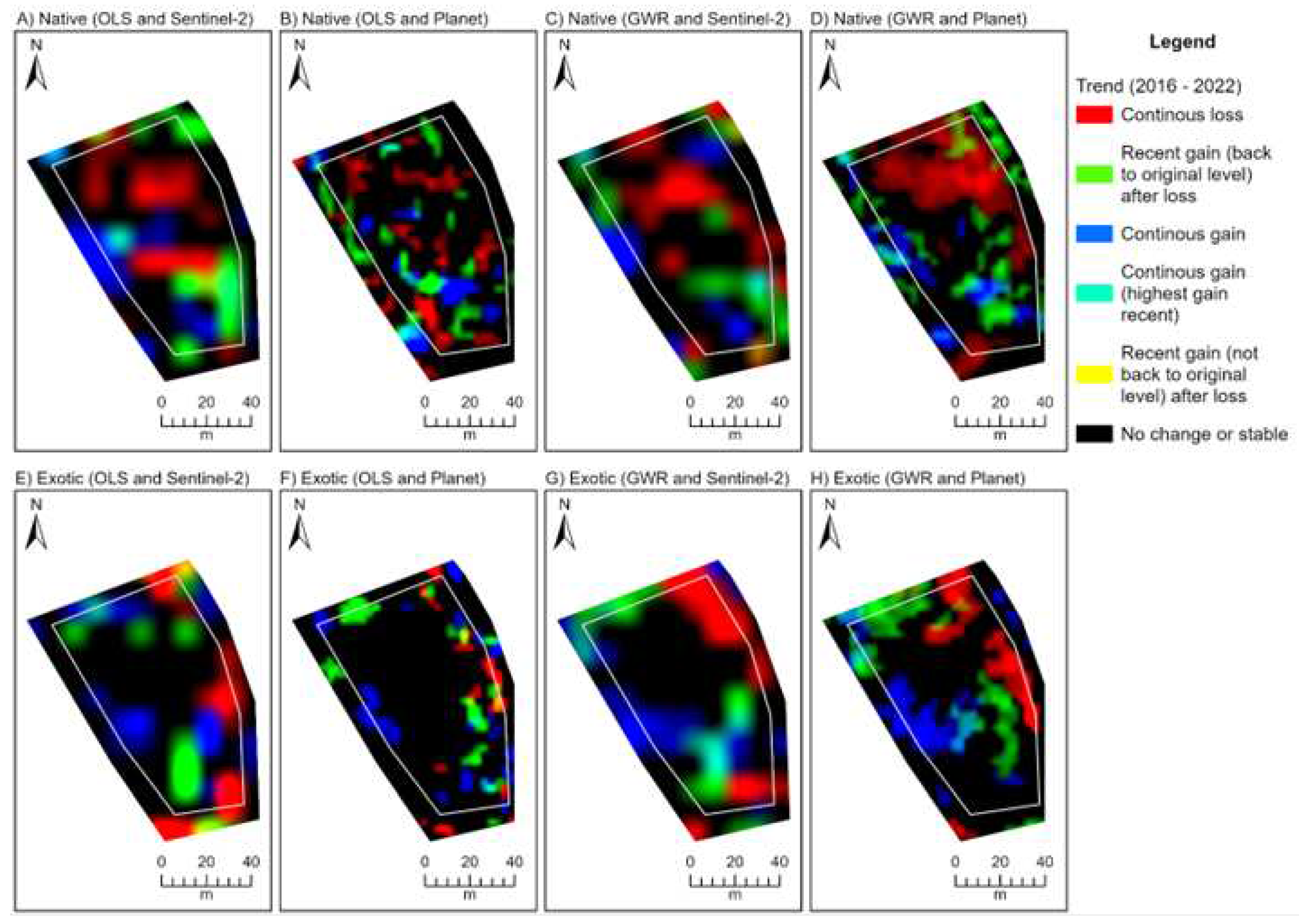

The most common approach to fractional cover mapping is to use global regression models [96], which assume that relationships between dependent and independent variables are spatially homogeneous (i.e., constant regression parameters are used over the entire study area). However, because the spectral reflectance of vegetation depends on landscape fragmentation, species density, soil quality and age of rehabilitation [86], plant species are likely to exhibit spectral variability across space [97], which has been shown to make model predictions inaccurate [98]. Our results showed that Geographically Weighted Regression (GWR) could mitigate these biases by allowing the regression coefficients to vary through space [51]; GWR produced higher R² values than OLS for every site, sensor and year (Table 6). The use of GWR for fractional cover mapping is an emerging area of research [99].
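The difference between a global (OLS) and a geographically weighted fit can be shown with a minimal sketch: a single predictor, a fixed Gaussian kernel and a hand-picked bandwidth. The transect data, predictor and bandwidth are hypothetical; practical GWR implementations usually use several predictors and select the bandwidth by cross-validation or AICc.

```python
import math


def weighted_line(xs, ys, ws):
    """Weighted least-squares intercept and slope for a single predictor."""
    sw = sum(ws)
    mx = sum(w * x for w, x in zip(ws, xs)) / sw
    my = sum(w * y for w, y in zip(ws, ys)) / sw
    sxx = sum(w * (x - mx) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - mx) * (y - my) for w, x, y in zip(ws, xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def gwr_predict(coords, xs, ys, bandwidth):
    """Fit a local regression at every sample using a fixed Gaussian kernel."""
    preds = []
    for i, ci in enumerate(coords):
        ws = [math.exp(-0.5 * (math.dist(ci, cj) / bandwidth) ** 2) for cj in coords]
        intercept, slope = weighted_line(xs, ys, ws)
        preds.append(intercept + slope * xs[i])
    return preds


def r_squared(ys, preds):
    my = sum(ys) / len(ys)
    ss_tot = sum((y - my) ** 2 for y in ys)
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    return 1 - ss_res / ss_tot


# Hypothetical transect: the predictor-cover relationship reverses halfway along it.
coords = [(float(i), 0.0) for i in range(10)]
xs = [0.1, 0.5, 0.9, 0.3, 0.7] * 2
ys = [0.1 + 0.8 * x for x in xs[:5]] + [0.9 - 0.8 * x for x in xs[5:]]

intercept, slope = weighted_line(xs, ys, [1.0] * len(xs))  # OLS = equal weights
ols_r2 = r_squared(ys, [intercept + slope * x for x in xs])
gwr_r2 = r_squared(ys, gwr_predict(coords, xs, ys, bandwidth=0.5))
print(f"OLS R2={ols_r2:.2f}  GWR R2={gwr_r2:.2f}")
```

On this synthetic transect the global fit explains essentially none of the variance because the opposing local relationships cancel out, whereas the local fits recover nearly all of it.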

Author Contributions

Conceptualisation, T.P.R.; methodology, L.T. and T.P.R.; software, L.T.; validation, A.C. and L.T.; formal analysis, L.T. and T.P.R.; investigation, L.T., T.P.R. and A.C.; resources, T.P.R., L.T. and E.R.; data curation, L.T., T.P.R. and E.R.; writing – original draft preparation, L.T. and T.P.R.; writing – review and editing, T.P.R., L.T., A.C. and E.R.; visualisation, L.T.; supervision, T.P.R.; project administration, T.P.R. and E.R.; funding acquisition, T.P.R. and E.R. All authors have read and agreed to the published version of the manuscript.

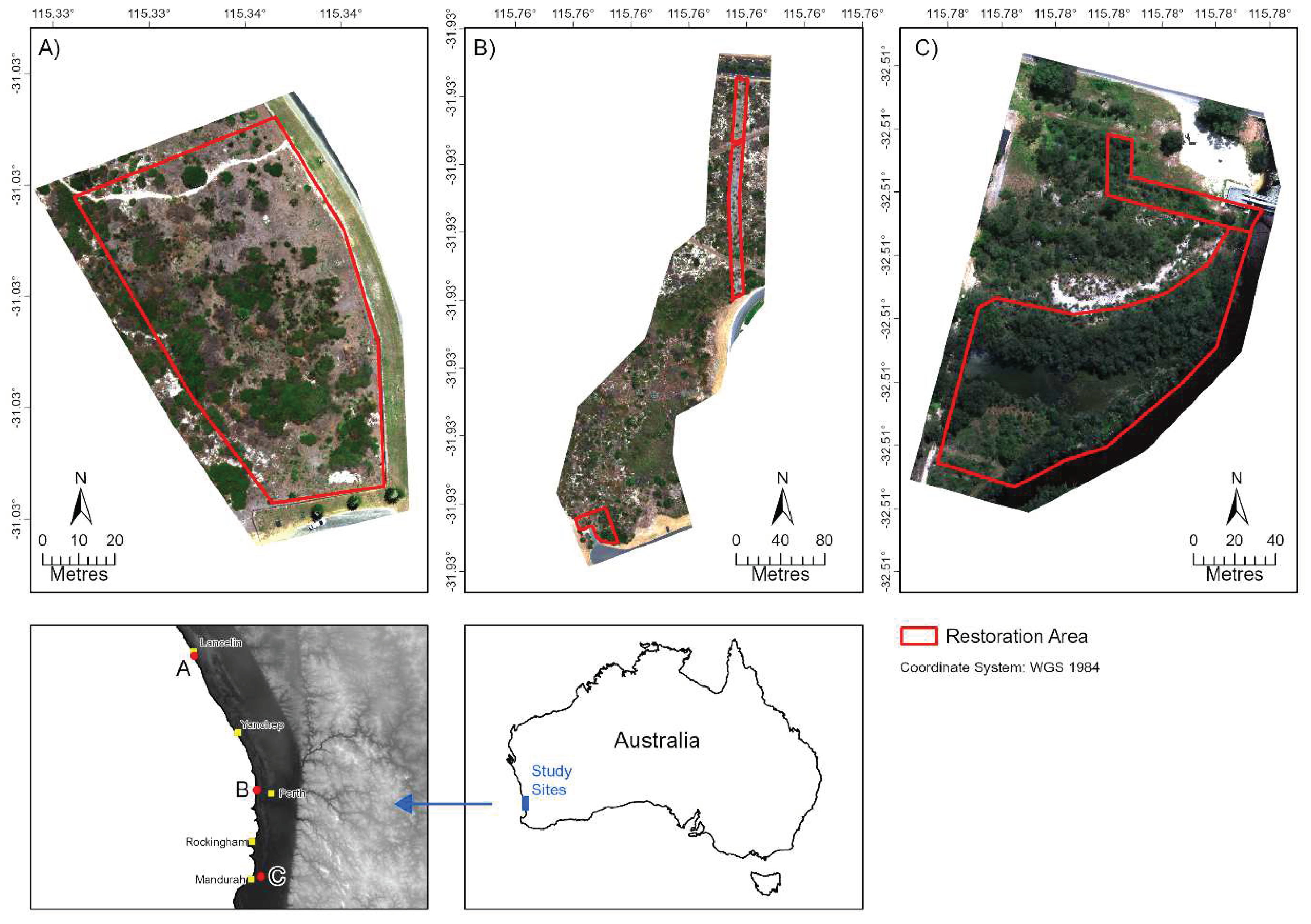

Figure 1.

Locations of the three study sites: (A) Lancelin; (B) City Beach; and (C) Goegrup Reserve. The red boundaries represent revegetation monitoring areas. Site images were captured between January and April 2023 using a DJI Matrice 300 RTK UAV fitted with a MicaSense RedEdge-P multispectral camera (c. 3.8 cm spatial resolution).

Figure 2.

Examples of dominant native and exotic species observed at each study site. (A) Scaevola crassifolia, (B) Olearia axillaris and (C) common lawn weeds (e.g., *Poa annua, *Gazania linearis) at Lancelin. City Beach contained natives (D) Acacia rostellifera and (E) Lepidosperma gladiatum, and exotic (F) *Pelargonium capitatum. Goegrup contained natives (G) Allocasuarina fraseriana and (H) Jacksonia furcellata, and exotics (I) *Avena barbata and *Lagurus ovatus.

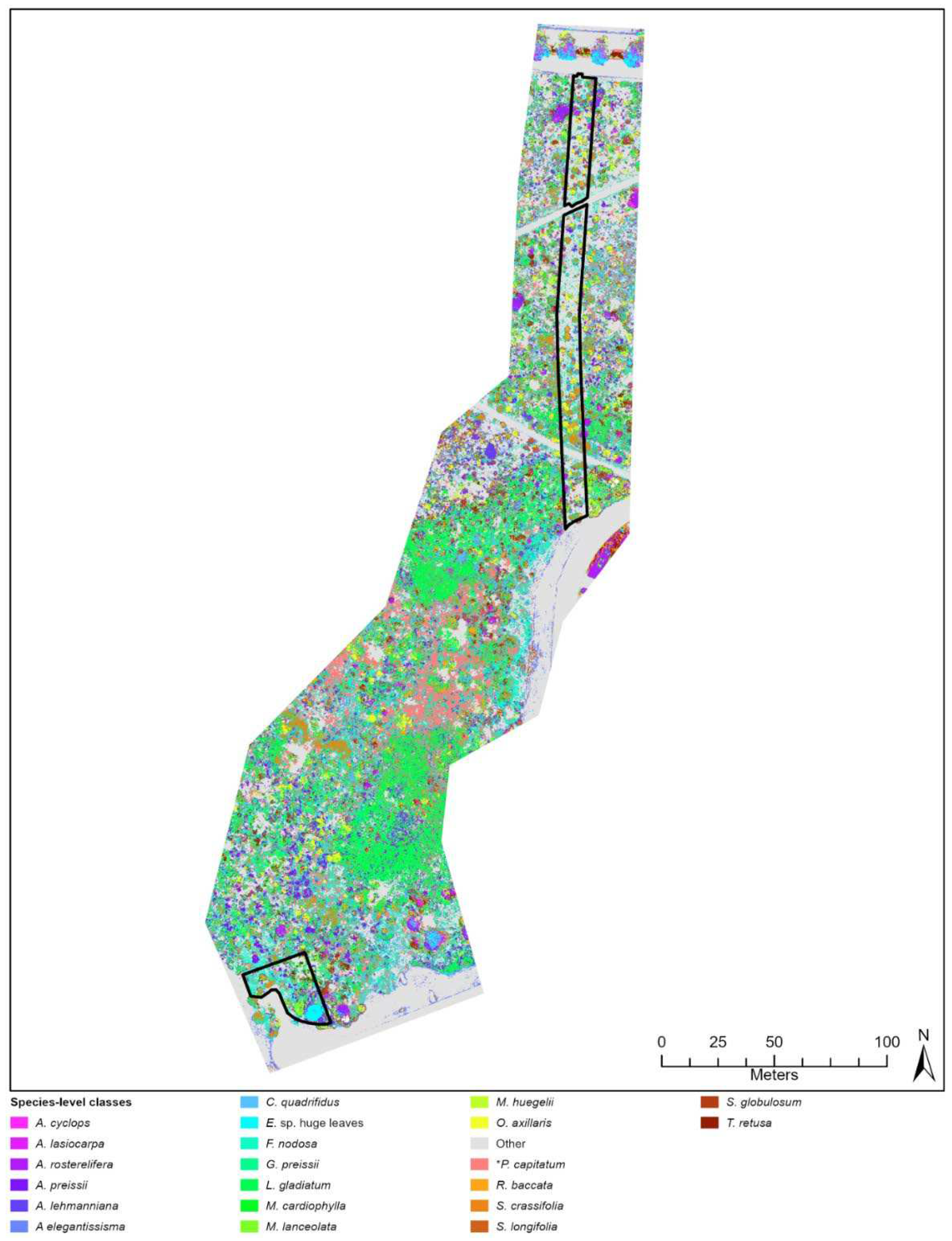

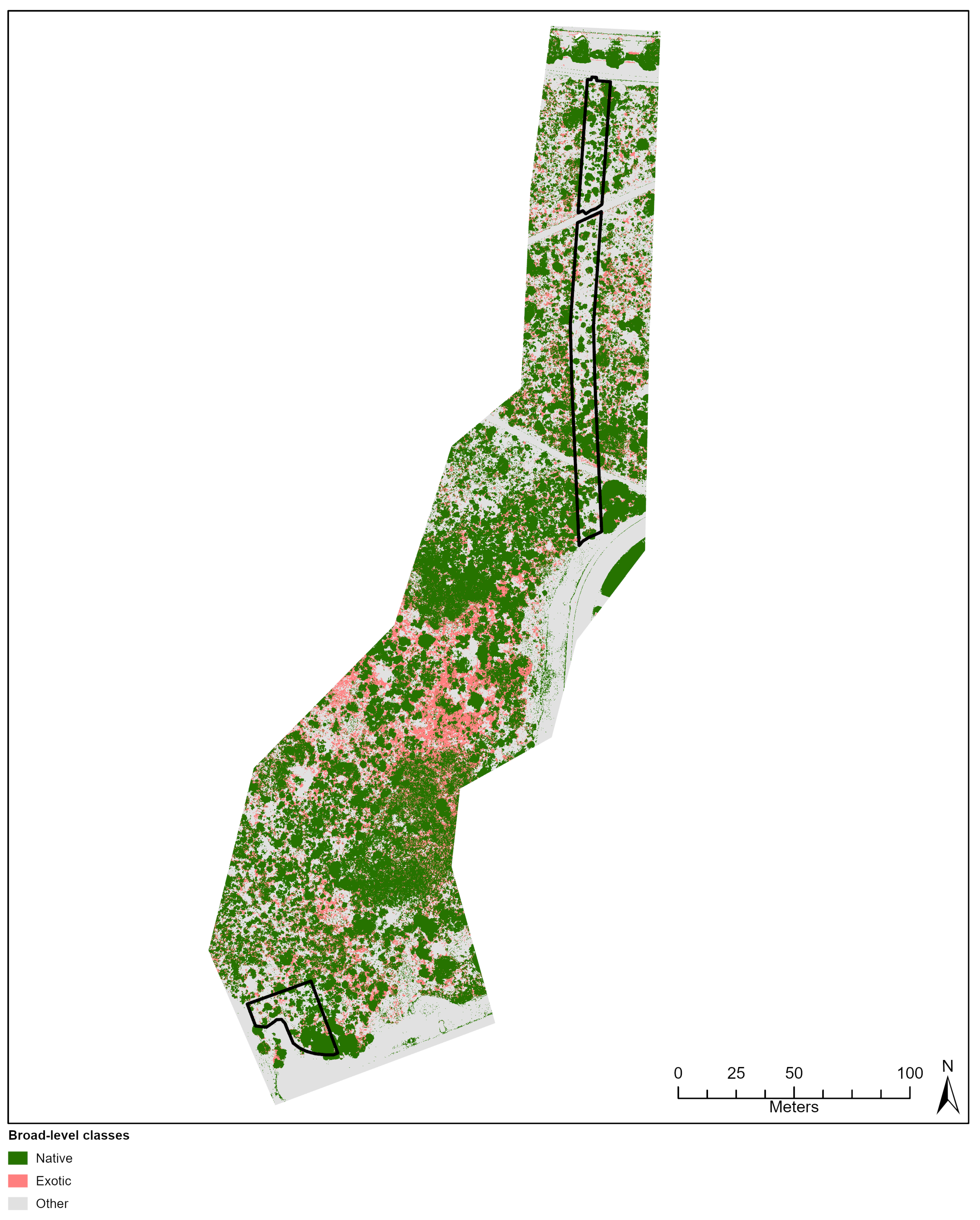

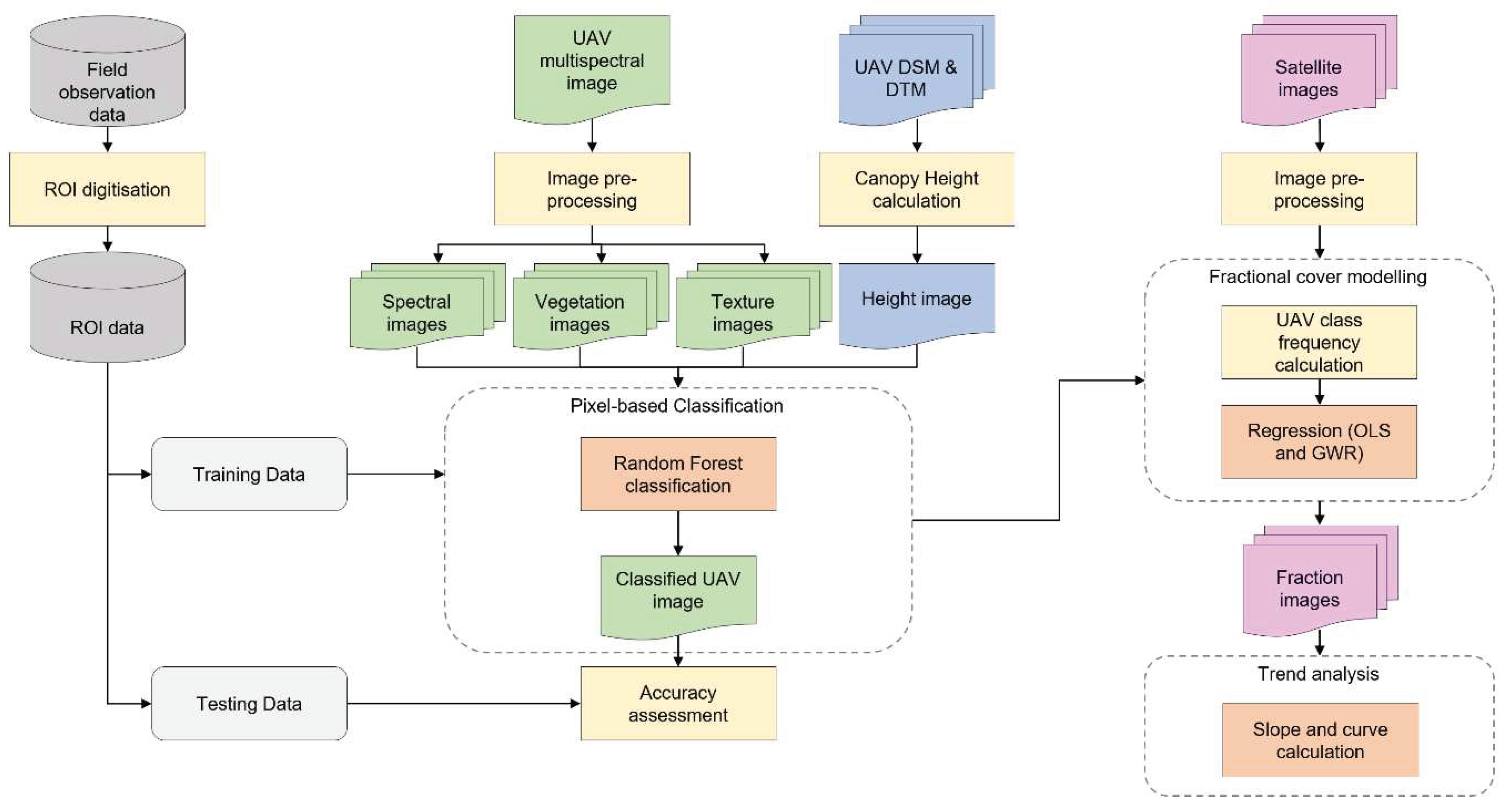

Figure 3.

Flowchart of the methodology used to classify multispectral UAV imagery and to combine it with multispectral Sentinel-2 and Planet satellite imagery in fractional cover modelling and trend analysis. This workflow was applied at each study site.

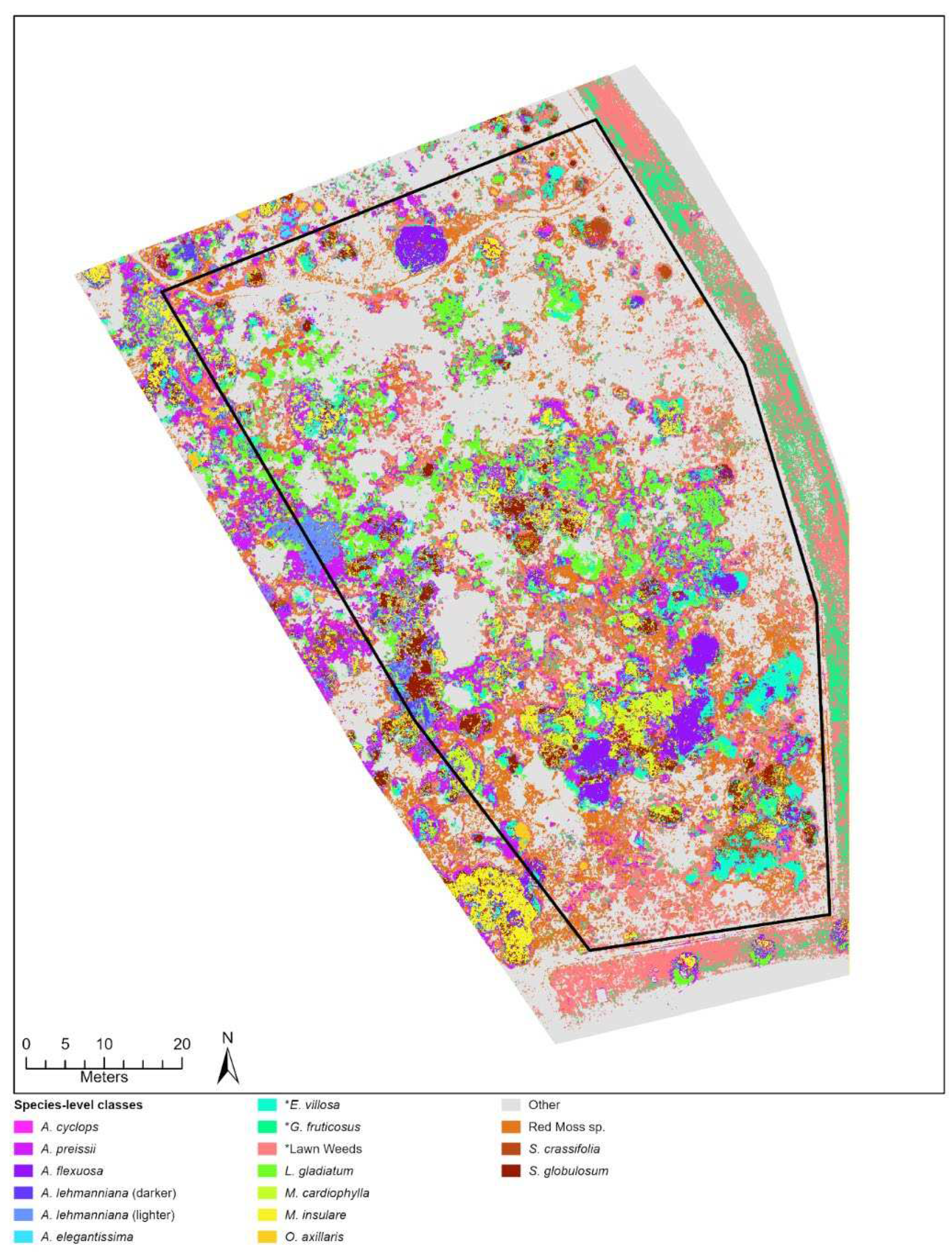

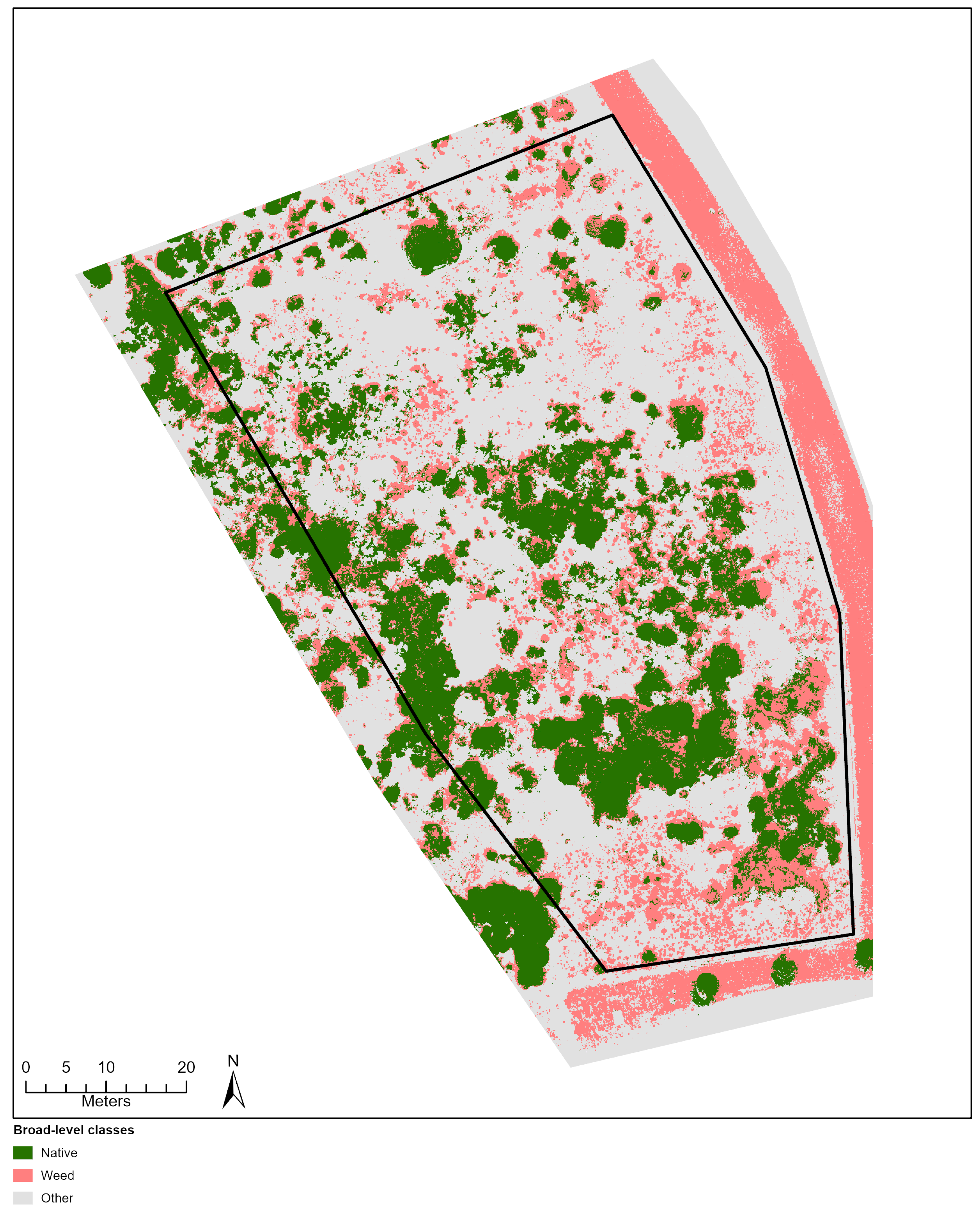

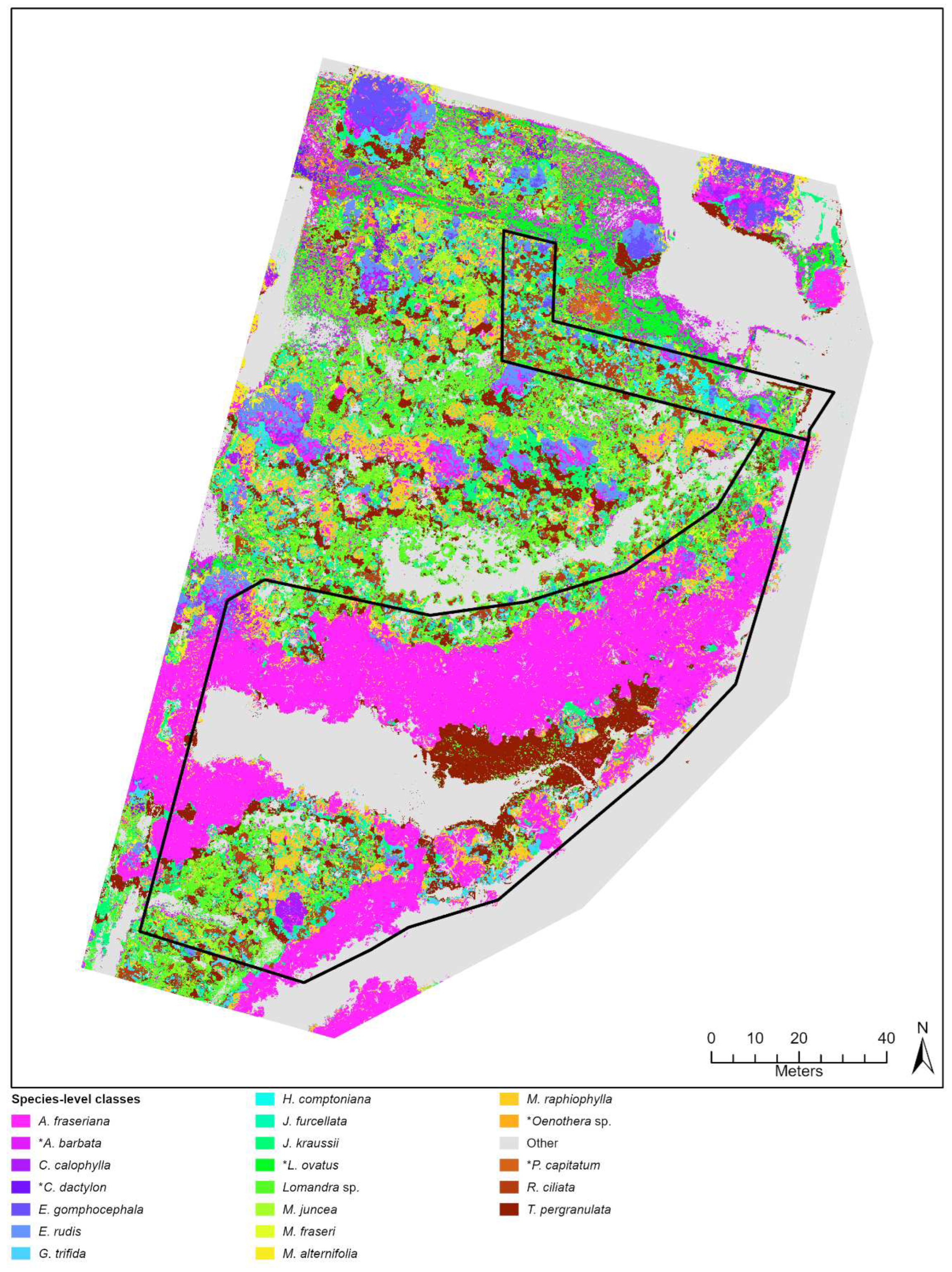

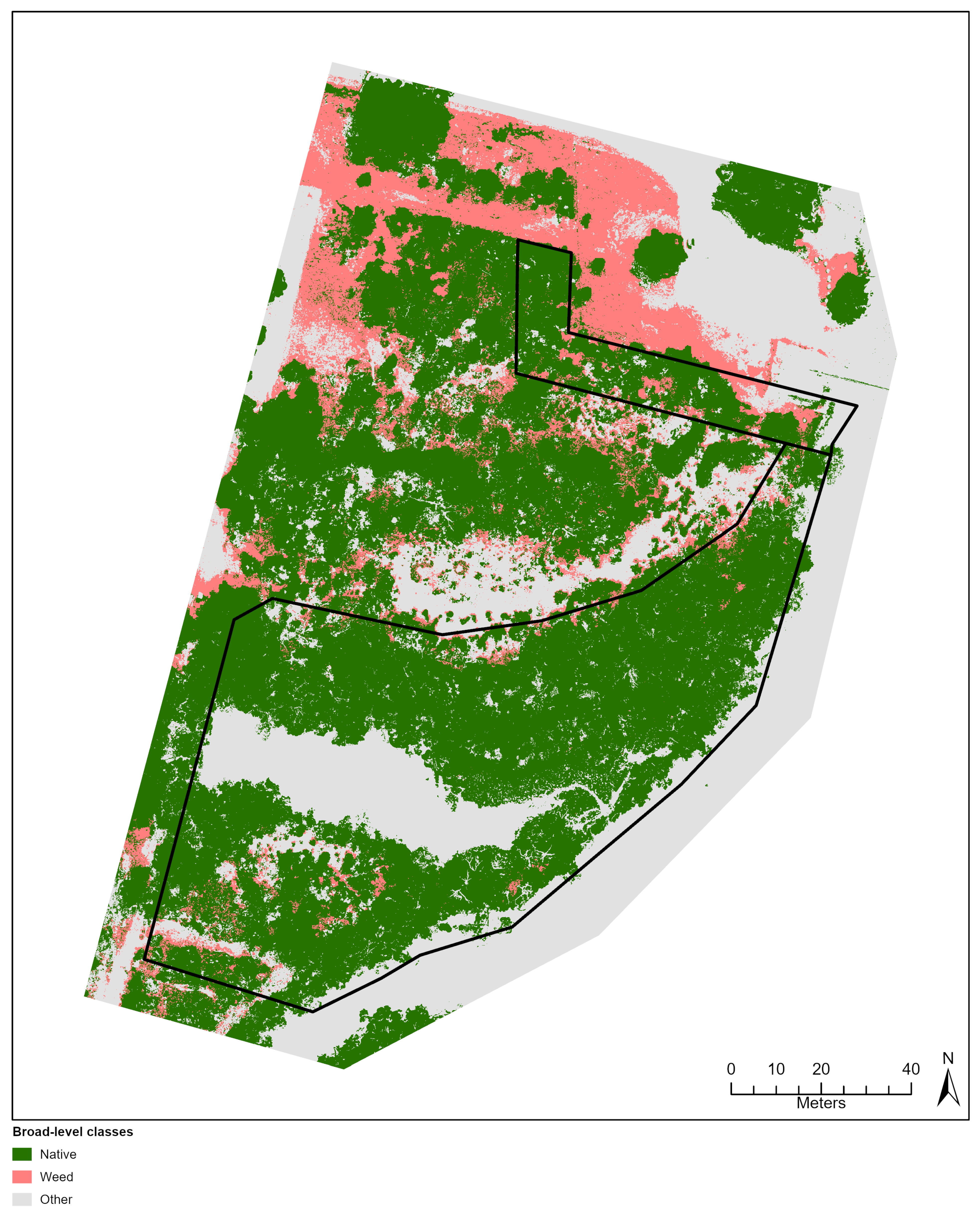

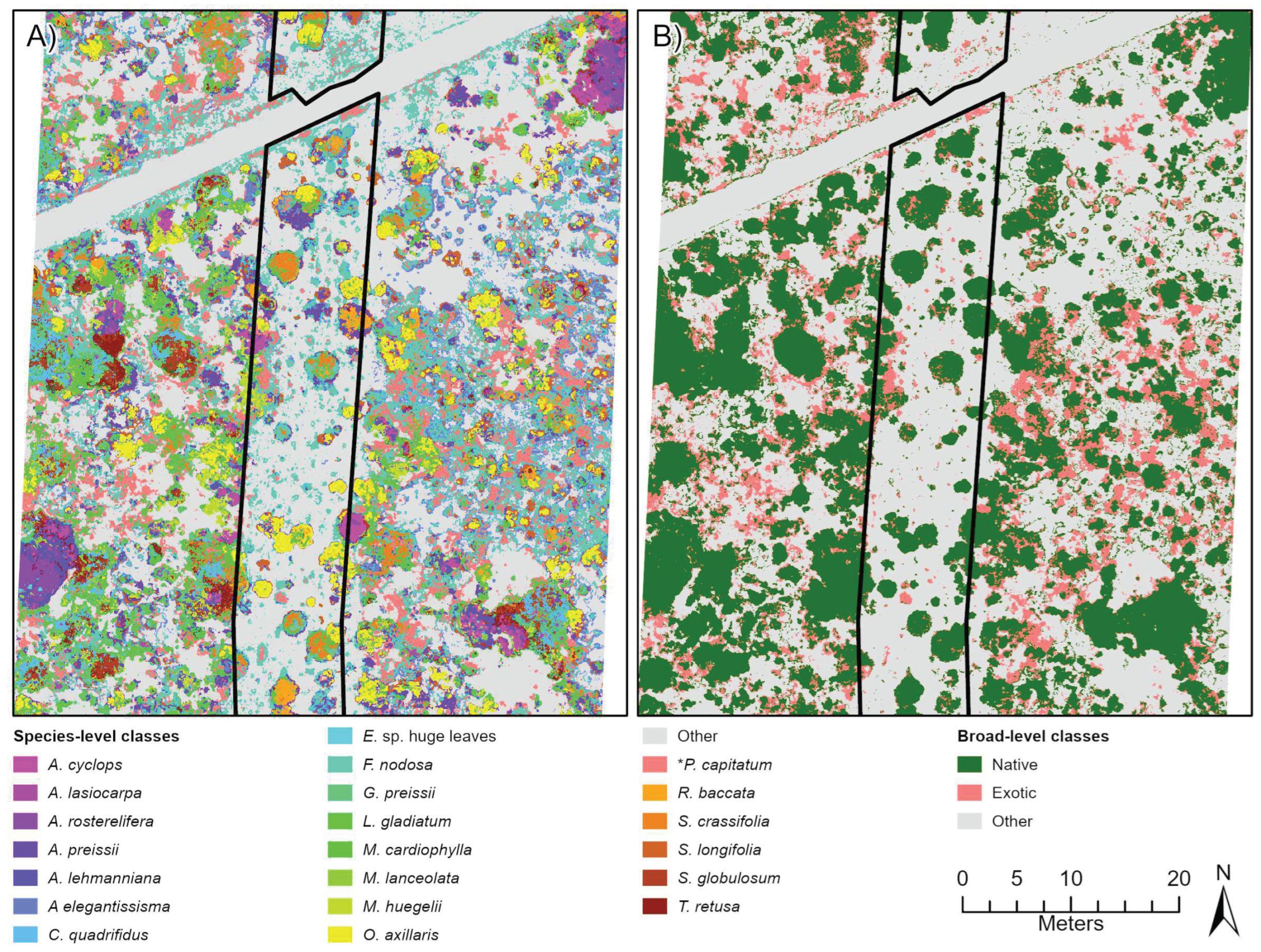

Figure 4.

Classification outputs based on species-level (A) and broad-level (B) training ROIs for a sub-section of the City Beach site. This location shows an area of the site where many native and exotic (*Pelargonium capitatum) species were observed occurring together.

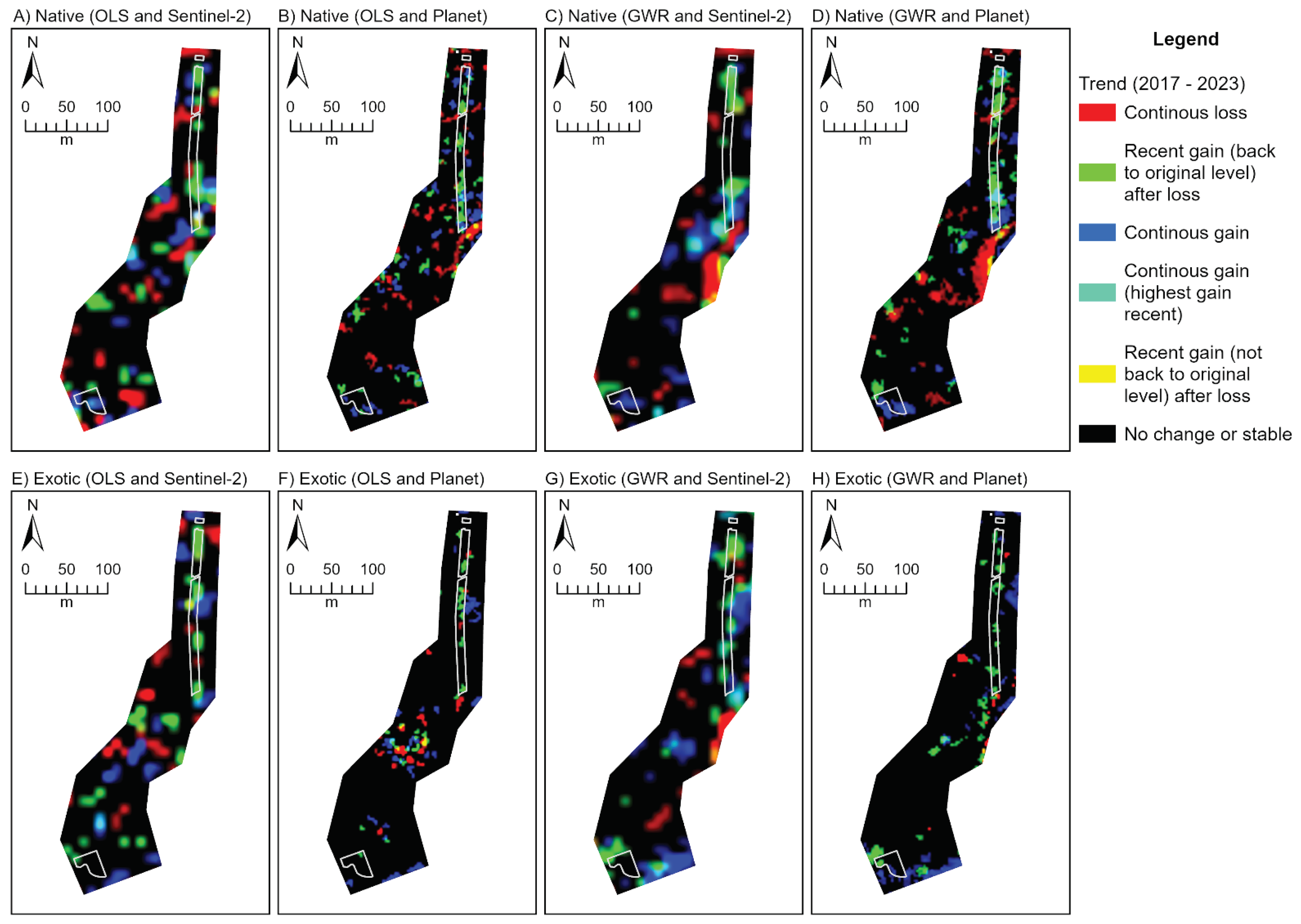

Figure 5.

Three-band RGB (red, green, blue) trend rasters depicting change in rehabilitation condition, based on February imagery, over the period 2017 to 2023 at the City Beach site. Trends are derived from temporal fractional cover models for natives (A–D) and exotics (E–H). Trend rasters produced from the Sentinel-2 (A, C, E, G) and Planet (B, D, F, H) fraction models using ordinary least squares (OLS; A, B, E, F) and geographically weighted regression (GWR; C, D, G, H) are provided. See Appendix E for the trend outputs for the Lancelin and Goegrup sites.

Table 1.

Capture date and time of each (A) Sentinel-2 and (B) PlanetScope satellite image obtained from Digital Earth Australia and Planet Explorer web platforms for each study site. All images are used in fractional cover modelling.

| | Platform | Year | Lancelin Date Time¹ | Lancelin Sat² | City Beach Date Time¹ | City Beach Sat² | Goegrup Date Time¹ | Goegrup Sat² |
|---|---|---|---|---|---|---|---|---|
| A. | Sentinel-2 | 2015 | 14 Nov 2:24 | S2A | - | - | 14 Nov 2:24 | S2A |
| | | 2016 | 18 Nov 2:24 | S2A | 12 Feb 2:26 | S2A | 18 Nov 2:24 | S2A |
| | | 2017 | 18 Nov 2:19 | S2B | 16 Feb 2:22 | S2A | 08 Nov 2:24 | S2B |
| | | 2018 | 18 Nov 2:26 | S2A | 11 Feb 2:25 | S2A | 29 Oct 2:24 | S2A |
| | | 2019 | 13 Nov 2:26 | S2A | 16 Feb 2:26 | S2A | 08 Nov 2:26 | S2B |
| | | 2020 | 17 Nov 2:26 | S2A | 06 Feb 2:26 | S2B | 23 Oct 2:26 | S2B |
| | | 2021 | 17 Nov 2:26 | S2B | 20 Feb 2:26 | S2B | 07 Nov 2:26 | S2B |
| | | 2022 | 12 Nov 2:26 | S2B | 15 Feb 2:26 | S2B | 07 Nov 2:26 | S2A |
| | | 2023 | - | - | 10 Feb 2:26 | S2B | - | - |
| B. | PlanetScope | 2015 | - | - | - | - | - | - |
| | | 2016 | 10 Nov 1:34 | DC | - | - | 18 Oct 1:32 | DC |
| | | 2017 | 21 Nov 1:43 | DC | 20 Jan 1:36 | DC | 14 Oct 1:39 | DC |
| | | 2018 | 12 Nov 1:57 | DC | 12 Feb 1:45 | DC | 08 Oct 2:56 | DC |
| | | 2019 | 15 Nov 2:21 | DR | 14 Feb 2:37 | DC | 12 Oct 2:00 | DC |
| | | 2020 | 24 Nov 2:24 | DR | 13 Feb 2:24 | DR | 11 Oct 2:36 | DR |
| | | 2021 | 15 Nov 3:00 | DR | 12 Feb 2:42 | DR | 16 Oct 1:32 | SD |
| | | 2022 | 14 Nov 2:02 | SD | 09 Feb 2:58 | DR | 16 Oct 1:25 | SD |
| | | 2023 | - | - | 16 Feb 1:56 | SD | - | - |

Table 2.

Overview of multispectral UAV derivatives used in classification. Broad types of derivatives include (A) spectral UAV bands; (B) vegetation indices (VIs); (C) first-order textural indices; (D) grey-level co-occurrence matrix (GLCM) second-order textural images; and (E) canopy height model (CHM). Equations and references are provided for each where applicable.

| | Variable type | Variable | Description | Equation | Reference |
|---|---|---|---|---|---|
| A. | Spectral bands (reflectance) | B | Blue band | N/A | N/A |
| | | G | Green band | | |
| | | R | Red band | | |
| | | RE | Red edge band | | |
| | | NIR | Near-infrared band | | |
| B. | Vegetation index | NGRDI | Normalised Green-Red Difference Index | | [26] |
| | | RGBVI | Red-Green-Blue Vegetation Index | | [27] |
| | | NDREI | Normalised Difference Red Edge Index | | [28] |
| | | NDVI | Normalized Difference Vegetation Index | | [29] |
| | | OSAVI | Optimized Soil Adjusted Vegetation Index | | [30] |
| C. | Textural image (first-order) | KURT | Kurtosis | | [37] |
| | | MAX | Maximum | | |
| | | MIN | Minimum | | |
| | | RNG | Range | | |
| | | SKEW | Skew | | |
| | | VAR | Variance | | |
| D. | Textural image (second-order) | ASM | Angular Second Moment | | [33] |
| | | CON | Contrast | | |
| | | COR | Correlation | | |
| | | DIS | Dissimilarity | | |
| | | ENT | Entropy | | |
| | | HOM | Homogeneity | | |
| | | MEAN | Mean | | |
| | | STDV | Standard Deviation | | |
| E. | Height image | CHM | Canopy Height Model | | [38] |
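The vegetation indices in Table 2 are simple band combinations of surface reflectance. A minimal sketch of their commonly published forms follows; the exact formulations used in this study (cited as [26]–[30]) are assumed rather than confirmed here, particularly for OSAVI, which appears in the literature both with and without a 1.16 scaling factor. The reflectance values are hypothetical.

```python
def vegetation_indices(blue, green, red, red_edge, nir):
    """Commonly published forms of the Table 2 indices, from surface reflectance (0-1)."""
    return {
        "NGRDI": (green - red) / (green + red),
        "RGBVI": (green ** 2 - blue * red) / (green ** 2 + blue * red),
        "NDREI": (nir - red_edge) / (nir + red_edge),
        "NDVI": (nir - red) / (nir + red),
        # OSAVI uses a fixed soil-adjustment term of 0.16; some variants also
        # multiply the result by 1.16, so check which form a study used.
        "OSAVI": (nir - red) / (nir + red + 0.16),
    }


# Hypothetical reflectance of a green shrub pixel.
for name, value in vegetation_indices(0.04, 0.08, 0.05, 0.25, 0.45).items():
    print(f"{name}: {value:.3f}")
```

NGRDI and RGBVI need only the visible bands, whereas NDREI depends on the red-edge band that contributed little on its own at these sites.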

Table 3.

Interpretation of the colours associated with an RGB trend composite, based on Robinson et al. [55].

| Colour | Interpretation |
|---|---|
| Red | Continuous loss over time. |
| Green | Recent gain (last few years) after a period of loss. Recent gain typically back to original or higher levels. |
| Blue | Continuous gain over time. |
| Yellow | Recent gain (last few years) after a period of loss. Recent gain not up to original levels. |
| Cyan | Continuous gain over time, with highest gains recently (last few years). |
| Black | No significant gain or loss (stable). Can be stable high or low values. |
| White | Not possible. |
| Magenta | Not possible. |

Table 4.

UAV image-derived variables and Variable Importance in the Projection (VIP) scores calculated for the three study sites. The VIP scores were obtained for broad- and species-level region of interest groups. Values in bold represent variables that significantly differentiated between classes (i.e., VIP ≥ 1 rejection threshold).

| | Variable type | Variable | Lancelin broad | Lancelin species | City Beach broad | City Beach species | Goegrup broad | Goegrup species |
|---|---|---|---|---|---|---|---|---|
| A. | Spectral bands (reflectance) | B | **1.22** | **1.09** | **1.63** | **1.42** | **1.16** | **1.05** |
| | | G | **1.21** | **1.11** | **1.51** | **1.30** | **1.19** | **1.08** |
| | | R | **1.02** | **1.06** | **1.43** | **1.48** | **1.18** | **1.09** |
| | | RE | 0.98 | 0.71 | 0.88 | 0.91 | 0.07 | 0.16 |
| | | NIR | **1.35** | **1.03** | **1.12** | **1.03** | **1.01** | **1.07** |
| B. | Vegetation index | NGRDI | 0.70 | **1.57** | 0.46 | **1.31** | **1.14** | 0.79 |
| | | RGBVI | 0.86 | **1.68** | 0.87 | **1.44** | **1.40** | **1.13** |
| | | NDREI | **1.55** | **1.62** | **1.44** | **1.43** | **1.29** | **1.01** |
| | | NDVI | **1.16** | **1.61** | **1.45** | **1.56** | **1.56** | **1.19** |
| | | OSAVI | **1.16** | **1.61** | **1.43** | **1.50** | **1.66** | **1.36** |
| C. | Textural image (first-order) | KURT | 0.16 | 0.15 | 0.08 | 0.04 | 0.04 | 0.08 |
| | | MAX | **1.42** | 0.88 | 0.03 | 0.31 | **1.12** | 0.85 |
| | | MIN | **1.28** | 0.76 | 0.42 | 0.54 | **1.02** | 0.52 |
| | | RNG | 0.82 | 0.62 | 0.96 | 0.51 | 0.86 | 0.97 |
| | | SKEW | 0.01 | 0.02 | 0.03 | 0.04 | 0.04 | 0.03 |
| | | VAR | 0.53 | 0.42 | 0.63 | 0.31 | 0.58 | 0.87 |
| D. | Textural image (second-order) | ASM | 0.64 | 0.38 | **1.02** | 0.50 | 0.78 | **1.15** |
| | | CON | 0.36 | 0.31 | 0.64 | 0.30 | 0.54 | 0.97 |
| | | COR | 0.33 | 0.24 | 0.57 | 0.31 | 0.53 | 0.62 |
| | | DIS | 0.52 | 0.41 | 0.74 | 0.35 | 0.63 | 0.98 |
| | | ENT | **1.01** | 0.47 | **1.03** | 0.48 | **1.03** | **1.25** |
| | | HOM | 0.56 | 0.42 | 0.76 | 0.36 | 0.64 | 0.99 |
| | | MEAN | **1.25** | 0.71 | 0.16 | 0.37 | 0.98 | 0.98 |
| | | STDV | **1.04** | 0.44 | **1.03** | 0.43 | **1.03** | 0.97 |
| E. | Height image | CHM | **1.62** | **1.12** | 0.31 | 0.63 | **1.01** | **1.08** |
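The VIP ≥ 1 rule in Table 4 has a simple rationale: the squared VIP scores average to 1 across predictors, so a score above 1 marks above-average importance. A minimal sketch of the standard VIP calculation, assuming the PLS weights and per-component explained sums of squares are already available from a fitted PLS(-DA) model (how the model was fitted is not described in this section); the variable names and weights are hypothetical.

```python
import math


def vip_scores(weights, ss):
    """Variable Importance in the Projection (VIP) for a fitted PLS model.

    weights[j][a]: PLS weight of predictor j on latent component a.
    ss[a]: response sum of squares explained by component a
           (often computed as q_a**2 * (t_a . t_a)).
    """
    p = len(weights)
    n_comp = len(ss)
    norms = [math.sqrt(sum(weights[j][a] ** 2 for j in range(p))) for a in range(n_comp)]
    total = sum(ss)
    scores = []
    for j in range(p):
        explained = sum(ss[a] * (weights[j][a] / norms[a]) ** 2 for a in range(n_comp))
        scores.append(math.sqrt(p * explained / total))
    return scores


# Hypothetical weights for three predictors on two components.
names = ["NDVI", "RE", "SKEW"]
w = [[0.8, 0.3],
     [0.5, -0.2],
     [0.1, 0.9]]
vip = vip_scores(w, ss=[5.0, 1.0])
selected = [n for n, v in zip(names, vip) if v >= 1.0]
print([round(v, 2) for v in vip], selected)
```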

Table 5.

Classification validation results with producer’s accuracy (PA), user’s accuracy (UA), percent overall accuracy, and Kappa for Lancelin, City Beach and Goegrup study sites. Validation measurements provided for classification undertaken with (A) species-level ROIs and (B) broad-level ROIs grouped into native, exotic and other classes.

| Dataset | Lancelin class | PA | UA | City Beach class | PA | UA | Goegrup class | PA | UA |
|---|---|---|---|---|---|---|---|---|---|
| A. Species-level | A. cyclops | 87.2 | 89.5 | A. cyclops | 73.3 | 73.3 | *A. barbata | 30.5 | 33.3 |
| | A. elegantissima | 63.2 | 80.4 | A. elegantissima | 86.7 | 78.8 | *A. calendula | 62.1 | 57.3 |
| | A. flexuosa | 68.4 | 70.8 | A. lasiocarpa | 64.4 | 76.3 | A. flexuosa | 89.5 | 97.7 |
| | A. lehmanniana (dark) | 74.4 | 81.3 | A. lehmanniana | 63.3 | 69.5 | A. fraseriana | 92.6 | 88.9 |
| | A. lehmanniana (light) | 59.0 | 65.7 | A. preissii | 82.2 | 85.1 | *B. maxima | 91.6 | 88.8 |
| | A. preissii | 60.7 | 56.8 | A. rostellifera | 58.9 | 71.6 | C. calophylla | 85.3 | 97.6 |
| | *E. villosa | 69.2 | 87.1 | C. quadrifidus | 51.1 | 49.5 | *C. dactylon | 70.5 | 69.8 |
| | *G. fruticosus | 59.0 | 61.1 | E. sp. huge leaves | 97.8 | 94.6 | E. gomphocephala | 82.1 | 86.7 |
| | *Lawn Weeds | 65.0 | 61.3 | F. nodosa | 88.9 | 67.8 | E. rudis | 87.4 | 75.5 |
| | L. gladiatum | 86.3 | 81.5 | G. preissii | 61.1 | 49.5 | G. trifida | 81.1 | 75.5 |
| | M. cardiophylla | 76.1 | 67.4 | L. gladiatum | 74.4 | 68.4 | H. comptoniana | 88.4 | 94.4 |
| | M. insulare | 61.5 | 41.4 | M. cardiophylla | 63.3 | 62.6 | J. furcellata | 68.4 | 77.4 |
| | O. axillaris | 94.9 | 95.7 | M. huegelii | 61.1 | 68.8 | J. kraussii | 65.3 | 81.6 |
| | Other | 94.9 | 97.4 | M. lanceolata | 78.9 | 67.6 | L. caespitosa | 69.5 | 53.7 |
| | Red Moss sp. | 100 | 93.6 | O. axillaris | 96.7 | 93.5 | *L. ovatus | 42.1 | 54.8 |
| | S. crassifolia | 70.9 | 76.1 | Other | 98.9 | 100 | M. fraseri | 85.3 | 73.6 |
| | S. globulosum | 62.4 | 67.0 | *P. capitatum | 83.3 | 92.6 | M. juncea | 65.3 | 67.4 |
| | | | | R. baccata | 80.0 | 62.6 | M. rhaphiophylla | 76.8 | 76.8 |
| | | | | S. crassifolia | 75.6 | 78.2 | Other | 89.5 | 89.5 |
| | | | | S. globulosum | 45.6 | 47.1 | R. ciliata | 71.6 | 74.7 |
| | | | | S. longifolia | 71.1 | 85.3 | T. pergranulata | 95.8 | 81.3 |
| | | | | T. retusa | 44.4 | 71.4 | | | |
| Overall accuracy (%) | 73.7 | | | 72.8 | | | 75.7 | | |
| Kappa | 0.72 | | | 0.71 | | | 0.74 | | |
| B. Broad-level | Native | 91.9 | 91.2 | Native | 94.7 | 96.2 | Native | 90.1 | 91.9 |
| | Exotic | 88.1 | 92.1 | Exotic | 95.5 | 97.1 | Exotic | 92.9 | 92.8 |
| | Other | 98.1 | 94.7 | Other | 99.7 | 96.7 | Other | 97.1 | 95.3 |
| Overall accuracy (%) | 92.7 | | | 96.6 | | | 93.3 | | |
| Kappa | 0.90 | | | 0.95 | | | 0.91 | | |

Table 6.

R-Squared values produced from the fractional cover modelling procedure based on Ordinary Least Squares (OLS) and Geographically Weighted Regression (GWR) techniques. Both methods were applied to Sentinel-2 and PlanetScope images over the period 2015 to 2023 at Lancelin, City Beach and Goegrup study sites.

| Fraction | Year | Lancelin Sentinel-2 OLS | Lancelin Sentinel-2 GWR | Lancelin Planet OLS | Lancelin Planet GWR | City Beach Sentinel-2 OLS | City Beach Sentinel-2 GWR | City Beach Planet OLS | City Beach Planet GWR | Goegrup Sentinel-2 OLS | Goegrup Sentinel-2 GWR | Goegrup Planet OLS | Goegrup Planet GWR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Native fractions | 2015 | 0.42 | 0.60 | - | - | - | - | - | - | 0.62 | 0.70 | - | - |
| | 2016 | 0.43 | 0.45 | 0.19 | 0.53 | 0.39 | 0.58 | - | - | 0.59 | 0.69 | 0.34 | 0.77 |
| | 2017 | 0.40 | 0.52 | 0.20 | 0.50 | 0.31 | 0.61 | 0.33 | 0.59 | 0.51 | 0.71 | 0.43 | 0.78 |
| | 2018 | 0.34 | 0.56 | 0.18 | 0.57 | 0.33 | 0.60 | 0.30 | 0.60 | 0.56 | 0.76 | 0.43 | 0.79 |
| | 2019 | 0.42 | 0.61 | 0.26 | 0.55 | 0.34 | 0.59 | 0.40 | 0.64 | 0.57 | 0.72 | 0.45 | 0.78 |
| | 2020 | 0.37 | 0.58 | 0.29 | 0.61 | 0.36 | 0.59 | 0.42 | 0.66 | 0.55 | 0.71 | 0.46 | 0.81 |
| | 2021 | 0.45 | 0.62 | 0.33 | 0.63 | 0.36 | 0.61 | 0.40 | 0.63 | 0.48 | 0.72 | 0.34 | 0.77 |
| | 2022 | 0.49 | 0.65 | 0.34 | 0.65 | 0.47 | 0.63 | 0.50 | 0.68 | 0.62 | 0.75 | 0.47 | 0.79 |
| | 2023 | - | - | - | - | 0.50 | 0.61 | 0.43 | 0.63 | - | - | - | - |
| | Mean | 0.41 | 0.57 | 0.26 | 0.58 | 0.38 | 0.60 | 0.40 | 0.63 | 0.56 | 0.72 | 0.42 | 0.78 |
| | Range | 0.15 | 0.17 | 0.16 | 0.13 | 0.16 | 0.05 | 0.20 | 0.09 | 0.14 | 0.07 | 0.13 | 0.04 |
| Exotic fractions | 2015 | 0.36 | 0.56 | - | - | - | - | - | - | 0.27 | 0.59 | - | - |
| | 2016 | 0.34 | 0.52 | 0.12 | 0.58 | 0.30 | 0.67 | - | - | 0.31 | 0.57 | 0.23 | 0.63 |
| | 2017 | 0.41 | 0.58 | 0.20 | 0.56 | 0.32 | 0.68 | 0.18 | 0.59 | 0.29 | 0.48 | 0.17 | 0.64 |
| | 2018 | 0.32 | 0.54 | 0.15 | 0.53 | 0.36 | 0.69 | 0.14 | 0.60 | 0.33 | 0.52 | 0.19 | 0.63 |
| | 2019 | 0.39 | 0.55 | 0.22 | 0.61 | 0.34 | 0.70 | 0.17 | 0.61 | 0.32 | 0.51 | 0.17 | 0.63 |
| | 2020 | 0.44 | 0.52 | 0.34 | 0.65 | 0.38 | 0.69 | 0.15 | 0.62 | 0.33 | 0.61 | 0.21 | 0.66 |
| | 2021 | 0.34 | 0.54 | 0.26 | 0.59 | 0.34 | 0.69 | 0.16 | 0.59 | 0.34 | 0.60 | 0.25 | 0.67 |
| | 2022 | 0.29 | 0.53 | 0.13 | 0.59 | 0.39 | 0.71 | 0.15 | 0.63 | 0.35 | 0.62 | 0.16 | 0.66 |
| | 2023 | - | - | - | - | 0.40 | 0.71 | 0.15 | 0.60 | - | - | - | - |
| | Mean | 0.36 | 0.54 | 0.20 | 0.59 | 0.35 | 0.69 | 0.16 | 0.61 | 0.32 | 0.56 | 0.20 | 0.64 |
| | Range | 0.15 | 0.06 | 0.22 | 0.12 | 0.09 | 0.04 | 0.04 | 0.04 | 0.08 | 0.14 | 0.09 | 0.04 |