Submitted: 11 December 2023

Posted: 13 December 2023

Abstract

Keywords:

1. Introduction

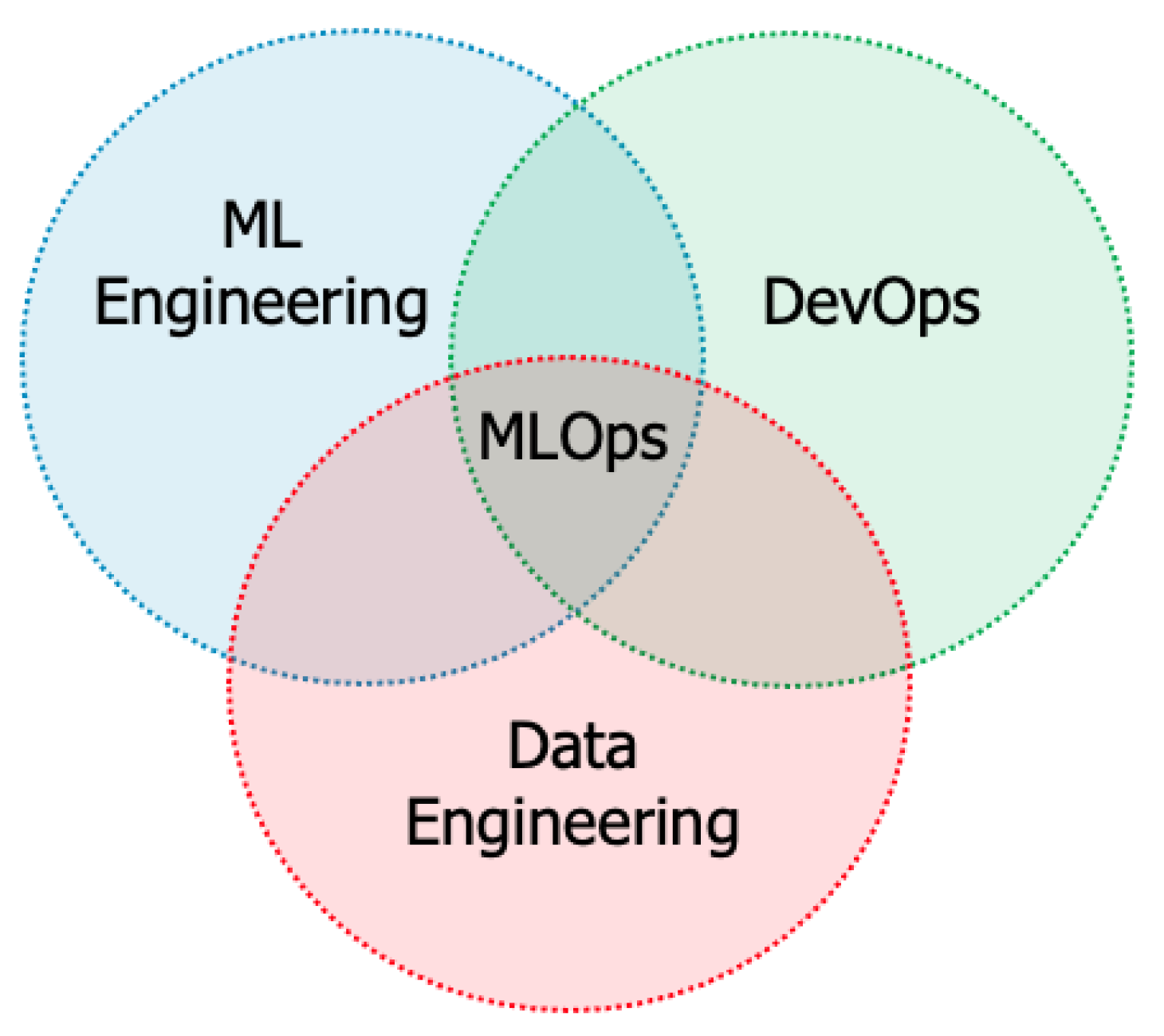

2. End-to-End Architecture of Machine Learning Workflows

3. Data Engineering

3.1. Data Pipeline: Extraction, Loading and Transformation

3.2. Feature Engineering

3.2.1. Feature Generation

3.2.2. Feature Transformation

3.2.3. Feature Selection

- Filter methods [88,89,90]: in this category, feature selection is a pre-processing step that precedes model training, which makes these methods time efficient.

  - a) Statistical/Information-based: these methods maximize feature relevance by maximizing a dependence measure, such as variance, covariance, entropy [90], linear correlation [91], Laplacian score [92] or mutual information. Representative methods include Feature Selection with Feature Similarity (FSFS) [91], which is based on the Maximal Information Compression Index (MICI), and Relevance Redundancy Feature Selection (RRFS) [93]. Fisher’s criterion [94], by contrast, is used only in supervised learning.

  - b) Spectral/Sparsity Learning: these methods perform spectral analysis or combine spectral analysis with sparse learning. They find a trade-off between goodness-of-fit and a feature similarity measure. Representative methods include Multi-Cluster Feature Selection (MCFS) [95], Unsupervised Discriminative Feature Selection (UDFS) [96] and Non-negative Discriminative Feature Selection (NDFS) [89].

- Wrapper methods [88,89,90]: in this category, feature selection is intertwined with model training and is therefore evaluated by the model performance. These methods are more accurate than Filter methods but less time efficient.

  - a) Sequential methods: these methods perform clustering on each candidate feature subset and evaluate the clustering results against some criterion. The criterion can be based on Expectation Maximization or the Trace Criterion [97], or on the min/max of intra/inter-cluster variance [98]; the decision is then made on a score that provides a feature ranking. An alternative is the Simplified Silhouette Sequential Forward Selection (SS-SFS) proposed in [99].

  - b) Iterative methods: the method in [100] performs clustering and feature selection simultaneously by estimating feature weights called feature saliences. Other iterative methods include Local Learning-based Clustering with Feature Selection (LLC-fs) [101], Embedded Unsupervised Feature Selection (EUFS) [102] and Dependence Guided Unsupervised Feature Selection (DGUFS) [103].

- Embedded methods: In this category, feature selection is part of the machine learning model training process [104].

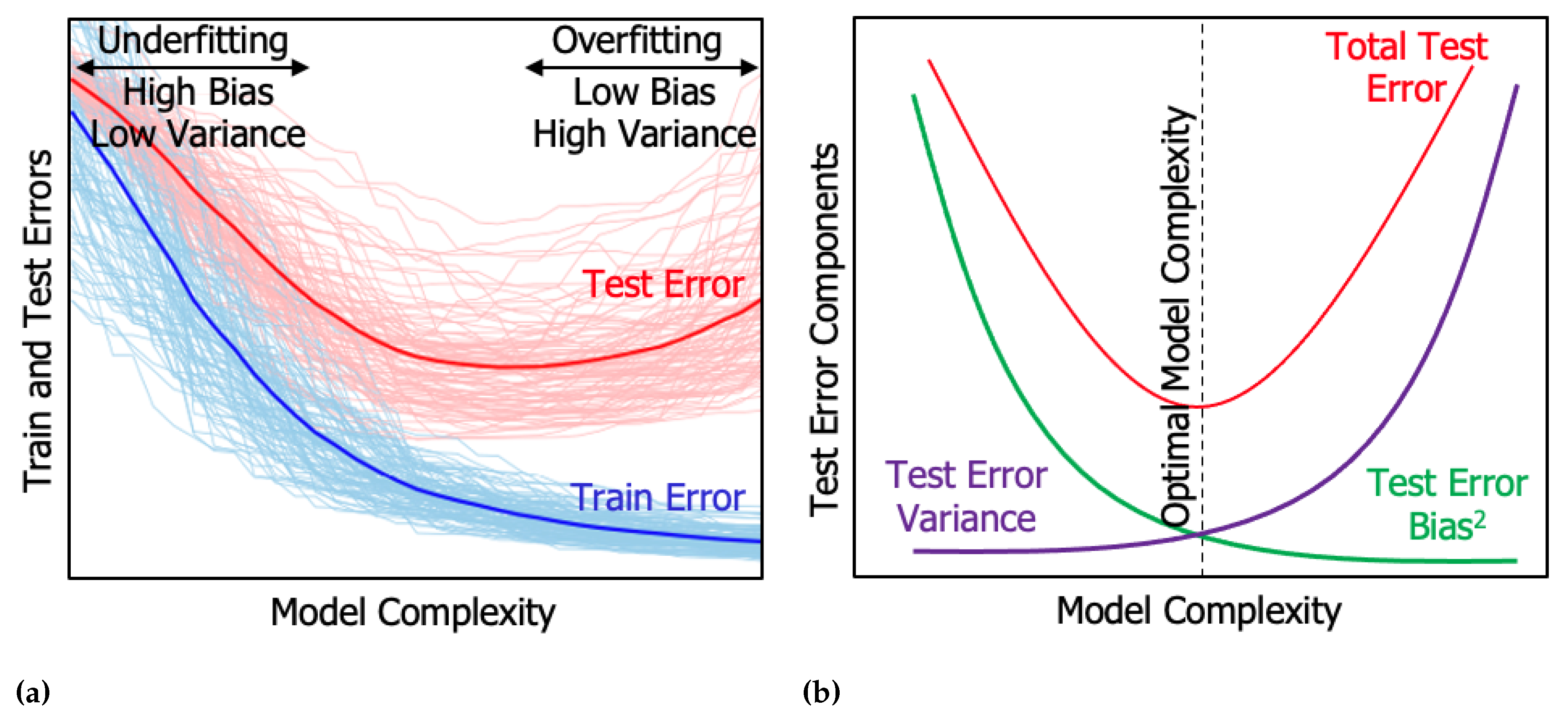

- Shrinkage-based methods [106]: single-output or multi-output regression models with $\ell_1$- or $\ell_2$-regularization can be trained via k-fold Cross-Validation (CV) to optimize a shrinkage parameter that trades off model bias for variance. Penalizing the model weights with an $\ell_1$ norm is appropriate for feature selection because it can introduce feature sparsity (Lasso estimator [107]), whereas penalizing with an $\ell_2$ norm (Ridge Regression [68]) does not force feature weights to zero. The combination of the two is called Elastic Net, which is useful when there is a group of features with high pairwise correlations [108]. Multi-output regression models perform better when the outputs are correlated, i.e. when multi-task learning is desired instead of independent task learning [109,110]. Multi-output models use a mixed $\ell_1/\ell_2$ norm penalization term, which either includes or excludes a feature from the model for all outputs [111]. In the multi-output case, the average weight of each feature across all outputs is computed, and these average weights are then normalized to the range $[0, 1]$ (relative importance) with the Min-Max scaling method, so that a ranking of relative feature importance is derived [23].

- Tree-based methods: Classification and Regression Trees (CART) can be trained in a supervised manner and provide a feature ranking as a by-product of the training process [70], in single-output or multi-output Decision Trees (DT) [112]. DTs are overly sensitive to the training set, irrelevant information and noise; prior unsupervised feature selection via one of the methods described above is therefore strongly encouraged. Moreover, DTs are known to overfit, so ensembles of DTs [112], such as Bagging (bootstrap aggregation) [113], Boosted Trees [114] and Rotation Forests, are constructed to cope with overfitting. The Random Forest (RF), a characteristic example of Bagging, generates diverse trees by bootstrap sampling and/or by randomly selecting a subset of the features during learning [115,116]. Although an RF is faster and easier to train than a boosted tree [117,118,119], it is less accurate and sacrifices the intrinsic interpretability (explanation of the output value and feature ranking) present in DTs [68]. In single-output and multi-output DTs, feature selection happens inherently as the tree is constructed, since the splitting criterion used at each node selects the feature that best separates the remaining examples [70]. In RFs, by contrast, feature ranking is either impurity-based, such as the Mean Decrease in Impurity (MDI), also known as Mean Decrease Gini or Gini Importance, or permutation-based, such as Permutation Importance [115].

- Permutation Importance [115] is useful not only in RFs, which have lost the inherent feature ranking mechanism of a single tree, but in other supervised machine learning models as well. Permutation Importance is preferable to MDI because it is computed on the Out-of-Bag (OoB) sample rather than on the training set, and is therefore more informative about the importance of a feature for predictions [115]. Moreover, MDI significantly favors numerical features over categorical ones, as well as high-cardinality categorical features (many categories) over low-cardinality ones [115], which does not happen with Permutation Importance. The Permutation Importance of a feature is calculated as the difference between the original error and the average permuted error of this feature over a specified number of repetitions [115]. The permuted error of a feature (the OoB error) is the error obtained when the values of that feature are permuted (shuffled). Permutation breaks the relationship between that feature and the target variables, and thereby reveals how much the model accuracy depends on the feature [120]. In trees and other supervised methods that use a feature ranking approach to feature selection, the features with the lowest relative importance can be excluded from the feature set.
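The Permutation Importance calculation described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the procedure of [115]: the toy data, the `predict` function (a stand-in for an already fitted model), the use of mean squared error and the generic held-out sample (instead of the OoB sample of an RF) are all hypothetical choices made for the example.

```python
import random
from statistics import mean


def mse(y_true, y_pred):
    """Mean squared error between two equally long sequences."""
    return mean((t - p) ** 2 for t, p in zip(y_true, y_pred))


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Importance of feature j = average permuted error - original error."""
    rng = random.Random(seed)
    baseline = mse(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        permuted_errors = []
        for _ in range(n_repeats):
            # Shuffle column j only; this breaks its link to the target.
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            permuted_errors.append(mse(y, [predict(row) for row in X_perm]))
        importances.append(mean(permuted_errors) - baseline)
    return importances


# Hypothetical held-out data: the target depends strongly on x0,
# weakly on x1 and not at all on x2.
data_rng = random.Random(42)
X_holdout = [[data_rng.gauss(0, 1) for _ in range(3)] for _ in range(200)]
y_holdout = [3.0 * r[0] + 0.5 * r[1] + data_rng.gauss(0, 0.1) for r in X_holdout]


def predict(row):
    """Stand-in for a fitted model (assumption: it has learned the true weights)."""
    return 3.0 * row[0] + 0.5 * row[1]


importances = permutation_importance(predict, X_holdout, y_holdout)
ranking = sorted(range(3), key=lambda j: importances[j], reverse=True)
print("importances:", importances)
print("ranking (most to least important):", ranking)
```

Because the stand-in model ignores `x2`, shuffling that column leaves the error unchanged and its importance is zero, which is the kind of feature the ranking approach would exclude.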

3.2.4. Automated Feature Extraction

| Automated F.E. Tool | Operation | Tool Tested On | Developer | Paper |
|---|---|---|---|---|
| ExploreKit | Feature generation & ranking | DT, SVM, RF | UC Berkeley | [125] |
| One Button Machine | Feature discovery in relational DBs | RF, XGBOOST | IBM | [126] |
| AutoLearn | Feature generation & selection | kNN, LR, SVM, RF, Adaboost, NN, DT | IIIT | [127] |
| GeP Feature Construction | Feature generation from GeP on DTs | kNN, DT, Naive Bayes | Wellington Uni. | [128] |
| Cognito | Feature generation & selection | N/A | IBM | [129] |
| RLFE | Feature generation & selection | RF | IBM | [130] |
| LFE | Feature transformation | LR, RF | IBM | [131] |

4. Machine Learning Engineering

4.1. Models and Algorithms for Supervised Learning with Numerical and Categorical Data

4.2. Model Training and Validation

4.3. Model Evaluation

- Linear relationship between each feature and each target variable. This assumption can be verified in the testing set by constructing scatter plots of each output vs each feature.

- Homoscedasticity, i.e. constant residual variance for all the values of a feature. This assumption can be verified by plotting the residuals vs each feature in the testing set.

- Independence of residual observations, which is the same as independence of target variable observations (commonly violated in time series data). This can be verified with the Durbin-Watson test, by checking that the autocorrelation of the residual observations is not significantly different from zero, since a non-zero autocorrelation would indicate sample dependence.

- Normality of the residuals (equivalently, of the target variable observations conditional on the features). This can be verified by constructing QQ plots of the residuals against the theoretical normal distribution and observing the straightness of the produced line.
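The independence check in the list above can be illustrated with the Durbin-Watson statistic, $d = \sum_{t=2}^{n}(e_t - e_{t-1})^2 / \sum_{t=1}^{n} e_t^2$, which is close to 2 when the residuals show no first-order autocorrelation and moves towards 0 (or 4) under positive (or negative) autocorrelation. The sketch below is a minimal plain-Python version; the two residual series are synthetic and hypothetical, and the formal test additionally compares $d$ against tabulated critical bounds, which is not shown here.

```python
import random


def durbin_watson(residuals):
    """Durbin-Watson statistic: about 2 means no first-order autocorrelation."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(e * e for e in residuals)
    return numerator / denominator


rng = random.Random(0)

# Synthetic residuals that are independent draws (assumption satisfied).
independent = [rng.gauss(0, 1) for _ in range(500)]

# Synthetic AR(1) residuals with coefficient 0.8 (assumption violated,
# as is common with time series data).
correlated = [0.0]
for _ in range(499):
    correlated.append(0.8 * correlated[-1] + rng.gauss(0, 1))

dw_independent = durbin_watson(independent)
dw_correlated = durbin_watson(correlated)
print(f"independent residuals: d = {dw_independent:.2f}")
print(f"autocorrelated residuals: d = {dw_correlated:.2f}")
```

In this sketch the first value should land near 2 and the second well below it, since $d \approx 2(1 - \hat{\rho})$ for a lag-1 autocorrelation estimate $\hat{\rho}$.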

5. Model Deployment

5.1. Testing

5.1.1. Unit Testing

5.1.2. Performance Testing

5.1.3. Integration Testing

5.1.4. System Testing

5.1.5. Acceptance Testing

5.1.6. A/B Testing

5.2. Model Deployment

5.3. Monitoring and Maintenance

5.4. Security Considerations

6. Automation in Machine Learning Workflows

6.1. AutoML Methods

- Black-box hyperparameter optimization:

  - a) Model-free black-box optimization methods include grid search over a finite range, which suffers from the Curse of Dimensionality (CoD), and random search, which samples configurations at random until a given search budget is exhausted [206]. Random search works better than grid search when some hyperparameters are much more important than others, which is very often the case [206]. The Covariance Matrix Adaptation Evolution Strategy (CMA-ES) [207] is one of the most competitive black-box optimization algorithms.

  - b) Bayesian optimization has gained interest through its use in tuning DNNs for image classification [203,208], speech recognition [209] and neural language modeling [202]. For an in-depth introduction to Bayesian optimization, the interested reader is referred to [210,211]. Many recent advances in Bayesian optimization no longer treat hyperparameter tuning as a black box, e.g. multi-fidelity hyperparameter tuning, Bayesian optimization with meta-learning, and Bayesian optimization that takes the pipeline structure into account [212,213].

- Multi-fidelity optimization: these methods are less costly than black-box optimization methods because they assess the quality of hyperparameter settings only approximately. Multi-fidelity methods introduce heuristics inside an algorithm, using low-fidelity approximations of the actual loss function to reduce runtime. Such heuristics include tuning hyperparameters on a small subset of the data or of the features, and training for only a few iterations, using CV or down-sampled images. Learning-curve-based prediction for early stopping is also used, as are bandit-based algorithms (successive halving [214] and Hyperband [215]) for algorithm selection based on low-fidelity approximations. Moreover, Bayesian Optimization Hyperband (BOHB) [216] combines Bayesian optimization and Hyperband to achieve both strong anytime performance (quick improvements in the beginning by using low fidelities in Hyperband) and strong final performance (good performance in the long run by replacing Hyperband’s random search with Bayesian optimization). For adaptive fidelity options, see [36].
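To make the interplay between random search and successive halving more concrete, the following plain-Python sketch samples configurations at random and then repeatedly keeps the better half while doubling the budget. It is an illustration under stated assumptions rather than the algorithm of [214] or [215]: `validation_loss` is a hypothetical stand-in for training a model for `budget` iterations, the hyperparameters `lr` and `depth` are invented for the example, and the `noise` entry only simulates how crude a low-fidelity estimate is.

```python
import random


def validation_loss(config, budget):
    """Hypothetical low-fidelity evaluation: the estimate improves with budget."""
    true_loss = (config["lr"] - 0.1) ** 2 + 0.01 * config["depth"]
    # Simulated approximation error that shrinks as the budget grows.
    return true_loss + config["noise"] / budget


def sample_config(rng):
    """Random search step: draw one configuration at random."""
    return {
        "lr": 10 ** rng.uniform(-3, 0),      # log-uniform learning rate
        "depth": rng.randint(1, 5),          # integer hyperparameter
        "noise": rng.uniform(-0.05, 0.05),   # simulation artifact, not a hyperparameter
    }


def successive_halving(configs, min_budget=1, eta=2):
    """Keep the best 1/eta of the configurations and multiply the budget by eta."""
    survivors, budget, history = list(configs), min_budget, []
    while len(survivors) > 1:
        history.append((budget, len(survivors)))
        ranked = sorted(survivors, key=lambda c: validation_loss(c, budget))
        survivors = ranked[: max(1, len(ranked) // eta)]
        budget *= eta
    return survivors[0], history


rng = random.Random(0)
configs = [sample_config(rng) for _ in range(16)]
best, history = successive_halving(configs)
print(history)  # [(1, 16), (2, 8), (4, 4), (8, 2)]
print(best)
```

Only two of the sixteen configurations are ever evaluated at the largest budget, which is where the runtime saving over evaluating every configuration at full fidelity comes from.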

6.2. AutoML Systems

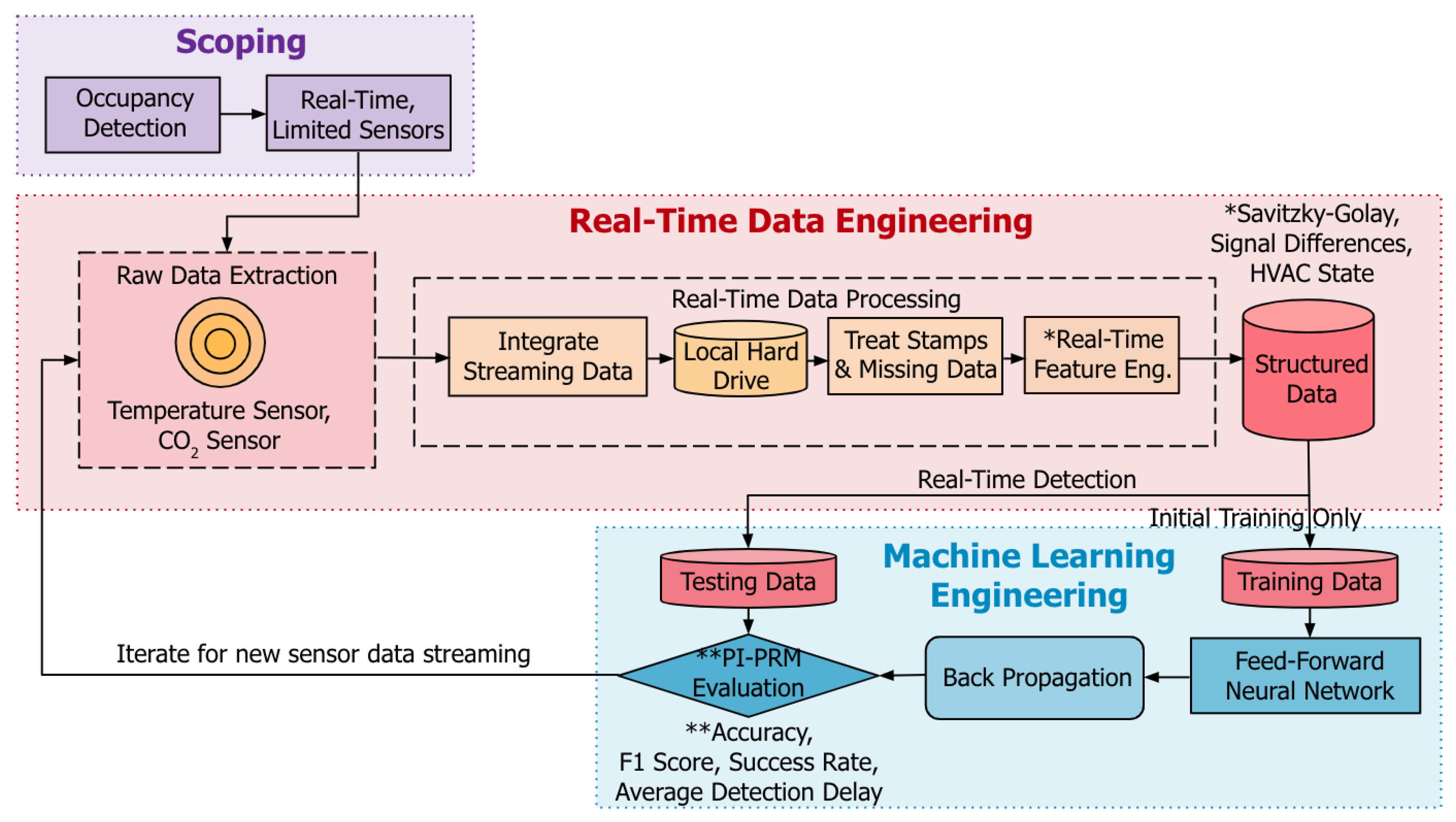

7. A Supervised Classification Workflow Example

8. Discussion

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AHO | Automated Hyperparameter Optimization |
| AIC | Akaike Information Criterion |
| ALA | Adaptive Linear Approximation |
| API | Application Programming Interface |
| AUC | Area Under Curve |
| Auto-WEKA | Automatic Model Selection & Hyperparameter Optimization |
| BDW | Best Daubechies Wavelet Coefficients |
| BFC | Best Fourier Coefficients |
| BIC | Bayesian Information Criterion |
| BOHB | Bayesian Optimization Hyperband |
| CART | Classification and Regression Tree |
| CASH | Combined Algorithm Selection & Hyperparameter optimization |
| CI/CD | Continuous Integration / Continuous Delivery or Deployment |
| CMA-ES | Covariance Matrix Adaptation Evolution Strategy |
| CoD | Curse of Dimensionality |
| CV | Cross-Validation |
| DB | Database |
| DDoS | Distributed Denial-of-Service |
| DevOps | Development Operations |
| DFS | Deep Feature Synthesis |
| DGUFS | Dependence Guided Unsupervised Feature Selection |
| DNN | Deep Neural Network |
| DT | Decision Tree |
| ELT | Extract, Load, Transform |
| ETL | Extract, Transform, Load |
| EUFS | Embedded Unsupervised Feature Selection |
| FIR | Finite Impulse Response |
| FN | False Negative |
| FP | False Positive |
| FSFS | Feature Selection with Feature Similarity |
| GeP | Genetic Programming |
| GP | Gaussian Process |
| HVAC | Heating Ventilation and Air Conditioning |
| IARPA | Intelligence Advanced Research Projects Activity |
| KDD | Knowledge Discovery from Data |
| kNN | k-Nearest Neighbors |
| LARS | Least Angle Regression |
| LBFGS | Limited-memory Broyden-Fletcher-Goldfarb-Shanno |
| LDS | Linear Discriminant Analysis |
| LLC-fs | Local Learning-based Clustering with feature selection |
| LR | Logistic Regression |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| MCFS | Multi-Cluster Feature Selection |
| MDI | Mean Decrease in Impurity |
| MDL | Minimum Description Length |
| MICI | Maximal Information Compression Index |
| ML | Machine Learning |
| MLOps | Machine Learning Operations |
| MRDTL | Multi-Relational Decision Tree Learning |
| MSE | Mean Squared Error |
| NAS | Neural Architecture Search |
| NDFS | Non-negative Discriminative Feature Selection |
| NN | Neural Network |
| NNI | Neural Network Intelligence |
| OLS | Ordinary Least Squares |
| OoB | Out-of-Bag |
| OOP | Object Oriented Programming |
| PoLP | Principle of Least Privilege |
| PCA | Principal Component Analysis |
| REFSVM | Recursive Feature Elimination Support Vector Machines |
| RF | Random Forest |
| RICA | Reconstruction Independent Component Analysis |
| RMSE | Root Mean Squared Error |
| ROC | Receiver Operating Characteristic |
| RRFS | Relevance Redundancy Feature Selection |
| SLA | Service Level Agreement |
| SQL | Structured Query Language |
| SRM | Structural Risk Minimization |
| SS-SFS | Simplified Silhouette Sequential Forward Selection |
| SVD | Singular Value Decomposition |
| SVM | Support Vector Machines |
| TDD | Test-Driven Development |
| TN | True Negative |
| TOC | Total Operating Characteristic |
| TP | True Positive |
| TPOT | Tree-based Pipeline Optimization Tool |
| UDFS | Unsupervised Discriminative Feature Selection |
| VPC | Virtual Private Cloud |

References

- Gibert, D.; Mateu, C.; Planes, J. The rise of machine learning for detection and classification of malware: Research developments, trends and challenges. Journal of Network and Computer Applications 2020, 153, 102526.

- Bravo-Rocca, G.; Liu, P.; Guitart, J.; Dholakia, A.; Ellison, D.; Falkanger, J.; Hodak, M. Scanflow: A multi-graph framework for Machine Learning workflow management, supervision, and debugging. Expert Systems with Applications 2022, 202, 117232.

- Bala, A.; Chana, I. Intelligent failure prediction models for scientific workflows. Expert Systems with Applications 2015, 42, 980–989.

- Quemy, A. Two-stage optimization for machine learning workflow. Information Systems 2020, 92, 101483.

- Grabska, E.; Frantz, D.; Ostapowicz, K. Evaluation of machine learning algorithms for forest stand species mapping using Sentinel-2 imagery and environmental data in the Polish Carpathians. Remote Sensing of Environment 2020, 251, 112103.

- Liu, R.; Misra, S. A generalized machine learning workflow to visualize mechanical discontinuity. Journal of Petroleum Science and Engineering 2022, 210, 109963.

- He, S.; Wang, Y.; Zhang, Z.; Xiao, F.; Zuo, S.; Zhou, Y.; Cai, X.; Jin, X. Interpretable machine learning workflow for evaluation of the transformation temperatures of TiZrHfNiCoCu high entropy shape memory alloys. Materials & Design 2023, 225, 111513.

- Zhou, Y.; Li, G.; Dong, J.; Xing, X.h.; Dai, J.; Zhang, C. MiYA, an efficient machine-learning workflow in conjunction with the YeastFab assembly strategy for combinatorial optimization of heterologous metabolic pathways in Saccharomyces cerevisiae. Metabolic engineering 2018, 47, 294–302.

- Wong, W.K.; Joglekar, M.V.; Saini, V.; Jiang, G.; Dong, C.X.; Chaitarvornkit, A.; Maciag, G.J.; Gerace, D.; Farr, R.J.; Satoor, S.N.; others. Machine learning workflows identify a microRNA signature of insulin transcription in human tissues. Iscience 2021, 24.

- Paudel, D.; Boogaard, H.; de Wit, A.; Janssen, S.; Osinga, S.; Pylianidis, C.; Athanasiadis, I.N. Machine learning for large-scale crop yield forecasting. Agricultural Systems 2021, 187, 103016.

- Haghighatlari, M.; Hachmann, J. Advances of machine learning in molecular modeling and simulation. Current Opinion in Chemical Engineering 2019, 23, 51–57.

- Reker, D. Practical considerations for active machine learning in drug discovery. Drug Discovery Today: Technologies 2019, 32, 73–79.

- Narayanan, H.; Dingfelder, F.; Butté, A.; Lorenzen, N.; Sokolov, M.; Arosio, P. Machine learning for biologics: opportunities for protein engineering, developability, and formulation. Trends in pharmacological sciences 2021, 42, 151–165.

- Jeong, S.; Kwak, J.; Lee, S. Machine learning workflow for the oil uptake prediction of rice flour in a batter-coated fried system. Innovative Food Science & Emerging Technologies 2021, 74, 102796.

- Li, W.; Niu, Z.; Shang, R.; Qin, Y.; Wang, L.; Chen, H. High-resolution mapping of forest canopy height using machine learning by coupling ICESat-2 LiDAR with Sentinel-1, Sentinel-2 and Landsat-8 data. International Journal of Applied Earth Observation and Geoinformation 2020, 92, 102163.

- Lv, A.; Cheng, L.; Aghighi, M.A.; Masoumi, H.; Roshan, H. A novel workflow based on physics-informed machine learning to determine the permeability profile of fractured coal seams using downhole geophysical logs. Marine and Petroleum Geology 2021, 131, 105171.

- Gharib, A.; Davies, E.G. A workflow to address pitfalls and challenges in applying machine learning models to hydrology. Advances in Water Resources 2021, 152, 103920.

- Kampezidou, S.I.; Ray, A.T.; Duncan, S.; Balchanos, M.G.; Mavris, D.N. Real-time occupancy detection with physics-informed pattern-recognition machines based on limited CO2 and temperature sensors. Energy and Buildings 2021, 242, 110863.

- Fu, H.; Kampezidou, S.; Sung, W.; Duncan, S.; Mavris, D.N. A Data-driven Situational Awareness Approach to Monitoring Campus-wide Power Consumption. 2018 International Energy Conversion Engineering Conference, 2018, p. 4414.

- Kampezidou, S.; Wiegman, H. Energy and power savings assessment in buildings via conservation voltage reduction. 2017 IEEE Power & Energy Society Innovative Smart Grid Technologies Conference (ISGT). IEEE, 2017, pp. 1–5.

- Kampezidou, S.I.; Romberg, J.; Vamvoudakis, K.G.; Mavris, D.N. Scalable Online Learning of Approximate Stackelberg Solutions in Energy Trading Games with Demand Response Aggregators. arXiv preprint arXiv:2304.02086 2023.

- Kampezidou, S.I.; Romberg, J.; Vamvoudakis, K.G.; Mavris, D.N. Online Adaptive Learning in Energy Trading Stackelberg Games with Time-Coupling Constraints. 2021 American Control Conference (ACC). IEEE, 2021, pp. 718–723.

- Gao, Z.; Kampezidou, S.I.; Behere, A.; Puranik, T.G.; Rajaram, D.; Mavris, D.N. Multi-level aircraft feature representation and selection for aviation environmental impact analysis. Transportation Research Part C: Emerging Technologies 2022, 143, 103824.

- Tikayat Ray, A.; Cole, B.F.; Pinon Fischer, O.J.; White, R.T.; Mavris, D.N. aeroBERT-Classifier: Classification of Aerospace Requirements Using BERT. Aerospace 2023, 10.

- Tikayat Ray, A.; Pinon Fischer, O.J.; Mavris, D.N.; White, R.T.; Cole, B.F. aeroBERT-NER: Named-Entity Recognition for Aerospace Requirements Engineering using BERT. In AIAA SCITECH 2023 Forum.

- Tikayat Ray, A. Standardization of Engineering Requirements Using Large Language Models. PhD thesis, Georgia Institute of Technology, 2023.

- Tikayat Ray, A.; Cole, B.F.; Pinon Fischer, O.J.; Bhat, A.P.; White, R.T.; Mavris, D.N. Agile Methodology for the Standardization of Engineering Requirements Using Large Language Models. Systems 2023, 11.

- Shrivastava, R.; Sisodia, D.S.; Nagwani, N.K. Deep neural network-based multi-stakeholder recommendation system exploiting multi-criteria ratings for preference learning. Expert Systems with Applications 2023, 213, 119071.

- van Dinter, R.; Catal, C.; Tekinerdogan, B. A decision support system for automating document retrieval and citation screening. Expert Systems with Applications 2021, 182, 115261.

- Li, X.; Zheng, J.; Li, M.; Ma, W.; Hu, Y. One-shot neural architecture search for fault diagnosis using vibration signals. Expert Systems with Applications 2022, 190, 116027.

- Kim, J.; Comuzzi, M. A diagnostic framework for imbalanced classification in business process predictive monitoring. Expert Systems with Applications 2021, 184, 115536.

- Jin, Y.; Carman, M.; Zhu, Y.; Xiang, Y. A technical survey on statistical modelling and design methods for crowdsourcing quality control. Artificial Intelligence 2020, 287, 103351.

- Boeschoten, S.; Catal, C.; Tekinerdogan, B.; Lommen, A.; Blokland, M. The automation of the development of classification models and improvement of model quality using feature engineering techniques. Expert Systems with Applications 2023, 213, 118912.

- Zhang, Y.; Kwong, S.; Wang, S. Machine learning based video coding optimizations: A survey. Information Sciences 2020, 506, 395–423.

- Moniz, N.; Cerqueira, V. Automated imbalanced classification via meta-learning. Expert Systems with Applications 2021, 178, 115011.

- Waring, J.; Lindvall, C.; Umeton, R. Automated machine learning: Review of the state-of-the-art and opportunities for healthcare. Artificial intelligence in medicine 2020, 104, 101822.

- Kefalas, M.; Baratchi, M.; Apostolidis, A.; van den Herik, D.; Bäck, T. Automated machine learning for remaining useful life estimation of aircraft engines. 2021 IEEE International conference on prognostics and health management (ICPHM). IEEE, 2021, pp. 1–9.

- Tikayat Ray, A.; Bhat, A.P.; White, R.T.; Nguyen, V.M.; Pinon Fischer, O.J.; Mavris, D.N. Examining the Potential of Generative Language Models for Aviation Safety Analysis: Case Study and Insights Using the Aviation Safety Reporting System (ASRS). Aerospace 2023, 10.

- Hayashi, M.; Tamai, K.; Owashi, Y.; Miura, K. Automated machine learning for identification of pest aphid species (Hemiptera: Aphididae). Applied entomology and zoology 2019, 54, 487–490.

- Espejo-Garcia, B.; Malounas, I.; Vali, E.; Fountas, S. Testing the Suitability of Automated Machine Learning for Weeds Identification. Ai 2021, 2, 34–47.

- Koh, J.C.; Spangenberg, G.; Kant, S. Automated machine learning for high-throughput image-based plant phenotyping. Remote Sensing 2021, 13, 858.

- Warnett, S.J.; Zdun, U. Architectural design decisions for the machine learning workflow. Computer 2022, 55, 40–51.

- Khalilnejad, A.; Karimi, A.M.; Kamath, S.; Haddadian, R.; French, R.H.; Abramson, A.R. Automated pipeline framework for processing of large-scale building energy time series data. PloS one 2020, 15, e0240461.

- Michael, N.; Cucuringu, M.; Howison, S. OFTER: An Online Pipeline for Time Series Forecasting. arXiv preprint arXiv:2304.03877 2023.

- Hapke, H.; Nelson, C. Building machine learning pipelines; O’Reilly Media, 2020.

- Kolodiazhnyi, K. Hands-On Machine Learning with C++: Build, train, and deploy end-to-end machine learning and deep learning pipelines; Packt Publishing Ltd, 2020.

- El-Amir, H.; Hamdy, M. Deep learning pipeline: building a deep learning model with TensorFlow; Apress, 2019.

- Meisenbacher, S.; Turowski, M.; Phipps, K.; Rätz, M.; Müller, D.; Hagenmeyer, V.; Mikut, R. Review of automated time series forecasting pipelines. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 2022, 12, e1475.

- Wang, M.; Cui, Y.; Wang, X.; Xiao, S.; Jiang, J. Machine learning for networking: Workflow, advances and opportunities. Ieee Network 2017, 32, 92–99.

- Kreuzberger, D.; Kühl, N.; Hirschl, S. Machine learning operations (mlops): Overview, definition, and architecture. IEEE Access 2023.

- di Laurea, I.S. Mlops-standardizing the machine learning workflow. PhD thesis, University of Bologna Bologna, Italy, 2021.

- Allison, P.D. Missing data; Sage publications, 2001.

- Little, R.J.; Rubin, D.B. Statistical analysis with missing data; Vol. 793, John Wiley & Sons, 2019.

- Candes, E.; Recht, B. Exact matrix completion via convex optimization. Communications of the ACM 2012, 55, 111–119.

- Candès, E.J.; Tao, T. The power of convex relaxation: Near-optimal matrix completion. IEEE Transactions on Information Theory 2010, 56, 2053–2080.

- Candes, E.J.; Plan, Y. Matrix completion with noise. Proceedings of the IEEE 2010, 98, 925–936.

- Johnson, C.R. Matrix completion problems: a survey. Matrix theory and applications, 1990, Vol. 40, pp. 171–198.

- Recht, B. A simpler approach to matrix completion. Journal of Machine Learning Research 2011, 12.

- Kennedy, A.; Nash, G.; Rattenbury, N.; Kempa-Liehr, A.W. Modelling the projected separation of microlensing events using systematic time-series feature engineering. Astronomy and Computing 2021, 35, 100460.

- Elmagarmid, A.K.; Ipeirotis, P.G.; Verykios, V.S. Duplicate record detection: A survey. IEEE Transactions on knowledge and data engineering 2006, 19, 1–16.

- Hlupić, T.; Oreščanin, D.; Ružak, D.; Baranović, M. An overview of current data lake architecture models. 2022 45th Jubilee International Convention on Information, Communication and Electronic Technology (MIPRO). IEEE, 2022, pp. 1082–1087.

- Vassiliadis, P. A survey of extract–transform–load technology. International Journal of Data Warehousing and Mining (IJDWM) 2009, 5, 1–27.

- Vassiliadis, P.; Simitsis, A. Extraction, Transformation, and Loading. Encyclopedia of Database Systems 2009, 10.

- Dash, T.; Chitlangia, S.; Ahuja, A.; Srinivasan, A. A review of some techniques for inclusion of domain-knowledge into deep neural networks. Scientific Reports 2022, 12, 1–15.

- Dara, S.; Tumma, P. Feature extraction by using deep learning: A survey. 2018 Second international conference on electronics, communication and aerospace technology (ICECA). IEEE, 2018, pp. 1795–1801.

- Lee, J.; Bahri, Y.; Novak, R.; Schoenholz, S.S.; Pennington, J.; Sohl-Dickstein, J. Deep neural networks as gaussian processes. arXiv preprint arXiv:1711.00165 2017.

- Benoit, K. Linear regression models with logarithmic transformations. London School of Economics, London 2011, 22, 23–36.

- Hastie, T.; Tibshirani, R.; Friedman, J.H. The elements of statistical learning: data mining, inference, and prediction; Vol. 2, Springer, 2009.

- Piryonesi, S.M.; El-Diraby, T.E. Role of data analytics in infrastructure asset management: Overcoming data size and quality problems. Journal of Transportation Engineering, Part B: Pavements 2020, 146, 04020022.

- Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and regression trees; Routledge, 2017.

- Grus, J. Data science from scratch: first principles with python; O’Reilly Media, 2019.

- Sharma, V. A Study on Data Scaling Methods for Machine Learning. International Journal for Global Academic & Scientific Research 2022, 1, 23–33.

- Leznik, M.; Tofallis, C. Estimating invariant principal components using diagonal regression 2005.

- Ahsan, M.M.; Mahmud, M.P.; Saha, P.K.; Gupta, K.D.; Siddique, Z. Effect of data scaling methods on machine learning algorithms and model performance. Technologies 2021, 9, 52.

- Zheng, A.; Casari, A. Feature engineering for machine learning: principles and techniques for data scientists; O’Reilly Media, Inc., 2018.

- Neter, J.; Kutner, M.H.; Nachtsheim, C.J.; Wasserman, W.; others. Applied linear statistical models 1996.

- Yeo, I.K.; Johnson, R.A. A new family of power transformations to improve normality or symmetry. Biometrika 2000, 87, 954–959.

- Fisher, R.A. Frequency distribution of the values of the correlation coefficient in samples from an indefinitely large population. Biometrika 1915, 10, 507–521.

- Anscombe, F.J. The transformation of Poisson, binomial and negative-binomial data. Biometrika 1948, 35, 246–254.

- Box, G.E.; Cox, D.R. An analysis of transformations. Journal of the Royal Statistical Society: Series B (Methodological) 1964, 26, 211–243.

- Holland, S. Transformations of proportions and percentages, 2015.

- Cormode, G.; Muthukrishnan, S. An improved data stream summary: the count-min sketch and its applications. Journal of Algorithms 2005, 55, 58–75. [Google Scholar] [CrossRef]

- Kessy, A.; Lewin, A.; Strimmer, K. Optimal whitening and decorrelation. The American Statistician 2018, 72, 309–314. [Google Scholar] [CrossRef]

- Higham, N.J. Analysis of the Cholesky decomposition of a semi-definite matrix 1990.

- Jain, A.K.; Duin, R.P.W.; Mao, J. Statistical pattern recognition: A review. IEEE Transactions on pattern analysis and machine intelligence 2000, 22, 4–37. [Google Scholar] [CrossRef]

- Lakhina, A.; Crovella, M.; Diot, C. Diagnosing network-wide traffic anomalies. ACM SIGCOMM computer communication review 2004, 34, 219–230. [Google Scholar] [CrossRef]

- Han, K.; Wang, Y.; Zhang, C.; Li, C.; Xu, C. Autoencoder inspired unsupervised feature selection. 2018 IEEE international conference on acoustics, speech and signal processing (ICASSP). IEEE, 2018, pp. 2941–2945.

- Solorio-Fernández, S.; Carrasco-Ochoa, J.A.; Martínez-Trinidad, J.F. A review of unsupervised feature selection methods. Artificial Intelligence Review 2020, 53, 907–948. [Google Scholar] [CrossRef]

- Li, Z.; Yang, Y.; Liu, J.; Zhou, X.; Lu, H. Unsupervised feature selection using nonnegative spectral analysis. Proceedings of the AAAI conference on artificial intelligence, 2012, Vol. 26, pp. 1026–1032.

- Yu, L.; Liu, H. Feature selection for high-dimensional data: A fast correlation-based filter solution. Proceedings of the 20th international conference on machine learning (ICML-03), 2003, pp. 856–863.

- Mitra, P.; Murthy, C.; Pal, S.K. Unsupervised feature selection using feature similarity. IEEE transactions on pattern analysis and machine intelligence 2002, 24, 301–312. [Google Scholar] [CrossRef]

- He, X.; Cai, D.; Niyogi, P. Laplacian score for feature selection. Advances in neural information processing systems 2005, 18. [Google Scholar]

- Ferreira, A.J.; Figueiredo, M.A. An unsupervised approach to feature discretization and selection. Pattern Recognition 2012, 45, 3048–3060. [Google Scholar] [CrossRef]

- Park, C.H. A feature selection method using hierarchical clustering. In Mining intelligence and knowledge exploration; Springer, 2013; pp. 1–6.

- Cai, D.; Zhang, C.; He, X. Unsupervised feature selection for multi-cluster data. Proceedings of the 16th ACM SIGKDD international conference on Knowledge discovery and data mining, 2010, pp. 333–342.

- Yang, Y.; others. ℓ2,1-norm regularized discriminative feature selection for unsupervised learning. IJCAI international joint conference on artificial intelligence, 2011.

- Dy, J.G.; Brodley, C.E. Feature selection for unsupervised learning. Journal of machine learning research 2004, 5, 845–889. [Google Scholar]

- Breaban, M.; Luchian, H. A unifying criterion for unsupervised clustering and feature selection. Pattern Recognition 2011, 44, 854–865. [Google Scholar] [CrossRef]

- Hruschka, E.R.; Covoes, T.F. Feature selection for cluster analysis: an approach based on the simplified Silhouette criterion. International Conference on Computational Intelligence for Modelling, Control and Automation and International Conference on Intelligent Agents, Web Technologies and Internet Commerce (CIMCA-IAWTIC’06). IEEE, 2005, Vol. 1, pp. 32–38.

- Law, M.H.; Figueiredo, M.A.; Jain, A.K. Simultaneous feature selection and clustering using mixture models. IEEE transactions on pattern analysis and machine intelligence 2004, 26, 1154–1166. [Google Scholar] [CrossRef]

- Zeng, H.; Cheung, Y.m. Feature selection and kernel learning for local learning-based clustering. IEEE transactions on pattern analysis and machine intelligence 2010, 33, 1532–1547. [Google Scholar] [CrossRef] [PubMed]

- Wang, S.; Pedrycz, W.; Zhu, Q.; Zhu, W. Unsupervised feature selection via maximum projection and minimum redundancy. Knowledge-Based Systems 2015, 75, 19–29. [Google Scholar] [CrossRef]

- Guo, J.; Zhu, W. Dependence guided unsupervised feature selection. Proceedings of the AAAI Conference on Artificial Intelligence, 2018, Vol. 32.

- Liu, H.; Motoda, H. Feature extraction, construction and selection: A data mining perspective; Vol. 453, Springer Science & Business Media, 1998.

- Kuhn, M.; Johnson, K.; others. Applied predictive modeling; Vol. 26, Springer, 2013.

- Hastie, T.; Tibshirani, R.; Wainwright, M. Statistical learning with sparsity. Monographs on statistics and applied probability 2015, 143, 143. [Google Scholar]

- Tibshirani, R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Methodological) 1996, 58, 267–288. [Google Scholar] [CrossRef]

- Zou, H.; Hastie, T. Regularization and variable selection via the elastic net. Journal of the royal statistical society: series B (statistical methodology) 2005, 67, 301–320. [Google Scholar] [CrossRef]

- Obozinski, G.; Taskar, B.; Jordan, M. Multi-task feature selection. Statistics Department, UC Berkeley, Tech. Rep 2006, 2, 2. [Google Scholar]

- Argyriou, A.; Evgeniou, T.; Pontil, M. Multi-task feature learning. Advances in neural information processing systems 2006, 19. [Google Scholar]

- Yuan, M.; Lin, Y. Model selection and estimation in regression with grouped variables. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2006, 68, 49–67. [Google Scholar] [CrossRef]

- Kocev, D.; Vens, C.; Struyf, J.; Džeroski, S. Ensembles of multi-objective decision trees. European conference on machine learning. Springer, 2007, pp. 624–631.

- Breiman, L. Bagging predictors. Machine learning 1996, 24, 123–140. [Google Scholar] [CrossRef]

- Elith, J.; Leathwick, J.R.; Hastie, T. A working guide to boosted regression trees. Journal of animal ecology 2008, 77, 802–813. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Machine learning 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Kocev, D.; Džeroski, S.; White, M.D.; Newell, G.R.; Griffioen, P. Using single-and multi-target regression trees and ensembles to model a compound index of vegetation condition. Ecological Modelling 2009, 220, 1159–1168. [Google Scholar] [CrossRef]

- Hastie, T.; Tibshirani, R.; Friedman, J. Boosting and additive trees. In The elements of statistical learning; Springer, 2009; pp. 337–387.

- Madeh Piryonesi, S.; El-Diraby, T.E. Using machine learning to examine impact of type of performance indicator on flexible pavement deterioration modeling. Journal of Infrastructure Systems 2021, 27, 04021005. [Google Scholar] [CrossRef]

- Piryonesi, S.M.; El-Diraby, T.E. Data analytics in asset management: Cost-effective prediction of the pavement condition index. Journal of Infrastructure Systems 2020, 26, 04019036. [Google Scholar] [CrossRef]

- Segal, M.; Xiao, Y. Multivariate random forests. Wiley interdisciplinary reviews: Data mining and knowledge discovery 2011, 1, 80–87. [Google Scholar] [CrossRef]

- Page, E.S. Journal of the Royal Statistical Society. Series A (General) 1962, 125, 161–162. [CrossRef]

- Bishop, C.M. Pattern recognition and machine learning; Springer, 2006.

- Gao, Z. Representative Data and Models for Complex Aerospace Systems Analysis. PhD thesis, Georgia Institute of Technology, 2022.

- Thudumu, S.; Branch, P.; Jin, J.; Singh, J.J. A comprehensive survey of anomaly detection techniques for high dimensional big data. Journal of Big Data 2020, 7, 1–30. [Google Scholar] [CrossRef]

- Katz, G.; Shin, E.C.R.; Song, D. Explorekit: Automatic feature generation and selection. 2016 IEEE 16th International Conference on Data Mining (ICDM). IEEE, 2016, pp. 979–984.

- Lam, H.T.; Thiebaut, J.M.; Sinn, M.; Chen, B.; Mai, T.; Alkan, O. One button machine for automating feature engineering in relational databases. arXiv preprint arXiv:1706.00327 2017. [Google Scholar]

- Kaul, A.; Maheshwary, S.; Pudi, V. Autolearn: automated feature generation and selection. 2017 IEEE International Conference on data mining (ICDM). IEEE, 2017, pp. 217–226.

- Tran, B.; Xue, B.; Zhang, M. Genetic programming for feature construction and selection in classification on high-dimensional data. Memetic Computing 2016, 8, 3–15. [Google Scholar] [CrossRef]

- Khurana, U.; Turaga, D.; Samulowitz, H.; Parthasrathy, S. Cognito: Automated feature engineering for supervised learning. 2016 IEEE 16th International Conference on Data Mining Workshops (ICDMW). IEEE, 2016, pp. 1304–1307.

- Khurana, U.; Samulowitz, H.; Turaga, D. Feature engineering for predictive modeling using reinforcement learning. Proceedings of the AAAI Conference on Artificial Intelligence, 2018, Vol. 32.

- Nargesian, F.; Samulowitz, H.; Khurana, U.; Khalil, E.B.; Turaga, D.S. Learning Feature Engineering for Classification. Ijcai, 2017, Vol. 17, pp. 2529–2535.

- Li, H.; Chutatape, O. Automated feature extraction in color retinal images by a model based approach. IEEE Transactions on biomedical engineering 2004, 51, 246–254. [Google Scholar] [CrossRef]

- Dang, D.M.; Jackson, K.R.; Mohammadi, M. Dimension and variance reduction for Monte Carlo methods for high-dimensional models in finance. Applied Mathematical Finance 2015, 22, 522–552. [Google Scholar] [CrossRef]

- Donoho, D.L.; others. High-dimensional data analysis: The curses and blessings of dimensionality. AMS math challenges lecture 2000, 1, 32. [Google Scholar]

- Atramentov, A.; Leiva, H.; Honavar, V. A multi-relational decision tree learning algorithm–implementation and experiments. International Conference on Inductive Logic Programming. Springer, 2003, pp. 38–56.

- Kanter, J.M.; Veeramachaneni, K. Deep feature synthesis: Towards automating data science endeavors. 2015 IEEE international conference on data science and advanced analytics (DSAA). IEEE, 2015, pp. 1–10.

- Weimer, D.; Scholz-Reiter, B.; Shpitalni, M. Design of deep convolutional neural network architectures for automated feature extraction in industrial inspection. CIRP annals 2016, 65, 417–420. [Google Scholar] [CrossRef]

- Schneider, T.; Helwig, N.; Schütze, A. Industrial condition monitoring with smart sensors using automated feature extraction and selection. Measurement Science and Technology 2018, 29, 094002. [Google Scholar] [CrossRef]

- Laird, P.; Saul, R. Automated feature extraction for supervised learning. Proceedings of the First IEEE Conference on Evolutionary Computation. IEEE World Congress on Computational Intelligence. IEEE, 1994, pp. 674–679.

- Le, Q.; Karpenko, A.; Ngiam, J.; Ng, A. ICA with reconstruction cost for efficient overcomplete feature learning. Advances in neural information processing systems 2011, 24. [Google Scholar]

- Ngiam, J.; Chen, Z.; Bhaskar, S.; Koh, P.; Ng, A. Sparse filtering. Advances in neural information processing systems 2011, 24. [Google Scholar]

- Nocedal, J.; Wright, S.J. Numerical optimization; Springer, 1999.

- Mallat, S. Group invariant scattering. Communications on Pure and Applied Mathematics 2012, 65, 1331–1398. [Google Scholar] [CrossRef]

- Bruna, J.; Mallat, S. Invariant scattering convolution networks. IEEE transactions on pattern analysis and machine intelligence 2013, 35, 1872–1886. [Google Scholar] [CrossRef] [PubMed]

- Andén, J.; Mallat, S. Deep scattering spectrum. IEEE Transactions on Signal Processing 2014, 62, 4114–4128. [Google Scholar] [CrossRef]

- Mallat, S. Understanding deep convolutional networks. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 2016, 374, 20150203. [Google Scholar] [CrossRef]

- Rizk, Y.; Hajj, N.; Mitri, N.; Awad, M. Deep belief networks and cortical algorithms: A comparative study for supervised classification. Applied computing and informatics 2019, 15, 81–93. [Google Scholar] [CrossRef]

- Rifkin, R.M.; Lippert, R.A. Notes on regularized least squares 2007.

- Yin, R.; Liu, Y.; Wang, W.; Meng, D. Sketch kernel ridge regression using circulant matrix: Algorithm and theory. IEEE transactions on neural networks and learning systems 2019, 31, 3512–3524. [Google Scholar] [CrossRef] [PubMed]

- Efron, B.; Hastie, T.; Johnstone, I.; Tibshirani, R. Least angle regression 2004.

- Bulso, N.; Marsili, M.; Roudi, Y. On the complexity of logistic regression models. Neural computation 2019, 31, 1592–1623. [Google Scholar] [CrossRef] [PubMed]

- Belyaev, M.; Burnaev, E.; Kapushev, Y. Exact inference for Gaussian process regression in case of big data with the Cartesian product structure. arXiv preprint arXiv:1403.6573 2014. [Google Scholar]

- Serpen, G.; Gao, Z. Complexity analysis of multilayer perceptron neural network embedded into a wireless sensor network. Procedia Computer Science 2014, 36, 192–197. [Google Scholar] [CrossRef]

- Jain, A.K.; Mao, J.; Mohiuddin, K.M. Artificial neural networks: A tutorial. Computer 1996, 29, 31–44. [Google Scholar] [CrossRef]

- Fleizach, C.; Fukushima, S. A naive bayes classifier on 1998 kdd cup. Dept. Comput. Sci. Eng., University of California, Los Angeles, CA, USA, Tech. Rep 1998.

- Jensen, F.V.; Nielsen, T.D. Bayesian networks and decision graphs; Vol. 2, Springer, 2007.

- Claesen, M.; De Smet, F.; Suykens, J.A.; De Moor, B. Fast prediction with SVM models containing RBF kernels. arXiv preprint arXiv:1403.0736 2014. [Google Scholar]

- Cardot, H.; Degras, D. Online principal component analysis in high dimension: Which algorithm to choose? International Statistical Review 2018, 86, 29–50. [Google Scholar] [CrossRef]

- Veksler, O. Nonparametric density estimation nearest neighbors, KNN, 2013.

- Raschka, S. STAT 479: Machine Learning Lecture Notes. 2018. Online: https://sebastianraschka.com/pdf/lecture-notes/stat479fs18/07_ensembles_notes.pdf.

- Sani, H.M.; Lei, C.; Neagu, D. Computational complexity analysis of decision tree algorithms. Artificial Intelligence XXXV: 38th SGAI International Conference on Artificial Intelligence, AI 2018, Cambridge, UK, December 11–13, 2018, Proceedings 38. Springer, 2018, pp. 191–197.

- Buczak, A.L.; Guven, E. A survey of data mining and machine learning methods for cyber security intrusion detection. IEEE Communications surveys & tutorials 2015, 18, 1153–1176. [Google Scholar]

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. Lightgbm: A highly efficient gradient boosting decision tree. Advances in neural information processing systems 2017, 30. [Google Scholar]

- Cai, D.; He, X.; Han, J. Training linear discriminant analysis in linear time. 2008 IEEE 24th international conference on data engineering. IEEE, 2008, pp. 209–217.

- Refaeilzadeh, P.; Tang, L.; Liu, H. Cross-validation. Encyclopedia of database systems 2009, 5, 532–538. [Google Scholar]

- Efron, B.; Tibshirani, R.J. An introduction to the bootstrap; CRC press, 1994.

- Efron, B. Bootstrap methods: another look at the jackknife. In Breakthroughs in statistics; Springer, 1992; pp. 569–593.

- Breiman, L. Bias, variance, and arcing classifiers. Technical report, Tech. Rep. 460 Statistics Department University of California Berkeley, 1996.

- Syakur, M.; Khotimah, B.; Rochman, E.; Satoto, B.D. Integration k-means clustering method and elbow method for identification of the best customer profile cluster. IOP conference series: materials science and engineering. IOP Publishing, 2018, Vol. 336, p. 012017.

- Palacio-Niño, J.O.; Berzal, F. Evaluation metrics for unsupervised learning algorithms. arXiv preprint arXiv:1905.05667 2019. [Google Scholar]

- Halkidi, M.; Batistakis, Y.; Vazirgiannis, M. On clustering validation techniques. Journal of intelligent information systems 2001, 17, 107–145. [Google Scholar] [CrossRef]

- Perry, P.O. Cross-validation for unsupervised learning; Stanford University, 2009.

- Airola, A.; Pahikkala, T.; Waegeman, W.; De Baets, B.; Salakoski, T. An experimental comparison of cross-validation techniques for estimating the area under the ROC curve. Computational Statistics & Data Analysis 2011, 55, 1828–1844. [Google Scholar]

- Breiman, L.; Spector, P. Submodel selection and evaluation in regression. The X-random case. International statistical review/revue internationale de Statistique 1992, pp. 291–319.

- Kohavi, R.; others. A study of cross-validation and bootstrap for accuracy estimation and model selection. Ijcai. Montreal, Canada, 1995, Vol. 14, pp. 1137–1145.

- Arlot, S.; Celisse, A. A survey of cross-validation procedures for model selection. Statistics surveys 2010, 4, 40–79. [Google Scholar] [CrossRef]

- McCulloch, C.E.; Searle, S.R. Generalized, linear, and mixed models; John Wiley & Sons, 2004.

- Kühl, N.; Hirt, R.; Baier, L.; Schmitz, B.; Satzger, G. How to conduct rigorous supervised machine learning in information systems research: the supervised machine learning report card. Communications of the Association for Information Systems 2021, 48, 46. [Google Scholar] [CrossRef]

- Caruana, R.; Niculescu-Mizil, A. Data mining in metric space: an empirical analysis of supervised learning performance criteria. Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining, 2004, pp. 69–78.

- Beck, K. Test-driven development: by example; Addison-Wesley Professional, 2003.

- Washizaki, H.; Uchida, H.; Khomh, F.; Guéhéneuc, Y.G. Studying Software Engineering Patterns for Designing Machine Learning Systems. 2019 10th International Workshop on Empirical Software Engineering in Practice (IWESEP), 2019, pp. 49–495. [CrossRef]

- Gamma, E.; Helm, R.; Johnson, R.; Johnson, R.E.; Vlissides, J.; others. Design patterns: elements of reusable object-oriented software; Pearson Deutschland GmbH, 1995.

- Kohavi, R.; Longbotham, R. Online Controlled Experiments and A/B Testing. Encyclopedia of machine learning and data mining 2017, 7, 922–929. [Google Scholar]

- Rajasoundaran, S.; Prabu, A.; Routray, S.; Kumar, S.S.; Malla, P.P.; Maloji, S.; Mukherjee, A.; Ghosh, U. Machine learning based deep job exploration and secure transactions in virtual private cloud systems. Computers & Security 2021, 109, 102379. [Google Scholar] [CrossRef]

- Abran, A.; Moore, J.W.; Bourque, P.; Dupuis, R.; Tripp, L. Software engineering body of knowledge. IEEE Computer Society, Angela Burgess 2004, p. 25.

- pytest. pytest: helps you write better programs. URL: https://docs.pytest.org/en/7.4.x/.

- unittest. unittest - Unit testing framework. URL: https://docs.python.org/3/library/unittest.html.

- JUnit. JUnit. URL: https://junit.org/junit5/.

- mockito. mockito. URL: https://site.mockito.org/.

- Ardagna, C.A.; Bena, N.; Hebert, C.; Krotsiani, M.; Kloukinas, C.; Spanoudakis, G. Big Data Assurance: An Approach Based on Service-Level Agreements. Big Data 2023. 2023. [Google Scholar]

- Mili, A.; Tchier, F. Software testing: Concepts and operations; John Wiley & Sons, 2015.

- Li, P.L.; Chai, X.; Campbell, F.; Liao, J.; Abburu, N.; Kang, M.; Niculescu, I.; Brake, G.; Patil, S.; Dooley, J.; others. Evolving software to be ML-driven utilizing real-world A/B testing: experiences, insights, challenges. 2021 IEEE/ACM 43rd International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP). IEEE, 2021, pp. 170–179.

- Manias, D.M.; Chouman, A.; Shami, A. Model Drift in Dynamic Networks. IEEE Communications Magazine 2023. [Google Scholar] [CrossRef]

- Wani, D.; Ackerman, S.; Farchi, E.; Liu, X.; Chang, H.w.; Lalithsena, S. Data Drift Monitoring for Log Anomaly Detection Pipelines. arXiv preprint arXiv:2310.14893 2023. [Google Scholar]

- Schneider, F. Least privilege and more [computer security]. IEEE Security & Privacy 2003, 1, 55–59. [Google Scholar] [CrossRef]

- Mahjabin, T.; Xiao, Y.; Sun, G.; Jiang, W. A survey of distributed denial-of-service attack, prevention, and mitigation techniques. International Journal of Distributed Sensor Networks 2017, 13, 1550147717741463. [Google Scholar] [CrossRef]

- Certified Tester Foundation Level (CTFL) Syllabus. Technical report, International Software Testing Qualifications Board, Version 2018 v3.1.1.

- Lewis, W.E. Software testing and continuous quality improvement; Auerbach publications, 2004.

- Martin, R.C. Clean code: a handbook of agile software craftsmanship; Pearson Education, 2009.

- Thomas, D.; Hunt, A. The Pragmatic Programmer: your journey to mastery; Addison-Wesley Professional, 2019.

- Hutter, F.; Kotthoff, L.; Vanschoren, J. Automated machine learning: methods, systems, challenges; Springer Nature, 2019.

- Melis, G.; Dyer, C.; Blunsom, P. On the state of the art of evaluation in neural language models. arXiv preprint arXiv:1707.05589 2017. [Google Scholar]

- Snoek, J.; Larochelle, H.; Adams, R.P. Practical bayesian optimization of machine learning algorithms. Advances in neural information processing systems 2012, 25. [Google Scholar]

- Bergstra, J.; Yamins, D.; Cox, D. Making a science of model search: Hyperparameter optimization in hundreds of dimensions for vision architectures. International conference on machine learning. PMLR, 2013, pp. 115–123.

- Sculley, D.; Snoek, J.; Wiltschko, A.; Rahimi, A. Winner’s curse? On pace, progress, and empirical rigor 2018.

- Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. Journal of machine learning research 2012, 13. [Google Scholar]

- Hansen, N. The CMA evolution strategy: a comparing review. Towards a new evolutionary computation: Advances in the estimation of distribution algorithms 2006, pp. 75–102.

- Snoek, J.; Rippel, O.; Swersky, K.; Kiros, R.; Satish, N.; Sundaram, N.; Patwary, M.; Prabhat, M.; Adams, R. Scalable bayesian optimization using deep neural networks. International conference on machine learning. PMLR, 2015, pp. 2171–2180.

- Dahl, G.E.; Sainath, T.N.; Hinton, G.E. Improving deep neural networks for LVCSR using rectified linear units and dropout. 2013 IEEE international conference on acoustics, speech and signal processing. IEEE, 2013, pp. 8609–8613.

- Shahriari, B.; Swersky, K.; Wang, Z.; Adams, R.P.; De Freitas, N. Taking the human out of the loop: A review of Bayesian optimization. Proceedings of the IEEE 2015, 104, 148–175. [Google Scholar] [CrossRef]

- Brochu, E.; Cora, V.M.; De Freitas, N. A tutorial on Bayesian optimization of expensive cost functions, with application to active user modeling and hierarchical reinforcement learning. arXiv preprint arXiv:1012.2599 2010. [Google Scholar]

- Zeng, X.; Luo, G. Progressive sampling-based Bayesian optimization for efficient and automatic machine learning model selection. Health information science and systems 2017, 5, 1–21. [Google Scholar] [CrossRef]

- Zhang, Y.; Bahadori, M.T.; Su, H.; Sun, J. FLASH: fast Bayesian optimization for data analytic pipelines. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2016, pp. 2065–2074.

- Jamieson, K.; Talwalkar, A. Non-stochastic best arm identification and hyperparameter optimization. Artificial intelligence and statistics. PMLR, 2016, pp. 240–248.

- Li, L.; Jamieson, K.; DeSalvo, G.; Rostamizadeh, A.; Talwalkar, A. Hyperband: A novel bandit-based approach to hyperparameter optimization. The Journal of Machine Learning Research 2017, 18, 6765–6816. [Google Scholar]

- Falkner, S.; Klein, A.; Hutter, F. BOHB: Robust and efficient hyperparameter optimization at scale. International Conference on Machine Learning. PMLR, 2018, pp. 1437–1446.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Transactions on knowledge and data engineering 2010, 22, 1345–1359. [Google Scholar] [CrossRef]

- Ravi, S.; Larochelle, H. Optimization as a model for few-shot learning. International conference on learning representations, 2017.

- Elsken, T.; Metzen, J.H.; Hutter, F. Neural architecture search: A survey. The Journal of Machine Learning Research 2019, 20, 1997–2017. [Google Scholar]

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 8697–8710.

- Zela, A.; Klein, A.; Falkner, S.; Hutter, F. Towards automated deep learning: Efficient joint neural architecture and hyperparameter search. arXiv preprint arXiv:1807.06906 2018. [Google Scholar]

- Real, E.; Aggarwal, A.; Huang, Y.; Le, Q.V. Aging evolution for image classifier architecture search. AAAI conference on artificial intelligence, 2019, Vol. 2, p. 2.

- Runge, F.; Stoll, D.; Falkner, S.; Hutter, F. Learning to design RNA. arXiv preprint arXiv:1812.11951 2018. [Google Scholar]

- Swersky, K.; Snoek, J.; Adams, R.P. Freeze-thaw Bayesian optimization. arXiv preprint arXiv:1406.3896 2014. [Google Scholar]

- Domhan, T.; Springenberg, J.T.; Hutter, F. Speeding up automatic hyperparameter optimization of deep neural networks by extrapolation of learning curves. Twenty-fourth international joint conference on artificial intelligence, 2015.

- Klein, A.; Falkner, S.; Springenberg, J.T.; Hutter, F. Learning curve prediction with Bayesian neural networks. International conference on learning representations, 2016.

- Baker, B.; Gupta, O.; Raskar, R.; Naik, N. Accelerating neural architecture search using performance prediction. arXiv preprint arXiv:1705.10823 2017. [Google Scholar]

- Real, E.; Moore, S.; Selle, A.; Saxena, S.; Suematsu, Y.L.; Tan, J.; Le, Q.V.; Kurakin, A. Large-scale evolution of image classifiers. International conference on machine learning. PMLR, 2017, pp. 2902–2911.

- Elsken, T.; Metzen, J.H.; Hutter, F. Simple and efficient architecture search for convolutional neural networks. arXiv preprint arXiv:1711.04528 2017. [Google Scholar]

- Elsken, T.; Metzen, J.H.; Hutter, F. Efficient multi-objective neural architecture search via lamarckian evolution. arXiv preprint arXiv:1804.09081 2018. [Google Scholar]

- Cai, H.; Chen, T.; Zhang, W.; Yu, Y.; Wang, J. Efficient architecture search by network transformation. Proceedings of the AAAI Conference on Artificial Intelligence, 2018, Vol. 32.

- Cai, H.; Yang, J.; Zhang, W.; Han, S.; Yu, Y. Path-level network transformation for efficient architecture search. International Conference on Machine Learning. PMLR, 2018, pp. 678–687.

- Saxena, S.; Verbeek, J. Convolutional neural fabrics. Advances in neural information processing systems 2016, 29. [Google Scholar]

- Pham, H.; Guan, M.; Zoph, B.; Le, Q.; Dean, J. Efficient neural architecture search via parameters sharing. International conference on machine learning. PMLR, 2018, pp. 4095–4104.

- Bender, G.; Kindermans, P.J.; Zoph, B.; Vasudevan, V.; Le, Q. Understanding and simplifying one-shot architecture search. International conference on machine learning. PMLR, 2018, pp. 550–559.

- Liu, H.; Simonyan, K.; Yang, Y. Darts: Differentiable architecture search. arXiv preprint arXiv:1806.09055 2018. [Google Scholar]

- Cai, H.; Zhu, L.; Han, S. Proxylessnas: Direct neural architecture search on target task and hardware. arXiv preprint arXiv:1812.00332 2018. [Google Scholar]

- Xie, S.; Zheng, H.; Liu, C.; Lin, L. SNAS: stochastic neural architecture search. arXiv preprint arXiv:1812.09926, 2018. [Google Scholar]

- Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for hyper-parameter optimization. Advances in neural information processing systems 2011, 24. [Google Scholar]

- Desautels, T.; Krause, A.; Burdick, J.W. Parallelizing exploration-exploitation tradeoffs in gaussian process bandit optimization. Journal of Machine Learning Research 2014, 15, 3873–3923. [Google Scholar]

- Ginsbourger, D.; Le Riche, R.; Carraro, L. Kriging is well-suited to parallelize optimization. Computational intelligence in expensive optimization problems 2010, pp. 131–162.

- Hernández-Lobato, J.M.; Requeima, J.; Pyzer-Knapp, E.O.; Aspuru-Guzik, A. Parallel and distributed Thompson sampling for large-scale accelerated exploration of chemical space. International conference on machine learning. PMLR, 2017, pp. 1470–1479.

- Hutter, F.; Hoos, H.H.; Leyton-Brown, K. Parallel algorithm configuration. Learning and Intelligent Optimization: 6th International Conference, LION 6, Paris, France, January 16-20, 2012, Revised Selected Papers. Springer, 2012, pp. 55–70.

- Zhang, C.; Xie, Y.; Bai, H.; Yu, B.; Li, W.; Gao, Y. A survey on federated learning. Knowledge-Based Systems 2021, 216, 106775. [Google Scholar] [CrossRef]

- Nagarajah, T.; Poravi, G. A review on automated machine learning (AutoML) systems. 2019 IEEE 5th International Conference for Convergence in Technology (I2CT). IEEE, 2019, pp. 1–6.

- Thakur, A.; Krohn-Grimberghe, A. Autocompete: A framework for machine learning competition. arXiv preprint arXiv:1507.02188 2015. [Google Scholar]

- Ferreira, L.; Pilastri, A.; Martins, C.M.; Pires, P.M.; Cortez, P. A comparison of AutoML tools for machine learning, deep learning and XGBoost. 2021 International Joint Conference on Neural Networks (IJCNN). IEEE, 2021, pp. 1–8.

- Thornton, C.; Hutter, F.; Hoos, H.H.; Leyton-Brown, K. Auto-WEKA: Combined selection and hyperparameter optimization of classification algorithms. Proceedings of the 19th ACM SIGKDD international conference on Knowledge discovery and data mining, 2013, pp. 847–855.

- Kotthoff, L.; Thornton, C.; Hoos, H.H.; Hutter, F.; Leyton-Brown, K. Auto-WEKA: Automatic model selection and hyperparameter optimization in WEKA. Automated machine learning: methods, systems, challenges 2019, pp. 81–95.

- Komer, B.; Bergstra, J.; Eliasmith, C. Hyperopt-sklearn: automatic hyperparameter configuration for scikit-learn. ICML workshop on AutoML. Citeseer Austin, TX, 2014, Vol. 9, p. 50.

- Feurer, M.; Klein, A.; Eggensperger, K.; Springenberg, J.; Blum, M.; Hutter, F. Efficient and robust automated machine learning. Advances in neural information processing systems 2015, 28. [Google Scholar]

- Feurer, M.; Eggensperger, K.; Falkner, S.; Lindauer, M.; Hutter, F. Auto-sklearn 2.0: Hands-free automl via meta-learning. J Machine Learn Res 2020, 23, 1–61. [Google Scholar]

- Olson, R.S.; Moore, J.H. TPOT: A tree-based pipeline optimization tool for automating machine learning. Workshop on automatic machine learning. PMLR, 2016, pp. 66–74.

- Zimmer, L.; Lindauer, M.; Hutter, F. Auto-pytorch: Multi-fidelity metalearning for efficient and robust autodl. IEEE Transactions on Pattern Analysis and Machine Intelligence 2021, 43, 3079–3090. [Google Scholar] [CrossRef] [PubMed]

- Jin, H.; Song, Q.; Hu, X. Auto-keras: An efficient neural architecture search system. Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining, 2019, pp. 1946–1956.

- Peng, H.; Du, H.; Yu, H.; Li, Q.; Liao, J.; Fu, J. Cream of the Crop: Distilling Prioritized Paths For One-Shot Neural Architecture Search. Advances in Neural Information Processing Systems 2020, 33. [Google Scholar]

- Microsoft Research. NNI Related Publications. Online: https://nni.readthedocs.io/en/latest/notes/research_publications.html.

- Erickson, N.; Mueller, J.; Shirkov, A.; Zhang, H.; Larroy, P.; Li, M.; Smola, A. Autogluon-tabular: Robust and accurate automl for structured data. arXiv preprint arXiv:2003.06505 2020. [Google Scholar]

- Parul Pandey. A Deep Dive into H2O’s AutoML. Online: https://h2o.ai/blog/2019/a-deep-dive-into-h2os-automl/.

- Wang, C.; Wu, Q.; Weimer, M.; Zhu, E. Flaml: A fast and lightweight automl library. Proceedings of Machine Learning and Systems 2021, 3, 434–447. [Google Scholar]

- Shchur, O.; Turkmen, C.; Erickson, N.; Shen, H.; Shirkov, A.; Hu, T.; Wang, Y. AutoGluon-TimeSeries: AutoML for Probabilistic Time Series Forecasting. arXiv preprint arXiv:2308.05566 2023. [Google Scholar]

- Khider, D.; Zhu, F.; Gil, Y. autoTS: Automated machine learning for time series analysis. AGU Fall Meeting Abstracts, 2019, Vol. 2019, pp. PP43D–1637.

- Schafer, R.W. What is a Savitzky-Golay filter? [lecture notes]. IEEE Signal processing magazine 2011, 28, 111–117. [Google Scholar] [CrossRef]

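| Scaling Method | Scaled Feature | Scaling Effect | ML Algorithm/Model |
|---|---|---|---|
| Min-Max | $\tilde{x} = \frac{x - \min(x)}{\max(x) - \min(x)}$ | $\tilde{x} \in [0, 1]$ | k-Means, kNN, SVM |
| Standardization (z-score) | $\tilde{x} = \frac{x - \mu}{\sigma}$ | $\tilde{\mu} = 0$, $\tilde{\sigma} = 1$ | Linear/Logistic Regression, NN |
| l2-Normalization | $\tilde{x} = \frac{x}{\lVert x \rVert_2}$ | $\lVert \tilde{x} \rVert_2 = 1$ | Vector Space Model |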
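
The three scaling methods in the table above can be sketched in a few lines. This is a minimal illustration using the standard textbook definitions, not code from the paper; the function names are hypothetical, and the handling of constant features and zero vectors is an assumption made here to avoid division by zero.

```python
import math


def min_max_scale(values):
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Assumption: a constant feature has no spread, so map it to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    if std == 0.0:
        # Assumption: a constant feature is returned as all zeros.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def l2_normalize(vector):
    """Divide a feature vector by its Euclidean norm so that it has length 1."""
    norm = math.sqrt(sum(v * v for v in vector))
    if norm == 0.0:
        # Assumption: the zero vector is returned unchanged.
        return list(vector)
    return [v / norm for v in vector]


feature = [2.0, 4.0, 6.0, 8.0]
print(min_max_scale(feature))    # values now lie in [0, 1]
print(standardize(feature))      # mean 0, standard deviation 1
print(l2_normalize([3.0, 4.0]))  # Euclidean norm 1
```

Min-Max and standardization act on one feature across all samples, whereas l2-normalization acts on one sample across all of its features, which is why it is the usual choice for vector space models.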

| Encoding Method | Original Feature | Transformed Features | Result |
|---|---|---|---|
| Ordinal Encoding | string1, string2, ... | 1, 2, ... | Nonordinal categorical data becomes ordinal |
| One-Hot Encoding | string1, string2, ... | 001, 010, ... | k features for k categories, only one bit is "on" |
| Dummy Encoding | string1, string2, ... | 001, 010, ..., (000) | k-1 features for k categories, reference category is 0 |
| Effect Encoding | string1, string2, ... | 001, 010, ..., (-1-1-1) | k-1 features for k categories, reference category is -1 |
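
The encodings in the table above can be illustrated on a toy categorical feature. The sketch below is a hypothetical pure-Python illustration, not the paper's implementation. It assumes the last category in sorted order is the reference category, and it follows the usual formulation in which dummy and effect encoding both produce k-1 columns for k categories.

```python
def fit_categories(values):
    """Return the sorted distinct categories; the last one is the reference."""
    return sorted(set(values))


def ordinal_encode(value, categories):
    """Map a category to its 1-based position in the category list."""
    return categories.index(value) + 1


def one_hot_encode(value, categories):
    """k indicator features for k categories; exactly one of them is 1."""
    return [1 if value == c else 0 for c in categories]


def dummy_encode(value, categories):
    """k-1 indicator features; the reference category is coded as all zeros."""
    return [1 if value == c else 0 for c in categories[:-1]]


def effect_encode(value, categories):
    """Like dummy encoding, but the reference category is coded as all -1."""
    if value == categories[-1]:
        return [-1] * (len(categories) - 1)
    return dummy_encode(value, categories)


colors = ["red", "green", "blue", "green"]
cats = fit_categories(colors)  # ['blue', 'green', 'red'], reference is 'red'
for color in cats:
    print(color,
          ordinal_encode(color, cats),
          one_hot_encode(color, cats),
          dummy_encode(color, cats),
          effect_encode(color, cats))
```

Dropping one column in dummy and effect encoding removes the linear dependence among the one-hot columns, which matters for models with an intercept, such as linear regression.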

| ML Model/Algorithm | Parametric | Linear | Train, Test, Space Complexity | Paper |
|---|---|---|---|---|
| Ordinary Least Squares (OLS) | ✓ | ✓ | , , | [148] |
| Kernel Ridge Regression | ✓ | ✓ | , -, | [149] |
| Lasso Regression (LARS) | ✓ | ✓ | , -, - | [150] |
| Elastic Net | ✓ | ✓ | , -, - | [108] |
| Logistic Regression | ✓ | ✓ | , , | [151] |
| GP Regression | ✗ | ✗ | , -, | [152] |
| Multi-Layer Perceptron | ✓ | ✗ | ★ | [153,154] |
| RNN/LSTM | ✓ | ✗ | ★ | - |
| CNN | ✓ | ✗ | ★ | - |
| Transformers | ✓ | ✗ | ★ | - |
| Radial Basis Function NN | ✗ | ✗ | ★ | - |
| DNN | ✓ | ✗ | ★ | - |
| Naive Bayes Classifier | ✓ | ✓ | , , | [155] |
| Bayesian Network | ✗ | ✗ | ★ | [156] |
| Bayesian Belief Network | ✓ | ✗ | ★ | - |
| SVM | ✗ | ✓ | , , | [157] |
| PCA | ✗ | ✓ | , -, | [158] |
| kNN | ✗ | ✗ | , , | [159,160] |
| CART | ✗ | ✗ | , , | [161] |
| RF | ✗ | ✗ | , , | [162] |
| Gradient Boost Decision Tree | ✗ | ✗ | , , | [163] |
| LDA | ✓ | ✓ | , -, , | [164] |

| CV Category | Specific CV Method | Result |
|---|---|---|
| Exhaustive CV | · Leave-p-out CV | $\binom{n}{p}$ models trained ($n$ samples) |
| | · Leave-one-out CV | $n$ models trained |
| Non-Exhaustive CV | · k-fold CV | k models trained |
| | · Holdout | 1 model trained |
| | · Repeated Random Sub-Sampling Validation (a.k.a. Monte Carlo CV) | k models trained |
| Nested CV | · k*l-fold CV | $k \cdot l$ models trained |
| | · k-fold CV with validation and test set | k models trained with test set |
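
The difference in cost between exhaustive and non-exhaustive cross-validation can be made concrete by enumerating the splits. The sketch below is illustrative only: the generator names are hypothetical, folds are taken as contiguous index ranges without shuffling, and no model is actually trained. Each yielded pair corresponds to one model that would have to be fitted.

```python
from itertools import combinations
from math import comb


def leave_p_out(n, p):
    """Yield (train, validation) index lists for every validation set of size p."""
    for held_out in combinations(range(n), p):
        held = set(held_out)
        yield [i for i in range(n) if i not in held], list(held_out)


def k_fold(n, k):
    """Yield (train, validation) index lists for k contiguous, near-equal folds."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        stop = start + size
        validation = list(range(start, stop))
        train = [i for i in range(n) if i < start or i >= stop]
        yield train, validation
        start = stop


def nested_model_count(n, k, l):
    """Count inner-loop fits of k*l-fold CV: l inner folds per outer training set."""
    return sum(len(list(k_fold(len(train), l))) for train, _ in k_fold(n, k))
```

With `n = 5` samples, `leave_p_out(5, 2)` produces `comb(5, 2)` splits and `leave_p_out(5, 1)` produces 5, whereas `k_fold(n, k)` always produces exactly `k`, which is why non-exhaustive schemes are the usual choice for larger datasets.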

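| Performance Index | Formula | Purpose |
|---|---|---|
| Mean Squared Error (MSE) | $\frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2$ | Regression |
| Root Mean Squared Error (RMSE) | $\sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2}$ | Regression |
| Mean Absolute Error (MAE) | $\frac{1}{n}\sum_{i=1}^{n}\lvert y_i - \hat{y}_i \rvert$ | Regression |
| Mean Absolute Percentage Error (MAPE) | $\frac{100\%}{n}\sum_{i=1}^{n}\left\lvert \frac{y_i - \hat{y}_i}{y_i} \right\rvert$ | Regression |
| Coefficient of Determination ($R^2$) | $1 - \frac{\sum_{i=1}^{n}(y_i - \hat{y}_i)^2}{\sum_{i=1}^{n}(y_i - \bar{y})^2}$ | Regression |
| Adjusted Coefficient of Determination (A-$R^2$) | $1 - (1 - R^2)\frac{n - 1}{n - p - 1}$ ($p$ = number of predictors) | Regression |
| Confusion Matrix | TP, TN, FP, FN | Classification |
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | Classification |
| Balanced Accuracy | $\frac{1}{2}\left(\frac{TP}{TP + FN} + \frac{TN}{TN + FP}\right)$ | Classification |
| Misclassification | $\frac{FP + FN}{TP + TN + FP + FN}$ | Classification |
| F1-Score | $\frac{2\,TP}{2\,TP + FP + FN}$ | Classification |
| $F_\beta$-Score | $\frac{(1 + \beta^2)\,TP}{(1 + \beta^2)\,TP + \beta^2\,FN + FP}$ | Classification |
| Receiver Operating Characteristic (ROC) | Graphical | Classification |
| Area Under Curve (AUC) | Graphical | Classification |
| Total Operating Characteristic (TOC) | Graphical | Classification |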
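
As a worked illustration of the non-graphical indices listed above, the following sketch computes them from their standard definitions. The function names and the dictionary layout are hypothetical, and the code assumes well-behaved inputs: at least one positive and one negative case, a non-constant target, and no degenerate denominators.

```python
import math


def regression_metrics(y_true, y_pred):
    """MSE, RMSE, MAE and R^2 for paired lists of targets and predictions."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # assumed non-zero
    mse = ss_res / n
    return {
        "mse": mse,
        "rmse": math.sqrt(mse),
        "mae": sum(abs(e) for e in errors) / n,
        "r2": 1.0 - ss_res / ss_tot,
    }


def classification_metrics(tp, tn, fp, fn, beta=1.0):
    """Accuracy-type indices and F-beta from the four confusion-matrix counts."""
    total = tp + tn + fp + fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)        # true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    b2 = beta * beta
    return {
        "accuracy": (tp + tn) / total,
        "balanced_accuracy": (recall + specificity) / 2,
        "misclassification": (fp + fn) / total,
        "f_beta": (1 + b2) * precision * recall / (b2 * precision + recall),
    }
```

With the default `beta=1.0`, `f_beta` reduces to the F1-Score; larger values of `beta` weight recall more heavily than precision.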

| Method | Approach to Speed-Up | Paper |
|---|---|---|
| Lower fidelity estimates | Fewer epochs, data subsets, downscaled models/data, etc. | [215,216,220,221,222,223] |
| Learning curve extrapolation | Performance extrapolated after a few epochs | [224,225,226,227] |
| Weight inheritance/network morphisms | Models warm-started with inherited weights | [228,229,230,231,232] |
| One-Shot models/weight sharing | One-shot model’s weights shared across architectures | [233,234,235,236,237,238] |

| Software | Problem Automated | AutoML Method | Paper |
|---|---|---|---|
| Auto-WEKA | CASH | Bayesian optimization | [248] |
| Auto-WEKA 2.0 | CASH with parallel runs | Bayesian optimization | [249] |
| Hyperopt-Sklearn | Space search of random hyperparameters | Bayesian optimization | [250] |
| Auto-Sklearn | Improved CASH with algorithm ensembles | Bayesian optimization | [251,252] |
| TPOT | Classification with FE | GeP | [253] |
| Auto-Net | Automates DNN tuning | Bayesian optimization | [36] |
| Auto-Net 2.0 | Automates DNN tuning | BOHB | [36] |
| Automatic Statistician | Automates data science | Various | [36] |
| AutoPytorch | Algo. selection, ensemble constr., hyperpar. tuning | Bayesian opt., meta-learn. | [254] |
| AutoKeras | NAS, hyperpar. tuning in DNN | Bayesian opt. guides network morphism | [255] |
| NNI | NAS, hyperpar. tuning, model compression, FE | One-shot models, etc. | [256,257] |
| TPOT | Hyperpar. tuning, model selection | GeP | [253] |
| AutoGluon | Hyperpar. tuning | - | [258] |
| H2O | DE, FE, hyperpar. tuning, ensemble model selection | Random grid search, Bayesian opt. | [259] |
| FEDOT | Hyperparameter tuning | Evolutionary algorithms | - |
| Auto-Sklearn 2 | Model selection | Meta-learning, bandit strategy | [252] |
| FLAML | Algorithm selection, hyperpar. tuning | Search strategies | [260] |
| AutoGluon-TS | Ensemble constr. for time-series forecasting | Probabilistic time-series | [261] |
| AutoTS | Time-series data analysis | Various | [262] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).