Submitted: 15 December 2023

Posted: 18 December 2023


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Perceptual evaluation of dysprosody

2.2. Speech signal processing

2.3. Statistical modeling

3. Results

4. Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Schlenck, K.-J.; Bettrich, R.; Willmes, K. Aspects of Disturbed Prosody in Dysarthria. Clin. Linguist. Phon. 1993, 7, 119–128. [Google Scholar] [CrossRef]

- Sidtis, D.V.L.; Pachana, N.; Cummings, J.L.; Sidtis, J.J. Dysprosodic Speech Following Basal Ganglia Insult: Toward a Conceptual Framework for the Study of the Cerebral Representation of Prosody. Brain Lang. 2006, 97, 135–153. [Google Scholar] [CrossRef]

- Watson, P.J.; Schlauch, R.S. The Effect of Fundamental Frequency on the Intelligibility of Speech with Flattened Intonation Contours. Am. J. Speech-Lang. Pathol. 2008, 17, 348–355. [Google Scholar] [CrossRef] [PubMed]

- Klopfenstein, M. Interaction between Prosody and Intelligibility. Int J Speech-Lang Pa 2009, 11, 326–331. [Google Scholar] [CrossRef]

- Martens, H.; Nuffelen, G.V.; Dekens, T.; Huici, M.H.-D.; Hernández-Díaz, H.A.K.; Letter, M.D.; Bodt, M.S.D. The Effect of Intensive Speech Rate and Intonation Therapy on Intelligibility in Parkinson’s Disease. J. Commun. Disord. 2015, 58, 91–105. [Google Scholar] [CrossRef]

- Feenaughty, L.; Tjaden, K.; Sussman, J. Relationship between Acoustic Measures and Judgments of Intelligibility in Parkinson’s Disease: A within-Speaker Approach. Clin. Linguist. Phon. 2014, 28, 857–878. [Google Scholar] [CrossRef] [PubMed]

- Sidtis, J.J.; Sidtis, D.V.L. A Neurobehavioral Approach to Dysprosody. Semin. Speech Lang. 2003, 24, 93–105. [Google Scholar] [CrossRef]

- Sidtis, J.J. Music, Pitch Perception, and the Mechanisms of Cortical Hearing. Handb. Cogn. Neuroscience. 1984, 91–114. [Google Scholar] [CrossRef]

- Peters, A.S.; Rémi, J.; Vollmar, C.; Gonzalez-Victores, J.A.; Cunha, J.P.S.; Noachtar, S. Dysprosody during Epileptic Seizures Lateralizes to the Nondominant Hemisphere. Neurology 2011, 77, 1482–1486. [Google Scholar] [CrossRef]

- Ballard, K.J.; Azizi, L.; Duffy, J.R.; McNeil, M.R.; Halaki, M.; O’Dwyer, N.; Layfield, C.; Scholl, D.I.; Vogel, A.P.; Robin, D.A. A Predictive Model for Diagnosing Stroke-Related Apraxia of Speech. Neuropsychologia 2016, 81, 129–139. [Google Scholar] [CrossRef]

- Ballard, K.J.; Robin, D.A.; McCabe, P.; McDonald, J. A Treatment for Dysprosody in Childhood Apraxia of Speech. J. Speech Lang., Hear. Res. 2010, 53, 1227–1245. [Google Scholar] [CrossRef] [PubMed]

- Rusz, J.; Saft, C.; Schlegel, U.; Hoffman, R.; Skodda, S. Phonatory Dysfunction as a Preclinical Symptom of Huntington Disease. PLoS ONE 2014, 9, e113412. [Google Scholar] [CrossRef] [PubMed]

- Skodda, S.; Grönheit, W.; Schlegel, U. Intonation and Speech Rate in Parkinson’s Disease: General and Dynamic Aspects and Responsiveness to Levodopa Admission. J. Voice 2011, 25, e199-205. [Google Scholar] [CrossRef] [PubMed]

- Karlsson, F.; Olofsson, K.; Blomstedt, P.; Linder, J.; Doorn, J. van Pitch Variability in Patients with Parkinson’s Disease: Effects of Deep Brain Stimulation of Caudal Zona Incerta and Subthalamic Nucleus. J. Speech Lang. Hear. Res. 2013, 56, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Steurer, H.; Schalling, E.; Franzén, E.; Albrecht, F. Characterization of Mild and Moderate Dysarthria in Parkinson’s Disease: Behavioral Measures and Neural Correlates. Front Aging Neurosci 2022, 14, 870998. [Google Scholar] [CrossRef] [PubMed]

- Frota, S.; Cruz, M.; Cardoso, R.; Guimarães, I.; Ferreira, J.J.; Pinto, S.; Vigário, M. (Dys)Prosody in Parkinson’s Disease: Effects of Medication and Disease Duration on Intonation and Prosodic Phrasing. Brain Sci 2021, 11, 1100. [Google Scholar] [CrossRef] [PubMed]

- Bocklet, T.; Nöth, E.; Stemmer, G.; Ruzickova, H.; Rusz, J. Detection of Persons with Parkinson’s Disease by Acoustic, Vocal, and Prosodic Analysis; … (ASRU); IEEE, 2011; ISBN 978-1-4673-0365-1. [Google Scholar]

- MacPherson, M.K.; Huber, J.E.; Snow, D.P. The Intonation-Syntax Interface in the Speech of Individuals with Parkinson’s Disease. J. Speech Lang. Hear. Res. 2011, 54, 19–32. [Google Scholar] [CrossRef]

- Thies, T.; Mücke, D.; Lowit, A.; Kalbe, E.; Steffen, J.; Barbe, M.T. Prominence Marking in Parkinsonian Speech and Its Correlation with Motor Performance and Cognitive Abilities. Neuropsychologia 2019, 107306. [Google Scholar] [CrossRef] [PubMed]

- Mennen, I.; Schaeffler, F.; Watt, N.; Miller, N. An Autosegmental-Metrical Investigation of Intonation in People with Parkinson’s Disease. Asia Pac. J. Speech Lang. Hear. 2008, 11, 205–219. [Google Scholar] [CrossRef]

- Lowit, A.; Kuschmann, A. Characterizing Intonation Deficit in Motor Speech Disorders: An Autosegmental-Metrical Analysis of Spontaneous Speech in Hypokinetic Dysarthria, Ataxic Dysarthria, and Foreign Accent Syndrome. J. Speech Lang. Hear. Res. 2012, 55, 1472–1484. [Google Scholar] [CrossRef]

- Lowit, A.; Kuschmann, A.; Kavanagh, K. Phonological Markers of Sentence Stress in Ataxic Dysarthria and Their Relationship to Perceptual Cues. J. Commun. Disord. 2014, 50, 8–18. [Google Scholar] [CrossRef]

- Pierrehumbert, J. Tonal Elements and Their Alighment. In Prosody: Theory and Experiment; Studies Presented to Gösta Bruce; Text, Speech and Language Technology; 2000; pp. 11–36. ISBN 9789048155620. [Google Scholar]

- Tykalová, T.; Rusz, J.; Cmejla, R.; Ruzickova, H.; Ruzicka, E. Acoustic Investigation of Stress Patterns in Parkinson’s Disease. J. Voice 2013, 28, 129.e1–129.e8. [Google Scholar] [CrossRef]

- Tavi, L.; Werner, S. A Phonetic Case Study on Prosodic Variability in Suicidal Emergency Calls. Int. J. Speech Lang. Law 2020, 27, 59–74. [Google Scholar] [CrossRef]

- Tavi, L.; Penttilä, N. Functional Data Analysis of Prosodic Prominence in Parkinson’s Disease: A Pilot Study. Clin Linguist Phon. 2023. ahead-of-print. [Google Scholar] [CrossRef] [PubMed]

- Hernandez, A.; Kim, S.; Chung, M. Prosody-Based Measures for Automatic Severity Assessment of Dysarthric Speech. Appl Sci 2020, 10, 6999. [Google Scholar] [CrossRef]

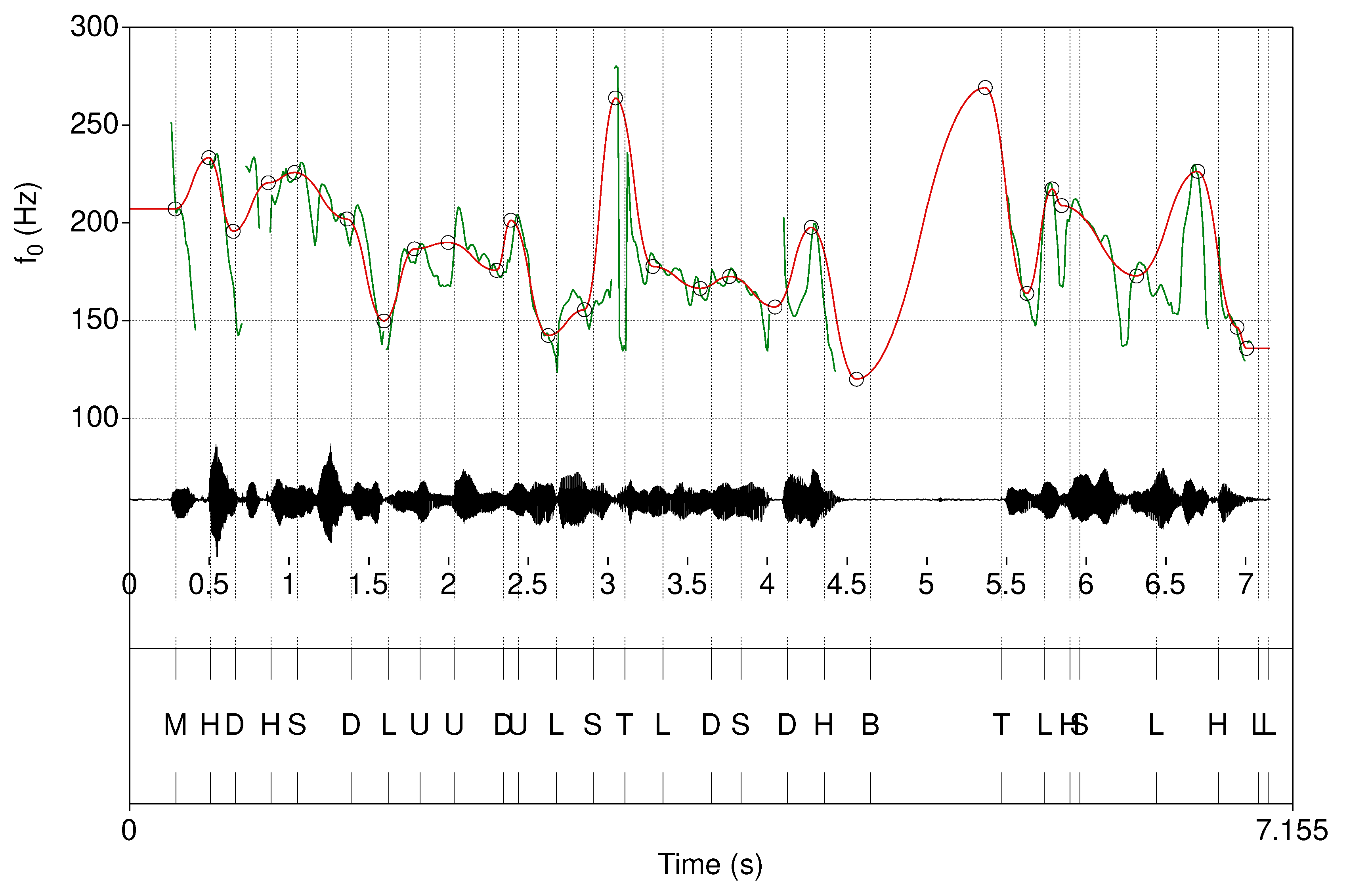

- Hirst, D.J. The Analysis by Synthesis of Speech Melody. J. Speech Sci. 2011, 1, 55–83. [Google Scholar] [CrossRef]

- Hirst, D.J. Form and Function in the Representation of Speech Prosody. Speech Commun. 2005, 46, 334–347. [Google Scholar] [CrossRef]

- Hirst, D.; di Cristo, A. Intonation Systems: A Survey of Twenty Languages; Cambridge University Press, 1998; Volume 76. [Google Scholar]

- Véronis, J.; Cristo, P.D.; Courtois, F.; Chaumette, C. A Stochastic Model of Intonation for Text-to-Speech Synthesis. Speech Commun 1998, 26, 233–244. [Google Scholar] [CrossRef]

- Hirst, D.; Cho, H.; Kim, S.; Yu, H. Evaluating Two Versions of the Momel Pitch Modelling Algorithm on a Corpus of Read Speech in Korean; 2007; pp. 1649–1652.

- Hirst, D. Melody Metrics for Prosodic Typology: Comparing English, French and Chinese. Interspeech 2013 2013, 572–576. [Google Scholar] [CrossRef]

- Celeste, L.C.; Reis, C. Formal Intonative Analysis: Intsint Applied to Portuguese. J Speech Sci 2021, 2, 3–21. [Google Scholar] [CrossRef]

- Chentir Extraction of Arabic Standard Micromelody. J Comput Sci 2009, 5, 86–89. [CrossRef]

- Hirst, D.J. A Praat Plugin for Momel and INTSINT with Improved Algorithms for Modelling and Coding Intonation; 2007; pp. 1233–1236.

- Xu, Y. ProsodyPro—A Tool for Large-Scale Systematic Prosody Analysis. In Proceedings of the Tools and Resources for the Analysis of Speech Prosody; Laboratoire Parole et Langage, France: Aix-en-Provence, France, 2013; pp. 7–10.

- Liss, J.M.M.; White, L.; Mattys, S.L.; Lansford, K.; Lotto, A.J.; Spitzer, S.M.; Caviness, J.N. Quantifying Speech Rhythm Abnormalities in the Dysarthrias. J. Speech Lang. Hear. Res. 2009, 52, 1334–1352. [Google Scholar] [CrossRef] [PubMed]

- Lavechin, M.; Métais, M.; Titeux, H.; Boissonnet, A.; Copet, J.; Rivière, M.; Bergelson, E.; Cristia, A.; Dupoux, E.; Bredin, H. Brouhaha: Multi-Task Training for Voice Activity Detection, Speech-to-Noise Ratio, and C50 Room Acoustics Estimation. Arxiv 2022. [Google Scholar] [CrossRef]

- Räsänen, O.; Seshadri, S.; Lavechin, M.; Cristia, A.; Casillas, M. ALICE: An Open-Source Tool for Automatic Measurement of Phoneme, Syllable, and Word Counts from Child-Centered Daylong Recordings. Behav Res Methods 2021, 53, 818–835. [Google Scholar] [CrossRef] [PubMed]

- Cristia, A.; Lavechin, M.; Scaff, C.; Soderstrom, M.; Rowland, C.; Räsänen, O.; Bunce, J.; Bergelson, E. A Thorough Evaluation of the Language Environment Analysis (LENA) System. Behav Res Methods 2021, 53, 467–486. [Google Scholar] [CrossRef] [PubMed]

- Bullock, L.; Bredin, H.; Garcia-Perera, L.P. Overlap-Aware Diarization: Resegmentation Using Neural End-to-End Overlapped Speech Detection. Icassp 2020 - 2020 Ieee Int Conf Acoust Speech Signal Process Icassp 2020, 00, 7114–7118. [Google Scholar] [CrossRef]

- Bredin, H.; Yin, R.; Coria, J.M.; Gelly, G.; Korshunov, P.; Lavechin, M.; Fustes, D.; Titeux, H.; Bouaziz, W.; Gill, M.-P. Pyannote.Audio: Neural Building Blocks for Speaker Diarization. Icassp 2020 - 2020 Ieee Int Conf Acoust Speech Signal Process Icassp 2020, 00, 7124–7128. [Google Scholar] [CrossRef]

- Yin, R.; Bredin, H.; Barras, C. Neural Speech Turn Segmentation and Affinity Propagation for Speaker Diarization. Interspeech 2018 2018, 1393–1397. [Google Scholar] [CrossRef]

- Goetz, C.G.; Tilley, B.C.; Shaftman, S.R.; Stebbins, G.T.; Fahn, S.; Martin, P.M.; Poewe, W.H.; Sampaio, C.; Stern, M.B.; Dodel, R.; et al. Movement Disorder Society-sponsored Revision of the Unified Parkinson’s Disease Rating Scale (MDS-UPDRS): Scale Presentation and Clinimetric Testing Results. Mov. Disord. 2008, 23, 2129–2170. [Google Scholar] [CrossRef]

- Karlsson, F.; Schalling, E.; Laakso, K.; Johansson, K.M.; Hartelius, L. Assessment of Speech Impairment in Patients with Parkinson’s Disease from Acoustic Quantifications of Oral Diadochokinetic Sequences. J. Acoust. Soc. Am. 2020, 147, 839–851. [Google Scholar] [CrossRef]

- Jolad, B.; Khanai, R. An Art of Speech Recognition: A Review. 2019 2nd Int Conf Signal Process Commun Icspc 2019, 00, 31–35. [Google Scholar] [CrossRef]

- Bhakre, S.K.; Bang, A. Emotion Recognition on the Basis of Audio Signal Using Naive Bayes Classifier. 2016 Int Conf Adv Comput Commun Inform. Icacci 2016, 2363–2367. [Google Scholar] [CrossRef]

- Sanchis, A.; Juan, A.; Vidal, E. A Word-Based Naïve Bayes Classifier for Confidence Estimation in Speech Recognition. Ieee Trans. Audio Speech Lang Process 2012, 20, 565–574. [Google Scholar] [CrossRef]

- Liu, Z.-T.; Wu, M.; Cao, W.-H.; Mao, J.-W.; Xu, J.-P.; Tan, G.-Z. Speech Emotion Recognition Based on Feature Selection and Extreme Learning Machine Decision Tree. Neurocomputing 2018, 273, 271–280. [Google Scholar] [CrossRef]

- Lavner, Y.; Ruinskiy, D. A Decision-Tree-Based Algorithm for Speech/Music Classification and Segmentation. Eurasip J Audio Speech Music Process 2009, 2009, 239892. [Google Scholar] [CrossRef]

- Noroozi, F.; Sapiński, T.; Kamińska, D.; Anbarjafari, G. Vocal-Based Emotion Recognition Using Random Forests and Decision Tree. Int J Speech Technol. 2017, 20, 239–246. [Google Scholar] [CrossRef]

- Arora, S.; Tsanas, A. Assessing Parkinson’s Disease at Scale Using Telephone-Recorded Speech: Insights from the Parkinson’s Voice Initiative. Diagnostics 2021, 11, 1892. [Google Scholar] [CrossRef] [PubMed]

- Haq, A.U.; Li, J.P.; Memon, M.H.; Khan, J.; Malik, A.; Ahmad, T.; Ali, A.; Nazir, S.; Ahad, I.; Shahid, M. Feature Selection Based on L1-Norm Support Vector Machine and Effective Recognition System for Parkinson’s Disease Using Voice Recordings. Ieee Access 2019, 7, 37718–37734. [Google Scholar] [CrossRef]

- Lahmiri, S.; Shmuel, A. Detection of Parkinson’s Disease Based on Voice Patterns Ranking and Optimized Support Vector Machine. Biomed Signal Proces 2019, 49, 427–433. [Google Scholar] [CrossRef]

- Shahbakhi, M.; Far, D.T.; Tahami, E. Speech Analysis for Diagnosis of Parkinson’s Disease Using Genetic Algorithm and Support Vector Machine. J Biomed Sci Eng 2014, 2014, 147–156. [Google Scholar] [CrossRef]

- Novotný, M.; Rusz, J.; Cmejla, R.; Ruzicka, E. Automatic Evaluation of Articulatory Disorders in Parkinson’s Disease. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1366–1378. [Google Scholar] [CrossRef]

- Karlsson, F.; Hartelius, L. How Well Does Diadochokinetic Task Performance Predict Articulatory Imprecision? Differentiating Individuals with Parkinson’s Disease from Control Subjects. Folia Phoniatr. Et Logop. 2019, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Dupuy, D.; Helbert, C.; Franco, J. DiceDesign and DiceEval : Two R Packages for Design and Analysis of Computer Experiments. J Stat Softw 2015, 65. [Google Scholar] [CrossRef]

- Chicco, D.; Jurman, G. The Advantages of the Matthews Correlation Coefficient (MCC) over F1 Score and Accuracy in Binary Classification Evaluation. Bmc Genom. 2020, 21, 6. [Google Scholar] [CrossRef] [PubMed]

- Kautz, T.; Eskofier, B.M.; Pasluosta, C.F. Generic Performance Measure for Multiclass-Classifiers. Pattern Recogn 2017, 68, 111–125. [Google Scholar] [CrossRef]

- Zien, A.; Krämer, N.; Sonnenburg, S.; Rätsch, G. Machine Learning and Knowledge Discovery in Databases, European Conference, ECML PKDD 2009, Bled, Slovenia, September 7-11, 2009, Proceedings, Part II. Lect. Notes Comput. Sci. 2009, 694–709. [Google Scholar] [CrossRef]

- Traunmüller, H.; Eriksson, A. The Perceptual Evaluation of F0 Excursions in Speech as Evidenced in Liveliness Estimations. J. Acoust. Soc. Am. 1995, 97, 1905–1915. [Google Scholar] [CrossRef] [PubMed]

- Tanaka, Y.; Tsuboi, T.; Watanabe, H.; Kajita, Y.; Fujimoto, Y.; Ohdake, R.; Yoneyama, N.; Masuda, M.; Hara, K.; Senda, J.; et al. Voice Features of Parkinson’s Disease Patients with Subthalamic Nucleus Deep Brain Stimulation. J. Neurol. 2015, 262, 1–9. [Google Scholar] [CrossRef]

- Tsuboi, T.; Watanabe, H.; Tanaka, Y.; Ohdake, R.; Yoneyama, N.; Hara, K.; Nakamura, R.; Watanabe, H.; Senda, J.; Atsuta, N.; et al. Distinct Phenotypes of Speech and Voice Disorders in Parkinson’s Disease after Subthalamic Nucleus Deep Brain Stimulation. J. Neurol. Neurosurg. Psychiatry 2014, 86, jnnp-2014-308043. [Google Scholar] [CrossRef]

- Tsuboi, T.; Watanabe, H.; Tanaka, Y.; Ohdake, R.; Yoneyama, N.; Hara, K.; Ito, M.; Hirayama, M.; Yamamoto, M.; Fujimoto, Y.; et al. Characteristic Laryngoscopic Findings in Parkinson’s Disease Patients after Subthalamic Nucleus Deep Brain Stimulation and Its Correlation with Voice Disorder. J. Neural Transm. 2015, 122, 1663–1672. [Google Scholar] [CrossRef]

- Karlsson, F.; Blomstedt, P.; Olofsson, K.; Linder, J.; Nordh, E.; Doorn, J. van Control of Phonatory Onset and Offset in Parkinson Patients Following Deep Brain Stimulation of the Subthalamic Nucleus and Caudal Zona Incerta. Park. Relat. Disord. 2012, 18, 824–827. [Google Scholar] [CrossRef] [PubMed]

- Eklund, E.; Qvist, J.; Sandström, L.; Viklund, F.; van Doorn, J.; Karlsson, F. Perceived Articulatory Precision in Patients with Parkinson’s Disease after Deep Brain Stimulation of Subthalamic Nucleus and Caudal Zona Incerta. Clin. Linguist. Phon. 2014, 29, 150–166. [Google Scholar] [CrossRef] [PubMed]

- Goberman, A.M.; Blomgren, M. Fundamental Frequency Change During Offset and Onset of Voicing in Individuals with Parkinson Disease. J. Voice 2008, 22, 178–191. [Google Scholar] [CrossRef]

- Whitfield, J.A.; Goberman, A.M. The Effect of Parkinson Disease on Voice Onset Time: Temporal Differences in Voicing Contrast. J. Acoust. Soc. Am. 2015, 137, 2432. [Google Scholar] [CrossRef]

- Goberman, A.M.; Coelho, C.; Robb, M. Phonatory Characteristics of Parkinsonian Speech before and after Morning Medication: The ON and OFF States. J. Commun. Disord. 2002, 35, 217–239. [Google Scholar] [CrossRef] [PubMed]

- Utter, A.A.; Basso, M.A. The Basal Ganglia: An Overview of Circuits and Function. Neurosci. Biobehav. Rev. 2008, 32, 333–342. [Google Scholar] [CrossRef]

- Bredin, H.; Yin, R.; Coria, J.M.; Gelly, G.; Korshunov, P.; Lavechin, M.; Fustes, D.; Titeux, H.; Bouaziz, W.; Gill, M.-P. Pyannote.Audio: Neural Building Blocks for Speaker Diarization. Arxiv 2019. [Google Scholar] [CrossRef]

- Origlia, A.; Abete, G.; Cutugno, F. A Dynamic Tonal Perception Model for Optimal Pitch Stylization. Comput. Speech Lang. 2013, 27, 190–208. [Google Scholar] [CrossRef]

| Domain | Measure name | Description |
|---|---|---|
| Time | Time diff MEAN | The mean time difference between consecutive INTSINT annotations (in ms) |
| | Time diff MEDIAN | The median time difference between consecutive INTSINT annotations (in ms) |
| | Time diff VAR | The variance of the time differences between consecutive INTSINT annotations (in ms²) |
| | Time diff MIN | The minimum time difference between consecutive INTSINT annotations (in ms) |
| | Time diff MAX | The maximum time difference between consecutive INTSINT annotations (in ms) |
| | INTSINT / s | The number of INTSINT labels per second of the utterance |
| | Duration | The utterance duration |
| Pitch | f0 diff MEAN | The mean difference in f0 between consecutive INTSINT annotations (in Hz) |
| | f0 diff MEDIAN | The median difference in f0 between consecutive INTSINT annotations (in Hz) |
| | f0 diff VAR | The variance of the f0 differences between consecutive INTSINT annotations (in Hz²) |
| | f0 diff MIN | The minimum difference in f0 between consecutive INTSINT annotations (in Hz) |
| | f0 diff MAX | The maximum difference in f0 between consecutive INTSINT annotations (in Hz) |
| | f0 COV | The coefficient of variation of f0 across the utterance |
| | f0 diff VAR / s | The variance of the f0 differences between consecutive INTSINT annotations (in Hz²), divided by the utterance duration (in s) |
| | f0 key | The pitch key of the utterance |
| | f0 range | The pitch range of the utterance |
| Amplitude | RMS COV | The coefficient of variation of RMS amplitude across the utterance |
| Intonational levels | Unique INTSINT | The number of distinct INTSINT labels encoded in the utterance |
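Most measures in the table above are simple summary statistics over the sequence of INTSINT-coded target points. As a minimal sketch, assuming each utterance is available as hypothetical parallel lists of target times (s), target f0 values (Hz) and INTSINT labels, plus frame-wise f0 and RMS tracks, they could be computed as follows. The signed (later minus earlier) differences, the sample (n - 1) variance and the SD/mean definition of the coefficient of variation are assumptions, and f0 key and f0 range are left out because their computation is not specified here.

```python
from statistics import mean, median, stdev, variance


def coefficient_of_variation(values):
    """Sample standard deviation divided by the mean (assumed definition)."""
    return stdev(values) / mean(values)


def intsint_measures(times_s, f0_hz, labels, duration_s, f0_track, rms_track):
    """Compute the table's measures (except f0 key and f0 range) for one utterance.

    Hypothetical inputs, not the authors' data format:
      times_s, f0_hz, labels: parallel lists for the INTSINT-coded target points,
                              giving time (s), f0 (Hz) and tone label
      duration_s:             utterance duration (s)
      f0_track, rms_track:    frame-wise f0 (Hz, voiced frames) and RMS values
    At least three annotations are needed for the sample variances.
    """
    # Assumed: signed differences between consecutive annotations.
    time_diffs_ms = [(t2 - t1) * 1000 for t1, t2 in zip(times_s, times_s[1:])]
    f0_diffs_hz = [f2 - f1 for f1, f2 in zip(f0_hz, f0_hz[1:])]
    return {
        "Time diff MEAN": mean(time_diffs_ms),
        "Time diff MEDIAN": median(time_diffs_ms),
        "Time diff VAR": variance(time_diffs_ms),  # ms^2, sample variance
        "Time diff MIN": min(time_diffs_ms),
        "Time diff MAX": max(time_diffs_ms),
        "INTSINT / s": len(labels) / duration_s,  # labels per second
        "Duration": duration_s,
        "f0 diff MEAN": mean(f0_diffs_hz),
        "f0 diff MEDIAN": median(f0_diffs_hz),
        "f0 diff VAR": variance(f0_diffs_hz),  # Hz^2, sample variance
        "f0 diff MIN": min(f0_diffs_hz),
        "f0 diff MAX": max(f0_diffs_hz),
        "f0 COV": coefficient_of_variation(f0_track),
        "f0 diff VAR / s": variance(f0_diffs_hz) / duration_s,
        "RMS COV": coefficient_of_variation(rms_track),
        "Unique INTSINT": len(set(labels)),
    }


# Toy utterance with four INTSINT targets (made-up values for illustration).
example = intsint_measures(
    times_s=[0.0, 0.2, 0.5, 0.9],
    f0_hz=[200.0, 240.0, 180.0, 190.0],
    labels=["M", "T", "B", "U"],
    duration_s=1.0,
    f0_track=[100.0, 200.0],
    rms_track=[0.1, 0.3],
)
for name, value in example.items():
    print(f"{name}: {value:.3f}")
```

Keeping every measure in one dictionary keyed by the table's measure names makes it straightforward to assemble one feature row per utterance for the classifiers.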

| Model name | Hyperparameter |
|---|---|
| Naive Bayes | The relative smoothness of the class boundaries |
| Decision tree | The cost complexity |
| | Maximum tree depth |
| Random forest | The number of trees |
| Support Vector Machines | The cost of predicting a sample inside of or on the wrong side of the margin |
| Penalized Ordinal Regression | The total amount of regularization |
| | The proportion of L1 and L2 penalization |

| | | Expert assessment | | | | Model assessment | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Dysprosody severity (majority rating) | Individual assessment | Rater 1 | Rater 2 | Rater 3 | Rater 4 | Naive Bayes | Decision tree | Random forest | Support Vector Machine | Penalized ordinal regression |
| No deviation | No deviation | 42 | 46 | 45 | 25 | 24 | 2 | 23 | 21 | 23 |
| | Mild deviation | 11 | 7 | 8 | 22 | 2 | 28 | 7 | 8 | 7 |
| | Moderate to severe deviation | 0 | 0 | 0 | 6 | 4 | 0 | 0 | 1 | 0 |
| Mild deviation | No deviation | 7 | 7 | 5 | 2 | 25 | 2 | 12 | 12 | 17 |
| | Mild deviation | 36 | 35 | 38 | 19 | 1 | 26 | 14 | 14 | 9 |
| | Moderate to severe deviation | 2 | 3 | 2 | 24 | 2 | 0 | 2 | 2 | 4 |
| Moderate to severe deviation | No deviation | 1 | 1 | 0 | 0 | 6 | 0 | 1 | 6 | 5 |
| | Mild deviation | 2 | 1 | 3 | 0 | 3 | 11 | 5 | 5 | 3 |
| | Moderate to severe deviation | 7 | 8 | 7 | 10 | 2 | 0 | 5 | 0 | 4 |
| Metrics | Balanced accuracy | 0.84 | 0.86 | 0.88 | 0.62 | 0.54 | 0.65 | 0.74 | 0.65 | 0.63 |
| | MCC | 0.63 | 0.69 | 0.71 | 0.30 | -0.02 | 0.25 | 0.42 | 0.21 | 0.16 |
| | F1 score | 0.79 | 0.82 | 0.83 | 0.50 | 0.39 | 0.54 | 0.65 | 0.53 | 0.51 |
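The MCC row extends the binary Matthews correlation coefficient to three severity classes. A minimal sketch, assuming the multiclass form (Gorodkin's R_K statistic) computed from a confusion matrix with the majority rating as the reference, is shown below; the function name is hypothetical. Applied to Rater 1's counts in the table it gives 0.63. The balanced accuracy and F1 rows depend on averaging choices that the table does not state, so they are not sketched here.

```python
from math import sqrt


def multiclass_mcc(confusion):
    """Multiclass Matthews correlation coefficient (Gorodkin's R_K statistic).

    confusion[i][j] = number of utterances whose reference (majority) rating
    is class i and whose assessed rating is class j.
    """
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(k))
    true_counts = [sum(row) for row in confusion]  # row sums
    pred_counts = [sum(row[j] for row in confusion) for j in range(k)]  # column sums
    cov_tp = correct * n - sum(t * p for t, p in zip(true_counts, pred_counts))
    cov_pp = n * n - sum(p * p for p in pred_counts)
    cov_tt = n * n - sum(t * t for t in true_counts)
    if cov_pp == 0 or cov_tt == 0:
        return 0.0  # undefined when one side uses a single class; 0 by convention
    return cov_tp / sqrt(cov_pp * cov_tt)


# Rater 1 counts from the table: rows = majority rating, columns = Rater 1's
# rating, both ordered as no / mild / moderate to severe deviation.
rater1 = [
    [42, 11, 0],
    [7, 36, 2],
    [1, 2, 7],
]
print(round(multiclass_mcc(rater1), 2))
```

Unlike raw accuracy, this statistic accounts for how unevenly the three severity classes are represented, which matters here because moderate to severe deviation is the rarest category.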

| Compared ratings | % Agreement | Cohen’s κ |
|---|---|---|
| Rater 1 – Rater 2 | 69 | 0.47 |
| Rater 1 – Rater 3 | 62 | 0.35 |
| Rater 1 – Rater 4 | 44 | 0.18 |
| Rater 2 – Rater 3 | 66 | 0.40 |
| Rater 2 – Rater 4 | 46 | 0.22 |
| Rater 3 – Rater 4 | 43 | 0.15 |
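Percent agreement and Cohen’s κ for a rater pair can both be computed from the two raters' labels for the same utterances. The sketch below assumes the unweighted form of κ, which gives no partial credit for adjacent severity categories; whether a weighted variant was used is not stated here, and the helper name is hypothetical.

```python
from collections import Counter


def agreement_and_kappa(ratings_a, ratings_b):
    """Return (percent agreement, unweighted Cohen's kappa) for two raters."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two equally long, non-empty rating lists")
    n = len(ratings_a)
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    # Chance agreement from each rater's marginal category proportions.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 100 * observed, float("nan")  # kappa undefined (0/0)
    kappa = (observed - expected) / (1 - expected)
    return 100 * observed, kappa


# Made-up ratings of five utterances by two raters.
rater_a = ["no", "no", "mild", "mild", "moderate-severe"]
rater_b = ["no", "mild", "mild", "mild", "moderate-severe"]
percent, kappa = agreement_and_kappa(rater_a, rater_b)
print(f"{percent:.0f} % agreement, kappa = {kappa:.2f}")
```

Because κ subtracts the agreement expected from each rater's label distribution, two pairs with similar percent agreement can still differ in κ.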

| Measure name | Variable importance |
|---|---|
| f0 diff MIN | 9.3 |
| f0 diff VAR | 7.6 |
| f0 diff MAX | 7.2 |
| f0 key | 6.1 |
| f0 COV | 4.8 |
| f0 diff VAR / s | 2.9 |
| Time diff MEAN | 2.4 |
| Time diff MEDIAN | 1.8 |
| f0 range | 0.9 |
| f0 diff MEDIAN | 0.6 |
| Time diff MAX | 0.6 |
| f0 diff MEAN | 0.3 |
| Duration | 0.1 |
| INTSINT / s | 0.1 |
| RMS COV | 0.01 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).