Submitted:

20 December 2023

Posted:

21 December 2023


Abstract

Keywords:

1. Introduction

| Ref. | Main Focus | Major Contribution | Enhancement in our paper |
|---|---|---|---|
| [1] | Analysis of vehicle detection techniques and their use in smart vehicle systems | Summarized more than 300 research contributions, compared the effectiveness of older and newer methods, and covered a variety of vehicle detection sensors. | Improve the model's generalization and robustness by enhancing and preprocessing the dataset, increasing the performance of our moving object identification system. |
| [2] | Studies of traffic flow and road safety using monocular cameras and AI | Compared five statistical and three machine learning methods using a system that builds a vehicle speed estimation dataset from monocular camera videos, and demonstrated the effectiveness of the Linear Regression Model (LRM) for real-time speed estimation with potential hardware implementation. | Investigate parallel processing and distributed computing approaches to speed up the detection process. |
| [3] | Automatic vehicle detection and tracking using deep neural networks | Presented a thorough analysis of existing Faster R-CNN and YOLO-based vehicle detection and tracking techniques: classified them chronologically by the architecture of their underlying algorithms, outlined the architectures of Faster R-CNN, YOLO, and their variants, analyzed the limitations of current work, and highlighted unexplored areas and potential future research directions. | Investigate hardware acceleration methods to speed up detection and improve our system's performance and effectiveness. |
| [4] | Crashes involving overheight vehicles | Proposed a method for automated vehicle height estimation based on deep learning and view geometry. Mask R-CNN was used for vehicle instance segmentation and for creating 3D bounding boxes. | Investigate hardware acceleration methods to speed up detection and improve our system's performance and effectiveness. |
| [5] | Monitoring human mobility change during the COVID-19 pandemic using remote-sensing images | Proposed a morphology-based vehicle detection approach that measures traffic density in various cities from high-temporal-resolution Planet multispectral images (November 2019 to September 2020, at an average interval of 7.1 days). In most of the images the approach reached a detection efficiency of 68.26%, and the general patterns in the observations matched publicly available data (lockdown period, traffic volume, etc.), demonstrating that high-temporal-resolution Planet data with worldwide coverage can provide traffic information for trend evaluation and support informed decision-making during global extreme events. | Improve the model's generalization and robustness by enhancing and preprocessing the dataset, increasing the performance of our moving object identification system. |

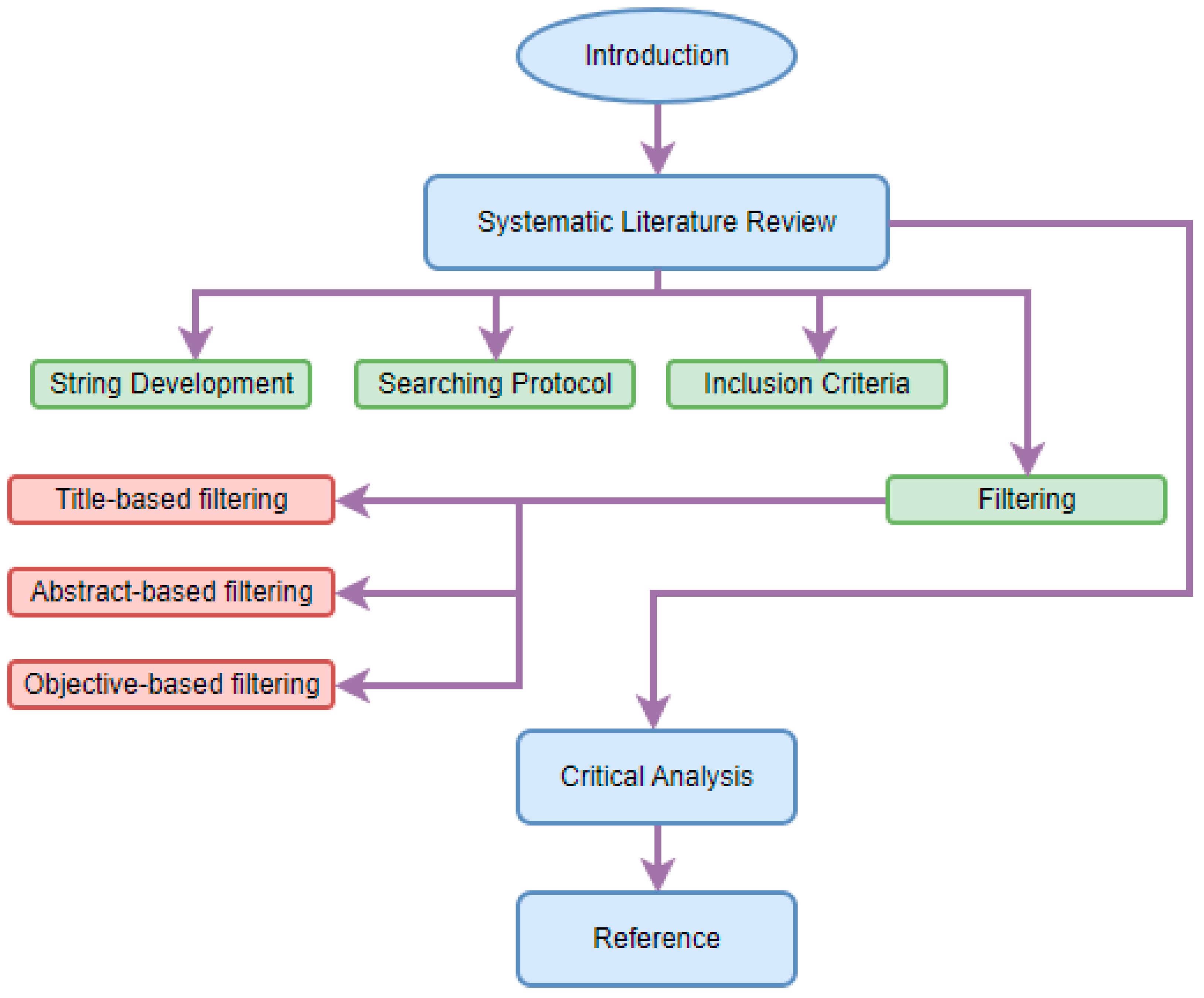

2. Systematic Literature Review

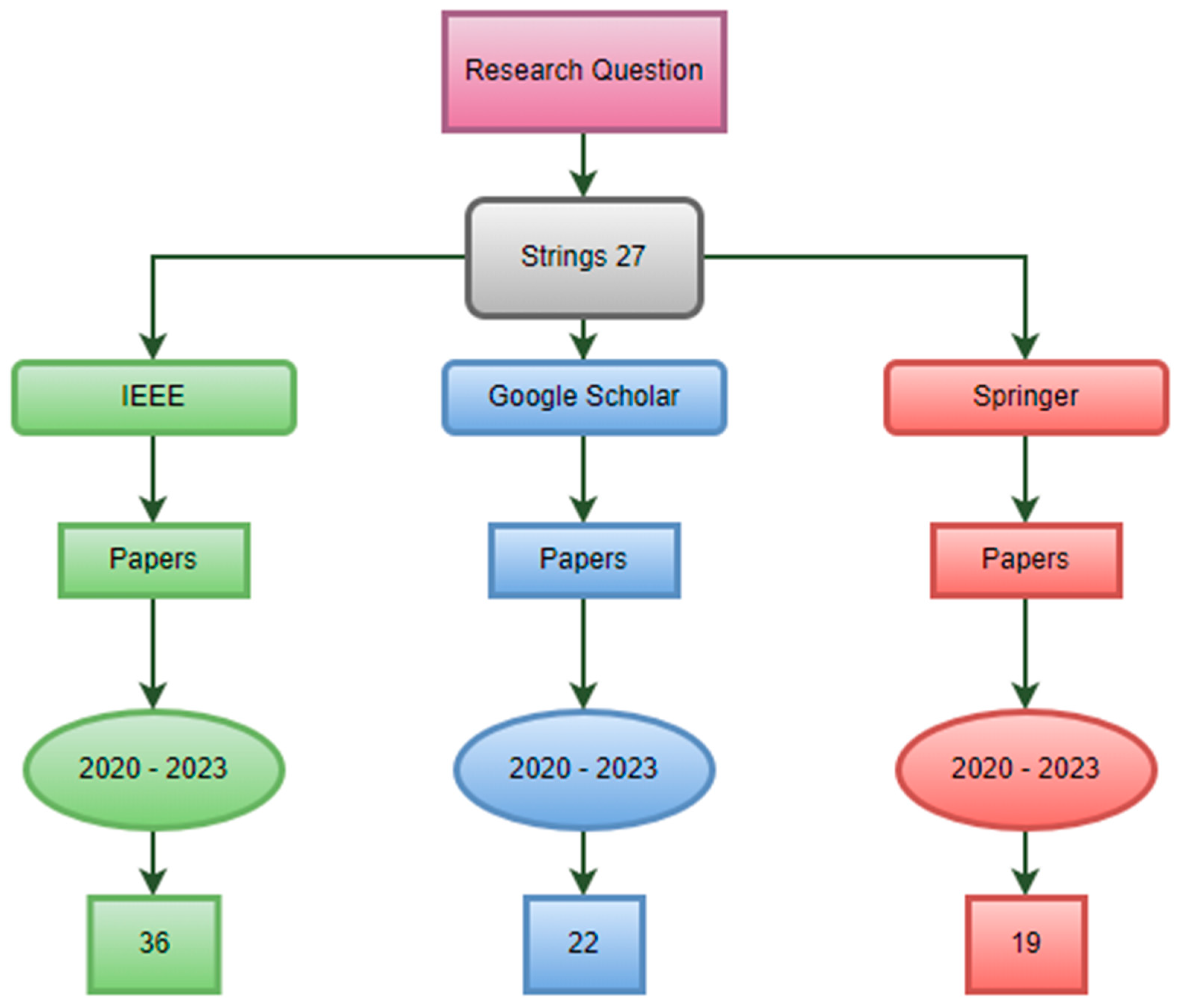

2.1. String Development

| Word | Synonym #01 | Synonym #02 | Synonym #03 |
|---|---|---|---|
| Moving Object | Vehicle | Carrier | Transport |
| Detection | Observing | Identification | Distinguishing |
| Algorithms | Design | Method | Innovation |

| Query | Search string |
|---|---|
| Query 1 | Moving Object Detection Using Deep Learning Algorithms |
| Query 2 | Vehicle Detection Using Deep Learning Algorithms |
| Query 3 | Carrier Object Detection Using Deep Learning Algorithms |
| Query 4 | Transport Detection Using Deep Learning Algorithms |
| Query 5 | Moving Object Observing Using Deep Learning Algorithms |
| Query 6 | Moving Object Identification Using Deep Learning Algorithms |
| Query 7 | Moving Object Distinguishing Using Deep Learning Algorithms |
| Query 8 | Moving Object Detection Using Deep Learning Design |
| Query 9 | Moving Object Detection Using Deep Learning Method |
| Query 10 | Moving Object Detection Using Deep Learning Innovation |
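The string development step above can be sketched in a few lines of code: each query keeps the base keywords and swaps exactly one of them for a synonym. This is only an illustration under stated assumptions. The names `TEMPLATE`, `BASE`, `SYNONYMS`, and `build_queries` are hypothetical and do not come from the review protocol, and because the sketch uses the synonym table literally, its third query reads "Carrier Detection ..." rather than "Carrier Object Detection ...".

```python
# Hypothetical sketch: derive search strings by swapping one keyword at a time.
TEMPLATE = "{obj} {det} Using Deep Learning {alg}"

# Base keywords (first column of the synonym table).
BASE = {"obj": "Moving Object", "det": "Detection", "alg": "Algorithms"}

# Synonyms for each keyword (remaining columns of the synonym table).
SYNONYMS = {
    "obj": ["Vehicle", "Carrier", "Transport"],
    "det": ["Observing", "Identification", "Distinguishing"],
    "alg": ["Design", "Method", "Innovation"],
}


def build_queries(base, synonyms, template=TEMPLATE):
    """Return the base query followed by every single-keyword substitution."""
    queries = [template.format(**base)]
    for slot, alternatives in synonyms.items():
        for alternative in alternatives:
            terms = dict(base)          # copy so the base terms stay untouched
            terms[slot] = alternative   # replace exactly one keyword
            queries.append(template.format(**terms))
    return queries


queries = build_queries(BASE, SYNONYMS)
for number, query in enumerate(queries, start=1):
    print(f"Query {number}: {query}")
```

With three keywords and three synonyms each, this yields one base query plus nine substitutions, matching the ten queries listed above.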

2.2. Searching Protocols

2.3. Inclusion Criteria

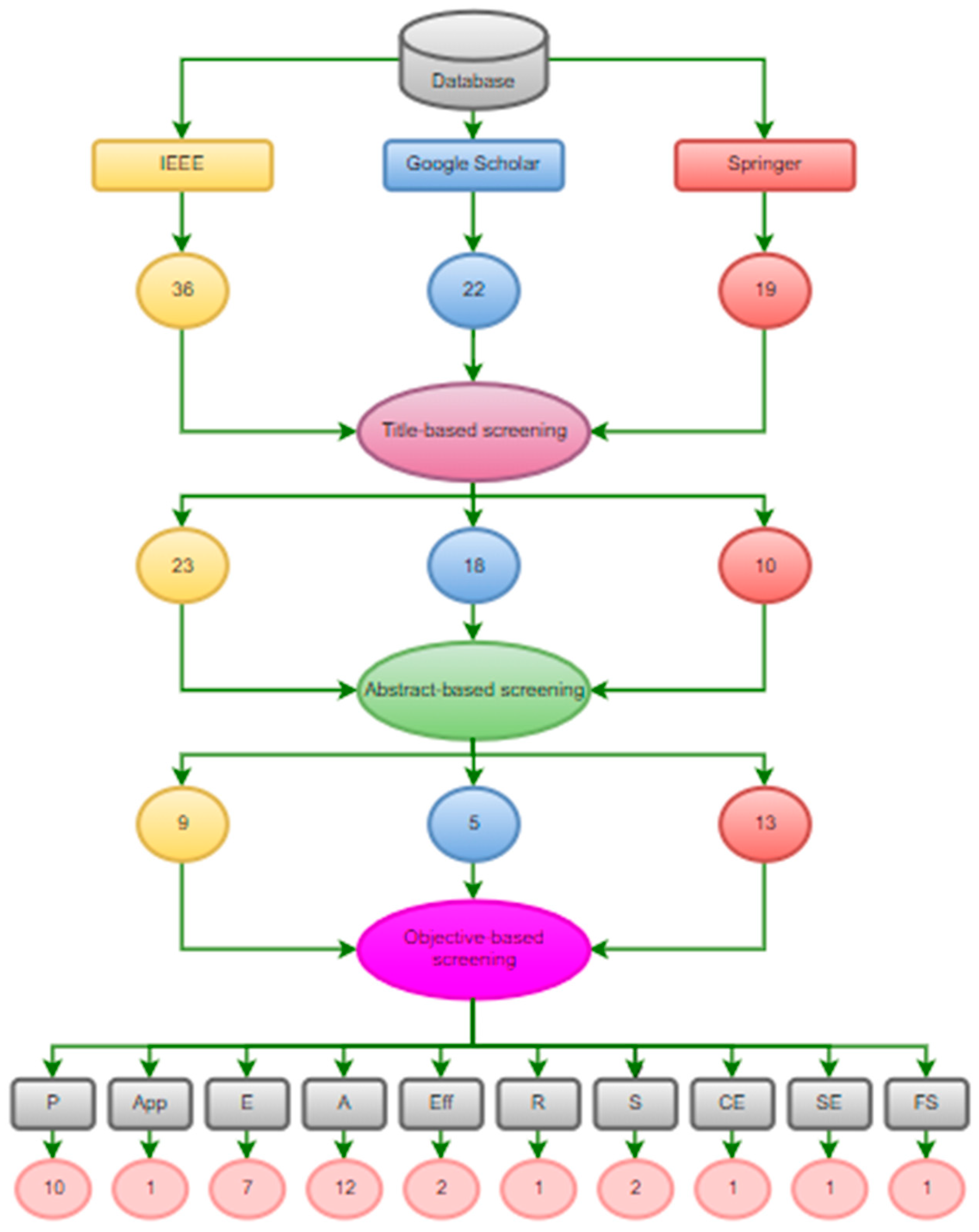

2.4. Filtering

| Acronym | Definition |
|---|---|
| P | Performance |
| App | Applicability |
| E | Efficiency |
| A | Accuracy |
| Eff | Effectiveness |
| R | Robustness |
| S | Safety |
| CE | Cost-Effectiveness |
| SE | Sensitivity |
| FS | F-Score |

| Ref. | P | App | E | A | Eff | R | S | CE | SE | FS |

|---|---|---|---|---|---|---|---|---|---|---|

| [1] | ✓ | ✓ | – | – | – | – | – | – | – | – |

| [6] | – | – | ✓ | ✓ | – | – | – | – | – | – |

| [3] | ✓ | – | – | – | – | – | – | – | – | – |

| [7] | – | – | ✓ | ✓ | ✓ | – | – | – | – | – |

| [8] | ✓ | – | – | ✓ | – | – | – | – | – | – |

| [14] | ✓ | – | – | ✓ | – | ✓ | – | – | – | – |

| [15] | – | – | – | – | – | – | ✓ | – | – | – |

| [16] | – | – | – | ✓ | – | – | – | – | – | – |

| [9] | ✓ | – | – | – | – | – | – | – | – | – |

| [17] | – | – | ✓ | ✓ | – | – | – | – | – | – |

| [18] | ✓ | – | – | ✓ | – | – | – | – | – | – |

| [10] | – | – | – | ✓ | – | – | – | – | – | – |

| [11] | – | – | ✓ | ✓ | – | – | – | ✓ | – | – |

| [19] | ✓ | – | ✓ | ✓ | – | – | – | – | – | – |

| [20] | ✓ | – | ✓ | ✓ | – | – | ✓ | – | ✓ | ✓ |

| [12] | ✓ | – | ✓ | ✓ | – | – | – | – | – | – |

| [21] | ✓ | – | – | – | ✓ | – | – | – | – | – |
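Three of the criteria in the table above (accuracy, sensitivity, and F-score) have standard definitions in terms of true and false detections. The sketch below assumes the usual confusion-matrix formulas; the reviewed papers may compute these metrics differently (for example, per class or at a fixed IoU threshold), and the function name and the sample counts are hypothetical.

```python
def detection_metrics(tp: int, fp: int, fn: int, tn: int = 0) -> dict:
    """Compute accuracy, precision, sensitivity (recall), and F-score.

    tp/fp/fn/tn are counts of true positives, false positives,
    false negatives, and true negatives. Ratios with a zero
    denominator are reported as 0.0.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    denominator = precision + sensitivity
    f_score = 2 * precision * sensitivity / denominator if denominator else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "sensitivity": sensitivity,
        "f_score": f_score,
    }


# Hypothetical counts, chosen only to illustrate the call.
metrics = detection_metrics(tp=8, fp=2, fn=2, tn=8)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```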

| Ref. | Technique | Methodology |
|---|---|---|
| [1] | YOLO | To identify the 2-D object bounding boxes in the colour image, DM, and RM, three YOLO-based object detectors were run independently on each mode. The detection results were then combined using the evaluation function and non-maximum suppression. |
| [6] | YOLOv3, DeepSORT | YOLOv3 uses a larger network, known as Darknet-53 because it has 53 convolutional layers. It combines the successive convolutional layers of Darknet-19, the network used in YOLOv2, with newer residual network components. The paper combines a fast, enhanced DeepSORT MOT algorithm with appearance information of object candidates from object-detection CNNs to extract motion features and compute trajectories on a lab workstation. DeepSORT, a real-time MOT method, is used for tracking, state estimation, and frame-by-frame data association. |
| [3] | YOLO, R-CNN | This article provides a thorough analysis of current vehicle detection and tracking techniques based on YOLO and Faster R-CNN, concentrating on vehicle detection with deep neural networks. |
| [7] | YOLOv4, DeepSORT | DeepSORT uses deep learning to reduce the large number of identity switches and to track more effectively through the occlusions that affect the SORT algorithm. This vehicle detection and tracking technique uses a YOLOv4-based DeepSORT implementation built on the TensorFlow library. |
| [8] | CNN | CNNs learn basic low-level vehicle features in the first layers and more intricate intermediate and high-level feature representations in the deeper layers, which are used to classify the vehicles. Their learnable parameters are the weights and biases. |
| [14] | YOLOv3-tiny | Based on YOLOv3-tiny. First, a second detection layer is created and added to the original YOLOv3-tiny network to detect small vehicles. Second, a set of side-way blocks is added to extract and combine features across several layers. |
| [15] | YOLOv3 | This article addresses the object detection problem and seeks to improve it using the YOLOv3 approach. |
| [16] | YOLO, R-CNN | The study compares the accuracy achieved when training with YOLOv4 and Faster R-CNN. Datasets of photographs taken in various weather conditions, terrain conditions, and times of day are assembled for the training and testing phases. |
| [9] | YOLOv3, SSD, R-CNN | Fast, high-performance object detection algorithms (Faster R-CNN, YOLOv3, YOLOv3-tiny, and SSD) are used to analyze images captured by UAVs and identify cars. The positions of moving vehicles in traffic are determined automatically. |
| [17] | YOLOv3-tiny | This research proposes a YOLOv3-tiny-based deep learning approach for target vehicle detection. For feature extraction, YOLOv3 uses the Darknet-53 convolutional network. |
| [18] | Z10TIRM, YOLOv4 | The Z10TIRM camera can record images in the infrared range as well as in the traditional RGB format. The YOLOv4 model was refined to have 148 layers, 94 of which are convolutional. A few so-called route layers were modified, restoring the neural network's prior connectivity. |
| [10] | SVM, YOLOv3 | By grouping only perceptually similar images, HOG generalizes well compared with several commonly used representations. This yields an SVM decision function that can accurately distinguish object from non-object models. Compared with CNN and its variants, YOLOv3 is a more adaptive algorithm in terms of performance. |
| [11] | UAV, PSD, DNN | The proposed UAV detection and identification process uses the RF signals sent from the UAV to the controller. The type of UAV is determined by comparing the measured PSD with trained PSD models. If the PSD matches one of the trained models, the UAV type is identified; if not, the spectrum is recorded in the database as an unidentified PSD type for later human labelling as training data for a new PSD model. After training a DNN, its learned parameters and network topology serve as the prediction model; obtaining these parameters is the purpose of the training. |
| [19] | YOLO, DeepSORT | The recorded video is passed to a YOLO-based deep learning framework that detects, tracks, and classifies the vehicles. YOLO detects and counts all objects in the video frames, and its output is passed to the DeepSORT tracker to track the objects across frames. All types of roadside objects were counted successfully using YOLOv3 and DeepSORT. |
| [20] | YOLOv3, SVM | To distinguish between environmental categories, the non-leaf nodes of the decision tree are supplemented by an SVM classifier. YOLOv3 and SPEED are compared on classification accuracy for ambulances, buses, fire trucks, conventional automobiles, and police cars. |
| [12] | FE-CNN, TensorFlow deep learning framework | By determining the ideal number of convolution kernels, the weights and biases of the neural network are optimised during CNN training. As a result, FE-CNN reaches the recognition precision of GoogLeNet in less time. The model outperforms GoogLeNet because its precision is stable. Based on the generated computation graph, TensorFlow locates all the nodes that depend on E. |
| [21] | R-CNN, SSD | Both Faster R-CNN and SSD produce multiple detections in some scenes, meaning the same object is picked up by both models at the same time, which produces inaccurate results. |
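Several of the reviewed approaches rely on non-maximum suppression (NMS) to merge overlapping detections, as in the fusion step described for [1], and duplicate detections are the problem noted for [21]. The following is a minimal sketch of the standard greedy NMS procedure, not the implementation used in any reviewed paper. The box format `(x1, y1, x2, y2)`, the 0.5 IoU threshold, and the sample detections are assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold=0.5):
    """Greedy NMS over a list of (box, score) pairs.

    Detections are visited from highest to lowest score; a detection is
    kept only if it does not overlap an already kept box by more than
    iou_threshold.
    """
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) <= iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Hypothetical detections: the first two boxes cover the same vehicle.
detections = [
    ((10, 10, 50, 50), 0.9),
    ((12, 12, 52, 52), 0.8),
    ((100, 100, 140, 140), 0.7),
]
kept = nms(detections)
for box, score in kept:
    print(box, score)
```

In this example the second box overlaps the first with an IoU of roughly 0.82, so it is suppressed and two detections remain.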

3. Detailed Literature Review

4. Performance Analysis

4.1. Critical analysis

| Ref. | Techniques | Shortcoming |
|---|---|---|
| [1] | YOLO | The two main drawbacks of this form of YOLO are high localization error and lower recall compared to two-stage object detectors [22]. |
| [6] | YOLOv3, DeepSORT | YOLOv3's speed and accuracy are lower than RetinaNet's [23]. DeepSORT has numerous flaws, including ID switches, poor handling of occlusion, and motion blur, among others [24]. |
| [3] | YOLO, R-CNN | High localization error and lower recall are the primary downsides of this version of YOLO [22]. Training R-CNN is costly in both money and time, and detection is rather slow [25]. |
| [7] | YOLOv4, DeepSORT | Compared to Faster R-CNN, YOLOv4 has lower recall and higher localization error. Having only two proposed bounding boxes per grid cell makes it difficult to recognize nearby objects [26]. DeepSORT has numerous flaws, including ID switches, poor handling of occlusion, and motion blur, among others [24]. |
| [8] | CNN | CNNs do not take the location and orientation of the object into account when making predictions [27]. |
| [14] | YOLOv3-tiny | YOLOv3's speed and accuracy are lower than RetinaNet's [23]. |
| [15] | YOLOv3 | YOLOv3's speed and accuracy are lower than RetinaNet's [23]. |
| [16] | YOLO, R-CNN | The two main drawbacks of this form of YOLO are high localization error and lower recall compared to two-stage object detectors [22]. Training R-CNN is costly in both money and time, and detection is rather slow [25]. |
| [9] | YOLOv3, SSD, R-CNN | YOLOv3's speed and accuracy are lower than RetinaNet's [23]. SSD may not generate high-level features, performs worse on small objects, and needs more training data [28]. Training R-CNN is costly in both money and time, and detection is rather slow [25]. |
| [17] | YOLOv3-tiny | YOLOv3's speed and accuracy are lower than RetinaNet's [23]. |
| [18] | YOLOv4 | Compared to Faster R-CNN, YOLOv4 has lower recall and higher localization error. Having only two proposed bounding boxes per grid cell makes it difficult to recognize nearby objects [26]. |
| [10] | SVM, YOLOv3 | The SVM algorithm is not well suited to large datasets, and it performs worse when the dataset is noisier [29]. YOLOv3's speed and accuracy are lower than RetinaNet's [23]. |
| [11] | UAV, PSD, DNN | The UAV technique raises security and privacy issues [30]. PSD can be challenging to interpret, requires a stationary signal, and reacts slowly to windowing [31]. Because of the vanishing gradient problem, which lengthens training time and reduces accuracy, deep neural networks are difficult to train with backpropagation [32]. |
| [19] | YOLO, DeepSORT | The two main drawbacks of this form of YOLO are high localization error and lower recall compared to two-stage object detectors [22]. DeepSORT has numerous flaws, including ID switches, poor handling of occlusion, and motion blur, among others [24]. |
| [20] | YOLOv3, SVM | YOLOv3's speed and accuracy are lower than RetinaNet's [23]. The SVM algorithm is not well suited to large datasets, and it performs worse when the dataset is noisier [29]. |
| [12] | FE-CNN, TensorFlow deep learning framework | The drawback of pixel-based techniques is the impact of significant pixel intensity changes on the final image [33]. TensorFlow has no Windows support and lacks symbolic loops [34]. |
| [21] | R-CNN, SSD | Training R-CNN is costly in both money and time, and detection is rather slow [25]. SSD may not generate high-level features, performs worse on small objects, and needs more training data [28]. |

| Ref. | Research Gaps | Solution |
|---|---|---|
| [1] [3] [7] [16] [18] [19] | High localization error | YOLO performance has improved considerably with better training methods. A variety of training methods can be applied to object detection networks, including image fusion with geometry-preserved alignment, a cosine learning rate scheduler, synchronized batch normalization, data augmentation, and label smoothing [35]. |
| [6] [14] [15] [9] [17] [10] [20] | Low speed and accuracy | To speed up the YOLOv3 algorithm, output layers can be dropped. With this discarding strategy the extra computation is removed, and 59 convolutional layers are used to find large-scale objects in a feed-forward fashion [36]. |
| [6] [7] [19] | Numerous flaws of DeepSORT | Newer algorithms can address these flaws. FairMOT and CenterTrack are two recent methods that can drastically reduce ID switches and handle occlusions efficiently. Other options are to test other hypotheses, use different YOLOv5 model variants, or use DeepSORT with a custom object detector [24]. |
| [3] [16] [9] [21] | Costly training and slow detection | The MobileNet architecture can be used to build the base R-CNN network. The duplicate proposal problem can be addressed by applying the Soft-NMS method after the region proposal network [37]. |
| [9] [10] [11] [20] | Low performance and need for more training data | Increasing the input image resolution can address SSD's low performance [38]. |
| [11] | Security and privacy issues | Using blockchain technology, UAVs can communicate securely with one another. This requires building a secure and reliable UAV ad hoc network as well as smart contracts [39]. |
| [12] | Intensity changes | High-pass filters detect abrupt changes in intensity across a defined area. A high-pass filter therefore highlights areas within a small patch of pixels where the intensity changes from light to dark pixels (and vice versa) [40]. |
| [11] [12] | No Windows support and missing symbolic loops | Symbolic tensors can be executed in a while loop without preventing eager execution [41]. |
| [8] | Object location and orientation not considered | A feature map can be created by computing, at each position of the input tensor, the element-wise product between the kernel and the input and summing it into the corresponding position of the output tensor [42]. |
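Two of the training methods named in the first row of the table above, the cosine learning rate scheduler and label smoothing, are simple enough to show directly. The sketch below uses their commonly cited formulas and is not taken from the reviewed papers or from [35]. The function names, the learning-rate range, and the smoothing factor of 0.1 are assumptions chosen for illustration.

```python
import math


def cosine_lr(step: int, total_steps: int, lr_max: float, lr_min: float = 0.0) -> float:
    """Cosine learning rate schedule: decays from lr_max to lr_min.

    lr(step) = lr_min + 0.5 * (lr_max - lr_min) * (1 + cos(pi * step / total_steps))
    """
    progress = step / total_steps
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


def smooth_labels(true_class: int, num_classes: int, epsilon: float = 0.1) -> list:
    """Label smoothing: mix a one-hot target with a uniform distribution.

    The true class receives (1 - epsilon) + epsilon / num_classes and
    every other class receives epsilon / num_classes.
    """
    uniform_share = epsilon / num_classes
    return [
        (1.0 - epsilon) + uniform_share if index == true_class else uniform_share
        for index in range(num_classes)
    ]


# Hypothetical settings: 100 training steps, peak learning rate 0.1.
for step in (0, 50, 100):
    print(step, round(cosine_lr(step, 100, 0.1), 4))

# Hypothetical 4-class problem where class 1 is the ground truth.
print(smooth_labels(true_class=1, num_classes=4))
```

The schedule starts at the peak rate and decays smoothly to the minimum, while the smoothed target keeps most of the probability mass on the true class and spreads the remainder evenly, which discourages over-confident predictions.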

Dataset

| Ref. | Dataset description |
|---|---|
| [15] | MSCOCO, BDD100K, and self-generated image datasets for capturing vehicles |
| [14] | FLIR dataset |
| [43] | Own dataset constructed from the Anuj Shah Custom, Peltarion Custom, and Hemil Patel Custom datasets |
| [9] | Images taken from a UAV dataset |
| [16] | Images and recordings taken from a UAV dataset |
| [10] | Two well-known detection datasets, KITTI and GTI |
| [44] | Dataset collected from videos of emergency vehicles on YouTube |
| [45] | A Kaggle dataset was used for both training and testing |

Conclusion

References

- Z. Wang, J. Zhan, C. Duan, X. Guan, P. Lu, and K. Yang, “A Review of Vehicle Detection Techniques for Intelligent Vehicles,” IEEE Transactions on Neural Networks and Learning Systems, pp. 1–21, 2022 . [CrossRef]

- H. Rodríguez-Rangel, L. A. Morales-Rosales, R. Imperial-Rojo, M. A. Roman-Garay, G. E. Peralta-Peñuñuri, and M. Lobato-Báez, “Analysis of Statistical and Artificial Intelligence Algorithms for Real-Time Speed Estimation Based on Vehicle Detection with YOLO,” Applied Sciences, vol. 12, no. 6, Art. no. 6, Jan. 2022. [CrossRef]

- M. Maity, S. Banerjee, and S. Sinha Chaudhuri, “Faster R-CNN and YOLO based Vehicle detection: A Survey,” in 2021 5th International Conference on Computing Methodologies and Communication (ICCMC), Apr. 2021, pp. 1442–1447. [CrossRef]

- Lu, “Automated visual surveying of vehicle heights to help measure the risk of overheight collisions using deep learning and view geometry,” Computer-Aided Civil and Infrastructure Engineering, 2023. https://onlinelibrary.wiley.com/doi/full/10.1111/mice.12842 (accessed Feb. 24, 2023).

- Y. Chen, R. Qin, G. Zhang, and H. Albanwan, “Spatial Temporal Analysis of Traffic Patterns during the COVID-19 Epidemic by Vehicle Detection Using Planet Remote-Sensing Satellite Images,” Remote Sensing, vol. 13, no. 2, Art. no. 2, Jan. 2021. [CrossRef]

- C. Chen, B. Liu, S. Wan, P. Qiao, and Q. Pei, “An Edge Traffic Flow Detection Scheme Based on Deep Learning in an Intelligent Transportation System,” IEEE Transactions on Intelligent Transportation Systems, vol. 22, no. 3, pp. 1840–1852, Mar. 2021. [CrossRef]

- M. A. Bin Zuraimi and F. H. Kamaru Zaman, “Vehicle Detection and Tracking using YOLO and DeepSORT,” in 2021 IEEE 11th IEEE Symposium on Computer Applications & Industrial Electronics (ISCAIE), Apr. 2021, pp. 23–29. [CrossRef]

- A. Bouguettaya, H. Zarzour, A. Kechida, and A. M. Taberkit, “Vehicle Detection From UAV Imagery With Deep Learning: A Review,” IEEE Transactions on Neural Networks and Learning Systems, vol. 33, no. 11, pp. 6047–6067, Nov. 2022. [CrossRef]

- M. Böyük, R. Duvar, and O. Urhan, “Deep Learning Based Vehicle Detection with Images Taken from Unmanned Air Vehicle,” in 2020 Innovations in Intelligent Systems and Applications Conference (ASYU), Oct. 2020, pp. 1–4. [CrossRef]

- A. S. Abdullahi Madey, A. Yahyaoui, and J. Rasheed, “Object Detection in Video by Detecting Vehicles Using Machine Learning and Deep Learning Approaches,” in 2021 International Conference on Forthcoming Networks and Sustainability in AIoT Era (FoNeS-AIoT), Dec. 2021, pp. 62–65. [CrossRef]

- H. Liu, “Unmanned Aerial Vehicle Detection and Identification Using Deep Learning,” in 2021 International Wireless Communications and Mobile Computing (IWCMC), Jun. 2021, pp. 514–518. [CrossRef]

- “Deep learning-based algorithm for vehicle detection in intelligent transportation systems,” SpringerLink. https://link.springer.com/article/10.1007/s11227-021-03712-9 (accessed Feb. 28, 2023).

- Husain, “Vehicle detection in intelligent transport system under a hazy environment: a survey,” IET Image Processing, 2020. https://ietresearch.onlinelibrary.wiley.com/doi/full/10.1049/iet-ipr.2018.5351# (accessed May 21, 2023).

- Y. Lu, Q. Yang, J. Han, and C. Zheng, “A Robust Vehicle Detection Method in Thermal Images Based on Deep Learning,” in 2021 IEEE International Conference on Power, Intelligent Computing and Systems (ICPICS), Jul. 2021, pp. 386–390. [CrossRef]

- Z.-H. Huang, C.-M. Wang, W.-C. Wu, and W.-S. Jhang, “Application of Vehicle Detection Based On Deep Learning in Headlight Control,” in 2020 International Symposium on Computer, Consumer and Control (IS3C), Nov. 2020, pp. 178–180. [CrossRef]

- E. Bayhan, Z. Ozkan, M. Namdar, and A. Basgumus, “Deep Learning Based Object Detection and Recognition of Unmanned Aerial Vehicles,” in 2021 3rd International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Jun. 2021, pp. 1–5. [CrossRef]

- L. Li and Y. Liang, “Deep Learning Target Vehicle Detection Method Based on YOLOv3-tiny,” in 2021 IEEE 4th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Jun. 2021, pp. 1575–1579. [CrossRef]

- M. Kiac, K. Říha, J. Přinosil, and J. Mrnuštík, “Object Detection in Unmanned Aerial Vehicle Camera Stream Using Deep Neural Network,” in 2022 14th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT), Oct. 2022, pp. 80–84. [CrossRef]

- R. Kejriwal, R. H J, A. Arora, and Mohana, “Vehicle Detection and Counting using Deep Learning based YOLO and Deep SORT Algorithm for Urban Traffic Management System,” in 2022 First International Conference on Electrical, Electronics, Information and Communication Technologies (ICEEICT), Feb. 2022, pp. 1–6. [CrossRef]

- “An intelligent traffic detection approach for vehicles on highway using pattern recognition and deep learning,” SpringerLink. https://link.springer.com/article/10.1007/s00500-022-07375-3 (accessed Feb. 28, 2023).

- “Vehicle detection and traffic density estimation using ensemble of deep learning models,” SpringerLink. https://link.springer.com/article/10.1007/s11042-022-13659-5 (accessed Feb. 28, 2023).

- T. Diwan, G. Anirudh, and J. V. Tembhurne, “Object detection using YOLO: challenges, architectural successors, datasets and applications,” Multimed Tools Appl, vol. 82, no. 6, pp. 9243–9275, Mar. 2023. [CrossRef]

- V. Meel, “YOLOv3: Real-Time Object Detection Algorithm (Guide),” viso.ai, Jan. 02, 2022. https://viso.ai/deep-learning/yolov3-overview/ (accessed Mar. 24, 2023) .

- “Understanding Multiple Object Tracking using DeepSORT,” Jun. 21, 2022. https://learnopencv.com/understanding-multiple-object-tracking-using-deepsort/ (accessed Mar. 25, 2023) .

- A. Mohan, “Review on Fast RCNN,” Medium, May 22, 2020. https://medium.datadriveninvestor.com/review-on-fast-rcnn-202c9eadd23b (accessed Mar. 24, 2023) .

- madrasresearchorg, “YOLOV4: OPTIMAL SPEED AND ACCURACY OF OBJECT DETECTION,” MSRF|NGO, Oct. 15, 2021. https://www.madrasresearch.org/post/yolov4-optimal-speed-and-accuracy-of-object-detection (accessed Mar. 25, 2023) .

- “Drawbacks of Convolutional Neural Networks.” https://www.linkedin.com/pulse/drawbacks-convolutional-neural-networks-sakhawat-h-sumit (accessed Mar. 25, 2023) .

- H. Gao, “Understand Single Shot MultiBox Detector (SSD) and Implement It in Pytorch,” Medium, Jul. 19, 2018. https://medium.com/@smallfishbigsea/understand-ssd-and-implement-your-own-caa3232cd6ad (accessed Mar. 26, 2023) .

- A. Raj, “Everything About Support Vector Classification — Above and Beyond,” Medium, Aug. 06, 2022. https://towardsdatascience.com/everything-about-svm-classification-above-and-beyond-cc665bfd993e (accessed Mar. 26, 2023) .

- S. A. H. Mohsan, N. Q. H. Othman, Y. Li, M. H. Alsharif, and M. A. Khan, “Unmanned aerial vehicles (UAVs): practical aspects, applications, open challenges, security issues, and future trends,” Intel Serv Robotics, vol. 16, no. 1, pp. 109–137, Mar. 2023. [CrossRef]

- “OpenStax | Free Textbooks Online with No Catch.” https://openstax.org/ (accessed Mar. 26, 2023) .

- “Deep Neural Network - an overview | ScienceDirect Topics.” https://www.sciencedirect.com/topics/computer-science/deep-neural-network (accessed Mar. 26, 2023) .

- “Image Fusion - an overview | ScienceDirect Topics.” https://www.sciencedirect.com/topics/computer-science/image-fusion (accessed Mar. 28, 2023) .

- “Advantages and Disadvantages of TensorFlow - Javatpoint,” www.javatpoint.com. https://www.javatpoint.com/advantage-and-disadvantage-of-tensorflow (accessed Mar. 28, 2023) .

- “How to Improve YOLOv3,” Paperspace Blog, Jun. 29, 2020. https://blog.paperspace.com/improving-yolo/ (accessed May 10, 2023) .

- I. Martinez-Alpiste, G. Golcarenarenji, Q. Wang, and J. M. Alcaraz-Calero, “A dynamic discarding technique to increase speed and preserve accuracy for YOLOv3,” Neural Comput & Applic, vol. 33, no. 16, pp. 9961–9973, Aug. 2021. [CrossRef]

- “Improving Faster R-CNN Framework for Fast Vehicle Detection.” https://www.hindawi.com/journals/mpe/2019/3808064/#conclusions (accessed May 16, 2023) .

- E. Forson, “Understanding SSD MultiBox — Real-Time Object Detection In Deep Learning,” Towards Data Science. https://towardsdatascience.com/understanding-ssd-multibox-real-time-object-detection-in-deep-learning-495ef744fab (accessed May 17, 2023).

- H. Sachdeva, S. Gupta, A. Misra, K. Chauhan, and M. Dave, “Improving Privacy and Security in Unmanned Aerial Vehicles Network using Blockchain.” arXiv, Jun. 27, 2022. [CrossRef]

- A. Anand, “Introduction to CNN,” DataX Journal, Jul. 21, 2020. https://medium.com/data-science-community-srm/introduction-to-cnn-f3aaa8d79891 (accessed May 21, 2023) .

- “Eager execution prevents using while loops on Keras symbolic tensors · Issue #31848 · tensorflow/tensorflow,” GitHub. https://github.com/tensorflow/tensorflow/issues/31848 (accessed May 21, 2023) .

- R. Yamashita, M. Nishio, R. K. G. Do, and K. Togashi, “Convolutional neural networks: an overview and application in radiology,” Insights Imaging, vol. 9, no. 4, Art. no. 4, Aug. 2018. [CrossRef]

- M. H. Khan, M. Z. Hussein Sk Heerah, and Z. Basgeeth, “Automated Detection of Multi-class Vehicle Exterior Damages using Deep Learning,” in 2021 International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Oct. 2021, pp. 01–06. [CrossRef]

- S. Sathruhan, O. K. Herath, T. Sivakumar, and A. Thibbotuwawa, “Emergency Vehicle Detection using Vehicle Sound Classification: A Deep Learning Approach,” in 2022 6th SLAAI International Conference on Artificial Intelligence (SLAAI-ICAI), Dec. 2022, pp. 1–6. [CrossRef]

- O. G. Abdulateef, A. I. Abdullah, S. R. Ahmed, and M. S. Mahdi, “Vehicle License Plate Detection Using Deep Learning,” in 2022 International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Oct. 2022, pp. 288–292. [CrossRef]

- Shahid, H., Ashraf, H., Javed, H., Humayun, M., Jhanjhi, N. Z., & AlZain, M. A. (2021). Energy optimised security against wormhole attack in iot-based wireless sensor networks. Comput. Mater. Contin, 68(2), 1967-81 .

- Wassan, S., Chen, X., Shen, T., Waqar, M., & Jhanjhi, N. Z. (2021). Amazon product sentiment analysis using machine learning techniques. Revista Argentina de Clínica Psicológica, 30(1), 695 .

- Almusaylim, Z. A., Zaman, N., & Jung, L. T. (2018, August). Proposing a data privacy aware protocol for roadside accident video reporting service using 5G in Vehicular Cloud Networks Environment. In 2018 4th International conference on computer and information sciences (ICCOINS) (pp. 1-5). IEEE .

- Ghosh, G., Verma, S., Jhanjhi, N. Z., & Talib, M. N. (2020, December). Secure surveillance system using chaotic image encryption technique. In IOP conference series: materials science and engineering (Vol. 993, No. 1, p. 012062). IOP Publishing .

- Humayun, M., Alsaqer, M. S., & Jhanjhi, N. (2022). Energy optimization for smart cities using iot. Applied Artificial Intelligence, 36(1), 2037255 .

- Hussain, S. J., Ahmed, U., Liaquat, H., Mir, S., Jhanjhi, N. Z., & Humayun, M. (2019, April). IMIAD: intelligent malware identification for android platform. In 2019 International Conference on Computer and Information Sciences (ICCIS) (pp. 1-6). IEEE .

- Diwaker, C., Tomar, P., Solanki, A., Nayyar, A., Jhanjhi, N. Z., Abdullah, A., & Supramaniam, M. (2019). A new model for predicting component-based software reliability using soft computing. IEEE Access, 7, 147191-147203 .

- Gaur, L., Afaq, A., Solanki, A., Singh, G., Sharma, S., Jhanjhi, N. Z., ... & Le, D. N. (2021). Capitalizing on big data and revolutionary 5G technology: Extracting and visualizing ratings and reviews of global chain hotels. Computers and Electrical Engineering, 95, 107374 .

- Nanglia, S., Ahmad, M., Khan, F. A., & Jhanjhi, N. Z. (2022). An enhanced Predictive heterogeneous ensemble model for breast cancer prediction. Biomedical Signal Processing and Control, 72, 103279 .

- Kumar, T., Pandey, B., Mussavi, S. H. A., & Zaman, N. (2015). CTHS based energy efficient thermal aware image ALU design on FPGA. Wireless Personal Communications, 85, 671-696 .

- Gaur, L., Singh, G., Solanki, A., Jhanjhi, N. Z., Bhatia, U., Sharma, S., ... & Kim, W. (2021). Disposition of youth in predicting sustainable development goals using the neuro-fuzzy and random forest algorithms. Human-Centric Computing and Information Sciences, 11, NA .

- Lim, M., Abdullah, A., & Jhanjhi, N. Z. (2021). Performance optimization of criminal network hidden link prediction model with deep reinforcement learning. Journal of King Saud University-Computer and Information Sciences, 33(10), 1202-1210 .

- Adeyemo Victor Elijah, Azween Abdullah, NZ JhanJhi, Mahadevan Supramaniam and Balogun Abdullateef O, “Ensemble and Deep-Learning Methods for Two-Class and Multi-Attack Anomaly Intrusion Detection: An Empirical Study” International Journal of Advanced Computer Science and Applications(IJACSA), 10(9), 2019 . [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).