1. Introduction

One of today’s major challenges of geodetic data analysis is to detect geometric changes of objects or areas which are subject to displacements and/or deformations, such as man-made structures like dams, dikes, bridges, wind turbines or high towers as well as natural Earth structures like volcanos, mining area, or tectonic plates. The analysis of monitoring measurement can be categorized into four deformation models: congruence model, kinematic model, static model and dynamic model. The congruence model describes the deformations by means of displacement vectors without specifying the time and any factor related to the acting forces, internal and external loads as well. The kinematic models describe the geometric changes in terms of temporal variations (velocities and accelerations), considering that the state of the object is permanently in motion, but also there are no concerns with the causes of the deformations. If there is interest in investigating the functional relationship between causative forces and geometrical reactions of the object, then the static model will be more suitable. In latter, the deformations are described from physical properties of the object (e.g., expansion coefficients, temperature, and lengths), so that the temporal aspects are not explicit in the model. Finally, the dynamic model is a combination of static and kinematic models, i.e., deformations are linked to their influencing factors (causative forces, internal and external loads) and the object’s physical properties [

1]. Due to its common usage, we restrict ourselves to the congruence model which only tells us whether the object has moved or not.

In the congruence analysis, the structure under investigation is often monitored by a geodetic network which is measured in at least two epochs in time, and these epochal measurements are then analysed statistically. The geodetic network works as a displacement monitoring system. The statistical test is one of the most widely used approaches to the specification of deformation congruence models [

2,

3,

4,

5,

6,

7]. The robust approach is another one also widely used and has been had important advances in recent years [

5,

8,

9,

10], but it is not part of the scope of this work. Typically, the input data is a vector composed of the differences between the coordinates of points estimated by least squares at an initial epoch (say, Epoch I) and a current epoch (say, Epoch 2). The null hypothesis, denoted by

, is formulated under the condition that all points are stable (points which do have a congruent/rigid geometrical structure at both considered epochs). On the other hand, the alternative hypotheses are stipulated from the assumption that there is at least one unstable point. As it is not known which point or group of points are unstable, a consecutive hypothesis test is often applied to identify one unstable point after the other [

11]. Such a test procedure is similar to the outlier screening by iterative data-snooping [

12]. However, the iterative consecutive hypothesis tests are non-rigorous because the alternative hypotheses are restricted to only one single unstable point [

11]. The point which is flagged as most suspected to be unstable in a given step is not then inspected in the next iteration step. In addition, the result of a misidentification in a given iteration conditions the result of the next iteration [

13]. The weakness of the iterative consecutive hypothesis tests for the case where multiple displacements are in play has been reported by several authors [

13,

14,

15,

16].

To overcome the problem of iterative procedures, non-iterative combinatorial procedure emerges for the case where all possible combinations of displaced points are considered [

11,

17,

18]. Such procedure consists of comparing all possible candidates for stable points at the same stage, and consequently it is not necessary to consecutively point-by-point specify the congruence model. This method has been applied in some numerical examples and discussed in detail by Velsink [

17,

18]. Velsink proposed the ratio of the test statistic and its critical value as a decision rule. The point or group of points with the largest ratio is flagged as unstable, if it exceeds the critical value. Another interesting combinatorial method is discussed by Lehmann and Lösler [

11]. They use various information criteria, and then select from among all possible candidate models the one which provide the lowest information criterion value as the best model. The idea of using information criteria is outside the scope of the work but will be considered in future works.

Unfortunately,

combinatorial-only method is often done from the set of different-dimensional models [

13]. The comparison between models of different dimensions is complicated in this case. For example, the more points are modeled as unstable (higher dimension of congruence model – more complex is the model), the larger the occurrence of overfitting (a model is always better fitted to observations with a larger number of parameters). Model complexity can be circumvented by applying penalties. As highlighted by Nowel [

13], the goodness of model fit and a penalty term constitutes an identification criterion. However, he warns there are many criteria, and it is not yet clear which of these to adopt. Nowel used the possibilities of combinatorics and generalized likelihood ratio tests performed in an iterative step to overcome the weaknesses related to the both iterative consecutive point-by-point model specification and

combinatorial-only method. Although there has been substantial progress in this new combinatorics field, there are still challenges that open up new research perspectives [

19,

20,

21,

22,

23,

24,

25,

26]. In this contribution, we present an alternative and sophisticated method that integrates combinatorial analysis and likelihood ratio tests in a sequential procedure, which we call Sequential Likelihood Ratio Tests for Unstable Points Identification – SLRTUPI. The procedure is an extension of the sequential method for detecting multiple outliers proposed by Klein et al. [

27].

Here, the method makes use of differences of the observations from two epochs instead of estimated coordinates, as proposed by some authors [

9]. The idea of using unadjusted (original) observation differences has been around for some time, as can be seen in [

28,

29]. When adopting the differences of observations as a vector of observations in the Gauss-Markov model, for example, we do not need to concern about the problem of defining the datum of the epochs and applications of the S-transformation [

30,

31,

32,

33]. The effect of the network geometry is eliminated [

34]. On the other hand, we must guarantee that the campaigns are always carried out with the same occupation of the points in order to be able to compare measurements between epochs – epoch-wise measurements.

Erdogan et al. [

32] presented a methodology for identifying unstable points based on this approach of analyzing the differences between measurements taken in two epochs, which they called the univariate approach. As a result, we do not also need to concern about whether it is a linear geodetic network (e.g., levelling or GNSS vector network) or non-linear (e.g., trilateration), since the univariate model is always linear. Linearization of a nonlinear model may reduce the detection power [

35]. In addition, the univariate approach has the benefit of reducing the smearing and masking effect of displacements. Smearing means that one unstable point makes another stable point appear as an unstable and masking means that one unstable point prevents another one from being identified.

However, Erdogan et al. [

32] do not control for false positive rates (Type I Error rate – detect displacements when in reality there are none). This is because they generally consider that the test involves only a single alternative hypothesis, when in fact they are multiple tests, i.e., it involves multiple alternative hypotheses. Consequently, an approach that allows controlling the Type I Error is needed. Here, for example, we use a Monte Carlo method so that the user-defined Type I Error rate is efficiently controlled [

36]. Furthermore, the works cited above do not make it clear how to choose the maximum number of points to be modeled as unstable (displaced). This choice is somewhat arbitrary. To avoid a subjective choice, the maximum number of possible points to be inspected as displaced (unstable) is based on the rank computation of the design matrices constructed for all possible combinations of points modeled as unstable, as well as on the statistical overlap analysis (i.e., when it is not possible to distinguish one model from another, since the computed test statistics present the same values, and therefore identification cannot occur).

The next part of the paper is organized as follows.

Section 2 describes the univariate model under null and alternative hypothesis for the case where the point or group of points to be tested is a priori specified. In this section, a trilateration network is presented, which is the object of study throughout the entire paper. In addition, we present the matrix that makes the connection between the network points and the measurements and its conversion to the displacement-design matrix that captures the sign of the differences between the observations from the two epochs in time. This section ends with the test statistic derived from the maximum likelihood ratio test concept for the case where there is only one single alternative hypothesis. In section 3, we describe the proposed SLTRUPI method step-by-step. We provide a Monte Carlo-based procedure for controlling the false positive rates in subsection 3.1. And in the last subsection 3.2., we present the procedure to determine the maximum number of points allowed for identification via SLRTUPI, and its application in some numerical examples.

Section 4 is devoted to experiments and results based on computer simulations and real dataset to demonstrate the reliability and performance of SLRTUPI in several displacement pattern scenarios. In that section, we also describe the success and failure rates classes associated with SLRTUPI for the case of having both individually and mutually (simultaneously) unstable points. Section 6 highlights the contributions from the study.

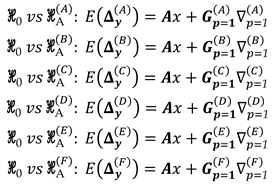

2. Null and Alternative Hypothesis

Let's start by describing the univariate model for identifying unstable points in geodetic networks by the following equations [

32,

34]:

where

are the vectors of geodetic measurements,

the unknowable true quantity vectors of measurand, and

the unknown vectors of measurement errors (note: the indices ‘1’ and ‘2’ inside the curly braces represent the quantities related to the first and second epoch in time, respectively).

By subtracting the Epoch 2 equation from the Epoch 1 equation, the univariate model can be formulated as a linear Gauss-Markov model, as follows:

with

being the vector of the two-epoch geodetic observations differences,

the unknown vector of errors of the two-epoch geodetic observations differences,

the Jacobian matrix (also called the design matrix) of full rank

, which in this case is a column vector of ones (i.e.,

) , and

the estimable unknown parameter, which in this case is a scalar that represents the unknown true difference between the two epochs. It is important to highlight that now our vector of observations corresponds to the differences of the geodetic measurements at two epochs in time. Furthermore, these differences are computed from the identical observations of each epoch.

After having defined the univariate model according to Eq. (2), we now need to resort to hypothesis testing theory to infer whether a subset of point fields measured in two epochs is stable/congruent or not. Typically, in displacement detection, the model under null hypothesis, denoted by

, is set up for the case where all points to be analyzed are treated as stable points. In other words, the null hypothesis states that the null model in Eq. (2) explains the observations. Assuming normally distributed observation errors with expectation zero, i.e.:

the null hypothesis

of the standard Gauss–Markov model in the linear form of the Eq. (2) is then given by:

with

the expectation operator,

the dispersion operator, and

the positive-definite variance matrix of

. The variance matrix

is obtained by applying the variance propagation law to the

, which is the result of the sum of the variance matrices of the geodetic observations from Epoch 1

and Epoch 2

.

When the null model in Eq. (4) is assumed to be true, the scalar parameter

can be estimated by simple least-squares calculus as:

and the estimated observation errors as being:

where

is the known matrix of weights, taken as

, where

is the priori variance factor. The overall degrees of freedom

(redundancy) of the model under

is given by:

where

is the estimated variance matrix of the observation errors.

On the other hand, an alternative model can be proposed when there are doubts about the stability of points in the network. Here, we restrict ourselves – for simplicity of the analyses – measurements not contaminated by outliers. Thus, for the case of univariate approach, the model under the alternative hypothesis, denoted by

, is to oppose Eq. (4) by an extended model that includes an extra parameter

which describes the disturbance on the measurements in function of the displacement of a subset “

” of network points, as follows:

with

being the matrix which captures the relationship between the displaced points and the changes in the measurements connected to them (hereafter, this matrix will be called displacement-design matrix). If the alternative model in Eq. (9) holds true, then the unknown parameters will be obtained as:

The redundancy of the model under

is

, with the estimated observation errors

and estimated variance matrix of the observation errors

under alternative hypothesis

given respectively by:

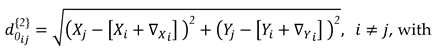

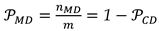

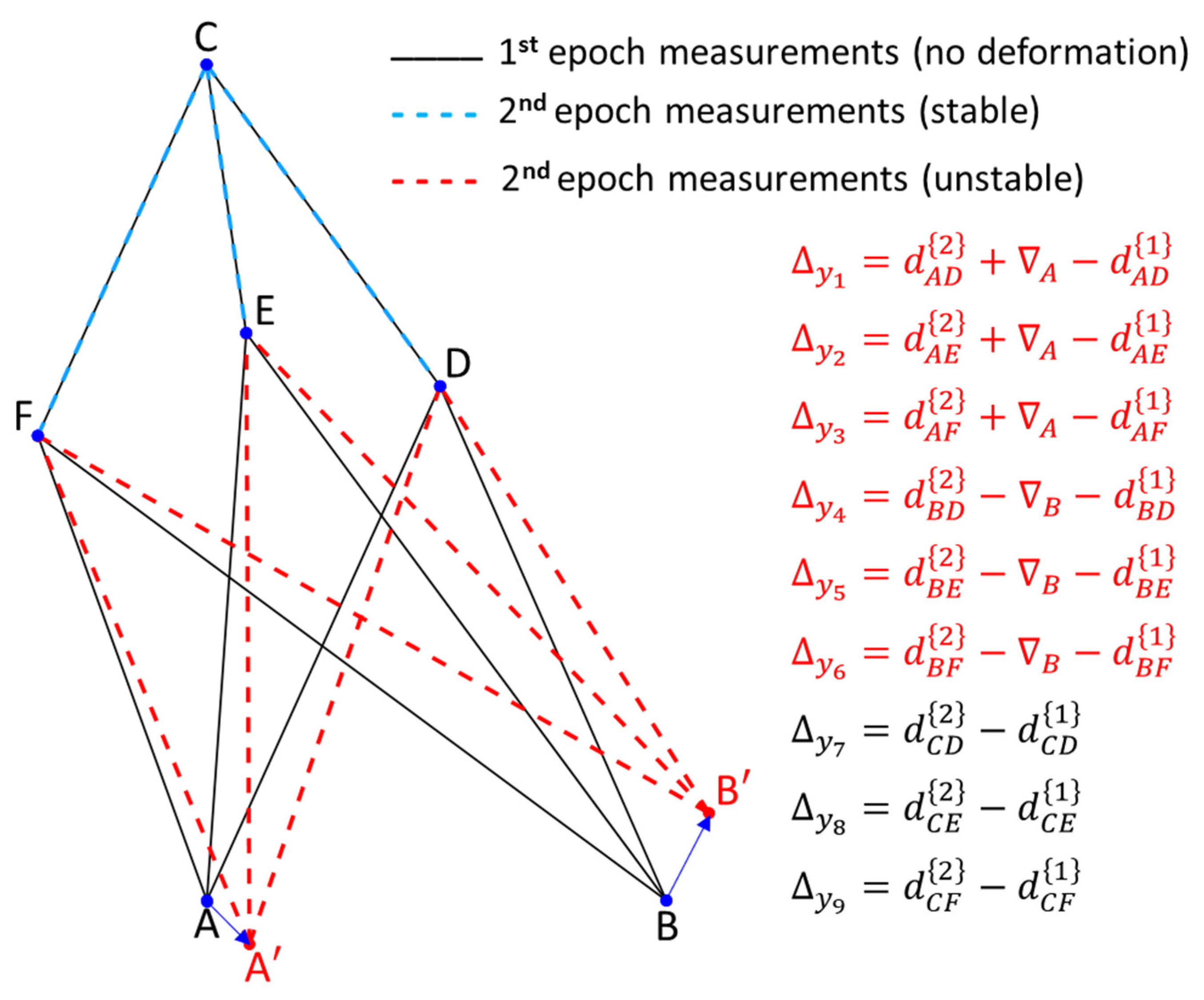

As a simple example, an alternative hypothesis may then be formulated for the case of having a specific point as displaced (

), as can be seen in the illustration of a trilateration network in

Figure 1. It is presumed that only point F has been moved to a new position F’. Consequently, this causes changes only to the observations connected to it. This means that such distances measured in the second epoch undergo deformations in the sense of stretching and/or compressing in relation to their initial states, whereas the other ones remain stable. The initial state refers to the first epoch the measurements were gathered, so that all network points are assumed to be free of displacements. For point F, for example, the

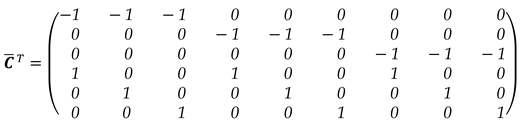

matrix is described as:

The sign assigned to the elements of displacement-design matrix

depends on the sign of the two-epoch geodetic observations differences. A positive sign means that at epoch 2 the measure was positively distorted and therefore the parameter acts positively (

). The same occurs for the case in which the measure is reduced in relation to its initial state and, therefore, the parameter acts with a negative sign (

). In this example (

Figure 1), the shifted point F to its new position F’ makes the AF' segment smaller than its initial state AF. Consequently, the disturbance parameter on that measurement in function of the displacement of the F point acts with negative sign (

). The same occurs for BF, i.e., BF' segment gets smaller than the BF segment (

), while for the case of CF sign is positive, since CF' segment gets larger than CF (

).

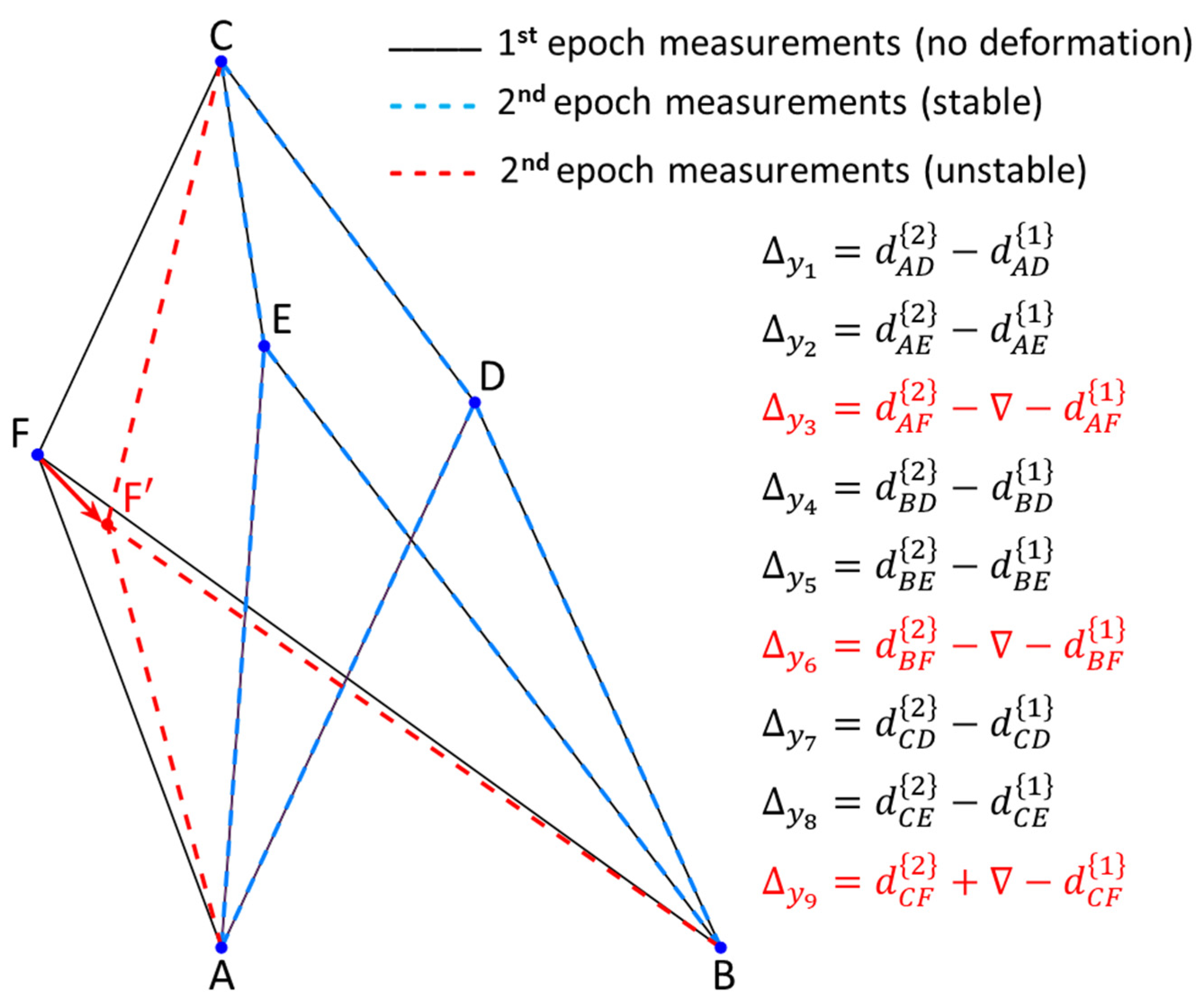

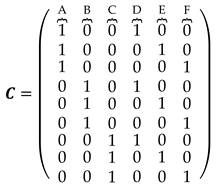

The displacement-design matrix

in expression (10) has been constructed for the case of assuming only one single displaced point, which in our example was point F. In that case, the unknown parameter vector

has become a scalar

, and the displacement-design matrix

has reduced to a unit vector, whose elements are exclusively formed by values of 0, 1 and -1, where 1 or -1 means that the ith parameter of magnitude

or

, respectively, affects the measurements connected to the point, and 0 means otherwise. However, as given by Eq. (9), we can design an overall displacement-design matrix

, so that each column represents the displacement of a given point. For this, we first provide an auxiliary matrix

which describes the general case of the relationship between the measures (matrix rows) and the network points (matrix columns), but without taking into account the sign of the unknown estimable vector

. We call this matrix of

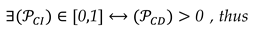

Point-to-Measurement Connection Matrix, which for the network in Figure (1) is given by:

Then, the signs of the coefficients of the displacement-design matrix

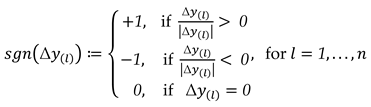

can be obtained generically from the application of a signum function as:

where

is the sign function and

gives a non-negativity value (absolute value) for each two-epoch geodetic observation differences. The result of Eq. (15) can be stacked into a vector as

. Thus, the displacement-design matrix for the general case becomes:

where

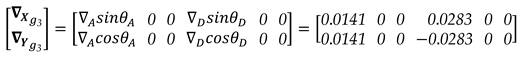

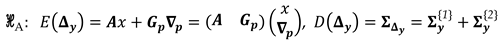

is the diagonalize operator which convert a vector to a diagonal matrix. Consequently, the alternative hypothesis is now given by:

The displacement-design matrix

can be developed in several ways, depending on which point, or group of points will be chosen to be monitored. Taking another example in

Figure 2, if

and

, then a possible displacement-design matrix

would be

and

for the case where the point A and B were considered simultaneously as displaced points. It is important to note that in this example the first and second columns of the matrix

were selected, which refer to points A and B, respectively.

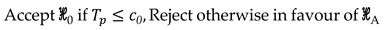

After postulating the null and alternative hypotheses, the test statistic derived from the concept of the maximum likelihood ratio test are computed as [

37]:

Then, a test decision is then performed as

Under the null hypothesis , the test statistic follows the central chi-squared distribution with degrees of freedom; under the alternative hypothesis , the test statistic follows the non-central chi-squared distribution with degrees of freedom and non-centrality parameter . If the test statistic is larger than a critical value (), then a particular combination of tested points is flagged as displaced, where is the chi-squared critical value at a given significance level with degrees of freedom, i.e., . The probability level defines the size of a test and is often called the “false alarm probability”. Thus, the null model is assessed by specifying a significance level . [Note: the subscript "0" means that the test is performed for the case of having only one single alternative hypothesis. Thus, the non-centrality parameter and the significance level with the subscript "0" means that it is a single test involving only one single alternative hypothesis. In that case, rejecting the null hypothesis automatically consists of accepting the alternative hypothesis, and therefore, detection is the same as identification.]

In addition, the hypothesis test-based approach presented here is based on the mean-shift model: the alternative model is formulated under the condition that the displacement of the point (or a subset of network points) acts as a systematic effect by shifting the random error distribution under

by its own value. This means that a displacement can cause a shift of the expectation under

to a nonzero value. Therefore, hypothesis testing is often employed to check whether the possible shifting of the random error distribution under

is, in fact, a systematic effect (bias) coming from a possible deformation or merely a random effect due to measurement uncertainties [

38].

The alternative model in Eq. (17) has been formulated under the assumption that a specific group of points has undergone displacement. This group of points can be formulated for the cases where we have a single only one point displaced (

Figure 1) or a subset of such points of the geodetic network (

Figure 2). In other words, the number of points to be monitored and their locations on the network have been fully specified. This is a special case of testing the null hypothesis against only one single alternative hypothesis, and therefore, the rejection of the null model automatically implies the acceptance of the alternative model and vice versa.

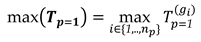

3. Sequential Likelihood Ratio Tests for Unstable Points Identification – SLRTUPI

One of the questions that arises is how to define the number of points to be monitored and their locations. Clearly, geodesists need to work in an interdisciplinary way with other professional/areas so that an alternative model may be properly formulated [

18]. However, even with such information, we may still have doubts whether there are points that a priori were assumed to be stable, whereas in fact they may be shifting. From a practical point of view, therefore, we often do not know well how many and which points are or are not stable. Here, all geodetic network points are subjected to be inspected, regardless of whether they are located on structure or not. Thus, a more conservative alternative hypothesis would be to assume that there is at least one point that is moving among all points involved in the geodetic network. Consequently, we would have

alternative models for

to be tested against the null hypothesis, i.e.:

Note that

represents the ith column vector of the displacement-design matrix

. For the case of the network illustrated in

Figure 1 and

Figure 2, we would then have 6 alternative hypotheses, since we have a total of

points, so that

This means that we would have to test

against

, i.e.:

We are now dealing with testing involving multiple hypotheses. Now, we are interested in knowing which of the alternative hypotheses may lead to the rejection of the null hypothesis with a certain probability. To answer this, the test statistic in Eq. (18) is now computed for each of such tests

, so that we would have a vector of test statistics, denoted by

. In that case, the test statistic coming into effect is the maximum test value, which is computed as

The decision rule for this case is given by:

The decision rule in (23) states that if none of the tests get rejected, then we accept the null hypothesis . If the is larger than some percentile of its probability distribution (i.e., some critical value ), then there is evidence that there is a displaced point in the structure. In this case, we can only assume that detection occurred. The identification, however, is not straightforward. The identification (or localization) of the displaced point consists of seeking the point that produced the maximum test statistic , and whose value is greater than some critical value . Thus, point identification only happens when point detection necessarily exists, i.e., “point identification” only occurs when the null hypothesis is rejected. This means that the correct detection does not necessarily imply correct identification. For instance, we may correctly detect the occurrence of deformation, but wrongly identify a point as displaced, while in fact it is another point that has changed. The description of the success and failure rates will be covered in the next sections.

It is important to highlight that the maximum test statistic, now generally denoted by

, is treated directly as a test statistic. Thus, the distribution of

cannot be derived from well-known test distributions (e.g., Chi-squared distribution). Therefore, critical values cannot be taken from a statistical table but must be computed numerically. Here, the critical value

is computed by Monte Carlo such that a user-defined Type I decision error rate “

” for the proposed procedure is warranted [

39]. In the next section, we provide details on how to compute the critical value

for the proposed sequential procedure.

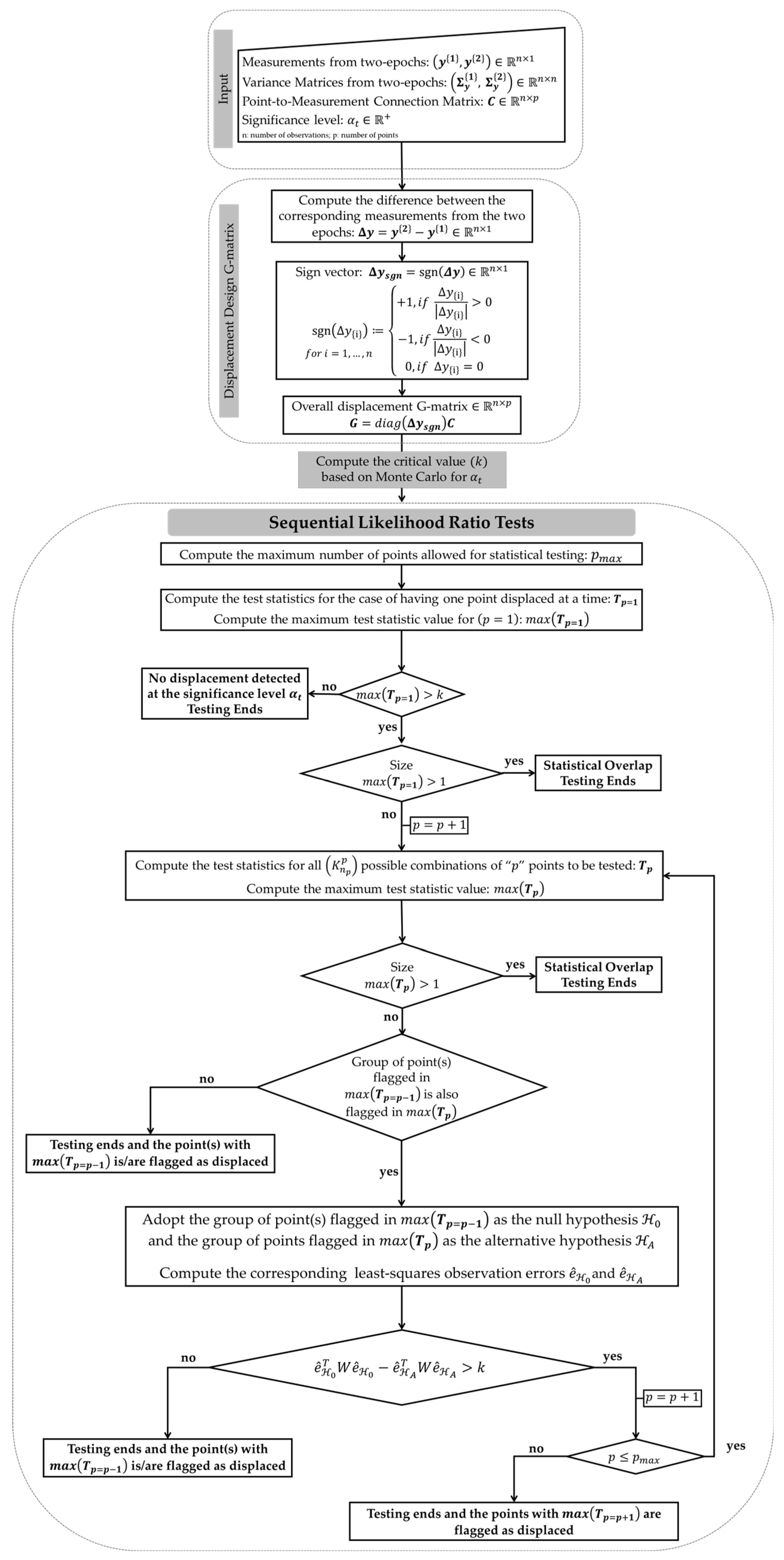

The procedure described so far allows only one single point to be identified. However, we may have multiple points displaced simultaneously. A more appropriate approach would be to apply a sequential test to decide the number and location of shifted points for the case where the null model is rejected. If the maximum test statistic value exceeds the critical value at the significance level adopted, i.e., if

, the test statistics proceeds for

(two possible unstable points). Since we do not know which group of two points might be shifting, we have to compute the test statistics by Eq. (18) from the possible combinations for two unstable points

, and then identifying the corresponding candidate group for

through the maximum value of the test statistic

. Note that the extreme test statistic

returns only one group of points or point. In general, the number of possible groups of points, denoted by

, is given by:

For instance, for a geodetic network with

points and by assuming

two-point group to be tested, we would have a total of

possible combinations. That would be the case for the geodetic network presented here, as can be seen either by

Figure 1 or

Figure 2, then we would have

alternative models, i.e.,

. Note that for

it is a particular case of having

, as previously presented. Thus, the general cases of the alternative hypotheses can be expressed as:

If the identified point with

is also contained in the case of

, i.e. if the one point flagged initially is among one of the pairs flagged subsequently, then the null hypothesis becomes specified according to the model identified at

and the alternative hypothesis to the model defined at

, as can be seen the example below:

For example, if

and the point A in the geodetic network illustrated in

Figure 1 (or

Figure 2) was identified by the

, the model under the null hypothesis would become

. If in the next step for

, points A and C were identified by the

, then the alternative hypothesis would be defined as

. Note that

is a subset of

. To decide which of these models to select, we compute the test statistic based on the maximum likelihood ratio between

and

, denoted by

[

37]:

The result of Eq. (27) comes from the ratio between the maximum of the probability density function of

under

and

, and the fact that the null hypothesis is (and must be) a subset of the alternative hypothesis. Thus, the selection of the most likely hypothesis is based on the following decision:

where

and

are the least-squares estimated observation errors for the model under the null and alternative hypothesis, respectively. For the example above, we would have

for the null model and

for the alternative one.

If the null hypothesis is not rejected, the testing ends and only the point corresponding to is flagged as unstable. On the other hand, if the null hypothesis is rejected again, then a new step is started by taking the model under the alternative hypothesis identified in the previous step as the null hypothesis. It proceeds by computing the test statistics for according to Eq. (18) for all possible combinations given by Eq. (24), identifying the corresponding candidate group for through the maximum value of the test statistic , and then checking if one of the points flagged in the previous step (i.e., for ) is among those flagged in the current step for . If this is the case, then the test is applied through Eq. (26) to decide between the null model defined by the identified model for and the alternative model for . This sequential procedure is repeated until any of the following conditions is met:

- (i)

The current null hypothesis fails to be rejected.

- (ii)

More than one group of geodetic network points is identified by the extreme statistic for a given , i.e., for the case where the hypotheses cannot be separable (statistical overlap), and therefore the identification cannot be accomplished.

- (iii)

If the group of point(s) flagged by is not fully contained in the group of points flagged by , then the procedure ends with the null hypothesis given by the group of point(s) flagged by .

- (iv)

Iteration reaches the threshold (i.e., until the maximum number of points to be evaluated is fully inspected). The definition of the maximum number of points will be detailed in the next section.

In general, therefore, the sequential testing procedure proposed here is based on likelihood ratio, which we now call Sequential Likelihood Ratio Tests for Unstable Points Identification (SLRTUPI). It consists in determining whether the additional subset of displaced point, considered in every new alternative hypothesis, is statistically significant or not, in terms of its impact on the quadratic form of the estimated observation errors, similar to its form for outlier detection [

27]. Consequently, we can identify the number and the location of the displaced geodetic network points.

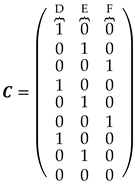

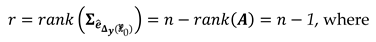

Figure 3 provides step-by-step how to run the SLRTUPI procedure.

3.1. Monte Carlo Approach for Controlling the False Detection Rate

There is the probability of committing at least one false discovery, or Type I error when performing multiple hypotheses tests. Thus, Type I Error rate for the case where SLRTUPI is in play corresponds to the probability of incorrectly detecting at least one point as displaced while in fact there is none (i.e., accept at least one alternative hypothesis, when, in fact, the null hypothesis is true). This means that the SLRTUPI Type I decision error does not depend on all subsequent test steps, only on the extreme test statistic computed in its first step, i.e., only for

in Eq. (22). The risk of rejecting a true

is now

one-fold: the undesired random event “reject a true

” can occur in any of the

tests. Let the probability of rejecting a true

in test

be

(the so-called “experimentwise error rate”) and let

. Furthermore, assume the random events “reject a true

in test

” to be approximately statistically independent. Then the total probability of rejecting a true

in the multiple hypothesis test (the so-called “familywise error rate”, denoted here by

) is [

11]:

The classical and well-known procedure to control the

is to apply the Bonferroni equation by choosing [

40]:

Unfortunately, the test statistics

and consequently the random events “reject a true

in test

” are statistically dependent. The extreme statistic

captures such dependencies, as it is extracted from the

. If such dependencies are neglected, then the computed critical values are erroneous, and the test decisions do not have the user-defined familywise error rate

[

34]. Here, the maximum test value

in Equation (22) is treated directly as a test statistic [

39]. Note that when using Equation (22) as a test statistic, the decision rule is based on a one-sided test of the form

, as can be seen in expression (23). However, the distributions of

cannot be derived from well-known test distributions (e.g.,

-distribution). Therefore, critical values cannot be taken from a statistical table but should be computed numerically. A rigorous computation of critical values requires a Monte Carlo technique.

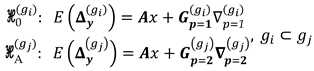

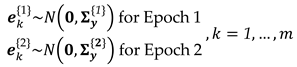

The procedure to compute the critical values for is given step-by-step as follows:

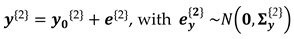

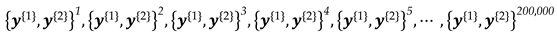

Generate a sequence of

random vectors of the measurement errors for both epoch 1

and epoch 2

k = 1,...,

m of the desired distribution. e.g.:

where

is known as the number of Monte Carlo experiments. In addition, Matlab's “

mvnrnd” command may be used in this step, for example.

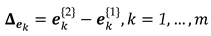

For each pair

and

,

k = 1,...,

m compute the differences in errors between the two epochs, i.e.:

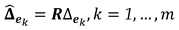

Apply the least-squares to estimate the observation errors, as follows:

where

is known as the redundancy matrix, which is given by:

with

the identity matrix.

Assemble the displacement-design matrix ,i = 1,..., np for each Monte Carlo experiment, according to equation (16), but the signs of the coefficients of the displacement-design matrix given by k = 1,..., m, and compute the test statistic by (18) and (22). The frequency distribution of approximates the probability distribution of .

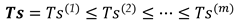

Sort in ascending order the

, getting a sorted vector

, such that:

The sorted values in

provide a discrete representation of the cumulative density function of the maximum test statistic

.

Determine the critical value

:

where

denotes rounding down to next integer that indicates the position of the selected elements in the ascending order of

. This position corresponds to a critical value for a stipulated overall false detection probability

. This can be done for a sequence of values

in parallel [

34].

The Matlab function "kslrtupi.m" was elaborated for the computation of critical values. Inputs in the function are , where “” is the user-defined number of Monte Carlo experiments.

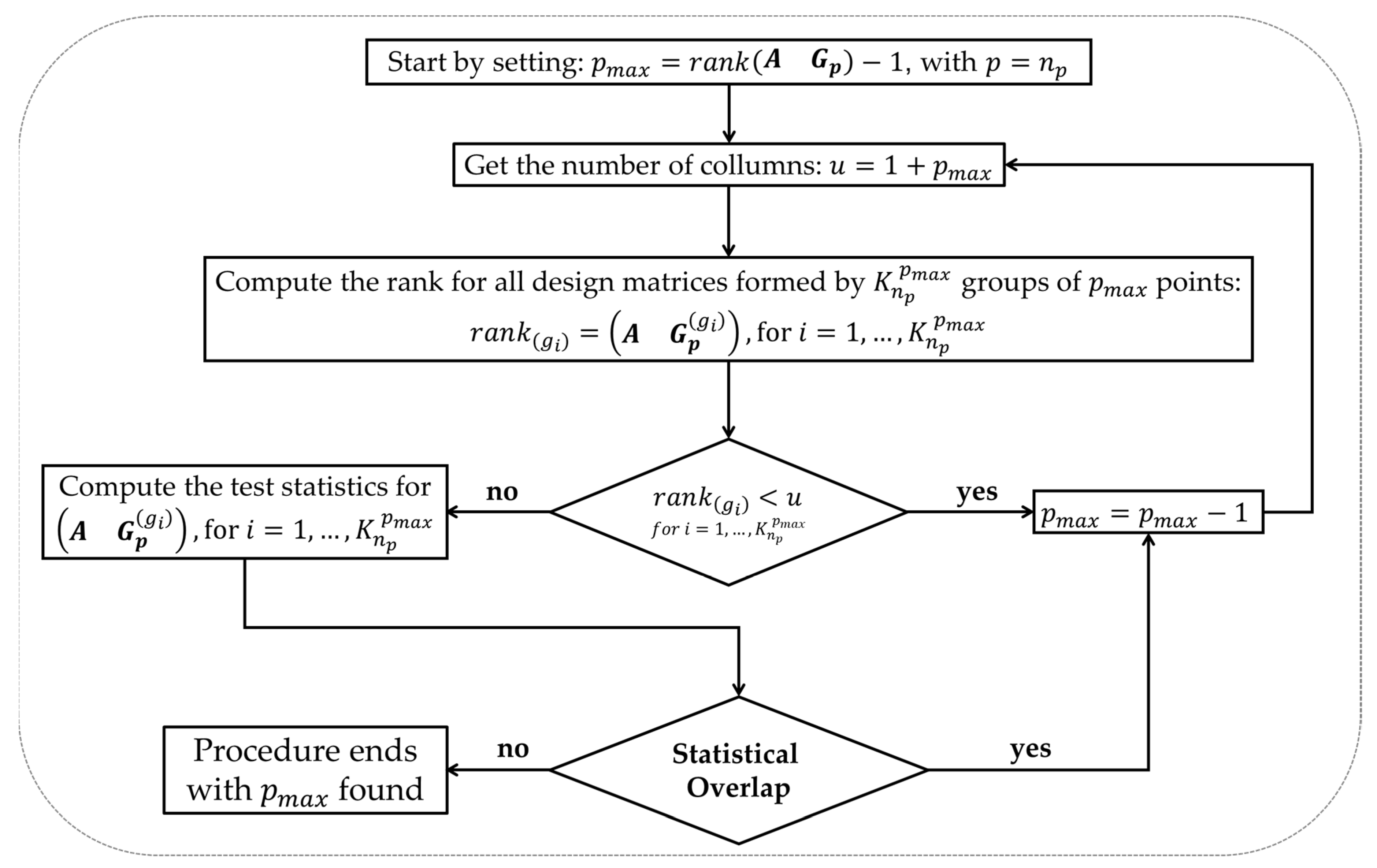

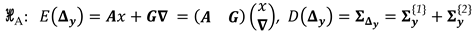

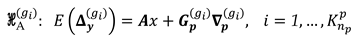

3.2. Determining the maximum possible number of points

Here, we objectively and universally demonstrate how to obtain the maximum number of points “

” to be inspected by the SLRTUPI sequential procedure. The maximum number of points is also defined sequentially. The procedure to define the maximum number of points “

” consists in finding a regular model (i.e., a matrix

with full column rank) and, of course, that model has enough redundancy for the identification of unstable points. The step-by-step procedure to obtain the maximum possible number of points

to be inspected by SLRTUPI is given by the flowchart in

Figure 5.

If the full displacement-design matrix is specified by taking the total number of points in the network, i.e., by taking according to equation (16), then we fall into a problem in which there is a rank deficiency. Usually, the rank defect is greater than or equal to 1, because , where is the number of columns of the matrix . In this situation, the determinant of the normal matrix is zero. This means that such matrix cannot be considered invertible – the matrix is then said to be singular. Consequently, it is not possible to compute the test statistics by Equation (18). This demonstrates that .

By reducing the number of points by one unit, i.e., , we expect to find regular models (i.e., without rank deficiency). For that, it should be checked again whether there is rank defect or not. But now we have at our disposal not only one single group of points, but a combination of group of points. If at least one of the matrices presents rank defect, then must be reduced again by one unit, i.e., , and the rank computation is then repeated for group of points. If the rank defect persists, then the rank computation is repeated by decreasing to , otherwise we proceed to evaluate whether the test statistics computed in Equation (18) are different from each other. If there are at least two statistics with equal values, then a statistical overlap will be flagged. In that case, therefore, we should reduce to and restart the procedure. If the models are found to be regular and there is no statistical overlap, then the process ends with the value found.

Important to highlight that the rank of the matrix

depends on the behaviour of the signs of the coefficients of its displacement-design submatrix

. The signs will also depend on the geometry of the geodetic network, as well as the unknown magnitude and number of points that have been shifted. Remember, however,

captures these quantities. Although we have the limitation of computing the test statistics a priori, the procedure proposed above allows to determine the maximum number of testable points for identification. Generally, the choice of this maximum number is restricted to a subjective choice [

11,

13]. Here, Matlab function "pmax.m" was developed for the automatic computation of the maximum number of points. Inputs in the function are

and

, i.e.,

. Below are presented two possible scenarios that may occur in obtaining the

for the geodetic network presented in the scope of this article (

Figure 1).

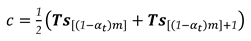

Example 1:

Note that the number of columns in the matrix (38) is

but its

. In that case, we would fall into a rank deficiency problem, because

. If we considered that

, then we would have

group by Eq. (24), namely

. However, in this case, the determinant of the normal matrix would be equal to zero, denoted by

, which would characterize it as a singular matrix, and consequently would not allow the computation of the test statistics by the Eq. (18). By reducing

to one unit (

), we would have now a total of

groups of 5 points, which would be the following:

;

;

;

;

; and

. All these groups would now have full column rank with

and

. Thus, we would end and set

.

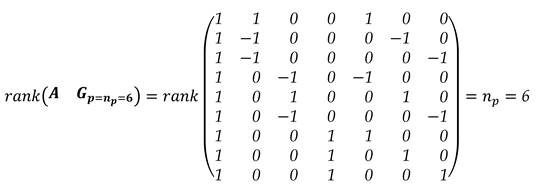

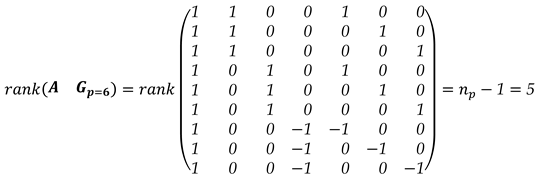

However, another important analysis is needed. Although the determinants of the matrices constructed for each group of points are non-zero, their values are all equal to . Mostly, the test statistics computed for each of those groups by Eq. (18) would also have the same values. So, we would not be able to identify the group by the , since all the statistics are the same, i.e., there would be a statistical overlap. Consequently, we would only be able to get the information about displacement detection (i.e., ), but not identification. In order to have the possibility of identification, we would then decrease again to one unit (i.e., ), which would lead to a total of groups of 4 points. Now, the test statistics for each of would result of values different from each other, making identification possible. As a result, the maximum number of points to be considered would be for identification, which for that example we would get .

In this scenario, we would fall back into a problem where the matrix holds rank defect, but now with . If , we would have groups of 5 points to be considered, namely: ; ; and . Unfortunately, the rank of any of these groups would be equal to , and therefore we would still remain with rank deficiency problem. Moreover, the determinant of any normal matrix of these groups would be zero, i.e., . By reducing to one unit (i.e., ), we would have a total of groups of 4 points, but still with rank defect for the following groups: and As there would still be a lack of full column rank for some groups, we would again reduce . Now we would have a larger number of groups than the previous case, but of size equals to , i.e., groups of 3 points. In the latter case, however, we would still have only one single group of points that would cause a rank defect, namely . Again, we would proceed to decrease the maximum number of points followed by computing the rank of the matrices. Finally, we would find the maximum number of points, which in that case would be . All these groups of 2 points would have their respective matrices with full column rank and theirs test statistics would have different values from each other. Thus, would be the dimension found, so that all groups of 2 points would be testable for identification.

In addition to the examples given above, we may have cases where , which allows us to identify only one single point. In that case, the SLRTUPI procedure would not proceed in its sequential form, but would restrict only in its first step, i.e., only in the identification of a point by means of . And, if , then in that case we would have a situation in which it is not possible to detect displacements, since the network does not have enough redundancy for this purpose. This latter case may occur if the geodetic network is very poorly designed so that it will not be possible to detect displacements.

5. Contributions

In this contribution, we present a statistical method for detecting and identifying unstable points for geodetic deformation analysis, namely Sequential Likelihood Ratio Test for Unstable Points Identification (SLRTUPI). The method makes use of the differences of measurements taken at two epochs in time instead of using the classic difference of estimated coordinates. As an advantage, we will always have a linear model, and therefore the model nonlinearity problem does not affect the test power. In addition, another advantage is to avoid the S-transformation to maintain the same datum between epochs.

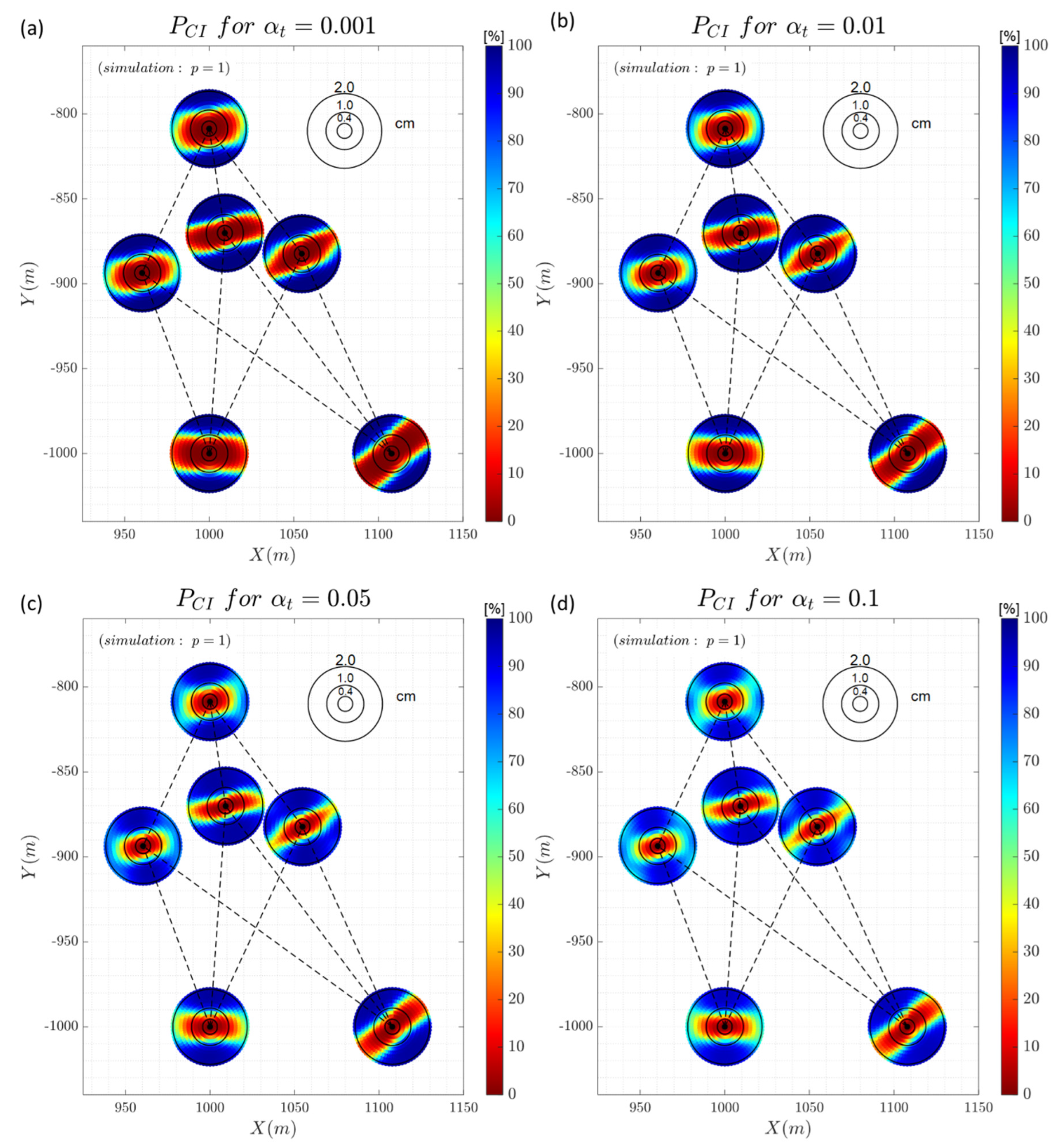

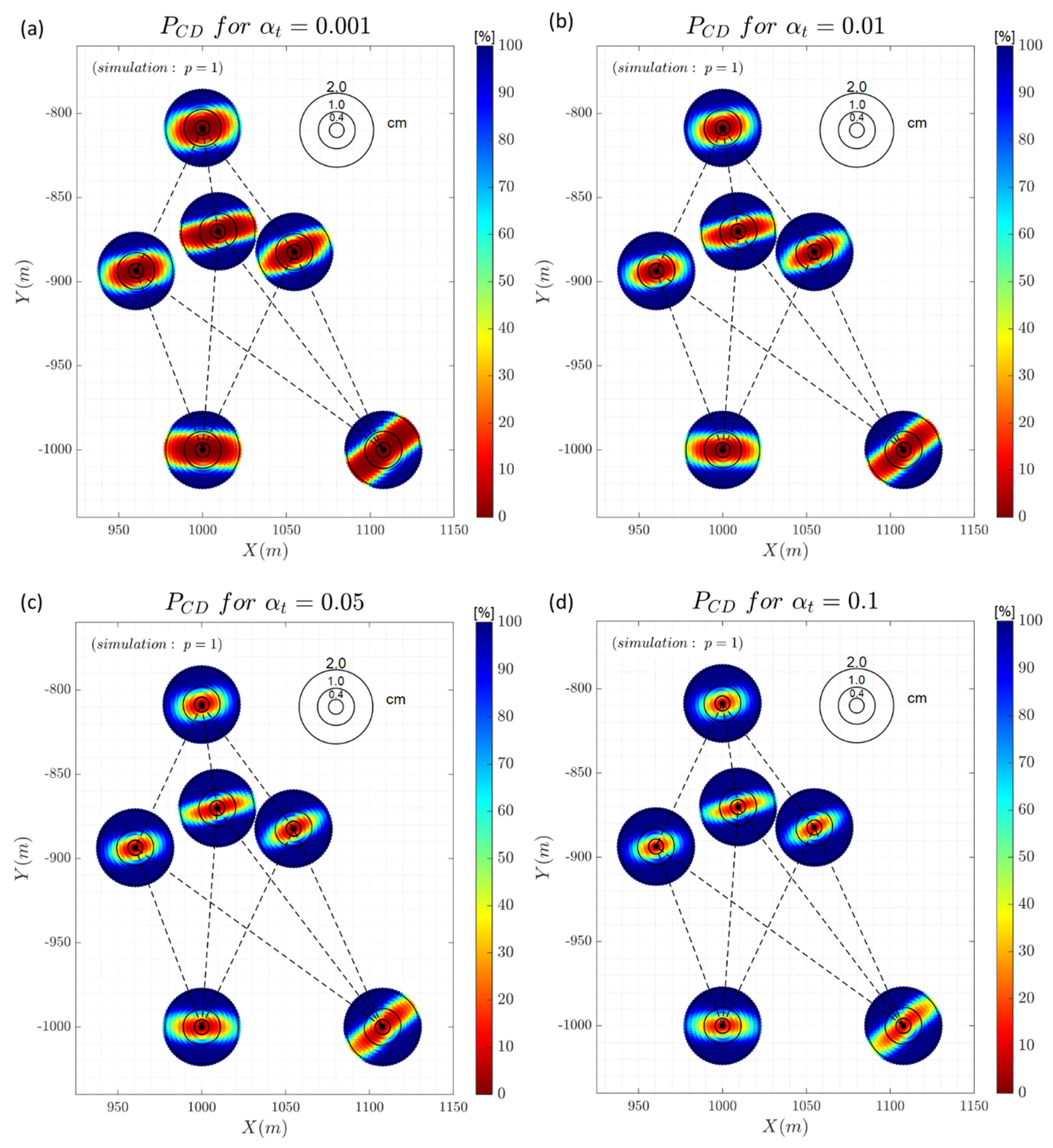

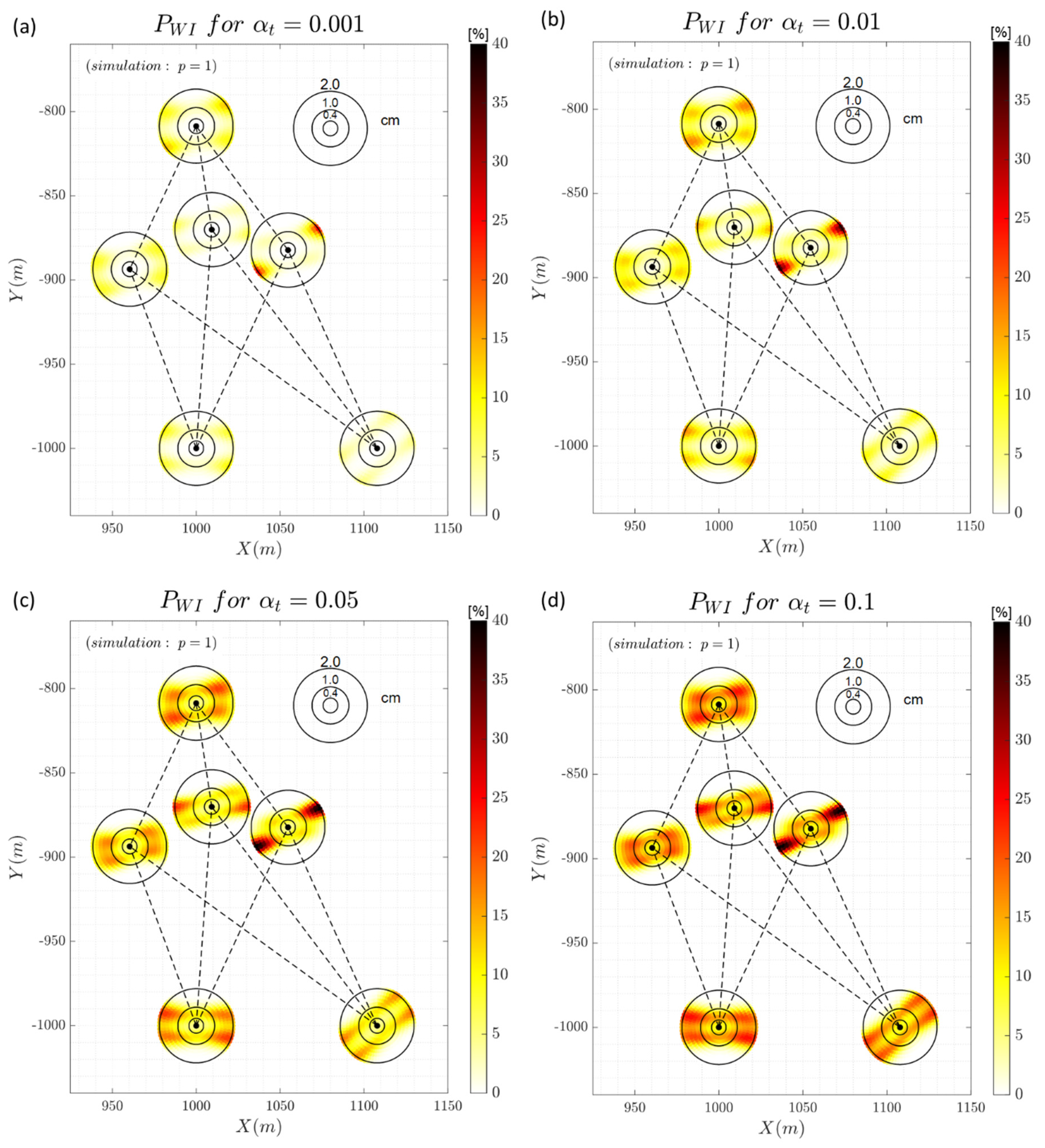

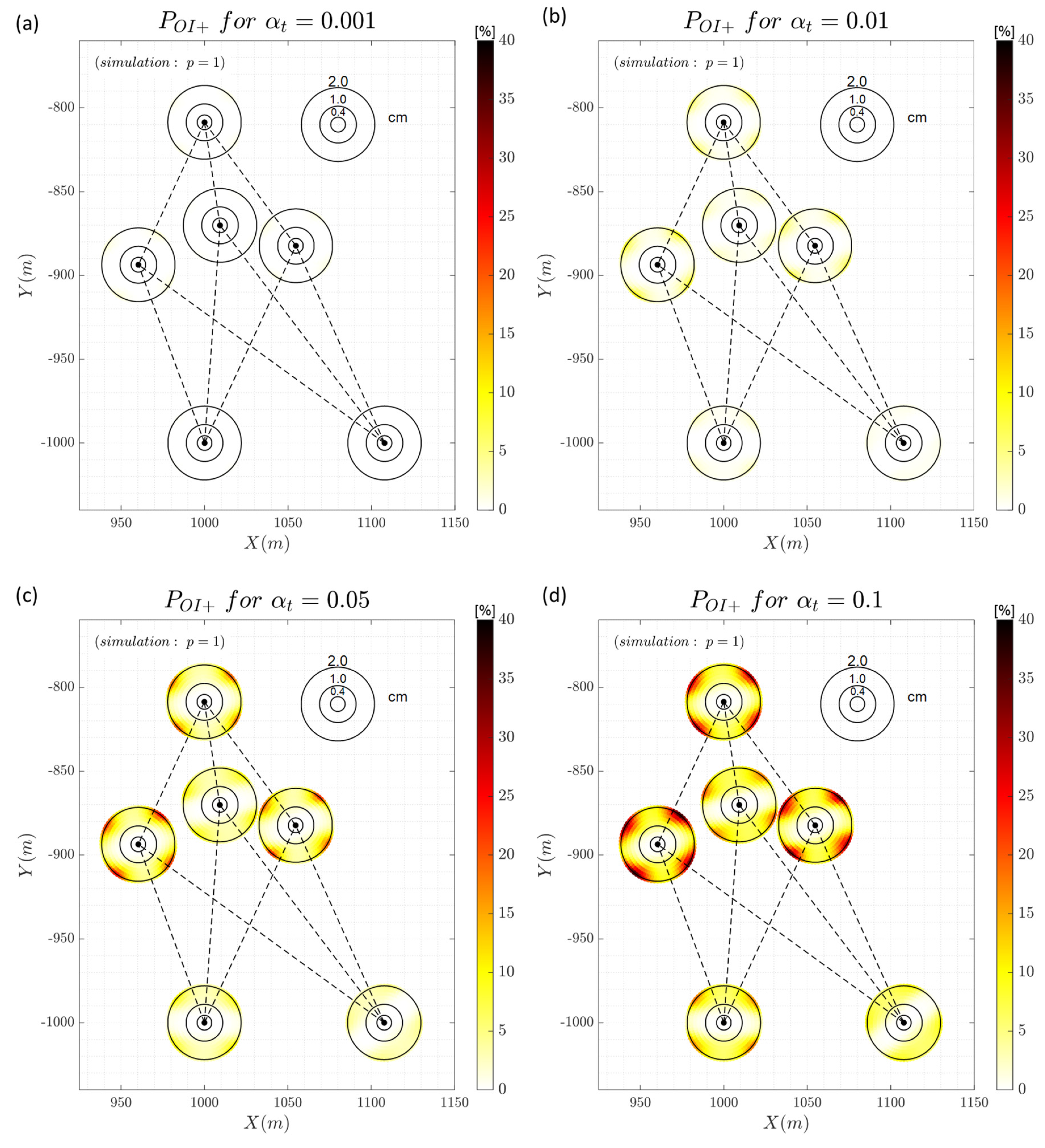

The method is not restricted to points subject to monitoring but is applied in such a way that all points participate in its inspection. However, the power of the identification improves considerably if we know in advance which points are stable (see

Table 8 and

Table 9). The results support that the success of the proposed method depends on the interaction between the displacement pattern and the network geometry. In the case of the trilateration network used as a case study in this contribution, we observe that for the case of having only one single point displaced, the highest success rates occur in the lines of sight (

Figure 7 and

Figure 8). When more than one point is unstable simultaneously, success rates are generally higher for the scenarios where the displacements occur in opposite directions (see

Table 4,

Table 5,

Table 6 and

Table 7). More experiments based on different types of geodetic networks (such as, levelling and GNSS networks) will be conducted in the future works.

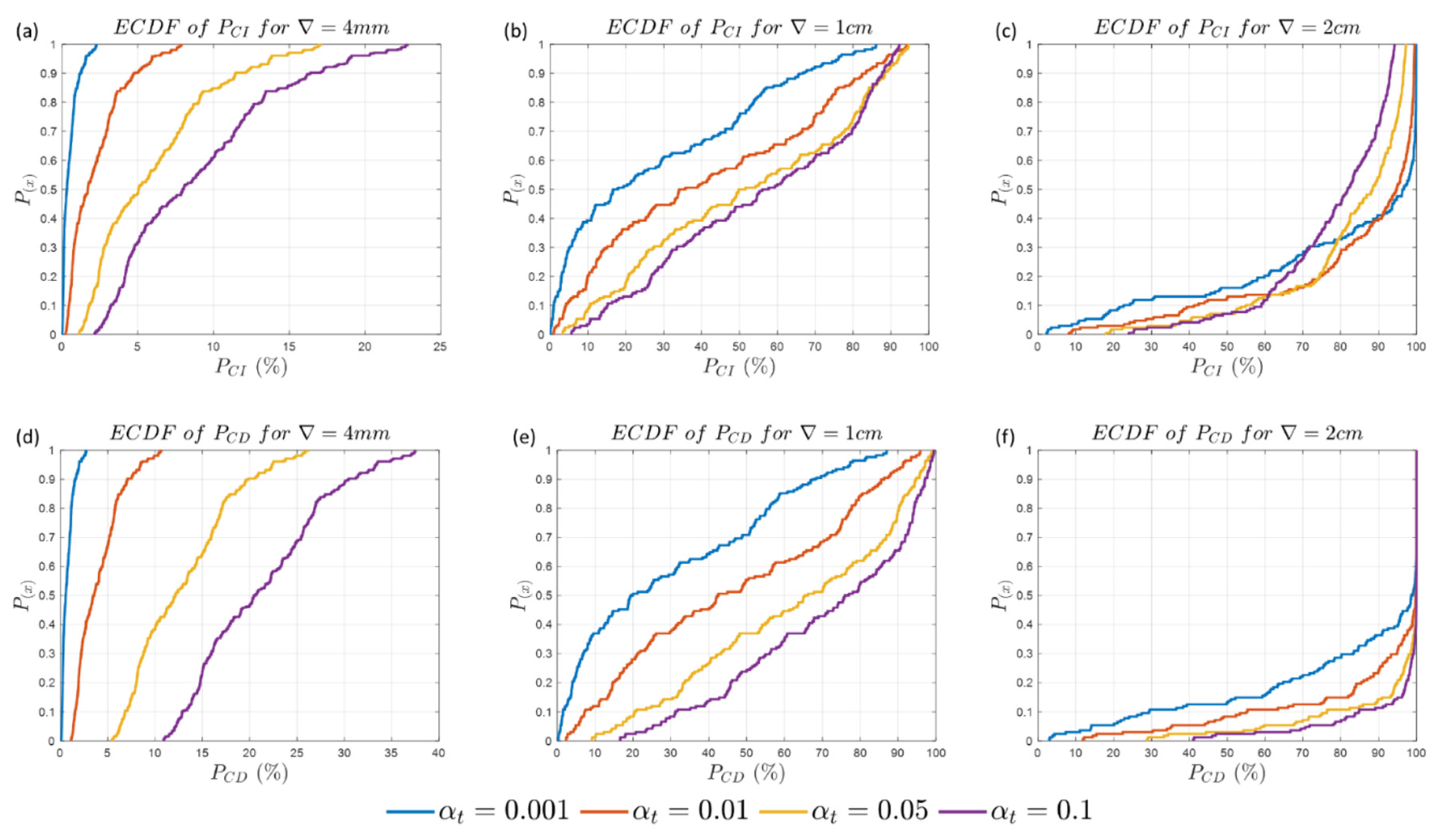

The larger the number of points displaced simultaneously, the more difficult the identification. However, success rates depend on the geometry and redundancy of the network. Furthermore, detection is always larger than identification. Although identification is important to localize displacement, detection plays a dominant role for the activities that involves risk assessment, such as the deformation analysis. It is preferable that detection occurs even if identification does not occur in the expected way. It is crucial that a system raises an alarm when displacements are detected, even though their location may be wrong. In this sense, we also observe that increasing the significance level does not always improve the identification rate, but always improves detection.

It is important to highlight here that the false positive rates (Type I errors) are efficiently controlled from the definition of the familywise error rate by the user (

). The approach for controlling that decision error is based on Monte Carlo. Of course, this requires some computational cost, but nothing prohibitive. One way to save computational costs would be to develop a methodology based on artificial neural networks, as done for example by [

44], or some other alternatives, such as [

45]. This will be investigated in future work.

Another important contribution is that now the maximum number of points

to be inspected by the SLRTUPI procedure is not based on non-objective choices, but rather determined from the rank analysis of the design matrices involved and the occurrence of statistical overlap. Consequently, the method avoids the occurrence of statistical overlap, which is common in multiple test problems [

39,

45]. More experiments will be needed to understand the effect of determining

on the detection and identification of unstable points.

The simulation methodology described here can also be applied in the design stage of a geodetic network for deformation analysis purposes. This allows the user to know a priori how the measurement system (in this case, the geodetic network) should be optimally designed so that it is able to detect and/or identify a certain displacement pattern for a given probability. As a result, reliability measures can be easily extracted, such as Minimal Identifiable Displacement (MID) and Minimal Detectable Displacement (MDD) from its corresponding success rates and by a given user-defined significance level . More experiments for different geodetic networks will be needed to evaluate the ability of the measurement system to identify possible outliers (internal reliability) and the effect of these undetected errors on the quality of deformation analysis results (external reliability).

In future works we will also evaluate the question of formulating the null hypothesis with parameter-free, so that the differences between the measurements in the two epochs may be taken as being directly the errors. Thus, the test could be simpler, with the benefit that the degrees of freedom of the test will be larger than the model adopted here.

Finally, it is emphasized that the proposed method can be extended to the outlier detection problem. The proposed method can also be extended to the case of Point Clouds from Terrestrial Laser Scanning in case of having to decide between the different models of surface representation in the area-based deformation analyses problems. The dataset and algorithms used in this work are available for those interested in reproducing the results and/or applying them to other methodologies, as follows:

https://data.mendeley.com/datasets/msg783rh2y/draft?a=607de244-7c1d-417f-a9c8-81281d8a6056

Figure 1.

Horizontal trilateration network deformed due to the displacement (step) of point F to a new position F’.

Figure 1.

Horizontal trilateration network deformed due to the displacement (step) of point F to a new position F’.

Figure 2.

Horizontal trilateration network deformed due to the simultaneous displacement (step) of point A to a new position A’ and B to B’.

Figure 2.

Horizontal trilateration network deformed due to the simultaneous displacement (step) of point A to a new position A’ and B to B’.

Figure 3.

Flowchart of the SLRTUPI.

Figure 3.

Flowchart of the SLRTUPI.

Figure 5.

Flowchart for determining the maximum possible number of points to be inspected by SLTUPI.

Figure 5.

Flowchart for determining the maximum possible number of points to be inspected by SLTUPI.

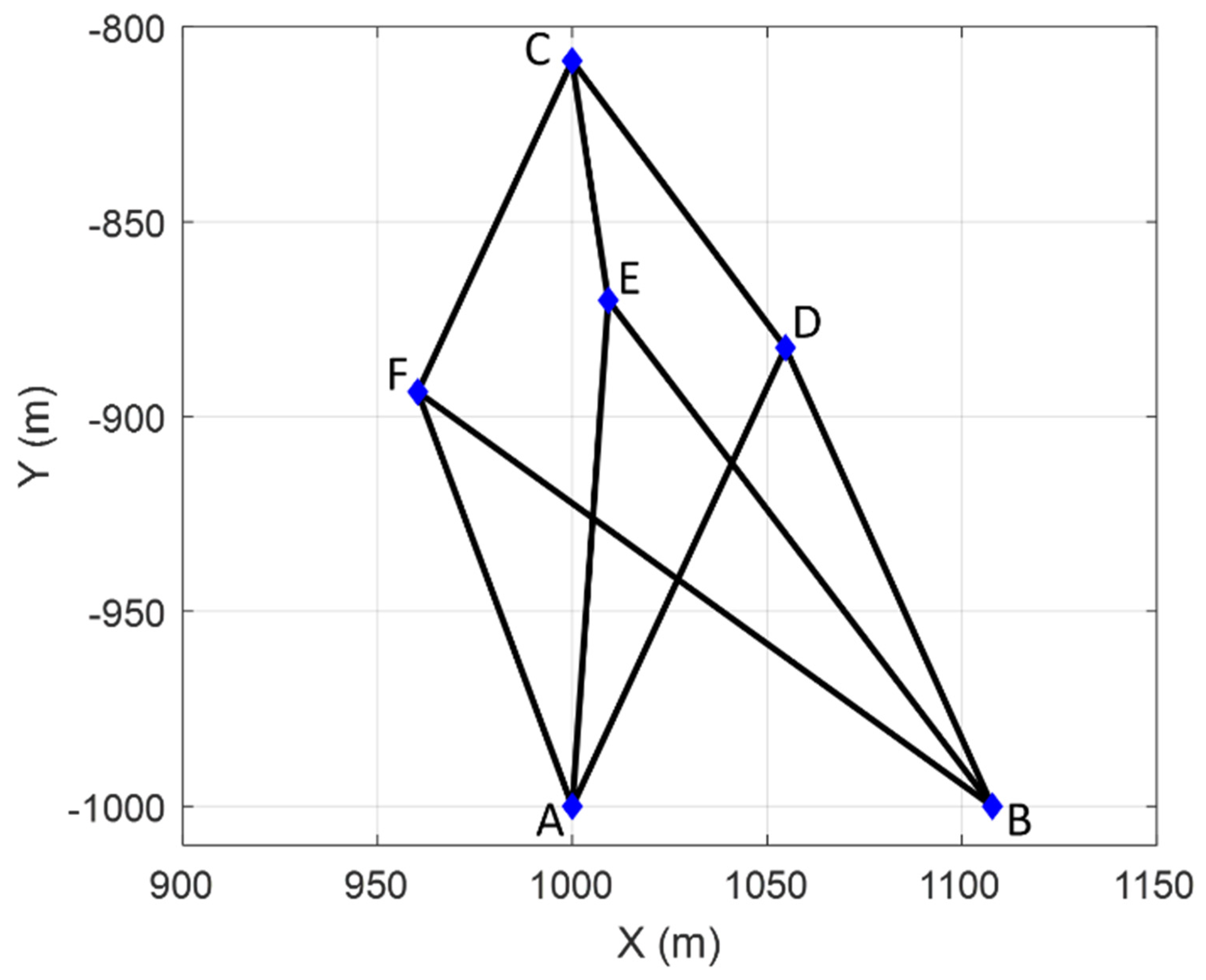

Figure 4.

Simulated Trilateration Network.

Figure 4.

Simulated Trilateration Network.

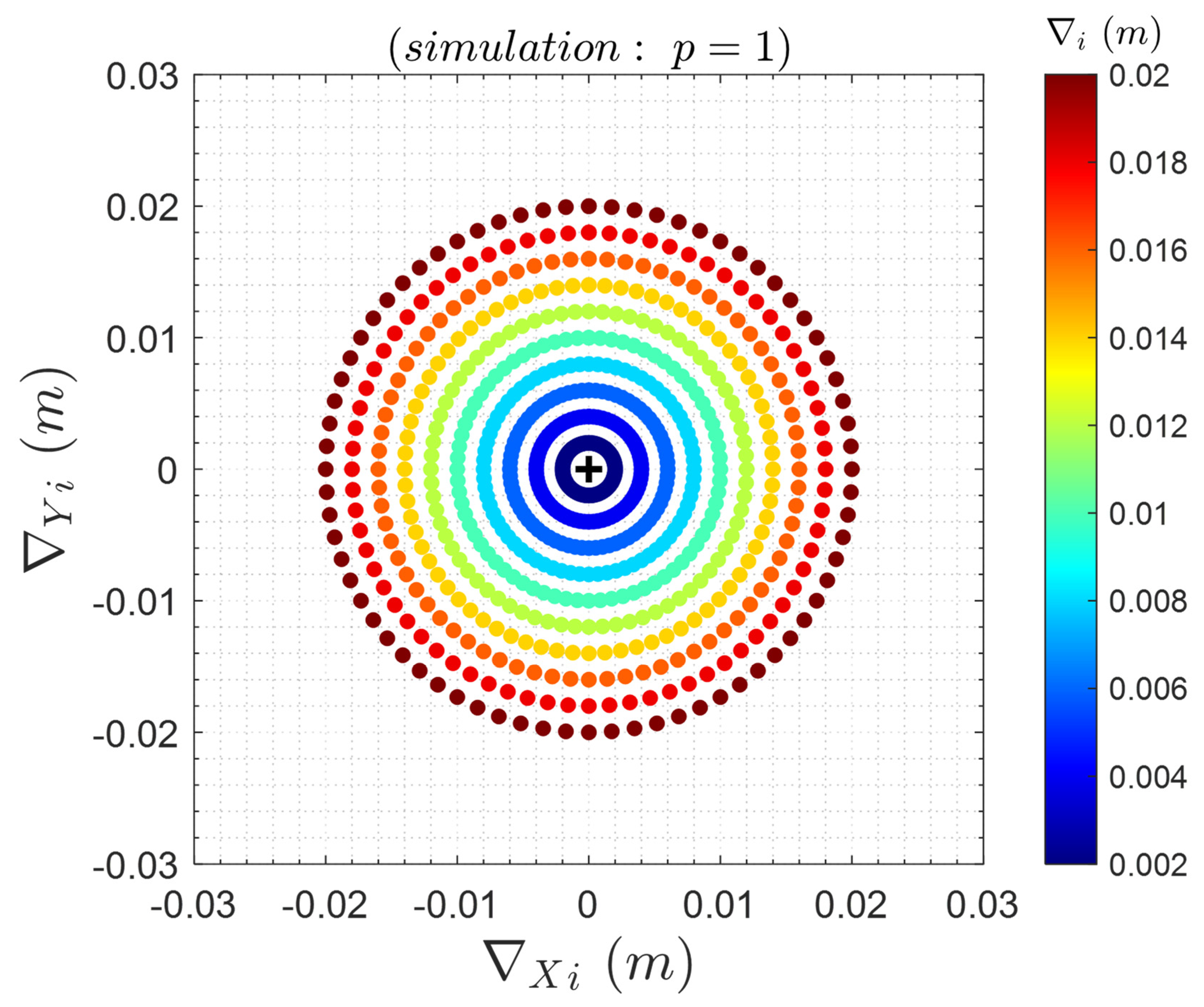

Figure 6.

Simulated displacements for each point (case ).

Figure 6.

Simulated displacements for each point (case ).

Figure 7.

Probability of correct identification (): (a) for ; (b) for ; (c) for ; and (d) for .

Figure 7.

Probability of correct identification (): (a) for ; (b) for ; (c) for ; and (d) for .

Figure 8.

Probability of correct detection (): (a) for ; (b) for ; (c) for ; and (d) for .

Figure 8.

Probability of correct detection (): (a) for ; (b) for ; (c) for ; and (d) for .

Figure 9.

Probability of wrong identification (): (a) for ; (b) for ; (c) for ; and (d) for .

Figure 9.

Probability of wrong identification (): (a) for ; (b) for ; (c) for ; and (d) for .

Figure 10.

Probability of overidentification for the case where SLRTUPI identifies the displaced point and others (): (a) for ; (b) for ; (c) for ; and (d) for .

Figure 10.

Probability of overidentification for the case where SLRTUPI identifies the displaced point and others (): (a) for ; (b) for ; (c) for ; and (d) for .

Figure 11.

Cumulative probability for the identification and detection success rates for , , and , and for the following cases: (a) for , (b) for , (c) for , (d) for , (e) for and (f) for .

Figure 11.

Cumulative probability for the identification and detection success rates for , , and , and for the following cases: (a) for , (b) for , (c) for , (d) for , (e) for and (f) for .

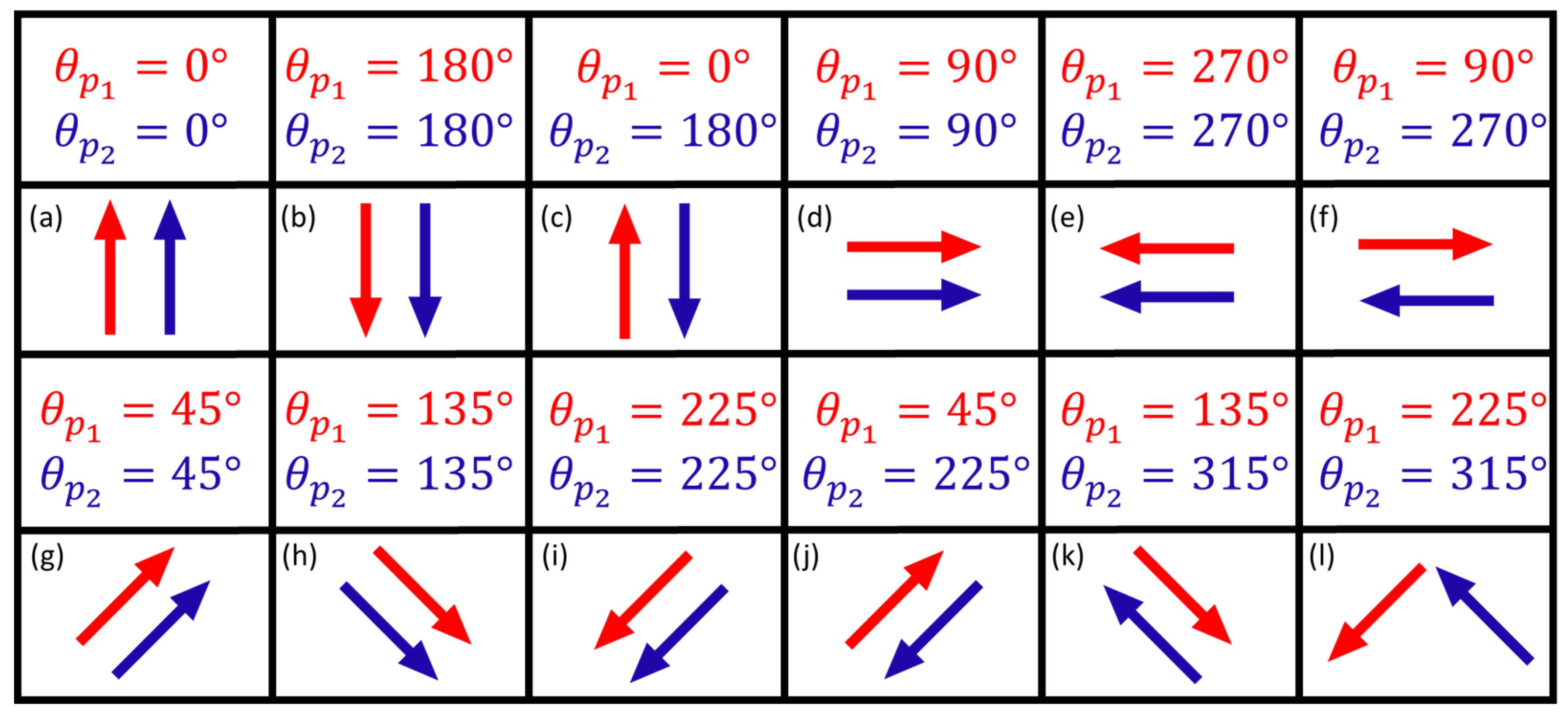

Figure 12.

Simulated displacement patterns for each group of two points ( and for first and second selected point).

Figure 12.

Simulated displacement patterns for each group of two points ( and for first and second selected point).

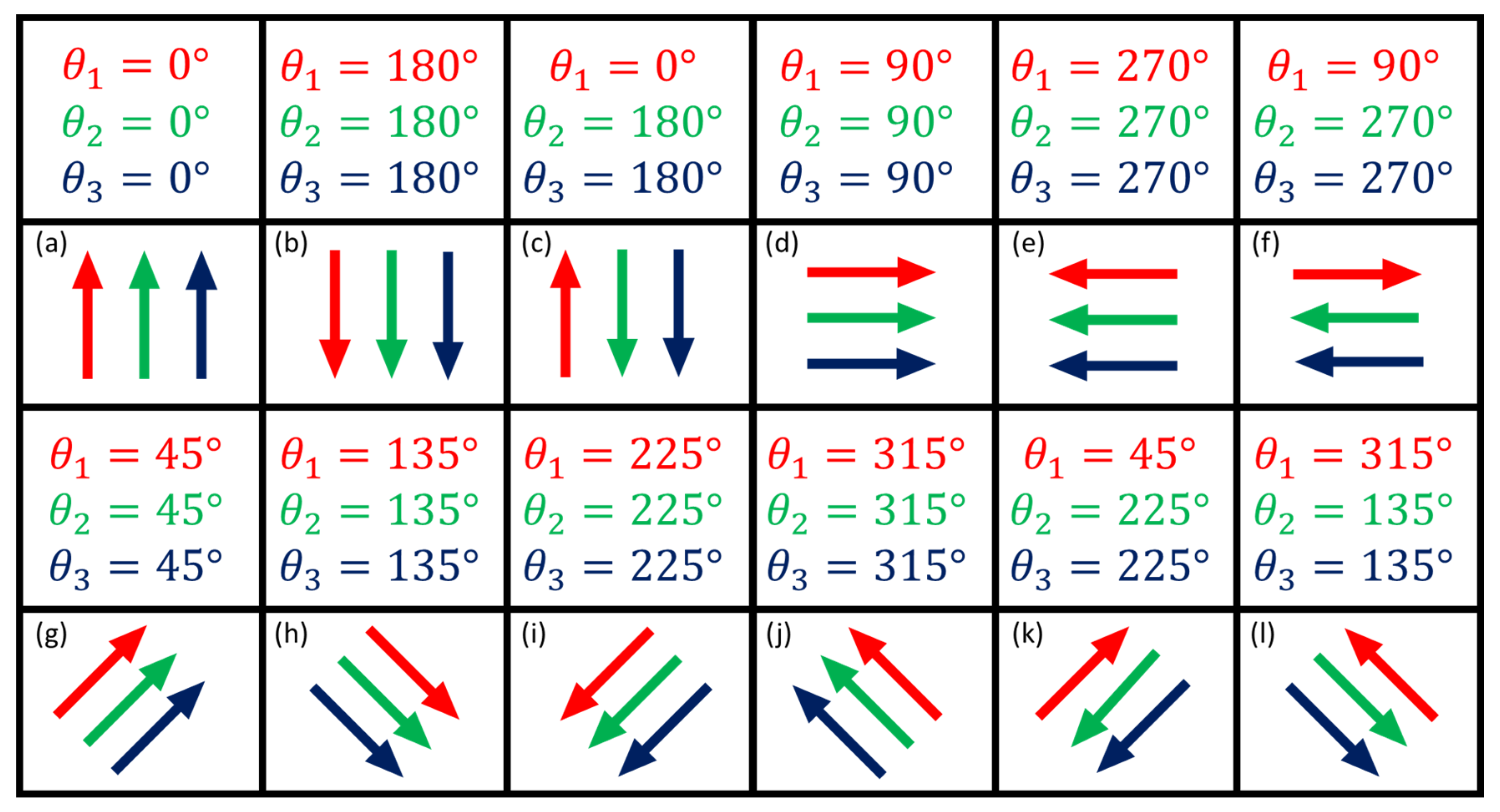

Figure 13.

Simulated displacement patterns for each group of three points (, and for first, second and third selected point).

Figure 13.

Simulated displacement patterns for each group of three points (, and for first, second and third selected point).

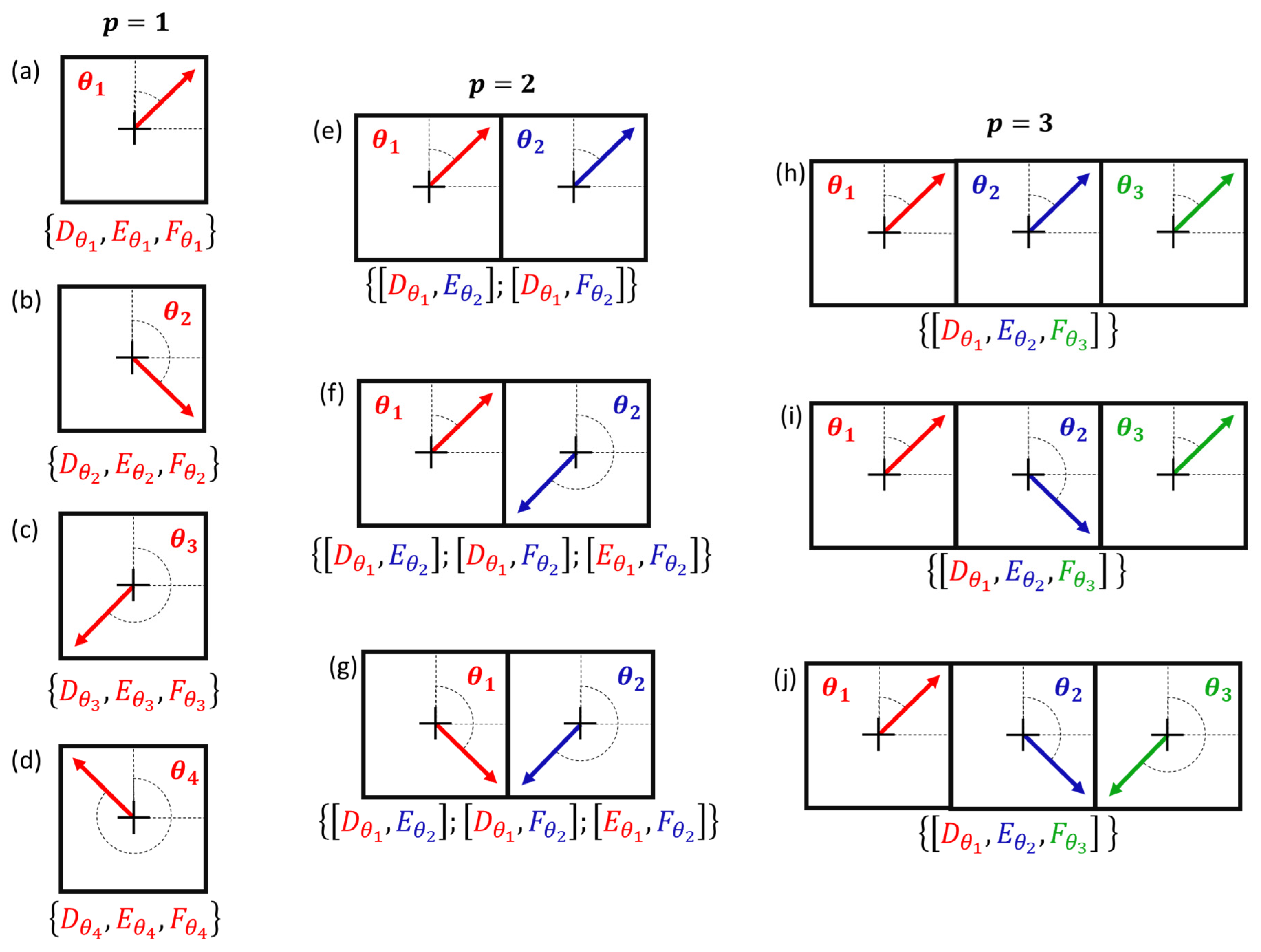

Figure 14.

Displacement patterns applied in the field: (a) displacement for each individual point at a time in the direction, denoted by ; (b) displacement for each individual point at a time in the direction, denoted by ; (c) displacement for each individual point at a time in the direction, denoted by ; (d) displacement for each individual point at a time in the direction, denoted by ; (e,f,g) displacement for two-point group simultaneous displacement, with the first point shifted in the direction and the second one in the direction; (h,i,j) displacement for three-point group simultaneous displacement, with the first point shifted in the direction, second point in the direction and the third one in the direction.

Figure 14.

Displacement patterns applied in the field: (a) displacement for each individual point at a time in the direction, denoted by ; (b) displacement for each individual point at a time in the direction, denoted by ; (c) displacement for each individual point at a time in the direction, denoted by ; (d) displacement for each individual point at a time in the direction, denoted by ; (e,f,g) displacement for two-point group simultaneous displacement, with the first point shifted in the direction and the second one in the direction; (h,i,j) displacement for three-point group simultaneous displacement, with the first point shifted in the direction, second point in the direction and the third one in the direction.

Table 1.

Coordinates of the network points for Epoch 1.

Table 1.

Coordinates of the network points for Epoch 1.

| Point |

X [m] |

Y [m] |

| A |

1000 |

-1000 |

| B |

1107.83 |

-1000 |

| C |

999.949 |

-808.661 |

| D |

1054.73 |

-882.298 |

| E |

1009.24 |

-870.129 |

| F |

960.33 |

-893.626 |

Table 2.

Distances of the segments for Epoch 1.

Table 3.

False positive rates for the critical values derived from Monte Carlo approach.

Table 3.

False positive rates for the critical values derived from Monte Carlo approach.

|

|

|

|

| 0.001 |

16.75 |

~0.001 |

0.00% |

| 0.01 |

12.27 |

~0.01 |

0.01% |

| 0.05 |

9.06 |

~0.05 |

0.03% |

| 0.1 |

7.62 |

~0.1 |

0.05% |

Table 4.

Maximum and minimum correct identification rate for two points mutually unstable .

Table 4.

Maximum and minimum correct identification rate for two points mutually unstable .

| |

|

Displacement patterns |

| (a, b) |

(c) |

(d, e) |

(f) |

(g, i) |

(h) |

(j) |

(k) |

(l) |

|

0.1% |

74.63 |

99.94 |

53.47 |

90.16 |

50.64 |

69.63 |

91.2 |

99.95 |

85.87 |

|

00.99 |

18.27 |

0.00 |

00.04 |

00.00 |

00.00 |

00.03 |

9.99 |

00.24 |

|

1% |

74.39 |

99.71 |

72.08 |

96.99 |

65.62 |

70.62 |

97.11 |

99.82 |

86.59 |

|

03.46 |

20.25 |

00.00 |

00.61 |

00.00 |

00.00 |

00.28 |

19.30 |

01.26 |

|

5% |

72.58 |

98.69 |

78.66 |

97.83 |

69.99 |

70.55 |

97.14 |

99.14 |

83.75 |

|

04.67 |

19.96 |

00.01 |

02.67 |

00.02 |

00.02 |

00.94 |

24.64 |

03.48 |

|

10% |

70.72 |

97.55 |

78.33 |

96.73 |

69.76 |

69.92 |

95.59 |

98.17 |

79.45 |

|

04.78 |

19.38 |

00.02 |

04.45 |

00.05 |

00.05 |

01.30 |

25.76 |

05.29 |

Table 5.

Maximum and minimum correct detection rate for two points mutually unstable .

Table 5.

Maximum and minimum correct detection rate for two points mutually unstable .

| |

|

Displacement patterns |

|

(a, b) |

(c) |

(d, e) |

(f) |

(g, i) |

(h) |

(j) |

(k) |

(l) |

|

0.1% |

100.00 |

100.00 |

99.50 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

|

80.63 |

95.50 |

30.87 |

45.04 |

21.73 |

21.82 |

66.71 |

68.14 |

82.80 |

|

1% |

100.00 |

100.00 |

99.96 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

|

86.69 |

99.35 |

61.00 |

74.80 |

48.28 |

48.19 |

86.21 |

88.14 |

93.19 |

|

5% |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

|

93.10 |

99.95 |

81.59 |

91.52 |

72.10 |

71.97 |

95.64 |

96.68 |

98.16 |

|

10% |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

|

95.74 |

99.99 |

87.23 |

95.98 |

81.80 |

81.79 |

97.96 |

98.65 |

99.34 |

Table 6.

Maximum and minimum correct identification rate for three points mutually unstable .

Table 6.

Maximum and minimum correct identification rate for three points mutually unstable .

| |

|

Displacement patterns |

| (a, b) |

(c) |

(d, e) |

(f) |

(g, i) |

(h, j) |

(k) |

(l) |

|

0.1% |

0.17 |

37.98 |

0.07 |

1.54 |

1.55 |

0.43 |

28.21 |

47.89 |

|

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

|

1% |

0.69 |

38.16 |

0.64 |

8.22 |

5.23 |

0.56 |

40.69 |

47.94 |

|

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

|

5% |

1.42 |

38.38 |

2.13 |

19.25 |

12.79 |

0.90 |

45.66 |

47.97 |

|

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

0.00 |

0.01 |

|

10% |

1.76 |

37.86 |

3.17 |

25.17 |

18.20 |

1.21 |

46.51 |

48.07 |

|

0.00 |

0.00 |

0.00 |

0.01 |

0.00 |

0.00 |

0.00 |

0.03 |

Table 7.

Maximum and minimum correct detection rate for three points mutually unstable .

Table 7.

Maximum and minimum correct detection rate for three points mutually unstable .

| |

|

Displacement patterns |

| (a, b) |

(c) |

(d, e) |

(f) |

(g, i) |

(h, j) |

(k) |

(l) |

|

0.1% |

100.00 |

100.00 |

99.89 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

|

85.79 |

89.16 |

32.39 |

76.51 |

37.27 |

82.02 |

29.50 |

69.40 |

|

1% |

100.00 |

100.00 |

99.99 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

|

91.40 |

95.67 |

61.30 |

91.64 |

66.78 |

89.69 |

57.19 |

83.23 |

|

5% |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

|

93.98 |

98.38 |

79.75 |

97.47 |

86.30 |

94.78 |

78.75 |

91.10 |

|

10% |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

100.00 |

|

94.74 |

99.14 |

85.83 |

98.72 |

92.50 |

95.66 |

86.64 |

93.80 |

Table 8.

Frequency of occurrence for each SLRTUPI decision class for the case where all points are subject to be monitored.

Table 8.

Frequency of occurrence for each SLRTUPI decision class for the case where all points are subject to be monitored.

| Displace. patterns |

Correct Detection |

Correct Identification |

Wrong Identification |

| (a) |

3 |

3 |

0 |

| (b) |

3 |

3 |

0 |

| (c) |

3 |

2 |

1 |

| (d) |

3 |

3 |

0 |

| (e) |

2 |

0 |

2 |

| (f) |

3 |

1 |

2 |

| (g) |

3 |

3 |

0 |

| (h) |

1 |

0 |

1 |

| (i) |

1 |

1 |

0 |

| (j) |

1 |

1 |

0 |

Total |

23 |

17 |

6 |

| Rate (%) |

100 |

73.91 |

26.09 |

Table 9.

Frequency of occurrence for each SLRTUPI decision class for the case where the points A, B and C are fixed as stable.

Table 9.

Frequency of occurrence for each SLRTUPI decision class for the case where the points A, B and C are fixed as stable.

| Displace. patterns |

Correct Detection |

Correct Identification |

Wrong Identification |

| (a) |

3 |

3 |

0 |

| (b) |

3 |

3 |

0 |

| (c) |

3 |

3 |

0 |

| (d) |

3 |

3 |

0 |

| (e) |

2 |

2 |

0 |

| (f) |

3 |

2 |

1 |

| (g) |

3 |

3 |

0 |

| (h) |

1 |

1 |

0 |

| (i) |

1 |

1 |

0 |

| (j) |

1 |

1 |

0 |

| Total |

23 |

22 |

1 |

| Rate (%) |

100 |

95.65 |

4.35 |

with the identity matrix.

with the identity matrix.