Submitted:

26 December 2023

Posted:

27 December 2023


Abstract

Keywords:

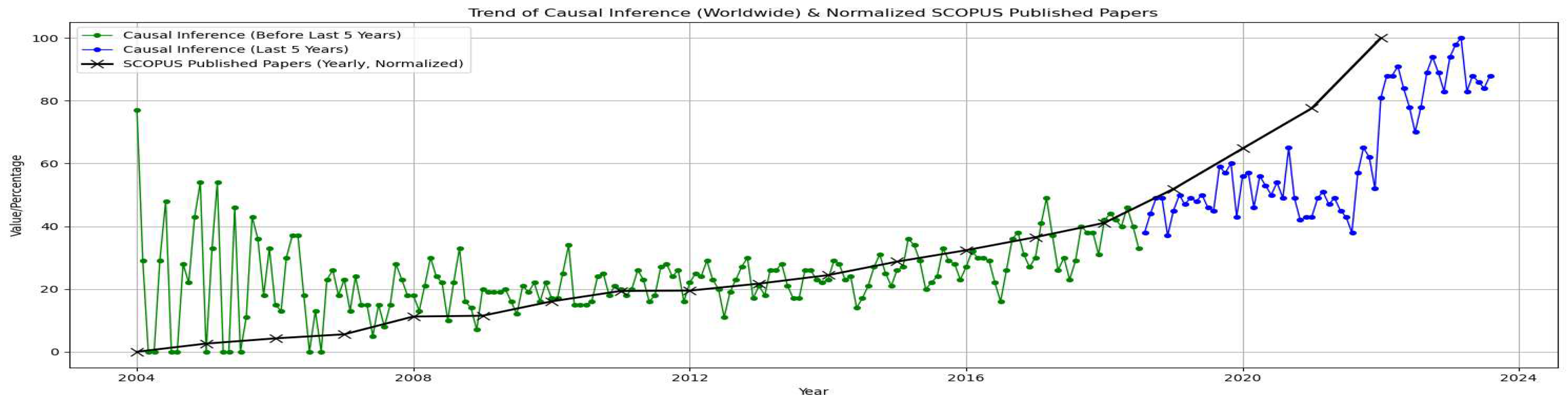

1. Introduction

2. Preliminaries

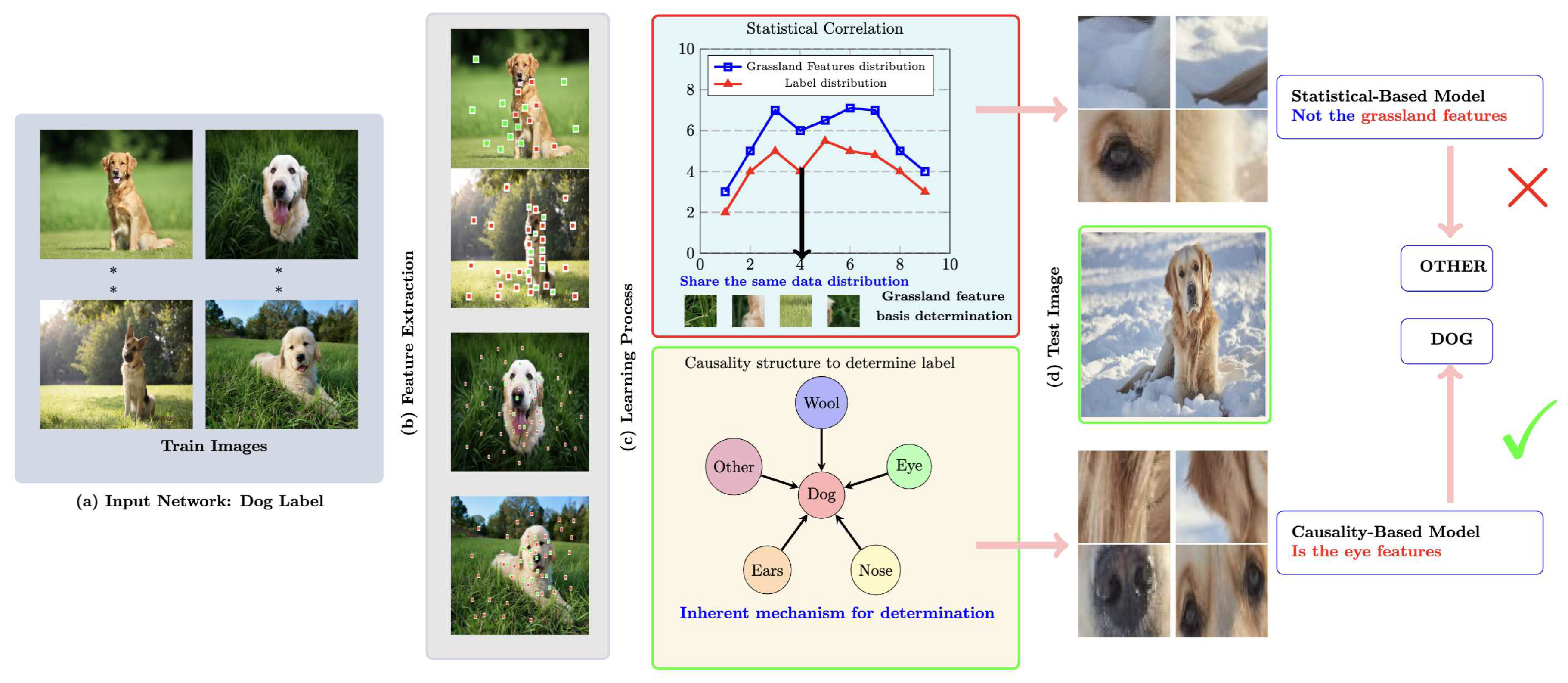

2.1. Causation vs Correlation

2.2. Causal Discovery

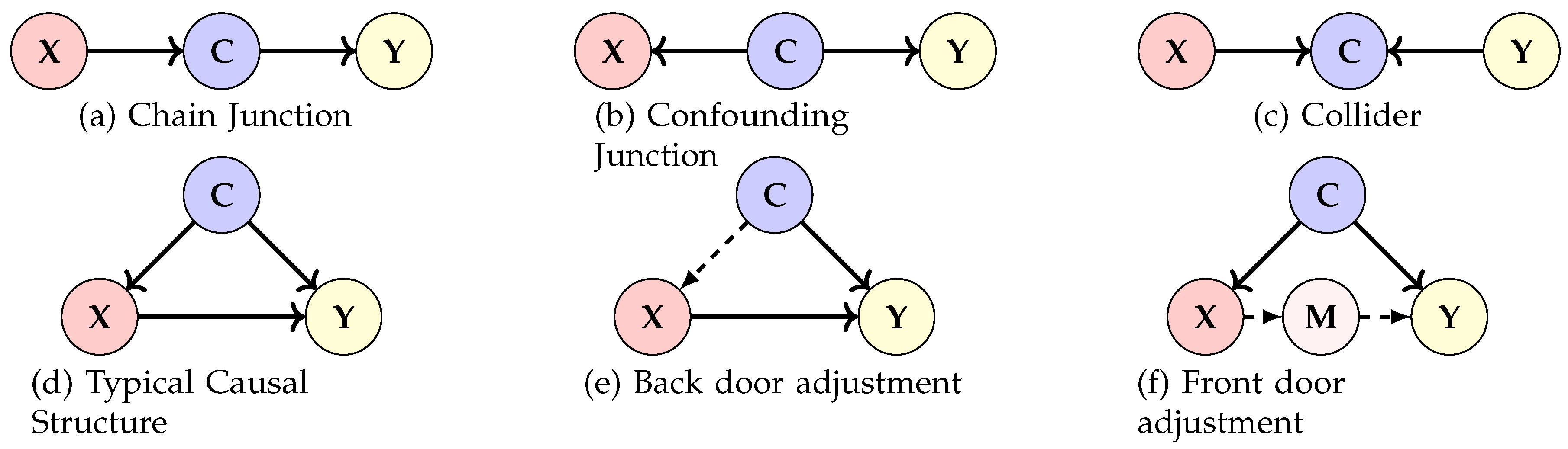

2.2.1. Causal Structure

- A path from X to Y is a sequence of nodes connected by edges, with X as the initial node and Y as the terminal node.

- A conditioning set L is the set of nodes on which we condition. This set may be empty.

- Conditioning on a non-collider on a path blocks that path.

- A collider on a path blocks that path by default. However, conditioning on the collider, or on any of its descendants, unblocks the path.
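These blocking rules are the ingredients of d-separation: X and Y are d-separated given L when every path between them is blocked. The Python sketch below checks this by brute force on a small DAG. The dictionary encoding of the graph (each node mapped to its children) and all function names are illustrative assumptions, and enumerating every path is exponential, so it is only meant for toy graphs such as the junctions in Figure 3.

```python
def descendants(dag, node):
    """All nodes reachable from `node` along directed edges.

    `dag` maps each node to the list of its children (an assumed encoding).
    """
    seen, stack = set(), list(dag.get(node, []))
    while stack:
        n = stack.pop()
        if n not in seen:
            seen.add(n)
            stack.extend(dag.get(n, []))
    return seen


def undirected_paths(dag, x, y):
    """Enumerate simple paths between x and y, ignoring edge direction."""
    nbrs = {}
    for parent, children in dag.items():
        for child in children:
            nbrs.setdefault(parent, set()).add(child)
            nbrs.setdefault(child, set()).add(parent)
    paths, stack = [], [[x]]
    while stack:
        path = stack.pop()
        if path[-1] == y:
            paths.append(path)
            continue
        for n in nbrs.get(path[-1], ()):
            if n not in path:
                stack.append(path + [n])
    return paths


def is_blocked(dag, path, L):
    """Apply the blocking rules to every consecutive triple (a, b, c) on the path."""
    for a, b, c in zip(path, path[1:], path[2:]):
        is_collider = b in dag.get(a, []) and b in dag.get(c, [])  # a -> b <- c
        if is_collider:
            # A collider blocks unless it, or one of its descendants, is in L.
            if b not in L and not (descendants(dag, b) & L):
                return True
        elif b in L:
            # Conditioning on a non-collider (chain or fork node) blocks the path.
            return True
    return False


def d_separated(dag, x, y, L=()):
    """True if every path between x and y is blocked given conditioning set L."""
    L = set(L)
    return all(is_blocked(dag, p, L) for p in undirected_paths(dag, x, y))


if __name__ == "__main__":
    chain = {"X": ["C"], "C": ["Y"]}                  # X -> C -> Y
    fork = {"C": ["X", "Y"]}                          # X <- C -> Y
    collider = {"X": ["C"], "Y": ["C"], "C": ["D"]}   # X -> C <- Y, C -> D
    print(d_separated(chain, "X", "Y"), d_separated(chain, "X", "Y", {"C"}))        # False True
    print(d_separated(fork, "X", "Y"), d_separated(fork, "X", "Y", {"C"}))          # False True
    print(d_separated(collider, "X", "Y"), d_separated(collider, "X", "Y", {"D"}))  # True False
```

The last line illustrates the descendant rule: conditioning on D, a child of the collider C, unblocks the path between X and Y.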

2.2.2. Structural Causal Model

2.3. Causal Inference

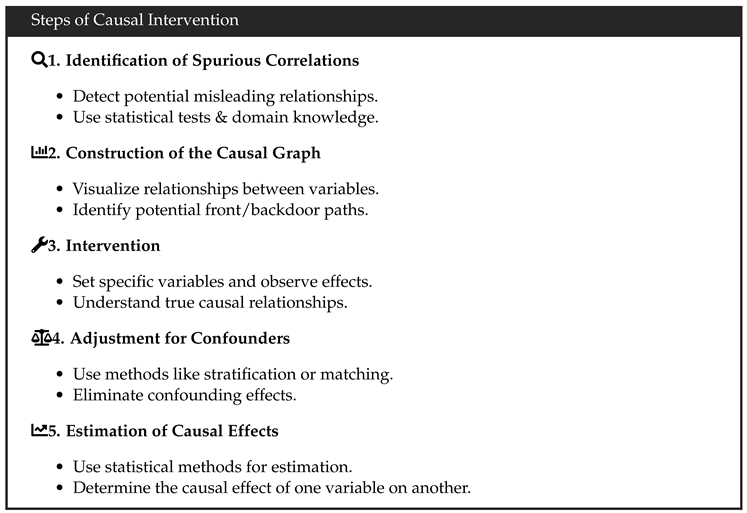

2.3.1. Causal Intervention

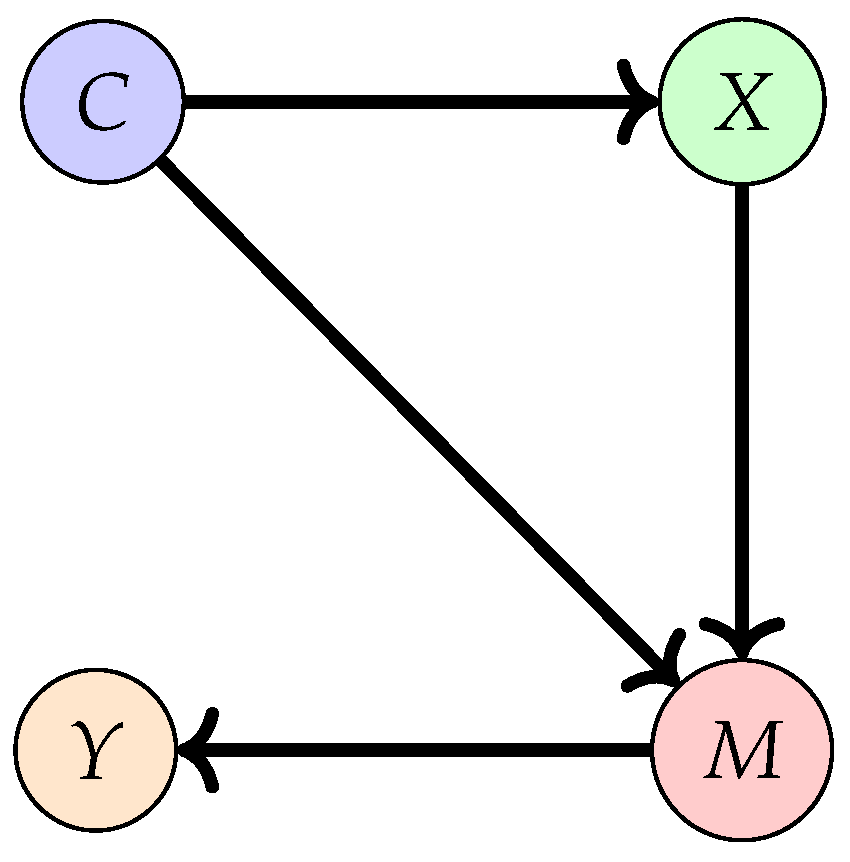

- Figure 3(a) shows a chain junction, X → C → Y, in which X affects Y through the mediator C. In vision tasks, for example, features extracted from an image determine the label. Intervening on C blocks the path from X to Y.

- Figure 3(b) shows a confounding junction (fork), X ← C → Y, in which C influences both X and Y. In vision tasks, such a context can introduce spurious correlations that contaminate the true causal link between images and labels. Intervening on C removes this spurious association.

- Figure 3(c) shows a collider, X → C ← Y, in which both X and Y determine C. In vision tasks, for example, an image may be shaped by both its content and its domain. If C is not observed, X and Y are independent; once C is known, however, X and Y become dependent, so intervening on C is not an effective remedy in this configuration.
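The contrast between the three junctions can be checked numerically. The sketch below assumes linear-Gaussian mechanisms with unit-variance noise (an illustrative choice; Figure 3 does not specify any functional form), simulates each junction, and reports the correlation between X and Y both overall and within a narrow band of C values. Restricting to a narrow band approximates conditioning on C. All names are hypothetical.

```python
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    vx = sum((a - mx) ** 2 for a in xs)
    vy = sum((b - my) ** 2 for b in ys)
    return cov / (vx * vy) ** 0.5


def simulate(kind, n=20000, seed=0):
    """Draw n samples (x, c, y) from an assumed linear-Gaussian junction."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        if kind == "chain":        # X -> C -> Y
            x = rng.gauss(0, 1)
            c = x + rng.gauss(0, 1)
            y = c + rng.gauss(0, 1)
        elif kind == "fork":       # X <- C -> Y
            c = rng.gauss(0, 1)
            x = c + rng.gauss(0, 1)
            y = c + rng.gauss(0, 1)
        elif kind == "collider":   # X -> C <- Y
            x = rng.gauss(0, 1)
            y = rng.gauss(0, 1)
            c = x + y + rng.gauss(0, 1)
        else:
            raise ValueError(f"unknown junction: {kind}")
        rows.append((x, c, y))
    return rows


def corr_xy(rows, c_band=None):
    """Correlation of X and Y, optionally restricted to samples with c in c_band."""
    if c_band is not None:
        lo, hi = c_band
        rows = [r for r in rows if lo <= r[1] <= hi]
    return pearson([r[0] for r in rows], [r[2] for r in rows])


if __name__ == "__main__":
    for kind in ("chain", "fork", "collider"):
        rows = simulate(kind)
        print(kind, round(corr_xy(rows), 2), round(corr_xy(rows, (-0.1, 0.1)), 2))
```

Under these assumed mechanisms the population correlations without conditioning are about 0.58 (chain), 0.5 (fork), and 0 (collider); within the band they are close to 0, 0, and -0.5 respectively. Conditioning removes the dependence in the chain and the fork but creates one in the collider, mirroring the blocking rules of Section 2.2.1.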

Back-Door Adjustment
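As a minimal illustration of back-door adjustment, suppose a binary confounder Z (for instance, a context such as the image background) affects both a binary cause X and a binary label Y, so that Z blocks the only back-door path from X to Y. Then P(Y | do(X=x)) = Σ_z P(Y | X=x, Z=z) P(Z=z), whereas the observational P(Y | X=x) weights each stratum by P(Z=z | X=x) instead. The probability tables below are invented for illustration, and exact fractions are used to avoid rounding noise.

```python
from fractions import Fraction as F

# Hypothetical probability tables (illustrative only).
# Z: confounder (e.g., a context such as the background), X: cause, Y: label.
P_Z = {0: F(1, 2), 1: F(1, 2)}
P_X_GIVEN_Z = {0: {0: F(4, 5), 1: F(1, 5)},   # P(X=x | Z=0)
               1: {0: F(1, 5), 1: F(4, 5)}}   # P(X=x | Z=1)
P_Y1_GIVEN_XZ = {(0, 0): F(1, 10), (0, 1): F(1, 2),
                 (1, 0): F(1, 5), (1, 1): F(3, 5)}


def p_y1_given_x(x):
    """Observational P(Y=1 | X=x): strata weighted by P(z | x)."""
    p_x = sum(P_X_GIVEN_Z[z][x] * P_Z[z] for z in P_Z)
    joint = sum(P_Y1_GIVEN_XZ[(x, z)] * P_X_GIVEN_Z[z][x] * P_Z[z] for z in P_Z)
    return joint / p_x


def p_y1_do_x(x):
    """Back-door adjustment P(Y=1 | do(X=x)): strata weighted by P(z)."""
    return sum(P_Y1_GIVEN_XZ[(x, z)] * P_Z[z] for z in P_Z)


if __name__ == "__main__":
    print("observational:", p_y1_given_x(1) - p_y1_given_x(0))   # 17/50
    print("interventional:", p_y1_do_x(1) - p_y1_do_x(0))        # 1/10
```

With these numbers the observational contrast is 0.52 - 0.18 = 0.34, while the adjusted causal effect is 0.40 - 0.30 = 0.10: most of the apparent effect of X on Y is due to the confounder Z.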

Front-Door Adjustment
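Front-door adjustment applies when the confounder U between X and Y is unobserved but a mediator M intercepts every directed path from X to Y, there is no unblocked back-door path from X to M, and every back-door path from M to Y is blocked by X. The effect is then P(Y | do(X=x)) = Σ_m P(m | x) Σ_x' P(Y | m, x') P(x'). The sketch below builds a hypothetical model with a hidden U, sums U out to obtain the observational joint P(x, m, y), and checks that the front-door estimate computed from that joint alone matches the ground truth computed with access to U. All probabilities and names are invented for illustration.

```python
from fractions import Fraction as F
from itertools import product

# Hypothetical ground-truth model (illustrative numbers):
# U -> X, U -> Y (U hidden), X -> M -> Y.
P_U = {0: F(1, 2), 1: F(1, 2)}
P_X1_GIVEN_U = {0: F(1, 5), 1: F(4, 5)}
P_M1_GIVEN_X = {0: F(1, 10), 1: F(7, 10)}
P_Y1_GIVEN_MU = {(0, 0): F(1, 10), (0, 1): F(1, 2),
                 (1, 0): F(3, 10), (1, 1): F(9, 10)}


def bern(p1, v):
    """P(V=v) for a binary variable with P(V=1) = p1."""
    return p1 if v == 1 else 1 - p1


def observational_joint():
    """P(x, m, y) with the hidden U summed out; this is all an analyst would see."""
    joint = {}
    for u, x, m, y in product((0, 1), repeat=4):
        p = (P_U[u] * bern(P_X1_GIVEN_U[u], x) * bern(P_M1_GIVEN_X[x], m)
             * bern(P_Y1_GIVEN_MU[(m, u)], y))
        joint[(x, m, y)] = joint.get((x, m, y), 0) + p
    return joint


def marginal(joint, x=None, m=None, y=None):
    """Sum joint entries that match the fixed values (None = summed out)."""
    return sum(p for (xx, mm, yy), p in joint.items()
               if (x is None or xx == x) and (m is None or mm == m)
               and (y is None or yy == y))


def front_door(joint, x):
    """P(Y=1 | do(X=x)) = sum_m P(m|x) * sum_x' P(Y=1|m,x') P(x')."""
    total = 0
    for m in (0, 1):
        p_m_given_x = marginal(joint, x=x, m=m) / marginal(joint, x=x)
        inner = sum(marginal(joint, x=xp, m=m, y=1) / marginal(joint, x=xp, m=m)
                    * marginal(joint, x=xp) for xp in (0, 1))
        total += p_m_given_x * inner
    return total


def true_do(x):
    """Ground truth using the hidden U: sum_u P(u) sum_m P(m|x) P(Y=1|m,u)."""
    return sum(P_U[u] * bern(P_M1_GIVEN_X[x], m) * P_Y1_GIVEN_MU[(m, u)]
               for u in (0, 1) for m in (0, 1))


if __name__ == "__main__":
    J = observational_joint()
    for x in (0, 1):
        print(x, front_door(J, x), true_do(x))
```

Here the front-door estimate and the ground truth agree exactly (51/100 for x = 1 and 33/100 for x = 0), whereas the naive observational P(Y=1 | X=1) computed from the same joint is 84/125 = 0.672, inflated by the hidden confounder.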

2.3.2. Counterfactual

3. Causality in Image Classification Tasks

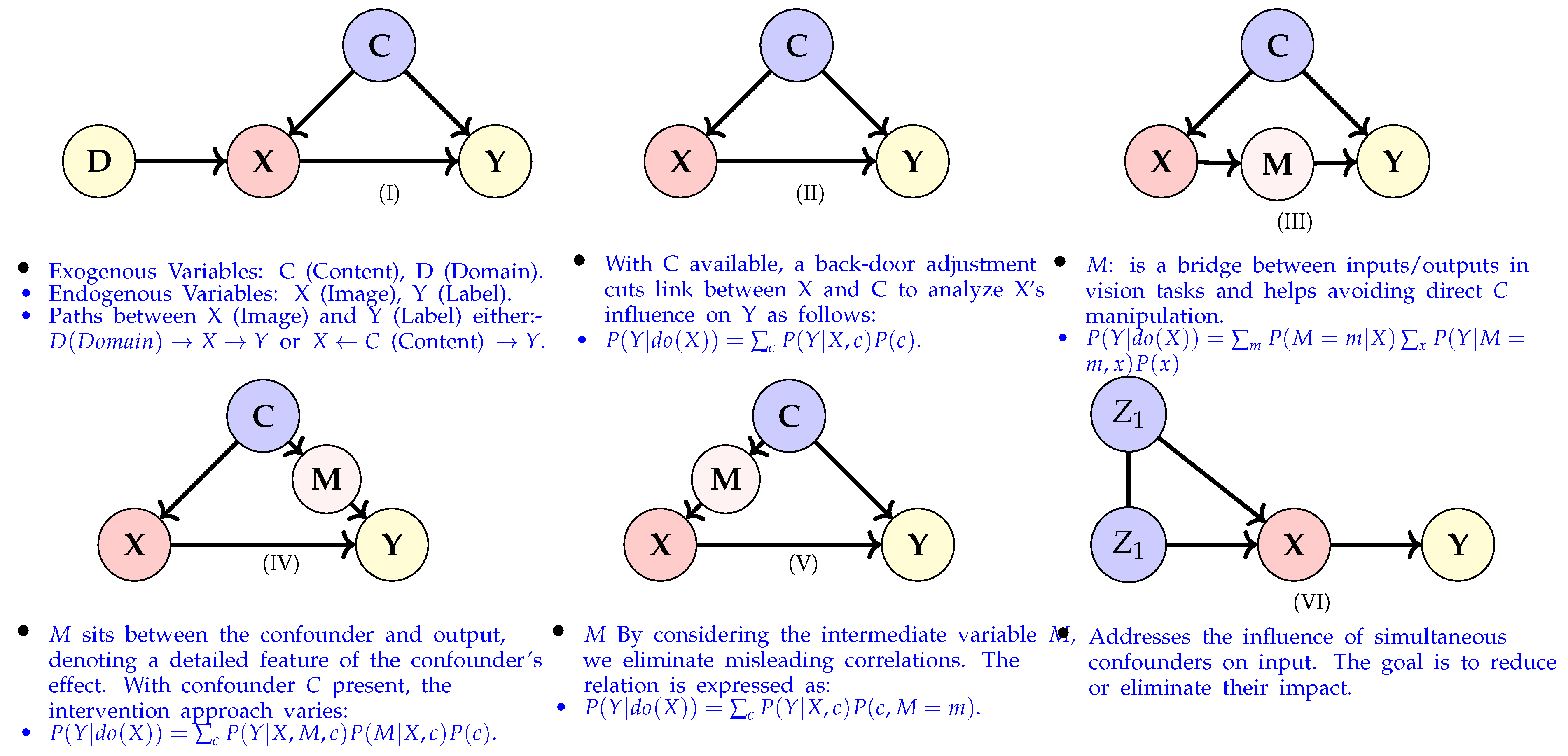

3.1. Classification

| Task | Paper | Year | Problem | Causal Instrument | Confounder | Base Model | Structure |
|---|---|---|---|---|---|---|---|
| CLS | [49] | 2015 | Domain adaptation | Causal inference | Domain | - | I |
| CLS | [50] | 2017 | Selection bias | Causal inference | Context | CRLR algorithm | I |
| CLS | [51] | 2020 | Understanding | Potential outcome model (DE) | Confounded concept | Autoencoder | V |
| CLS | [52] | 2020 | Imbalanced data | Back-door adjustment (TDE) | Optimizer | ResNeXt-50- | III |
| CLS | [53] | 2021 | Catastrophic forgetting | Potential outcome models (TDE) | Old data | Transformer | I |
| CLS | [54] | 2021 | OOD | Causal inference | Context | - | I |
| CLS | [55] | 2021 | Generalization | Causal inference | Domain | ResNet-50 | I |
| CLS | [56] | 2021 | Generalization | Causal inference | - | Autoencoder | I |
| CLS | [57] | 2021 | Domain adaptation | Causal inference | Unobserved feature | CycleGAN | I |
| CLS | [58] | 2022 | Domain generalization | Causal inference | Causal factors | - | I |
| CLS | [59] | 2022 | OOD | Causal inference | Unobserved latent variable | - | I |
| CLS | [60] | 2022 | Domain generalization | Potential outcome models | Domain | - | I |
| CLS | [61] | 2023 | Noisy datasets | Potential outcome models | Unobservable variable | - | I |
| CLS | [62] | 2023 | Catastrophic forgetting | Back-door adjustment | Task identifier | - | I |
| CLS | [63] | 2023 | Domain generalization | Counterfactual | Semantic concept | - | I |

3.1.1. Handling Long-Tailed Datasets

3.1.2. Domain Generalization

3.1.3. Problem Definition

- Single-Source DG: The training data come from a single, homogeneous source domain.

- Multi-Source DG: This setting studies DG across multiple source domains. Most research focuses on the multi-source scenario, in which several diverse but related domains are available for training.

- Causal Intervention Module: This module separates causal factors from non-causal factors through do-interventions, so that the causal factors remain unchanged under non-causal perturbations. The resulting representations are independent of non-causal influences.

- Causal Factorization Module: This module promotes independence among representation dimensions by minimizing the correlations between them. It thereby transforms initially interdependent, noisy representations into independent ones, in line with the properties expected of ideal causal factors.

- Adversarial Mask Module: This module improves the representations' effectiveness for the classification task. An adversarial masker identifies dimensions of differing importance, distinguishing superior dimensions from inferior ones so that the former contribute more to the prediction. As a result, the representations become more causally informative.

- Recognizing the Confounder and Invariance Condition: The authors treat the object variable O as a confounder that influences both the features X and the class labels Y. They aim to find representations that are invariant across domains while remaining informative about O.

- Introducing the Matching Function: The authors propose a matching function that determines whether a pair of inputs from different domains corresponds to the same object. This function enforces consistent representations for inputs that come from different domains but share the same object.

- Defining the Invariance Condition: The authors stipulate a condition on the average pairwise distance between representations of the same object from different domains, which keeps such representations close to one another.

- Learning Invariant Representations: To learn invariant representations, the authors introduce the “perfect-match” invariant, which combines the classification loss with the invariance condition. This objective encourages representations that are invariant to domain shifts while preserving object-related information.

- Introducing the Causal Semantic Generative (CSG) model to represent the causal relationships among the semantic factor (s), the variation factor (v), and the observed data (x, y).

- Disentangling semantic and variation factors through the latent variables s and v, so that the causal relations are modeled accurately.

- Addressing confounding by attributing the x-y relationship to a latent factor z and accounting for the interrelation between the semantic and variation factors.

- Domain-Conditioned Supervised Learning: The model optimizes cross-entropy loss while conditioning on the domain. This strategy captures the correlation between images and labels across diverse domains.

- Causal Effect Learning: Using the front-door criterion, the authors measure and strengthen causal effects. A knowledge queue stores historical features and labels, which helps map new images onto previously acquired knowledge.

- Contrastive Similarity Learning: Contrastive similarity is used to cluster features that share the same category. This quantifies the similarity between features and helps separate features of different categories.
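Two of the objectives described above reduce to simple formulas. The plain-Python sketch below shows (i) a decorrelation penalty in the spirit of the Causal Factorization Module, taken here as the sum of squared off-diagonal Pearson correlations between representation dimensions over a batch, and (ii) the average pairwise distance used in the invariance condition, computed over pairs that share an object but come from different domains. Both are simplified stand-ins: the exact losses, normalisations, and weightings in the original papers may differ, and the function names are hypothetical.

```python
from itertools import combinations
from math import sqrt


def decorrelation_penalty(feats):
    """Sum of squared off-diagonal Pearson correlations between feature dimensions.

    feats: a batch of N feature vectors, each of length D (N >= 2).
    Returns 0 when the dimensions are mutually uncorrelated over the batch.
    """
    n, d = len(feats), len(feats[0])
    std_cols = []
    for j in range(d):
        col = [f[j] for f in feats]
        mu = sum(col) / n
        sd = sqrt(sum((v - mu) ** 2 for v in col) / n) or 1.0  # guard constant dims
        std_cols.append([(v - mu) / sd for v in col])
    penalty = 0.0
    for a, b in combinations(range(d), 2):
        r = sum(p * q for p, q in zip(std_cols[a], std_cols[b])) / n
        penalty += 2 * r * r  # correlation matrix is symmetric: count (a, b) and (b, a)
    return penalty


def match_distance(samples):
    """Average squared L2 distance between representations of the same object
    observed in different domains.

    samples: list of (domain, object_id, vector) triples.
    """
    total, count = 0.0, 0
    for (d1, o1, z1), (d2, o2, z2) in combinations(samples, 2):
        if o1 == o2 and d1 != d2:
            total += sum((p - q) ** 2 for p, q in zip(z1, z2))
            count += 1
    return total / count if count else 0.0
```

In training, terms like these would typically be added to the classification loss with a weighting coefficient; the value of that coefficient is a hyperparameter not specified here.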

3.2. Practical: Problem Formulation

3.2.1. De-Confounded Training

4. Conclusion

References

- Tamsekar, P.; Deshmukh, N.; Bhalchandra, P.; Kulkarni, G.; Hambarde, K.; Husen, S. Comparative analysis of supervised machine learning algorithms for GIS-based crop selection prediction model. Computing and Network Sustainability: Proceedings of IRSCNS 2018. Springer, 2019, pp. 309–314. [CrossRef]

- Kulkarni, G.; Nilesh, D.; Parag, B.; Wasnik, P.; Hambarde, K.; Tamsekar, P.; Kamble, V.; Bahuguna, V. Effective use of GIS based spatial pattern technology for urban greenery space planning: a case study for Ganesh Nagar area of Nanded city. Proceedings of 2nd International Conference on Intelligent Computing and Applications: ICICA 2015. Springer, 2017, pp. 123–132. [CrossRef]

- Hambarde, K.A.; Proenca, H. Information Retrieval: Recent Advances and Beyond. arXiv preprint arXiv:2301.08801 2023. [CrossRef]

- Hambarde, K.; Silahtaroğlu, G.; Khamitkar, S.; Bhalchandra, P.; Shaikh, H.; Kulkarni, G.; Tamsekar, P.; Samale, P. Data analytics implemented over E-commerce data to evaluate performance of supervised learning approaches in relation to customer behavior. Soft Computing for Problem Solving: SocProS 2018, Volume 1. Springer, 2020, pp. 285–293. [CrossRef]

- Husen, S.; Khamitkar, S.; Bhalchandra, P.; Tamsekar, P.; Kulkarni, G.; Hambarde, K. Prediction of artificial water recharge sites using fusion of RS, GIS, AHP and GA Technologies. Advances in Data Science and Management: Proceedings of ICDSM 2019. Springer, 2020, pp. 387–394. [CrossRef]

- Tamsekar, P.; Deshmukh, N.; Bhalchandra, P.; Kulkarni, G.; Kamble, V.; Hambarde, K.; Bahuguna, V. Architectural outline of GIS-based decision support system for crop selection. Smart Computing and Informatics: Proceedings of the First International Conference on SCI 2016, Volume 1. Springer, 2018, pp. 155–162.

- Tamsekar, P.; Deshmukh, N.; Bhalchandra, P.; Kulkarni, G.; Hambarde, K.; Wasnik, P.; Husen, S.; Kamble, V. Architectural outline of decision support system for crop selection using GIS and DM techniques. Computing and Network Sustainability: Proceedings of IRSCNS 2016. Springer, 2017, pp. 101–108.

- Hambarde, K.; Proença, H. WSRR: Weighted Rank-Relevance Sampling for Dense Text Retrieval. International Conference on Information and Communication Technology for Intelligent Systems. Springer, 2023, pp. 239–248. [CrossRef]

- Doguc, O.; Silahtaroglu, G.; Canbolat, Z.N.; Hambarde, K.; Gokay, H.; Yılmaz, M. Diagnosis of Covid-19 Via Patient Breath Data Using Artificial Intelligence. arXiv preprint arXiv:2302.10180 2023. [CrossRef]

- Hambarde, K.; Silahtaroğlu, G.; Khamitkar, S.; Bhalchandra, P.; Shaikh, H.; Tamsekar, P.; Kulkarni, G. Augmentation of Behavioral Analysis Framework for E-Commerce Customers Using MLP-Based ANN. Advances in Data Science and Management: Proceedings of ICDSM 2019. Springer, 2020, pp. 45–50. [CrossRef]

- Proenca, H.; Hambarde, K. Image-Based Human Re-Identification: Which Covariates are Actually (the Most) Important? Available at SSRN 4618562 2023. [CrossRef]

- Shalev-Shwartz, S.; Shammah, S.; Shashua, A. On a formal model of safe and scalable self-driving cars. arXiv preprint arXiv:1708.06374 2017.

- Anderson, J.M.; Nidhi, K.; Stanley, K.D.; Sorensen, P.; Samaras, C.; Oluwatola, O.A. Autonomous vehicle technology: A guide for policymakers; RAND Corporation, 2014.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned aerial vehicles (UAVs): A survey on civil applications and key research challenges. IEEE Access 2019, 7, 48572–48634. [CrossRef]

- Kim, J.; Kim, S.; Ju, C.; Son, H.I. Unmanned aerial vehicles in agriculture: A review of perspective of platform, control, and applications. IEEE Access 2019, 7, 105100–105115. [CrossRef]

- Javaid, M.; Haleem, A.; Vaish, A.; Vaishya, R.; Iyengar, K.P. Robotics applications in COVID-19: A review. Journal of Industrial Integration and Management 2020, 5, 441–451. [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Advances in neural information processing systems 2017, 30.

- Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-up and top-down attention for image captioning and visual question answering. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 6077–6086.

- Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A survey on deep transfer learning. Artificial Neural Networks and Machine Learning–ICANN 2018: 27th International Conference on Artificial Neural Networks, Rhodes, Greece, October 4-7, 2018, Proceedings, Part III 27. Springer, 2018, pp. 270–279. [CrossRef]

- Kaya, A.; Keceli, A.S.; Catal, C.; Yalic, H.Y.; Temucin, H.; Tekinerdogan, B. Analysis of transfer learning for deep neural network based plant classification models. Computers and electronics in agriculture 2019, 158, 20–29. [CrossRef]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 2018. [CrossRef]

- Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. arXiv preprint arXiv:2301.12597 2023. [CrossRef]

- Blyth, C.R. On Simpson’s paradox and the sure-thing principle. Journal of the American Statistical Association 1972, 67, 364–366. [CrossRef]

- Borsboom, D.; Kievit, R.A.; Cervone, D.; Hood, S.B. The two disciplines of scientific psychology, or: The disunity of psychology as a working hypothesis. Dynamic process methodology in the social and developmental sciences 2009, pp. 67–97.

- Malik, N.; Singh, P.V. Deep learning in computer vision: Methods, interpretation, causation, and fairness. In Operations Research & Management Science in the Age of Analytics; INFORMS, 2019; pp. 73–100. [CrossRef]

- Sun, Q.; Zhao, C.; Tang, Y.; Qian, F. A survey on unsupervised domain adaptation in computer vision tasks. Scientia Sinica Technologica 2022, 52, 26–54. [CrossRef]

- Zhou, K.; Liu, Z.; Qiao, Y.; Xiang, T.; Loy, C.C. Domain generalization: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence 2022. [CrossRef]

- Heidel, R.E.; et al. Causality in statistical power: Isomorphic properties of measurement, research design, effect size, and sample size. Scientifica 2016, 2016. [CrossRef]

- Dawid, A.P. Statistical causality from a decision-theoretic perspective. Annual Review of Statistics and Its Application 2015, 2, 273–303. [CrossRef]

- Heckman, J.J.; Pinto, R. Causality and econometrics. Technical report, National Bureau of Economic Research, 2022.

- Geweke, J. Inference and causality in economic time series models. Handbook of econometrics 1984, 2, 1101–1144. [CrossRef]

- Kundi, M. Causality and the interpretation of epidemiologic evidence. Environmental Health Perspectives 2006, 114, 969–974. [CrossRef]

- Ohlsson, H.; Kendler, K.S. Applying causal inference methods in psychiatric epidemiology: A review. JAMA psychiatry 2020, 77, 637–644. [CrossRef]

- Hair Jr, J.F.; Sarstedt, M. Data, measurement, and causal inferences in machine learning: opportunities and challenges for marketing. Journal of Marketing Theory and Practice 2021, 29, 65–77. [CrossRef]

- Prosperi, M.; Guo, Y.; Sperrin, M.; Koopman, J.S.; Min, J.S.; He, X.; Rich, S.; Wang, M.; Buchan, I.E.; Bian, J. Causal inference and counterfactual prediction in machine learning for actionable healthcare. Nature Machine Intelligence 2020, 2, 369–375. [CrossRef]

- Chen, H.; Du, K.; Yang, X.; Li, C. A Review and Roadmap of Deep Learning Causal Discovery in Different Variable Paradigms. arXiv preprint arXiv:2209.06367 2022.

- Kaddour, J.; Lynch, A.; Liu, Q.; Kusner, M.J.; Silva, R. Causal machine learning: A survey and open problems. arXiv preprint arXiv:2206.15475 2022.

- Gao, C.; Zheng, Y.; Wang, W.; Feng, F.; He, X.; Li, Y. Causal inference in recommender systems: A survey and future directions. arXiv preprint arXiv:2208.12397 2022. [CrossRef]

- Li, Z.; Zhu, Z.; Guo, X.; Zheng, S.; Guo, Z.; Qiang, S.; Zhao, Y. A Survey of Deep Causal Models and Their Industrial Applications. 2023. [CrossRef]

- Deng, Z.; Zheng, X.; Tian, H.; Zeng, D.D. Deep causal learning: representation, discovery and inference. arXiv preprint arXiv:2211.03374 2022.

- Liu, Y.; Wei, Y.S.; Yan, H.; Li, G.B.; Lin, L. Causal reasoning meets visual representation learning: A prospective study. Machine Intelligence Research 2022, 19, 485–511. [CrossRef]

- Zhang, K.; Sun, Q.; Zhao, C.; Tang, Y. Causal reasoning in typical computer vision tasks. [CrossRef]

- Pearl, J. Causality; Cambridge University Press, 2009.

- Pearl, J.; Mackenzie, D. The Book of Why: The New Science of Cause and Effect; Basic Books, 2018.

- Pearl, J. Bayesian networks. 2011.

- Geiger, D.; Verma, T.; Pearl, J. Identifying independence in Bayesian networks. Networks 1990, 20, 507–534. [CrossRef]

- Rebane, G.; Pearl, J. The recovery of causal poly-trees from statistical data. arXiv preprint arXiv:1304.2736 2013.

- Castro, D.C.; Walker, I.; Glocker, B. Causality matters in medical imaging. Nature Communications 2020, 11, 3673. [CrossRef]

- Zhang, K.; Gong, M.; Schölkopf, B. Multi-source domain adaptation: A causal view. Proceedings of the AAAI Conference on Artificial Intelligence, 2015, Vol. 29. [CrossRef]

- Shen, Z.; Cui, P.; Kuang, K.; Li, B.; Chen, P. On image classification: Correlation vs causality. arXiv preprint arXiv:1708.06656 2017.

- Goyal, Y.; Feder, A.; Shalit, U.; Kim, B. Explaining classifiers with causal concept effect (CaCE). arXiv preprint arXiv:1907.07165 2019.

- Tang, K.; Huang, J.; Zhang, H. Long-tailed classification by keeping the good and removing the bad momentum causal effect. Advances in Neural Information Processing Systems 2020, 33, 1513–1524.

- Hu, X.; Tang, K.; Miao, C.; Hua, X.S.; Zhang, H. Distilling causal effect of data in class-incremental learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 3957–3966.

- Liu, C.; Sun, X.; Wang, J.; Tang, H.; Li, T.; Qin, T.; Chen, W.; Liu, T.Y. Learning causal semantic representation for out-of-distribution prediction. Advances in Neural Information Processing Systems 2021, 34, 6155–6170.

- Mahajan, D.; Tople, S.; Sharma, A. Domain generalization using causal matching. International Conference on Machine Learning. PMLR, 2021, pp. 7313–7324.

- Sun, X.; Wu, B.; Zheng, X.; Liu, C.; Chen, W.; Qin, T.; Liu, T.Y. Recovering latent causal factor for generalization to distributional shifts. Advances in Neural Information Processing Systems 2021, 34, 16846–16859.

- Yue, Z.; Sun, Q.; Hua, X.S.; Zhang, H. Transporting causal mechanisms for unsupervised domain adaptation. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 8599–8608.

- Lv, F.; Liang, J.; Li, S.; Zang, B.; Liu, C.H.; Wang, Z.; Liu, D. Causality inspired representation learning for domain generalization. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 8046–8056.

- Wang, X.; Saxon, M.; Li, J.; Zhang, H.; Zhang, K.; Wang, W.Y. Causal balancing for domain generalization. arXiv preprint arXiv:2206.05263 2022.

- Wang, Y.; Liu, F.; Chen, Z.; Wu, Y.C.; Hao, J.; Chen, G.; Heng, P.A. Contrastive-ACE: Domain Generalization Through Alignment of Causal Mechanisms. IEEE Transactions on Image Processing 2022, 32, 235–250. [CrossRef]

- Yang, C.H.H.; Hung, I.T.; Liu, Y.C.; Chen, P.Y. Treatment learning causal transformer for noisy image classification. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023, pp. 6139–6150.

- Qiu, B.; Li, H.; Wen, H.; Qiu, H.; Wang, L.; Meng, F.; Wu, Q.; Pan, L. CafeBoost: Causal Feature Boost To Eliminate Task-Induced Bias for Class Incremental Learning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 16016–16025.

- Chen, J.; Gao, Z.; Wu, X.; Luo, J. Meta-causal Learning for Single Domain Generalization. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 7683–7692. [CrossRef]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. International journal of computer vision 2015, 115, 211–252.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13. Springer, 2014, pp. 740–755. [CrossRef]

- Reed, W.J. The Pareto, Zipf and other power laws. Economics letters 2001, 74, 15–19. [CrossRef]

- Gupta, A.; Dollar, P.; Girshick, R. LVIS: A dataset for large vocabulary instance segmentation. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 5356–5364.

- Liu, Z.; Miao, Z.; Zhan, X.; Wang, J.; Gong, B.; Yu, S.X. Large-scale long-tailed recognition in an open world. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 2537–2546. [CrossRef]

- Zhou, B.; Cui, Q.; Wei, X.S.; Chen, Z.M. BBN: Bilateral-branch network with cumulative learning for long-tailed visual recognition. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 9719–9728. [CrossRef]

- Kang, B.; Xie, S.; Rohrbach, M.; Yan, Z.; Gordo, A.; Feng, J.; Kalantidis, Y. Decoupling representation and classifier for long-tailed recognition. arXiv preprint arXiv:1910.09217 2019. [CrossRef]

- Miao, Q.; Yuan, J.; Kuang, K. Domain Generalization via Contrastive Causal Learning. arXiv preprint arXiv:2210.02655 2022. [CrossRef]

- Chen, C.F.R.; Fan, Q.; Panda, R. CrossViT: Cross-attention multi-scale vision transformer for image classification. Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 357–366. [CrossRef]

- Li, Y.; Wu, C.Y.; Fan, H.; Mangalam, K.; Xiong, B.; Malik, J.; Feichtenhofer, C. MViTv2: Improved multiscale vision transformers for classification and detection. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 4804–4814. [CrossRef]

- Shang, X.; Song, M.; Yu, C. Hyperspectral image classification with background. IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium. IEEE, 2019, pp. 2714–2717. [CrossRef]

- Pearl, J.; Glymour, M.; Jewell, N.P. Causal inference in statistics: A primer; John Wiley & Sons, 2016.

- Pearl, J. Direct and indirect effects. In Probabilistic and causal inference: the works of Judea Pearl; 2022; pp. 373–392.

- VanderWeele, T.J. A three-way decomposition of a total effect into direct, indirect, and interactive effects. Epidemiology (Cambridge, Mass.) 2013, 24, 224. [CrossRef]

- Pearl, J. Causal diagrams for empirical research. Biometrika 1995, 82, 669–688. [CrossRef]

| Level | Symbol | Activity | Typical Question | Example | Machine Learning |
|---|---|---|---|---|---|
| Association |  | Seeing | What is the probability of Y given X? | Dog detection given grass in the image. | Supervised / Unsupervised Learning |
| Intervention |  | Doing | What if I do X? | Dog detection given background removal. | Reinforcement Learning |
| Counterfactual |  | Imagining | What if I had acted differently? | Dog detection had the background not been removed. |  |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).