Submitted:

04 January 2024

Posted:

05 January 2024


Abstract

Keywords:

1. Introduction

2. Related Works

2.1. Data Augmentation Approaches in TTPs Classification

2.2. Data Augmentation using ChatGPT

2.3. Model Algorithms for TTPs Classification

2.4. Fine-Tuning Large Language Models

3. Methodology

3.1. Mathematical Definition

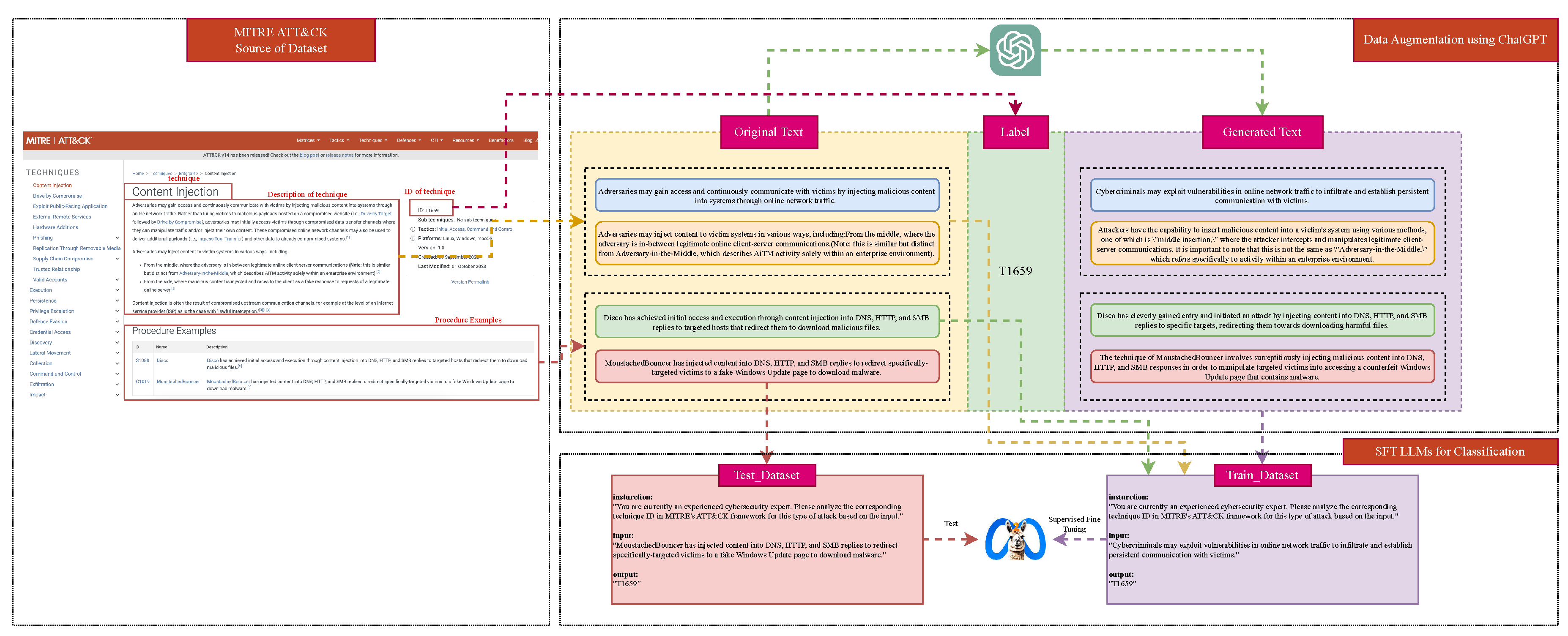

3.2. Data Augmentation using ChatGPT

Algorithm 1. Data Augmentation using ChatGPT

3.3. Instruction Supervised Fine-Tuning Large Language Models for TTPs Classification

4. Experiments

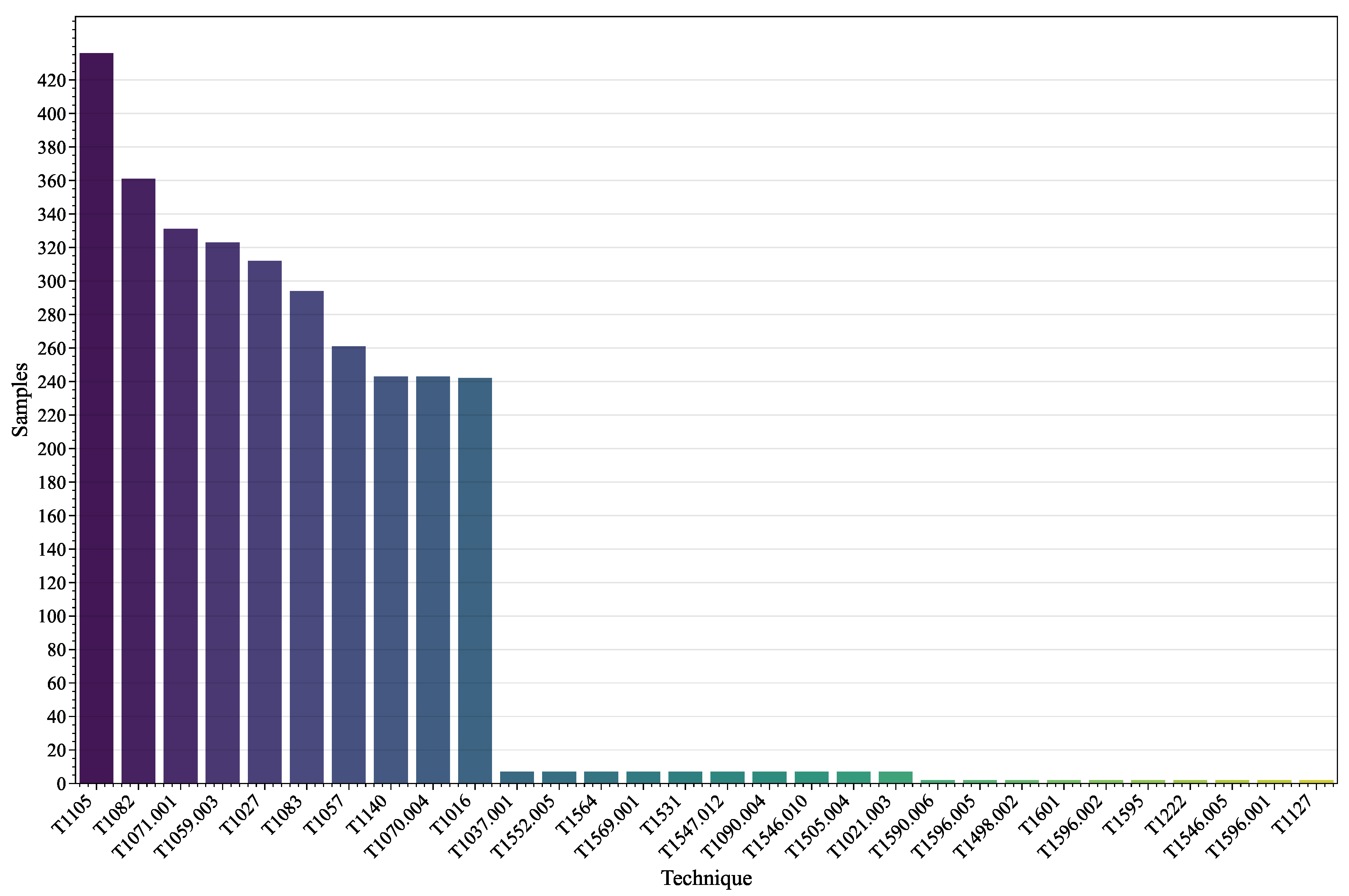

4.1. Experimental Setup

4.2. Experimental Design

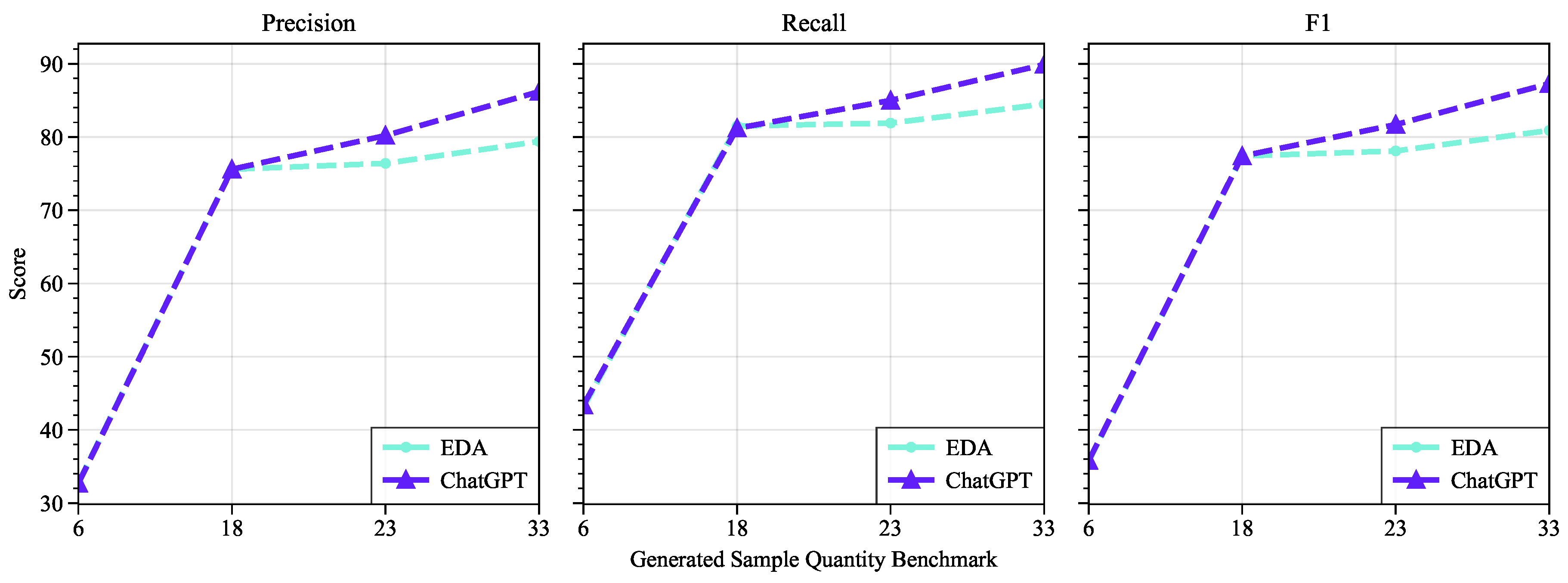

- Demonstrating the Effectiveness of Data Augmentation: In Experiment 1, a traditional data augmentation method, EDA [12], is compared with the ChatGPT-based data augmentation method proposed in this study. The data produced by each method is used for Instruction Supervised Fine-Tuning of the LLaMA2-7B model, and both fine-tuned models are evaluated on the test set. SMOTE [11] is not used because it operates on vector representations of the inputs, which do not match the text input expected by large language models. Previous studies have also shown EDA to outperform SMOTE; details are given in the Related Works section.

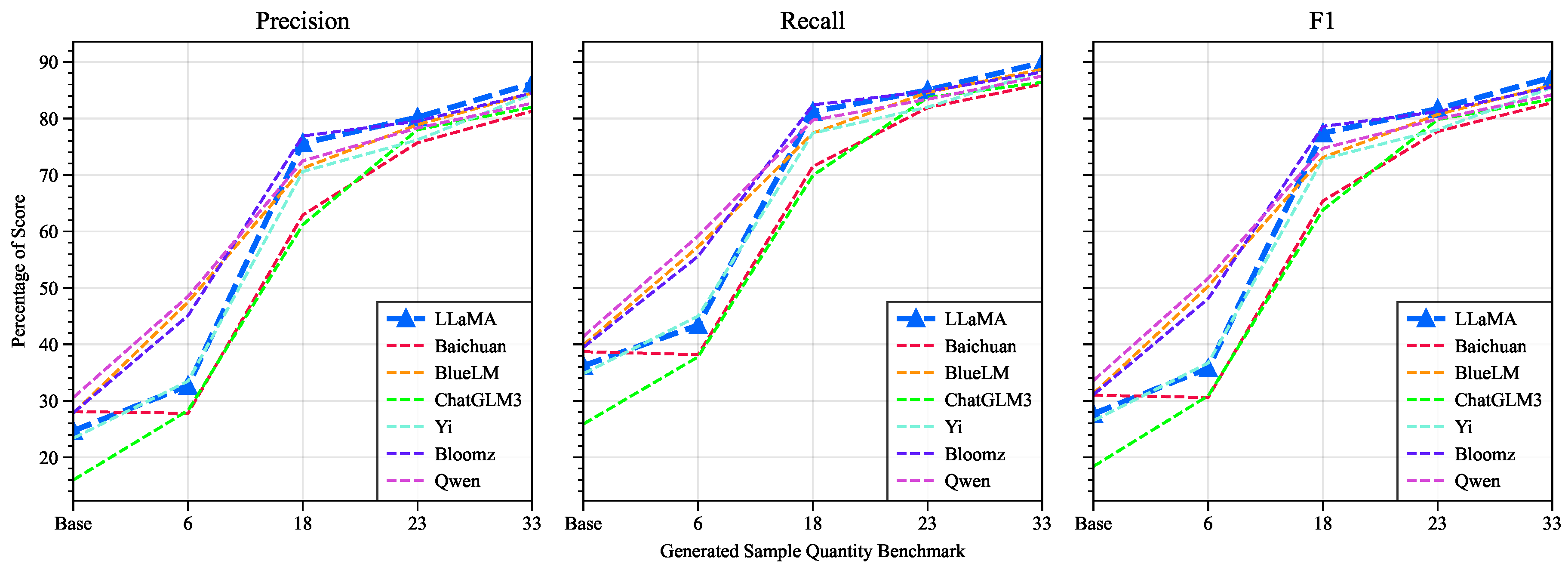

- Demonstrating the Effectiveness of Instruction Supervised Fine-Tuning LLMs in Few-Shot Learning: In Experiment 2, the baseline models Baichuan-7B [32], BlueLM-7B [33], ChatGLM3-6B [34,35], Yi-6B (https://huggingface.co/01-ai/Yi-6B), Bloomz-7B [36], and Qwen-7B [37] are compared with LLaMA2 to justify the choice of LLaMA2 for the problem addressed in this study. In addition, the baseline models ACRCNN and RENet from existing research are included to demonstrate how well Instruction Supervised Fine-Tuning of LLMs handles TTPs classification in a few-shot setting.

- Ablation Experiment: Experiment 3 validates the effectiveness of combining data augmentation with Instruction Supervised Fine-Tuning of LLMs for TTPs classification. The LLM is fine-tuned using only the 14,298 base samples as the training set, and its classification performance is evaluated. In addition, ACRCNN and RENet are trained on the augmented dataset corresponding to the benchmark of 33 (ChatGPT-33), and their classification performance is evaluated.

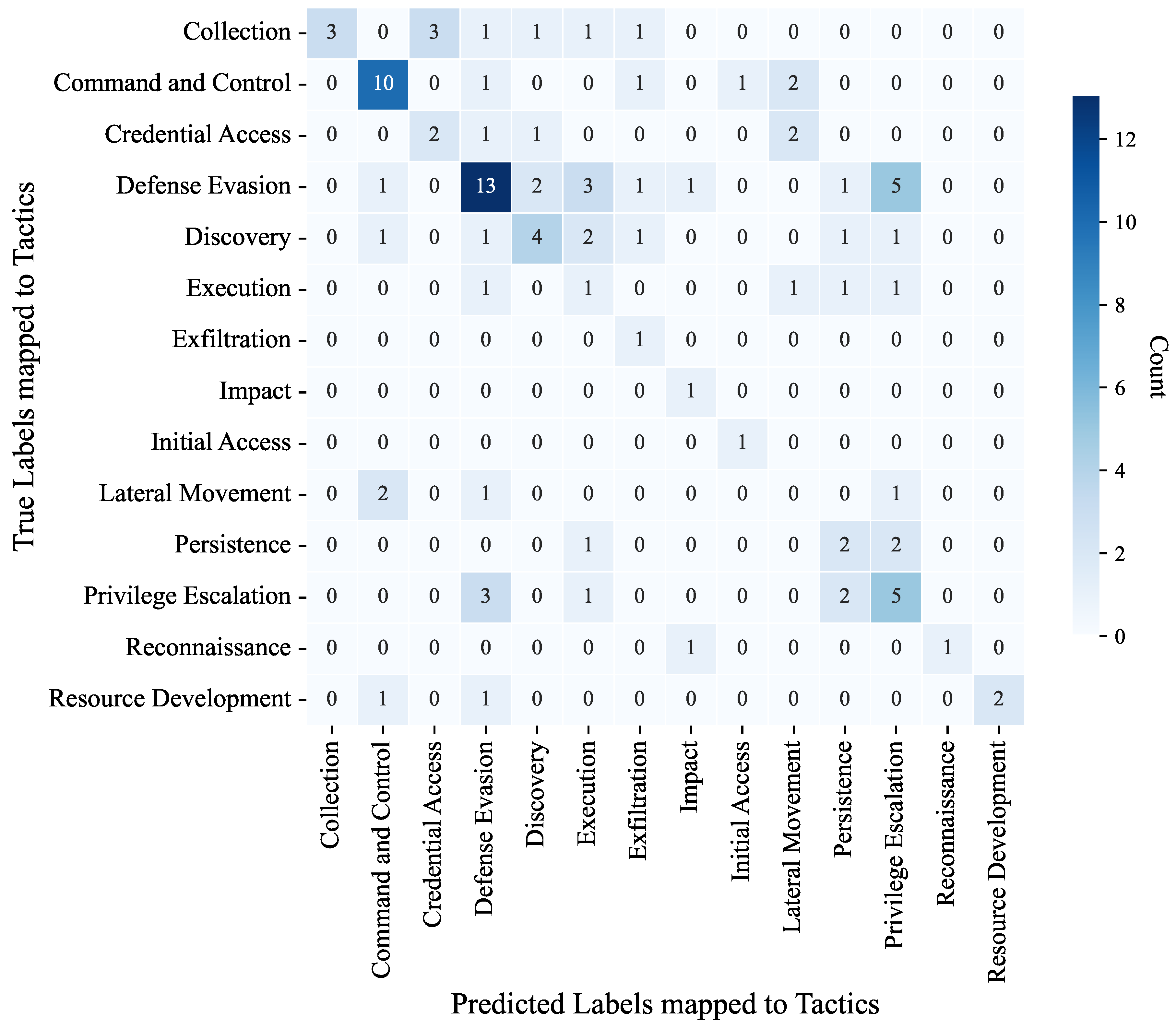

- Error Analysis: Experiment 4 uses the mapping between techniques and tactics in ATT&CK to analyze technique categories with low recall, where the model tends to confuse one technique with another during prediction, and examines the causes of these errors and possible countermeasures.
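Experiment 1 uses EDA [12] as the traditional baseline. EDA combines four word-level operations: synonym replacement, random insertion, random swap, and random deletion. The minimal sketch below implements only the two operations that need no thesaurus (random swap and random deletion); synonym replacement and random insertion require a lexical resource such as WordNet and are omitted. The function names, the alternating schedule, and the default `alpha` are illustrative assumptions, not the configuration used in Experiment 1.

```python
import random


def random_swap(words: list[str], n_swaps: int, rng: random.Random) -> list[str]:
    """EDA random swap: exchange two randomly chosen positions, n_swaps times."""
    out = list(words)
    if len(out) < 2:
        return out
    for _ in range(n_swaps):
        i, j = rng.sample(range(len(out)), 2)  # two distinct positions
        out[i], out[j] = out[j], out[i]
    return out


def random_deletion(words: list[str], p: float, rng: random.Random) -> list[str]:
    """EDA random deletion: drop each word independently with probability p."""
    if len(words) <= 1:
        return list(words)
    kept = [w for w in words if rng.random() > p]
    # As in EDA, fall back to one random word if everything was deleted.
    return kept if kept else [rng.choice(words)]


def eda_augment(sentence: str, n_aug: int = 4, alpha: float = 0.1, seed: int = 0) -> list[str]:
    """Produce n_aug variants, alternating swap and deletion (assumed schedule)."""
    rng = random.Random(seed)
    words = sentence.split()
    n_changes = max(1, int(alpha * len(words)))
    augmented = []
    for k in range(n_aug):
        if k % 2 == 0:
            new_words = random_swap(words, n_changes, rng)
        else:
            new_words = random_deletion(words, alpha, rng)
        augmented.append(" ".join(new_words))
    return augmented


example = ("Disco has achieved initial access and execution through content "
           "injection into DNS, HTTP, and SMB replies")
for variant in eda_augment(example):
    print(variant)
```

Because these operations only reorder or drop existing words, they cannot introduce the paraphrased vocabulary seen in ChatGPT-generated samples, which is one plausible reason the two methods diverge as the augmentation benchmark grows.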

4.3. Evaluation Metrics
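The result tables report precision (P), recall (R), and F1 as percentages, but the averaging scheme is not stated in this section. The sketch below assumes macro averaging over the gold technique labels: per-class scores are computed from true-positive, false-positive, and false-negative counts and then averaged with equal weight per class. The function name and the choice to average only over gold labels (so a hallucinated label adds a false positive but is not itself averaged) are assumptions.

```python
from collections import Counter


def macro_prf(y_true: list[str], y_pred: list[str]) -> tuple[float, float, float]:
    """Macro-averaged precision, recall and F1 over the gold labels (assumed scheme)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for gold, pred in zip(y_true, y_pred):
        if gold == pred:
            tp[gold] += 1
        else:
            fp[pred] += 1  # the predicted label gains a false positive
            fn[gold] += 1  # the gold label gains a false negative
    labels = sorted(set(y_true))  # average only over labels present in the gold data
    precisions, recalls, f1s = [], [], []
    for label in labels:
        p_den = tp[label] + fp[label]
        r_den = tp[label] + fn[label]
        p = tp[label] / p_den if p_den else 0.0
        r = tp[label] / r_den if r_den else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)
    n = len(labels)
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


# Toy example with two confusions: T1055 -> T1055.001 and T1027 -> T1036.
y_true = ["T1055", "T1055", "T1036", "T1027"]
y_pred = ["T1055", "T1055.001", "T1036", "T1036"]
print(macro_prf(y_true, y_pred))
```

Under macro averaging, F1 is the mean of the per-class F1 values, so it need not equal 2PR/(P+R) computed from the averaged P and R: in the toy example, P = R = 0.5 while macro F1 = 4/9.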

5. Results and Discussion

5.1. Experiment 1

5.2. Experiment 2

5.3. Experiment 3

5.4. Experiment 4

6. Conclusion & Future Work

- Expanding Data Sources: Broaden the range of data sources while ensuring data quality, and increase the quantity and diversity of base training samples as far as possible.

- Mapping Categories to Numeric Values: Map each category to a short string, for example mapping "T1659" to the number "1" and all 625 technique categories to the numbers 1-625. Shorter target labels may reduce the generation burden on the large language model and could improve classification performance.

- Incorporating Tactics and Techniques: Extend the study to cover both tactics and techniques, creating an automated end-to-end pipeline for classifying TTPs from unstructured text.

- Introducing Multimodal Knowledge: Current research focuses primarily on identifying and extracting TTPs from unstructured text. However, intelligence sources span multiple modalities, including text, images, audio, and video. Further research is needed to extend intelligence recognition and extraction to these modalities using Multimodal Large Language Models (MLLMs) [39,40,41,42].
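The category-to-number mapping proposed in the list above can be sketched as a pair of lookup tables: one rewrites training targets, the other decodes generated answers back to ATT&CK IDs. Assigning numbers in first-seen order (so "T1659" becomes "1" only when it is listed first) and the function names are illustrative assumptions; any fixed, documented ordering would serve.

```python
from typing import Dict, Iterable, Optional, Tuple


def build_label_maps(technique_ids: Iterable[str]) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Assign each distinct technique ID a numeric string "1", "2", ... in first-seen order."""
    to_short: Dict[str, str] = {}
    for tid in technique_ids:
        if tid not in to_short:
            to_short[tid] = str(len(to_short) + 1)
    # Inverse map, used to decode the model's generated answer.
    to_long = {short: tid for tid, short in to_short.items()}
    return to_short, to_long


def decode_prediction(generated: str, to_long: Dict[str, str]) -> Optional[str]:
    """Return the ATT&CK ID for a generated short label, or None if it is not a known label."""
    return to_long.get(generated.strip())


to_short, to_long = build_label_maps(["T1659", "T1564", "T1564.001"])
print(to_short["T1659"])                # -> 1
print(decode_prediction("3", to_long))  # -> T1564.001
```

With all 625 technique categories, the short labels run from "1" to "625", at most three characters, compared with IDs such as "T1564.001". Returning `None` for unknown outputs makes invalid generations easy to count separately from misclassifications.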

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A.

| Tactic / Interval | Total | <=6 | 7-17 | 18-22 | 23-32 | >=33 |
|---|---|---|---|---|---|---|
| Reconnaissance | 44 | 38 | 5 | 1 | 0 | 0 |
| Resource Development | 45 | 30 | 9 | 2 | 2 | 2 |
| Initial Access | 21 | 8 | 5 | 1 | 1 | 6 |
| Execution | 36 | 15 | 5 | 1 | 2 | 13 |
| Persistence | 115 | 74 | 28 | 3 | 2 | 8 |
| Privilege Escalation | 104 | 60 | 27 | 5 | 1 | 11 |
| Defense Evasion | 191 | 111 | 40 | 6 | 8 | 26 |
| Credential Access | 64 | 33 | 20 | 5 | 3 | 3 |
| Discovery | 46 | 10 | 9 | 4 | 4 | 19 |
| Lateral Movement | 23 | 8 | 9 | 1 | 2 | 3 |
| Collection | 37 | 12 | 13 | 0 | 2 | 10 |
| Command and Control | 40 | 8 | 10 | 4 | 6 | 12 |
| Exfiltration | 19 | 10 | 4 | 2 | 2 | 1 |
| Impact | 27 | 18 | 5 | 0 | 1 | 3 |
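The table above groups, for each tactic, the number of techniques whose base sample count falls into each interval. The sketch below shows the interval assignment and checks that every row total equals the sum of its five interval counts. It also shows that the row totals add up to 812, more than the 625 technique categories, which is expected if techniques assigned to several ATT&CK tactics are counted once per tactic. Reading the intervals as inclusive bounds on per-technique sample counts is an assumption, as are the function names.

```python
# Interval labels with inclusive bounds, assumed from the table header; None = no upper bound.
INTERVALS = [("<=6", 0, 6), ("7-17", 7, 17), ("18-22", 18, 22), ("23-32", 23, 32), (">=33", 33, None)]


def interval_of(n_samples: int) -> str:
    """Return the interval label for a technique with n_samples base samples."""
    for label, low, high in INTERVALS:
        if n_samples >= low and (high is None or n_samples <= high):
            return label
    raise ValueError(f"sample count must be non-negative, got {n_samples}")


def histogram(sample_counts):
    """Count techniques per interval, in the column order of the table."""
    hist = {label: 0 for label, _, _ in INTERVALS}
    for n in sample_counts:
        hist[interval_of(n)] += 1
    return hist


# Rows copied from the table: tactic -> (total, counts per interval).
ROWS = {
    "Reconnaissance": (44, [38, 5, 1, 0, 0]),
    "Resource Development": (45, [30, 9, 2, 2, 2]),
    "Initial Access": (21, [8, 5, 1, 1, 6]),
    "Execution": (36, [15, 5, 1, 2, 13]),
    "Persistence": (115, [74, 28, 3, 2, 8]),
    "Privilege Escalation": (104, [60, 27, 5, 1, 11]),
    "Defense Evasion": (191, [111, 40, 6, 8, 26]),
    "Credential Access": (64, [33, 20, 5, 3, 3]),
    "Discovery": (46, [10, 9, 4, 4, 19]),
    "Lateral Movement": (23, [8, 9, 1, 2, 3]),
    "Collection": (37, [12, 13, 0, 2, 10]),
    "Command and Control": (40, [8, 10, 4, 6, 12]),
    "Exfiltration": (19, [10, 4, 2, 2, 1]),
    "Impact": (27, [18, 5, 0, 1, 3]),
}

inconsistent = [t for t, (total, counts) in ROWS.items() if total != sum(counts)]
print(inconsistent)                               # -> []
print(sum(total for total, _ in ROWS.values()))   # -> 812
print(histogram([3, 7, 20, 40]))
```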

| Interval | Count | True ID | True Tactic | True Technique | Predicted ID | Predicted Tactic | Predicted Technique |
|---|---|---|---|---|---|---|---|
| <=6 | 3 | T1564 | Defense Evasion | Hide Artifacts | T1564.001 | Defense Evasion | Hide Artifacts: Hidden Files and Directories |
| | | T1102.003 | Command and Control | One-Way Communication | T1105 | Command and Control | Ingress Tool Transfer |
| | | T1195.001 | Initial Access | Compromise Software Dependencies and Development Tools | T1195.002 | Initial Access | Compromise Software Supply Chain |
| 7-17 | 8 | T1104 | Command and Control | Multi-Stage Channels | T1105 | Command and Control | Ingress Tool Transfer |
| | | T1069.003 | Discovery | Cloud Groups | T1087.004 | Discovery | Cloud Account |
| | | T1027.007 | Defense Evasion | Dynamic API Resolution | T1106 | Execution | Native API |
| | | T1588.001 | Resource Development | Malware | T1036 | Defense Evasion | Masquerading |
| | | T1053.002 | Execution, Persistence, Privilege Escalation | Scheduled Task/Job: At | T1053.005 | Execution, Persistence, Privilege Escalation | Scheduled Task |
| | | T1608.004 | Resource Development | Drive-by Target | T1583.001 | Resource Development | Domains |
| | | T1074 | Collection | Data Staged | T1074.002 | Collection | Remote Data Staging |
| | | T1001.001 | Command and Control | Junk Data | T1027 | Defense Evasion | Obfuscated Files or Information |
| 18-22 | 3 | T1550.002 | Defense Evasion, Lateral Movement | Pass the Hash | T1134.002 | Privilege Escalation, Defense Evasion | Create Process with Token |
| | | T1016.001 | Discovery | Internet Connection Discovery | T1071.004 | Command and Control | DNS |
| | | T1497 | Defense Evasion, Discovery | Virtualization/Sandbox Evasion | T1029 | Exfiltration | Scheduled Transfer |
| 23-32 | 5 | T1587.001 | Resource Development | Malware | T1102 | Command and Control | Web Service |
| | | T1572 | Command and Control | Protocol Tunneling | T1095 | Command and Control | Non-Application Layer Protocol |
| | | T1071.002 | Command and Control | File Transfer Protocols | T1021.002 | Lateral Movement | SMB/Windows Admin Shares |
| | | T1014 | Defense Evasion | Rootkit | T1574.006 | Persistence, Privilege Escalation, Defense Evasion | Dynamic Linker Hijacking |
| | | T1027.011 | Defense Evasion | Fileless Storage | T1112 | Defense Evasion | Modify Registry |
| >=33 | 11 | T1124 | Discovery | System Time Discovery | T1053.005 | Execution, Persistence, Privilege Escalation | Scheduled Task |
| | | T1203 | Execution | Exploitation for Client Execution | T1211 | Defense Evasion | Exploitation for Defense Evasion |
| | | T1119 | Collection | Automated Collection | T1020 | Exfiltration | Automated Exfiltration |
| | | T1056.001 | Credential Access, Collection | Keylogging | T1555.005 | Credential Access | Password Managers |
| | | T1132.001 | Command and Control | Standard Encoding | T1048.003 | Exfiltration | Exfiltration Over Unencrypted Non-C2 Protocol |
| | | T1055.001 | Privilege Escalation, Defense Evasion | Dynamic-link Library Injection | T1055 | Privilege Escalation, Defense Evasion | Process Injection |
| | | T1560.003 | Collection | Archive via Custom Method | T1027 | Defense Evasion | Obfuscated Files or Information |
| | | T1135 | Discovery | Network Share Discovery | T1046 | Discovery | Network Service Discovery |
| | | T1021.001 | Lateral Movement | Remote Desktop Protocol | T1572 | Command and Control | Protocol Tunneling |
| | | T1071.004 | Command and Control | DNS | T1568 | Command and Control | Dynamic Resolution |
| | | T1036 | Defense Evasion | Masquerading | T1027.003 | Defense Evasion | Steganography |
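Experiment 4 relates misclassifications to the ATT&CK technique-tactic mapping. As an illustration only, not the authors' procedure, the sketch below encodes the eleven confused pairs of the >=33 interval from the table above and counts how many predictions share at least one tactic with the true technique, and how many fall under the same parent technique. The function names and the rule that the parent is the ID before the dot are assumptions.

```python
from typing import List, Tuple

# (true ID, true tactics, predicted ID, predicted tactics) for the >=33 interval.
Pair = Tuple[str, Tuple[str, ...], str, Tuple[str, ...]]

CONFUSED_GE33: List[Pair] = [
    ("T1124", ("Discovery",), "T1053.005", ("Execution", "Persistence", "Privilege Escalation")),
    ("T1203", ("Execution",), "T1211", ("Defense Evasion",)),
    ("T1119", ("Collection",), "T1020", ("Exfiltration",)),
    ("T1056.001", ("Credential Access", "Collection"), "T1555.005", ("Credential Access",)),
    ("T1132.001", ("Command and Control",), "T1048.003", ("Exfiltration",)),
    ("T1055.001", ("Privilege Escalation", "Defense Evasion"), "T1055",
     ("Privilege Escalation", "Defense Evasion")),
    ("T1560.003", ("Collection",), "T1027", ("Defense Evasion",)),
    ("T1135", ("Discovery",), "T1046", ("Discovery",)),
    ("T1021.001", ("Lateral Movement",), "T1572", ("Command and Control",)),
    ("T1071.004", ("Command and Control",), "T1568", ("Command and Control",)),
    ("T1036", ("Defense Evasion",), "T1027.003", ("Defense Evasion",)),
]


def parent(technique_id: str) -> str:
    """Parent technique of a sub-technique ID, e.g. "T1055.001" -> "T1055"."""
    return technique_id.split(".")[0]


def summarize(pairs: List[Pair]) -> Tuple[int, int, int]:
    """Return (pairs sharing a tactic, pairs sharing a parent technique, total pairs)."""
    shared_tactic = sum(1 for _, t_tac, _, p_tac in pairs if set(t_tac) & set(p_tac))
    same_parent = sum(1 for t_id, _, p_id, _ in pairs if parent(t_id) == parent(p_id))
    return shared_tactic, same_parent, len(pairs)


print(summarize(CONFUSED_GE33))  # -> (5, 1, 11)
```

On these eleven pairs, five predictions share a tactic with the true technique, one of which is a parent/sub-technique confusion (T1055.001 vs. T1055); the other six cross tactic boundaries.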

References

1. Huang, K.; Lian, Y.; Feng, D.; Zhang, H.; Liu, Y.; Ma, X. Cyber security threat intelligence sharing model based on blockchain. J. Comput. Res. Dev. 2020, 57, 836–846.

2. Schlette, D.; Böhm, F.; Caselli, M.; Pernul, G. Measuring and visualizing cyber threat intelligence quality. International Journal of Information Security 2021, 20, 21–38.

3. Abu, M.S.; Selamat, S.R.; Ariffin, A.; Yusof, R. Cyber threat intelligence–issue and challenges. Indonesian Journal of Electrical Engineering and Computer Science 2018, 10, 371–379.

4. Bianco, D.J. The Pyramid of Pain. https://detect-respond.blogspot.com/2013/03/the-pyramid-of-pain.html.

5. Oosthoek, K.; Doerr, C. Cyber threat intelligence: A product without a process? International Journal of Intelligence and CounterIntelligence 2021, 34, 300–315.

6. Conti, M.; Dargahi, T.; Dehghantanha, A. Cyber threat intelligence: challenges and opportunities; Springer, 2018.

7. MITRE. ATT&CK. https://attack.mitre.org/.

8. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Advances in Neural Information Processing Systems 2017, 30.

9. Zhong, L.; Wu, J.; Li, Q.; Peng, H.; Wu, X. A comprehensive survey on automatic knowledge graph construction. arXiv 2023, arXiv:2302.05019.

10. Kim, H.; Kim, H.; others. Comparative experiment on TTP classification with class imbalance using oversampling from CTI dataset. Security and Communication Networks 2022, 2022.

11. Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: synthetic minority over-sampling technique. Journal of Artificial Intelligence Research 2002, 16, 321–357.

12. Wei, J.; Zou, K. EDA: Easy data augmentation techniques for boosting performance on text classification tasks. arXiv 2019, arXiv:1901.11196.

13. Rahman, M.R.; Williams, L. From Threat Reports to Continuous Threat Intelligence: A Comparison of Attack Technique Extraction Methods from Textual Artifacts. arXiv 2022, arXiv:2210.02601.

14. Liu, J.; Yan, J.; Jiang, J.; He, Y.; Wang, X.; Jiang, Z.; Yang, P.; Li, N. TriCTI: an actionable cyber threat intelligence discovery system via trigger-enhanced neural network. Cybersecurity 2022, 5, 8.

15. Fang, L.; Lee, G.G.; Zhai, X. Using GPT-4 to augment unbalanced data for automatic scoring. arXiv 2023, arXiv:2310.18365.

16. Fang, Y.; Li, X.; Thomas, S.W.; Zhu, X. ChatGPT as data augmentation for compositional generalization: A case study in open intent detection. arXiv 2023, arXiv:2308.13517.

17. Dai, H.; Liu, Z.; Liao, W.; Huang, X.; Cao, Y.; Wu, Z.; Zhao, L.; Xu, S.; Liu, W.; Liu, N.; others. AugGPT: Leveraging ChatGPT for Text Data Augmentation. arXiv 2023, arXiv:2302.13007.

18. Liu, C.; Wang, J.; Chen, X. Threat intelligence ATT&CK extraction based on the attention transformer hierarchical recurrent neural network. Applied Soft Computing 2022, 122, 108826.

19. Yu, Z.; Wang, J.; Tang, B.; Lu, L. Tactics and techniques classification in cyber threat intelligence. The Computer Journal 2023, 66, 1870–1881.

20. Yu, Z.; Wang, J.; Tang, B.; Ge, W. Research on the classification of cyber threat intelligence techniques and tactics based on attention mechanism and feature fusion. Journal of Sichuan University (Natural Science Edition) 2022, 59, 053003.

21. Ge, W.; Wang, J.; Tang, H.; Yu, Z.; Chen, B.; Yu, J. RENet: tactics and techniques classifications for cyber threat intelligence with relevance enhancement. Journal of Sichuan University (Natural Science Edition) 2022, 59, 023004.

22. Aghaei, E.; Al-Shaer, E. CVE-driven Attack Technique Prediction with Semantic Information Extraction and a Domain-specific Language Model. arXiv 2023, arXiv:2309.02785.

23. Chae, Y.; Davidson, T. Large language models for text classification: From zero-shot learning to fine-tuning. Open Science Foundation 2023.

24. Hegselmann, S.; Buendia, A.; Lang, H.; Agrawal, M.; Jiang, X.; Sontag, D. TabLLM: Few-shot classification of tabular data with large language models. International Conference on Artificial Intelligence and Statistics. PMLR, 2023, pp. 5549–5581.

25. Balkus, S.V.; Yan, D. Improving short text classification with augmented data using GPT-3. Natural Language Engineering 2022, 1–30.

26. Møller, A.G.; Dalsgaard, J.A.; Pera, A.; Aiello, L.M. Is a prompt and a few samples all you need? Using GPT-4 for data augmentation in low-resource classification tasks. arXiv 2023, arXiv:2304.13861.

27. Puri, R.S.; Mishra, S.; Parmar, M.; Baral, C. How many data samples is an additional instruction worth? arXiv 2022, arXiv:2203.09161.

28. Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; others. LLaMA: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

29. Mangrulkar, S.; Gugger, S.; Debut, L.; Belkada, Y.; Paul, S.; Bossan, B. PEFT: State-of-the-art Parameter-Efficient Fine-Tuning methods. https://github.com/huggingface/peft, 2022.

30. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. International Conference on Learning Representations, 2022.

31. Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; Du, Y.; Yang, C.; Chen, Y.; Chen, Z.; Jiang, J.; Ren, R.; Li, Y.; Tang, X.; Liu, Z.; Liu, P.; Nie, J.Y.; Wen, J.R. A Survey of Large Language Models. arXiv 2023, arXiv:2303.18223.

32. Baichuan. Baichuan 2: Open Large-scale Language Models. arXiv 2023, arXiv:2309.10305.

33. BlueLM Team. BlueLM: An Open Multilingual 7B Language Model. https://github.com/vivo-ai-lab/BlueLM, 2023.

34. Zeng, A.; Liu, X.; Du, Z.; Wang, Z.; Lai, H.; Ding, M.; Yang, Z.; Xu, Y.; Zheng, W.; Xia, X.; others. GLM-130B: An open bilingual pre-trained model. arXiv 2022, arXiv:2210.02414.

35. Du, Z.; Qian, Y.; Liu, X.; Ding, M.; Qiu, J.; Yang, Z.; Tang, J. GLM: General Language Model Pretraining with Autoregressive Blank Infilling. Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), 2022, pp. 320–335.

36. Muennighoff, N.; Wang, T.; Sutawika, L.; Roberts, A.; Biderman, S.; Scao, T.L.; Bari, M.S.; Shen, S.; Yong, Z.X.; Schoelkopf, H.; Tang, X.; Radev, D.; Aji, A.F.; Almubarak, K.; Albanie, S.; Alyafeai, Z.; Webson, A.; Raff, E.; Raffel, C. Crosslingual Generalization through Multitask Finetuning. arXiv 2023, arXiv:2211.01786.

37. Bai, J.; Bai, S.; Chu, Y.; Cui, Z.; Dang, K.; Deng, X.; Fan, Y.; Ge, W.; Han, Y.; Huang, F.; Hui, B.; Ji, L.; Li, M.; Lin, J.; Lin, R.; Liu, D.; Liu, G.; Lu, C.; Lu, K.; Ma, J.; Men, R.; Ren, X.; Ren, X.; Tan, C.; Tan, S.; Tu, J.; Wang, P.; Wang, S.; Wang, W.; Wu, S.; Xu, B.; Xu, J.; Yang, A.; Yang, H.; Yang, J.; Yang, S.; Yao, Y.; Yu, B.; Yuan, H.; Yuan, Z.; Zhang, J.; Zhang, X.; Zhang, Y.; Zhang, Z.; Zhou, C.; Zhou, J.; Zhou, X.; Zhu, T. Qwen Technical Report. arXiv 2023, arXiv:2309.16609.

38. Fayyazi, R.; Yang, S.J. On the Uses of Large Language Models to Interpret Ambiguous Cyberattack Descriptions. arXiv 2023, arXiv:2306.14062.

39. Yin, S.; Fu, C.; Zhao, S.; Li, K.; Sun, X.; Xu, T.; Chen, E. A Survey on Multimodal Large Language Models. arXiv 2023, arXiv:2306.13549.

40. Fu, C.; Chen, P.; Shen, Y.; Qin, Y.; Zhang, M.; Lin, X.; Yang, J.; Zheng, X.; Li, K.; Sun, X.; Wu, Y.; Ji, R. MME: A Comprehensive Evaluation Benchmark for Multimodal Large Language Models. arXiv 2023, arXiv:2306.13394.

41. Yin, S.; Fu, C.; Zhao, S.; Xu, T.; Wang, H.; Sui, D.; Shen, Y.; Li, K.; Sun, X.; Chen, E. Woodpecker: Hallucination Correction for Multimodal Large Language Models. arXiv 2023, arXiv:2310.16045.

42. Fu, C.; Zhang, R.; Wang, Z.; Huang, Y.; Zhang, Z.; Qiu, L.; Ye, G.; Shen, Y.; Zhang, M.; Chen, P.; Zhao, S.; Lin, S.; Jiang, D.; Yin, D.; Gao, P.; Li, K.; Li, H.; Sun, X. A Challenger to GPT-4V? Early Explorations of Gemini in Visual Expertise. arXiv 2023, arXiv:2312.12436.

| Type | Content |
|---|---|
| Technique ID | T1659 |
| Description | 1. Adversaries may gain access and continuously communicate with victims by injecting malicious content into systems through online network traffic. |
| | 2. Adversaries may inject content to victim systems in various ways, including: From the middle, where the adversary is in-between legitimate online client-server communications (Note: this is similar but distinct from Adversary-in-the-Middle, which describes AiTM activity solely within an enterprise environment). |
| | 3. Content injection is often the result of compromised upstream communication channels, for example at the level of an internet service provider (ISP) as is the case with "lawful interception." |
| Procedure examples | 1. Disco has achieved initial access and execution through content injection into DNS, HTTP, and SMB replies to targeted hosts that redirect them to download malicious files. |
| | 2. MoustachedBouncer has injected content into DNS, HTTP, and SMB replies to redirect specifically-targeted victims to a fake Windows Update page to download malware. |

| Original Text | Generated Text |
|---|---|
| 1. Adversaries may gain access and continuously communicate with victims by injecting malicious content into systems through online network traffic. | 1. Cybercriminals may exploit vulnerabilities in online network traffic to infiltrate and establish persistent communication with victims. |
| 2. Adversaries may inject content to victim systems in various ways, including: From the middle, where the adversary is in-between legitimate online client-server communications (Note: this is similar but distinct from Adversary-in-the-Middle, which describes AiTM activity solely within an enterprise environment). | 2. Attackers have the capability to insert malicious content into a victims system using various methods, one of which is "middle insertion" where the attacker intercepts and manipulates legitimate client-server communications. It is important to note that this is not the same as "Adversary-in-the-Middle" which refers specifically to activity within an enterprise environment. |
| 3. Disco has achieved initial access and execution through content injection into DNS, HTTP, and SMB replies to targeted hosts that redirect them to download malicious files. | 3. Disco has cleverly gained entry and initiated an attack by injecting content into DNS, HTTP, and SMB replies to specific targets, redirecting them towards downloading harmful files. |
| 4. MoustachedBouncer has injected content into DNS, HTTP, and SMB replies to redirect specifically-targeted victims to a fake Windows Update page to download malware. | 4. The technique of MoustachedBouncer involves surreptitiously injecting malicious content into DNS, HTTP, and SMB responses in order to manipulate targeted victims into accessing a counterfeit Windows Update page that contains malware. |

| Cutoff length | Learning rate | Epochs | Compute type | Batch size | LoRA rank | LoRA dropout | Warmup steps | Max length | Top-p | Temperature |
|---|---|---|---|---|---|---|---|---|---|---|
| 1024 | 5e-4 | 6 | FP16 | 8 | 8 | 0.1 | 0 | 128 | 0.7 | 0.95 |
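The table sets LoRA rank 8. LoRA [30] freezes each adapted weight matrix W of shape d_out × d_in and trains a low-rank update BA, with B of shape d_out × r and A of shape r × d_in, so each adapted matrix adds r(d_out + d_in) trainable parameters. The sketch below estimates the adapter size under assumptions that the table does not state: LLaMA2-7B with 32 decoder layers and hidden size 4096, and adapters on the attention query and value projections only (a common choice, but the target modules used here are not reported).

```python
def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one frozen d_out x d_in weight: B (d_out x r) plus A (r x d_in)."""
    return rank * (d_out + d_in)


def total_lora_params(n_layers: int, shapes: list, rank: int) -> int:
    """Sum over all adapted matrices, assuming the same set of shapes in every layer."""
    return n_layers * sum(lora_params(d_out, d_in, rank) for d_out, d_in in shapes)


HIDDEN = 4096   # assumed LLaMA2-7B hidden size
N_LAYERS = 32   # assumed number of LLaMA2-7B decoder layers
RANK = 8        # "LoRA rank" from the table

# Assumed target modules: q_proj and v_proj, each HIDDEN x HIDDEN.
qv_shapes = [(HIDDEN, HIDDEN), (HIDDEN, HIDDEN)]
print(total_lora_params(N_LAYERS, qv_shapes, RANK))  # -> 4194304
```

Under these assumptions the adapters hold about 4.2 million trainable parameters, well under 0.1% of the roughly 7 billion parameters of the base model. LoRA dropout (0.1 in the table) only affects training and does not change this count.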

| Quantity * | Size | Model | ChatGPT Precision | ChatGPT Recall | ChatGPT F1 | EDA Precision | EDA Recall | EDA F1 |
|---|---|---|---|---|---|---|---|---|
| 6-50% | 14456 | LLaMA2 | 32.7 | 43.4 | 35.8 | 32.8 | 43.1 | 35.7 |
| 18-75% | 19427 | LLaMA2 | 75.6 | 81.2 | 77.4 | 75.6 | 81.5 | 77.4 |
| 23-80% | 21847 | LLaMA2 | 80.2 | 85.0 | 81.7 | 76.4 | 81.9 | 78.1 |
| 33-85% | 27008 | LLaMA2 | 86.2 | 89.9 | 87.3 | 79.4 | 84.5 | 80.9 |

| Interval | Count | Base P * | Base R | Base F1 | 6 P | 6 R | 6 F1 | 18 P | 18 R | 18 F1 | 23 P | 23 R | 23 F1 | 33 P | 33 R | 33 F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| <=6 | 326 | 9.7 | 11.8 | 10.3 | 22.2 | 26.3 | 23.5 | 84.9 | 86.0 | 85.3 | 88.3 | 89.1 | 88.6 | 96.1 | 96.4 | 96.2 |
| 7-17 | 142 | 29.4 | 32.8 | 30.5 | 27.1 | 28.4 | 27.5 | 56.0 | 57.1 | 56.4 | 69.6 | 71.3 | 70.1 | 78.0 | 78.9 | 78.3 |
| 18-22 | 25 | 32.4 | 32.4 | 32.4 | 38.2 | 41.2 | 39.2 | 48.5 | 48.5 | 48.5 | 53.1 | 53.1 | 53.1 | 48.4 | 50.0 | 48.9 |
| 23-32 | 34 | 58.1 | 58.1 | 58.1 | 52.3 | 52.3 | 52.3 | 47.8 | 47.8 | 47.8 | 54.5 | 54.5 | 54.5 | 61.9 | 61.9 | 61.9 |
| >=33 | 98 | 67.9 | 72.6 | 69.5 | 59.1 | 65.7 | 61.3 | 66.5 | 72.4 | 68.4 | 64.8 | 68.5 | 66.0 | 66.8 | 71.9 | 68.5 |

| Approach | Model | Size | Base P | Base R | Base F1 | ChatGPT-6 P | ChatGPT-6 R | ChatGPT-6 F1 | ChatGPT-18 P | ChatGPT-18 R | ChatGPT-18 F1 | ChatGPT-23 P | ChatGPT-23 R | ChatGPT-23 F1 | ChatGPT-33 P | ChatGPT-33 R | ChatGPT-33 F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SFT | LLaMA2 | 7B | 24.7 | 36.2 | 27.7 | 32.7 | 43.4 | 35.8 | 75.6 | 81.2 | 77.4 | 80.2 | 85.0 | 81.7 | 86.2 | 89.9 | 87.3 |
| | Baichuan | 7B | 28.1 | 38.7 | 31.0 | 27.8 | 38.2 | 30.6 | 62.9 | 71.5 | 65.4 | 75.7 | 81.9 | 77.7 | 81.3 | 86.1 | 82.8 |
| | BlueLM | 7B | 27.8 | 39.9 | 31.3 | 47.5 | 57.3 | 50.3 | 71.2 | 77.4 | 73.1 | 78.9 | 84.6 | 80.7 | 84.5 | 88.7 | 85.9 |
| | ChatGLM3 | 6B | 16.0 | 25.9 | 18.4 | 28.3 | 37.8 | 30.9 | 61.2 | 69.9 | 63.8 | 78.0 | 83.7 | 79.8 | 82.0 | 86.4 | 83.4 |
| | Yi | 6B | 23.4 | 34.8 | 26.5 | 33.5 | 45.0 | 36.7 | 70.6 | 77.4 | 72.8 | 76.2 | 82.0 | 78.0 | 84.3 | 88.4 | 85.5 |
| | Bloomz | 7B | 27.9 | 39.5 | 31.1 | 45.1 | 55.6 | 48.1 | 76.9 | 82.4 | 78.6 | 79.5 | 84.8 | 81.2 | 84.5 | 88.2 | 85.6 |
| | Qwen | 7B | 30.6 | 41.4 | 33.6 | 48.5 | 59.2 | 51.7 | 72.5 | 79.7 | 74.7 | 78.3 | 83.4 | 79.9 | 82.7 | 87.5 | 84.2 |
| Baseline | ACRCNN | 458M | - | - | - | - | - | - | - | - | - | - | - | - | 0 | 2.1 | 1.2 |
| | RENet | 431M | - | - | - | - | - | - | - | - | - | - | - | - | 0 | 0 | 0 |

| Model | SFT P | SFT R | SFT F1 | Base P | Base R | Base F1 |
|---|---|---|---|---|---|---|
| LLaMA2 | 86.2 | 89.9 | 87.3 | 0 | 0 | 0 |
| Qwen | 82.7 | 87.5 | 84.2 | 0 | 0 | 0 |
| ChatGPT | - | - | - | 1.9 | 4.6 | 2.4 |

| Tactic | P | R | F1 |
|---|---|---|---|
| Reconnaissance | 92.2 | 93.3 | 92.6 |
| Resource Development | 85.1 | 87.2 | 85.8 |
| Initial Access | 92.9 | 95.2 | 93.7 |
| Execution | 85.5 | 86.8 | 85.9 |
| Persistence | 97.4 | 98.3 | 97.7 |
| Privilege Escalation | 93.1 | 95.2 | 93.8 |
| Defense Evasion | 84.9 | 87.3 | 85.6 |
| Credential Access | 89.4 | 90.9 | 89.9 |
| Discovery | 73.0 | 76.0 | 74.0 |
| Lateral Movement | 76.9 | 76.9 | 76.9 |
| Collection | 65.5 | 69.0 | 66.7 |
| Command and Control | 51.5 | 59.1 | 53.8 |
| Exfiltration | 92.1 | 94.7 | 92.9 |
| Impact | 94.4 | 96.3 | 95.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).