4.4. Validation of the Model

An initial experiment was conducted to compare the dynamic method with its static counterpart. The objective was to analyze how each method handles the evolving characteristics of traffic information and the circulation of vehicles within a road system, and to identify specific situations in which one method outperforms the other. The results provide concrete evidence of the differences between the two methods and of the advantages of the dynamic approach outlined in this study.

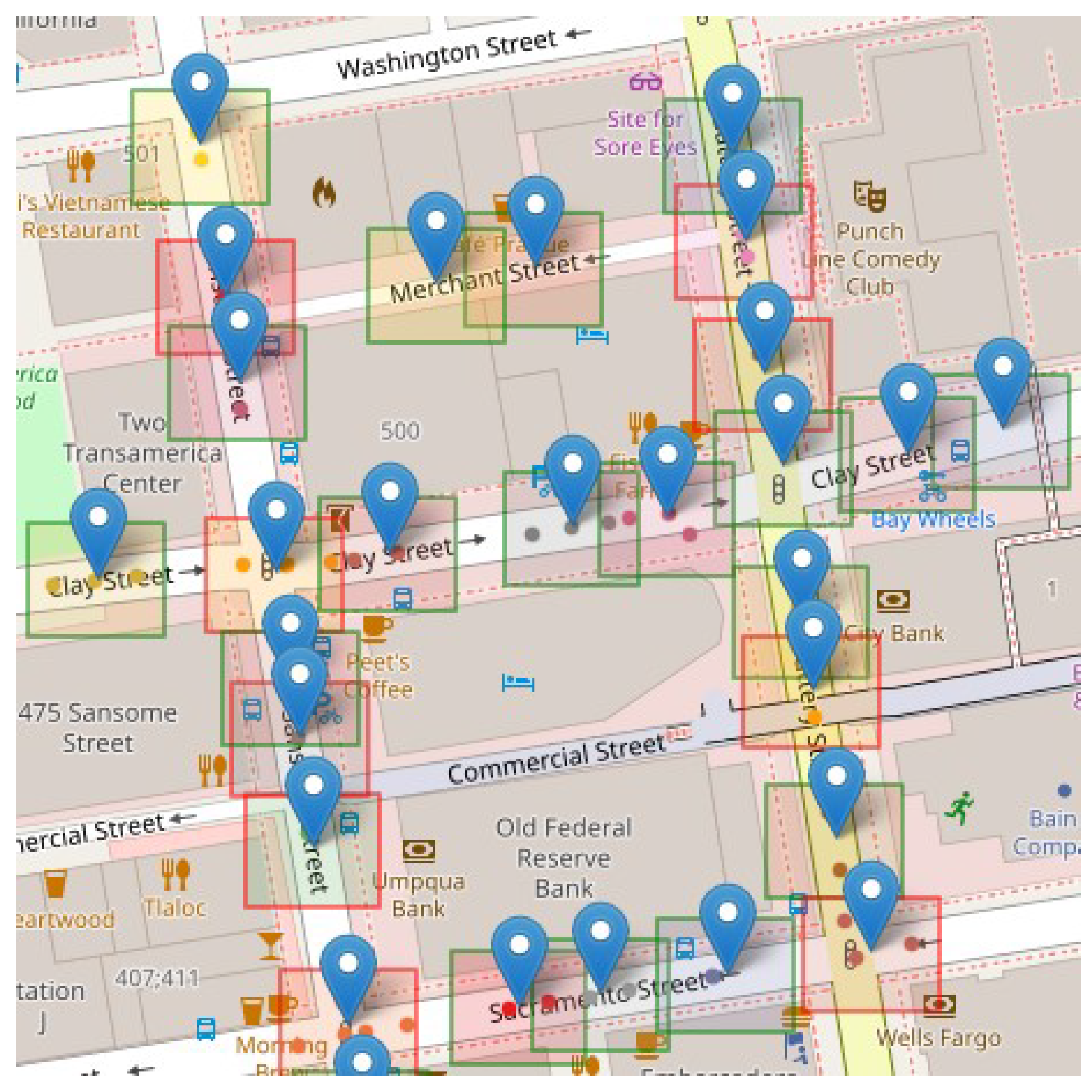

To carry out this experiment, we worked with a representative data set from the city of San Francisco covering 6 minutes of execution, with snapshots captured every minute in an area of 100 × 100 meters. A cluster chosen at random from the experiment's data underwent a detailed comparative analysis. Special focus was placed on the characteristics of the proposed dynamic method, highlighting how it adapts to variations in data distribution over time. These results were compared with a static method based on a fixed grid, which revealed notable differences in responsiveness to changing traffic conditions and data distribution.

In the proposed dynamic method, the ability to adapt to changing conditions of the spatial distribution of data is a crucial feature.

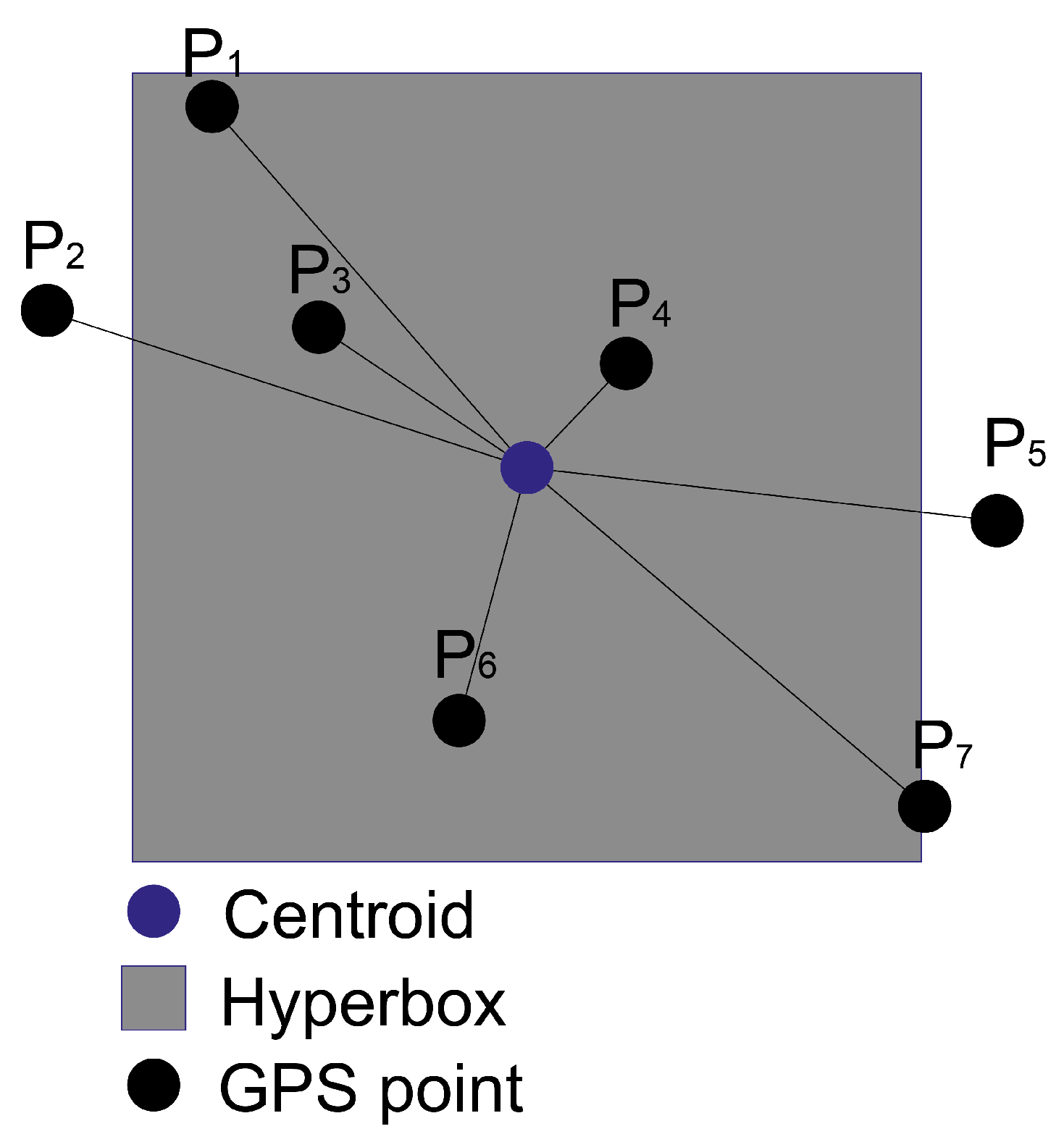

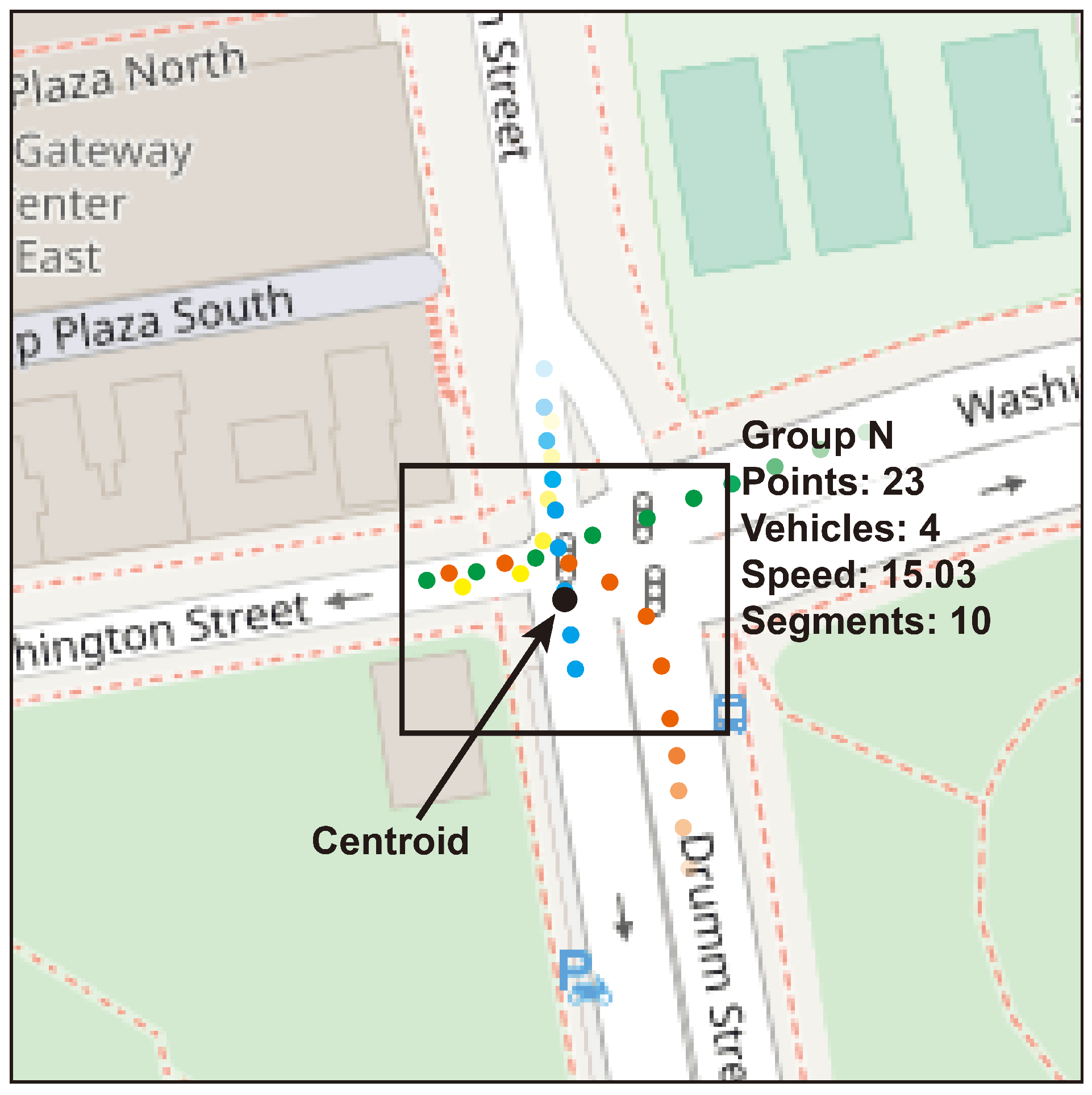

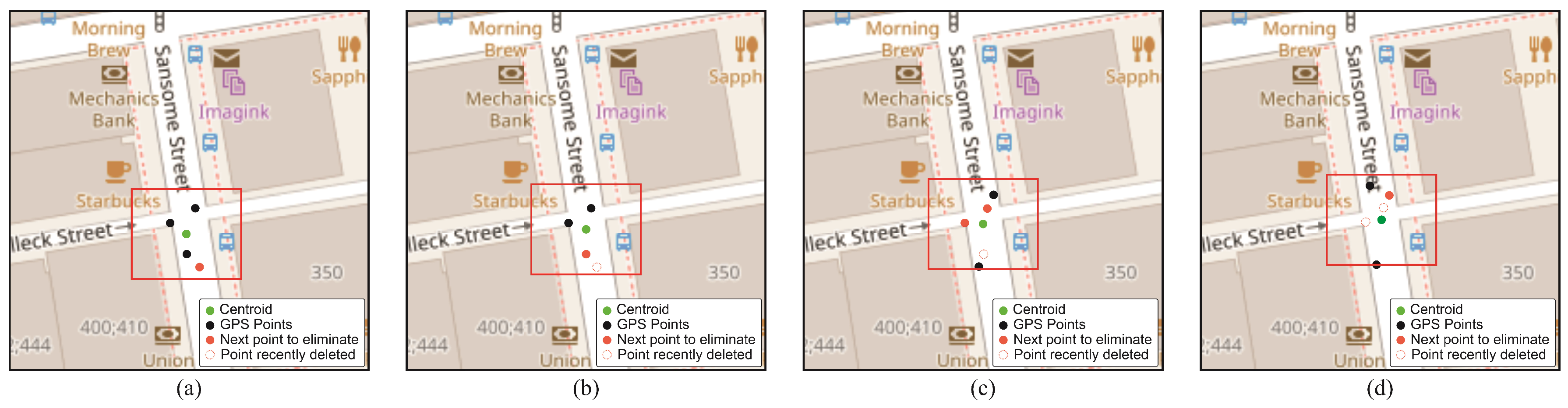

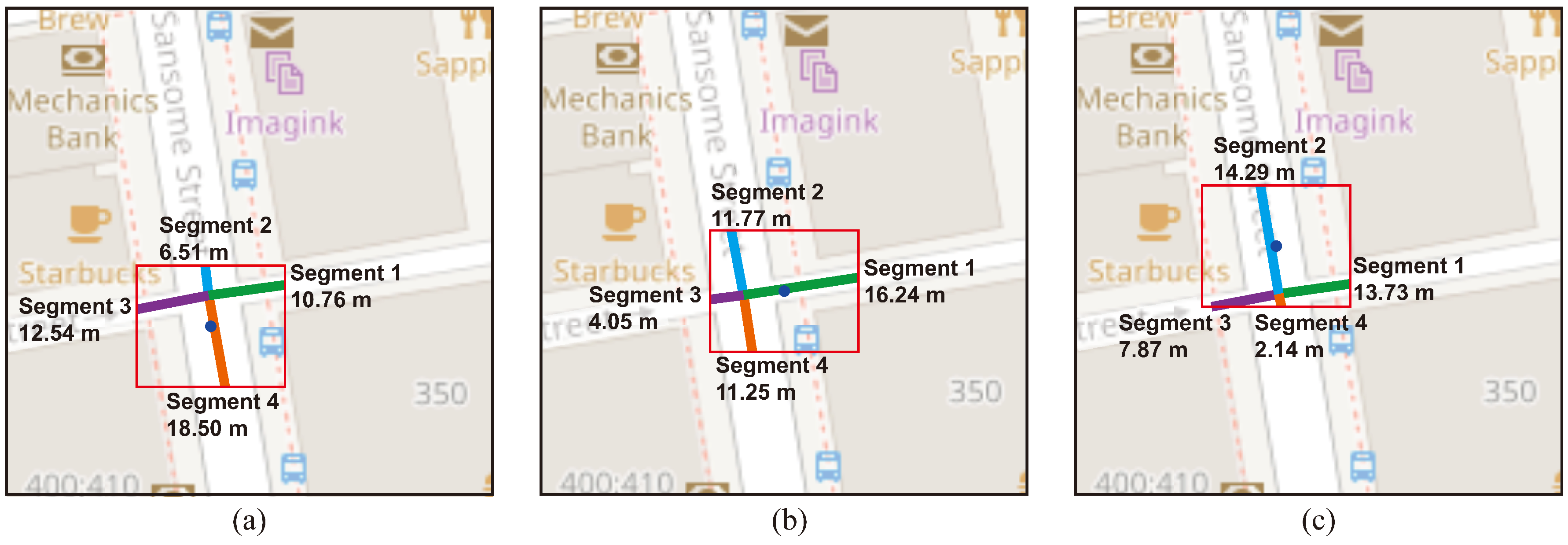

Figure 7 illustrates the adaptive and precise adjustments of the centroid and hyperbox location at various time instances, ensuring coverage along road segments and adeptly capturing changes in both cluster density and shape. This update not only reflects the current distribution of the data, but also allows the model to adjust for possible deviations and changes in the shape of the clusters over time.

Figure 7 represents the dynamism of a cluster over four consecutive seconds of processing GPS points. The new points integrated into the cluster update the centroid and hyperbox, causing a slight displacement of both according to the coordinates of the new GPS points. The oldest GPS points lose relevance as time goes by (they are marked in red in the graph) and will eventually be removed from the cluster in future instants (marked with red edges).
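The update described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the implementation used in this study: the class name `DynamicCluster`, the `max_age` parameter, and the use of a plain arithmetic mean and an axis-aligned bounding box for the centroid and hyperbox are all hypothetical choices made for the example. Points are assumed to arrive in time order.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class DynamicCluster:
    """Toy cluster that keeps only recent GPS points (hypothetical structure)."""

    max_age: float  # assumed parameter: seconds a point stays relevant
    points: deque = field(default_factory=deque)  # (timestamp, x, y), oldest first

    def add(self, t: float, x: float, y: float) -> None:
        """Insert a new point, then drop points older than max_age."""
        self.points.append((t, x, y))
        while self.points and t - self.points[0][0] > self.max_age:
            self.points.popleft()

    def centroid(self) -> tuple[float, float]:
        """Mean position of the points currently held by the cluster."""
        n = len(self.points)
        return (sum(p[1] for p in self.points) / n,
                sum(p[2] for p in self.points) / n)

    def hyperbox(self) -> tuple[float, float, float, float]:
        """Bounding box (min_x, min_y, max_x, max_y) of the current points."""
        xs = [p[1] for p in self.points]
        ys = [p[2] for p in self.points]
        return (min(xs), min(ys), max(xs), max(ys))


# Four points moving along a road; the first one expires when the last arrives.
cluster = DynamicCluster(max_age=3.0)
for t, x, y in [(0, 0.0, 0.0), (1, 2.0, 0.0), (2, 4.0, 0.0), (4, 6.0, 0.0)]:
    cluster.add(t, x, y)
print(cluster.centroid(), cluster.hyperbox())
```

Because expired points are removed before the centroid and hyperbox are recomputed, both shift toward the most recent GPS points, which is the displacement the figure depicts.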

In this method the data are processed in time order, which is important for distinguishing old points and keeping the cluster location updated. The points processed at each time instant in the clustering can be visualized in Figure 8. The hyperbox of the analyzed cluster has been projected to identify the points assigned to this particular cluster. Since each point can be assigned to only one cluster, the displayed points that lie outside the projected hyperbox belong to other clusters. The points displayed in black entered at a given time instant, while the points with red borders entered at the previous time instant.

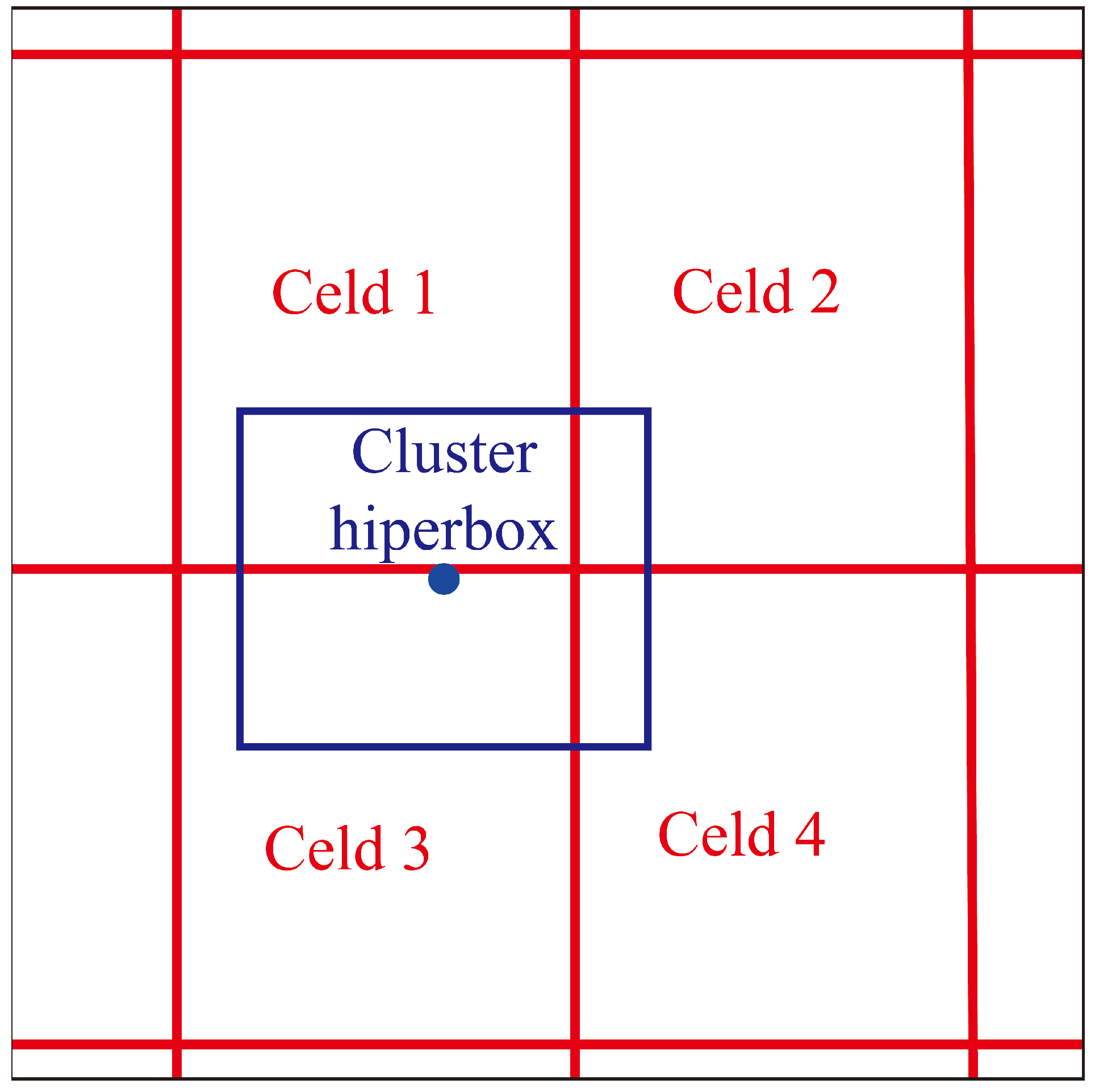

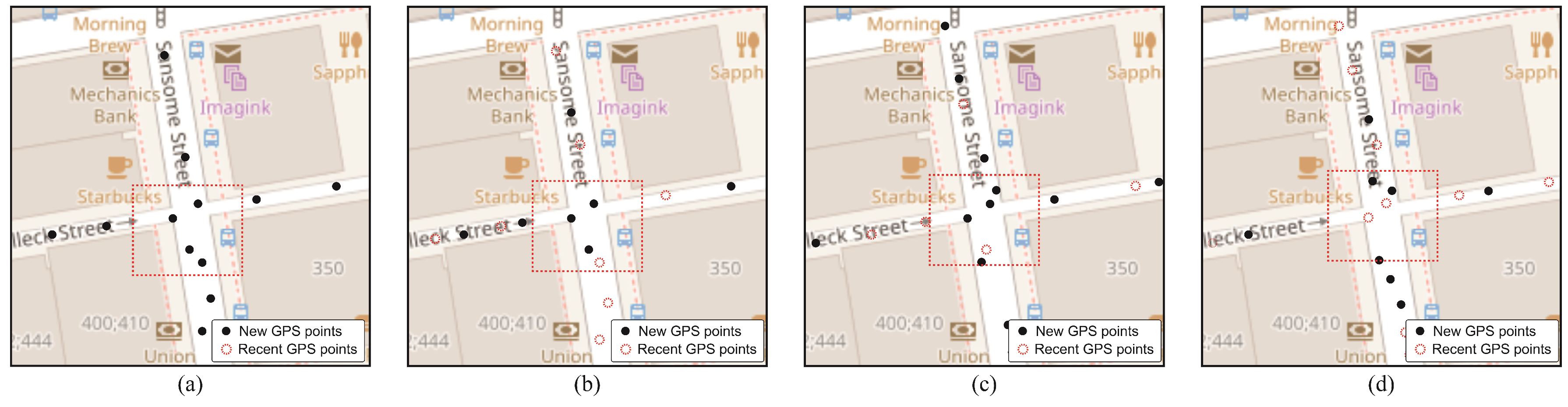

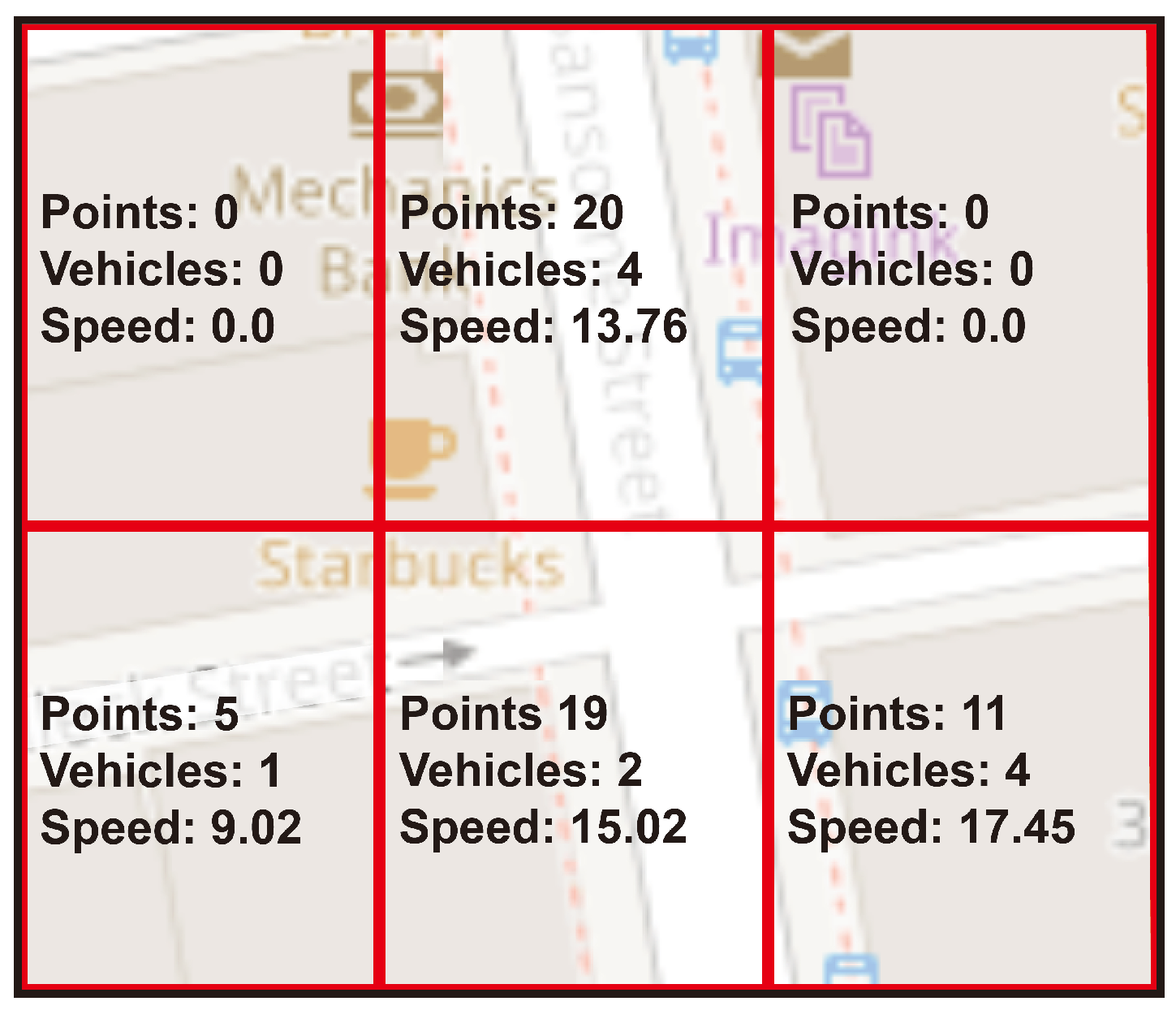

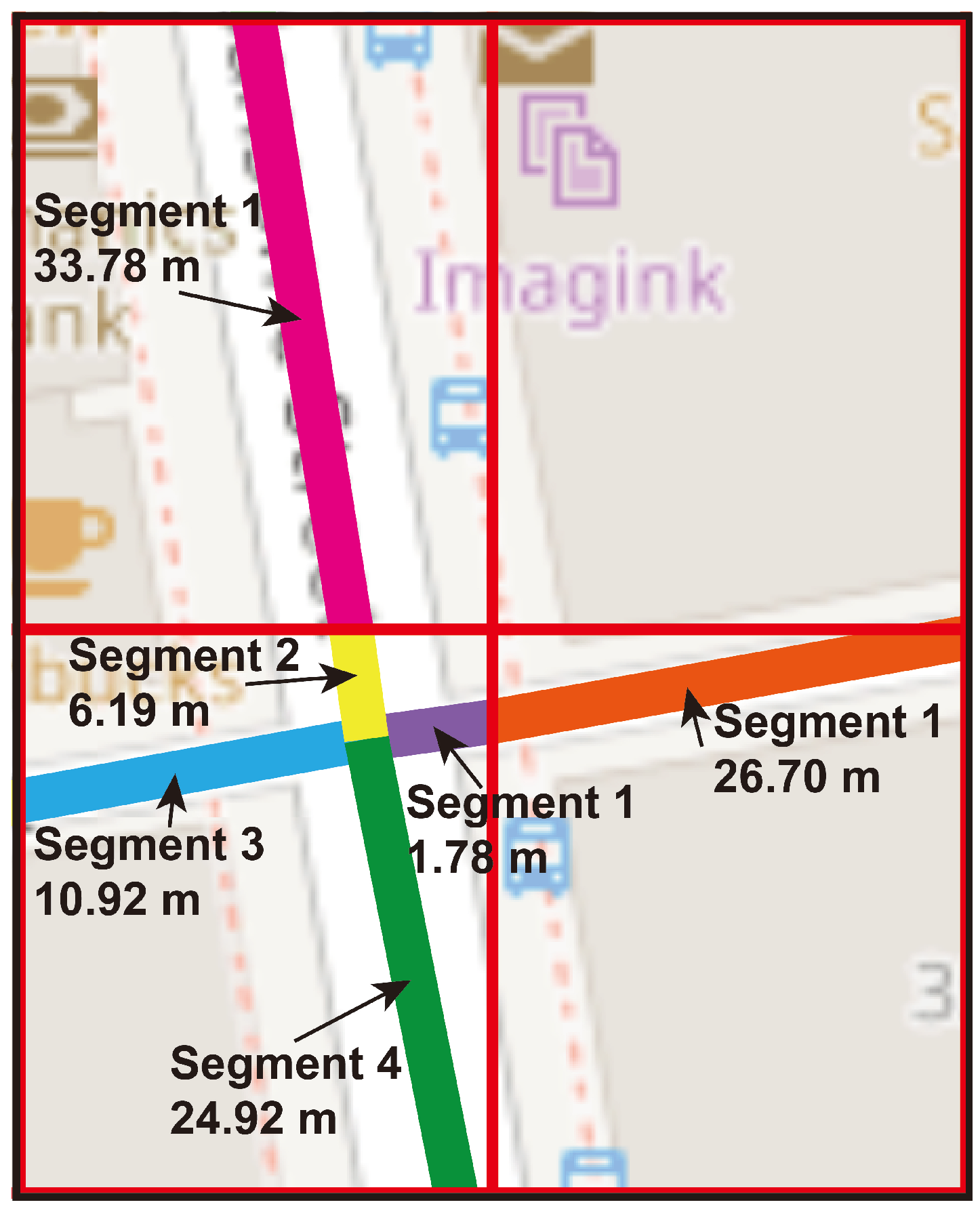

On the other hand, the static method, which is based on a fixed grid, has certain limitations in terms of adaptability. Figure 9 shows how the data are assigned to predefined cells in the grid. GPS points transiting a road are assigned to and analyzed as part of a cell, and each cell serves a specific region; therefore, several cells are required to analyze a long road, and its GPS points are distributed among them. The distribution of cells in a fixed grid ensures complete coverage, which allows the spatial dynamics of roads to be understood within their respective areas, and the way GPS points are distributed between cells reflects attention to efficient spatial data management. However, the need to use several cells to analyze a road that traverses multiple areas has its own disadvantages: the road may appear divided, which can hinder understanding it as a whole; managing the cells may require more computational and storage resources; and errors may arise when coordinating data between cells.
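The fragmentation of a road across cells can be illustrated with a minimal sketch of fixed-grid assignment. The function names `cell_of` and `assign_to_grid`, the 25-meter cell size, and the use of planar coordinates in meters are assumptions made for this example only; they are not taken from the static method evaluated here.

```python
from collections import defaultdict


def cell_of(x, y, cell_size):
    """Return the (column, row) index of the fixed grid cell containing (x, y)."""
    return (int(x // cell_size), int(y // cell_size))


def assign_to_grid(points, cell_size):
    """Group points by the grid cell they fall into."""
    cells = defaultdict(list)
    for x, y in points:
        cells[cell_of(x, y, cell_size)].append((x, y))
    return dict(cells)


# A straight road sampled every 10 m across a 100 m wide area (assumed data).
road = [(float(x), 10.0) for x in range(5, 100, 10)]
cells = assign_to_grid(road, cell_size=25.0)
for key in sorted(cells):
    print(key, len(cells[key]))
```

In this toy case a single road ends up split over four cells, so any per-cell indicator sees only a fragment of the road.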

As for the dynamism of the data when analyzing the snapshots using the classifier component, the dynamic method shows a clear advantage. Figure 10 shows the selection of road segments for the snapshots captured at minutes 1 (Figure 10a), 3 (Figure 10b) and 5 (Figure 10c) used for congestion assessment. The capacity to adapt flexibly to shifts in data location and to adjust centroids and hyperboxes as required makes the dynamic method well suited to identifying segments affected by changes in vehicular flow and traffic density. The static method, on the other hand, struggles to manage these dynamics, as its rigid grid fails to adjust effectively to fluctuations.

In real scenarios, such as a road network traversing an urban area with multiple routes and traffic patterns, diverse situations arise. During peak traffic hours, roads can fill up with a large number of vehicles, resulting in congestion. In contrast, during off-peak times, the number of vehicles on the roads decreases. With a static method that does not consider these fluctuations in data flows, the road segments being analyzed remain fixed at all times, as can be seen in Figure 11, and roads with very different traffic volumes may be incorrectly selected for each cell. This erroneous choice may result in an imprecise portrayal of how vehicles behave at different times of the day.

During this initial experiment, a salient feature of the dynamic approach can be observed. In contrast to the static alternative, which computes the congestion indicator using all observed points without considering the evolution of the vehicle route, the dynamic method retains only the recent points considered relevant to the vehicles, as these are the ones that approximate the vehicles' real-time route.

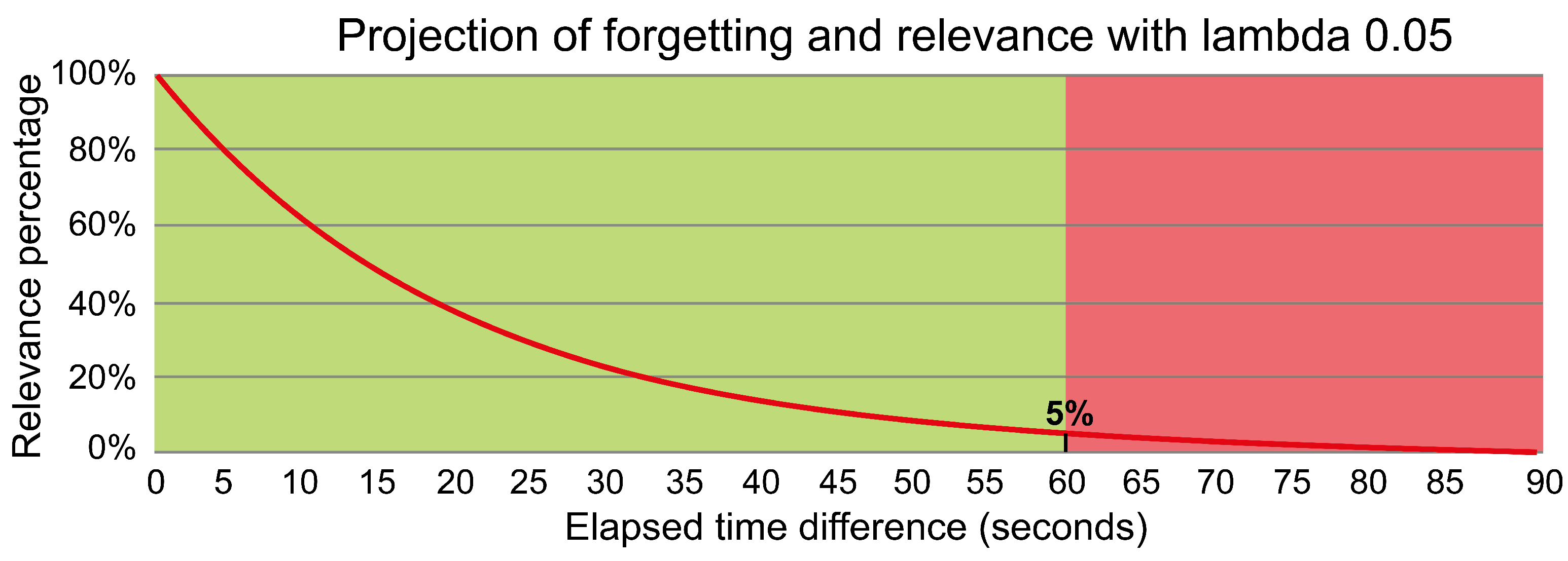

Within the dynamic approach, as vehicles navigate and fresh GPS data is logged, the clustering algorithm assimilates these GPS points into their designated clusters, thereby triggering an autonomous update of the centroid. This suggests that the definition of real-time congestion is more significantly influenced by the most recent GPS points, while the relevance of earlier GPS data gradually diminishes.

This differentiation is crucial, since in one trip, a vehicle may cross multiple cells and its trajectory may span a variety of GPS points. If all these GPS points were considered without taking into account temporal dynamics, erroneous conclusions about congestion could be reached, identifying congestion that does not actually exist. Consequently, the dynamic method secures a real-time assessment of congestion that is both precise and adaptable, effectively responding to the dynamic nature of vehicle mobility on urban roadways.

4.5. Obtained Results

In order to measure the effectiveness of this method, a table has been generated with the execution times. This table provides a detailed and objective view of how the method behaves in practical conditions.

In the parallel execution process, the times of the clustering component are measured independently, while the classification and visualization components are evaluated together and separately from the clustering component. The resulting execution times are shown in Table 1 for 60-second snapshots and in Table 2 for 30-second snapshots. Both tables report times in minutes and were obtained by running one hour of data for the cities of San Francisco, Rome and Guayaquil. This individualized measurement strategy allows a more precise analysis of each component and its contribution to the total run time. In addition, in parallel executions it is common to take the maximum time among the measured processes as the reference.

The results show significant differences in the execution times of the clustering and classification components in the cities of San Francisco, Rome and Guayaquil. Although areas of very similar dimensions were used, there are several reasons that may explain these disparities.

In the case of the clustering component, the experiments with 60-second snapshots showed that the city of San Francisco has the longest time, at 28 minutes and 23 seconds. This could be due to the complexity of the data in that city or to a larger amount of data requiring processing. Rome and Guayaquil show shorter times, 10 minutes and 46 seconds and 18 minutes and 32 seconds, respectively.

Experiments with 30-second snapshots showed that the city of San Francisco requires 27 minutes and 34 seconds for clustering, while the city of Rome requires 11 minutes and 2 seconds, and the city of Guayaquil requires 18 minutes and 37 seconds.

The shorter times for Rome and Guayaquil could indicate a higher efficiency in the clustering process in those cities or a lower workload, which could be directly related to the number of trajectories. In the experiments with 60-second snapshots, San Francisco was processed with 290 trajectories and obtained the longest processing time, Guayaquil with 218 trajectories obtained a shorter time than San Francisco, and Rome obtained the shortest processing time with 137 trajectories. This trend is also present in the 30-second experiments, which supports a direct relationship between processing time and the number of clustered trajectories.
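The relationship between trajectories and clustering time can be checked with a short calculation on the 60-second figures quoted above. The ratio of seconds per trajectory is an illustrative quantity introduced here, not a metric reported in the tables, and the helper `to_seconds` is a hypothetical name.

```python
def to_seconds(minutes, seconds):
    """Convert a (minutes, seconds) duration to seconds."""
    return minutes * 60 + seconds


# (clustering time in seconds, number of trajectories), 60-second snapshots.
runs = {
    "San Francisco": (to_seconds(28, 23), 290),
    "Guayaquil": (to_seconds(18, 32), 218),
    "Rome": (to_seconds(10, 46), 137),
}

# Average clustering time spent per trajectory in each city.
per_trajectory = {city: t / n for city, (t, n) in runs.items()}
for city, value in per_trajectory.items():
    print(f"{city}: {value:.2f} s per trajectory")
```

With these figures the ratio stays within roughly 4.7 to 5.9 seconds per trajectory across the three cities, which is consistent with clustering time growing with the number of trajectories.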

The storage of each snapshot takes less than 0.02 seconds, during which the clustering stops momentarily. This pause does not meaningfully affect the clustering processing time: compared with the 60 seconds between snapshots, it represents about 0.03%.

As for the classification component, the experiments performed with 60-second snapshots show that the Guayaquil dataset has the lowest time, 7 minutes and 40 seconds, followed by Rome with 8 minutes and 30 seconds. San Francisco shows the longest time, 19 minutes and 11 seconds. For the experiments with 30-second snapshots, San Francisco again requires the longest time to classify its clusters, 39 minutes and 1 second, followed by Guayaquil with 31 minutes and 41 seconds and Rome with 15 minutes and 13 seconds. These times could be related to the availability of processing resources in each location or to the complexity of the road networks found in the areas used for classification in each city.

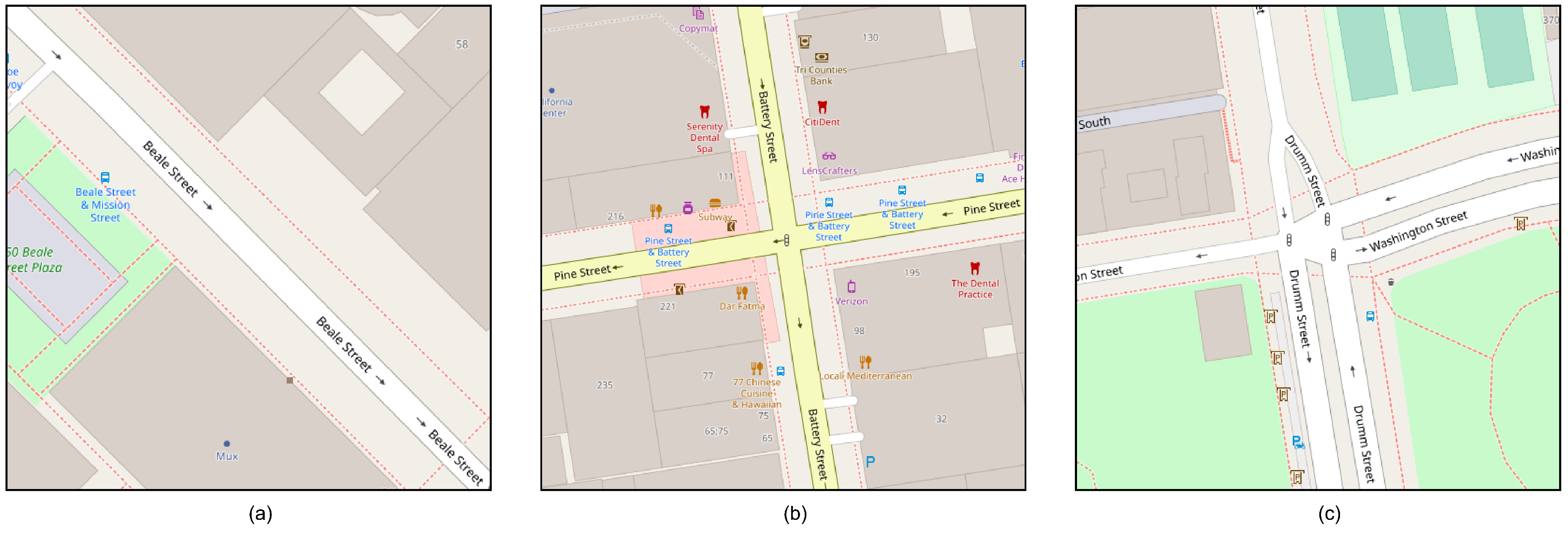

The variability in the layout of the available roads in a city can directly influence the performance of the system. Some examples illustrate the cases faced by the cluster classification component. Figure 12a shows the simplest case, which requires the fewest resources to analyze; Figure 12b shows an urban layout that is very common across cities and requires more time to obtain the traffic valuation; and Figure 12c shows a complex road network composed of multiple intersecting roads.

From the perspective of parallel execution, these results highlight the importance of considering the performance of each component separately. Parallel execution allows the workload to be distributed efficiently, but execution times vary depending on the processing power of each component and how they interact with each other.

To verify the precision of the clustering classification, an evaluation is applied based on the observed results, which are reflected in different confusion matrices for each city evaluated. The confusion matrix delivers a compact portrayal of the classification model’s effectiveness in gauging TCC grid congestion. It quantifies the instances of correct and incorrect predictions within the respective TCC categories.

Table 3 presents the confusion matrices obtained from 60-second snapshots for the San Francisco dataset. For a tolerance of 0.2, the matrix reveals that the model was able to correctly identify 11237 cases of congestion and 2503 cases of non-congestion. However, in the cases of incorrect classifications, there are 166 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 1748 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0.1, the matrix reveals that the model was able to correctly identify 10614 cases of congestion and 2492 cases of non-congestion. However, in the cases of incorrect classifications, there are 176 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 2371 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0, the matrix reveals that the model was able to correctly identify 10029 cases of congestion and 2457 cases of non-congestion. However, in the cases of incorrect classifications, there are 211 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 2956 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.
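The counts reported above for the San Francisco 60-second snapshots can be turned into summary rates with a short sketch. It assumes that the static method acts as the reference labelling, so that a congested case under the static method counts as a positive; the function name `rates` and this mapping of the four counts to true/false positives and negatives are assumptions made for the example.

```python
def rates(tp, tn, fp, fn):
    """Accuracy, true positive rate and true negative rate from four counts."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        "true_positive_rate": tp / (tp + fn),
        "true_negative_rate": tn / (tn + fp),
    }


# Keys are tolerances; fn = model says uncongested while the static method
# says congested, fp = model says congested while the static method does not.
sf_60s = {
    0.2: rates(tp=11237, tn=2503, fp=1748, fn=166),
    0.1: rates(tp=10614, tn=2492, fp=2371, fn=176),
    0.0: rates(tp=10029, tn=2457, fp=2956, fn=211),
}

for tolerance, result in sf_60s.items():
    print(tolerance, {name: round(value, 3) for name, value in result.items()})
```

Under this reading, the agreement with the static method decreases as the tolerance is tightened from 0.2 to 0.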

Table 4 presents the confusion matrices obtained from 30-second snapshots for the San Francisco dataset. For a tolerance of 0.2, the matrix reveals that the model was able to correctly identify 14184 cases of congestion and 3294 cases of non-congestion. However, in the cases of incorrect classifications, there are 1227 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 7062 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0.1, the matrix reveals that the model was able to correctly identify 13335 cases of congestion and 3258 cases of non-congestion. However, in the cases of incorrect classifications, there are 1263 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 7911 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0, the matrix reveals that the model was able to correctly identify 12471 cases of congestion and 3234 cases of non-congestion. However, in the cases of incorrect classifications, there are 1287 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 8775 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

The results of the confusion matrices for the city of Rome using 60-second snapshots are shown in Table 5. For a tolerance of 0.2, the model was able to correctly identify 3195 cases of congestion and 412 cases of non-congestion. However, in the cases of incorrect classifications, there are 30 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 309 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0.1, the matrix reveals that the model was able to correctly identify 3089 congested cases and 412 non-congested cases. However, in the cases of incorrect classifications, there are 30 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 415 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0, the matrix reveals that the model was able to correctly identify 2994 cases of congestion and 408 cases of non-congestion. However, in the cases of incorrect classifications, there are 34 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 510 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

The results of the confusion matrices for the city of Rome using 30-second snapshots are shown in Table 6. For a tolerance of 0.2, the model was able to correctly identify 4689 cases of congestion and 509 cases of non-congestion. However, in the cases of incorrect classifications, there are 390 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 2159 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0.1, the matrix reveals that the model was able to correctly identify 4594 congested cases and 508 non-congested cases. However, in the cases of incorrect classifications, there are 391 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 2254 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0, the matrix reveals that the model was able to correctly identify 4497 cases of congestion and 500 cases of non-congestion. However, in the cases of incorrect classifications, there are 399 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 2351 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

The results of the confusion matrices for the city of Guayaquil using 60-second snapshots are shown in Table 7. For a tolerance of 0.2, the model was able to correctly identify 2576 cases of congestion and 1912 cases of non-congestion. However, in the cases of incorrect classifications, there are 146 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 276 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0.1, the matrix reveals that the model was able to correctly identify 2535 congested cases and 1891 non-congested cases. However, in the cases of incorrect classifications, there are 167 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 317 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0, the matrix reveals that the model was able to correctly identify 2459 cases of congestion and 1872 cases of non-congestion. However, in the cases of incorrect classifications, there are 186 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 393 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

The results of the confusion matrices for the city of Guayaquil using 30-second snapshots are shown in Table 8. For a tolerance of 0.2, the model was able to correctly identify 3364 cases of congestion and 2328 cases of non-congestion. However, in the cases of incorrect classifications, there are 1193 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 1622 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0.1, the matrix reveals that the model was able to correctly identify 3270 congested cases and 2312 non-congested cases. However, in the cases of incorrect classifications, there are 1209 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 1716 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

For a tolerance of 0, the matrix reveals that the model was able to correctly identify 3155 cases of congestion and 2281 cases of non-congestion. However, in the cases of incorrect classifications, there are 1240 cases in which scenarios without congestion are identified when the static method indicates that they are congested scenarios, while in the other category there are 1831 cases in which congested scenarios are identified, but the static method indicates that these scenarios are not congested.

In all cases, the categorization of the clusters produced by the clustering matched the categorization of the grid cells with high accuracy.

When evaluating true positives, that is, the ability to correctly identify congested traffic, the clusters showed a notable degree of agreement with the congested grid cells in each city studied. This underscores the clusters' effectiveness in identifying and matching instances of traffic congestion across diverse urban environments.

Concerning the true negative rate, which reflects the ability to recognize uncongested traffic, a notably high number of matches with the non-congested cells of the static grid was observed.