1. Introduction

Human-Robot Interaction (HRI) has been investigated extensively during the last decade, contributing to a nuanced understanding of how humans and robots engage across diverse environments (for a review, see [1]). Goodrich and Schultz [2] state that all interactions between humans and robots require some type of communication. Further, speech and language are considered to play a vital role in HRI [3]. Nevertheless, for children with special needs, especially those with impaired hearing, non-verbal communication is essential for effective interaction. It is therefore critical that the robot be able to express its emotional states without sound. In other words, making the robot's expressiveness as intelligible and direct as possible without sound can enable effective and positive engagement between hearing-impaired children and the robot. Including children with hearing impairments in HRI research can thus offer substantial advantages: it not only prevents these children from being sidelined in the rapidly evolving domain of robotics but also gives researchers a broader perspective on HRI when auditory communication is not an option.

In this study, we include both hearing and deaf children and assess how they interact with a humanoid robot that uses no sounds or voice. We investigate the children's understanding of robot emotions and examine the role of voice and sound in these interactions by omitting this feature in a controlled experimental context. We also investigate the influence of empathy on recognizing emotions in robots without sound, and whether deaf and hearing children differ in their ability to recognize emotions in the robot when it communicates only through gestures. Specifically, the following research questions are examined in this paper:

RQ1) How do hearing and deaf children assess the believability of humanoid robots without sounds?

RQ2) Can children, both hearing and deaf, recognize emotions in a humanoid robot when the emotions are expressed through non-verbal communication?

RQ3) Are children who see a humanoid robot respond to a video in a congruent manner better able to recognize the robot's emotions?

2. Related Works

2.1. Social Robots

Bartneck and Forlizzi [4] define a social robot as an “autonomous or semi-autonomous robot that interacts and communicates with humans following the behavioral rules expected by the people with whom the robot intends to interact”. The humanoid appearance of social robots facilitates interaction, as demonstrated in a systematic review by Sarrica et al. [5]. These robots are capable of both verbal and non-verbal communication, the latter being particularly relevant when interacting with children with special needs. Zinina et al. [6] found that users significantly preferred robots that used gestures, head movements, eye contact, and mouth animation over robots with stationary body parts. Several studies have shown that expressive robots, capable of displaying human-like emotions, are perceived as friendlier and more human-like, resulting in greater engagement and more pleasant interactions [7,8].

Humanoid social robots are more stimulating for oral dialogue, and therefore also for language development, than devices without these human-like characteristics (e.g., tablets or mobile devices [9,10]). The potential advantages of a social robot over a tablet have also been demonstrated in terms of performance, involvement, and fun [11]. Because robots can exhibit humanoid characteristics, they can also create suitable learning situations. Recent research shows that social robots can be effective tutors [11], and they have been used in various learning-related activities, including second language acquisition. Beyond their beneficial impact on language development, particularly in preschool-aged children, robots can enhance concentration and academic performance in both children and adolescents [12]. The level of involvement and motivation is positively linked to expressive behavior in robotic narration: an expressive robot narrator can be as effective as a human narrator displaying the same behavior, in contrast to static and inexpressive narration [13]. However, more research is needed on these factors in relation to children with hearing disabilities.

2.2. Children with Hearing Disabilities

From birth, the sensory system is accustomed to analyzing the emotional signals produced by caregivers through various communication channels. A child receives information about their caregiver through each channel: facial expressions, tone and volume of voice, and body position [14].

Partan and Marler [15] indicate that during conversations, humans are accustomed to decoding stimuli from multiple sensory channels simultaneously. When one channel is compromised, however, information from the other channels is used to compensate for the deficiency. Hearing plays a pivotal role in every child's development [16]. In deaf children, the compromised auditory channel heightens the significance of non-verbal communication. Deaf and hard-of-hearing children and adolescents are twice as vulnerable to emotional and general health problems as their hearing peers [17]. Some authors have shown that these individuals exhibit lower levels of empathy and encounter more peer-related challenges than their hearing counterparts [18,19,20]. Netten et al. [21] further argue that, given the pivotal role of empathy in initiating and sustaining interactions, empathy is fundamental to the development of deaf children. Consequently, it is both interesting and important to explore deaf children's experiences with robots and their ability to recognize emotions in them. Such an investigation can provide valuable insights into the potential role of robotics in addressing the unique challenges deaf children face in interpersonal communication and emotional understanding.

2.3. Robotics and Empathy

Empathy refers to the ability to perceive the internal state of others and to feel what they feel [22,23]. Furthermore, empathy includes the ability to process an event and respond to it with appropriate emotions [24]. Empathy is thus the basis for functioning well in social interactions and for refraining from antisocial behavior. Moreover, empathy is closely involved in emotion recognition.

A study by Ramachandra and Longacre [25] found that individuals with higher levels of empathy were better at recognizing emotions from whole faces and from the eyes alone. Because empathy also influences helping behavior [23] and the ability to become familiar with others, it is conceivable that its effects extend to interactions with robots.

A study by Charrier et al. [26] showed that empathy influenced how empathic and intelligent the robot was perceived to be, as well as familiarity with and comfort toward the robot. Beck et al. [27] found that the body and head positions of robots can be used to convey emotions to children in human-robot interactions. However, a scoping review found that most studies investigating emotion-related elements of human-robot interaction rely on mechanisms that involve sounds from the robot (e.g., tone, volume, and speed of speech), and little has been done on emotion recognition in soundless interactions [28].

3. Materials and Methods

3.1. Participants and Recruitment

The participants were children in the second year of primary school. To recruit the sample of deaf children, the local center of the Association of Hearing Impaired Families was involved. The association provided a sign language interpreter, who had previously been informed about the details of the experiment and the support required.

The selected children had varying levels of deafness and all wore cochlear implants; children with comorbidities were excluded from the study. However, to promote inclusiveness, a final game session with the NAO robot was scheduled for all non-participants.

Finally, the sample consisted of N = 29 children, of whom n = 11 were male (40.7%) and n = 16 were female (59.3%). The children were aged 6 to 11 years (Mage = 7.59, SD = 1.5). Within the sample, n = 9 children (33.3%) were deaf, and only n = 2 (7.4%) had previous experience with a humanoid robot.

Informed consent was obtained from all parents of the children involved in the study during the recruitment phase.

3.2. Stimulus Material

For this study, the NAO social robot was used. NAO is a 58 cm tall humanoid robot weighing 4.3 kg, capable of expressive gestures thanks to 25 degrees of freedom (DoF): 4 joints per arm and 2 per hand, 5 per leg, 2 for the head, and 1 for the hips.
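The DoF breakdown can be checked with a short tally. This is a minimal sketch that assumes the per-arm, per-hand, and per-leg counts apply to each side of the body, with the head and hip joints counted once; the grouping is our reading of the text, not an official joint list.

```python
# Tally of NAO's degrees of freedom following the breakdown in the text.
# Assumption: groups ending in "_per_side" exist once on the left and once on the right.
DOF_GROUPS = {
    "head": 2,
    "arm_joints_per_side": 4,
    "hand_joints_per_side": 2,
    "leg_joints_per_side": 5,
    "hip": 1,
}


def total_dof(groups: dict[str, int]) -> int:
    """Sum the DoF, doubling the groups that are present on both sides."""
    total = 0
    for name, count in groups.items():
        multiplier = 2 if name.endswith("_per_side") else 1
        total += count * multiplier
    return total


print(total_dof(DOF_GROUPS))  # 25
```

Under this reading, 2 + 2 × (4 + 2 + 5) + 1 gives the 25 DoF stated above.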

The robot can also detect faces and simulate eye contact by moving its head. It can vary the color of the LEDs around its eyes to simulate emotions, and it gathers information about the environment through its sensors and microphones. The robot is programmed with a graphical programming tool called Choregraphe [29], which provides an intuitive way to design complex behaviors, including interfaces for non-verbal communication such as gestures, sounds, and LEDs. NAO is designed and built by the French company Aldebaran (United Robotics Group), which clearly documents how to use it safely.

Its innovative, light, and compact design makes it well suited to experiments involving interaction with children [30,31]. For this reason, NAO has been widely adopted: according to Robaczewski et al. [32], by 2021 more than 51 publications, with a total of over 1895 participants, had reported studies in which the NAO robot supported learning and rehabilitation.

3.3. Instruments

3.3.1. Emotion Recognition of Video

First, following the experiment by Tsiourti et al. [14], the participants were given a sheet displaying 11 emotions (amusement, anger, disgust, despair, embarrassment, fear, happiness/joy, neutral, sadness, shame, surprise). The children were asked to use the sheet to choose which emotion was displayed in each video.

Prior to the experiment, a pre-test was carried out with 5 children (2 females and 3 males, Mage = 8) to verify that each video displayed the expected emotion. Specifically, the children were asked “What emotion does this video display?”, and all children independently agreed on the displayed emotions.

3.3.2. Emotion Recognition of NAO Robot

The robot's emotional reactions were programmed with the Choregraphe application [29]. Because NAO cannot convey emotions through facial expressions, body language is the robot's main communication channel. Zinina et al. [6] found that a robot's appeal is influenced by its active features, the most important being the hands, followed by the mouth, head, and eyes. Robots with hand gestures are preferred over those with only head movements.

Research on eye movements and NAO's LED colors is limited. Colored LEDs have been used alongside gestures for emotional expression in robotic storytelling [33], but colored light was not found to improve the recipients' transportation into the story. In another study, Johnson and Cuijpers [34] examined how the head position of a humanoid robot influences the imitation of human emotions. Participants adjusted the robot's head to express anger, disgust, fear, happiness, sadness, and surprise. The findings revealed expected gaze directions: down for anger or sadness, up for surprise or happiness, and down and to the right for fear. Expressions of disgust varied, with some participants expecting a rightward gaze and others a downward and leftward gaze.

In this study, the robot's emotional displays were inspired by Beck et al. [35]. As in the experiment by Tsiourti et al. [14], the robot expressed an emotion after each video had finished. The participants were asked to indicate which emotion the NAO robot displayed, using the sheet provided to them.

3.3.3. Empathy

The Empathy Questionnaire for Children and Adolescents (EmQue-CA) [36] was used. The questionnaire consists of 14 items divided into three subscales, which measure three dimensions of empathy: affective empathy (e.g., “If my mother is happy, I also feel happy”), cognitive empathy (e.g., “When a friend is angry, I tend to know why”), and prosocial motivation (e.g., “If a friend is sad, I like to comfort him”). All responses are rated on a 3-point Likert scale (1 = not true, 2 = sometimes true, 3 = often true). The questionnaire has shown good psychometric properties in various samples from the age of seven [37,38], and it has been translated and validated for use with Italian children [38].

Mean scores were calculated for each subscale, with higher scores indicating higher empathy. Means and Cronbach's alpha values are presented in Table 1.

3.3.4. State Empathy

The State Empathy Scale [39], which measures empathy during message processing, was used. The questionnaire consists of three subscales of 4 items each, measuring three dimensions of state empathy: affective empathy (e.g., “The character´s emotions are genuine”), cognitive empathy (e.g., “I can see the character’s point of view”), and associative empathy (e.g., “I can relate to what the character was going through in the message”). The scale has shown good reliability and validity among college students [39].

Means and Cronbach's alpha values are shown in Table 1.

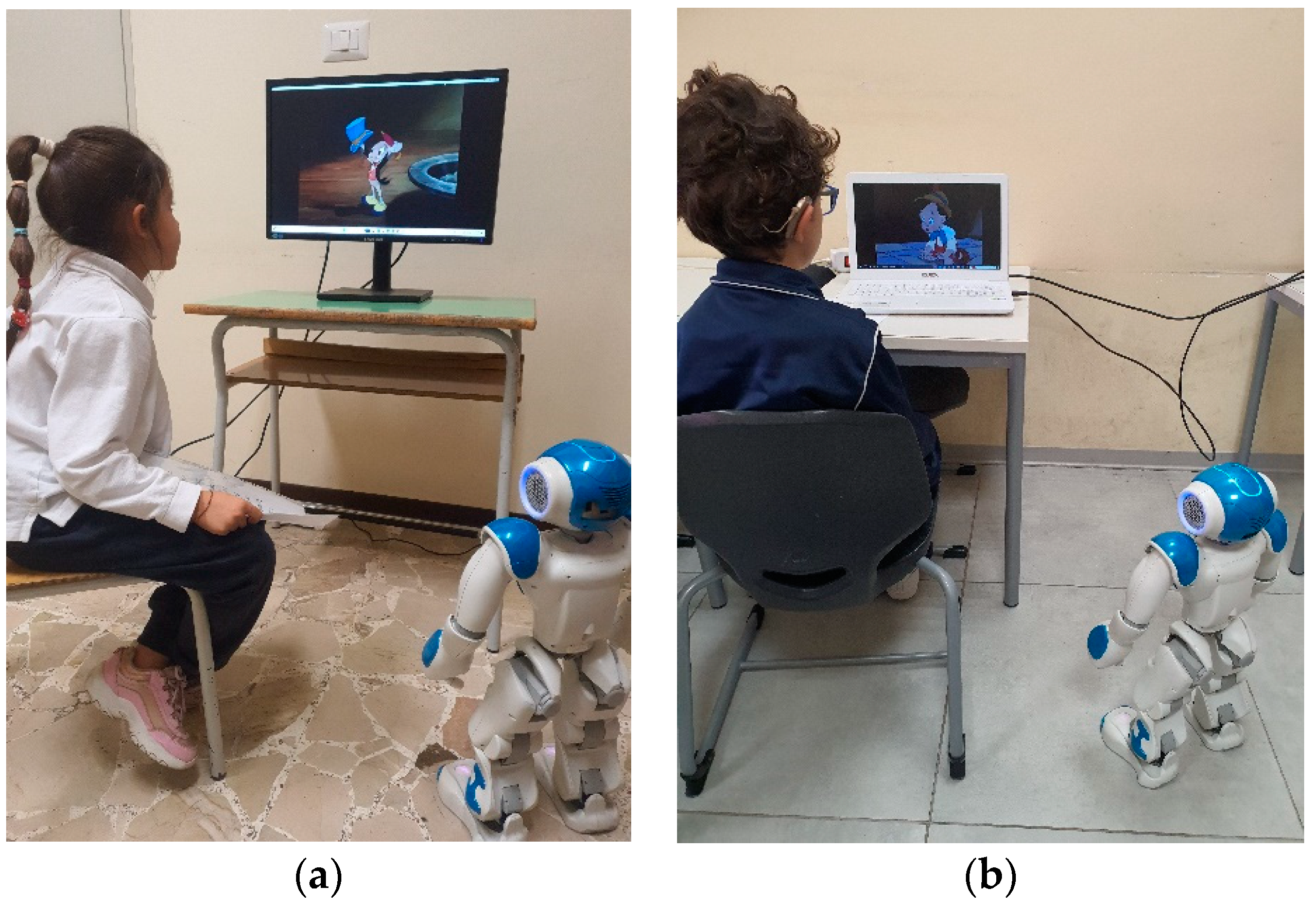

3.4. Experimental Design

The experiment was conducted in two different settings (a primary school and a center for deaf children). Before the experimental session, to minimize possible novelty effects, all children met the robot and interacted with it for 20 minutes (see Figure 1).

All experiments took place in a separate, well-lit room containing a computer screen, the humanoid robot, and two researchers. In the sessions with deaf children, an interpreter communicated with the children on behalf of the researchers using sign language. In all cases, the experiment was conducted without any sound. Each experiment lasted approximately 30 minutes and was divided into three sessions.

Specifically, in the first session, the participants were asked to sit in front of the computer screen together with the NAO robot. They then answered questions on demographic information and completed the EmQue-CA [36].

In the second session, the children watched three video clips from well-known Disney movies. The clips were chosen in advance because their body language conveyed the emotion clearly, so that speech was not necessary to understand the emotion unfolding on the screen. The clips were shown in random order, but all participants saw the same three 1-minute clips, which showed the emotions happy, sad, and angry.

The clip displaying happiness showed the final scene of Pinocchio, in which the puppet transforms into a real boy and the characters express happiness and celebrate together. The clip displaying sadness, from Lilo & Stitch, showed a little girl trying to relate to a group of her peers; because she is poor, she does not have the same toys as the other girls, who insult her and leave her alone. Finally, for anger, a clip from The Incredibles was shown, in which the whole family has dinner together and everyone starts to argue during the meal. After each clip, NAO was programmed to respond with an emotion. In the congruent condition, NAO responded congruently (i.e., with the same emotion as shown in the video), whereas in the incongruent condition the robot responded incongruently (i.e., with a different emotion from the one shown in the video).
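The stimulus logic of the second session can be summarized as a per-child plan. Each child sees the three clips in random order, and each clip is followed by NAO's response. That response matches the clip's emotion in the congruent condition and differs from it in the incongruent condition. The sketch below assumes that the incongruent response is drawn at random from the two remaining target emotions. The source does not say how the incongruent emotion was chosen, so this rule, the function name, and the seeding are hypothetical.

```python
import random

# Target emotion of each clip, as described in the text.
CLIPS = {"Pinocchio": "happy", "Lilo & Stitch": "sad", "The Incredibles": "angry"}
EMOTIONS = sorted(set(CLIPS.values()))


def session_plan(condition, rng):
    """Return [(clip, clip_emotion, robot_emotion), ...] for one child.

    condition: "congruent" or "incongruent".
    Assumption: in the incongruent condition NAO shows a randomly chosen
    emotion that differs from the clip's emotion.
    """
    if condition not in ("congruent", "incongruent"):
        raise ValueError(f"unknown condition: {condition}")
    order = list(CLIPS)
    rng.shuffle(order)  # clips are shown in random order
    plan = []
    for clip in order:
        target = CLIPS[clip]
        if condition == "congruent":
            robot = target
        else:
            robot = rng.choice([e for e in EMOTIONS if e != target])
        plan.append((clip, target, robot))
    return plan


rng = random.Random(42)  # fixed seed so that a plan can be reproduced
for step in session_plan("incongruent", rng):
    print(step)
```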

After each emotion was shown by the video and by NAO, the children were individually asked to identify and report the emotions they perceived in the video and in the robot.

In the third session, the children completed the believability items from Tsiourti et al. [14] and the State Empathy Scale [39]. When the experiment was finished, the children could interact and play with the robot and ask questions about the experiment. This phase also served as a debriefing.

3.5. Statistical analysis

All statistical analyses were performed in SPSS for Windows (version 29; IBM Corp.). Basic descriptive statistics, including means, standard deviations, percentages, frequency distributions, and Cronbach's alpha for each scale, were calculated. The cognitive state empathy items were excluded from further analyses because of low Cronbach's alpha.

The numbers of correct recognitions of NAO's emotions and of the emotions displayed in the videos were summed. Since each participant identified three emotions, scores ranged from 0 to 3, reflecting how many correct answers each child gave. First, an exploratory correlation analysis between the variables was conducted. Subsequently, a confirmatory linear regression was conducted, with the total number of correctly recognized emotions in NAO as the dependent variable.

A significance level (p-value) of less than .05 was applied for all tests.
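The 0 to 3 scoring described above awards one point for each correctly identified emotion, separately for the videos and for NAO. A minimal sketch follows. The stimulus ids and the example answers are hypothetical, and the labels come from the 11-option sheet. For NAO, the target is the emotion the robot displayed, which in the incongruent condition differs from the video's emotion.

```python
def recognition_score(answers, targets):
    """Count correct identifications (0..len(targets)).

    answers, targets: dicts mapping a stimulus id to an emotion label.
    A missing answer counts as incorrect.
    """
    return sum(1 for stim, target in targets.items() if answers.get(stim) == target)


# Hypothetical targets for NAO's three displays and one child's choices on the sheet.
nao_targets = {"trial_1": "happiness/joy", "trial_2": "sadness", "trial_3": "anger"}
child_answers = {"trial_1": "happiness/joy", "trial_2": "fear", "trial_3": "anger"}
print(recognition_score(child_answers, nao_targets))  # 2
```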

4. Results

4.1. Descriptives

All correlations between the variables are presented in Table 2. A Pearson correlation of r = .63 was found between emotion recognition in NAO and emotion recognition in the videos. Further, sex was correlated with emotion recognition in NAO (r = .54).

4.2. Attitudes and Believability toward the NAO Robot

All in all, the children rated NAO's believability as satisfactory (M = 3.84; SD = 0.83). The level of congruence correlated with believability and predicted believability in a linear regression analysis (β = -.451, p = .01). Further, there was a significant difference between the congruent (M = 4.27; SD = 1.22) and incongruent groups (M = 3.0; SD = 1.41) in how easy they found it to understand what the robot was thinking (t(25) = 2.496, p = .02), as well as in how appropriate they found the emotion expressed by NAO (Mcongruent = 4.73, SDcongruent = 0.45; Mincongruent = 2.75, SDincongruent = 1.71; t(12.26) = 3.903, p = .001; see Table 3 for the frequency distribution of responses to each item in the congruent and incongruent groups, respectively).

4.3. Emotion Recognition in NAO

A large proportion of the children reported that it was easy to guess the emotion of the NAO robot (M = 4.19, SD = 1.15). The congruent group tended to find it easier to guess NAO's emotion (M = 4.60, SD = 0.63) than the incongruent group (M = 3.67, SD = 1.44), but the difference was not significant.

The average number of correctly recognized emotions in NAO was M = 1.96 (SD = 1.09). The children most often correctly identified happiness and sadness in NAO (n = 18, 66.7% for both emotions), followed by anger (n = 17, 63.0%). In the regression analysis predicting correct emotion recognition in the NAO robot, only the ability to recognize emotions in the videos (β = 0.720, p < .001) and sex (β = .166, p = .04) were significant predictors (see Table 5 for a summary of the regression results), indicating that girls were more likely to identify NAO's emotions correctly.

Neither empathy nor hearing status significantly predicted the recognition of NAO's emotions without sound.

5. Discussion and Conclusions

In this study, we have reported the results of a controlled experiment in which a robot displayed emotions through non-verbal communication only, that is, through gestures and head movements. The purpose of the experiment was to investigate how young children, both deaf and hearing, assessed the robot's believability, and whether they were able to recognize the emotions displayed by the robot. In the experimental group, the children saw the NAO robot respond congruently to a cartoon video, while in the control group they saw NAO respond incongruently to the video.

Overall, the children in this study reported medium to high believability toward the robot. In line with the findings of Tsiourti et al. [14], watching NAO display emotions congruently predicted higher believability. Further, the children who saw NAO display emotions congruent with the video found it easier to understand what the robot was thinking, and they rated the robot's reaction as more appropriate than the control group did.

The children showed a medium ability to recognize emotions in NAO when the robot displayed them through non-verbal communication. Happiness and sadness were the emotions most frequently identified correctly, in line with previous findings on emotion recognition in humans [40]. Previous findings indicate that empathy affects people's assessments of various human-like qualities in robots (e.g., level of intelligence [26]). Surprisingly, in our study higher levels of empathy did not predict better recognition of emotions in the robot. Further, no difference in empathy levels was found between conditions or groups, in contrast to previous findings suggesting that deaf children have lower levels of empathy than hearing children [18,19,20].

Contrary to previous findings [14], there was no difference between the congruent and incongruent conditions in the correct recognition of NAO's emotions. That is, when the robot responded with emotions congruent with those displayed in the video, the children were not more likely to identify the robot's emotions correctly. However, the ability to identify the emotions displayed in the videos predicted the ability to recognize the emotions NAO displayed, regardless of congruence. Girls were also more accurate than boys in identifying NAO's emotions. Although research on emotion recognition in HRI is limited, research on general emotion recognition shows that this process is affected by a number of factors, including general self-concept and age [40]. Further, previous findings have suggested that deaf children are less able than hearing children to recognize emotions in others. For example, Wiefferink et al. [41] showed that children with cochlear implants recognized fewer emotions than children with typical hearing, and that emotion recognition skills are affected by hearing loss, including when emotions are expressed non-verbally. In our study, no differences were found between deaf and hearing children in correctly identifying NAO's emotions.

Finally, several limitations need to be considered. First, the sample, including the number of participants in each condition, was relatively small, which may limit how far the findings generalize to a broader population. Second, the assessment focused on only three emotions, potentially limiting the understanding of a more comprehensive range of emotional expressions. Third, the study mainly involved participants with similar cultural backgrounds, potentially limiting the generalizability of the results to more diverse populations.

This study may offer an innovative contribution to future research in a field that has not yet been investigated, namely robot emotions and deafness, and to a broader understanding of how deaf children interact with humanoid robots. In conclusion, this study pursued three primary objectives. First, we examined children's perception of the robot's believability. Second, we explored how children, both hearing and deaf, recognize emotions in a robot. Third, we investigated whether observing a robot respond congruently to a film clip affects children's ability to recognize the emotions displayed by the NAO robot. The findings indicated that children generally rated the robot's believability as medium to high and that congruent responses predicted higher believability, whereas there was no significant difference between the congruent and incongruent conditions in recognizing the robot's emotions. Notably, the ability to recognize emotions in a video and being female emerged as predictors of the ability to identify emotions in the robot. Moving forward, future research should employ larger samples, incorporate diverse cultural perspectives, and encompass a broader range of emotions to enhance the generalizability and depth of our understanding in this field.

Author Contribution: Conceptualization, C.C. and D.C.; methodology, C.C. and H.H.; validation, C.C., H.H. and D.C.; investigation, C.C. and H.H.; data curation, H.H.; writing—original draft preparation, C.C.; writing—review and editing, C.C., H.H. and D.C.; supervision, D.C.; project administration, D.C.; funding acquisition, D.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been fully supported by the “START to Aid” (209564) project (PIACERI - PIAno di inCEntivi per la RIcerca di Ateneo) of the Department of Humanities, University of Catania (Italy).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of Oasi Research Institute and Sheffield Hallam University (protocol code 2017 l0lI I7ICE-IRCCS-OASV4) for studies involving humans.

Informed Consent Statement

Informed consent was obtained from all parents of the participating children involved in the study.

Data Availability Statement

Acknowledgments

The authors gratefully thank all participants of this study for the generous contribution of their time. Also, thanks to the schools “I.C.S. Elio Vittorini” San Pietro Clarenza CT; “C.D. Teresa di Calcutta, Tremestieri Etneo, CT”; “AFAE-association of families of the hearing impaired in Etna, CT” for the collaboration, and the psychologist Olga Distefano for the support during the recruitment process.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sheridan, T. B.; Human–Robot Interaction: Status and Challenges. Human Factors, 2016, 58(4), 525-532. [CrossRef]

- Goodrich, M. A.; Schultz, A. C.; Human–Robot Interaction: A Survey. Foundations and Trends® in Human–Computer Interaction, 2008, 1(3), 203–275. [CrossRef]

- Bonarini, A.; Communication in Human-Robot Interaction. Current Robotics Reports, 2020, 1(4), 279-285. [CrossRef]

- Bartneck C.; Forlizzi J.; A design-centered framework for social human-robot interaction. In Proceedings of the 13th IEEE International Workshop on Robot and Human Interactive Communication (RO-MAN 2004), 2004, pp. 591–594. [CrossRef]

- Sarrica, M.; Brondi, S.; Fortunati, L.; How many facets does a “social robot” have? A review of scientific and popular definitions online. Information Technology & People, 2020, 33(1), 1–21. [CrossRef]

- Zinina A.; Zaidelman L.; Arinkin N.; Kotov A.; Non-verbal behavior of the robot companion: a contribution to the likeability. Procedia Computer Science, 2020, 169, 800–806. [CrossRef]

- Hall J.; Tritton T.; Rowe A.; Pipe A.; Melhuish C.; Leonards U.; Perception of own and robot engagement in human robot interactions and their dependence on robotics knowledge. Robotics and Autonomous Systems, 2014, 62(3), 392–399. [CrossRef]

- Breazeal C.; Emotion and sociable humanoid robots. International Journal of Human-Computer Studies, 2003, 59(1–2), 119–155. [CrossRef]

- Van den Berghe R.; Verhagen J.; Oudgenoeg-Paz O.; van der Ven S.; Leseman P.P.M.; Social robots for language learning: a review. Review of Educational Research. 2018. [CrossRef]

- Randall N.; A survey of robot-assisted language learning (RALL). ACM Transactions on Human-Robot Interaction, 2019, 9, 36. [CrossRef]

- Konijn, E.A.; Jansen, B.; Mondaca Bustos, V.; et al.; Social Robots for (Second) Language Learning in (Migrant) Primary School Children. International Journal of Social Robotics, 2022, 14, 827–843. [CrossRef]

- Belpaeme T.; Kennedy J.; Ramachandran A.; Scassellati B.; Tanaka F.; Social robots for education: a review. Science Robotics, 2018, 3, 1–9. [CrossRef]

- Conti D.; Cirasa C.; Di Nuovo S.; Di Nuovo A.; ‘Robot, tell me a tale!’: A Social Robot as tool for Teachers in Kindergarten, Interaction Studies, 2020, vol. 21, no. 2, pp. 220–242. [CrossRef]

- Tsiourti, C.; Weiss, A.; Wac, K.; et al.; Multimodal Integration of Emotional Signals from Voice, Body, and Context: Effects of (In)Congruence on Emotion Recognition and Attitudes Towards Robots. International Journal of Social Robotics, 2019, 11, 555–573. [CrossRef]

- Partan S.; Marler P.; Communication goes multimodal. Science, 1999, 283(5406), 1272–1273. [CrossRef]

- Signoret C.; Rudner, M.; Hearing impairment and perceived clarity of predictable speech. Ear and Hearing, 2019, 40(5), 1140–1148. [CrossRef]

- Garcia, R.; Turk, J.; The applicability of Webster-Stratton parenting programmes to deaf children with emotional and behavioral problems, and autism, and their families: Annotation and case report of a child with autistic spectrum disorder. Clinical Child Psychology and Psychiatry, 2007, 12(1), 125–136. [CrossRef]

- Ashori, M.; Psychology, education & rehabilitation of people with hearing impairment (1st ed.). Isfahan University Press, 2022. [CrossRef]

- Terlektsi E.; Kreppner J.; Mahon M.; Worsfold S.; Kennedy C. R.; Peer relationship experiences of deaf and hard-of-hearing adolescents. The Journal of Deaf Studies and Deaf Education, 2020, 25(2), 153–166. [CrossRef]

- Rieffe, C.; Awareness and regulation of emotions in deaf children. British Journal of Developmental Psychology, 2012, 30(4), 477–492. [CrossRef]

- Netten A. P.; Rieffe, C.; Theunissen S. C. P. M.; Soede, W.; Dirks, E.; Briaire, J. J.; Frijns, J. H. M.; Low empathy in deaf and hard of hearing (pre) adolescents compared to normal hearing controls. PLoS One, 2015, 10(4), 1–16. [CrossRef]

- Batson C. D.; These Things Called Empathy: Eight Related but Distinct Phenomena. In The Social Neuroscience of Empathy; The MIT Press, 2009. [CrossRef]

- Batson C. D.; Early S.; Salvarani G.; Perspective taking: Imagining how another feels versus imagining how you would feel. Personality and Social Psychology Bulletin, 1997, 23, 751–758. [CrossRef]

- O'Connell G.; Christakou A.; Haffey A. T.; Chakrabarti B.; The role of empathy in choosing rewards from another's perspective. Frontiers in Human Neuroscience, 2013, 7, 1-5. [CrossRef]

- Ramachandra V.; Longacre H.; Unmasking the psychology of recognizing emotions of people wearing masks: The role of empathizing, systemizing, and autistic traits. Personality and Individual Differences, 2022, 185, 111249. [CrossRef]

- Charrier L.; Galdeano A.; Cordier A.; Lefort M.; Empathy display influence on human-robot interactions: a pilot study. In Workshop on Towards Intelligent Social Robots: From Naive Robots to Robot Sapiens at the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2018), 2018, p. 7.

- Beck A.; Cañamero L.; Hiolle A.; Damiano L.; Cosi P.; Tesser F.; Sommavilla G.; Interpretation of emotional body language displayed by a humanoid robot: A case study with children. International Journal of Social Robotics, 2013, 5, 325–334. [CrossRef]

- Gasteiger N.; Lim J.; Hellou M.; et al.; A Scoping Review of the Literature on Prosodic Elements Related to Emotional Speech in Human-Robot Interaction. International Journal of Social Robotics, 2022. [CrossRef]

- Pot E.; Monceaux J.; Gelin R.; Maisonnier B.; Choregraphe: a graphic tool for humanoid robot programming. In RO-MAN 2009, The 18th IEEE International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 2009, pp. 46–51. [CrossRef]

- Gomes, P.; Paiva, A.; Martinho, C.; Jhala, A.; Metrics for Character Believability in Interactive Narrative. In: Koenitz, H., Sezen, T.I., Ferri, G., Haahr, M., Sezen, D., Çatak, G. (eds) Interactive Storytelling. ICIDS 2013. Lecture Notes in Computer Science, 2013, vol 8230. Springer, Cham. [CrossRef]

- Conti D.; Di Nuovo A.; Cirasa C.; Di Nuovo S.; A Comparison of Kindergarten Storytelling by Human and Humanoid Robot with Different Social Behavior. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, 2017, 97–98. [CrossRef]

- Robaczewski, A.; Bouchard, J.; Bouchard K.; Gaboury, S.; Socially Assistive Robots: The Specific Case of the NAO. International Journal of Social Robotics. 2021, 13. [CrossRef]

- Steinhaeusser S.C.; Lugrin B.; Effects of Colored LEDs in Robotic Storytelling on Storytelling Experience and Robot Perception. In Proceedings of the 2022 ACM/IEEE International Conference on Human-Robot Interaction (HRI 2022). IEEE Press, 2022.

- Johnson, D.O.; Cuijpers, R.H.; Investigating the Effect of a Humanoid Robot’s Head Position on Imitating Human Emotions. International Journal of Social Robotics, 2019, 11, 65–74. [CrossRef]

- Beck A.; Cañamero L.; Bard K.A.; Towards an Affect Space for robots to display emotional body language. In Proceedings of the International Symposium in Robot and Human Interactive Communication (2010, Viareggio, Italy), IEEE, 2010, 464–469. [CrossRef]

- Overgaauw S.; Rieffe C.; Broekhof E.; Crone E. A.; Güroğlu B.; Assessing empathy across childhood and adolescence: Validation of the empathy questionnaire for children and adolescents (emque-CA). Frontiers in Psychology, 2017, 8, 870. [CrossRef]

- Lazdauskas T.; Nasvytienė D.; Psychometric properties of Lithuanian versions of empathy questionnaires for children. European Journal of Developmental Psychology, 2021, 18(1), 144–159. [CrossRef]

- Liang Z.; Mazzeschi C.; Delvecchio E.; Empathy questionnaire for children and adolescents: Italian validation, European Journal of Developmental Psychology, 2023, 20:3, 567-579. [CrossRef]

- Shen, L.; On a scale of state empathy during message processing. Western Journal of Communication, 2010, 74(5), 504–524. [CrossRef]

- Cordeiro, T.; Botelho, J.; Mendonça, C.; Relationship Between the Self-Concept of Children and Their Ability to Recognize Emotions in Others. Frontiers in Psychology, 2021, 12, 672919. [CrossRef]

- Wiefferink, C. H.; Rieffe, C.; Ketelaar, L.; De Raeve, L.; Frijns, J. H.; Erratum to Emotion understanding in deaf children with a cochlear implant. Journal of Deaf Studies and Deaf Education, 2013, 18(4), 563. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).