Submitted: 09 January 2024

Posted: 11 January 2024


Abstract

Keywords:

1. Introduction

- Studying the effect of aerosol variables on the performance of five DL-based models (LSTM, GRU, BiLSTM, BiGRU, and LSTM-AE) for next-hour GHI forecasting, using data from a location with a hot desert climate.

- Using two different data sources, ground-based and satellite-based, to validate the forecasting results.

- Presenting the forecasting results using visualization and several evaluation metrics, including root mean square error (RMSE), mean absolute error (MAE), mean absolute percentage error (MAPE), and forecast skill (FS).

2. Related Work

3. Methodology

3.1. Data Preprocessing

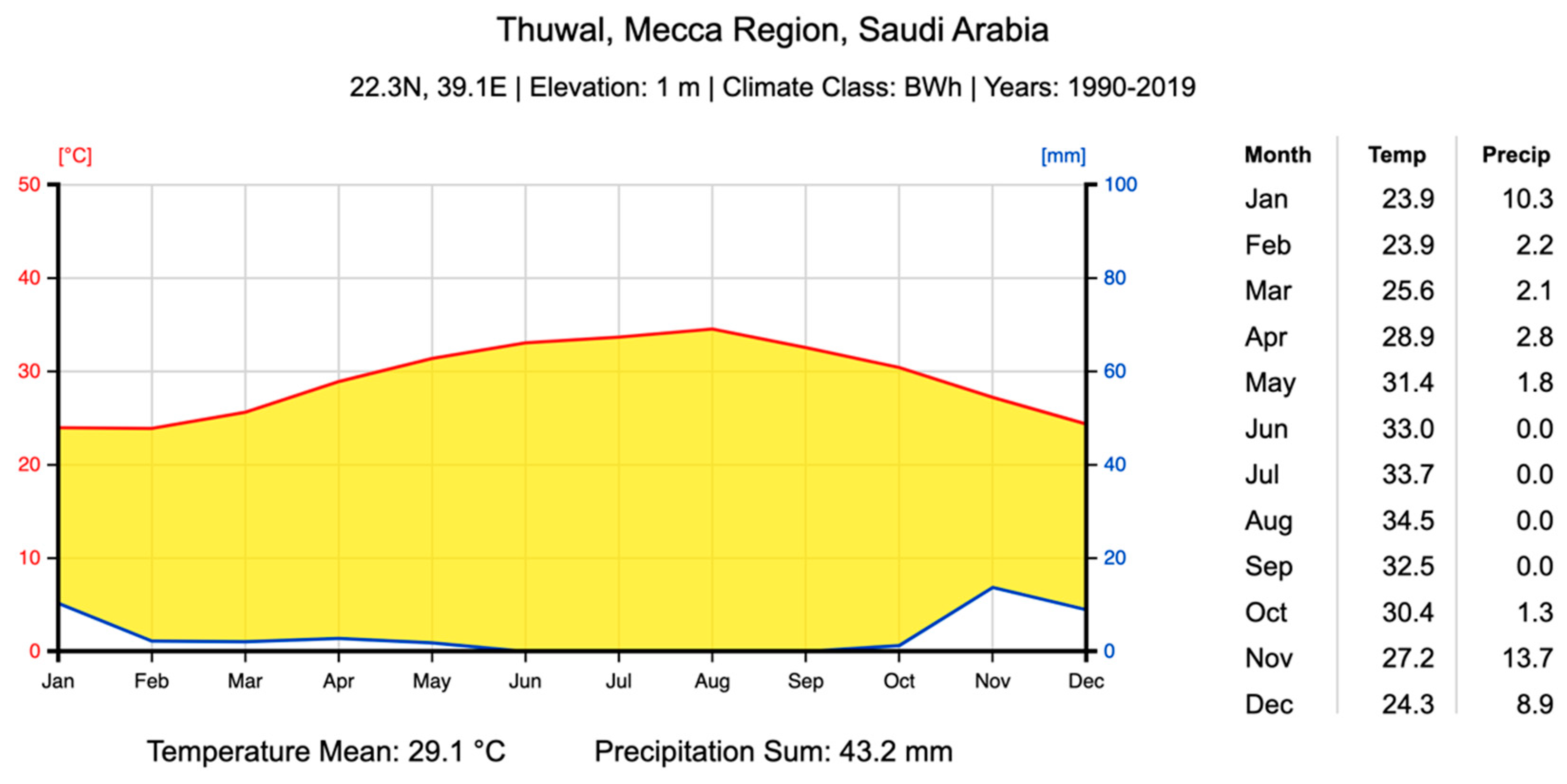

3.1.1. Data Collection

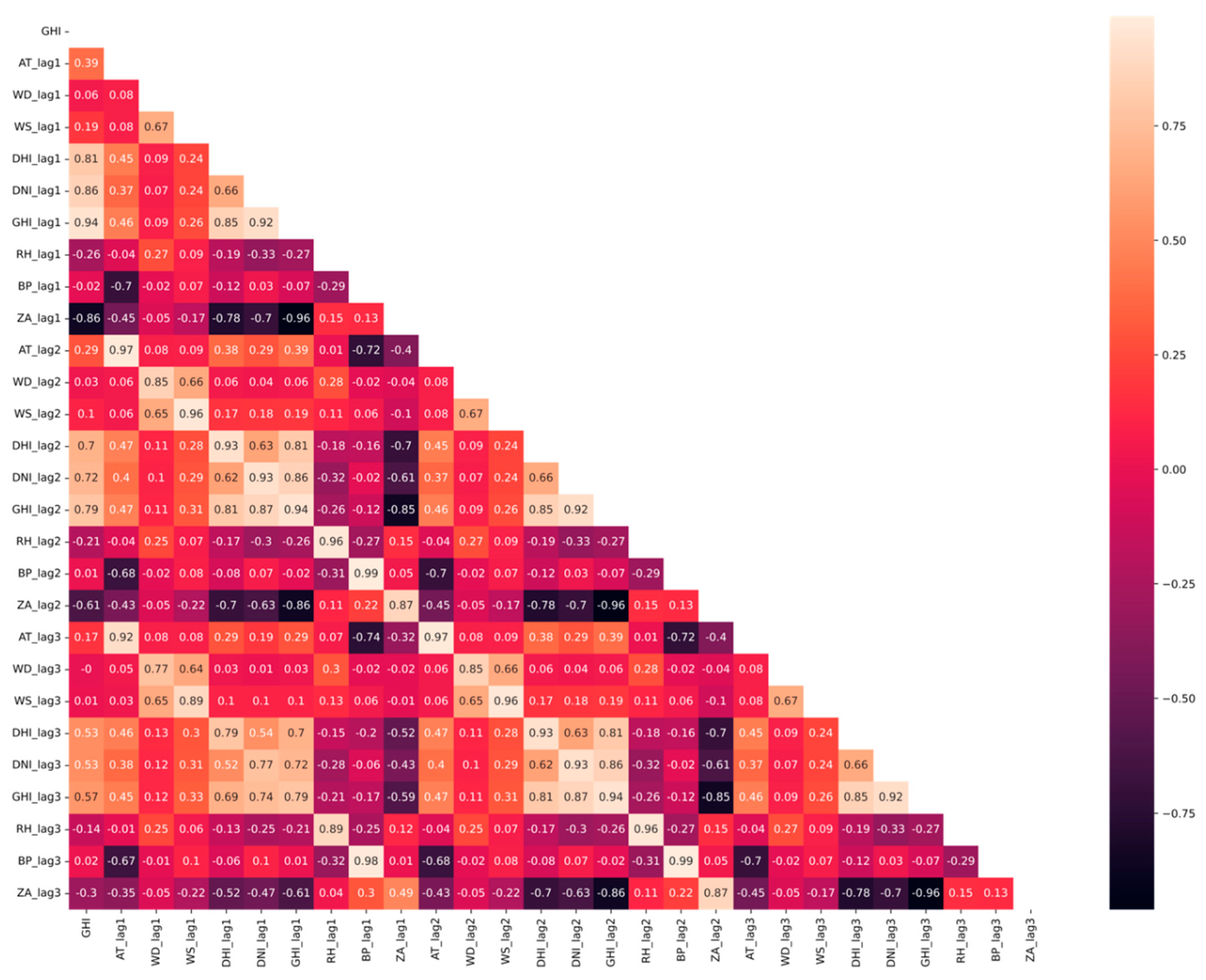

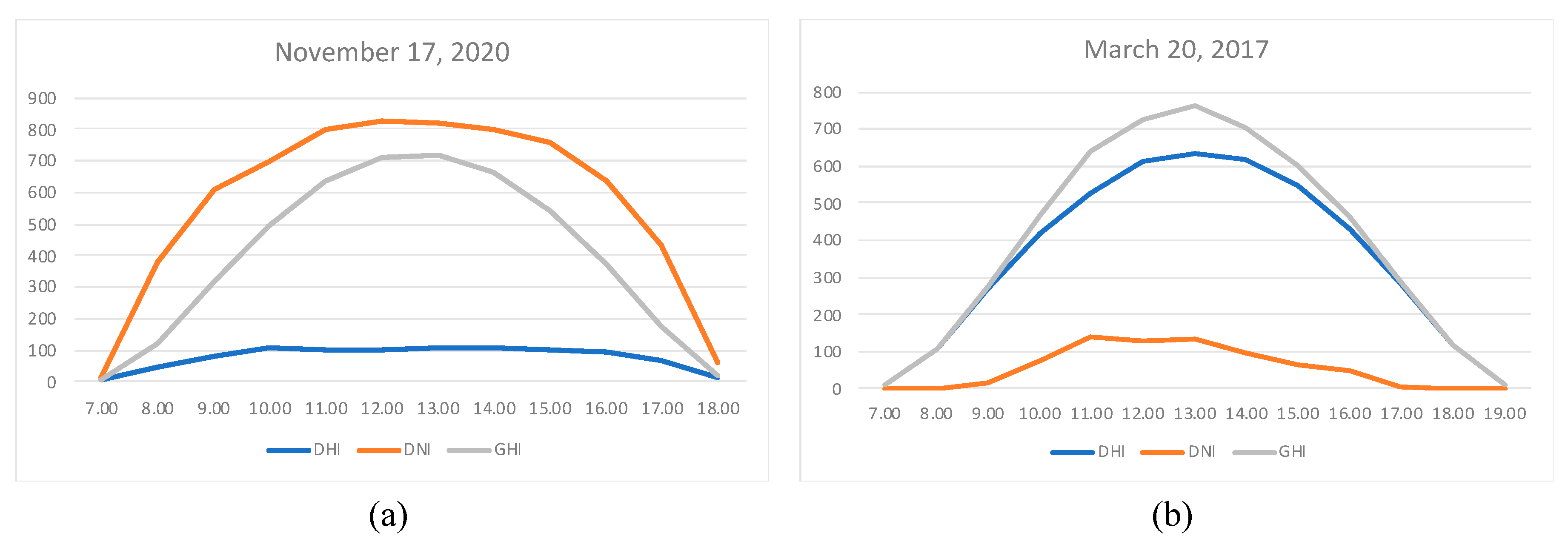

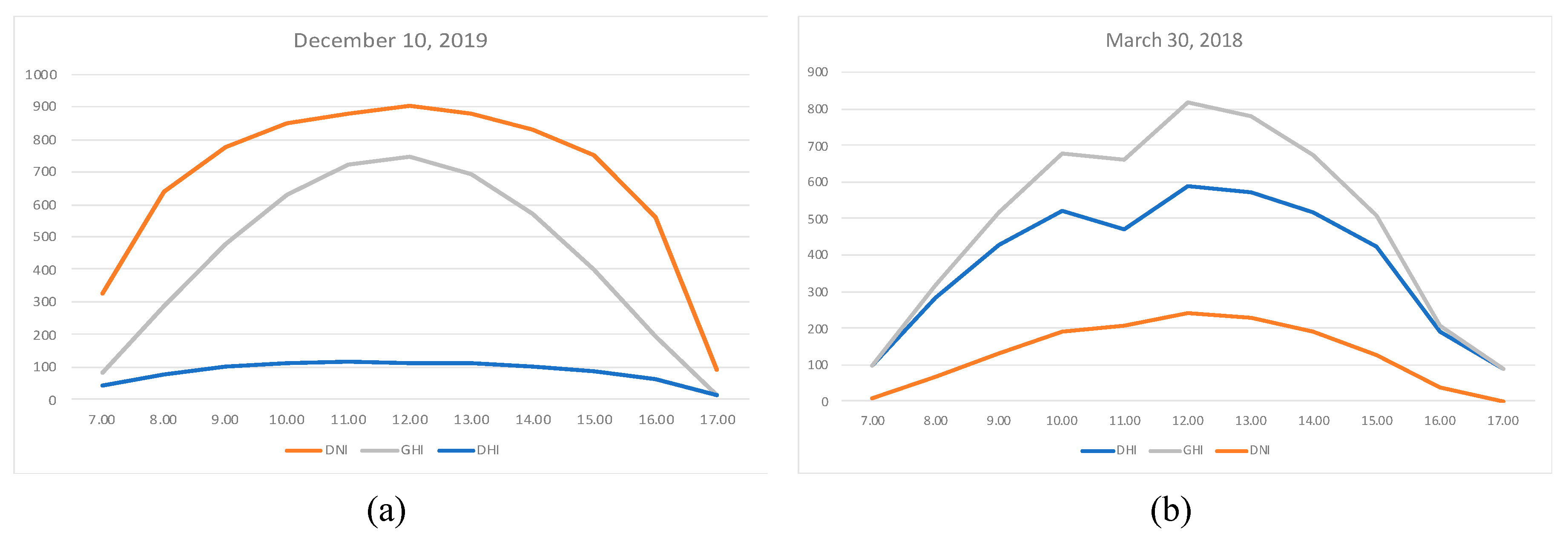

3.1.2. Data Cleaning and Feature Extraction

- K.A.CARE dataset
  - Output: GHI in watt-hours per square meter (Wh/m²)
  - DHI in Wh/m²
  - DNI in Wh/m²
  - ZA in degrees (°)
  - AT in degrees Celsius (°C)
  - WS, measured at 3 m, in meters per second (m/s)
  - WD, measured at 3 m, in degrees (°)
  - Barometric pressure (BP) in pascals (Pa)
  - RH in percent (%)

- AERONET dataset
  - AOD at 500 nm (AOD_500)
  - AOD at 551 nm (AOD_551)
  - AE for the wavelength range 440–675 nm (440-675_AE)
  - Optical air mass (OAM)

| Time t features | Time t-1 features | Time t-1 features (cont.) | Time t features, last n days |
|---|---|---|---|
| GHI (output) | GHI_lag1 | WD_lag1 | GHI_1D |
| | DNI_lag1 | RH_lag1 | GHI_2D |
| Hour | DHI_lag1 | BP_lag1 | GHI_3D |
| Day | ZA_lag1 | AOD_500_lag1 | GHI_4D |
| Month | AT_lag1 | 440-675_AE_lag1 | |
| | WS_lag1 | OAM_lag1 | |
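The column names in the table above suggest two kinds of lagged predictors: `X_lag1`, the value of variable X one hour before the forecast hour t, and `GHI_nD`, the GHI at the same hour n days earlier. The following is a minimal pandas sketch of how such columns could be built from an hourly, timestamp-indexed table, assuming that interpretation; `add_lag_features` and its arguments are hypothetical names, not the authors' code. Lags are taken by timestamp rather than by row position, so a missing hour or day yields a gap that is dropped instead of a silently misaligned value.

```python
import numpy as np
import pandas as pd


def add_lag_features(df, lag_cols, hour_lags=(1,), day_lags=(1, 2, 3, 4)):
    """Hypothetical helper: add X_lagk and GHI_nD columns to an hourly DataFrame.

    df needs a unique DatetimeIndex; rows for missing hours may simply be absent.
    """
    out = df.copy()
    for col in lag_cols:
        for k in hour_lags:
            # Value of `col` exactly k hours earlier, matched by timestamp (NaN if that hour is missing)
            out[f"{col}_lag{k}"] = df[col].shift(k, freq=pd.Timedelta(hours=1))
    for n in day_lags:
        # GHI at the same clock hour n days earlier
        out[f"GHI_{n}D"] = df["GHI"].shift(n, freq=pd.Timedelta(days=1))
    # Calendar features taken at the forecast time t
    out["Hour"] = df.index.hour
    out["Day"] = df.index.day
    out["Month"] = df.index.month
    # Drop rows whose lagged inputs are unavailable
    return out.dropna()


# Example: six days of synthetic hourly data with one missing hour
idx = pd.date_range("2020-01-01", periods=144, freq=pd.Timedelta(hours=1))
raw = pd.DataFrame({"GHI": np.arange(144.0), "DNI": 2 * np.arange(144.0)}, index=idx)
raw = raw.drop(idx[100])
feats = add_lag_features(raw, lag_cols=["GHI", "DNI"])
```

Aerosol variables such as `AOD_500` would simply be passed as additional entries in `lag_cols`. Because the missing hour is removed, the hour right after it (no `GHI_lag1`) and the hour one day later (no `GHI_1D`) are dropped as well.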

- NSRDB dataset
  - Output: GHI in watts per square meter (W/m²)
  - DHI in W/m²
  - DNI in W/m²
  - ZA in degrees (°)
  - AT in degrees Celsius (°C)
  - WS in meters per second (m/s)
  - WD in degrees (°)
  - BP in millibars (mbar)
  - RH in percent (%)

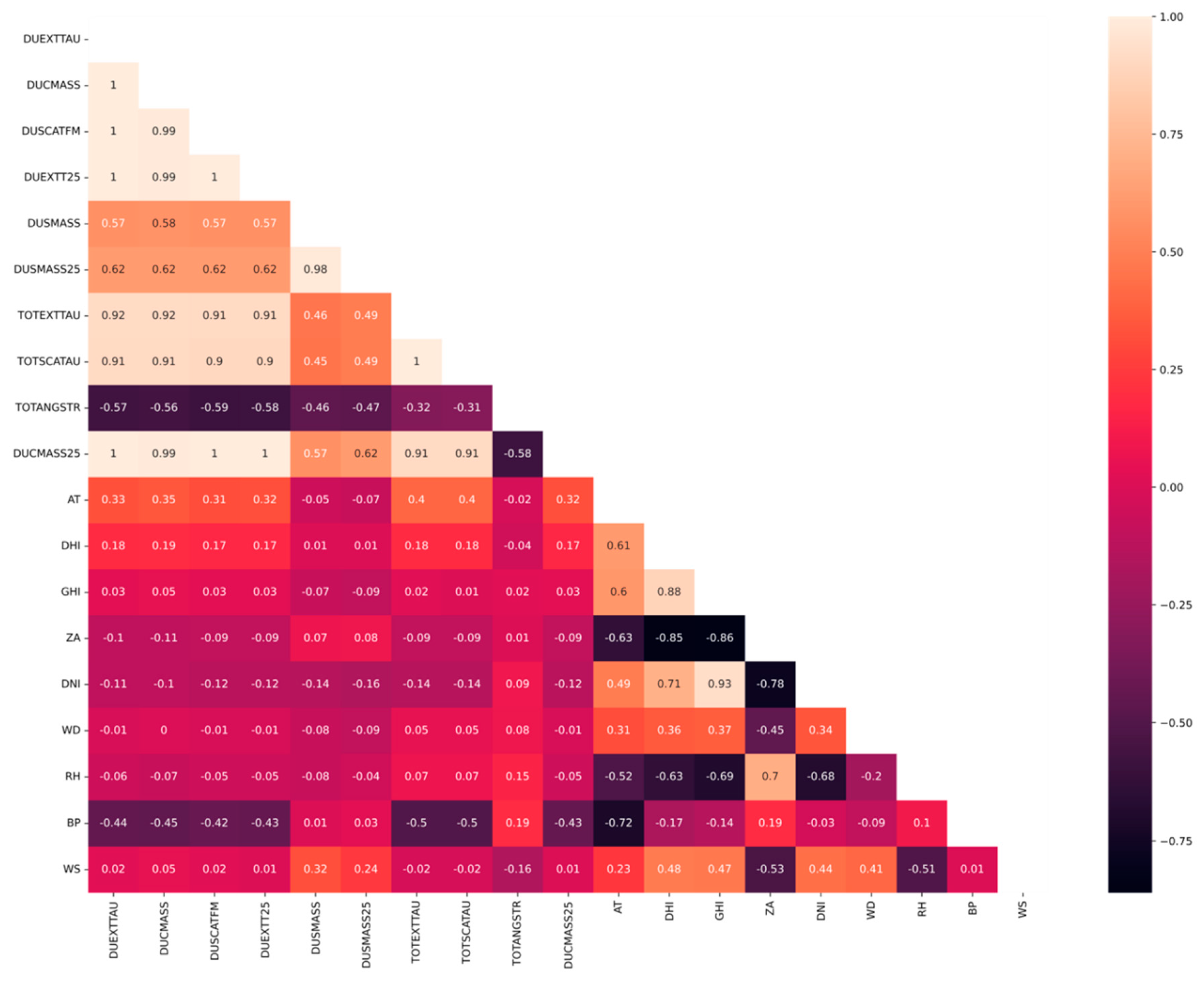

- GIOVANNI dataset
  - Dust extinction aerosol optical thickness at 550 nm (DUEXTTAU)
  - Dust extinction aerosol optical thickness at 550 nm, PM2.5 (DUEXTT25)
  - Total aerosol extinction optical thickness at 550 nm (TOTEXTTAU)
  - Dust column mass density (DUCMASS) in kg m⁻²
  - Dust column mass density, PM2.5 (DUCMASS25) in kg m⁻²
  - Dust surface mass concentration (DUSMASS) in kg m⁻³
  - Dust surface mass concentration, PM2.5 (DUSMASS25) in kg m⁻³
  - Dust scattering aerosol optical thickness at 550 nm, PM1.0 (DUSCATFM)
  - Total aerosol scattering optical thickness at 550 nm (TOTSCATAU)
  - Total aerosol Angstrom parameter, 470–870 nm (TOTANGSTR)
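The ground-based and satellite-based sources are combined into the merged datasets listed in the dataset statistics table (K.A.CARE & AERONET, NSRDB & GIOVANNI). This excerpt does not describe how their timestamps were aligned, so the following is only a minimal pandas sketch, assuming that sub-hourly aerosol observations (AERONET measurements are irregular) are averaged into hourly bins and then inner-joined with the hourly irradiance and weather table. `merge_hourly` is a hypothetical name. Whether an hourly bin should be labeled by the start or the end of the hour depends on each source's timestamp convention and would need to be checked against the data documentation.

```python
import pandas as pd


def merge_hourly(irradiance: pd.DataFrame, aerosol: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical helper: align sub-hourly aerosol data to an hourly irradiance table.

    Both inputs need a DatetimeIndex in the same time zone and must not share column names.
    """
    # Mean of all aerosol observations inside each hour; bins are labeled by the hour start
    aerosol_hourly = aerosol.resample(pd.Timedelta(hours=1)).mean()
    # Keep only hours present in both sources, then drop hours with no aerosol observation
    return irradiance.join(aerosol_hourly, how="inner").dropna()


irr = pd.DataFrame(
    {"GHI": [500.0, 620.0, 700.0]},
    index=pd.to_datetime(["2020-06-01 10:00", "2020-06-01 11:00", "2020-06-01 12:00"]),
)
aod = pd.DataFrame(
    {"AOD_500": [0.2, 0.4, 0.6]},
    index=pd.to_datetime(["2020-06-01 10:10", "2020-06-01 10:40", "2020-06-01 12:30"]),
)
merged = merge_hourly(irr, aod)
```

In this example the 11:00 hour has no aerosol observation and is dropped, while the two 10:xx observations are averaged into a single 10:00 value.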

3.1.3. Data Normalization and Dividing

3.2. Models’ Development

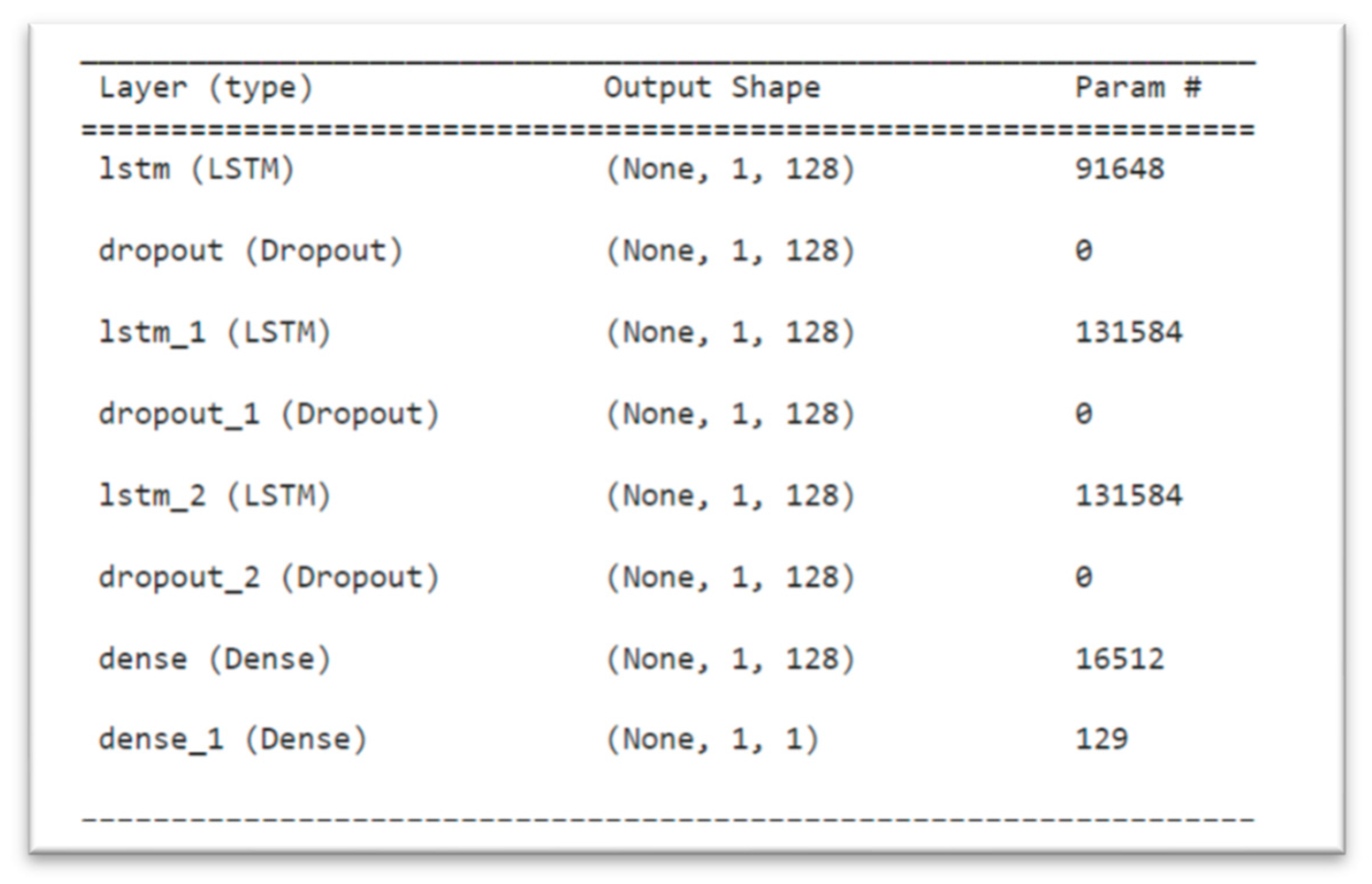

3.2.1. LSTM

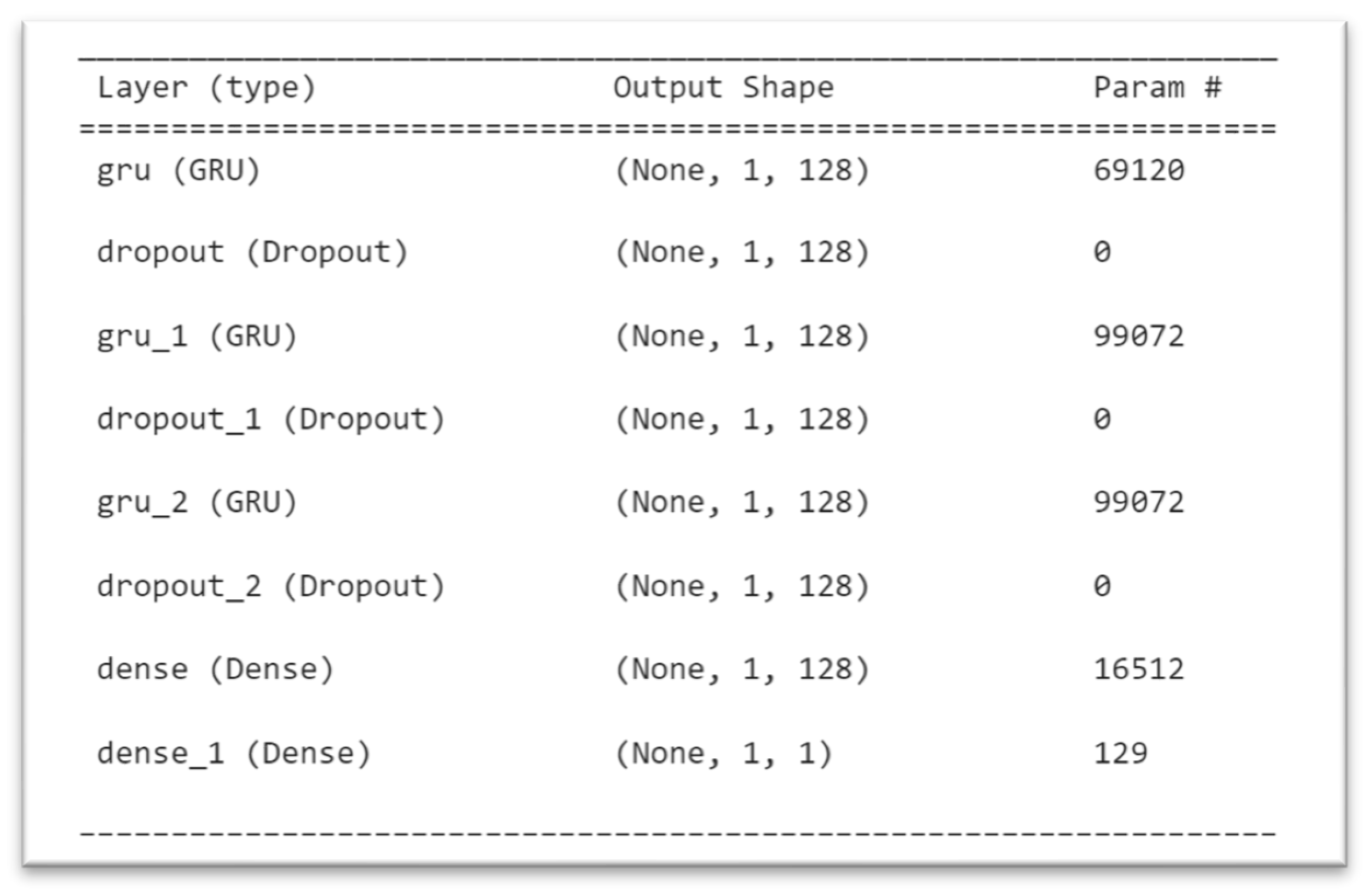

3.2.2. GRU

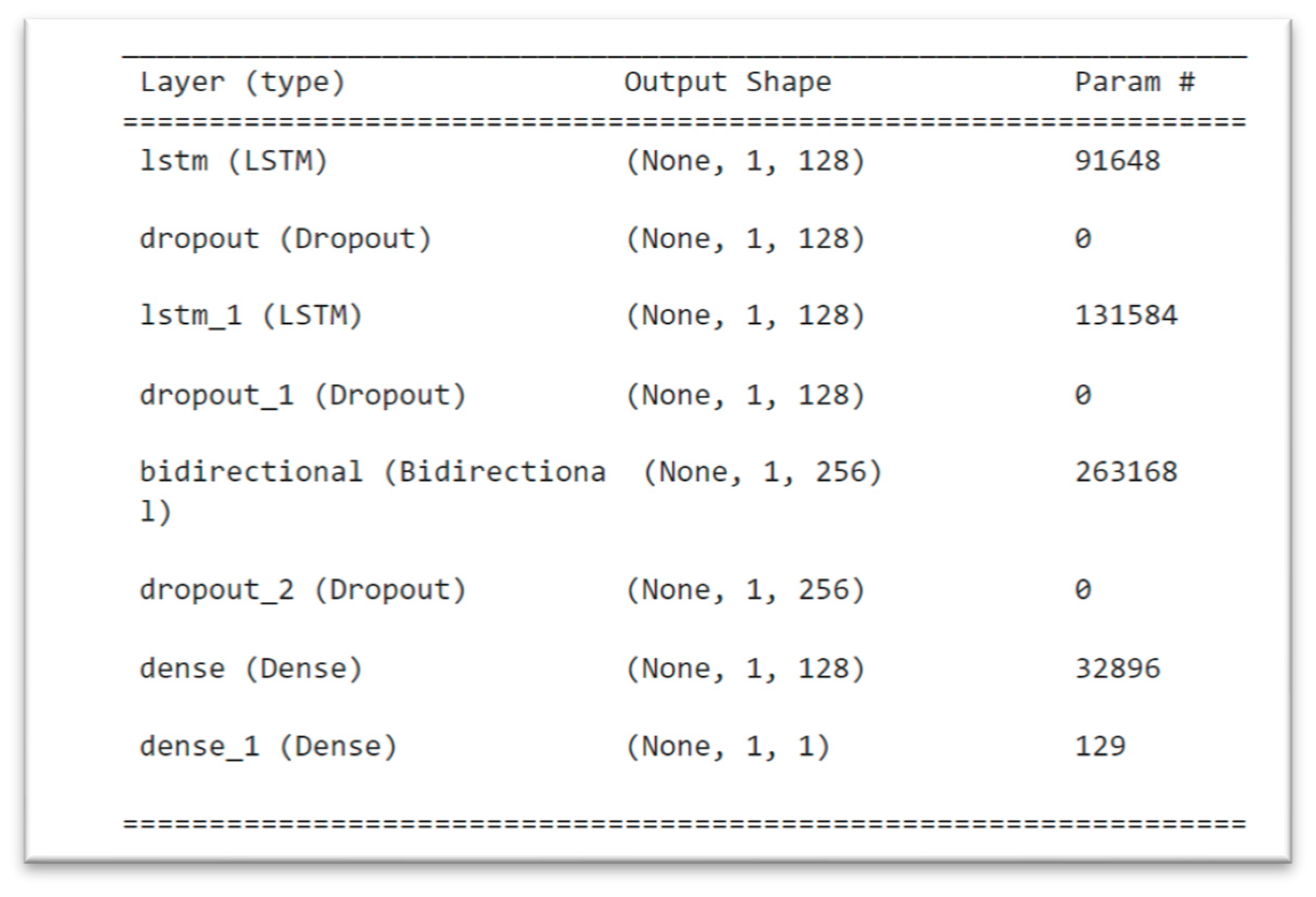

3.2.3. BiLSTM

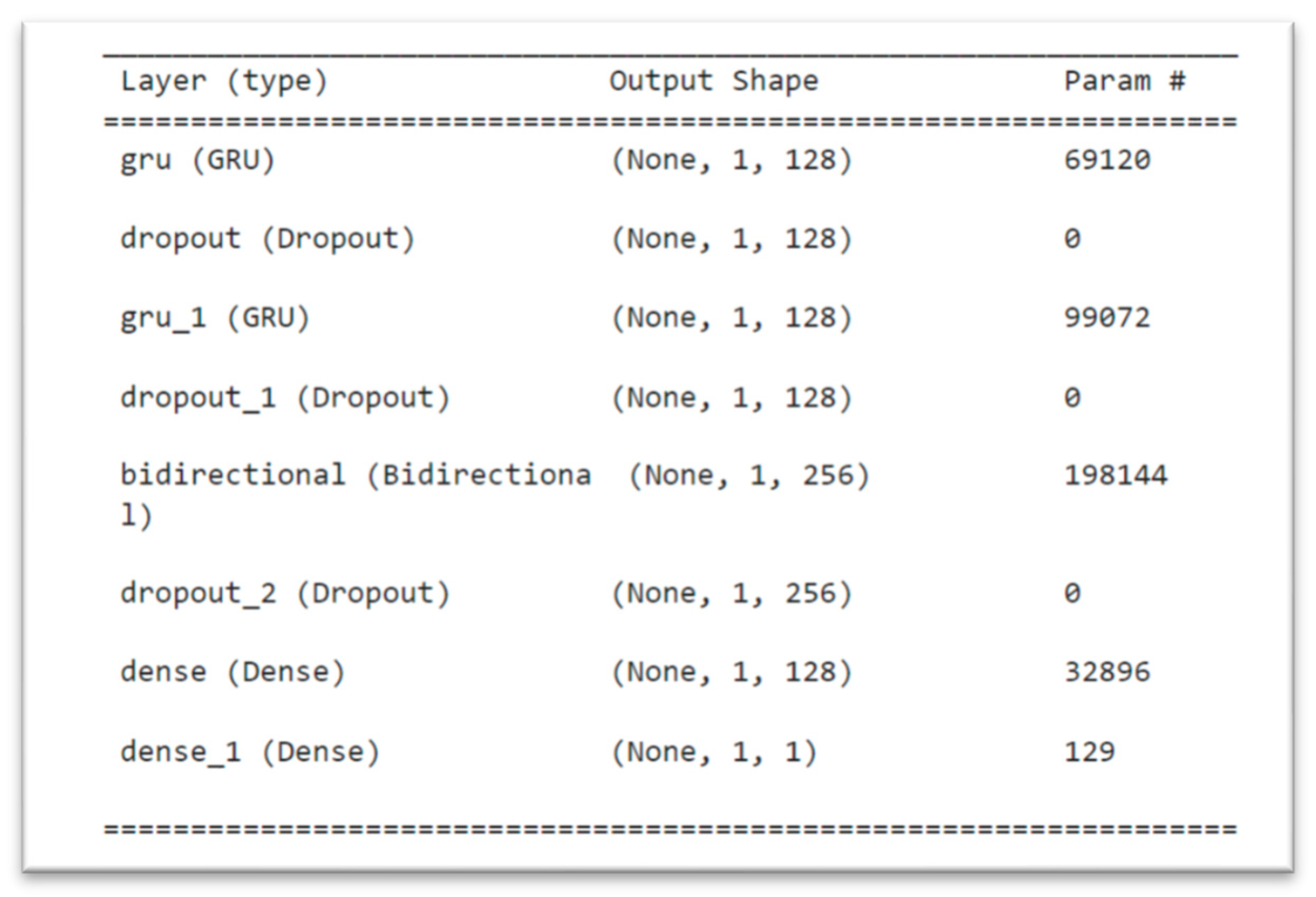

3.2.4. BiGRU

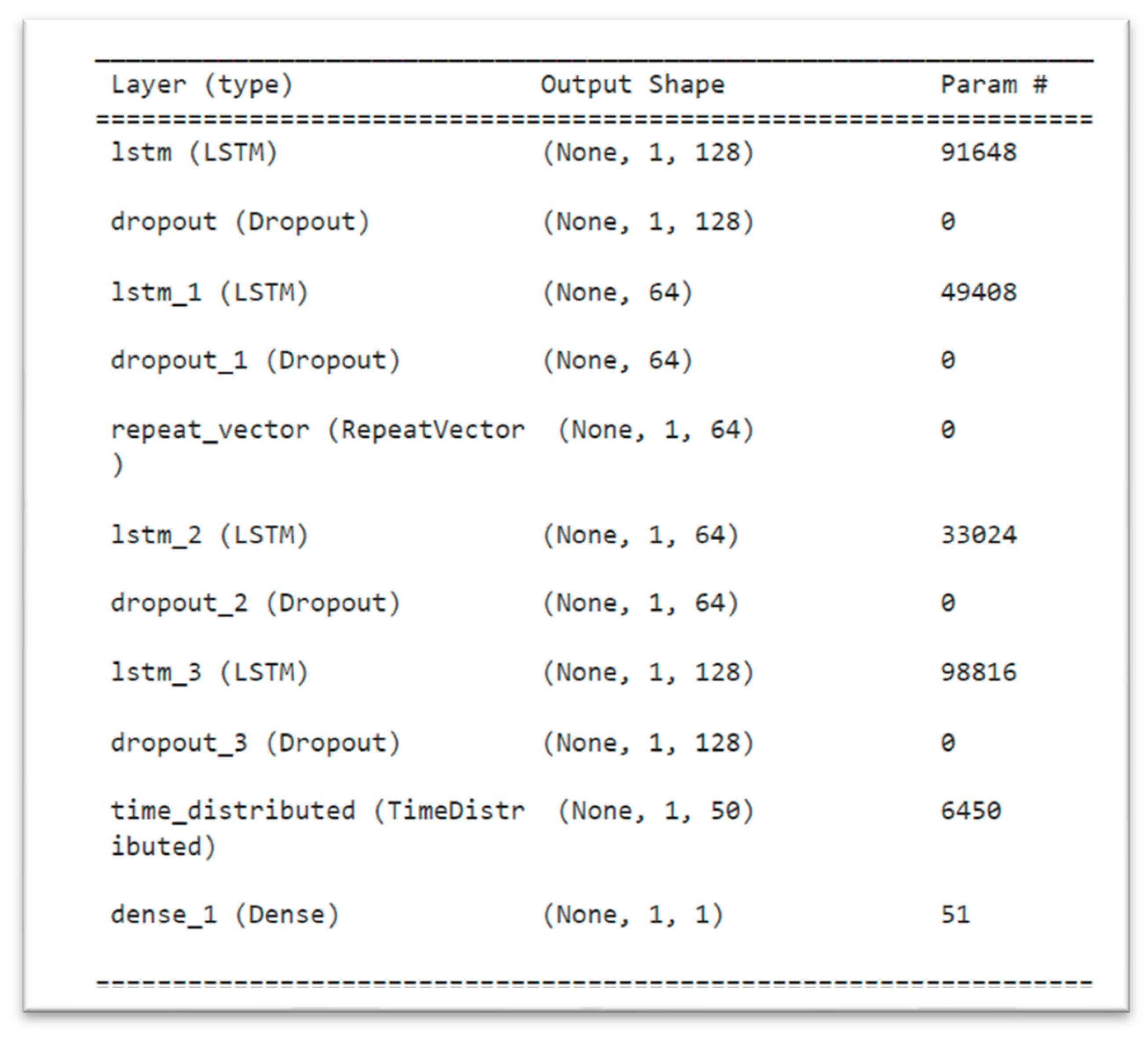

3.2.5. LSTM-AE

3.3. Implementation

3.4. Evaluation Metrics
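The equations of this subsection are not included in this excerpt. The sketch below uses the standard definitions of RMSE, MAE, and MAPE, and assumes the usual skill-score form FS = 1 − metric_model / metric_reference, computed separately for each metric (FS RMSE, FS MAE, FS MAPE). Using a simple persistence forecast as the reference is an assumption, not something this excerpt confirms, and all function names are illustrative.

```python
import numpy as np


def rmse(y, y_hat):
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))


def mae(y, y_hat):
    return float(np.mean(np.abs(y - y_hat)))


def mape(y, y_hat):
    # MAPE is undefined where the observed GHI is zero (e.g., night hours), so those hours are skipped
    mask = y != 0
    return float(100.0 * np.mean(np.abs((y[mask] - y_hat[mask]) / y[mask])))


def forecast_skill(metric_model, metric_reference):
    # Assumed skill-score form: 1 = perfect, 0 = no better than the reference, < 0 = worse
    return 1.0 - metric_model / metric_reference


def persistence(y):
    # Assumed reference: the next-hour forecast equals the current observed value
    return y[:-1]


y = np.array([100.0, 200.0, 400.0, 300.0])        # observed GHI
y_model = np.array([110.0, 190.0, 380.0, 310.0])  # model forecasts for the same hours
# Evaluate on hours 1..N-1 so that the persistence reference exists for every hour
target, model, ref = y[1:], y_model[1:], persistence(y)
fs = {
    "FS RMSE": forecast_skill(rmse(target, model), rmse(target, ref)),
    "FS MAE": forecast_skill(mae(target, model), mae(target, ref)),
    "FS MAPE": forecast_skill(mape(target, model), mape(target, ref)),
}
```

Here every model error is one tenth of the corresponding persistence error, so all three skill scores come out at about 0.9 (90%).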

4. Results and Discussion

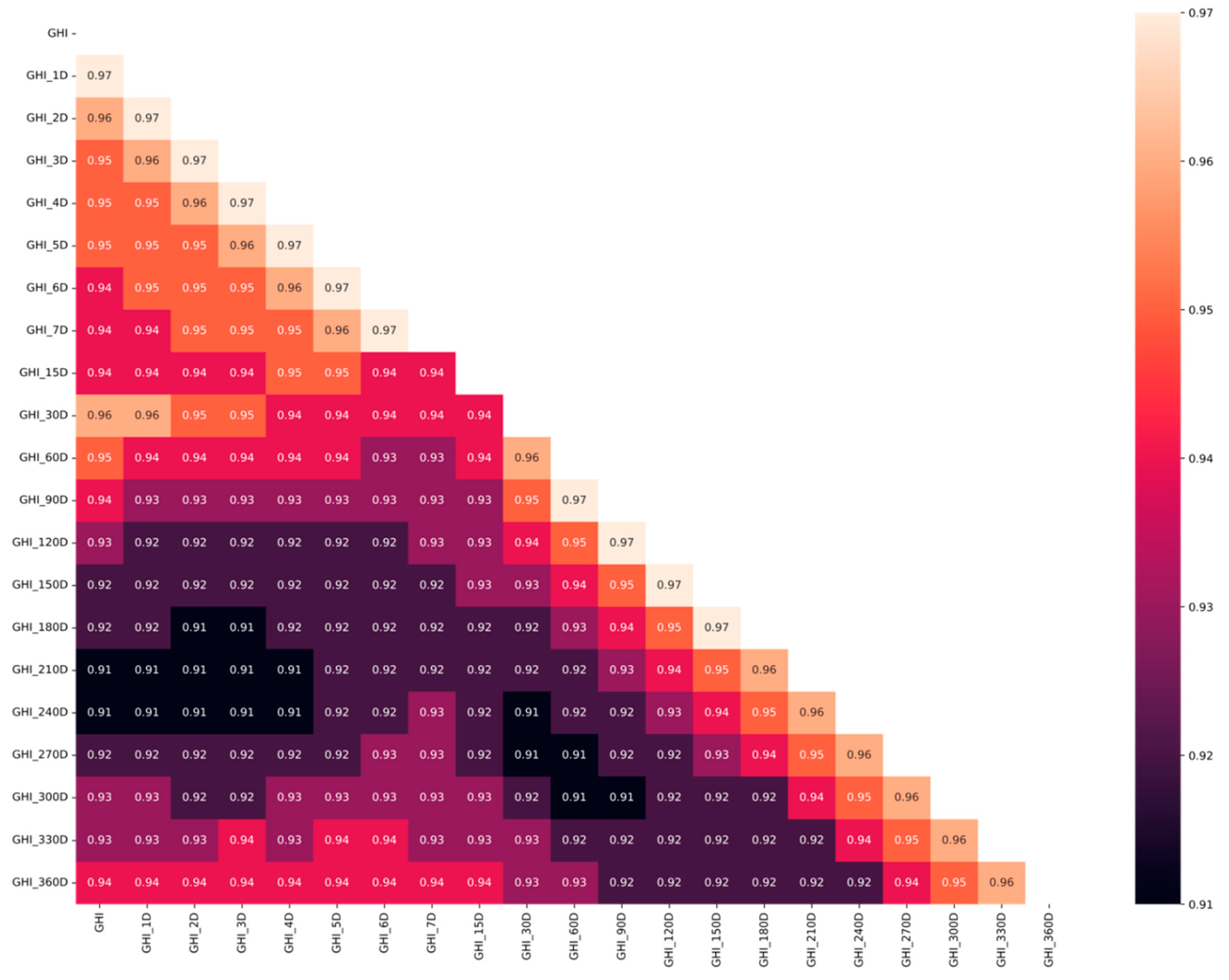

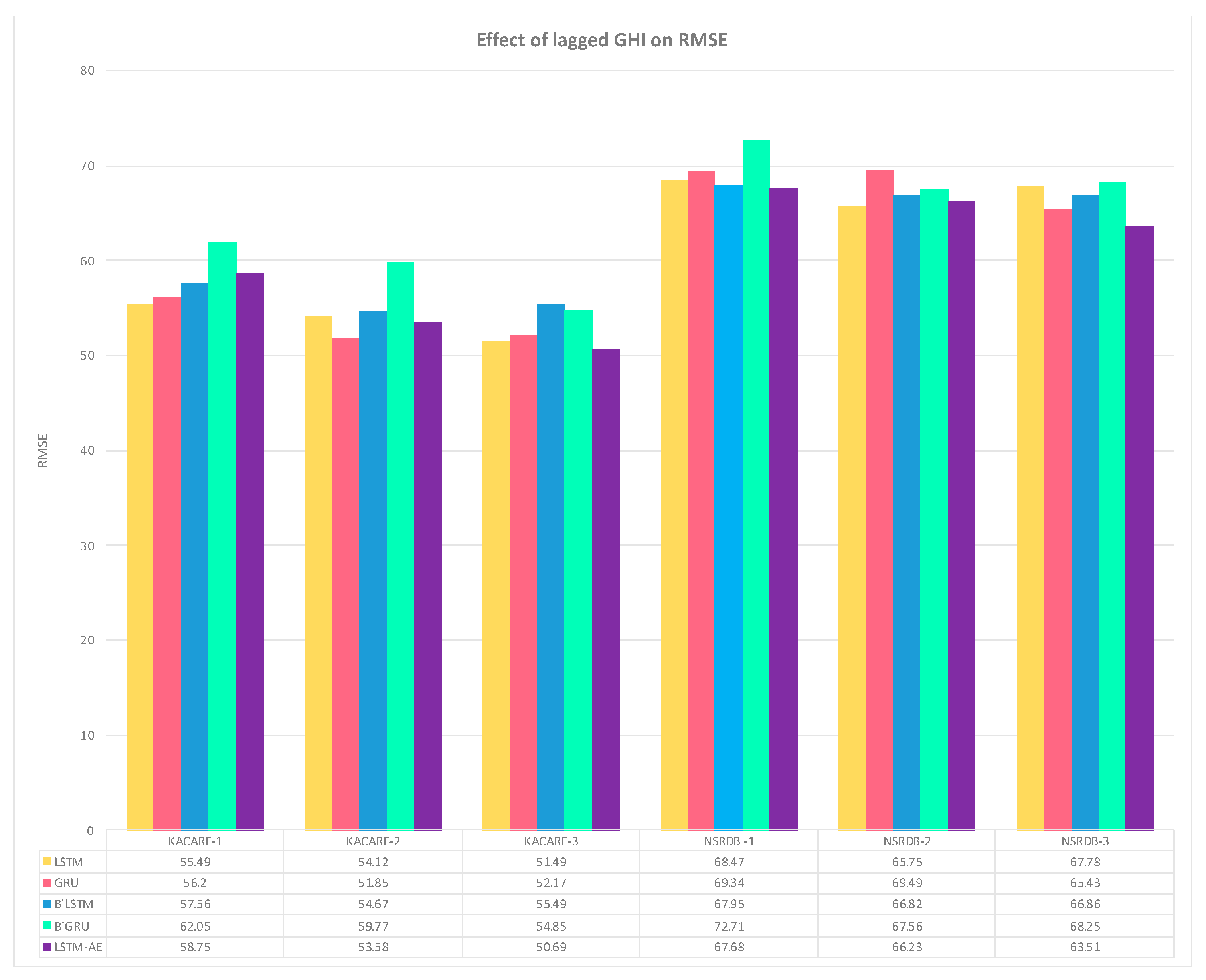

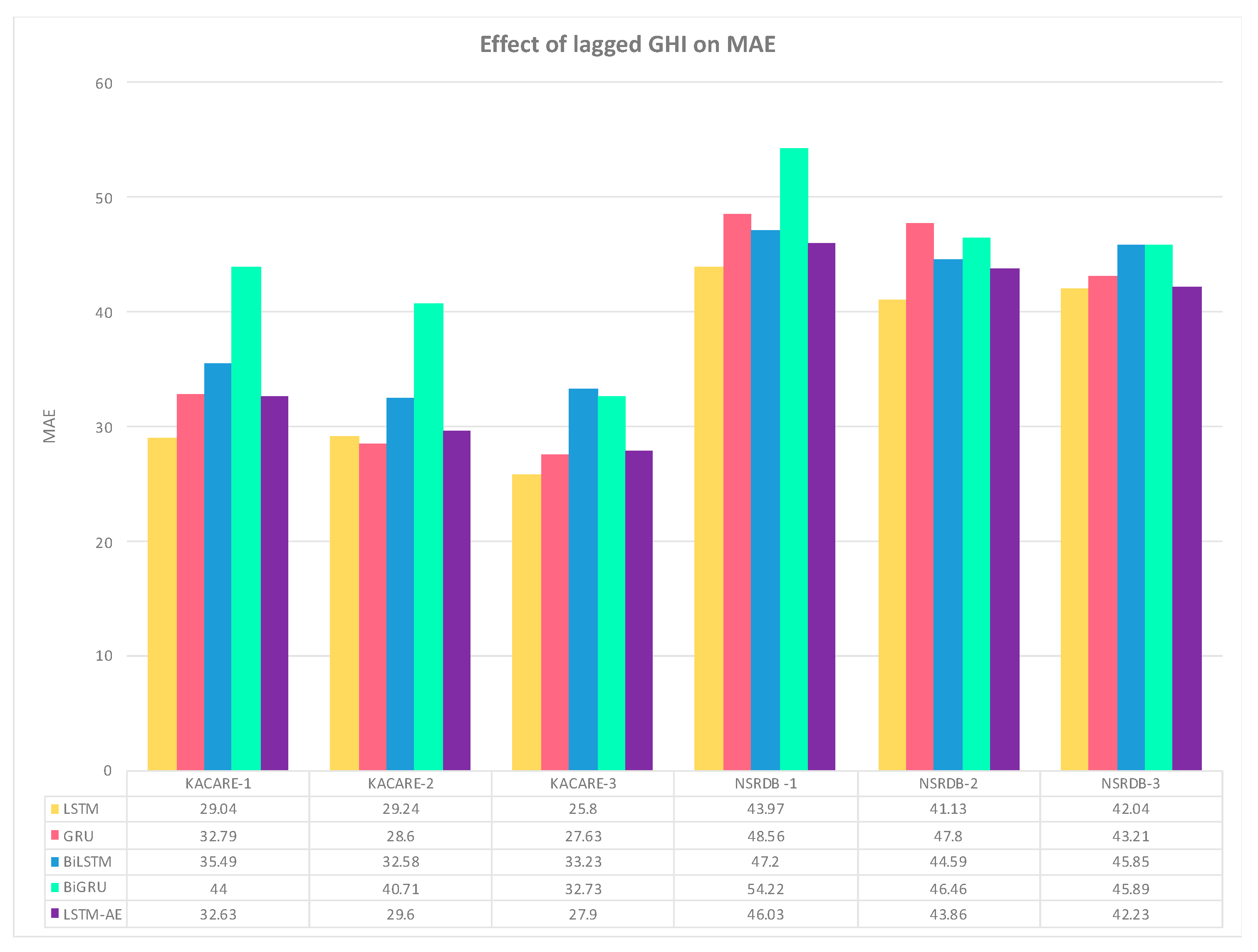

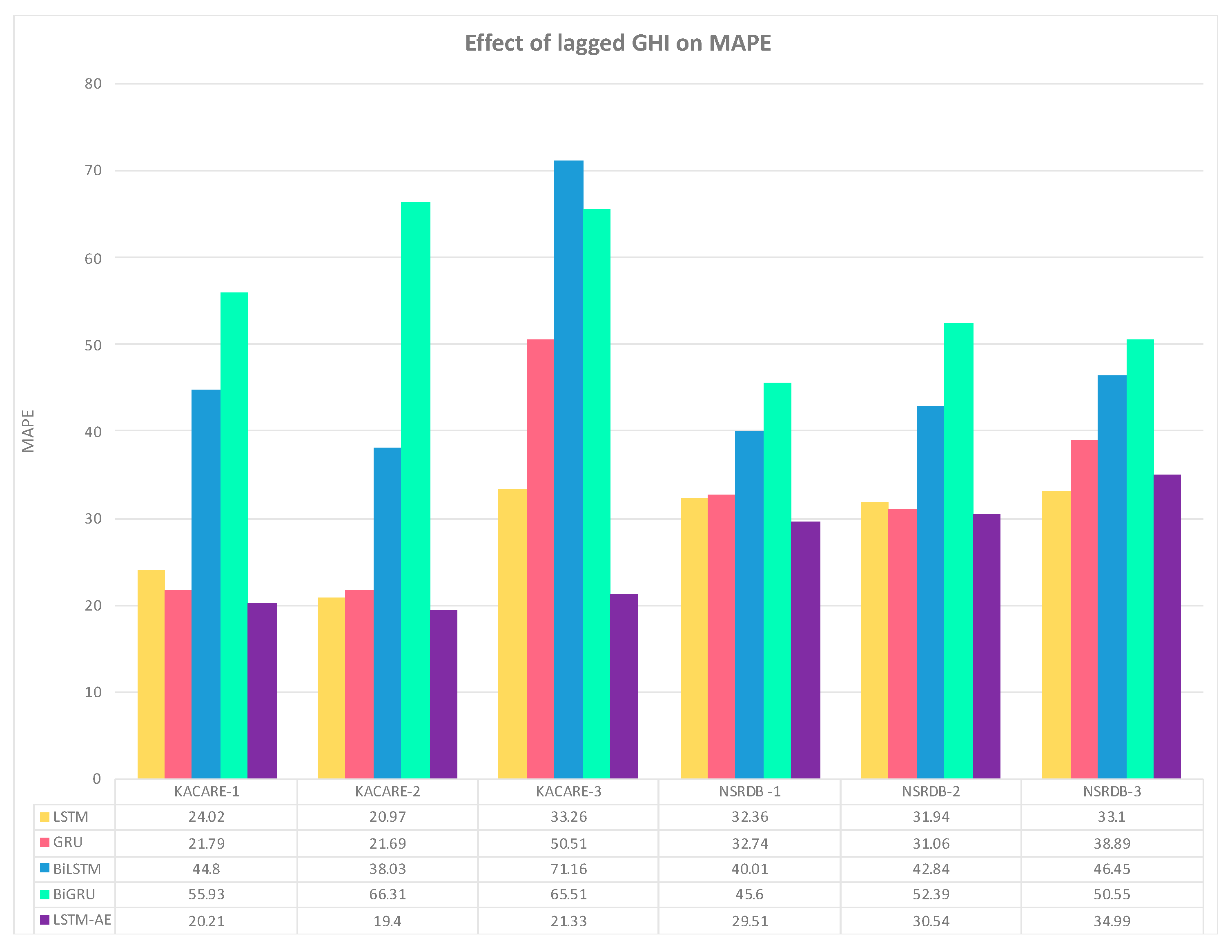

4.1. Effect of Using Lagged GHI Features on Forecasting

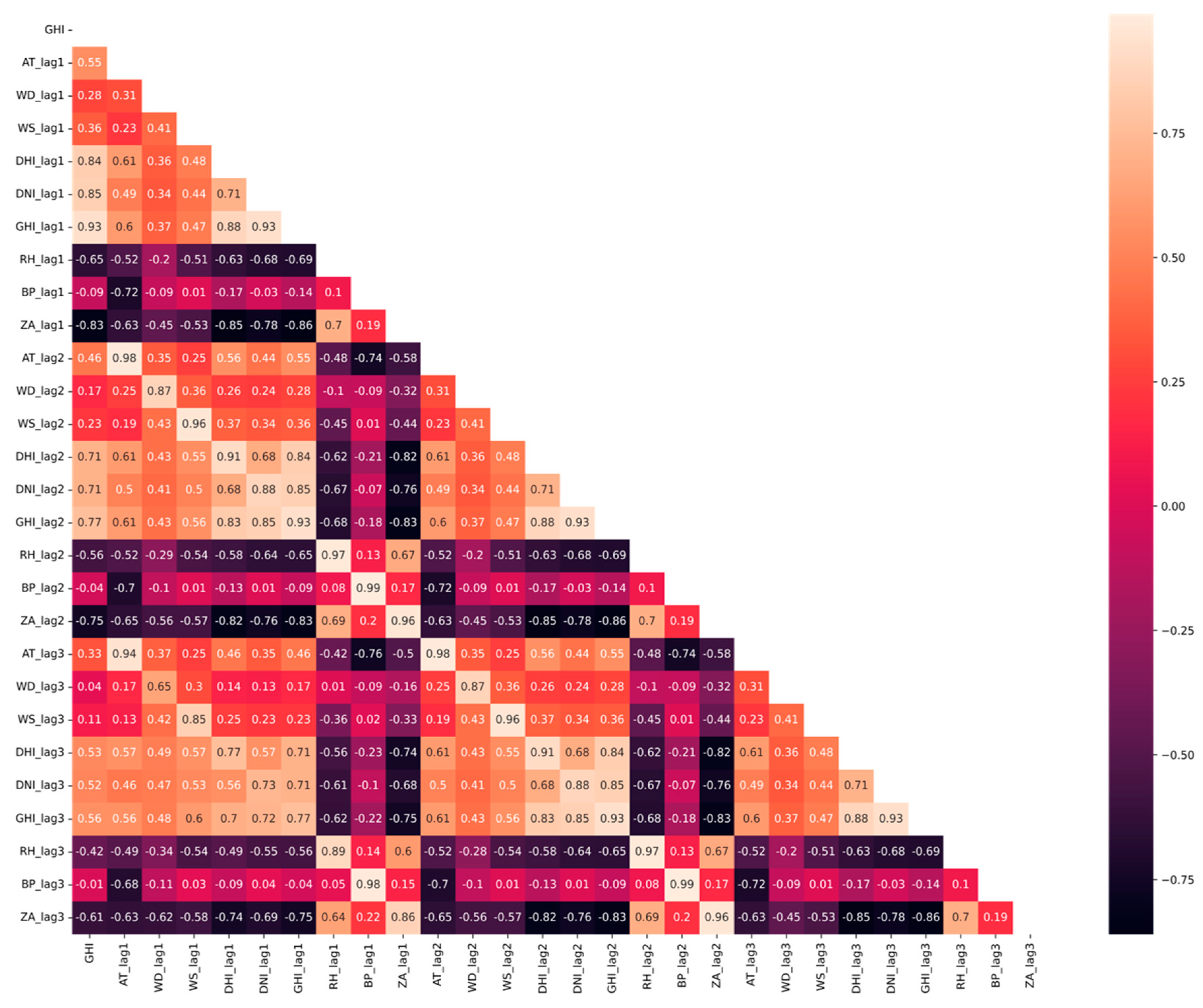

4.2. Effect of Using Weather and Solar Radiation Components Features on Forecasting

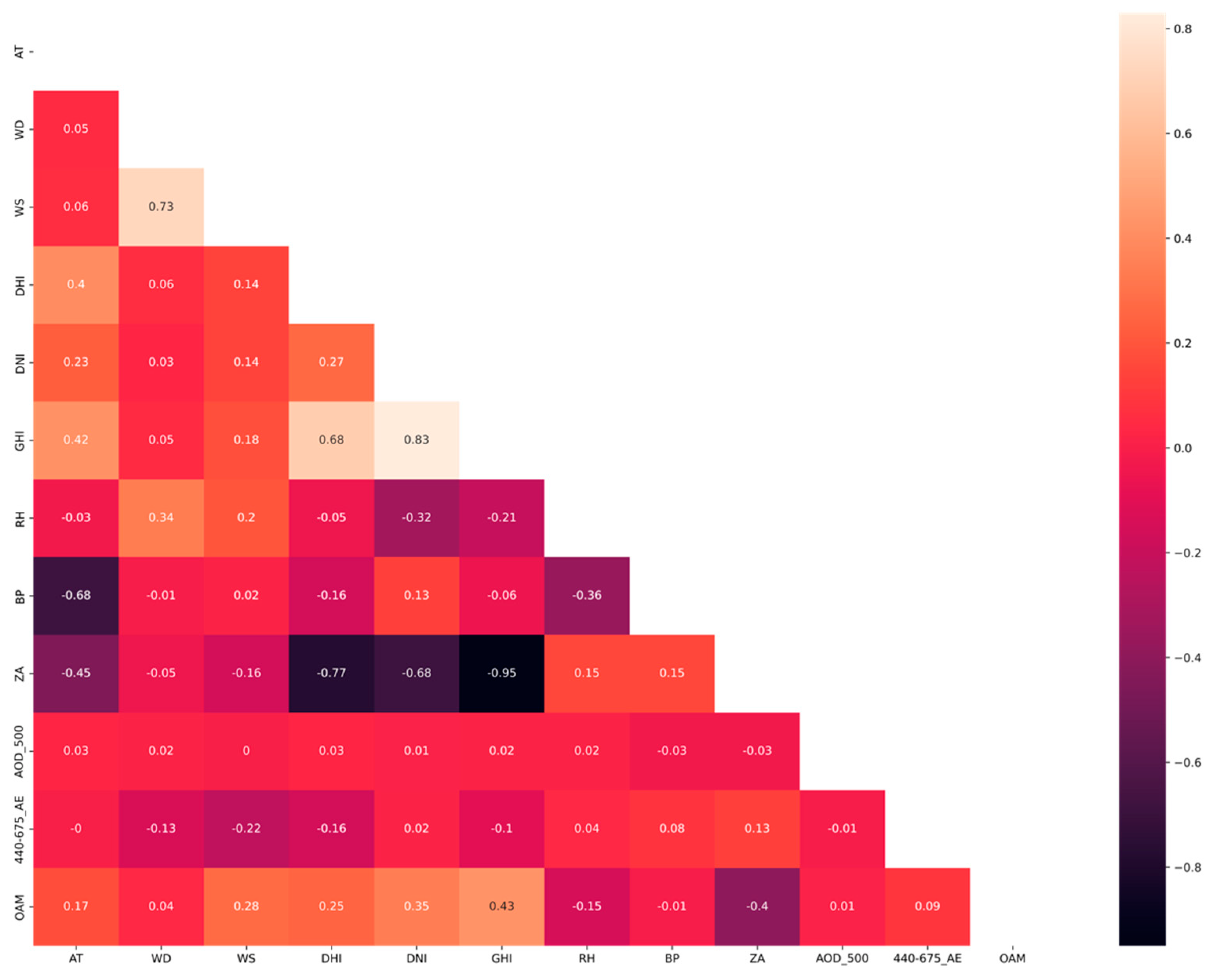

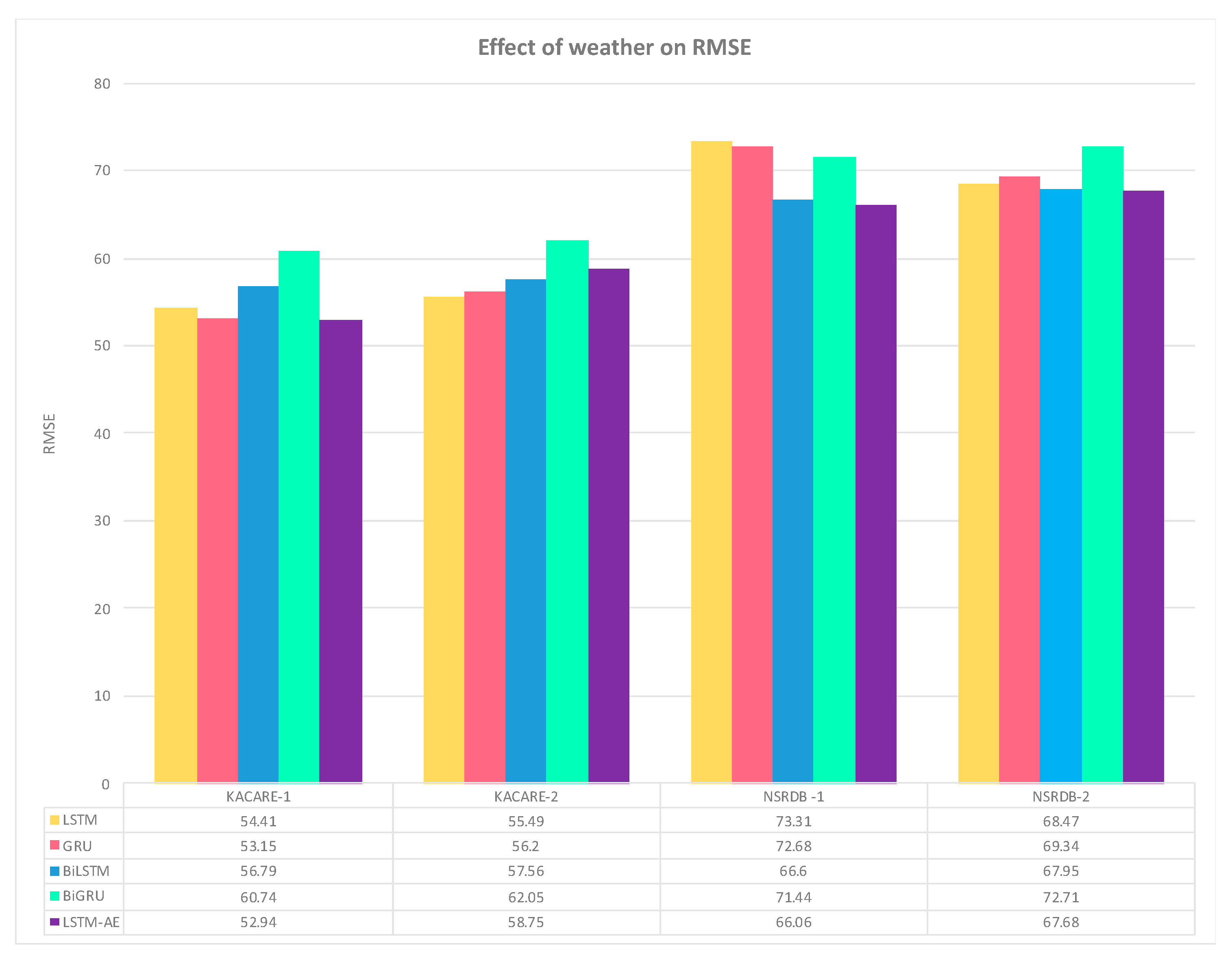

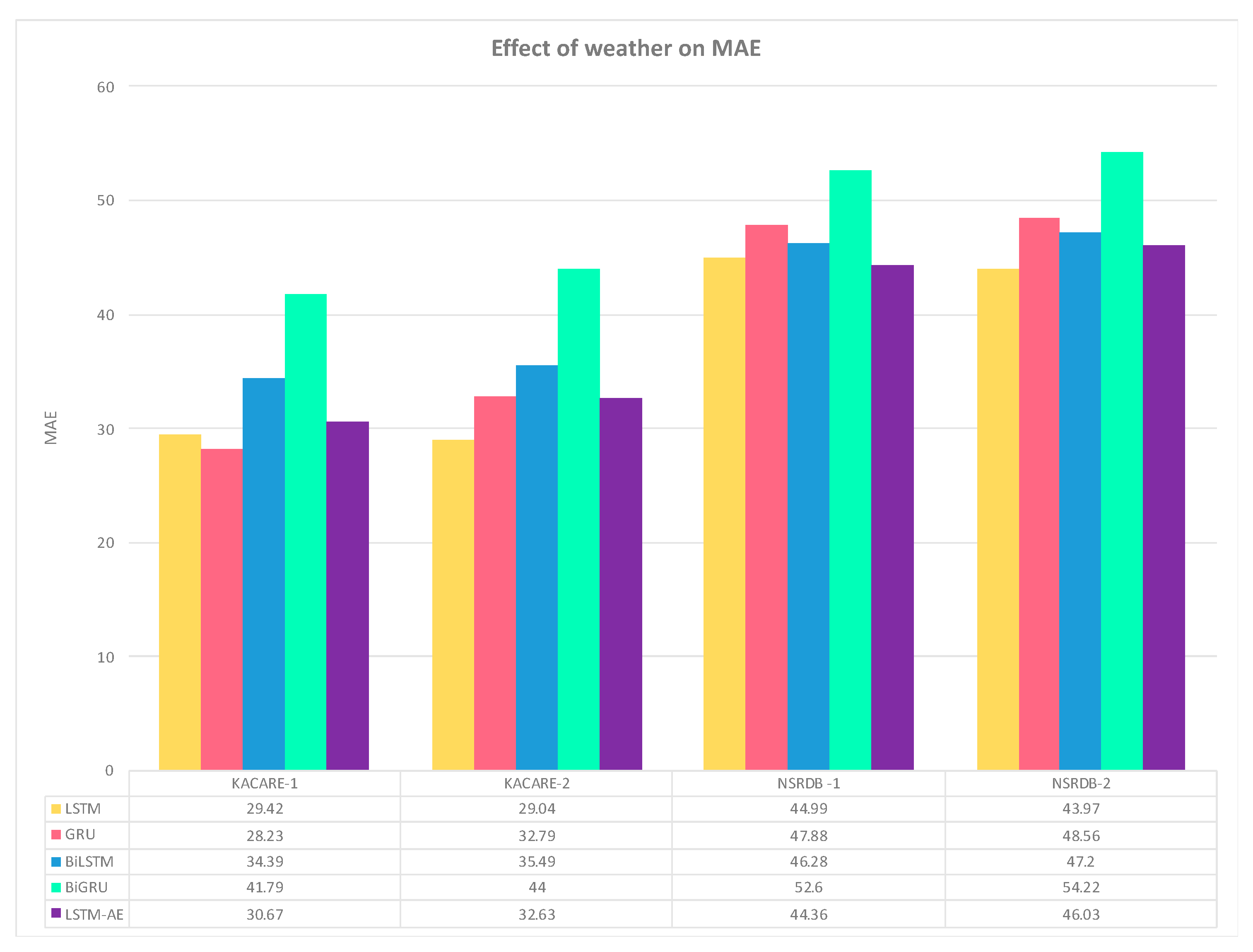

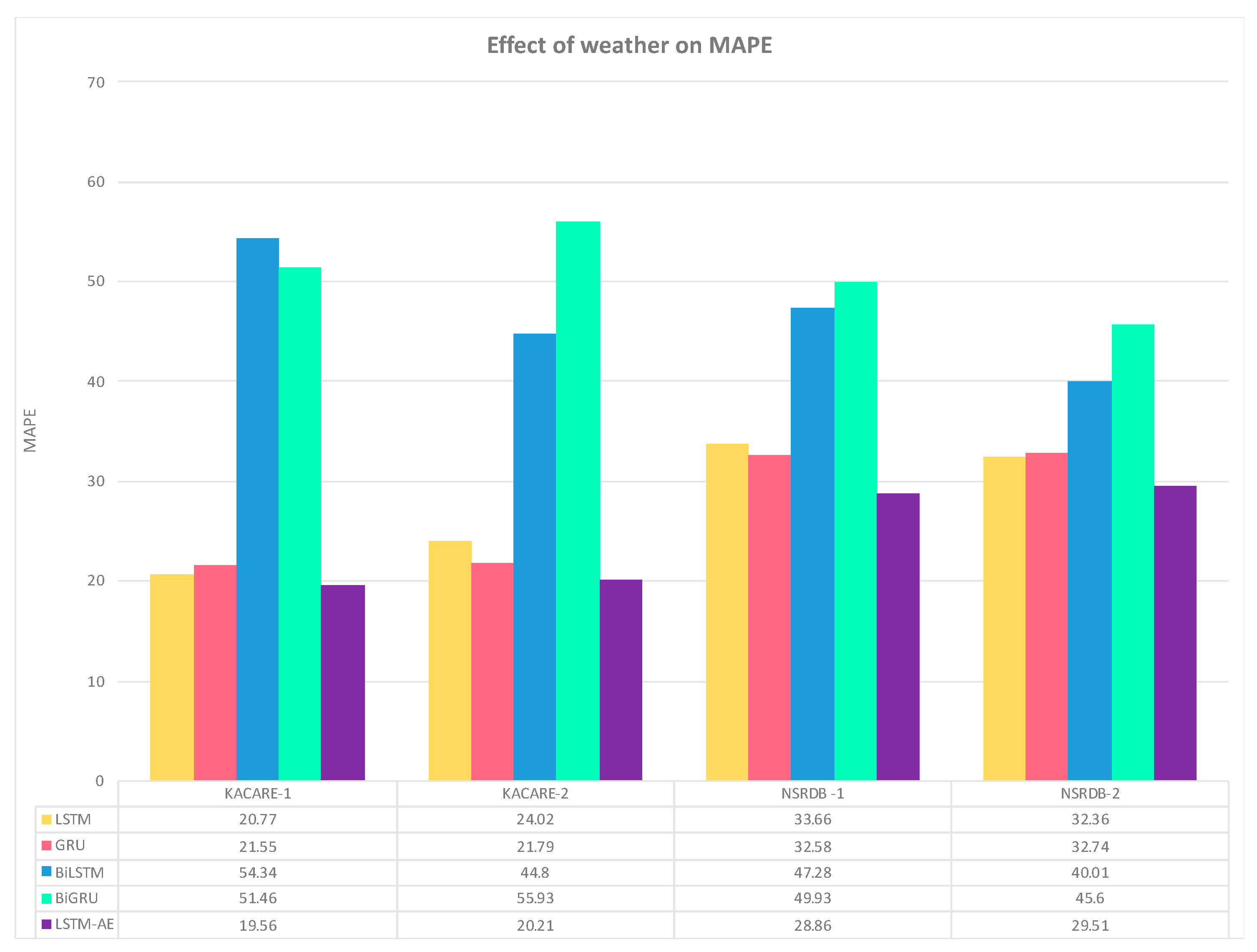

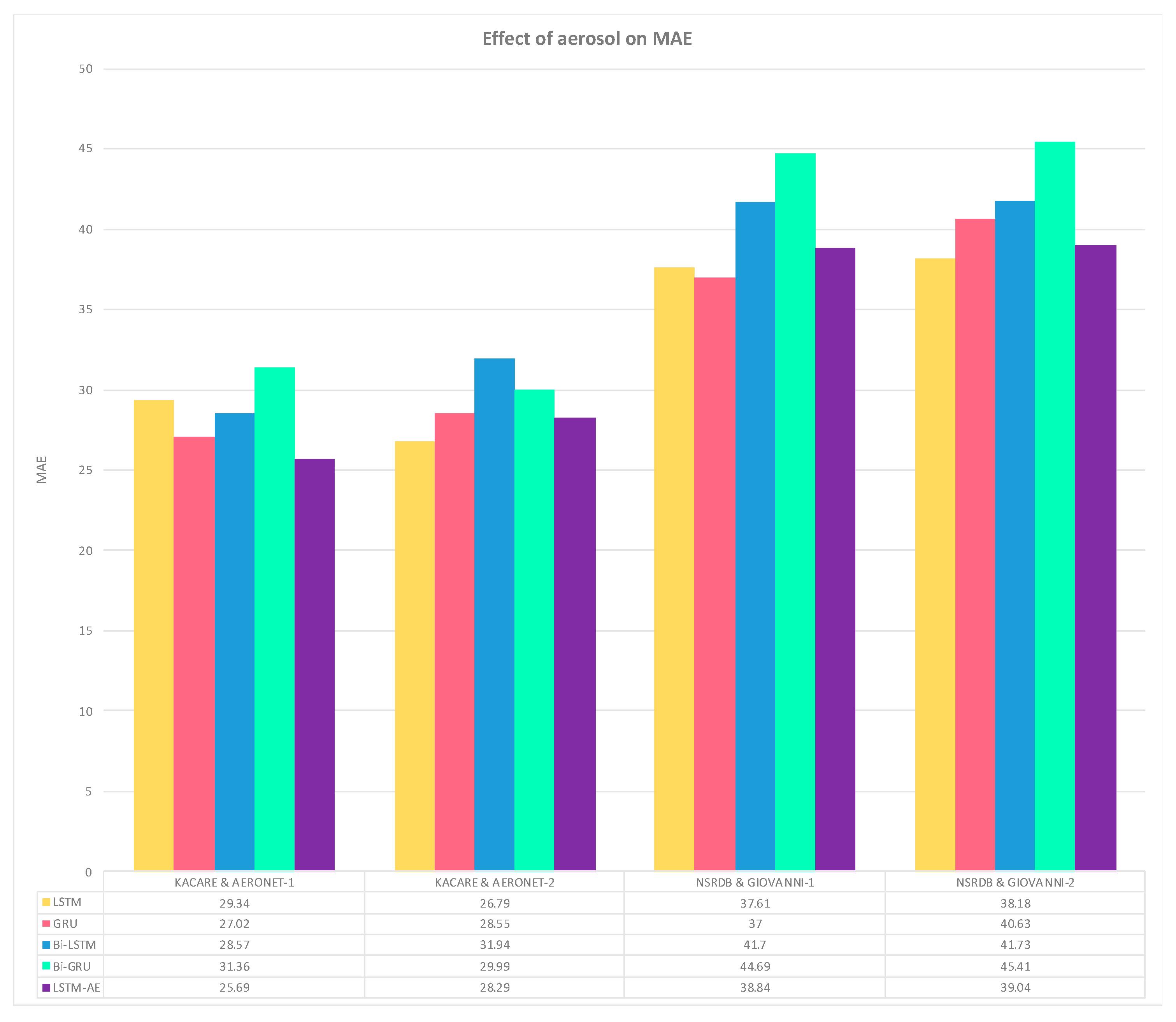

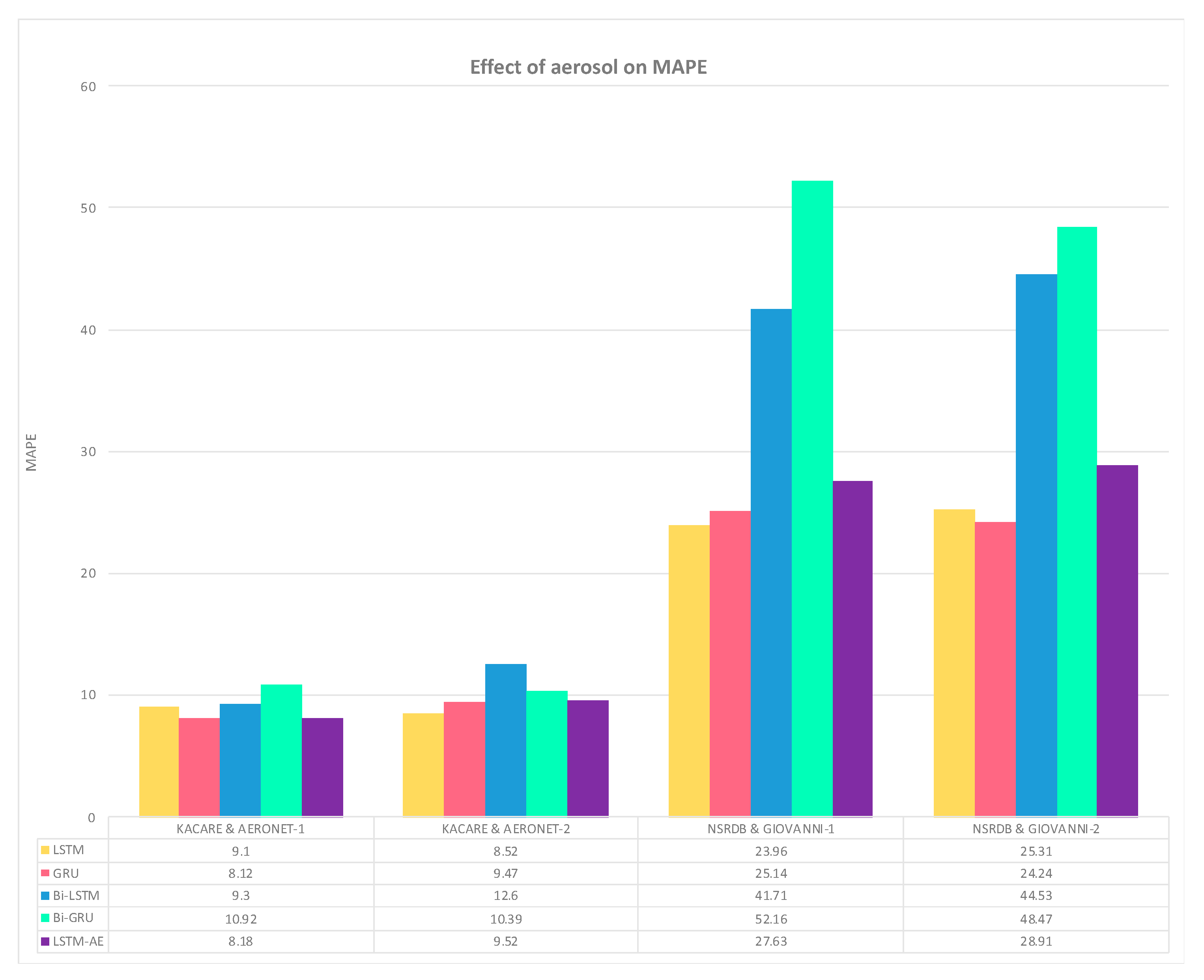

4.3. Effect of Using Aerosol Features on Forecasting

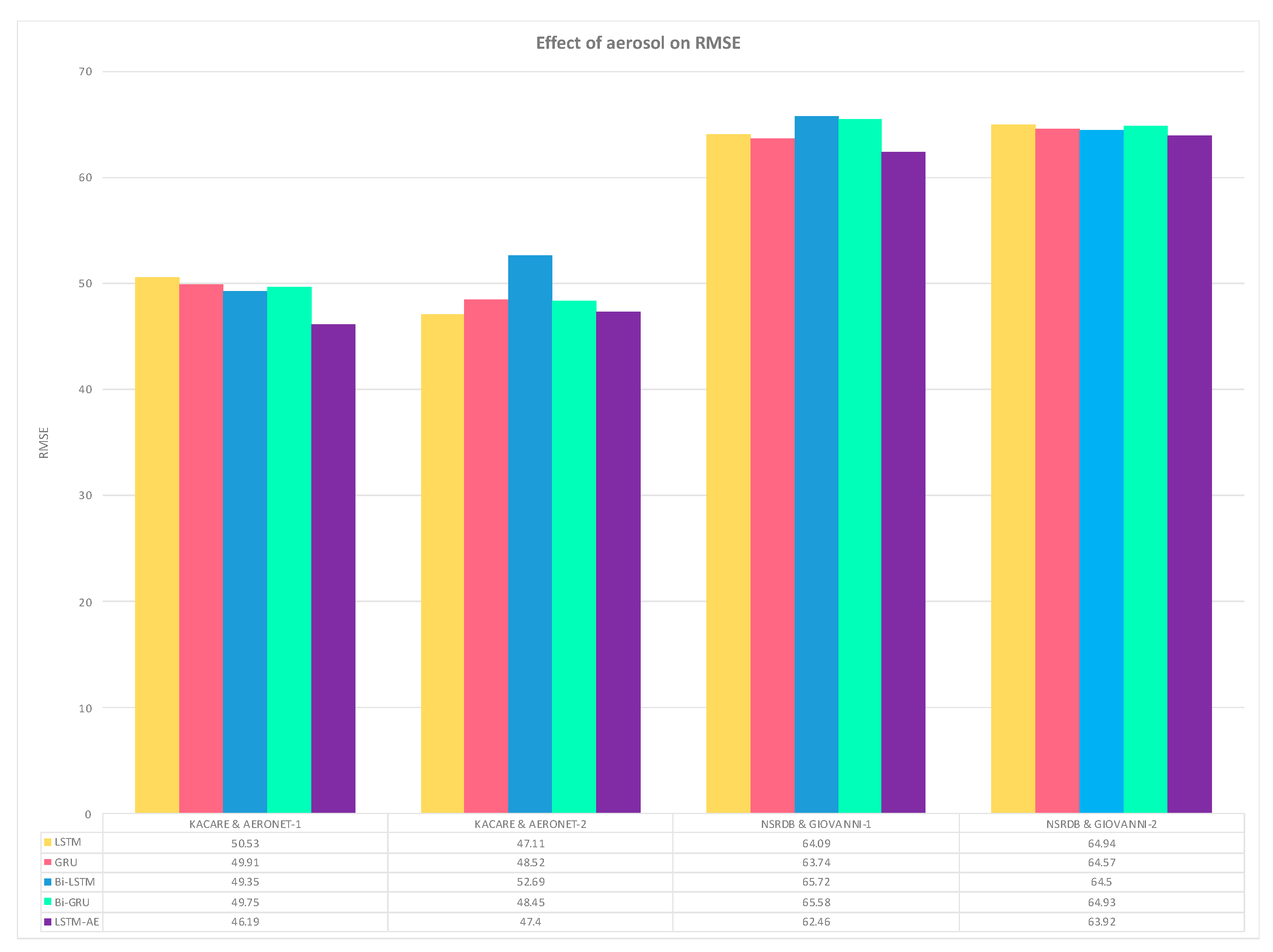

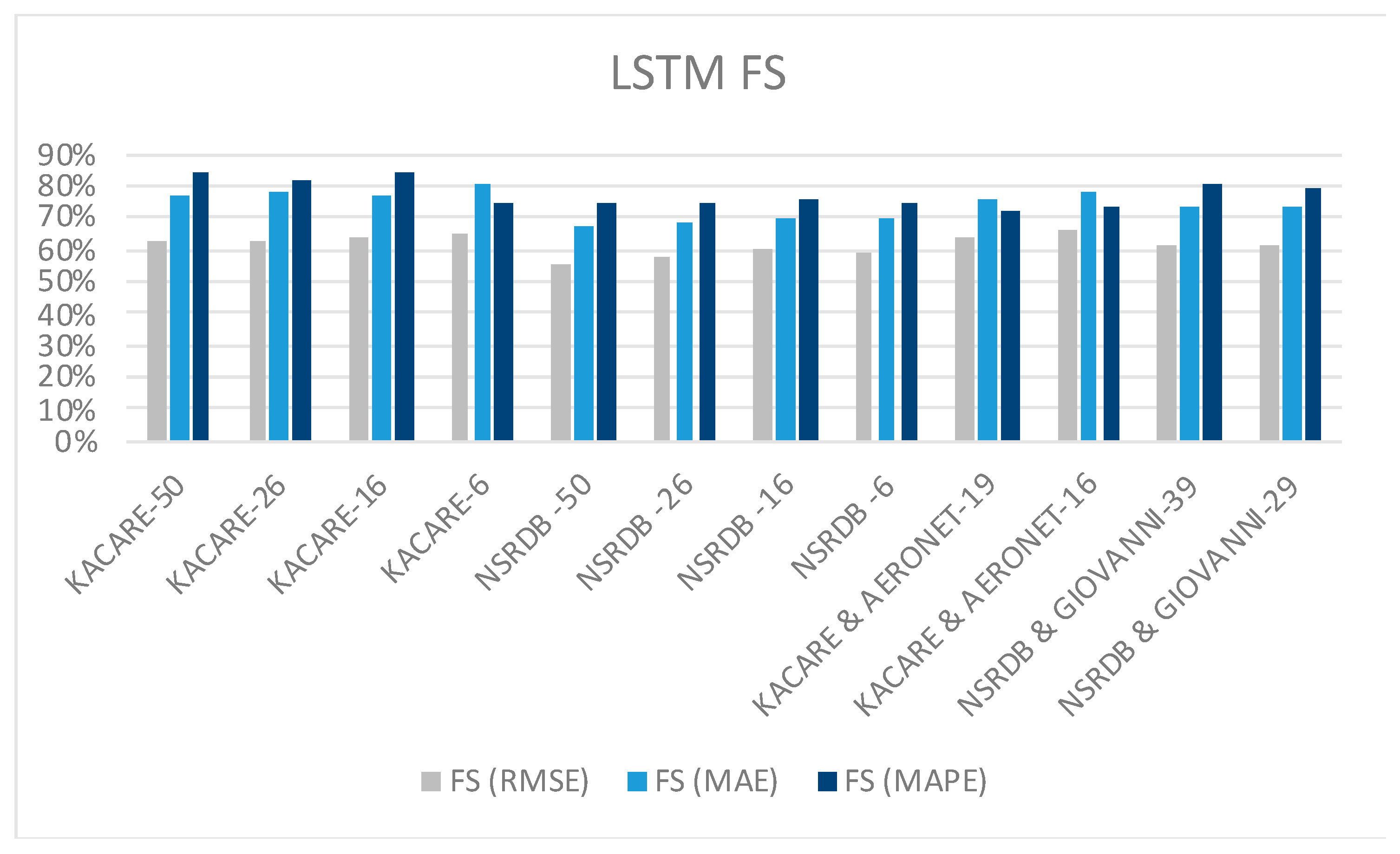

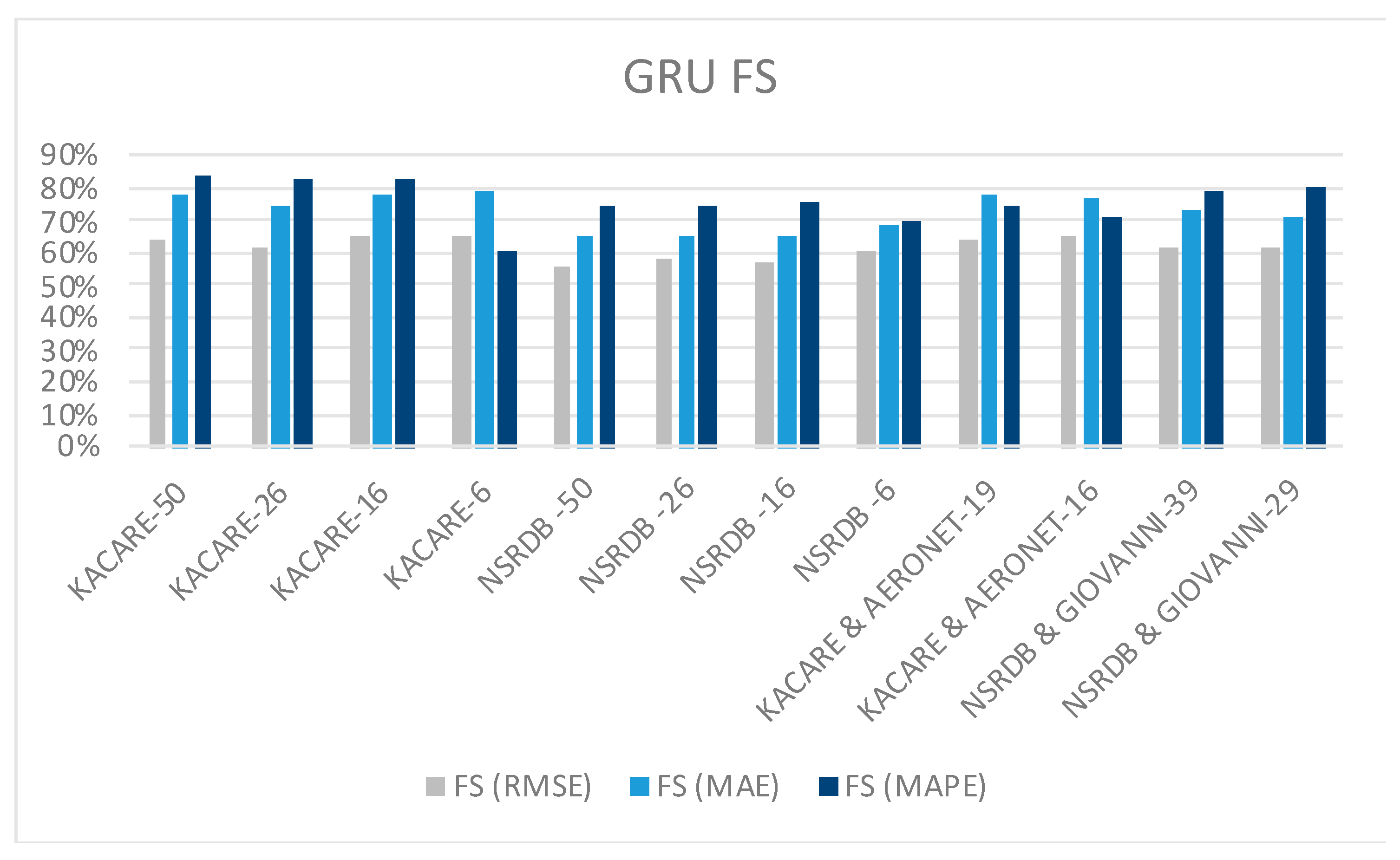

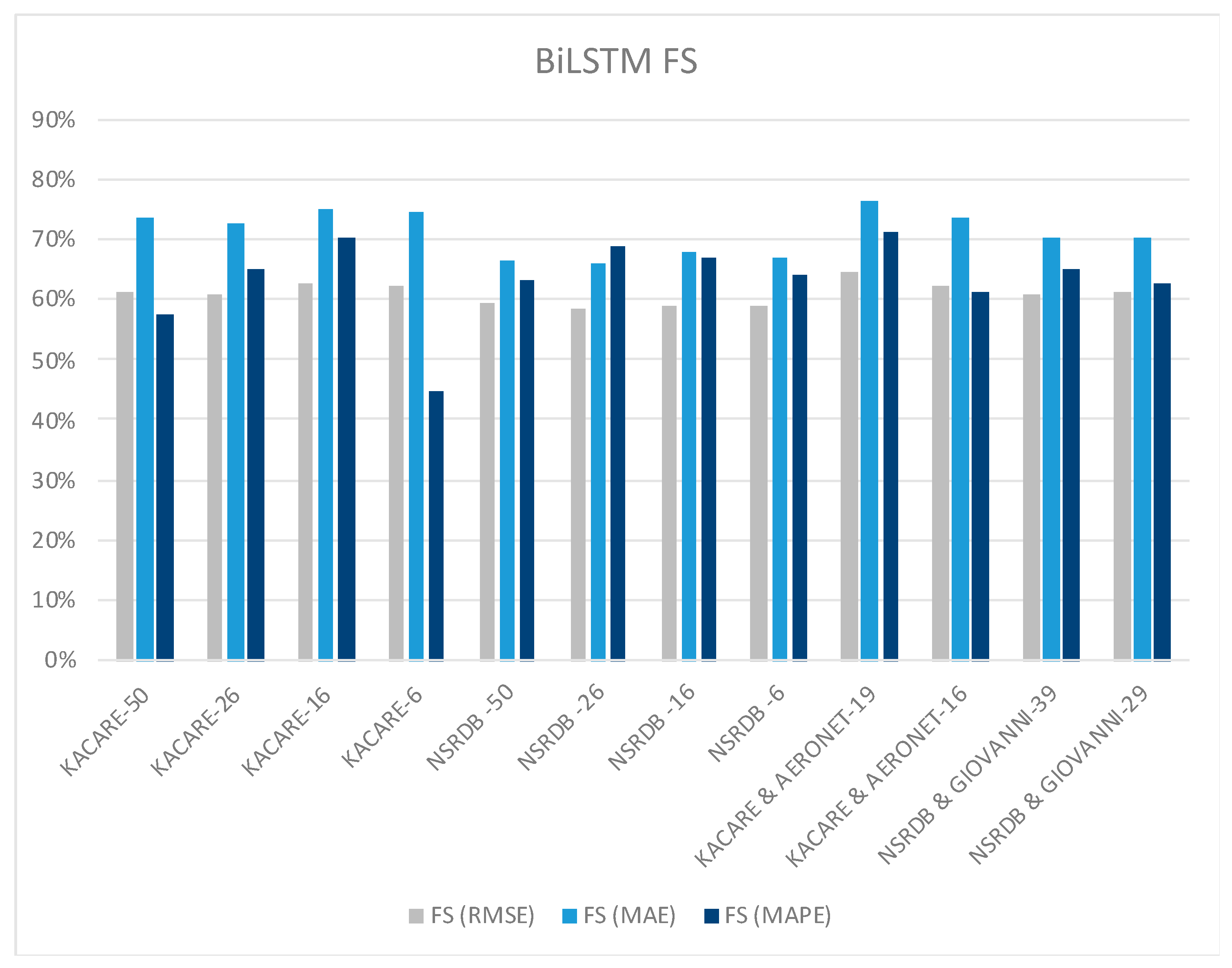

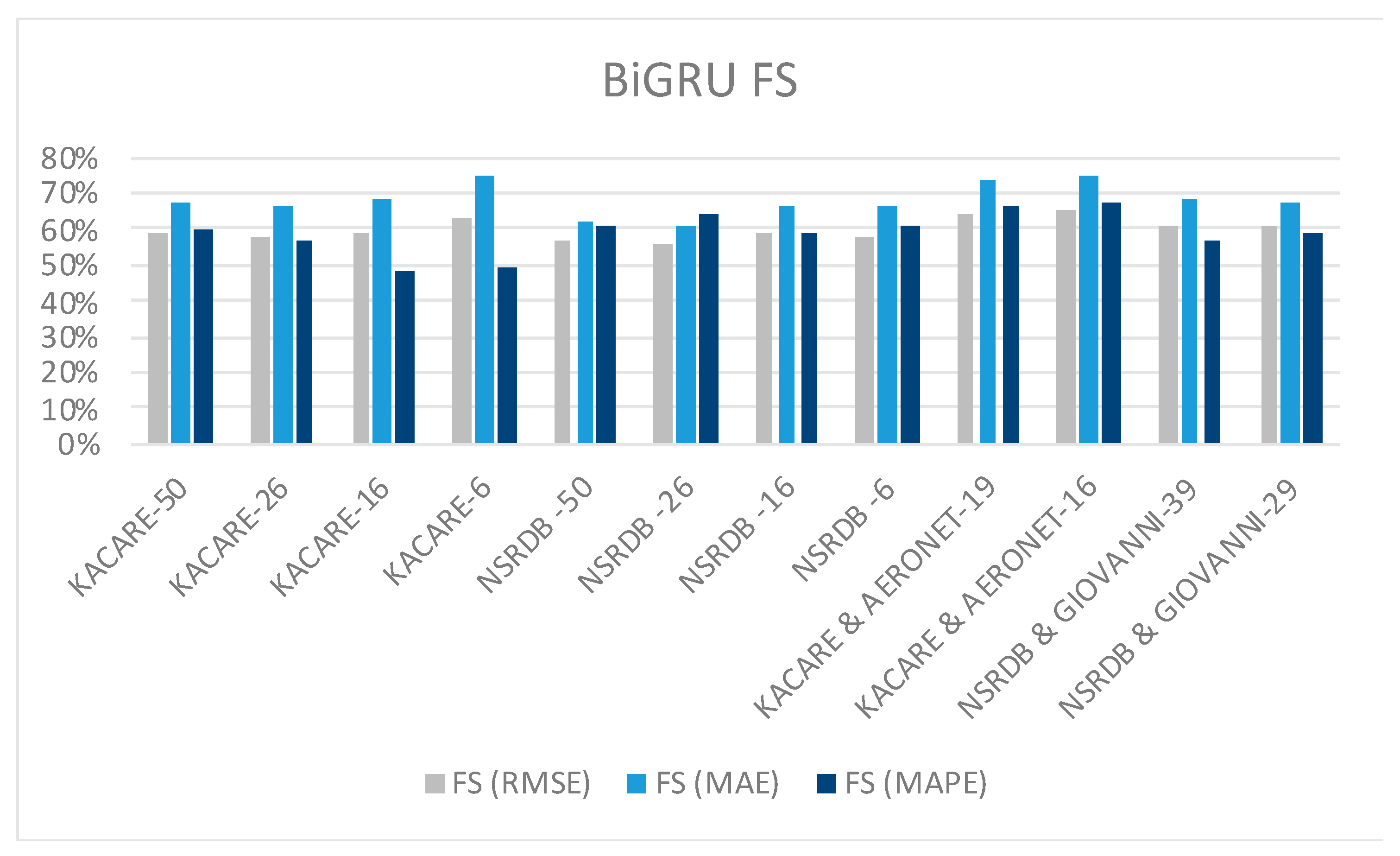

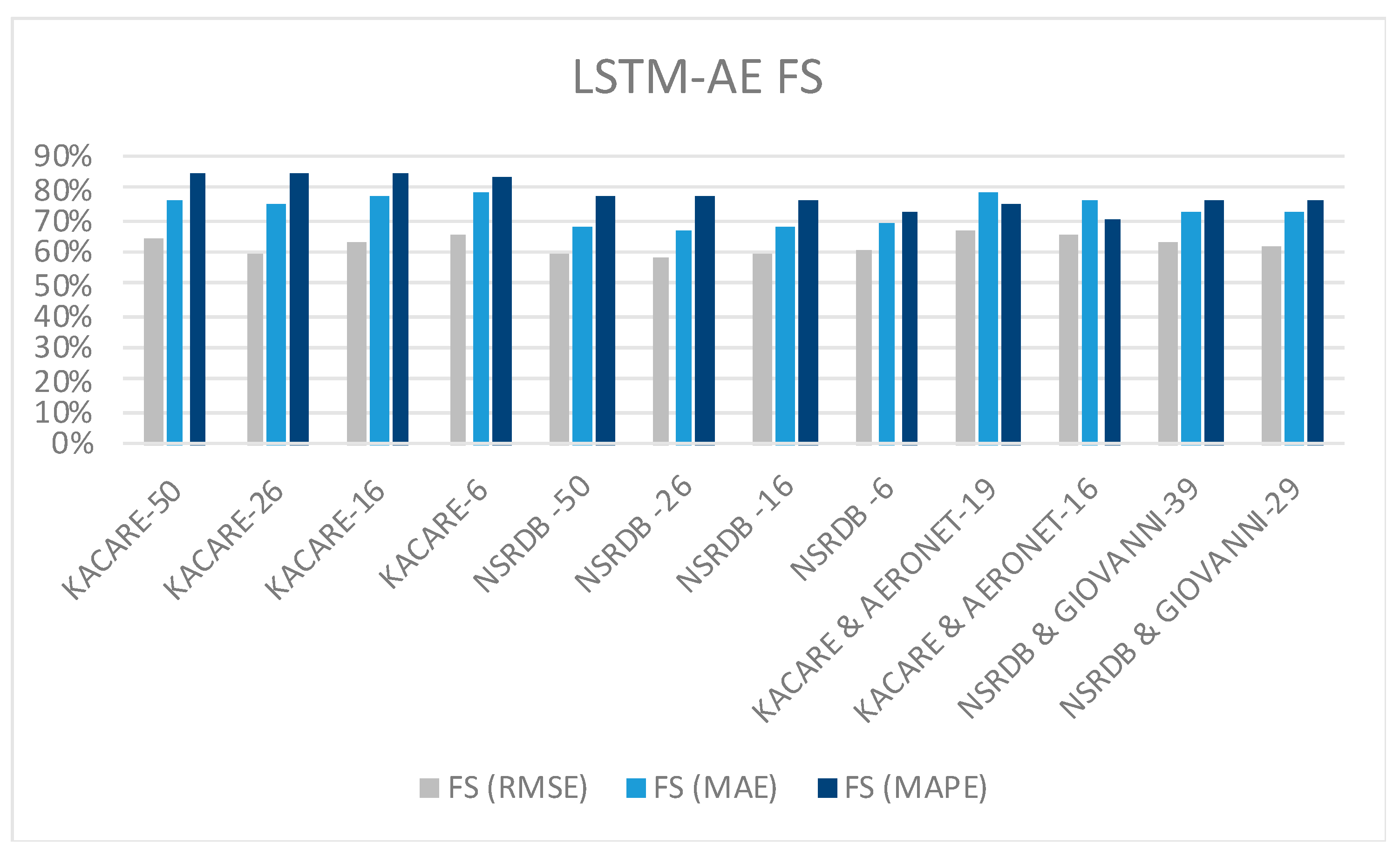

4.4. FS of All Models

5. Conclusion

- Although the GHI values at the same forecasting hour on previous days correlate with the output as strongly as, or more strongly than, the GHI values of the previous three hours on the same day, using the latter yields better accuracy, especially in terms of RMSE and MAE. Adding the GHI values at the same forecasting hour on previous days improved only MAPE. Therefore, whether to include more lagged GHI features depends on the performance metric of interest and the size of the dataset.

- Adding lagged weather, aerosol, and solar radiation component features slightly improves RMSE, MAE, and MAPE. However, this slight improvement may not justify the loss in efficiency caused by the larger number of parameters. Therefore, including these features is a tradeoff between performance and efficiency.

- The LSTM-AE model provides the best forecasting results with all feature sets, followed by the LSTM and GRU models, whereas the BiLSTM and BiGRU models provide the worst.

- The LSTM-AE model achieves the best forecast skill, reaching 85%.

- Among the FS metrics, FS MAPE shows the largest improvement for the LSTM, GRU, and LSTM-AE models, whereas FS MAE shows the largest improvement for the BiGRU and BiLSTM models.

- The best RMSE, MAE, and MAPE values, 46.19 Wh/m², 25.69 Wh/m², and 8.18%, are achieved by the LSTM-AE model with the merged K.A.CARE and AERONET dataset with 19 features.

- Regarding datasets, all results obtained with the NSRDB dataset are worse than those obtained with the K.A.CARE dataset, because ground-based measurements are more accurate than satellite-based observations and thus yield better forecasts. However, ground-based data suffer from a large number of missing values due to device malfunctions or scheduled maintenance. Satellite data can therefore be used safely for model development, with the expectation that results would improve with ground-based data.
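The first conclusion compares how strongly two groups of lagged GHI features (same hour on previous days versus previous hours on the same day) correlate with the output. Assuming Pearson correlation on a table of lag features named as in the feature tables of this paper, the comparison could be computed as in the sketch below. `lag_correlations` is a hypothetical helper, and the demo data is purely synthetic; it says nothing about the paper's datasets.

```python
import numpy as np
import pandas as pd


def lag_correlations(features: pd.DataFrame, target: str = "GHI") -> pd.Series:
    """Pearson correlation of every lagged-target column with the target, strongest first."""
    lag_cols = [c for c in features.columns if c.startswith(f"{target}_")]
    corr = features[lag_cols].corrwith(features[target])
    return corr.reindex(corr.abs().sort_values(ascending=False).index)


# Synthetic demo: two noisy copies of the target with different noise levels
rng = np.random.default_rng(0)
ghi = rng.uniform(100, 900, size=500)
demo = pd.DataFrame({
    "GHI": ghi,
    "GHI_lag1": ghi + rng.normal(0, 150, size=500),
    "GHI_1D": ghi + rng.normal(0, 50, size=500),
})
ranking = lag_correlations(demo)
print(ranking)
```

As the conclusion notes, a higher correlation alone does not guarantee better forecasting accuracy, so such a ranking is a screening aid rather than a substitute for the model experiments.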

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Gielen, D.; Gorini, R.; Wagner, N.; Leme, R.; Gutierrez, L.; Prakash, G.; Asmelash, E.; Janeiro, L.; Gallina, G.; Vale, G. Global energy transformation: a roadmap to 2050. 2019.

2. Shell Global. Global Energy Resources database. https://www.shell.com (accessed Jun. 26, 2020).

3. Elrahmani, A.; Hannun, J.; Eljack, F.; Kazi, M.-K. Status of renewable energy in the GCC region and future opportunities. Curr. Opin. Chem. Eng. 2021, 31, 100664.

4. Wang, H.; Liu, Y.; Zhou, B.; Li, C.; Cao, G.; Voropai, N.; Barakhtenko, E. Taxonomy research of artificial intelligence for deterministic solar power forecasting. Energy Convers. Manag. 2020, 214, 112909.

5. Ozcanli, A.K.; Yaprakdal, F.; Baysal, M. Deep learning methods and applications for electrical power systems: A comprehensive review. Int. J. Energy Res. 2020.

6. Bamisile, O.; Oluwasanmi, A.; Ejiyi, C.; Yimen, N.; Obiora, S.; Huang, Q. Comparison of machine learning and deep learning algorithms for hourly global/diffuse solar radiation predictions. Int. J. Energy Res. 2022, 46, 10052–10073.

7. Gensler, A.; Henze, J.; Sick, B.; Raabe, N. Deep Learning for solar power forecasting: An approach using AutoEncoder and LSTM Neural Networks. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC); IEEE, 2016; pp. 2858–2865.

8. Zang, H.; Cheng, L.; Ding, T.; Cheung, K.W.; Wei, Z.; Sun, G. Day-ahead photovoltaic power forecasting approach based on deep convolutional neural networks and meta learning. Int. J. Electr. Power Energy Syst. 2020, 118, 105790.

9. Zang, H.; Cheng, L.; Ding, T.; Cheung, K.W.; Wang, M.; Wei, Z.; Sun, G. Application of functional deep belief network for estimating daily global solar radiation: A case study in China. Energy 2020, 191, 116502.

10. Alkhayat, G.; Hasan, S.H.; Mehmood, R. SENERGY: A Novel Deep Learning-Based Auto-Selective Approach and Tool for Solar Energy Forecasting. Energies 2022, 15, 6659.

11. Alkhayat, G.; Mehmood, R. A Review and Taxonomy of Wind and Solar Energy Forecasting Methods Based on Deep Learning. Energy AI 2021, 100060.

12. Basmadjian, R.; Shaafieyoun, A.; Julka, S. Day-ahead forecasting of the percentage of renewables based on time-series statistical methods. Energies 2021, 14, 7443.

13. Marchesoni-Acland, F.; Lauret, P.; Gómez, A.; Alonso-Suárez, R. Analysis of ARMA solar forecasting models using ground measurements and satellite images. In Proceedings of the 2019 IEEE 46th Photovoltaic Specialists Conference (PVSC); IEEE, 2019; pp. 2445–2451.

14. Bellinguer, K.; Girard, R.; Bontron, G.; Kariniotakis, G. Short-term Forecasting of Photovoltaic Generation based on Conditioned Learning of Geopotential Fields. In Proceedings of the 2020 55th International Universities Power Engineering Conference (UPEC); IEEE, 2020; pp. 1–6.

15. Bellinguer, K.; Girard, R.; Bontron, G.; Kariniotakis, G. A generic methodology to efficiently integrate weather information in short-term Photovoltaic generation forecasting models. Sol. Energy 2022, 244, 401–413.

16. Piotrowski, P.; Parol, M.; Kapler, P.; Fetliński, B. Advanced Forecasting Methods of 5-Minute Power Generation in a PV System for Microgrid Operation Control. Energies 2022, 15, 2645.

17. Solano, E.S.; Dehghanian, P.; Affonso, C.M. Solar Radiation Forecasting Using Machine Learning and Ensemble Feature Selection. Energies 2022, 15, 7049.

18. Gairaa, K.; Voyant, C.; Notton, G.; Benkaciali, S.; Guermoui, M. Contribution of ordinal variables to short-term global solar irradiation forecasting for sites with low variabilities. Renew. Energy 2022, 183, 890–902.

19. Hassan, M.A.; Bailek, N.; Bouchouicha, K.; Nwokolo, S.C. Ultra-short-term exogenous forecasting of photovoltaic power production using genetically optimized non-linear auto-regressive recurrent neural networks. Renew. Energy 2021, 171, 191–209.

20. Frederiksen, C.A.F.; Cai, Z. Novel machine learning approach for solar photovoltaic energy output forecast using extra-terrestrial solar irradiance. Appl. Energy 2022, 306, 118152.

21. Lee, W.; Kim, K.; Park, J.; Kim, J.; Kim, Y. Forecasting solar power using long-short term memory and convolutional neural networks. IEEE Access 2018, 6, 73068–73080.

22. Castangia, M.; Aliberti, A.; Bottaccioli, L.; Macii, E.; Patti, E. A compound of feature selection techniques to improve solar radiation forecasting. Expert Syst. Appl. 2021, 178, 114979.

23. Omar, N.; Aly, H.; Little, T. LSTM and RBFNN based univariate and multivariate forecasting of day-ahead solar irradiance for Atlantic region in Canada and Mediterranean region in Libya. In Proceedings of the 2021 4th International Conference on Energy, Electrical and Power Engineering (CEEPE); IEEE, 2021; pp. 1130–1135.

24. Omar, N.; Aly, H.; Little, T. Optimized Feature Selection Based on a Least-Redundant and Highest-Relevant Framework for a Solar Irradiance Forecasting Model. IEEE Access 2022, 10, 48643–48659.

25. Cheng, X.; Ye, D.; Shen, Y.; Li, D.; Feng, J. Studies on the improvement of modelled solar radiation and the attenuation effect of aerosol using the WRF-Solar model with satellite-based AOD data over north China. Renew. Energy 2022, 196, 358–365.

26. Jain, S.; Singh, C.; Tripathi, A.K. A Flexible and Effective Method to Integrate the Satellite-Based AOD Data into WRF-Solar Model for GHI Simulation. J. Indian Soc. Remote Sens. 2021, 49, 2797–2813.

27. Bunn, P.T.W.; Holmgren, W.F.; Leuthold, M.; Castro, C.L. Using GEOS-5 forecast products to represent aerosol optical depth in operational day-ahead solar irradiance forecasts for the southwest United States. J. Renew. Sustain. Energy 2020, 12, 53702.

28. Masoom, A.; Kosmopoulos, P.; Bansal, A.; Gkikas, A.; Proestakis, E.; Kazadzis, S.; Amiridis, V. Forecasting dust impact on solar energy using remote sensing and modeling techniques. Sol. Energy 2021, 228, 317–332.

29. Das, S.; Genton, M.G.; Alshehri, Y.M.; Stenchikov, G.L. A cyclostationary model for temporal forecasting and simulation of solar global horizontal irradiance. Environmetrics 2021, e2700.

30. Mazorra-Aguiar, L.; Díaz, F. Solar radiation forecasting with statistical models. In Wind Field and Solar Radiation Characterization and Forecasting; Springer, 2018; pp. 171–200.

31. Alfadda, A.; Rahman, S.; Pipattanasomporn, M. Solar irradiance forecast using aerosols measurements: A data driven approach. Sol. Energy 2018, 170, 924–939.

32. Kumar, A.; Rizwan, M.; Nangia, U. Artificial neural network based model for short term solar radiation forecasting considering aerosol index. In Proceedings of the 2018 2nd IEEE International Conference on Power Electronics, Intelligent Control and Energy Systems (ICPEICES); IEEE, 2018; pp. 212–217.

33. Zuo, H.-M.; Qiu, J.; Jia, Y.-H.; Wang, Q.; Li, F.-F. Ten-minute prediction of solar irradiance based on cloud detection and a long short-term memory (LSTM) model. Energy Reports 2022, 8, 5146–5157.

34. Si, Z.; Yu, Y.; Yang, M.; Li, P. Hybrid solar forecasting method using satellite visible images and modified convolutional neural networks. IEEE Trans. Ind. Appl. 2020, 57, 5–16.

35. Zhu, T.; Guo, Y.; Wang, C.; Ni, C. Inter-hour forecast of solar radiation based on the structural equation model and ensemble model. Energies 2020, 13, 4534.

36. Zepner, L.; Karrasch, P.; Wiemann, F.; Bernard, L. ClimateCharts.net: an interactive climate analysis web platform. Int. J. Digit. Earth 2021, 14, 338–356.

37. K.A.CARE Renewable Resource Atlas, King Abdullah City for Atomic and Renewable Energy (K.A.CARE), Saudi Arabia. 2021. https://rratlas.kacare.gov.sa/ (accessed Dec. 01, 2021).

38. Zell, E.; Gasim, S.; Wilcox, S.; Katamoura, S.; Stoffel, T.; Shibli, H.; Engel-Cox, J.; Al Subie, M. Assessment of solar radiation resources in Saudi Arabia. Sol. Energy 2015, 119, 422–438.

39. Holben, B.N.; Eck, T.F.; Slutsker, I.; Tanre, D.; Buis, J.P.; Setzer, A.; Vermote, E.; Reagan, J.A.; Kaufman, Y.J.; Nakajima, T. AERONET: A federated instrument network and data archive for aerosol characterization. Remote Sens. Environ. 1998, 66, 1–16.

40. Chabane, F.; Arif, A.; Benramache, S. The Estimate of Aerosol Optical Depth for Diverse Meteorological Conditions. Instrumentation, Mes. Métrologies 2020, 19.

41. Dundar, C.; Gokcen Isik, A.; Oguz, K. Temporal analysis of Sand and Dust Storms (SDS) between the years 2003 and 2017 in the Central Asia. E3S Web Conf. 2019, 99, 2017–2019.

42. Sengupta, M.; Habte, A.; Xie, Y.; Lopez, A.; Buster, G. National Solar Radiation Database (NSRDB). 2018.

43. DISC, G.E.S. Giovanni, the Bridge between Data and Science, version 4.37. 2021. https://giovanni.gsfc.nasa.gov/giovanni/ (accessed Oct. 17, 2022).

44. Kleissl, J. Solar Energy Forecasting and Resource Assessment; Academic Press, 2013; ISBN 012397772X.

45. Gueymard, C.A.; Yang, D. Worldwide validation of CAMS and MERRA-2 reanalysis aerosol optical depth products using 15 years of AERONET observations. Atmos. Environ. 2020, 225, 117216.

46. Gueymard, C.A.; Ruiz-Arias, J.A. Validation of direct normal irradiance predictions under arid conditions: A review of radiative models and their turbidity-dependent performance. Renew. Sustain. Energy Rev. 2015, 45, 379–396.

47. Gueymard, C.A.; Kocifaj, M. Clear-sky spectral radiance modeling under variable aerosol conditions. Renew. Sustain. Energy Rev. 2022, 168, 112901.

48. Vignola, F. GHI correlations with DHI and DNI and the effects of cloudiness on one-minute data. In Proceedings of the ASES; 2012.

49. Martínez, J.F.; Steiner, M.; Wiesenfarth, M.; Helmers, H.; Siefer, G.; Glunz, S.W.; Dimroth, F. Worldwide Energy Harvesting Potential of Hybrid CPV/PV Technology. arXiv 2022, arXiv:2205.12858.

50. Peng, T.; Zhang, C.; Zhou, J.; Nazir, M.S. An integrated framework of Bi-directional Long-Short Term Memory (BiLSTM) based on sine cosine algorithm for hourly solar radiation forecasting. Energy 2021, 221, 119887.

51. Zhou, H.; Zhang, Y.; Yang, L.; Liu, Q.; Yan, K.; Du, Y. Short-term photovoltaic power forecasting based on long short term memory neural network and attention mechanism. IEEE Access 2019, 7, 78063–78074.

52. Sorkun, M.C.; Paoli, C.; Incel, Ö.D. Time series forecasting on solar irradiation using deep learning. In Proceedings of the 2017 10th International Conference on Electrical and Electronics Engineering (ELECO); IEEE, 2017; pp. 151–155.

53. Alharbi, F.R.; Csala, D. Wind speed and solar irradiance prediction using a bidirectional long short-term memory model based on neural networks. Energies 2021, 14.

54. Lynn, H.M.; Pan, S.B.; Kim, P. A deep bidirectional GRU network model for biometric electrocardiogram classification based on recurrent neural networks. IEEE Access 2019, 7, 145395–145405.

55. Nguyen, H.D.; Tran, K.P.; Thomassey, S.; Hamad, M. Forecasting and Anomaly Detection approaches using LSTM and LSTM Autoencoder techniques with the applications in supply chain management. Int. J. Inf. Manage. 2021, 57, 102282.

56. Sagheer, A.; Kotb, M. Unsupervised pre-training of a deep LSTM-based stacked autoencoder for multivariate time series forecasting problems. Sci. Rep. 2019, 9, 1–16.

57. Li, G.; Xie, S.; Wang, B.; Xin, J.; Li, Y.; Du, S. Photovoltaic Power Forecasting With a Hybrid Deep Learning Approach. IEEE Access 2020, 8, 175871–175880.

58. Hossain, M.S.; Mahmood, H. Short-term photovoltaic power forecasting using an LSTM neural network and synthetic weather forecast. IEEE Access 2020, 8, 172524–172533.

59. Voyant, C.; Notton, G.; Kalogirou, S.; Nivet, M.-L.; Paoli, C.; Motte, F.; Fouilloy, A. Machine learning methods for solar radiation forecasting: A review. Renew. Energy 2017, 105, 569–582.

| Ref No. | Method | Features | Data source | Results |
|---|---|---|---|---|
| [21] | Hybrid of CNN and LSTM, LSTM-AE | Date, time, location, inverter ID and temperature, power, slope irradiation, horizontal surface irradiation, ground temperature, AT, WS, RH | Ground-based | The hybrid CNN+LSTM model achieved the lowest MAPE = 13.42, RMSE = 0.0987, and MAE = 0.0506 for next-hour solar power prediction in South Korea. |
| [22] | CNN, LSTM | Hour, previous GHI; forecasts of UV index, CC, DP, AT, RH, wind bearing, sunshine duration | Ground-based, satellite-based | Both CNN and LSTM models achieved the lowest normalized RMSE of around 43 and normalized MAE of around 17 for next-hour GHI prediction in Torino, Italy. |
| [23] | RBFNN, LSTM | Previous 30 days of AT, RH, P, ZA, GHI | Satellite-based | The LSTM model without weather data achieved a better RMSE of 0.013 for day-ahead GHI prediction in Halifax, Canada, and Tripoli, Libya. |
| [24] | LSTM | Previous 24 hours of clear-sky GHI, DNI, DHI, RH | Satellite-based | The LSTM model with four features achieved RMSE between 1.09% and 3.19% for day-ahead GHI prediction at four locations in Canada. |
| [31] | MLP, SVR, kNN, DTR | Last-hour GHI, AOD, AE, DNI, DHI; current ZA, hour, month; forecasts of WD, WS, AOD | Ground-based, satellite-based | The MLP model achieved the lowest RMSE = 32.75 and the highest FS = 42.10% for next-hour GHI prediction in Riyadh, Saudi Arabia. |
| [32] | ANN | AT, WS, WD, RH, P, AOD, GHI | Ground-based | The ANN model achieved MSE = 4.67% for next-3-hour GHI prediction in Delhi, India. |
| [33] | Autoregressive, SVR, LSTM | Last 10-min clear-sky index; current clear-sky index, CC, RH, AOD | Satellite-based | The LSTM model achieved normalized RMSE = 15.25% for next-10-min GHI prediction at a town in Inner Mongolia. |
| [34] | Hybrid of CNN and MLP | Last 4 hours of GHI; current AT, RH, ZA, AOD, WS, rainfall, P; sky images | Ground-based, satellite images | The hybrid CNN+MLP model achieved RMSE of around 38 and MAE of around 27 for next-hour GHI prediction in Shandong province, China. |
| [35] | Ensemble of multiple regression, SVR, and MLP | ZA, AOD, P, AT, RH, WS, sine of day, CC, air mass, azimuth angle | Satellite-based | The ensemble model of multiple regression, SVR, and MLP achieved normalized RMSE = 21.98% and normalized MAE = 11.13% for next-10-min GHI prediction in Golden City, USA. |

| Time t features | Time t-1 features | Time t-2 features | Time t-3 features | Time t features, last n days | Time t features, last n days (cont.) |
|---|---|---|---|---|---|
| GHI (output) | GHI_lag1 | GHI_lag2 | GHI_lag3 | GHI_1D | GHI_90D |
| | DNI_lag1 | DNI_lag2 | DNI_lag3 | GHI_2D | GHI_120D |
| Hour | DHI_lag1 | DHI_lag2 | DHI_lag3 | GHI_3D | GHI_150D |
| Day | AT_lag1 | AT_lag2 | AT_lag3 | GHI_4D | GHI_180D |
| Month | ZA_lag1 | ZA_lag2 | ZA_lag3 | GHI_5D | GHI_210D |
| | WS_lag1 | WS_lag2 | WS_lag3 | GHI_6D | GHI_240D |
| | WD_lag1 | WD_lag2 | WD_lag3 | GHI_7D | GHI_270D |
| | RH_lag1 | RH_lag2 | RH_lag3 | GHI_15D | GHI_300D |
| | BP_lag1 | BP_lag2 | BP_lag3 | GHI_30D | GHI_330D |
| | | | | GHI_60D | GHI_360D |

| Time t features | Time t-1 features | Time t-1 features (aerosol) | Time t-2 & t-3 features | Time t features, last n days |
|---|---|---|---|---|
| GHI (output) | GHI_lag1 | DUEXTTAU_lag1 | GHI_lag2 | GHI_1D |
| | DNI_lag1 | DUEXTT25_lag1 | DNI_lag2 | GHI_2D |
| Hour | DHI_lag1 | TOTEXTTAU_lag1 | DHI_lag2 | GHI_3D |
| Day | AT_lag1 | DUCMASS_lag1 | ZA_lag2 | GHI_4D |
| Month | ZA_lag1 | DUCMASS25_lag1 | AT_lag2 | GHI_5D |
| | WS_lag1 | DUSMASS_lag1 | GHI_lag3 | GHI_6D |
| | WD_lag1 | DUSMASS25_lag1 | DNI_lag3 | GHI_7D |
| | RH_lag1 | DUSCATFM_lag1 | DHI_lag3 | |
| | BP_lag1 | TOTSCATAU_lag1 | ZA_lag3 | |
| | | TOTANGSTR_lag1 | AT_lag3 | |

| Dataset | Period | Missing days | Hourly records | GHI mean | GHI SD | GHI variance | Weather conditions* |
|---|---|---|---|---|---|---|---|
| K.A.CARE | 24/12/2016–03/03/2021 | 1117 | Train: 7044 | 457.32 | 297.34 | 88411.98 | 1: 5458; 2: 3090; 3: 1499 |
| | | | Val: 1495 | 424.40 | 269.23 | 72482.13 | |
| | | | Test: 1508 | 446.66 | 293.61 | 86205.48 | |
| | | | Total: 10047 | 450.82 | 292.97 | 85830.41 | |
| NSRDB | 27/12/2017–31/12/2019 | 360 | Train: 6193 | 481.73 | 313.90 | 98534.76 | 1: 4548; 2: 2780; 3: 1504 |
| | | | Val: 1314 | 529.09 | 331.09 | 109624.53 | |
| | | | Test: 1325 | 438.84 | 278.12 | 77354.06 | |
| | | | Total: 8832 | 482.35 | 312.40 | 97595.1 | |
| K.A.CARE & AERONET | 05/01/2016–03/03/2021 | 1215 | Train: 2733 | 604.08 | 257.75 | 66436.59 | 1: 2508; 2: 1279; 3: 111 |
| | | | Val: 580 | 607.67 | 260.03 | 67615.76 | |
| | | | Test: 585 | 555.42 | 223.30 | 49863.17 | |
| | | | Total: 3898 | 597.31 | 253.78 | 64405.57 | |
| NSRDB & GIOVANNI | 08/01/2017–31/12/2019 | 7 | Train: 9180 | 473.20 | 309.68 | 95905.06 | 1: 6491; 2: 4291; 3: 2310 |
| | | | Val: 1948 | 530.51 | 326.67 | 106714.98 | |
| | | | Test: 1964 | 462.27 | 299.18 | 89503.37 | |
| | | | Total: 13092 | 480.09 | 311.45 | 96998.23 | |

*Weather conditions: 1 = sunny, 2 = partly clear, 3 = unclear.
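The Train/Val/Test counts in this table correspond to roughly a 70/15/15 division of each dataset (for example, 7044/1495/1508 of the 10047 K.A.CARE records). This excerpt does not state the exact splitting rule or the normalization method, so the following is only a sketch that assumes a chronological split and min-max scaling fitted on the training portion alone, so that no information from validation or test hours leaks into the scaler. The helper names are hypothetical, and the rounding used here will not necessarily reproduce the exact counts in the table.

```python
import numpy as np


def chronological_split(X, y, train_frac=0.70, val_frac=0.15):
    """Hypothetical helper: split time-ordered arrays into train/val/test without shuffling."""
    n = len(X)
    i_train = round(n * train_frac)
    i_val = i_train + round(n * val_frac)
    return ((X[:i_train], y[:i_train]),
            (X[i_train:i_val], y[i_train:i_val]),
            (X[i_val:], y[i_val:]))


def fit_minmax(X_train):
    """Per-feature minimum and range, computed on the training portion only."""
    lo = X_train.min(axis=0)
    span = X_train.max(axis=0) - lo
    span = np.where(span == 0, 1.0, span)  # constant columns would otherwise divide by zero
    return lo, span


def apply_minmax(X, lo, span):
    return (X - lo) / span


X = np.arange(200.0).reshape(100, 2)  # 100 time-ordered samples with 2 features
y = np.arange(100.0)
(train_X, train_y), (val_X, val_y), (test_X, test_y) = chronological_split(X, y)
lo, span = fit_minmax(train_X)
train_scaled = apply_minmax(train_X, lo, span)
test_scaled = apply_minmax(test_X, lo, span)  # may fall outside [0, 1]; this is expected
```

If the GHI target is scaled the same way, model outputs have to be mapped back with `y * span + lo` before RMSE, MAE, and MAPE are computed in physical units.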

| Experiment 1 | | | Experiment 2 | | Experiment 3 |
|---|---|---|---|---|---|
| GHI (output) | GHI_1D | GHI_90D | GHI (output) | GHI_1D | GHI (output) |
| Hour | GHI_2D | GHI_120D | Hour | GHI_2D | Hour |
| Day | GHI_3D | GHI_150D | Day | GHI_3D | Day |
| Month | GHI_4D | GHI_180D | Month | GHI_4D | Month |
| GHI_lag1 | GHI_5D | GHI_210D | GHI_lag1 | GHI_5D | GHI_lag1 |
| GHI_lag2 | GHI_6D | GHI_240D | GHI_lag2 | GHI_6D | GHI_lag2 |
| GHI_lag3 | GHI_7D | GHI_270D | GHI_lag3 | GHI_7D | GHI_lag3 |
| | GHI_15D | GHI_300D | | GHI_15D | |
| | GHI_30D | GHI_330D | | GHI_30D | |
| | GHI_60D | GHI_360D | | GHI_60D | |
| Total: 26 features | | | Total: 16 features | | Total: 6 features |

| Experiment 1 | | | | | | Experiment 2 | | |
|---|---|---|---|---|---|---|---|---|
| GHI (output) | GHI_lag1 | GHI_lag2 | GHI_lag3 | GHI_1D | GHI_90D | GHI (output) | GHI_3D | GHI_120D |
| | DNI_lag1 | DNI_lag2 | DNI_lag3 | GHI_2D | GHI_120D | | GHI_4D | GHI_150D |
| | DHI_lag1 | DHI_lag2 | DHI_lag3 | GHI_3D | GHI_150D | Hour | GHI_5D | GHI_180D |
| Hour | AT_lag1 | AT_lag2 | AT_lag3 | GHI_4D | GHI_180D | Day | GHI_6D | GHI_210D |
| Day | ZA_lag1 | ZA_lag2 | ZA_lag3 | GHI_5D | GHI_210D | Month | GHI_7D | GHI_240D |
| Month | WS_lag1 | WS_lag2 | WS_lag3 | GHI_6D | GHI_240D | GHI_lag1 | GHI_15D | GHI_270D |
| | WD_lag1 | WD_lag2 | WD_lag3 | GHI_7D | GHI_270D | GHI_lag2 | GHI_30D | GHI_300D |
| | RH_lag1 | RH_lag2 | RH_lag3 | GHI_15D | GHI_300D | GHI_lag3 | GHI_60D | GHI_330D |
| | BP_lag1 | BP_lag2 | BP_lag3 | GHI_30D | GHI_330D | GHI_1D | GHI_90D | GHI_360D |
| | | | | GHI_60D | GHI_360D | GHI_2D | | |
| Total: 50 features | | | | | | Total: 26 features | | |

| Experiment 1 | | Experiment 2 | |
|---|---|---|---|
| GHI (output) | GHI_4D | GHI (output) | GHI_lag1 |
| Hour | GHI_lag1 | Hour | DNI_lag1 |
| Day | DNI_lag1 | Day | DHI_lag1 |
| Month | DHI_lag1 | Month | ZA_lag1 |
| AOD_500_lag1 | ZA_lag1 | GHI_1D | AT_lag1 |
| 440-675_AE_lag1 | AT_lag1 | GHI_2D | WS_lag1 |
| OAM_lag1 | WS_lag1 | GHI_3D | WD_lag1 |
| GHI_1D | WD_lag1 | GHI_4D | RH_lag1 |
| GHI_2D | RH_lag1 | | BP_lag1 |
| GHI_3D | BP_lag1 | | |
| Total: 19 features | | Total: 16 features | |

| Experiment 1 | | | | | Experiment 2 | | | |
|---|---|---|---|---|---|---|---|---|
| GHI (output) | GHI_lag1 | DUEXTTAU_lag1 | GHI_lag2 | GHI_1D | GHI (output) | GHI_lag1 | GHI_lag2 | GHI_1D |
| | DNI_lag1 | DUEXTT25_lag1 | DNI_lag2 | GHI_2D | | DNI_lag1 | DNI_lag2 | GHI_2D |
| Hour | DHI_lag1 | TOTEXTTAU_lag1 | DHI_lag2 | GHI_3D | Hour | DHI_lag1 | DHI_lag2 | GHI_3D |
| Day | AT_lag1 | DUCMASS_lag1 | ZA_lag2 | GHI_4D | Day | AT_lag1 | ZA_lag2 | GHI_4D |
| Month | ZA_lag1 | DUCMASS25_lag1 | AT_lag2 | GHI_5D | Month | ZA_lag1 | AT_lag2 | GHI_5D |
| | WS_lag1 | DUSMASS_lag1 | GHI_lag3 | GHI_6D | | WS_lag1 | GHI_lag3 | GHI_6D |
| | WD_lag1 | DUSMASS25_lag1 | DNI_lag3 | GHI_7D | | WD_lag1 | DNI_lag3 | GHI_7D |
| | RH_lag1 | DUSCATFM_lag1 | DHI_lag3 | | | RH_lag1 | DHI_lag3 | |
| | BP_lag1 | TOTSCATAU_lag1 | ZA_lag3 | | | BP_lag1 | ZA_lag3 | |
| | | TOTANGSTR_lag1 | AT_lag3 | | | | AT_lag3 | |
| Total: 39 features | | | | | Total: 29 features | | | |
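The two feature sets in this table differ only by the ten lagged GIOVANNI aerosol variables. As a bookkeeping aid (not the authors' code), the following sketch generates both name lists from the naming scheme used in the tables and checks the totals; the output GHI is not counted, matching the "Total" rows. The constant and function names are hypothetical.

```python
CALENDAR = ["Hour", "Day", "Month"]
LAG1_VARS = ["GHI", "DNI", "DHI", "AT", "ZA", "WS", "WD", "RH", "BP"]
LAG23_VARS = ["GHI", "DNI", "DHI", "ZA", "AT"]  # variables that also appear at t-2 and t-3
AEROSOL_VARS = ["DUEXTTAU", "DUEXTT25", "TOTEXTTAU", "DUCMASS", "DUCMASS25",
                "DUSMASS", "DUSMASS25", "DUSCATFM", "TOTSCATAU", "TOTANGSTR"]
DAY_LAGS = range(1, 8)  # GHI_1D ... GHI_7D


def feature_set(with_aerosol: bool) -> list[str]:
    """Input feature names for the NSRDB (& GIOVANNI) experiments, excluding the GHI output."""
    names = list(CALENDAR)
    names += [f"{v}_lag1" for v in LAG1_VARS]
    if with_aerosol:
        names += [f"{v}_lag1" for v in AEROSOL_VARS]
    names += [f"{v}_lag{k}" for k in (2, 3) for v in LAG23_VARS]
    names += [f"GHI_{n}D" for n in DAY_LAGS]
    return names


exp1 = feature_set(with_aerosol=True)
exp2 = feature_set(with_aerosol=False)
print(len(exp1), len(exp2))  # 39 29
```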

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).