Submitted:

11 January 2024

Posted:

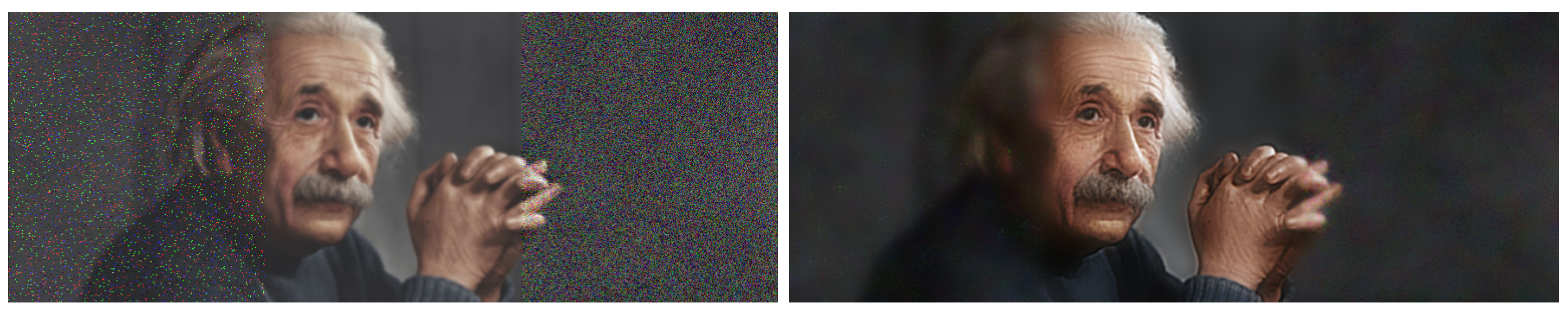

12 January 2024

Abstract

Keywords:

1. Introduction

2. The Methodology

2.1. The definition of the extended guided filter (EGF)

2.2. Selection of filter bases

2.3. Linear noise estimation for dynamic filtering

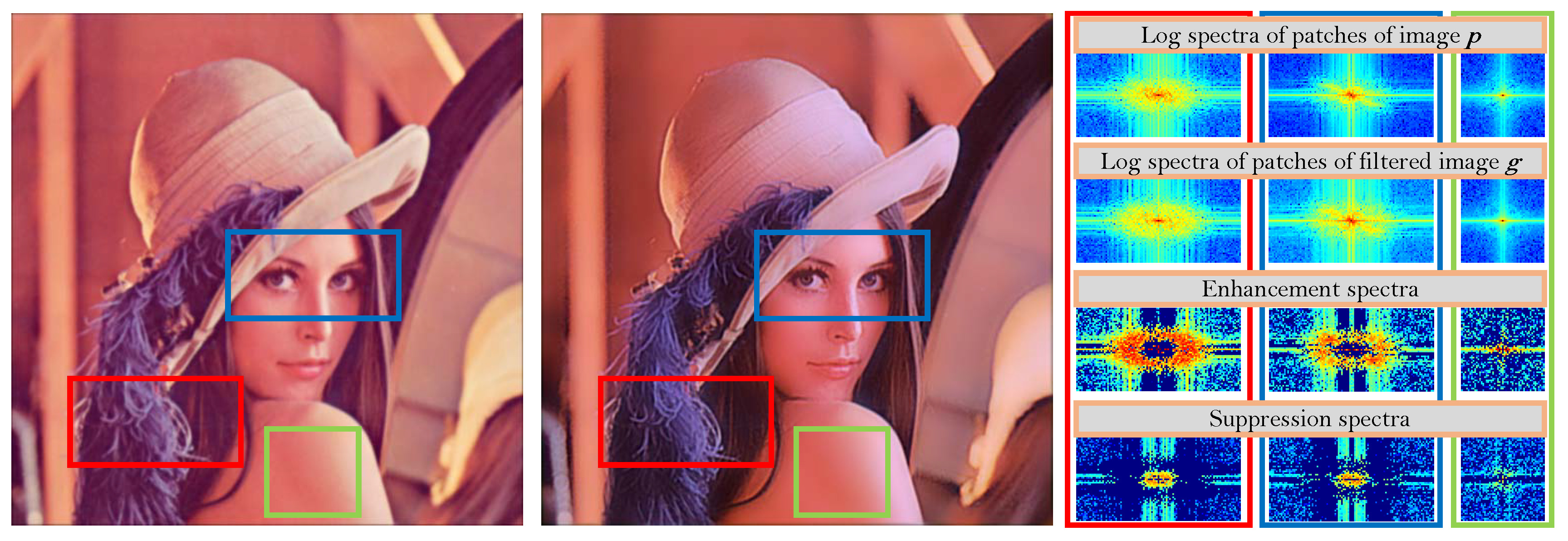

2.4. Image smoothing and detail enhancement

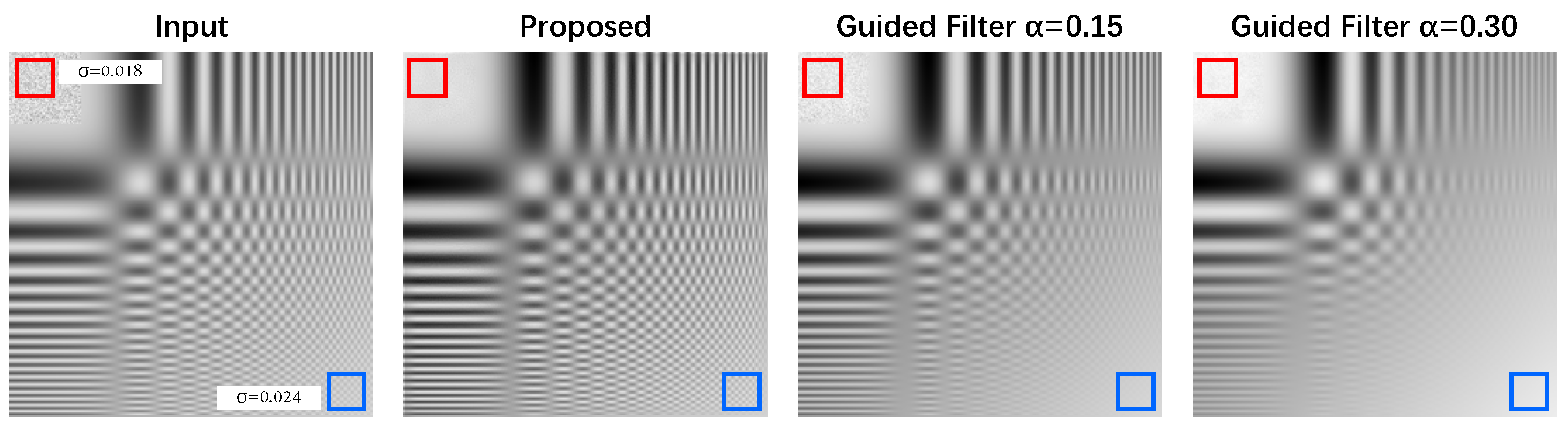

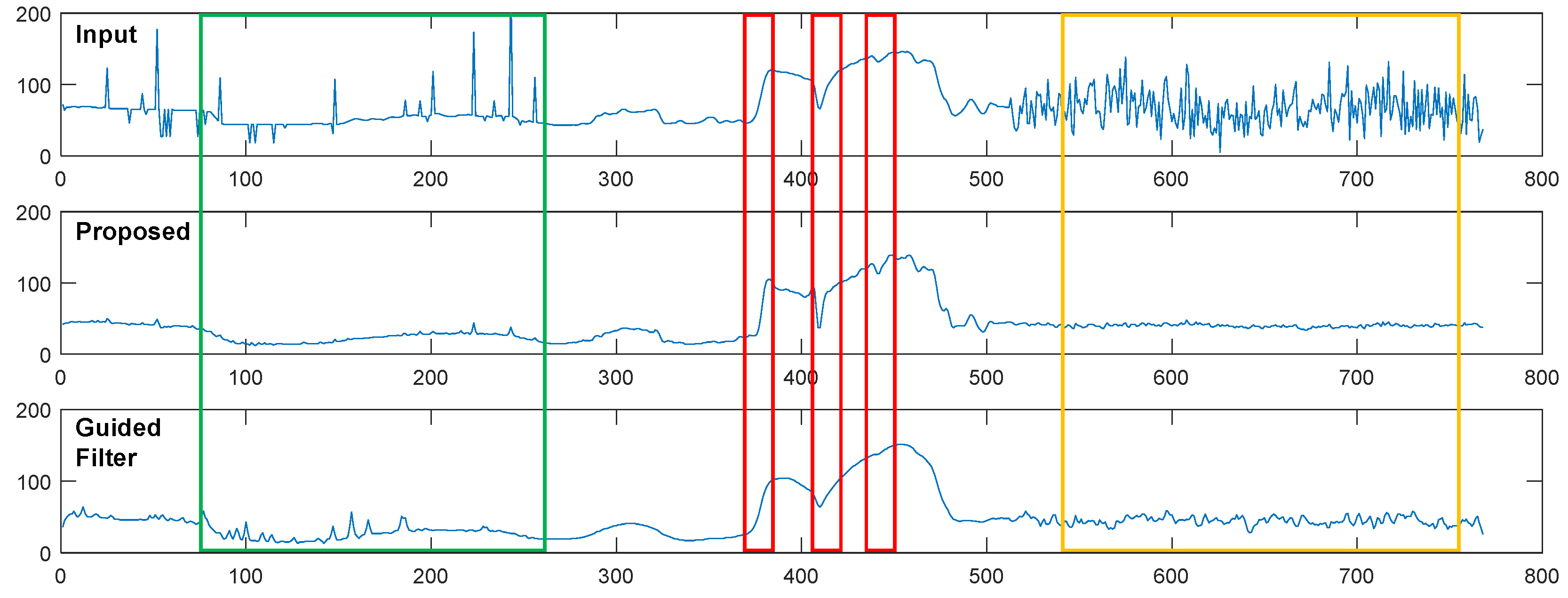

3. Experimental results and analysis

3.1. 1D case

3.2. 2D case

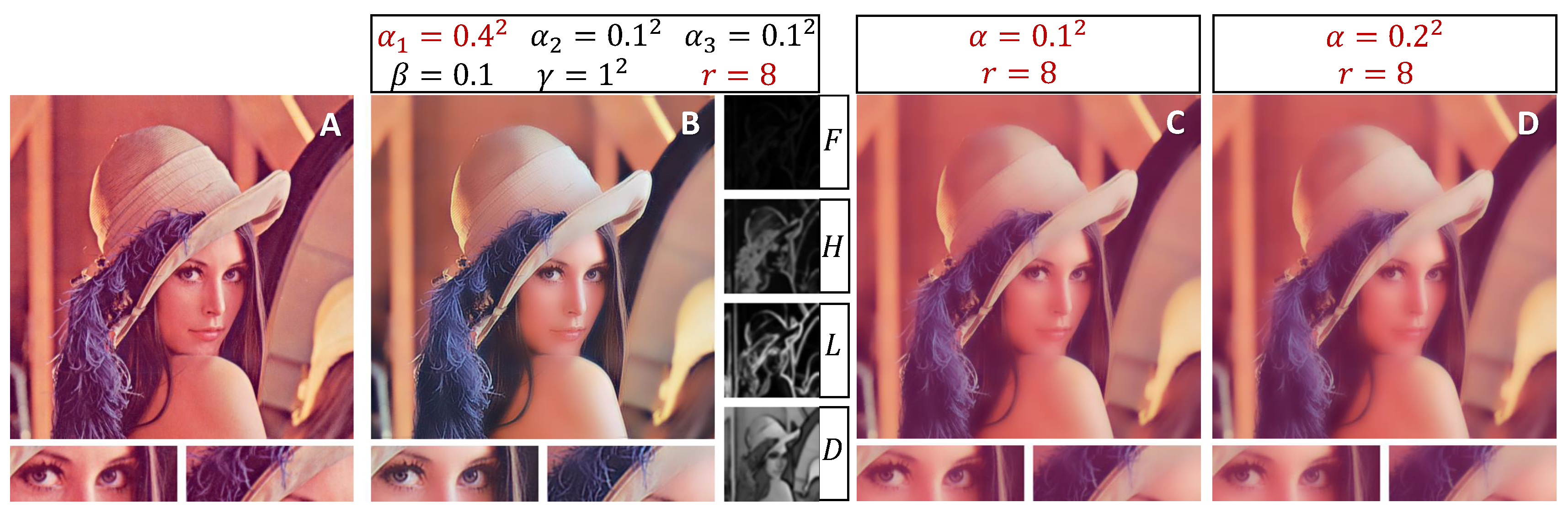

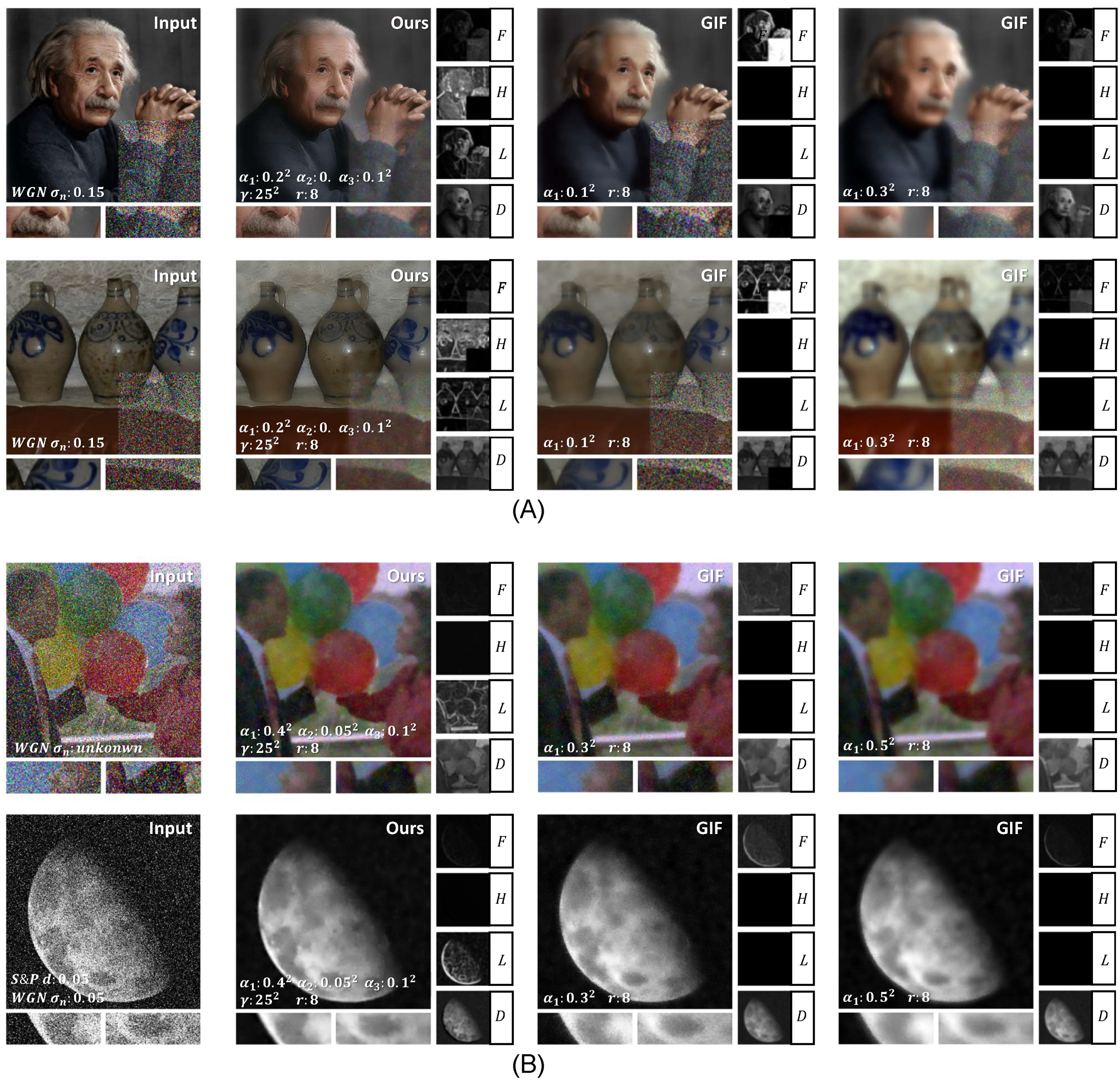

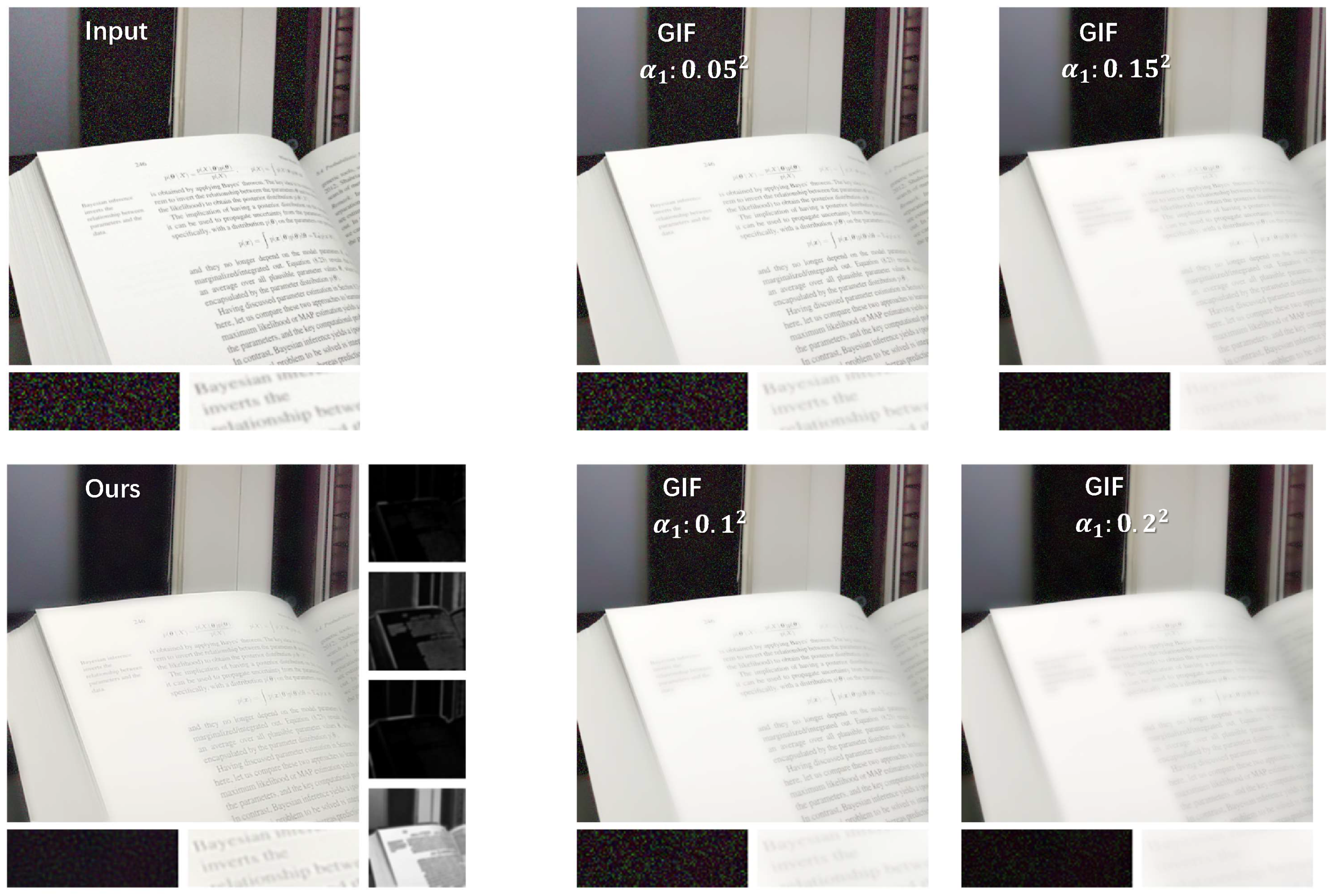

3.3. Comparison of EGF with GIF

4. Conclusion

Author Contributions

Funding

Conflicts of Interest

| | Input | EGF | GIF | GIF | GIF | GIF |
|---|---|---|---|---|---|---|
| Noisy patch | 0.5990 | 0.1017 (-83.02%) | 0.4662 (-22.17%) | 0.2823 (-52.87%) | 0.1735 (-71.04%) | 0.1140 (-80.97%) |
| Texture patch | 0.1045 | 0.0736 (-29.57%) | 0.0569 (-45.55%) | 0.0263 (-74.83%) | 0.0159 (-84.78%) | 0.0118 (-88.71%) |
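The parenthesised percentages in the table above are consistent with a relative change of each filtered value with respect to the Input column. That reading is an assumption inferred from the numbers, since the table does not name the metric. A minimal Python sketch of the arithmetic, using a hypothetical helper `relative_change` and values copied from the table:

```python
def relative_change(before: float, after: float) -> float:
    """Percentage change of `after` relative to `before`.

    A negative result means the value was reduced by filtering.
    Hypothetical helper for illustration; not taken from the paper.
    """
    return (after - before) / before * 100.0


# Values copied from the table: (Input, EGF) for each patch.
rows = {
    "Noisy patch": (0.5990, 0.1017),
    "Texture patch": (0.1045, 0.0736),
}

for name, (input_value, egf_value) in rows.items():
    change = relative_change(input_value, egf_value)
    print(f"{name}: {change:.2f}%")
```

Under this reading, a more negative percentage on the noisy patch means stronger suppression of the measured quantity, while a less negative percentage on the texture patch means more of it is retained.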

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).