Submitted:

15 January 2024

Posted:

16 January 2024


Abstract

Keywords:

1. Introduction

- The convolutional layer extracts features from the image using mathematical filters; these features can be edges, corners, or alignment patterns, and the result is a feature map that serves as input to the next layer.

- The pooling (grouping) layer reduces the resolution by shrinking the dimensions of the feature map in order to minimize the computational cost.

- The fully connected layer receives the output of the previous layer and passes it to a fully connected neural network, which contains the activation function used to recognize the final image.
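The three layer types described above can be illustrated with a minimal sketch in plain Python. It is not taken from any of the reviewed studies: the toy 6 × 6 image, the vertical-edge kernel, the weights, and the function names `conv2d`, `max_pool`, and `dense_sigmoid` are all assumptions chosen for illustration, and the sigmoid is only one possible activation function.

```python
import math


def conv2d(image, kernel):
    """Slide a square kernel over a 2D image (no padding, stride 1)."""
    k = len(kernel)
    rows = len(image) - k + 1
    cols = len(image[0]) - k + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(k) for j in range(k))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def max_pool(feature_map, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def dense_sigmoid(features, weights, bias):
    """One fully connected unit followed by a sigmoid activation."""
    z = sum(f * w for f, w in zip(features, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical input: dark left half, bright right half (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hypothetical filter that responds to dark-to-bright vertical edges.
edge_kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

feature_map = conv2d(image, edge_kernel)        # 4 x 4 feature map
pooled = max_pool(feature_map)                  # 2 x 2 after pooling
flat = [value for row in pooled for value in row]
score = dense_sigmoid(flat, weights=[0.1] * 4, bias=-1.0)
```

In a real CNN the kernel values and the dense weights are learned during training rather than fixed by hand, and many filters are applied in parallel at each layer.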

- AlexNet: Developed by [19] in 2012, it won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), demonstrating the impact of CNNs in computer vision.

- GoogLeNet (Inception): Developed in 2014 by the Google Research team, it introduced the concept of Inception modules, which apply multiple filter sizes in parallel [24].

- VGG: Developed in 2014 by the Visual Geometry Group (VGG) at the University of Oxford, it is known for its simplicity and depth [25]. Its structure of pure convolutional layers and deep subsampling influenced the design of later architectures. Several improved versions of the architecture followed.

- ResNet: Developed by [26] in 2015, this architecture is notable for its residual blocks, which allow very deep networks to be trained by easing the information flow and mitigating the vanishing gradient problem.

- Fast R-CNN: Presented in 2015 as a significant improvement over its predecessor, the R-CNN (Region-Based Convolutional Neural Network) model. It was developed at Microsoft Research to address the speed and computational efficiency limitations of R-CNN, providing a faster and more practical solution for object detection in images [27].

- DenseNet: Proposed in 2017 by [28], it is notable for its densely connected structure, in which each layer is directly connected to all subsequent layers. This dense connectivity can improve information flow and mitigate the vanishing gradient problem. It has influenced the design of subsequent architectures and continues to be a popular choice in research and practical implementation in computer vision tasks.

- MobileNet: Proposed in 2017 by [29], it is specially designed for implementations on mobile devices and uses lightweight and efficient operations to balance performance and resource consumption.

- YOLO (You Only Look Once): Developed in 2016 by [30], it is a fast and efficient object detection architecture because it treats detection as a regression problem instead of running a separate classification for each region. Several versions have followed, from YOLOv1 to YOLOv8 in 2023. The fifth version, YOLOv5, was released in 2020 and built on PyTorch [31]; it maintains the original YOLO approach of dividing the image into a grid and predicting bounding boxes with class probabilities for each cell. Its overall architecture includes convolutional layers, attention layers, and other modern techniques; it is important to mention that this version was developed by the Ultralytics team, not by the original authors. In 2022, the YOLOv6 and YOLOv7 versions were developed, with improvements in architecture and training scheme that raise object detection accuracy without increasing the cost of inference, a concept known as a "trainable bag-of-freebies" [32]. Finally, YOLOv8 was presented in 2023; its improvements include new features, better performance, flexibility, and efficiency, together with support for detection, segmentation, pose estimation, tracking, and classification [33].
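The grid-based formulation mentioned for YOLO can be sketched as follows. This is a simplified illustration of the idea in the original YOLO formulation, in which the grid cell containing the center of an object is responsible for predicting it; the function name `responsible_cell`, the 448 × 448 image, the 7 × 7 grid, and the example box are assumptions for illustration, and later YOLO versions encode their targets differently.

```python
def responsible_cell(box, image_w, image_h, grid_size=7):
    """Locate the grid cell that contains the center of a bounding box.

    box is (x_min, y_min, x_max, y_max) in pixels. Returns the cell's
    (row, col) plus a regression target (x_off, y_off, w, h), where the
    offsets are relative to the cell and w, h are relative to the image.
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2.0
    cy = (y_min + y_max) / 2.0
    cell_w = image_w / grid_size
    cell_h = image_h / grid_size
    # Clamp so a center on the far edge still falls in the last cell.
    col = min(int(cx / cell_w), grid_size - 1)
    row = min(int(cy / cell_h), grid_size - 1)
    x_off = cx / cell_w - col
    y_off = cy / cell_h - row
    w = (x_max - x_min) / image_w
    h = (y_max - y_min) / image_h
    return row, col, (x_off, y_off, w, h)


# Hypothetical weed bounding box in a 448 x 448 image.
row, col, target = responsible_cell((100, 200, 228, 328), 448, 448)
```

Because every cell outputs such numeric targets directly, the whole detection task reduces to a single regression pass over the image rather than one classification per candidate region.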

2. Methods

2.1. Research Question and Review Objectives

- Research question: What are the Deep Learning techniques based on Convolutional Neural Networks used for weed detection in agriculture?

- Main Objective: To identify the Deep Learning techniques that are employed in Convolutional Neural Networks for weed detection.

- Specific Objectives:

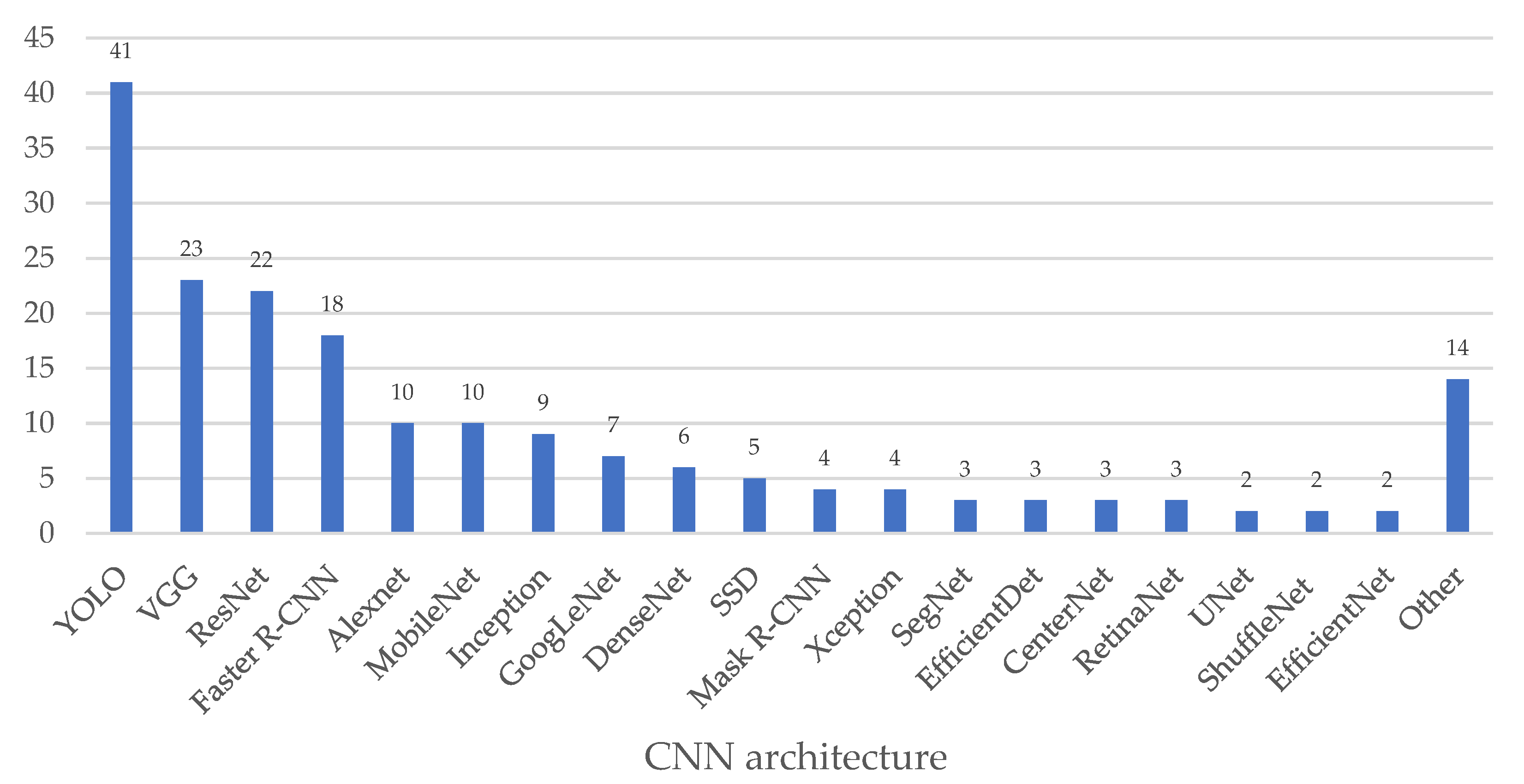

- To investigate the architectures of Convolutional Neural Networks employed in weed detection.

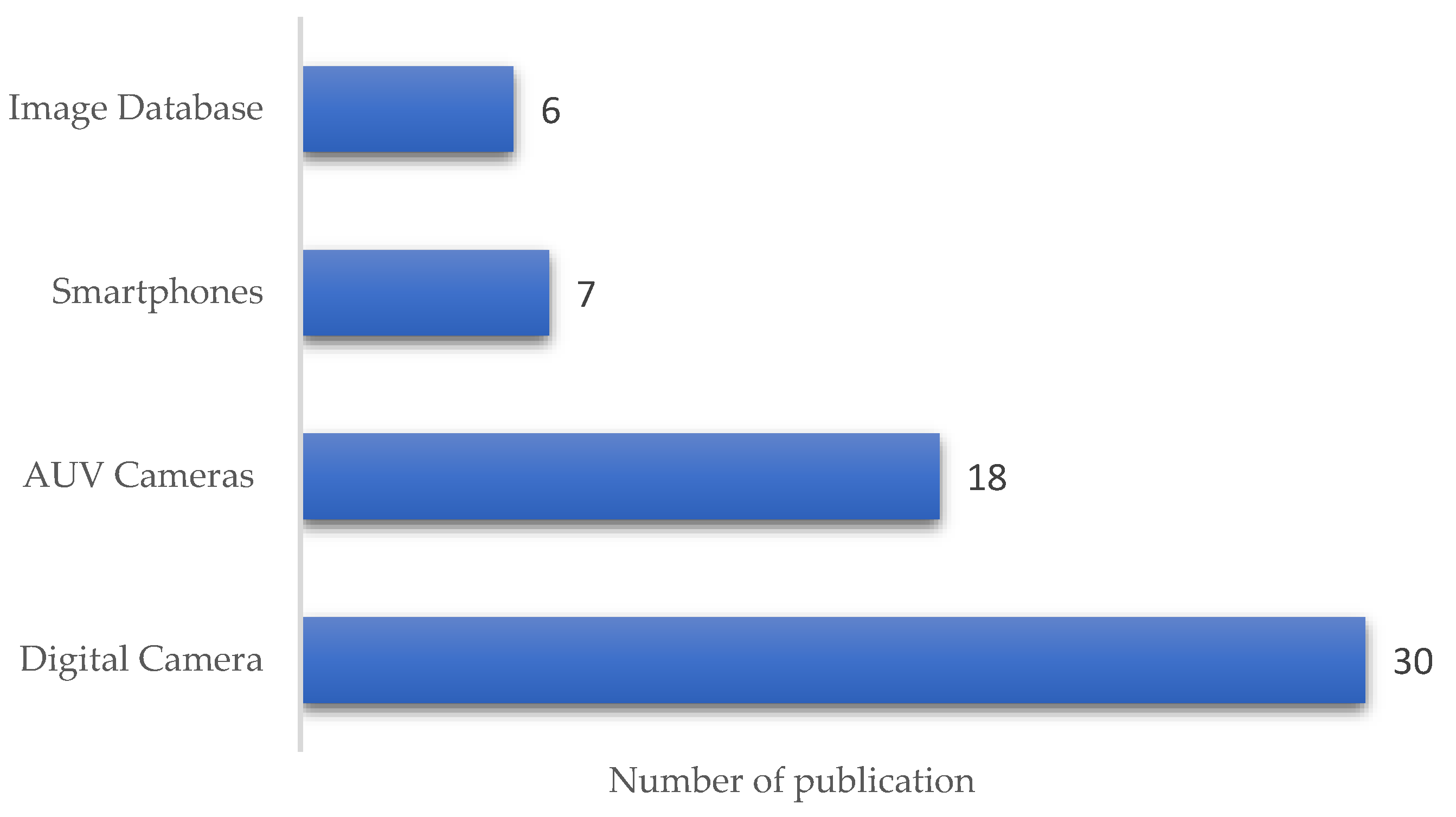

- To identify the technology used for weed identification in different forms of production.

2.2. Sources of Information

2.3. Search for Keywords

2.4. Inclusion and Exclusion Criteria

- The search field is selected where the search is directed through titles, abstracts, and keywords, among others; this is specific to each database:

- In Scopus, "search within Article title, Abstract, Keywords" was established.

- In Web of Science, the search was established in "Topic"; this includes title, abstract, author keywords and keywords plus.

- 2.

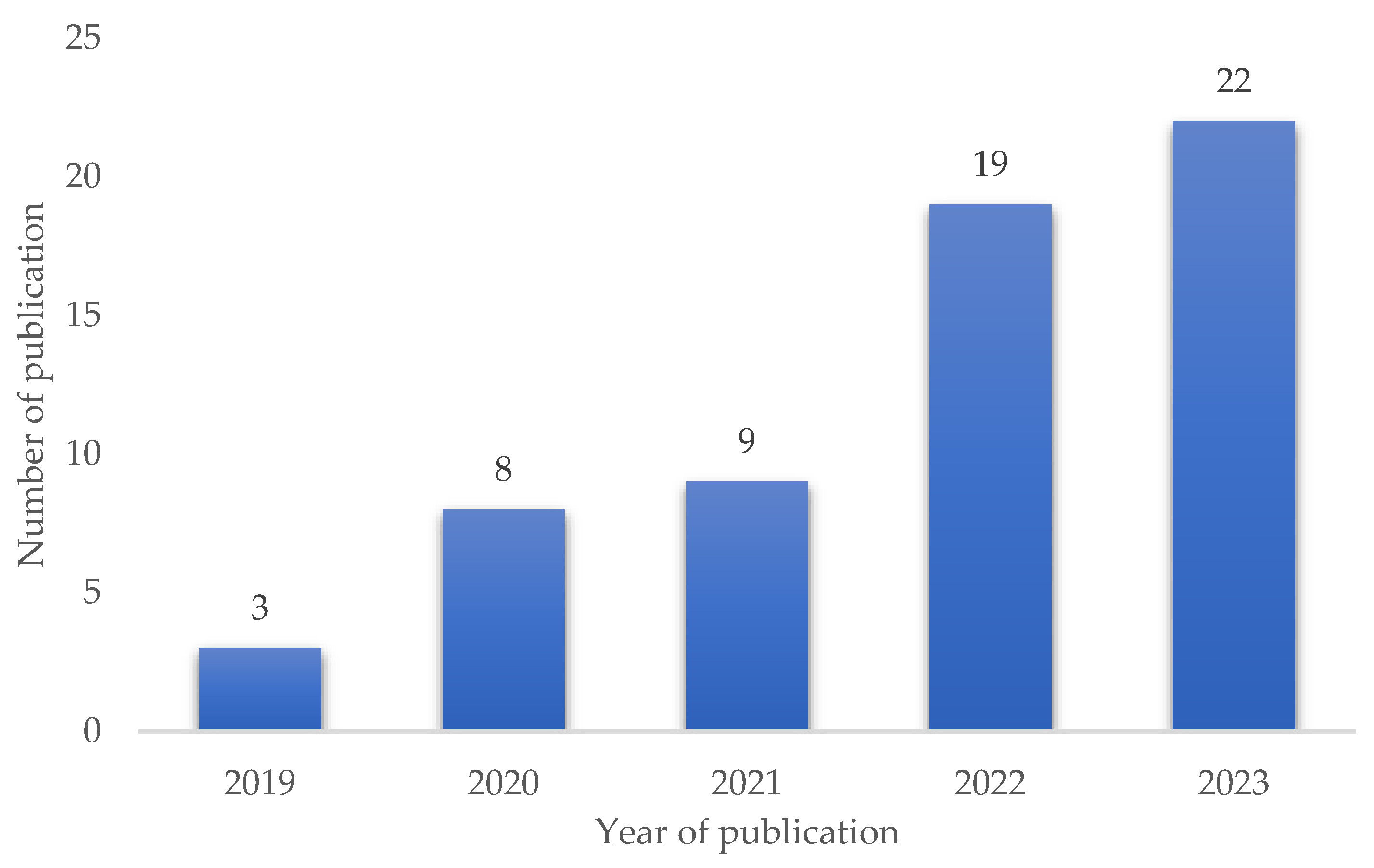

- The date range of the search is the last five years, from 2019 to 2023.

- 3.

- Document type: "Document type: Article".

- 4.

- Excluded are reviews, book chapters, narrative articles, conference or congress articles, unofficial notes or communications, and studies from other areas such as social, human, biological, chemical, legislative, social and economic impact.

- 5.

- Language: "English Language".
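The date, document-type, and language criteria above can be expressed as a simple filter. The sketch below is only an illustration: the `Record` fields, the function name `meets_criteria`, and the three example records are hypothetical, and real database exports use their own field names. The thematic exclusions (for example, studies from social or economic areas) require manual screening and are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Record:
    # Hypothetical fields; actual Scopus or WOS exports name them differently.
    title: str
    year: int
    doc_type: str
    language: str


def meets_criteria(record, first_year=2019, last_year=2023):
    """Apply the year range, document type, and language criteria."""
    return (
        first_year <= record.year <= last_year
        and record.doc_type.strip().lower() == "article"
        and record.language.strip().lower() == "english"
    )


# Hypothetical records, used only to exercise the filter.
records = [
    Record("Record A", 2021, "Article", "English"),
    Record("Record B", 2018, "Article", "English"),   # outside date range
    Record("Record C", 2022, "Review", "English"),    # excluded type
]
kept = [r for r in records if meets_criteria(r)]
```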

2.5. Search Equations in Bibliographic Databases

- Scopus: TITLE-ABS-KEY ("weed detection" AND "deep learning" AND "Convolutional Neural Networks")

- WOS: TS = ("weed detection" AND "deep learning" AND "Convolutional Neural Networks")
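Both search equations share the same three quoted phrases joined by AND and differ only in the field tag. As a small sketch, they can be generated from one keyword list so the two databases are always queried with identical terms. The helper name `build_queries` is an assumption, and the helper trims surrounding whitespace inside each quoted phrase.

```python
def build_queries(terms):
    """Build the Scopus and WOS search strings from a list of phrases."""
    phrase = " AND ".join(f'"{term.strip()}"' for term in terms)
    return {
        "Scopus": f"TITLE-ABS-KEY ({phrase})",
        "WOS": f"TS = ({phrase})",
    }


queries = build_queries(
    ["weed detection", "deep learning", "Convolutional Neural Networks"]
)
```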

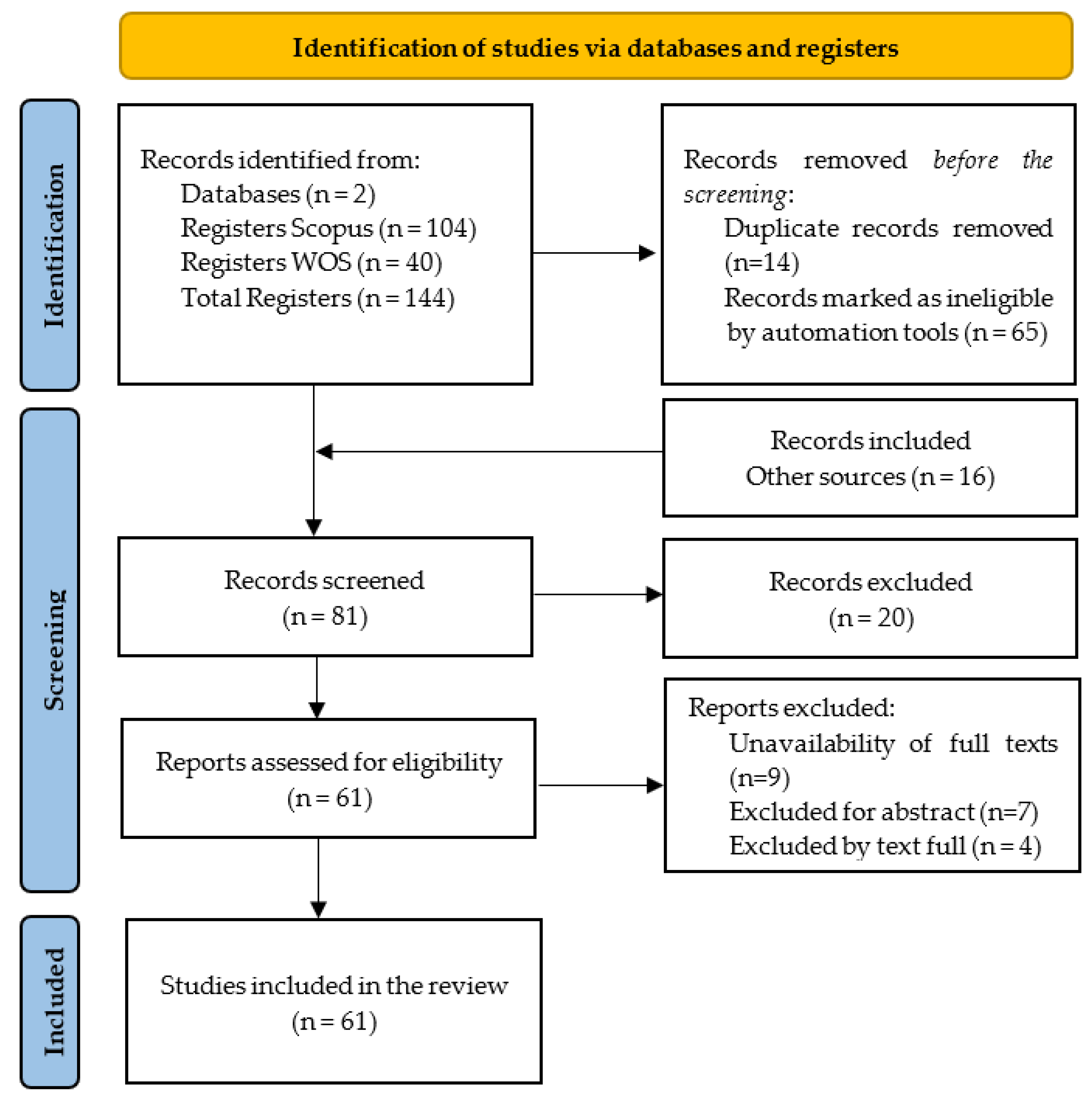

2.6. Initial Search Results

- Initial records: 104 from Scopus and 40 from WOS, for a total of 144 initial results.

- Records eliminated by exclusion criteria: 65 results were eliminated and 79 remained, 61 from Scopus and 18 from WOS.
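The record counts above can be checked with a short tally. The totals come from the figures reported in this section; the per-database number of eliminated records is not stated in the text and is derived here by subtraction.

```python
# Counts reported in the text.
initial = {"Scopus": 104, "WOS": 40}
remaining = {"Scopus": 61, "WOS": 18}

total_initial = sum(initial.values())        # 104 + 40
total_remaining = sum(remaining.values())    # 61 + 18
eliminated = total_initial - total_remaining

# Derived (not reported): records eliminated per database.
eliminated_by_db = {db: initial[db] - remaining[db] for db in initial}
```

The tally reproduces the reported 144 initial records, 79 remaining, and 65 eliminated, which implies 43 eliminations from Scopus and 22 from WOS.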

2.7. Duplicates and Screening

2.8. Additional Records

2.9. Records Excluded

3. Results

3.1. Literature Analysis

3.2. Technology for Image Acquisition

3.3. CNN Architecture Used

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- World Population Prospects 2022 Data Sources; 2022;

- Rajcan, I.; Swanton, C.J. Understanding Maize–Weed Competition: Resource Competition, Light Quality and the Whole Plant. Field Crops Res 2001, 71, 139–150. [CrossRef]

- Iqbal, N.; Manalil, S.; Chauhan, B.S.; Adkins, S.W. Investigation of Alternate Herbicides for Effective Weed Management in Glyphosate-Tolerant Cotton. Arch Agron Soil Sci 2019, 65, 1885–1899. [CrossRef]

- Vilà, M.; Williamson, M.; Lonsdale, M. Competition Experiments on Alien Weeds with Crops: Lessons for Measuring Plant Invasion Impact?; 2004; Vol. 6; [CrossRef]

- Holt, J.S. Principles of Weed Management in Agroecosystems and Wildlands. Weed Technology 2004, 18, 1559–1562. [CrossRef]

- Liu, W.; Xu, L.; Xing, J.; Shi, L.; Gao, Z.; Yuan, Q. Research Status of Mechanical Intra-Row Weed Control in Row Crops. Journal of Agricultural Mechanization Research 2017, 33, 243–250.

- Liu, B.; Bruch, R. Weed Detection for Selective Spraying: A Review. Current Robotics Reports 2020, 1, 19–26. [CrossRef]

- Hasan, A.S.M.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G.K. A Survey of Deep Learning Techniques for Weed Detection from Images. Comput Electron Agric 2021, 184. [CrossRef]

- Al-Badri, A.H.; Ismail, N.A.; Al-Dulaimi, K.; Salman, G.A.; Khan, A.R.; Al-Sabaawi, A.; Salam, M.S.H. Classification of Weed Using Machine Learning Techniques: A Review—Challenges, Current and Future Potential Techniques. Journal of Plant Diseases and Protection 2022, 129, 745–768. [CrossRef]

- Rai, N.; Zhang, Y.; Ram, B.G.; Schumacher, L.; Yellavajjala, R.K.; Bajwa, S.; Sun, X. Applications of Deep Learning in Precision Weed Management: A Review. Comput Electron Agric 2023, 206. [CrossRef]

- Mahmudul Hasan, A.S.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G.K. Weed Recognition Using Deep Learning Techniques on Class-Imbalanced Imagery. Crop Pasture Sci 2022. [CrossRef]

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A Compilation of UAV Applications for Precision Agriculture. Computer Networks 2020, 172, 107148. [CrossRef]

- Chen, D.; Lu, Y.; Li, Z.; Young, S. Performance Evaluation of Deep Transfer Learning on Multi-Class Identification of Common Weed Species in Cotton Production Systems. Comput Electron Agric 2022, 198, 107091. [CrossRef]

- Olsen, A.; Konovalov, D.A.; Philippa, B.; Ridd, P.; Wood, J.C.; Johns, J.; Banks, W.; Girgenti, B.; Kenny, O.; Whinney, J.; et al. DeepWeeds: A Multiclass Weed Species Image Dataset for Deep Learning. Sci Rep 2019, 9, 2058. [CrossRef]

- Suh, H.K.; IJsselmuiden, J.; Hofstee, J.W.; van Henten, E.J. Transfer Learning for the Classification of Sugar Beet and Volunteer Potato under Field Conditions. Biosyst Eng 2018, 174, 50–65. [CrossRef]

- Wu, H.; Wang, Y.; Zhao, P.; Qian, M. Small-Target Weed-Detection Model Based on YOLO-V4 with Improved Backbone and Neck Structures. Precis Agric 2023, 24, 2149–2170. [CrossRef]

- Subeesh, A.; Bhole, S.; Singh, K.; Chandel, N.S.; Rajwade, Y.A.; Rao, K.V.R.; Kumar, S.P.; Jat, D. Deep Convolutional Neural Network Models for Weed Detection in Polyhouse Grown Bell Peppers. Artificial Intelligence in Agriculture 2022, 6, 47–54. [CrossRef]

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A Comprehensive Survey on Transfer Learning. 2019. [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks; 2012; [CrossRef]

- Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Fountas, S.; Vasilakoglou, I. Towards Weeds Identification Assistance through Transfer Learning. Comput Electron Agric 2020, 171. [CrossRef]

- Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A Survey on Deep Transfer Learning. 2018. [CrossRef]

- Yan, X.; Deng, X.; Jin, J. Classification of Weed Species in the Paddy Field with DCNN-Learned Features. In Proceedings of the 2020 IEEE 5th Information Technology and Mechatronics Engineering Conference (ITOEC); 2020; pp. 336–340. [CrossRef]

- Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Vali, E.; Fountas, S. Combining Generative Adversarial Networks and Agricultural Transfer Learning for Weeds Identification. Biosyst Eng 2021, 204, 79–89. [CrossRef]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. 2014. [CrossRef]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. 2014. [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition; 2015; [CrossRef]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV); 2015; pp. 1440–1448. [CrossRef]

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. 2016. [CrossRef]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. 2017. [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. 2015. [CrossRef]

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; NanoCode012; Kwon, Y.; Michael, K.; TaoXie; Fang, J.; imyhxy; et al. Ultralytics/Yolov5: V7.0 - YOLOv5 SOTA Realtime Instance Segmentation 2022. [CrossRef]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. 2022. [CrossRef]

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics 2023.

- Urrútia, G.; Bonfill, X. PRISMA Declaration: A Proposal to Improve the Publication of Systematic Reviews and Meta-Analyses. Med Clin (Barc) 2010, 135, 507–511. [CrossRef]

- Elnemr, H.A. Convolutional Neural Network Architecture for Plant Seedling Classification. International Journal of Advanced Computer Science and Applications 2019, 10, 319–325. [CrossRef]

- Rasti, P.; Ahmad, A.; Samiei, S.; Belin, E.; Rousseau, D. Supervised Image Classification by Scattering Transform with Application to Weed Detection in Culture Crops of High Density. Remote Sens (Basel) 2019, 11. [CrossRef]

- Yu, J.; Schumann, A.W.; Cao, Z.; Sharpe, S.M.; Boyd, N.S. Weed Detection in Perennial Ryegrass With Deep Learning Convolutional Neural Network. Front Plant Sci 2019, 10. [CrossRef]

- Asad, M.H.; Bais, A. Weed Detection in Canola Fields Using Maximum Likelihood Classification and Deep Convolutional Neural Network. Information Processing in Agriculture 2020, 7, 535–545. [CrossRef]

- Bah, M.D.; Hafiane, A.; Canals, R. CRowNet: Deep Network for Crop Row Detection in UAV Images. IEEE Access 2020, 8, 5189–5200. [CrossRef]

- Gao, J.; French, A.P.; Pound, M.P.; He, Y.; Pridmore, T.P.; Pieters, J.G. Deep Convolutional Neural Networks for Image-Based Convolvulus Sepium Detection in Sugar Beet Fields. Plant Methods 2020, 16. [CrossRef]

- Gupta, K.; Rani, R.; Bahia, N.K. Plant-Seedling Classification Using Transfer Learning-Based Deep Convolutional Neural Networks. International Journal of Agricultural and Environmental Information Systems 2020, 11, 25–40. [CrossRef]

- Mora-Fallas, A.; Goeau, H.; Joly, A.; Bonnet, P.; Mata-Montero, E. Instance Segmentation for Automated Weeds and Crops Detection in Farmlands. Tecnología en Marcha 2020, 33, 13–17. [CrossRef]

- Osorio, K.; Puerto, A.; Pedraza, C.; Jamaica, D.; Rodríguez, L. A Deep Learning Approach for Weed Detection in Lettuce Crops Using Multispectral Images. AgriEngineering 2020, 2, 471–488. [CrossRef]

- Parico, A.I.B.; Ahamed, T. An Aerial Weed Detection System for Green Onion Crops Using the You Only Look Once (YOLOv3) Deep Learning Algorithm. Engineering in Agriculture, Environment and Food 2020, 13, 42–48. [CrossRef]

- Sivakumar, A.N.V.; Li, J.; Scott, S.; Psota, E.; Jhala, A.J.; Luck, J.D.; Shi, Y. Comparison of Object Detection and Patch-Based Classification Deep Learning Models on Mid- to Late-Season Weed Detection in UAV Imagery. Remote Sens (Basel) 2020, 12. [CrossRef]

- Haq, M.A. CNN Based Automated Weed Detection System Using UAV Imagery. Computer Systems Science and Engineering 2021, 42, 837–849. [CrossRef]

- Hennessy, P.J.; Esau, T.J.; Farooque, A.A.; Schumann, A.W.; Zaman, Q.U.; Corscadden, K.W. Hair Fescue and Sheep Sorrel Identification Using Deep Learning in Wild Blueberry Production. Remote Sens (Basel) 2021, 13, 1–18. [CrossRef]

- Hu, C.; Thomasson, J.A.; Bagavathiannan, M.V. A Powerful Image Synthesis and Semi-Supervised Learning Pipeline for Site-Specific Weed Detection. Comput Electron Agric 2021, 190. [CrossRef]

- Jabir, B.; Falih, N.; Rahmani, K. Accuracy and Efficiency Comparison of Object Detection Open-Source Models. International Journal of Online and Biomedical Engineering 2021, 17, 165–184. [CrossRef]

- Khan, S.; Tufail, M.; Khan, M.T.; Khan, Z.A.; Anwar, S. Deep Learning-Based Identification System of Weeds and Crops in Strawberry and Pea Fields for a Precision Agriculture Sprayer. Precis Agric 2021, 22, 1711–1727. [CrossRef]

- Moazzam, S.I.; Khan, U.S.; Qureshi, W.S.; Tiwana, M.I.; Rashid, N.; Alasmary, W.S.; Iqbal, J.; Hamza, A. A Patch-Image Based Classification Approach for Detection of Weeds in Sugar Beet Crop. IEEE Access 2021, 9, 121698–121715. [CrossRef]

- Urmashev, B.; Buribayev, Z.; Amirgaliyeva, Z.; Ataniyazova, A.; Zhassuzak, M.; Turegali, A. Development of a Weed Detection System Using Machine Learning and Neural Network Algorithms. Eastern-European Journal of Enterprise Technologies 2021, 6. [CrossRef]

- Xie, S.; Hu, C.; Bagavathiannan, M.; Song, D. Toward Robotic Weed Control: Detection of Nutsedge Weed in Bermudagrass Turf Using Inaccurate and Insufficient Training Data. IEEE Robot Autom Lett 2021, 6, 7365–7372. [CrossRef]

- Xu, K.; Zhu, Y.; Cao, W.; Jiang, X.; Jiang, Z.; Li, S.; Ni, J. Multi-Modal Deep Learning for Weeds Detection in Wheat Field Based on RGB-D Images. Front Plant Sci 2021, 12. [CrossRef]

- Al-Badri, A.H.; Ismail, N.A.; Al-Dulaimi, K.; Rehman, A.; Abunadi, I.; Bahaj, S.A. Hybrid CNN Model for Classification of Rumex Obtusifolius in Grassland. IEEE Access 2022, 10, 90940–90957. [CrossRef]

- Babu, V.S.; Ram, N.V. Deep Residual CNN with Contrast Limited Adaptive Histogram Equalization for Weed Detection in Soybean Crops. Traitement du Signal 2022, 39, 717–722. [CrossRef]

- Chen, J.; Wang, H.; Zhang, H.; Luo, T.; Wei, D.; Long, T.; Wang, Z. Weed Detection in Sesame Fields Using a YOLO Model with an Enhanced Attention Mechanism and Feature Fusion. Comput Electron Agric 2022, 202. [CrossRef]

- G C, S.; Koparan, C.; Ahmed, M.R.; Zhang, Y.; Howatt, K.; Sun, X. A Study on Deep Learning Algorithm Performance on Weed and Crop Species Identification under Different Image Background. Artificial Intelligence in Agriculture 2022, 6, 242–256. [CrossRef]

- Hennessy, P.J.; Esau, T.J.; Schumann, A.W.; Zaman, Q.U.; Corscadden, K.W.; Farooque, A.A. Evaluation of Cameras and Image Distance for CNN-Based Weed Detection in Wild Blueberry. Smart Agricultural Technology 2022, 2. [CrossRef]

- Jabir, B.; Falih, N. Deep Learning-Based Decision Support System for Weeds Detection in Wheat Fields. International Journal of Electrical and Computer Engineering 2022, 12, 816–825. [CrossRef]

- Liu, S.; Jin, Y.; Ruan, Z.; Ma, Z.; Gao, R.; Su, Z. Real-Time Detection of Seedling Maize Weeds in Sustainable Agriculture. Sustainability (Switzerland) 2022, 14. [CrossRef]

- Mohammed, H.; Tannouche, A.; Ounejjar, Y. Weed Detection in Pea Cultivation with the Faster RCNN ResNet 50 Convolutional Neural Network. Revue d’Intelligence Artificielle 2022, 36, 13–18. [CrossRef]

- Nasiri, A.; Omid, M.; Taheri-Garavand, A.; Jafari, A. Deep Learning-Based Precision Agriculture through Weed Recognition in Sugar Beet Fields. Sustainable Computing: Informatics and Systems 2022, 35. [CrossRef]

- Razfar, N.; True, J.; Bassiouny, R.; Venkatesh, V.; Kashef, R. Weed Detection in Soybean Crops Using Custom Lightweight Deep Learning Models. J Agric Food Res 2022, 8. [CrossRef]

- Saboia, H. de S.; Mion, R.L.; Silveira, A. de O.; Mamiya, A.A. Real-Time Selective Spraying for Viola Rope Control in Soybean and Cotton Crops Using Deep Learning. Engenharia Agrícola 2022, 42. [CrossRef]

- Saleem, M.H.; Potgieter, J.; Arif, K.M. Weed Detection by Faster RCNN Model: An Enhanced Anchor Box Approach. Agronomy 2022, 12, 1580. [CrossRef]

- Saleem, M.H.; Velayudhan, K.K.; Potgieter, J.; Arif, K.M. Weed Identification by Single-Stage and Two-Stage Neural Networks: A Study on the Impact of Image Resizers and Weights Optimization Algorithms. Front Plant Sci 2022, 13. [CrossRef]

- Sapkota, B.B.; Hu, C.; Bagavathiannan, M.V. Evaluating Cross-Applicability of Weed Detection Models Across Different Crops in Similar Production Environments. Front Plant Sci 2022, 13. [CrossRef]

- Sapkota, B.B.; Popescu, S.; Rajan, N.; Leon, R.G.; Reberg-Horton, C.; Mirsky, S.; Bagavathiannan, M.V. Use of Synthetic Images for Training a Deep Learning Model for Weed Detection and Biomass Estimation in Cotton. Sci Rep 2022, 12. [CrossRef]

- Subeesh, A.; Bhole, S.; Singh, K.; Chandel, N.S.; Rajwade, Y.A.; Rao, K.V.R.; Kumar, S.P.; Jat, D. Deep Convolutional Neural Network Models for Weed Detection in Polyhouse Grown Bell Peppers. Artificial Intelligence in Agriculture 2022, 6, 47–54. [CrossRef]

- Tannouche, A.; Gaga, A.; Boutalline, M.; Belhouideg, S. Weeds Detection Efficiency through Different Convolutional Neural Networks Technology. International Journal of Electrical and Computer Engineering 2022, 12, 1048–1055. [CrossRef]

- Valente, J.; Hiremath, S.; Ariza-Sentís, M.; Doldersum, M.; Kooistra, L. Mapping of Rumex Obtusifolius in Nature Conservation Areas Using Very High Resolution UAV Imagery and Deep Learning. International Journal of Applied Earth Observation and Geoinformation 2022, 112. [CrossRef]

- Yang, J.; Bagavathiannan, M.; Wang, Y.; Chen, Y.; Yu, J. A Comparative Evaluation of Convolutional Neural Networks, Training Image Sizes, and Deep Learning Optimizers for Weed Detection in Alfalfa. Weed Technology 2022, 36, 512–522. [CrossRef]

- Ajayi, O.G.; Ashi, J. Effect of Varying Training Epochs of a Faster Region-Based Convolutional Neural Network on the Accuracy of an Automatic Weed Classification Scheme. Smart Agricultural Technology 2023, 3, 100128. [CrossRef]

- Almalky, A.M.; Ahmed, K.R. Deep Learning for Detecting and Classifying the Growth Stages of Consolida Regalis Weeds on Fields. Agronomy 2023, 13. [CrossRef]

- Arif, S.; Hussain, R.; Ansari, N.M.; Rauf, W. A Novel Hybrid Feature Method for Weeds Identification in the Agriculture Sector. Research in Agricultural Engineering 2023, 69, 132–142. [CrossRef]

- Bidve, V.; Mane, S.; Tamkhade, P.; Pakle, G. Weed Detection by Using Image Processing. Indonesian Journal of Electrical Engineering and Computer Science 2023, 30, 341–349. [CrossRef]

- Devi, B.S.; Sandhya, N.; Chatrapati, K.S. WeedFocusNet: A Revolutionary Approach Using the Attention-Driven ResNet152V2 Transfer Learning. International Journal on Recent and Innovation Trends in Computing and Communication 2023, 11, 334–341. [CrossRef]

- Fan, X.; Chai, X.; Zhou, J.; Sun, T. Deep Learning Based Weed Detection and Target Spraying Robot System at Seedling Stage of Cotton Field. Comput Electron Agric 2023, 214. [CrossRef]

- Gallo, I.; Rehman, A.U.; Dehkordi, R.H.; Landro, N.; La Grassa, R.; Boschetti, M. Deep Object Detection of Crop Weeds: Performance of YOLOv7 on a Real Case Dataset from UAV Images. Remote Sens (Basel) 2023, 15, 539. [CrossRef]

- Husham Al-Badri, A.; Azman Ismail, N.; Al-Dulaimi, K.; Ahmed Salman, G.; Sah Hj Salam, M. Adaptive Non-Maximum Suppression for Improving Performance of Rumex Detection. Expert Syst Appl 2023, 219. [CrossRef]

- Janneh, L.L.; Zhang, Y.; Cui, Z.; Yang, Y. Multi-Level Feature Re-Weighted Fusion for the Semantic Segmentation of Crops and Weeds. Journal of King Saud University - Computer and Information Sciences 2023, 35, 101545. [CrossRef]

- Jin, X.; Liu, T.; McCullough, P.E.; Chen, Y.; Yu, J. Evaluation of Convolutional Neural Networks for Herbicide Susceptibility-Based Weed Detection in Turf. Front Plant Sci 2023, 14. [CrossRef]

- Kansal, I.; Khullar, V.; Verma, J.; Popli, R.; Kumar, R. IoT-Fog-Enabled Robotics-Based Robust Classification of Hazy and Normal Season Agricultural Images for Weed Detection. Paladyn 2023, 14. [CrossRef]

- Modi, R.U.; Kancheti, M.; Subeesh, A.; Raj, C.; Singh, A.K.; Chandel, N.S.; Dhimate, A.S.; Singh, M.K.; Singh, S. An Automated Weed Identification Framework for Sugarcane Crop: A Deep Learning Approach. Crop Protection 2023, 173. [CrossRef]

- Moreno, H.; Gómez, A.; Altares-López, S.; Ribeiro, A.; Andújar, D. Analysis of Stable Diffusion-Derived Fake Weeds Performance for Training Convolutional Neural Networks. Comput Electron Agric 2023, 214. [CrossRef]

- Ong, P.; Teo, K.S.; Sia, C.K. UAV-Based Weed Detection in Chinese Cabbage Using Deep Learning. Smart Agricultural Technology 2023, 4. [CrossRef]

- Patel, J.; Ruparelia, A.; Tanwar, S.; Alqahtani, F.; Tolba, A.; Sharma, R.; Raboaca, M.S.; Neagu, B.C. Deep Learning-Based Model for Detection of Brinjal Weed in the Era of Precision Agriculture. Computers, Materials and Continua 2023, 77, 1281–1301. [CrossRef]

- Rajeena, P.P.F.; Ismail, W.N.; Ali, M.A.S. A Metaheuristic Harris Hawks Optimization Algorithm for Weed Detection Using Drone Images. Applied Sciences (Basel) 2023, 13. [CrossRef]

- Reddy, B.S.; Neeraja, S. An Optimal Superpixel Segmentation Based Transfer Learning Using AlexNet–SVM Model for Weed Detection. International Journal of System Assurance Engineering and Management 2023. [CrossRef]

- Saqib, M.A.; Aqib, M.; Tahir, M.N.; Hafeez, Y. Towards Deep Learning Based Smart Farming for Intelligent Weeds Management in Crops. Front Plant Sci 2023, 14. [CrossRef]

- Shahi, T.B.; Dahal, S.; Sitaula, C.; Neupane, A.; Guo, W. Deep Learning-Based Weed Detection Using UAV Images: A Comparative Study. Drones 2023, 7. [CrossRef]

- Singh, V.; Gourisaria, M.K.; Harshvardhan, G.M.; Choudhury, T. Weed Detection in Soybean Crop Using Deep Neural Network. Pertanika J Sci Technol 2023, 31, 401–423. [CrossRef]

- Yu, H.; Che, M.; Yu, H.; Ma, Y. Research on Weed Identification in Soybean Fields Based on the Lightweight Segmentation Model DCSAnet. Front Plant Sci 2023, 14. [CrossRef]

- Zhuang, J.; Jin, X.; Chen, Y.; Meng, W.; Wang, Y.; Yu, J.; Muthukumar, B. Drought Stress Impact on the Performance of Deep Convolutional Neural Networks for Weed Detection in Bahiagrass. Grass and Forage Science 2023, 78, 214–223. [CrossRef]

| No. | Year | Reference | CNN architecture | Technology | Species |

|---|---|---|---|---|---|

| 1 | 2019 | [35] | Specifically designed CNN | Camera Canon 600D uses a dataset group from Aarhus University in collaboration with Southern Denmark University. | Black grass (Alopecurus myosuroides), Charlock (Sinapis arvensis), Cleavers (Galium aparine), Chickweed (Stellaria media), Common wheat (Triticum aestivum), Fat hen (Chenopodium album), Loose silky-bent (Apera spica-venti) and Maize (Zea mays), Scentless mayweed (Tripleurospermum perforatum), Shepherd's purse (Capsella bursapastoris), Small-flowered Cranesbill (Geranium pusillum), Sugar beet (Beta vulgaris) |

| 2 | 2019 | [36] | Design-specific CNN with SVM | 20 MP JAI camera with a spatial resolution of 5120 × 3840 pixels, mounted with a 35 mm lens. | Mache lettuce (Valerianella locusta f.) |

| 3 | 2019 | [37] | AlexNet, GoogLeNet and VGGNet | Sony Cyber-Shot camera and a Canon EOS Rebel T6 digital camera | Perennial ryegrass, dandelion (Taraxacum officinale Web.), ground ivy (Glechoma hederacea L.), and spotted spurge (Euphorbia maculata L.) |

| 4 | 2020 | [38] | SegNet, UNet, VGG16 and ResNet-50. | Nikon D610 Quad Camera | Canola (Brassica napus) |

| 5 | 2020 | [39] | CRowNet is based on SegNet and the Hough transform | Parrot RedEdge -M multispectral camera | Beet (Beta vulgaris) and Corn (Zea mays) |

| 6 | 2020 | [40] | YOLOv3 and YOLOv3-Tiny | Camera Nikon D7200 | Sugar beet (Beta vulgaris subsp) and C. sepium |

| 7 | 2020 | [41] | ResNet50, VGG16, VGG19, Xception and MobileNetV2. | Camera Canon 600D uses the dataset group of the Aarhus University | Sugar Beet, Black grass, Charlock, Cleavers, Common Chickweed, Common Wheat, Fat Hen, Loosy Silky-bent, Maize, Scentless Mayweed, Shepherd's purse and Small-flowered cranesbill |

| 8 | 2020 | [42] | Mask R-CNN | Unspecified digital cameras and smartphones | Zea mays (corn), Phaseolus vulgaris (green bean), Brassica nigra, Matricaria chamomilla, Lolium perenne, and Chenopodium album |

| 9 | 2020 | [43] | YOLOv3, Mask R-CNN, and CNN with SVM-HOG (histograms of oriented gradients) | Mavic Pro with the Parrot Sequoia multispectral camera | Lettuce (Lactuca sativa) |

| 10 | 2020 | [44] | YOLO-WEED, based on YOLOv3 | DJI Phantom 3 Pro | Green Onion (Allium fistulosum) |

| 11 | 2020 | [45] | Faster R-CNN and Single Shot Detector (SSD) | DJI Matrice 600 pro with Zenmuse X5R camera | soybean leaves (Glycine max), water hemp (Amaranthus tuberculatus), Palmer amaranthus (Amaranthus palmeri), common lamb's quarters (Chenopodium album), velvetleaf (Abutilon theophrasti) and foxtail species. |

| 12 | 2021 | [46] | CNN-LVQ-specific design based on Learning Vector Quantization (LVQ) | DJI Phantom 3 Professional | Soybean (Glycine max), grass, and broadleaf weeds |

| 13 | 2021 | [47] | YOLOv3, YOLOv3-Tiny and YOLOv3-Tiny-PRN | Digital cameras with resolutions between 4,000 × 3,000 and 6,000 × 4,000 pixels, not specified | Hair Fescue, Sheep Sorrel, and Blueberry (Vaccinium sect. Cyanococcus) |

| 14 | 2021 | [48] | Faster R-CNN and ResNet50 | Unspecified top digital camera | Cotton (Gossypium hirsutum), Bellflower (Ipomoea spp .), Palmer Amaranth (Amaranthus palmeri), prostrate spurge (Euphorbia maculata) and Soybean (Glycine max). |

| 15 | 2021 | [49] | Detectron2, EfficientDet, YOLOv5, and Faster R-CNN. | Camera Nikon 7000 professional | Phalaris Paradoxa and Convolvulus |

| 16 | 2021 | [50] | Faster R-CNN, ResNet-101, VGG16 and Yolov3 | DJI Spark with an onboard camera with1/2.3" CMOS sensor, 12-megapixel and FOV 81.9° 25 mm f/2.6 lens | Peas (Pisum sativum), strawberries (Fragaria ananassa), and prickly grass (Eleusine indica) |

| 17 | 2021 | [51] | VGG- Beet based on VGG16 | DJI Phantom 4 | Sugar beet (Beta vulgaris subsp) |

| 18 | 2021 | [52] | YOLOv5 and Classic K-Nearest Neighbors, Random Forest, and Decision Algorithms tree | Uses Non-specific Dataset | Ambrosia, Amaranthus, Bindweed, Bromus and Quinoa |

| 19 | 2021 | [53] | Faster R-CNN and Mask R-CNN | Cameras NikonTM D3300 and Canon EOS Rebel T7 | Nutsedge weed and Bermudagrass Turf |

| 20 | 2021 | [54] | Faster R-CNN and VGG16 | RealSense D415 Depth Camera (99mm × 20mm × 23mm) | Wheat (Triticum aestivum L.), Alopecurus aequalis, Poa annua, Bromus japonicus, E. crusgalli, Amaranthus retroflexus, and C. bursa-pastoris |

| 21 | 2022 | [55] | VGG16, ResNet-50 and Inception-V3 | Camera SONY Cybershot DSC-60 | Rumex obtusifolius |

| 22 | 2022 | [56] | AlexNet vs VGG-16 | Unspecified drone camera | Soybean (Glycine max), broadleaf weeds and grasses. |

| 23 | 2022 | [57] | YOLOv4, YOLO- sesame. | DahengMER-132-43U3C-l camera, 1/3” CCD type sensor, with a resolution of 1292 × 964 | Sesame (Sesamum indicum) |

| 24 | 2022 | [58] | VGG16 and ResNet16 | Canon EOS T7 digital cameras | Horseweed, Palmer Amaranth, Redroot Pigweed, Ragweed, Waterhemp, Canola, Kochia and Sugar beets |

| 25 | 2022 | [59] | YOLOv3-Tiny | Canon T6 DSLR 7 camera, LG G6 smartphone, and Logitech c920 camera | Blueberries (Vaccinium corymbosum) |

| 26 | 2022 | [60] | YOLOv5 | Nikon D7200 Digital Single Lens Reflex (DSLR) Camera | Wheat (Triticum), Monocotylodone weed (Convolvulus), and Dicotyledonous weed (Phalaris) |

| 27 | 2022 | [61] | Faster R-CNN, SSD, YOLOv3, YOLOv3-tiny and YOLOv4-tiny | phone with a 12-megapixel resolution | Corn (Zea mays) |

| 28 | 2022 | [62] | Faster R-CNN and ResNet | Huawei Y7 prime digital camera phone | Pea (Pisum sativum) |

| 29 | 2022 | [63] | UNet based on ResNet50 | FotoClip 2164 digital camera 480 × 640-pixel resolution | Beta vulgaris subsp and weed |

| 30 | 2022 | [64] | MobileNetV2, ResNet50 | DJI Phantom 3 Professional - Uses dataset | soybean (Glycine max), grass, and broadleaf weeds |

| 31 | 2022 | [65] | YOLO-v3 and faster R-CNN | Nikon P250 semi-professional camera | Soybean (Glycine max), cotton (Gossypium), and Viola Rope |

| 32 | 2022 | [66] | Faster R-CNN, ResNet-101, YOLOv4, SSD-Inception-v2, MobileNet, ResNet-50, EfficientDet and CenterNet | Uses Datasets DeepWeeds | Chinee Apple, Lantana, Prickly Acacia, Parthenium, Parkinsonia, Rubber vine, Siam Weed, Snake Weed |

| 33 | 2022 | [67] | SSD- MobileNet, SSD-InceptionV2, Faster R-CNN, CenterNet, EfficientDet, RetinaNet and YOLOv4 | Uses Datasets DeepWeeds | Chinee Apple, Lantana, Negative, Prickly Acacia, Parthenium, Parkinsonia, Rubber vine, Siam Weed and Snake Weed |

| 34 | 2022 | [68] | YOLOv4 and Faster R-CNN. | FUJIFILM GFX100 100-megapixel camera with a multi-copter drone Hylio AG-110 | Cotton (Gossypium), Soybean leaves (Glycine max), bluebells (Ipomoea spp .) composed of tall bluebells (Ipomoea purpurea) and ivy-leaf bluebells (Ipomoea hederacea), Texas millet (Urochloa texana), johnsongrass (Sorghum halepense), Palmer amaranth (Amaranthus palmeri), the prostrate spurge (Euphorbia humistrata) and brown panic (Panicum fasiculatum). |

| 35 | 2022 | [69] | Mask R-CNN and GAN | FUJIFILM GFX100 100-megapixel camera with a multi-copter drone Hylio AG-110 | Cotton (Gossypium) and grass weeds |

| 36 | 2022 | [70] | Alexnet, GoogLeNet, InceptionV3, Xception | Xiaomi Mi 11x mobile device camera, 48 MP, f/1.8, 26 mm (wide angle) | Peppers (Capsicum annuum) |

| 37 | 2022 | [71] | VGGNet (16 and 19), GoogLeNet (Inception V3 and V4) and MobileNet (V1 and V2) | Unspecified tractor-mounted digital cameras | Bean (Phaseolus vulgaris) and Beet (Beta vulgaris) |

| 38 | 2022 | [72] | VGG, ResNet, DenseNet, ShuffleNet, MobileNet, EfficientNet and MNASNet | DJI Phantom 3 Professional | Rumex obtusifolius |

| 39 | 2022 | [73] | AlexNet, GoogLeNet, VGGNet and ResNet | Panasonic DMC-ZS110 Digital Camera | Alfalfa (Medicago sativa), broadleaf weeds and grasses |

| 40 | 2023 | [74] | Faster R-CNN | DJI Phantom 4 equipped with a 12-megapixel RGB camera | Saccharum officinarum, Spinacia oleracea, Capsicum annuum, Musa paradisiaca and Weeds |

| 41 | 2023 | [75] | YOLOv5, RetinaNet, and Faster R-CNN | UAV digital camera, 1″ CMOS sensor, 20 million effective pixels, still image size 5472 × 3648 | Consolida regalis |

| 42 | 2023 | [76] | Hybrid CNN, AlexNet, GoogLeNet, VGGNet, ResNet, and GAN | Raspberry Pi-3 with a Pi camera of version 2.1 | Beetroot, Rice, Siam weed, Parkinsonia, and Chinese snakeweed Manzana |

| 43 | 2023 | [77] | VGGNet, VGG16, VGG19 and SVM | Uses the agri_data dataset available on Kaggle | Falsethistle grass, Pecan (Carya illinoinensis) |

| 44 | 2023 | [78] | WeedFocusNet based on ResNet152v2 | Uses an unspecified dataset | Soybean (Glycine max), broadleaf weed |

| 45 | 2023 | [79] | Faster R-CNN and VGG16 | SONY IMX386 camera and an Honor Play mobile phone | Cotton thistle, purslane, Solanum nigrum, Sclerochloa dura, Sonchus oleraceus, Salsola collina Pall., Chenopodium album, and Convolvulus |

| 46 | 2023 | [80] | YOLOv7, YOLOv7-m, YOLOv7-x, YOLOv7-w6, YOLOv7-d6s, YOLOv5, YOLOv4 and Faster R-CNN | UAV camera not specified | Sugar beet (Beta vulgaris subsp.) and weeds (Mercurialis annua) |

| 47 | 2023 | [81] | Inception-V3, VGG-16 and ResNet-50 | Monochrome Camera (PointGrey GS3-U3-23S6M-C) Uses database. | Rumex obtusifolius |

| 48 | 2023 | [82] | YOLOv3, YOLOv3-tiny, YOLOv4 and YOLOv4-tiny | JAI AD-130GE camera with a resolution of 1296 × 966 pixels | Carrots (Daucus carota), Sugar beet (Beta vulgaris subsp.), and rice seedlings (Oryza sativa) |

| 49 | 2023 | [83] | DenseNet, EfficientNet and ResNet | SONY DSC-HX1 digital camera | Crabgrass, Dollargrass, Goosegrass, Old World Diamondflower, Purple Nutsedge, Tropical signalgrass, Virginia Buttongrass, and Bermuda Grass |

| 50 | 2023 | [84] | 2D-CNN of specific design | UAV not specified | Soybean (Glycine max), grass, broadleaf weed |

| 51 | 2023 | [85] | AlexNet, DarkNet53, GoogLeNet, InceptionV3, ResNet50 and Xception | OnePlus Nord AC2001 phone, high image quality 3:4 camera frame in 48 MP Sony IMX586 with OIS+8 MP | Sugar cane (Saccharum officinarum), billygoat weed (Ageratum conyzoides L.), purple nutsedge (Cyperus rotundus L.), scarlet pimpernel (Anagallis arvensis L.), Lepidium didymum (Coronopus didymus L.), field bindweed (Convolvulus arvensis L.), ragweed parthenium (Parthenium hysterophorus L.), spiny sowthistle (Sonchus asper L.), corn spurry (Spergula arvensis L.) and Asian scalystem (Elytraria acaulis L.) |

| 52 | 2023 | [86] | YOLOv8l, RetinaNet, and GAN | Canon PowerShot SX540 HS integrating a 20.3-megapixel CMOS | Solanum lycopersicum, Solanum nigrum L., Portulaca oleracea L. and Setaria verticillata L. |

| 53 | 2023 | [87] | AlexNet and CNN-RF specifies | X10 drone, equipped with a 20-megapixel resolution camera. | Chinese cabbage (Brassica rapa) |

| 54 | 2023 | [88] | ResNet-18, YOLOv3, CenterNet, and Faster R-CNN | Phone camera with 48 MP resolution | Eggplant (Solanum melongena) |

| 55 | 2023 | [89] | DenseHHO, based on DenseNet-121 and DenseNet-201 with Harris Hawks Optimization (HHO) | Unspecified drone camera | Wheat (Triticum aestivum L.), Rumex crispus and Rumex obtusifolius |

| 56 | 2023 | [90] | AlexNet and AlexNet-SVM | Uses the "crop and weed detection data with bounding boxes" dataset, available on Kaggle | Sesame (Sesamum indicum) |

| 57 | 2023 | [91] | YOLOv3, YOLOv3-tiny, YOLOv4 and YOLOv4-tiny | Logitech HD 920c professional webcam with a resolution of 1 MP and dimensions of 1280 × 720 | Creeping thistle, bindweed, and California poppy |

| 58 | 2023 | [92] | CoFly-WeedDB is based on SegNet, VGG16, ResNet50, DenseNet121, EfficientNetB0 and MobileNetV2 | DJI Phantom Pro 4 | Cotton (Gossypium), Johnson grass (Sorghum halepense), bindweed (Convolvulus arvensis) and purslane (Portulaca oleracea) |

| 59 | 2023 | [93] | Xception, VGG (16, 19), ResNet (50, 101, 152, 101v2, 152v2), InceptionV3, InceptionResNetV2, MobileNet, MobileNetV2, DenseNet (121, 169, 201), NASNetMobile, NASNetLarge | DJI Phantom 3 Professional | Soybean leaves (Glycine max) |

| 60 | 2023 | [94] | MobileNetV3 and ShuffleNet | Huawei Mate 30 cell phone | Soybean leaves (Glycine max), Digitaria sanguinalis (L.) Scop. and Setaria viridis (L.) Beauv., and broadleaf weeds such as Chenopodium glaucum L., Acalypha australis L., and Amaranthus retroflexus L. |

| 61 | 2023 | [95] | YOLOv3, Faster R-CNN, AlexNet, GoogLeNet and VGGNet | Digital camera SONY DSC-HX1 at a ratio of 16:9 with a resolution of 1920 × 1080 pixels | Florida pusley (Richardia scabra L.) and bahia grass (Paspalum notatum Flüggé) |

| Technology | CNN architectures | References |
|---|---|---|
| Cameras | YOLO | [40, 47, 49, 57, 59, 60, 65, 68, 82, 86, 91, 95] |
| | VGG | [37, 38, 41, 54, 55, 58, 71, 73, 76, 79, 81, 95] |
| | ResNet | [38, 41, 48, 55, 58, 63, 73, 76, 81, 83] |
| | Faster R-CNN | [48, 49, 53, 54, 65, 68, 79, 95] |
| | AlexNet | [37, 73, 76, 95] |
| | MobileNet | [41, 71] |
| UAV cameras | YOLO | [43, 44, 50, 75, 80] |
| | VGG | [50, 51, 56, 72, 92, 93] |
| | ResNet | [50, 64, 72, 92, 93] |
| | Faster R-CNN | [45, 50, 74, 75, 80] |
| | AlexNet | [56, 87] |
| | MobileNet | [64, 72, 92, 93] |
| Smartphone | YOLO | [61, 88] |
| | VGG | - |
| | ResNet | [62, 85, 88] |
| | Faster R-CNN | [61, 62, 88] |
| | AlexNet | [70, 85] |
| | MobileNet | [94] |
| Database | YOLO | [52, 66, 67] |
| | VGG | [77] |
| | ResNet | [66, 78] |
| | Faster R-CNN | [66, 67] |
| | AlexNet | [90] |
| | MobileNet | [66, 67] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).