1. Introduction

Careful implementation of algorithms was a strong focus in the past due to considerably limited computer resources [1,2,3,4]. Today, however, there is an illusion among average programmers that this problem is no longer relevant. Compilers generate efficient executable code and offer additional optimisation features [5,6]. Today's personal computers are equipped with gigabytes of on-board memory, and their CPUs can perform calculations at a rate of hundreds of megaflops [7]. However, as computers improve, even more data are produced every day [8]. Supercomputers [9], GPGPU installations [10], and cloud-based solutions [11] have been developed to process these data. Unfortunately, the majority of users do not have access to such installations; instead, they operate modest personal computers, processing manageable amounts of data for which they expect to receive results quickly. This is why even a short segment of programming code that is executed frequently should be understood not only arithmetically, but also from an implementation perspective. Programming code can be optimised in various respects, for example, in terms of CPU time spent [12], memory required [13], or energy consumption [14]. This paper considers an approach to reduce the CPU time spent by a recently proposed string transformation technique named Move with Interleaving [15]. This transformation reduces the information entropy [16,17] as efficiently as the combination of the Burrows-Wheeler transform [18,19,20] followed by the Move-To-Front transform [21,22] or the Inversion Frequencies transform [23]. Move with Interleaving can, therefore, replace these transforms in the preprocessing stage of data compression algorithms [22,24], reducing the length of the programming code. Although its theoretical time complexity is linear with respect to the length of the sequence to be transformed, it requires careful implementation to achieve an acceptable CPU time. The main contributions of the paper are as follows:

- Raising awareness among computer scientists, and especially programmers, that better computers alone do not shorten CPU time; careful implementation is still required;

- Reporting the CPU time spent by implementations in two popular programming languages, C++ and Java;

- Testing the efficiency of the implementations on various platforms using different compilers.

The paper is organised in five sections. Move with Interleaving is introduced briefly in Section 2, together with the Move-To-Front transform. Three different implementations of Move with Interleaving are described in Section 3. The experimental results are given in Section 4, while the fifth and last section contains the conclusion.

2. Background

2.1. Move-To-Front Transform

Move-To-Front (MTF) is one of the self-organising list strategies [

21,

25,

26,

27] used in caching algorithms [

28] and data compression [

29,

30]. Let

,

, represent the input sequence of

n elements

from an alphabet

, where

consists of

m elements. MTF reads the elements

sequentially, and maps them to the domain of natural numbers, including 0, to obtain the output sequence

,

,

. The list

L is employed in this mapping process and it is populated with all the elements from

initially. The algorithm finds the position

l,

, in

L, where

and sends

l to

Y. After that, the positions of all the elements

in

L between

are increased, while

is placed in front of

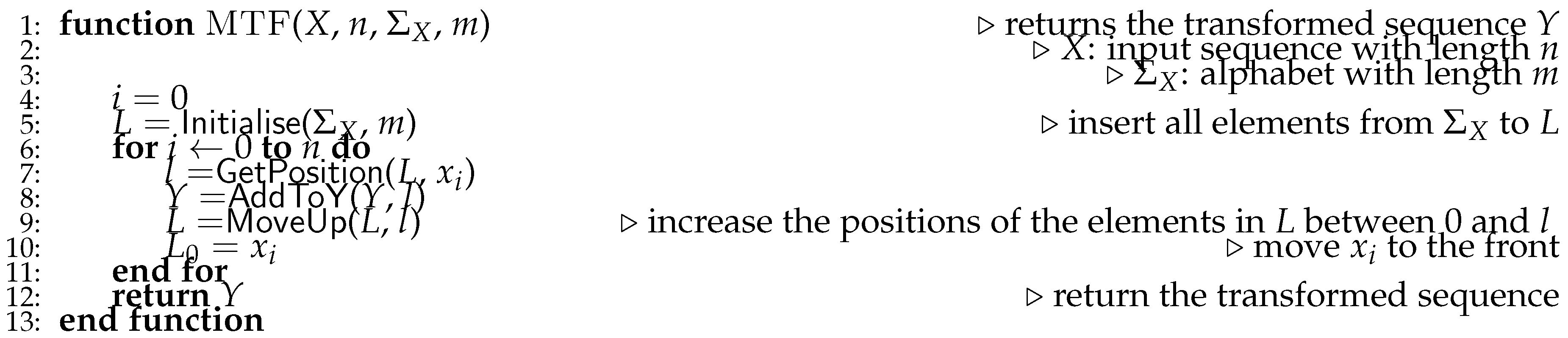

L. The pseudocode for the MTF transform is shown in Algorithm 1. The main loop contains only three functions. The first one, on Line 7, iterates through the elements in

L and stops when

. It is expected that

is found in the constant time

, since MTF is a self-organising list. The function in Line 8 terminates in

, as it just writes

l to an array representing

Y. The most time-consuming task is performed by the last function in Line 9, which needs to replace the first

values in

L. It can be realised in various ways. Four different implementations were proposed and analysed in [

31].
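As an illustration of the three steps of Algorithm 1, the following C++ function is a minimal sketch, assuming that the alphabet consists of the integers $0, \ldots, m-1$ and that every element of $X$ belongs to it. The name MTFEncode is hypothetical and is not taken from the implementations in [40]; the shift in the last step is the plain element-by-element variant.

```cpp
#include <numeric>
#include <vector>

// Sketch of Algorithm 1: Move-To-Front over the alphabet 0..m-1.
// Assumes every x in X satisfies 0 <= x < m.
std::vector<int> MTFEncode(const std::vector<int>& X, int m) {
    std::vector<int> L(m);
    std::iota(L.begin(), L.end(), 0);      // L = 0, 1, ..., m-1
    std::vector<int> Y;
    Y.reserve(X.size());
    for (int x : X) {
        int l = 0;
        while (L[l] != x) ++l;             // find l with L[l] == x
        Y.push_back(l);                    // send l to Y
        for (int j = l; j > 0; --j)        // shift L[0..l-1] one place back
            L[j] = L[j - 1];
        L[0] = x;                          // put x in front of L
    }
    return Y;
}
```

For example, MTFEncode({0, 1, 0, 1}, 2) yields {0, 1, 1, 1}: after the first occurrence of each value, an alternating sequence is mapped to ones.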

MTF is used most frequently in data compression [26,27,29], as it reduces the information entropy of $X$ when $X$ contains local correlations. For example, a run of identical elements is converted into a run of zeros (except for its first element), a sequence of alternating elements gives a sequence of ones, a sequence of repeating triples results in a sequence of twos, and so on. This is why MTF is typically applied after the Burrows-Wheeler transform (BWT), which tends to group identical elements close together [19,20].
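The entropy-reducing effect of MTF on locally correlated data can be checked numerically. The sketch below, under the same alphabet assumption, computes the zeroth-order empirical entropy $H = -\sum_s p_s \log_2 p_s$ of a sequence and applies a compact MTF variant in which std::rotate performs the shift and the move to the front in a single call. Both function names are hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <map>
#include <numeric>
#include <vector>

// Zeroth-order empirical entropy in bits per symbol: H = -sum p_s * log2(p_s).
double Entropy(const std::vector<int>& s) {
    if (s.empty()) return 0.0;
    std::map<int, std::size_t> freq;
    for (int v : s) ++freq[v];
    double h = 0.0;
    for (const auto& entry : freq) {
        double p = static_cast<double>(entry.second) / static_cast<double>(s.size());
        h -= p * std::log2(p);
    }
    return h;
}

// Compact MTF over the alphabet 0..m-1 (every x is assumed to be in range).
// std::rotate moves L[l] to the front and shifts L[0..l-1] back by one.
std::vector<int> MTF(const std::vector<int>& X, int m) {
    std::vector<int> L(m);
    std::iota(L.begin(), L.end(), 0);
    std::vector<int> Y;
    Y.reserve(X.size());
    for (int x : X) {
        auto it = std::find(L.begin(), L.end(), x);
        Y.push_back(static_cast<int>(it - L.begin()));
        std::rotate(L.begin(), it, it + 1);
    }
    return Y;
}
```

For a sequence made of eight runs of ten identical values $0, 1, \ldots, 7$, $H(X) = 3$ bits per symbol, whereas the MTF output consists mostly of zeros and its entropy drops below 1 bit per symbol.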

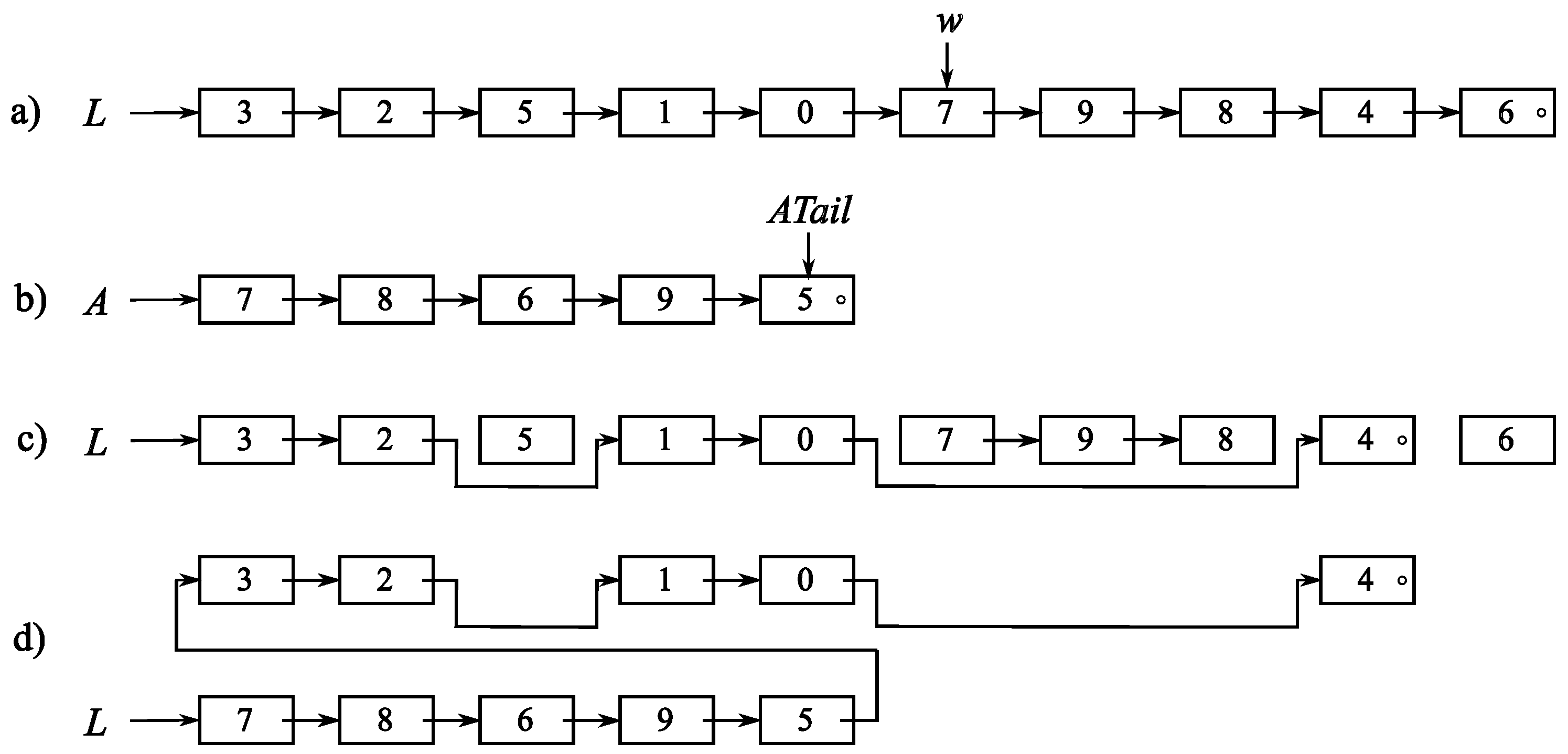

|

Algorithm 1 Pseudocode of MTF transform |

|

2.2. Move with Interleaving

The Burrows-Wheeler transform (BWT) should be applied before MTF to enhance the local correlations of $X$, as mentioned in the previous subsection. BWT was introduced in 1994 [18] and is a well-studied transform [22]. Unfortunately, its straightforward implementation has superlinear time complexity, which is why $X$ should be split into small blocks to achieve a fast enough response [18]. A direct relationship between the suffix array and the BWT was established later [19]. Unfortunately, a straightforward construction of the suffix array also requires superlinear time [32,33]. Fortunately, further algorithms were developed that construct the suffix array of $X$ in $O(n)$ time [34,35,36], which also enables obtaining the BWT in linear time. However, these algorithms are relatively demanding to implement. It would therefore be desirable to replace the pipeline of three algorithms (an algorithm for constructing the suffix array, an algorithm for generating the BWT from the suffix array, and the MTF algorithm) with a single compact algorithm. It was shown recently that, at least for the domain of raster images, this can be done by the transform named Move with Interleaving (MwI) [15]. The pixels of a continuous-tone image are first arranged into a sequence $X$. Pixels in the close neighbourhood of the considered pixel are, in the majority of cases, similar, but not completely the same. However, this similarity is sufficient for MTF to produce small indices $y_i$. But when a region of different pixel values is encountered, MTF has to generate many large indices $y_i$ before bringing enough values from this region close to the front of $L$. Unfortunately, this has a negative effect on the reduction of the information entropy, which is why BWT should be applied before MTF. MwI therefore monitors the position $l$ of $x_i$ in $L$ and reacts when $l$ exceeds the given threshold $t$. MwI then assumes that an area with different values has been found and that values similar to $x_i$ will follow. Therefore, in addition to $x_i$, it inserts interleaved values around $x_i$ in front of $L$, i.e., the values from a generated auxiliary sequence $A$.

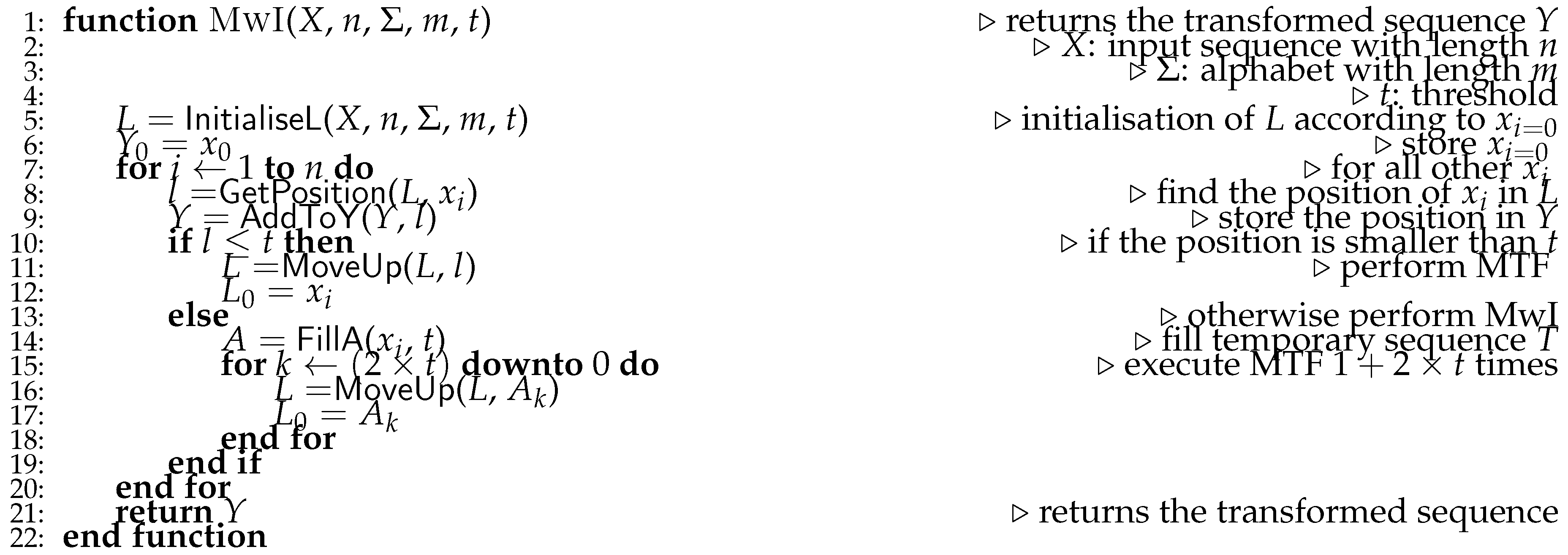

The MwI pseudocode is shown in Algorithm 2. The algorithm first populates the list $L$ in Line 5. It takes the first element $x_0$ of $X$ and interleaves it with the values around it. These values are inserted in front of $L$, and the remaining values from $\Sigma$ are filled in alphabetical order. The first element $x_0$ is stored immediately in the output $Y$ in Line 6, as the decoder must perform the same initialisation of $L$. The algorithm then enters the main loop and processes $x_i$, $1 \le i < n$, sequentially. The position $l$ of $x_i$ in $L$ is determined in Line 8. The algorithm then checks whether $l$ is within the given threshold $t$. If so, the MTF procedure is performed in Lines 11 and 12; otherwise, the algorithm fills the auxiliary sequence $A$ with interleaved elements around $x_i$ in Line 14. The elements from $A$ are then inserted in $L$ in the MTF style in Lines 16 and 17.
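To make the control flow of Algorithm 2 concrete, the following C++ sketch implements an MwI encoder together with a matching decoder that repeats the encoder's updates of $L$. It rests on assumptions of ours that the text does not fix: the alphabet is $0, \ldots, m-1$; the interleaving order is $x, x+1, x-1, x+2, x-2, \ldots$, skipping values outside the alphabet; $A$ holds at most $2t$ values including $x_i$; $L$ is initialised by interleaving over the whole alphabet around $x_0$; and plain MTF is used when $l < t$. All function names are hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical interleaving order (an assumption): center, center+1, center-1,
// center+2, center-2, ..., skipping values outside 0..m-1; at most N values (N >= 1).
std::vector<int> Interleave(int center, int N, int m) {
    std::vector<int> A{center};
    for (int d = 1; static_cast<int>(A.size()) < N && (center + d < m || center - d >= 0); ++d) {
        if (center + d < m) A.push_back(center + d);
        if (static_cast<int>(A.size()) < N && center - d >= 0) A.push_back(center - d);
    }
    return A;
}

// New list: the values of A in front, then the other values of L in their old order.
std::vector<int> PutInFront(const std::vector<int>& A, const std::vector<int>& L, int m) {
    std::vector<bool> inA(m, false);
    for (int a : A) inA[a] = true;
    std::vector<int> out(A);
    for (int v : L)
        if (!inA[v]) out.push_back(v);
    return out;
}

// State update shared by encoder and decoder after symbol x was found at position l.
void Update(std::vector<int>& L, int x, int l, int t, int m) {
    if (l < t) {                               // plain MTF step
        for (int j = l; j > 0; --j) L[j] = L[j - 1];
        L[0] = x;
    } else {                                   // interleave around x
        L = PutInFront(Interleave(x, 2 * t, m), L, m);
    }
}

std::vector<int> MwIEncode(const std::vector<int>& X, int m, int t) {
    std::vector<int> Y;
    if (X.empty()) return Y;
    std::vector<int> L = Interleave(X[0], m, m);   // whole alphabet around x_0
    Y.push_back(X[0]);                              // y_0 = x_0 lets the decoder rebuild L
    for (std::size_t i = 1; i < X.size(); ++i) {
        int l = 0;
        while (L[l] != X[i]) ++l;                   // position of x_i in L
        Y.push_back(l);
        Update(L, X[i], l, t, m);
    }
    return Y;
}

std::vector<int> MwIDecode(const std::vector<int>& Y, int m, int t) {
    std::vector<int> X;
    if (Y.empty()) return X;
    std::vector<int> L = Interleave(Y[0], m, m);   // same initialisation as the encoder
    X.push_back(Y[0]);
    for (std::size_t i = 1; i < Y.size(); ++i) {
        int l = Y[i];
        int x = L[l];                               // symbol stored at position l
        X.push_back(x);
        Update(L, x, l, t, m);
    }
    return X;
}
```

Because the decoder performs exactly the same updates of $L$ as the encoder, MwIDecode(MwIEncode(X, m, t), m, t) reproduces $X$ for any threshold $t \ge 1$.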

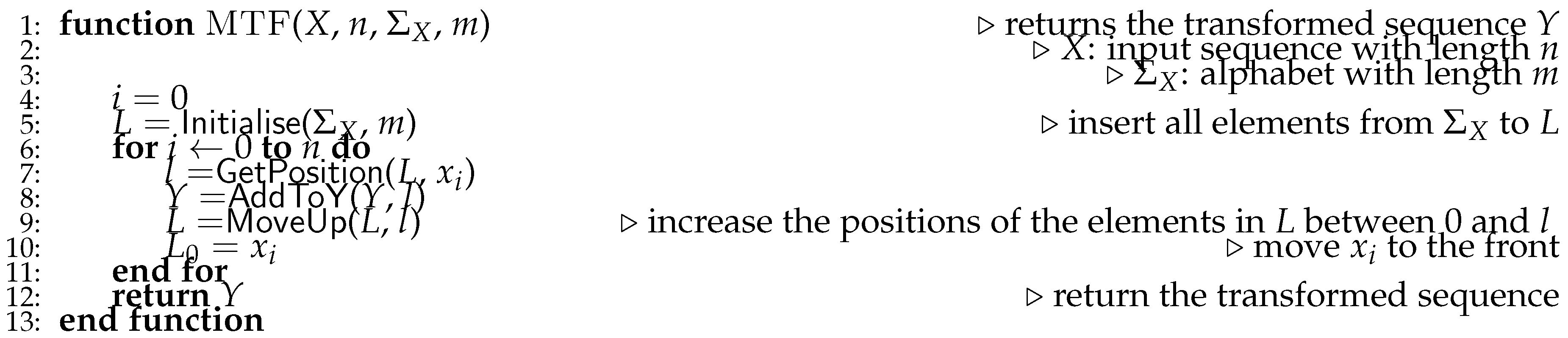

|

Algorithm 2 Pseudocode of MwI transform |

|

Finally, let us analyse the time complexity of MwI. In the worst-case scenario, $|A| = O(t)$ in each iteration. Therefore, for each $x_i$, $1 \le i < n$, the algorithm fills $A$ in time $O(t)$, and then performs MTF $|A|$ times. MTF has the expected time complexity $O(1)$ per element and, as a result, the expected execution time of MwI is $O(n t)$. Since $t$ is a constant and $t \ll n$, it can be concluded that the time complexity of MwI is the same as that of MTF, i.e., $O(n)$.

3. Materials and Methods

Three different implementations of MwI, denoted as I-1, I-2, and I-3, are considered in this section. The programming code is presented in C++ [37]; note that the object-oriented features of C++ are not used.

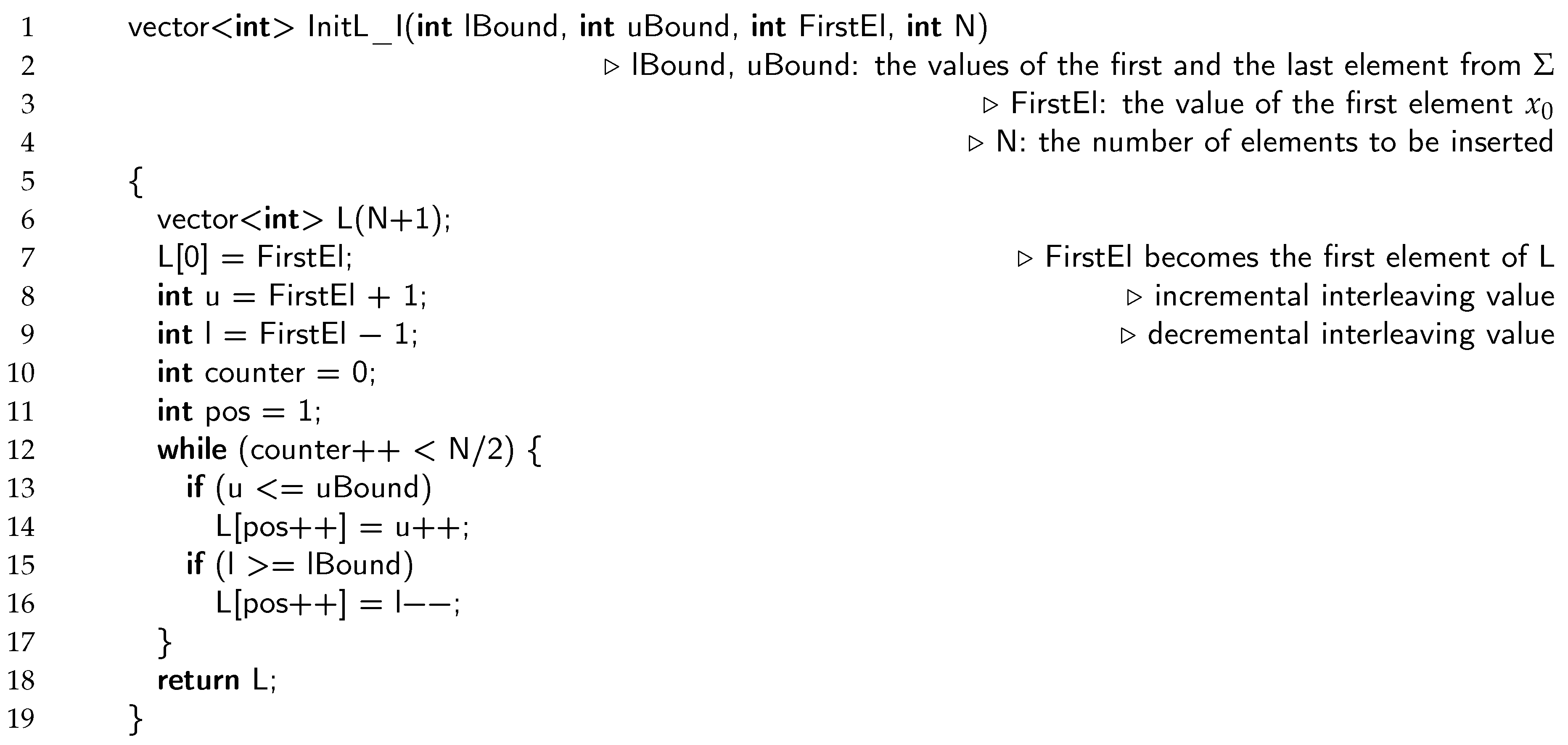

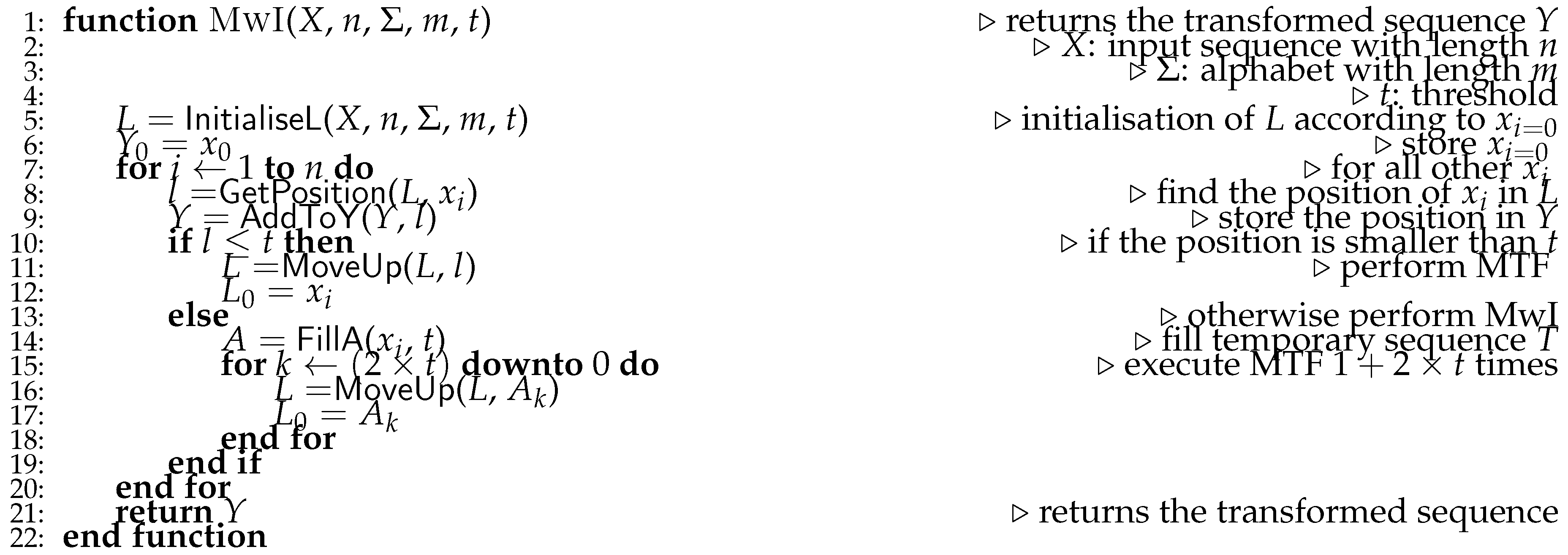

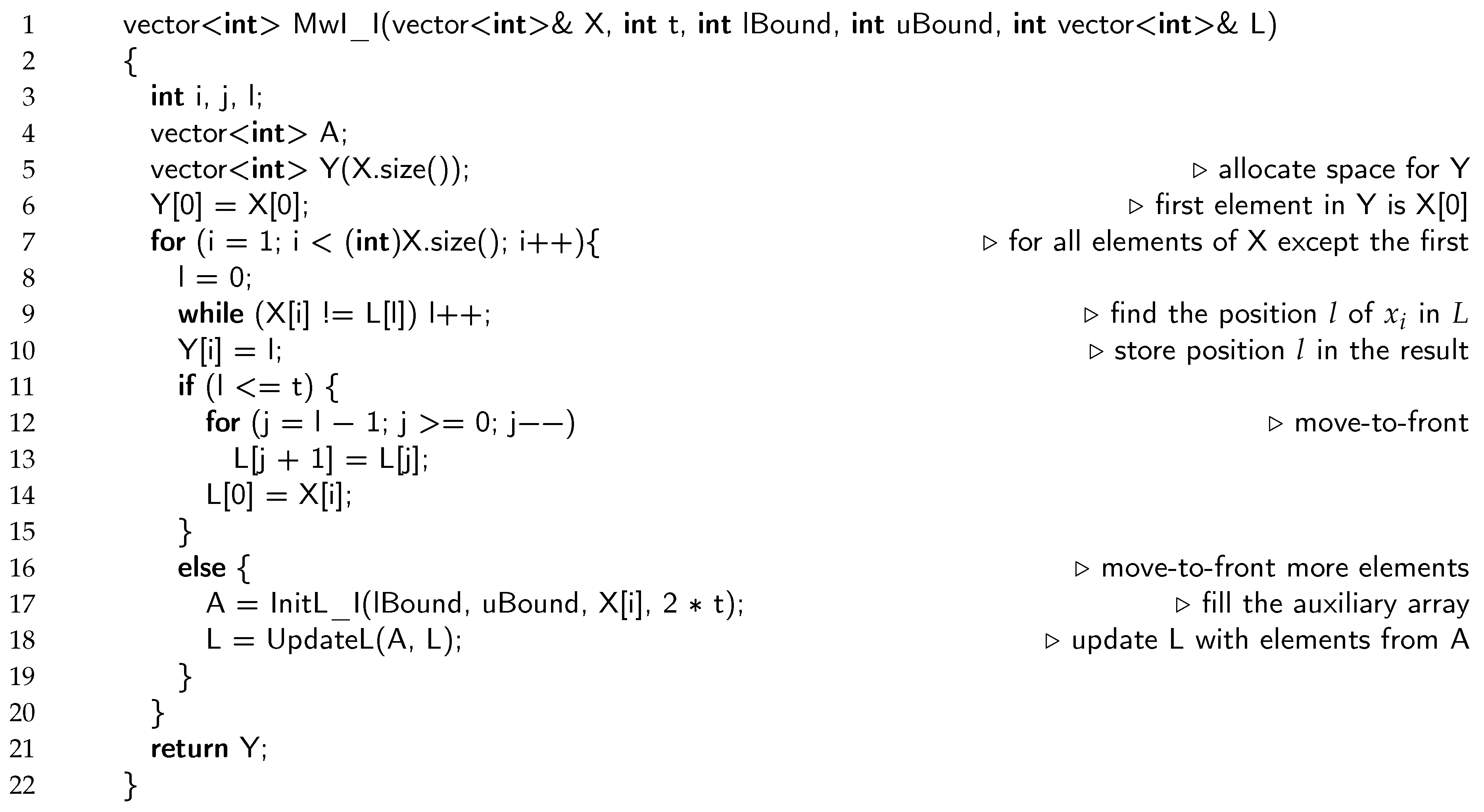

3.1. Implementation I-1

The list $L$ is represented as a standard C++ vector in this implementation. Algorithm 3 shows the function that initialises $L$. This initialisation assumes that the alphabet consists of the numbers from the range $[0, m-1]$. The last parameter, N, of the function InitL_I controls the number of elements to be inserted; it is set to $m$ initially. The variables u and l hold the incremental interleaving values around FirstEl.

|

Algorithm 3 Implementation I-1: initialization of L

|

|

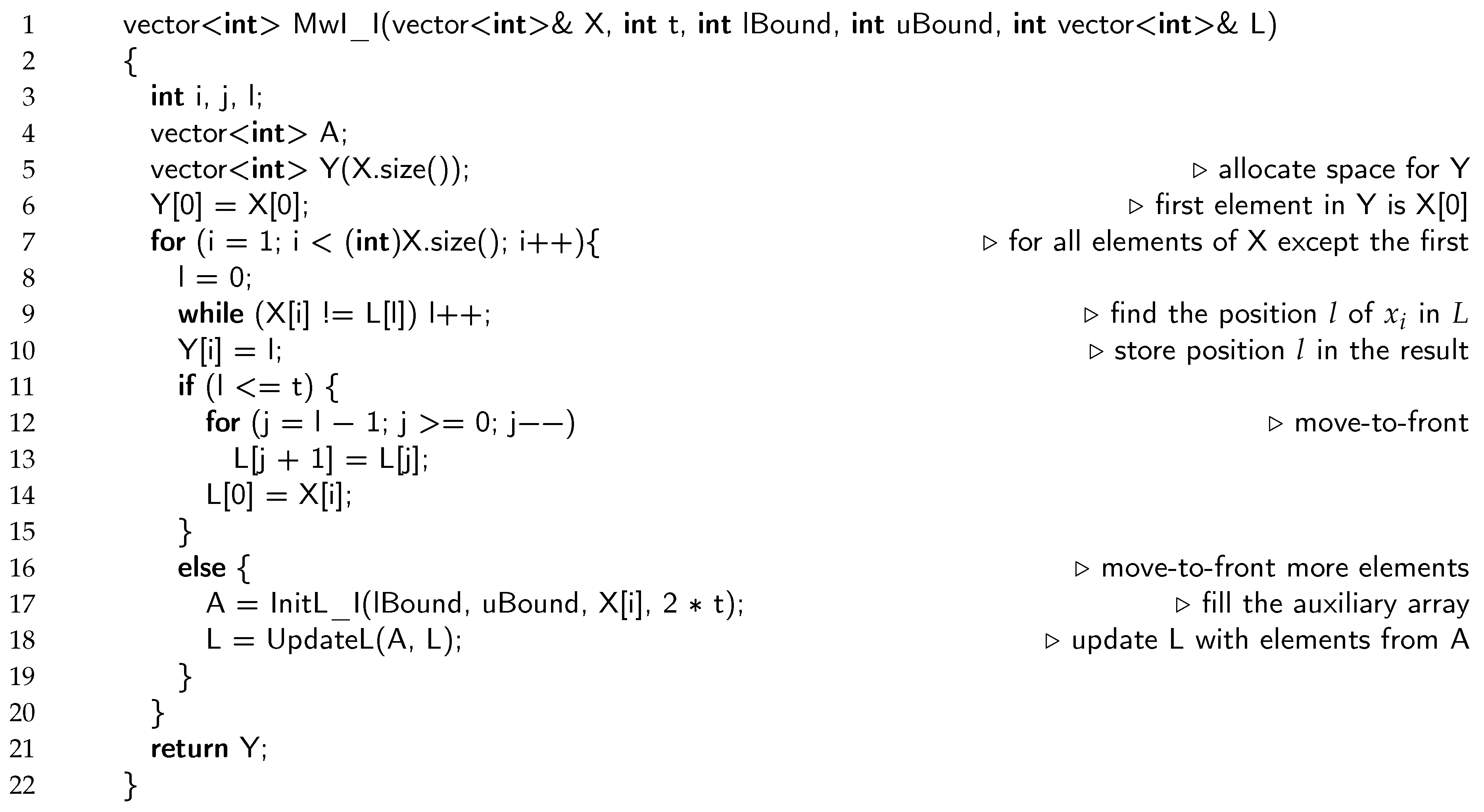

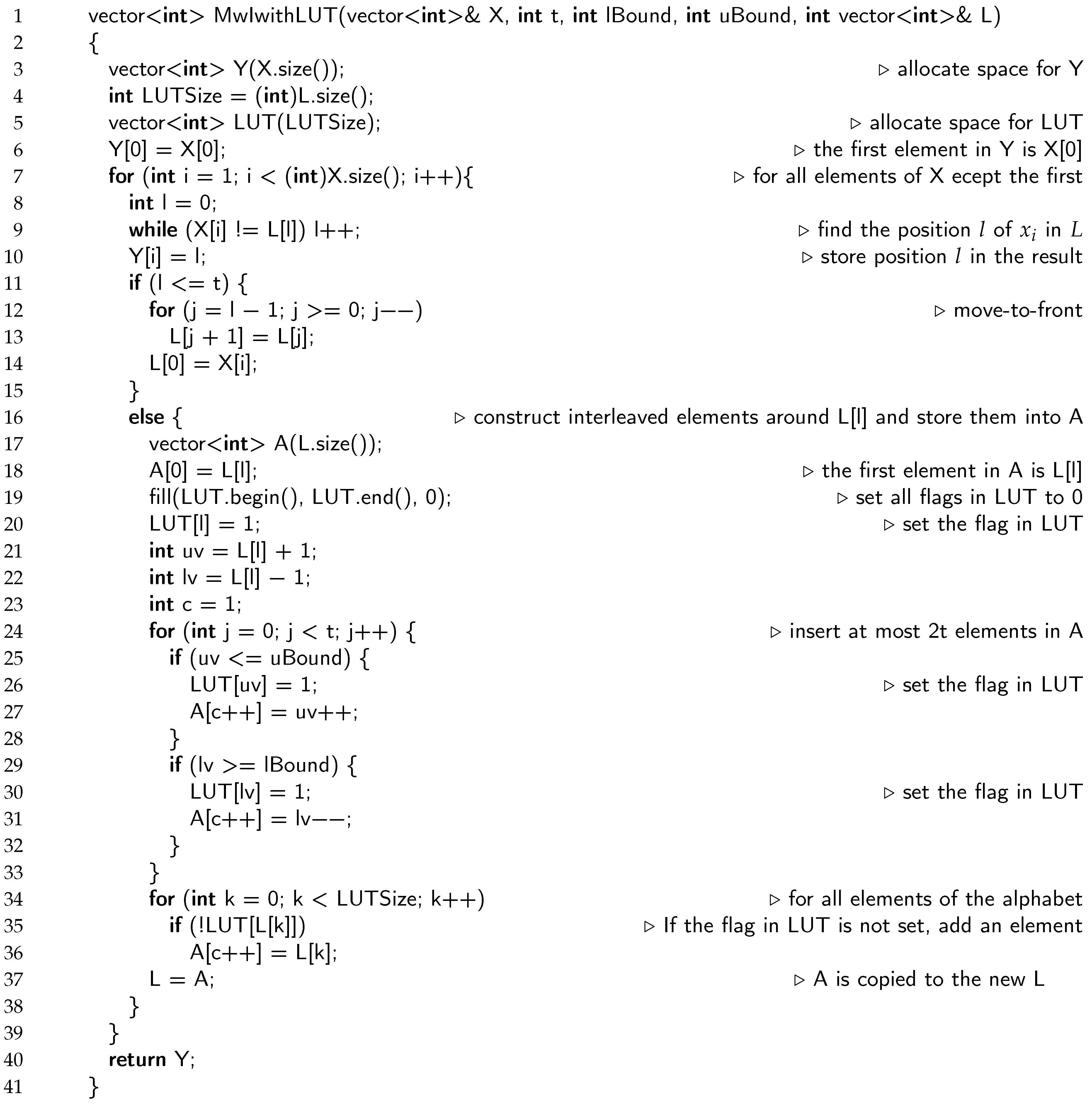

The main function of implementation I-1 is shown in Algorithm 4. The position l of each $x_i$ in $L$ is determined in Line 9 and then stored in Line 10. After that, l is tested (Line 11): if it is smaller than t, MTF is executed in Lines 12–14; otherwise, more elements interleaved around $x_i$ should be moved to the front of $L$. The auxiliary vector A is filled with at most $2t$ interleaved values by calling the function InitL_I in Line 17; this time, the parameter N of Algorithm 3 is set to 2t. After that, the content of $L$ is updated in Line 18.

Algorithm 4 Implementation I-1: MwI main function

Algorithm 5 Implementation I-1: L update function

Finally, let us explain the procedure for updating L when more elements should be moved to the front (see Algorithm 5). In Line 4, the interleaved values from vector A are first copied to a temporary vector Temp. The remaining values of L are then appended to Temp in Lines 6–17, provided that they were not already added in Line 4. When the for-loop terminates, vector Temp contains the updated state of L, which is returned in Line 18 for use in the next iteration of Algorithm 4.
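The update step of Algorithm 5 can be sketched in C++ as follows. The signature is hypothetical and the alphabet is again assumed to be $0, \ldots, m-1$; the inner while loop is the linear scan of A that makes one call cost $O(m \cdot |A|)$, which is the drawback addressed by implementation I-2.

```cpp
#include <cstddef>
#include <vector>

// Sketch of the I-1 update (Algorithm 5): interleaved values from A go first,
// then every element of L that a linear scan of A does not find.
// Cost per call: O(|L| * |A|) because of the nested while loop.
std::vector<int> UpdateL(const std::vector<int>& L, const std::vector<int>& A) {
    std::vector<int> Temp(A);                        // copy A to Temp
    for (std::size_t i = 0; i < L.size(); ++i) {
        std::size_t j = 0;
        while (j < A.size() && A[j] != L[i]) ++j;    // is L[i] already in A?
        if (j == A.size()) Temp.push_back(L[i]);     // no: append, keeping the order of L
    }
    return Temp;
}
```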

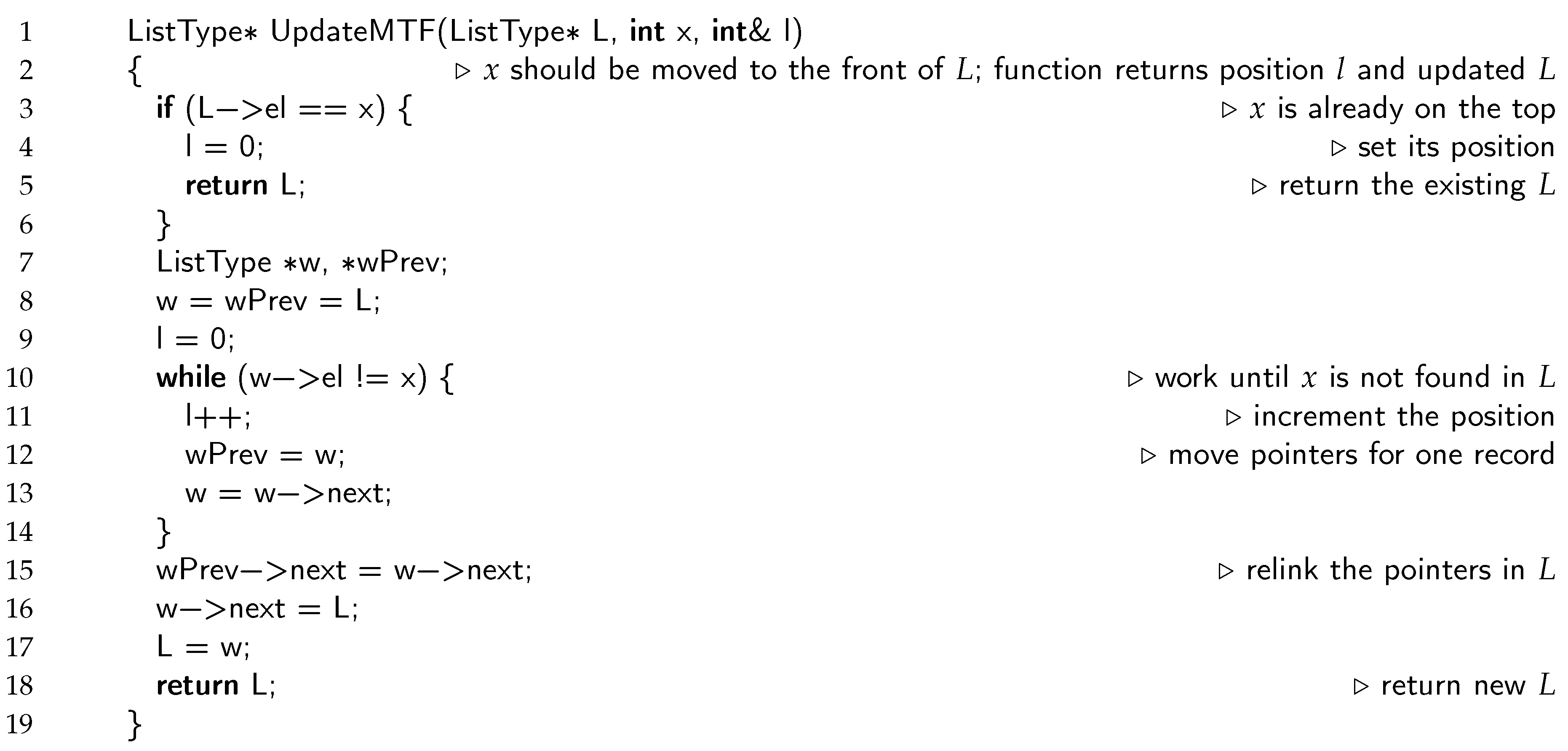

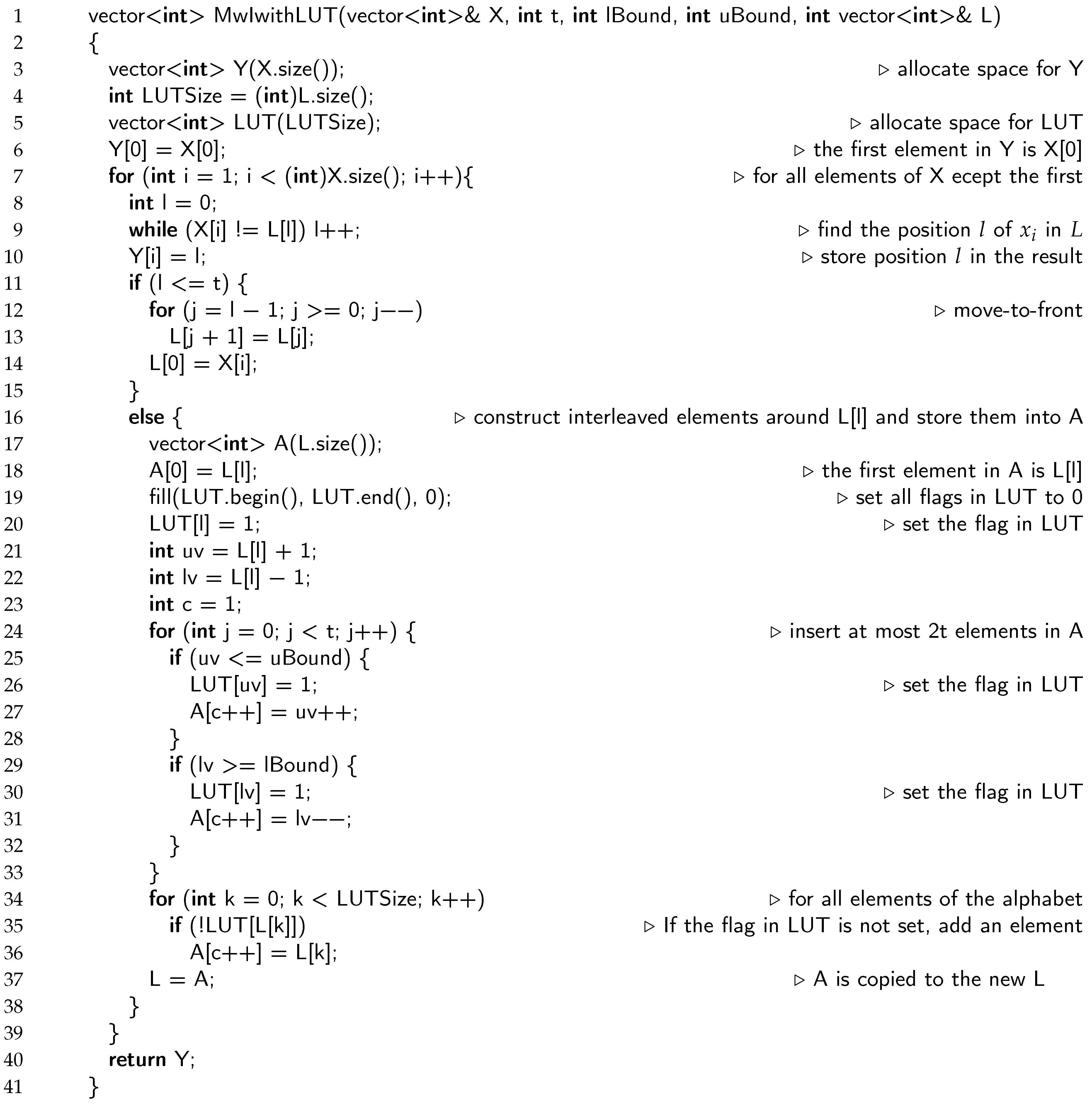

3.2. Implementation I-2

In implementation I-2, the data structure used to store L is once again a standard vector. Consequently, the initialisation process remains identical to that given in Algorithm 3. The primary drawback of implementation I-1 lies in the update function outlined in Algorithm 5, which contains two nested loops. The outer loop iterates through all the elements of L. The nested while loop scans the auxiliary vector A to verify whether the element L[i] also exists in A. If not, the element is added to the temporary vector Temp. Although the UpdateL function is not called for each element being transformed, and vector A is expected to be short, this leaves room for improvement. Namely, the nested while loop can be omitted by introducing a lookup table LUT. Its purpose is similar to that of the lookup table in the Counting-sort algorithm [39]. In our case, LUT consists of m flags, initially set to FALSE. The flags in LUT are set during the creation of the auxiliary array A, i.e., for each element A[j], the flag at position A[j] is raised. During the updating of L, the status of the flag at location L[i] is checked for each element L[i], and the element is added to the temporary vector if its flag is FALSE. Details, including the construction of vector A and the updating of L, are presented in Algorithm 6.

Algorithm 6 Implementation I-2: MwI with LUT

An array of flags, LUT, is allocated in Line 5. It consists of m elements, i.e., the size of the alphabet $\Sigma$. In this implementation, it is assumed that the alphabet contains elements from the interval $[0, m-1]$. If this is not the case, the elements of the alphabet should be mapped into this interval before calling the function MwIwithLUT. When the position l of the considered element $x_i$ is not smaller than t, all flags in LUT are set to FALSE in Line 19. The flag belonging to l is then set to TRUE in Line 20, and the same is done for all the remaining interleaved values in Lines 26 and 30. The update of L starts in Line 34, after A has been prepared. The for loop iterates over all elements of L. For each element of L, it is checked whether its flag in LUT is set (Line 35). If it is not, the considered element is appended to A. Finally, A is copied into the new L in Line 37.
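A sketch of the same update with the lookup table of implementation I-2 is given below. As in MwIwithLUT, the flag array is assumed to be allocated once by the caller, with m entries, and reused in every call; the function name and signature are hypothetical. Membership is now tested in constant time, so one call costs $O(m + |A|)$ instead of $O(m \cdot |A|)$.

```cpp
#include <algorithm>
#include <vector>

// Sketch of the I-2 update with a lookup table of m flags (alphabet 0..m-1).
// LUT is allocated once by the caller (size m) and reused in every call.
std::vector<int> UpdateLWithLUT(const std::vector<int>& L, const std::vector<int>& A,
                                std::vector<bool>& LUT) {
    std::fill(LUT.begin(), LUT.end(), false);   // clear all m flags
    for (int a : A) LUT[a] = true;              // raise the flag of every value in A
    std::vector<int> Temp(A);
    for (int v : L)
        if (!LUT[v]) Temp.push_back(v);         // O(1) membership test replaces the scan of A
    return Temp;
}
```

Clearing all m flags mirrors Line 19 of Algorithm 6; clearing only the flags raised for the values of A would give the same result with fewer writes.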

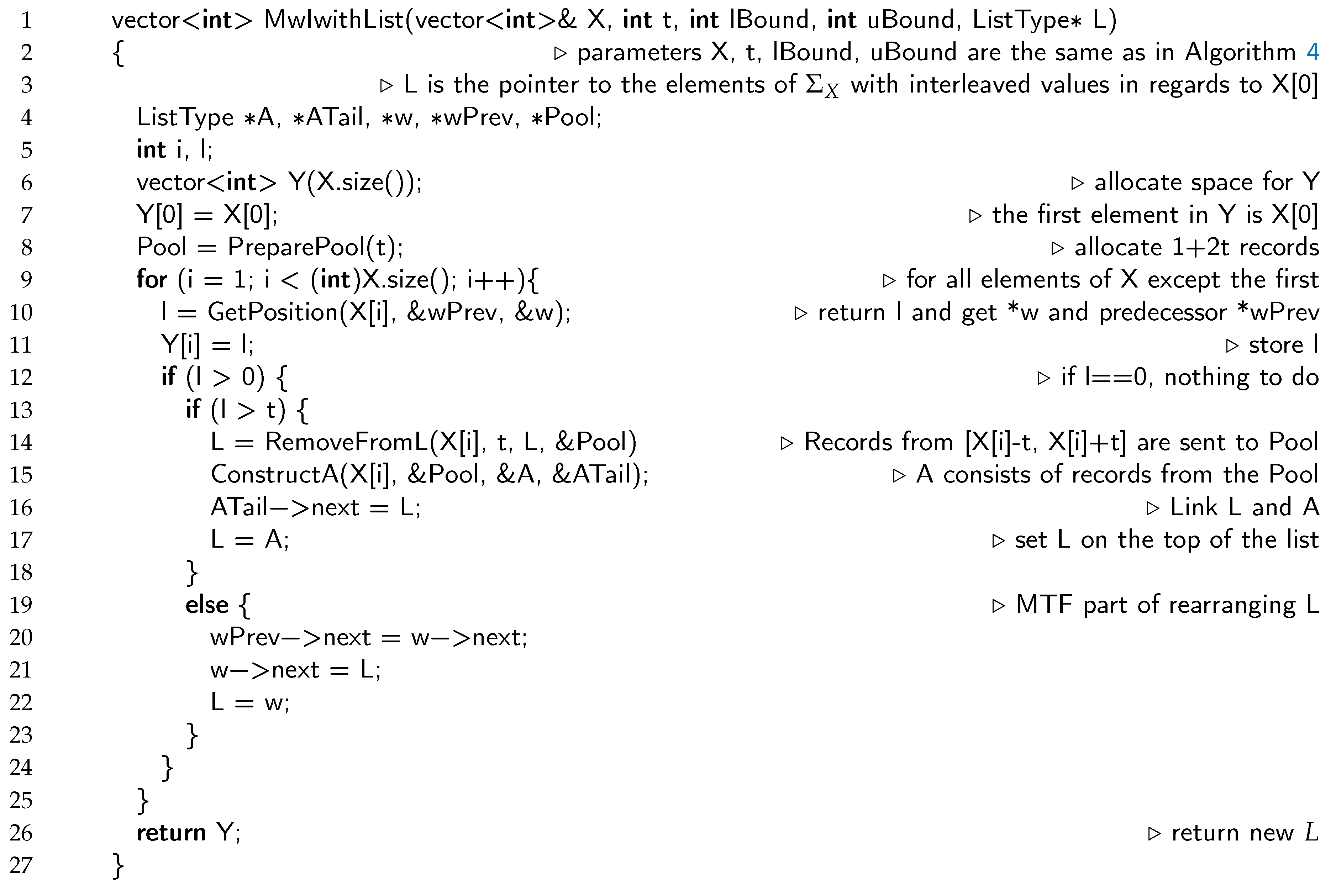

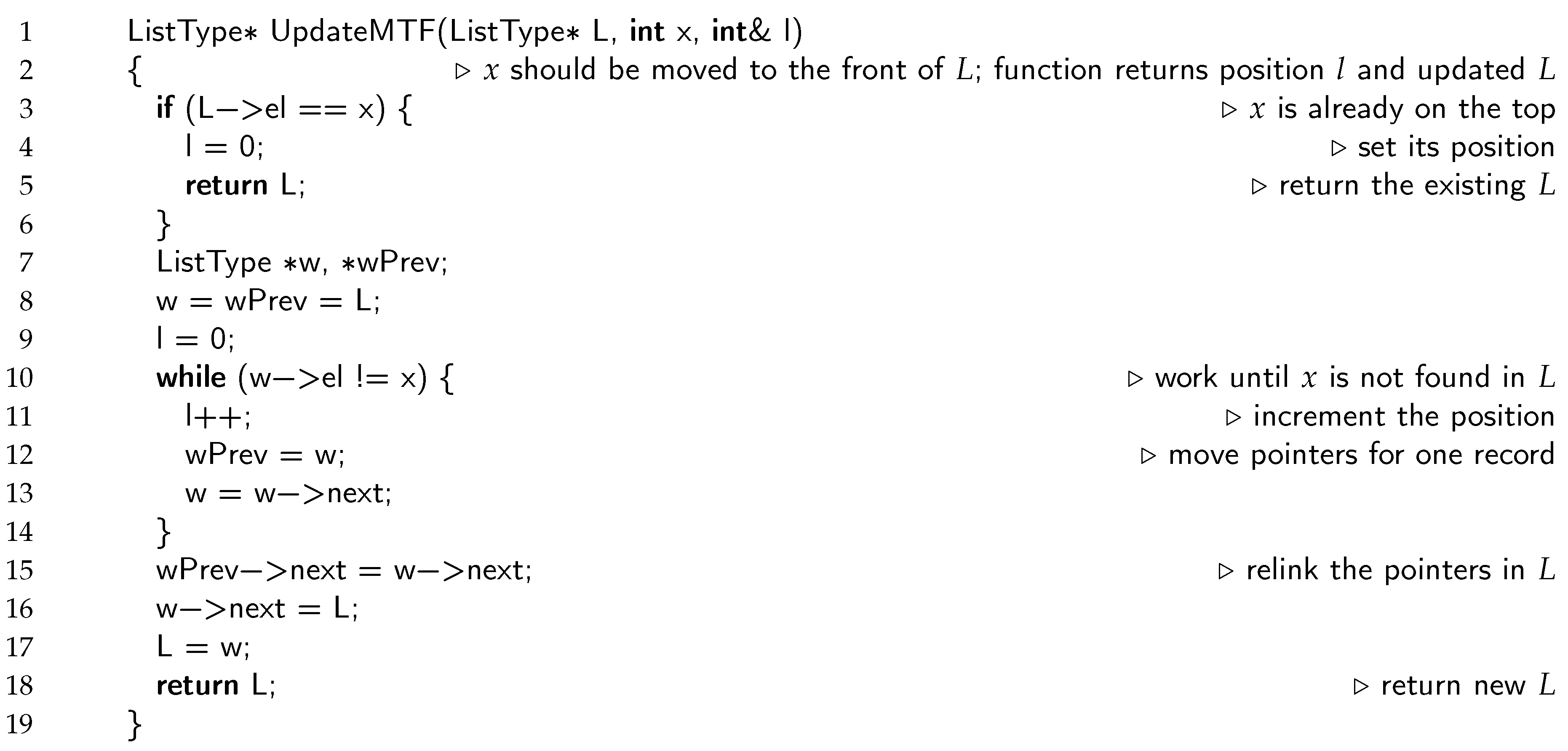

3.3. Implementation I-3

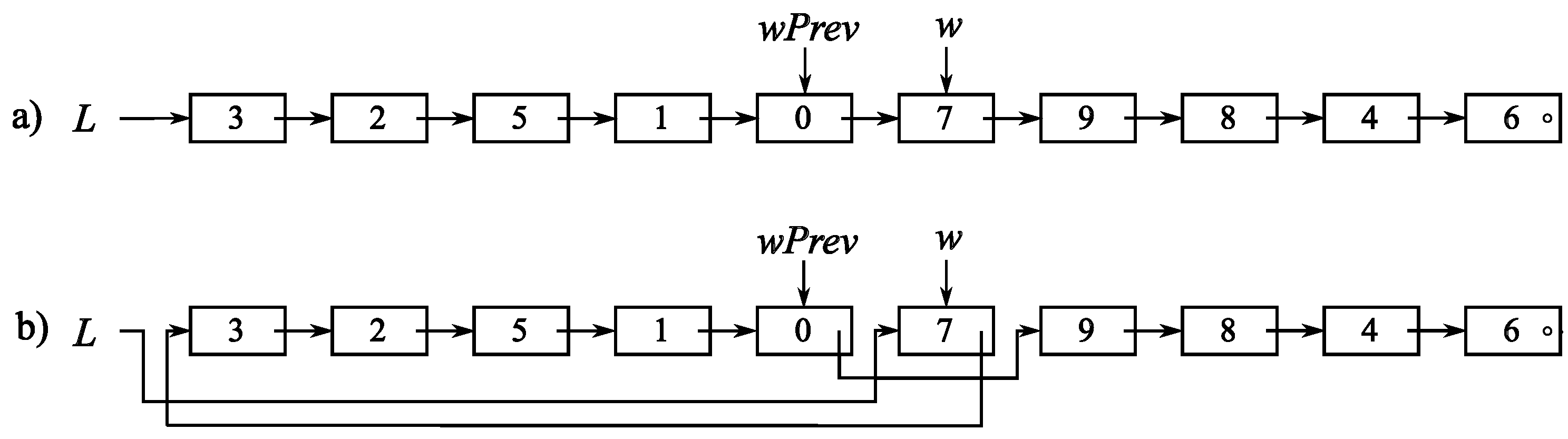

Implementation I-3 utilises a dynamic linked list of records to represent L. Each record has only two components: el, containing the value, and a pointer next that points to the subsequent record. For MTF, this data structure appears to offer benefits, as there is no need to move the elements at positions 0 through $l-1$.

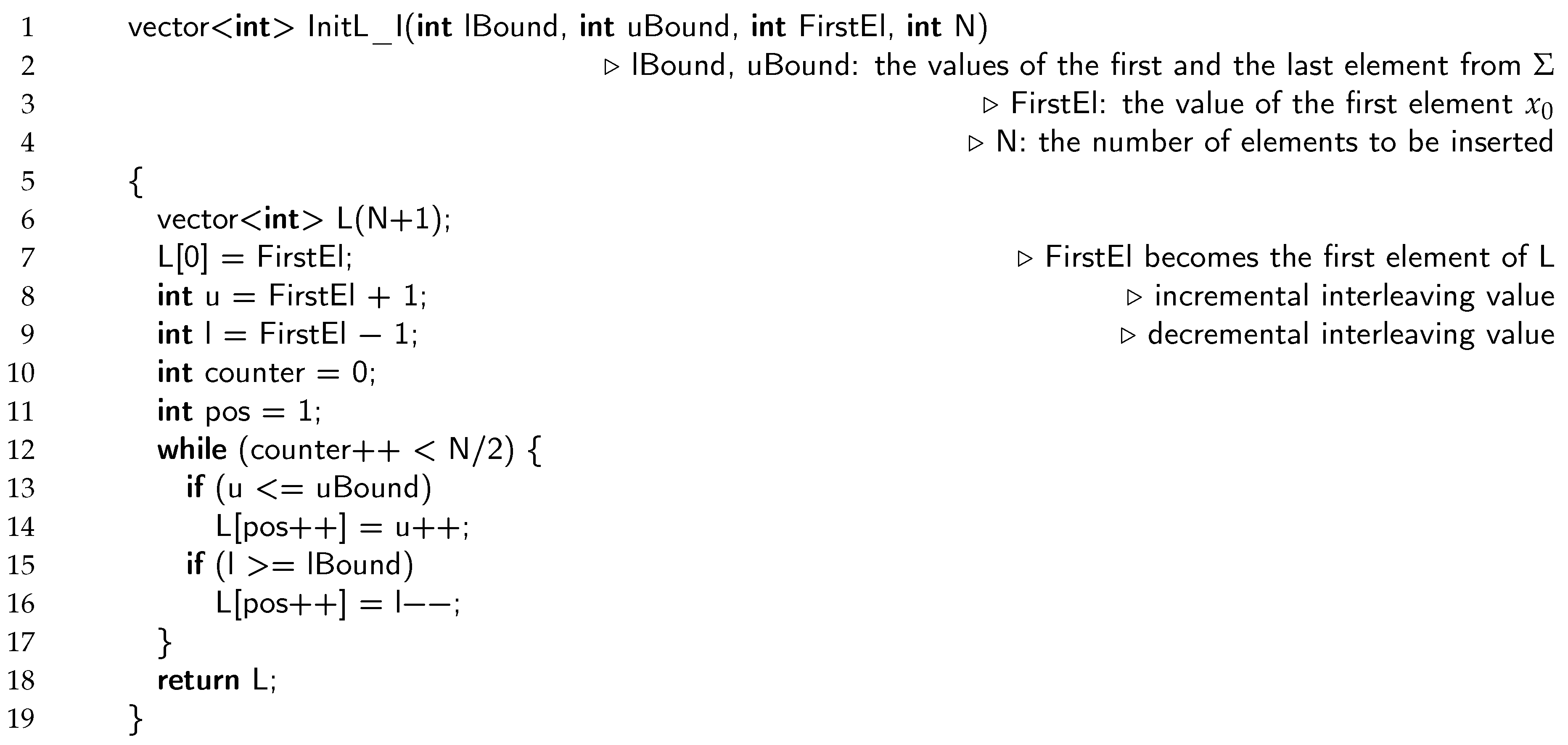

Let us consider the example of L shown in Figure 1a, containing 10 elements. Suppose that the element to be moved to the front of L has the value 7. The record containing this value has already been found and is pointed to by pointer w; the pointer to its preceding record is denoted as wPrev. The new state of L is established in constant time by repointing just three pointers, as illustrated in Figure 1b. The details are provided in Algorithm 7.
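The three-pointer relinking described for Figure 1 can be sketched in C++ as follows, assuming a record type with the fields el and next as described above. The function name is hypothetical, and the list is assumed to contain x. Note that the search for x is still linear in its position l; only the move to the front becomes a constant-time operation.

```cpp
#include <vector>

struct Record {
    int el;         // stored value
    Record* next;   // pointer to the subsequent record
};

// Finds x, returns its position l, and moves its record to the front by
// repointing three pointers (wPrev->next, w->next, head). Assumes x is present.
int MoveToFrontLinked(Record*& head, int x) {
    Record* w = head;
    Record* wPrev = nullptr;
    int l = 0;
    while (w->el != x) { wPrev = w; w = w->next; ++l; }
    if (wPrev != nullptr) {        // w is not already the first record
        wPrev->next = w->next;     // 1) unlink w
        w->next = head;            // 2) w points to the old first record
        head = w;                  // 3) w becomes the new head
    }
    return l;
}
```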

|

Algorithm 7 Implementation I-3: MTF |

|

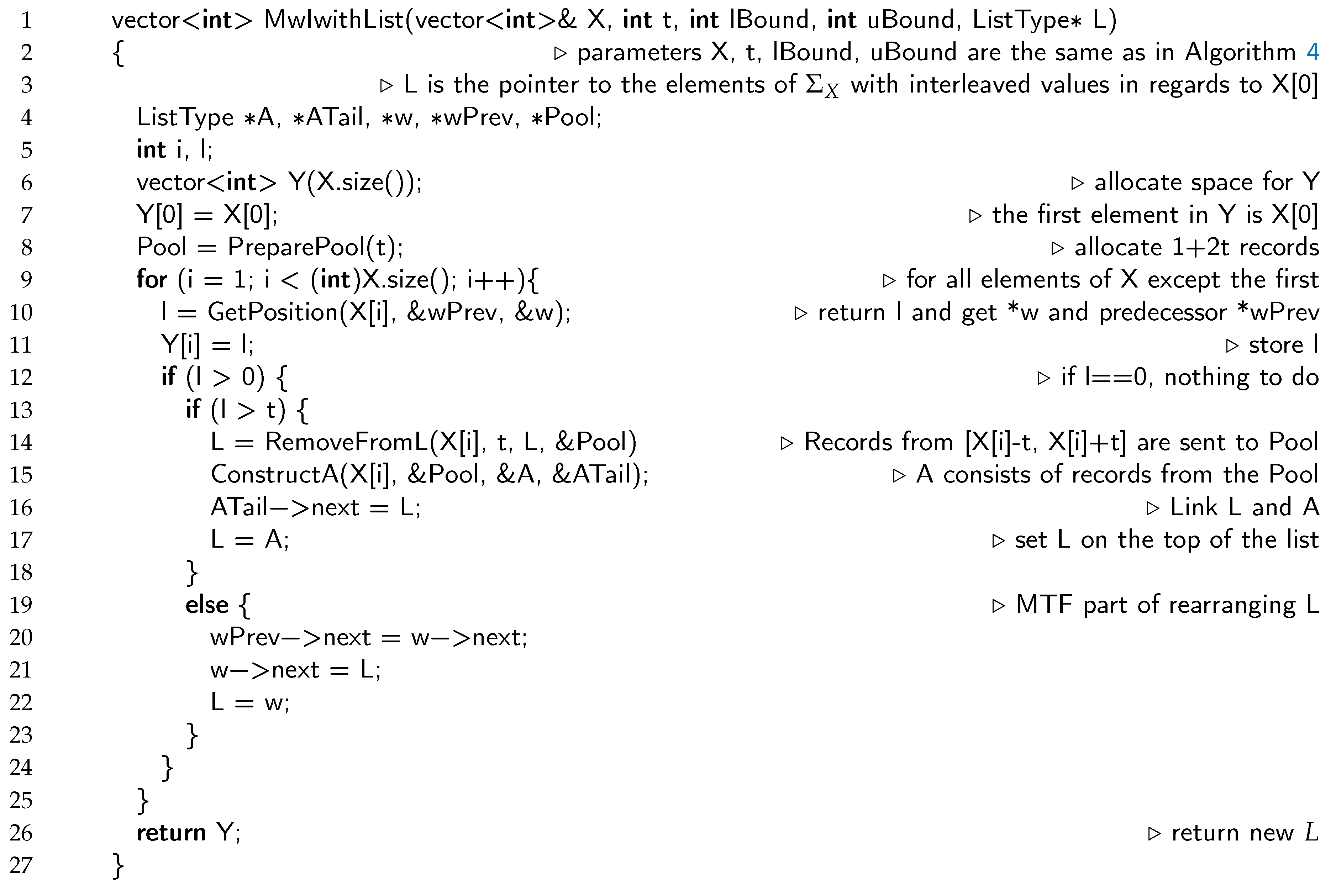

The concept of how the MwI transformation can be realised using a dynamic linked list is shown in Figure 2. Let the considered value in L again be denoted by $x_i$, with L as shown in Figure 2a. Suppose, in addition, that the position $l$ of $x_i$ is not smaller than the threshold $t$, so that the auxiliary list A of interleaved values around $x_i$ has to be placed in front of L. The auxiliary list A is shown in Figure 2b. L is then inspected, and all its records holding values within the range covered by A are omitted (see Figure 2c). Finally, L and A are linked together using the pointer to the last record of A; the result is shown in Figure 2d. We can observe that $|A|$ new records have been created, and an equal number of records have been deleted. Unfortunately, memory allocation and release are computationally expensive operations and should be avoided whenever possible [38]. Therefore, the records that would otherwise be released are placed in a stack, named Pool in the following. Whenever a new record is needed during the construction of A, it is taken from the Pool. Details of the implementation can be found in Algorithm 8. In Line 8, the function PreparePool inserts dynamically allocated records into the stack pointed to by Pool. The function GetPosition (Line 10) performs the same tasks as the programming code in Lines 8 to 14 of Algorithm 7. After that, the records containing the values from the interval covered by A are inserted into Pool by the function in Line 14. The function ConstructA (Line 15) constructs the list A with interleaved values around the value $x_i$ using the records from the Pool. It returns the pointer A to the first record of the list and a pointer to its last record. In Lines 16 and 17, lists L and A are merged into a new list L. In Lines 20, 21, and 22, the record is moved to the front as explained earlier.
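The record-reuse idea behind Pool can be sketched as a simple stack of released records. The helper names below (RecordPool, MoveRangeToPool, PrependValues) are hypothetical and only approximate the roles of PreparePool, the function in Line 14, and ConstructA in Algorithm 8. It is assumed that the values placed in front are exactly those removed from L, so that every released record is reused.

```cpp
#include <vector>

struct Record {
    int el;         // stored value
    Record* next;   // next record in the list (or in the pool)
};

// Hypothetical stack of released records, reused instead of new/delete.
class RecordPool {
public:
    RecordPool() = default;
    RecordPool(const RecordPool&) = delete;
    RecordPool& operator=(const RecordPool&) = delete;
    ~RecordPool() {
        while (top_ != nullptr) { Record* r = top_; top_ = top_->next; delete r; }
    }
    void Push(Record* r) { r->next = top_; top_ = r; }
    Record* Pop() {
        if (top_ == nullptr) return new Record{0, nullptr};   // pool empty: allocate
        Record* r = top_;
        top_ = top_->next;
        return r;
    }
    bool Empty() const { return top_ == nullptr; }
private:
    Record* top_ = nullptr;
};

// Unlinks every record of L whose value lies in [lo, hi] and pushes it onto the pool.
void MoveRangeToPool(Record*& head, int lo, int hi, RecordPool& pool) {
    Record** link = &head;
    while (*link != nullptr) {
        Record* r = *link;
        if (r->el >= lo && r->el <= hi) {
            *link = r->next;    // bypass r in L
            pool.Push(r);
        } else {
            link = &r->next;
        }
    }
}

// Builds a list A of `values` from pooled records and links it in front of L.
void PrependValues(Record*& head, const std::vector<int>& values, RecordPool& pool) {
    Record* first = nullptr;
    Record* last = nullptr;
    for (int v : values) {
        Record* r = pool.Pop();
        r->el = v;
        r->next = nullptr;
        if (last != nullptr) last->next = r; else first = r;
        last = r;
    }
    if (last != nullptr) {
        last->next = head;      // tail of A points to the rest of L
        head = first;
    }
}
```

Once the pool holds enough records, each interleaving step pops exactly as many records as it pushed, so no allocation or release is performed in the main loop.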

All implementations are available at [40].

Algorithm 8 Implementation I-3: MwI

4. Experiments

All three proposed implementations of MwI were tested on 32 benchmark 8-bit greyscale images. The images can be seen in [15], and they are denoted in the same way in this paper. The pixel values were arranged into the sequence X in scan-line order. All three implementations were coded in both C++ and Java, two of the most popular programming languages, and they were executed on three different platforms:

- P1: A personal computer with an AMD Ryzen 9 7950X3D 16-core processor clocked at 4.20 GHz, equipped with 64 GB of RAM and running the Windows 11 operating system. The C++ code was compiled using MS Visual Studio, Version 17.4.2, and OpenJDK-8-JRE was used for Java.

- P2: The same personal computer as in P1, but running Linux kernel 6.6.8 with the Manjaro distribution. The C++ code was compiled with the G++ compiler, Version 13.2.1, and OpenJDK-8-JRE was again used for Java.

- P3: A Raspberry Pi 2 Model B running Raspbian GNU/Linux 11 (bullseye). The C++ code was compiled with gcc version 10.2.1 20210110 (Raspbian 10.2.1-6+rpi1), while OpenJDK-8-JRE was used for Java.

Table 1 shows the CPU time spent by the three implementations on the three platforms for the code written in C++.

The results were indeed stunning. On platform P1, implementation I-2 was 5.62 times faster than I-1, whereas I-3 was 3.34 times faster than I-2 and an astonishing 18.83 times faster than I-1. Similarly, on platform P2, I-3 was 19.08 times faster than I-1 and 2.46 times faster than I-2. Interestingly, despite using the same computer, platform P2 consistently outperformed platform P1. While the differences for I-1 and I-3 were 4% and 5%, respectively, platform P2 surpassed P1 significantly, by 42%, for I-2. The pattern repeated on platform P3, but with a more pronounced effect: this time, I-3 was more than 23 times faster than I-1 and more than 5.5 times faster than I-2. In this case, however, the absolute CPU time also becomes important. The user has to wait for 7 and a half minutes to transform all 32 images using implementation I-1, while I-3 requires only 19.5 seconds.

The results for the Java implementations are provided in Table 2. On platform P1, implementation I-3 again surpassed I-1 and I-2, being more than 14 and 6.8 times faster, respectively. Similarly, on platform P2, I-3 outperformed I-1 by a factor of over 12.7 and I-2 by a factor of 5.8. Platform P2 was faster than platform P1 for I-1 and I-2, but not for I-3. On platform P3, implementation I-3 outperformed I-1 by a factor of more than 67, and I-2 by a factor of almost 10.

As expected, the Java implementations were slower than the C++ implementations (compare the results in Table 1 and Table 2). Let us first consider the results on the personal computer, i.e., on platforms P1 and P2. The most evident difference is for I-2, where the Java implementation lags behind the C++ implementation by a factor of more than 3.6 on P1 and more than 4.8 on P2. In Java, platform P2 was faster for I-1 and I-2, while it was slightly slower for I-3 (by less than 7%). On platform P3, the Java implementations were significantly slower than the C++ implementations: I-1 written in Java was slower by a hardly believable factor of 13.58, I-2 by a factor of more than 8, and I-3 by a factor of more than 4.6. With I-1 coded in Java, the user would need to wait for 1 hour and 42 minutes to transform all 32 images, while with I-3 this process is completed in one and a half minutes.

5. Conclusion

This paper considers three different implementations of the Move with Interleaving (MwI) transform. Although MwI operates with an expected linear time complexity, its CPU time differs considerably between various programming solutions. Efficient implementations are necessary in embedded systems and on computationally weaker platforms such as the Raspberry Pi, but they also make the processing of larger datasets noticeably faster on modern personal computers.

The three implementations of MwI considered in the paper were as follows: the first utilised a standard vector to store the MwI state, the second additionally incorporated a lookup table, while the third was based on a dynamic singly linked list and a mechanism for reusing allocated memory chunks. All the implementations were coded in C++ and Java. Three different platforms were used for the experiments: MS Windows 11, Linux with the Manjaro distribution, and a Raspberry Pi with Linux. The first two platforms ran on the same computer to ensure a fair comparison. The experiments involved 32 continuous-tone greyscale raster images with various resolutions and contents. Their pixels were arranged into a sequence using a scan-line approach and then processed with MwI. The results show that careful implementation reduces the CPU time significantly. For example, the implementation based on the dynamic linked list was about 18 times faster than the implementation using the standard vector. The lookup table accelerated the standard-vector implementation significantly; however, it did not reach the speed of the implementation with the dynamic linked list. Linux turned out to be slightly more efficient than Windows. As expected, the C++ implementations outperformed the Java implementations.

The Raspberry Pi platform cannot be compared directly with the two platforms running on a modern personal computer. However, on this platform, the importance of careful implementation and of the choice of programming language becomes even more evident. For example, the fastest implementation, using a dynamic singly linked list, took 19.5 seconds when coded in C++, but one and a half minutes when coded in Java. The slowest implementation, using a standard vector, required 7 minutes and 32 seconds, while the same implementation coded in Java needed as much as 1 hour and 42 minutes.

Author Contributions

Conceptualisation, B.Ž.; methodology, B.Ž. and B.R.; software, B.Ž. and B.R.; validation, I.K., N.L. and A.J.; formal analysis, B.Ž., N.L. and I.K.; investigation, B.Ž., B.R. and I.K.; resources, I.K.; data curation, B.Ž., B.R., N.L. and A.J.; writing (original draft preparation), B.Ž.; writing (review and editing), B.Ž., N.L., I.K., B.R. and A.J.; visualisation, A.J.; supervision, I.K. and B.Ž.; project administration, I.K. and B.Ž.; funding acquisition, I.K. and B.Ž. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Slovenian Research and Innovation Agency under Research Project J2-4458, Research Programme P2-0041, and the Czech Science Foundation under Research Project 23-04622L.

Institutional Review Board Statement

Not applicable

Informed Consent Statement

Not applicable

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Clark, B.G. An efficient implementation of the algorithm "CLEAN". Astron Astrophys. 1980, 89(3), 377–378.

2. Hadjiconstantinou, E.; Christofides, N. An efficient implementation of an algorithm for finding K shortest simple paths. Int J Networks. 1999, 34(2), 88–101.

3. Goldberg, A.V. An Efficient Implementation of a Scaling Minimum-Cost Flow Algorithm. J Algorithm. 1997, 22(1), 1–29.

4. Lippert, R.A.; Mobarry, C.M.; Walenz, B.P. A Space-Efficient Construction of the Burrows–Wheeler Transform for Genomic Data. J Comput Biol. 2005, 12(7), 943–951.

5. Devkota, S.; Aschwanden, P.; Kunen, A.; Legendre, M.; Isaacs, K.E. CcNav: Understanding Compiler Optimizations in Binary Code. IEEE T Vis Comput Gr. 2021, 27(2), 667–677.

6. Chattopadhyay, S. Compiler Design, 2nd ed.; PHI Learning: New Delhi, India, 2022.

7. Basford, P.J.; Johnston, S.J.; Perkins, C.S.; Garnock-Jones, T.; Tso, F.P.; Pezaros, D.; Mullins, R.D.; Yoneki, E.; Singer, J.; Cox, S.J. Performance analysis of single board computer clusters. Future Gener Comp Sy. 2020, 102, 278–291.

8. Khan, N.; Yaqoob, I.; Hashem, I.A.T.; Inayat, Z.; Ali, W.K.M.; Alam, M.; Shiraz, M.; Gani, A. Big Data: Survey, Technologies, Opportunities, and Challenges. Sci World J. 2014, 2014, 712826.

9. Stunkel, C.B.; Graham, R.L.; Shainer, G.; Kagan, M.; Sharkawi, S.S.; Rosenburg, B.; Chochoia, G.A. The high-speed networks of the Summit and Sierra supercomputers. IBM J Res Dev. 2020, 64(3/4), 3:1–3:10.

10. Iserte, S.; Prades, J.; Reaño, C.; Silla, F. Improving the management efficiency of GPU workloads in data centres through GPU virtualization. Concurr Comp-Pract E. 2019, 22(2), e5275.

11. Khriji, S.; Benbelgacem, Y.; Chéour, R.; El Houssaini, D.; Kanoun, O. Design and implementation of a cloud-based event-driven architecture for real-time data processing in wireless sensor networks. J Supercomput. 2022, 78, 3374–3401.

12. Žlaus, D.; Mongus, D. Efficient method for parallel computation of geodesic transformation on CPU. IEEE T Parall Distr. 2020, 31(4), 935–947.

13. Qiu, M.; Ming, Z.; Li, J.; Gai, K.; Zong, Z. Phase-Change Memory Optimization for Green Cloud with Genetic Algorithm. IEEE T Comput. 2015, 64(12), 3528–3540.

14. Albers, S. Energy-efficient algorithms. Commun ACM. 2010, 53(6), 86–96.

15. Žalik, B.; Strnad, D.; Podgorelec, D.; Kolingerová, I.; Lukač, L.; Lukač, N.; Kolmanič, S.; Rizman Žalik, K.; Kohek, Š. A New Transformation Technique for Reducing Information Entropy: A Case Study on Greyscale Raster Images. Entropy 2023, 25(12), 1591.

16. Shannon, C.E. A Mathematical Theory of Communication. Bell Syst Tech J. 1948, 27(3), 379–423.

17. Cover, T.M.; Thomas, J.A. Elements of Information Theory, 2nd ed.; Wiley: Hoboken, USA, 2006.

18. Burrows, M.; Wheeler, D.J. A block-sorting lossless data compression algorithm. Technical Report No. 124, Digital Systems Research Center, 1994.

19. Adjeroh, D.; Bell, T.; Mukherjee, A. The Burrows-Wheeler Transform: Data Compression, Suffix Arrays, and Pattern Matching, 2nd ed.; Springer Science + Business Media: New York, USA, 2008.

20. Abel, J. Post BWT stages of the Burrows-Wheeler compression algorithm. Softw Pract Exper. 2010, 40(9), 751–777.

21. Ryabko, B.Y. Data compression by means of a 'book stack'. Probl Inf Transm. 1980, 16(4), 265–269.

22. Salomon, D.; Motta, G. Handbook of Data Compression, 5th ed.; Springer: London, UK, 2010.

23. Arnavut, Z.; Magliveras, S.S. Block sorting and compression. In Proceedings of the IEEE Data Compression Conference, DCC'97, Snowbird, UT, USA, 25–27 March 1997; Storer, J.A., Cohn, M., Eds.; IEEE Computer Society Press: USA, 1997; pp. 181–190.

24. Sayood, K. Introduction to Data Compression, 4th ed.; Morgan Kaufmann, Elsevier: Waltham, USA, 2012.

25. Rivest, R. On self-organizing sequential search heuristics. Commun ACM. 1976, 19(2), 63–67.

26. Bentley, J.L.; Sleator, D.D.; Tarjan, R.E.; Wei, V.K. A Locally Adaptive Data Compression Scheme. Commun ACM. 1986, 29(4), 320–330.

27. Dorrigiv, R.; López-Ortiz, A.; Munro, J.I. An Application of Self-organizing Data Structures to Compression. In Experimental Algorithms, Proceedings of the 8th International Symposium on Experimental Algorithms, SEA 2009, Dortmund, Germany, 3–6 June 2009; Vahrenhold, J., Ed.; Lecture Notes in Computer Science 5526; Springer: Berlin, Germany, 2009; pp. 137–148.

28. Jelenković, P.J.; Radovanović, A. Least-recently-used caching with dependent requests. Theor Comput Sci. 2004, 326, 293–327.

29. Žalik, B.; Lukač, N. Chain code lossless compression using Move-To-Front transform and adaptive Run-Length Encoding. Signal Process Image Commun. 2014, 29(1), 96–106.

30. Arnavut, Z. Move-To-Front and Inversion Coding. In Proceedings of the IEEE Data Compression Conference, DCC 2000, Snowbird, UT, USA, 28–30 March 2000; Cohn, M., Storer, J.A., Eds.; IEEE Computer Society Press: USA, 2000; pp. 193–202.

31. Žalik, B.; Žalik, M.; Lipuš, B. On Move-To-Front Implementation. In Proceedings of the 27th International Conference on Circuits, Systems, Communications and Computers (CSCC), Rhodes Island, Greece, 19–22 July 2023; IEEE Computer Society: Los Alamitos, CA, USA, 2023; pp. 43–47.

32. Manber, U.; Myers, G. Suffix arrays: a new method for on-line string searches. In Proceedings of the First Annual ACM-SIAM Symposium on Discrete Algorithms (SODA '90), San Francisco, CA, USA, 22–24 January 1990; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1990; pp. 319–327.

33. Manber, U.; Myers, G. Suffix arrays: a new method for on-line string searches. SIAM J Comput. 1993, 22(5), 935–948.

34. Nong, G.; Zhang, S.; Chan, W.H. Two efficient algorithms for linear time suffix array construction. IEEE T Comput. 2011, 60(10), 1471–1484.

35. Kärkkäinen, J.; Sanders, P.; Burkhardt, S. Linear work suffix array construction. J ACM. 2006, 53(6), 918–936.

36. Li, Z.; Li, J.; Huo, H. Optimal In-Place Suffix Sorting. In String Processing and Information Retrieval, 25th International Symposium, SPIRE 2018, Lima, Peru, 9–11 October 2018; Gagie, T., Moffat, A., Navarro, G., Cuadros-Vargas, E., Eds.; Lecture Notes in Computer Science 11147; Springer: Cham, Switzerland, 2018; pp. 268–284.

37. Stroustrup, B. The C++ Programming Language, 4th ed.; Addison-Wesley: Upper Saddle River, NJ, USA, 2013.

38. Drepper, U. What every programmer should know about memory. Available online: https://people.freebsd.org/~lstewart/articles/cpumemory.pdf (accessed on 30 December 2023).

39. Cormen, T.H.; Leiserson, C.E.; Rivest, R.L.; Stein, C. Introduction to Algorithms, 3rd ed.; MIT Press: Cambridge, MA, USA, 2009.

40. Repnik, B.; Žalik, B. Six implementations of MwI transform. Available online: https://gemma.feri.um.si/projects/slovene-national-research-projects/j2-4458-data-compression-paradigm-based-on-omitting-self-evident-information/software/ (accessed on 15 January 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).