Submitted: 05 February 2024

Posted: 06 February 2024


Abstract

Keywords:

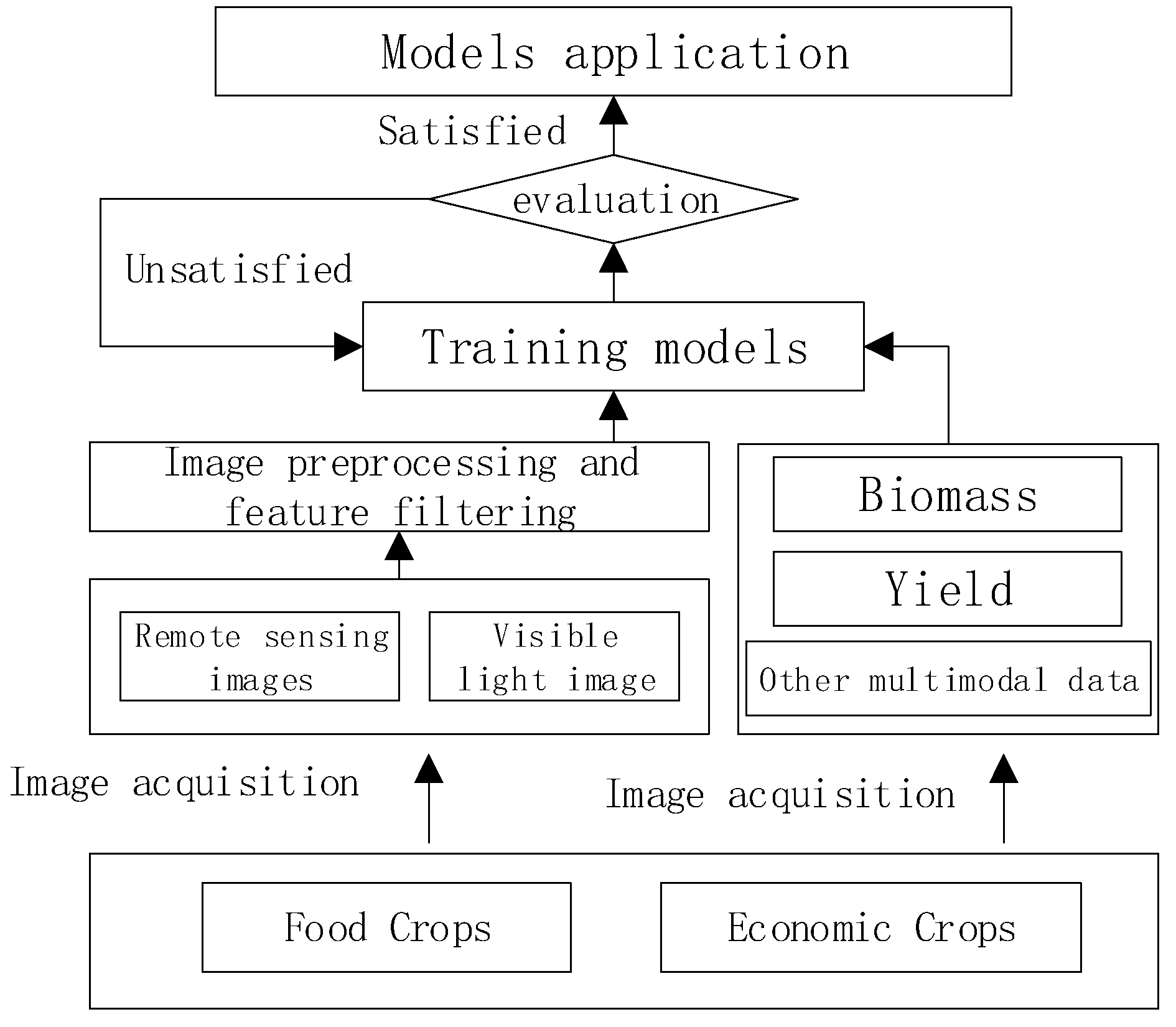

1. Introduction

2. Yield Calculation Indicators for Different Crops

3. The Application of Image Technology in Crop Yield Calculation

3.1. Yield Calculation by Remote Sensing Image

| Type | Name | Extraction method or description | Remarks |
|---|---|---|---|
| Vegetation indices | Normalized Difference Vegetation Index (NDVI) | (NIR - R) / (NIR + R) | Reflects plant coverage and health status |
| | Red-edge chlorophyll index (ReCl) | (NIR / RE) - 1 | Indicates canopy photosynthetic activity |
| | Enhanced Vegetation Index 2 (EVI2) | 2.5 * (NIR - R) / (NIR + 2.4 * R + 1) * (1 - ATB) | Accurately reflects vegetation growth |
| | Ratio Vegetation Index (RVI) | NIR / R | Sensitive indicator of green vegetation; can be used to estimate biomass |
| | Difference Vegetation Index (DVI) | NIR - R | Sensitive to soil background; useful for monitoring the vegetation ecological environment |
| | Perpendicular Vegetation Index (PVI) | sqrt((S_R - V_R)^2 + (S_NIR - V_NIR)^2) | S denotes soil reflectance and V denotes vegetation reflectance in the given band |
| | Transformed Vegetation Index (TVI) | sqrt(NDVI + 0.5) | Conversion of chlorophyll absorption |
| | Green Normalized Difference Vegetation Index (GNDVI) | (NIR - G) / (NIR + G) | Strongly correlated with nitrogen |
| | Normalized Difference Red Edge Index (NDRE) | (NIR - RE) / (NIR + RE) | RE denotes red-edge band reflectance |
| | Red Green Blue Vegetation Index (RGBVI) | (G^2 - B * R) / (G^2 + B * R) | Measures vegetation and surface red characteristics |
| | Green Leaf Index (GLI) | (2G - B - R) / (2G + B + R) | Measures the degree of surface vegetation coverage |
| | Excess Green (ExG) | 2G - R - B | Small-scale plant detection |
| | Excess Green minus Excess Red (ExGR) | ExG - ExR = 3G - 2.4R - B | Small-scale plant detection |
| | Excess Red (ExR) | 1.4R - G | Soil background extraction |
| | Visible Atmospherically Resistant Index (VARI) | (G - R) / (G + R - B) | Reduces the effects of illumination differences and atmospheric conditions |
| | Leaf Area Vegetation Index (LAI) | leaf area (m²) / ground area (m²) | Ratio of leaf area to the ground surface area it covers |
| | Atmospherically Resistant Vegetation Index (ARVI) | (NIR - (2R - B)) / (NIR + (2R - B)) | Used in areas with high atmospheric aerosol content |
| | Modified Soil Adjusted Vegetation Index (MSAVI) | (2NIR + 1 - sqrt((2NIR + 1)^2 - 8(NIR - R))) / 2 | Reduces the influence of soil on crop monitoring results |
| | Soil Adjusted Vegetation Index (SAVI) | (NIR - R) * (1 + L) / (NIR + R + L) | L is a soil-adjustment parameter that varies with vegetation density |
| | Optimized Soil Adjusted Vegetation Index (OSAVI) | (NIR - R) / (NIR + R + 0.16) | Uses NIR and red reflectance |
| | Normalized Difference Water Index (NDWI) | (B_G - B_NIR) / (B_G + B_NIR) | Used to study vegetation or soil moisture |
| | Vegetation Condition Index (VCI) | (NDVI - NDVI_min) / (NDVI_max - NDVI_min), where NDVI_max and NDVI_min are the maximum and minimum NDVI for the same period across years | Reflects vegetation growth status within the same phenological period |
| Biophysical parameters | Leaf Area Index (LAI) | Total leaf area / land area | Total leaf area per unit land area; closely related to crop transpiration, soil water balance, and canopy photosynthesis |
| | Fraction of Absorbed Photosynthetically Active Radiation (FPAR) | Fraction of incident photosynthetically active radiation (PAR) absorbed by the canopy | Important biophysical parameter commonly used to estimate vegetation biomass |
| Growth environment parameters | Temperature Condition Index (TCI) | (LST_max - LST) / (LST_max - LST_min), where LST_max and LST_min are the maximum and minimum land surface temperatures for the same period across years | Reflects land surface temperature conditions; widely used in drought retrieval and monitoring |
| | Vegetation Temperature Condition Index (VTCI) | (LST_max(NDVI) - LST) / (LST_max(NDVI) - LST_min(NDVI)), computed from the LST range among all pixels sharing the same NDVI value in a study area | Quantitatively characterizes crop water stress |
| | Temperature Vegetation Dryness Index (TVDI) | Retrieves surface soil moisture in vegetation-covered areas | Analyzes spatial variation in drought severity |
| | Perpendicular Drought Index (PDI) | Distance from a pixel to the line that passes through the coordinate origin perpendicular to the soil line, in the two-dimensional NIR-red reflectance scatter space | Commonly used to characterize the spatial distribution of soil moisture |
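Most of the spectral indices in the table above are simple band arithmetic. The Python sketch below implements a subset of them for scalar reflectance values. It makes several assumptions. Inputs are surface reflectances in [0, 1], and bands are named as in the table (NIR, R, G, B, RE). The function names are our own. SAVI uses the commonly cited default L = 0.5, because the table only states that L varies with vegetation density. EVI2 is written in its standard two-band form without the (1 - ATB) factor, since ATB is not defined here. RGBVI and ExGR follow the forms (G^2 - B*R)/(G^2 + B*R) and ExG - ExR. VCI and TCI are scaled to [0, 1] rather than the 0 to 100 range that some studies use.

```python
import math

# Assumed inputs: surface reflectance values in [0, 1] for the bands named in the
# table: NIR, R (red), G (green), B (blue), RE (red edge). Function names are ours.


def ndvi(nir, r):
    return (nir - r) / (nir + r)


def gndvi(nir, g):
    return (nir - g) / (nir + g)


def ndre(nir, re):
    return (nir - re) / (nir + re)


def recl(nir, re):
    # Red-edge chlorophyll index: NIR / RE - 1
    return nir / re - 1


def savi(nir, r, L=0.5):
    # L = 0.5 is an assumed default; L is meant to vary with vegetation density
    return (nir - r) * (1 + L) / (nir + r + L)


def osavi(nir, r):
    return (nir - r) / (nir + r + 0.16)


def msavi(nir, r):
    return (2 * nir + 1 - math.sqrt((2 * nir + 1) ** 2 - 8 * (nir - r))) / 2


def evi2(nir, r):
    # Standard two-band EVI2, without the (1 - ATB) factor listed in the table
    return 2.5 * (nir - r) / (nir + 2.4 * r + 1)


def arvi(nir, r, b):
    rb = 2 * r - b  # red reflectance corrected with the blue band
    return (nir - rb) / (nir + rb)


def vari(r, g, b):
    return (g - r) / (g + r - b)


def exg(r, g, b):
    return 2 * g - r - b


def exr(r, g):
    return 1.4 * r - g


def exgr(r, g, b):
    return exg(r, g, b) - exr(r, g)  # equals 3G - 2.4R - B


def gli(r, g, b):
    return (2 * g - r - b) / (2 * g + r + b)


def rgbvi(r, g, b):
    return (g * g - b * r) / (g * g + b * r)


def vci(ndvi_now, ndvi_min, ndvi_max):
    # Extremes taken over the same period across years; 0 = worst, 1 = best
    return (ndvi_now - ndvi_min) / (ndvi_max - ndvi_min)


def tci(lst_now, lst_min, lst_max):
    # Higher TCI means cooler than the historical maximum, i.e. less thermal stress
    return (lst_max - lst_now) / (lst_max - lst_min)
```

For image data, the same expressions would be applied pixel by pixel (or vectorized with an array library) after radiometric calibration. Plot-level features are then usually obtained by averaging each index over the pixels of a plot.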

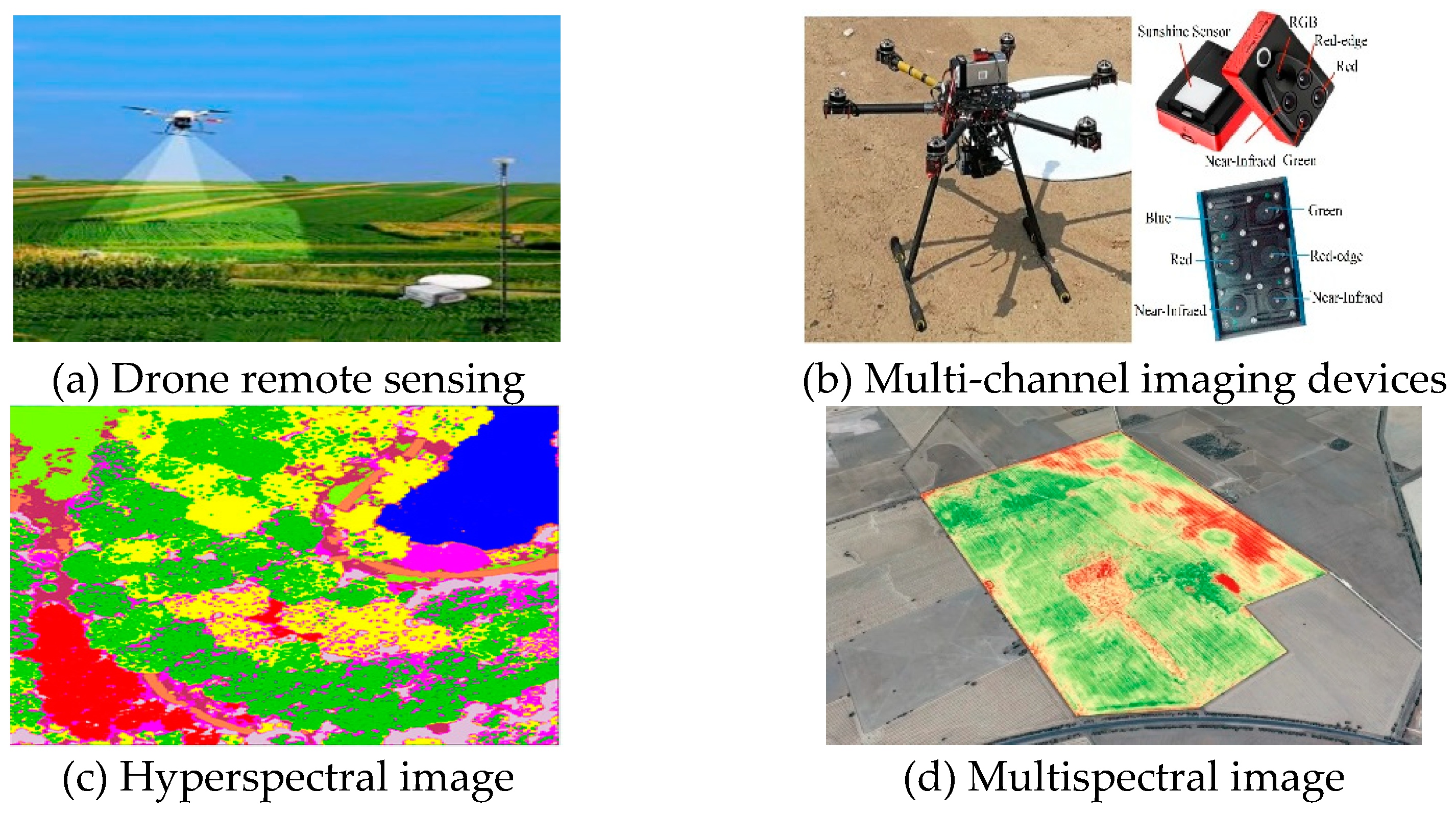

3.1.1. Yield Calculation by Low Altitude Remote Sensing Image

- Yield Calculation of Food Crops

- Yield Calculation of Economic Crops

| Crop | Author | Year | Task | Network framework and algorithms | Result |
|---|---|---|---|---|---|
| corn | Wei Yang[41] | 2021 | Predict the yield of corn | CNN | AP: 75.5% |
| | Monica F. Danilevicz[42] | 2021 | Predict the yield of corn | tab-DNN and sp-DNN | R²: 0.73 |
| | Chandan Kumar[43] | 2023 | Predict the yield of corn | LR, KNN, RF, SVR, DNN | R²: 0.84 |
| | Danyang Yu[44] | 2022 | Estimate biomass of corn | DCNN, MLR, RF, SVM | R²: 0.94 |
| | Ana Paula Marques Ramos[45] | 2020 | Predict the yield of corn | RF | R²: 0.78 |
| rice | Md. Suruj Mia[46] | 2023 | Predict the yield of rice | CNN | RMSPE: 14% |
| | Emily S. Bellis[47] | 2022 | Predict the yield of rice | 3D-CNN, 2D-CNN | RMSE: 8.8% |
| wheat | Chaofa Bian[48] | 2022 | Predict the yield of wheat | GPR | R²: 0.88 |
| | Shuaipeng Fei[36] | 2023 | Predict the yield of wheat | Ensemble ML algorithms | R²: 0.692 |
| | Zongpeng Li[56] | 2022 | Predict the yield of wheat | Ensemble ML algorithms | R²: 0.78 |
| | Yixiu Han[49] | 2022 | Estimate aboveground biomass (AGB) of wheat | GOA-XGB | R²: 0.855 |
| | Rui Li[57] | 2022 | Estimate the yield of wheat | RF | R²: 0.86 |
| | Xinbin Zhou[50] | 2021 | Calculate the yield and protein content of wheat | SVR, RF, and ANN | R²: 0.62 |
| | Yuanyuan Fu[54] | 2021 | Estimate biomass of wheat | LSSVM | R²: 0.87 |
| | Falv Wang[52] | 2022 | Estimate biomass of wheat | RF | R²: 0.97 |
| | Malini Roy Choudhury[53] | 2021 | Calculate the yield of wheat | ANN | R²: 0.88 |
| | Falv Wang[59] | 2023 | Predict the yield of wheat | MultimodalNet | R²: 0.7411 |
| | Ryoya Tanabe[55] | 2023 | Predict the yield of wheat | CNN | RMSE: 0.94 t ha⁻¹ |
| | Prakriti Sharma[51] | 2022 | Estimate biomass of oat | PLS, SVM, ANN, RF | r: 0.65 |
| | Alireza Sharifi[58] | 2020 | Calculate the yield of barley | GPR | R²: 0.84 |
| beans | Maitiniyazi Maimaitijiang[60] | 2020 | Predict the yield of soybean | DNN-F2 | R²: 0.72 |
| | Jing Zhou[61] | 2021 | Predict the yield of soybean | CNN | R²: 0.78 |
| | Paulo Eduardo Teodoro[35] | 2021 | Predict the yield of soybean | DL and ML | r: 0.44 |
| | Mohsen Yoosefzadeh-Najafabadi[62] | 2021 | Predict yield and biomass of soybean | DNN-SPEA2 | R²: 0.77 |
| | Mohsen Yoosefzadeh-Najafabadi[63] | 2021 | Predict the yield of soybean seed | RF | AP: 93% |
| | Yujie Shi[64] | 2022 | Predict AGB and LAI | SVM | R²: 0.811 |
| | Yishan Ji[65] | 2022 | Estimate plant height and yield of broad beans | SVM | R²: 0.7238 |
| | Yishan Ji[66] | 2023 | Predict biomass and yield of broad beans | Ensemble ML algorithms | R²: 0.854 |
| potato | Yang Liu[67] | 2022 | Estimate biomass of potatoes | SVM, RF, GPR | R²: 0.76 |
| | Chen Sun[68] | 2020 | Predict the yield of potato tubers | Ridge regression | R²: 0.63 |
| cotton | Weicheng Xu[69] | 2021 | Predict the yield of cotton | BP neural network | R²: 0.854 |
| sugarcane | Chiranjibi Poudyal[70] | 2022 | Predict component yield of sugarcane | GBRT | AP: 94% |
| | Romário Porto de Oliveira[71] | 2022 | Predict biometric parameters of sugarcane | RF | R²: 0.7 |
| spring tea | Zongtai He[72] | 2023 | Predict fresh yield of spring tea | PLMSVs | R²: 0.625 |
| alfalfa | Luwei Feng[73] | 2020 | Predict the yield of alfalfa | Ensemble ML algorithms | R²: 0.854 |
| meadow | Matthias Wengert[74] | 2022 | Predict the yield of meadow | CBR | R²: 0.87 |
| ryegrass | Joanna Pranga[75] | 2021 | Predict the yield of ryegrass | PLSR, RF, SVM | RMSE: 13.1% |
| red clover | Kai-Yun Li[76] | 2021 | Estimate the yield of red clover | ANN | R²: 0.90 |
| tomato | Kenichi Tatsumi[77] | 2021 | Predict biomass and yield of tomato | RF, RI, SVM | rMSE: 8.8% |
| grape | Rocío Ballesteros[78] | 2020 | Estimate the yield of the vineyard | ANN | RE: 21.8% |
| apple | Riqiang Chen[79] | 2022 | Predict the yield of apple trees | SVR, KNN | R²: 0.813 |
| almond | Minmeng Tang[80] | 2020 | Estimate the yield of almond | Improved CNN | R²: 0.96 |
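The Result column mixes several regression metrics (R², RMSE, relative RMSE, MAPE, Pearson's r). Reported values are therefore only comparable when both the metric and the validation scheme match. The minimal stdlib sketch below uses common textbook definitions. These are assumptions about what each study computed, because definitions vary. For example, some studies normalize RMSE by the observed range instead of the mean. Others report R² as the squared Pearson correlation, which equals 1 - SS_res/SS_tot only for an ordinary least-squares fit evaluated on its own training data.

```python
import math


def _mean(values):
    return sum(values) / len(values)


def r_squared(obs, pred):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    m = _mean(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - m) ** 2 for o in obs)
    return 1 - ss_res / ss_tot


def rmse(obs, pred):
    """Root mean square error, in the units of the observations."""
    return math.sqrt(_mean([(o - p) ** 2 for o, p in zip(obs, pred)]))


def rrmse(obs, pred):
    """RMSE as a percentage of the mean observed value (one common normalization)."""
    return 100 * rmse(obs, pred) / _mean(obs)


def mape(obs, pred):
    """Mean absolute percentage error; assumes no observed value is zero."""
    return 100 * _mean([abs((o - p) / o) for o, p in zip(obs, pred)])


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = _mean(x), _mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)
```

Because R² and r measure association while RMSE and MAPE measure error magnitude, a model can show a high r alongside a large bias. Reporting at least one metric from each family makes comparisons across studies more meaningful.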

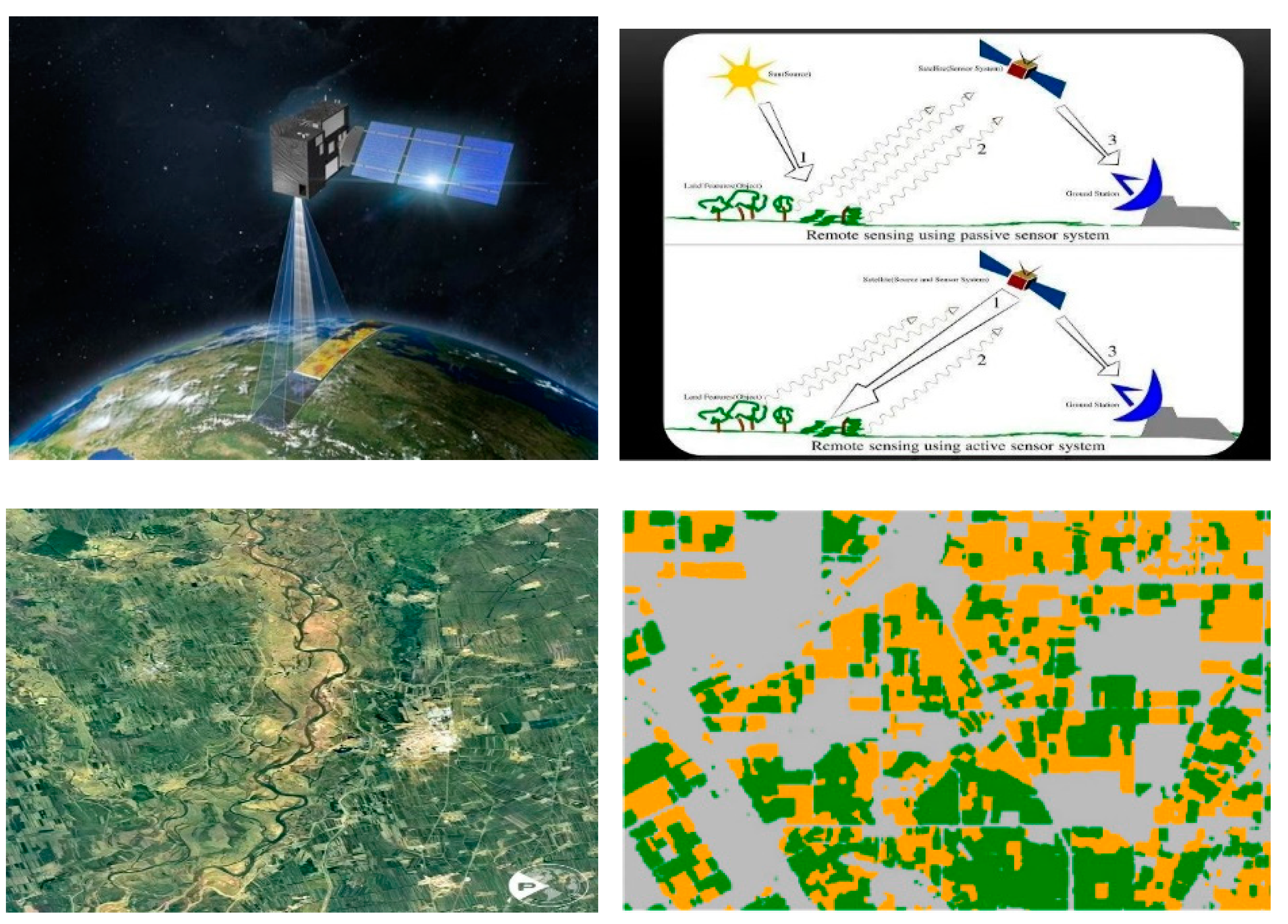

3.1.2. Yield Calculation by High Altitude Satellite Remote Sensing Image

| Crop | Author | Year | Task | Network framework and algorithms | Result |
|---|---|---|---|---|---|
| wheat | Maria Bebie[81] | 2022 | Predict the yield of wheat | RF, KNN, BR | R²: 0.91 |
| | Elisa Kamir[82] | 2020 | Predict the yield of wheat | SVM | R²: 0.77 |
| | Yuanyuan Liu[83] | 2022 | Predict the yield of wheat | SVR | R²: 0.87 |
| rice | Nguyen-Thanh Son[84] | 2022 | Predict the yield of rice | SVM, RF, ANN | MAPE: 3.5% |
| coffee tree | Carlos Alberto Matias de Abreu Júnior[85] | 2022 | Predict the yield of coffee trees | NN | R²: 0.82 |
| rubber | Yang Liu[86] | 2023 | Predict the yield of rubber | LR | R²: 0.80 |
| cotton | Patrick Filippi[87] | 2020 | Predict the yield of cotton | RF | LCCC: 0.65 |
| corn | Johann Desloires[88] | 2023 | Predict the yield of corn | Ensemble ML algorithms | R²: 0.42 |
| grain | Fan Liu[89] | 2023 | Predict the yield of grain | LSTM | R²: 0.989 |
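The cotton study in this table [87] reports Lin's concordance correlation coefficient (LCCC). Unlike Pearson's r, LCCC also penalizes systematic bias between predicted and observed yield. The minimal sketch below assumes population (1/n) moments, as in Lin's original estimator. The function name is ours.

```python
def lin_ccc(obs, pred):
    """Lin's concordance correlation coefficient between observed and predicted values.

    CCC = 2 * cov / (var_obs + var_pred + (mean_obs - mean_pred) ** 2),
    with all moments computed using 1/n.
    """
    n = len(obs)
    mo = sum(obs) / n
    mp = sum(pred) / n
    var_o = sum((o - mo) ** 2 for o in obs) / n
    var_p = sum((p - mp) ** 2 for p in pred) / n
    cov = sum((o - mo) * (p - mp) for o, p in zip(obs, pred)) / n
    return 2 * cov / (var_o + var_p + (mo - mp) ** 2)
```

For example, predictions that are all offset from the observations by the same constant have a Pearson r of 1. Their LCCC is nevertheless below 1, because the squared difference of means enters the denominator.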

3.2. Yield Calculation by Visible Light Image

3.2.1. Yield Calculation by Traditional Image

| Crop | Author | Year | Task | Network framework and algorithms | Result |
|---|---|---|---|---|---|
| kiwifruit | Jafar Massah[93] | 2021 | Count fruit | SVM | R²: 0.96 |
| | Youming Zhang[94] | 2022 | Calculate the leaf area index of kiwifruit | RFR | R²: 0.972 |
| corn | Youming Zhang[95] | 2020 | Predict the yield of corn | BP, SVM, RF, ELM | R²: 0.570 |
| | Meina Zhang[96] | 2020 | Estimate the yield of corn | Regression analysis | MAPE: 15.1% |
| apple | Amine Saddik[97] | 2023 | Count apple fruit | Raspberry | AP: 97.22% |
| Toona sinensis | Wenjian Liu[98] | 2021 | Predict aboveground biomass | MLR | R²: 0.83 |
| cotton | Javier Rodriguez-Sanchez[99] | 2022 | Estimate the yield of cotton | SVM | R²: 0.93 |
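Several traditional-image studies in this table relate a hand-crafted color feature to yield or biomass through regression analysis or MLR. The sketch below shows the simplest version of that idea: one plot-level feature and one ordinary least-squares line. It rests on illustrative assumptions. The feature is the mean Excess Green (ExG) over a plot, computed after converting each pixel to chromatic coordinates (r = R/(R+G+B), and so on). The function names are hypothetical. The cited studies may use different features, normalizations, and models.

```python
def plot_mean_exg(pixels):
    """Mean Excess Green (ExG = 2g - r - b) over one plot.

    pixels: iterable of (R, G, B) digital numbers. Each pixel is converted to
    chromatic coordinates r = R/(R+G+B), etc. (an assumed normalization).
    """
    total, count = 0.0, 0
    for R, G, B in pixels:
        s = R + G + B
        if s == 0:
            continue  # skip pure-black pixels to avoid division by zero
        r, g, b = R / s, G / s, B / s
        total += 2 * g - r - b
        count += 1
    return total / count if count else float("nan")


def fit_simple_ols(x, y):
    """Least-squares fit of y = slope * x + intercept; returns (slope, intercept)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def predict(slope, intercept, x):
    """Predicted yield for a plot with feature value x."""
    return slope * x + intercept
```

In practice, the fitted line would be evaluated on plots held out from fitting, using metrics such as R² or MAPE. MLR extends the same idea to several color, texture, and morphological features at once.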

3.2.2. Yield Calculation by Deep Learning Image

- Yield Calculation of Food Crops

- Yield Calculation of Economic Crops

| Crop | Author | Year | Task | Network framework and algorithms | Result |
|---|---|---|---|---|---|
| corn | Canek Mota-Delfin[107] | 2022 | Detect and count corn plants | YOLOv4, YOLOv5 series | mAP: 73.1% |
| | Yunling Liu[108] | 2020 | Detect and count corn ears | Faster R-CNN | AP: 94.99% |
| | Honglei Jia[109] | 2020 | Detect and count corn ears | VGG16 | mAP: 97.02% |
| wheat | Yixin Guo[110] | 2022 | Detect and count wheat ears | SlypNet | mAP: 99% |
| | Petteri Nevavuori[111] | 2020 | Predict wheat yield | 3D-CNN | R²: 0.962 |
| | Ruicheng Qiu[112] | 2022 | Detect and count wheat ears | DCNN | R²: 0.84 |
| | Yao Zhaosheng[113] | 2022 | Rapidly detect wheat spikes | YOLOX-m | AP: 88.03% |
| | Hecang Zang[114] | 2022 | Detect and count wheat ears | YOLOv5s | AP: 71.61% |
| | Fengkui Zhao[115] | 2022 | Detect and count wheat ears | YOLOv4 | R²: 0.973 |
| sorghum | Zhe Lin[116] | 2020 | Detect and count sorghum spikes | U-Net | AP: 95.5% |
| rice | Yixin Guo[117] | 2021 | Calculate rice seed setting rate (RSSR) | YOLOv4 | mAP: 99.43% |
| | Jingye Han[118] | 2022 | Estimate rice yield | CNN | R²: 0.646 |
| kiwifruit | Zhongxian Zhou[119] | 2020 | Count fruit | MobileNetV2, InceptionV3 | TDR: 89.7% |
| mango | Juntao Xiong[120] | 2020 | Detect and count mangoes | YOLOv2 | Error rate: 1.1% |
| grape | Thiago T. Santos[121] | 2020 | Detect and count grape clusters | Mask R-CNN, YOLOv3 | F1-score: 0.91 |
| | Lei Shen[122] | 2023 | Detect and count grape clusters | YOLOv5s | mAP: 82.3% |
| | Hubert Cecotti[123] | 2020 | Detect grapes | ResNet | mAP: 99% |
| | Fernando Palacios[124] | 2022 | Detect and count grape berries | SegNet, SVR | R²: 0.83 |
| | Shan Chen[125] | 2021 | Segment grape clusters | PSPNet | PA: 95.73% |
| | Shan Chen[126] | 2022 | Detect and count grape clusters | Object detection, CNN, Transformer | MAPE: 18% |
| | Marco Sozzi[127] | 2022 | Detect and count grape clusters | YOLO | MAPE: 13.3% |
| | Fernando Palacios[128] | 2020 | Detect and count grapevine flowers | SegNet | R²: 0.70 |
| apple | Lijuan Sun[129] | 2022 | Detect and count apples | YOLOv5-PRE | mAP: 94.03% |
| | Orly Enrique Apolo-Apolo[130] | 2020 | Detect and count apples | Faster R-CNN | R²: 0.86 |
| weed | Longzhe Quan[132] | 2021 | Estimate aboveground fresh weight of weeds | YOLOv4 | mAP: 75.34% |
| capsicum | Taewon Moon[133] | 2022 | Estimate fresh weight and leaf area | ConvNet | R²: 0.95 |
| soybean | Wei Lu[134] | 2022 | Predict soybean yield | YOLOv3, GRNN | mAP: 97.43% |
| | Luis G. Riera[135] | 2021 | Count soybean pods | RetinaNet | mAP: 0.71 |
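Many deep learning studies in this table count organs (ears, fruit, bunches, pods) by detecting them and report detection quality as AP, mAP, or F1-score. The stdlib sketch below shows how these quantities are typically derived from bounding boxes, under stated assumptions. Boxes are (x1, y1, x2, y2). A detection counts as a true positive at IoU >= 0.5. Matching is a simplified greedy scheme that processes predictions in descending score order. AP uses all-point interpolation, which is one of several variants in use; COCO-style evaluation, for instance, samples 101 recall points and averages over IoU thresholds. mAP would be the mean of AP over classes. All function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def best_unmatched(box, gts, matched, thr):
    """Index of the unmatched ground-truth box with the highest IoU >= thr, or -1."""
    best_j, best_v = -1, thr
    for j, gt in enumerate(gts):
        if j in matched:
            continue
        v = iou(box, gt)
        if v >= best_v:
            best_j, best_v = j, v
    return best_j


def ranked_hits(preds, gts, thr=0.5):
    """Greedily match (score, box) predictions, highest score first; 1 = TP, 0 = FP."""
    matched, hits = set(), []
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        j = best_unmatched(box, gts, matched, thr)
        if j >= 0:
            matched.add(j)
        hits.append(1 if j >= 0 else 0)
    return hits


def precision_recall_f1(hits, n_gt):
    """Precision, recall, and F1 for all predictions in `hits`."""
    tp = sum(hits)
    p = tp / len(hits) if hits else 0.0
    r = tp / n_gt if n_gt else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(hits, n_gt):
    """All-point interpolated AP from score-ranked hits."""
    precisions, recalls, tp = [], [], 0
    for k, h in enumerate(hits, start=1):
        tp += h
        precisions.append(tp / k)
        recalls.append(tp / n_gt)
    # Interpolate: precision at each rank = max precision at any later rank
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap
```

For counting, the estimated count is simply the number of detections kept above a chosen confidence threshold. Counting accuracy (for example, the MAPE or R² between estimated and manual counts) can therefore be good even when AP is moderate, because false positives and missed detections may partly cancel.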

4. Discussion

| Image type | Acquisition method | Image preprocessing | Extracted indicators | Main advantages | Main disadvantages | Representative algorithms |
|---|---|---|---|---|---|---|
| Remote sensing images | Low-altitude drone equipped with multispectral, visible-light, thermal imaging, and hyperspectral cameras | Size correction; multi-channel image fusion; projection conversion; resampling | Surface reflectance; multispectral vegetation indices; biophysical parameters; growth environment parameters | Multi-channel images containing temporal, spatial, temperature, and band information; multi-channel fusion provides rich information | Spatiotemporal and band attributes are difficult to exploit fully; the long imaging distance makes the approach suitable for yield prediction over large land parcels, but with low accuracy; easily affected by weather | ML, ANN, CNN-LSTM, 3D-CNN |
| | Satellite: low spatial and temporal resolution, long revisit cycle, and mixed pixels | | | | | |
| Visible light images | Digital camera | Resizing; rotation; cropping; Gaussian blur; color enhancement; brightening; noise reduction, etc.; annotation; dataset partitioning | Color indices; texture indices; morphological indices | Images are easy to obtain at low cost | Only three bands (red, green, and blue), so the information content is limited | Linear regression, ML |
| | | | | | | YOLO, ResNet, SSD, Mask R-CNN |
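For visible-light images, the preprocessing column lists geometric operations such as cropping alongside annotation and dataset partitioning. When images carry bounding-box annotations, the boxes must be transformed together with the pixels. The stdlib sketch below illustrates two of these steps under simplifying assumptions. Images are represented as lists of pixel rows. Boxes are (x1, y1, x2, y2) in pixel-edge coordinates. The 70/15/15 split ratio and the seed are arbitrary choices, and the function names are hypothetical.

```python
import random


def crop_with_boxes(image, boxes, x0, y0, x1, y1):
    """Crop an image to columns [x0, x1) and rows [y0, y1) and update its boxes.

    image: list of pixel rows; boxes: (x1, y1, x2, y2) in pixel-edge coordinates.
    Boxes are shifted into the crop frame and clipped to it; boxes left with zero
    area (entirely outside the crop) are dropped.
    """
    cropped = [row[x0:x1] for row in image[y0:y1]]
    w, h = x1 - x0, y1 - y0
    kept = []
    for bx1, by1, bx2, by2 in boxes:
        nx1, nx2 = min(max(bx1 - x0, 0), w), min(max(bx2 - x0, 0), w)
        ny1, ny2 = min(max(by1 - y0, 0), h), min(max(by2 - y0, 0), h)
        if nx2 > nx1 and ny2 > ny1:
            kept.append((nx1, ny1, nx2, ny2))
    return cropped, kept


def split_dataset(items, train=0.7, val=0.15, seed=42):
    """Shuffle with a fixed seed and split into train/validation/test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])
```

A fixed seed makes the partition reproducible. For field data, splitting by plot, field, or season rather than by image avoids near-duplicate images appearing in both training and test sets. Some pipelines also discard boxes that keep only a small fraction of their original area after cropping.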

5. Conclusions and Outlooks

References

- Paudel, D.; Boogaard, H.; de Wit, A.; Janssen, S.; Osinga, S.; Pylianidis, C.; Athanasiadis, I.N. Machine learning for large-scale crop yield forecasting. Agricultural Systems 2021, 187. [CrossRef]

- Zhu, Y.; Wu, S.; Qin, M.; Fu, Z.; Gao, Y.; Wang, Y.; Du, Z. A deep learning crop model for adaptive yield estimation in large areas. International Journal of Applied Earth Observation and Geoinformation 2022, 110. [CrossRef]

- Akhtar, M.N.; Ansari, E.; Alhady, S.S.N.; Abu Bakar, E. Leveraging on Advanced Remote Sensing- and Artificial Intelligence-Based Technologies to Manage Palm Oil Plantation for Current Global Scenario: A Review. Agriculture-Basel 2023, 13. [CrossRef]

- Torres-Sánchez, J.; Souza, J.; di Gennaro, S.F.; Mesas-Carrascosa, F.J. Editorial: Fruit detection and yield prediction on woody crops using data from unmanned aerial vehicles. Frontiers in Plant Science 2022, 13. [CrossRef]

- Wen, T.; Li, J.-H.; Wang, Q.; Gao, Y.-Y.; Hao, G.-F.; Song, B.-A. Thermal imaging: The digital eye facilitates high-throughput phenotyping traits of plant growth and stress responses. Science of the Total Environment 2023, 899. [CrossRef]

- Farjon, G.; Huijun, L.; Edan, Y. Deep-learning-based counting methods, datasets, and applications in agriculture: a review. Precision Agriculture 2023, 24, 1683-1711. [CrossRef]

- Rashid, M.; Bari, B.S.; Yusup, Y.; Kamaruddin, M.A.; Khan, N. A Comprehensive Review of Crop Yield Prediction Using Machine Learning Approaches With Special Emphasis on Palm Oil Yield Prediction. IEEE Access 2021, 9, 63406-63439. [CrossRef]

- Attri, I.; Awasthi, L.K.; Sharma, T.P. Machine learning in agriculture: a review of crop management applications. Multimedia Tools and Applications 2023. [CrossRef]

- Di, Y.; Gao, M.; Feng, F.; Li, Q.; Zhang, H. A New Framework for Winter Wheat Yield Prediction Integrating Deep Learning and Bayesian Optimization. Agronomy 2022, 12. [CrossRef]

- Teixeira, I.; Morais, R.; Sousa, J.J.; Cunha, A. Deep Learning Models for the Classification of Crops in Aerial Imagery: A Review. Agriculture-Basel 2023, 13. [CrossRef]

- Bali, N.; Singla, A. Deep Learning Based Wheat Crop Yield Prediction Model in Punjab Region of North India. Applied Artificial Intelligence 2021, 35, 1304-1328. [CrossRef]

- van Klompenburg, T.; Kassahun, A.; Catal, C. Crop yield prediction using machine learning: A systematic literature review. Computers and Electronics in Agriculture 2020, 177. [CrossRef]

- Thakur, A.; Venu, S.; Gurusamy, M. An extensive review on agricultural robots with a focus on their perception systems. Computers and Electronics in Agriculture 2023, 212. [CrossRef]

- Abebe, A.M.; Kim, Y.; Kim, J.; Kim, S.L.; Baek, J. Image-Based High-Throughput Phenotyping in Horticultural Crops. Plants-Basel 2023, 12. [CrossRef]

- Alkhaled, A.; Townsend, P.A.; Wang, Y. Remote Sensing for Monitoring Potato Nitrogen Status. American Journal of Potato Research 2023, 100, 1-14. [CrossRef]

- Pokhariyal, S.; Patel, N.R.; Govind, A. Machine Learning-Driven Remote Sensing Applications for Agriculture in India-A Systematic Review. Agronomy-Basel 2023, 13. [CrossRef]

- Joshi, A.; Pradhan, B.; Gite, S.; Chakraborty, S. Remote-Sensing Data and Deep-Learning Techniques in Crop Mapping and Yield Prediction: A Systematic Review. Remote Sensing 2023, 15. [CrossRef]

- Muruganantham, P.; Wibowo, S.; Grandhi, S.; Samrat, N.H.; Islam, N. A Systematic Literature Review on Crop Yield Prediction with Deep Learning and Remote Sensing. Remote Sensing 2022, 14. [CrossRef]

- Ren, Y.; Li, Q.; Du, X.; Zhang, Y.; Wang, H.; Shi, G.; Wei, M. Analysis of Corn Yield Prediction Potential at Various Growth Phases Using a Process-Based Model and Deep Learning. Plants 2023, 12. [CrossRef]

- Zhou, S.; Xu, L.; Chen, N. Rice Yield Prediction in Hubei Province Based on Deep Learning and the Effect of Spatial Heterogeneity. Remote Sensing 2023, 15. [CrossRef]

- Darra, N.; Anastasiou, E.; Kriezi, O.; Lazarou, E.; Kalivas, D.; Fountas, S. Can Yield Prediction Be Fully Digitilized? A Systematic Review. Agronomy-Basel 2023, 13. [CrossRef]

- Shahi, T.B.; Xu, C.-Y.; Neupane, A.; Guo, W. Recent Advances in Crop Disease Detection Using UAV and Deep Learning Techniques. Remote Sensing 2023, 15. [CrossRef]

- Istiak, A.; Syeed, M.M.M.; Hossain, S.; Uddin, M.F.; Hasan, M.; Khan, R.H.; Azad, N.S. Adoption of Unmanned Aerial Vehicle (UAV) imagery in agricultural management: A systematic literature review. Ecological Informatics 2023, 78. [CrossRef]

- Fajardo, M.; Whelan, B.M. Within-farm wheat yield forecasting incorporating off-farm information. Precision Agriculture 2021, 22, 569-585. [CrossRef]

- Yli-Heikkilä, M.; Wittke, S.; Luotamo, M.; Puttonen, E.; Sulkava, M.; Pellikka, P.; Heiskanen, J.; Klami, A. Scalable Crop Yield Prediction with Sentinel-2 Time Series and Temporal Convolutional Network. Remote Sensing 2022, 14. [CrossRef]

- Safdar, L.B.; Dugina, K.; Saeidan, A.; Yoshicawa, G.V.; Caporaso, N.; Gapare, B.; Umer, M.J.; Bhosale, R.A.; Searle, I.R.; Foulkes, M.J.; et al. Reviving grain quality in wheat through non-destructive phenotyping techniques like hyperspectral imaging. Food and Energy Security 2023, 12. [CrossRef]

- He, L.; Fang, W.; Zhao, G.; Wu, Z.; Fu, L.; Li, R.; Majeed, Y.; Dhupia, J. Fruit yield prediction and estimation in orchards: A state-of-the-art comprehensive review for both direct and indirect methods. Computers and Electronics in Agriculture 2022, 195. [CrossRef]

- Tende, I.G.; Aburada, K.; Yamaba, H.; Katayama, T.; Okazaki, N. Development and Evaluation of a Deep Learning Based System to Predict District-Level Maize Yields in Tanzania. Agriculture 2023, 13. [CrossRef]

- Leukel, J.; Zimpel, T.; Stumpe, C. Machine learning technology for early prediction of grain yield at the field scale: A systematic review. Computers and Electronics in Agriculture 2023, 207. [CrossRef]

- Elangovan, A.; Duc, N.T.; Raju, D.; Kumar, S.; Singh, B.; Vishwakarma, C.; Gopala Krishnan, S.; Ellur, R.K.; Dalal, M.; Swain, P.; et al. Imaging Sensor-Based High-Throughput Measurement of Biomass Using Machine Learning Models in Rice. Agriculture 2023, 13. [CrossRef]

- Hassanzadeh, A.; Zhang, F.; van Aardt, J.; Murphy, S.P.; Pethybridge, S.J. Broadacre Crop Yield Estimation Using Imaging Spectroscopy from Unmanned Aerial Systems (UAS): A Field-Based Case Study with Snap Bean. Remote Sensing 2021, 13. [CrossRef]

- Sanaeifar, A.; Yang, C.; Guardia, M.d.l.; Zhang, W.; Li, X.; He, Y. Proximal hyperspectral sensing of abiotic stresses in plants. Science of the Total Environment 2023, 861. [CrossRef]

- Li, K.-Y.; Sampaio de Lima, R.; Burnside, N.G.; Vahtmäe, E.; Kutser, T.; Sepp, K.; Cabral Pinheiro, V.H.; Yang, M.-D.; Vain, A.; Sepp, K. Toward Automated Machine Learning-Based Hyperspectral Image Analysis in Crop Yield and Biomass Estimation. Remote Sensing 2022, 14. [CrossRef]

- Elavarasan, D.; Vincent, P.M.D. Crop Yield Prediction Using Deep Reinforcement Learning Model for Sustainable Agrarian Applications. IEEE Access 2020, 8, 86886-86901. [CrossRef]

- Teodoro, P.E.; Teodoro, L.P.R.; Baio, F.H.R.; da Silva Junior, C.A.; dos Santos, R.G.; Ramos, A.P.M.; Pinheiro, M.M.F.; Osco, L.P.; Gonçalves, W.N.; Carneiro, A.M.; et al. Predicting Days to Maturity, Plant Height, and Grain Yield in Soybean: A Machine and Deep Learning Approach Using Multispectral Data. Remote Sensing 2021, 13. [CrossRef]

- Fei, S.; Hassan, M.A.; Xiao, Y.; Su, X.; Chen, Z.; Cheng, Q.; Duan, F.; Chen, R.; Ma, Y. UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precision Agriculture 2022, 24, 187-212. [CrossRef]

- Ayankojo, I.T.; Thorp, K.R.; Thompson, A.L. Advances in the Application of Small Unoccupied Aircraft Systems (sUAS) for High-Throughput Plant Phenotyping. Remote Sensing 2023, 15. [CrossRef]

- Zualkernan, I.; Abuhani, D.A.; Hussain, M.H.; Khan, J.; ElMohandes, M. Machine Learning for Precision Agriculture Using Imagery from Unmanned Aerial Vehicles (UAVs): A Survey. Drones 2023, 7. [CrossRef]

- Zhang, Z.; Zhu, L. A Review on Unmanned Aerial Vehicle Remote Sensing: Platforms, Sensors, Data Processing Methods, and Applications. Drones 2023, 7. [CrossRef]

- Gonzalez-Sanchez, A.; Frausto-Solis, J.; Ojeda-Bustamante, W. Predictive ability of machine learning methods for massive crop yield prediction. Spanish Journal of Agricultural Research 2014, 12. [CrossRef]

- Yang, W.; Nigon, T.; Hao, Z.; Dias Paiao, G.; Fernández, F.G.; Mulla, D.; Yang, C. Estimation of corn yield based on hyperspectral imagery and convolutional neural network. Computers and Electronics in Agriculture 2021, 184. [CrossRef]

- Danilevicz, M.F.; Bayer, P.E.; Boussaid, F.; Bennamoun, M.; Edwards, D. Maize Yield Prediction at an Early Developmental Stage Using Multispectral Images and Genotype Data for Preliminary Hybrid Selection. Remote Sensing 2021, 13. [CrossRef]

- Kumar, C.; Mubvumba, P.; Huang, Y.; Dhillon, J.; Reddy, K. Multi-Stage Corn Yield Prediction Using High-Resolution UAV Multispectral Data and Machine Learning Models. Agronomy 2023, 13. [CrossRef]

- Yu, D.; Zha, Y.; Sun, Z.; Li, J.; Jin, X.; Zhu, W.; Bian, J.; Ma, L.; Zeng, Y.; Su, Z. Deep convolutional neural networks for estimating maize above-ground biomass using multi-source UAV images: a comparison with traditional machine learning algorithms. Precision Agriculture 2022, 24, 92-113. [CrossRef]

- Marques Ramos, A.P.; Prado Osco, L.; Elis Garcia Furuya, D.; Nunes Gonçalves, W.; Cordeiro Santana, D.; Pereira Ribeiro Teodoro, L.; Antonio da Silva Junior, C.; Fernando Capristo-Silva, G.; Li, J.; Henrique Rojo Baio, F.; et al. A random forest ranking approach to predict yield in maize with uav-based vegetation spectral indices. Computers and Electronics in Agriculture 2020, 178. [CrossRef]

- Mia, M.S.; Tanabe, R.; Habibi, L.N.; Hashimoto, N.; Homma, K.; Maki, M.; Matsui, T.; Tanaka, T.S.T. Multimodal Deep Learning for Rice Yield Prediction Using UAV-Based Multispectral Imagery and Weather Data. Remote Sensing 2023, 15. [CrossRef]

- Bellis, E.S.; Hashem, A.A.; Causey, J.L.; Runkle, B.R.K.; Moreno-García, B.; Burns, B.W.; Green, V.S.; Burcham, T.N.; Reba, M.L.; Huang, X. Detecting Intra-Field Variation in Rice Yield With Unmanned Aerial Vehicle Imagery and Deep Learning. Frontiers in Plant Science 2022, 13. [CrossRef]

- Bian, C.; Shi, H.; Wu, S.; Zhang, K.; Wei, M.; Zhao, Y.; Sun, Y.; Zhuang, H.; Zhang, X.; Chen, S. Prediction of Field-Scale Wheat Yield Using Machine Learning Method and Multi-Spectral UAV Data. Remote Sensing 2022, 14. [CrossRef]

- Han, Y.; Tang, R.; Liao, Z.; Zhai, B.; Fan, J. A Novel Hybrid GOA-XGB Model for Estimating Wheat Aboveground Biomass Using UAV-Based Multispectral Vegetation Indices. Remote Sensing 2022, 14. [CrossRef]

- Zhou, X.; Kono, Y.; Win, A.; Matsui, T.; Tanaka, T.S.T. Predicting within-field variability in grain yield and protein content of winter wheat using UAV-based multispectral imagery and machine learning approaches. Plant Production Science 2020, 24, 137-151. [CrossRef]

- Sharma, P.; Leigh, L.; Chang, J.; Maimaitijiang, M.; Caffé, M. Above-Ground Biomass Estimation in Oats Using UAV Remote Sensing and Machine Learning. Sensors 2022, 22. [CrossRef]

- Wang, F.; Yang, M.; Ma, L.; Zhang, T.; Qin, W.; Li, W.; Zhang, Y.; Sun, Z.; Wang, Z.; Li, F.; et al. Estimation of Above-Ground Biomass of Winter Wheat Based on Consumer-Grade Multi-Spectral UAV. Remote Sensing 2022, 14. [CrossRef]

- Roy Choudhury, M.; Das, S.; Christopher, J.; Apan, A.; Chapman, S.; Menzies, N.W.; Dang, Y.P. Improving Biomass and Grain Yield Prediction of Wheat Genotypes on Sodic Soil Using Integrated High-Resolution Multispectral, Hyperspectral, 3D Point Cloud, and Machine Learning Techniques. Remote Sensing 2021, 13. [CrossRef]

- Fu, Y.; Yang, G.; Song, X.; Li, Z.; Xu, X.; Feng, H.; Zhao, C. Improved Estimation of Winter Wheat Aboveground Biomass Using Multiscale Textures Extracted from UAV-Based Digital Images and Hyperspectral Feature Analysis. Remote Sensing 2021, 13. [CrossRef]

- Tanabe, R.; Matsui, T.; Tanaka, T.S.T. Winter wheat yield prediction using convolutional neural networks and UAV-based multispectral imagery. Field Crops Research 2023, 291. [CrossRef]

- Li, Z.; Chen, Z.; Cheng, Q.; Duan, F.; Sui, R.; Huang, X.; Xu, H. UAV-Based Hyperspectral and Ensemble Machine Learning for Predicting Yield in Winter Wheat. Agronomy 2022, 12. [CrossRef]

- Li, R.; Wang, D.; Zhu, B.; Liu, T.; Sun, C.; Zhang, Z. Estimation of grain yield in wheat using source–sink datasets derived from RGB and thermal infrared imaging. Food and Energy Security 2022, 12. [CrossRef]

- Sharifi, A. Yield prediction with machine learning algorithms and satellite images. Journal of the Science of Food and Agriculture 2020, 101, 891-896. [CrossRef]

- Ma, J.; Liu, B.; Ji, L.; Zhu, Z.; Wu, Y.; Jiao, W. Field-scale yield prediction of winter wheat under different irrigation regimes based on dynamic fusion of multimodal UAV imagery. International Journal of Applied Earth Observation and Geoinformation 2023, 118. [CrossRef]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sensing of Environment 2020, 237. [CrossRef]

- Zhou, J.; Zhou, J.; Ye, H.; Ali, M.L.; Chen, P.; Nguyen, H.T. Yield estimation of soybean breeding lines under drought stress using unmanned aerial vehicle-based imagery and convolutional neural network. Biosystems Engineering 2021, 204, 90-103. [CrossRef]

- Yoosefzadeh-Najafabadi, M.; Tulpan, D.; Eskandari, M. Using Hybrid Artificial Intelligence and Evolutionary Optimization Algorithms for Estimating Soybean Yield and Fresh Biomass Using Hyperspectral Vegetation Indices. Remote Sensing 2021, 13. [CrossRef]

- Yoosefzadeh-Najafabadi, M.; Earl, H.J.; Tulpan, D.; Sulik, J.; Eskandari, M. Application of Machine Learning Algorithms in Plant Breeding: Predicting Yield From Hyperspectral Reflectance in Soybean. Frontiers in Plant Science 2021, 11. [CrossRef]

- Shi, Y.; Gao, Y.; Wang, Y.; Luo, D.; Chen, S.; Ding, Z.; Fan, K. Using Unmanned Aerial Vehicle-Based Multispectral Image Data to Monitor the Growth of Intercropping Crops in Tea Plantation. Frontiers in Plant Science 2022, 13. [CrossRef]

- Ji, Y.; Chen, Z.; Cheng, Q.; Liu, R.; Li, M.; Yan, X.; Li, G.; Wang, D.; Fu, L.; Ma, Y.; et al. Estimation of plant height and yield based on UAV imagery in faba bean (Vicia faba L.). Plant Methods 2022, 18. [CrossRef]

- Ji, Y.; Liu, R.; Xiao, Y.; Cui, Y.; Chen, Z.; Zong, X.; Yang, T. Faba bean above-ground biomass and bean yield estimation based on consumer-grade unmanned aerial vehicle RGB images and ensemble learning. Precision Agriculture 2023, 24, 1439-1460. [CrossRef]

- Liu, Y.; Feng, H.; Yue, J.; Fan, Y.; Jin, X.; Zhao, Y.; Song, X.; Long, H.; Yang, G. Estimation of Potato Above-Ground Biomass Using UAV-Based Hyperspectral images and Machine-Learning Regression. Remote Sensing 2022, 14. [CrossRef]

- Sun, C.; Feng, L.; Zhang, Z.; Ma, Y.; Crosby, T.; Naber, M.; Wang, Y. Prediction of End-Of-Season Tuber Yield and Tuber Set in Potatoes Using In-Season UAV-Based Hyperspectral Imagery and Machine Learning. Sensors 2020, 20. [CrossRef]

- Xu, W.; Chen, P.; Zhan, Y.; Chen, S.; Zhang, L.; Lan, Y. Cotton yield estimation model based on machine learning using time series UAV remote sensing data. International Journal of Applied Earth Observation and Geoinformation 2021, 104. [CrossRef]

- Poudyal, C.; Costa, L.F.; Sandhu, H.; Ampatzidis, Y.; Odero, D.C.; Arbelo, O.C.; Cherry, R.H. Sugarcane yield prediction and genotype selection using unmanned aerial vehicle-based hyperspectral imaging and machine learning. Agronomy Journal 2022, 114, 2320-2333. [CrossRef]

- de Oliveira, R.P.; Barbosa Júnior, M.R.; Pinto, A.A.; Oliveira, J.L.P.; Zerbato, C.; Furlani, C.E.A. Predicting Sugarcane Biometric Parameters by UAV Multispectral Images and Machine Learning. Agronomy 2022, 12. [CrossRef]

- He, Z.; Wu, K.; Wang, F.; Jin, L.; Zhang, R.; Tian, S.; Wu, W.; He, Y.; Huang, R.; Yuan, L.; et al. Fresh Yield Estimation of Spring Tea via Spectral Differences in UAV Hyperspectral Images from Unpicked and Picked Canopies. Remote Sensing 2023, 15. [CrossRef]

- Feng, L.; Zhang, Z.; Ma, Y.; Du, Q.; Williams, P.; Drewry, J.; Luck, B. Alfalfa Yield Prediction Using UAV-Based Hyperspectral Imagery and Ensemble Learning. Remote Sensing 2020, 12. [CrossRef]

- Wengert, M.; Wijesingha, J.; Schulze-Brüninghoff, D.; Wachendorf, M.; Astor, T. Multisite and Multitemporal Grassland Yield Estimation Using UAV-Borne Hyperspectral Data. Remote Sensing 2022, 14. [CrossRef]

- Pranga, J.; Borra-Serrano, I.; Aper, J.; De Swaef, T.; Ghesquiere, A.; Quataert, P.; Roldán-Ruiz, I.; Janssens, I.A.; Ruysschaert, G.; Lootens, P. Improving Accuracy of Herbage Yield Predictions in Perennial Ryegrass with UAV-Based Structural and Spectral Data Fusion and Machine Learning. Remote Sensing 2021, 13. [CrossRef]

- Li, K.-Y.; Burnside, N.G.; Sampaio de Lima, R.; Villoslada Peciña, M.; Sepp, K.; Yang, M.-D.; Raet, J.; Vain, A.; Selge, A.; Sepp, K. The Application of an Unmanned Aerial System and Machine Learning Techniques for Red Clover-Grass Mixture Yield Estimation under Variety Performance Trials. Remote Sensing 2021, 13. [CrossRef]

- Tatsumi, K.; Igarashi, N.; Mengxue, X. Prediction of plant-level tomato biomass and yield using machine learning with unmanned aerial vehicle imagery. Plant Methods 2021, 17. [CrossRef]

- Ballesteros, R.; Intrigliolo, D.S.; Ortega, J.F.; Ramírez-Cuesta, J.M.; Buesa, I.; Moreno, M.A. Vineyard yield estimation by combining remote sensing, computer vision and artificial neural network techniques. Precision Agriculture 2020, 21, 1242-1262. [CrossRef]

- Chen, R.; Zhang, C.; Xu, B.; Zhu, Y.; Zhao, F.; Han, S.; Yang, G.; Yang, H. Predicting individual apple tree yield using UAV multi-source remote sensing data and ensemble learning. Computers and Electronics in Agriculture 2022, 201. [CrossRef]

- Tang, M.; Sadowski, D.L.; Peng, C.; Vougioukas, S.G.; Klever, B.; Khalsa, S.D.S.; Brown, P.H.; Jin, Y. Tree-level almond yield estimation from high resolution aerial imagery with convolutional neural network. Frontiers in Plant Science 2023, 14. [CrossRef]

- Bebie, M.; Cavalaris, C.; Kyparissis, A. Assessing Durum Wheat Yield through Sentinel-2 Imagery: A Machine Learning Approach. Remote Sensing 2022, 14. [CrossRef]

- Kamir, E.; Waldner, F.; Hochman, Z. Estimating wheat yields in Australia using climate records, satellite image time series and machine learning methods. ISPRS Journal of Photogrammetry and Remote Sensing 2020, 160, 124-135. [CrossRef]

- Liu, Y.; Wang, S.; Wang, X.; Chen, B.; Chen, J.; Wang, J.; Huang, M.; Wang, Z.; Ma, L.; Wang, P.; et al. Exploring the superiority of solar-induced chlorophyll fluorescence data in predicting wheat yield using machine learning and deep learning methods. Computers and Electronics in Agriculture 2022, 192. [CrossRef]

- Son, N.-T.; Chen, C.-F.; Cheng, Y.-S.; Toscano, P.; Chen, C.-R.; Chen, S.-L.; Tseng, K.-H.; Syu, C.-H.; Guo, H.-Y.; Zhang, Y.-T. Field-scale rice yield prediction from Sentinel-2 monthly image composites using machine learning algorithms. Ecological Informatics 2022, 69. [CrossRef]

- Abreu Júnior, C.A.M.d.; Martins, G.D.; Xavier, L.C.M.; Vieira, B.S.; Gallis, R.B.d.A.; Fraga Junior, E.F.; Martins, R.S.; Paes, A.P.B.; Mendonça, R.C.P.; Lima, J.V.d.N. Estimating Coffee Plant Yield Based on Multispectral Images and Machine Learning Models. Agronomy 2022, 12. [CrossRef]

- Bhumiphan, N.; Nontapon, J.; Kaewplang, S.; Srihanu, N.; Koedsin, W.; Huete, A. Estimation of Rubber Yield Using Sentinel-2 Satellite Data. Sustainability 2023, 15. [CrossRef]

- Filippi, P.; Whelan, B.M.; Vervoort, R.W.; Bishop, T.F.A. Mid-season empirical cotton yield forecasts at fine resolutions using large yield mapping datasets and diverse spatial covariates. Agricultural Systems 2020, 184. [CrossRef]

- Desloires, J.; Ienco, D.; Botrel, A. Out-of-year corn yield prediction at field-scale using Sentinel-2 satellite imagery and machine learning methods. Computers and Electronics in Agriculture 2023, 209. [CrossRef]

- Liu, F.; Jiang, X.; Wu, Z. Attention Mechanism-Combined LSTM for Grain Yield Prediction in China Using Multi-Source Satellite Imagery. Sustainability 2023, 15. [CrossRef]

- Tang, Y.; Qiu, J.; Zhang, Y.; Wu, D.; Cao, Y.; Zhao, K.; Zhu, L. Optimization strategies of fruit detection to overcome the challenge of unstructured background in field orchard environment: a review. Precision Agriculture 2023, 24, 1183-1219. [CrossRef]

- Darwin, B.; Dharmaraj, P.; Prince, S.; Popescu, D.E.; Hemanth, D.J. Recognition of Bloom/Yield in Crop Images Using Deep Learning Models for Smart Agriculture: A Review. Agronomy 2021, 11. [CrossRef]

- Abbas, A.; Zhang, Z.; Zheng, H.; Alami, M.M.; Alrefaei, A.F.; Abbas, Q.; Naqvi, S.A.H.; Rao, M.J.; Mosa, W.F.A.; Abbas, Q.; et al. Drones in Plant Disease Assessment, Efficient Monitoring, and Detection: A Way Forward to Smart Agriculture. Agronomy 2023, 13. [CrossRef]

- Massah, J.; Asefpour Vakilian, K.; Shabanian, M.; Shariatmadari, S.M. Design, development, and performance evaluation of a robot for yield estimation of kiwifruit. Computers and Electronics in Agriculture 2021, 185. [CrossRef]

- Zhang, Y.; Ta, N.; Guo, S.; Chen, Q.; Zhao, L.; Li, F.; Chang, Q. Combining Spectral and Textural Information from UAV RGB Images for Leaf Area Index Monitoring in Kiwifruit Orchard. Remote Sensing 2022, 14. [CrossRef]

- Guo, Y.; Wang, H.; Wu, Z.; Wang, S.; Sun, H.; Senthilnath, J.; Wang, J.; Robin Bryant, C.; Fu, Y. Modified Red Blue Vegetation Index for Chlorophyll Estimation and Yield Prediction of Maize from Visible Images Captured by UAV. Sensors 2020, 20. [CrossRef]

- Zhang, M.; Zhou, J.; Sudduth, K.A.; Kitchen, N.R. Estimation of maize yield and effects of variable-rate nitrogen application using UAV-based RGB imagery. Biosystems Engineering 2020, 189, 24-35. [CrossRef]

- Saddik, A.; Latif, R.; Abualkishik, A.Z.; El Ouardi, A.; Elhoseny, M. Sustainable Yield Prediction in Agricultural Areas Based on Fruit Counting Approach. Sustainability 2023, 15. [CrossRef]

- Liu, W.; Li, Y.; Liu, J.; Jiang, J. Estimation of Plant Height and Aboveground Biomass of Toona sinensis under Drought Stress Using RGB-D Imaging. Forests 2021, 12. [CrossRef]

- Rodriguez-Sanchez, J.; Li, C.; Paterson, A.H. Cotton Yield Estimation From Aerial Imagery Using Machine Learning Approaches. Frontiers in Plant Science 2022, 13. [CrossRef]

- Gong, L.; Yu, M.; Cutsuridis, V.; Kollias, S.; Pearson, S. A Novel Model Fusion Approach for Greenhouse Crop Yield Prediction. Horticulturae 2022, 9. [CrossRef]

- Kamilaris, A.; Prenafeta-Boldú, F.X. A review of the use of convolutional neural networks in agriculture. The Journal of Agricultural Science 2018, 156, 312-322. [CrossRef]

- Chin, R.; Catal, C.; Kassahun, A. Plant disease detection using drones in precision agriculture. Precision Agriculture 2023, 24, 1663-1682. [CrossRef]

- Jiang, Y.; Li, C. Convolutional Neural Networks for Image-Based High-Throughput Plant Phenotyping: A Review. Plant Phenomics 2020, 2020. [CrossRef]

- Sanaeifar, A.; Guindo, M.L.; Bakhshipour, A.; Fazayeli, H.; Li, X.; Yang, C. Advancing precision agriculture: The potential of deep learning for cereal plant head detection. Computers and Electronics in Agriculture 2023, 209. [CrossRef]

- Buxbaum, N.; Lieth, J.H.; Earles, M. Non-destructive Plant Biomass Monitoring With High Spatio-Temporal Resolution via Proximal RGB-D Imagery and End-to-End Deep Learning. Frontiers in Plant Science 2022, 13. [CrossRef]

- Lu, H.; Cao, Z. TasselNetV2+: A Fast Implementation for High-Throughput Plant Counting From High-Resolution RGB Imagery. Frontiers in Plant Science 2020, 11. [CrossRef]

- Mota-Delfin, C.; López-Canteñs, G.d.J.; López-Cruz, I.L.; Romantchik-Kriuchkova, E.; Olguín-Rojas, J.C. Detection and Counting of Corn Plants in the Presence of Weeds with Convolutional Neural Networks. Remote Sensing 2022, 14. [CrossRef]

- Liu, Y.; Cen, C.; Che, Y.; Ke, R.; Ma, Y.; Ma, Y. Detection of Maize Tassels from UAV RGB Imagery with Faster R-CNN. Remote Sensing 2020, 12. [CrossRef]

- Jia, H.; Qu, M.; Wang, G.; Walsh, M.J.; Yao, J.; Guo, H.; Liu, H. Dough-Stage Maize (Zea mays L.) Ear Recognition Based on Multiscale Hierarchical Features and Multifeature Fusion. Mathematical Problems in Engineering 2020, 2020, 1-14. [CrossRef]

- Maji, A.K.; Marwaha, S.; Kumar, S.; Arora, A.; Chinnusamy, V.; Islam, S. SlypNet: Spikelet-based yield prediction of wheat using advanced plant phenotyping and computer vision techniques. Frontiers in Plant Science 2022, 13. [CrossRef]

- Nevavuori, P.; Narra, N.; Linna, P.; Lipping, T. Crop Yield Prediction Using Multitemporal UAV Data and Spatio-Temporal Deep Learning Models. Remote Sensing 2020, 12. [CrossRef]

- Qiu, R.; He, Y.; Zhang, M. Automatic Detection and Counting of Wheat Spikelet Using Semi-Automatic Labeling and Deep Learning. Frontiers in Plant Science 2022, 13. [CrossRef]

- Yao, Z.; Liu, T.; Yang, T.; Ju, C.; Sun, C. Rapid Detection of Wheat Ears in Orthophotos From Unmanned Aerial Vehicles in Fields Based on YOLOX. Frontiers in Plant Science 2022, 13. [CrossRef]

- Zang, H.; Wang, Y.; Ru, L.; Zhou, M.; Chen, D.; Zhao, Q.; Zhang, J.; Li, G.; Zheng, G. Detection method of wheat spike improved YOLOv5s based on the attention mechanism. Frontiers in Plant Science 2022, 13. [CrossRef]

- Zhao, F.; Xu, L.; Lv, L.; Zhang, Y. Wheat Ear Detection Algorithm Based on Improved YOLOv4. Applied Sciences 2022, 12. [CrossRef]

- Lin, Z.; Guo, W. Sorghum Panicle Detection and Counting Using Unmanned Aerial System Images and Deep Learning. Frontiers in Plant Science 2020, 11. [CrossRef]

- Guo, Y.; Li, S.; Zhang, Z.; Li, Y.; Hu, Z.; Xin, D.; Chen, Q.; Wang, J.; Zhu, R. Automatic and Accurate Calculation of Rice Seed Setting Rate Based on Image Segmentation and Deep Learning. Frontiers in Plant Science 2021, 12. [CrossRef]

- Han, J.; Shi, L.; Yang, Q.; Chen, Z.; Yu, J.; Zha, Y. Rice yield estimation using a CNN-based image-driven data assimilation framework. Field Crops Research 2022, 288. [CrossRef]

- Zhou, Z.; Song, Z.; Fu, L.; Gao, F.; Li, R.; Cui, Y. Real-time kiwifruit detection in orchard using deep learning on Android™ smartphones for yield estimation. Computers and Electronics in Agriculture 2020, 179. [CrossRef]

- Xiong, J.; Liu, Z.; Chen, S.; Liu, B.; Zheng, Z.; Zhong, Z.; Yang, Z.; Peng, H. Visual detection of green mangoes by an unmanned aerial vehicle in orchards based on a deep learning method. Biosystems Engineering 2020, 194, 261-272. [CrossRef]

- Santos, T.T.; de Souza, L.L.; dos Santos, A.A.; Avila, S. Grape detection, segmentation, and tracking using deep neural networks and three-dimensional association. Computers and Electronics in Agriculture 2020, 170. [CrossRef]

- Shen, L.; Su, J.; He, R.; Song, L.; Huang, R.; Fang, Y.; Song, Y.; Su, B. Real-time tracking and counting of grape clusters in the field based on channel pruning with YOLOv5s. Computers and Electronics in Agriculture 2023, 206. [CrossRef]

- Cecotti, H.; Rivera, A.; Farhadloo, M.; Pedroza, M.A. Grape detection with convolutional neural networks. Expert Systems with Applications 2020, 159. [CrossRef]

- Palacios, F.; Melo-Pinto, P.; Diago, M.P.; Tardaguila, J. Deep learning and computer vision for assessing the number of actual berries in commercial vineyards. Biosystems Engineering 2022, 218, 175-188. [CrossRef]

- Chen, S.; Song, Y.; Su, J.; Fang, Y.; Shen, L.; Mi, Z.; Su, B. Segmentation of field grape bunches via an improved pyramid scene parsing network. International Journal of Agricultural and Biological Engineering 2021, 14, 185-194. [CrossRef]

- Olenskyj, A.G.; Sams, B.S.; Fei, Z.; Singh, V.; Raja, P.V.; Bornhorst, G.M.; Earles, J.M. End-to-end deep learning for directly estimating grape yield from ground-based imagery. Computers and Electronics in Agriculture 2022, 198. [CrossRef]

- Sozzi, M.; Cantalamessa, S.; Cogato, A.; Kayad, A.; Marinello, F. Automatic Bunch Detection in White Grape Varieties Using YOLOv3, YOLOv4, and YOLOv5 Deep Learning Algorithms. Agronomy 2022, 12. [CrossRef]

- Palacios, F.; Bueno, G.; Salido, J.; Diago, M.P.; Hernández, I.; Tardaguila, J. Automated grapevine flower detection and quantification method based on computer vision and deep learning from on-the-go imaging using a mobile sensing platform under field conditions. Computers and Electronics in Agriculture 2020, 178. [CrossRef]

- Sun, L.; Hu, G.; Chen, C.; Cai, H.; Li, C.; Zhang, S.; Chen, J. Lightweight Apple Detection in Complex Orchards Using YOLOV5-PRE. Horticulturae 2022, 8. [CrossRef]

- Apolo-Apolo, O.E.; Pérez-Ruiz, M.; Martínez-Guanter, J.; Valente, J. A Cloud-Based Environment for Generating Yield Estimation Maps From Apple Orchards Using UAV Imagery and a Deep Learning Technique. Frontiers in Plant Science 2020, 11. [CrossRef]

- Murad, N.Y.; Mahmood, T.; Forkan, A.R.M.; Morshed, A.; Jayaraman, P.P.; Siddiqui, M.S. Weed Detection Using Deep Learning: A Systematic Literature Review. Sensors 2023, 23. [CrossRef]

- Quan, L.; Li, H.; Li, H.; Jiang, W.; Lou, Z.; Chen, L. Two-Stream Dense Feature Fusion Network Based on RGB-D Data for the Real-Time Prediction of Weed Aboveground Fresh Weight in a Field Environment. Remote Sensing 2021, 13. [CrossRef]

- Moon, T.; Kim, D.; Kwon, S.; Ahn, T.I.; Son, J.E. Non-Destructive Monitoring of Crop Fresh Weight and Leaf Area with a Simple Formula and a Convolutional Neural Network. Sensors 2022, 22. [CrossRef]

- Lu, W.; Du, R.; Niu, P.; Xing, G.; Luo, H.; Deng, Y.; Shu, L. Soybean Yield Preharvest Prediction Based on Bean Pods and Leaves Image Recognition Using Deep Learning Neural Network Combined With GRNN. Frontiers in Plant Science 2022, 12. [CrossRef]

- Riera, L.G.; Carroll, M.E.; Zhang, Z.; Shook, J.M.; Ghosal, S.; Gao, T.; Singh, A.; Bhattacharya, S.; Ganapathysubramanian, B.; Singh, A.K.; et al. Deep Multiview Image Fusion for Soybean Yield Estimation in Breeding Applications. Plant Phenomics 2021, 2021. [CrossRef]

- Sandhu, K.; Patil, S.S.; Pumphrey, M.; Carter, A. Multitrait machine- and deep-learning models for genomic selection using spectral information in a wheat breeding program. The Plant Genome 2021, 14. [CrossRef]

- Vinson Joshua, S.; Selwin Mich Priyadharson, A.; Kannadasan, R.; Ahmad Khan, A.; Lawanont, W.; Ahmed Khan, F.; Ur Rehman, A.; Junaid Ali, M. Crop Yield Prediction Using Machine Learning Approaches on a Wide Spectrum. Computers, Materials & Continua 2022, 72, 5663-5679. [CrossRef]

- Wolanin, A.; Mateo-García, G.; Camps-Valls, G.; Gómez-Chova, L.; Meroni, M.; Duveiller, G.; Liangzhi, Y.; Guanter, L. Estimating and understanding crop yields with explainable deep learning in the Indian Wheat Belt. Environmental Research Letters 2020, 15. [CrossRef]

- Gong, L.; Yu, M.; Jiang, S.; Cutsuridis, V.; Pearson, S. Deep Learning Based Prediction on Greenhouse Crop Yield Combined TCN and RNN. Sensors 2021, 21. [CrossRef]

- de Oliveira, G.S.; Marcato Junior, J.; Polidoro, C.; Osco, L.P.; Siqueira, H.; Rodrigues, L.; Jank, L.; Barrios, S.; Valle, C.; Simeão, R.; et al. Convolutional Neural Networks to Estimate Dry Matter Yield in a Guineagrass Breeding Program Using UAV Remote Sensing. Sensors 2021, 21. [CrossRef]

- Meng, Y.; Xu, M.; Yoon, S.; Jeong, Y.; Park, D.S. Flexible and high quality plant growth prediction with limited data. Frontiers in Plant Science 2022, 13. [CrossRef]

- Oikonomidis, A.; Catal, C.; Kassahun, A. Deep learning for crop yield prediction: a systematic literature review. New Zealand Journal of Crop and Horticultural Science 2022, 51, 1-26. [CrossRef]

| Calculation method | Implementation | Advantages | Disadvantages |
|---|---|---|---|
| Manual field investigation | Manual counting and calculation with simple tools | Low technical threshold, simple operation, and wide applicability | Every step is laborious and error-prone, and some crops require destructive sampling |
| Meteorological model | Analyze correlations between meteorological factors and yield, and build models with statistical, simulation, and other methods | Captures clear regularities and offers strong guidance for crop production | Requires large amounts of accumulated historical data; suitable mainly for large-scale crops |
| Growth model | Mine large amounts of growth data to describe the entire crop growth cycle numerically | Strong mechanistic basis, high interpretability, and high accuracy | Numerous parameters that are difficult to obtain; suitable only for specific varieties and regions, which limits application |
| Remote sensing calculation | Acquire multichannel remote sensing data, such as multispectral and hyperspectral imagery, and build regression models | Captures internal and external crop characteristics that reflect agronomic traits | Applicable only to specific regions and environments and to large-scale crops |
| Image detection | Count targets through object segmentation or detection | Low cost and high precision | Requires many sample images; occlusion remains difficult to handle |
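
The "Image detection" row counts targets (ears, fruits, plants) after segmenting them from the image. As a minimal sketch, assuming a binary mask has already been produced by some segmentation step (the CNN segmenters and detectors cited above are replaced here by a hard-coded toy mask), the count is simply the number of connected foreground regions. The function name `count_objects` and the `min_area` noise filter are hypothetical illustrations, not taken from any of the cited works.

```python
from collections import deque


def count_objects(mask, min_area=1):
    """Count 4-connected foreground regions in a binary mask.

    mask: list of equal-length rows of 0/1 values (1 = target pixel).
    min_area: regions with fewer pixels than this are discarded as noise.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill over the region that contains (r, c).
            seen[r][c] = True
            queue = deque([(r, c)])
            area = 0
            while queue:
                y, x = queue.popleft()
                area += 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if area >= min_area:
                count += 1
    return count


# Hypothetical toy mask standing in for the output of a segmentation model.
toy_mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 1, 0, 0, 0],
]

print(count_objects(toy_mask))              # 3 regions
print(count_objects(toy_mask, min_area=2))  # 2 regions once the 1-pixel blob is dropped
```

The occlusion weakness listed in the table shows up directly in this sketch: touching or overlapping objects merge into a single region, so the count is biased low wherever fruits or ears overlap.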

| Classification | Crop | Crop characteristics | Yield calculation indicators |
|---|---|---|---|
| Food crops | Corn | Important grain crop with strong adaptability, planted in many countries; also an important source of feed | Number of plants, barren-stalk rate, and number of kernels per ear |
| | Wheat | Food crop with the world's largest sowing area and widest distribution, and a very high total output; high planting density causes severe mutual occlusion | Number of ears, number of grains per ear, and thousand-grain weight |
| | Rice | One of the world's most important food crops, accounting for over 40% of total global food production | Number of panicles, number of grains per panicle, seed-setting rate, and thousand-grain weight |
| Economic crops | Cotton | One of the world's important economic crops; an important industrial raw material and strategic commodity | Total number of plants per unit area, number of bolls per plant, and seed-cotton weight per boll |
| | Soybean | One of the world's important economic crops, widely used for food, feed, and industrial raw materials | Number of pods, number of seeds per plant, and 100-seed weight |
| | Potato | The world's fourth-largest food crop after wheat, corn, and rice | Tuber weight and tuber-setting rate |
| | Sugarcane | Important economic crop grown worldwide; a major raw material for sugar | Single-stalk weight and number of stalks |
| | Sunflower | Important economic and oil crop | Flower head (capitulum) size and number of seeds |
| | Tea | Important raw material for beverages | Number and density of tender leaves |
| | Apple | The third-largest fruit crop in the world | Number of plants per mu (1 mu ≈ 666.7 m²), number of fruits per plant, and fruit weight |
| | Grape | Eaten as fresh fruit and used as a raw material for winemaking; high social and economic impact | Number of plants, number of bunches, and number of berries |
| | Orange | The world's largest fruit category and a leading industry in many countries | Number of plants per mu, number of fruits per plant, and fruit weight |
| | Tomato | One of the main vegetables in protected (greenhouse) cultivation; also an important raw material for sauces and condiments | Number of trusses per plant, number of fruits, and fruit weight |
| | Almond | Common food and a raw material for traditional Chinese medicine | Number of plants per mu, number of fruits per plant, and fruit weight |
| | Kiwifruit | One of the most consumed fruits in the world, renowned as the "King of Fruits" and "World Treasure Fruit" | Number of plants per mu, number of fruits per plant, and fruit weight |
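
The indicators in the table combine multiplicatively into a theoretical yield. As a minimal sketch, assuming the common agronomic convention yield = (ears or plants per area) × (grains or fruits per unit) × (unit weight), and ignoring harvest losses and moisture correction, the wheat, rice, and fruit-tree rows could be computed as below. The function names, the per-hectare output units, and the example numbers are illustrative assumptions, not values from the reviewed studies.

```python
MU_PER_HECTARE = 15.0  # 1 ha = 15 mu (1 mu ≈ 666.7 m^2)


def cereal_yield_kg_per_ha(ears_per_ha, grains_per_ear,
                           thousand_grain_weight_g, seed_setting_rate=1.0):
    """Theoretical cereal yield (kg/ha) from yield components.

    seed_setting_rate is the fraction of filled grains (the rice row);
    leave it at 1.0 when grains_per_ear already counts only filled grains.
    """
    filled_grains_per_ha = ears_per_ha * grains_per_ear * seed_setting_rate
    # One grain weighs thousand_grain_weight_g / 1000 g; divide by 1000 again for kg.
    return filled_grains_per_ha * thousand_grain_weight_g / 1_000_000


def fruit_yield_kg_per_ha(plants_per_mu, fruits_per_plant, mean_fruit_weight_kg):
    """Theoretical fruit yield (apple, orange, almond, kiwifruit rows), per mu converted to per ha."""
    kg_per_mu = plants_per_mu * fruits_per_plant * mean_fruit_weight_kg
    return kg_per_mu * MU_PER_HECTARE


# Illustrative inputs only, not measurements.
print(cereal_yield_kg_per_ha(6_000_000, 35, 40))                           # wheat
print(cereal_yield_kg_per_ha(2_500_000, 150, 25, seed_setting_rate=0.85))  # rice
print(fruit_yield_kg_per_ha(55, 150, 0.25))                                # apple
```

Because the model is a product, a relative error in any count term (ears, panicles, fruits), which is what image-based detectors estimate, propagates proportionally into the yield estimate.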

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).