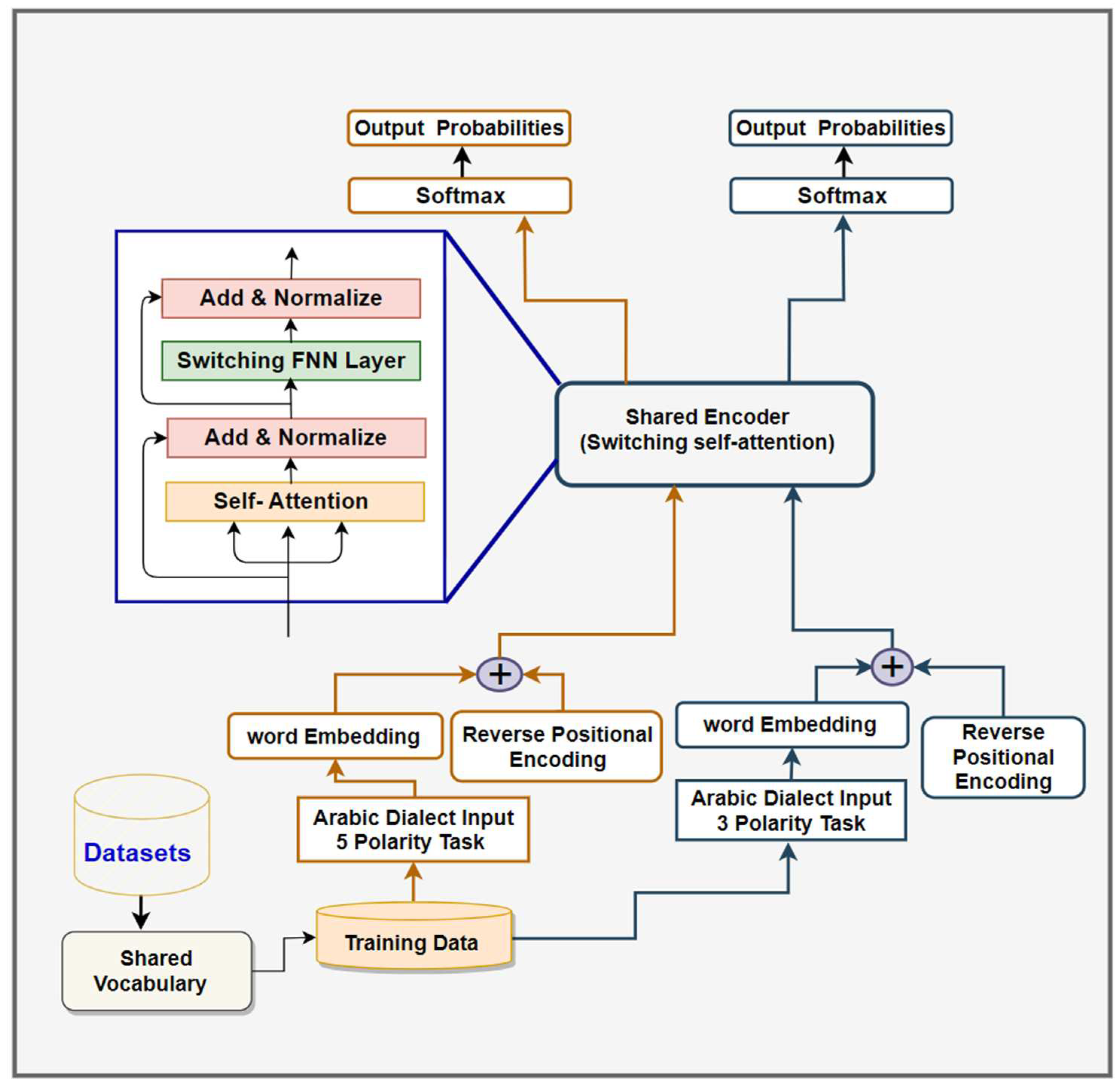

A series of experiments was conducted to evaluate how effectively the proposed SSA-TC-RPE model classifies Arabic dialects (ADs).

4.1. Data

The Training for the proposed model was executed using three key datasets. The primary dataset was HARD [

27], consisting of reviews from a variety of booking sites, sorted into five distinct classes. This was followed by employing the BRAD [

26] and LABR [

22] datasets for further training. This study made use of datasets at the review level, incorporating BRAD, HARD, and LABR. Specifically, BRAD's reviews, sourced from the Goodreads website, were classified into five distinct scales. The class distributions for HARD, BRAD, and LABR are outlined in

Table 2,

Table 3 and

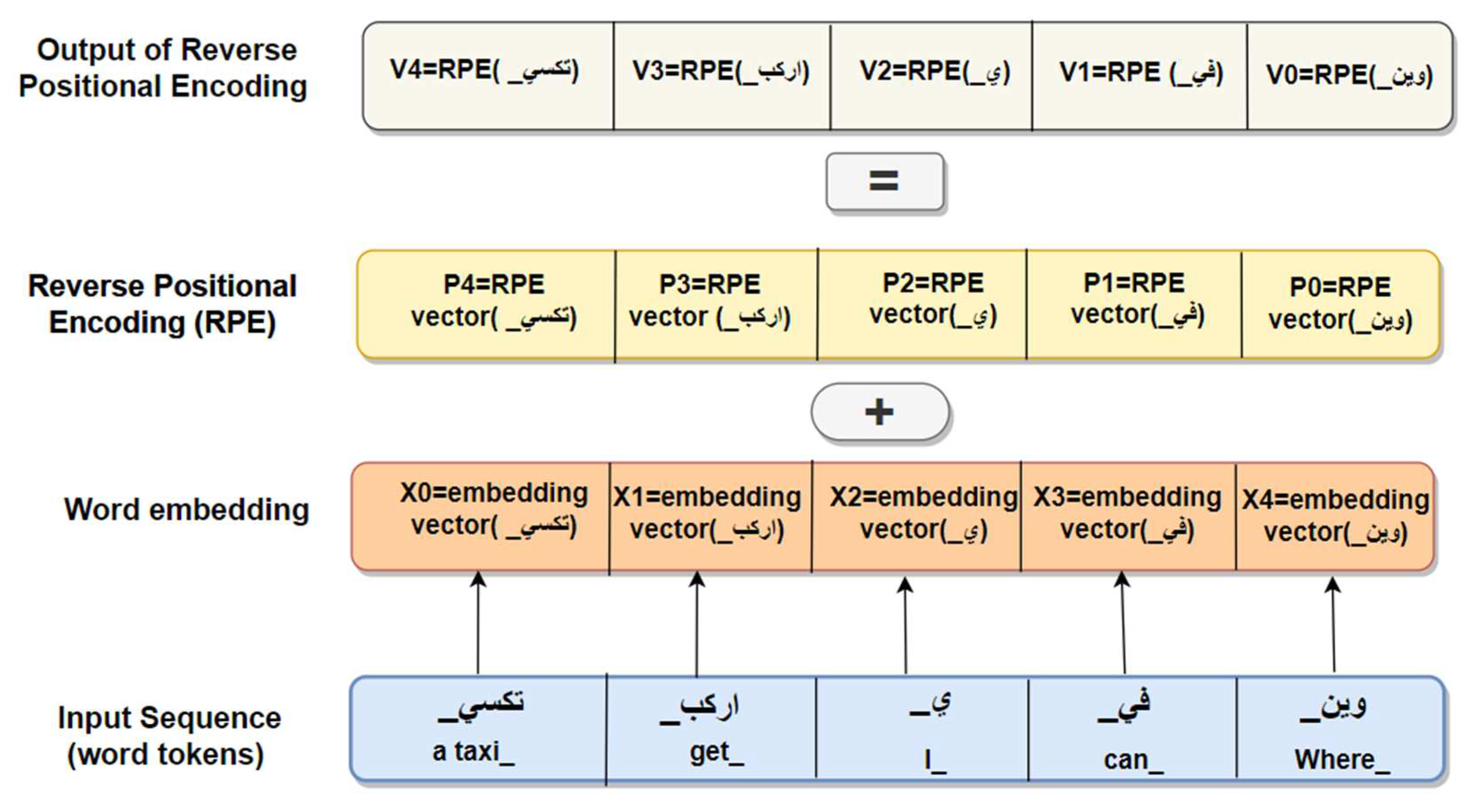

Table 4, respectively. It should be noted that the datasets in this study were used in their original, raw form, which might have implications for the accuracy of the model. Furthermore, preprocessing was performed on all sentences, involving sentence segmentation to break down the reviews into discrete sentences. This procedure also included the elimination of Latin characters, non-Arabic elements, diacritics, hashtags, punctuation, and URLs from the ADs texts. The Arabic dialect texts underwent orthographic normalization to ensure consistency and standardization [

2]. Emoticons within the data were converted into corresponding textual descriptions, and adjustments were made for words that were artificially lengthened. To prevent the risk of the model becoming over-fitted, an early stopping mechanism was implemented, setting the patience parameter at three epochs. For assessing the SSA-TC-RPE model's effectiveness, which incorporates Inductive Transfer Learning (ITL) in classifying Arabic dialect texts, a checkpoint system was utilized to save the most optimal weights of the model. The dataset was divided, with 80% allocated for training and 20% for testing. Additionally, a K-fold cross-validation technique with k = 2 was employed to establish a split between training and testing for the model's evaluation [

52]. Examining the HARD, BRAD, and LABR datasets revealed the sentiment distribution within these samples. The HARD dataset contained 409,562 entries, categorized into 5 sentiment types. Allocating 80% of this dataset (327,649 samples) for training and the remaining 20% (81,912 samples) for testing allowed for a comprehensive understanding of sentiment variation. In a similar manner, the BRAD dataset with 510,598 entries was divided, with 80% (408,478 samples) used for training and 20% (101,019 samples) for testing. The smaller LABR dataset, consisting of 63,257 entries, also maintained the same 80–20 division for training (50,606 samples) and testing (12,651 samples). These splits ensured that all five sentiment categories were well-represented in both training and testing stages, aiding the models in grasping sentiment subtleties and applying this understanding to new data. Biases in text classification models can significantly affect their accuracy. If training data contain biases, they might distort the results. To mitigate this issue and ascertain the optimal data selection for our SSA-TC-RPE text classification model tailored for Arabic dialects, we executed five specific steps:

1. The training data were drawn from a diverse range of sources covering various demographic groups, geographic areas, and social environments, with the aim of reducing bias and obtaining a dataset that is both comprehensive and balanced in its representation.
2. The sentiment labels in the training data were checked to be uniformly distributed across demographic groups and perspectives.
3. Clear labeling guidelines were established, instructing human annotators to remain neutral and not to introduce their own biases into the sentiment labels. This kept the labeling consistent and reduced the likelihood of bias in the dataset.
4. The training data were reviewed thoroughly to identify underlying biases, such as demographic imbalances, reinforced stereotypes, and underrepresented groups. Detected biases were corrected using data augmentation, oversampling of underrepresented groups, and other preprocessing techniques.
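Two of the procedures described in this section, the text cleaning pipeline and early stopping with checkpointing, can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the regular expressions, the letter-normalization map (alef variants, alef maqsura, teh marbuta), the hypothetical `EMOTICONS` lexicon, and the use of validation loss as the monitored quantity are all assumptions, since the text does not specify them.

```python
import copy
import re

# --- Text cleaning (assumed rules; the exact ones are not given in the text) ---
URL_RE = re.compile(r"https?://\S+|www\.\S+")
HASHTAG_RE = re.compile(r"#\S+")
SENTENCE_SPLIT_RE = re.compile(r"[.!?\u061F\n]+")  # \u061F is the Arabic question mark
DIACRITICS_RE = re.compile(r"[\u064B-\u0652\u0670]")  # tashkeel and superscript alef
TATWEEL = "\u0640"  # kashida, used to stretch words visually
ELONGATION_RE = re.compile(r"(.)\1{2,}")  # a character repeated three or more times
NON_ARABIC_RE = re.compile(r"[^\u0621-\u064A\s]")  # Latin letters, digits, punctuation, emoji

# One common normalization scheme (assumed; not necessarily the one in [2]).
NORMALIZE_MAP = str.maketrans({
    "\u0623": "\u0627",  # alef with hamza above -> bare alef
    "\u0625": "\u0627",  # alef with hamza below -> bare alef
    "\u0622": "\u0627",  # alef with madda -> bare alef
    "\u0649": "\u064A",  # alef maqsura -> yeh
    "\u0629": "\u0647",  # teh marbuta -> heh
})

# Hypothetical emoticon lexicon mapping symbols to Arabic descriptions.
EMOTICONS = {":)": " ابتسامة ", ":(": " حزن ", "\U0001F60A": " سعيد "}


def clean_sentence(sentence: str) -> str:
    s = sentence.replace(TATWEEL, "")
    s = DIACRITICS_RE.sub("", s)
    s = s.translate(NORMALIZE_MAP)
    s = ELONGATION_RE.sub(r"\1", s)  # collapse elongated letters to one
    s = NON_ARABIC_RE.sub(" ", s)
    return " ".join(s.split())


def preprocess_review(review: str) -> list[str]:
    """Return the cleaned, non-empty sentences of one review."""
    text = URL_RE.sub(" ", review)  # remove URLs before splitting on "."
    text = HASHTAG_RE.sub(" ", text)
    for emoticon, description in EMOTICONS.items():
        text = text.replace(emoticon, description)
    sentences = (clean_sentence(s) for s in SENTENCE_SPLIT_RE.split(text))
    return [s for s in sentences if s]


# --- Early stopping with checkpointing ---
class EarlyStopping:
    """Stop after `patience` epochs without improvement; keep the best weights."""

    def __init__(self, patience: int = 3):
        self.patience = patience
        self.best_loss = float("inf")
        self.best_weights = None
        self.bad_epochs = 0

    def step(self, val_loss: float, weights) -> bool:
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best_loss:
            self.best_loss = val_loss
            self.best_weights = copy.deepcopy(weights)  # checkpoint the best model
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

In use, `preprocess_review` would be applied to each raw review before tokenization, and `EarlyStopping.step` called once per epoch with the current validation loss and model weights; when it returns `True`, training stops and `best_weights` holds the checkpoint.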

4.5. Results

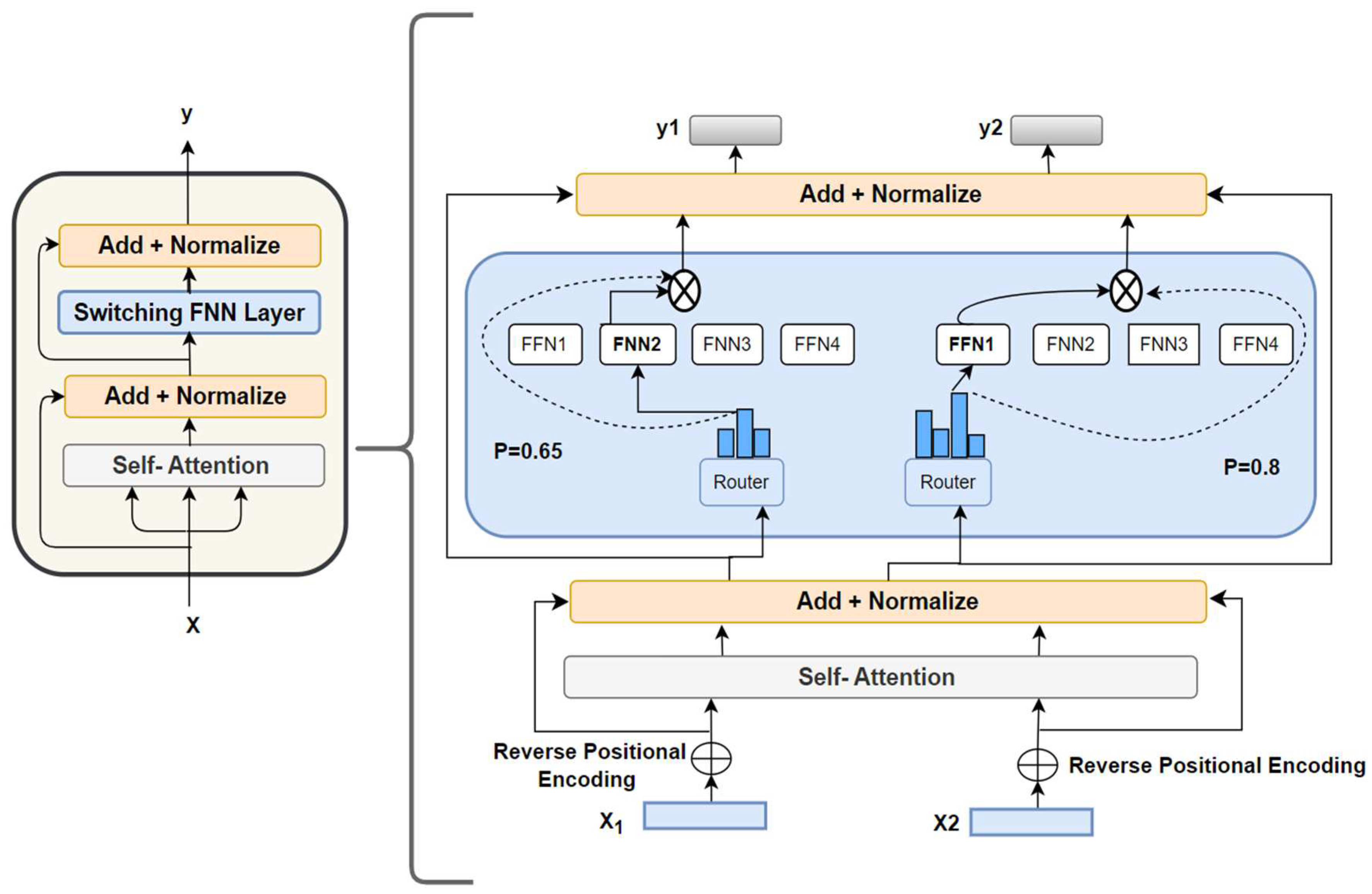

A series of experiments was conducted with the proposed SSA-TC-RPE model for Arabic dialects. To identify the most effective architecture, the system was trained with different numbers of attention heads (AHs) in the Multi-Head Attention (MHA) sub-layer, different numbers of encoders, and different word embedding sizes per token. The study also evaluated how two inductive transfer learning approaches, concurrent (joint) and alternating, affect the system's performance. Classification performance was measured with an automated accuracy metric. This section evaluates the SSA-TC-RPE system on five-polarity classification of Arabic dialects; the results on the HARD, BRAD, and LABR datasets are presented in Table 5, Table 6, and Table 7.

As shown in Table 5 and Table 8, the SSA-TC-RPE system performed well on the imbalanced HARD dataset, achieving an accuracy of 87.20%, an F-score of 86.91%, and a precision of 86.50%. This result was obtained with 2 attention heads, 80 tokens, 10 experts, a batch size of 65, a filter size of 30, a dropout rate of 0.20, and an embedding dimension of 25 per token. This accuracy is attributed to the combination of the Inductive Transfer Learning (ITL) framework, the Mixture of Experts (MoE) mechanism, and the MHA approach, particularly for right-to-left scripts such as ADs. The MoE mechanism uses a set of expert networks that analyze different aspects of the input and combines their outputs through a gating network. This allows the model to select dynamically among sets of parameters (expert modules) depending on the input, improving its ability to discern sentiment. Compared with the best-performing systems on HARD, the SSA-TC-RPE model exceeded the accuracy of the Logistic Regression (LR) [60] model by 11.1%, AraBERT [58] by 6.35%, the T-TC-INT model [45] by 5.37%, and the ST-SA model [64] by 3.18%. This improvement can be attributed to processing related learning tasks simultaneously, which broadens the range of training data and reduces the risk of overfitting [61]. The system captures both syntactic and semantic features, enabling precise detection of the sentiment expressed in AD sentences.
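The gating idea behind the MoE mechanism can be illustrated with a minimal dense (soft) mixture in pure Python. This is a sketch under assumptions: each expert is a single linear map, the gate is a linear layer followed by a softmax, every expert is evaluated for every input, and the sizes (25-dimensional input, 10 experts, 5 output classes) merely mirror the HARD configuration above. The actual expert architecture, and whether SSA-TC-RPE uses sparse top-k routing, are not specified here.

```python
import math
import random


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class MixtureOfExperts:
    """Dense (soft) MoE: every expert runs and a softmax gate weights their outputs."""

    def __init__(self, d_in, d_out, n_experts, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.experts = [init(d_out, d_in) for _ in range(n_experts)]  # linear experts
        self.gate = init(n_experts, d_in)  # gating network: one score per expert

    def __call__(self, x):
        gate_weights = softmax(matvec(self.gate, x))  # non-negative, sums to 1
        expert_outputs = [matvec(W, x) for W in self.experts]
        combined = [
            sum(g * out[j] for g, out in zip(gate_weights, expert_outputs))
            for j in range(len(expert_outputs[0]))
        ]
        return combined, gate_weights


# Sizes mirror the HARD configuration: 25-dim embeddings, 10 experts, 5 classes.
moe = MixtureOfExperts(d_in=25, d_out=5, n_experts=10)
logits, gate_weights = moe([0.1] * 25)
```

The gate weights show how strongly each expert contributes for a given input; a different input produces different weights, which is the input-dependent choice among expert modules that the text describes.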

The SSA-TC-RPE system also performed strongly on the imbalanced BRAD dataset. As shown in Table 6, it attained an accuracy of 72.17%, an F-score of 71.89%, and a precision of 71.72%, using 4 attention heads (AHs), 22 tokens, 18 experts, a batch size of 50, a filter size of 35, a dropout rate of 0.25, and an embedding dimension of 55 per token. As indicated in Table 9, the system outperformed the logistic regression (LR) method [26] by 24.47% in accuracy, the AraBERT model [58] by 11.32%, the T-TC-INT system [44] by 10.44%, and the ST-SA model [64] by 3.36%. Furthermore, the use of a switching self-attention-based shared encoder, one for each classification task, enabled the model to represent the context before, after, and around any position in a sentence, yielding a more nuanced and comprehensive understanding of the text.
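The ability to use context on both sides of a token comes from unmasked self-attention. The sketch below shows standard multi-head scaled dot-product self-attention in pure Python; it is not the paper's switching mechanism. As simplifying assumptions, each head's queries, keys, and values are that head's slice of the input (identity projections), and the final output projection is omitted.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_self_attention(X, n_heads):
    """Unmasked multi-head scaled dot-product self-attention over token vectors X.

    Simplification: each head's queries, keys and values are its slice of X
    (identity projections), and no output projection is applied.
    Returns the concatenated head outputs and the per-head attention weights.
    """
    n, d = len(X), len(X[0])
    if d % n_heads:
        raise ValueError("embedding size must be divisible by the number of heads")
    d_h = d // n_heads
    out = [[0.0] * d for _ in range(n)]
    weights_per_head = []
    for h in range(n_heads):
        lo, hi = h * d_h, (h + 1) * d_h
        H = [row[lo:hi] for row in X]
        head_weights = []
        for i in range(n):
            # Token i scores every token j, before and after it (no causal mask).
            scores = [sum(a * b for a, b in zip(H[i], H[j])) / math.sqrt(d_h) for j in range(n)]
            w = softmax(scores)
            head_weights.append(w)
            for k in range(d_h):
                out[i][lo + k] = sum(w[j] * H[j][k] for j in range(n))
        weights_per_head.append(head_weights)
    return out, weights_per_head


# Three toy tokens with 4-dimensional embeddings, split across 2 heads.
X = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 1.0]]
out, weights = multi_head_self_attention(X, n_heads=2)
```

Because no mask is applied, the first token assigns non-zero weight to the last token and vice versa, so every output vector mixes information from both directions.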

Additionally, as outlined in Table 7, the proposed switching self-attention text classification model utilizing multi-task learning (SSA-TC-RPE) achieved strong results on the complex and imbalanced LABR dataset, reaching an accuracy of 86.89%, an F-score of 85.91%, and a precision of 85.39% and outperforming the other methods. This performance was obtained with two attention heads (AHs), a filter size of 30, 90 tokens, 11 experts, a batch size of 80, a dropout rate of 0.20, and an embedding dimension of 65 per token. These results indicate that the SSA-TC-RPE model handles the difficulties of text classification on an imbalanced dataset robustly.
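Accuracy, precision, and F-score for five-class classification can be computed as below. The text does not state how precision and F-score are averaged over classes; this sketch assumes macro averaging (an unweighted mean over the five classes), which counts minority classes equally and is therefore informative on imbalanced data. A class that is never predicted is assigned a precision of 0.

```python
def classification_scores(y_true, y_pred, labels):
    """Return (accuracy, macro precision, macro F-score) for multi-class labels."""
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, f_scores = [], []
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0  # class never predicted -> 0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        f_scores.append(f)
    # Macro averaging: every class counts equally, however rare it is.
    return accuracy, sum(precisions) / len(labels), sum(f_scores) / len(labels)


labels = [1, 2, 3, 4, 5]  # five polarity classes
y_true = [1, 1, 2, 3, 4, 5]
y_pred = [1, 2, 2, 3, 4, 4]
acc, prec, f1 = classification_scores(y_true, y_pred, labels)
```

In this toy example, accuracy is 4/6 while macro precision and macro F-score are both 0.6, because the never-predicted class 5 pulls the averages down; a weighted average would hide that failure more.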

Table 10 shows that the proposed switching self-attention text classification model employing inductive transfer learning (SSA-TC-RPE) outperformed a range of alternative methods on LABR by considerable margins. Its accuracy exceeded that of the SVM [23] model by 36.59%, the MNP [22] model by 41.89%, the HC(KNN) [24] model by 29.09%, and AraBERT [58] by 27.93%. It also outperformed the HC(KNN) [25] model by 14.25%, the T-TC-INT model [44] by 8.76%, and the ST-SA model [64] by 2.98%.

In deep learning, joint training trains a single neural network on several related tasks at the same time. Rather than building a separate model for each task, this strategy lets the model learn and exploit characteristics shared across the tasks, making it more versatile and efficient, and it typically improves results on each task because the tasks reinforce one another. Imbalanced data, on the other hand, refers to datasets in which the classes (or categories) are unevenly distributed: one or more classes are underrepresented relative to the others, which complicates both training the model and evaluating its performance.
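Oversampling underrepresented classes, one of the corrective measures listed in the data section, is a common response to this kind of imbalance. A minimal random-oversampling sketch follows: it duplicates randomly chosen examples of each minority class until every class matches the size of the largest one. It is a generic technique shown for illustration, not necessarily the procedure applied to HARD, BRAD, or LABR, and it should be applied to the training split only so that duplicated reviews do not leak into the test set.

```python
import random
from collections import defaultdict


def random_oversample(texts, labels, seed=0):
    """Duplicate random minority-class examples until all classes are equally large."""
    by_class = defaultdict(list)
    for text, label in zip(texts, labels):
        by_class[label].append(text)
    target = max(len(items) for items in by_class.values())
    rng = random.Random(seed)
    new_texts, new_labels = [], []
    for label, items in by_class.items():
        extra = [rng.choice(items) for _ in range(target - len(items))]
        new_texts.extend(items + extra)
        new_labels.extend([label] * target)
    return new_texts, new_labels


# Toy imbalanced training split: class 5 dominates.
texts = ["r1", "r2", "r3", "r4", "r5", "r6"]
labels = [5, 5, 5, 5, 1, 2]
balanced_texts, balanced_labels = random_oversample(texts, labels)
```

Random oversampling only repeats existing reviews, so it balances class frequencies without adding new information; data augmentation is the complementary option when more variety is needed.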

This poses a challenge for deep learning models, which may become biased toward the more prevalent classes and perform poorly on the less represented ones. The evaluation results indicate that the SSA-TC-RPE system is effective under both joint and alternating learning, but alternating training outperformed joint training: as shown in Table 11, it reached an accuracy of 87.20% on the imbalanced HARD dataset versus 81.79% for joint training, and 72.17% versus 68.21% on BRAD. In this five-class setting, alternating training appears to capture subtle feature variations in text sequences better than single-task learning. These results suggest that alternating learning is better suited to complex text classification (TC) tasks, as it allows richer and more detailed latent representations to be learned for AD TC. The performance gap between the two methods can be ascribed to how alternating training makes use of the different data volumes available in each task's dataset.
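The difference between the two training regimes can be made concrete with a toy example. In the sketch below, a single shared parameter `w` stands in for the shared layers, and each task contributes a hypothetical quadratic loss `(w - target)**2`. Joint training sums the gradients of all tasks at every step, whereas alternating training updates on one task per step in round-robin order. The task names, targets, and learning rate are placeholders, not values from the experiments.

```python
from itertools import cycle, islice


def joint_schedule(tasks, steps):
    """Every step uses the summed loss of all tasks."""
    return [tuple(tasks) for _ in range(steps)]


def alternating_schedule(tasks, steps):
    """Each step uses one task's loss, cycling through the tasks in turn."""
    return [(task,) for task in islice(cycle(tasks), steps)]


def train_shared_weight(schedule, targets, lr=0.1, w=0.0):
    """Gradient descent on a toy shared parameter w.

    Task t has the hypothetical loss (w - targets[t]) ** 2, whose gradient is
    2 * (w - targets[t]).
    """
    for step_tasks in schedule:
        grad = sum(2 * (w - targets[t]) for t in step_tasks)
        w -= lr * grad
    return w


tasks = ["task_a", "task_b"]  # placeholders for two related classification tasks
targets = {"task_a": 1.0, "task_b": 3.0}
w_joint = train_shared_weight(joint_schedule(tasks, 50), targets)
w_alt = train_shared_weight(alternating_schedule(tasks, 200), targets)
```

Under joint updates, `w` converges to the compromise value 2.0; under alternation it oscillates between about 1.89 and 2.11 (ending each full cycle near 19/9), reflecting whichever task it was updated on last. In a real network, alternation gives each task its own update steps rather than a single blended gradient.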

Shared layers tend to encode more information about tasks with larger datasets, so joint learning can become biased toward tasks with significantly more data. For this reason, alternating training is generally considered more appropriate for tasks such as Arabic dialect text classification, especially when different tasks come with separate datasets, as in machine translation from Arabic dialects (ADs) to Modern Standard Arabic (MSA) and then to English [2]. Alternating the network's focus between tasks improves performance on each task without requiring additional training data [1], and exploiting the synergy between related tasks can further improve five-point classification. Several factors explain the marked gains in our model's performance. Outperforming AraBERT, an established model known for its strength on Arabic language tasks, is a notable accomplishment: exceeding AraBERT on the same datasets shows that our model processes Arabic dialects more precisely. Even small gains in accuracy are valuable, since they advance the development of models tailored to Arabic dialects, with practical applications in more accurate text classification, better information retrieval, and other NLP tasks for Arabic dialects.
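The claim that pooled joint training favors tasks with larger datasets can be checked with simple arithmetic on the training-split sizes reported in the data section. The sketch assumes, for illustration only, that each dataset acts as a separate task and that joint training samples examples in proportion to dataset size; under round-robin alternation, each task instead receives an equal share of the updates.

```python
# Training-split sizes from the data section (80% of each dataset).
TRAIN_SIZES = {"HARD": 327_649, "BRAD": 408_478, "LABR": 50_606}


def proportional_shares(sizes):
    """Fraction of updates per task if examples are pooled and sampled uniformly."""
    total = sum(sizes.values())
    return {task: n / total for task, n in sizes.items()}


def round_robin_shares(sizes):
    """Fraction of updates per task if tasks simply take turns."""
    return {task: 1 / len(sizes) for task in sizes}


pooled = proportional_shares(TRAIN_SIZES)
alternating = round_robin_shares(TRAIN_SIZES)
for task in TRAIN_SIZES:
    print(f"{task}: pooled {pooled[task]:.1%}, alternating {alternating[task]:.1%}")
```

With these sizes, LABR would receive only about 6.4% of the pooled updates (BRAD about 51.9%), compared with one third each under round-robin alternation.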

Notably, the SSA-TC-RPE system did not improve as much on the BRAD dataset: although it outperformed the existing models, its accuracy there (72.17%) remained well below its accuracy on HARD and LABR. This may stem from the system's limited understanding of the distinct characteristics, idiomatic expressions, and linguistic subtleties of the BRAD dataset. Without adequate domain-specific adaptation, the features the model learns may not match the particular properties of BRAD, resulting in suboptimal performance. Advanced deep learning techniques, particularly domain adaptation, could improve classification on BRAD. For instance, transformers, and BERT (Bidirectional Encoder Representations from Transformers) in particular, have transformed NLP through their ability to contextualize text, and fine-tuning a pre-trained BERT model on the BRAD dataset could substantially improve its text classification performance.

Regarding practicality, pre-trained models are readily available, but fine-tuning them requires significant computational power and NLP expertise; the approach is viable when these resources are available. Adversarial training is another option, in which the model is trained to withstand adversarial examples crafted to mislead it. For five-polarity text classification of Arabic dialects, this can improve the model's handling of subtle and varied expressions of sentiment. Although adversarial training can be complex and resource-intensive to implement, it is achievable with sufficient deep learning resources and expertise. Domain-adaptive fine-tuning is a particularly promising technique for text classification on BRAD. It incrementally fine-tunes a pre-trained model on a mix of source-domain and target-domain data, placing increasing emphasis on the target domain, which helps the model adapt to the linguistic features and sentiment expressions specific to BRAD.
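The incremental mixing described above can be sketched as a data schedule. The linear ramp from 20% to 100% target-domain data, sampling with replacement, the placeholder source and BRAD pools, and the helper names `target_fraction` and `mixed_epoch` are hypothetical choices made for illustration; the text does not specify the schedule or the source domain.

```python
import random


def target_fraction(epoch, n_epochs, start=0.2, end=1.0):
    """Linearly increase the share of target-domain data from `start` to `end`."""
    if n_epochs == 1:
        return end
    return start + (end - start) * epoch / (n_epochs - 1)


def mixed_epoch(source, target, epoch, n_epochs, n_samples, rng):
    """Draw one epoch of examples mixing source- and target-domain data."""
    n_target = round(target_fraction(epoch, n_epochs) * n_samples)
    batch = rng.choices(target, k=n_target) + rng.choices(source, k=n_samples - n_target)
    rng.shuffle(batch)  # interleave the two domains
    return batch


# Hypothetical pools: source-domain reviews and target-domain (BRAD) reviews.
source = [("source", i) for i in range(1000)]
target = [("brad", i) for i in range(1000)]
rng = random.Random(0)
n_epochs = 5
schedule = [mixed_epoch(source, target, e, n_epochs, 100, rng) for e in range(n_epochs)]
```

Each epoch would then be used to fine-tune the pre-trained model; the share of BRAD examples rises from 20% in the first epoch to 100% in the last, so early epochs retain general knowledge while later ones specialize.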

Domain-adaptive fine-tuning is viable when ample data are available from both the source and target domains, and it requires fewer resources than training a model from scratch. Meta-learning, another approach, trains a model across a variety of tasks so that it can adapt quickly to new tasks or domains. It is useful for five-polarity text classification of ADs because it can accommodate a wide range of expressions and contexts; however, it needs diverse training datasets and substantial computational resources, so it suits well-resourced settings. If the BRAD dataset contains multilingual data, cross-lingual models such as multilingual BERT become effective: trained on several languages, they can classify text across different linguistic settings. As with BERT, pre-trained versions of these models are available, and fine-tuning them for the languages present in BRAD is essential; this is feasible with the appropriate computational infrastructure.