Submitted: 06 February 2024

Posted: 07 February 2024


Abstract

Keywords:

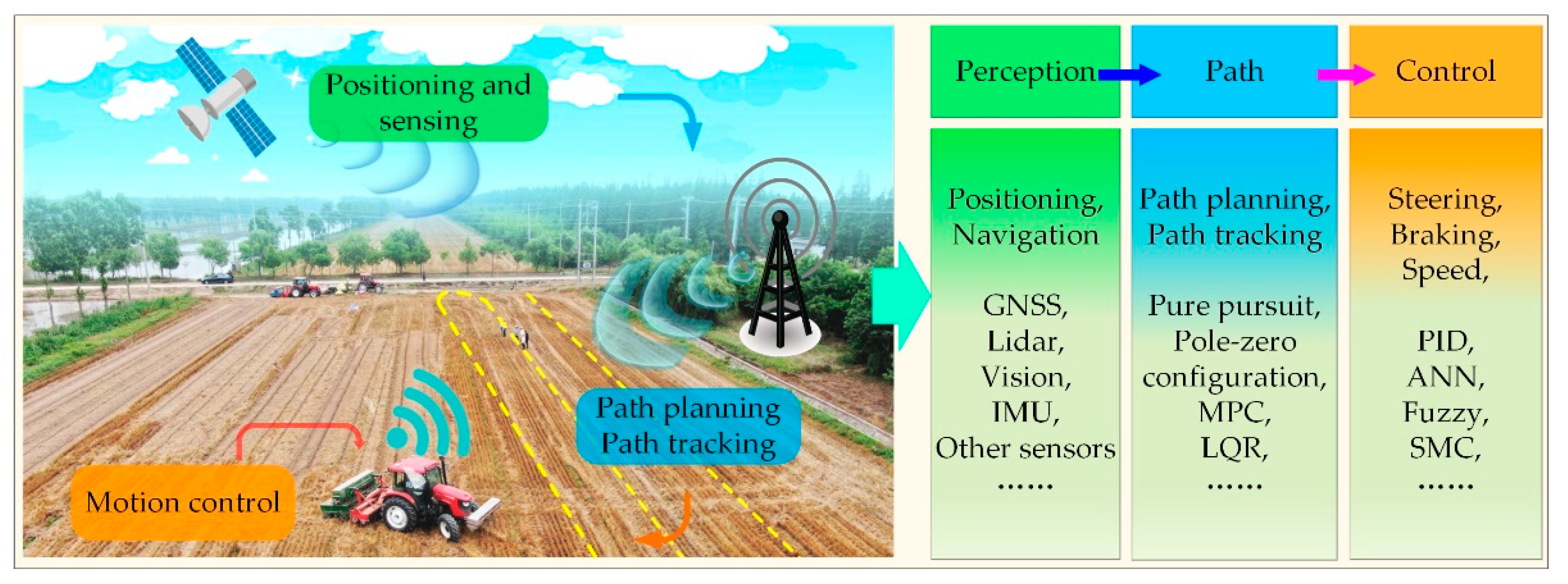

1. Introduction

2. Perceptive techniques of UATs

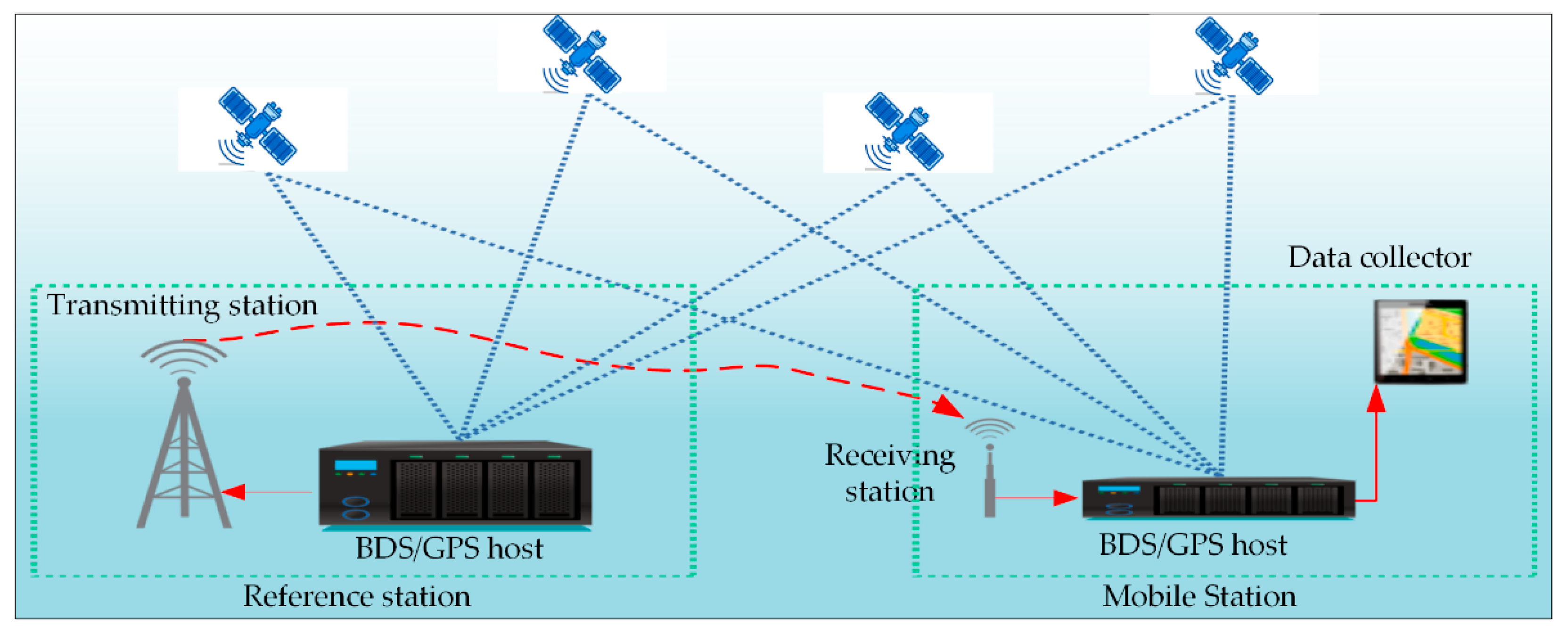

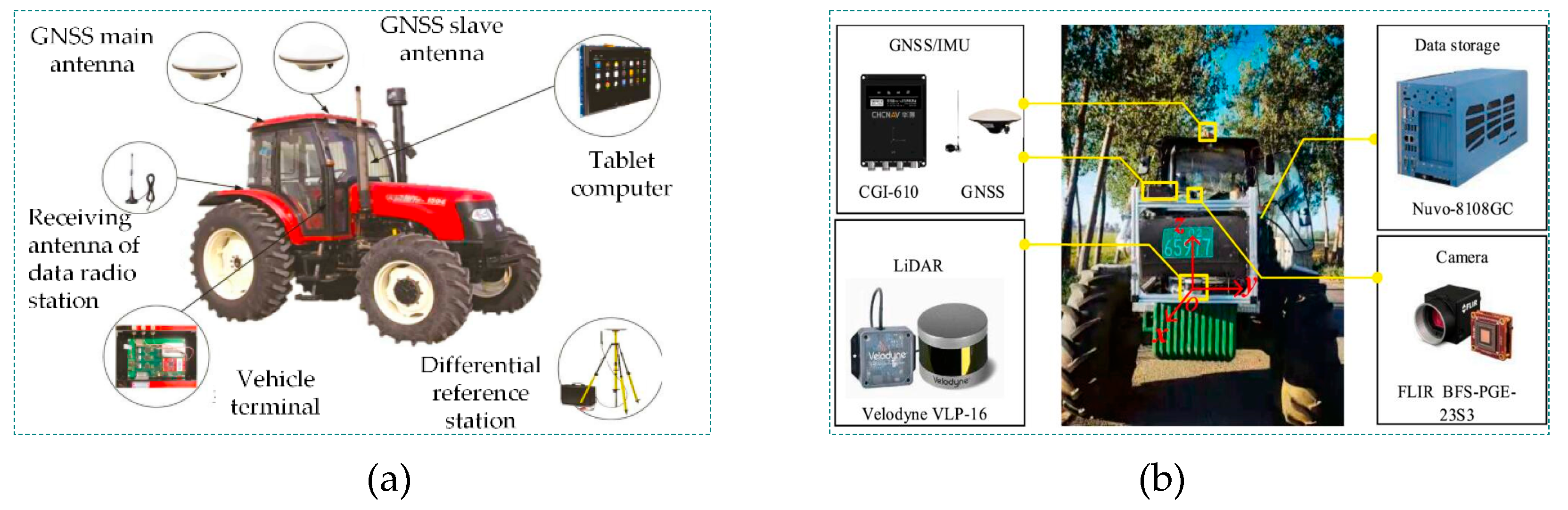

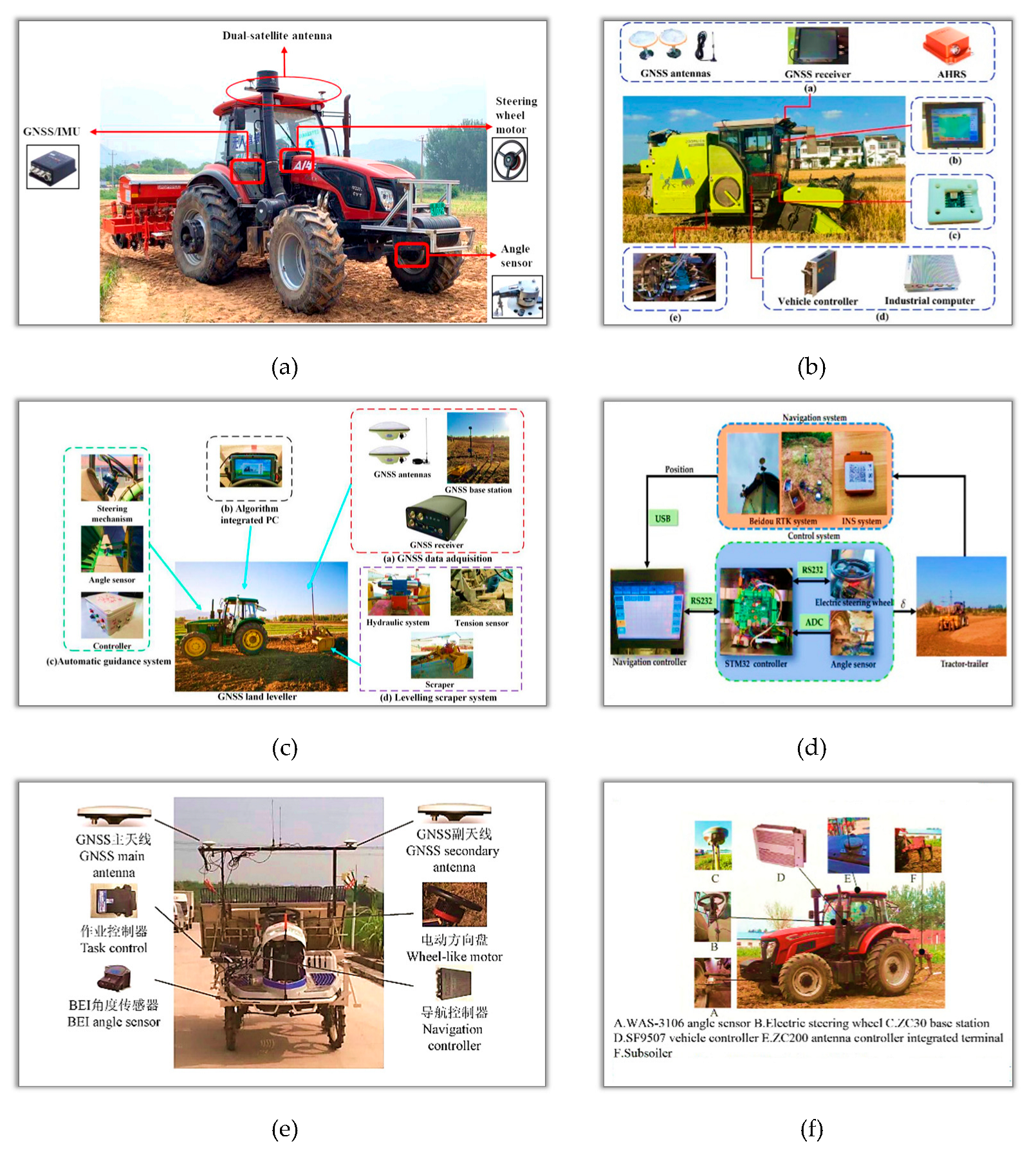

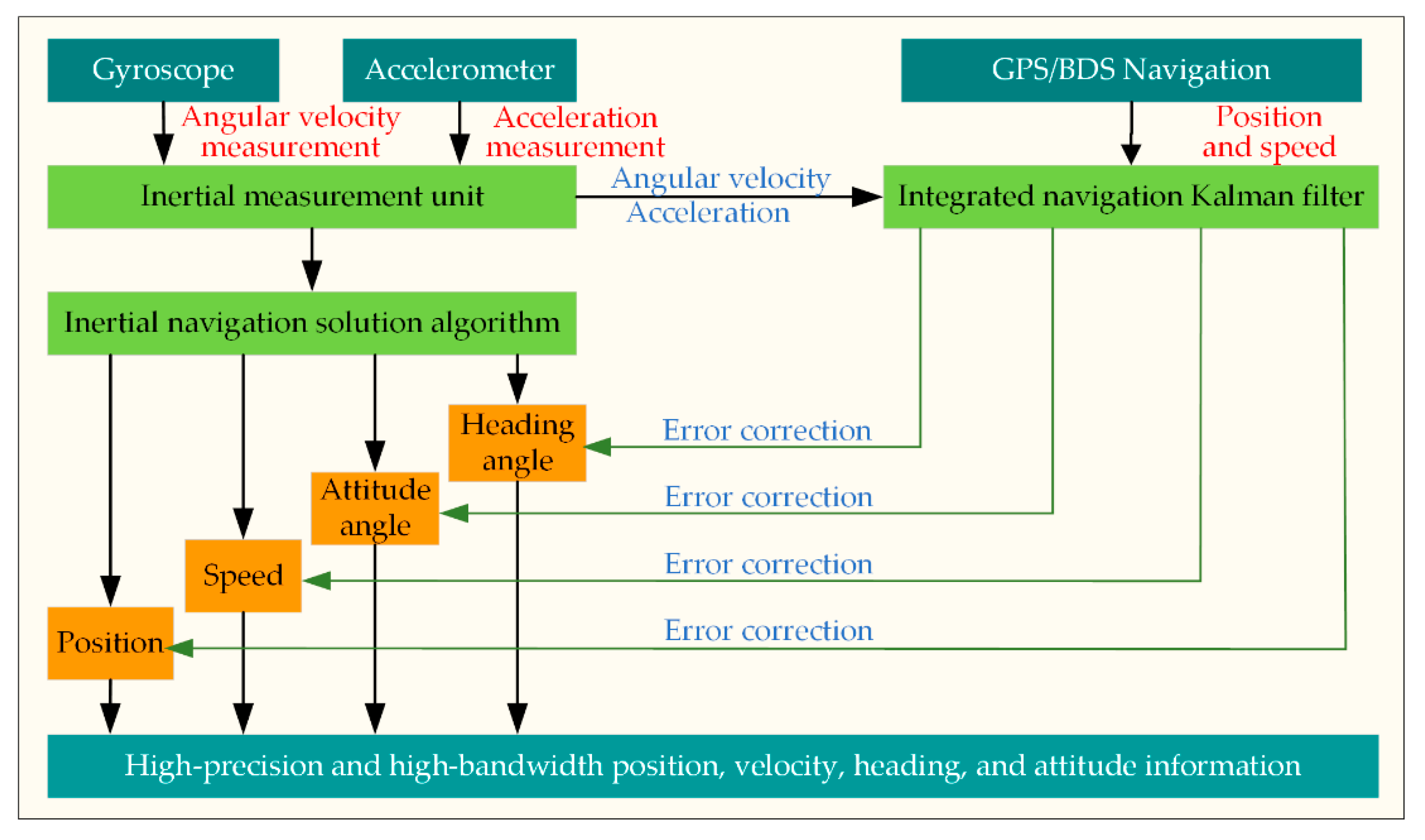

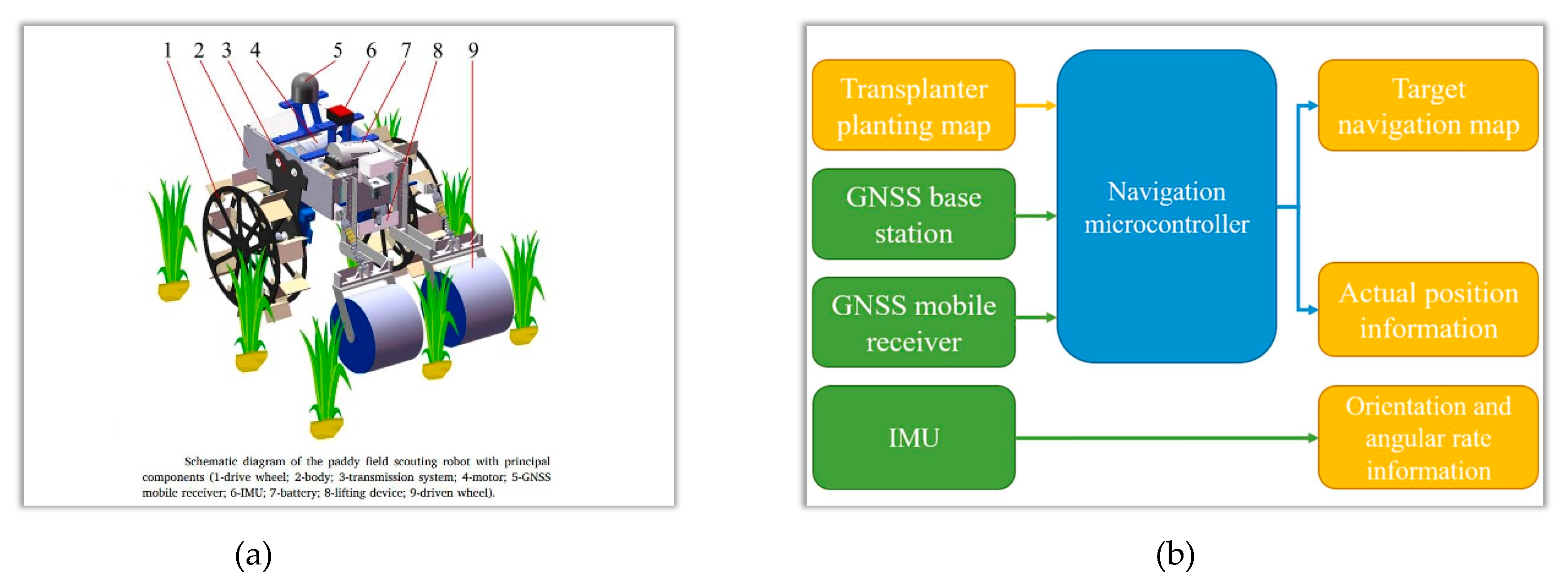

2.1. Positioning technology

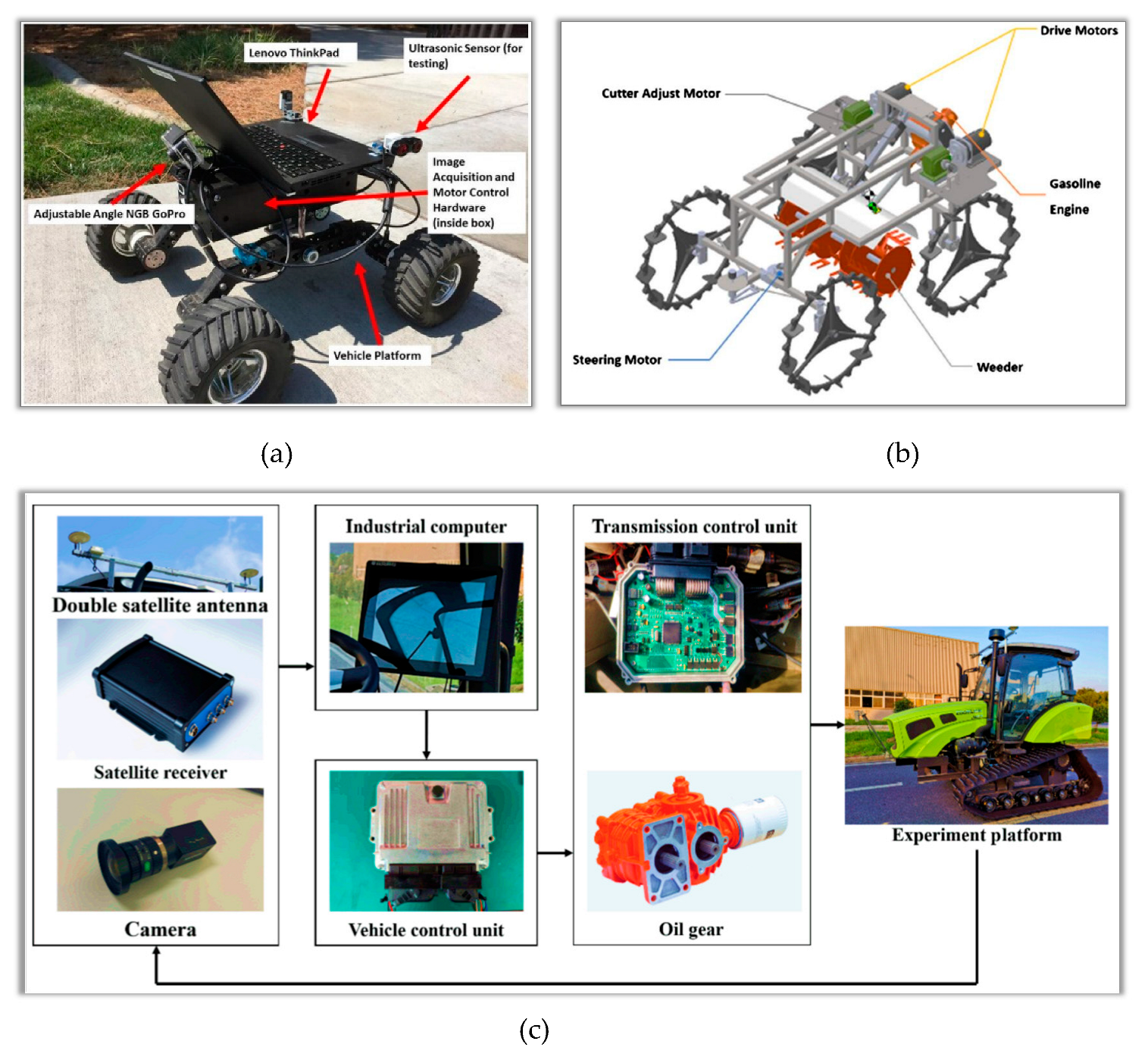

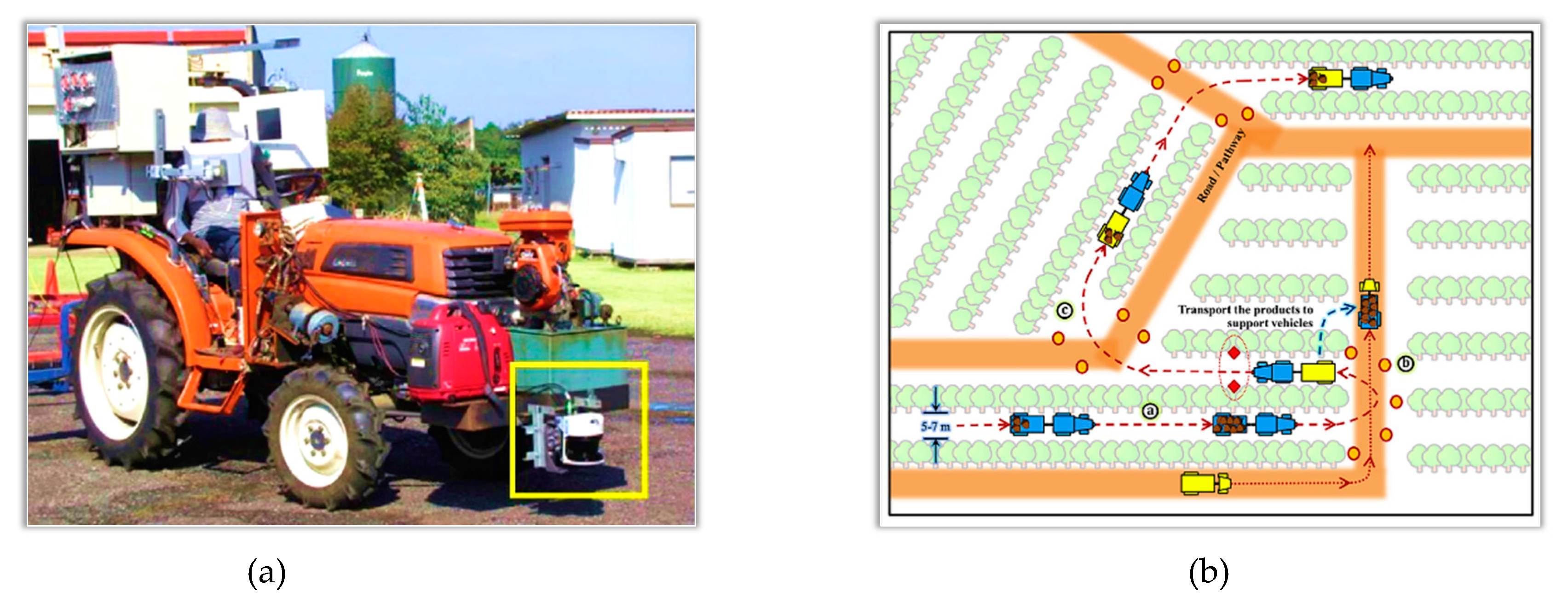

2.2. Sensing technology

2.2.1. Field environment perception

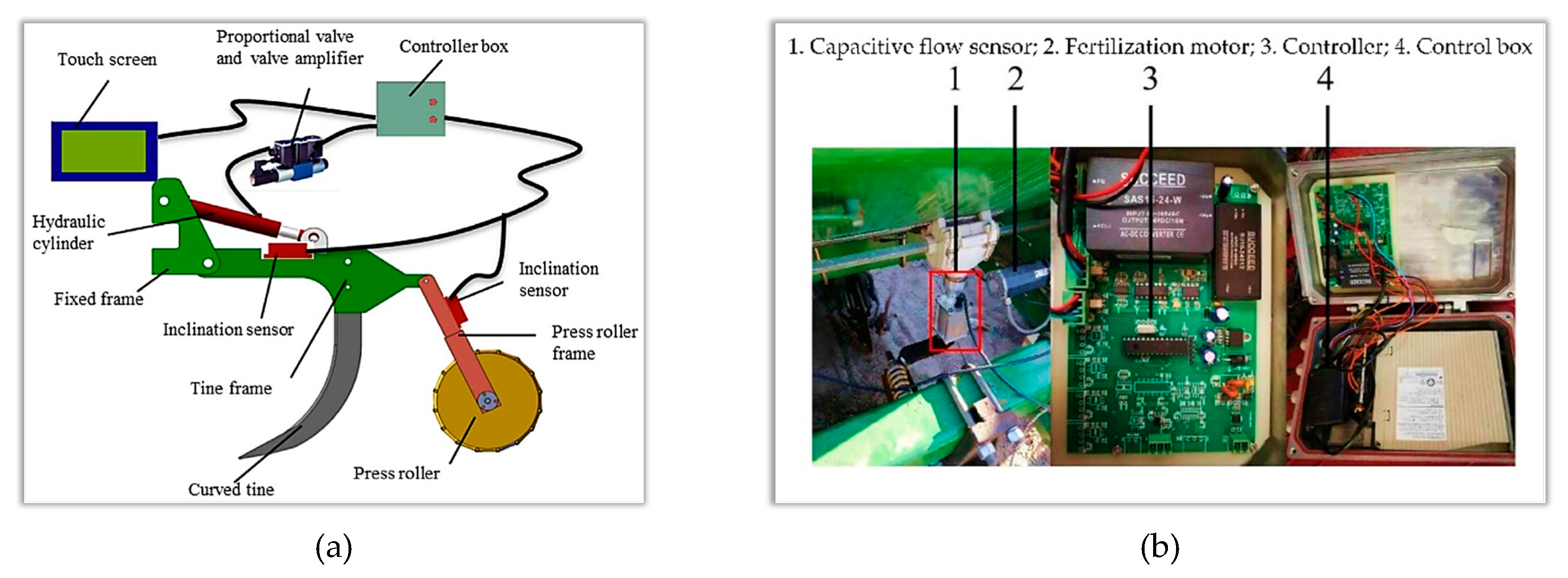

2.2.2. Operation state perception

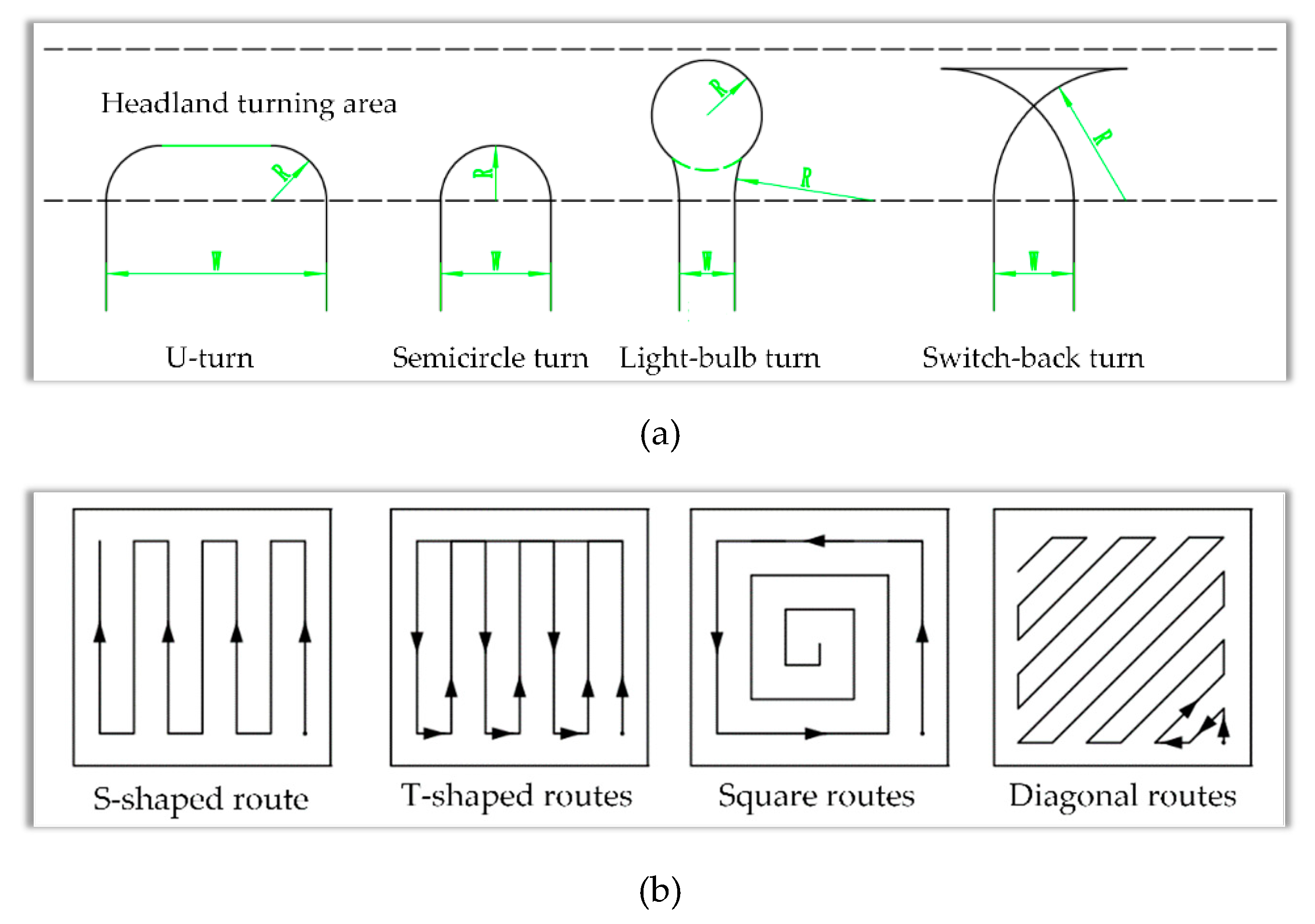

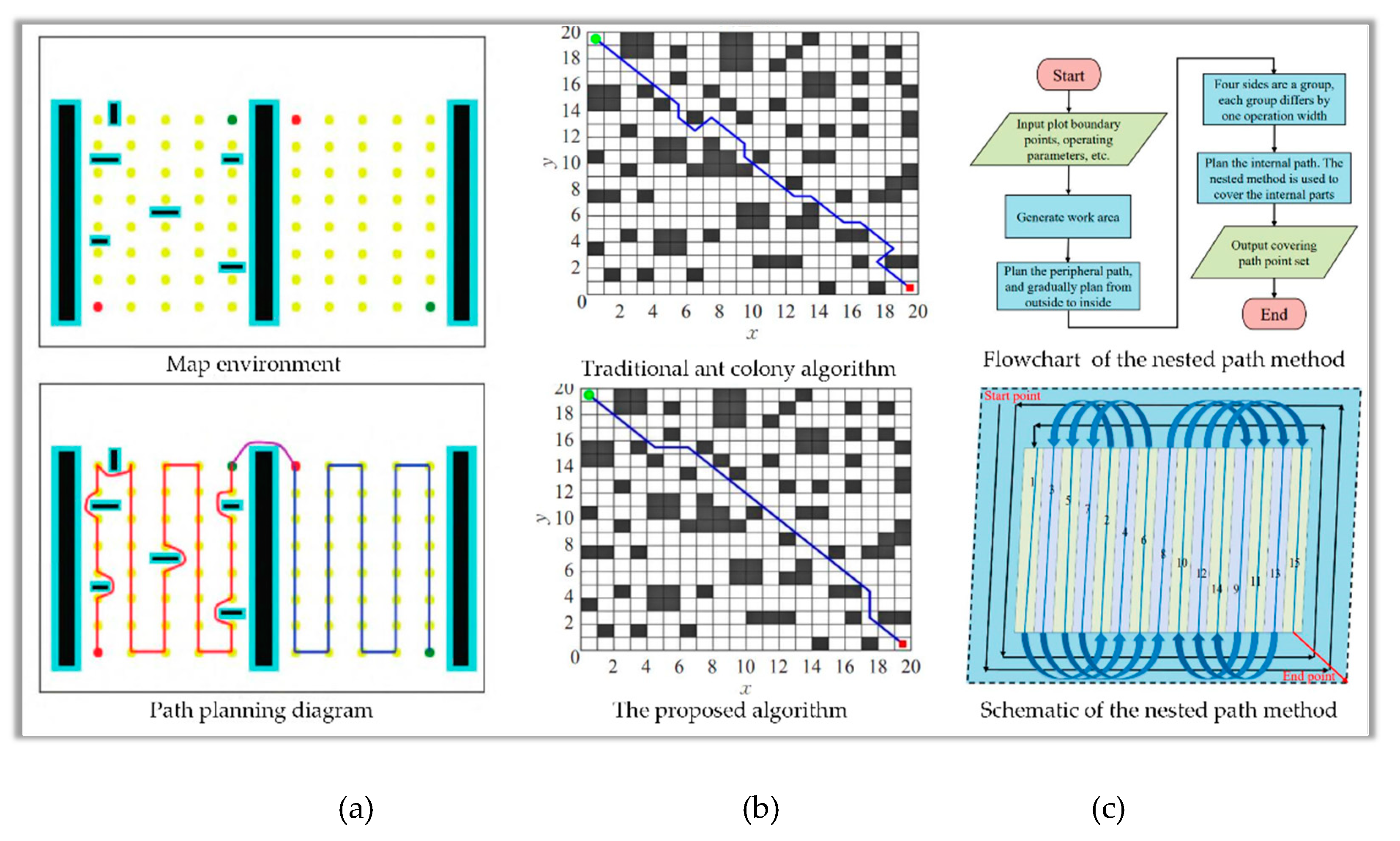

3. Path planning techniques of UATs

3.1. Path planning optimization

3.1.1. Factors of path planning

3.1.2. Optimization strategies

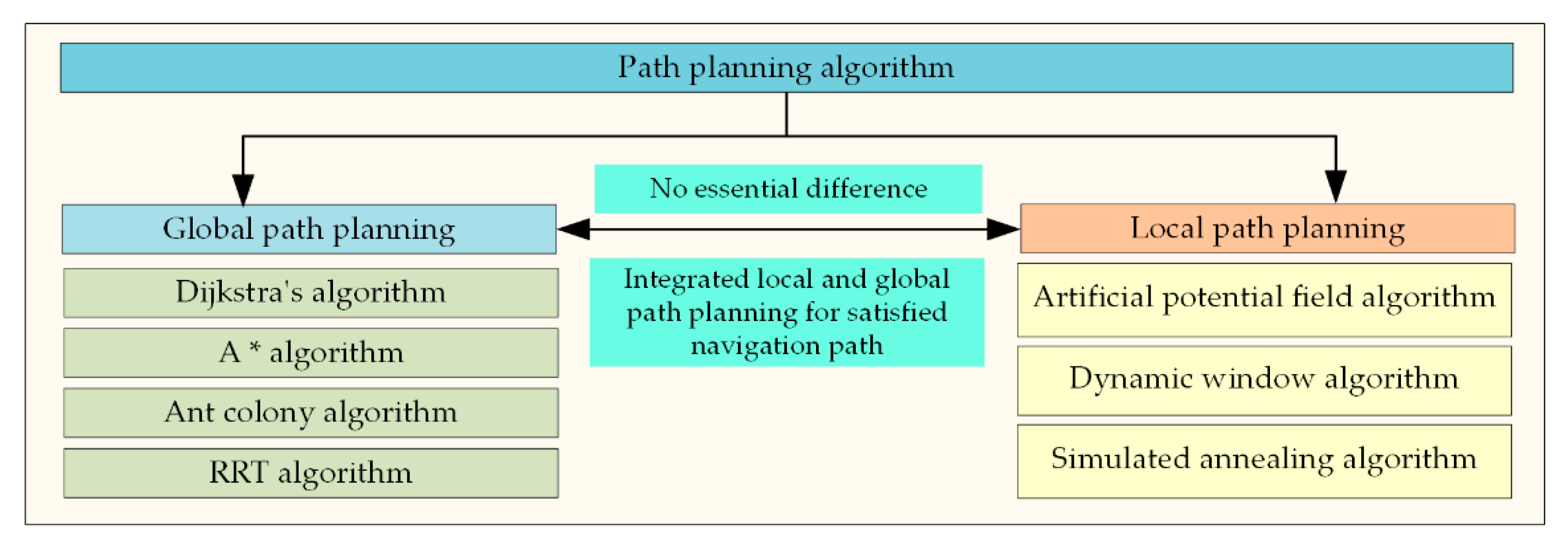

3.2. Global path planning

3.3. Local path planning

4. Path-tracking techniques of UATs

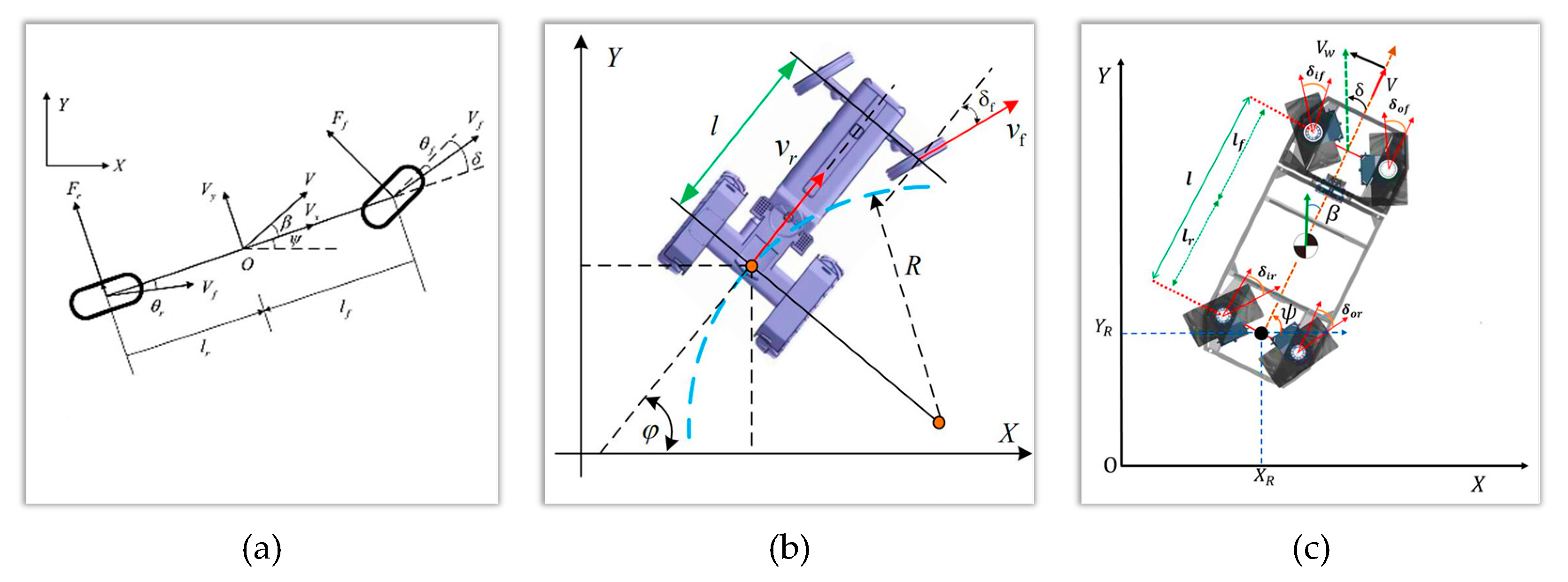

4.1. Motion model for path-tracking

4.2. Path tracking algorithms

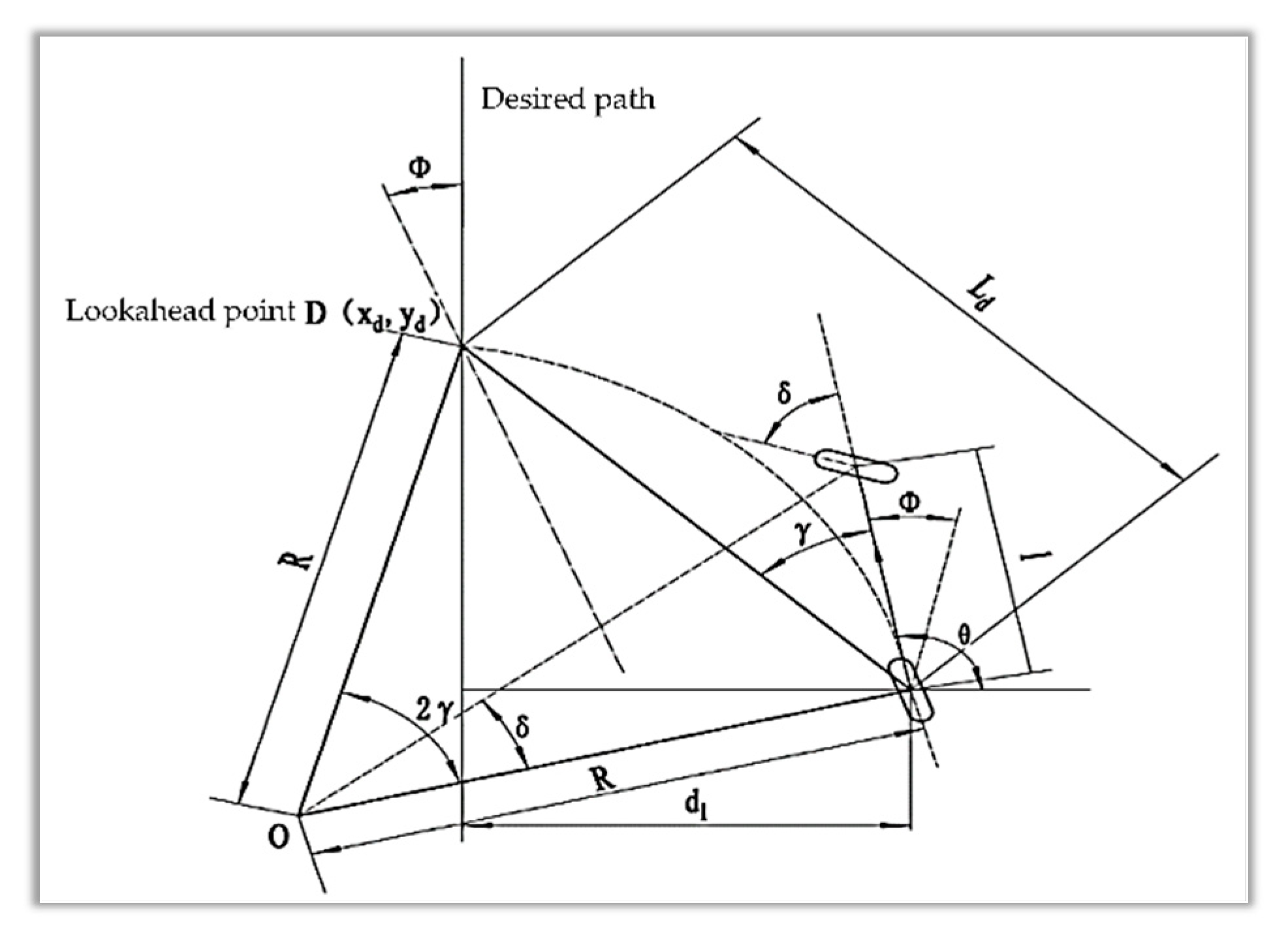

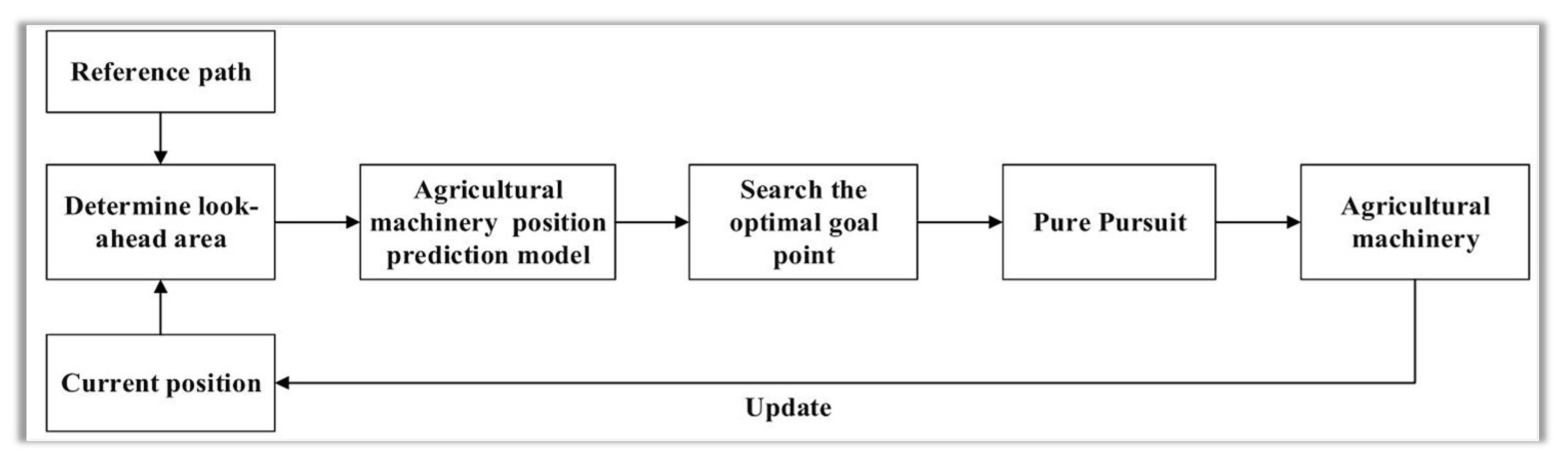

4.2.1. Pure pursuit method

4.2.2. Pole-zero configuration

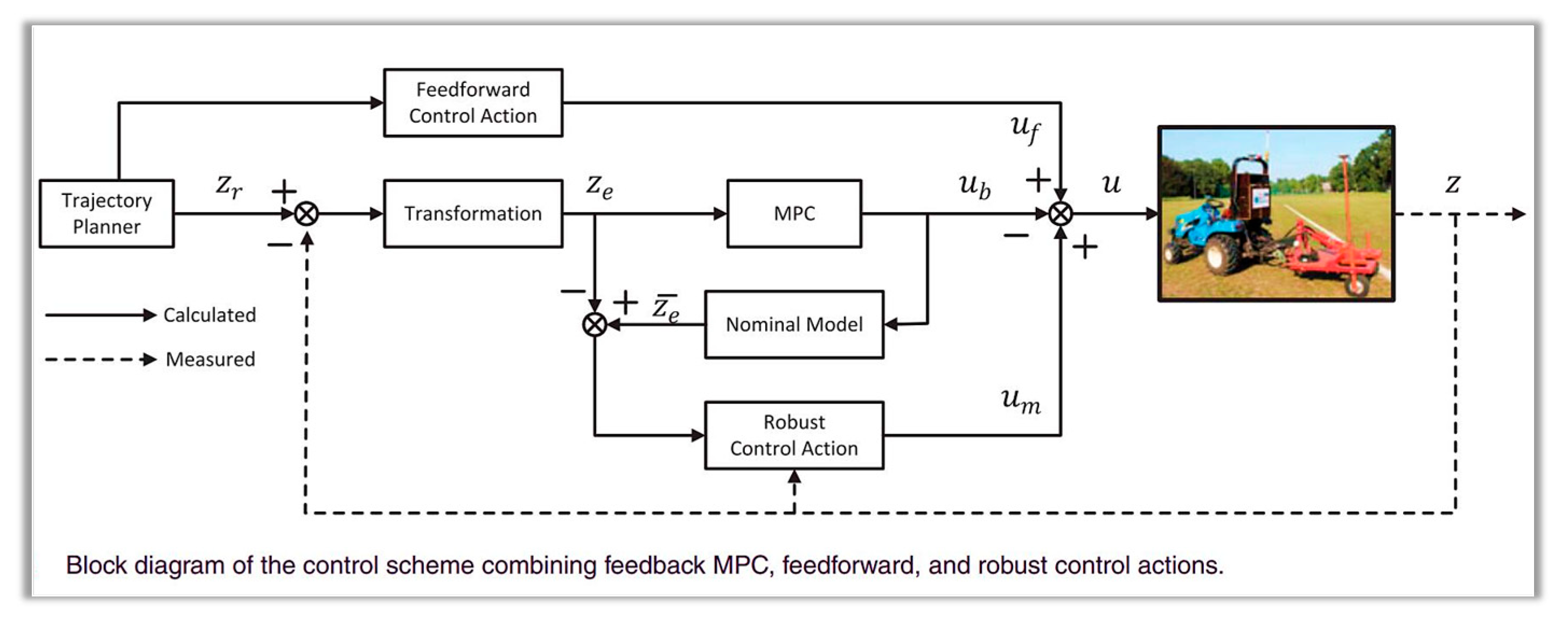

4.2.3. Model predictive control

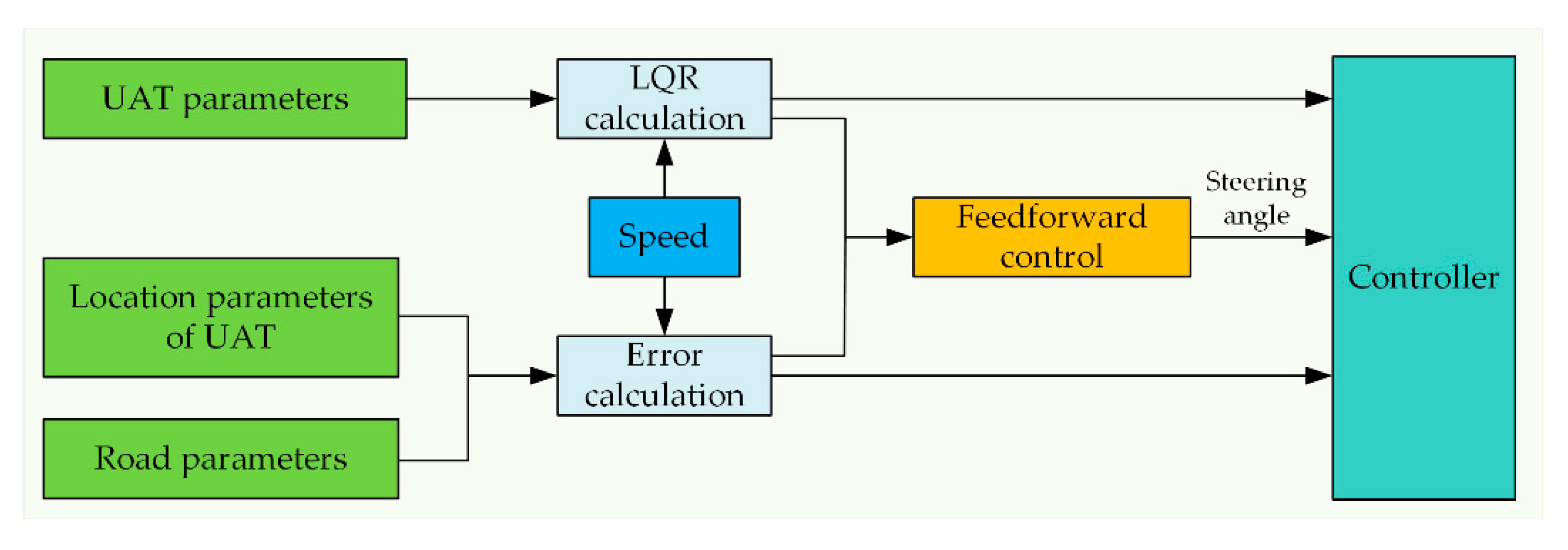

4.2.4. Linear quadratic regulator

4.2.5. Other novel approaches

5. Motion control techniques of UATs

5.1. Control methods for automatic navigation

5.1.1. PID control

5.1.2. Neural networks

5.1.3. Fuzzy control

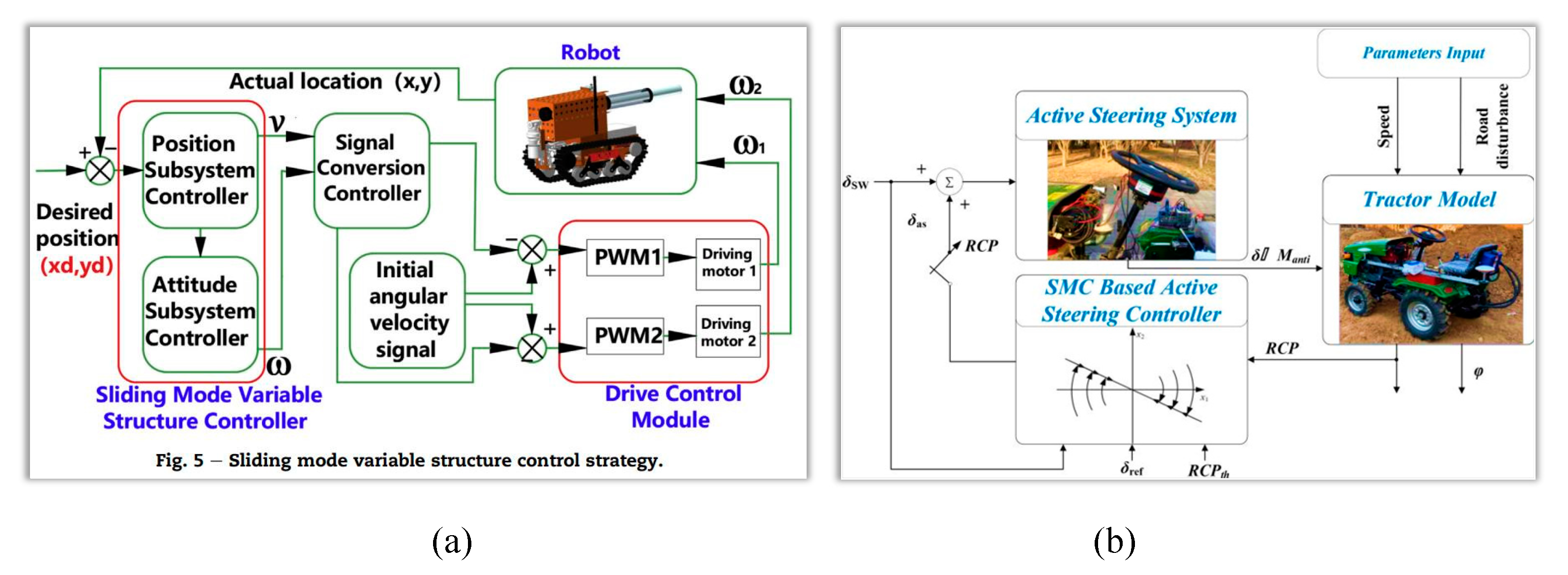

5.1.4. Sliding mode control

5.2. Motion control of UATs

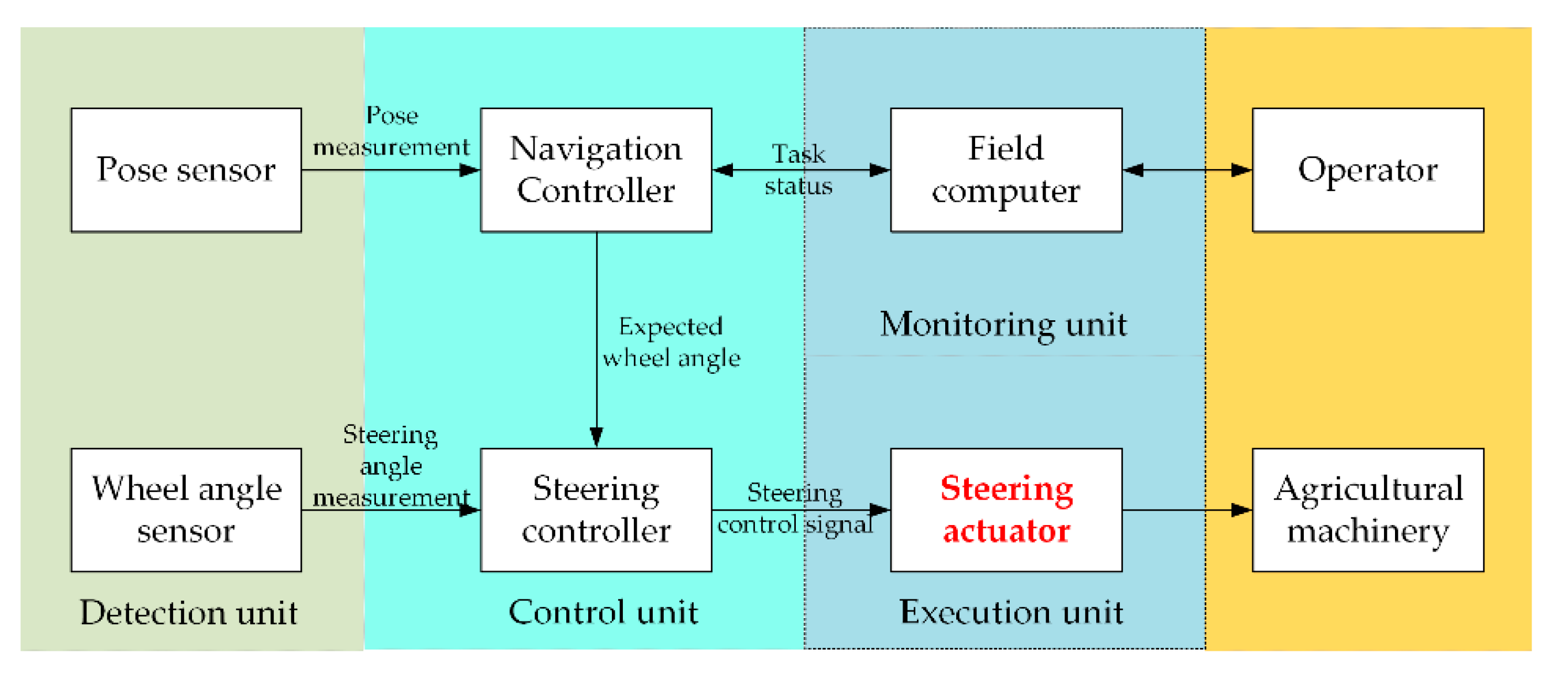

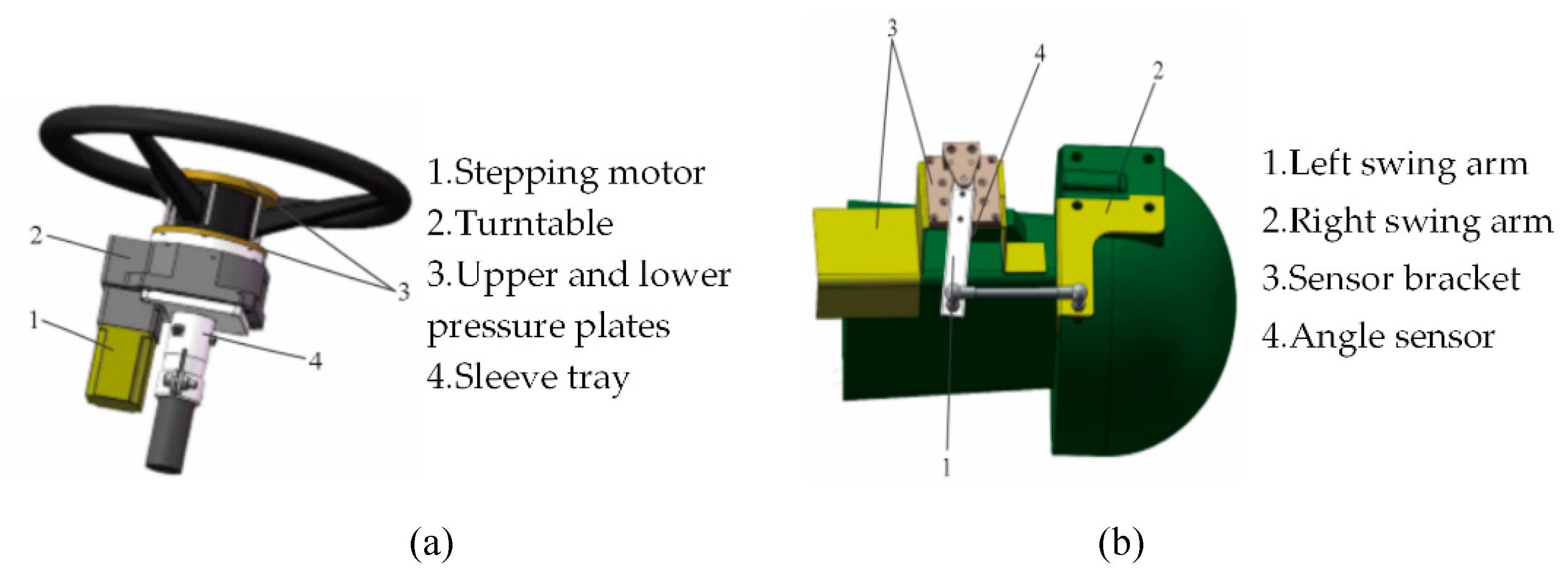

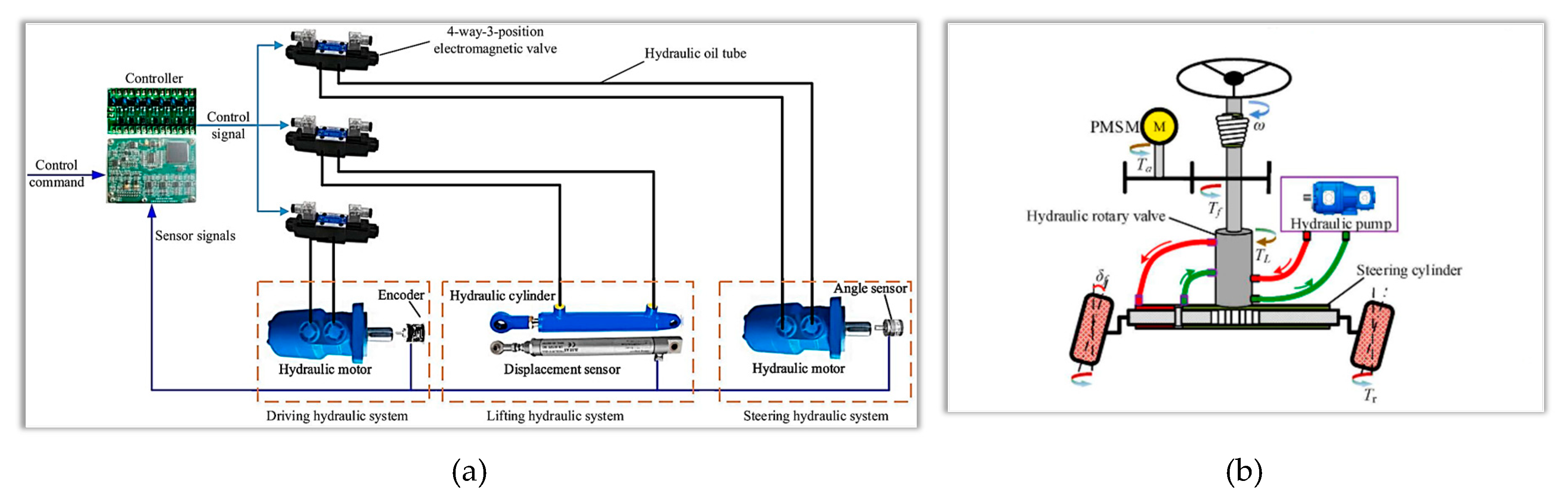

5.2.1. Steering control

5.2.2. Brake control

5.2.3. Speed control

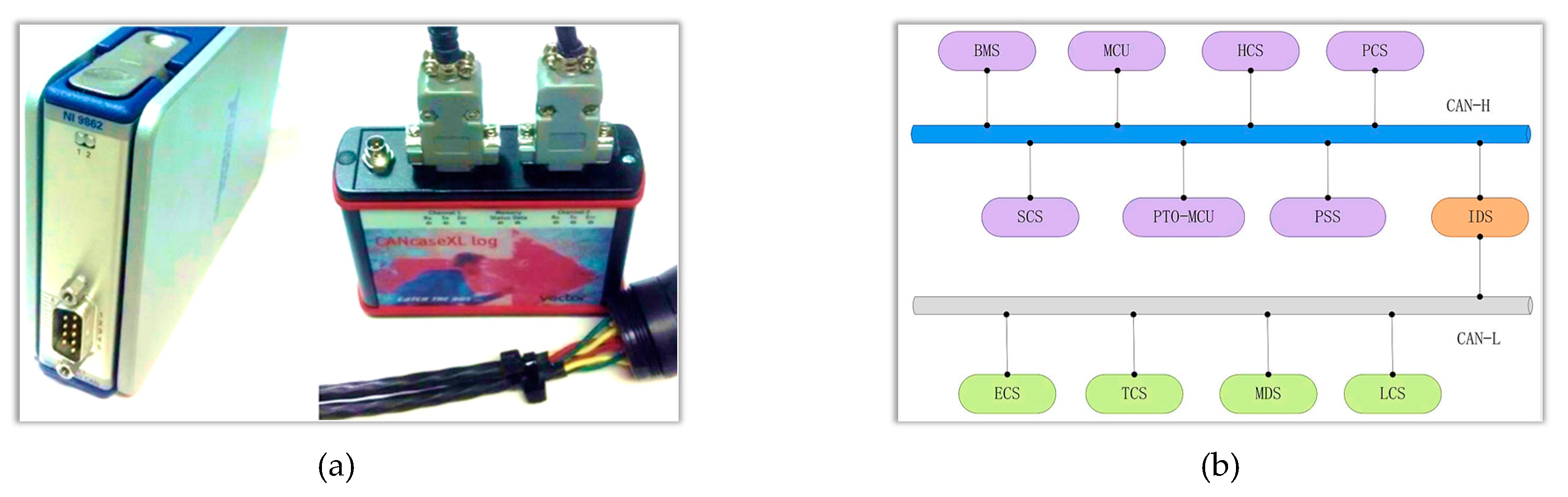

5.3. Controller area network bus technology for UATs

6. Conclusions and outlook

6.1. Conclusions

6.2. Future outlook

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lu, W.; Li, J.; Qin, H.; Shu, L.; and Song, A. On Dual-Mode Driving Control Method for a Novel Unmanned Tractor With High Safety and Reliability. IEEE-CAA J. Autom. Sin. 2023, 10, 254–271. [Google Scholar] [CrossRef]

- Han, X.; Lai, Y.; and Wu, H. A Path Optimization Algorithm for Multiple Unmanned Tractors in Peach Orchard Management. Agronomy-Basel. 2022, 12, 856. [Google Scholar] [CrossRef]

- Xu, R.; Huang, B.; and Yin, H. A Review of the Large-Scale Application of Autonomous Mobility of Agricultural Platform. Comput. Electron. Agric. 2023, 206, 107628. [Google Scholar] [CrossRef]

- Ji, X.; Ding, S.; Wei, X.; and Cui, B. Path Tracking of Unmanned Agricultural Tractors Based on a Novel Adaptive Second-Order Sliding Mode Control. J. Franklin Inst.-Eng. Appl. Math. 2023, 360, 5811–5831. [Google Scholar] [CrossRef]

- Xie, B.; Jin, Y.; Faheem, M.; Gao, W.; Liu, J.; Jiang, H.; Cai, L.; and Li, Y. Research Progress of Autonomous Navigation Technology for Multi-Agricultural Scenes. Comput. Electron. Agric. 2023, 211, 107963. [Google Scholar] [CrossRef]

- Yin, X.; Wang, Y.; Chen, Y.; Jin, C.; and Du, J. Development of Autonomous Navigation Controller for Agricultural Vehicles. Int. J. Agric. Biol. Eng. 2020, 13, 70–76. [Google Scholar] [CrossRef]

- Liu, Y.; Ma, X.; Shu, L.; Hancke, G.P.; and Mahfouz, A.M.A. From Industry 4.0 to Agriculture 4.0: Current Status, Enabling Technologies, and Research Challenges. IEEE Trans. Ind. Inform. 2021, 17, 4322–4334. [Google Scholar] [CrossRef]

- Marinoudi, V.; Sørensen, C.G.; Pearson, S.; and Bochtis, D. Robotics and Labour in Agriculture: A Context Consideration. Biosyst. Eng. 2019, 184, 111–121. [Google Scholar] [CrossRef]

- Yoshida, S. Study on Cloud-Based GNSS Positioning Architecture with Satellite Selection Algorithm and Report of Field Experiments. IEICE Trans. Commun. 2022, 105, 388–398. [Google Scholar] [CrossRef]

- Ruan, Z.; Chang, P.; Cui, S.; Luo, J.; Gao, R.; and Su, Z. A Precise Crop Row Detection Algorithm in Complex Farmland for Unmanned Agricultural Machines. Biosyst. Eng. 2023, 232, 1–12. [Google Scholar] [CrossRef]

- Liang, Y.; Zhou, K.; and Wu, C. Environment Scenario Identification Based on GNSS Recordings for Agricultural Tractors. Comput. Electron. Agric. 2022, 195, 106829. [Google Scholar] [CrossRef]

- Fue, K.; Porter, W.; Barnes, E.; Li, C.; and Rains, G. Autonomous Navigation of a Center-Articulated and Hydrostatic Transmission Rover using a Modified Pure Pursuit Algorithm in a Cotton Field. Sensors. 2020, 20, 4412. [Google Scholar] [CrossRef] [PubMed]

- Jing, Y.; Li, Q.; Ye, W.; and Liu, G. Development of a GNSS/INS-based Automatic Navigation Land Levelling System. Comput. Electron. Agric. 2022, 213, 108187. [Google Scholar] [CrossRef]

- Yang, L.; Xu, Y.; Li, Y.; Chang, M.; Chen, Z.; Lan, Y.; and Wu, C. Real-Time field road freespace extraction for agricultural machinery autonomous driving based on LiDAR. Comput. Electron. Agric. 2023, 211, 108028. [Google Scholar] [CrossRef]

- Wang, L.; Li, L.; and Qiu, R. Edge Computing-based Differential Positioning Method for BeiDou Navigation Satellite System. KSII Trans. Internet Inf. Syst. 2019, 13, 69–85. [Google Scholar] [CrossRef]

- Wang, H.; and Noguchi, N. Navigation of a Robot Tractor Using the Centimeter Level Augmentation Information via Quasi-Zenith Satellite System. J. Jpn. Soc. Agric. Mach. Food Eng. 2019, 81, 250–255. [Google Scholar] [CrossRef]

- Wu, J.; and Chen, X. Present situation, problems and countermeasures of cotton production mechanization development in Xinjiang Production and Construction Corps. Transactions of the CSAE. 2015, 31, 5–10. (In Chinese) [Google Scholar] [CrossRef]

- Zhao, X.; Wang, K.; Wu, S.; Wen, L.; Chen, Z.; Dong, L.; Sun, M.; and Wu, C. An obstacle avoidance path planner for an autonomous tractor using the minimum snap algorithm. Comput. Electron. Agric. 2023, 207, 107738. [Google Scholar] [CrossRef]

- He, Y.; Zhou, J.; Sun, J.; Jia, H.; Liang, Z.; and Awuah, E. An adaptive control system for path tracking of crawler combine harvester based on paddy ground conditions identification. Comput. Electron. Agric. 2023, 210, 107948. [Google Scholar] [CrossRef]

- Jing, Y.; Liu, G.; and Luo, C. Path tracking control with slip compensation of a global navigation satellite system based tractor scraper land levelling system. Biosyst. Eng. 2021, 212, 360–377. [Google Scholar] [CrossRef]

- Huang, W.; Ji, X.; Wang, A.; Wang, Y.; and Wei, X. Straight-Line Path Tracking Control of Agricultural Tractor-Trailer Based on Fuzzy Sliding Mode Control. Appl. Sci. 2023, 13, 872. [Google Scholar] [CrossRef]

- He, J.; Zhu, J.; Luo, X.; Zhang, Z.; Hu, L.; and Gao, Y. Design of steering control system for rice transplanter equipped with steering wheel-like motor. Transactions of the CSAE. 2019, 35, 10–17. (In Chinese) [Google Scholar] [CrossRef]

- Wu, C.; Wang, D.; Chen, Z.; Song, B.; Yang, L.; and Yang, W. Autonomous driving and operation control method for SF2104 tractors. Transactions of the CSAE. 2020, 36, 42–48. (In Chinese) [Google Scholar] [CrossRef]

- Gan-Mor, S.; Clark, R.L.; Upchurch, B.L. Implement lateral position accuracy under RTK-GPS tractor guidance. Comput. Electron. Agric. 2007, 59, 31–38. [Google Scholar] [CrossRef]

- Liu, Z.; Zhang, Z.; Luo, X.; Wang, H.; Huang, P.; Zhang, J. Design of automatic navigation operation system for Lovol ZP9500 high clearance boom sprayer based on GNSS. Transactions of the CSAE. 2018, 34, 15–21. (In Chinese) [Google Scholar] [CrossRef]

- Zhao, Z.; Zhang, Y.; Long, L.; Lu, Z.; and Shi, J. Efficient and adaptive lidar-visual-inertial odometry for agricultural unmanned ground vehicle. Int. J. Adv. Robot. Syst. 2022, 19, 17298806221094925. [Google Scholar] [CrossRef]

- Bakker, T.; Wouters, H.; van Asselt, K.; Bontsema, J.; Tang, L.; Müller, J.; van Straten, G. A vision-based row detection system for sugar beet. Comput. Electron. Agric. 2008, 60, 87–95. [Google Scholar] [CrossRef]

- Radcliffe, J.; Cox, J.; Bulanon, D.M. Machine vision for orchard navigation. Comput. Ind. 2018, 98, 165–171. [Google Scholar] [CrossRef]

- Kanagasingham, S.; Ekpanyapong, M.; Chahan, R. Integrating machine vision-based row guidance with GPS and compass-based routing to achieve autonomous navigation for a rice field weeding robot. Precis. Agric. 2020, 21, 831–855. [Google Scholar] [CrossRef]

- Mahboub, V.; Mohammadi, D. A Constrained Total Extended Kalman Filter for Integrated Navigation. J. Navigation. 2018, 71, 971–988. [Google Scholar] [CrossRef]

- Ma, Z.; Yin, C.; Du, X.; Zhao, L.; Lin, L.; Zhang, G.; Wu, C. Rice row tracking control of crawler tractor based on the satellite and visual integrated navigation. Comput. Electron. Agric. 2022, 197, 106935. [Google Scholar] [CrossRef]

- Thanpattranon, P.; Ahamed, T.; Takigawa, T. Navigation of autonomous tractor for orchards and plantations using a laser range finder: Automatic control of trailer position with tractor. Biosyst. Eng. 2016, 147, 90–103. [Google Scholar] [CrossRef]

- Lyu, P.; Wang, B.; Lai, J.; Bai, S.; Liu, M.; Yu, W. A Factor Graph Optimization Method for High-Precision IMU-Based Navigation System. IEEE Trans. Instrum. Meas. 2023, 72, 9509712. [Google Scholar] [CrossRef]

- Li, S.; Zhang, M.; Ji, Y.; Zhang, Z.; Cao, R.; Chen, B.; Li, H.; Yin, Y. Agricultural machinery GNSS/IMU-integrated navigation based on fuzzy adaptive finite impulse response Kalman filtering algorithm. Comput. Electron. Agric. 2021, 191, 106524. [Google Scholar] [CrossRef]

- Wang, N. Satellite/Inertial Navigation Integrated Navigation Method Based on Improved Kalman Filtering Algorithm. Mob. Inf. Syst. 2022, 2022, 1–9. [Google Scholar] [CrossRef]

- Xin, X.; Lu, X.; Lu, Y.; Gao, L.; Yu, Z. Vehicle sideslip angle estimation by fusing inertial measurement unit and global navigation satellite system with heading alignment. Mech. Syst. Signal Process. 2021, 150, 107290. [Google Scholar] [CrossRef]

- Tian, Y.; Mai, Z.; Zeng, Z.; Cai, Y.; Yang, J.; Zhao, B.; Zhu, X.; Qi, L. Design and experiment of an integrated navigation system for a paddy field scouting robot. Comput. Electron. Agric. 2023, 214, 108336. [Google Scholar] [CrossRef]

- Liu, H.; Nassar, S.; El-Sheimy, N. Two-filter smoothing for accurate INS/GPS land-vehicle navigation in urban centers. IEEE Trans. Veh. Technol. 2010, 59, 4256–4267. [Google Scholar] [CrossRef]

- Chen, Y.; Chen, L.; Chang, M. A Design of an Unmanned Electric Tractor Platform. Agronomy-Basel. 2022, 12, 112. [Google Scholar] [CrossRef]

- Kago, R.; Vellak, P.; Ehrpais, H.; Noorma, M.; Olt, J. Assessment of power characteristics of an unmanned tractor for operations on peat fields. Agronomy Research 2022, 20, 261–274. [Google Scholar] [CrossRef]

- Zhang, X.; Zhou, Z. Speed control strategy for tractor assisted driving based on chassis dynamometer test. Int. J. Agric. Biol. Eng. 2021, 14, 169–175. [Google Scholar] [CrossRef]

- Luo, C.; Wen, C.; Meng, Z.; Liu, H.; Li, G.; Fu, W.; Zhao, C. Research on the Slip Rate Control of a Power Shift Tractor Based on Wheel Speed and Tillage Depth Adjustment. Agronomy-Basel. 2018, 13, 281. [Google Scholar] [CrossRef]

- Xia, J.; Li, D.; Liu, G.; Cheng, J.; Zheng, K.; Luo, C. Design and Test of Electro-hydraulic Monitoring Device for Hitch Tillage Depth Based on Measurement of Tractor Pitch Angle. Transactions of the CSAM. 2021, 42, 386–395. (In Chinese) [Google Scholar] [CrossRef]

- Suomi, P.; Oksanen, T. Automatic working depth control for seed drill using ISO 11783 remote control messages. Comput. Electron. Agric. 2015, 116, 30–35. [Google Scholar] [CrossRef]

- Chen, K.; Zhao, B.; Zhou, L.; Wang, L.; Wang, Y.; Yuan, Y.; Zheng, Y. Real-time missed seeding monitoring planter based on ring-type capacitance detection sensor. INMATEH-Agric. Eng. 2021, 64, 279–288. [Google Scholar] [CrossRef]

- Wang, Y.; Jing, H.; Zhang, D.; Cui, T.; Zhong, X.; Li, Y. Development and performance evaluation of an electric-hydraulic control system for a subsoiler with flexible tines. Comput. Electron. Agric. 2018, 151, 249–257. [Google Scholar] [CrossRef]

- Wang, B.; Wang, Y.; Wang, H.; Mao, H.; Zhou, L. Research on accurate perception and control system of fertilization amount for corn fertilization planter. Front. Plant Sci. 2023, 13, 1074945. [Google Scholar] [CrossRef]

- Govindaraju, M.; Fontanelli, D.; Kumar, S. S.; Pillai, A. S. Optimized Offline-Coverage Path Planning Algorithm for Multi-Robot for Weeding in Paddy Fields. IEEE Access. 2023, 11, 109868. [Google Scholar] [CrossRef]

- Han, X.; Kim, H.J.; Jeon, C.W.; Moon, H. C.; Kim, J. H.; Seo, I.H. Design and field testing of a polygonal paddy infield path planner for unmanned tillage operations. Comput. Electron. Agric. 2021, 191, 106567. [Google Scholar] [CrossRef]

- Alshammrei, S.; Boubaker, S.; Kolsi, L. Improved Dijkstra algorithm for mobile robot path planning and obstacle avoidance. Comput. Mater. Contin. 2022, 72, 5939–5954. [Google Scholar] [CrossRef]

- Li, X.; Wang, W.; Liu, G.; Li, R.; Li, F. Optimizing the Path of Plug Tray Seedling Transplanting by Using the Improved A* Algorithm. Agriculture. 2022, 12, 1302. [Google Scholar] [CrossRef]

- Cui, Y.; Wang, Y.; He, Z.; Cao, D.; Ma, L.; Li, K. Global Path Planning of Kiwifruit Harvesting Robot Based on the Improved RRT Algorithm. Transactions of the CSAM. 2022, 53, 151–158. (In Chinese) [Google Scholar] [CrossRef]

- Cao, R.; Li, S.; Ji, Y.; Zhang, Z.; Xu, H.; Zhang, M.; Li, M.; Li, H. Task assignment of multiple agricultural machinery cooperation based on improved ant colony algorithm. Comput. Electron. Agric. 2021, 182, 105993. [Google Scholar] [CrossRef]

- He, Y.; Fan, X. Application of Improved Ant Colony Optimization in Robot Path Planning. Comput. Eng. Appl. 2021, 57, 276–282. [Google Scholar] [CrossRef]

- Wang, N.; Yang, X.; Wang, T.; Xiao, J.; Zhang, M.; Wang, H.; Li, H. Collaborative path planning and task allocation for multiple agricultural machines. Comput. Electron. Agric. 2023, 213, 108218. [Google Scholar] [CrossRef]

- İlhan, İ. An Improved Simulated Annealing Algorithm with Crossover Operator for Capacitated Vehicle Routing Problem. Swarm. Evol. Comput. 2021, 64, 100911. [Google Scholar] [CrossRef]

- Yang, L.; Wang, C.; Gao, L.; Song, Y.; Li, X. An improved simulated annealing algorithm based on residual network for permutation flow shop scheduling. Complex. Intell. Syst. 2021, 7, 1173–1183. [Google Scholar] [CrossRef]

- Khan, S. A.; Mahmood, A. Fuzzy goal programming-based ant colony optimization algorithm for multi-objective topology design of distributed local area networks. Neural. Comput. Appl. 2019, 31, 2329–2347. [Google Scholar] [CrossRef]

- Yin, X.; Cai, P.; Zhao, K.; Zhang, Y.; Zhou, Q.; Yao, D. Dynamic Path Planning of AGV Based on Kinematic Constraint A* Algorithm and Following DWA Fusion Algorithms. Sensors. 2023, 23, 4102. [Google Scholar] [CrossRef]

- Ge, Z.; Man, Z.; Wang, Z.; Bai, X.; Wang, X.; Xiong, F.; Li, D. Robust adaptive sliding mode control for path tracking of unmanned agricultural vehicles. Comput. Electr. Eng. 2023, 108, 108693. [Google Scholar] [CrossRef]

- Fan, X.; Wang, J.; Wang, H.; Yang, L.; Xia, C. LQR Trajectory Tracking Control of Unmanned Wheeled Tractor Based on Improved Quantum Genetic Algorithm. Machines. 2023, 11, 62. [Google Scholar] [CrossRef]

- Raikwar, S.; Fehrmann, J.; Herlitzius, T. Navigation and control development for a four-wheel-steered mobile orchard robot using model-based design. Comput. Electron. Agric. 2022, 202, 107410. [Google Scholar] [CrossRef]

- Xu, L.; You, J.; Yuan, H. Real-Time Parametric Path Planning Algorithm for Agricultural Machinery Kinematics Model Based on Particle Swarm Optimization. Agriculture-Basel. 2020, 13, 1960. [Google Scholar] [CrossRef]

- Joglekar, A.; Sathe, S.; Misurati, N.; Srinivasan, S.; Schmid, M.J.; Krovi, V. Deep Reinforcement Learning Based Adaptation of Pure-Pursuit Path-Tracking Control for Skid-Steered Vehicles. IFAC PapersOnLine 2022, 55-37, 400–407. [Google Scholar] [CrossRef]

- Xu, L.; Yang, Y.; Chen, Q.; Fu, F.; Yang, B.; Yao, L. Path Tracking of a 4WIS-4WID Agricultural Machinery Based on Variable Look-Ahead Distance. Appl. Sci-Basel. 2022, 12, 8651. [Google Scholar] [CrossRef]

- Yang, Y.; Li, Y.; Wen, X.; Zhang, G.; Ma, Q.; Cheng, S.; Qi, J.; Xu, L.; Chen, L. An optimal goal point determination algorithm for automatic navigation of agricultural machinery: Improving the tracking accuracy of the Pure Pursuit algorithm. Comput. Electron. Agric. 2022, 194, 106760. [Google Scholar] [CrossRef]

- Piron, D.; Pathak, S.; Deraemaeker, A.; Collette, C. On the link between pole-zero distance and maximum reachable damping in MIMO systems. Mech. Syst. Signal Process. 2022, 181, 109519. [Google Scholar] [CrossRef]

- Kayacan, E.; Ramon, H.; Saeys, W. Robust trajectory tracking error model-based predictive control for unmanned ground vehicles. IEEE/ASME Trans. Mechatron. 2015, 21, 806–814. [Google Scholar] [CrossRef]

- Liu, Z.; Zheng, W.; Wang, N.; Lyu, Z.; Zhang, W. Trajectory tracking control of agricultural vehicles based on disturbance test. Int. J. Agric. Biol. Eng. 2020, 13, 138–145. [Google Scholar] [CrossRef]

- He, J.; Hu, L.; Wang, P.; Liu, Y.; Man, Z.; Tu, T.; Yang, L.; Li, Y.; Yi, Y.; Li, W.; Luo, X. Path tracking control method and performance test based on agricultural machinery pose correction. Comput. Electron. Agric. 2022, 200, 107185. [Google Scholar] [CrossRef]

- Ko, H.S.; Lee, K.Y.; Kim, H.C. An intelligent-based LQR controller design to power system stabilization. Electr. Pow. Syst. Res. 2004, 71(1), 1–9. [Google Scholar] [CrossRef]

- Wang, L.; Ni, H.; Zhou, W.; Pardalos, P. M.; Fang, J.; Fei, M. MBPOA-based LQR controller and its application to the double-parallel inverted pendulum system. Eng. Appl. Artif. Intel. 2014, 36, 262–268. [Google Scholar] [CrossRef]

- Bevly, D.M.; Gerdes, J.C.; Parkinson, B.W. A new yaw dynamic model for improved high-speed control of a farm tractor. J. Dyn. Syst-T. Asme. 2002, 124, 659–667. [Google Scholar] [CrossRef]

- Cui, B.; Sun, Y.; Ji, F.; Wei, X.; Zhu, Y.; Zhang, S. Study on whole field path tracking of agricultural machinery based on fuzzy Stanley model. Transactions of the CSAM. 2022, 53, 43–48+88. (In Chinese) [Google Scholar] [CrossRef]

- Bodur, M.; Kiani, E.; Hacısevki, H. Double look-ahead reference point control for autonomous agricultural vehicles. Biosyst. Eng. 2012, 113, 173–186. [Google Scholar] [CrossRef]

- Dong, F.; Heinemann, W.; Kasper, R. Development of a row guidance system for an autonomous robot for white asparagus harvesting. Comput. Electron. Agric. 2011, 79, 216–225. [Google Scholar] [CrossRef]

- Murakami, N.; Ito, A.; Will, J.D.; Steffen, M.; Inoue, K.; Kita, K.; Miyaura, S. Development of a teleoperation system for agricultural vehicles. Comput. Electron. Agric. 2008, 63, 81–88. [Google Scholar] [CrossRef]

- Gao, L.; Hu, J.; Li, T. DMC-PD cascade control method of the automatic steering system in the navigation control of agricultural machines. The 11th World Congress on Intelligent Control and Automation, Shenyang, China, 2014. [CrossRef]

- He, J.; Man, Z.; Hu, L.; Luo, X.; Wang, P.; Li, M.; Li, W. Path tracking control method and experiments for the crawler-mounted peanut combine harvester. Transactions of the CSAE. 2023, 39, 9–17. (In Chinese) [Google Scholar] [CrossRef]

- Li, L.; Wang, H.; Lian, J.; Ding, X.; Cao, W. A Lateral Control Method of Intelligent Vehicle Based on Fuzzy Neural Network. Adv. Mech. Eng. 2015, 7, 296209. [Google Scholar] [CrossRef]

- Vargas-Meléndez, L.; Boada, B.L.; Boada, M.J.L.; Gauchía, A.; Díaz, V. A Sensor Fusion Method Based on an Integrated Neural Network and Kalman Filter for Vehicle Roll Angle Estimation. Sensors. 2016, 16, 1400. [Google Scholar] [CrossRef]

- Meng, Q.; Qiu, R.; Zhang, M.; Liu, G.; Zhang, Z.; Xiang, M. Navigation System of Agricultural Vehicle Based on Fuzzy Logic Controller with Improved Particle Swarm Optimization Algorithm. Transactions of the CSAM. 2015, 46, 43–48+88. (In Chinese) [Google Scholar] [CrossRef]

- Xue, J.; Zhang, L.; Grift, T.E. Variable field-of-view machine vision-based row guidance of an agricultural robot. Comput. Electron. Agric. 2012, 84, 176–185. [Google Scholar] [CrossRef]

- Kumar, S.; Ajmeri, M. Optimal variable structure control with sliding modes for unstable processes. J. Cent. South. Univ. 2021, 28, 3147–3158. [Google Scholar] [CrossRef]

- Li, Z.; Chen, L.; Zheng, Q.; Dou, X.; Lu, Y. Control of a path following caterpillar robot based on a sliding mode variable structure algorithm. Biosyst. Eng. 2019, 186, 293–306. [Google Scholar] [CrossRef]

- Jia, Q.; Zhang, X.; Yuan, Y.; Fu, T.; Wei, L.; Zhao, B. Fault-tolerant adaptive sliding mode control method of tractor automatic steering system. Transactions of the CSAE. 2018, 34, 76–84. (In Chinese) [Google Scholar] [CrossRef]

- He, Z.; Song, Z.; Wang, L.; Zhou, X.; Gao, J.; Wang, K.; Yang, M.; Li, Z. Fasting the stabilization response for prevention of tractor rollover using active steering: Controller parameter optimization and real-vehicle dynamic tests. Comput. Electron. Agric. 2023, 204, 107525. [Google Scholar] [CrossRef]

- Hu, J.; Gao, L.; Bai, X.; Li, T.; Liu, X. Review of research on automatic guidance of agricultural vehicles. Transactions of the CSAE. 2015, 31, 1–10. (In Chinese) [Google Scholar] [CrossRef]

- Zhang, M.; Xiang, M.; Wei, S.; Ji, Y.; Qiu, R.; Meng, Q. Design and Implementation of a Corn Weeding-cultivating Integrated Navigation System Based on GNSS and MV. Transactions of the CSAM. 2015, 16, 8–14. (In Chinese) [Google Scholar] [CrossRef]

- Li, W.; Xue, T.; Mao, E.; Du, Y.; Li, Z.; He, X. Design and Experiment of Multifunctional Steering System for High Clearance Self-propelled Sprayer. Transactions of the CSAM. 2019, 50, 141–151. [Google Scholar] [CrossRef]

- Yue, G.; Pan, Y. Intelligent control system of agricultural unmanned tractor tillage trajectory. J. Intell. Fuzzy. Syst. 2020, 38, 7449–7459. [Google Scholar] [CrossRef]

- Yang, Y.; Zhang, G.; Chen, Z.; Wen, X.; Cheng, S.; Ma, Q.; Qi, J.; Zhou, Y.; Chen, L. An independent steering driving system to realize headland turning of unmanned tractors. Comput. Electron. Agric. 2022, 201, 107278. [Google Scholar] [CrossRef]

- Xu, G.; Chen, M.; He, X.; Liu, Y.; Wu, J.; Diao, P. Research on state-parameter estimation of unmanned tractor: A hybrid method of DEKF and ARBFNN. Eng. Appl. Artif. Intel. 2024, 127, 107402. [Google Scholar] [CrossRef]

- Li, Y.; Cao, Q.; Liu, F. Design of control system for driverless tractor. MATEC Web of Conferences. 2020, 309. [Google Scholar] [CrossRef]

- Zhou, X.; Zhao, W.; Wang, C.; Luan, Z. Energy analysis and optimization design of vehicle electro-hydraulic compound steering system. DEStech Transactions on Environment Energy and Earth Science. 2019, 255, 113713. [Google Scholar] [CrossRef]

- He, Z.; Bao, Y.; Yu, Q.; Lu, P.; He, Y.; Liu, Y. Dynamic path planning method for headland turning of unmanned agricultural vehicles. Comput. Electron. Agric. 2023, 206, 107699. [Google Scholar] [CrossRef]

- Davis, R.I.; Burns, A.; Bril, R.J.; Lukkien, J.J. Controller area network (CAN) schedulability analysis: Refuted, revisited and revised. Real-Time Syst. 2007, 35, 239–272. [Google Scholar] [CrossRef]

- Rohrer, R.A.; Pitla, S.K.; Luck, J.D. Tractor CAN bus interface tools and application development for real-time data analysis. Comput. Electron. Agric. 2019, 163, 104847. [Google Scholar] [CrossRef]

- Marx, S.E.; Luck, J.D.; Pitla, S.K.; Hoy, R.M. Comparing various hardware/software solutions and conversion methods for Controller Area Network (CAN) bus data collection. Comput. Electron. Agric. 2016, 128, 141–148. [Google Scholar] [CrossRef]

- Liu, M.; Han, B.; Xu, L.; Li, Y. CAN bus network design of bifurcated power electric tractor. Peer. Peer. Netw. Appl. 2021, 14, 2306–2315. [Google Scholar] [CrossRef]

| Operation State | Perception Method | Advantages | Disadvantages |
|---|---|---|---|
| Vehicle Speed [41] | Radar speedometer, ground wheel, and GPS | Accurate ground wheel measurements at low speeds, accurate radar and GPS measurements at high speeds [42] | Inability to achieve high detection accuracy from low to high speeds |
| Tillage Depth [43] | Indirect detection using dual inclinometers, depth measurement using suspension angle sensors | Overcoming errors caused by field residue coverage and machinery vibration | Indirect calculation of tillage depth based on complex mathematical models with limited universality |
| Seeding Depth [44] | Combination of angle sensors and ultrasonic sensors | High stability and accuracy | Specific to the type of seed unit |
| Fertilizer Application [45] | Capacitance and electrostatic induction | Correlation between fertilizer flow rate and output signal | Accuracy influenced by particle size and flow rate |
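The vehicle-speed row of the table notes that ground wheel measurements are accurate at low speeds, radar and GPS measurements at high speeds, and that no single method is accurate across the whole range. One way to picture how the two kinds of reading could be combined is a speed-dependent weighted blend. The sketch below is illustrative only and is not taken from the cited works: the function name `blend_speed` and the crossover thresholds `low` and `high` are hypothetical, and real values would have to come from calibration.

```python
def blend_speed(wheel_mps: float, radar_mps: float,
                low: float = 1.0, high: float = 3.0) -> float:
    """Blend a ground-wheel and a radar/GPS speed reading (both in m/s).

    Assumption (hypothetical thresholds): below `low` the wheel reading is
    trusted fully, above `high` the radar/GPS reading is trusted fully, and
    in between the weight shifts linearly from one to the other.
    """
    if low >= high:
        raise ValueError("low must be smaller than high")

    # Use the mean of both readings to decide which speed regime applies.
    ref = 0.5 * (wheel_mps + radar_mps)

    if ref <= low:
        w = 0.0                      # low speed: ground wheel only
    elif ref >= high:
        w = 1.0                      # high speed: radar/GPS only
    else:
        w = (ref - low) / (high - low)  # linear crossover

    return (1.0 - w) * wheel_mps + w * radar_mps


if __name__ == "__main__":
    print(blend_speed(0.5, 0.9))   # low-speed regime, wheel reading is used
    print(blend_speed(4.0, 4.2))   # high-speed regime, radar/GPS reading is used
    print(blend_speed(1.8, 2.2))   # crossover regime, equal weighting here
```

A linear crossover is chosen here only because it is the simplest continuous weighting; a filter-based fusion would be an equally plausible design.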

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).