2.2. Methodology

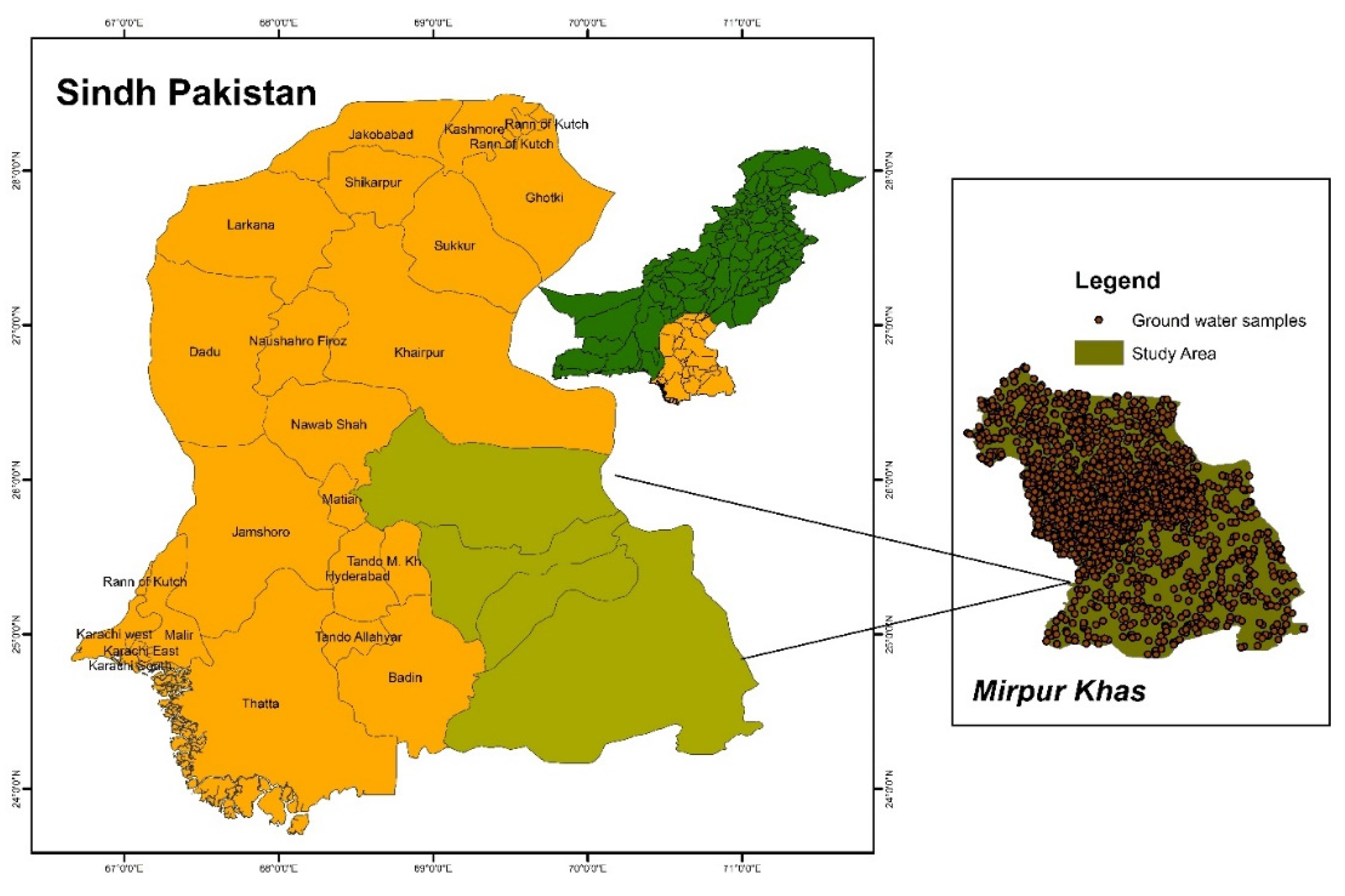

The research methodology involved collecting 422 water samples from multiple sites across Mirpurkhas, Sindh, Pakistan, covering locations considered significant for groundwater extraction and consumption. Parameters including pH, temperature, dissolved oxygen, turbidity, nitrates, and other physicochemical characteristics were measured using standardized water testing procedures and equipment [33,34,35]. Following data collection, a rigorous preprocessing phase was conducted to ensure data accuracy and suitability for machine learning analysis. This stage comprised imputation of missing values, outlier removal, and normalization or scaling to place all parameters on a comparable scale. Feature engineering was performed to extract pertinent features and reduce dimensionality for better model performance. Feature selection techniques, namely the Variance Inflation Factor (VIF) and Information Gain (IG), were used to identify the parameters most influential on water quality, with the aim of reducing redundancy and retaining the most informative features for modeling [36,37].
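
The preprocessing steps above can be illustrated with a minimal scikit-learn sketch. It is not the authors' pipeline: median imputation, a 1.5 x IQR outlier rule applied per column, z-score standardization, and the two-column example data are all assumptions chosen for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import StandardScaler


def preprocess(df: pd.DataFrame, iqr_factor: float = 1.5) -> pd.DataFrame:
    """Impute, remove outliers, and standardize numeric water-quality columns.

    Assumptions (not from the source): median imputation, a per-column
    IQR outlier rule, and z-score scaling.
    """
    # 1. Fill missing values with each column's median.
    imputer = SimpleImputer(strategy="median")
    imputed = pd.DataFrame(imputer.fit_transform(df), columns=df.columns, index=df.index)

    # 2. Keep only rows where every parameter lies in [Q1 - k*IQR, Q3 + k*IQR].
    q1 = imputed.quantile(0.25)
    q3 = imputed.quantile(0.75)
    iqr = q3 - q1
    inside = ((imputed >= q1 - iqr_factor * iqr) & (imputed <= q3 + iqr_factor * iqr)).all(axis=1)
    kept = imputed[inside]

    # 3. Standardize each column to zero mean and unit variance.
    scaler = StandardScaler()
    return pd.DataFrame(scaler.fit_transform(kept), columns=kept.columns, index=kept.index)


# Hypothetical example: one missing pH value and one extreme TDS reading.
raw = pd.DataFrame({
    "pH": [7.1, 7.4, np.nan, 7.8, 7.2, 7.5],
    "TDS": [850.0, 920.0, 880.0, 15000.0, 900.0, 870.0],
})
clean = preprocess(raw)
print(clean.shape)  # (5, 2): the row with TDS = 15000 is dropped
```

In a full workflow the imputer and scaler would normally be fitted on the training split only, so that no information from the test set leaks into preprocessing.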

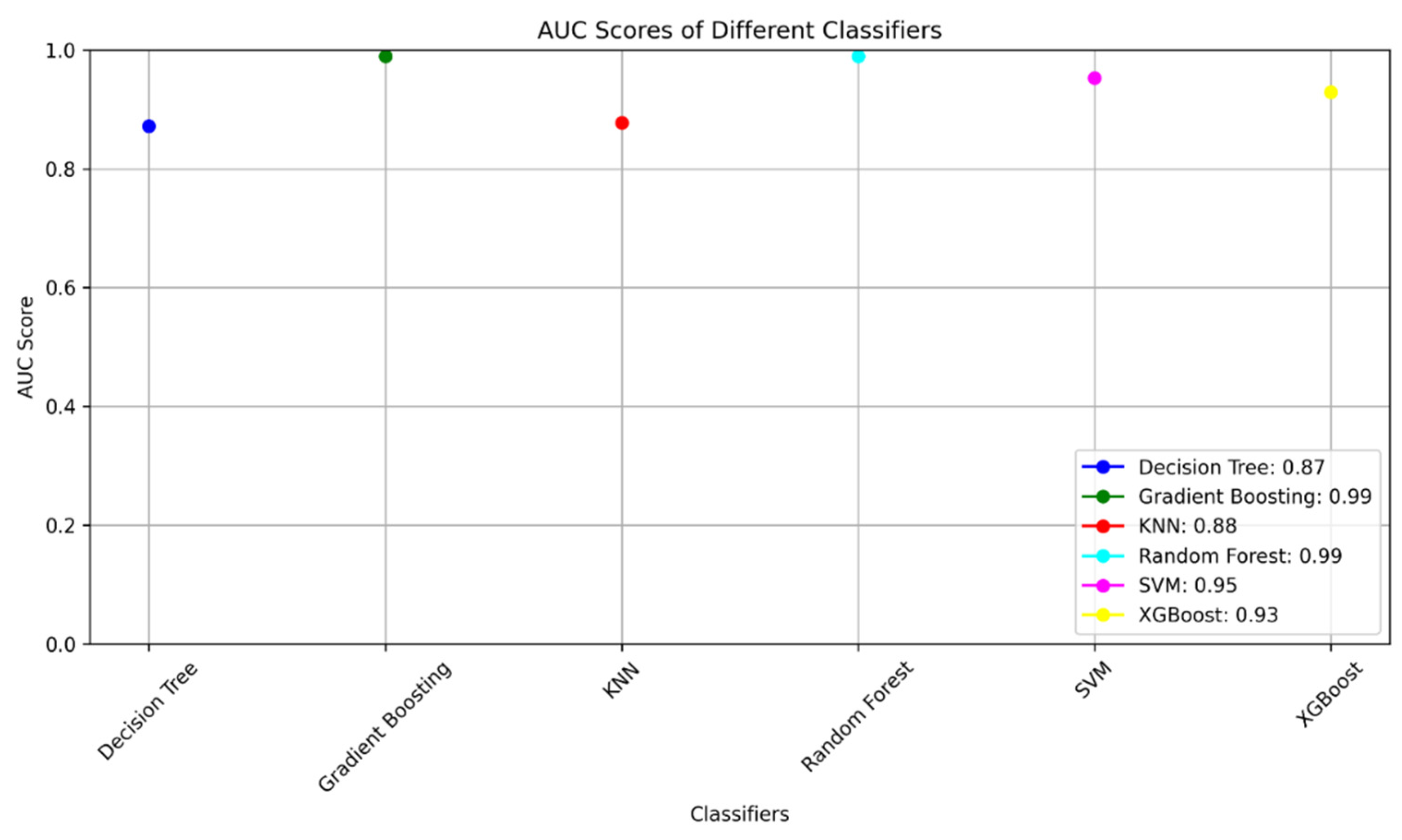

For model development, the Python programming language was used together with the scikit-learn, XGBoost, pandas, and NumPy libraries. Supervised learning algorithms, including Random Forest, Gradient Boosting, Support Vector Machines (SVM), XGBoost, K-Nearest Neighbors (KNN), and Decision Trees, were implemented and trained on the preprocessed dataset [38,39]. Hyperparameters were optimized using grid search with cross-validation. Model performance was evaluated using common metrics such as accuracy and the confusion matrix, and the models were compared statistically using the Friedman test and the post hoc Nemenyi test [40,41]. The uncertainty of the models' predictions was evaluated using the R-factor and bootstrapping.
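
The Friedman and Nemenyi comparison mentioned above can be sketched as follows, assuming per-fold accuracies are available for each model. The accuracy matrix and the model names "A", "B", "C" are hypothetical. SciPy provides the Friedman test; the Nemenyi critical difference is computed by hand from the tabulated q values at alpha = 0.05 (Demšar, 2006).

```python
import numpy as np
from scipy.stats import friedmanchisquare, rankdata

# Critical values q_0.05 of the Nemenyi test for k = 2..10 classifiers (Demsar, 2006).
Q_ALPHA_005 = {2: 1.960, 3: 2.343, 4: 2.569, 5: 2.728, 6: 2.850,
               7: 2.949, 8: 3.031, 9: 3.102, 10: 3.164}


def compare_models(scores: np.ndarray, names: list[str]):
    """scores[i, j] is the accuracy of model j on cross-validation fold i."""
    n_folds, k = scores.shape
    # Friedman test: each model's per-fold accuracies form one related sample.
    stat, p_value = friedmanchisquare(*scores.T)
    # Rank models within each fold (rank 1 = highest accuracy), then average.
    ranks = np.array([rankdata(-row) for row in scores])
    avg_ranks = dict(zip(names, ranks.mean(axis=0).tolist()))
    # Nemenyi: two models differ significantly if their average ranks differ by more than cd.
    cd = Q_ALPHA_005[k] * np.sqrt(k * (k + 1) / (6.0 * n_folds))
    return stat, p_value, avg_ranks, cd


# Hypothetical per-fold accuracies: 5 folds (rows) x 3 models (columns).
scores = np.array([
    [0.91, 0.88, 0.80],
    [0.93, 0.87, 0.82],
    [0.90, 0.89, 0.79],
    [0.92, 0.86, 0.81],
    [0.94, 0.88, 0.83],
])
stat, p_value, avg_ranks, cd = compare_models(scores, ["A", "B", "C"])
print(f"Friedman chi2 = {stat:.2f}, p = {p_value:.4f}, CD = {cd:.2f}")
print(avg_ranks)
```

With these made-up scores the Friedman statistic is 10.0 (p ≈ 0.0067), the average ranks are 1, 2 and 3, and the critical difference is about 1.48, so only models A and C differ significantly.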

Figure 2.

Methodology used for predicting water quality index for given input variables.

The data were resampled using 5-fold cross-validation to assess model robustness and generalizability, and the dataset was divided into 70% training and 30% testing subsets to validate model predictions on unseen data [42]. Results were interpreted by comparing the outcomes of the machine learning classifiers to identify the most accurate models for predicting the Water Quality Index (WQI). The models with the highest accuracy were analyzed further to understand how the different parameters affect WQI prediction and water quality assessment.
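
The split and cross-validated tuning described above might look like the following scikit-learn sketch. Several details are assumptions not stated in the text: the 5-fold cross-validation is run on the 70% training portion inside `GridSearchCV`, the parameter grids are illustrative, and the data are a synthetic stand-in generated with `make_classification` (422 samples, 12 features, 3 hypothetical WQI classes). XGBoost is omitted so the sketch runs with scikit-learn alone; `xgboost.XGBClassifier` could be added to `candidates` if the package is installed.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for a 422-sample, 12-feature dataset (hypothetical WQI classes).
X, y = make_classification(n_samples=422, n_features=12, n_informative=6,
                           n_classes=3, random_state=0)

# 70% training / 30% testing split, stratified by class.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=0)

# Candidate models with illustrative (assumed) hyperparameter grids.
candidates = {
    "Random Forest": (RandomForestClassifier(random_state=0),
                      {"n_estimators": [50, 100], "max_depth": [None, 5]}),
    "Gradient Boosting": (GradientBoostingClassifier(random_state=0),
                          {"n_estimators": [50, 100], "learning_rate": [0.05, 0.1]}),
    "SVM": (make_pipeline(StandardScaler(), SVC()),
            {"svc__C": [1, 10], "svc__gamma": ["scale"]}),
    "KNN": (make_pipeline(StandardScaler(), KNeighborsClassifier()),
            {"kneighborsclassifier__n_neighbors": [3, 5, 7]}),
    "Decision Tree": (DecisionTreeClassifier(random_state=0),
                      {"max_depth": [3, 5, None]}),
}

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
test_accuracy = {}
for name, (estimator, grid) in candidates.items():
    # Grid search with 5-fold cross-validation on the training portion only.
    search = GridSearchCV(estimator, grid, cv=cv, scoring="accuracy")
    search.fit(X_train, y_train)
    # Accuracy of the refitted best model on the held-out 30%.
    test_accuracy[name] = search.score(X_test, y_test)

for name, acc in sorted(test_accuracy.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {acc:.3f}")
```

Keeping the test set out of the grid search means the reported test accuracy is not used for model selection.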

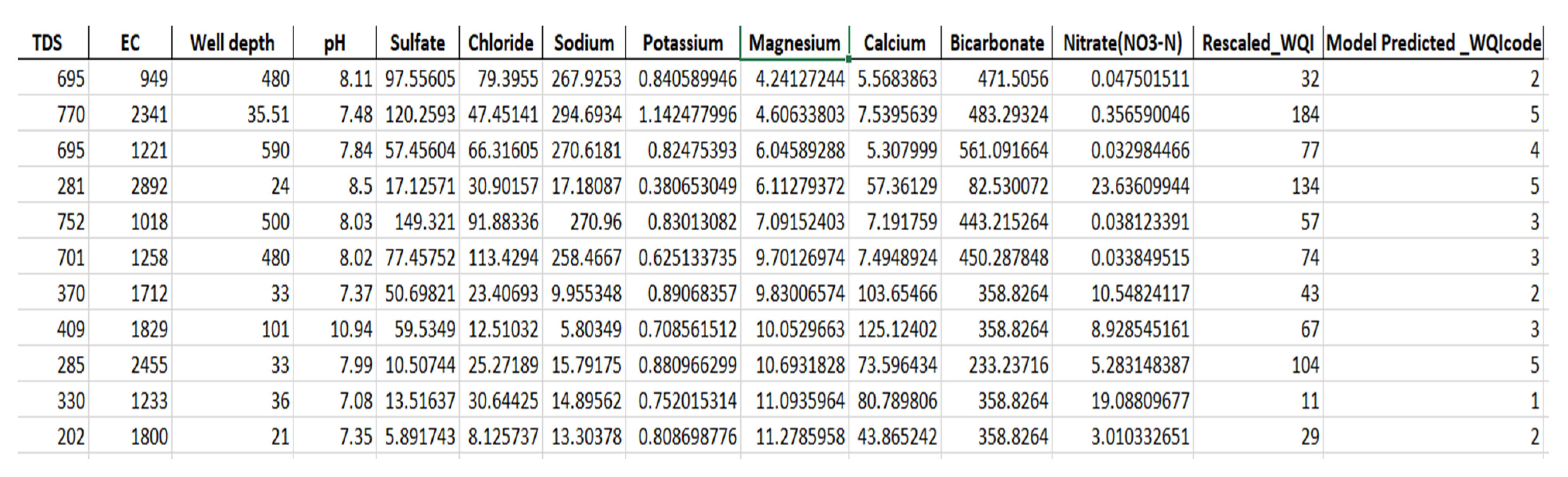

The variables used in this study to determine the water quality index are shown in Figure 3.

The VIF analysis reveals varying degrees of multicollinearity among the features considered for water quality assessment in Mirpurkhas, Sindh, Pakistan (Table 1). Parameters such as 'TDS', 'Sodium', 'Calcium', and 'Magnesium' exhibit very high VIF values, indicating strong multicollinearity among these variables. Conversely, 'Potassium', 'Well Depth', and 'Nitrate (NO3-N)' show relatively low VIF values, indicating comparatively weak multicollinearity [43].
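
The VIF of a feature j is VIF_j = 1 / (1 - R_j^2), where R_j^2 is the coefficient of determination obtained by regressing that feature on all the other features. A minimal NumPy sketch follows. The 'Calcium', 'Magnesium', 'Potassium' and 'TDS' columns are synthetic and hypothetical, with TDS constructed as a linear combination of calcium and magnesium plus noise to mimic the kind of dependence seen in Table 1. statsmodels offers an equivalent `variance_inflation_factor` function.

```python
import numpy as np


def vif(X: np.ndarray) -> np.ndarray:
    """Variance Inflation Factor of each column of X (shape n_samples x n_features)."""
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        # Regress feature j on the remaining features plus an intercept column.
        design = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        out[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return out


# Hypothetical data: TDS depends almost linearly on calcium and magnesium.
rng = np.random.default_rng(0)
n = 422
calcium = rng.normal(100, 20, n)
magnesium = rng.normal(50, 10, n)
potassium = rng.normal(5, 1, n)
tds = 2 * calcium + 3 * magnesium + rng.normal(0, 5, n)

names = ["Calcium", "Magnesium", "Potassium", "TDS"]
X = np.column_stack([calcium, magnesium, potassium, tds])
for name, value in zip(names, vif(X)):
    print(f"{name}: {value:.1f}")
```

A common rule of thumb treats VIF values above 10 (some authors use 5) as a sign of problematic multicollinearity.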

Table 1.

Variance Inflation Factor (VIF) values indicating multicollinearity among water quality assessment features in Mirpurkhas, Sindh, Pakistan.

| Feature | VIF |
|---|---|
| TDS | 4209.78 |
| Sodium | 1137.34 |
| Calcium | 425.13 |
| Magnesium | 380.55 |
| Bicarbonate | 58.74 |
| Sulfate | 39.68 |
| Chloride | 31.69 |
| pH | 20.16 |
| EC | 10.20 |
| Nitrate (NO3-N) | 5.45 |
| Well Depth | 1.70 |
| Potassium | 1.43 |

The Variance Inflation Factor (VIF) values obtained for the water quality parameters in Mirpurkhas, Sindh, show varying degrees of multicollinearity among the features considered for predicting the Water Quality Index (WQI). 'TDS', 'Sodium', 'Calcium', and 'Magnesium' have notably high VIF values, suggesting strong interdependencies among these variables. Such pronounced multicollinearity can inflate the variance of coefficient estimates and reduce the reliability of predictive models developed for water quality assessment [44]. Parameters with lower VIF values, including 'Potassium', 'Well Depth', and 'Nitrate (NO3-N)', are only weakly explained by the other features and therefore contribute little to multicollinearity. Addressing the high multicollinearity among variables with elevated VIF values is therefore important for improving the reliability and precision of predictive models for water quality assessment in the Mirpurkhas region.
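
One common way to address high multicollinearity is to drop the feature with the largest VIF, recompute, and repeat until every remaining VIF falls below a chosen threshold. This is shown purely as an illustration and is not the procedure used in this study. The threshold of 10 and the synthetic data (a hypothetical TDS column built from calcium and magnesium) are assumptions.

```python
import numpy as np


def vif(X: np.ndarray) -> np.ndarray:
    """VIF of each column: 1 / (1 - R^2) from regressing it on the other columns."""
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        design = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        out[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return out


def drop_high_vif(X: np.ndarray, names: list[str], threshold: float = 10.0) -> list[str]:
    """Iteratively remove the feature with the largest VIF until all VIFs <= threshold."""
    keep = list(range(X.shape[1]))
    while len(keep) > 1:
        scores = vif(X[:, keep])
        worst = int(np.argmax(scores))
        if scores[worst] <= threshold:
            break
        del keep[worst]  # discard the most collinear remaining feature
    return [names[i] for i in keep]


# Hypothetical data: TDS depends almost linearly on calcium and magnesium.
rng = np.random.default_rng(0)
n = 422
calcium = rng.normal(100, 20, n)
magnesium = rng.normal(50, 10, n)
potassium = rng.normal(5, 1, n)
tds = 2 * calcium + 3 * magnesium + rng.normal(0, 5, n)
X = np.column_stack([calcium, magnesium, potassium, tds])
print(drop_high_vif(X, ["Calcium", "Magnesium", "Potassium", "TDS"]))
# ['Calcium', 'Magnesium', 'Potassium']
```

Which member of a collinear group to drop is a domain decision as much as a statistical one; the greedy rule above simply removes the most redundant column first.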

Table 2.

Information Gain (IG) values indicating corresponding information gain of each water quality assessment feature in Mirpurkhas, Sindh, Pakistan.

| Feature | IG |
|---|---|
| Nitrate (NO3-N) | 0.876 |
| Calcium | 0.869 |
| Sodium | 0.869 |
| Sulfate | 0.869 |
| Chloride | 0.869 |
| Potassium | 0.869 |
| Magnesium | 0.869 |
| TDS | 0.816 |
| EC | 0.784 |
| Well Depth | 0.525 |
| Bicarbonate | 0.520 |
| pH | 0.509 |

The Information Gain (IG) analysis indicates the relevance of each feature for predicting the Water Quality Index (WQI) in Mirpurkhas, Sindh, Pakistan (Table 2). 'Nitrate (NO3-N)' has the highest IG (0.876), followed by 'Calcium', 'Sodium', 'Sulfate', 'Chloride', 'Potassium', and 'Magnesium', which share an IG of 0.869; these features are therefore the most informative for predicting the WQI. Conversely, 'TDS', 'EC', 'Well Depth', 'Bicarbonate', and 'pH' have lower IG values, suggesting that they carry comparatively less information about the WQI. Understanding the relevance of these features helps in selecting the most influential variables for developing accurate predictive models for water quality assessment.
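
Information gain measures how much knowing a feature reduces the entropy of the target: IG(Y; X) = H(Y) - H(Y | X). The sketch below computes it for a continuous parameter by discretizing it into equal-width bins. The binning scheme, the bin count of 10, and the toy nitrate values and WQI classes are assumptions, since the text does not state how IG was computed. Because IG depends on the discretization, other binning choices, or estimators such as scikit-learn's `mutual_info_classif`, will give different values.

```python
import numpy as np


def entropy(labels: np.ndarray) -> float:
    """Shannon entropy, in bits, of an array of discrete labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def information_gain(feature: np.ndarray, target: np.ndarray, n_bins: int = 10) -> float:
    """IG = H(target) - H(target | feature), with the feature cut into equal-width bins."""
    edges = np.histogram_bin_edges(feature, bins=n_bins)
    # Use interior edges only, so the maximum value falls in the last bin.
    bins = np.digitize(feature, edges[1:-1])
    conditional = 0.0
    for b in np.unique(bins):
        in_bin = bins == b
        # Weight each bin's target entropy by the fraction of samples in it.
        conditional += in_bin.mean() * entropy(target[in_bin])
    return entropy(target) - conditional


# Toy example: hypothetical nitrate values and WQI classes (0 = suitable, 1 = unsuitable).
nitrate = np.array([1.0, 1.2, 2.0, 2.1, 9.0, 9.5])
wqi_class = np.array([0, 0, 0, 0, 1, 1])
constant = np.full(6, 5.0)
print(information_gain(nitrate, wqi_class))   # equals H(wqi_class), about 0.918 bits
print(information_gain(constant, wqi_class))  # 0.0: a constant feature carries no information
```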

However, while IG values help identify influential features, the magnitude of IG alone does not necessarily determine a feature's direct impact or importance in predicting the WQI [45]. Domain knowledge, the nature of the dataset, and the specific context of the water quality assessment should also be considered. The selection of the most influential variables should therefore rest on a comprehensive analysis that integrates these factors rather than on IG values alone.

R-factor

While various factors contribute to uncertainty in predicting the Water Quality Index (WQI), including modeling, sampling errors, data preparation, and preprocessing, this study specifically addresses the uncertainty linked to individual model structures and input parameter selection. To assess model structure uncertainty, a set of three predicted WQI values, generated by the predictive models described above, is examined for each observed WQI during the testing phase.

The mean and standard deviation of each set of predictions are computed and used as the parameters of a normal distribution. Using Monte Carlo simulation, 1000 WQI values are then generated from this distribution for each observed value. Although other sample-generation methods such as Latin Hypercube [46], Lagged Average [47], and Multimodal Nesting [48] are available, the Monte Carlo technique has shown greater applicability, especially in hydrology and water-related sciences [49]. To quantify the uncertainty associated with WQI prediction, the 95% prediction interval (i.e., the interval between the 2.5% and 97.5% quantiles), referred to as the 95% prediction uncertainty (95PPI), is determined from the generated WQI values for each observed WQI. The uncertainty is then computed using the R-factor defined in Equation (1).
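
The Monte Carlo procedure above can be sketched as follows, assuming the model predictions are stacked in an array with one row per model and one column per observed sample. For each observation, a normal distribution is parameterized by the mean and standard deviation of the model predictions, 1000 values are drawn, and the 2.5% and 97.5% quantiles give the 95PPI bounds. The prediction values are hypothetical, and using the sample standard deviation (ddof=1) is an assumption.

```python
import numpy as np


def monte_carlo_95ppi(predictions: np.ndarray, n_draws: int = 1000, seed: int = 0):
    """Return (lower, upper) 95PPI bounds for each observation.

    predictions has shape (n_models, n_observations): one row per model.
    """
    rng = np.random.default_rng(seed)
    mean = predictions.mean(axis=0)
    std = predictions.std(axis=0, ddof=1)  # sample std across models (assumption)
    # Draw n_draws values per observation; loc and scale broadcast over the columns.
    draws = rng.normal(loc=mean, scale=std, size=(n_draws, mean.size))
    lower = np.percentile(draws, 2.5, axis=0)
    upper = np.percentile(draws, 97.5, axis=0)
    return lower, upper


# Hypothetical WQI predictions from three models for three observed samples.
preds = np.array([
    [52.0, 71.0, 88.0],
    [55.0, 69.0, 90.0],
    [50.0, 73.0, 86.0],
])
lower, upper = monte_carlo_95ppi(preds)
print(np.round(lower, 1), np.round(upper, 1))
```

Each band width should be close to 2 x 1.96 times the spread of the three model predictions for that observation.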

The R-factor is calculated as:

$$\text{R-factor} = \frac{\bar{d}_X}{\sigma_X} \qquad (1)$$

Here, $\sigma_X$ represents the standard deviation of the observed values, and $\bar{d}_X$, the average width of the 95PPI band, is determined using Equation (2):

$$\bar{d}_X = \frac{1}{J}\sum_{i=1}^{J}\left(X_{U,i} - X_{L,i}\right) \qquad (2)$$

In this equation, $J$ denotes the number of observed data points, while $X_{U,i}$ and $X_{L,i}$ correspond to the i-th values of the upper (97.5%) and lower (2.5%) quantiles of the 95% prediction uncertainty band (95PPI).
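
A direct translation of Equations (1) and (2) into NumPy is sketched below. The arrays are hypothetical, and using the sample standard deviation (ddof=1) for the observed values is an assumption, since the text does not specify it. Smaller R-factor values indicate a narrower uncertainty band relative to the spread of the observations.

```python
import numpy as np


def r_factor(observed, lower, upper) -> float:
    """R-factor: mean 95PPI band width divided by the std of the observed values."""
    observed = np.asarray(observed, dtype=float)
    # Equation (2): average of (upper - lower) over the J observed points.
    d_bar = np.mean(np.asarray(upper, dtype=float) - np.asarray(lower, dtype=float))
    # Equation (1): normalize by the standard deviation of the observations.
    return float(d_bar / observed.std(ddof=1))


# Hypothetical observed WQI values and their 95PPI bounds.
observed = [40.0, 50.0, 60.0]
lower = [35.0, 45.0, 55.0]
upper = [45.0, 55.0, 65.0]
print(r_factor(observed, lower, upper))  # 1.0: mean band width equals the observed std
```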

Other approaches, such as the Coefficient of Variation (CV), Prediction Interval Coverage Probability (PICP), and Prediction Interval Normalized Root-mean-square Width (PINRW), have been proposed as alternatives to the R-factor [50]. However, these methods rely on either observed or predicted data alone, whereas the R-factor takes both observed and predicted data into account, making it a more comprehensive metric for characterizing prediction uncertainty [51,52]. The uncertainty inherent in predictive models arises from several sources, including the complexity of the underlying data and the dynamic nature of water quality parameters. The structure of the machine learning models contributes significantly to this uncertainty, and examining their characteristics sheds light on the reliability of their predictions.

Bootstrapping

In the uncertainty analysis of the predictive models for the water quality index, generating prediction intervals is essential for understanding the range of possible values for each prediction. This step uses bootstrapping, a resampling technique that quantifies the uncertainty associated with the model's predictions. Multiple bootstrap samples are created by randomly drawing observations with replacement from the original dataset; for each bootstrap sample, the model is trained and predictions are made on the test set. This process is repeated many times (1000 iterations in this study), yielding a distribution of predicted values for each data point.
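
The bootstrap procedure can be sketched with scikit-learn as shown below. The regressor (a depth-limited `DecisionTreeRegressor`), the synthetic data, and the 210/90 train/test split are illustrative assumptions; only the resampling scheme (training rows drawn with replacement, refit, predict on the test set, 1000 repetitions, 2.5th and 97.5th percentiles) follows the text. Intervals built this way describe how much the fitted model's predictions vary across resamples.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def bootstrap_intervals(make_model, X_train, y_train, X_test, n_boot=1000, seed=0):
    """Refit a model on bootstrap resamples and return per-sample percentile bounds."""
    rng = np.random.default_rng(seed)
    n = len(X_train)
    preds = np.empty((n_boot, len(X_test)))
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # n row indices drawn with replacement
        model = make_model()
        model.fit(X_train[idx], y_train[idx])
        preds[b] = model.predict(X_test)
    lower = np.percentile(preds, 2.5, axis=0)
    upper = np.percentile(preds, 97.5, axis=0)
    return preds.mean(axis=0), lower, upper


# Synthetic stand-in data (hypothetical WQI response with three inputs).
rng = np.random.default_rng(1)
X = rng.uniform(0, 10, size=(300, 3))
y = 50 + 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(0, 2, size=300)
X_train, X_test, y_train, y_test = X[:210], X[210:], y[:210], y[210:]

mean_pred, lower, upper = bootstrap_intervals(
    lambda: DecisionTreeRegressor(max_depth=4), X_train, y_train, X_test)
coverage = np.mean((y_test >= lower) & (y_test <= upper))
print(f"mean interval width: {np.mean(upper - lower):.2f}, coverage: {coverage:.2f}")
```

Passing a factory (`make_model`) rather than a fitted model ensures every bootstrap replicate starts from a fresh, unfitted estimator.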

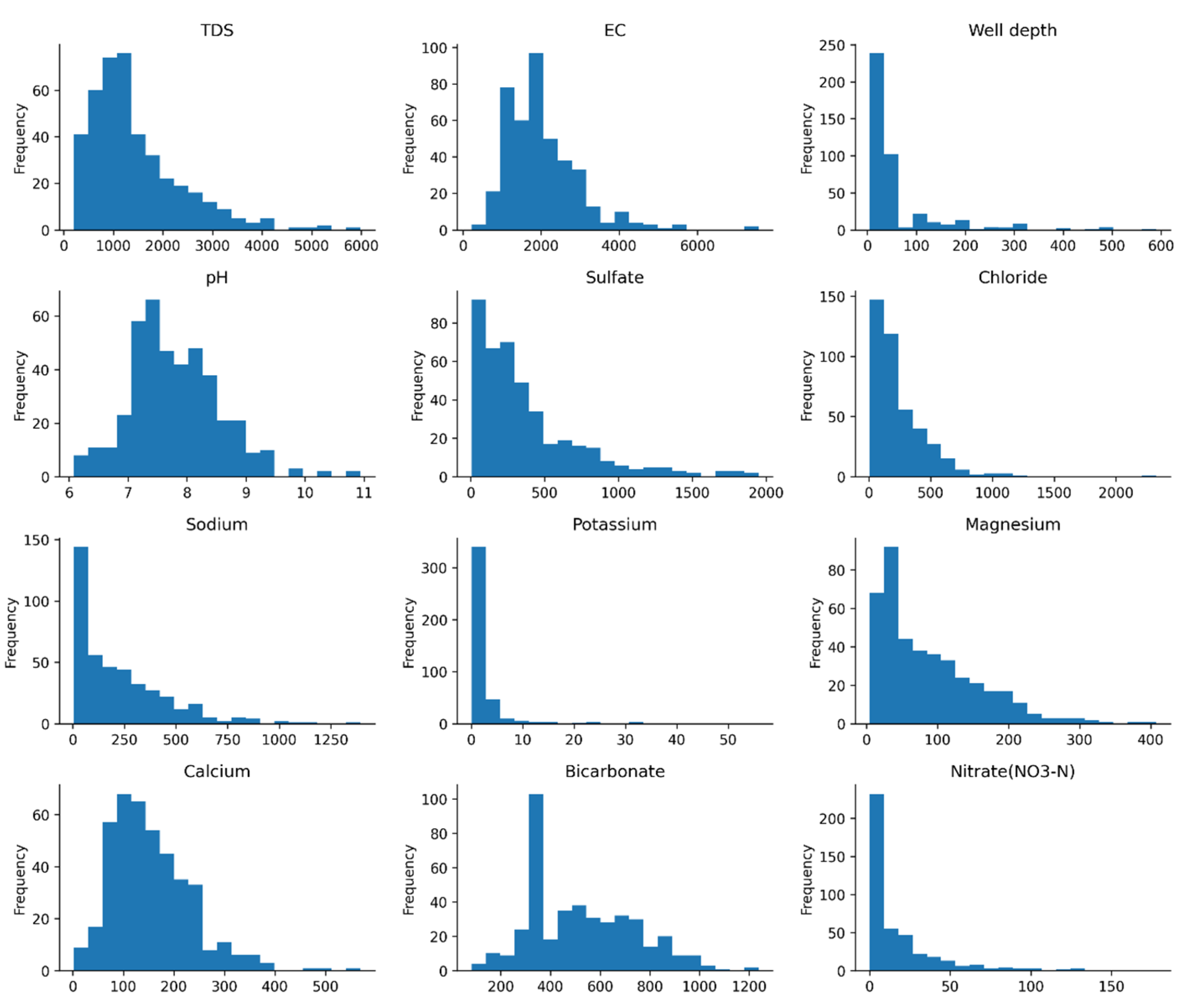

Interpretation of Mean Squared Error (MSE): the MSE on the test set (0.108) is the average squared difference between the actual and predicted water quality index values. A lower MSE generally indicates better model performance, meaning that the model's predictions are, on average, close to the true values.
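
For reference, the MSE is the mean of the squared residuals, MSE = (1/n) * sum_i (y_i - yhat_i)^2. A short NumPy version with hypothetical values is shown below; `sklearn.metrics.mean_squared_error` computes the same quantity. Because the MSE is expressed in squared WQI units, its square root (the RMSE) is often easier to compare with the WQI scale.

```python
import numpy as np


def mse(actual, predicted) -> float:
    """Mean squared error: average of squared differences between actual and predicted."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.mean((actual - predicted) ** 2))


# Hypothetical values, not the study's data.
print(mse([3.0, 5.0], [2.5, 5.5]))  # 0.25
```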

However, the MSE alone does not provide a complete picture, because it does not account for the uncertainty in the predictions; this is where prediction intervals come into play. The prediction intervals generated by bootstrapping offer insight into the variability and uncertainty associated with the model's predictions. The lower and upper bounds of each interval (the 2.5th and 97.5th percentiles of the bootstrap predictions, respectively) give the plausible range of the model's prediction for that sample, reflecting how much the fitted model varies across bootstrap resamples. The scatter plot of actual versus predicted values (Figure 4), with the shaded gray area representing the prediction intervals, provides a clear visualization of the model's performance and the associated uncertainty. The narrower the prediction intervals, the more confident we can be in the model's predictions.

A narrow prediction interval suggests that the model has a high degree of certainty in its predictions, whereas a wide interval indicates higher uncertainty and calls for caution when relying on predictions in those regions. By using bootstrapping to generate prediction intervals, we not only assess the model's accuracy through the MSE but also gain a fuller understanding of the uncertainty inherent in the water quality index predictions. This combined approach improves the reliability and robustness of the predictive modeling process, making it more applicable and informative for water quality management and decision-making.

Random Forest and Gradient Boosting

These ensemble methods aggregate the predictions of multiple decision trees, each of which captures different patterns in the data: Random Forest averages trees trained on bootstrap samples of the data, whereas Gradient Boosting adds trees sequentially, each fitted to the errors of the current ensemble. This aggregation helps Random Forest and Gradient Boosting mitigate overfitting and improve predictive accuracy. However, the ensemble nature also introduces uncertainty through the variability of the individual tree predictions.
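
The variability of individual tree predictions can be inspected directly in scikit-learn, because a fitted `RandomForestRegressor` exposes its trees through the `estimators_` attribute and its prediction is the average of theirs. The sketch below uses synthetic data and 200 trees, both assumptions; the per-sample standard deviation across trees is one rough indicator of ensemble disagreement, not the uncertainty measure used in this study.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Synthetic stand-in data (hypothetical WQI response with three inputs).
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(300, 3))
y = 50 + 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(0, 2, size=300)

forest = RandomForestRegressor(n_estimators=200, random_state=0).fit(X, y)
X_new = rng.uniform(0, 10, size=(5, 3))

# One row of predictions per tree: shape (n_trees, n_samples).
per_tree = np.stack([tree.predict(X_new) for tree in forest.estimators_])
ensemble_mean = per_tree.mean(axis=0)  # matches forest.predict(X_new)
tree_spread = per_tree.std(axis=0)     # disagreement among the trees for each sample

for mean, spread in zip(ensemble_mean, tree_spread):
    print(f"prediction {mean:.1f} +/- {spread:.1f} (std across trees)")
```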

Support Vector Machines (SVM) and XGBoost

SVM focuses on finding the hyperplane that best separates data into classes, while XGBoost optimizes the performance of weak learners through boosting. The structural complexity of SVM and the iterative refinement process of XGBoost contribute to their predictive power but also introduce uncertainty, particularly in capturing non-linear relationships and intricate patterns.

K-Nearest Neighbors (KNN) and Decision Trees

KNN relies on proximity-based classification, and Decision Trees partition the data based on feature splits. These models are interpretable and less complex, but their simplicity can lead to uncertainty when faced with intricate relationships in the data. KNN's reliance on neighbors introduces variability, while Decision Trees' sensitivity to data changes may affect stability.

Understanding the interplay between model structure and uncertainty is crucial for reliable water quality assessment. The ensemble nature of Random Forest and Gradient Boosting, the margin optimization of SVM, and the iterative boosting of XGBoost contribute to their robust performance but also introduce variability. Simpler models such as KNN and Decision Trees are more interpretable but can exhibit uncertainty when capturing complex relationships. The uncertainty associated with each model's structure underscores the need for a nuanced approach to water quality prediction. Integrating uncertainty analysis, such as the R-factor, with accurate predictions allows a more informed and cautious interpretation of water quality assessments and supports effective decision-making, as shown in Table 3.