I. INTRODUCTION

It has been common practice in probabilistic inverse approaches [1] to treat both measurable data and unknown model parameters as uncertain. This approach provides a deeper understanding of the uncertainty associated with the measured data and model parameters [2–10].

Kullback-Leibler divergence (KLD) [11–16] is a measure used to compare two probability distributions. In probability and statistics, when we need to simplify complex distributions or approximate observed data, KLD quantifies the amount of information lost in choosing an approximation. It measures the difference between the two distributions and helps in understanding the trade-off between accuracy and simplicity in statistical modelling.

The Shannonian entropic measure [9,17], namely $H(p)$, reads as

$$H(p) = -\sum_{n} p(n) \log p(n),$$

which, with the logarithm taken to base 2, defines “the minimum number of bits it would take us to encode our information”.

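KLD is commonly written as:

$$D_{KL}(p \,\|\, q) = \sum_{n} p(n) \log \frac{p(n)}{q(n)},$$

where $p$ is the distribution being approximated and $q$ is its approximation. Using KLD, we can precisely quantify the information lost when approximating one distribution with another.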
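As a minimal numerical sketch of the Shannon entropy and the KLD discussed above, assuming discrete distributions supplied as arrays that sum to one (the function names `shannon_entropy` and `kl_divergence` and the example distributions are illustrative and not taken from the paper):

```python
import numpy as np

def shannon_entropy(p, base=2.0):
    """Shannon entropy of a discrete distribution p (in bits when base=2)."""
    p = np.asarray(p, dtype=float)
    nz = p > 0  # terms with p(n) = 0 contribute nothing (0 * log 0 := 0)
    return float(-np.sum(p[nz] * np.log(p[nz])) / np.log(base))

def kl_divergence(p, q, base=2.0):
    """KL divergence D(p || q); infinite if q(n) = 0 where p(n) > 0."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    nz = p > 0
    if np.any(q[nz] == 0):
        return float("inf")
    return float(np.sum(p[nz] * np.log(p[nz] / q[nz])) / np.log(base))

# Hypothetical example: approximate p by the uniform distribution q.
p = [0.5, 0.25, 0.25]
q = [1 / 3, 1 / 3, 1 / 3]
print(shannon_entropy(p))   # 1.5 bits
print(kl_divergence(p, q))  # about 0.085 bits lost by using q in place of p
```

Note that the divergence is not symmetric: `kl_divergence(p, q)` and `kl_divergence(q, p)` generally differ, so the order of the arguments matters.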

The remainder of this paper is organized as follows. Section I provides some fundamental background. The main results are given in Section II. Section III deals with potential KLD applications to biometrics. Finally, Section IV provides conclusions, some emerging open problems, and future pathways of research.

According to [18–20], the maximum entropy state probability of the generalized geometric solution of a stable M/G/1 queue, subject to normalization, mean queue length (MQL) L, and server utilization (< 1), is given by:

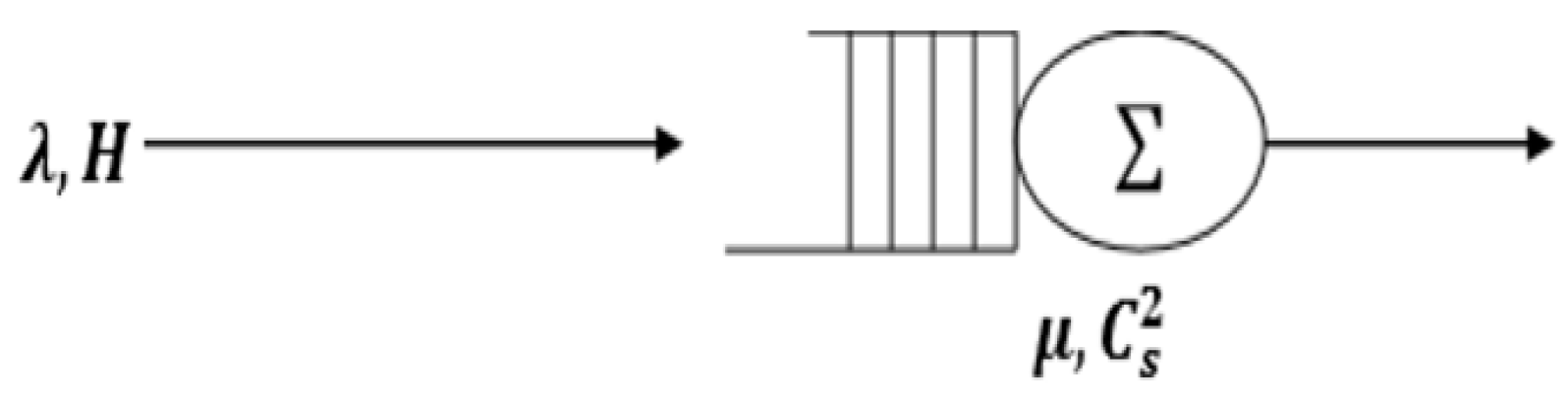

Figure 1. A stable M/G/1 queue.


where

and

(MQL for the underlying queue),

(= 1 -

and

is the squared coefficient of variation.

Obviously,

(c.f., (3)) reads:

where

The reader can observe the difference between our novel approach and that in (3) and (4). Moreover, the newly obtained KL formalism in the current paper is more general and reduces to (3) as a special case.
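For orientation, the MQL constraint in M/G/1 analyses of this kind is usually the Pollaczek-Khinchine (P-K) mean queue length. The sketch below assumes the standard P-K formula, L = ρ + ρ²(1 + C²)/(2(1 − ρ)), where ρ is the server utilization and C² is the squared coefficient of variation of the service time. The function and argument names are illustrative assumptions, not notation from the paper.

```python
def pk_mean_queue_length(rho, scv):
    """Pollaczek-Khinchine mean number in an M/G/1 system.

    rho: server utilization, must satisfy 0 <= rho < 1 for stability.
    scv: squared coefficient of variation of the service time (>= 0).
    """
    if not 0.0 <= rho < 1.0:
        raise ValueError("a stable queue requires 0 <= rho < 1")
    if scv < 0.0:
        raise ValueError("squared coefficient of variation must be >= 0")
    return rho + rho ** 2 * (1.0 + scv) / (2.0 * (1.0 - rho))

# Exponential service (scv = 1) recovers the M/M/1 value rho / (1 - rho).
print(pk_mean_queue_length(0.5, 1.0))  # 1.0
# Deterministic service (scv = 0) gives a shorter queue at the same load.
print(pk_mean_queue_length(0.5, 0.0))  # 0.75
```

The comparison illustrates why the squared coefficient of variation appears as a constraint: at fixed utilization, more variable service times lengthen the queue.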

The credibility of Tsallis’ non-extensive maximum entropy (NME) formalism was evaluated in [19] in terms of the four consistency axioms for large systems.

The credibility of the KLDF NME formalism as a method of inductive reasoning is examined in Appendix A.

It has been shown (c.f., [19]) that the EME steady-state probability of a stable M/G/1 queue that maximizes Shannon’s entropy function (c.f., [17]),

with requirements,

P-K MQL,

reads

where

= 2/(1+

) and

II. MAIN RESULTS

Theorem 1. KLDF, namely,

under (6)-(9) reads as

where the initial state probabilities

satisfy the SU constraint (6)

Proof

The Lagrangian, which follows from maximizing KLDF under (6)-(9), satisfies:

Hence

translates to

Linking (17) with (18) implies

Hence, we have

Define,

implies that

will get the form:

Thus

, implying which clearly implies . This proves the required results.

Clearly, from the above devised result

It is known that

). It is evident that, as

the KL formalism in (20,KL) will get the form:

which is the Shannonian formalism obtained in [20]. This shows the strength of the newly devised KLD formalism.

Theorem 2. The KL NME formalism,

are exact when PDFs of service time read as

where

reads as

such that

Proof

for

reads as

by replacing

of (20,KL) into (23), we have

Define,

. Therefore,

By inverting the Laplace-Stieltjes transform, the GE$_{KL}$-type PDF (c.f., (21)) follows.

It is observed that as

,

, which reduces to the Shannonian limiting case obtained (c.f., [20]).

Corollary 1. The CDF of the GE$_{KL}$-type service time with the PDF of Theorem 2 is captured fully by

, which reads as

= 2/(1+

),

Proof

We have

=

=

For

1, the novel derivation (31) reduces to the formula in [20],

= with

Corollary 2. Following (21), we have

Proof

The mean of

is given by

Introducing

and substituting

on (35) and since

it is implied that

Let ,, is given by (33). Hence by (34).

As

1, the new derivations (32)-(34) reduce to those in [20],

with

III. KLD APPLICATIONS TO BIOMETRICS

The use of biometric information, specifically accelerometer data collected by smartphones, for advanced authentication and online user identification was addressed in [25]. The proposed approach uses homological analysis to capture the inherent walking patterns of different users in the accelerometer data. By transforming the expected persistence diagrams into probability distribution functions and measuring the discrepancy in walking patterns using the KLD, users can be identified with high accuracy.
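The matching step described above can be sketched as a nearest-template search under the KLD. This is a simplified illustration under stated assumptions, not the pipeline of [25]: each user is assumed to be represented by a discrete probability vector already derived from their walking data, and the names `kld`, `identify`, the smoothing constant `eps`, and the example templates are all hypothetical.

```python
import numpy as np

def kld(p, q, eps=1e-12):
    """KL divergence D(p || q) with a small constant added to avoid log(0)."""
    p = np.asarray(p, dtype=float) + eps
    q = np.asarray(q, dtype=float) + eps
    p = p / p.sum()  # renormalize after smoothing
    q = q / q.sum()
    return float(np.sum(p * np.log(p / q)))

def identify(probe, templates):
    """Return the enrolled user whose template is closest to the probe in KLD."""
    scores = {user: kld(probe, template) for user, template in templates.items()}
    best = min(scores, key=scores.get)
    return best, scores

# Hypothetical enrolled gait distributions and a new observation.
templates = {
    "alice": [0.7, 0.2, 0.1],
    "bob": [0.1, 0.2, 0.7],
}
user, scores = identify([0.6, 0.3, 0.1], templates)
print(user)    # alice
print(scores)  # divergence of the probe from each template
```

A practical system would also apply a rejection threshold on the smallest divergence, so that an unknown walker is not forced onto the nearest enrolled user.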

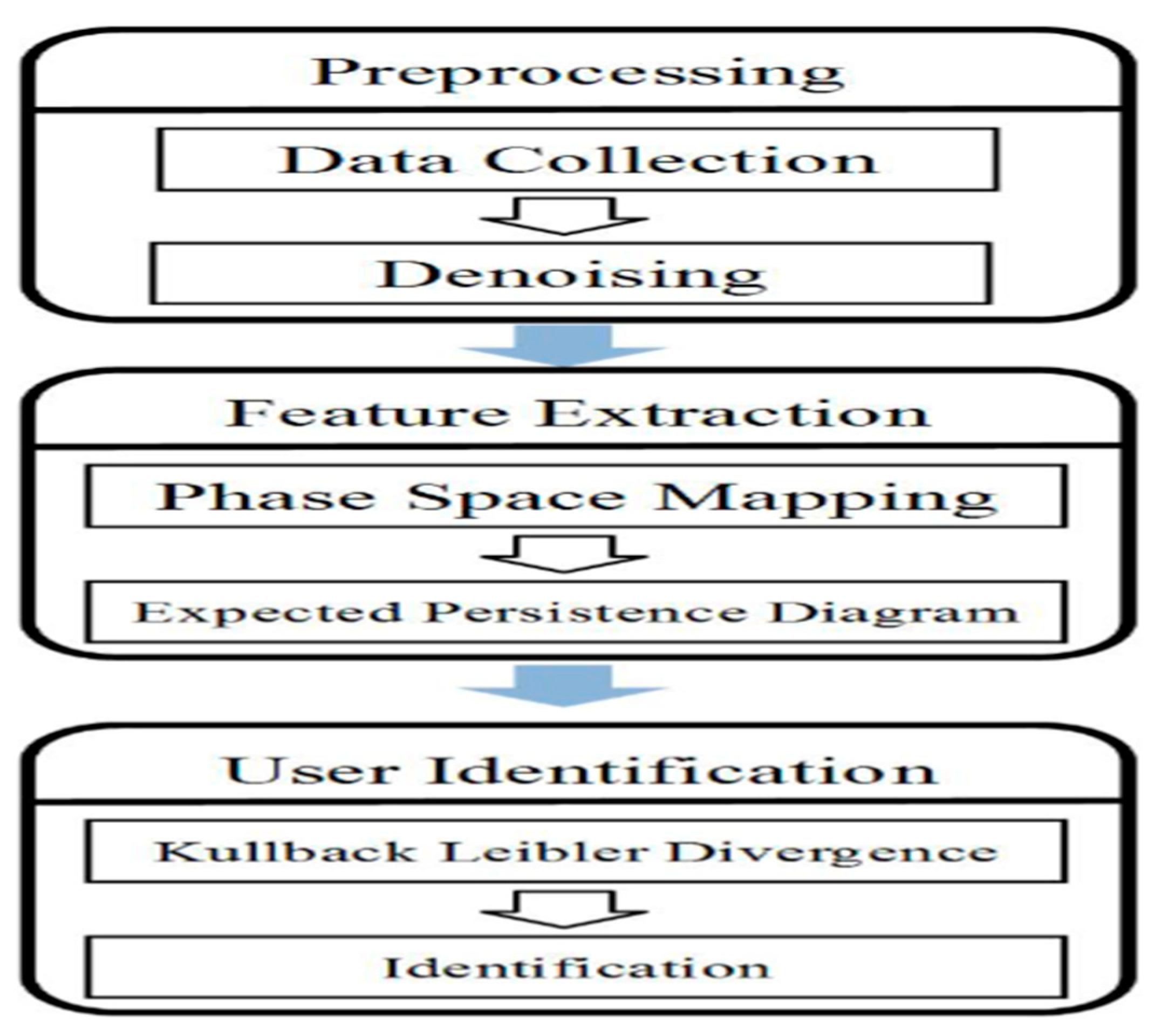

In [25], a user identification system that utilizes accelerometer data was proposed. The raw data are filtered, and the magnitude of the accelerometer readings is calculated. The system focuses on identifying the user’s activity, specifically walking, by extracting the associated accelerometer data. This is illustrated in Figure 2.
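The filtering and magnitude steps can be sketched as follows. The text does not say which filter is used, so a simple moving average is assumed here purely for illustration; the function names and the window length are likewise assumptions.

```python
import numpy as np

def moving_average(x, window=5):
    """Smooth a 1-D signal with a moving average (assumed filter choice)."""
    kernel = np.ones(window) / window
    # mode="same" keeps the length; samples near the edges are zero-padded
    return np.convolve(x, kernel, mode="same")

def accel_magnitude(xyz, window=5):
    """Filter each axis, then return the per-sample magnitude of the reading.

    xyz: array of shape (n_samples, 3) holding x, y, z accelerations.
    """
    xyz = np.asarray(xyz, dtype=float)
    filtered = np.column_stack(
        [moving_average(xyz[:, axis], window) for axis in range(3)]
    )
    return np.linalg.norm(filtered, axis=1)

# A phone at rest measuring gravity on the z axis only.
readings = np.tile([0.0, 0.0, 9.81], (20, 1))
mag = accel_magnitude(readings)
print(mag[10])  # approximately 9.81 away from the edges
```

Using the magnitude rather than the individual axes makes the signal insensitive to how the phone is oriented in a pocket or hand.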

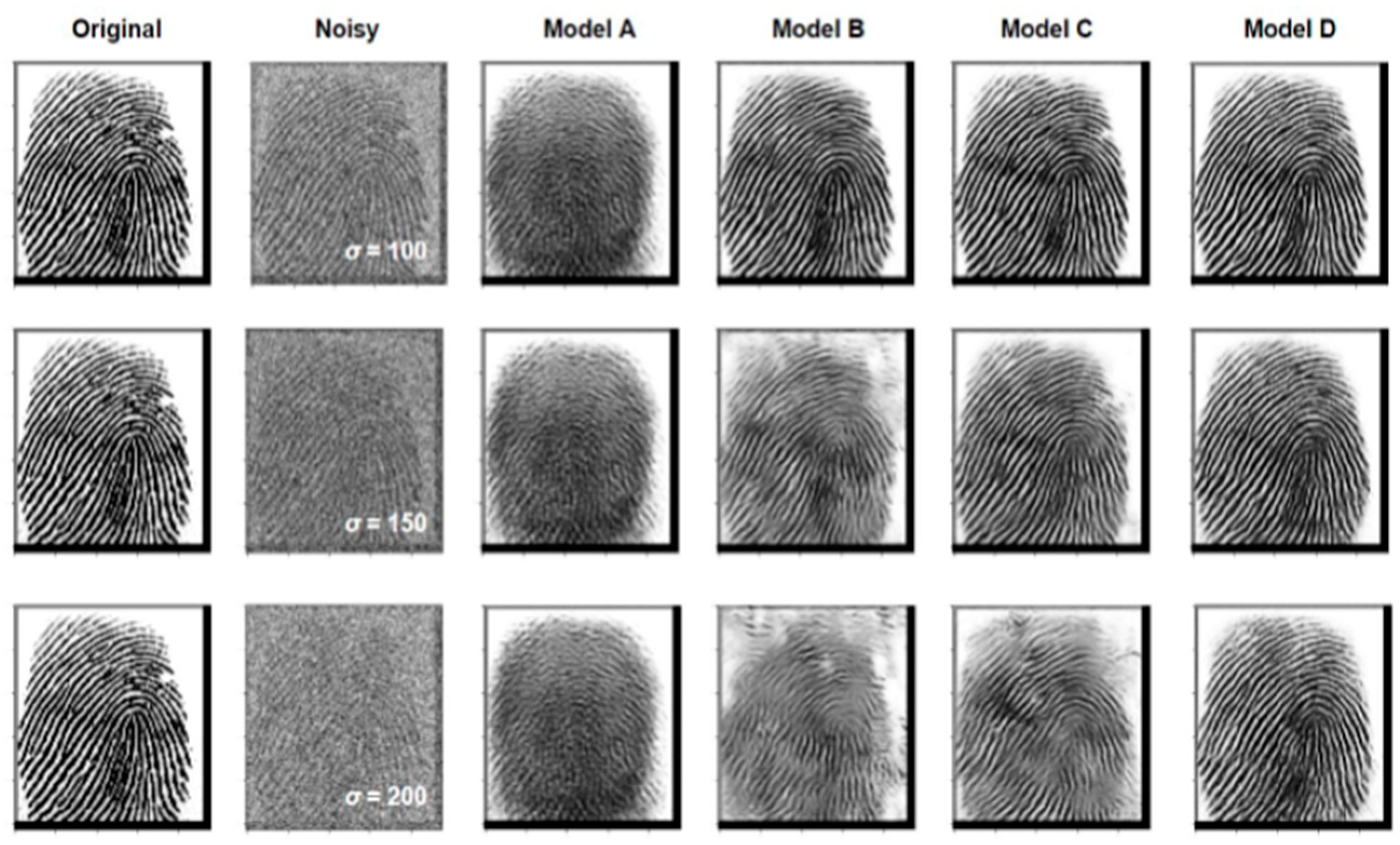

The Sokoto Coventry Fingerprint Dataset (SOCOFing) was used in [26] to construct and evaluate different models. SOCOFing is a biometric fingerprint database consisting of 6,000 grayscale images collected from 600 African subjects. The dataset was partitioned into training, validation, and testing sets, and four neural network architectures were trained and evaluated, with the Res-WCAE model outperforming the others in terms of noise reduction and achieving state-of-the-art performance. This can be seen in Figure 3 (c.f., [26]).

To improve the ability of the model proposed in [26] to make accurate predictions on unseen data, a regularized cost function that includes KLD regularization was introduced. This regularization is achieved by incorporating a prior distribution into the cost function.
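One generic way to write such a regularized cost is sketched below. This is an assumed textbook form rather than the exact objective of [26]: $J(\theta)$ denotes the unregularized cost, $q_\theta$ the distribution induced by the model, $\pi$ the prior distribution, and $\lambda \ge 0$ a regularization weight. All four symbols, and the direction of the divergence, are illustrative assumptions.

```latex
J_{\mathrm{reg}}(\theta) = J(\theta) + \lambda \, D_{KL}\!\left(q_\theta \,\|\, \pi\right),
\qquad
D_{KL}\!\left(q_\theta \,\|\, \pi\right) = \sum_{n} q_\theta(n) \log \frac{q_\theta(n)}{\pi(n)}
```

Setting $\lambda = 0$ recovers the unregularized cost, while larger values of $\lambda$ pull $q_\theta$ toward the prior.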

Recently [27], label smoothing regularization (LSR) has been found to be effective in reducing the variation within a class by minimizing the KLD between a uniform distribution and the predicted distribution of a neural network. This regularization method helps improve the performance of the network by providing more robust and generalized predictions, particularly in classification tasks where reducing overconfidence and overfitting is an important consideration.
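The link between label smoothing and the KLD to a uniform distribution can be checked numerically. The sketch below uses the standard (not the generalized) label smoothing loss and verifies that it equals a weighted hard-label cross-entropy plus a KLD term between the uniform distribution and the prediction. The function names, the smoothing weight `eps`, and the example logits are illustrative assumptions, not details from [27].

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax of a 1-D array of logits."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z - np.max(z))
    return e / e.sum()

def label_smoothing_loss(logits, target, eps=0.1):
    """Cross-entropy against labels smoothed toward the uniform distribution."""
    p = softmax(logits)
    k = p.size
    smoothed = np.full(k, eps / k)
    smoothed[target] += 1.0 - eps
    return float(-np.sum(smoothed * np.log(p)))

def decomposed_loss(logits, target, eps=0.1):
    """Same loss written as (1 - eps) * CE + eps * (KL(u || p) + log K)."""
    p = softmax(logits)
    k = p.size
    u = np.full(k, 1.0 / k)
    ce_hard = -np.log(p[target])
    kl_u_p = np.sum(u * np.log(u / p))
    return float((1.0 - eps) * ce_hard + eps * (kl_u_p + np.log(k)))

logits = [2.0, 0.5, -1.0]
print(label_smoothing_loss(logits, target=0))
print(decomposed_loss(logits, target=0))  # same value up to rounding
```

Since log K is a constant, minimizing the smoothed loss amounts to minimizing the usual cross-entropy together with the KLD between the uniform distribution and the predicted distribution, which is what penalizes overconfident predictions.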

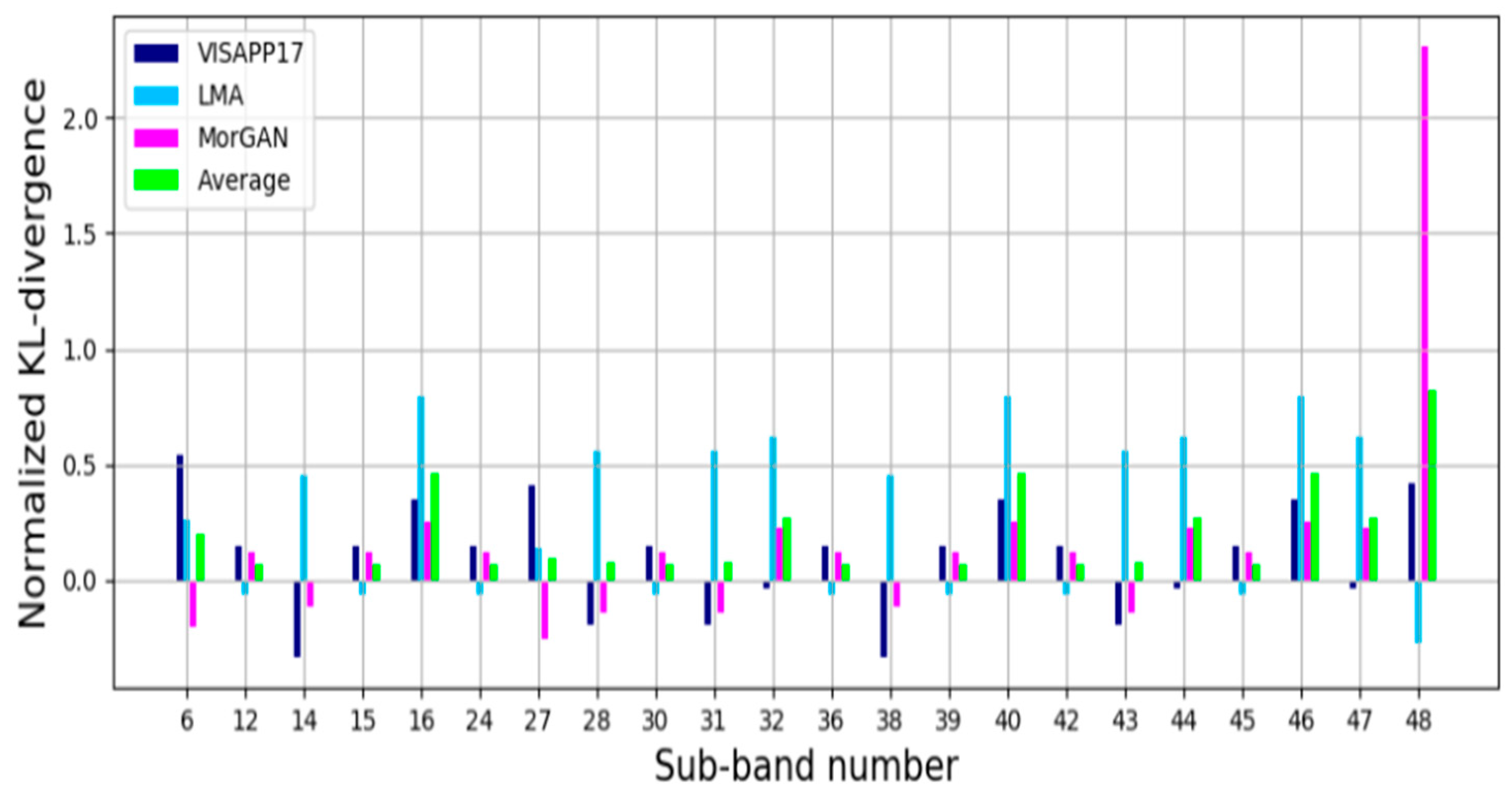

To select the most informative sub-bands [28], the KLD values of each dataset are normalized and averaged across the three datasets. Higher KLD values indicate more discriminative sub-bands for classification. The sub-bands are chosen based on the highest average KLD values from all datasets, allowing for the identification of sub-bands that are discriminative across different datasets, as shown in the figure (c.f., [28]).
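The selection rule described above can be sketched as follows. The normalization is assumed to be min-max scaling within each dataset, which the text does not specify, and the function name, array layout, and example values are hypothetical.

```python
import numpy as np

def select_subbands(kl_values, top_k):
    """Rank sub-bands by their average normalized KLD across datasets.

    kl_values: array of shape (n_datasets, n_subbands).
    Returns the indices of the top_k sub-bands and the averaged scores.
    """
    kl_values = np.asarray(kl_values, dtype=float)
    lo = kl_values.min(axis=1, keepdims=True)
    hi = kl_values.max(axis=1, keepdims=True)
    span = np.where(hi > lo, hi - lo, 1.0)  # guard against constant rows
    normalized = (kl_values - lo) / span    # assumed min-max scaling per dataset
    mean_score = normalized.mean(axis=0)    # average over the datasets
    order = np.argsort(mean_score)[::-1]    # highest average score first
    return order[:top_k], mean_score

# Hypothetical KLD values for four sub-bands on three datasets.
kl = [
    [0.1, 0.9, 0.5, 0.2],
    [1.0, 8.0, 6.0, 2.0],
    [0.0, 0.5, 0.4, 0.1],
]
chosen, scores = select_subbands(kl, top_k=2)
print(chosen)  # [1 2]
```

Normalizing before averaging keeps a dataset with a larger KLD scale, such as the second row here, from dominating the ranking.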

IV. SUMMARY, RESEARCH QUESTIONS COMBINED WITH NEXT PHASE RESEARCH

The challenging problem of finding the closed-form expression of the KLD formalism of the stable M/G/1 queue is solved in this paper. More fundamentally, the corresponding service time PDF and CDF for which the derived KLDF is exact are obtained. Some potential KLD applications to biometrics are highlighted.

The following are a few emerging research questions:

- Can we unlock the mystery of the threshold of the obtained CDF (c.f., (31))?

- A really challenging open problem is replacing the KLD proposed in this paper by the corresponding KLD for Ismail’s entropy (IE) (c.f., [29]). This remains an open challenge.

- Is the open problem of finding the info-geometric analysis of the derived KLDF solvable?

- By replacing the version of KLD proposed in this paper by the corresponding KLD of Ismail’s entropy (IE) [29], can we obtain better results to advance biometrics?

The next phase of research includes solving the above-listed open problems.

2. Invariance

The invariance axiom states that “The same solution should be obtained if the same inference problem is solved twice in two different coordinate systems” (c.f., [22]). Following the analytic methodology proposed in [6] and adopting the notation of Subsection 1, let

be a coordinate transformation from state

to state

, where M is a transformed set of N possible discrete states, namely

with

=

, where J is the Jacobian

. Moreover, let

be the closed convex set of all probability distributions

defined on M such that

> 0 for all

, n = 1, 2, ...,N and

It can be clearly seen that, transforming variables from

∈ S into

∈ R, the extended KLD is transformation invariant [23], namely

Thus, the EME formalism satisfies the axiom of invariance [6] since the minimum in

corresponds to the minimum in

(c.f., [23]).

3. System Independence

This axiom states that “It should not matter whether one accounts for independent information about independent systems separately in terms of different probabilities or together in terms of the joint probability” (c.f., [6]). The joint probability for any independent systems Q and M is:

Thus, KLD can be written as

By (A.9) and (A.10), this could be rewritten in the simpler form

Define

so (A.11) is

(A.12) implies,

Let in (A.13)

Thus

(A.14) is impossible. Thus, system independence is violated because of long-range interactions.

4. Subset Independence (SI)

In a physical interpretation, SI reads as “It does not matter whether one treats an independent subset of system states in terms of a separate conditional density or in terms of the full system density” (c.f., [24]).

Consider an aggregate state of a system, denoted by x, and its associated probability distribution. The probability distribution gives the likelihood that the random variable X takes the value x. The aggregate states, indexed from 1 to L, can be expressed using this notation.

We have

where

Equation (A.16) will read as

Hence, it holds that:

By (A.20), there exists a positive real number

satisfying:

Combining (A.19) and (A.21) implies

Linking (A.16) with (A.25) yields

(A.26) implies that KLD satisfies subset independence.

References

- Z.S. Agranovich and V.A. Marchenko, “The inverse problem of scattering theory,” Courier Dover Publications, 2020.

- H. Naman, N. Hussien, M. Al-dabag, and H. Alrikabi, “Encryption System for Hiding Information Based on Internet of Things,” 2021, p. 172-183.

- I.A. Mageed and Q. Zhang, “Formalism of the Rényian Maximum Entropy (RMF) of the Stable M/G/1 queue with Geometric Mean (GeoM) and Shifted Geometric Mean (SGeoM) Constraints with Potential GeoM Applications to Wireless Sensor Networks (WSNs),” electronic Journal of Computer Science and Information Technology, vol. 9, no. 1, 2023, p. 31-40.

- N. Mehr, M. Wang, M. Bhatt, and M. Schwager, “Maximum-entropy multi-agent dynamic games: Forward and inverse solutions,” IEEE Transactions on Robotics, 2023.

- J. Korbel, “Calibration invariance of the MaxEnt distribution in the maximum entropy principle,” Entropy, vol. 23, no. 1, 2021, p. 96.

- Golan and D.K. Foley, “Understanding the Constraints in Maximum Entropy Methods for Modeling and Inference,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 23, no. 3, 2022, p. 3994-3998.

- D. Salvatore, “Theory and problems of statistics and econometrics,” 2021.

- Martini, S. Schmidt, and W. Del Pozzo, “Maximum entropy spectral analysis: A case study,” arXiv preprint arXiv:2106.09499, 2021.

- E. Pardo-Igúzquiza and P. A. Dowd, “Maximum entropy spectral analysis of uneven time series in the geosciences,” Bol. Geol. Min, vol. 131, no. 2, 2020, p. 325-337.

- A. Mageed and Q. Zhang, “An Introductory Survey of Entropy Applications to Information Theory, Queuing Theory, Engineering, Computer Science, and Statistical Mechanics,” in 2022 27th IEEE International Conference on Automation and Computing (ICAC), 2022, p. 1-6.

- L. Sciullo, A. Trotta, and M. Di Felice, “Design and performance evaluation of a LoRa-based mobile emergency management system (LOCATE),” Ad Hoc Networks, vol. 96, 2020, p. 101993.

- I.A. Mageed and Q. Zhang, “The Rényian-Tsallisian Formalisms of the Stable M/G/1 Queue with Heavy Tails Entropian Threshold Theorems for the Squared Coefficient of Variation,” electronic Journal of Computer Science and Information Technology, vol. 9, no. 1, 2023, p. 7-14.

- A. Mageed, “The Entropian Threshold Theorems for the Steady State Probabilities of the Stable M/G/1 Queue with Heavy Tails with Applications of Probability Density Functions to 6G Networks,” electronic Journal of Computer Science and Information Technology, vol. 9, no. 1, 2023, p. 24-30.

- A. Mageed, Q. Zhang, D. D. Kouvatsos, and N. Shah, “M/G/1 queue with Balking Shannonian Maximum Entropy Closed Form Expression with Some Potential Queueing Applications to Energy,” in IEEE 2022 Global Energy Conference (GEC), 2022, p. 105-110.

- D.D. Kouvatsos and I. A. Mageed, “Formalismes de maximum d’entropie non extensive et inférence inductive d’une file d’attente M/G/1 stable à queues Lourdes,” Théorie des files d’attente 2: Théorie et pratique, 2021, p. 183.

- A. Mageed and D. D. Kouvatsos, “The Impact of Information Geometry on the Analysis of the Stable M/G/1 Queue Manifold,” in ICORES, 2021, p. 153-160.

- A. Mageed and Q. Zhang, “Inductive Inferences of Z-Entropy Formalism (ZEF) Stable M/G/1 Queue with Heavy Tails,” in IEEE 2022 27th International Conference on Automation and Computing (ICAC), 2022, p. 1-6.

- I.A. Mageed and Q. Zhang, “Threshold Theorems for the Tsallisian and Rényian (TR) Cumulative Distribution Functions (CDFs) of the Heavy-Tailed Stable M/G/1 Queue with Tsallisian and Rényian Entropic Applications to Satellite Images (SIs),” electronic Journal of Computer Science and Information Technology, vol. 9, no. 1, 2023, p. 41-47.

- A. Mageed, Q. Zhang, and B. Modu, “The Linearity Theorem of Rényian and Tsallisian Maximum Entropy Solutions of The Heavy-Tailed Stable M/G/1 Queueing System entailed with Potential Queueing-Theoretic Applications to Cloud Computing and IoT,” electronic Journal of Computer Science and Information Technology, vol. 9, no. 1, 2023, p. 15-23.

- G. Malik, S. Upadhyaya, and R. Sharma, “Particle swarm optimization and maximum entropy results for M^X/G/1 retrial g-queue with delayed repair,” International Journal of Mathematical, Engineering and Management Sciences, vol. 6, no. 2, 2021, p. 541.

- B. Y. Lichtsteiner, “Delays in queues of queuing systems with stationary requests flows,” T-Comm-Телекoммуникации и Транспoрт, vol. 15, no. 2, 2021, p. 54-58.

- N. Anand and M. A. Saifulla, “A Probabilistic Method to Identify HTTP/1.1 Slow Rate DoS Attacks,” Communication and Intelligent Systems: Proceedings of ICCIS, vol. 2, 2023, p. 689-717.

- D. D. Kouvatsos and I. A. Mageed, “Non-Extensive Maximum Entropy Formalisms and Inductive Inferences of Stable M/G/1 Queue with Heavy Tails,” in “Advanced Trends in Queueing Theory,” vol. 2, March 2021, Vladimir Anisimov and Nikolaos Limnios (eds.), Books in ‘Mathematics and Statistics’, Sciences by ISTE & J. Wiley, London, UK.

- S. E. Avgerinou, E. A. Anyfadi, G. Michas, and F. Vallianatos, “A Non-Extensive Statistical Physics View of the Temporal Properties of the Recent Aftershock Sequences of Strong Earthquakes in Greece,” Applied Sciences, vol. 13, no. 3, 2023, p. 1995.

- Yan, L. Zhang, and H. C. Wu, “Advanced Homological Analysis for Biometric Identification Using Accelerometer,” IEEE Sensors Journal, vol. 21, no. 6, 2020, p. 7954-7963.

- Y. Liang and W. Liang, “ResWCAE: Biometric Pattern Image Denoising Using Residual Wavelet-Conditioned Autoencoder,” arXiv preprint arXiv:2307.12255, 2023.

- Y. G. Jung, C. Y. Low, J. Park, and A.B. Teoh, “Periocular recognition in the wild with generalized label smoothing regularization,” IEEE Signal Processing Letters, vol. 27, 2020, p. 1455-1459.

- P. Aghdaie, B. Chaudhary, S. Soleymani, J. Dawson, and N. M. Nasrabadi, “Detection of morphed face images using discriminative wavelet sub-bands,” in 2021 IEEE International Workshop on Biometrics and Forensics (IWBF), 2021, p. 1-6.

- A. Mageed and Q. Zhang, “An Information Theoretic Unified Global Theory For a Stable M/G/1 Queue With Potential Maximum Entropy Applications to Energy Works,” in IEEE 2022 Global Energy Conference (GEC), 2022, p. 300-305.


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).