Submitted: 07 March 2024

Posted: 08 March 2024


Abstract

Keywords:

1. Introduction

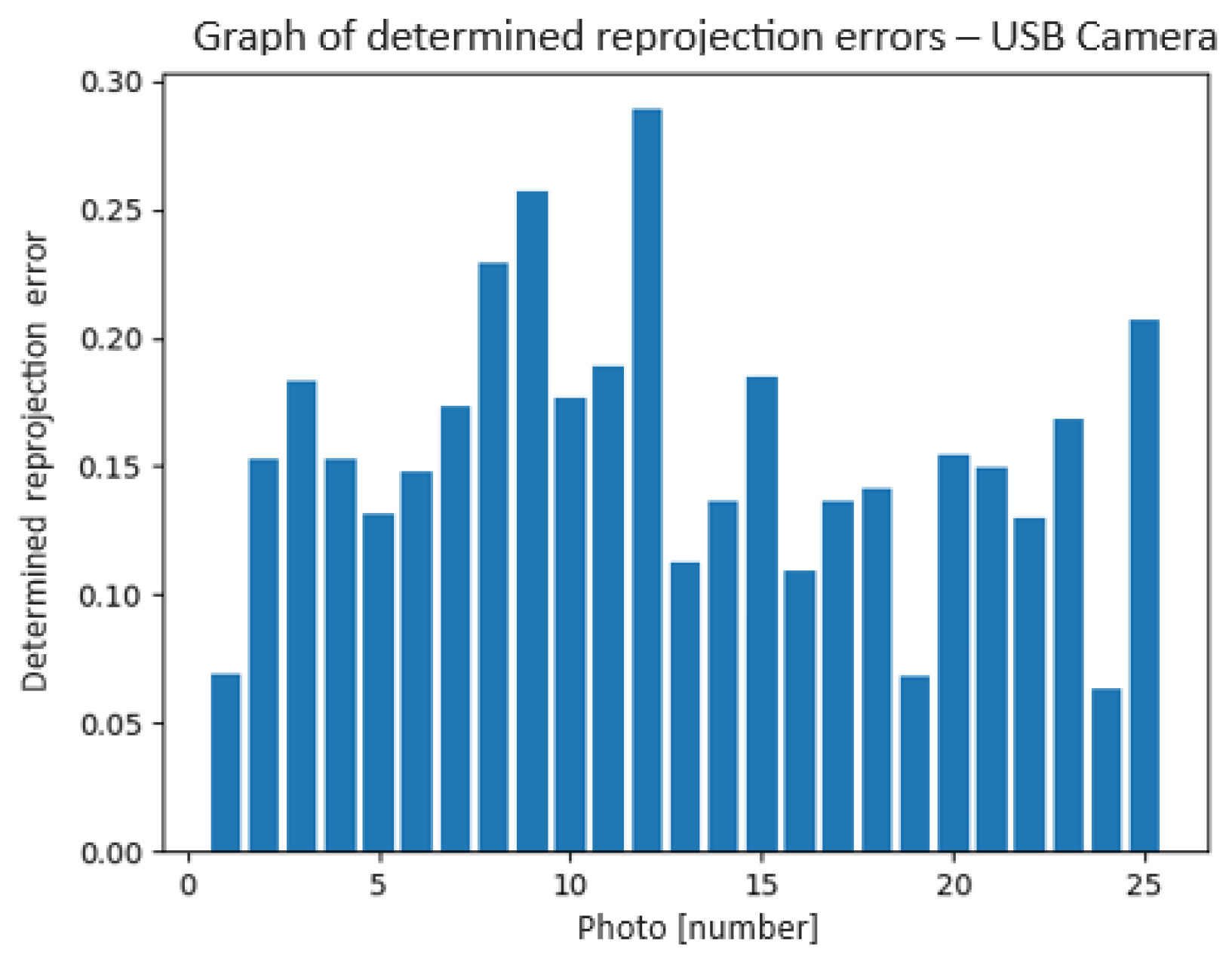

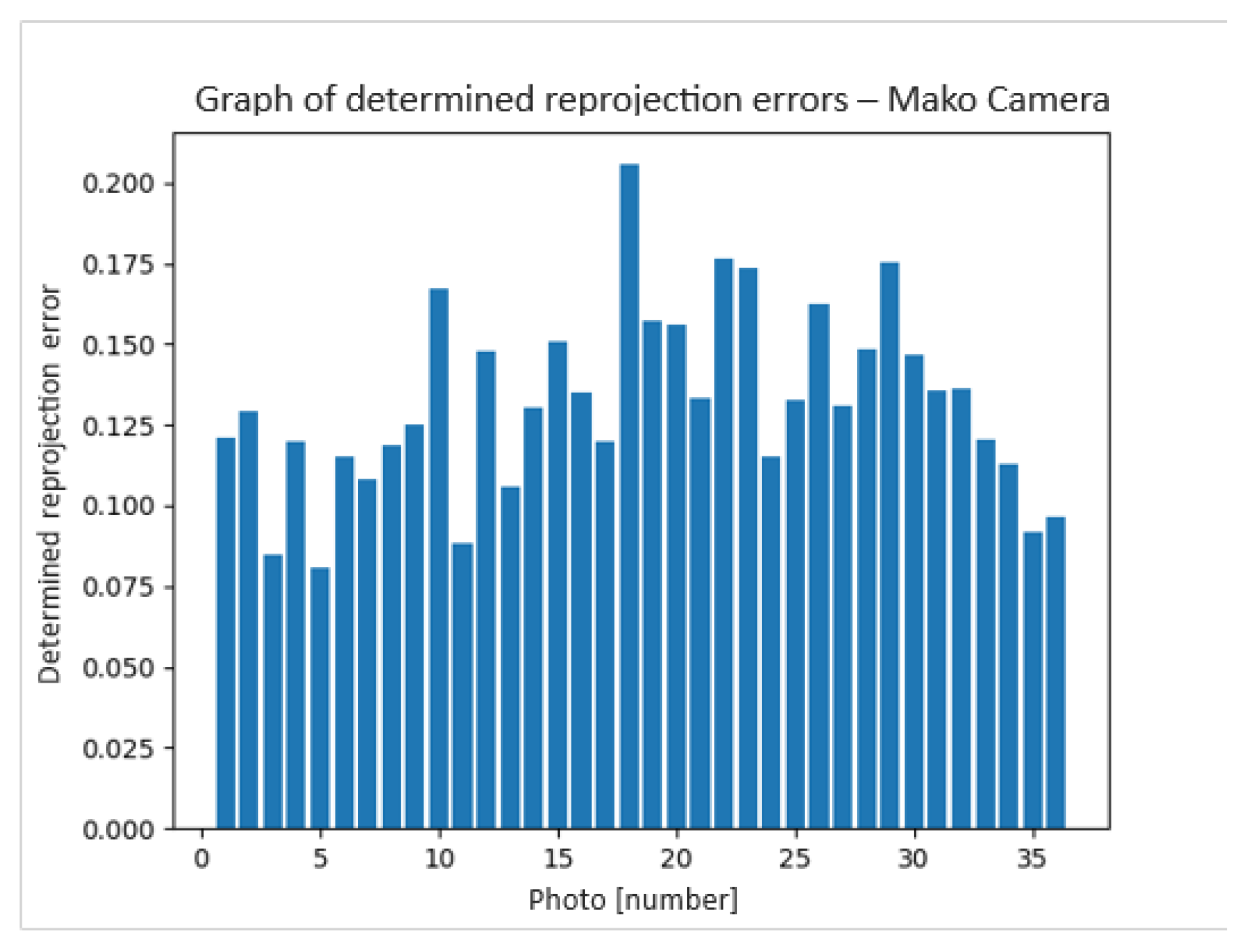

2. Methodology-Image Processing Algorithms

| Distortion Coefficients = [-0.27496, 4.08649, -0.00045, -0.00277, -0.00043] | (1) |

| Camera Matrix = | (2) |

| Distortion Coefficients = [-0.37186, 0.13426, -0.00247, -0.0007, -0.02940] | (3) |

| Camera Matrix = | (4) |
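As an illustration of how five distortion coefficients of this kind are typically used, the sketch below applies the standard radial-tangential distortion model to a point in normalized image coordinates. It assumes the coefficients are ordered (k1, k2, p1, p2, k3), as in OpenCV; the text does not state the ordering, and the function name `distort_point` is hypothetical.

```python
def distort_point(x, y, coeffs):
    """Apply the radial-tangential distortion model to a normalized point.

    coeffs is ASSUMED to be ordered (k1, k2, p1, p2, k3), the OpenCV
    convention; the ordering is not confirmed by the source text.
    """
    k1, k2, p1, p2, k3 = coeffs
    r2 = x * x + y * y  # squared distance from the optical axis
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d


# Coefficients quoted in Equation (3)
COEFFS_EQ3 = (-0.37186, 0.13426, -0.00247, -0.0007, -0.02940)

if __name__ == "__main__":
    # A point 0.1 normalized units to the right of the optical axis
    print(distort_point(0.1, 0.0, COEFFS_EQ3))
```

Undistortion inverts this mapping numerically. Pixel coordinates are obtained only after multiplying the distorted point by the camera matrix, which is not reproduced here.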

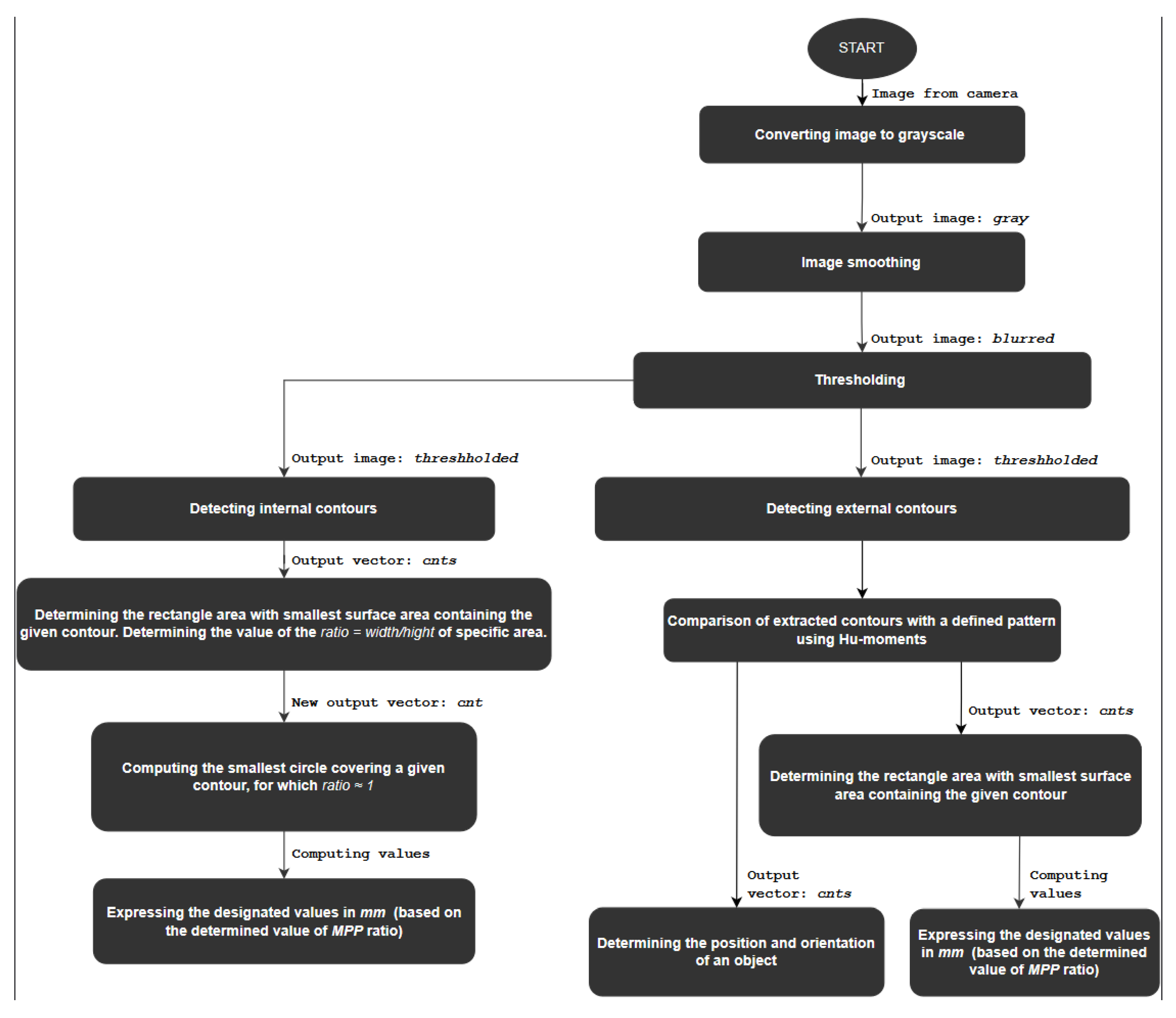

- ➢ Step 1 – image preprocessing before contour detection, which includes conversion to grayscale (for color images), smoothing with a Gaussian filter, thresholding, and bit negation,

- ➢ Step 2 – detection of external contours in the binary image using the cv.findContours() function (which applies topological structural analysis),

- ➢ Step 3 – elimination of contours that do not meet the adopted parameters for the extracted image features,

- ➢ Step 4 – comparison of the extracted contours with a defined pattern using Hu moments (cv.matchShapes()),

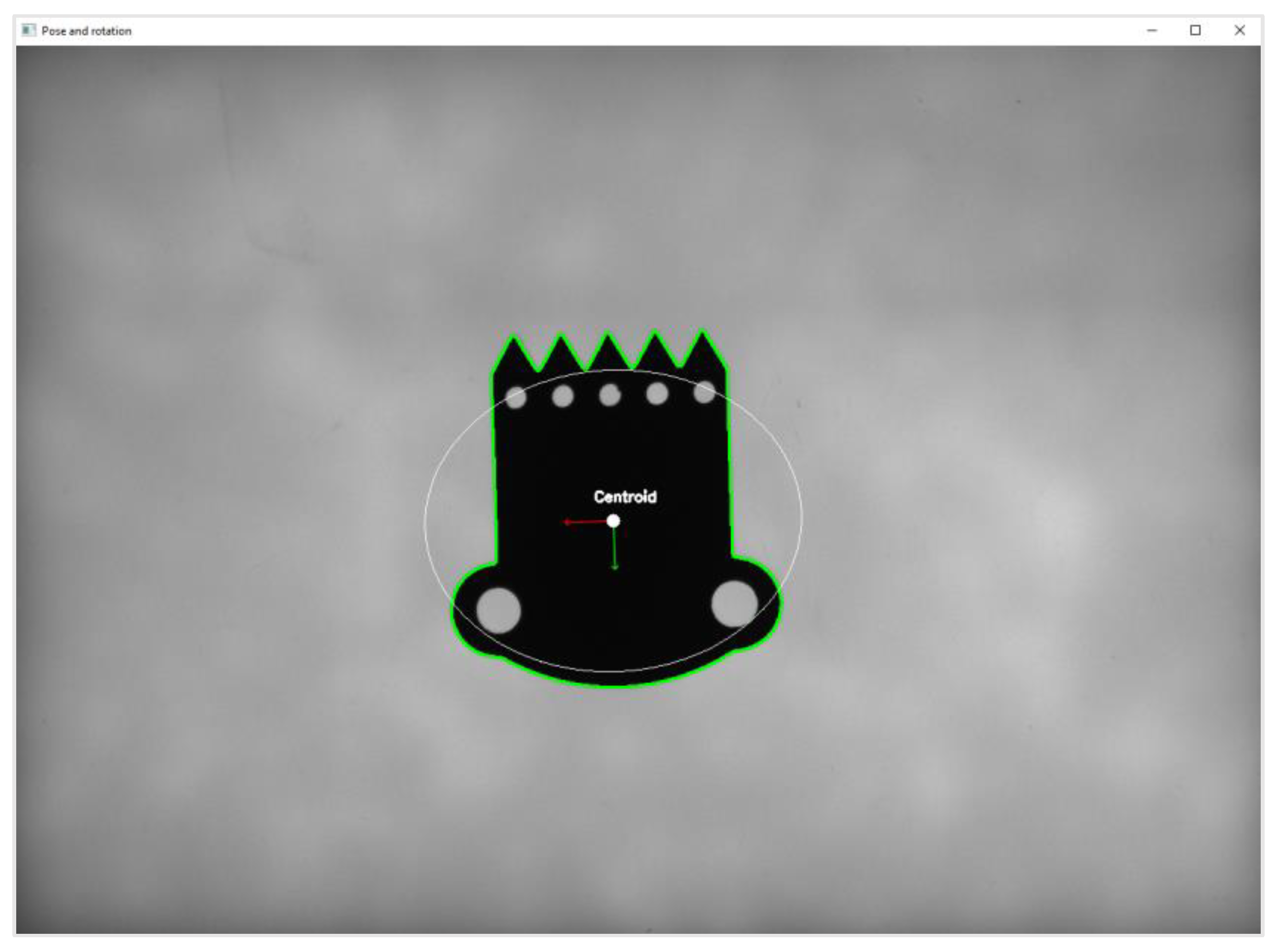

- ➢ Step 5 – determination of the centroid and orientation angle based on first- and second-order moment methods,
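The last step can be sketched in a few lines. The example below is a dependency-free illustration, not the authors' implementation: it computes the centroid from the zeroth- and first-order moments and the orientation angle from the second-order central moments of a binary mask. The function name `centroid_and_orientation` and the list-of-rows mask format are assumptions made for the example.

```python
import math


def centroid_and_orientation(mask):
    """Return (cx, cy, theta) for a binary mask given as a list of rows.

    theta is the principal-axis angle in radians, measured in image
    coordinates (x to the right, y downwards).
    """
    # Zeroth- and first-order raw moments
    m00 = m10 = m01 = 0.0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                m00 += 1.0
                m10 += x
                m01 += y
    if m00 == 0.0:
        raise ValueError("mask contains no object pixels")
    cx, cy = m10 / m00, m01 / m00

    # Second-order central moments
    mu20 = mu02 = mu11 = 0.0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                dx, dy = x - cx, y - cy
                mu20 += dx * dx
                mu02 += dy * dy
                mu11 += dx * dy

    theta = 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)
    return cx, cy, theta


if __name__ == "__main__":
    diagonal = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    print(centroid_and_orientation(diagonal))
```

With OpenCV, the same moments are available from cv.moments() applied to a contour, so the angle formula can be reused directly on its output.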

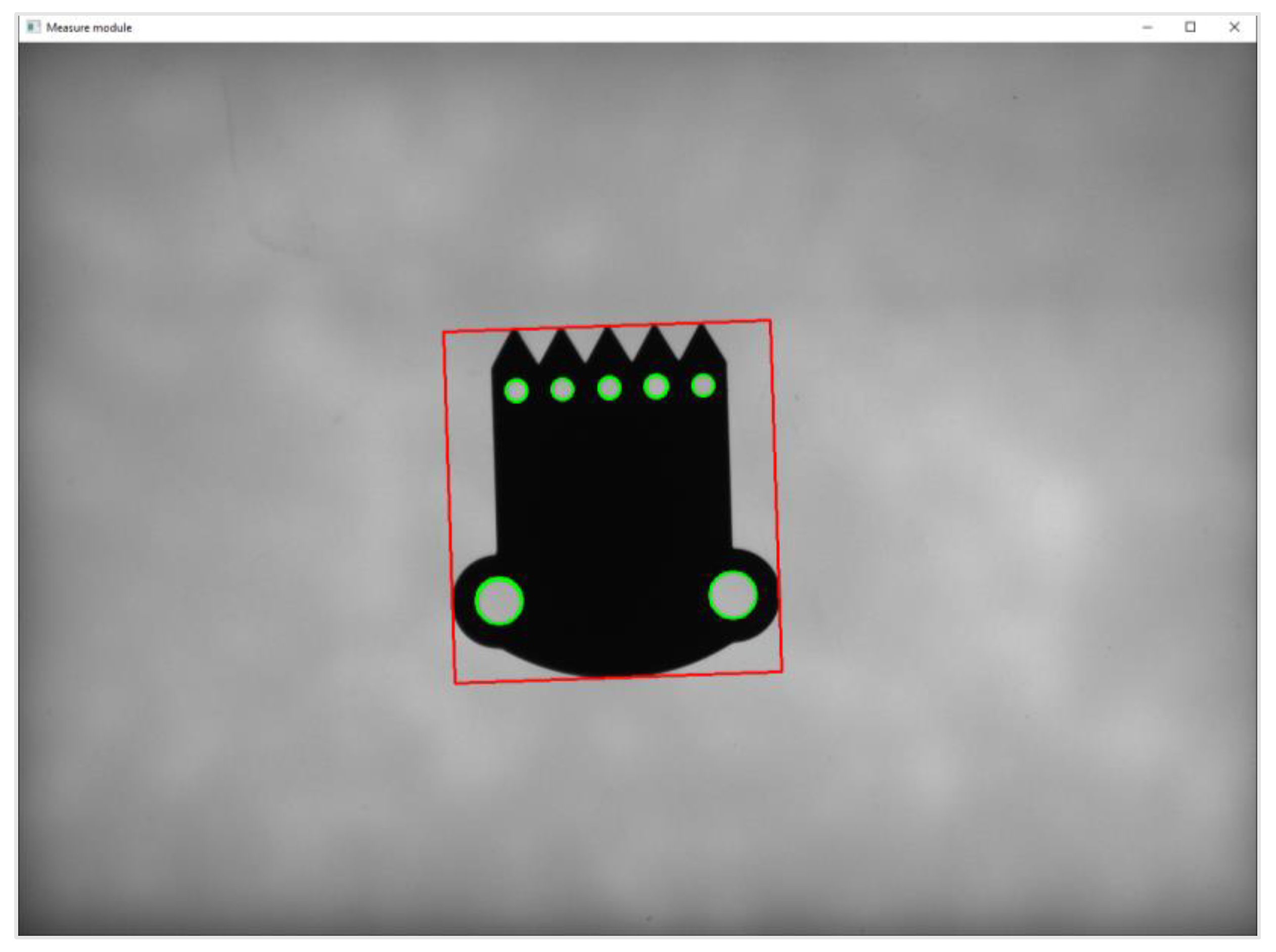

- ➢ Step 1 – image preprocessing (conversion to grayscale, smoothing with a Gaussian filter, thresholding, bit negation) and detection of external contours using the cv.findContours() function,

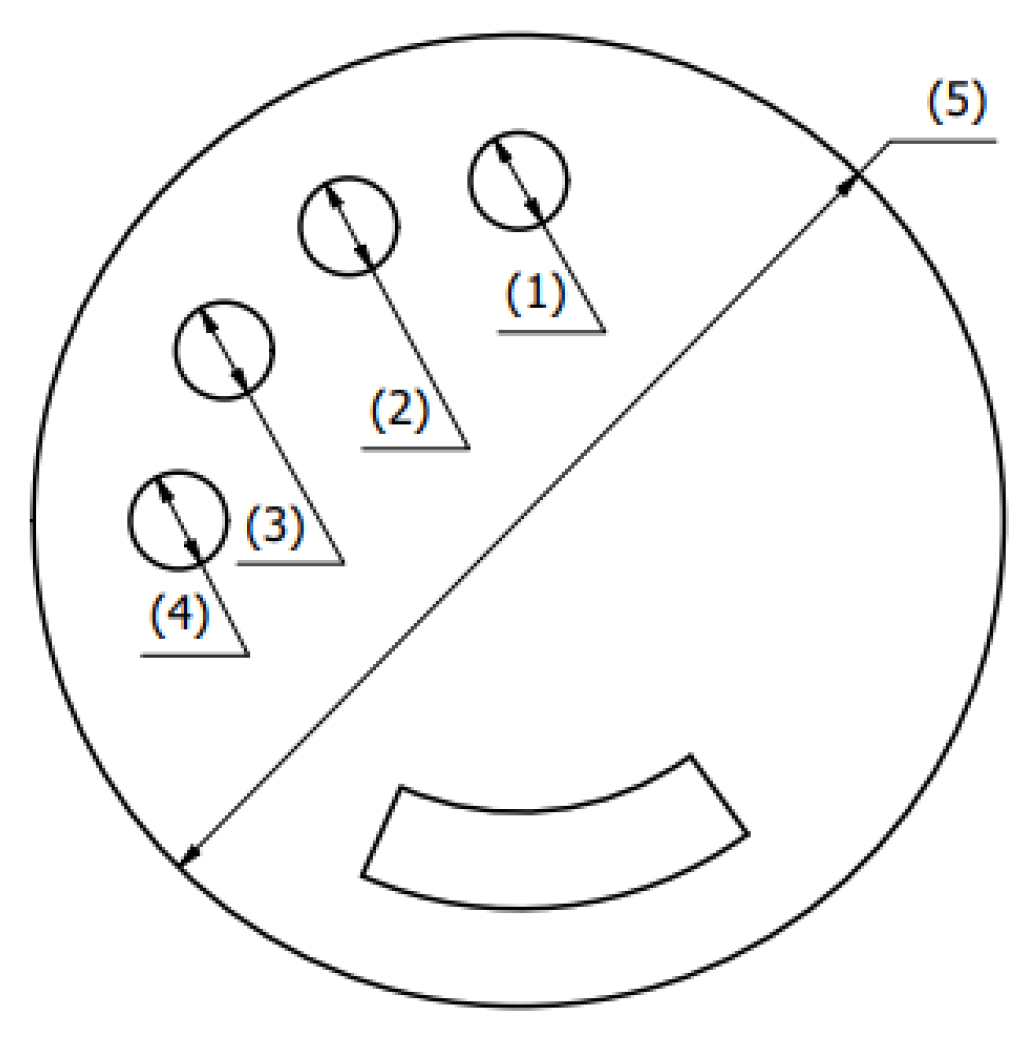

- ➢ Step 2 – detection of internal contours within the group of regions extracted from the image in the previous step,

- ➢ Step 3 – determination of the basic values of the detected geometric features,

- ➢ Step 4 – conversion of the measured dimensions from pixels to millimetres.
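The pixel-to-millimetre conversion in the final step can be illustrated as follows. This is a minimal sketch that assumes a single uniform scale factor derived from a reference feature of known size in the same measurement plane; the text does not say how the scale was obtained, and the names `mm_per_pixel` and `to_mm` are hypothetical.

```python
def mm_per_pixel(reference_mm, reference_px):
    """Scale factor from a reference feature of known physical size.

    ASSUMPTION: the reference lies in the measurement plane and the image
    has already been corrected for lens distortion.
    """
    if reference_px <= 0:
        raise ValueError("reference length in pixels must be positive")
    return reference_mm / reference_px


def to_mm(value_px, scale):
    """Convert a length measured in pixels to millimetres."""
    return value_px * scale


if __name__ == "__main__":
    scale = mm_per_pixel(100.0, 800.0)  # illustrative values only
    print(to_mm(80.0, scale))
```

A single scale factor is only valid when the object plane is parallel to the sensor and at a fixed distance, which is why such a conversion is normally paired with camera calibration.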

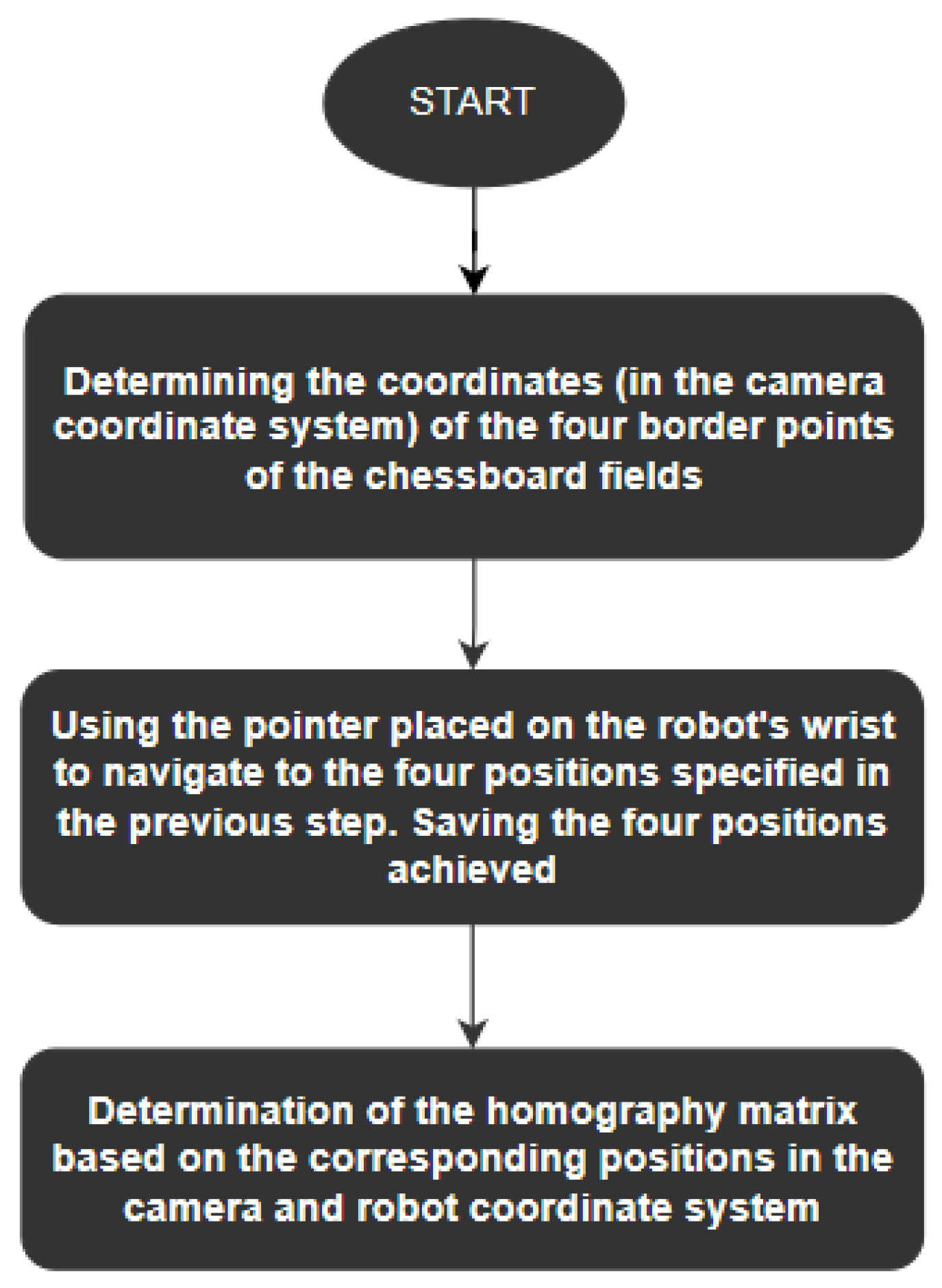

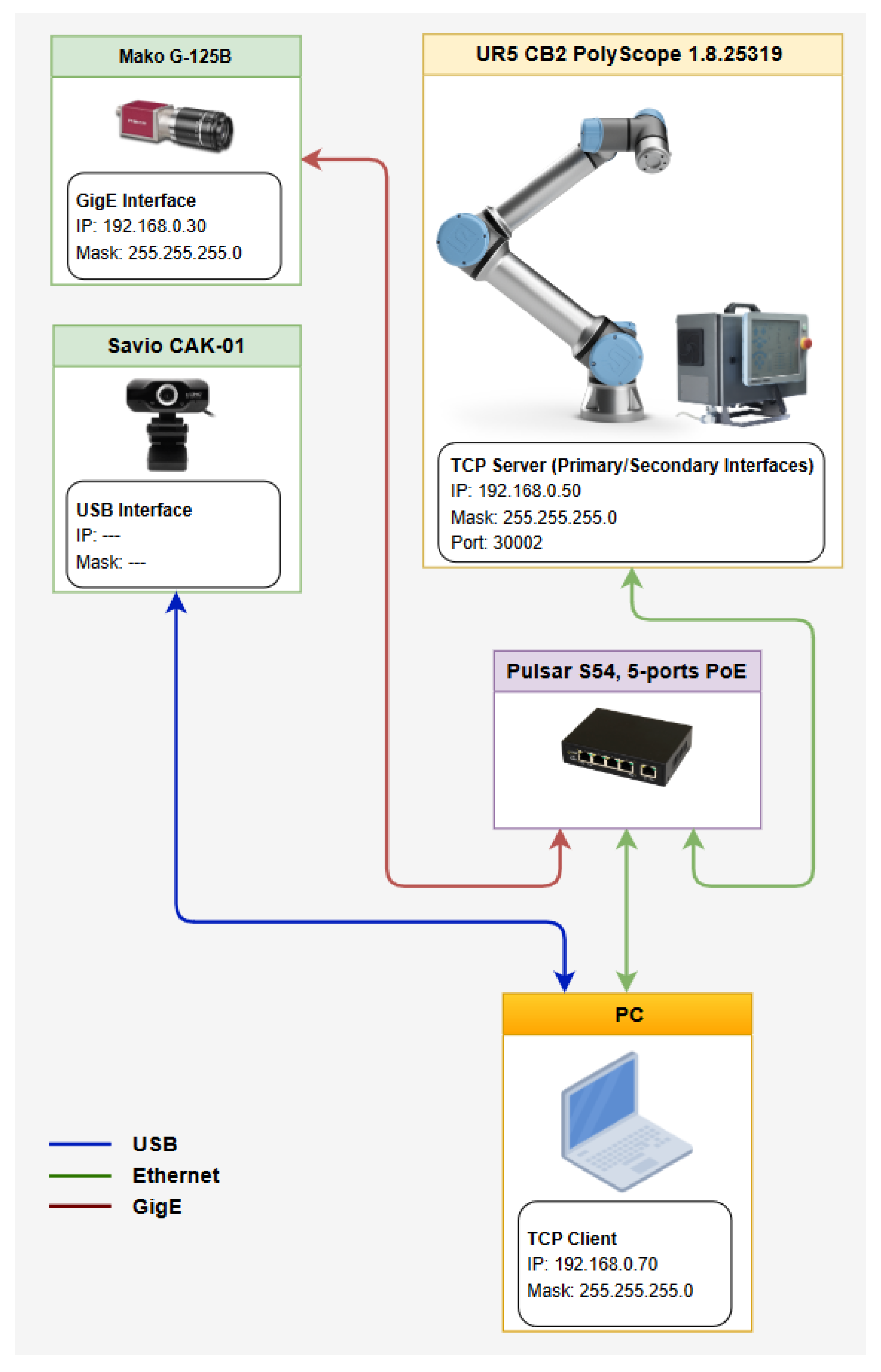

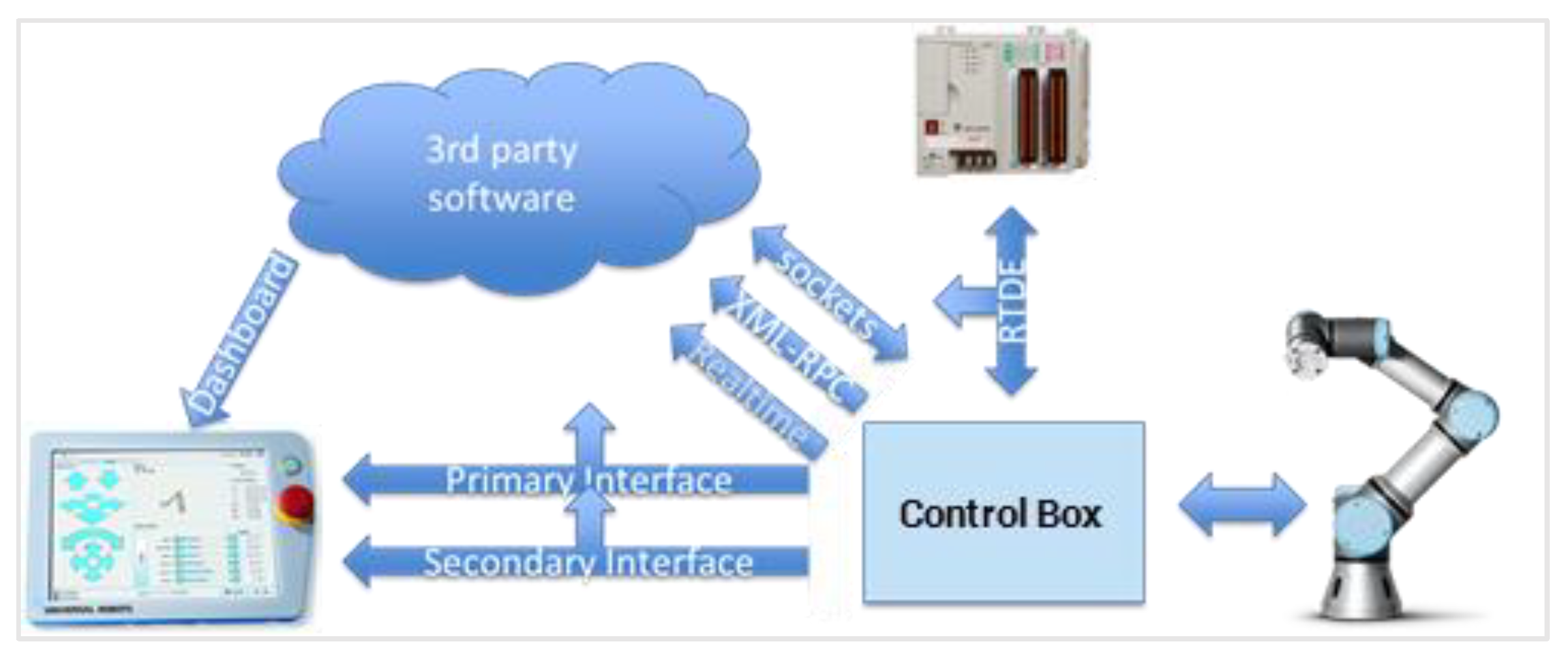

3. System Architecture

- Cobot UR5 CB2 with the piCOBOT vacuum ejector from Piab,

- Mako G-125B camera (CCD, 1292×964) with a 16 mm Computar lens,

- Savio CAK-01 USB webcam (CMOS, 1920×1080),

- Illumination system (backlight) for the Mako camera. In the case of a camera placed on the robot, the use of lighting installed in the room was limited.

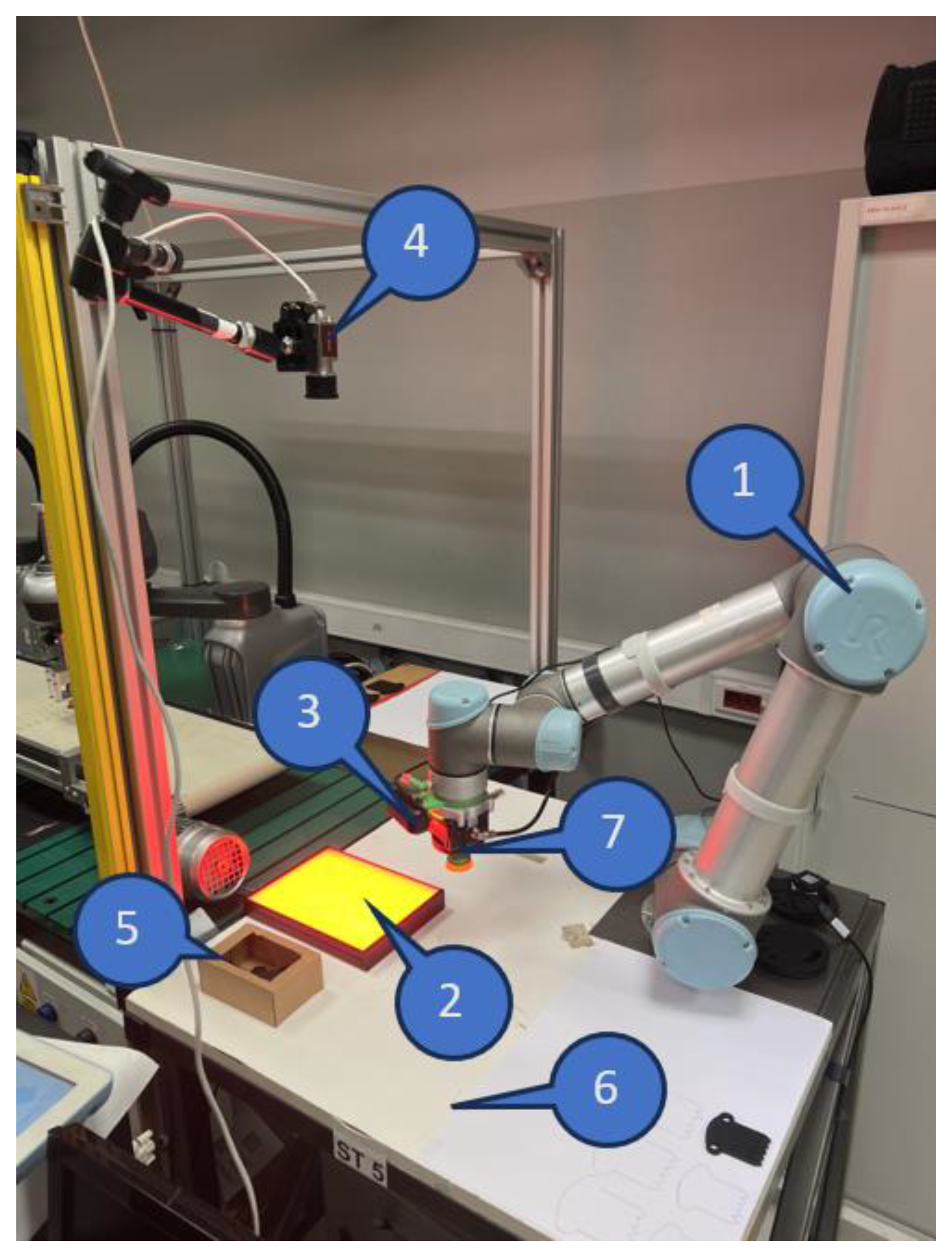

4. Robotic Workstation

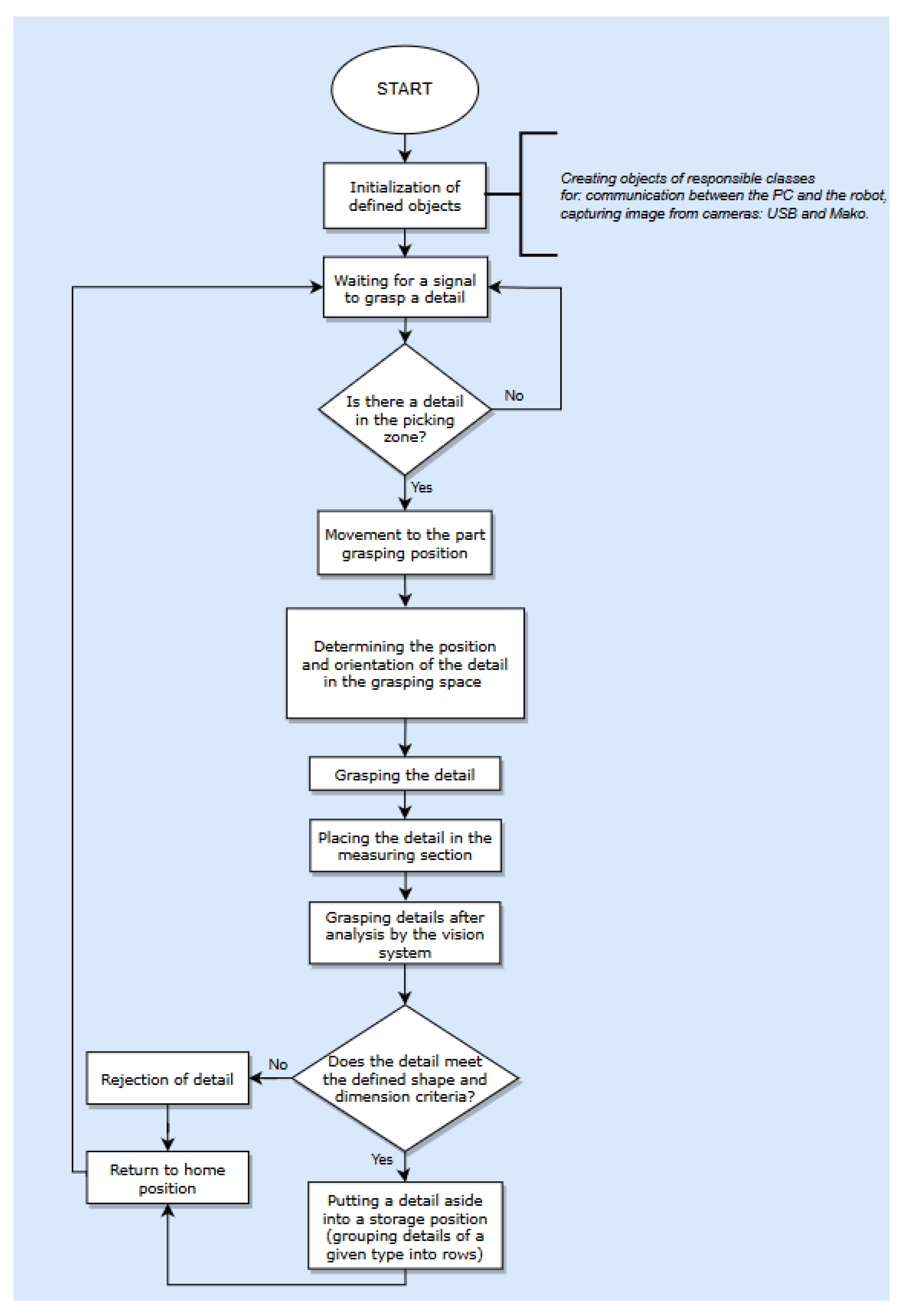

- ➢ Step 1 – waiting for the signal to pick up the part (true value on digital_in0)

- ➢ Step 2 – after the signal (digital_in0) is received, the parts are grasped by the UR5 cobot (the position and orientation of the objects are determined from the image of the camera mounted on the robot’s flange) and transferred to the measuring section.

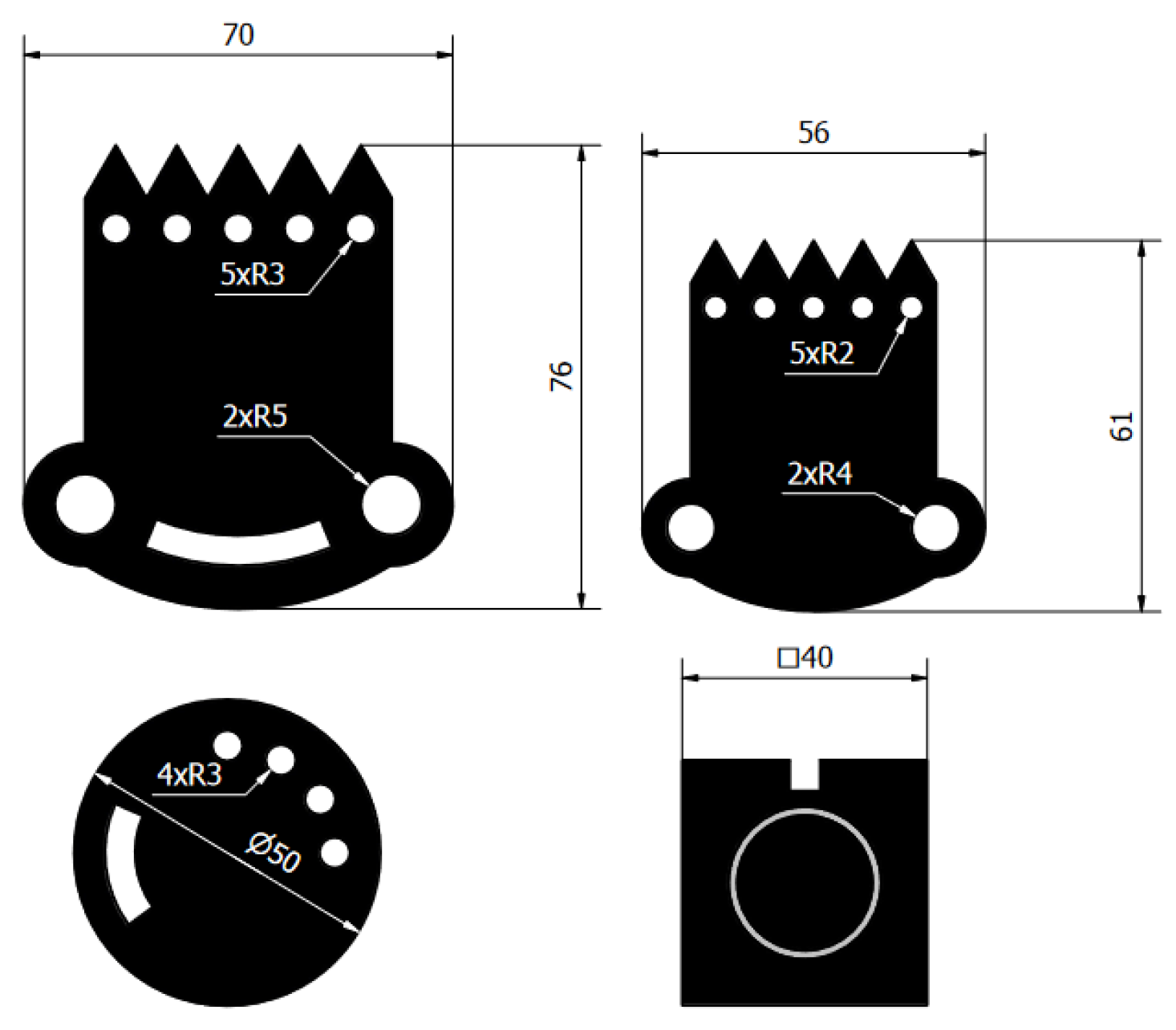

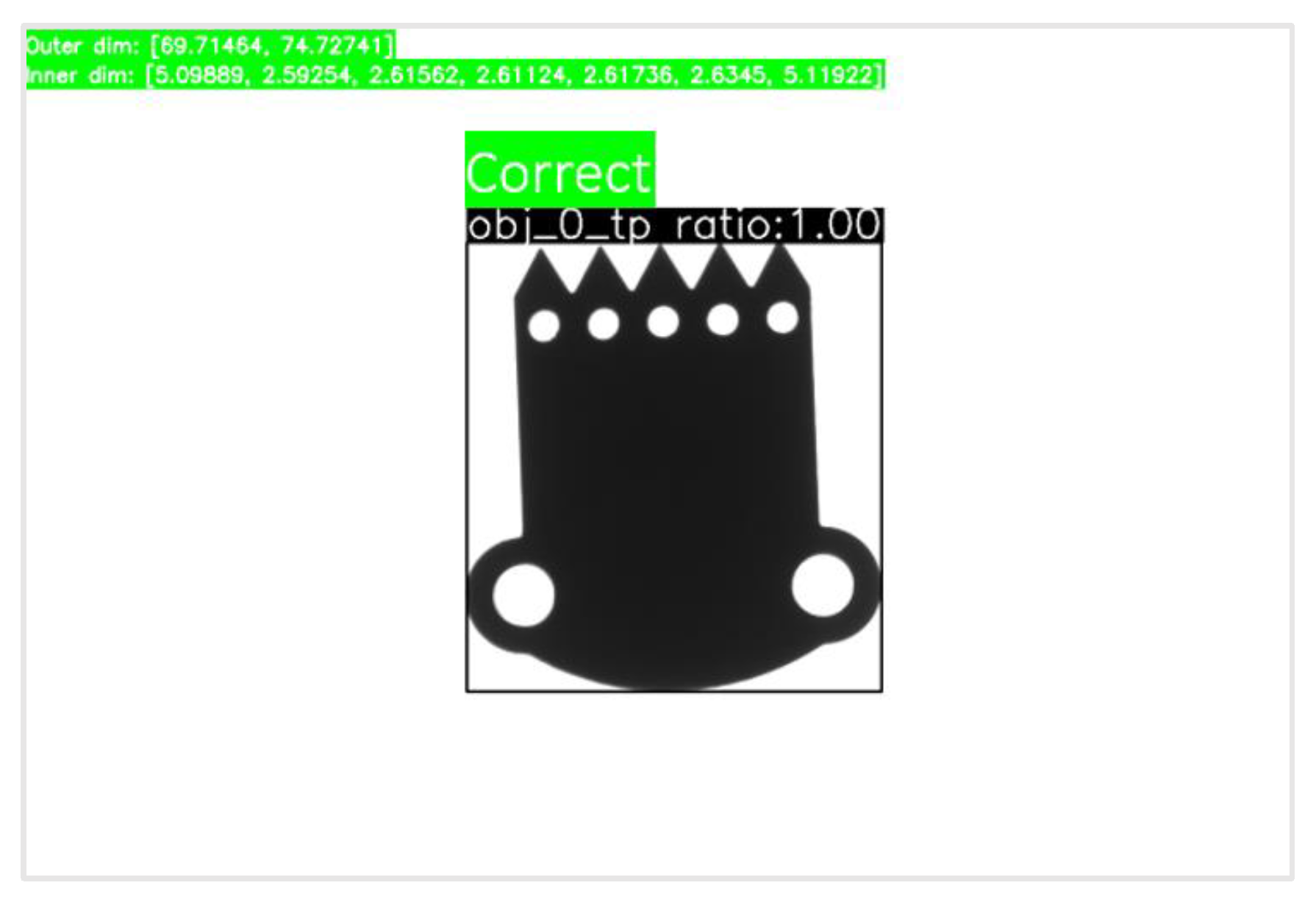

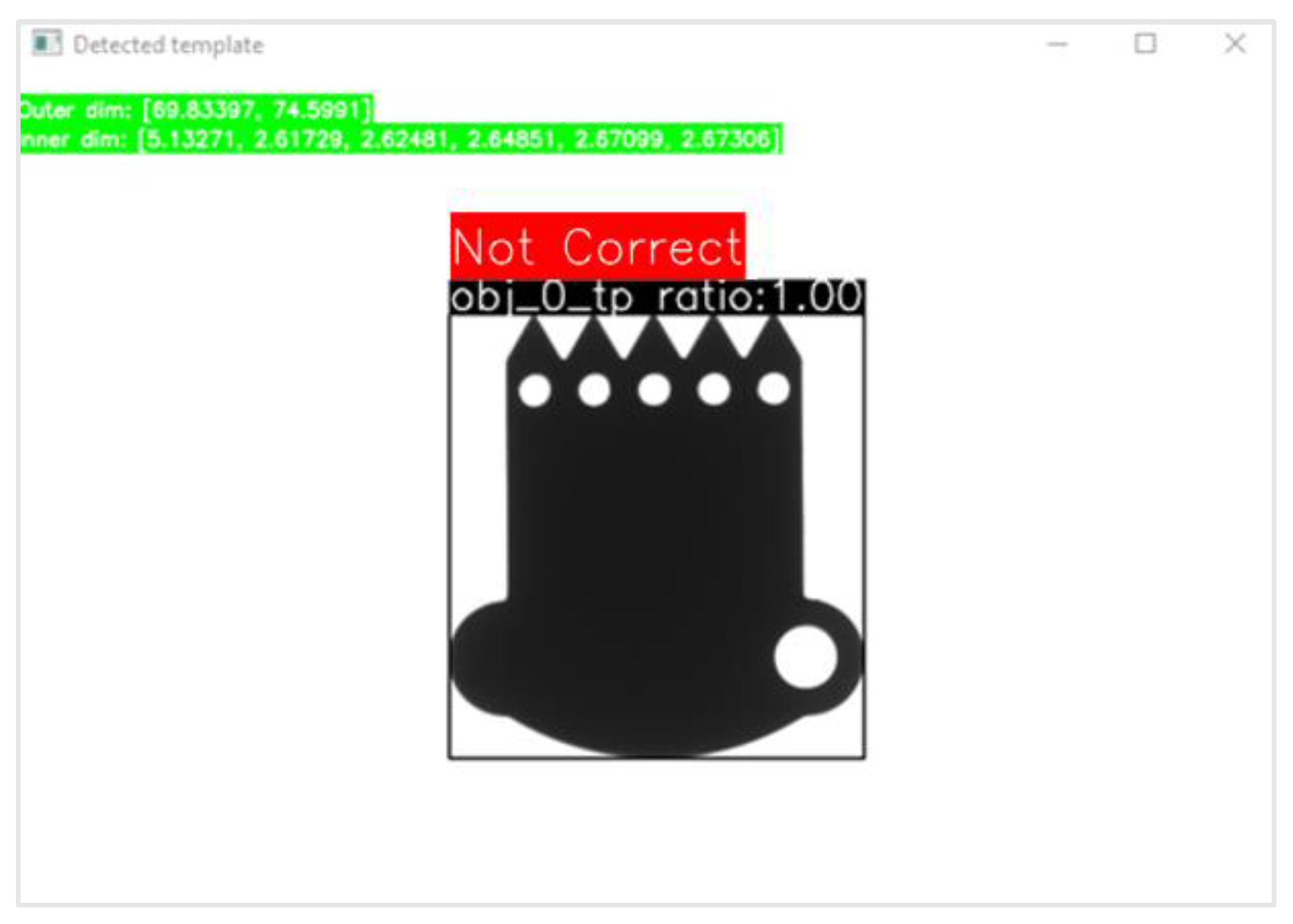

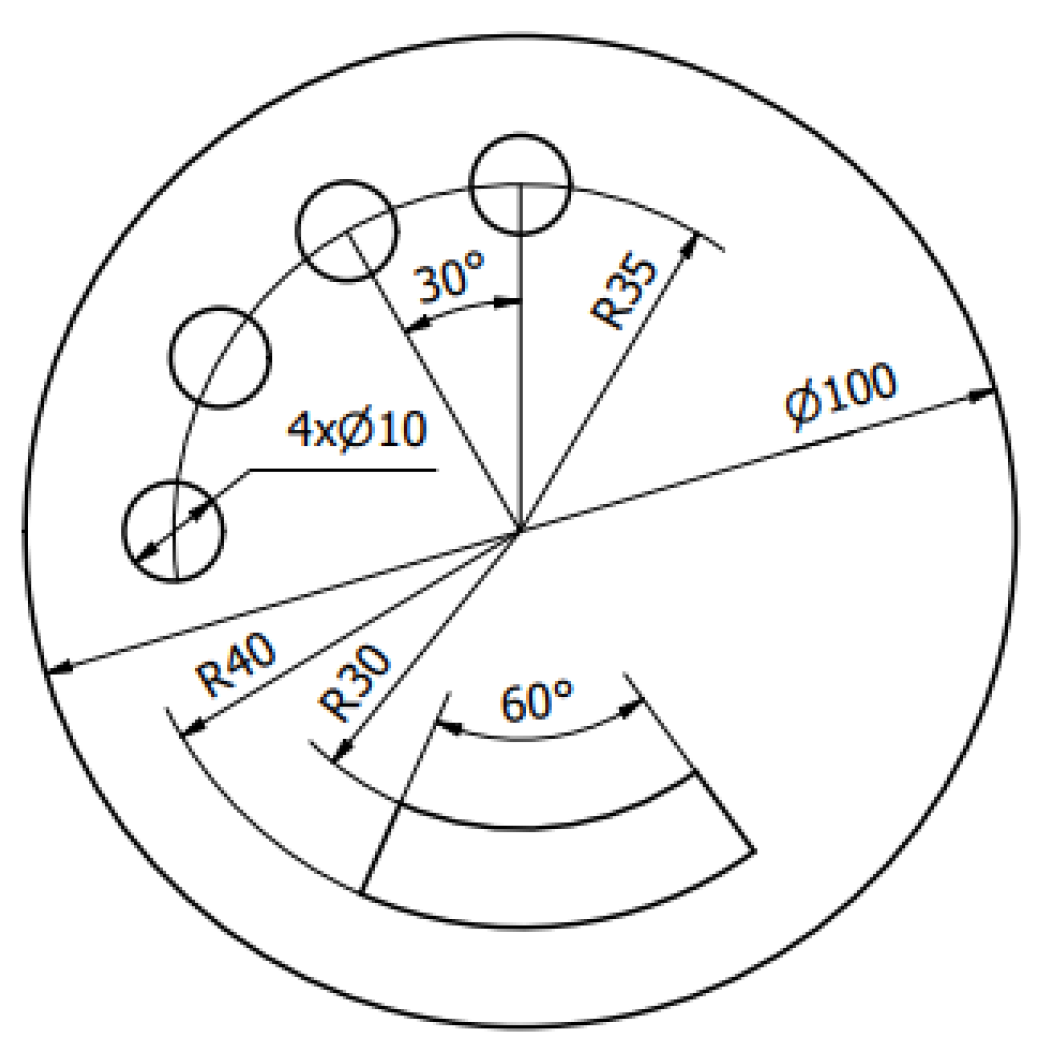

- ➢ Step 3 – in the measurement section, the part is detected in the image from the Mako G-125B camera, and its dimensions (width and height) and the radius of its internal holes are measured. Sample analysis results are presented below (the internal holes are listed in descending order of the X and Y coordinates of their centers, and the external dimensions are given as width and height).

- ➢ Step 4 – parts that do not meet the shape requirements (the shape of the analyzed part is not included in the database of defined patterns, or the part has a defect) or the dimensional requirements (at least one dimension falls outside the assumed tolerance) are rejected (item ‘5’ in the referenced figure). Parts that meet the dimensional criteria for width, height, and internal hole diameter are transferred to the storage section (item ‘6’ in the referenced figure). A symmetrical tolerance of ±0.5 mm was assumed for the overall dimensions and ±0.3 mm for the diameter of the internal holes.

- ➢ Step 5 – returning to the home position and waiting for the signal to pick up the part.
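The accept/reject decision described in the steps above can be sketched as a simple tolerance check. The tolerances (±0.5 mm for overall dimensions, ±0.3 mm for hole diameters) come from the text; the nominal values, the dictionary layout, and the function names are hypothetical and serve only to make the example runnable.

```python
OVERALL_TOL_MM = 0.5  # tolerance for width and height, from the text
HOLE_TOL_MM = 0.3     # tolerance for internal hole diameters, from the text


def within(measured, nominal, tol):
    """True if the measured value lies inside the symmetric tolerance band."""
    return abs(measured - nominal) <= tol


def inspect_part(width, height, hole_diameters, nominal):
    """Return 'store' if every dimension is within tolerance, else 'reject'.

    nominal is a HYPOTHETICAL description of the pattern:
    {"width": ..., "height": ..., "hole_diameter": ..., "hole_count": ...}
    """
    if len(hole_diameters) != nominal["hole_count"]:
        return "reject"  # missing or extra holes are treated as a defect
    ok = within(width, nominal["width"], OVERALL_TOL_MM)
    ok = ok and within(height, nominal["height"], OVERALL_TOL_MM)
    ok = ok and all(
        within(d, nominal["hole_diameter"], HOLE_TOL_MM) for d in hole_diameters
    )
    return "store" if ok else "reject"


if __name__ == "__main__":
    # Illustrative nominal values, not taken from the paper
    pattern = {"width": 100.0, "height": 50.0, "hole_diameter": 10.0, "hole_count": 2}
    print(inspect_part(100.2, 49.7, [10.1, 9.8], pattern))
```

In the workstation, the returned label would decide whether the cobot moves the part to the reject position or to the storage section; the shape check against the pattern database would run before this dimensional check.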

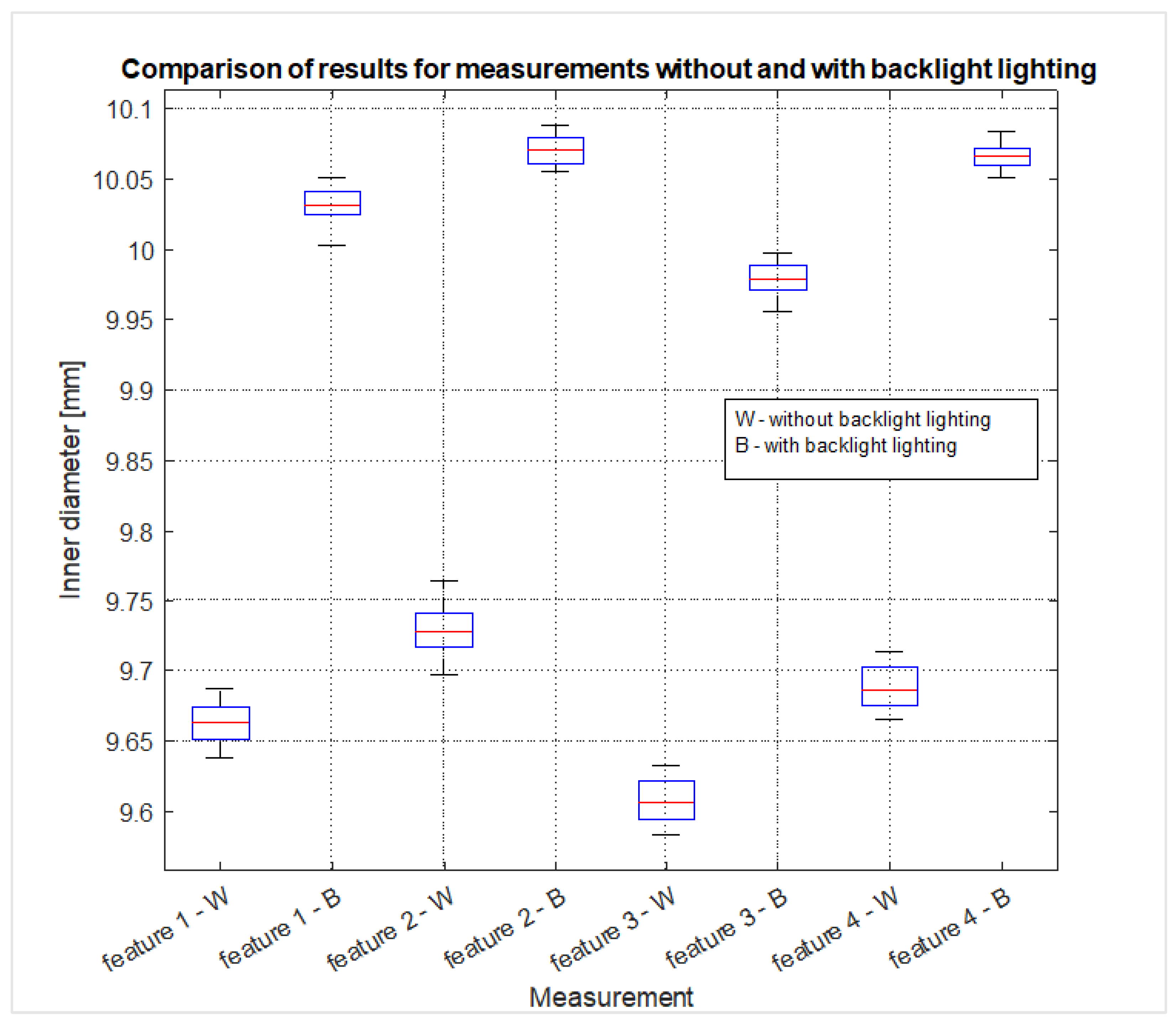

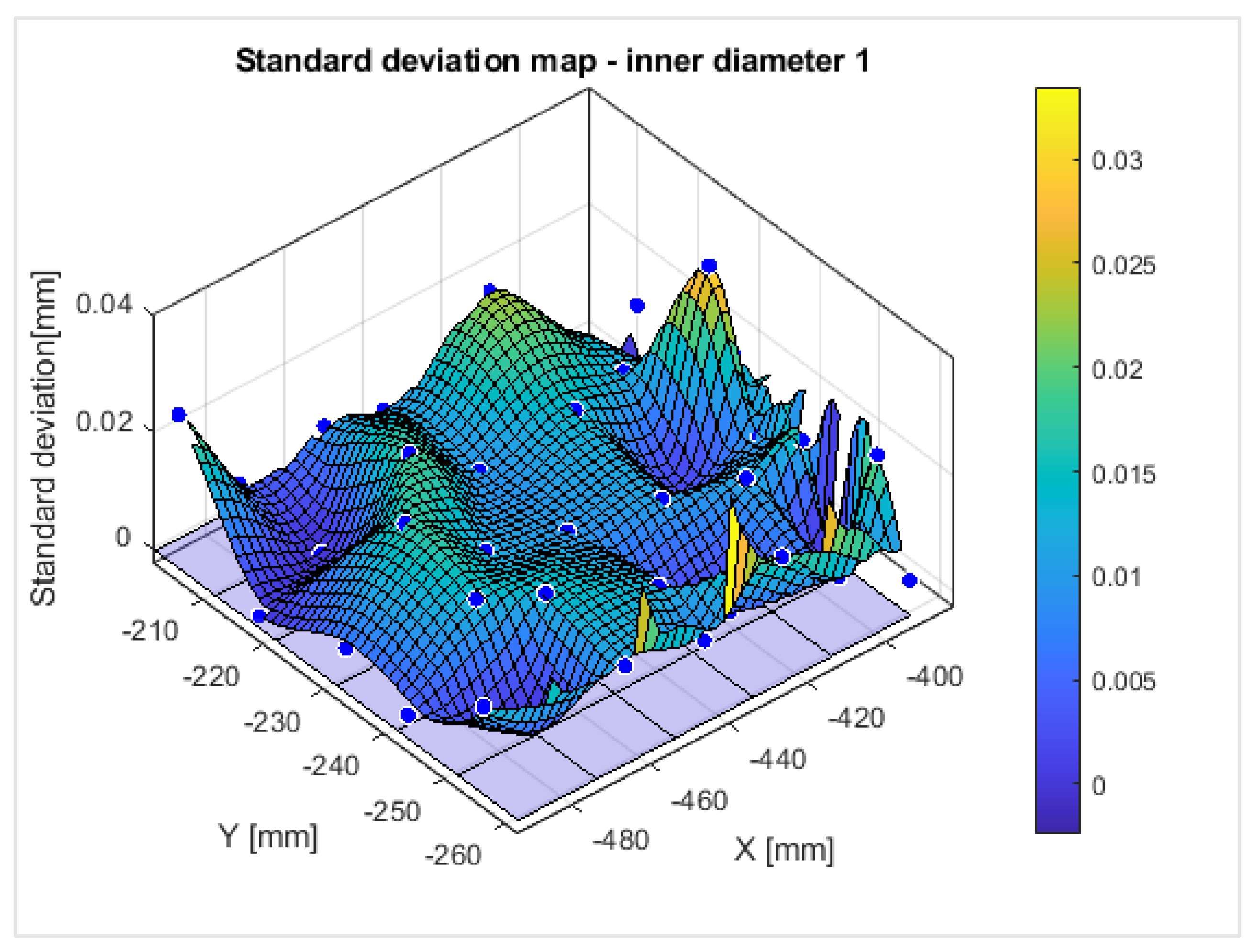

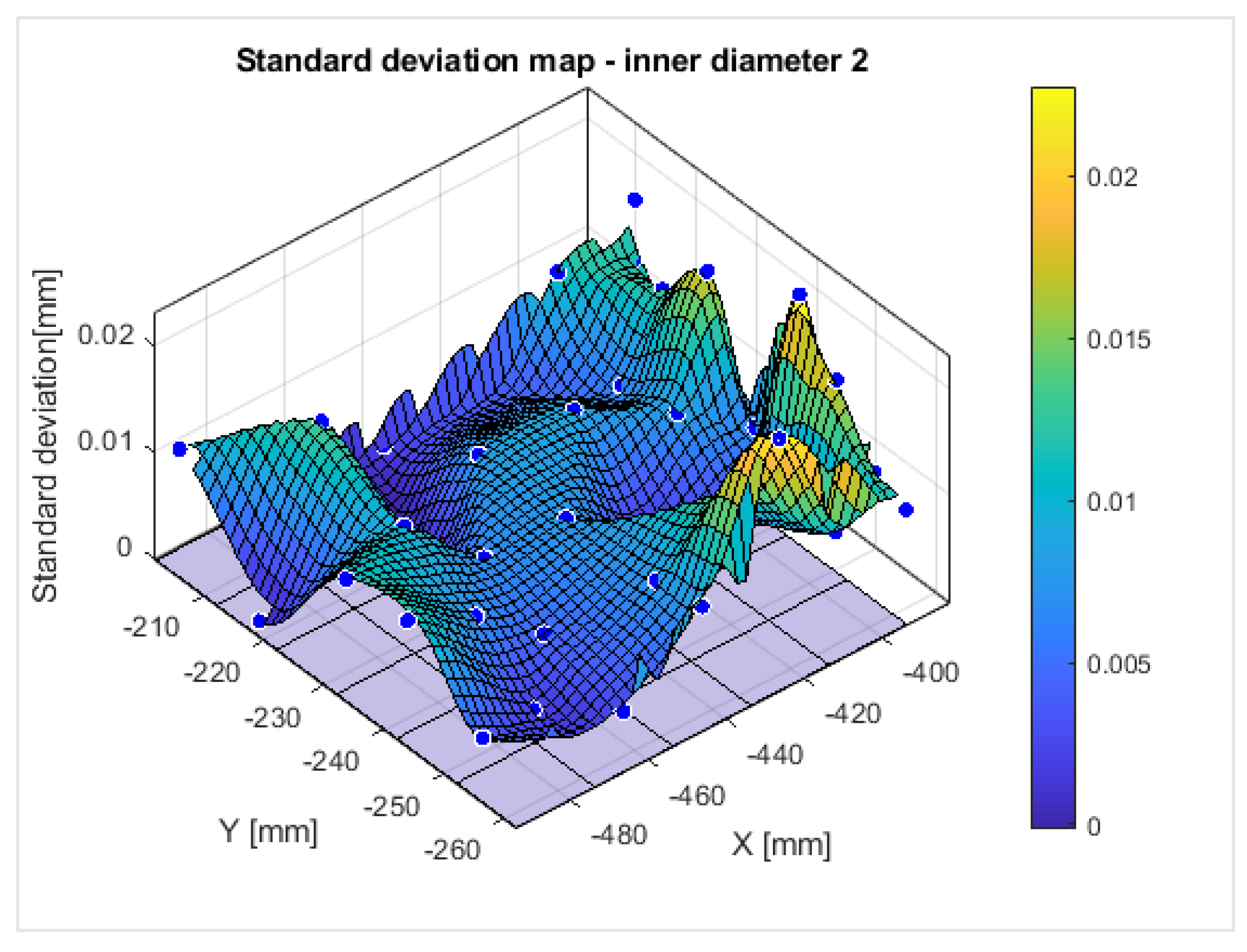

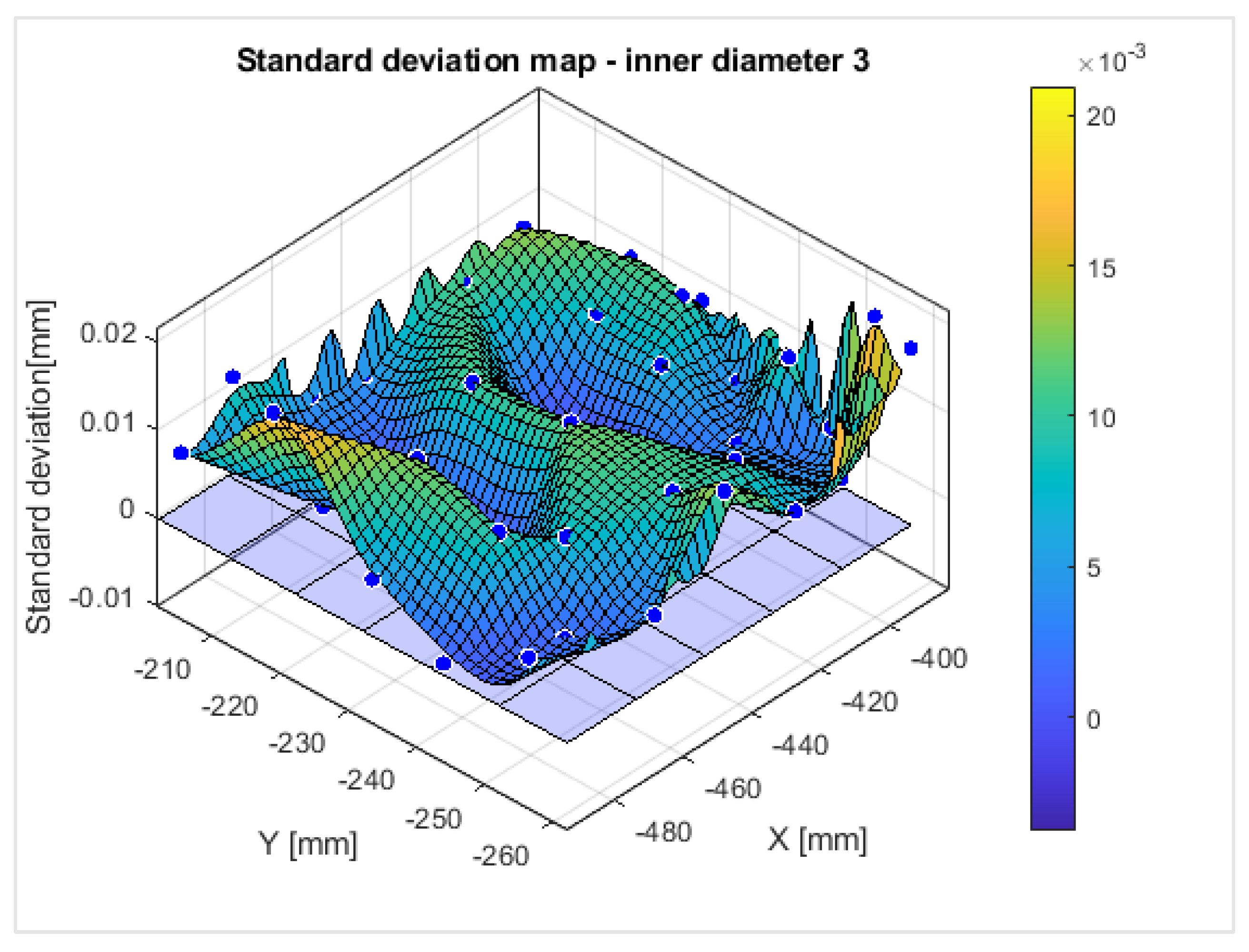

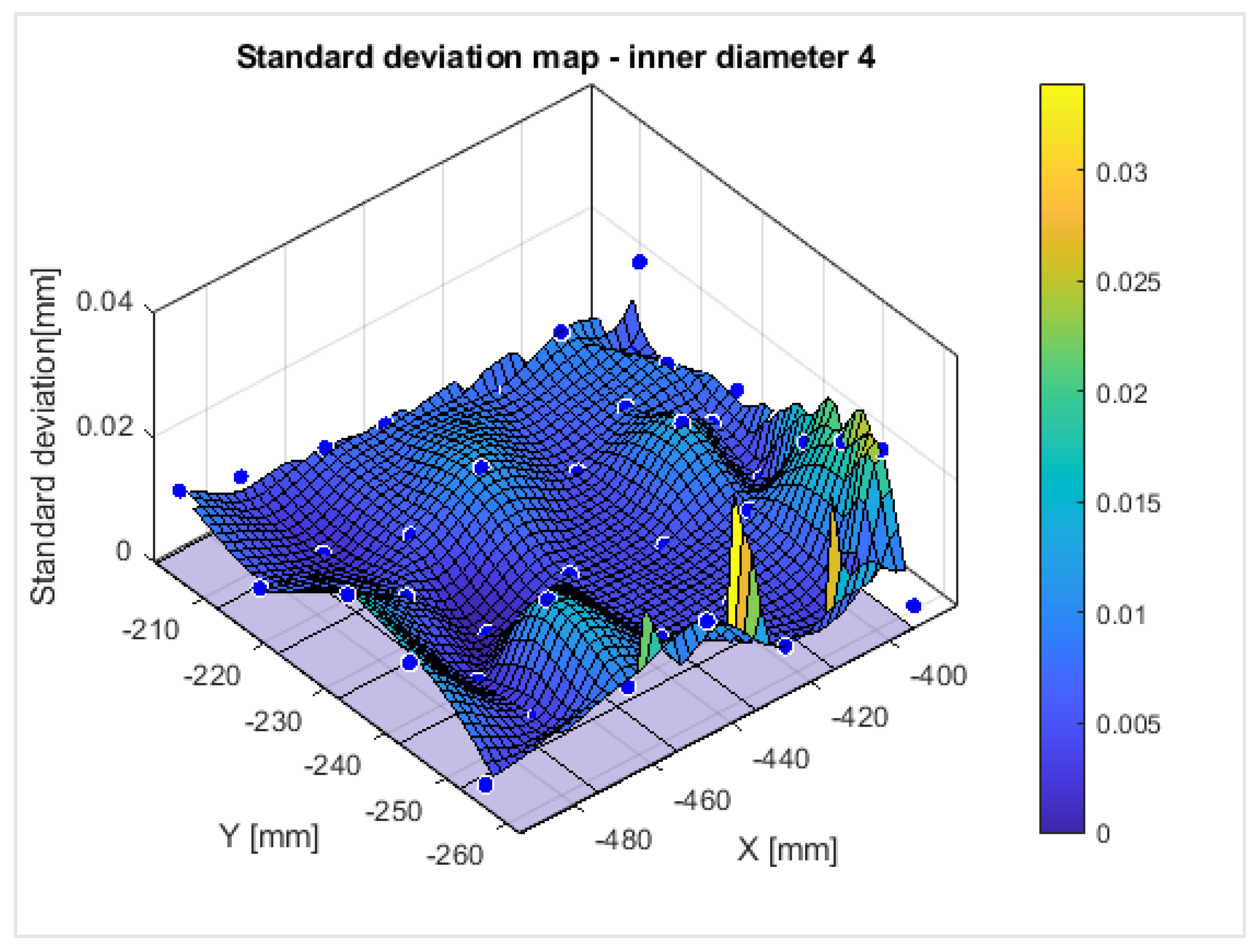

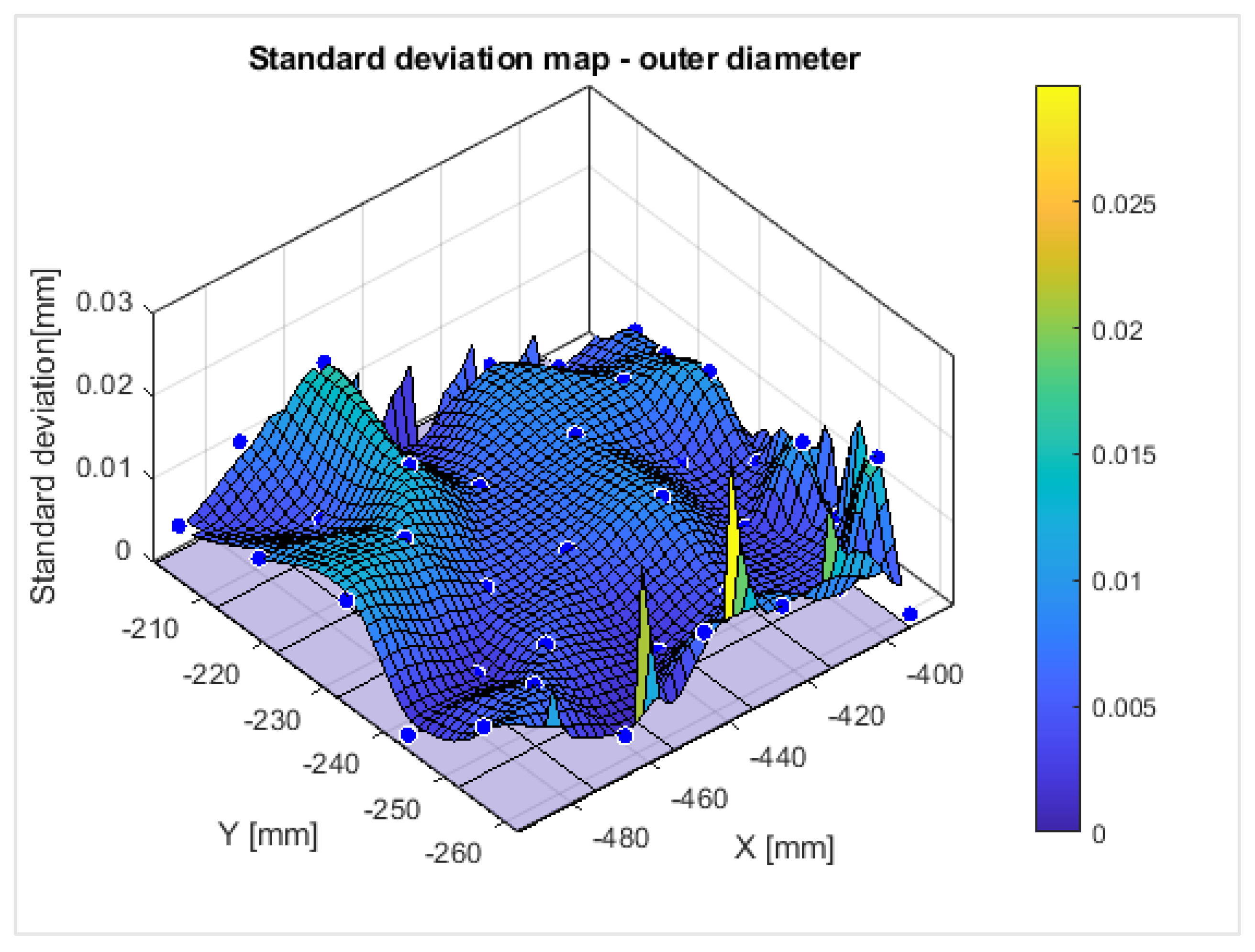

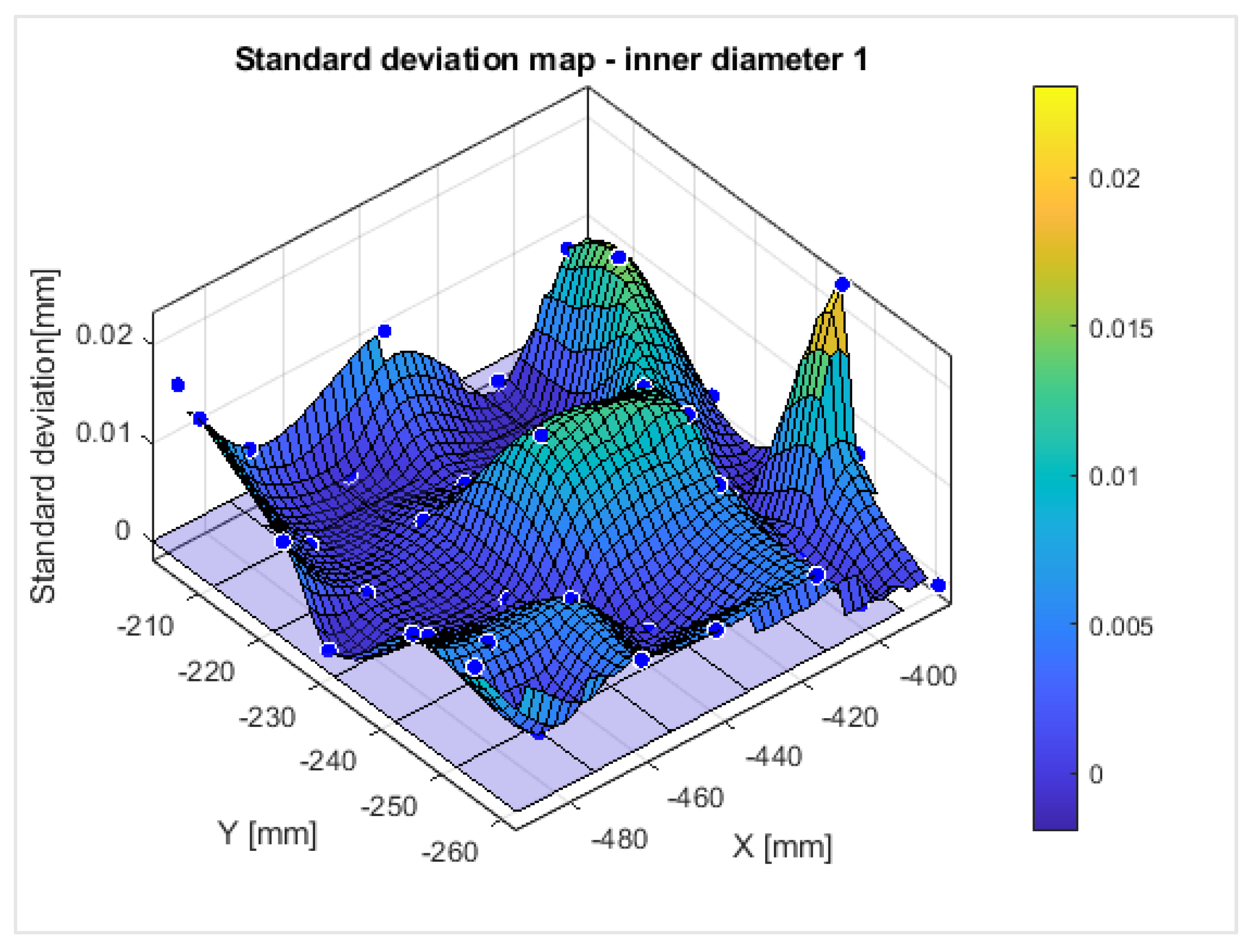

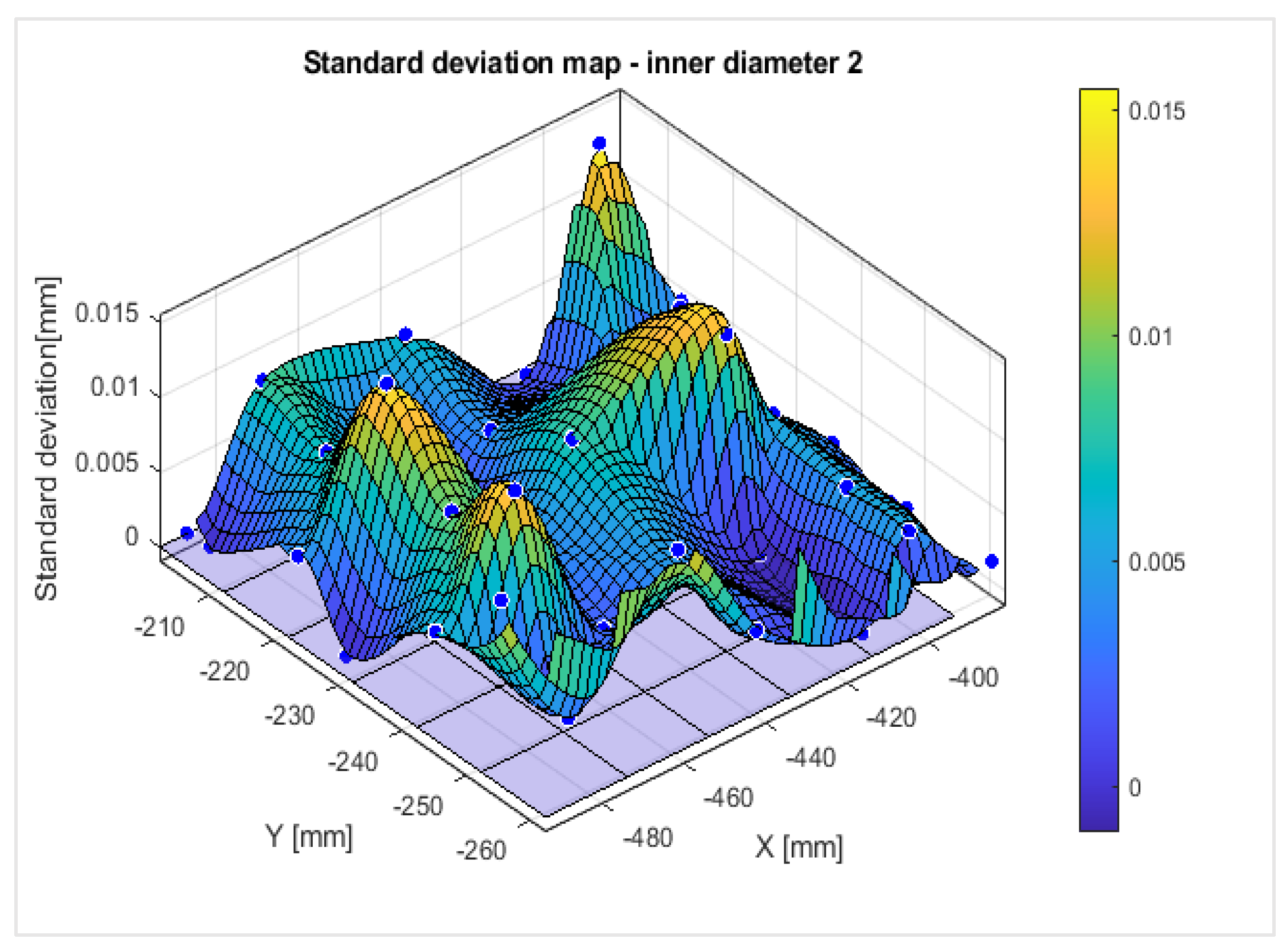

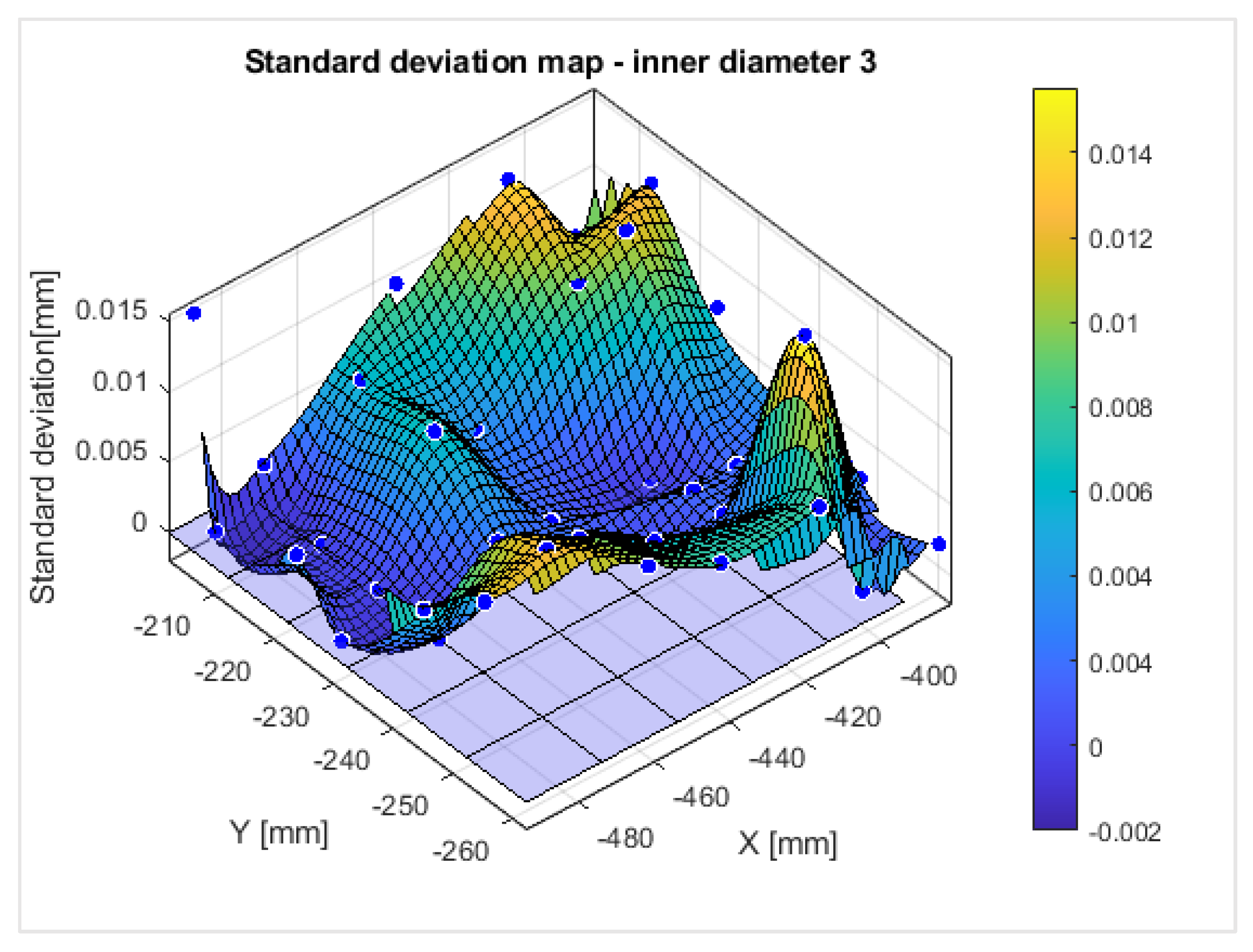

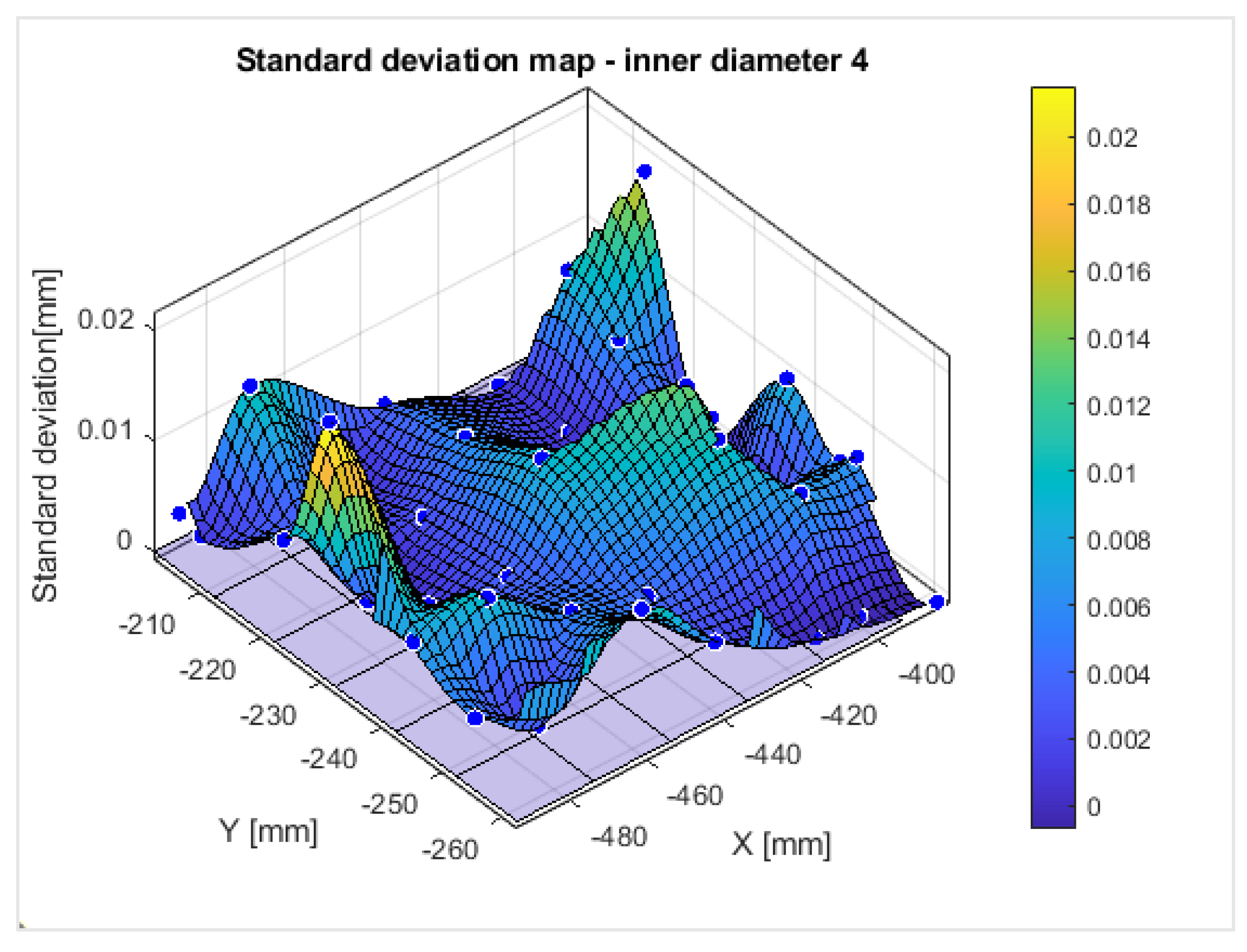

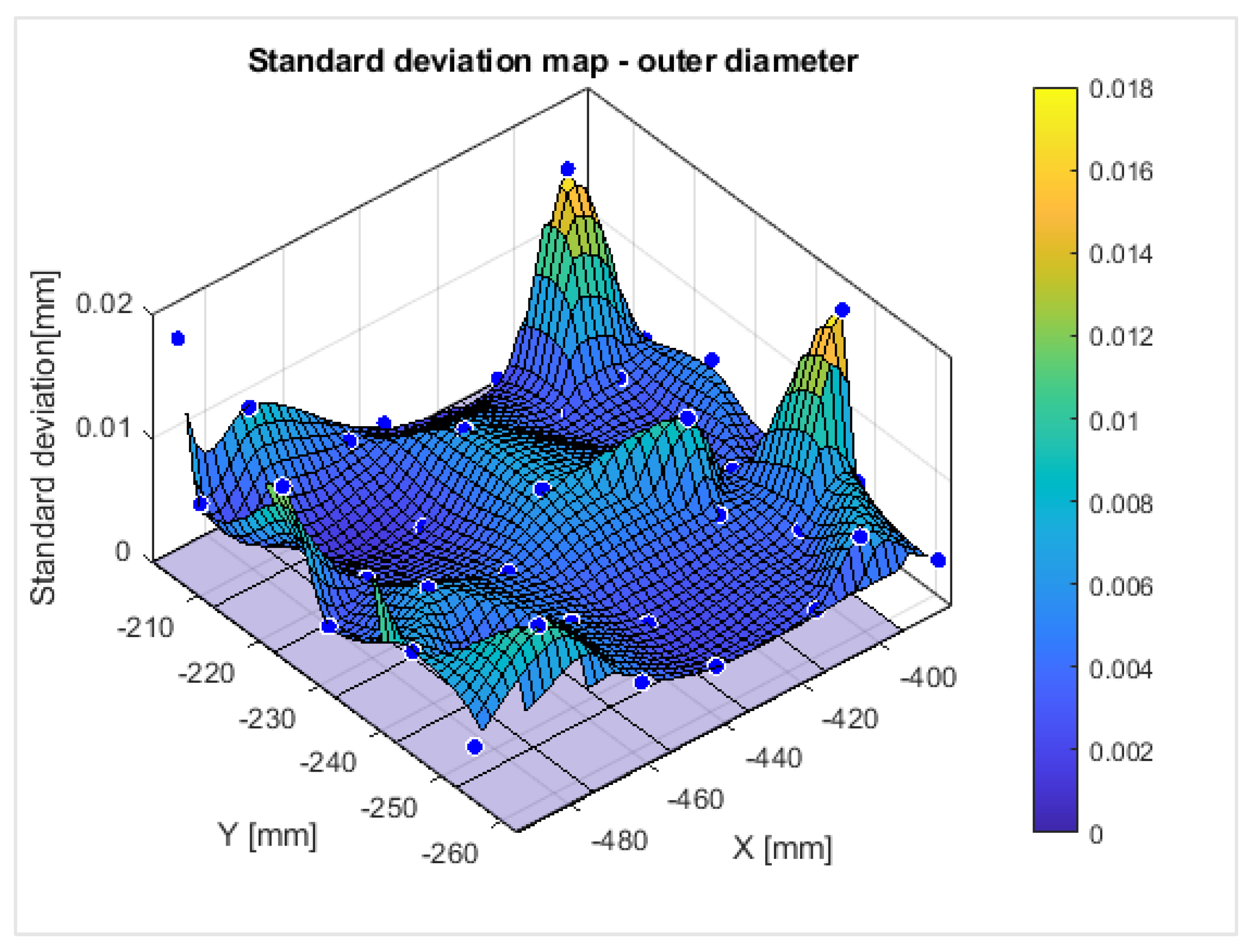

5. Experimental Setup and Results

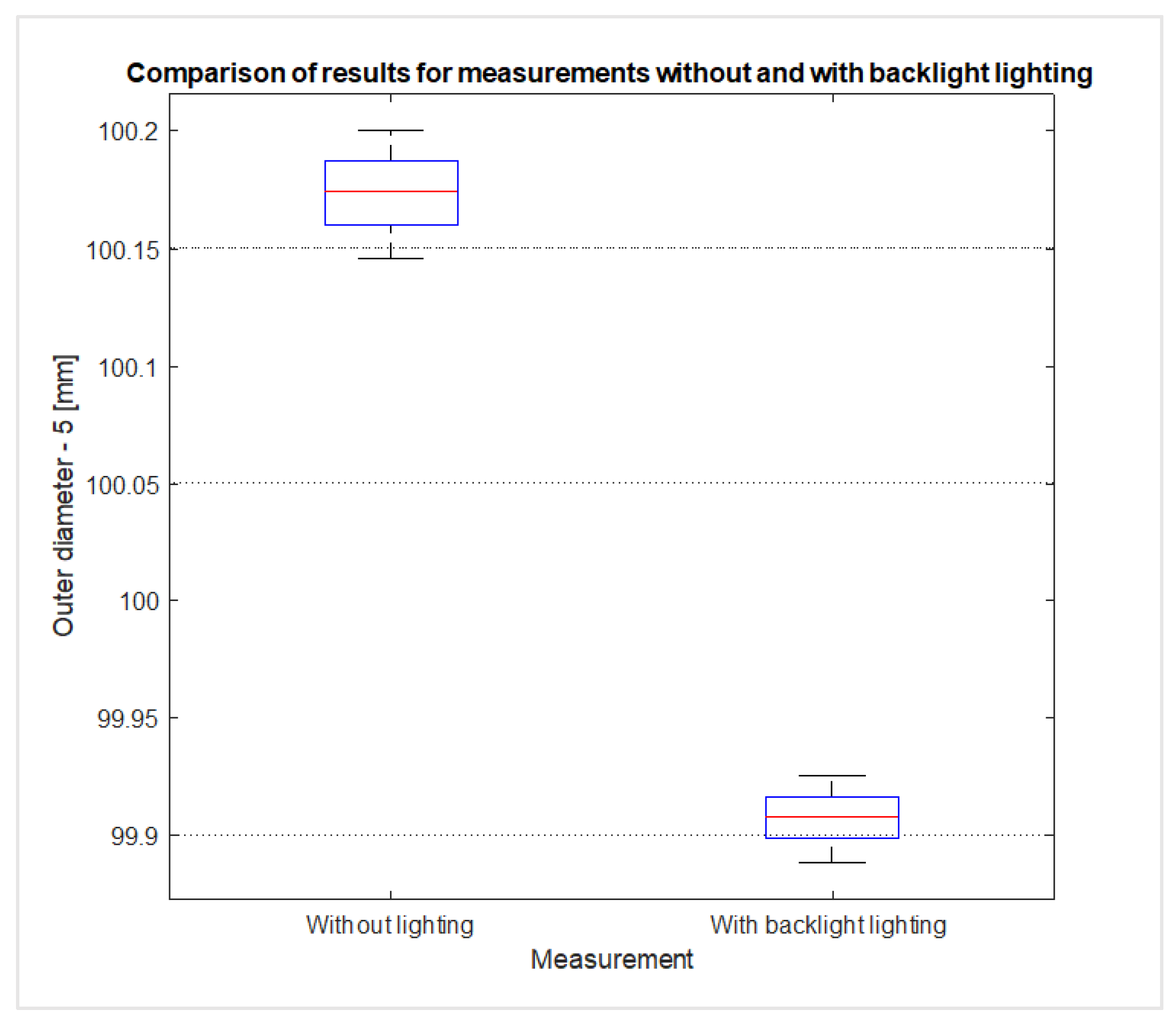

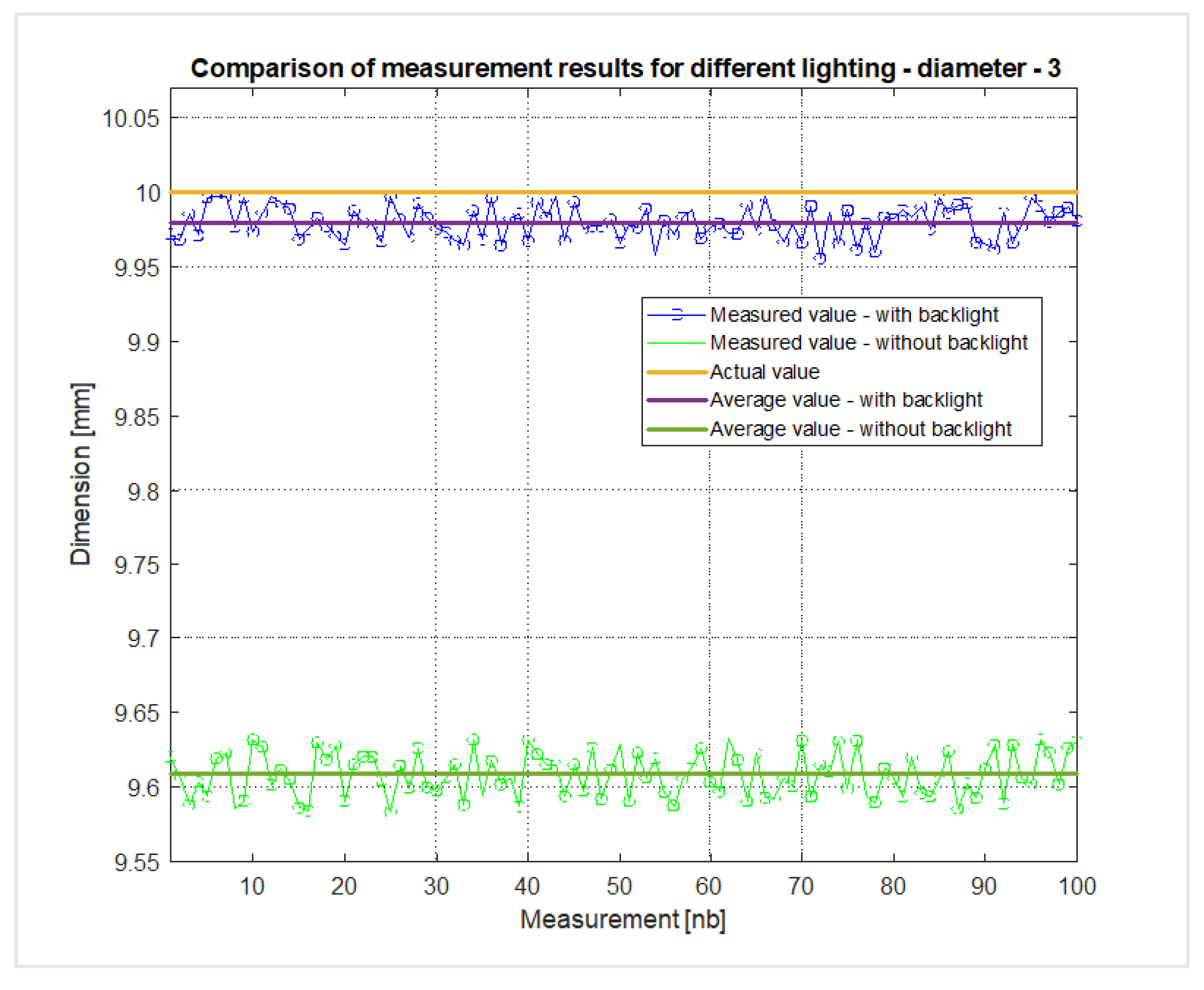

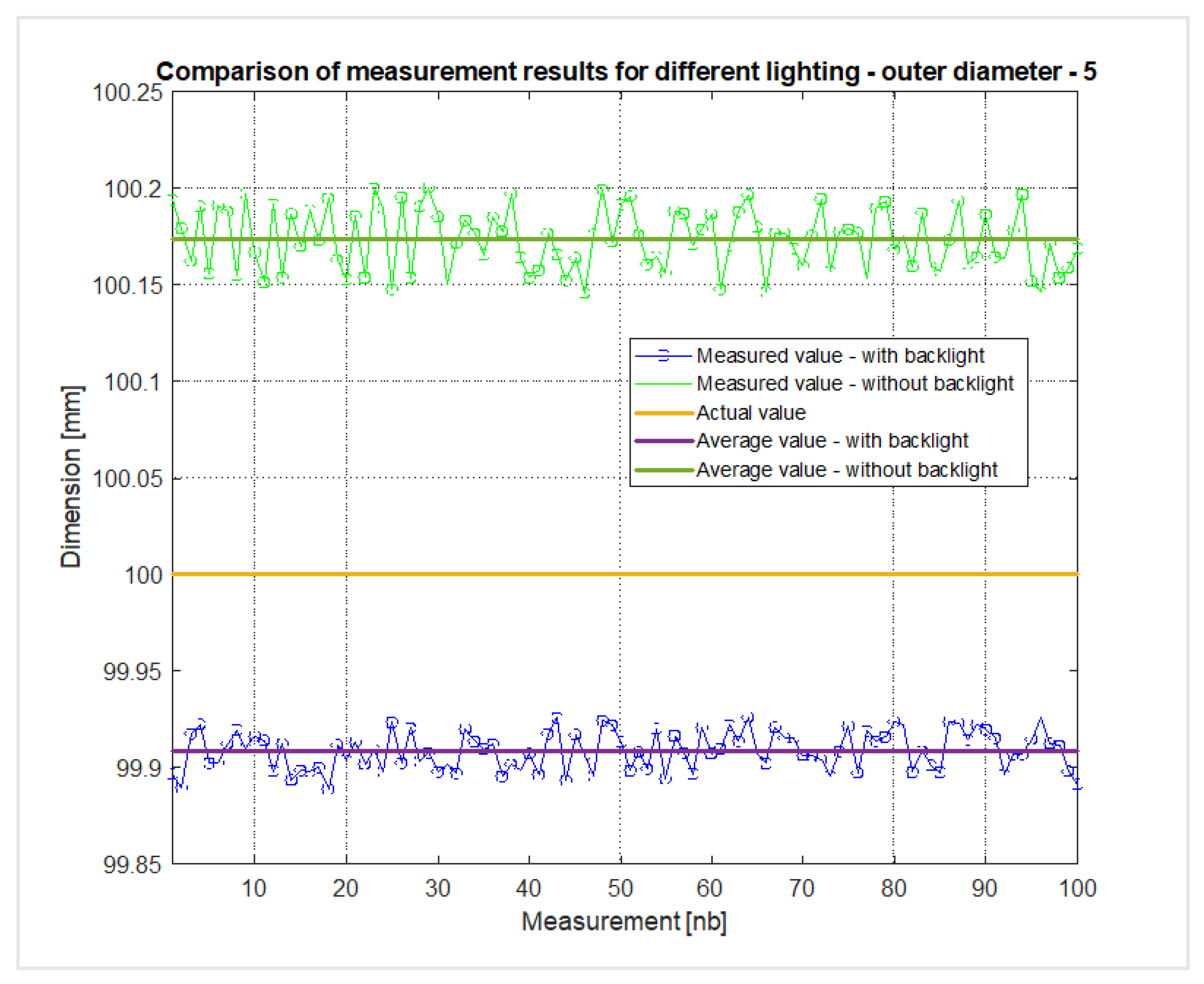

| Feature | Mean value [mm] | Standard deviation [mm] | Standard uncertainty [mm] | Average absolute error [mm] | Mean relative error [%] |
|---|---|---|---|---|---|
| Without backlighting | | | | | |
| 1 | 9.66331 | 0.01419 | 0.00142 | 0.33669 | 3.36700 |
| 2 | 9.72947 | 0.01649 | 0.00165 | 0.27053 | 2.70500 |
| 3 | 9.60860 | 0.01471 | 0.00147 | 0.39140 | 3.91400 |
| 4 | 9.68782 | 0.01498 | 0.00150 | 0.31218 | 3.12200 |
| 5 | 100.17344 | 0.01559 | 0.00156 | 0.17344 | 0.17300 |
| With backlighting | | | | | |
| 1 | 10.03240 | 0.01141 | 0.00114 | 0.03240 | 0.32400 |
| 2 | 10.07091 | 0.01023 | 0.00102 | 0.07091 | 0.70900 |
| 3 | 9.97986 | 0.01084 | 0.00108 | 0.02014 | 0.20100 |
| 4 | 10.06637 | 0.00866 | 0.00087 | 0.06637 | 0.66400 |
| 5 | 99.90848 | 0.00992 | 0.00099 | 0.09152 | 0.09200 |

| Feature | Mean value [mm] | Average absolute error [mm] | Mean relative error [%] | Standard deviation [mm] | Standard uncertainty [mm] |
|---|---|---|---|---|---|
| 1 | 9.68237 | 0.31763 | 3.17600 | 0.04030 | 0.00637 |
| 2 | 9.67630 | 0.32370 | 3.23700 | 0.03887 | 0.00615 |
| 3 | 9.64648 | 0.35352 | 3.53500 | 0.04122 | 0.00652 |
| 4 | 9.69851 | 0.30149 | 3.01500 | 0.03845 | 0.00608 |
| 5 | 100.33039 | 0.33039 | 0.33000 | 0.34381 | 0.05436 |

| Feature | Mean value [mm] | Average absolute error [mm] | Mean relative error [%] | Standard deviation [mm] | Standard uncertainty [mm] |
|---|---|---|---|---|---|
| 1 | 10.01346 | 0.01346 | 0.13500 | 0.04093 | 0.00647 |
| 2 | 10.00943 | 0.00943 | 0.09400 | 0.04007 | 0.00634 |
| 3 | 9.99865 | 0.00135 | 0.01400 | 0.04293 | 0.00679 |
| 4 | 10.02750 | 0.02750 | 0.27500 | 0.03953 | 0.00625 |
| 5 | 100.10132 | 0.10132 | 0.10100 | 0.40595 | 0.06419 |
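The statistics reported in the tables above can be reproduced from raw repeated measurements with a short routine such as the one below. It is a sketch under stated assumptions: the sample (n − 1) standard deviation is used, the standard uncertainty is taken as the standard deviation divided by √n, and the absolute error is taken as the distance of the mean from the nominal value. The function name `summarize`, the nominal values, and the number of repetitions are not given in this section and are assumptions of the example.

```python
import math
import statistics


def summarize(samples, nominal):
    """Summary statistics for repeated measurements of one feature.

    ASSUMPTIONS: sample (n - 1) standard deviation; standard uncertainty
    u = s / sqrt(n); absolute error = |mean - nominal|.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("at least two measurements are required")
    mean = statistics.mean(samples)
    std = statistics.stdev(samples)
    abs_error = abs(mean - nominal)
    return {
        "mean_mm": mean,
        "std_mm": std,
        "uncertainty_mm": std / math.sqrt(n),
        "abs_error_mm": abs_error,
        "rel_error_pct": 100.0 * abs_error / nominal,
    }


if __name__ == "__main__":
    # Illustrative readings only, not data from the experiment
    readings = [9.9, 10.1, 10.0, 10.0]
    for name, value in summarize(readings, nominal=10.0).items():
        print(f"{name}: {value:.5f}")
```

Under these assumptions, a standard deviation of 0.01419 mm over 100 repetitions would give a standard uncertainty of about 0.00142 mm, which matches the first row of the first table. The repetition count itself is an inference, not a figure stated in the text.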

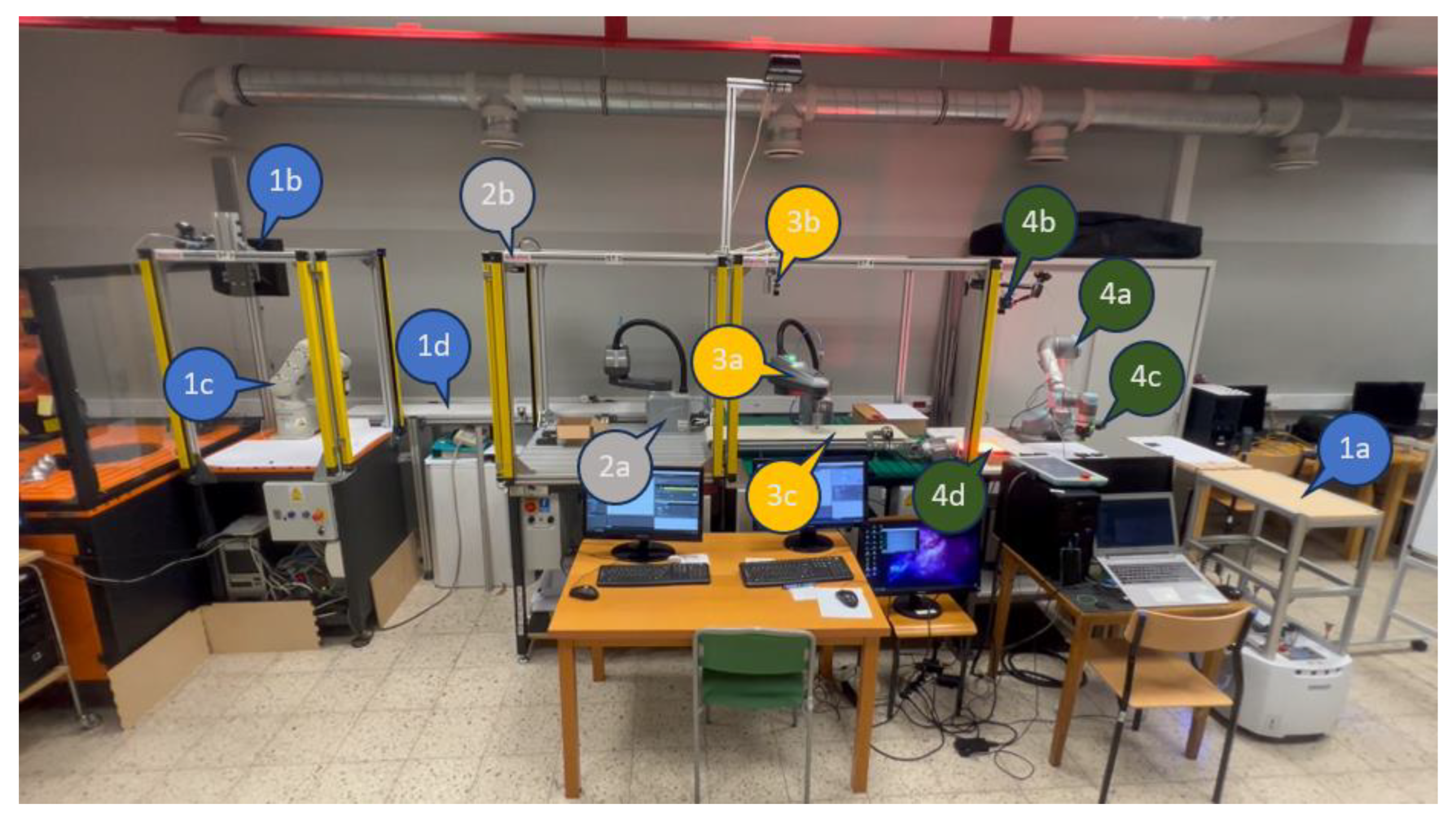

6. Integration of the Developed System

- ➢ Station I

The stand is equipped with:

- OMRON LD-90 mobile robot

- Scorpion 3D Stinger stereo vision system

- Mitsubishi RV-2AJ stationary robot

- a conveyor belt constituting a transport route between stations I and II

- ➢ Station II

The stand is equipped with:

- SCARA robot OMRON i4-550L,

- Basler acA1600-60gm camera

- bright field lighting.

- ➢ Station III

The stand is equipped with:

- SCARA robot OMRON i4-550L,

- Basler acA1300-60gm camera

- a conveyor belt.

- ➢ Station IV

- transporting elements from the storage area of the third station and, after completing the visual analysis process, placing them in the local storage area.

- transporting elements from the local storage area to the LD-90 mobile robot, from where the parts return to the beginning of the line.

- handling the collision zone occurring in the area of collecting elements from the SCARA robot.

7. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).